A Review of Machine Learning and Transfer Learning Strategies for Intrusion Detection Systems in 5G and Beyond

Abstract

1. Introduction

- This paper presents an in-depth examination of 5G network security challenges and the evolving role of IDSs in mitigating these threats. Existing IDS frameworks and datasets are critically evaluated to underscore the need for more reliable and representative data in developing effective NIDSs. The use of a back-to-back combinatorial algorithm for keylogger detection and analysis is also proposed.

- This paper introduces a robust testbed architecture integrated with the 5GTN platform. This architecture facilitates the generation of realistic datasets, enabling a more accurate assessment of security solutions compared to traditional testbed or simulation environments.

- This paper reviews the application of diverse ML models for intrusion detection using the 5G-NIDD dataset. The analysis highlights the strengths and limitations of each algorithm under the unique conditions of 5G networks, providing insight into their practical applicability for real-world intrusion detection.

- This study outlines critical challenges, including data leakage prevention, the scarcity of live network datasets, and the effective use of transfer learning (TL). These insights offer valuable guidance for future AI-/ML-based network security research.

2. Background and Related Work

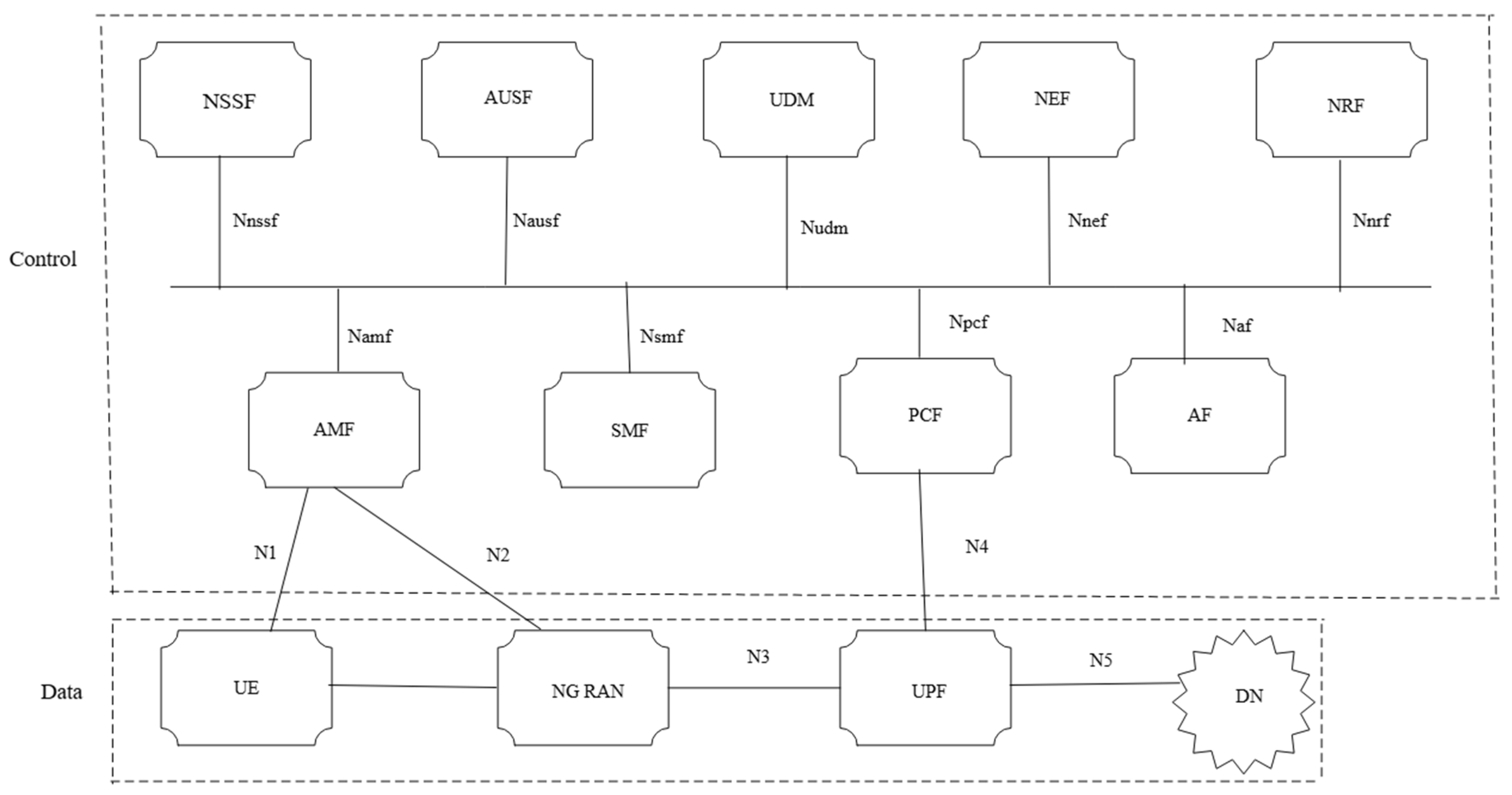

3. Fifth-Generation Core Network and Security Challenges

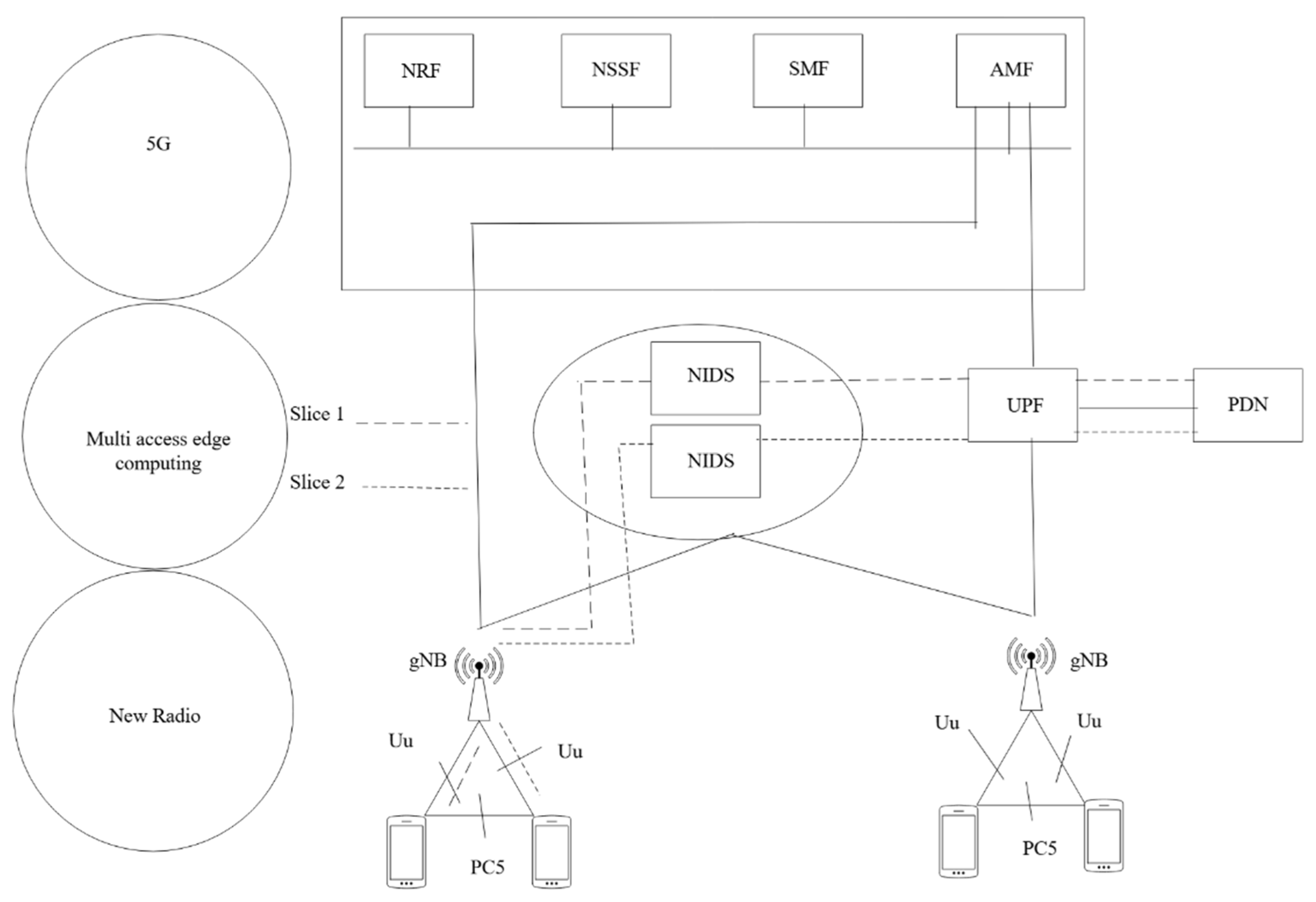

3.1. Intrusion Detection in 5G Networks

3.2. Existing Datasets for 5G Network Security

4. Intrusion Detection Systems (IDSs) and Classification

4.1. NIDS for IoT

4.2. Comparison of Open-Source NIDSs

4.3. Role of Datasets in NIDS Development

4.4. ML Datasets

4.5. Integrating Explainable and Hybrid AI

4.6. DL Models

Enhancing 5G Security: A Transfer Learning-Based IDS Approach

4.7. Quantum Machine Learning

4.8. Comparison of Batch Learning Models (BLMs) and Data Streaming Models (DSMs)

4.9. Dataset Comparison

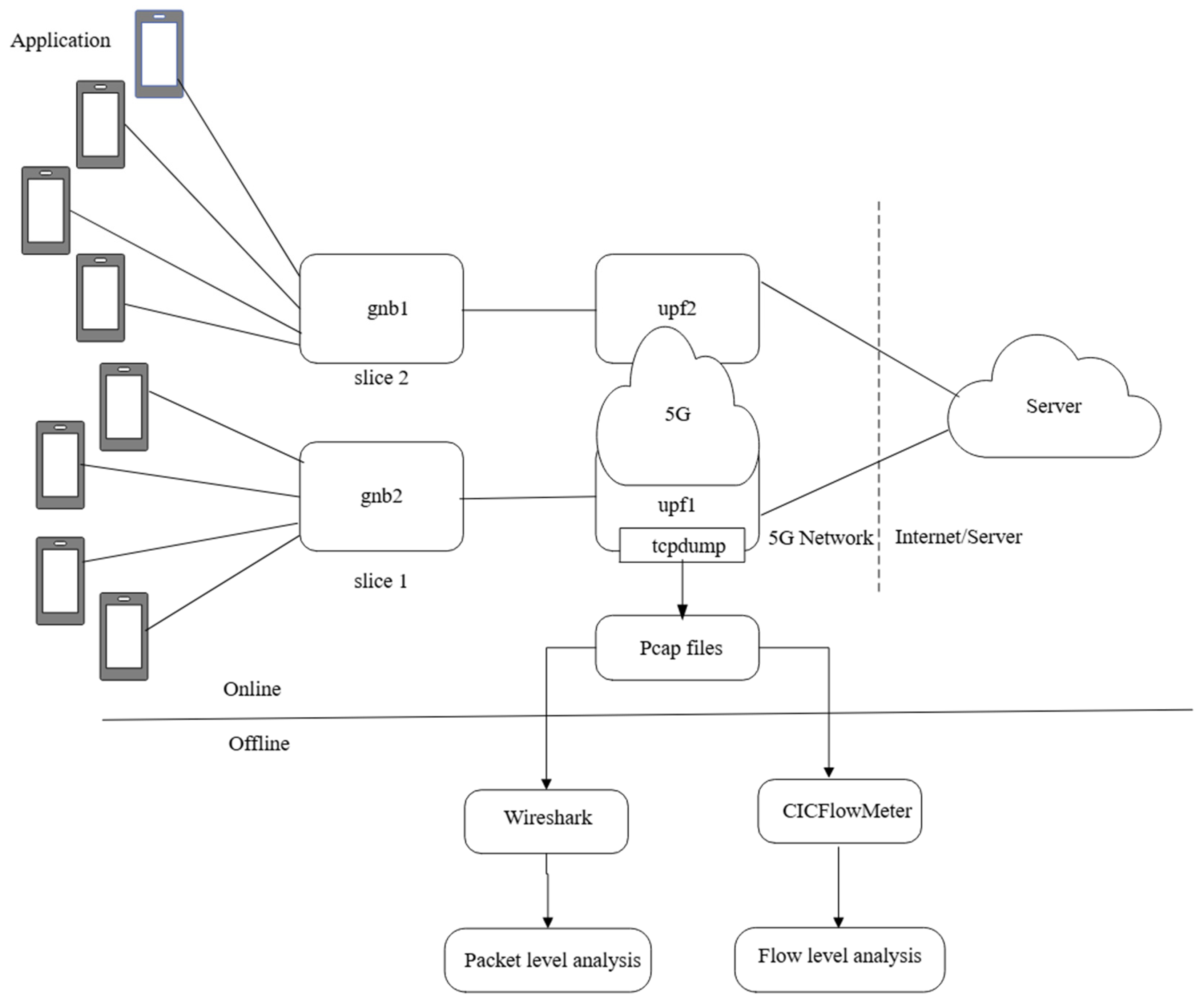

5. Development of the 5G-NIDD Datasets

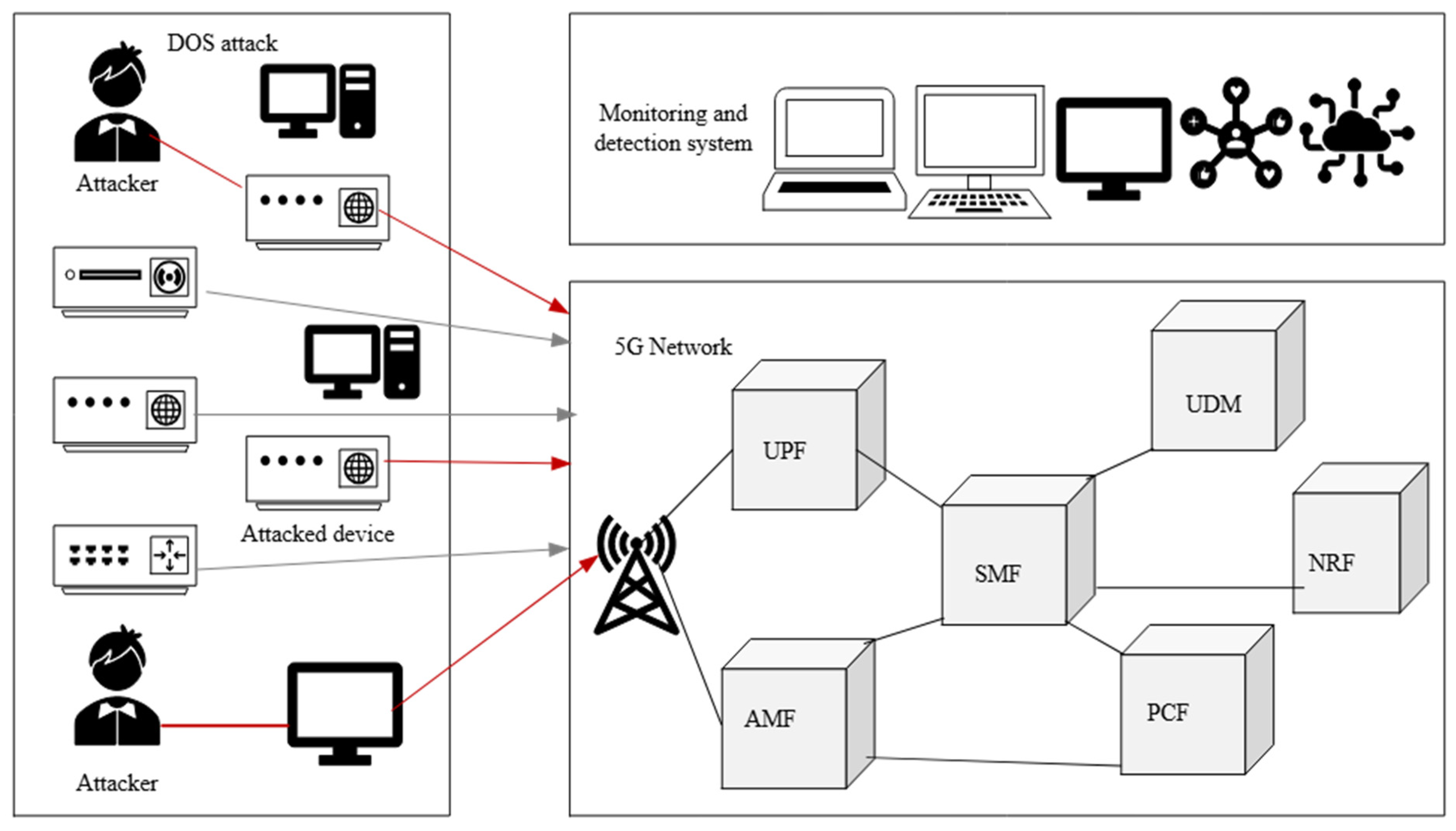

5.1. Simulated Attack Types

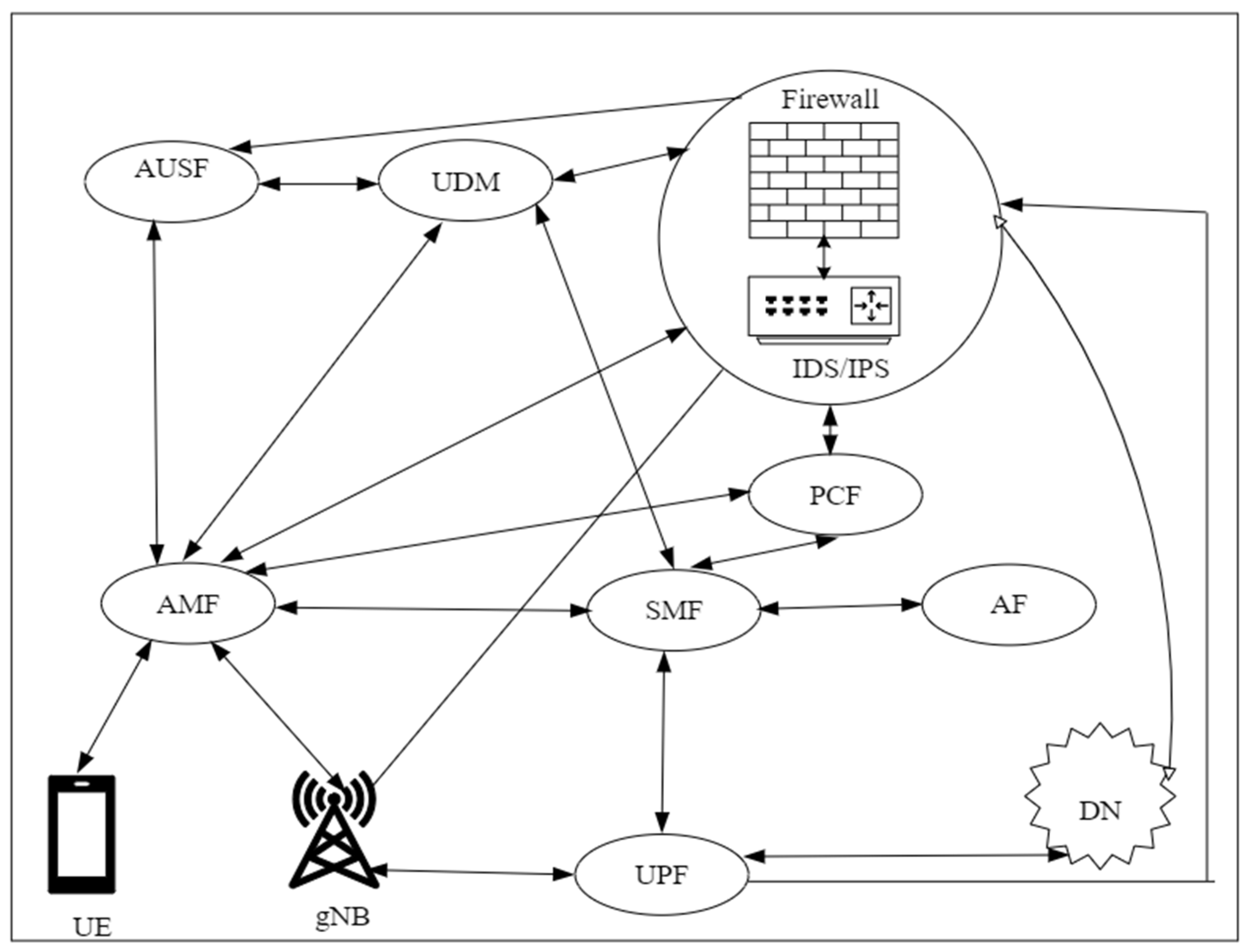

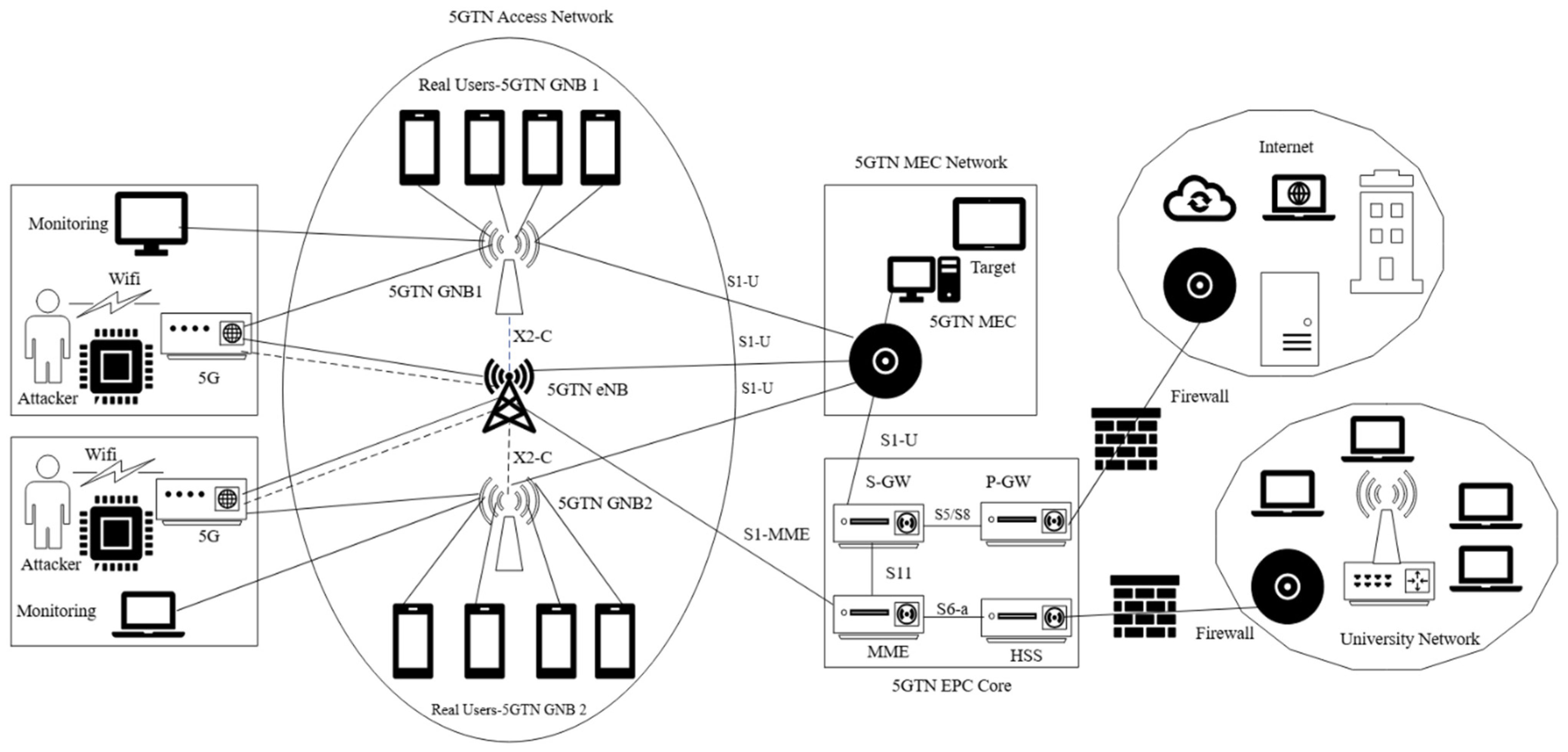

5.2. System Architecture

5.3. Comparison with Other Architectures

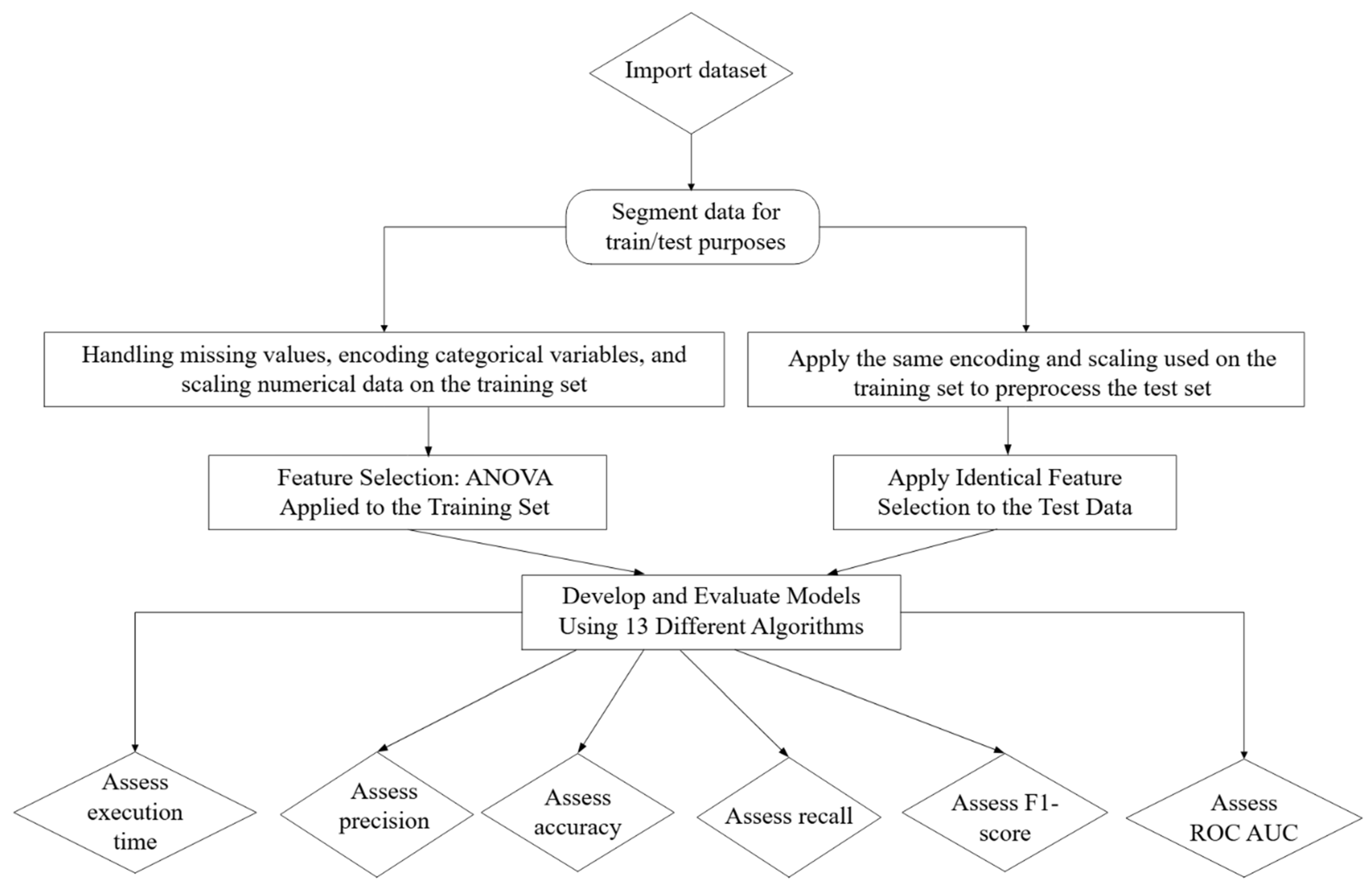

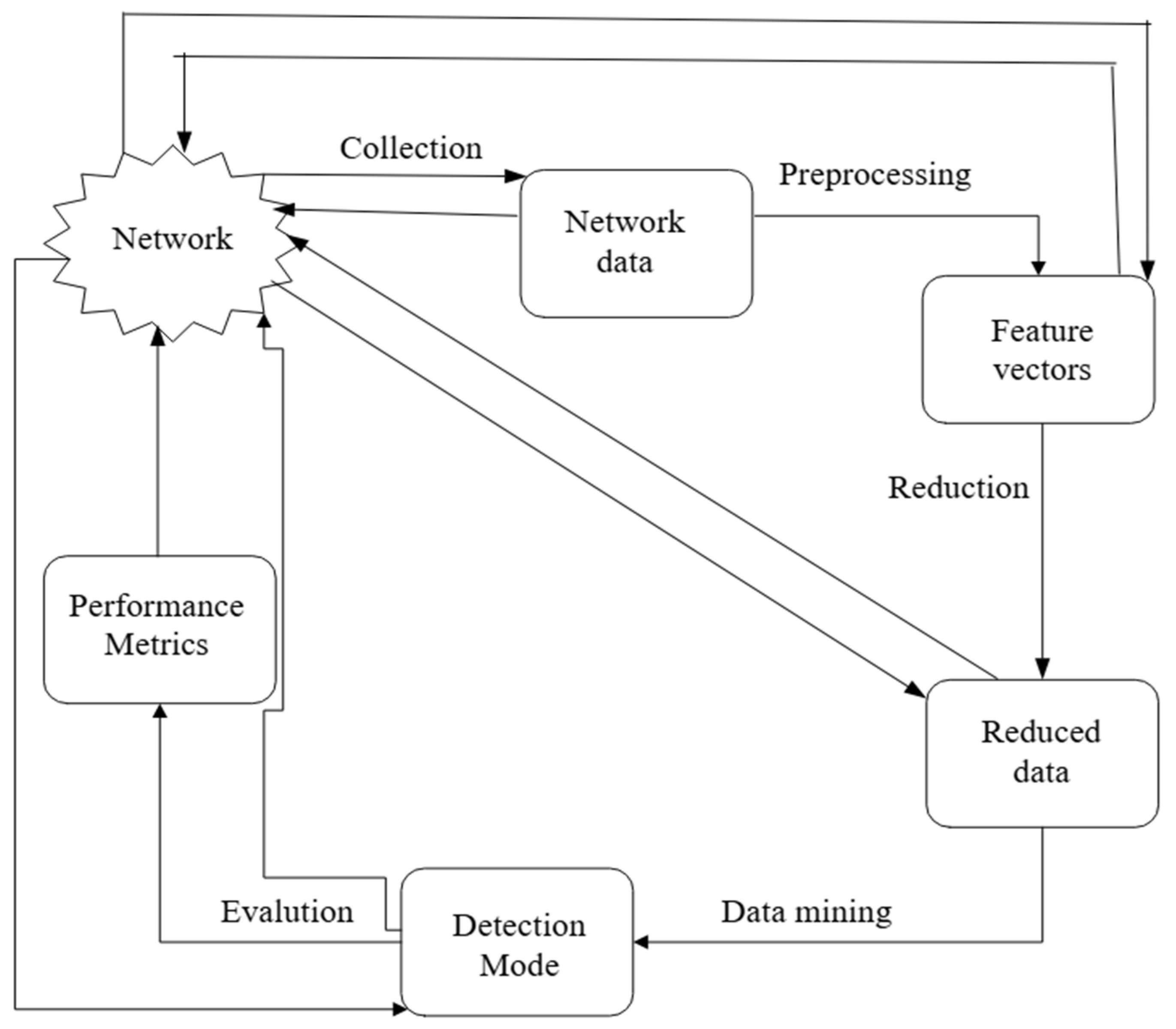

6. Knowledge Discovery in Databases (KDD)

6.1. Data Cleaning and Feature Transformation in NIDS Preprocessing

6.2. Data Acquisition and Processing

6.2.1. Data Transformation

6.2.2. Correlation Coefficient

6.2.3. ANOVA Statistical Evaluation

6.2.4. Data Standardization

6.3. Data Mining Stage

6.4. Performance Assessment

6.4.1. Evaluation Technique

6.4.2. Challenges in Incremental Evaluation Technique

6.4.3. Attacks and Impact Analysis

6.4.4. ML Models and Performance Evaluation

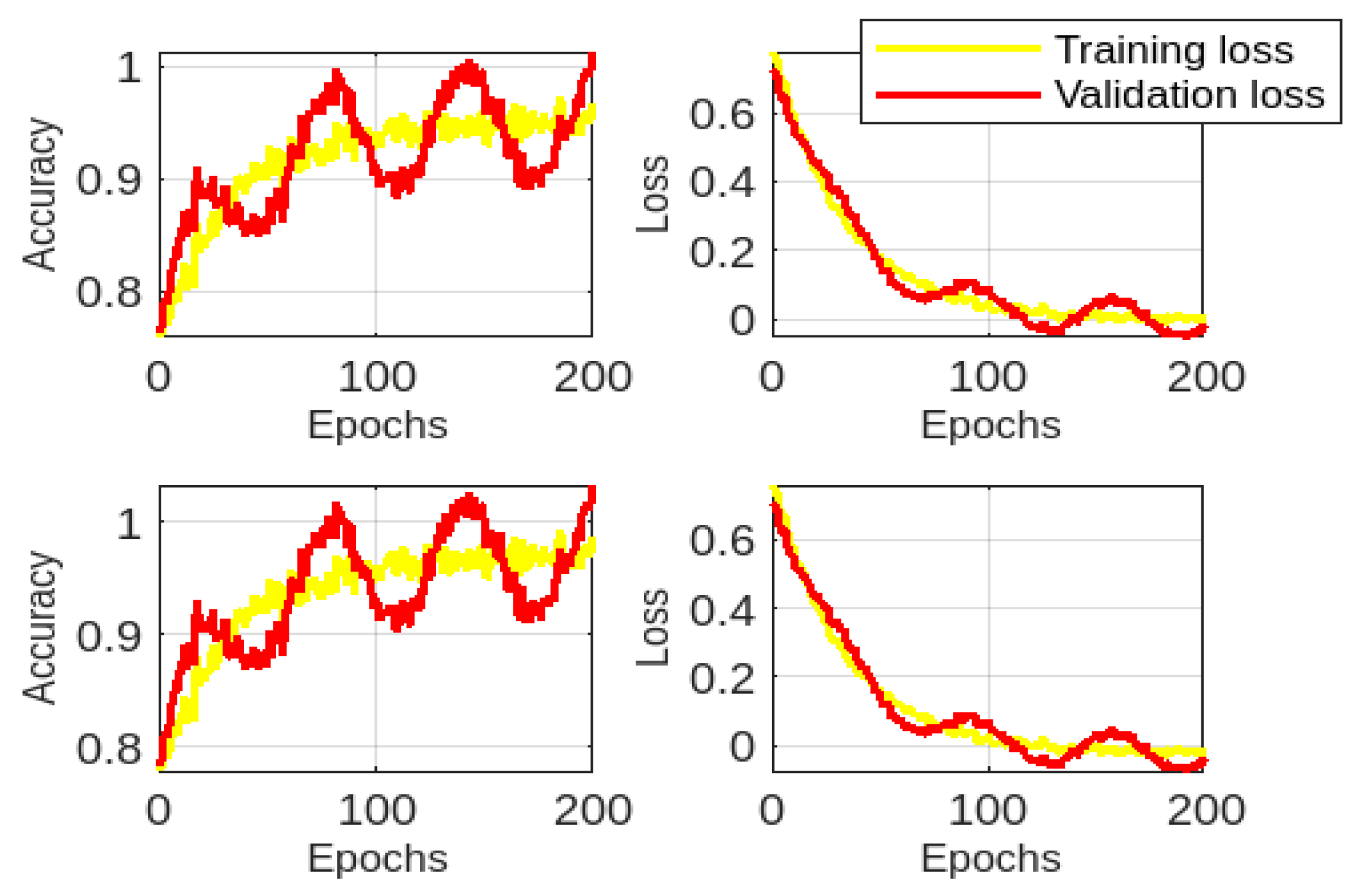

6.4.5. Evaluation of DL Models

6.4.6. Comparative Model Analysis

7. Challenges and Limitations of the NIDS

8. Recent Trends, Future Research Directions, and Lessons Learned

8.1. Recent Trends

8.2. Future Research Directions

8.3. Lessons Learned

- Lesson 1: Reflections on IDS Technologies

- Lesson 2: Evolving AI Strategies in Cybersecurity

- Lesson 3: Enhancing 5G IoT Security

- Lesson 4: Ensuring Consistency in Evaluation

- Lesson 5: Incorporating Dynamic Variability

- Lesson 6: Development of Base Models

- Lesson 7: Deep Transfer Learning (DTL) Strategies

- Lesson 8: Freezing Layers and Selective Retraining

- DTL0: In this scenario, all layers except the last one are frozen, and only the final decision-making layer is retrained. This allows the model to retain the features learned during the initial training while adjusting the final output layer to the specific task.

- DTL1: This method freezes all layers except for the last 33%, which are then retrained. This enables deeper layers to adapt to the new task while maintaining the integrity of the initial feature extraction layers.

- DTL2: This scenario is similar to DTL1, except that the last 66% of layers are unfrozen and retrained. It provides greater flexibility in adapting the model, allowing more parameters to be updated than in DTL1.

- Lesson 9: Removing Layers, Adding New Layers, and Retraining

- DTL3: In this scenario, all layers are frozen except for the last one, which is removed and replaced by a new layer initialized randomly. This modification ensures the model can adapt to the new task if the output structure differs from the original.

- DTL4: This is the same approach as DTL3, but two new layers are added instead of one. This increases the model’s capacity to learn more complex patterns and provides greater adaptability to the new task.

- DTL5: In this scenario, three new layers are added after removing the last layer. This offers the highest flexibility for adapting the model to tasks requiring significant modifications in output behavior.

- Lesson 10: Advancing Anomaly Detection with LSTM for Improved Network Security

- Lesson 11: Choice of ML Model

- Lesson 12: Integrating IDS, SDN Security, and ML
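The layer-freezing scenarios DTL0 to DTL5 listed under Lessons 8 and 9 can be made concrete with a small, framework-agnostic sketch. The Python below is illustrative only: the `Layer` record, the `apply_dtl` function, and the rule that rounds 33% or 66% of the layer count to the nearest whole layer are assumptions introduced here, not details taken from the reviewed work. Each layer is modelled as a record carrying a `trainable` flag and a `pretrained` flag, so the output is a retraining plan rather than a trained network.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Layer:
    """Hypothetical stand-in for one layer of a pre-trained base model."""
    name: str
    trainable: bool = True
    pretrained: bool = True  # False marks a freshly (randomly) initialised layer


# Fraction of trailing layers that are unfrozen (DTL1, DTL2).
UNFROZEN_FRACTION = {"DTL1": 0.33, "DTL2": 0.66}
# Number of new layers appended after dropping the last layer (DTL3 to DTL5).
NEW_LAYER_COUNT = {"DTL3": 1, "DTL4": 2, "DTL5": 3}


def apply_dtl(base: List[Layer], scenario: str) -> List[Layer]:
    """Return the layer plan for one of the scenarios DTL0..DTL5."""
    n = len(base)
    if n < 2:
        raise ValueError("the base model needs at least two layers")

    if scenario == "DTL0" or scenario in UNFROZEN_FRACTION:
        if scenario == "DTL0":
            k = 1  # only the final decision layer is retrained
        else:
            # Assumption: round the percentage to the nearest whole layer.
            k = max(1, round(n * UNFROZEN_FRACTION[scenario]))
        # Freeze everything except the last k layers.
        return [Layer(l.name, trainable=(i >= n - k)) for i, l in enumerate(base)]

    if scenario in NEW_LAYER_COUNT:
        # Drop the last layer, freeze the rest, append new trainable layers.
        kept = [Layer(l.name, trainable=False) for l in base[:-1]]
        fresh = [
            Layer(f"new_{j}", trainable=True, pretrained=False)
            for j in range(NEW_LAYER_COUNT[scenario])
        ]
        return kept + fresh

    raise ValueError(f"unknown scenario: {scenario}")


if __name__ == "__main__":
    base_model = [Layer(f"layer_{i}") for i in range(6)]
    for name in ("DTL0", "DTL1", "DTL2", "DTL3", "DTL4", "DTL5"):
        plan = apply_dtl(base_model, name)
        print(name, [(l.name, l.trainable, l.pretrained) for l in plan])
```

In a deep learning framework, the same plan would typically be applied by toggling each layer's trainable (or gradient) flag and by re-initialising only the layers marked as not pre-trained before fine-tuning on the target data.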

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

List of Acronyms

| Abbreviation | Full Meaning |
| --- | --- |
| ML | Machine Learning |
| IDS | Intrusion Detection System |
| KNN | K-Nearest Neighbors |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the Curve |
| DDoS | Distributed Denial of Service |
| DoS | Denial of Service |
| DL | Deep Learning |
| DTL | Deep Transfer Learning |
| BiLSTM | Bidirectional Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| NNs | Neural Networks |
| DTs | Decision Trees |
| NB | Naive Bayes |
| LR | Logistic Regression |
| GB | Gradient Boosting |
| DNNs | Deep Neural Networks |
| ResNet | Residual Network |
| NIDSs | Network Intrusion Detection Systems |
| AI | Artificial Intelligence |
| IoT | Internet of Things |
| TL | Transfer Learning |
| B5G | Beyond-5G |
| 5GTN | 5G Test Network |
| CN | Core Network |
| IIoT | Industrial Internet of Things |
| DDPG | Deep Deterministic Policy Gradient |
| QoS | Quality of Service |
| EDoS | Economical Denial of Sustainability |
| ANNs | Artificial Neural Networks |
| SVMs | Support Vector Machines |
| SL | Supervised Learning |
| USL | Unsupervised Learning |
| SSL | Semi-Supervised Learning |
| RL | Reinforcement Learning |
| CG-GRU | Control Gated–Gated Recurrent Unit |
| XGB | Extreme Gradient Boost |
| eMBB | Enhanced Mobile Broadband |
| uRLLC | Ultra-Reliable Low-Latency Communication |
| mMTC | Massive Machine-Type Communications |
| NSA | Non-Standalone |
| SA | Standalone |
| SBA | Service-Based Architecture |
| AMF | Access and Mobility Management Function |
| UPF | User Plane Function |
| SDN | Software-Defined Networking |
| MITM | Man in the Middle |
| NFV | Network Function Virtualization |
| RAN | Radio Access Network |
| SMF | Session Management Function |
| SIDSs | Signature-Based Intrusion Detection Systems |
| AIDSs | Anomaly-Based Intrusion Detection Systems |
| HIDS | Host-based IDS |
| WIDS | Wireless-based IDS |
| NBA | Network Behavior Analysis |
| MIDS | Mixed IDS |
| SNs | Standard Networks |
| FPs | False Positives |
| FNs | False Negatives |
| R-NIDS | Reliable-NIDS |
| GUI | Graphical User Interface |
| ANOVA | Analysis of Variance |
| UE | User Equipment |
| PFCP | Packet Forwarding Control Protocol |
| MEC | Multi-access Edge Computing |
| SFTP | Secure File Transfer Protocol |
| PCA | Principal Component Analysis |
| MMD | Maximum Mean Discrepancy |
| NVF | Network Virtualization Function |
| KDD | Knowledge Discovery in Databases |
| GTP-U | General Packet Radio Service Tunneling Protocol (User Plane) |
| CV | Cross-Validation |
| TNR | True Negative Rate |
| FPR | False Positive Rate |
| FNR | False Negative Rate |
| TPR | True Positive Rate |
| NGAP | Next-Generation Application Protocol |
| RRC | Radio Resource Control |
| UDP | User Datagram Protocol |
| ICMP | Internet Control Message Protocol |
| XAI | Explainable AI |
| VMs | Virtual Machines |
| RSS | Received Signal Strength |
| CAVs | Connected and Autonomous Vehicles |
| AE | Autoencoder |
| SAE | Stacked Autoencoder |
| RBM | Restricted Boltzmann Machine |
| RNN | Recurrent Neural Network |
| LSTM | Long Short-Term Memory |
| DBN | Deep Belief Network |
| GRU | Gated Recurrent Unit |
| TA-IDPS | Trust-Aware Intrusion Detection and Prevention System |
| MANETs | Mobile Ad Hoc Networks |
| TA | Trusted Authority |
| CH | Cluster Head |
| MFO | Moth Flame Optimization |
| CIoT | Consumer-Centric Internet of Things |
| FL | Federated Learning |
| FDL | Federated Deep Learning |
| BLMs | Batch Learning Models |
| DSMs | Data Streaming Models |
| HT | Hoeffding Tree |
| OBA | OzaBagAdwin |
| R2L | Remote-to-Local |
| U2R | User-to-Root |
| RF | Random Forest |
| QNNs | Quantum Neural Networks |

References

- Moubayed, A.; Manias, D.M.; Javadtalab, A.; Hemmati, M.; You, Y.; Shami, A. OTN-over-WDM optimization in 5G networks: Key challenges and innovation opportunities. Photonic Netw. Commun. 2023, 45, 49–66. [Google Scholar] [CrossRef]

- Aoki, S.; Yonezawa, T.; Kawaguchi, N. RobotNEST: Toward a Viable Testbed for IoT-Enabled Environments and Connected and Autonomous Robots. IEEE Sensors Lett. 2022, 6, 6000304. [Google Scholar] [CrossRef]

- Siriwardhana, Y.; Porambage, P.; Liyanage, M.; Ylianttila, M. AI and 6G Security: Opportunities and Challenges. In Proceedings of the 2021 Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Porto, Portugal, 8–11 June 2021; pp. 616–621. [Google Scholar]

- Dini, P.; Elhanashi, A.; Begni, A.; Saponara, S.; Zheng, Q.; Gasmi, K. Overview on Intrusion Detection Systems Design Exploiting Machine Learning for Networking Cybersecurity. Appl. Sci. 2023, 13, 7507. [Google Scholar] [CrossRef]

- Sadhwani, S.; Mathur, A.; Muthalagu, R.; Pawar, P.M. 5G-SIID: An intelligent hybrid DDoS intrusion detector for 5G IoT networks. Int. J. Mach. Learn. Cybern. 2024, 16, 1243–1263. [Google Scholar] [CrossRef]

- Ahuja, N.; Mukhopadhyay, D.; Singal, G. DDoS attack traffic classification in SDN using deep learning. Pers. Ubiquitous Comput. 2024, 28, 417–429. [Google Scholar] [CrossRef]

- Nguyen, C.T.; Van Huynh, N.; Chu, N.H.; Saputra, Y.M.; Hoang, D.T.; Nguyen, D.N.; Pham, Q.-V.; Niyato, D.; Dutkiewicz, E.; Hwang, W.-J. Transfer Learning for Wireless Networks: A Comprehensive Survey. Proc. IEEE 2022, 110, 1073–1115. [Google Scholar] [CrossRef]

- Bouke, M.A.; Abdullah, A. An empirical study of pattern leakage impact during data preprocessing on machine learning-based intrusion detection models reliability. Expert Syst. Appl. 2023, 230, 120715. [Google Scholar] [CrossRef]

- Bouke, M.A.; Abdullah, A. An empirical assessment of ML models for 5G network intrusion detection: A data leakage-free approach. e-Prime-Adv. Electr. Eng. Electron. Energy 2024, 8, 100590. [Google Scholar] [CrossRef]

- Jayasinghe, S.; Siriwardhana, Y.; Porambage, P.; Liyanage, M.; Ylianttila, M. Federated Learning based Anomaly Detection as an Enabler for Securing Network and Service Management Automation in Beyond 5G Networks. In Proceedings of the 2022 Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Grenoble, France, 7–10 June 2022; pp. 345–350. [Google Scholar]

- Nait-Abdesselam, F.; Darwaish, A.; Titouna, C. Malware forensics: Legacy solutions, recent advances, and future challenges. In Advances in Computing, Informatics, Networking and Cybersecurity: A Book Honoring Professor Mohammad S. Obaidat’s Significant Scientific Contributions; Springer: Cham, Switzerland, 2022; pp. 685–710. [Google Scholar]

- Saranya, T.; Sridevi, S.; Deisy, C.; Chung, T.D.; Khan, M. Performance Analysis of Machine Learning Algorithms in Intrusion Detection System: A Review. Procedia Comput. Sci. 2020, 171, 1251–1260. [Google Scholar] [CrossRef]

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160. [Google Scholar] [CrossRef]

- Khan, M.S.; Farzaneh, B.; Shahriar, N.; Hasan, M.M. DoS/DDoS Attack Dataset of 5G Network Slicing; IEEE Dataport: Piscataway, NJ, USA, 2023. [Google Scholar]

- Imanbayev, A.; Tynymbayev, S.; Odarchenko, R.; Gnatyuk, S.; Berdibayev, R.; Baikenov, A.; Kaniyeva, N. Research of Machine Learning Algorithms for the Development of Intrusion Detection Systems in 5G Mobile Networks and Beyond. Sensors 2022, 22, 9957. [Google Scholar] [CrossRef] [PubMed]

- Joseph, L.P.; Deo, R.C.; Prasad, R.; Salcedo-Sanz, S.; Raj, N.; Soar, J. Near real-time wind speed forecast model with bidirectional LSTM networks. Renew. Energy 2023, 204, 39–58. [Google Scholar] [CrossRef]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019. [Google Scholar]

- Stahlke, M.; Feigl, T.; García MH, C.; Stirling-Gallacher, R.A.; Seitz, J.; Mutschler, C. Transfer learning to adapt 5G AI-based fingerprint localization across environments. In Proceedings of the 2022 IEEE 95th Vehicular Technology Conference (VTC2022-Spring), Helsinki, Finland, 19–22 June 2022; pp. 1–5. [Google Scholar]

- Yang, B.; Fagbohungbe, O.; Cao, X.; Yuen, C.; Qian, L.; Niyato, D.; Zhang, Y. A Joint Energy and Latency Framework for Transfer Learning Over 5G Industrial Edge Networks. IEEE Trans. Ind. Inform. 2021, 18, 531–541. [Google Scholar] [CrossRef]

- Lv, Z.; Lou, R.; Singh, A.K.; Wang, Q. Transfer Learning-powered Resource Optimization for Green Computing in 5G-Aided Industrial Internet of Things. ACM Trans. Internet Technol. 2021, 22, 1–16. [Google Scholar] [CrossRef]

- Guan, J.; Cai, J.; Bai, H.; You, I. Deep transfer learning-based network traffic classification for scarce dataset in 5G IoT systems. Int. J. Mach. Learn. Cybern. 2021, 12, 3351–3365. [Google Scholar] [CrossRef]

- Coutinho, R.W.L.; Boukerche, A. Transfer Learning for Disruptive 5G-Enabled Industrial Internet of Things. IEEE Trans. Ind. Inform. 2021, 18, 4000–4007. [Google Scholar] [CrossRef]

- Mai, T.; Yao, H.; Zhang, N.; He, W.; Guo, D.; Guizani, M. Transfer Reinforcement Learning Aided Distributed Network Slicing Optimization in Industrial IoT. IEEE Trans. Ind. Inform. 2021, 18, 4308–4316. [Google Scholar] [CrossRef]

- Benzaïd, C.; Taleb, T.; Sami, A.; Hireche, O. A Deep Transfer Learning-Powered EDoS Detection Mechanism for 5G and Beyond Network Slicing. In Proceedings of the GLOBECOM 2023—2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023; pp. 4747–4753. [Google Scholar]

- Benzaïd, C.; Taleb, T.; Sami, A.; Hireche, O. FortisEDoS: A Deep Transfer Learning-Empowered Economical Denial of Sustainability Detection Framework for Cloud-Native Network Slicing. IEEE Trans. Dependable Secur. Comput. 2023, 21, 2818–2835. [Google Scholar] [CrossRef]

- Kasongo, S.M.; Sun, Y. Performance Analysis of Intrusion Detection Systems Using a Feature Selection Method on the UNSW-NB15 Dataset. J. Big Data 2020, 7, 105. [Google Scholar] [CrossRef]

- Thakkar, A.; Lohiya, R. Fusion of statistical importance for feature selection in Deep Neural Network-based Intrusion Detection System. Inf. Fusion 2023, 90, 353–363. [Google Scholar] [CrossRef]

- Shaukat, K.; Luo, S.; Varadharajan, V.; Hameed, I.A.; Xu, M. A Survey on Machine Learning Techniques for Cyber Security in the Last Decade. IEEE Access 2020, 8, 222310–222354. [Google Scholar] [CrossRef]

- Su, T.; Sun, H.; Zhu, J.; Wang, S.; Li, Y. BAT: Deep Learning Methods on Network Intrusion Detection Using NSL-KDD Dataset. IEEE Access 2020, 8, 29575–29585. [Google Scholar] [CrossRef]

- Rodríguez, M.; Alesanco, Á.; Mehavilla, L.; García, J. Evaluation of Machine Learning Techniques for Traffic Flow-Based Intrusion Detection. Sensors 2022, 22, 9326. [Google Scholar] [CrossRef]

- Ayantayo, A.; Kaur, A.; Kour, A.; Schmoor, X.; Shah, F.; Vickers, I.; Kearney, P.; Abdelsamea, M.M. Network intrusion detection using feature fusion with deep learning. J. Big Data 2023, 10, 167. [Google Scholar] [CrossRef]

- Vashishtha, L.K.; Chatterjee, K. Strengthening cybersecurity: TestCloudIDS dataset and SparkShield algorithm for robust threat detection. Comput. Secur. 2025, 151, 104308. [Google Scholar] [CrossRef]

- Bekkouche, R.; Omar, M.; Langar, R.; Hamdaoui, B. A Dynamic Predictive Maintenance Approach for Resilient Service Orchestration in Large-Scale 5G Infrastructures. SSRN 5123374. 2025. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5123374 (accessed on 1 February 2025).

- Kheddar, H.; Himeur, Y.; Awad, A.I. Deep transfer learning for intrusion detection in industrial control networks: A comprehensive review. J. Netw. Comput. Appl. 2023, 220, 103760. [Google Scholar] [CrossRef]

- Kheddar, H.; Dawoud, D.W.; Awad, A.I.; Himeur, Y.; Khan, M.K. Reinforcement-Learning-Based Intrusion Detection in Communication Networks: A Review. IEEE Commun. Surv. Tutor. 2024. [Google Scholar] [CrossRef]

- Hancke, G.P.; Hossain, M.A.; Imran, M.A. 5G beyond 3GPP Release 15 for connected automated mobility in cross-border corridors. Sensors 2020, 20, 6622. [Google Scholar]

- Rischke, J.; Sossalla, P.; Itting, S.; Fitzek, F.H.P.; Reisslein, M. 5G Campus Networks: A First Measurement Study. IEEE Access 2021, 9, 121786–121803. [Google Scholar] [CrossRef]

- Singh, V.P.; Singh, M.P.; Hegde, S.; Gupta, M. Security in 5G Network Slices: Concerns and Opportunities. IEEE Access 2024, 12, 52727–52743. [Google Scholar] [CrossRef]

- Granata, D.; Rak, M.; Mallouli, W. Automated Generation of 5G Fine-Grained Threat Models: A Systematic Approach. IEEE Access 2023, 11, 129788–129804. [Google Scholar] [CrossRef]

- Bao, S.; Liang, Y.; Xu, H. Blockchain for Network Slicing in 5G and Beyond: Survey and Challenges. J. Commun. Inf. Netw. 2022, 7, 349–359. [Google Scholar] [CrossRef]

- Iashvili, G.; Iavich, M.; Bocu, R.; Odarchenko, R.; Gnatyuk, S. Intrusion detection system for 5G with a focus on DOS/DDOS attacks. In Proceedings of the 2021 11th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Krakow, Poland, 22–25 September 2021; Volume 2, pp. 861–864. [Google Scholar]

- Silva, R.S.; Meixner, C.C.; Guimaraes, R.S.; Diallo, T.; Garcia, B.O.; de Moraes, L.F.M.; Martinello, M. REPEL: A Strategic Approach for Defending 5G Control Plane from DDoS Signalling Attacks. IEEE Trans. Netw. Serv. Manag. 2020, 18, 3231–3243. [Google Scholar] [CrossRef]

- Nencioni, G.; Garroppo, R.G.; Olimid, R.F. 5G Multi-Access Edge Computing: A Survey on Security, Dependability, and Performance. IEEE Access 2023, 11, 63496–63533. [Google Scholar] [CrossRef]

- Fakhouri, H.N.; Alawadi, S.; Awaysheh, F.M.; Hani, I.B.; Alkhalaileh, M.; Hamad, F. A Comprehensive Study on the Role of Machine Learning in 5G Security: Challenges, Technologies, and Solutions. Electronics 2023, 12, 4604. [Google Scholar] [CrossRef]

- Iavich, M.; Gnatyuk, S.O.; Odarchenko, R.; Bocu, R.; Simonov, S. The novel system of attacks detection in 5G. In Proceedings of the 35th International Conference on Advanced Information Networking and Applications (AINA-2021), Toronto, ON, Canada, 12–14 May 2021; pp. 580–591. [Google Scholar]

- Yang, L.; Shami, A. A Transfer Learning and Optimized CNN Based Intrusion Detection System for Internet of Vehicles. In Proceedings of the ICC 2022—IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; pp. 2774–2779. [Google Scholar]

- Sullivan, S.; Brighente, A.; Kumar, S.A.P.; Conti, M. 5G Security Challenges and Solutions: A Review by OSI Layers. IEEE Access 2021, 9, 116294–116314. [Google Scholar] [CrossRef]

- Salazar, Z.; Nguyen, H.N.; Mallouli, W.; Cavalli, A.R.; de Oca, E.M. 5Greplay: A 5G Network Traffic Fuzzer—Application to Attack Injection. In Proceedings of the ARES 2021: The 16th International Conference on Availability, Reliability and Security, Virtual, 17–20 August 2021; pp. 1–8. [Google Scholar]

- Amponis, G.; Radoglou-Grammatikis, P.; Nakas, G.; Goudos, S.; Argyriou, V.; Lagkas, T.; Sarigiannidis, P. 5G core PFCP intrusion detection dataset. In Proceedings of the 2023 12th International Conference on Modern Circuits and Systems Technologies (MOCAST), Athens, Greece, 28–30 June 2023; pp. 1–4. [Google Scholar]

- Coldwell, C.; Conger, D.; Goodell, E.; Jacobson, B.; Petersen, B.; Spencer, D.; Anderson, M.; Sgambati, M. Machine learning 5G attack detection in programmable logic. In Proceedings of the 2022 IEEE Globecom Workshops (GC Wkshps), Rio de Janeiro, Brazil, 4–8 December 2022; pp. 1365–1370. [Google Scholar]

- Alkasassbeh, M.; Baddar, S.A.-H. Intrusion Detection Systems: A State-of-the-Art Taxonomy and Survey. Arab. J. Sci. Eng. 2023, 48, 10021–10064. [Google Scholar] [CrossRef]

- Kannari, P.R.; Chowdary, N.S.; Biradar, R.L. An anomaly-based intrusion detection system using recursive feature elimination technique for improved attack detection. Theor. Comput. Sci. 2022, 931, 56–64. [Google Scholar] [CrossRef]

- Sharma, B.; Sharma, L.; Lal, C.; Roy, S. Explainable artificial intelligence for intrusion detection in IoT networks: A deep learning based approach. Expert Syst. Appl. 2024, 238, 121751. [Google Scholar] [CrossRef]

- Popoola, S.I.; Imoize, A.L.; Hammoudeh, M.; Adebisi, B.; Jogunola, O.; Aibinu, A.M. Federated Deep Learning for Intrusion Detection in Consumer-Centric Internet of Things. IEEE Trans. Consum. Electron. 2023, 70, 1610–1622. [Google Scholar] [CrossRef]

- Meng, R.; Gao, S.; Fan, D.; Gao, H.; Wang, Y.; Xu, X.; Wang, B.; Lv, S.; Zhang, Z.; Sun, M.; et al. A survey of secure semantic communications. arXiv 2025, arXiv:2501.00001. [Google Scholar]

- Jiang, S.; Zhao, J.; Xu, X. SLGBM: An Intrusion Detection Mechanism for Wireless Sensor Networks in Smart Environments. IEEE Access 2020, 8, 169548–169558. [Google Scholar] [CrossRef]

- Ozkan-Okay, M.; Samet, R.; Aslan, O.; Gupta, D. A Comprehensive Systematic Literature Review on Intrusion Detection Systems. IEEE Access 2021, 9, 157727–157760. [Google Scholar] [CrossRef]

- Di Mauro, M.; Galatro, G.; Fortino, G.; Liotta, A. Supervised feature selection techniques in network intrusion detection: A critical review. Eng. Appl. Artif. Intell. 2021, 101, 104216. [Google Scholar] [CrossRef]

- Qaddoura, R.; Al-Zoubi, A.M.; Faris, H.; Almomani, I. A Multi-Layer Classification Approach for Intrusion Detection in IoT Networks Based on Deep Learning. Sensors 2021, 21, 2987. [Google Scholar] [CrossRef]

- Ali, M.H.; Jaber, M.M.; Abd, S.K.; Rehman, A.; Awan, M.J.; Damaševičius, R.; Bahaj, S.A. Threat Analysis and Distributed Denial of Service (DDoS) Attack Recognition in the Internet of Things (IoT). Electronics 2022, 11, 494. [Google Scholar] [CrossRef]

- Santos, L.; Gonçalves, R.; Rabadão, C.; Martins, J. A flow-based intrusion detection framework for internet of things networks. Clust. Comput. 2023, 26, 37–57. [Google Scholar] [CrossRef]

- de Souza, C.A.; Westphall, C.B.; Machado, R.B.; Sobral, J.B.M.; Vieira, G.d.S. Hybrid approach to intrusion detection in fog-based IoT environments. Comput. Netw. 2020, 180, 107417. [Google Scholar] [CrossRef]

- Keserwani, P.K.; Govil, M.C.; Pilli, E.S.; Govil, P. A smart anomaly-based intrusion detection system for the Internet of Things (IoT) network using GWO–PSO–RF model. J. Reliab. Intell. Environ. 2021, 7, 3–21. [Google Scholar] [CrossRef]

- Sharma, H.S.; Singh, K.J. Intrusion detection system: A deep neural network-based concatenated approach. J. Supercomput. 2024, 80, 13918–13948. [Google Scholar] [CrossRef]

- Parra, G.D.L.T.; Rad, P.; Choo, K.-K.R.; Beebe, N. Detecting Internet of Things attacks using distributed deep learning. J. Netw. Comput. Appl. 2020, 163, 102662. [Google Scholar] [CrossRef]

- Mohamed, D.; Ismael, O. Enhancement of an IoT hybrid intrusion detection system based on fog-to-cloud computing. J. Cloud Comput. 2023, 12, 41. [Google Scholar] [CrossRef]

- Anwer, M.; Khan, S.M.; Farooq, M.U.; Waseemullah. Attack Detection in IoT using Machine Learning. Eng. Technol. Appl. Sci. Res. 2021, 11, 7273–7278. [Google Scholar] [CrossRef]

- Ullah, I.; Mahmoud, Q.H. A Two-Level Flow-Based Anomalous Activity Detection System for IoT Networks. Electronics 2020, 9, 530. [Google Scholar] [CrossRef]

- Farrukh, Y.A.; Wali, S.; Khan, I.; Bastian, N.D. AIS-NIDS: An intelligent and self-sustaining network intrusion detection system. Comput. Secur. 2024, 144, 103982. [Google Scholar] [CrossRef]

- Singh, G.; Khare, N. A survey of intrusion detection from the perspective of intrusion datasets and machine learning techniques. Int. J. Comput. Appl. 2021, 44, 659–669. [Google Scholar] [CrossRef]

- Magán-Carrión, R.; Urda, D.; Diaz-Cano, I.; Dorronsoro, B. Improving the Reliability of Network Intrusion Detection Systems Through Dataset Integration. IEEE Trans. Emerg. Top. Comput. 2022, 10, 1717–1732. [Google Scholar] [CrossRef]

- Keserwani, P.K.; Govil, M.C.; Pilli, E.S. An effective NIDS framework based on a comprehensive survey of feature optimization and classification techniques. Neural Comput. Appl. 2023, 35, 4993–5013. [Google Scholar] [CrossRef]

- Layeghy, S.; Gallagher, M.; Portmann, M. Benchmarking the benchmark—Comparing synthetic and real-world Network IDS datasets. J. Inf. Secur. Appl. 2024, 80, 103689. [Google Scholar] [CrossRef]

- Engelen, G.; Rimmer, V.; Joosen, W. Troubleshooting an Intrusion Detection Dataset: The CICIDS2017 Case Study. In Proceedings of the 2021 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 27 May 2021; pp. 7–12. [Google Scholar]

- Stamatis, C.; Barsanti, K.C. Development and application of a supervised pattern recognition algorithm for identification of fuel-specific emissions profiles. Atmos. Meas. Tech. 2022, 15, 2591–2606. [Google Scholar] [CrossRef]

- Tsourdinis, T.; Makris, N.; Korakis, T.; Fdida, S. AI-driven network intrusion detection and resource allocation in real-world O-RAN 5G networks. In Proceedings of the 30th Annual International Conference on Mobile Computing and Networking (MobiCom ’24), Washington, DC, USA, 18–22 November 2024; pp. 1842–1849. [Google Scholar]

- Dhanushkodi, K.; Thejas, S. AI Enabled Threat Detection: Leveraging Artificial Intelligence for Advanced Security and Cyber Threat Mitigation. IEEE Access 2024, 12, 173127–173136. [Google Scholar] [CrossRef]

- Soliman, H.M.; Sovilj, D.; Salmon, G.; Rao, M.; Mayya, N. RANK: AI-Assisted End-to-End Architecture for Detecting Persistent Attacks in Enterprise Networks. IEEE Trans. Dependable Secur. Comput. 2023, 21, 3834–3850. [Google Scholar] [CrossRef]

- Yadav, N.; Pande, S.; Khamparia, A.; Gupta, D. Intrusion Detection System on IoT with 5G Network Using Deep Learning. Wirel. Commun. Mob. Comput. 2022, 2022, 9304689. [Google Scholar] [CrossRef]

- Farzaneh, B.; Shahriar, N.; Al Muktadir, A.H.; Towhid, M.S. DTL-IDS: Deep transfer learning-based intrusion detection system in 5G networks. In Proceedings of the 2023 19th International Conference on Network and Service Management (CNSM), Niagara Falls, ON, Canada, 30 October–2 November 2023; pp. 1–5. [Google Scholar]

- Zhou, M.-G.; Liu, Z.-P.; Yin, H.-L.; Li, C.-L.; Xu, T.-K.; Chen, Z.-B. Quantum neural network for quantum neural computing. Research 2023, 6, 0134. [Google Scholar] [CrossRef] [PubMed]

- Zhou, M.-G.; Cao, X.-Y.; Lu, Y.-S.; Wang, Y.; Bao, Y.; Jia, Z.-Y.; Fu, Y.; Yin, H.-L.; Chen, Z.-B. Experimental Quantum Advantage with Quantum Coupon Collector. Research 2022, 2022, 9798679. [Google Scholar] [CrossRef]

- Adewole, K.S.; Salau-Ibrahim, T.T.; Imoize, A.L.; Oladipo, I.D.; AbdulRaheem, M.; Awotunde, J.B.; Balogun, A.O.; Isiaka, R.M.; Aro, T.O. Empirical Analysis of Data Streaming and Batch Learning Models for Network Intrusion Detection. Electronics 2022, 11, 3109. [Google Scholar] [CrossRef]

- Umar, M.A.; Chen, Z.; Shuaib, K.; Liu, Y. Effects of feature selection and normalization on network intrusion detection. J. Inf. Technol. Data Manag. 2025, 8, 23–39. [Google Scholar] [CrossRef]

- Algan, G.; Ulusoy, I. Image classification with deep learning in the presence of noisy labels: A survey. Knowl.-Based Syst. 2021, 215, 106771. [Google Scholar] [CrossRef]

- Gupta, A.R.; Agrawal, J. The multi-demeanor fusion based robust intrusion detection system for anomaly and misuse detection in computer networks. J. Ambient Intell. Humaniz. Comput. 2021, 12, 303–319. [Google Scholar] [CrossRef]

- Nabi, F.; Zhou, X. Enhancing intrusion detection systems through dimensionality reduction: A comparative study of machine learning techniques for cyber security. Cyber Secur. Appl. 2024, 2, 100033. [Google Scholar] [CrossRef]

- Dogan, A.; Birant, D. Machine learning and data mining in manufacturing. Expert Syst. Appl. 2021, 166, 114060. [Google Scholar] [CrossRef]

- Belouadah, E.; Popescu, A.; Kanellos, I. A comprehensive study of class incremental learning algorithms for visual tasks. Neural Netw. 2021, 135, 38–54. [Google Scholar] [CrossRef] [PubMed]

- Amponis, G.; Radoglou-Grammatikis, P.; Lagkas, T.; Mallouli, W.; Cavalli, A.; Klonidis, D.; Markakis, E.; Sarigiannidis, P. Threatening the 5G core via PFCP DoS attacks: The case of blocking UAV communications. EURASIP J. Wirel. Commun. Netw. 2022, 2022, 124. [Google Scholar] [CrossRef]

- Mazarbhuiya, F.A.; Alzahrani, M.Y.; Mahanta, A.K. Detecting anomaly using partitioning clustering with merging. ICIC Express Lett. 2020, 14, 951–960. [Google Scholar]

- Samarakoon, S.; Siriwardhana, Y.; Porambage, P.; Liyanage, M.; Chang, S.-Y.; Kim, J.; Kim, J.; Ylianttila, M. 5G-NIDD: A comprehensive network intrusion detection dataset generated over 5G wireless network. arXiv 2022, arXiv:2212.01298. [Google Scholar]

- Farzaneh, B.; Shahriar, N.; Al Muktadir, A.H.; Towhid, S.; Khosravani, M.S. DTL-5G: Deep transfer learning-based DDoS attack detection in 5G and beyond networks. Comput. Commun. 2024, 228, 107927. [Google Scholar] [CrossRef]

- Berei, E.; Khan, M.A.; Oun, A. Machine Learning Algorithms for DoS and DDoS Cyberattacks Detection in Real-Time Environment. In Proceedings of the 2024 IEEE 21st Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 6–9 January 2024; pp. 1048–1049. [Google Scholar]

- Ghani, H.; Salekzamankhani, S.; Virdee, B. Critical analysis of 5G networks’ traffic intrusion using PCA, t-SNE, and UMAP visualization and classifying attacks. In Proceedings of Data Analytics and Management; Springer: Singapore, 2024; pp. 375–389. [Google Scholar]

- Vu, L.; Nguyen, Q.U.; Nguyen, D.N.; Hoang, D.T.; Dutkiewicz, E. Deep Transfer Learning for IoT Attack Detection. IEEE Access 2020, 8, 107335–107344. [Google Scholar] [CrossRef]

- Hossain, S.; Senouci, S.-M.; Brik, B.; Boualouache, A. A privacy-preserving Self-Supervised Learning-based intrusion detection system for 5G-V2X networks. Ad Hoc Netw. 2024, 166, 103674. [Google Scholar] [CrossRef]

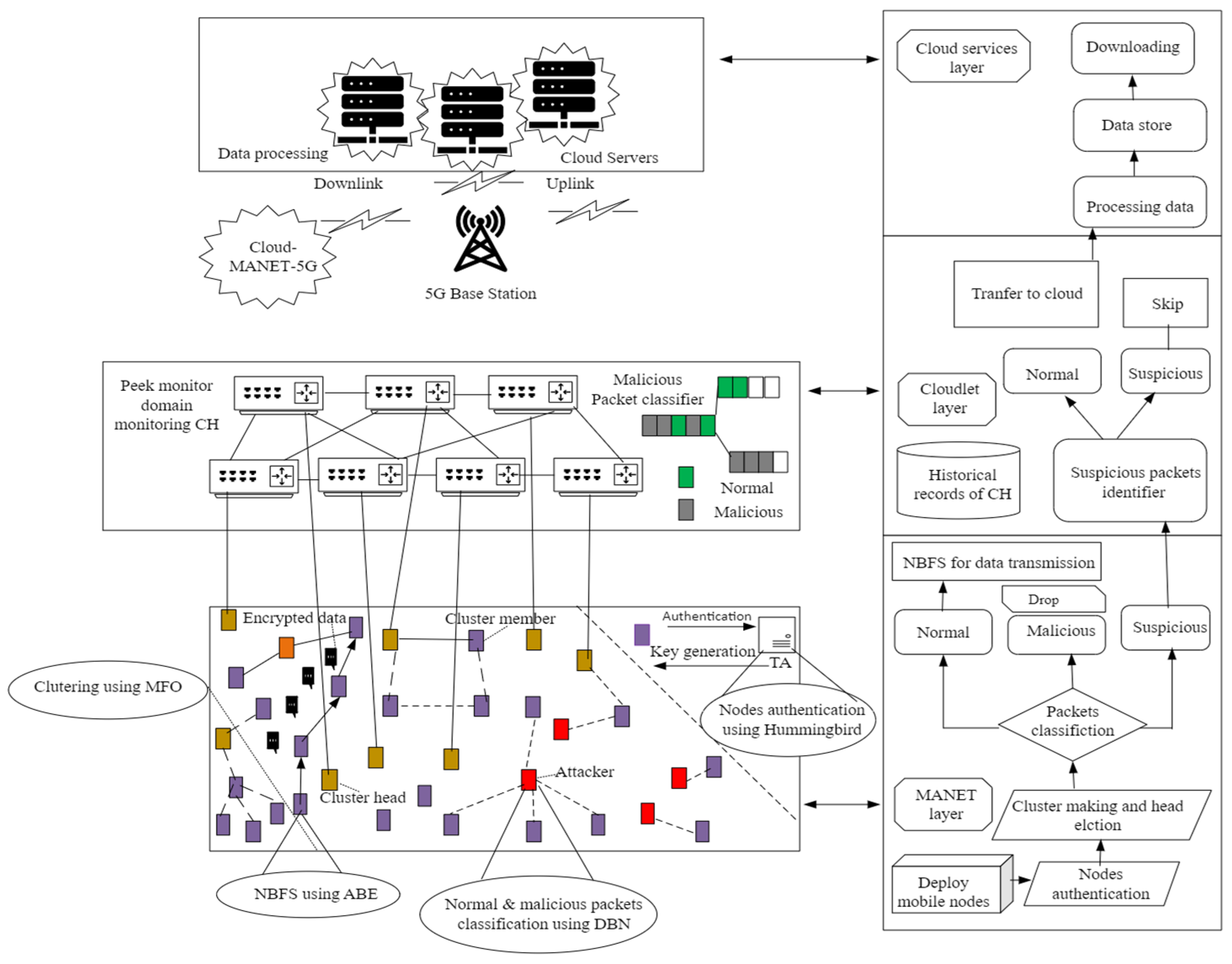

- Alghamdi, S.A. Novel trust-aware intrusion detection and prevention system for 5G MANET–Cloud. Int. J. Inf. Secur. 2022, 21, 469–488.

- Sousa, B.; Magaia, N.; Silva, S. An Intelligent Intrusion Detection System for 5G-Enabled Internet of Vehicles. Electronics 2023, 12, 1757.

- Ciaburro, G.; Iannace, G. Machine Learning-Based Algorithms to Knowledge Extraction from Time Series Data: A Review. Data 2021, 6, 55.

- Jurkiewicz, P. Flow-models: A framework for analysis and modeling of IP network flows. SoftwareX 2022, 17, 100929.

- Larin, D.V.; Get’man, A.I. Tools for Capturing and Processing High-Speed Network Traffic. Program. Comput. Softw. 2022, 48, 756–769.

- Sheikhi, S.; Kostakos, P. DDoS attack detection using unsupervised federated learning for 5G networks and beyond. In Proceedings of the 2023 Joint European Conference on Networks and Communications and 6G Summit (EuCNC/6G Summit), Gothenburg, Sweden, 6–9 June 2023; pp. 442–447.

- Reddy, R.; Gundall, M.; Lipps, C.; Schotten, H.D. Open source 5G core network implementations: A qualitative and quantitative analysis. In Proceedings of the 2023 IEEE International Black Sea Conference on Communications and Networking (BlackSeaCom), Istanbul, Turkey, 4–7 July 2023; pp. 253–258.

- Rouili, M.; Saha, N.; Golkarifard, M.; Zangooei, M.; Boutaba, R.; Onur, E.; Saleh, A. Evaluating Open-Source 5G SA Testbeds: Unveiling Performance Disparities in RAN Scenarios. In Proceedings of the NOMS 2024—2024 IEEE Network Operations and Management Symposium, Seoul, Republic of Korea, 6–10 May 2024; pp. 1–6.

- Liu, X.; Wang, X.; Jia, J.; Huang, M. A distributed deployment algorithm for communication coverage in wireless robotic networks. J. Netw. Comput. Appl. 2021, 180, 103019.

- Nguyen, L.G.; Watabe, K. A Method for Network Intrusion Detection Using Flow Sequence and BERT Framework. In Proceedings of the ICC 2023—IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; pp. 3006–3011.

- Büyükkeçeci, M.; Okur, M.C. A Comprehensive Review of Feature Selection and Feature Selection Stability in Machine Learning. Gazi Univ. J. Sci. 2023, 36, 1506–1520.

- Dissanayake, K.; Md Johar, M.G. Comparative study on heart disease prediction using feature selection techniques on classification algorithms. Appl. Comput. Intell. Soft Comput. 2021, 2021, 5581806.

- Singh, D.; Singh, B. Investigating the impact of data normalization on classification performance. Appl. Soft Comput. 2020, 97, 105524.

- Yuliana, H.; Iskandar; Hendrawan. Comparative Analysis of Machine Learning Algorithms for 5G Coverage Prediction: Identification of Dominant Feature Parameters and Prediction Accuracy. IEEE Access 2024, 12, 18939–18956.

- Nahum, C.V.; Pinto, L.D.N.M.; Tavares, V.B.; Batista, P.; Lins, S.; Linder, N.; Klautau, A. Testbed for 5G Connected Artificial Intelligence on Virtualized Networks. IEEE Access 2020, 8, 223202–223213.

- Mehdi, A.; Bali, M.K.; Abbas, S.I. Unleashing the Potential of Grafana: A Comprehensive Study on Real-Time Monitoring and Visualization. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–5.

- Abbasi, A.; Javed, A.R.; Chakraborty, C.; Nebhen, J.; Zehra, W.; Jalil, Z. ElStream: An Ensemble Learning Approach for Concept Drift Detection in Dynamic Social Big Data Stream Learning. IEEE Access 2021, 9, 66408–66419.

- Hamza, M.A.; Ejaz, U.; Kim, H.-C. Cyber5Gym: An Integrated Framework for 5G Cybersecurity Training. Electronics 2024, 13, 888.

- Al-Fuhaidi, B.; Farae, Z.; Al-Fahaidy, F.; Nagi, G.; Ghallab, A.; Alameri, A. Anomaly-Based Intrusion Detection System in Wireless Sensor Networks Using Machine Learning Algorithms. Appl. Comput. Intell. Soft Comput. 2024, 2024, 2625922.

- Pérez-Hernández, F.; Tabik, S.; Lamas, A.; Olmos, R.; Fujita, H.; Herrera, F. Object Detection Binary Classifiers methodology based on deep learning to identify small objects handled similarly: Application in video surveillance. Knowl.-Based Syst. 2020, 194, 105590.

- Dhanke, J.; Patil, R.N.; Kumari, I.; Gupta, S.; Hans, S.; Kumar, K. Comparative study of machine learning algorithms for intrusion detection. Int. J. Intell. Syst. Appl. Eng. 2023, 12, 647–653.

- Gupta, S.; Kumar, S.; Singh, A. A hybrid intrusion detection system based on decision tree and support vector machine. In Proceedings of the 2020 IEEE 5th International Conference on Computing Communication and Automation (ICCCA 2020), Greater Noida, India, 30–31 October 2020; pp. 510–515.

- Lansky, J.; Ali, S.; Mohammadi, M.; Majeed, M.K.; Karim, S.H.T.; Rashidi, S.; Hosseinzadeh, M.; Rahmani, A.M. Deep Learning-Based Intrusion Detection Systems: A Systematic Review. IEEE Access 2021, 9, 101574–101599.

- Awotunde, J.B.; Folorunso, S.O.; Imoize, A.L.; Odunuga, J.O.; Lee, C.-C.; Li, C.-T.; Do, D.-T. An Ensemble Tree-Based Model for Intrusion Detection in Industrial Internet of Things Networks. Appl. Sci. 2023, 13, 2479.

- Akbar, R.; Zafer, A. Next-Gen Information Security: AI-Driven Solutions for Real-Time Cyber Threat Detection in Cloud and Network Environments. J. Cybersecur. Res. 2024, 12, 123–145.

- Rana, P.; Batra, I.; Malik, A.; Imoize, A.L.; Kim, Y.; Pani, S.K.; Goyal, N.; Kumar, A.; Rho, S. Intrusion detection systems in cloud computing paradigm: Analysis and overview. Complexity 2022, 2022, 3999039.

- Teuwen, K.T.; Mulders, T.; Zambon, E.; Allodi, L. Ruling the Unruly: Designing Effective, Low-Noise Network Intrusion Detection Rules for Security Operations Centers. arXiv 2025, arXiv:2501.09808.

- Jimoh, R.G.; Imoize, A.L.; Awotunde, J.B.; Ojo, S.; Akanbi, M.B.; Bamigbaye, J.A.; Faruk, N. An Enhanced Deep Neural Network Enabled with Cuckoo Search Algorithm for Intrusion Detection in Wide Area Networks. In Proceedings of the 2022 5th Information Technology for Education and Development (ITED), Abuja, Nigeria, 1–3 November 2022; pp. 1–5.

- AbdulRaheem, M.; Oladipo, I.D.; Imoize, A.L.; Awotunde, J.B.; Lee, C.-C.; Balogun, G.B.; Adeoti, J.O. Machine learning assisted snort and zeek in detecting DDoS attacks in software-defined networking. Int. J. Inf. Technol. 2024, 16, 1627–1643.

| Reference | Methodology | Findings | Limitations | Future Scope |

|---|---|---|---|---|

| [11] | Multi-stage ML-based NIDS; oversampling to reduce training size; comparison of feature selection methods (information gain vs. correlation); hyperparameter tuning; evaluated on CICIDS 2017 and UNSW-NB 2015. | Training samples were reduced by up to 74%, features by up to 50%, with a detection accuracy of over 99%, and a 1–2% improvement over recent works. | Evaluation is limited to specific datasets; multi-stage processing may add computational overhead. | Real-time implementation, broader dataset testing, integration of deep learning, and further optimization. |

| [12] | Comparative study of ML algorithms (LDA, CART, RF) for IDSs across various domains (fog computing, IoT, big data, smart city, 5G) using the KDD-CUP dataset. | Measured and compared the efficiency of different ML models in detecting intrusions. | Evaluation is limited to the KDD-CUP dataset and selected ML algorithms; it may not reflect the latest advancements. | Extend research to diverse, real-world datasets; incorporate advanced ML techniques; explore applicability to emerging network environments. |

| [13] | Review of ML algorithms (SL, USL, SSL, RL, DL) and their applications in Industry 4.0. | ML is key for processing diverse digital data and enabling smart applications. | Broad scope; limited empirical depth. | Encourage domain-specific studies and tackle implementation challenges. |

| [14] | Analyzed DoS/DDoS impact on 5G slices; created a dataset from a simulated testbed; evaluated a bidirectional LSTM (SliceSecure). | DoS/DDoS attacks degrade slice bandwidth and latency; SliceSecure achieved 99.99% accuracy. | Based on simulation; may not reflect real-world conditions. | Collect real-world data; further refine detection models. |

| [15] | Proposed an ML-based IDS integrated into the 5G core; compared ML and DL algorithms using the CICIDS2017 and CSE-CIC-IDS-2018 datasets. | GB achieved 99.3% (secure) and 96.4% (attack) accuracy. | Limited to two benchmark datasets; lacks real-world implementation. | Extend to real-world 5G environments; explore additional datasets and algorithms. |

| [16] | Hybrid BiLSTM using wind speed and climate indices; three-stage feature selection (partial auto-/cross-correlation, RReliefF, Boruta-RF) with Bayesian tuning; benchmarked vs. LSTM, RNN, multilayer perceptron (MLP), RF. | Achieved best performance with 76.6–84.8% of errors ≤ 0.5 m/s; lowest RMSE (9.6–23.8%) and MAPE (8.8–21.5%). | Not explicitly discussed; may require further validation across diverse conditions. | Real-time deployment; broader testing across various sites; scalability and adaptability improvements. |

| [19] | TL-enabled edge-CNN framework pre-trained on an image dataset and fine-tuned with limited device data; joint energy–latency optimization via uploading decision and bandwidth allocation. | Achieved ~85% prediction accuracy with ~1% of model parameters uploaded (32× compression ratio) on ImageNet. | Evaluation limited to ImageNet; potential challenges in generalizing to diverse industrial scenarios. | Validate with real-world industrial data; further optimize energy–latency trade-offs; extend the framework to other applications and datasets. |

| [20] | 5G virtualization architecture; TL-enhanced AdaBoost for IoT classification; sub-channel reuse for cellular and D2D links. | High classification accuracy; improved spectrum utilization through extensive resource reuse. | Focuses solely on resource management; limited real-world validation. | Extend to real-world 5G IoT scenarios; explore further optimization strategies. |

| [21] | DTL with weight transfer and fine-tuning for network traffic classification in 5G IoT systems with scarce labeled data. | With only 10% labeled data, accuracy nearly matches that of full-data training. | Validation is limited to specific datasets/scenarios; computational demands of fine-tuning may be challenging. | Extend to diverse 5G IoT scenarios and reduce human intervention in model training. |

| [23] | SDN-based network slicing architecture for industrial IoT; DDPG-based slice optimization algorithm; TL-based multiagent DDPG for LoRa gateways. | Enhanced QoS, energy efficiency, and reliability; accelerated training process across multiple gateways. | Real-world scalability and deployment challenges are not fully addressed. | Validate in real deployments; further optimize scalability and integration with diverse IoT environments. |

| [24] | FortisEDoS: DTL with CG-GRU (graph + RNN) for EDoS detection in B5G slicing. | Outperforms baselines in detection and efficiency. | Not explicitly discussed; potential real-world deployment challenges. | Validate in real-world scenarios; enhance scalability and integration with other security measures. |

| [25] | FortisEDoS: DTL with CG-GRU (graph + RNN) for EDoS detection in cloud-native network slicing; transfer learning adapts detection across slices. | CG-GRU achieves a detection rate of over 92% with low complexity; transfer learning yields a sensitivity of over 91% and speeds up training by at least 61%; the framework also provides explainable decisions. | False alarms may trigger unnecessary scaling, impacting SLAs; the framework lacks a mitigation strategy. | Develop intelligent resource provisioning, improve VNFs/slice selection, and integrate FL for privacy-preserving mitigation. |

| [27] | Fusion of statistical importance (standard deviation and difference of mean and median) for feature selection in DNN-based IDSs; evaluated on NSL-KDD, UNSW-NB15, and CIC-IDS-2017. | Enhanced performance (accuracy, precision, recall, F-score, and FPR) and reduced execution time; improvements statistically validated. | May be dataset-specific; further validation is required across diverse environments. | Extend to online/real-time IDSs and explore integration with other DL architectures. |

| [30] | Evaluated multiple ML techniques using Weka on the CICIDS2017 dataset; compared full vs. reduced attribute sets via CFS and Zeek-based extraction. | Tree-based methods (PART, J48, RF) achieved near-perfect F1 scores (≈0.999 full, 0.990 with 6 CFS attributes, 0.997 with 14 Zeek attributes) with fast execution. | Findings are based solely on CICIDS2017; potential dataset dependency. | Analyze other Zeek logs for additional features and validate models on different IDS datasets. |

| [31] | Proposed three DL models—early fusion, late fusion, and late ensemble—that use feature fusion with fully connected networks; evaluated on the UNSW-NB15 and NSL-KDD datasets. | Late-fusion and late-ensemble models outperform early-fusion and state-of-the-art methods with improved generalization and robustness against class imbalance. | Limited exploration of long-term dependencies and explainability. | Explore fusion with recurrent units for long dependencies and integrate post hoc Explainable AI (XAI) to clarify attribute contributions. |

| [32] | Proposed the TestCloudIDS dataset (15 DDoS variants, 76 features) and SparkShield, a Spark-based IDS; evaluated on UNSW-NB15, NSL-KDD, CICIDS2017, and TestCloudIDS. | Achieves improved threat classification with recent attack patterns; highlights the inadequacy of older datasets for zero-day attacks. | Based on simulated cloud data; real-world validation is needed. | Expand dataset, test on real networks, and update with evolving attack strategies. |

| [33] | Orchestrator in RAN via Docker that dynamically selects adaptive ML/DL models (DT, RF, MLP, LLM) trained on CICDDOS2019 for real-time security. | Enhanced dynamic security with effective real-time attack detection/mitigation and improved accuracy via UE feedback. | Evaluated in a simulated environment; lacks real-world testbed validation. | Integrate into a real 5G testbed; refine model selection and mitigation strategies. |

| [34] | Reviewed post-2015 studies on DTL-based IDSs, analyzing datasets, techniques, and evaluation metrics. | DTL improves IDSs by transferring knowledge, addressing data scarcity, and enhancing performance. | Requires labeled data, faces overfitting, adversarial attacks, and high complexity. | Improve robustness, efficiency, and security with adversarial-resistant models and blockchain integration. |

| [35] | A comprehensive review of deep RL-based IDSs, categorizing studies, analyzing datasets, techniques, and evaluation metrics. | Deep RL improves IDS accuracy, adaptability, and decision-making across IoT, ICSs, and smart grids. | Scalability issues, real-time constraints, adversarial vulnerabilities, and dataset limitations. | Enhance interpretability, robustness, energy efficiency, and integration with edge/fog computing. |
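
Several entries in the table above rely on feature selection, and [11] compares information gain against correlation as the selection criterion. The following is a minimal sketch of information-gain ranking for discrete features, not the procedure used in any of the cited studies. The feature names (`syn_flag`, `protocol`) and the toy records are hypothetical and chosen only to make the two extreme cases visible.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature, labels):
    """Reduction in label entropy obtained by splitting on a discrete feature."""
    n = len(labels)
    groups = {}
    for value, label in zip(feature, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


def rank_features(columns, labels):
    """Return feature names sorted by information gain, highest first."""
    scores = {name: information_gain(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Toy flow records (hypothetical values, not drawn from any dataset).
labels = ["attack", "attack", "benign", "benign"]
columns = {
    "syn_flag": [1, 1, 0, 0],                  # separates the classes perfectly
    "protocol": ["tcp", "udp", "tcp", "udp"],  # carries no class information
}
ranking = rank_features(columns, labels)
```

In this toy case `syn_flag` recovers the full one bit of label entropy while `protocol` recovers none, so a selector that keeps the top-ranked features would drop `protocol`. Continuous flow features would need to be discretized first, which this sketch does not attempt.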

| 5G Domain | ML Techniques | TL Techniques | Dedicated Attack Types |

|---|---|---|---|

| eMBB | DL, NN, SVM, KNN | TL for anomaly detection, TL for intrusion detection | DoS, eavesdropping, Man-in-the-Middle (MitM), data injection |

| mMTC | RF, DT, RL | TL for device authentication, TL for network traffic analysis | Device impersonation, jamming attacks, data injection, Sybil attacks |

| uRLLC | CNN, RNN, Autoencoders (AEs) | TL for signal processing, TL for traffic prediction | Timing attacks, spoofing, signal interference, jamming |

| Layer Name | Protocol Stack Component | Security Threats and Risks | Mitigation Strategies | Challenges and Future Considerations |

|---|---|---|---|---|

| 1 Physical | Wireless signal and hardware layer | (i) Power leakage leading to private data exposure. (ii) Eavesdropping on wireless signals. (iii) Injection of fabricated data. (iv) Malicious signal amplification. (v) Expansion of attack surface due to increased network entry points. | (i, ii) Power control, beamforming, and clustering. (ii, iii) Defining secure transmission zones, implementing device-to-device (D2D) communication, and leveraging Physical Layer Security (PLS). (iv) No established countermeasure identified. (v) Continuous network monitoring and threat detection. | (i–v) Prioritizing research on the most secure 5G physical layer technologies over fragmented explorations of multiple approaches. |

| 2 Data link | Frame transmission and MAC security | (i) Exploitation of IEEE 802.1 security gaps using penetration tools. | (i) Firmware updates, ML-IDS, and Received Signal Strength (RSS)-based security mechanisms. | (i) Firmware updates may not always be feasible; ML-IDS has training inefficiencies, and RSS-based methods are susceptible to noise interference. |

| 3 Network | Routing and packet forwarding | (i) Breaches in data confidentiality and integrity, along with susceptibility to replay attacks. | (i) Encryption via IPsec. | (i) IPsec alone does not ensure complete end-to-end security, requiring additional protection across other layers. |

| 4 Transport | Data flow control and transmission security | (i) DoS attacks, unauthorized rule modifications, and insertion of malicious policies in SDN controllers. | (i) Embedding security measures within the SDN architecture. | (i) A practical, widely accepted SDN security framework remains underdeveloped. |

| 5 Session | Authentication and connection management | (i) Session hijacking and interception via plaintext credentials. (ii) Security flaws in NetBIOS leading to unauthorized resource sharing. (iii) Identity exposure, DoS, and interception vulnerabilities in authentication protocols. | (i) No universal countermeasure established. (ii) Disabling null sessions and enforcing strong admin credentials. (iii) Adoption of improved authentication protocols. | (i, ii) Additional research required to address session-layer security risks. (iii) Enhanced authentication methods improve security but introduce computational overhead. |

| 6 Presentation | Data formatting and encoding | (i) Concealing malicious payloads using multimedia files. (ii) Buffer overflow due to insufficient input validation. (iii) Format string vulnerabilities leading to unauthorized code execution. | (i, ii, iii) Implementing rigorous input/output validation at the application and presentation layers. (i, ii, iii) Periodic updates to cryptographic algorithms. | (i, ii, iii) Limited recent research on presentation layer security mechanisms. |

| 7 Application | User interface and data services | (i) Distributed Denial of Service (DDoS) targeting blockchain-based protocols. (ii) Exploitation of transaction malleability in blockchain systems. (iii) Manipulated multimedia uploads in connected vehicles. | (i) No widely adopted countermeasure. (ii) Implementation of Segregated Witness (SegWit). (iii) Use of public key cryptography. | (i, ii) Blockchain scalability challenges necessitate stronger cryptographic functions. (iii) The effectiveness of proposed defenses needs further evaluation. |

| Reference | Dataset / Tool | Description | Limitations | Future Scope |

|---|---|---|---|---|

| [19] | 5G Threat Intelligence Dataset | Network traffic logs for 5G services, including various attack scenarios such as DoS and DDoS attacks, are labeled for enhanced threat detection. | It may not represent all attack vectors; it is limited to specific scenarios and conditions. | Integration of dynamic attack patterns, multivector attacks, and more complex network environments. |

| [22] | Green Computing in 5G IoT | Uses improved AdaBoost and resource reuse for data classification and optimization in 5G IoT. | Limited to resource management, lacks real-world validation, and ignores energy trade-offs. | Focus on real-world testing, energy-efficient methods, and scalability in IoT. |

| [48] | 5greplay Tool | A tool designed to fuzz 5G network traffic by injecting attack vectors to assess network vulnerabilities. | Limited to specific attack scenarios and experimental setups; lacks extensive real-world evaluation. | Extend to real-world 5G IoT scenarios; explore further optimization strategies. |

| [49] | 5GC PFCP Intrusion Detection Dataset | Labeled dataset for AI-powered intrusion detection in 5G CNs, focusing on PFCP-based cyberattacks. Includes pcap files and TCP/IP flow statistics. | Limited to four PFCP attack types, may not cover all real-world threats, and requires further validation in diverse 5G environments. | Expansion to more attack scenarios, real-world validation, and integration with advanced AI-based security frameworks. |

| [50] | ML-5G attack detection | Uses ML on programmable logic to detect 5G network attacks in real time. | Limited evaluation on a specific hardware platform; experimental setup only. | Broader real-world testing, extended attack scenarios, and deeper 5G integration. |

| [51] | 5G Traffic Anomaly Detection Dataset | Includes traffic anomalies in 5G networks, such as attacks exploiting vulnerabilities like eavesdropping or DDoS. | It may not capture all attack vectors or reflect the latest network architecture changes. | Addition of new vulnerabilities and real-time traffic anomalies, especially for emerging 5G applications. |

| [52] | 5G Cybersecurity Dataset | The dataset contains normal and attack traffic patterns for 5G networks, including DoS and DDoS attacks. | Limited to a specific set of attack types and lacks real-world data diversity. | Expansion to include more diverse attack types, real-world traffic, and cross-domain scenarios. |

| [53] | 5G IoT Security Dataset | Focuses on IoT devices in 5G networks, including malicious activities such as botnets and unauthorized access. | Primarily simulated data, which may not fully capture real-world IoT device behavior. | Collection of real-world IoT device data, more diverse attack patterns, and adaptive models for evolving threats. |

| [54] | SPEC5G: A Dataset for 5G Cellular Network Protocol Analysis | The dataset contains 3,547,586 sentences with 134M words from 13,094 cellular network specifications and 13 online websites to automate 5G protocol analysis. | Limited to textual data; may not cover all aspects of 5G network security. | Integration with other data types, such as network traffic data, to provide a more comprehensive analysis. |

| [55] | Secure SemCom Dataset | Explores security challenges in SemCom and mitigation techniques like adversarial training, cryptography, and blockchain. | High computational complexity of encryption and differential privacy, evolving backdoor attacks, challenges in smart contract integration, and vulnerability to semantic adversarial attacks. | Development of dynamic data cleaning, optimized encryption techniques, multi-strategy backdoor defense, smart contract-enabled SemCom, and robust countermeasures using semantic fingerprints. |

| Feature | HIDS | NIDS | WIDS | NBA |

|---|---|---|---|---|

| Components | Agent (software, inline); management server (MS): 1–n; database server (DS): optional | Sensor (inline/passive); MS: 1–n; DS: optional | Sensor (passive); MS: 1–n; DS: optional | Sensor (mostly passive); MS: 1–n (optional); DS: optional |

| Detection scope | Single host | Network segment or subnet | WLAN, wireless clients | Network subnets and hosts |

| Architecture | Managed or standard network | Managed network | Managed or standard network | Managed or standard network |

| Strengths | Effective in analyzing encrypted end-to-end communications | Broad analysis of application protocols (APs) | High accuracy due to narrow focus; uniquely monitors wireless protocols | Excellent for detecting reconnaissance scans, malware infections, and DoS attacks |

| Technology limitations | Accuracy challenges due to lack of context, host resource usage, security conflicts | Cannot detect wireless protocols; delays in reporting; prone to false positives/negatives | Vulnerable to physical jamming; limited security for wireless protocols | Batch processing causes delays in detection; lacks real-time monitoring |

| Security capabilities | Monitors system calls, file system activities, and traffic | Monitors hosts, OS, APs, and network traffic | Tracks wireless protocol activities and devices | Inspects host services and protocol traffic (IP, TCP, UDP) |

| Detection methodology | Combination of signature and anomaly detection | Primarily signature detection, with anomaly and specification-based detection | Predominantly anomaly detection, supplemented by signature- and specification-based methods | Major reliance on anomaly detection, incorporating specification-based methods |
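
The last row of the table above distinguishes signature-based from anomaly-based detection. The sketch below contrasts the two in their simplest form, under stated assumptions: the signature patterns, the packets-per-second baseline, and the three-standard-deviation threshold are all hypothetical and are not taken from any of the tools or studies discussed here.

```python
import statistics

# Hypothetical signatures: byte patterns assumed to indicate a known attack.
SIGNATURES = {
    "sql_injection": b"' OR 1=1",
    "path_traversal": b"../../",
}


def signature_match(payload):
    """Signature-based detection: names of known patterns found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern in payload]


def is_anomalous(value, baseline, threshold=3.0):
    """Anomaly-based detection: flag a value that lies more than `threshold`
    sample standard deviations from the mean of benign baseline observations."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold


# Hypothetical benign packets-per-second measurements for one host.
baseline_pps = [100, 104, 98, 101, 97, 103, 99, 102]

known_attack = signature_match(b"GET /index.php?id=1' OR 1=1 --")
clean_request = signature_match(b"GET /index.html")
normal_rate = is_anomalous(105, baseline_pps)
flood_rate = is_anomalous(950, baseline_pps)
```

The signature check only fires on patterns it already knows, whereas the rate check can flag a flood it has never seen but depends on how representative the baseline is. This trade-off is why the table lists combined methodologies for most IDS types.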

| References | Method | Description | Accuracy |

|---|---|---|---|

| [59] | Sparse Convolutional Network | Intrusion classification with evolutionary techniques using an IGA-BP autoencoder model in MATLAB | 98.98% |

| [59] | Stacked Autoencoder (SAE) | DL-based NIDS for IEEE 802.11 networks | 98.66% |

| [60] | IP Flow-based IDS | Real-time intrusion detection using flow features, outperforming Snort and Zeek | Near perfect |

| [61] | Fog-layer NIDS | Hybrid DNN-kNN model for low resource consumption | 99.77% (NSL-KDD), 99.85% (CICIDS2017) |

| [62] | Efficient IDS using GWO and PSO | Feature selection using Grey Wolf and Particle Swarm Optimization with RF | 99.66% |

| [63] | DL-based NIDS | Concatenated CNN models (VGG16, VGG19, Xception) for intrusion detection | 96.23% (UNSW-NB15), 99.26% (CIC DDoS 2019) |

| [64] | Multilayer DL-NIDS | Two-stage detection process achieving improved results | G-mean: 78% |

| [65] | Hybrid CNN-LSTM System | Device-level phishing and cloud-based botnet detection | >94% |

| [66] | Neural Networks (NNs) Optimized by Genetic Algorithms | Enhanced fog computing-based intrusion detection with reduced execution time | Not specified |

| [67] | ML-based Framework (SVM, GBM, RF) | RF for detecting malicious traffic in the NSL-KDD dataset | 85.34% |

| [68] | Two-level Anomaly Detection | Abnormal traffic detection using DT and RF | 99.9% |
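
The table above reports mostly accuracy, with one entry ([64]) reporting G-mean instead. As a brief illustration of why the two can diverge, the sketch below computes both from a binary confusion matrix, using the standard definition of G-mean as the geometric mean of the true positive rate and the true negative rate. The counts are hypothetical and describe a heavily imbalanced test set, not results from any cited study.

```python
import math


def binary_metrics(tp, fn, tn, fp):
    """Accuracy, per-class rates, and G-mean from binary confusion-matrix counts."""
    total = tp + fn + tn + fp
    tpr = tp / (tp + fn)  # true positive rate (recall on the attack class)
    tnr = tn / (tn + fp)  # true negative rate (recall on the benign class)
    return {
        "accuracy": (tp + tn) / total,
        "tpr": tpr,
        "tnr": tnr,
        "g_mean": math.sqrt(tpr * tnr),
    }


# Hypothetical test set: 990 benign flows and 10 attack flows,
# with a detector that misses 8 of the 10 attacks.
metrics = binary_metrics(tp=2, fn=8, tn=988, fp=2)
```

With these counts the accuracy is 0.99 even though only 2 of 10 attacks are detected, while the G-mean falls below 0.5 because it penalizes the low recall on the minority class. Accuracy figures from differently balanced datasets are therefore not directly comparable.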

| NIDS | Strengths | Limitations |

|---|---|---|

| Snort | Lightweight intrusion detection system with strong industry adoption; regular updates, extensive feature set, and multiple administrative front-ends; comprehensive documentation with active community support; proven reliability with thorough testing and a simple deployment process. | Lacks an intuitive GUI; the administrative console may be challenging to use; may drop packets at traffic rates of 100–200 Mbps, before the processing limit of a single CPU is reached. |

| Suricata | Multi-threaded processing enables high-speed traffic analysis, leveraging hardware acceleration for network traffic inspection. It supports LuaJIT scripting for more efficient threat detection and logs additional network data, including TLS/SSL certificates, HTTP requests, and DNS queries. Additionally, it is capable of detecting file downloads. | Higher memory and CPU consumption compared to Snort. |

| Bro-IDS (Zeek) | Implements both SIDS and AIDS; uses advanced signature detection techniques; offers high-level network traffic analysis; retains historical data for threat analysis and correlation, making it suitable for high-speed networks. | Only runs on UNIX-based operating systems, lacks a built-in GUI, primarily relies on log files, and requires expert-level knowledge for setup and configuration. |

| Kismet | Can expand capabilities to different network types via plugins; supports channel hopping for detecting multiple networks; remains undetectable while monitoring wireless packets; a well-maintained open-source tool for wireless monitoring; enables real-time capture and live streaming over HTTP. | Cannot directly retrieve IP addresses; restricted to wireless network monitoring. |

| OpenWIPS-ng | Modular architecture with plugin support for extended functionality; designed for easy deployment by non-experts; enhanced detection capabilities due to support for multiple sensors. | Limited to wireless networks; lacks encrypted communication between sensors and servers; less popular, with minimal documentation and community backing; still underdeveloped compared with other NIDSs. |

| Security Onion | Highly flexible security monitoring solution; integrates real-time analysis with GUI support via Sguil; simple installation with customizable configurations; regular updates to enhance security levels. | It inherits certain limitations from its integrated tools. Initially, it functions as an IDS and requires additional configuration to work as an IPS. |

| Sagan | Optimized for real-time log analysis with a multi-threaded architecture; supports various log formats and normalization techniques; capable of geolocating IP addresses; distributes processing across multiple servers, enabling efficient resource utilization with lightweight CPU and memory usage; actively developed and easy to install. | Primarily focused on log analysis rather than direct intrusion detection. |

| Approach | Objective | Challenges | Key Strengths |

|---|---|---|---|

| DL-based AI-driven network threat detection. | Strengthening threat identification in IoT networks. | Computational demands are high. | Achieves high precision in real-time threat identification. |

| Adversarial training for improved intrusion detection. | Enhancing detection efficiency against sophisticated threats. | Susceptible to adversarial manipulations. | Increased robustness against evolving security threats. |

| XAI for Industry 5.0 cybersecurity. | Boosting interpretability and transparency in AI-based security solutions. | Difficulties in implementing explainability frameworks. | Provides clear AI-driven insights for cybersecurity decisions. |

| AI-driven protection framework for cyber threat intelligence. | Delivering comprehensive defense mechanisms for AI workloads. | Struggles with novel and evolving threat patterns. | Adaptable and scalable for diverse environments. |

| AI-powered automated architecture for continuous attack detection. | Enhancing real-time attack detection in enterprise systems. | Requires high-quality and extensive data for optimal functioning. | Reduces manual workload while improving detection efficiency. |

| Transformer-based AI for social media threat monitoring. | Detecting emerging cybersecurity risks on platforms like Twitter. | Effectiveness depends on the volume and quality of textual data. | Efficient at analyzing and processing extensive text-based datasets. |

| Federated learning-based detection of adversarial threats. | Improving adversarial attack identification in federated networks. | Resource-intensive nature of federated learning. | Ensures data confidentiality while bolstering security in distributed systems. |

| Multi-domain Trojan identification through domain adaptation. | Strengthening Trojan malware detection across diverse environments. | Complex adaptation process when applied to multiple domains. | High accuracy in identifying cross-domain Trojans. |

| Technique | Architecture | Advantages | Limitations | Applications |

|---|---|---|---|---|

| Generative Architectures | USL, dynamically trained on raw data | Learns without labeled data, flexible for different tasks | Requires large data, complex optimization | Data synthesis, anomaly detection, SSL |

| Autoencoder (AE) | Encoder–decoder network | Effective for dimensionality reduction and feature learning | Sensitive to noisy inputs, requires careful tuning | Data compression, anomaly detection, feature extraction |

| Stacked Autoencoder (SAE) | Deep AE with multiple hidden layers | Captures hierarchical feature representations | High computational cost, prone to overfitting | Intrusion detection, image recognition, speech processing |

| Sparse Autoencoder (SAE) | AE with sparsity constraints | Reduces redundant features, improves feature learning | Needs careful selection of sparsity constraints | Intrusion detection, compressed sensing, representation learning |

| Denoising Autoencoder (DAE) | AE trained with corrupted inputs | Learns robust feature representations | Requires noise level adjustment | Noise reduction, speech enhancement, anomaly detection |

| Restricted Boltzmann Machine (RBM) | A probabilistic model with two layers | Efficient feature learning, suitable for pre-training deep networks | Slow convergence, complex training process | Feature extraction, recommendation systems, anomaly detection |

| Deep Belief Network (DBN) | Stacked RBMs trained layer-wise | Fast learning, effective feature extraction | Computationally intensive, needs large datasets | Pattern recognition, speech recognition, cybersecurity |

| Recurrent Neural Network (RNN) | Sequential network with loops | Captures temporal dependencies | Prone to vanishing gradient problem | Time-series forecasting, speech recognition, text processing |

| Long Short-Term Memory (LSTM) | RNN variant with memory cells | Handles long-term dependencies, mitigates vanishing gradient | High computational cost | Speech recognition, financial forecasting, network intrusion detection |

| Gated Recurrent Unit (GRU) | Simplified version of LSTM | Faster training, fewer parameters | Less expressive than LSTM in complex sequences | Real-time speech recognition, sequence modeling, anomaly detection |

| Neural Classic Network (NCN) | Fully connected multilayer perceptron | Simple structure, efficient for basic tasks | Limited in learning complex patterns | Image classification, binary classification tasks |

| Linear Function (LF) | Single-layer function | Computationally efficient | Limited in handling complex problems | Linear regression, signal processing |

| Nonlinear Function (NLF) | Nonlinear activation functions (sigmoid, tanh, etc.) | Can model complex relationships | May cause vanishing gradient issues | NNs, DL applications |

| Model Type | Algorithm | Binary Accuracy (%) | Multiclass Accuracy (%) | Key Insights |

|---|---|---|---|---|

| Batch Learning Models (BLMs) | J48 | 94.73 | 87.66 | Best BLM for multiclass classification; widely used in intrusion detection |

| | PART | 92.83 | 87.05 | Slightly lower accuracy than J48; rule-based classification |

| Data Streaming Models (DSMs) | Hoeffding Tree (HT) | 98.38 | 71.98 | High binary accuracy but weak multiclass performance |

| | OBA | 99.67 | 82.80 | Highest binary accuracy; computationally intensive |

| Datasets | Benefits | Drawbacks | Covered Attacks | Use Cases |

|---|---|---|---|---|

| KDD99 | Widely recognized and frequently used dataset; contains labeled data; includes 41 distinct features per connection, along with class labels; provides network traffic in PCAP format. | Imbalanced classification issues; considered outdated. It does not include IoT and 5G-related data. | Covers various attack types such as DoS, Remote to Local (R2L), User to Root (U2R), and Probing. | IDS research, classic ML-based anomaly detection. |

| NSL-KDD | Improved version of KDD99; addresses some of KDD99’s limitations and eliminates duplicate records in training and testing datasets. | Lacks scenarios for modern, low-footprint attacks; does not support IoT systems or 5G. | DoS, R2L, U2R, Probing | ML-based IDS research, benchmark dataset for anomaly detection. |

| UNSW-NB15 | Represents a blend of real and synthetic modern network activities and cyber threats; offers network traffic data in PCAP and CSV formats. | It is more complex than KDD99 due to similarities between legitimate and malicious network behaviors. | Encompasses nine attack categories, including Fuzzers, Analysis, Backdoors, DoS, Exploits, Generic, Reconnaissance, Shellcode, and Worms. | Modern IDS evaluation, ML-based attack detection. |

| IoT Dataset | Designed for IoT network traffic analysis, represents real-world IoT network environments, and provides network traffic in PCAP and CSV formats. | Lacks labeled data, no attack data are included, aimed at IoT device proliferation and traffic characterization rather than security analysis. | No attacks included, normal traffic analysis only. | IoT device behavior analysis, traffic classification. |

| CICIDS | It contains labeled network flow data; suitable for ML and DL applications; offers network traffic in PCAP and CSV formats. | Access is restricted (not publicly available); does not cover IoT-based network security scenarios. | Simulates multiple attack types such as Brute Force (FTP and SSH), DoS, Heartbleed, Web Attacks, Infiltration, Botnet, and DDoS. | ML-/DL-based IDS research, DL security models. |

| CSE-CIC-IDS2018 | Network flows with labeled features; designed for ML and DL research; provides network traffic in PCAP, CSV, and log formats; dynamically generated and adaptable dataset; extensible, modifiable, and reproducible. | Not publicly accessible. It does not include IoT-specific traffic analysis. | Brute Force, Heartbleed, Botnet, DoS, DDoS, Web Attacks, and Local Network Infiltration. | ML-/DL-based IDS, cyber threat detection, forensics analysis. |

| 5G-NIDD Dataset | It focuses on 5G network slicing and NFV security; provides network traffic at different layers; suitable for AI-based security research. | Limited labeled data, not widely available. | DDoS, network slicing attacks, NFV exploits. | 5G attack detection, AI-based anomaly detection. |

| 5G-Emulation Dataset | Simulates realistic 5G network conditions, covering slicing and jamming threats, and is helpful in developing ML-/DL-based security solutions. | The simulated dataset may not fully reflect real-world traffic. | DDoS, jamming, and slicing-based attacks. | ML-/DL-based IDS for 5G, network slicing security. |

| SDN/NFV Security Dataset | Tailored for 5G SDN and NFV research, it covers virtualization-based threats and helps in securing cloud-based 5G infrastructure. | Does not include IoT security and limited real-world deployment. | Spoofing, flooding, malware propagation, SDN-/NFV-specific attacks | SDN-based 5G network security, virtualized network security analysis. |

| IoT/5G Dataset (TON_IoT) | Captures real-world IoT device traffic in 5G environments, supports AI/ML research, available in multiple formats. | Limited data for advanced 5G-specific attacks. | Botnets, scanning, backdoors, IoT exploits | IoT-5G security monitoring, smart environment attack detection. |

| 5G-AI Security Dataset | Focuses on AI-powered security, includes adversarial attack scenarios, and helps in developing AI-based 5G intrusion detection models. | Not publicly available; requires specialized AI models. | Model evasion, AI poisoning, adversarial attacks in 5G. | AI-powered IDS, adversarial attack detection in 5G. |

| Feature | Network Architecture | | | | | | |

|---|---|---|---|---|---|---|---|

| | [92] | [93] | [94] | [96] | [97] | [98] | [99] |

| Objective | Creating a dataset for intrusion detection in 5G networks | DDoS attack detection using DTL | Real-time detection of DoS and DDoS attacks using ML algorithms | Analyzing 5G network traffic and detecting attack anomalies | Develop a privacy-preserving IDS using SSL with minimal labeled data to protect 5G-V2X networks | Secure 5G MANET–Cloud against masquerading, MITM, and black/gray-hole attacks; reduce energy and delay | Develop an IDS to detect flooding attacks in 5G-enabled vehicular (IoV) scenarios |

| Network setup | 5G network testbed with Free5GC and UERANSIM for simulation | 5G testbed with Free5GC and UERANSIM, focusing on attack scenarios | Real-time system with ML algorithms for DoS/DDoS detection | 5G-CN using Open5GS, UERANSIM, and Prometheus for monitoring | 5G-V2X environment with CAVs connected via Uu and PC5 interfaces; IDS deployed as VNFs in MEC-enabled network slices | 5G-based MANET integrated with cloudlets and a cloud service layer | 5G vehicular network simulated via NS-3 (5G-LENA) and SUMO with senders and receivers |

| Key attacks detected | Various DoS attacks, including UDP flood, TCP SYN flood | DDoS attacks like UDP flood, TCP SYN flood, and more | DoS and DDoS attacks (e.g., SYN flood, ICMP flood) | GTP-U DoS, attach request flooding, PFCP-based attacks | Detects various cyber threats, including DDoS and other intrusion attacks in vehicular networks | Masquerading, MITM, black-hole, gray-hole attacks | Flooding attacks |

| Traffic source | Automated Python scripts simulating web browsing, streaming, etc. | Simulated and real-world traffic flows with attack scenarios | Traffic from mobile devices using headless browsers | Live traffic from mobile devices and Raspberry Pi-based attackers | Combines extensive unlabeled vehicular network traffic with a small set of expert-labeled samples | Mobile node traffic aggregated at cluster heads and forwarded via cloudlets | Simulated vehicular traffic with diverse mobility and node densities |

| Dataset | 5G-NIDD dataset with benign and malicious traffic | 5G-NIDD with labeled benign and malicious traffic types | ML-based dataset from real-time attacks in 5G networks | 5GTN dataset (live traffic from actual mobile devices) | A large-scale 5G-V2X traffic dataset refined with minimal expert labeling for SSL pre-training and fine-tuning | Simulated 5G MANET–Cloud traffic (NS3.26) | Four datasets with 45, 45, 70, and 100 vehicles (2, 4, 7, and 9 attackers); features include time, nodeId, imsi, packet details, delay, jitter, coordinates, speed, and attack class |

| Testbed environment | Testbed with Free5GC and UERANSIM | 5G testbed with Free5GC and UERANSIM | Real-time 5G network with attack simulation | Nokia Flexi Zone Indoor Pico Base Stations, Dell N1524 Switch | Training on edge devices with model aggregation on a cloud server; final IDS deployed as a VNF in the MEC environment | NS3.26 simulation | Ubuntu VM (i5-8300H, four cores, 8 GB RAM) using NS-3 and SUMO |

| Key methodology | Feature extraction from live traffic using CICFlowMeter | DTL for DDoS attack detection in 5G networks | ML algorithms for classification and real-time detection | PCA, t-SNE, and UMAP for attack classification and visualization | Uses SSL pre-training on edge devices within a FL framework, followed by supervised fine-tuning | UL symmetric crypto, MFO clustering, DBN classification, adaptive Bayesian routing | ML-based IDS using DTs, RFs, and MLP; optimized via grid search and cross-validation |

| Traffic type for DDoS detection | HTTP, HTTPS, SSH, SFTP, DDoS traffic | HTTP, HTTPS, SSH, SFTP, and malicious DDoS traffic | HTTP, HTTPS, SSH, SFTP, DoS, and DDoS traffic | HTTP, HTTPS, SSH, SFTP, attack traffic | Not exclusively for DDoS; designed to detect a range of intrusion types in vehicular networks | Focuses on intrusion attacks rather than DDoS | Vehicular traffic under simulated flooding attacks |

| Feature extraction | CICFlowMeter for flow-based analysis | CICFlowMeter for flow-based analysis | Traffic features used for ML-based detection | PCA for feature reduction, focus on flow duration and packet length | Automatic DL-based extraction during SSL pre-training | Extracts node direction, position, distance, RSSI, trust value, and residual energy via DBN | nodeId, imsi, packet size, dstPort, delay, jitter, coordinates, and speed from simulation logs |

| Data preprocessing | Downsampling for class imbalance, normalization | Downsampling for class imbalance, feature selection | Data scaling and balancing for ML-based models | Removal of infinite values, NaN conversion | Leverages SSL to minimize manual labeling; supports data augmentation for enhanced robustness | Includes node registration/authentication (U-LSCT) and clustering (MFO) | Removal of features causing overfitting and hyperparameter tuning to optimize classifier performance |

| Evaluation metrics | Metrics for traffic classification accuracy | Evaluation using DTL-based models | Evaluation using real-time detection metrics | MMD between source and target datasets | Assessed using accuracy, precision, recall, F1-score, and efficiency; up to 9% improvement even with limited labeled data | DR, FPR, energy consumption, PDR, throughput, routing overhead, and delay | Primary metric: F1 score; also reports accuracy, precision, and recall |

| Use case | Intrusion detection in 5G networks | DDoS attack detection in 5G networks | Real-time attack detection using ML | Anomaly detection in 5G network traffic | Real-time intrusion detection for 5G-V2X networks, ensuring robust automotive cybersecurity while preserving data privacy | Enhance security and QoS in 5G MANET–Cloud environments | Enhance security in 5G-enabled IoV by accurately detecting flooding attacks in vehicular networks |
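
The preprocessing steps listed in the comparison above (removal of infinite and NaN values, downsampling for class imbalance, and normalization) can be illustrated with a minimal sketch. It is not the pipeline of any of the cited works: the function names, the two-feature toy flows, and the choice of min-max scaling and downsampling to the rarest class are assumptions made for illustration only.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Row = Tuple[List[float], str]  # (feature vector, class label); hypothetical layout


def drop_non_finite(rows: Sequence[Row]) -> List[Row]:
    """Discard flows whose features contain inf or NaN values."""
    return [(x, y) for x, y in rows if all(math.isfinite(v) for v in x)]


def downsample(rows: Sequence[Row], seed: int = 0) -> List[Row]:
    """Randomly cut every class down to the size of the rarest class."""
    rng = random.Random(seed)
    by_class: Dict[str, List[Row]] = {}
    for row in rows:
        by_class.setdefault(row[1], []).append(row)
    n = min(len(group) for group in by_class.values())
    balanced: List[Row] = []
    for group in by_class.values():
        balanced.extend(rng.sample(group, n))
    return balanced


def min_max_normalize(rows: Sequence[Row]) -> List[Row]:
    """Rescale each feature column to [0, 1]; constant columns map to 0.0."""
    columns = list(zip(*(x for x, _ in rows)))
    lows = [min(col) for col in columns]
    spans = [max(col) - min(col) for col in columns]
    return [
        ([(v - lo) / span if span else 0.0 for v, lo, span in zip(x, lows, spans)], y)
        for x, y in rows
    ]


# Toy flows: (duration, byte count) with a label; values are made up.
flows = [
    ([0.5, 100.0], "benign"),
    ([1.5, 300.0], "benign"),
    ([2.0, float("inf")], "benign"),
    ([0.1, 900.0], "benign"),
    ([0.2, 50.0], "ddos"),
    ([float("nan"), 70.0], "ddos"),
    ([0.3, 60.0], "ddos"),
]
clean = min_max_normalize(downsample(drop_non_finite(flows)))
```

In practice, the scaling statistics would normally be computed on the training split only and then reused for the test split, so that no information from the test data leaks into training.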

| Metric | Formula | Description |

|---|---|---|

| DR, TPR, Recall, Sensitivity | TP/(TP + FN) | Measures the percentage of actual attacks correctly detected. |

| FAR, FPR (1 − Specificity) | FP/(FP + TN) | Measures the rate of normal traffic being falsely classified as an attack. |

| TNR, Specificity | TN/(TN + FP) | Measures the percentage of normal traffic correctly classified as benign. |

| FNR, Miss Rate | FN/(FN + TP) | Measures the rate of attacks incorrectly classified as benign. |

| Precision | TP/(TP + FP) | Measures the proportion of traffic flagged as an attack that is actually an attack. |

| Accuracy | (TP + TN)/(TP + TN + FP + FN) | Measures the overall proportion of traffic, attack and benign, that the IDS classifies correctly. |

| Error Rate | (FP + FN)/(TP + TN + FP + FN) | Measures the percentage of misclassified instances. |

| F-measure | 2 × Precision × Recall/(Precision + Recall) | Harmonic mean of precision and recall, useful when data are imbalanced. |

| Latency in IDS Detection (ms) | Time of Detection − Time of Attack Start | Measures the time taken by an IDS to detect an attack, crucial for 5G real-time security. |

| Energy Efficiency (Joules/Detection) | Total Energy Consumption/Number of Detections | Measures the energy an IDS consumes per detection, crucial for 5G edge computing. |

| False Alarm Rate (FAR) for 5G Slices | FP in Slice X/(FP in Slice X + TN in Slice X) | Evaluates misclassification of benign traffic in specific 5G network slices. |

| Transferability Score (TS) | (Performance on Target Domain)/(Performance on Source Domain) | Evaluates how well a model trained in one environment adapts to a new dataset/domain. |
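
The confusion-matrix metrics listed above can be computed directly from the four outcome counts. The following is a minimal sketch using the standard definitions, with "attack" as the positive class. The class and function names, the zero-denominator convention (return 0.0), and the example counts are assumptions for illustration; they are not taken from the reviewed works.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary IDS outcomes, with 'attack' as the positive class."""
    tp: int  # attacks flagged as attacks
    fp: int  # benign flows flagged as attacks
    tn: int  # benign flows passed as benign
    fn: int  # attacks passed as benign


def _ratio(num: float, den: float) -> float:
    """Return num/den, or 0.0 when the denominator is zero (assumed convention)."""
    return num / den if den else 0.0


def ids_metrics(c: ConfusionCounts) -> Dict[str, float]:
    """Compute the standard detection metrics from one confusion matrix."""
    total = c.tp + c.fp + c.tn + c.fn
    recall = _ratio(c.tp, c.tp + c.fn)      # DR / TPR / sensitivity
    precision = _ratio(c.tp, c.tp + c.fp)
    return {
        "recall": recall,
        "fpr": _ratio(c.fp, c.fp + c.tn),   # FAR; equals 1 - TNR
        "tnr": _ratio(c.tn, c.tn + c.fp),   # specificity
        "fnr": _ratio(c.fn, c.fn + c.tp),   # miss rate; equals 1 - recall
        "precision": precision,
        "accuracy": _ratio(c.tp + c.tn, total),
        "error_rate": _ratio(c.fp + c.fn, total),
        "f_measure": _ratio(2 * precision * recall, precision + recall),
    }


def transferability_score(target_perf: float, source_perf: float) -> float:
    """Ratio of target-domain to source-domain performance (same metric for both)."""
    return _ratio(target_perf, source_perf)


# Made-up counts for 100 attack flows and 100 benign flows.
counts = ConfusionCounts(tp=90, fp=5, tn=95, fn=10)
metrics = ids_metrics(counts)
```

The per-slice FAR in the table is the same `fpr` computation applied to the counts of a single network slice, so one `ConfusionCounts` instance per slice is sufficient. A transferability score close to 1 indicates that the chosen performance metric is largely preserved when moving from the source to the target domain.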