An Artificial Neural Network Method for Simulating Soliton Propagation Based on the Rosenau-KdV-RLW Equation on Unbounded Domains

Abstract

1. Introduction

2. Artificial Neural Network Method

Algorithm 1: Adam optimizer from Optax
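Algorithm 1 refers to the Adam optimizer provided by Optax. For orientation only, the update rule that Adam applies can be written out in plain JAX. This is a hand-written sketch, not Optax's code and not the authors' training loop. The names `adam_init`, `adam_update`, and `loss_fn`, the toy quadratic loss, and the hyperparameter values are all assumptions made for illustration.

```python
import jax
import jax.numpy as jnp
from jax.tree_util import tree_map


def adam_init(params):
    """Create zero-initialized first and second moment estimates."""
    zeros = tree_map(jnp.zeros_like, params)
    return {"step": 0, "m": zeros, "v": zeros}


def adam_update(params, grads, state, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
    """One Adam step with bias-corrected moment estimates."""
    step = state["step"] + 1
    # Exponential moving averages of the gradient and the squared gradient.
    m = tree_map(lambda m_, g: b1 * m_ + (1 - b1) * g, state["m"], grads)
    v = tree_map(lambda v_, g: b2 * v_ + (1 - b2) * g ** 2, state["v"], grads)

    def apply(p, m_, v_):
        m_hat = m_ / (1 - b1 ** step)  # bias correction, first moment
        v_hat = v_ / (1 - b2 ** step)  # bias correction, second moment
        return p - lr * m_hat / (jnp.sqrt(v_hat) + eps)

    new_params = tree_map(apply, params, m, v)
    return new_params, {"step": step, "m": m, "v": v}


# Hypothetical toy loss standing in for the network's total loss.
def loss_fn(params):
    return jnp.sum((params["w"] - 3.0) ** 2) + (params["b"] + 1.0) ** 2


params = {"w": jnp.zeros(2), "b": jnp.array(0.0)}
state = adam_init(params)
loss_start = loss_fn(params)
for _ in range(300):
    grads = jax.grad(loss_fn)(params)
    params, state = adam_update(params, grads, state, lr=0.05)
loss_end = loss_fn(params)
```

In a physics-informed setting, `loss_fn` would be replaced by the sum of the data and physics losses, and `params` by the network weights.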

Listing 1: Loss function related to the initial conditions using jacfwd, hessian, and vmap functions.

```python
from jax import jacfwd, hessian, vmap

# make_prediction, initial_cond1, MSE, t_0, x_0, and u_0 are defined
# elsewhere in the authors' code.

# Network prediction at the initial-condition points.
u = make_prediction(t_0, x_0)

# First spatial derivative of the prediction, batched over the points.
def get_u_x(get_u, t, x):
    u_x = jacfwd(get_u, 1)(t, x)
    return u_x
u_x_vmap = vmap(get_u_x, in_axes=(None, 0, 0))
u_x = u_x_vmap(make_prediction, t_0, x_0).reshape(-1, 1)

# Second spatial derivative of the prediction.
def get_u_xx(get_u, t, x):
    u_xx = hessian(get_u, 1)(t, x)
    return u_xx
u_xx_vmap = vmap(get_u_xx, in_axes=(None, 0, 0))
u_xx = u_xx_vmap(make_prediction, t_0, x_0).reshape(-1, 1)

# First spatial derivative of the initial condition.
def get_phi_x(get_phi, x):
    phi_x = jacfwd(get_phi, 0)(x)
    return phi_x
phi_x_vmap = vmap(get_phi_x, in_axes=(None, 0))
phi_x = phi_x_vmap(initial_cond1, x_0).reshape(-1, 1)

# Second spatial derivative of the initial condition.
def get_phi_xx(get_phi, x):
    phi_xx = hessian(get_phi, 0)(x)
    return phi_xx
phi_xx_vmap = vmap(get_phi_xx, in_axes=(None, 0))
phi_xx = phi_xx_vmap(initial_cond1, x_0).reshape(-1, 1)

# Mismatch in the value and in the first and second spatial derivatives.
mse_0_loss = MSE(u, u_0)
mse_0_loss_dP_x = MSE(phi_x, u_x)
mse_0_loss_dP_xx = MSE(phi_xx, u_xx)

Loss_Data = mse_0_loss + mse_0_loss_dP_x + mse_0_loss_dP_xx
```
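Listing 1 relies on names defined elsewhere in the authors' code. The following self-contained sketch shows the same derivative-matching pattern on a toy problem. The closed-form `make_prediction` stands in for the trained network, and it, the Gaussian `initial_cond1`, the helper `mse`, and the sample points are all assumptions made for illustration. Unlike Listing 1, the functions are closed over rather than passed through `vmap`.

```python
import jax.numpy as jnp
from jax import jacfwd, hessian, vmap


# Hypothetical stand-in for the network: a smooth closed-form u(t, x).
def make_prediction(t, x):
    return jnp.exp(-(x - t) ** 2)


# Hypothetical initial profile phi(x), chosen so that phi(x) = u(0, x).
def initial_cond1(x):
    return jnp.exp(-x ** 2)


def mse(a, b):
    return jnp.mean((a - b) ** 2)


# Initial-condition points: t = 0 along a line of x values.
x_0 = jnp.linspace(-3.0, 3.0, 61)
t_0 = jnp.zeros_like(x_0)

# Differentiate the scalar function once, then batch over points with vmap.
u_x = vmap(jacfwd(make_prediction, argnums=1))(t_0, x_0)
u_xx = vmap(hessian(make_prediction, argnums=1))(t_0, x_0)
phi_x = vmap(jacfwd(initial_cond1))(x_0)
phi_xx = vmap(hessian(initial_cond1))(x_0)

u = vmap(make_prediction)(t_0, x_0)
u_0 = vmap(initial_cond1)(x_0)

# Same three-term structure as Loss_Data in Listing 1.
loss_data = mse(u, u_0) + mse(phi_x, u_x) + mse(phi_xx, u_xx)
```

Because the stand-in matches the initial profile exactly at t = 0, `loss_data` is numerically zero here. With a real network, it measures how well the value and the first two spatial derivatives agree with the initial condition.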

Listing 2: Loss function related to the PDE using jacfwd, hessian, and vmap functions.

```python
from jax import jacfwd, hessian, vmap

# make_prediction, make_prediction_squared, MSE, t_c, x_c, p, and the
# coefficients a, b, c, d are defined elsewhere in the authors' code.

# u_t at the collocation points (t_c, x_c).
def get_u_t(get_u, t, x):
    u_t = jacfwd(get_u, 0)(t, x)
    return u_t
u_t_vmap = vmap(get_u_t, in_axes=(None, 0, 0))
u_t = u_t_vmap(make_prediction, t_c, x_c).reshape(-1, 1)

# u_x
def get_u_x(get_u, t, x):
    u_x = jacfwd(get_u, 1)(t, x)
    return u_x
u_x_vmap = vmap(get_u_x, in_axes=(None, 0, 0))
u_x = u_x_vmap(make_prediction, t_c, x_c).reshape(-1, 1)

# Spatial derivative of the nonlinear term, with the power p held fixed.
def get_u_x_power(get_u, t, x, p):
    u_xpower = jacfwd(get_u, 1)(t, x, p)
    return u_xpower
u_x_power_vmap = vmap(get_u_x_power, in_axes=(None, 0, 0, None))
u_x_power = u_x_power_vmap(make_prediction_squared, t_c, x_c, p).reshape(-1, 1)

# u_xxx
def get_u_xxx(get_u, t, x):
    u_xxx = jacfwd(hessian(get_u, 1), 1)(t, x)
    return u_xxx
u_xxx_vmap = vmap(get_u_xxx, in_axes=(None, 0, 0))
u_xxx = u_xxx_vmap(make_prediction, t_c, x_c).reshape(-1, 1)

# u_xxt
def get_u_xxt(get_u, t, x):
    u_xxt = jacfwd(hessian(get_u, 1), 0)(t, x)
    return u_xxt
u_xxt_vmap = vmap(get_u_xxt, in_axes=(None, 0, 0))
u_xxt = u_xxt_vmap(make_prediction, t_c, x_c).reshape(-1, 1)

# u_xxxxt
def get_u_xxxxt(get_u, t, x):
    u_xxxxt = jacfwd(hessian(hessian(get_u, 1), 1), 0)(t, x)
    return u_xxxxt
u_xxxxt_vmap = vmap(get_u_xxxxt, in_axes=(None, 0, 0))
u_xxxxt = u_xxxxt_vmap(make_prediction, t_c, x_c).reshape(-1, 1)

# PDE residual at the collocation points.
residual = u_t + a*u_x + b*u_x_power - c*u_xxt + d*u_xxx + u_xxxxt
Loss_Physics = MSE(residual)
```
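The nested derivatives in Listing 2 can be sanity-checked on a function whose derivatives are known in closed form. The sketch below is a toy check, not the authors' experiment. It uses a plane wave `sin(k*x - w*t)` in place of the network and sets `b = 0`, so the residual reduces to the linear part. Substituting the plane wave into that linear residual gives the dispersion relation `w = (a*k - d*k**3) / (1 + c*k**2 + k**4)`, for which the residual should vanish. The coefficient values, `k`, and the sample points are assumptions.

```python
import jax.numpy as jnp
from jax import jacfwd, hessian, vmap

# Hypothetical coefficients; b = 0 switches off the nonlinear term.
a, b, c, d, p = 1.0, 0.0, 1.0, 1.0, 2
k = 0.5
# Frequency that makes the plane wave solve the linear equation.
w = (a * k - d * k ** 3) / (1.0 + c * k ** 2 + k ** 4)


def u_fn(t, x):
    return jnp.sin(k * x - w * t)


def u_pow_fn(t, x):
    return u_fn(t, x) ** p


# Derivatives built by nesting jacfwd and hessian, as in Listing 2.
u_t = jacfwd(u_fn, 0)
u_x = jacfwd(u_fn, 1)
u_pow_x = jacfwd(u_pow_fn, 1)
u_xx = hessian(u_fn, 1)
u_xxx = jacfwd(u_xx, 1)
u_xxt = jacfwd(u_xx, 0)
u_xxxxt = jacfwd(hessian(u_xx, 1), 0)


def residual_fn(t, x):
    return (u_t(t, x) + a * u_x(t, x) + b * u_pow_x(t, x)
            - c * u_xxt(t, x) + d * u_xxx(t, x) + u_xxxxt(t, x))


# Hypothetical collocation points, paired elementwise.
t_c = jnp.linspace(0.0, 2.0, 40)
x_c = jnp.linspace(-5.0, 5.0, 40)

residual = vmap(residual_fn)(t_c, x_c)
loss_physics = jnp.mean(residual ** 2)

# Closed-form reference for the highest-order term: u_xxxxt = -k^4 w cos(kx - wt).
u_xxxxt_exact = -(k ** 4) * w * jnp.cos(k * x_c - w * t_c)
u_xxxxt_ad = vmap(u_xxxxt)(t_c, x_c)
```

A check like this exercises only the derivative plumbing. It says nothing about how well a trained network satisfies the full nonlinear equation.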

3. Convergence

4. Numerical Experiments

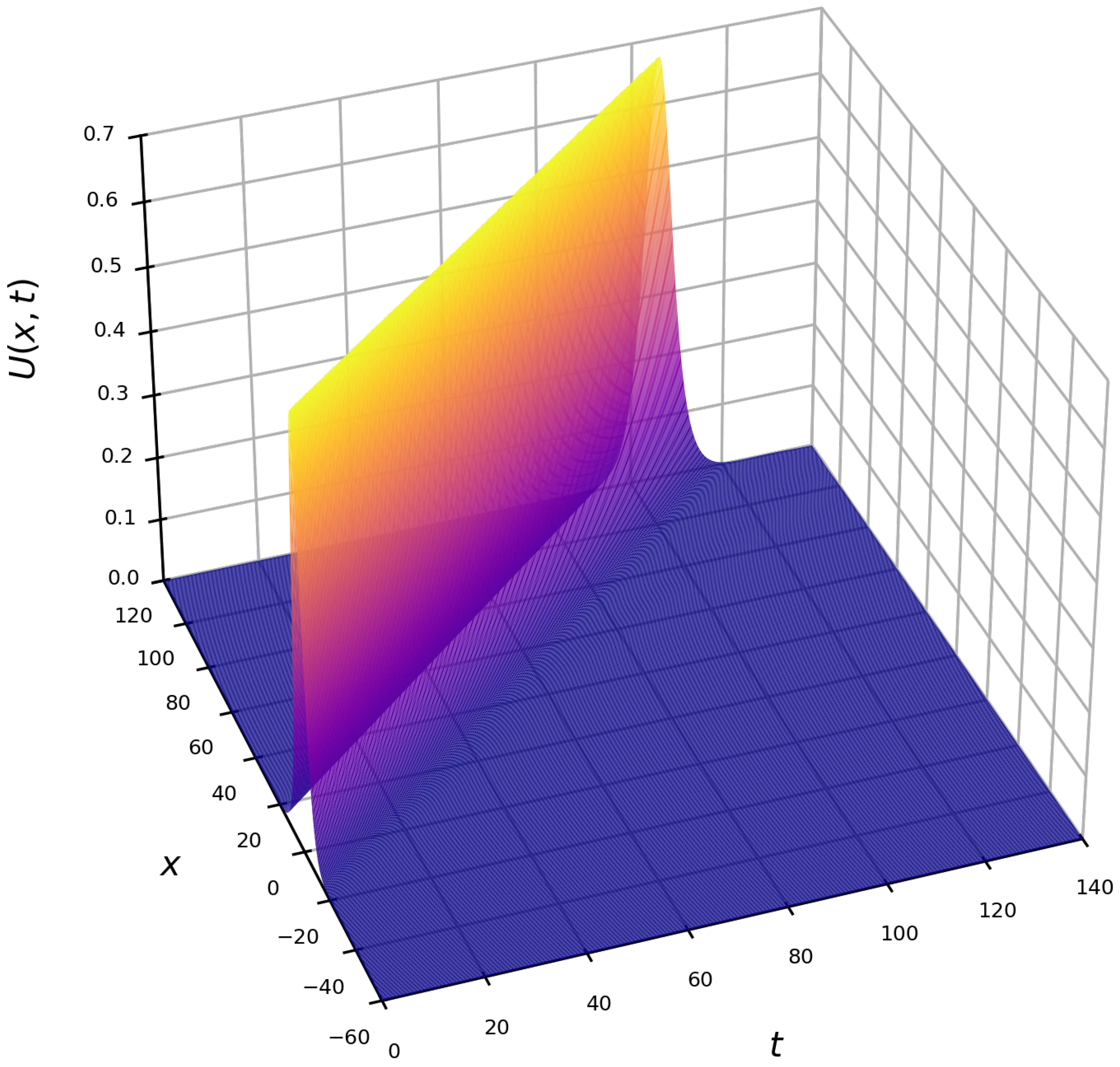

4.1. Example 1

4.2. Example 2

4.3. Example 3

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Layers/Neurons | Total Loss | Max. Abs. Error |
|---|---|---|
| 3 Layers of 50 Neurons | | |
| 3 Layers of 100 Neurons | | |
| 5 Layers of 50 Neurons 1 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Finch, L.; Dai, W.; Bora, A. An Artificial Neural Network Method for Simulating Soliton Propagation Based on the Rosenau-KdV-RLW Equation on Unbounded Domains. Mathematics 2025, 13, 1036. https://doi.org/10.3390/math13071036