1. Introduction

During the past few years, increasing attention has been devoted to multi-agent systems (MASs), which consist of autonomous agents cooperating through interaction to complete tasks [1]. Among studies on MASs (e.g., controllability [2,3,4], stabilizability [5,6], containment control [7,8], leader-following consensus [9,10,11]), consensus remains one of the primary research directions.

The concept of consensus was first introduced by DeGroot [12], who proposed a model demonstrating how agents can reach agreement in parameter estimation. This work laid the foundation for subsequent research on consensus in MASs. Olfati-Saber [13] studied the problem of average consensus in connected undirected graphs and extended it to cases involving time delays and switched topologies. Ren and Beard [14] demonstrated that for multi-agent systems under directed topologies, the existence of a spanning tree in the communication graph is the fundamental criterion for achieving consensus. Liu and Ji [15] introduced a flexible dynamic event-triggering approach related to the triggering threshold and addressed consensus in MASs with general linear dynamics, significantly reducing communication requirements. It is worth noting that the above results are only applicable to linear systems. A significant advancement was made by Liu et al. [16], who derived consensus criteria for nonlinear systems subject to both fixed and switching topologies. For MASs with communication topologies involving negative weights, Tian et al. [17] established a unified framework that provides necessary and sufficient conditions for consensus in this setting.

The aforementioned studies concentrated exclusively on MASs with a single type of dynamics and did not consider the hybrid MASs that are more prevalent in practical engineering applications. Such hybrid MASs comprise two interacting components: subsystems with discrete-time (DT) dynamics [18] and subsystems with continuous-time (CT) dynamics. Numerous instances of such systems exist [19,20]; consequently, investigating the coordinated control of hybrid MASs is of substantial practical significance and application value. For example, in human–robot systems, commands from humans are discrete, while the movements of the robot are continuous [21]. Zheng et al. [22,23] investigated the consensus problem in first-order and second-order hybrid MASs under fixed directed communication topologies. By analyzing various communication strategies between DT and CT agents, they established a foundational framework for hybrid-system interactions.

In practical scenarios, many hybrid systems can be formulated as switching systems, in which the dynamical behavior of agents may switch arbitrarily between DT and CT dynamics. Such switching behavior commonly arises in applications including power systems and automatic speed regulation systems. For instance, a system might be controlled by either physical or digital regulators, with switching rules determining which regulator is active. When the sampling period is long, a discretized model of the CT dynamics is used, whereas high-precision systems with short sampling periods must handle the time-varying continuous signals directly. Consequently, the system functions as a switching system that coordinates CT and DT dynamics. Zhai et al. [24] conducted a stability analysis of continuous–discrete systems using a Lie algebraic technique. On this basis, Zheng et al. [25] used classic consensus protocols to achieve consensus under switching dynamics. In addition, Lin et al. [26] studied finite-time control and quantized control for achieving consensus. Liu et al. [27,28] proposed two control protocols for the consensus of second-order MASs with switching dynamics and provided several necessary and sufficient conditions, in terms of the network topology and sampling period, that guarantee consensus.

As the demand for reducing energy consumption in MASs continues to grow in practical applications, game theory has been applied to the distributed control of MASs. Each agent in an MAS functions as an independent player, making individual decisions based on local information exchanged with its neighbors. By defining utility functions for the agents and assuming that they adjust their behavior to optimize their utility, the collaborative task can be modeled as a distributed game. Ma et al. [29] analyzed games between CT and DT MASs, establishing necessary and sufficient conditions for consensus in the resulting hybrid system and proposing a method to accelerate convergence. Wang et al. [30] extended the result to the more general scenario of an arbitrary number of interacting subsystems. Xie et al. [31] employed a data-driven approach to address the multi-player nonzero-sum game in unknown linear systems, deriving dynamic output-feedback Nash strategies using only input–output data. Motivated by the aforementioned works, this paper examines the consensus problem for second-order MASs with switching dynamics under a leader–follower framework, using a game-theoretic approach. In contrast to existing game-theoretic approaches [29,30,31], our work integrates switching CT-DT dynamics with a non-cooperative game among multiple leaders. While prior studies primarily focus on systems with a single type of dynamics or on cooperative interactions, this study explicitly models the strategic competition between leaders under arbitrary switching patterns, establishing a framework that bridges game theory and hybrid system control.

Relative to the existing literature, the principal contributions of our work are delineated as follows.

The competitive behaviors among multiple leaders in MASs are modeled as a non-cooperative game, in which an individual cost function is designed for each player. We show that this game admits a unique Nash equilibrium.

Based on the formulated game, two control strategies are developed to achieve consensus. One employs distinct CT and DT inputs for the respective subsystems, while the other uses a unified sampled-data control protocol for both, thus avoiding frequent controller switching.

Necessary and sufficient conditions for consensus are established for each of the two proposed control protocols.

The proposed framework finds potential applications in several real-world domains where hybrid continuous–discrete dynamics and competitive interactions coexist. For instance, in smart grid systems, multiple distributed energy resources (leaders) may compete to optimize their own profits while coordinating with local controllers (followers) to maintain frequency and voltage stability, a scenario naturally captured by our non-cooperative game model. Similarly, in multi-robot collaborative systems, such as warehouse logistics or search-and-rescue missions, robots may switch between continuous motion and discrete decision-making modes while competing for tasks or resources. Our unified sampled-data control protocol avoids frequent controller switching while ensuring consensus under arbitrary switching between CT and DT dynamics. These examples illustrate the practical relevance and generality of our theoretical results.

The paper is organized as follows. Section 2 introduces the necessary preliminaries. The main theoretical results are developed in Section 3 and Section 4. Section 5 presents numerical simulations to illustrate the findings. The work is concluded in Section 6. Some notations and symbols are listed in Table 1.

3. Main Results

Consider a CT-DT switching dynamic MAS on a directed graph . Assume that each agent in the system satisfies , and that the interactions among these agents are modeled as a game . The cost functions of the leader players and the follower players correspond to Equations (13) and (14), respectively. Let the state set of followers be and the state set of leaders be . And .

Theorem 1. The game has a unique NE with holding, where , , , and , .

Proof of Theorem 1. For , has a global minimum solution , which satisfies

For , if , is fixed, obviously is a quadratic function with respect to ; thus, has a global minimum solution satisfying

According to (15)–(17), the game has a unique NE solution. Furthermore, is the NE solution if and only if

By solving (18), one obtains

Let with . Equation (19) can be written in matrix form as

where , and . For convenience, let , ; then (20) can be rewritten as . Observing , it can be found that

which implies that is strictly diagonally dominant. By Lemma 1, is invertible. Therefore, we have

As a consequence, the game has the unique Nash equilibrium with holding. □
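The uniqueness argument above (stack the players' first-order optimality conditions, observe that the stacked matrix is strictly diagonally dominant, and invert it) can be illustrated numerically. The following is a minimal Python sketch under an explicitly hypothetical quadratic leader cost, J_i = alpha_i (x_i - r_i)^2 + sum_j a_ij (x_i - x_j)^2 with alpha_i > 0, chosen only to mirror the "own deviation plus disagreement with other leaders" structure described for Theorem 1. It is not the paper's Equation (13), and the names alpha, r, a, nash_equilibrium, and best_response are illustrative.

```python
# Hypothetical quadratic leader game (NOT the paper's cost functions (13)-(14)):
#   J_i(x) = alpha_i * (x_i - r_i)**2 + sum_j a_ij * (x_i - x_j)**2,  alpha_i > 0.
# Setting dJ_i/dx_i = 0 for every player gives the stacked linear system
#   (alpha_i + d_i) * x_i - sum_j a_ij * x_j = alpha_i * r_i,  d_i = sum_j a_ij,
# whose matrix is strictly diagonally dominant and therefore invertible.


def strictly_diagonally_dominant(m):
    """True if |m_ii| > sum_{j != i} |m_ij| holds for every row i."""
    return all(
        abs(row[i]) > sum(abs(v) for j, v in enumerate(row) if j != i)
        for i, row in enumerate(m)
    )


def solve(m, b):
    """Solve m x = b by Gaussian elimination with partial pivoting."""
    n = len(m)
    aug = [[float(v) for v in row] + [float(bi)] for row, bi in zip(m, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for k in range(col + 1, n):
            f = aug[k][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[k][c] -= f * aug[col][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        s = sum(aug[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (aug[k][n] - s) / aug[k][k]
    return x


def nash_equilibrium(alpha, r, a):
    """Stack all first-order conditions and solve them jointly (a_ii = 0 assumed)."""
    n = len(alpha)
    m = [[alpha[i] + sum(a[i]) if i == j else -a[i][j] for j in range(n)]
         for i in range(n)]
    assert strictly_diagonally_dominant(m)  # dominance => invertible (cf. Lemma 1)
    return solve(m, [alpha[i] * r[i] for i in range(n)])


def best_response(i, x, alpha, r, a):
    """Minimiser of J_i over x_i with the other players' states held fixed."""
    num = alpha[i] * r[i] + sum(a[i][j] * x[j] for j in range(len(x)) if j != i)
    return num / (alpha[i] + sum(a[i]))


if __name__ == "__main__":
    alpha = [1.0, 2.0, 1.5]             # hypothetical self-weights
    r = [0.0, 3.0, 6.0]                 # hypothetical reference states
    a = [[0.0, 1.0, 0.5],               # hypothetical leader-leader weights
         [1.0, 0.0, 1.0],
         [0.5, 0.5, 0.0]]
    print(nash_equilibrium(alpha, r, a))
```

Checking that every component of the computed point is also that player's best response confirms, for this toy game, that the solution of the stacked system is a Nash equilibrium; diagonal dominance (guaranteed here by alpha_i > 0) is what rules out a second one.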

This theorem establishes the existence of a unique Nash Equilibrium (NE) in the game involving multiple leaders and followers. It signifies that each leader can find an optimal balance between minimizing its own state deviation and reducing disagreements with other leaders. The followers, in contrast, do not engage in competition and simply adjust their states according to the leaders’ directives. The existence of this unique NE not only provides a stable optimization objective for the system but also forms a solid foundation for designing the subsequent consensus control protocols.

All agents participate in the game and adjust their states at the next time step according to the NE. Under the framework of the game, embedding the CT system (11) into (21) yields

where . Similarly, embedding the DT system (12) into (21) yields

By analyzing the matrix , we can conclude that and . Since the weight factors satisfy , we have . Thus, we obtain

From the foregoing, it follows that . Assume that the eigenvalues of are , . Based on Lemma 3, we obtain , where

Clearly, each element of the matrix is nonnegative. Accordingly, the inverse matrix is nonnegative and possesses positive diagonal entries. According to (25) and (26), is a stochastic matrix with positive diagonal elements. By Lemma 4, the eigenvalue corresponds to the eigenvector , while the other eigenvalues satisfy .
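The eigenvalue property invoked through Lemma 4 (eigenvalue 1 with eigenvector 1, all other eigenvalues strictly inside the unit circle) is what makes repeated averaging with a row-stochastic matrix converge to agreement. A minimal Python sketch with a hypothetical 3×3 matrix W that has positive diagonal entries and a strongly connected graph, but is otherwise unrelated to the paper's matrix:

```python
# Hypothetical row-stochastic matrix with positive diagonal; its graph is
# strongly connected (0 listens to 1, 1 to 2, 2 to 0). NOT the paper's matrix.


def is_stochastic_with_positive_diagonal(w, tol=1e-12):
    """Nonnegative entries, unit row sums, and strictly positive diagonal."""
    return all(
        all(v >= 0 for v in row) and abs(sum(row) - 1.0) <= tol and row[i] > 0
        for i, row in enumerate(w)
    )


def iterate(w, x, steps):
    """Apply the update x <- W x the given number of times."""
    for _ in range(steps):
        x = [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return x


W = [[0.5, 0.5, 0.0],
     [0.0, 0.5, 0.5],
     [0.5, 0.0, 0.5]]

if __name__ == "__main__":
    assert is_stochastic_with_positive_diagonal(W)
    print(iterate(W, [1.0, 1.0, 1.0], 1))    # prints [1.0, 1.0, 1.0]: W 1 = 1
    print(iterate(W, [1.0, 2.0, 6.0], 100))  # other modes decay; entries approach 3.0
```

Because this particular W is also column-stochastic, the common value is the plain average of the initial states; for a matrix that is only row-stochastic, the limit is a weighted average given by the left eigenvector for eigenvalue 1.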

The Jordan canonical form of L associated with the directed graph is defined as

where the Jordan block is given by

where is an eigenvalue of L with algebraic multiplicity , . Moreover, these multiplicities satisfy

Let J denote the Jordan canonical form of L, such that . The columns of the nonsingular matrix P comprise the (generalized) right eigenvectors of L, and the rows of comprise the (generalized) left eigenvectors. Letting , the CT game control system (22) is transformed into

Applying the same similarity transformation to system (23), we obtain the DT game control system as

Lemma 5 ([28]). Assume that the directed graph contains a directed spanning tree. Then the CT-DT switching dynamic MAS can achieve consensus if where .

We make the following assumptions.

- A1: The graph admits a directed spanning forest.
- A2: The control gain simultaneously satisfies conditions (29) and (30).

where denotes an eigenvalue of L and denotes an eigenvalue of the game control matrix .

Theorem 2. Consensus is achieved in the CT-DT switching MAS (27)–(28) if and only if A1 and A2 are satisfied.

Proof of Theorem 2. (Sufficiency) Let , , then (27) and (28) can be expressed as

Note that ; for any initial state , we have

Since , where P is a nonsingular matrix, the characteristic polynomial of is

where , . Substituting into and , we obtain

and

The determinant of the block matrix is computed as follows. Since the matrix is block upper triangular, we have

For the first term, corresponding to the follower subsystem, we note that is block diagonal (the Jordan form of ), so

which simplifies to

For the second term, corresponding to the leader subsystem under game-based control, we have

which gives

Therefore, the full characteristic polynomial is

This confirms that the eigenvalues of are the roots of for , and (with multiplicity ) for the leaders. The stability of the system depends on the Hurwitz stability of , which is analyzed in the subsequent steps.
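The factorization above rests on the identity det(sI − [[A11, A12], [0, A22]]) = det(sI − A11) · det(sI − A22), so the eigenvalues of a block upper-triangular matrix are exactly those of its diagonal blocks. A small numerical check in Python, with hypothetical 2×2 blocks (the paper's blocks are not reproduced here):

```python
# Numerical check of the block upper-triangular determinant identity.
# A11, A12, A22 are hypothetical blocks chosen for illustration only.


def det(m):
    """Determinant by Gaussian elimination with partial pivoting (complex-safe)."""
    a = [[complex(v) for v in row] for row in m]
    n, sign, prod = len(a), 1, 1 + 0j
    for col in range(n):
        piv = max(range(col, n), key=lambda k: abs(a[k][col]))
        if a[piv][col] == 0:
            return 0j
        if piv != col:
            a[col], a[piv] = a[piv], a[col]
            sign = -sign
        prod *= a[col][col]
        for k in range(col + 1, n):
            f = a[k][col] / a[col][col]
            for c in range(col, n):
                a[k][c] -= f * a[col][c]
    return sign * prod


def shifted(s, m):
    """Return s*I - m."""
    return [[(s if i == j else 0) - m[i][j] for j in range(len(m))]
            for i in range(len(m))]


def block_upper(a11, a12, a22):
    """Assemble [[A11, A12], [0, A22]] from square diagonal blocks."""
    top = [list(r1) + list(r2) for r1, r2 in zip(a11, a12)]
    bottom = [[0.0] * len(a11) + list(r) for r in a22]
    return top + bottom


A11 = [[1.0, 2.0], [0.5, -1.0]]    # hypothetical "follower" block
A12 = [[3.0, -1.0], [0.0, 4.0]]    # hypothetical coupling block
A22 = [[0.0, 1.0], [-2.0, -3.0]]   # hypothetical "leader" block
M = block_upper(A11, A12, A22)

if __name__ == "__main__":
    for s in (0.3, 1 + 2j, -1.5):
        lhs = det(shifted(s, M))
        rhs = det(shifted(s, A11)) * det(shifted(s, A22))
        print(s, lhs, rhs)  # the two values agree up to rounding
```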

The corresponding real and imaginary parts are and , respectively. is Hurwitz stable if and only if the following requirements are met:

(1) The polynomial possesses two distinct real roots, denoted by ;
(2) The crossing condition is satisfied, where is the unique root of ;
(3) .

The two roots of are and , which satisfy condition (1). The root of is ; by condition (2), is obtained. From the expressions of and , it can be seen that , , and . By condition (3), we get . Following the same steps for , is obtained. Collecting these results, if condition (29) in Assumption A2 holds, the CT game control system is Hurwitz stable, i.e., all eigenvalues of have negative real parts.
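Stability conditions of this kind can be cross-checked numerically by computing roots directly. The sketch below assumes the per-eigenvalue polynomial s² + βλs + αλ that arises for the standard second-order protocol u_i = −Σ_j a_ij[α(x_i − x_j) + β(v_i − v_j)] with double-integrator agents. This form, the gain names alpha and beta, and the example spectrum are assumptions for illustration; they need not coincide with the stripped polynomial in the proof above, which also involves the eigenvalues of the game control matrix.

```python
import cmath


def quadratic_roots(a2, a1, a0):
    """Roots of a2*s**2 + a1*s + a0 (complex coefficients allowed, a2 != 0)."""
    disc = cmath.sqrt(a1 * a1 - 4 * a2 * a0)
    return (-a1 + disc) / (2 * a2), (-a1 - disc) / (2 * a2)


def is_hurwitz(a2, a1, a0):
    """True if both roots lie strictly in the open left half-plane."""
    return all(root.real < 0 for root in quadratic_roots(a2, a1, a0))


def ct_mode_is_stable(alpha, beta, laplacian_eigs, tol=1e-12):
    """Check the ASSUMED polynomial s^2 + beta*lam*s + alpha*lam for each lam != 0."""
    return all(
        is_hurwitz(1, beta * lam, alpha * lam)
        for lam in laplacian_eigs
        if abs(lam) > tol  # the zero eigenvalue corresponds to the agreement direction
    )


if __name__ == "__main__":
    eigs = [0, 1 + 1j, 1 - 1j, 2]              # hypothetical Laplacian spectrum
    print(ct_mode_is_stable(1.0, 2.0, eigs))   # True
    print(ct_mode_is_stable(1.0, 0.1, eigs))   # False
```

For quadratics the root formula is exact, so the check is reliable away from the stability boundary; near the boundary, rounding can flip the result, and closed-form conditions such as (29) remain the authoritative test.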

Similarly, in the DT game control setting, the characteristic polynomial of is

where and . However, under DT dynamics the appropriate stability criterion is Schur stability rather than Hurwitz stability. To this end, the bilinear transformation is applied. Then we get

and

Considering , we have

and

Hence, Hurwitz stability of and implies Schur stability of and . Hurwitz stability is then established as in the CT case: according to conditions (1)–(3), we obtain , and . Correspondingly, is Hurwitz stable if

where . After calculation, if condition (30) in Assumption A2 is satisfied, the DT game control system is Schur stable, which means that all eigenvalues of lie inside the unit circle.
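The bilinear-transformation step can be checked on quadratics. The sketch below assumes the transformation z = (1 + s)/(1 − s), which maps the open left half-plane onto the open unit disk; the exact transformation used in the proof is not reproduced in this version, so this choice is an assumption. Multiplying p((1 + s)/(1 − s)) by (1 − s)² for p(z) = z² + b1 z + b0 gives (1 − b1 + b0)s² + (2 − 2b0)s + (1 + b1 + b0).

```python
import cmath


def quadratic_roots(a2, a1, a0):
    """Roots of a2*s**2 + a1*s + a0 (complex coefficients allowed, a2 != 0)."""
    disc = cmath.sqrt(a1 * a1 - 4 * a2 * a0)
    return (-a1 + disc) / (2 * a2), (-a1 - disc) / (2 * a2)


def is_schur(b1, b0):
    """True if both roots of z**2 + b1*z + b0 lie strictly inside the unit circle."""
    return all(abs(z) < 1 for z in quadratic_roots(1, b1, b0))


def bilinear(b1, b0):
    """Coefficients of (1 - s)**2 * p((1 + s) / (1 - s)) for p(z) = z**2 + b1*z + b0."""
    return 1 - b1 + b0, 2 - 2 * b0, 1 + b1 + b0


def schur_via_bilinear(b1, b0, tol=1e-15):
    """Decide Schur stability of p by testing Hurwitz stability of the transformed polynomial."""
    c2, c1, c0 = bilinear(b1, b0)
    if abs(c2) < tol:  # c2 = p(-1) = 0, so z = -1 lies on the unit circle
        return False
    return all(s.real < 0 for s in quadratic_roots(c2, c1, c0))


if __name__ == "__main__":
    for b1, b0 in [(-0.5, 0.1), (0, 2), (0.3 + 0.2j, -0.4j)]:
        print(b1, b0, is_schur(b1, b0), schur_via_bilinear(b1, b0))
```

The two columns agree because each root z of p (other than z = −1) corresponds to the root s = (z − 1)/(z + 1) of the transformed polynomial, and |z| < 1 exactly when Re s < 0.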

Therefore, when A1 and A2 in Theorem 2 are satisfied, system (31) meets Definition 2, which means the CT-DT switching dynamic MAS can achieve consensus.

(Necessity) Suppose the directed communication graph does not contain a directed spanning forest, meaning that at least one follower cannot receive information from any leader. The state of that follower is then not influenced by the leaders, and thus the system cannot achieve consensus. If the CT-DT switching dynamic MAS can achieve consensus under arbitrary switching, Definition 2 must be satisfied, so that , is necessarily Hurwitz stable and , is Schur stable. This establishes necessity. □

Remark 2. The system operates in two distinct modes—CT game control and DT game control—between which it switches arbitrarily. Only one mode is active at any time.

This theorem provides necessary and sufficient conditions for achieving consensus in the continuous–discrete switching multi-agent system. Condition A1 requires the communication graph to contain a directed spanning forest, which ensures that information can flow from the leaders to all followers. Condition A2 imposes constraints on the control gains and , guaranteeing system stability under arbitrary switching dynamics. Together, these conditions ensure that all agents' states converge to a common value, even as the system switches arbitrarily between continuous-time and discrete-time dynamics.
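Condition A1 can be checked mechanically. Interpreting a directed spanning forest as in the necessity argument (every follower receives information, possibly indirectly, from at least one leader), a breadth-first search started from all leaders suffices. A minimal Python sketch; the edge convention, where a pair (j, i) means that agent i receives information from agent j, and the example graphs are hypothetical:

```python
from collections import deque


def has_spanning_forest(n, edges, leaders):
    """True if every agent 0..n-1 is reachable from at least one leader.

    edges: (j, i) pairs meaning agent i receives information from agent j.
    """
    out = {k: [] for k in range(n)}
    for j, i in edges:
        out[j].append(i)
    seen = set(leaders)
    queue = deque(leaders)
    while queue:                      # breadth-first search from all leaders at once
        u = queue.popleft()
        for w in out[u]:
            if w not in seen:
                seen.add(w)
                queue.append(w)
    return len(seen) == n


if __name__ == "__main__":
    # Hypothetical graph: leaders 0 and 1, followers 2, 3, 4.
    print(has_spanning_forest(5, [(0, 2), (1, 3), (3, 4), (2, 3)], [0, 1]))  # True
    print(has_spanning_forest(5, [(0, 2), (1, 3)], [0, 1]))                  # False
```

The BFS tree grown from each leader is one tree of the forest, so a True result also yields an explicit spanning forest if the parent of each newly visited node is recorded.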

4. Control Input with Sampled Data

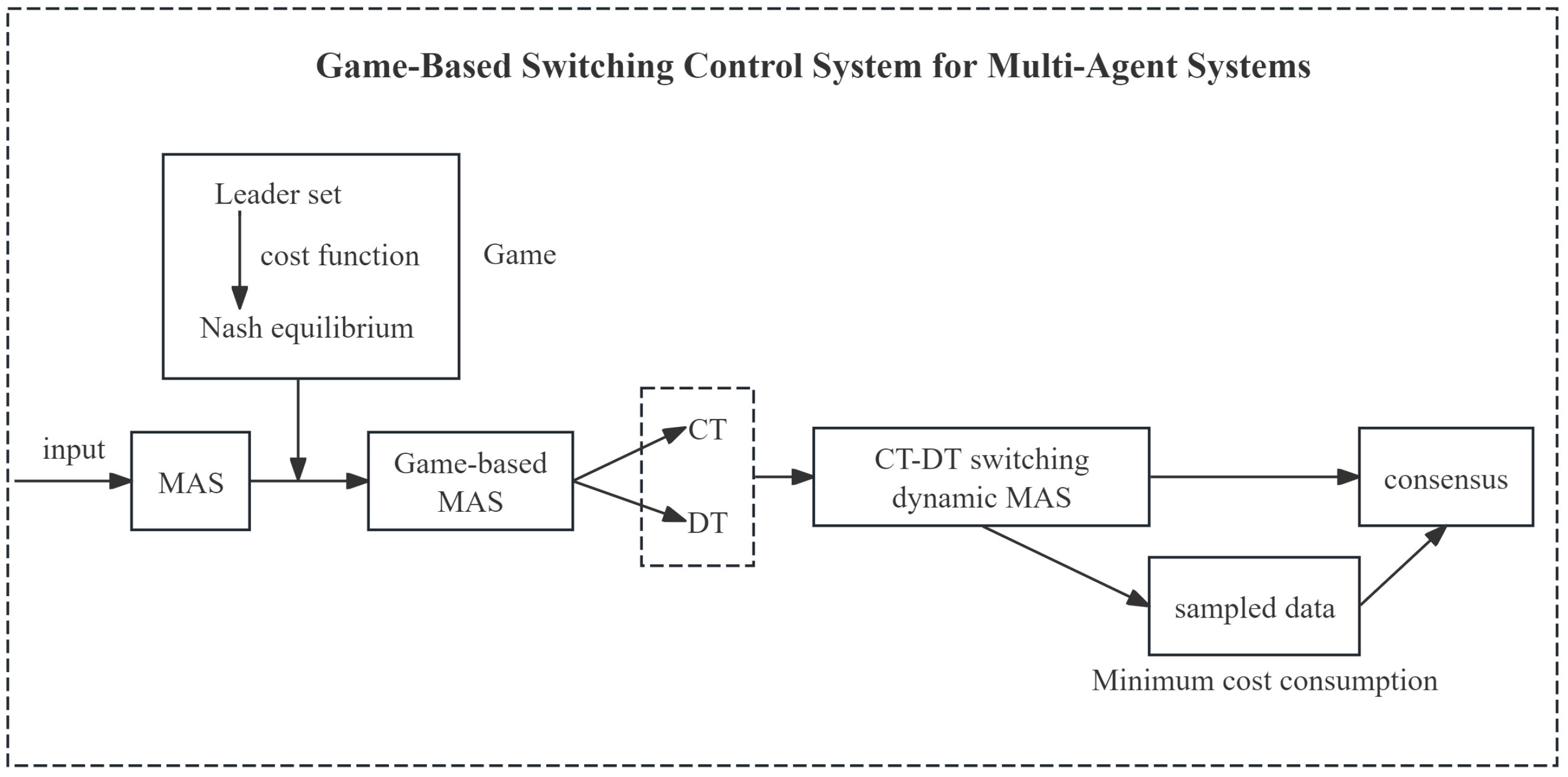

To provide a clearer and more intuitive overview of the proposed control system architecture, a block diagram illustrating the interaction among the game-based decision-making module, the unified sampled-data controller, and the switching multi-agent system is presented in Figure 1.

We use the same control protocol for systems (1) and (3); the sampled-data protocol is designed as

Under this protocol, system (1) becomes

where , . Under the game framework, the CT system becomes the CT game control system

Let ; then (36) can be written as

For the DT game control system, similar transformations give

where .

We make the following assumptions.

- B1: The graph contains a directed spanning forest.
- B2: The control gains and the sampling period simultaneously satisfy (39) and (40).

Theorem 3. The CT-DT switching MAS (37)–(38) achieves consensus if and only if conditions B1 and B2 are satisfied.

Remark 3. This protocol implements a unified sampled-data control framework applicable to both CT and DT systems. When the system switches between modes, the controller does not need to switch between two control strategies; only the dynamic behavior of the plant changes.

This theorem introduces a unified sampled-data control protocol applicable to both CT and DT subsystems. Its primary advantage lies in avoiding frequent controller switching during system mode changes, thereby simplifying implementation. Conditions B1 and B2 pertain to the graph structure and the constraints on control gains and the sampling period h, respectively. These conditions ensure the system converges to consensus even with sampled information. This control strategy is particularly suitable for practical hybrid systems that require a unified control framework.
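To illustrate a single sampled-data input shared by both modes, here is a toy Python simulation. Everything in it is an assumption made for illustration rather than the paper's models (1)–(4) or protocol (34): a chain of three double-integrator agents with one leader (no game layer), gains alpha and beta, a CT mode integrated exactly under a zero-order hold, a DT mode modeled as a forward-Euler double integrator with step h, and the active mode drawn at random at each sampling instant.

```python
import random


def simulate(h, alpha, beta, steps, seed=0):
    """Toy chain 0 -> 1 -> 2 (agent 0 is the leader) with stochastic CT/DT switching."""
    rng = random.Random(seed)
    neighbors = {0: [], 1: [0], 2: [1]}  # agent i receives from neighbors[i]
    x = [0.0, 5.0, -4.0]                 # hypothetical initial positions
    v = [1.0, -3.0, 2.0]                 # hypothetical initial velocities
    for _ in range(steps):
        # Same sampled-data input in both modes: computed at t_k, held over one period.
        u = [-sum(alpha * (x[i] - x[j]) + beta * (v[i] - v[j]) for j in neighbors[i])
             for i in range(3)]
        continuous = rng.random() < 0.5  # pick the active mode at random
        for i in range(3):
            if continuous:
                # exact solution of x'' = u over one period with u held constant
                x[i] += h * v[i] + 0.5 * h * h * u[i]
            else:
                # assumed DT model: x(k+1) = x(k) + h v(k), v(k+1) = v(k) + h u(k)
                x[i] += h * v[i]
            v[i] += h * u[i]
    return x, v


if __name__ == "__main__":
    x, v = simulate(h=0.1, alpha=1.0, beta=2.0, steps=2000)
    print(max(abs(xi - x[0]) for xi in x), max(abs(vi - v[0]) for vi in v))
```

With these hypothetical values, the position and velocity disagreements with the leader decay to a negligible level. The input computation is identical in both branches and only the plant update changes, which is the point made in Remark 3.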

5. Simulations

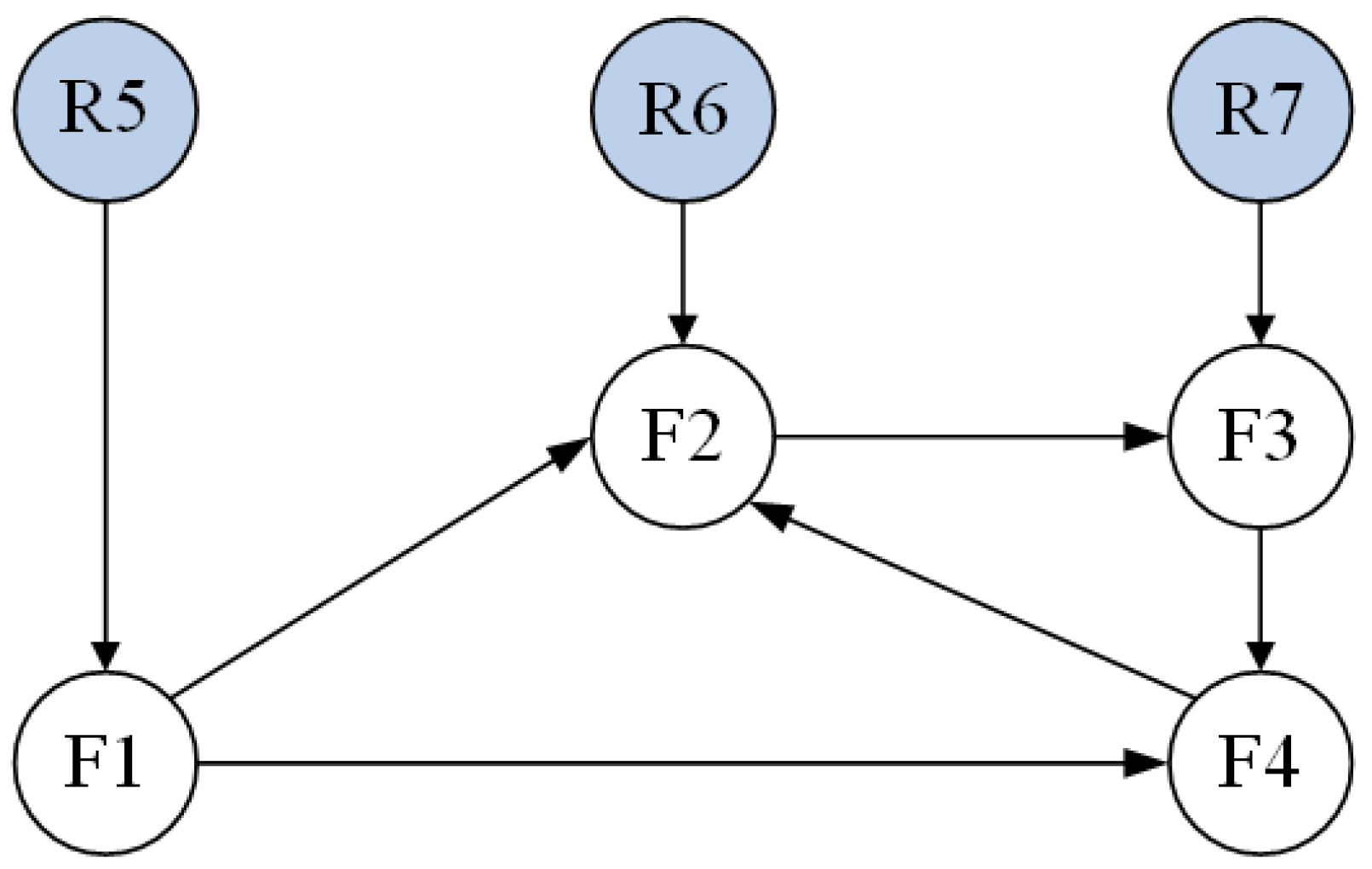

Consider a directed graph consisting of 7 agents, where R1, R2, R3 are leaders and F1, F2, F3, F4 are followers, as shown in Figure 2. Clearly, it contains a spanning forest.

The Laplacian matrix L is constructed from the directed graph topology shown in Figure 2. For a directed graph , the Laplacian matrix is defined by

where if there exists a directed edge from agent j to agent i, otherwise . Based on the communication topology in Figure 2, the adjacency matrix is first determined. Subsequently, the Laplacian matrix L is computed as follows:

whose eigenvalues are , , , . The matrix is partitioned according to the leader–follower structure: the first four rows and columns correspond to followers F1–F4, and the last three rows and columns correspond to leaders R1–R3. The eigenvalues of can then be calculated as , , .

The initial state of each agent is specified as and . The initial position and velocity vectors are intentionally dispersed in both magnitude and direction, so that the system starts from a challenging configuration that effectively demonstrates the convergence capability of the control protocols. The following examples verify the correctness of Theorems 2 and 3.
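The Laplacian construction described above can be sketched as follows, assuming the standard convention l_ii = Σ_j a_ij and l_ij = −a_ij for i ≠ j (the defining formula is not reproduced in this version). The five-agent graph is hypothetical, not the graph of Figure 2; followers are listed first, matching the partition used in the text.

```python
def laplacian(n, edges, weight=1.0):
    """L = D - A with a_ij = weight when there is a directed edge from j to i.

    edges: (j, i) pairs; self-loops are assumed absent.
    """
    a = [[0.0] * n for _ in range(n)]
    for j, i in edges:
        a[i][j] = weight
    lap = [[-a[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        lap[i][i] = sum(a[i])  # weighted in-degree of agent i
    return lap


def partition(lap, n_followers):
    """Split L into (L_ff, L_fl, L_lf, L_ll) with followers listed first."""
    f = n_followers
    top, bottom = lap[:f], lap[f:]
    return ([r[:f] for r in top], [r[f:] for r in top],
            [r[:f] for r in bottom], [r[f:] for r in bottom])


# Hypothetical graph: followers 0-2, leaders 3-4 (leaders hear only each other).
EDGES = [(3, 0), (4, 1), (0, 2), (1, 2), (3, 4), (4, 3)]
L = laplacian(5, EDGES)

if __name__ == "__main__":
    for row in L:
        print(row)
```

Every row of L sums to zero, and because leaders receive no information from followers, the leader rows vanish in the follower columns, so L is block upper triangular under this ordering.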

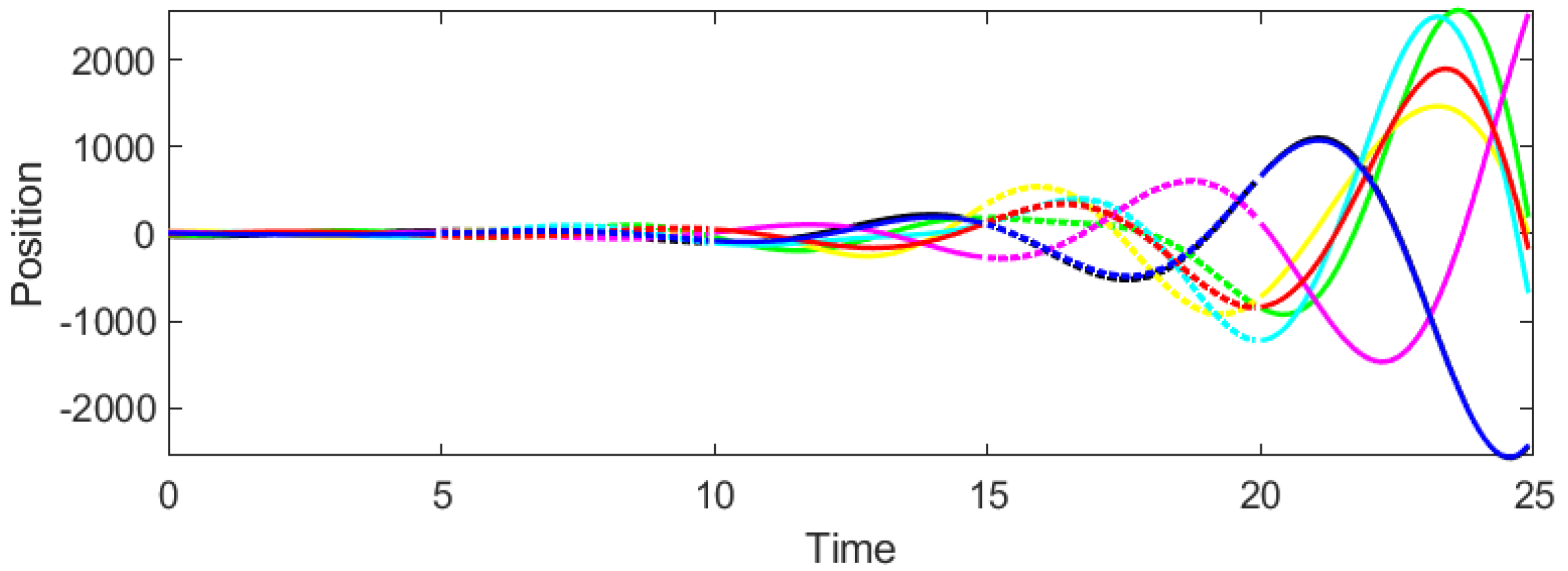

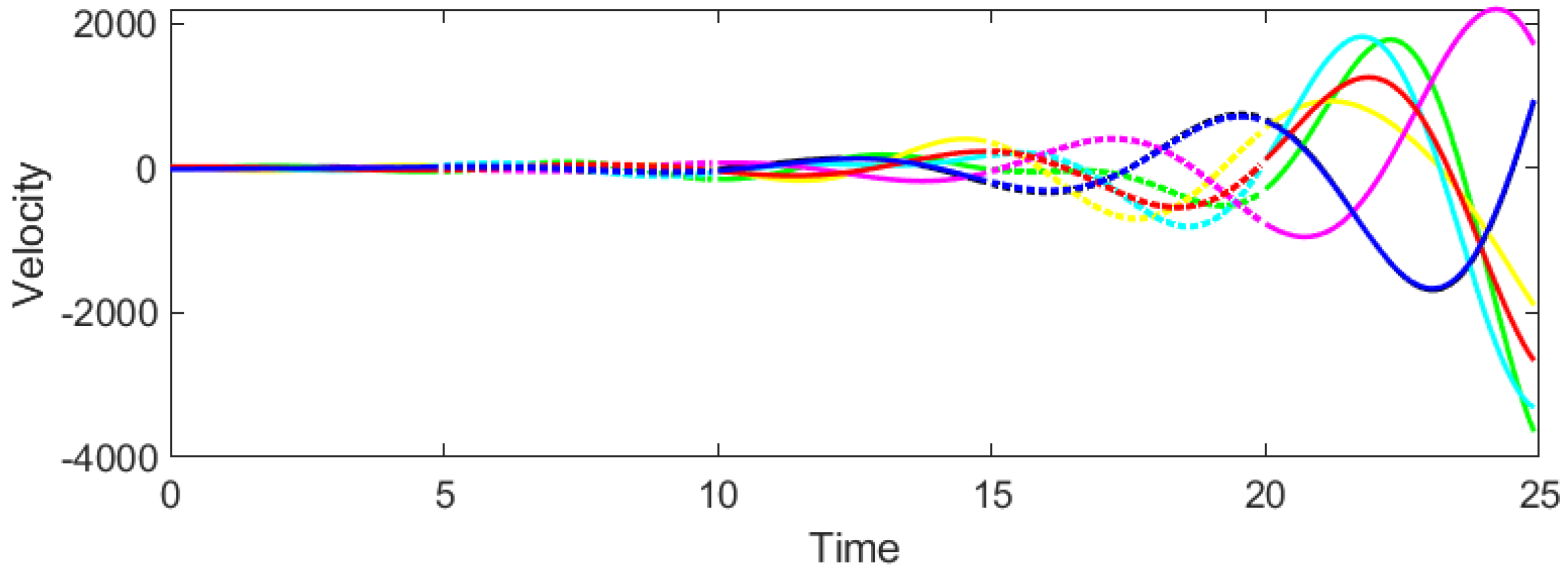

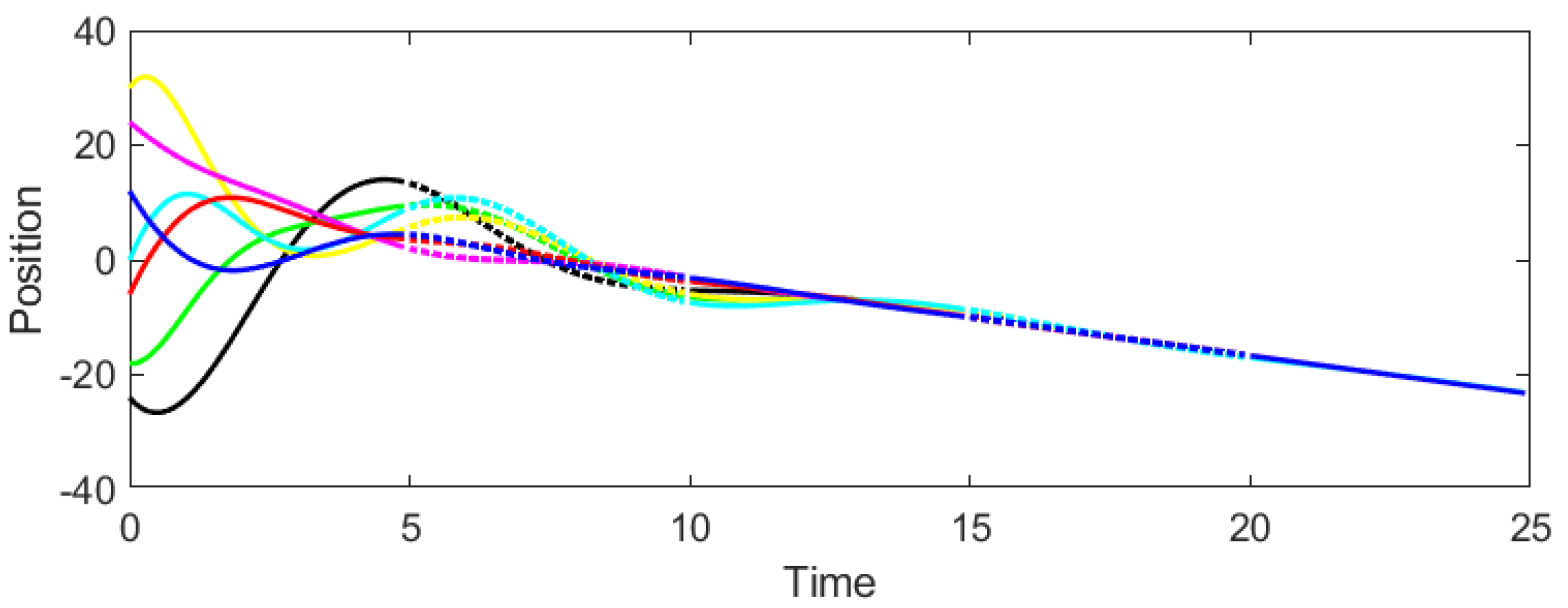

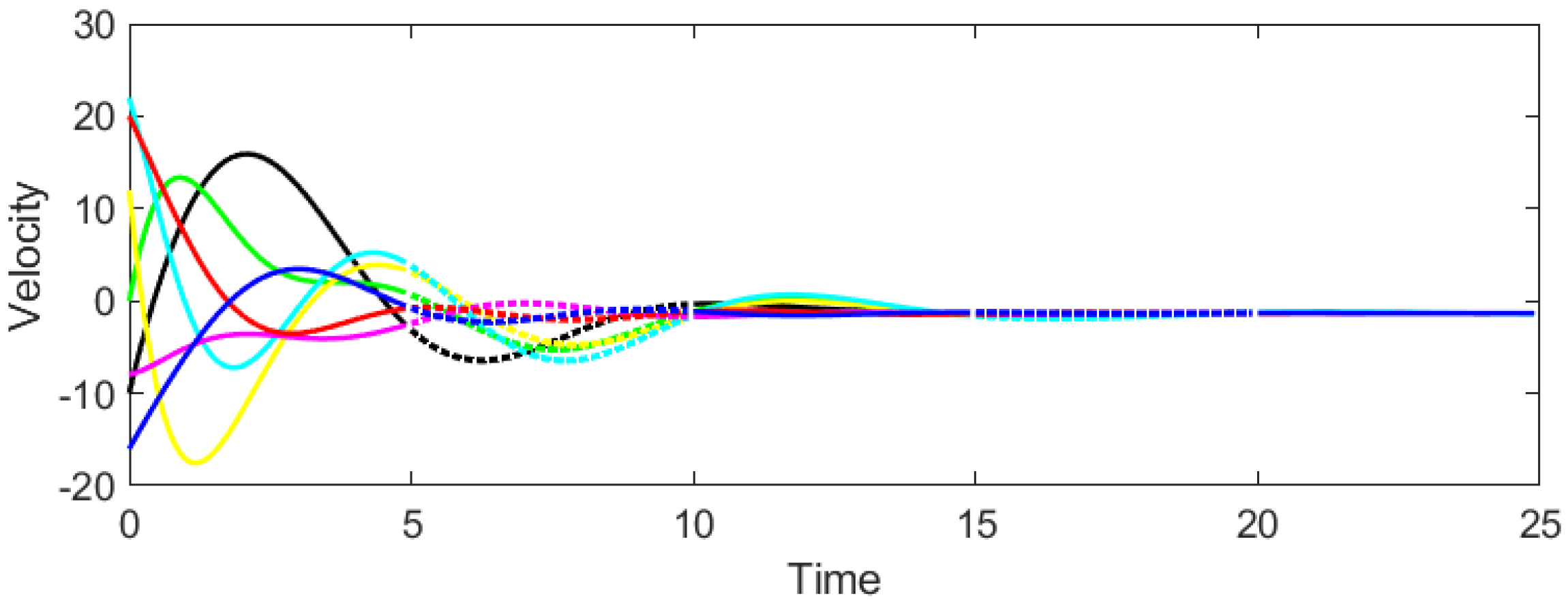

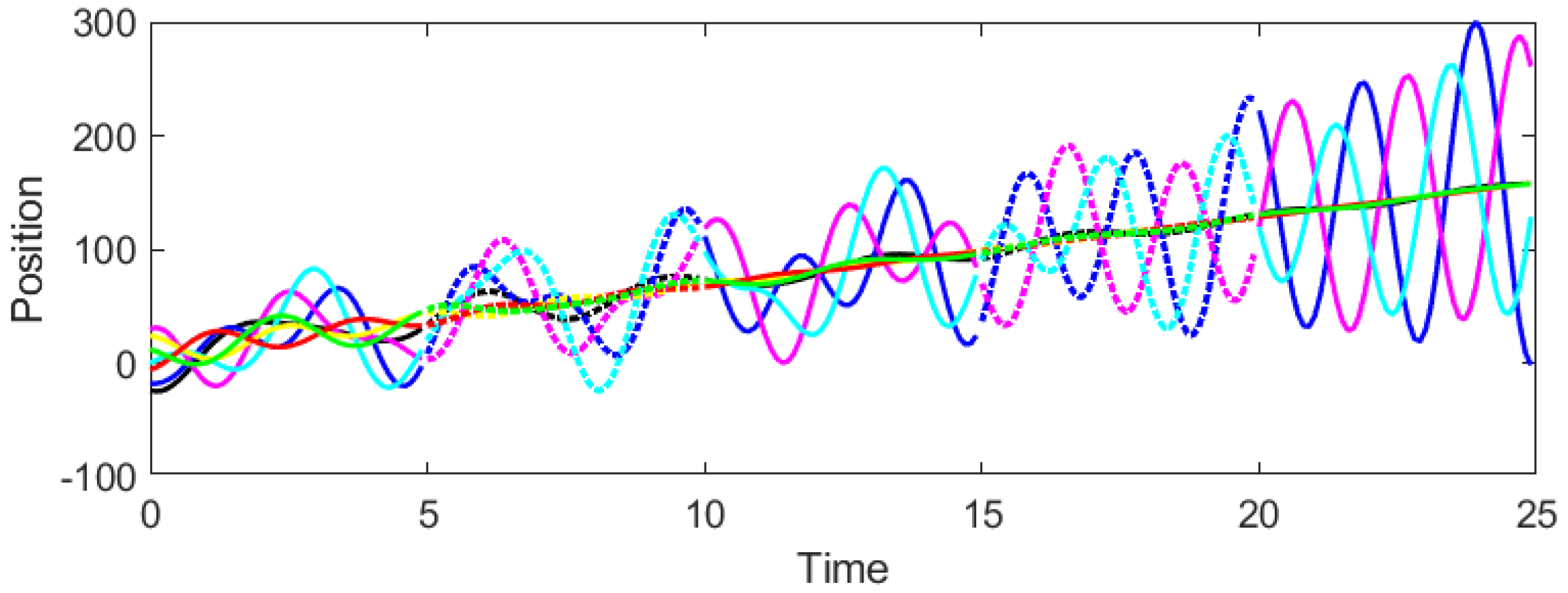

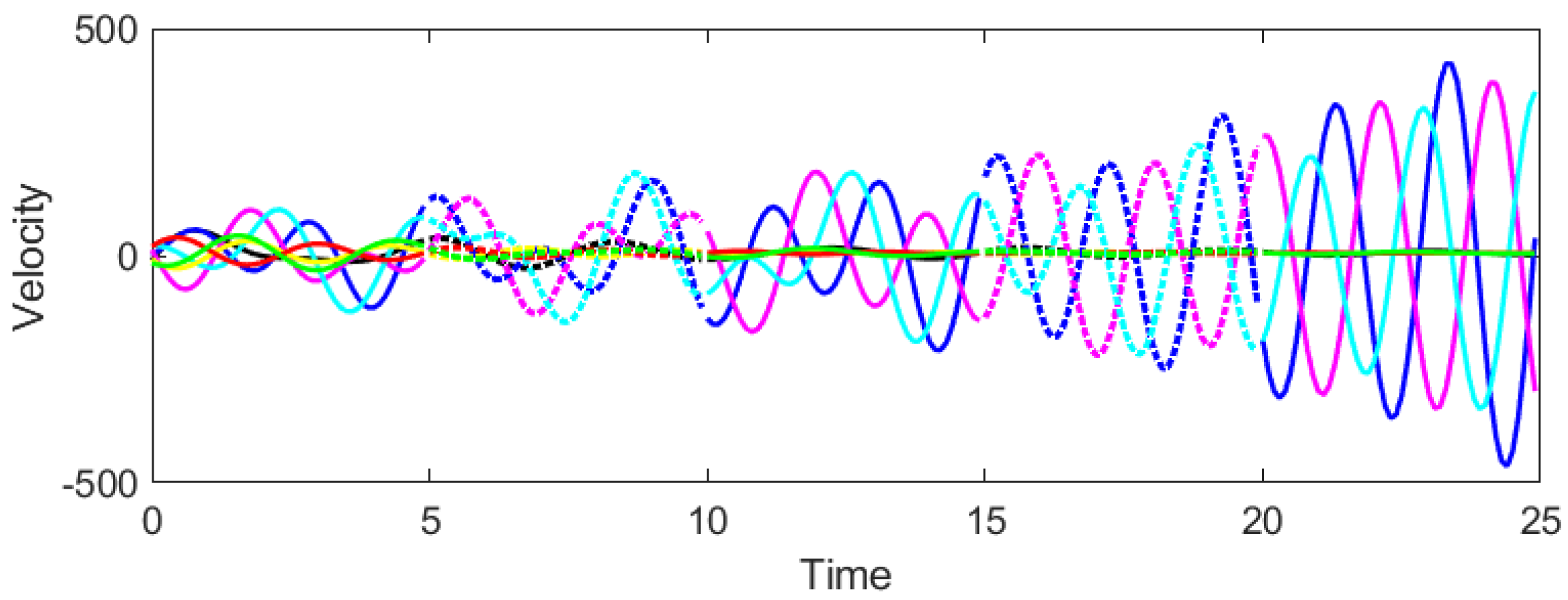

Example 1. Consider the CT-DT switching system (1)–(4) under protocol (31) and stochastic switching, with . According to Theorem 2, the admissible interval of β is . We then select and to test whether consensus can be achieved. Figure 3 and Figure 4 show that when , the system fails to reach consensus: the positions and velocities of the agents diverge over time, indicating instability. This agrees with Theorem 2, since violates the gain condition (29), which leads to the loss of Hurwitz/Schur stability in the error dynamics.

In contrast, when , Figure 5 and Figure 6 show that all agents achieve consensus: the position and velocity trajectories converge to common values, confirming the theoretical prediction. The convergence is smooth and occurs within approximately 15 s, which verifies the correctness of Theorem 2 and illustrates the effectiveness of the proposed game-based control strategy under switching dynamics.

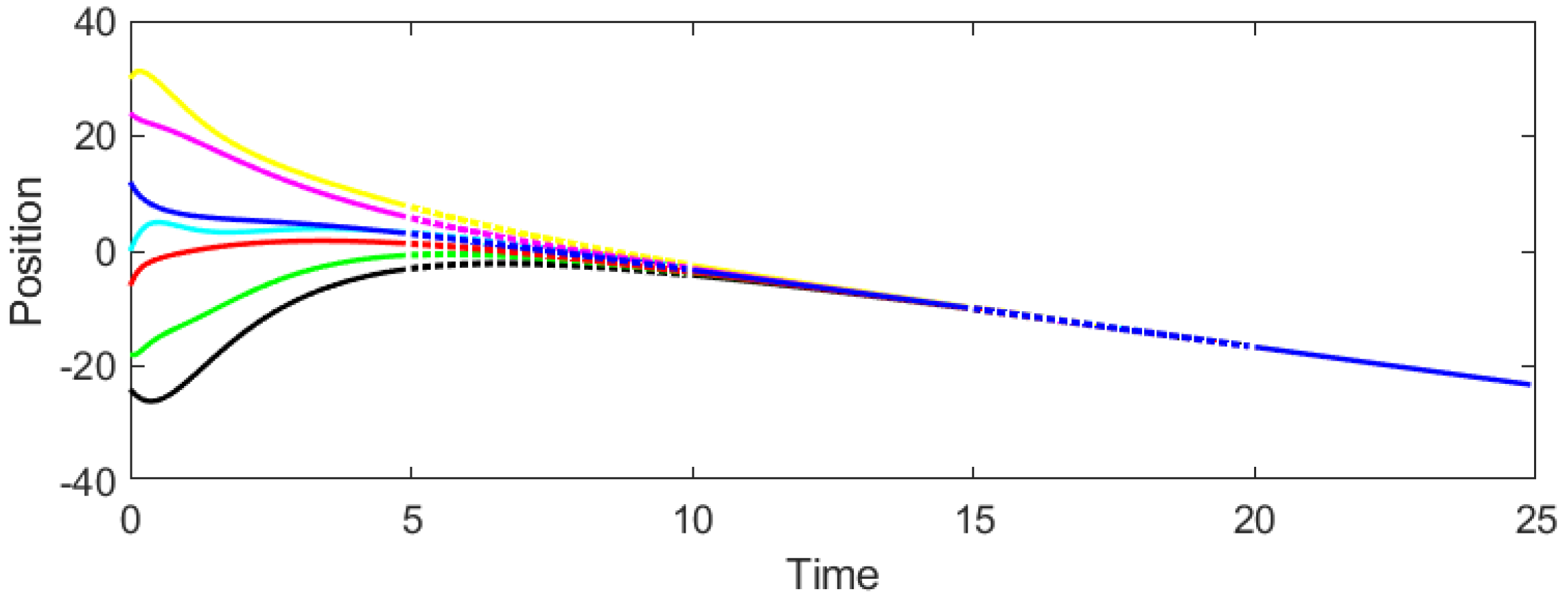

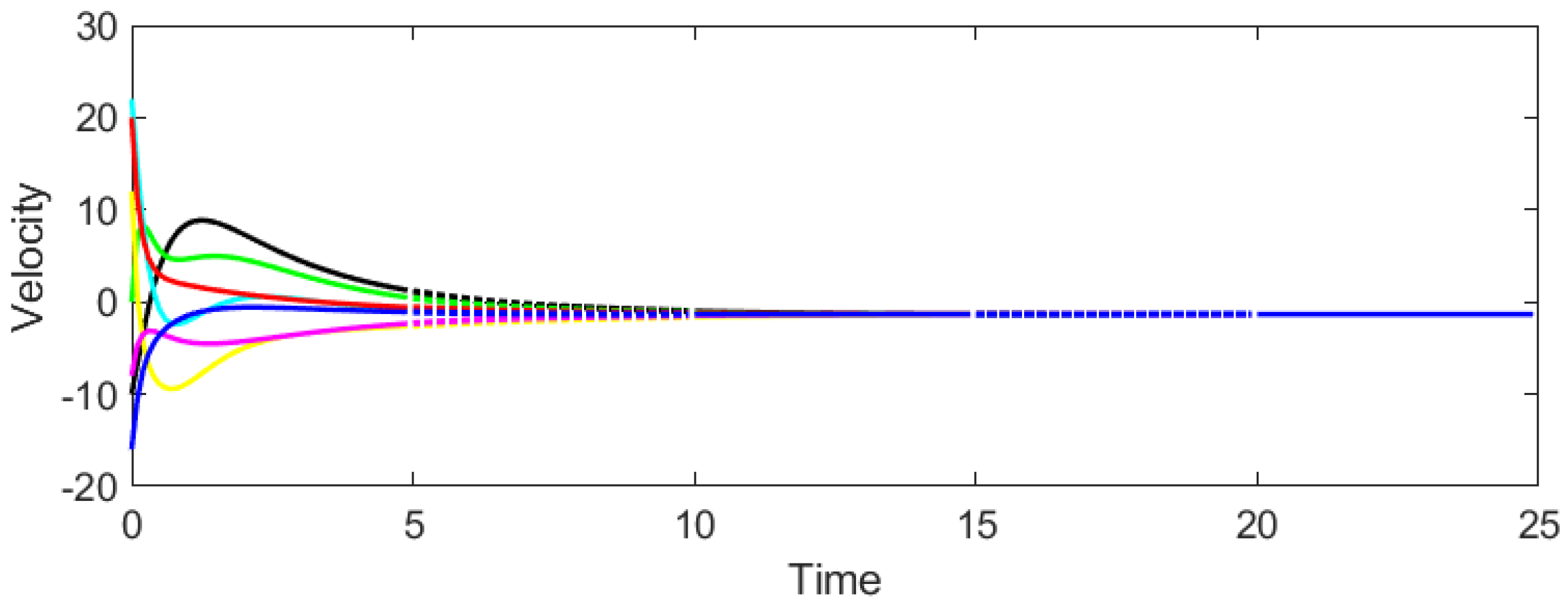

Example 2. Under protocol (34) with stochastic switching, the CT-DT system (1)–(4) is analyzed with the parameter value set to . From Theorem 3, the admissible interval of β is . We then set , which determines the admissible range of the sampling period, 0 < h < 1.143. We select and . Figure 7 and Figure 8 show that the system achieves consensus when : the trajectories converge steadily, validating the sampled-data control approach, since the smaller sampling period provides sufficiently frequent updates to maintain stability under switching. In contrast, Figure 9 and Figure 10 show that consensus is not attained when : the system exhibits oscillatory and divergent behavior, consistent with the violation of the maximum allowable sampling period. These results confirm the validity of Theorem 3 and underscore the critical role of the sampling period in maintaining system stability under sampled-data control.
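The dependence on h can be explored by scanning sampling periods and checking the spectral radius of each mode's error matrix. The sketch below rests on hypothetical assumptions (double-integrator agents, a zero-order-hold CT mode, a forward-Euler DT mode, a single Laplacian eigenvalue mu = 1, α = 1, β = 2) and does not reproduce the bound 0 < h < 1.143 obtained for the paper's model. It also checks each mode in isolation, which by itself does not prove stability under arbitrary switching.

```python
import cmath


def spectral_radius_2x2(m):
    """Largest eigenvalue modulus of a 2x2 (possibly complex) matrix."""
    (a, b), (c, d) = m
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return max(abs((tr + disc) / 2), abs((tr - disc) / 2))


def mode_matrices(h, alpha, beta, mu):
    """ASSUMED per-eigenvalue error matrices: CT mode under ZOH, DT mode by Euler."""
    ct = [[1 - alpha * mu * h * h / 2, h - beta * mu * h * h / 2],
          [-alpha * mu * h, 1 - beta * mu * h]]
    dt = [[1, h],
          [-alpha * mu * h, 1 - beta * mu * h]]
    return ct, dt


def largest_stable_h(alpha, beta, mus, h_max=3.0, step=1e-3):
    """Largest grid value of h at which every mode matrix is Schur stable."""
    best = None
    for k in range(1, int(round(h_max / step)) + 1):
        h = k * step
        if all(spectral_radius_2x2(m) < 1
               for mu in mus for m in mode_matrices(h, alpha, beta, mu)):
            best = h
    return best


if __name__ == "__main__":
    print(largest_stable_h(1.0, 2.0, [1.0]))
```

For these hypothetical values, the CT-mode matrix acquires an eigenvalue at −1 exactly when h = 1 (and is unstable beyond it), while the DT-mode matrix stays Schur stable up to h = 2, so the scan stops just below h = 1.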

Remark 4. The initial states selected in the simulations represent a general case with significant initial disagreement. The consensus conditions in Theorems 2 and 3 depend only on the communication graph (a directed spanning forest), the control gains, and, for Theorem 3, the sampling period, and not on the initial states; hence, the proposed control strategies achieve consensus from arbitrary initial conditions.

To assess the practical contribution and performance of the proposed method, we provide a qualitative comparison based on the convergence behavior observed in our simulations. While the primary goal of this paper is to establish the theoretical foundation and prove consensus under the game-theoretic framework, the simulation results also indicate favorable performance.

This convergence behavior compares favorably with existing non-game-theoretic approaches for switching systems. Compared with the classic consensus protocols for switched systems in [25], which often exhibit slower convergence due to their passive reaction to neighborhood errors, our game-based approach enables agents (especially leaders) to proactively adjust their strategies by anticipating others' actions. This strategic behavior, guided by the cost functions, leads to a more coordinated and efficient path to consensus, as reflected in the short settling time in our results. Compared with the sampled-data control method in [27], our unified protocol (Example 2) not only avoids frequent controller switching but also, as seen in Figure 7 and Figure 8, maintains a fast convergence rate. This suggests that integrating the game-theoretic decision-making process enhances the system's coordination efficiency even under a unified control structure.