AORO: Auto-Optimizing Reasoning Order for Multi-Hop Question Answering

Abstract

1. Introduction

- This paper presents AORO to automatically optimize the reasoning order by iteratively training the model and selecting the better retrieval target.

- On two multi-hop QA datasets, QASC and MultiRC, AORO outperforms both non-ranking and rule-based ranking methods, demonstrating its effectiveness.

- Analysis across different PTMs and datasets indicates that the learned order is consistently superior and that the easier-to-harder retrieval strategy generalizes, underscoring the importance of selecting an appropriate reasoning order before launching the reasoning process.

2. Background and Related Work

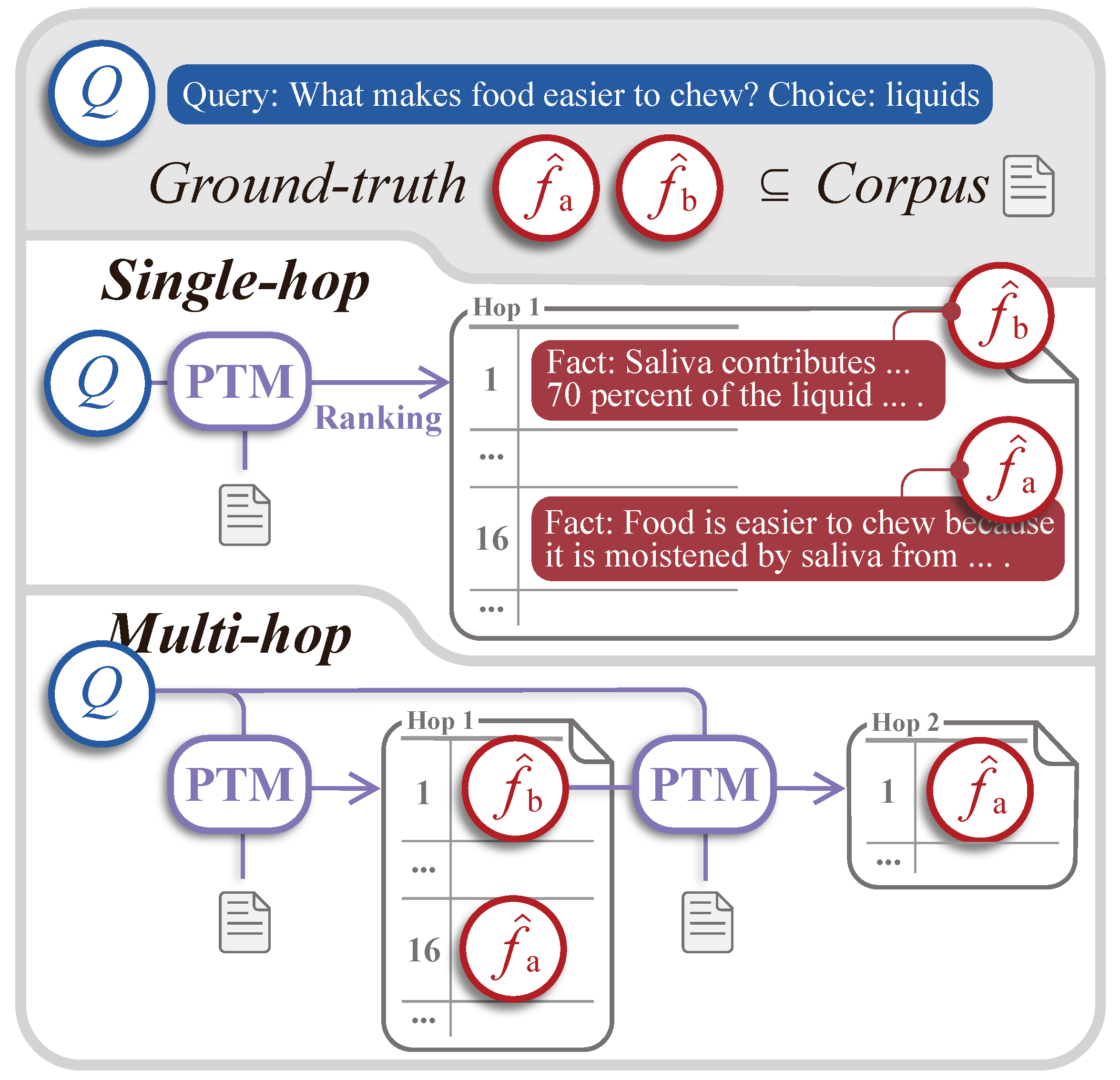

2.1. Multi-Hop Task

2.2. Multi-Hop Retrieval

2.3. Reasoning Order

3. Methods

3.1. Task and Notation Definition

| Algorithm 1 Automatic optimization of reasoning order. |
|---|
| Input: Q, , PTM |
| Output: |

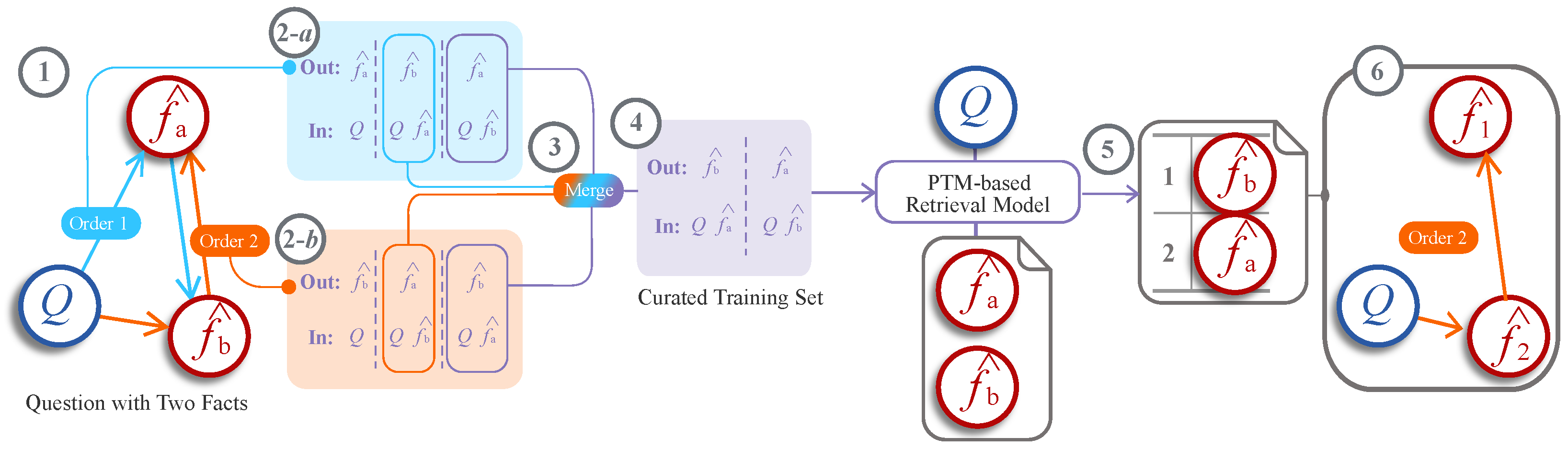

3.2. Automatic Optimization of Reasoning Order

3.2.1. Building Unbiased Training Data

- Standard samples, which are generated based on the reasoning orders. For a given order, each fact is treated as the target output, with the query and all preceding facts serving as the input.

- Augmented samples, which are created by inserting a different fact into the reasoning order. This simulates a scenario where a different fact was mistakenly retrieved in a previous step, thereby training the retrieval model to recover from the error. The objective is to guide the model back to the correct target for the current stage.
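The two sample types above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the tuple layout `(query, context, target)`, and the choice to append the wrong fact at the end of the preceding context are all assumptions made for the example.

```python
from typing import List, Tuple

# (query, facts already retrieved, fact the model should retrieve next)
Sample = Tuple[str, List[str], str]


def standard_samples(query: str, order: List[str]) -> List[Sample]:
    """One sample per position: the query plus all preceding facts -> the next fact."""
    return [(query, order[:i], order[i]) for i in range(len(order))]


def augmented_samples(query: str, order: List[str], distractors: List[str]) -> List[Sample]:
    """Simulate a wrongly retrieved fact in an earlier step.

    Assumption: the wrong fact is a distractor that is not part of the order,
    and it is appended to the facts retrieved so far. The target stays the
    correct fact for the current stage, so the model learns to recover.
    """
    samples: List[Sample] = []
    for i, target in enumerate(order):
        for wrong in distractors:
            if wrong in order:
                continue  # only insert facts that differ from the ordered ones
            samples.append((query, order[:i] + [wrong], target))
    return samples


if __name__ == "__main__":
    order = ["fact A", "fact B"]
    for sample in standard_samples("some query", order):
        print("standard :", sample)
    for sample in augmented_samples("some query", order, ["fact X"]):
        print("augmented:", sample)
```

Under these assumptions, an order of n facts yields n standard samples and, for each distractor, n augmented samples that share the same targets.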

3.2.2. Training

3.2.3. Prediction

3.3. Retraining Based on Orders

4. Experiments

4.1. Datasets

- QASC [21]: This dataset is an 8-choice QA task (https://allenai.org/data/qasc, accessed on 4 January 2024) with a knowledge base containing 17 million facts. Each question always has two ground-truth facts. Following the evaluation protocol of prior work on this dataset [31,35], performance is primarily measured using Recall@10 in two variants: “both found”, where both ground-truth facts appear among the top ten retrieved facts, and “at least one found”, where at least one of them does. The candidate set C on QASC is extracted from the knowledge base using Heuristic+IR [21], which caps validation-set performance at 81.8 for Recall@10 at least one found and 61.3 for Recall@10 both found. After training, each sample in the validation set retrieves one fact in the first hop and nine facts in the second hop, for a total of ten facts over the two hops.

- MultiRC [30]: This dataset is a multiple-choice QA task in which each sample includes a question, a set of 2 to 14 answer choices, a corresponding paragraph, and a set of ground-truth facts (2 to 4 in the validation set, 2 to 6 in the training set). This paper uses the original MultiRC (https://cogcomp.seas.upenn.edu/multirc/, accessed on 4 January 2024), not the version included in SuperGLUE [40]. The candidate set C consists of all the facts within the paragraph. Because a training sample has at most six ground-truth facts, auto-optimization runs for at most six iterations. To ensure a fair comparison with RPA, AORO uses the same dynamic hop-stopping method during MultiRC validation. Specifically, for each query, the query’s non-stop words that appear in the retrieved facts are removed. Once all applicable words have been removed, hopping stops. Because a validation sample has at most four ground-truth facts, the number of hops is also capped at four. Since the number of hops changes dynamically, the F1 score is used as the evaluation metric.
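The two Recall@10 variants described for QASC can be computed as in the sketch below. The function names and the input layout (a ranked list of retrieved facts plus a list of ground-truth facts per sample) are illustrative assumptions, not taken from the paper's code.

```python
from typing import Dict, List, Sequence, Tuple


def recall_flags(retrieved: Sequence[str], gold: Sequence[str], k: int = 10) -> Dict[str, bool]:
    """Check one sample: are all / any of the gold facts in the top-k retrieved facts?"""
    top_k = set(retrieved[:k])
    hits = sum(1 for fact in gold if fact in top_k)
    return {"both_found": hits == len(gold), "at_least_one_found": hits >= 1}


def recall_at_k(samples: List[Tuple[Sequence[str], Sequence[str]]], k: int = 10) -> Tuple[float, float]:
    """Return (both found, at least one found) as percentages over all samples."""
    flags = [recall_flags(retrieved, gold, k) for retrieved, gold in samples]
    both = sum(f["both_found"] for f in flags)
    one = sum(f["at_least_one_found"] for f in flags)
    return 100.0 * both / len(flags), 100.0 * one / len(flags)


if __name__ == "__main__":
    toy = [
        (["a", "b", "c"], ["a", "b"]),  # both gold facts retrieved
        (["b", "a"], ["a", "b"]),       # both gold facts retrieved
        (["a", "x"], ["a", "b"]),       # only one gold fact retrieved
        (["x", "y"], ["a", "b"]),       # none retrieved
    ]
    print(recall_at_k(toy))
```

For QASC, each sample has exactly two gold facts, so "both found" is the stricter of the two numbers by construction.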

4.2. Baselines

- BM25 [41] (no order): The traditional BM25 retrieval algorithm ranks a set of facts based on query terms that appear within each fact, without considering their proximity.

- AutoROCC [41] (no order): This method introduces an unsupervised strategy for selecting facts. It is an enhanced version of BM25 that aims to (i) maximize the relevance of the selected facts, (ii) minimize the overlap between the selected facts, and (iii) maximize the coverage of both the question and the answer. Based on these principles, which are tailored for multi-hop QA, AutoROCC delivers better search results compared to BM25.

- AIR [31] (no order): AIR (Alignment-based Iterative Retriever) is a simple, fast, unsupervised iterative evidence retrieval method. It is based on three key concepts: (i) an unsupervised alignment method that soft-aligns questions and answers with facts using embeddings, such as GloVe; (ii) an iterative process that reformulates queries to emphasize terms not addressed by the existing facts; (iii) a stopping criterion that halts retrieval when the terms in the question and candidate answers have been covered by the retrieved facts. Because AIR is iterative, facts are gathered one at a time, which implicitly establishes a reasoning order. Although the primary objective of AIR is collecting facts rather than sequencing them, it can also be used as a method for ordering facts. One of its alignment methods, GloVe, is therefore discussed among the training-free techniques in Section 4.6.

- RPA [14] (optimized order): Reasoning Path Augmentation (RPA) orders facts by the number of words they share with the query, which is a hand-crafted rule. It assumes that the more terms a fact shares with the query, the more relevant it is to that query. By prioritizing these relevant facts, RPA can reduce complexity and improve retrieval results. However, it is uncertain whether this manually determined order is the most effective one. For comparison, RPA’s order is replicated and tested in the AORO environment.
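The coverage-based stopping idea described for AIR, which is also the basis of the dynamic hop-stopping rule used on MultiRC, can be sketched as below. This is a simplified illustration under stated assumptions: facts are scored by plain lexical overlap with the uncovered query terms (AIR itself uses embedding alignment, and AORO uses a trained PTM), the stop-word list is a tiny hand-written stand-in, and all names are hypothetical.

```python
import re
from typing import List, Set

# Tiny illustrative stop-word list (an assumption, not the list used in the paper).
STOP_WORDS = {"a", "an", "the", "is", "was", "it", "at", "of", "did", "why", "would"}


def content_terms(text: str) -> Set[str]:
    """Lowercase the text and keep only non-stop-word tokens."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS}


def retrieve_until_covered(query: str, candidates: List[str], max_hops: int = 4) -> List[str]:
    """Retrieve one fact per hop until the query terms are covered or max_hops is hit."""
    uncovered = content_terms(query)
    pool = list(candidates)
    chain: List[str] = []
    while uncovered and pool and len(chain) < max_hops:
        # Stand-in scorer: number of still-uncovered query terms a fact contains.
        best = max(pool, key=lambda fact: len(uncovered & content_terms(fact)))
        if not uncovered & content_terms(best):
            break  # no remaining fact covers any uncovered term
        chain.append(best)
        pool.remove(best)
        uncovered -= content_terms(best)  # drop the terms this hop has covered
    return chain


if __name__ == "__main__":
    facts = ["The bell was old.", "It would ring at night.", "Cats sleep."]
    print(retrieve_until_covered("Why did the bell ring at night?", facts))
```

The `max_hops=4` default mirrors the four-hop cap stated for MultiRC validation; the number of hops actually taken depends on how quickly the query terms are covered.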

4.3. PTMs

- RoBERTa [37]: RoBERTa has the same model structure as BERT, but it differs in several important ways. RoBERTa utilizes more data, a larger batch size, and a dynamic masking method, all of which enhance its performance on downstream tasks. To compare with RPA, which also optimizes reasoning orders, AORO employs the same RoBERTa model.

- DeBERTa [42]: DeBERTa encodes the content and positional information of words separately and employs disentangled matrices to calculate attention weights. This approach distinguishes it from BERT and RoBERTa. As a result, DeBERTa outperforms both RoBERTa and BERT in various downstream tasks.

4.4. Training Details

4.5. Similarity Metric for Orders

- RPA’s optimized order (): The reasoning order is optimized by RPA. Since RPA uses RoBERTa, .

- AORO’s optimized order (): The reasoning order is optimized by the AORO algorithm. It is further divided into and , reflecting the differences between the models.

4.6. Training-Free Methods

5. Experimental Results

5.1. Performance

- AORO (RoBERTa): When the PTM is RoBERTa, AORO generates , which is then used to train RoBERTa to achieve improved search performance. On the QASC dataset (see Table 2), with the same RoBERTa as the training PTM, AORO outperforms RPA by 0.4 on Recall@10 both found and by 0.3 on Recall@10 at least one found. On the MultiRC dataset (see Table 3), AORO also exceeds RPA by 1.0 in F1. This indicates that AORO provides a more search-friendly reasoning order than RPA. Specifically, is superior to , confirming that automatic optimization can achieve a more logical order than the manual rules used in RPA. However, the improvement on QASC is smaller than that on MultiRC, which is analyzed in detail in Section 5.3.

- AORO (DeBERTa): Similar to AORO (RoBERTa), AORO utilizes to train DeBERTa for the search task. AORO (RoBERTa) already outperforms RPA (RoBERTa) in search performance, and DeBERTa improves the results further. On the QASC dataset, as shown in Table 2, AORO (DeBERTa) achieves a 1.2 improvement in Recall@10 both found over AORO (RoBERTa). On the MultiRC dataset, as shown in Table 3, it achieves a 2.7-point increase in F1 (68.3 versus 65.6). This improvement is attributable not only to the advantages of compared to but also to DeBERTa’s superior model architecture and its powerful encoding capabilities in search tasks.

- AORO (RoBERTa on DeBERTa): To further investigate why AORO (DeBERTa) outperforms AORO (RoBERTa), AORO (RoBERTa on DeBERTa) is introduced, which utilizes to train RoBERTa. In this experiment, AORO (RoBERTa on DeBERTa) is compared with AORO (RoBERTa) using the same RoBERTa, so that only the reasoning order differs. If AORO (RoBERTa on DeBERTa) surpasses AORO (RoBERTa), it would indicate that is more effective than in reasoning tasks. As expected, AORO (RoBERTa on DeBERTa) performs 0.2 better on Recall@10 both found for the QASC dataset (see Table 2) and achieves a 0.6 increase in F1 for the MultiRC dataset (see Table 3). Although these improvements are modest, they support the observation that outperforms . This suggests that a stronger pre-trained model yields a more effective reasoning order. It also implies that even weaker models can achieve better results when trained on an effective reasoning order.

5.2. Quantitative Analysis of

5.3. Qualitative Analysis

5.4. Regularities in Reasoning Orders

- TF-IDF, RPA, GloVe: First, Q and the facts are preprocessed in three steps: (i) they are tokenized with word_tokenize from NLTK (Natural Language ToolKit), (ii) stop words are removed, and (iii) stems are extracted using the Porter Stemmer. This results in lists of words that are used for similarity calculations. The ranking process then involves the following steps: (i) identifying the fact that has the highest sentence similarity with Q, (ii) removing the words that overlap between Q and that fact, and (iii) using the remaining words as a new Q to compute similarity with the remaining facts. This ranking process continues iteratively until no facts remain.

- spaCy: spaCy’s similarity method operates on whole sentences and cannot compute inter-sentence similarity once a sentence has been split into individual words. As a result, the new Q is instead created by combining the old Q and the retrieved fact.
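The iterative ranking procedure above can be sketched as follows. To keep the example self-contained, the NLTK tokenizer, stop-word list, and Porter Stemmer are replaced by simple stand-ins (a regular expression, a short hand-written stop-word set, and a naive suffix stripper), and similarity is a plain overlap count in the spirit of RPA rather than TF-IDF or GloVe. All names are hypothetical, and "the remaining words" is interpreted here as the query words not yet matched by a selected fact.

```python
import re
from typing import List, Set

# Stand-in stop-word list (assumption; the paper uses NLTK-based preprocessing).
STOP_WORDS = {"a", "an", "the", "are", "is", "for", "as", "such", "what", "of"}


def naive_stem(word: str) -> str:
    """Very rough stand-in for the Porter Stemmer: strip a few common suffixes."""
    for suffix in ("ing", "es", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> Set[str]:
    """Tokenize, drop stop words, and stem."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return {naive_stem(t) for t in tokens if t not in STOP_WORDS}


def order_facts(query: str, facts: List[str]) -> List[str]:
    """Greedy ordering: pick the most similar fact, shrink the query, repeat."""
    query_terms = preprocess(query)
    remaining = list(facts)
    order: List[str] = []
    while remaining:
        # (i) fact with the highest overlap with the current query terms
        best = max(remaining, key=lambda fact: len(query_terms & preprocess(fact)))
        order.append(best)
        remaining.remove(best)
        # (ii)-(iii) drop the overlapping words; the rest forms the new query
        query_terms = query_terms - preprocess(best)
    return order


if __name__ == "__main__":
    q = "What are invaluable for soil health? annelids"
    facts = [
        "Annelids are worms such as the familiar earthworm.",
        "Earthworms are invaluable for soil health.",
    ]
    for rank, fact in enumerate(order_facts(q, facts), start=1):
        print(rank, fact)
```

On the QASC example used in the case study table, this overlap-based stand-in places the earthworm fact first because it shares three content words with the query, while the annelid fact shares only one.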

5.5. Case Studies

- Confirms the query phrase “going crazy” ().

- Validates the choice phrase “see a light” ().

- Investigates the cause of “crazy”, identifying it as “hallucinating noises” ().

- Combines Q and to establish the relationship that the sound comes from a bell ().

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Talmor, A.; Herzig, J.; Lourie, N.; Berant, J. Commonsenseqa: A question answering challenge targeting commonsense knowledge. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Volume 1 (Long and Short Papers). Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 4149–4158.

2. Abdalla, M.; Kasem, M.; Mahmoud, M.; Yagoub, B.; Senussi, M.; Abdallah, A.; Kang, S.; Kang, H. ReceiptQA: A Question-Answering Dataset for Receipt Understanding. Mathematics 2025, 13, 1760.

3. Sha, Y.; Feng, Y.; He, M.; Liu, S.; Ji, Y. Retrieval-Augmented Knowledge Graph Reasoning for Commonsense Question Answering. Mathematics 2023, 11, 3269.

4. Xiao, G.; Liao, J.; Tan, Z.; Yu, Y.; Ge, B. Hyperbolic Directed Hypergraph-Based Reasoning for Multi-Hop KBQA. Mathematics 2022, 10, 3905.

5. Tang, Y.; Ng, H.T.; Tung, A.K.H. Do multi-hop question answering systems know how to answer the single-hop sub-questions? In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, EACL 2021, Online, 19–23 April 2021; Merlo, P., Tiedemann, J., Tsarfaty, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 3244–3249.

6. Feldman, Y.; El-Yaniv, R. Multi-hop paragraph retrieval for open-domain question answering. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D.R., Màrquez, L., Eds.; Volume 1: Long Papers. Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2296–2309.

7. Das, R.; Godbole, A.; Kavarthapu, D.; Gong, Z.; Singhal, A.; Yu, M.; Guo, X.; Gao, T.; Zamani, H.; Zaheer, M.; et al. Multi-step entity-centric information retrieval for multi-hop question answering. In Proceedings of the 2nd Workshop on Machine Reading for Question Answering, MRQA@EMNLP 2019, Hong Kong, China, 4 November 2019; Fisch, A., Talmor, A., Jia, R., Seo, M., Choi, E., Chen, D., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 113–118.

8. Yang, Z.; Qi, P.; Zhang, S.; Bengio, Y.; Cohen, W.W.; Salakhutdinov, R.; Manning, C.D. Hotpotqa: A dataset for diverse, explainable multi-hop question answering. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Riloff, E., Chiang, D., Hockenmaier, J., Tsujii, J., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 2369–2380.

9. Lee, H.; Yang, S.; Oh, H.; Seo, M. Generative multi-hop retrieval. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 1417–1436.

10. Ho, X.; Nguyen, A.D.; Sugawara, S.; Aizawa, A. Constructing a multi-hop QA dataset for comprehensive evaluation of reasoning steps. In Proceedings of the 28th International Conference on Computational Linguistics, COLING 2020, Barcelona, Spain (Online), 8–13 December 2020; Scott, D., Bel, N., Zong, C., Eds.; International Committee on Computational Linguistics: Barcelona, Spain, 2020; pp. 6609–6625.

11. Lee, K.; Hwang, S.; Han, S.; Lee, D. Robustifying multi-hop QA through pseudo-evidentiality training. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 1: Long Papers), Virtual Event, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 6110–6119.

12. Su, D.; Xu, P.; Fung, P. QA4QG: Using question answering to constrain multi-hop question generation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2022, Virtual and Singapore, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 8232–8236.

13. Li, S.; Li, X.; Shang, L.; Jiang, X.; Liu, Q.; Sun, C.; Ji, Z.; Liu, B. Hopretriever: Retrieve hops over wikipedia to answer complex questions. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, The Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, 2–9 February 2021; AAAI Press: Washington, DC, USA, 2021; pp. 13279–13287.

14. Cao, Z.; Liu, B.; Li, S. RPA: Reasoning path augmentation in iterative retrieving for multi-hop QA. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence, AAAI 2023, Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence, IAAI 2023, Thirteenth Symposium on Educational Advances in Artificial Intelligence, EAAI 2023, Washington, DC, USA, 7–14 February 2023; Williams, B., Chen, Y., Neville, J., Eds.; AAAI Press: Washington, DC, USA, 2023; pp. 12598–12606.

15. Asai, A.; Hashimoto, K.; Hajishirzi, H.; Socher, R.; Xiong, C. Learning to retrieve reasoning paths over wikipedia graph for question answering. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020; OpenReview.net: Alameda, CA, USA, 2020. Available online: https://openreview.net/forum?id=SJgVHkrYDH (accessed on 19 May 2020).

16. Das, R.; Dhuliawala, S.; Zaheer, M.; McCallum, A. Multi-step retriever-reader interaction for scalable open-domain question answering. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019; OpenReview.net: Alameda, CA, USA, 2019. Available online: https://openreview.net/forum?id=HkfPSh05K7 (accessed on 9 May 2019).

17. Xiong, W.; Li, X.L.; Iyer, S.; Du, J.; Lewis, P.S.H.; Wang, W.Y.; Mehdad, Y.; Yih, S.; Riedel, S.; Kiela, D.; et al. Answering complex open-domain questions with multi-hop dense retrieval. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021; OpenReview.net: Alameda, CA, USA, 2021. Available online: https://openreview.net/forum?id=EMHoBG0avc1 (accessed on 6 May 2021).

18. Zhang, J.; Zhang, X.; Yu, J.; Tang, J.; Tang, J.; Li, C.; Chen, H. Subgraph retrieval enhanced model for multi-hop knowledge base question answering. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, ACL 2022, Dublin, Ireland, 22–27 May 2022; Muresan, S., Nakov, P., Villavicencio, A., Eds.; Volume 1: Long Papers. Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 5773–5784.

19. Bai, Y.; Lv, X.; Li, J.; Hou, L.; Qu, Y.; Dai, Z.; Xiong, F. SQUIRE: A sequence-to-sequence framework for multi-hop knowledge graph reasoning. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 1649–1662.

20. Piekos, P.; Malinowski, M.; Michalewski, H. Measuring and improving bert’s mathematical abilities by predicting the order of reasoning. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 2: Short Papers), Virtual Event, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 383–394.

21. Khot, T.; Clark, P.; Guerquin, M.; Jansen, P.; Sabharwal, A. QASC: A dataset for question answering via sentence composition. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7–12 February 2020; AAAI Press: Washington, DC, USA, 2020; pp. 8082–8090.

22. Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum Learning. In Proceedings of the 26th Annual International Conference on Machine Learning, ICML 2009, Montreal, QC, Canada, 14–18 June 2009; Danyluk, A.P., Bottou, L., Littman, M.L., Eds.; ICML: San Diego, CA, USA, 2009; pp. 41–48.

23. Fang, Y.; Sun, S.; Gan, Z.; Pillai, R.; Wang, S.; Liu, J. Hierarchical graph network for multi-hop question answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 8823–8838.

24. Mavi, V.; Jangra, A.; Jatowt, A. A survey on multi-hop question answering and generation. arXiv 2022.

25. Wu, C.; Hu, E.; Zhan, K.; Luo, L.; Zhang, X.; Jiang, H.; Wang, Q.; Cao, Z.; Yu, F.; Chen, L. Triple-fact retriever: An explainable reasoning retrieval model for multi-hop QA problem. In Proceedings of the 38th IEEE International Conference on Data Engineering, ICDE 2022, Kuala Lumpur, Malaysia, 9–12 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1206–1218.

26. Nguyen, T.; Rosenberg, M.; Song, X.; Gao, J.; Tiwary, S.; Majumder, R.; Deng, L. MS MARCO: A human generated machine reading comprehension dataset. In Proceedings of the Workshop on Cognitive Computation: Integrating neural and symbolic approaches 2016 co-located with the 30th Annual Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 9 December 2016; Besold, T.R., Bordes, A., d’Avila Garcez, A.S., Wayne, G., Eds.; Ser. CEUR Workshop Proceedings. CEUR-WS.org: Aachen, Germany, 2016; Volume 1773. Available online: https://ceur-ws.org/Vol-1773/CoCoNIPS_2016_paper9.pdf (accessed on 10 December 2023).

27. Yang, Y.; Yih, W.; Meek, C. Wikiqa: A challenge dataset for open-domain question answering. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, EMNLP 2015, Lisbon, Portugal, 17–21 September 2015; Màrquez, L., Callison-Burch, C., Su, J., Pighin, D., Marton, Y., Eds.; The Association for Computational Linguistics: Stroudsburg, PA, USA, 2015; pp. 2013–2018.

28. Beltagy, I.; Peters, M.E.; Cohan, A. Longformer: The long-document Transformer. arXiv 2020.

29. Ainslie, J.; Ontañón, S.; Alberti, C.; Cvicek, V.; Fisher, Z.; Pham, P.; Ravula, A.; Sanghai, S.; Wang, Q.; Yang, L. ETC: Encoding long and structured inputs in transformers. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 268–284.

30. Khashabi, D.; Chaturvedi, S.; Roth, M.; Upadhyay, S.; Roth, D. Looking beyond the surface: A challenge set for reading comprehension over multiple sentences. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2018, New Orleans, LA, USA, 1–6 June 2018; Walker, M.A., Ji, H., Stent, A., Eds.; Volume 1 (Long Papers). Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 252–262.

31. Yadav, V.; Bethard, S.; Surdeanu, M. Unsupervised alignment-based iterative evidence retrieval for multi-hop question answering. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J.R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 4514–4525.

32. Yadav, V.; Bethard, S.; Surdeanu, M. Alignment over Heterogeneous Embeddings for Question Answering. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Volume 1 (Long and Short Papers). Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2681–2691.

33. Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, EMNLP 2014, Doha, Qatar, 25–29 October 2014; Moschitti, A., Pang, B., Daelemans, W., Eds.; A Meeting of SIGDAT, a Special Interest Group of the ACL; ACL: Brooklyn, NY, USA, 2014; pp. 1532–1543.

34. Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Volume 1 (Long and Short Papers). Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 4171–4186.

35. Yadav, V.; Bethard, S.; Surdeanu, M. If you want to go far go together: Unsupervised joint candidate evidence retrieval for multi-hop question answering. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2021, Online, 6–11 June 2021; Toutanova, K., Rumshisky, A., Zettlemoyer, L., Hakkani-Tür, D., Beltagy, I., Bethard, S., Cotterell, R., Chakraborty, T., Zhou, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 4571–4581.

36. Zhang, J.; Zhang, H.; Zhang, D.; Liu, Y.; Huang, S. End-to-end beam retrieval for multi-hop question answering. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), NAACL 2024, Mexico City, Mexico, 16–21 June 2024; Duh, K., Gómez-Adorno, H., Bethard, S., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 1718–1731.

37. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. Roberta: A robustly optimized BERT pretraining approach. arXiv 2019.

38. Xu, W.; Deng, Y.; Zhang, H.; Cai, D.; Lam, W. Exploiting reasoning chains for multi-hop science question answering. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Virtual Event/Punta Cana, Dominican Republic, 16–20 November 2021; Moens, M., Huang, X., Specia, L., Yih, S.W., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 1143–1156.

39. Khattab, O.; Potts, C.; Zaharia, M.A. Baleen: Robust multi-hop reasoning at scale via condensed retrieval. In Proceedings of the Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y.N., Liang, P., Vaughan, J.W., Eds.; NeurIPS: San Diego, CA, USA, 2021; pp. 27670–27682. Available online: https://proceedings.neurips.cc/paper/2021/hash/e8b1cbd05f6e6a358a81dee52493dd06-Abstract.html (accessed on 20 December 2021).

40. Wang, A.; Pruksachatkun, Y.; Nangia, N.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. Superglue: A stickier benchmark for general-purpose language understanding systems. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R., Eds.; NeurIPS: San Diego, CA, USA, 2019; pp. 3261–3275. Available online: https://proceedings.neurips.cc/paper/2019/hash/4496bf24afe7fab6f046bf4923da8de6-Abstract.html (accessed on 20 December 2020).

41. Yadav, V.; Bethard, S.; Surdeanu, M. Quick and (not so) dirty: Unsupervised selection of justification sentences for multi-hop question answering. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; Inui, K., Jiang, J., Ng, V., Wan, X., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2578–2589.

42. He, P.; Liu, X.; Gao, J.; Chen, W. Deberta: Decoding-enhanced bert with disentangled attention. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021; OpenReview.net: Alameda, CA, USA, 2021. Available online: https://openreview.net/forum?id=XPZIaotutsD (accessed on 7 May 2021).

43. You, Y.; Li, J.; Reddi, S.J.; Hseu, J.; Kumar, S.; Bhojanapalli, S.; Song, X.; Demmel, J.; Keutzer, K.; Hsieh, C. Large batch optimization for deep learning: Training BERT in 76 minutes. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020; OpenReview.net: Alameda, CA, USA, 2020. Available online: https://openreview.net/forum?id=Syx4wnEtvH (accessed on 30 May 2020).

44. Xiong, L.; Xiong, C.; Li, Y.; Tang, K.; Liu, J.; Bennett, P.N.; Ahmed, J.; Overwijk, A. Approximate nearest neighbor negative contrastive learning for dense text retrieval. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021; OpenReview.net: Alameda, CA, USA, 2021. Available online: https://openreview.net/forum?id=zeFrfgyZln (accessed on 8 May 2021).

45. Sammut, C.; Webb, G.I. (Eds.) TF–IDF; Springer: Boston, MA, USA, 2010; pp. 986–987.

46. Ferguson, J.; Hajishirzi, H.; Dasigi, P.; Khot, T. Retrieval Data Augmentation Informed by Downstream Question Answering Performance. In Proceedings of the Fifth Fact Extraction And VERification Workshop (FEVER), Dublin, Ireland, 26 May 2022; pp. 1–5. Available online: https://aclanthology.org/2022.fever-1.1/ (accessed on 28 May 2022).

47. Qiu, X.; Sun, T.; Xu, Y.; Shao, Y.; Dai, N.; Huang, X. Pre-trained models for natural language processing: A survey. arXiv 2020.

| Pretrained Models | RoBERTa-Large | DeBERTa-Large |
|---|---|---|
| Parameters (M) | 355 | 405 |
| Batch size (QASC) | 32 | 4 |
| Batch size (MultiRC) | 12 | 2 |

| Method | Both Found | At Least One Found |
|---|---|---|
| No order | | |
| BM25 [31] | 27.8 | 65.7 |
| Heuristics [21] | 41.6 | 64.6 |
| BERT-LC [21] | 41.6 | 64.4 |
| AIR (parallel = 5) [31] | 44.8 | 68.6 |
| SingleRR [35] | 44.4 | 69.6 |
| Two-step IR [21] | 44.4 | 69.9 |
| SupA + QA [46] | 47.8 | - |
| Optimized order | | |
| RPA (RoBERTa) [14] | 52.7 | 77.5 |
| AORO (RoBERTa) | 53.1 | 77.8 |
| AORO (RoBERTa on DeBERTa) | 53.3 | 78.1 |
| AORO (DeBERTa) | 54.3 | 78.4 |

| Methods | Hop(s) | Precision | Recall | F1 |
|---|---|---|---|---|
| No order | | | | |
| Entire passage [31] | - | 17.4 | 100.0 | 29.6 |
| BM25 [41] | Single | 43.8 | 61.2 | 51.0 |
| AutoROCC [41] | Single | 48.2 | 68.2 | 56.4 |
| RoBERTa-retriever (All passages) [31] | Single | 63.4 | 61.1 | 62.3 |
| SingleRR (RoBERTa) [35] | Multiple | - | - | 64.0 |
| AIR top chain [31] | Multiple | 66.2 | 63.1 | 64.2 |
| Optimized order | | | | |
| RPA (RoBERTa) [14] | Multiple | 60.2 | 69.7 | 64.6 |
| AORO (RoBERTa) | Multiple | 61.1 | 70.8 | 65.6 |
| AORO (RoBERTa on DeBERTa) | Multiple | 61.5 | 71.7 | 66.2 |
| AORO (DeBERTa) | Multiple | 63.8 | 73.5 | 68.3 |

| Training-Free Method | QASC /Recall@10 Both Found | MultiRC /F1 |
|---|---|---|
| AORO (DeBERTa) | 100/54.3 | 100/68.3 |
| TF-IDF | 82.2/52.0 (↓2.3) | 67.2/64.5 (↓3.8) |
| RPA | 83.1/52.7 (↓1.6) | 67.1/64.6 (↓3.7) |
| GloVe | 78.0/51.6 (↓2.7) | 65.7/64.3 (↓4.0) |
| spaCy | 74.1/51.7 (↓2.6) | 59.7/63.5 (↓4.8) |

| QASC | Q with 2 Ground-Truth Facts |
|---|---|
| Q | Query: What are invaluable for soil health? Choice: annelids. |
| Fact: Annelids are worms such as the familiar earthworm. | |
| Fact: Earthworms are invaluable for soil health. | |
| () | |
| (same with ) | |
| TF-IDF | (same with ) |
| RPA | (same with ) |
| GloVe | (same with ) |
| spaCy | (same with ) |

| MultiRC | with 4 Ground-Truth Facts |
|---|---|
| Q | Query: Why did the writer think he was going crazy? Choice: He heard a sound and saw a light in a vacuum. |
| Fact: Hotel California My first thought: I was going crazy. | |
| Fact: Twenty-four hours of silence (vacuum, remember); was I hallucinating noises now? | |
| Fact: It was a fine bell, reminiscent of ancient stone churches and the towering cathedrals I’d seen in documentaries. | |
| Fact: And accompanying the bell, I saw a light. | |
| () | |
| (same with ) | |
| /For samples whose , | |
| TF-IDF | (same with )/50.0 |
| RPA | (same with )/51.2 |
| GloVe | /45.5 |
| spaCy | /42.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, S.; Cao, Z.; Bu, K.; Ji, Z. AORO: Auto-Optimizing Reasoning Order for Multi-Hop Question Answering. Mathematics 2025, 13, 3489. https://doi.org/10.3390/math13213489
