CoEGAN-BO: Synergistic Co-Evolution of GANs and Bayesian Optimization for High-Dimensional Expensive Many-Objective Problems

Abstract

1. Introduction

- Constructing a high-fidelity model from limited sample data is challenging and can lead to reduced optimization efficiency and compromised model precision [20]. Moreover, the time required for model construction escalates exponentially with rising dimensionality, further complicating the optimization process [21].

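- As the dimension of the objective space increases, the number of non-dominated solutions tends to grow exponentially. Pareto dominance alone then can hardly distinguish between solutions, which weakens the selection pressure toward the Pareto front and makes selection throughout the evolutionary process increasingly difficult [22].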

- Inherently, the sparsity of samples in high-dimensional decision spaces leads to considerable uncertainty in the predicted value of each candidate solution [23]. This uncertainty makes it difficult to select the most advantageous solutions, which are critical both for improving the precision of the model and for guiding the population's search trajectory more effectively.
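Both dimensionality effects described in the last two bullets can be checked numerically. The following minimal NumPy sketch is an illustration only, not part of CoEGAN-BO; the uniform sampling, the sample sizes, the seeds, and the function names are all assumptions. `nondominated_fraction` draws random objective vectors (all objectives minimized) and reports the share that no other vector dominates. `nearest_sample_stats` fixes a budget of 100 samples in the unit hypercube and measures how far random candidates lie from their nearest sample. Because the cube's diagonal itself grows with the dimension, it also reports the ratio of that nearest distance to the candidate's average distance to all samples.

```python
import numpy as np


def nondominated_mask(F: np.ndarray) -> np.ndarray:
    """Mark rows of F (all objectives minimized) that no other row dominates."""
    mask = np.ones(F.shape[0], dtype=bool)
    for i in range(F.shape[0]):
        # Row j dominates row i if it is no worse in every objective
        # and strictly better in at least one.
        dominators = np.all(F <= F[i], axis=1) & np.any(F < F[i], axis=1)
        mask[i] = not dominators.any()
    return mask


def nondominated_fraction(n_points: int, n_obj: int, seed: int = 0) -> float:
    """Fraction of uniformly random objective vectors in [0, 1]^n_obj that are non-dominated."""
    rng = np.random.default_rng(seed)
    F = rng.random((n_points, n_obj))
    return float(nondominated_mask(F).mean())


def nearest_sample_stats(dim: int, n_samples: int = 100, n_queries: int = 50, seed: int = 0):
    """For random queries in [0, 1]^dim, return (mean distance to the nearest sample,
    mean ratio of that distance to the query's average distance to all samples)."""
    rng = np.random.default_rng(seed)
    samples = rng.random((n_samples, dim))
    queries = rng.random((n_queries, dim))
    # Pairwise Euclidean distances, shape (n_queries, n_samples)
    dists = np.linalg.norm(queries[:, None, :] - samples[None, :, :], axis=-1)
    nearest = dists.min(axis=1)
    ratio = nearest / dists.mean(axis=1)
    return float(nearest.mean()), float(ratio.mean())


if __name__ == "__main__":
    for m in (2, 3, 6, 10):
        print(f"M={m:2d}: non-dominated fraction = {nondominated_fraction(200, m):.2f}")
    for d in (2, 10, 50, 100):
        nearest, ratio = nearest_sample_stats(d)
        print(f"d={d:3d}: nearest-sample distance = {nearest:.3f}, nearest/mean = {ratio:.2f}")
```

With these settings the non-dominated fraction should rise with the number of objectives M. As the decision dimension d grows, the nearest evaluated sample should become barely closer than an average sample, so it carries little local information about a new candidate.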

- Model-Construction-Based BO: To improve the fidelity of the surrogate model, hybrid surrogate modeling has become a key technique [24]. It exploits the synergy of multiple models to fit the real objective function more closely, thereby reducing overreliance on the predictive power of any single model. Surrogate models such as the Radial Basis Function (RBF) model [25], Artificial Neural Networks (ANNs) [26], Gaussian processes [27,28], and Support Vector Machine (SVM) models [29] are each used for their particular strengths, so the choice of surrogate can be tailored to the optimization problem at hand.

- Model-Management-Based BO: As the complexity of the objective function increases, the prediction error of the surrogate model grows. This makes it harder both to approach the Pareto front and to distinguish better individuals from worse ones. These challenges can be addressed by adopting a more efficient infill criterion.

- Novel Search-Strategy-Based BO: Environmental selection is an important part of multi-objective evolutionary algorithms [30]: individuals with better fitness are selected from the combined population to form the initial population of the next generation. Existing methods still rely on the predicted values and uncertainties of the surrogate model for this selection. However, as the dimension increases, the differences among the predicted values and uncertainty estimates provided by the surrogate model shrink rapidly. These estimates then discriminate poorly between candidates, and the effectiveness of the evolutionary algorithm decreases accordingly.
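The shrinking of uncertainty differences described in the last bullet can be illustrated with a bare-bones Gaussian process. This sketch is not the paper's model. It assumes a zero-mean GP with a unit-variance squared-exponential kernel whose length scale is fixed at 0.2 in every dimension; in practice hyperparameters are learned. It also assumes a budget of 20 training points and 50 random candidates in the unit hypercube. The GP posterior variance does not depend on the observed objective values, so no objective function is needed. `std_spread` reports the range of the posterior standard deviation over the candidates.

```python
import numpy as np


def sq_exp_kernel(A: np.ndarray, B: np.ndarray, length_scale: float) -> np.ndarray:
    """Unit-variance squared-exponential (RBF) kernel matrix between rows of A and B."""
    sq = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * length_scale**2))


def posterior_std(X_train: np.ndarray, X_query: np.ndarray,
                  length_scale: float = 0.2, jitter: float = 1e-8) -> np.ndarray:
    """GP posterior standard deviation at X_query (independent of the observed y values)."""
    K = sq_exp_kernel(X_train, X_train, length_scale) + jitter * np.eye(len(X_train))
    K_star = sq_exp_kernel(X_train, X_query, length_scale)  # shape (n_train, n_query)
    v = np.linalg.solve(K, K_star)
    var = 1.0 - np.sum(K_star * v, axis=0)  # prior variance k(x, x) = 1
    return np.sqrt(np.clip(var, 0.0, None))  # clip tiny negative round-off


def std_spread(dim: int, n_train: int = 20, n_query: int = 50, seed: int = 0) -> float:
    """Range (max - min) of the posterior std over random candidates in [0, 1]^dim."""
    rng = np.random.default_rng(seed)
    X_train = rng.random((n_train, dim))
    X_query = rng.random((n_query, dim))
    s = posterior_std(X_train, X_query)
    return float(s.max() - s.min())


if __name__ == "__main__":
    for d in (2, 10, 50, 100):
        print(f"d={d:3d}: spread of posterior std = {std_spread(d):.4f}")
```

In 2-D some candidates lie close to training points and others do not, so their uncertainties differ noticeably. In 100-D every candidate is far from every sample relative to the length scale, so all posterior standard deviations collapse to the prior value and uncertainty no longer ranks the candidates.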

| Method Category | Algorithm | Summary of Algorithm Ideas |
|---|---|---|
| Model-Construction-Based BO | MOEA/D-EGO [31] | Adopts fuzzy clustering-based modeling in the decision space and constructs multiple local surrogate models for each objective function. |
| Model-Construction-Based BO | KTA2 [32] | Combines two point-insensitive models with the original Gaussian process to adaptively approximate each objective function, minimizing the impact of outliers. |
| Model-Construction-Based BO | CLBO [33] | Builds several Gaussian processes and imposes agreement constraints on all or some of them during training to ensure reasonable and accurate predictions. |
| Model-Management-Based BO | EI (Expected Improvement) [24,34] | A common infill criterion for selecting promising candidates. It loses effectiveness as the dimension increases because differences in uncertainty shrink, and it is not suitable for every problem. |
| Model-Management-Based BO | PI (Probability of Improvement) [35,36] | Another widely used infill criterion. Like EI, it becomes ineffective as the dimension grows because uncertainty becomes less discriminative, and it is not optimal for all problems. |
| Model-Management-Based BO | LCB (Lower Confidence Bound) [37,38] | A mainstream infill criterion with the same issues as EI and PI: increasing dimension weakens the uncertainty measure, and a single criterion cannot fit all scenarios. |
| Novel Search-Strategy-Based BO | NSGA-III [39] | Integrates Pareto dominance with decomposition (reference-point) strategies to balance convergence and diversity effectively in many-objective problems. |
| Novel Search-Strategy-Based BO | Two_Arch2 [40] | Maintains two archives, updated by indicator-based and Pareto-dominance-based selection respectively, and performs well on many-objective problems by considering both convergence and diversity. |
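For reference, the three infill criteria in the table have standard closed forms for a single minimized objective whose prediction at a candidate is Gaussian with mean `mu` and standard deviation `sigma`, given the best value observed so far, `f_min`. The standard-library sketch below implements these textbook formulas. The default `kappa = 2.0` for LCB is an arbitrary illustrative choice. How CoEGAN-BO or the cited methods apply or aggregate such criteria across many objectives is not shown.

```python
import math


def _pdf(z: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def _cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def probability_of_improvement(mu: float, sigma: float, f_min: float) -> float:
    """PI = P(f(x) < f_min) under N(mu, sigma^2)."""
    if sigma <= 0.0:
        return 1.0 if mu < f_min else 0.0
    return _cdf((f_min - mu) / sigma)


def expected_improvement(mu: float, sigma: float, f_min: float) -> float:
    """EI = E[max(f_min - f(x), 0)] under N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(f_min - mu, 0.0)
    z = (f_min - mu) / sigma
    return (f_min - mu) * _cdf(z) + sigma * _pdf(z)


def lower_confidence_bound(mu: float, sigma: float, kappa: float = 2.0) -> float:
    """LCB = mu - kappa * sigma; smaller values are more promising."""
    return mu - kappa * sigma


if __name__ == "__main__":
    f_min = 1.0
    # Same predicted mean, different uncertainty: EI favors the more uncertain point.
    print(expected_improvement(1.2, 0.5, f_min), expected_improvement(1.2, 0.1, f_min))
    # Nearly identical uncertainties: the ranking is driven almost entirely by the mean.
    print(expected_improvement(1.1, 0.99, f_min), expected_improvement(1.3, 1.0, f_min))
```

The second comparison mirrors the weakness noted in the table. When the surrogate reports almost the same `sigma` for every candidate, as tends to happen in high dimensions, the exploration term stops discriminating and these criteria behave much like ranking by the predicted mean.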

- (1) The proposed method extracts useful information from both real and predicted samples by performing a secondary assessment of the current model's predictions. This is designed to mitigate the loss of predictive accuracy that can arise when the surrogate model's trajectory deviates in high-dimensional expensive optimization problems.

- (2) We introduce an efficient model management strategy that combines the -norm with a convergence criterion based on the extreme (maximum and minimum) distances. Its main purpose is to reduce computational complexity significantly while accurately identifying which individuals have high "potential".

- (3) The proposed CoEGAN-BO offers considerable advantages in addressing the "curse of dimensionality" in high-dimensional expensive many-objective problems (HEMaOPs). It accelerates population evolution by performing a secondary selection of the more competitive predicted solutions from among the non-dominated solutions, which eases the difficulty of non-dominated selection.

- (4) The proposed CoEGAN-BO performs strongly: the experimental results show that it is highly competitive on high-dimensional problems with 50 and 100 decision variables (50D and 100D), as well as on many-objective problems with 3, 4, 6, 8, and 10 objectives.

2. Preliminaries

2.1. Gaussian Process

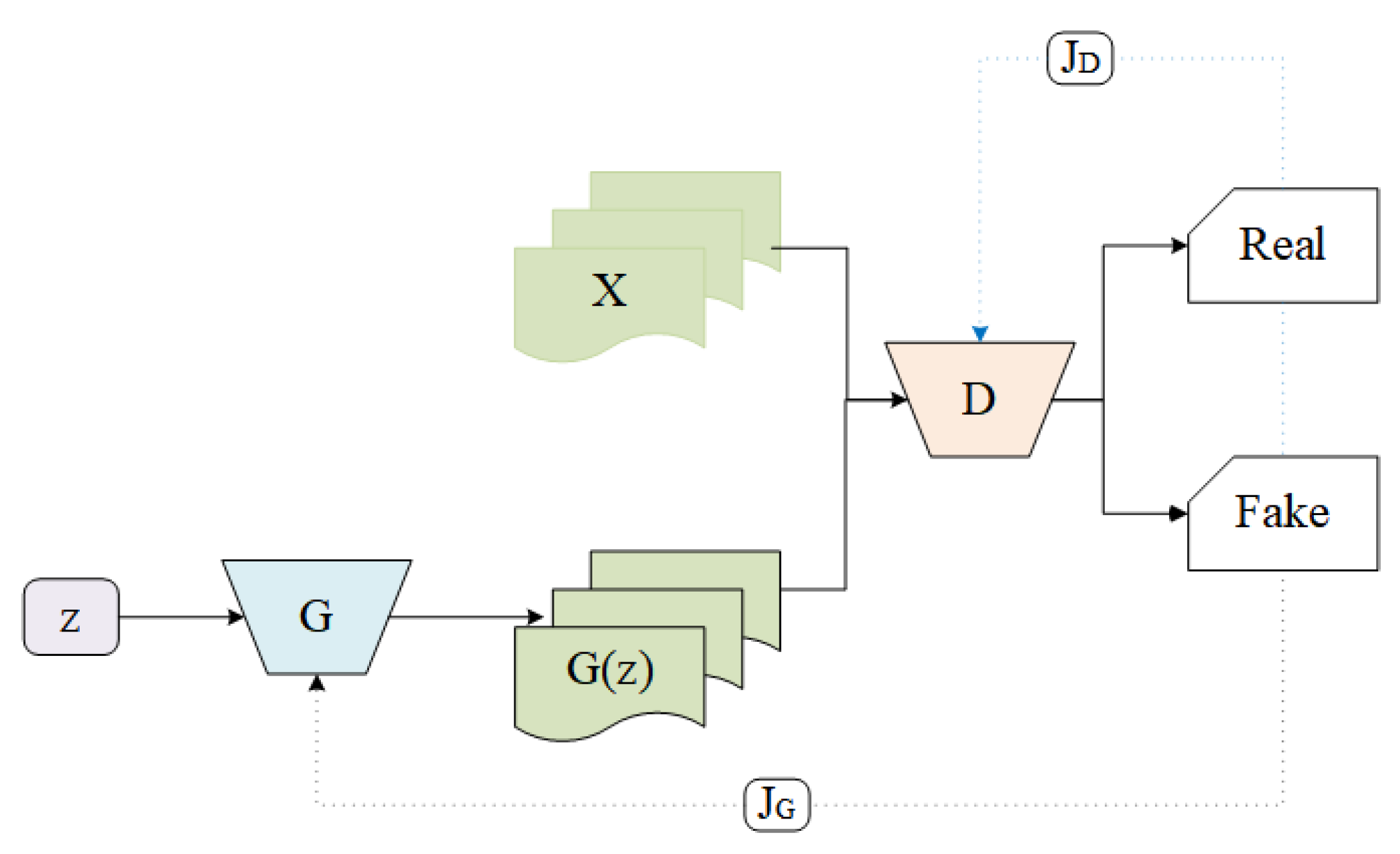

2.2. Generative Adversarial Networks

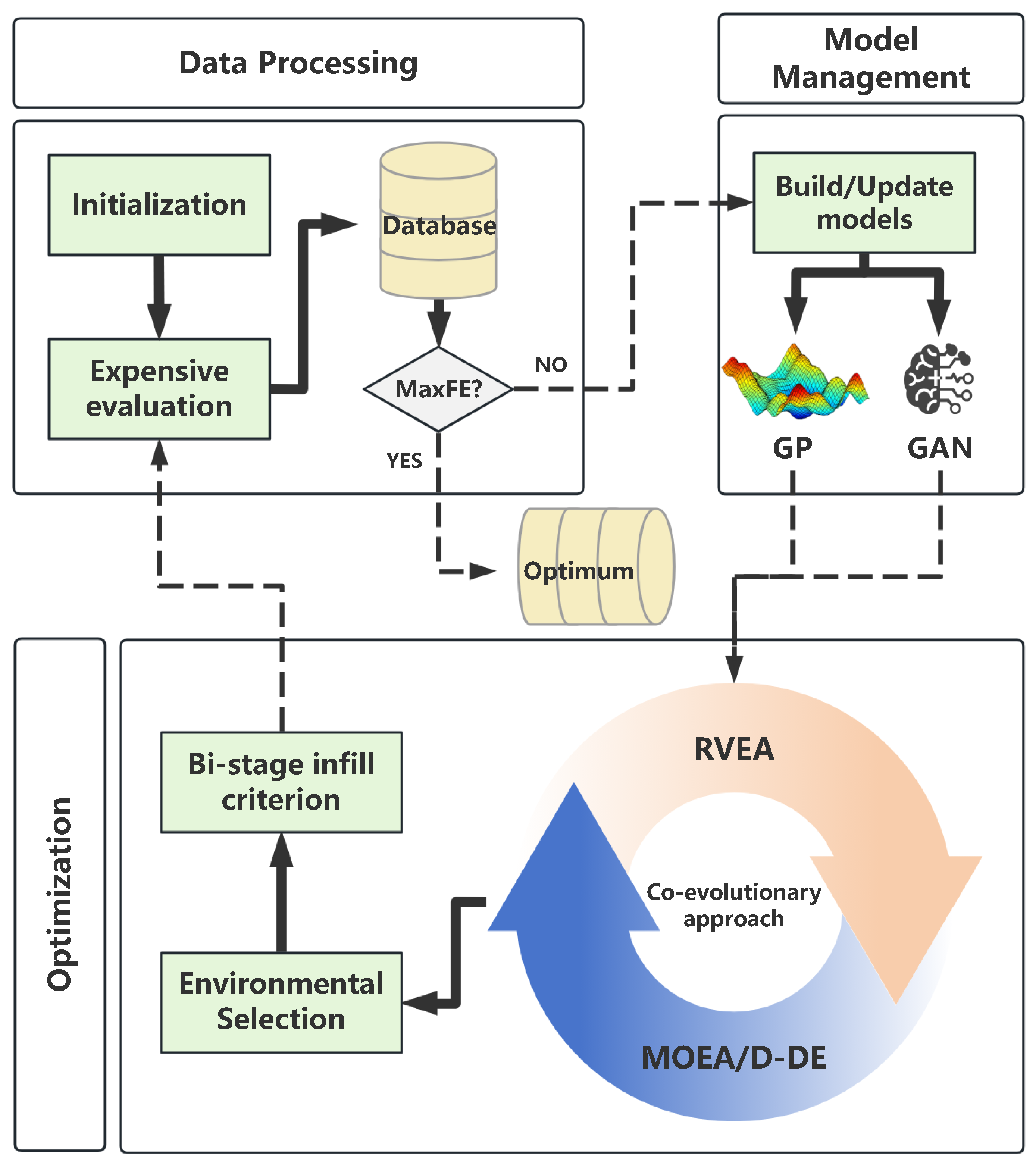

3. The Proposed CoEGAN-BO Algorithm

| Algorithm 1 Framework of CoEGAN-BO |

|

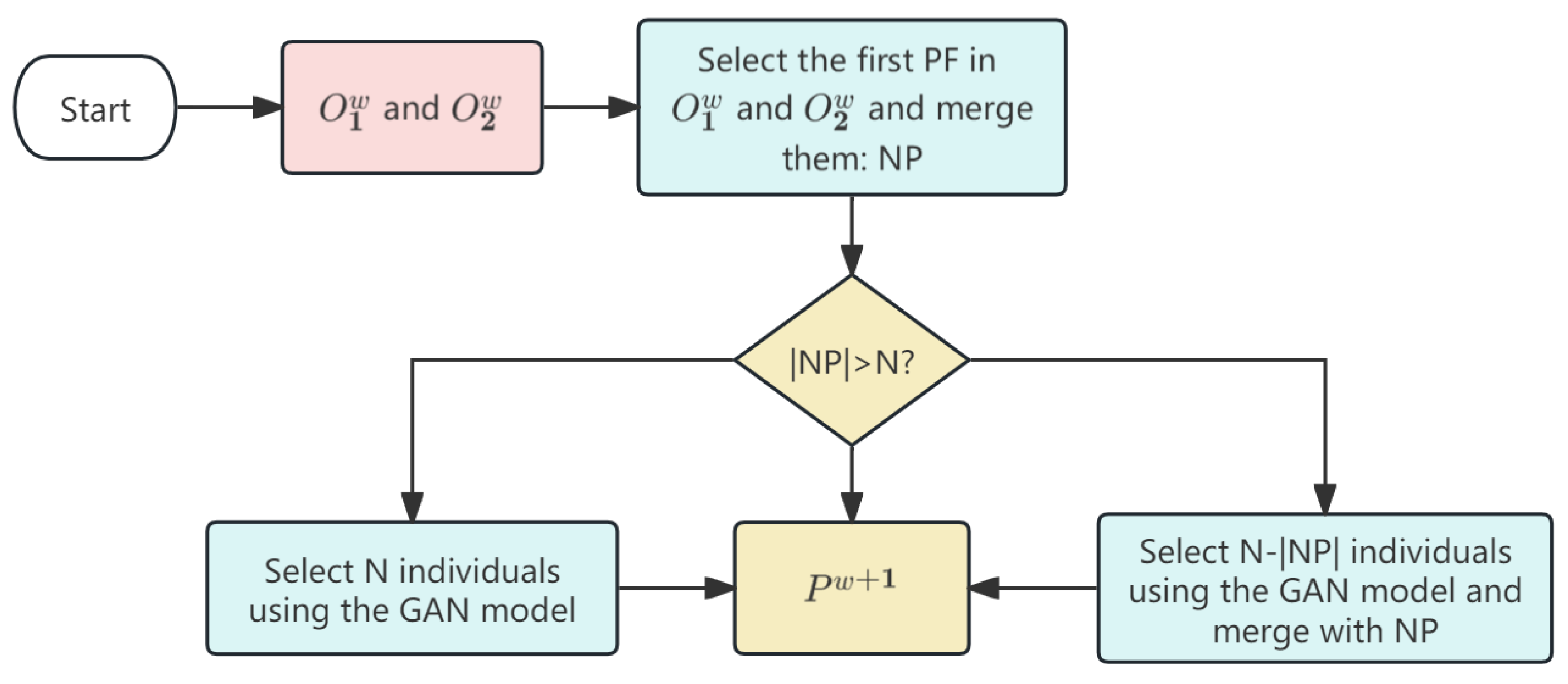

| Algorithm 2 Environmental selection strategy using GAN |

|

| Algorithm 3 Bi-stage infill sampling criterion |

|

3.1. Environmental Selection Strategy Based on Generative Adversarial Network

3.2. Bi-Stage Infill Criterion

3.3. Computational Complexity Analysis

4. Experimental Evaluation

4.1. Experimental Settings

- The initial population size was set to for all algorithms to ensure consistency;

- The maximum number of expensive function evaluations was limited to 300 for all algorithms, ensuring a fair comparison;

- The reproduction parameters (crossover and mutation) were set to , , , and for all algorithms;

- The maximum number of generations before each update of the Gaussian process was set to for all algorithms.

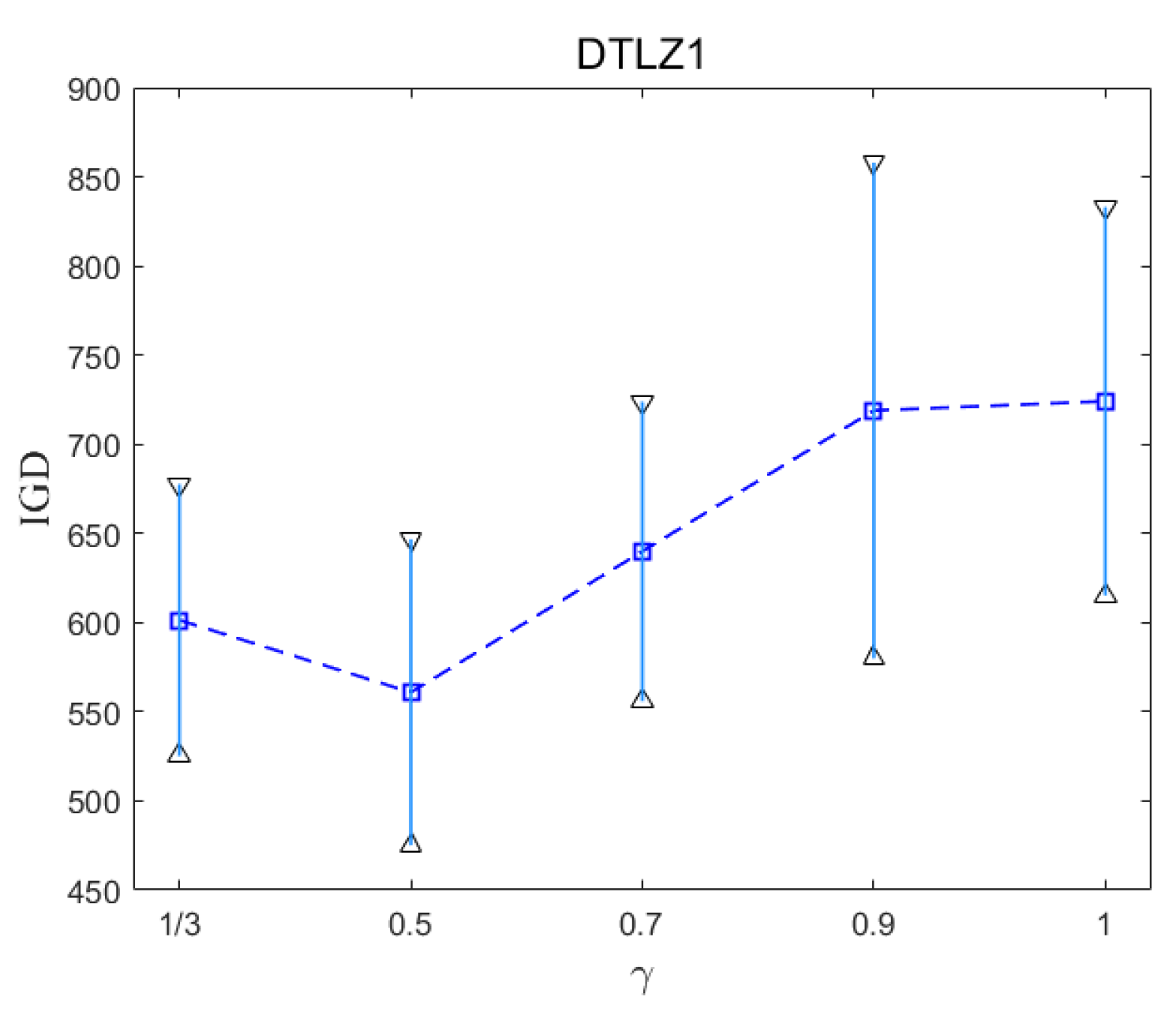

4.2. Sensitivity Analysis Based on Bi-Stage Division Threshold

4.3. Impact of GAN-Based Environmental Selection

4.4. Comparison Results on DTLZ Test Problems

4.5. Comparison Results on MaF Problems

4.6. Runtime

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Shu, X.; Liu, Y.; Liu, J.; Yang, M.; Zhang, Q. Multi-objective particle swarm optimization with dynamic population size. J. Comput. Des. Eng. 2023, 10, 446–467.

2. Stewart, R.; Palmer, T.S.; Bays, S. An agent-based blackboard system for multi-objective optimization. J. Comput. Des. Eng. 2022, 9, 480–506.

3. Sheikh, H.M.; Lee, S.; Wang, J.; Marcus, P.S. Airfoil optimization using design-by-morphing. J. Comput. Des. Eng. 2023, 10, 1443–1459.

4. Tariq, R.; Recio-Garcia, J.A.; Cetina-Quiñones, A.J.; Orozco-del Castillo, M.G.; Bassam, A. Explainable Artificial Intelligence twin for metaheuristic optimization: Double-skin facade with energy storage in buildings. J. Comput. Des. Eng. 2025, 12, qwaf015.

5. Nicolaou, C.A.; Brown, N. Multi-objective optimization methods in drug design. Drug Discov. Today Technol. 2013, 10, e427–e435.

6. Machairas, V.; Tsangrassoulis, A.; Axarli, K. Algorithms for optimization of building design: A review. Renew. Sustain. Energy Rev. 2014, 31, 101–112.

7. Liu, D.; Ouyang, H.; Li, S.; Zhang, C.; Zhan, Z.H. Hyperparameters optimization of convolutional neural network based on local autonomous competition harmony search algorithm. J. Comput. Des. Eng. 2023, 10, 1280–1297.

8. El-Shorbagy, M.; Alhadbani, T.H. Monarch butterfly optimization-based genetic algorithm operators for nonlinear constrained optimization and design of engineering problems. J. Comput. Des. Eng. 2024, 11, 200–222.

9. Soltani, S.; Murch, R.D. A Compact Planar Printed MIMO Antenna Design. IEEE Trans. Antennas Propag. 2015, 63, 1140–1149.

10. del Valle, Y.; Venayagamoorthy, G.K.; Mohagheghi, S.; Hernandez, J.C.; Harley, R.G. Particle Swarm Optimization: Basic Concepts, Variants and Applications in Power Systems. IEEE Trans. Evol. Comput. 2008, 12, 171–195.

11. Dai, R.; Jie, J.; Wang, Z.; Zheng, H.; Wang, W. Automated surrogate-assisted particle swarm optimizer with an adaptive parental guidance strategy for expensive engineering optimization problems. J. Comput. Des. Eng. 2025, 12, 145–183.

12. Zhu, S.; Zeng, L.; Cui, M. Symmetrical Generalized Pareto Dominance and Adjusted Reference Vector Cooperative Evolutionary Algorithm for Many-Objective Optimization. Symmetry 2024, 16, 1484.

13. Jin, Y. Surrogate-assisted evolutionary computation: Recent advances and future challenges. Swarm Evol. Comput. 2011, 1, 61–70.

14. Li, J.; Wang, P.; Dong, H.; Shen, J.; Chen, C. A classification surrogate-assisted multi-objective evolutionary algorithm for expensive optimization. Knowl.-Based Syst. 2022, 242, 108416.

15. Park, J.; Kang, N. BMO-GNN: Bayesian mesh optimization for graph neural networks to enhance engineering performance prediction. J. Comput. Des. Eng. 2024, 11, 260–271.

16. Wang, X.; Jin, Y.; Schmitt, S.; Olhofer, M. Recent advances in Bayesian optimization. ACM Comput. Surv. 2023, 55, 1–36.

17. He, C.; Zhang, Y.; Gong, D.; Ji, X. A review of surrogate-assisted evolutionary algorithms for expensive optimization problems. Expert Syst. Appl. 2023, 217, 119495.

18. Zhan, D.; Xing, H. Expected improvement for expensive optimization: A review. J. Glob. Optim. 2020, 78, 507–544.

19. Li, J.Y.; Zhan, Z.H.; Zhang, J. Evolutionary computation for expensive optimization: A survey. Mach. Intell. Res. 2022, 19, 3–23.

20. Chandra, N.K.; Canale, A.; Dunson, D.B. Escaping the curse of dimensionality in Bayesian model-based clustering. J. Mach. Learn. Res. 2023, 24, 1–42.

21. Lanthaler, S.; Stuart, A.M. The curse of dimensionality in operator learning. arXiv 2023, arXiv:2306.15924.

22. Gao, R. Finite-sample guarantees for Wasserstein distributionally robust optimization: Breaking the curse of dimensionality. Oper. Res. 2023, 71, 2291–2306.

23. Altman, N.; Krzywinski, M. The curse(s) of dimensionality. Nat. Methods 2018, 15, 399–400.

24. Chugh, T.; Jin, Y.; Miettinen, K.; Hakanen, J.; Sindhya, K. A Surrogate-Assisted Reference Vector Guided Evolutionary Algorithm for Computationally Expensive Many-Objective Optimization. IEEE Trans. Evol. Comput. 2016, 22, 129–142.

25. Gutmann, H.M. A radial basis function method for global optimization. J. Glob. Optim. 2001, 19, 201–227.

26. Wang, S.C. Artificial neural network. In Interdisciplinary Computing in Java Programming; Springer: Berlin/Heidelberg, Germany, 2003; pp. 81–100.

27. Liu, B.; Zhang, Q.; Gielen, G.G. A Gaussian process surrogate model assisted evolutionary algorithm for medium scale expensive optimization problems. IEEE Trans. Evol. Comput. 2013, 18, 180–192.

28. Wang, B.; Yan, L.; Duan, X.; Yu, T.; Zhang, H. An integrated surrogate model constructing method: Annealing combinable Gaussian process. Inf. Sci. 2022, 591, 176–194.

29. Pisner, D.A.; Schnyer, D.M. Support vector machine. In Machine Learning; Elsevier: Amsterdam, The Netherlands, 2020; pp. 101–121.

30. Cheng, J.; Yen, G.G.; Zhang, G. A many-objective evolutionary algorithm with enhanced mating and environmental selections. IEEE Trans. Evol. Comput. 2015, 19, 592–605.

31. Zhang, Q.; Liu, W.; Tsang, E.; Virginas, B. Expensive multiobjective optimization by MOEA/D with Gaussian process model. IEEE Trans. Evol. Comput. 2009, 14, 456–474.

32. Song, Z.; Wang, H.; He, C.; Jin, Y. A Kriging-assisted two-archive evolutionary algorithm for expensive many-objective optimization. IEEE Trans. Evol. Comput. 2021, 25, 1013–1027.

33. Guo, Z.; Ong, Y.S.; He, T.; Liu, H. Co-Learning Bayesian Optimization. IEEE Trans. Cybern. 2022, 52, 9820–9833.

34. Jones, D.R.; Schonlau, M.; Welch, W.J. Efficient global optimization of expensive black-box functions. J. Glob. Optim. 1998, 13, 455–492.

35. Jones, D.R. A taxonomy of global optimization methods based on response surfaces. J. Glob. Optim. 2001, 21, 345–383.

36. Couckuyt, I.; Deschrijver, D.; Dhaene, T. Fast calculation of multiobjective probability of improvement and expected improvement criteria for Pareto optimization. J. Glob. Optim. 2014, 60, 575–594.

37. El-Beltagy, M.A.; Keane, A.J. Evolutionary optimization for computationally expensive problems using gaussian processes. Proc. Int. Conf. Artif. Intell. 2001, 1, 708–714.

38. Dennis, J.; Torczon, V. Managing approximation models in optimization. Multidiscip. Des. Optim. State Art 1997, 5, 330–347.

39. Deb, K.; Jain, H. An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: Solving problems with box constraints. IEEE Trans. Evol. Comput. 2013, 18, 577–601.

40. Wang, H.; Jiao, L.; Yao, X. Two_Arch2: An improved two-archive algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 2014, 19, 524–541.

41. Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

42. Wang, K.; Gou, C.; Duan, Y.; Lin, Y.; Zheng, X.; Wang, F.Y. Generative adversarial networks: Introduction and outlook. IEEE/CAA J. Autom. Sin. 2017, 4, 588–598.

43. Qian, W.; Xu, H.; Chen, H.; Yang, L.; Lin, Y.; Xu, R.; Yang, M.; Liao, M. A Synergistic MOEA Algorithm with GANs for Complex Data Analysis. Mathematics 2024, 12, 175.

44. He, C.; Huang, S.; Cheng, R.; Tan, K.C.; Jin, Y. Evolutionary Multiobjective Optimization Driven by Generative Adversarial Networks (GANs). IEEE Trans. Cybern. 2021, 51, 3129–3142.

45. Chen, S.; Chen, S.; Hou, W.; Ding, W.; You, X. EGANS: Evolutionary Generative Adversarial Network Search for Zero-Shot Learning. IEEE Trans. Evol. Comput. 2024, 28, 582–596.

46. Xue, Y.; Tong, W.; Neri, F.; Chen, P.; Luo, T.; Zhen, L.; Wang, X. Evolutionary Architecture Search for Generative Adversarial Networks Based on Weight Sharing. IEEE Trans. Evol. Comput. 2024, 28, 653–667.

47. Li, H.; Zhang, Q. Multiobjective optimization problems with complicated Pareto sets, MOEA/D and NSGA-II. IEEE Trans. Evol. Comput. 2008, 13, 284–302.

48. Cheng, R.; Jin, Y.; Olhofer, M.; Sendhoff, B. A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 2016, 20, 773–791.

49. Morgan, R.; Gallagher, M. Sampling techniques and distance metrics in high dimensional continuous landscape analysis: Limitations and improvements. IEEE Trans. Evol. Comput. 2013, 18, 456–461.

50. Zhan, D.; Cheng, Y.; Liu, J. Expected improvement matrix-based infill criteria for expensive multiobjective optimization. IEEE Trans. Evol. Comput. 2017, 21, 956–975.

51. Likas, A.; Vlassis, N.; Verbeek, J.J. The global k-means clustering algorithm. Pattern Recognit. 2003, 36, 451–461.

52. Kwak, N. Principal component analysis based on L1-norm maximization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1672–1680.

53. Danielsson, P.E. Euclidean distance mapping. Comput. Graph. Image Process. 1980, 14, 227–248.

54. Hao, H.; Zhou, A.; Qian, H.; Zhang, H. Expensive multiobjective optimization by relation learning and prediction. IEEE Trans. Evol. Comput. 2022, 26, 1157–1170.

55. Guo, D.; Wang, X.; Gao, K.; Jin, Y.; Ding, J.; Chai, T. Evolutionary optimization of high-dimensional multiobjective and many-objective expensive problems assisted by a dropout neural network. IEEE Trans. Syst. Man Cybern. Syst. 2021, 52, 2084–2097.

56. Tian, Y.; Cheng, R.; Zhang, X.; Jin, Y. PlatEMO: A MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput. Intell. Mag. 2017, 12, 73–87.

57. Bosman, P.A.; Thierens, D. The balance between proximity and diversity in multiobjective evolutionary algorithms. IEEE Trans. Evol. Comput. 2003, 7, 174–188.

| Problem | CoEGAN-BO (GAN) | CoEGAN-BO () | CoEGAN-BO |
|---|---|---|---|
| DTLZ1 | 6.45 | 6.01 | 5.61 |
| DTLZ2 | 1.62 | 1.67 | 1.57 |
| DTLZ3 | 1.24 | 1.32 | 1.20 |
| DTLZ5 | 1.65 | 1.62 | 1.49 |
| DTLZ6 | 2.20 | 2.21 | 2.24 |
| DTLZ7 | 9.19 | 9.09 | 8.59 |

| Problem | M | K-RVEA | MOEA/D-EGO | KTA2 | REMO | EDN-ARMOEA | CoEGAN-BO |
|---|---|---|---|---|---|---|---|
| DTLZ1 | 3 | 1.1630 (7.89 ) − | 9.8024 (8.76 ) − | 1.1909 (1.30 ) − | 8.9557 (8.73 ) − | 1.1999 (5.50 ) − | 5.6089 (8.58 ) |
| | 4 | 1.0674 (5.90 ) − | 8.7119 (5.75 ) − | 9.6466 (1.63 ) − | 8.8421 (8.66 ) − | 1.0493 (6.44 ) − | 5.5394 (1.22 ) |
| | 6 | 9.0109 (7.09 ) − | 7.7411 (6.08 ) − | 8.0940 (3.22 ) − | 7.3680 (7.20 ) − | 9.0269 (6.09 ) − | 5.3822 (9.80 ) |
| | 8 | 7.9409 (7.25 ) − | 7.0443 (5.89 ) − | 7.6470 (1.41 ) − | 6.6895 (7.95 ) − | 8.4174 (4.86 ) − | 5.4340 (9.26 ) |
| | 10 | 6.5519 (8.55 ) − | 6.0232 (6.57 ) − | 7.9799 (9.15 ) − | 5.6624 (7.74 ) − | 7.6990 (5.12 ) − | 4.1843 (1.20 ) |
| DTLZ2 | 3 | 3.0168 (1.26 ) − | 2.0717 (2.20 ) − | 2.5237 (3.08 ) − | 1.6689 (2.74 ) = | 2.7616 (1.19 ) − | 1.5716 (4.69 ) |
| | 4 | 3.0025 (1.45 ) − | 2.4457 (2.16 ) − | 2.6122 (3.66 ) − | 2.1026 (2.43 ) − | 2.7210 (1.26 ) − | 1.5617 (2.91 ) |
| | 6 | 2.9962 (1.18 ) − | 2.6470 (2.11 ) − | 2.5842 (3.72 ) − | 2.1645 (2.02 ) − | 2.7891 (1.09 ) − | 1.5619 (2.61 ) |
| | 8 | 2.8051 (1.87 ) − | 2.6686 (1.31 ) − | 2.7268 (3.22 ) − | 2.0794 (1.65 ) − | 2.7682 (9.51 ) − | 1.5613 (2.98 ) |
| | 10 | 2.7647 (1.67 ) − | 2.6465 (2.01 ) − | 2.5056 (2.99 ) − | 2.0173 (1.99 ) − | 2.6952 (9.71 ) − | 1.5378 (1.87 ) |
| DTLZ3 | 3 | 3.5193 (2.18 ) − | 3.0694 (2.69 ) − | 3.2725 (6.62 ) − | 2.8104 (2.54 ) − | 3.7080 (1.52 ) − | 1.1958 (2.25 ) |
| | 4 | 3.3967 (2.32 ) − | 3.1343 (2.51 ) − | 2.7809 (9.35 ) − | 2.8618 (2.67 ) − | 3.6016 (1.57 ) − | 1.1265 (1.79 ) |
| | 6 | 3.1608 (2.26 ) − | 3.1859 (2.10 ) − | 1.7952 (6.63 ) − | 2.6210 (2.79 ) − | 3.4518 (1.41 ) − | 9.5895 (2.79 ) |
| | 8 | 2.9514 (1.95 ) − | 3.0723 (2.00 ) − | 1.8546 (6.86 ) − | 2.6058 (2.66 ) − | 3.2543 (1.27 ) − | 9.5564 (2.43 ) |
| | 10 | 2.7507 (2.14 ) − | 2.9255 (2.29 ) − | 2.0252 (7.83 ) − | 2.3794 (2.73 ) − | 3.0955 (1.63 ) − | 9.8153 (2.27 ) |
| DTLZ5 | 3 | 2.8829 (1.62 ) − | 2.1090 (1.75 ) − | 2.4662 (4.68 ) − | 1.7606 (1.59 ) − | 2.6462 (1.05 ) − | 1.4994 (3.39 ) |
| | 4 | 2.8584 (1.49 ) − | 2.3209 (1.82 ) − | 2.4170 (4.70 ) − | 2.0165 (3.34 ) − | 2.6011 (1.22 ) − | 1.4336 (3.57 ) |
| | 6 | 2.6142 (1.79 ) − | 2.5474 (1.50 ) − | 2.5377 (3.62 ) − | 1.8241 (3.11 ) − | 2.4741 (1.40 ) − | 1.2413 (2.84 ) |
| | 8 | 2.3461 (1.33 ) − | 2.4404 (1.21 ) − | 2.2628 (1.98 ) − | 1.6780 (1.97 ) − | 2.3209 (9.32 ) − | 1.1171 (2.92 ) |
| | 10 | 2.1783 (1.38 ) − | 2.3175 (1.63 ) − | 2.0085 (3.10 ) − | 1.6264 (2.26 ) − | 2.2470 (6.59 ) − | 9.1949 (2.55 ) |
| DTLZ6 | 3 | 3.7862 (6.27 ) − | 3.8309 (9.45 ) − | 2.9367 (3.59 ) − | 3.8616 (1.44 ) − | 4.1882 (2.91 ) − | 2.2441 (2.59 ) |
| | 4 | 3.7501 (1.23 ) − | 3.8952 (7.66 ) − | 2.8591 (3.71 ) − | 3.8753 (1.16 ) − | 4.1156 (1.09 ) − | 2.5876 (2.89 ) |
| | 6 | 3.6577 (7.35 ) − | 3.8246 (4.90 ) − | 2.7463 (2.95 ) − | 3.7040 (1.10 ) − | 3.9301 (2.42 ) − | 2.4404 (1.82 ) |
| | 8 | 3.5115 (8.54 ) − | 3.6498 (7.45 ) − | 2.5257 (4.64 ) − | 3.5939 (9.76 ) − | 3.7511 (2.38 ) − | 2.2870 (2.42 ) |
| | 10 | 3.3429 (1.01 ) − | 3.4783 (5.58 ) − | 2.1562 (3.07 ) = | 3.4070 (9.54 ) − | 3.5672 (2.05 ) − | 2.0519 (1.72 ) |
| DTLZ7 | 3 | 5.4355 (7.98 ) + | 5.6601 (7.65 ) + | 9.3943 (8.12 ) − | 5.4656 (9.92 ) + | 9.2824 (5.35 ) − | 8.5884 (7.91 ) |
| | 4 | 8.6090 (9.32 ) + | 9.1196 (1.44 ) + | 1.1873 (9.73 ) = | 8.9490 (8.38 ) + | 1.2503 (5.41 ) − | 1.1532 (1.52 ) |
| | 6 | 1.4746 (3.04 ) + | 1.5391 (1.75 ) + | 1.9032 (1.66 ) − | 1.5532 (1.36 ) + | 1.9573 (9.33 ) − | 1.7448 (2.20 ) |
| | 8 | 2.7182 (1.20 ) + | 2.2466 (2.36 ) = | 2.5169 (2.93 ) − | 2.2078 (1.84 ) = | 2.6114 (1.49 ) − | 2.3463 (2.54 ) |
| | 10 | 5.2089 (1.87 ) + | 2.8486 (3.95 ) = | 3.2039 (2.65 ) − | 2.7666 (2.82 ) = | 3.2454 (2.07 ) − | 2.9126 (4.30 ) |
| +/−/= | | 5/25/0 | 3/25/2 | 0/28/2 | 3/24/3 | 0/29/1 | |

| Problem | M | K-RVEA | MOEA/D-EGO | KTA2 | REMO | EDN-ARMOEA | CoEGAN-BO |
|---|---|---|---|---|---|---|---|
| DTLZ1 | 3 | 2.7400 (9.95 ) − | 2.3905 (1.58 ) − | 2.7076 (1.33 ) − | 2.2310 (9.09 ) − | 2.6023 (5.46 ) − | 1.3536 (4.04 ) |
| | 4 | 2.4209 (1.10 ) − | 2.1237 (1.25 ) − | 2.4253 (1.00 ) − | 2.0944 (1.46 ) − | 2.2831 (6.71 ) − | 1.3482 (2.03 ) |
| | 6 | 1.9867 (6.60 ) − | 1.8757 (1.09 ) − | 2.0993 (1.35 ) − | 1.8480 (1.15 ) − | 1.9940 (1.07 ) − | 1.2423 (1.95 ) |
| | 8 | 1.8175 (1.09 ) − | 1.7335 (9.42 ) − | 2.0243 (1.15 ) − | 1.7154 (1.42 ) − | 1.8348 (8.79 ) − | 1.0897 (3.27 ) |
| | 10 | 1.7690 (1.06 ) − | 1.5889 (1.37 ) − | 1.9312 (1.48 ) − | 1.6480 (1.27 ) − | 1.7663 (9.13 ) − | 9.4570 (2.76 ) |
| DTLZ2 | 3 | 6.5568 (2.95 ) − | 5.5056 (3.00 ) − | 6.4772 (3.25 ) − | 4.9357 (4.93 ) = | 6.1443 (1.78 ) − | 4.6614 (9.00 ) |
| | 4 | 6.4980 (3.25 ) − | 6.0500 (3.00 ) − | 6.5618 (2.50 ) − | 5.3265 (3.68 ) − | 6.0854 (2.08 ) − | 4.7588 (8.49 ) |
| | 6 | 6.5986 (1.79 ) − | 6.1751 (2.37 ) − | 6.5517 (2.89 ) − | 5.1044 (3.36 ) − | 6.1155 (1.56 ) − | 4.1289 (1.06 ) |
| | 8 | 6.5548 (2.69 ) − | 6.1776 (3.83 ) − | 6.5600 (2.36 ) − | 5.2862 (4.16 ) − | 6.0762 (1.99 ) − | 4.5706 (9.46 ) |
| | 10 | 6.3792 (3.22 ) − | 6.0196 (2.06 ) − | 6.4229 (3.74 ) − | 5.1812 (3.58 ) − | 6.0105 (1.60 ) − | 4.4632 (9.27 ) |
| DTLZ3 | 3 | 8.3362 (3.61 ) − | 7.8510 (2.23 ) − | 8.2749 (4.88 ) − | 7.1383 (4.10 ) − | 8.4466 (1.83 ) − | 2.6985 (1.36 ) |
| | 4 | 8.3606 (2.67 ) − | 8.0465 (3.08 ) − | 8.1113 (9.15 ) − | 7.3300 (4.70 ) − | 8.2661 (2.00 ) − | 2.4775 (2.79 ) |
| | 6 | 8.2088 (2.68 ) − | 8.0250 (3.06 ) − | 7.4869 (9.23 ) − | 7.1297 (2.84 ) − | 7.9735 (2.59 ) − | 2.1852 (5.92 ) |
| | 8 | 8.0873 (2.09 ) − | 7.8899 (2.56 ) − | 6.8763 (1.58 ) − | 7.0811 (5.28 ) − | 7.8185 (2.58 ) − | 1.9900 (8.30 ) |
| | 10 | 7.7497 (3.33 ) − | 7.5194 (3.35 ) − | 6.8331 (1.51 ) − | 6.8932 (4.77 ) − | 7.7091 (2.35 ) − | 2.3608 (7.12 ) |
| DTLZ5 | 3 | 6.4983 (2.24 ) − | 5.5380 (3.18 ) − | 6.4745 (2.86 ) − | 4.6592 (4.69 ) = | 5.9920 (1.61 ) − | 4.4818 (9.80 ) |
| | 4 | 6.5053 (2.27 ) − | 5.6927 (3.17 ) − | 6.3412 (3.41 ) − | 5.4709 (4.64 ) − | 5.9715 (1.24 ) − | 4.5460 (1.17 ) |
| | 6 | 6.3855 (3.00 ) − | 5.9243 (2.10 ) − | 6.3203 (2.52 ) − | 5.1026 (4.31 ) − | 5.8540 (2.80 ) − | 4.1627 (1.03 ) |
| | 8 | 6.1416 (2.44 ) − | 5.9718 (3.16 ) − | 6.0392 (5.27 ) − | 5.0663 (3.82 ) − | 5.7729 (1.65 ) − | 3.7756 (9.71 ) |
| | 10 | 6.1396 (1.96 ) − | 5.9373 (2.38 ) − | 5.7663 (5.84 ) − | 4.7344 (4.74 ) − | 5.6017 (1.35 ) − | 3.9932 (1.12 ) |
| DTLZ6 | 3 | 8.2975 (1.05 ) − | 8.4323 (8.88 ) − | 7.5523 (6.66 ) − | 8.2203 (1.63 ) − | 8.6402 (2.76 ) − | 4.6775 (4.12 ) |
| | 4 | 8.2596 (8.57 ) − | 8.4379 (6.46 ) − | 7.8968 (5.13 ) − | 8.3351 (9.80 ) − | 8.5521 (3.77 ) − | 4.7666 (4.08 ) |
| | 6 | 8.1410 (7.52 ) − | 8.2948 (5.82 ) − | 7.5985 (6.10 ) − | 8.1505 (1.23 ) − | 8.3769 (2.57 ) − | 4.6297 (3.95 ) |
| | 8 | 8.0194 (8.38 ) − | 8.1350 (8.09 ) − | 7.5311 (6.77 ) − | 7.9948 (1.04 ) − | 8.1799 (3.99 ) − | 4.4825 (4.25 ) |
| | 10 | 7.8081 (8.25 ) − | 7.9716 (6.16 ) − | 7.0222 (1.05 ) − | 7.7877 (7.50 ) − | 8.0155 (2.29 ) − | 4.3439 (3.77 ) |
| DTLZ7 | 3 | 4.2957 (7.69 ) + | 9.4168 (4.29 ) + | 1.0478 (4.20 ) − | 6.9445 (4.93 ) + | 9.9256 (3.47 ) = | 1.0015 (6.16 ) |
| | 4 | 4.8264 (1.41 ) + | 1.2636 (9.37 ) + | 1.3982 (9.53 ) = | 1.0970 (7.67 ) + | 1.3366 (5.16 ) = | 1.3532 (9.69 ) |
| | 6 | 7.3726 (1.57 ) + | 2.0451 (1.10 ) = | 2.1300 (1.02 ) = | 1.9530 (7.65 ) + | 2.0726 (5.75 ) = | 2.1114 (1.35 ) |
| | 8 | 9.3152 (1.38 ) + | 2.8779 (1.34 ) = | 2.9089 (1.53 ) = | 2.7428 (1.09 ) + | 2.8210 (1.08 ) = | 2.8402 (1.46 ) |
| | 10 | 1.1131 (1.62 ) + | 3.5965 (1.51 ) = | 3.6868 (1.21 ) − | 3.4709 (1.19 ) + | 3.5519 (1.13 ) = | 3.6030 (1.31 ) |
| +/−/= | | 5/25/0 | 2/25/3 | 0/27/3 | 5/23/2 | 0/25/5 | |

| Problem | M | K-RVEA | MOEA/D-EGO | KTA2 | REMO | EDN-ARMOEA | CoEGAN-BO |
|---|---|---|---|---|---|---|---|
| MaF1 | 3 | 3.0630 (1.67 ) − | 2.0202 (2.83 ) − | 2.5657 (4.97 ) − | 1.7246 (2.73 ) − | 3.0720 (1.94 ) − | 1.0977 (1.90 ) |
| | 4 | 3.5592 (3.55 ) − | 2.3167 (4.56 ) − | 3.3756 (7.06 ) − | 2.0812 (3.14 ) − | 3.9069 (2.59 ) − | 1.4582 (3.63 ) |
| | 6 | 4.4377 (4.79 ) − | 2.8709 (4.03 ) − | 4.6643 (7.89 ) − | 2.6182 (2.19 ) = | 5.1277 (2.72 ) − | 2.4861 (5.48 ) |
| | 8 | 4.7617 (6.96 ) − | 3.2560 (6.56 ) = | 5.4752 (7.56 ) − | 3.0219 (4.76 ) = | 5.7178 (3.20 ) − | 3.2295 (6.66 ) |
| | 10 | 5.0680 (6.58 ) − | 3.2540 (4.78 ) + | 6.5188 (7.06 ) − | 3.0217 (5.44 ) + | 6.1669 (2.90 ) − | 3.6704 (7.31 ) |
| MaF2 | 3 | 2.6263 (7.31 ) − | 2.0954 (1.12 ) − | 2.3592 (1.09 ) − | 2.0265 (1.65 ) − | 2.4247 (5.19 ) − | 1.7090 (1.09 ) |
| | 4 | 2.2340 (7.35 ) − | 2.0269 (5.54 ) − | 2.1383 (8.81 ) − | 2.0096 (9.29 ) − | 2.0679 (3.01 ) − | 1.9284 (9.25 ) |
| | 6 | 2.7449 (1.60 ) = | 2.0713 (7.43 ) + | 2.6282 (1.40 ) + | 2.7039 (1.58 ) = | 2.3129 (6.94 ) + | 2.7770 (1.27 ) |
| | 8 | 3.1911 (1.68 ) = | 2.2804 (8.30 ) + | 3.1711 (2.36 ) = | 3.2468 (2.07 ) = | 2.7502 (1.15 ) + | 3.2753 (2.52 ) |
| | 10 | 3.5788 (2.58 ) = | 2.3443 (1.09 ) + | 3.4783 (2.28 ) = | 3.6012 (2.22 ) = | 3.0043 (1.09 ) + | 3.5505 (2.44 ) |
| MaF3 | 3 | 1.5968 (1.45 ) + | 2.9779 (5.41 ) − | N/A | 1.6799 (7.00 ) + | 2.1325 (2.21 ) = | 2.0445 (6.14 ) |
| | 4 | 1.6168 (2.10 ) + | 2.6638 (5.80 ) − | N/A | 1.5377 (3.47 ) + | 2.0561 (2.90 ) = | 1.9783 (6.77 ) |
| | 6 | 1.5305 (2.07 ) − | 2.3244 (5.64 ) − | N/A | 1.8147 (4.41 ) − | 1.8280 (2.29 ) − | 9.9529 (5.80 ) |
| | 8 | 1.3950 (2.52 ) − | 2.1341 (4.55 ) − | N/A | 1.8048 (6.65 ) − | 1.7721 (2.91 ) − | 7.7932 (4.69 ) |
| | 10 | 1.2835 (2.32 ) − | 2.1700 (3.85 ) − | N/A | 1.5644 (7.46 ) − | 1.4193 (2.76 ) − | 5.4190 (2.50 ) |
| MaF4 | 3 | 1.1714 (7.59 ) − | 1.0856 (1.12 ) − | N/A | 8.7103 (8.20 ) − | 1.1913 (5.38 ) − | 7.2791 (1.83 ) |
| | 4 | 2.4369 (1.81 ) − | 2.5217 (1.86 ) − | N/A | 1.8465 (2.37 ) − | 2.4869 (1.58 ) − | 1.6300 (3.33 ) |
| | 6 | 9.2871 (5.95 ) − | 1.0140 (9.25 ) − | N/A | 7.0090 (9.57 ) − | 9.4063 (6.03 ) − | 6.0758 (1.34 ) |
| | 8 | 3.5739 (2.59 ) − | 3.8744 (3.11 ) − | N/A | 2.6748 (4.12 ) − | 3.7234 (1.79 ) − | 2.1231 (4.17 ) |
| | 10 | 1.2808 (1.17 ) − | 1.4273 (1.25 ) − | N/A | 9.7701 (1.08 ) − | 1.3940 (7.71 ) − | 8.3542 (1.60 ) |
| MaF5 | 3 | 9.1984 (5.39 ) = | 9.5013 (2.21 ) = | 1.0741 (2.91 ) = | 6.3104 (1.97 ) + | 1.0056 (9.10 ) = | 1.0048 (2.18 ) |
| | 4 | 1.0502 (8.87 ) + | 1.1610 (2.67 ) = | 1.2108 (2.31 ) = | 8.3278 (1.13 ) + | 1.3135 (1.66 ) − | 1.1784 (1.86 ) |
| | 6 | 1.7812 (2.67 ) + | 2.8493 (7.96 ) − | 2.5577 (6.56 ) = | 1.9600 (3.15 ) + | 2.8780 (6.34 ) − | 2.3089 (2.94 ) |
| | 8 | 4.6440 (6.74 ) + | 6.0658 (1.04 ) − | 5.7401 (1.22 ) = | 5.2089 (9.93 ) = | 8.0599 (2.57 ) − | 5.3179 (6.30 ) |
| | 10 | 1.5650 (1.73 ) + | 1.8395 (4.56 ) = | 1.6005 (2.10 ) + | 1.8052 (2.02 ) = | 2.3848 (4.50 ) − | 2.1777 (9.91 ) |
| MaF6 | 3 | 2.7284 (2.66 ) − | 1.6807 (2.61 ) − | N/A | 1.3929 (2.58 ) = | 2.4823 (1.99 ) − | 1.3966 (4.21 ) |
| | 4 | 2.6540 (1.65 ) − | 2.1893 (2.74 ) − | N/A | 1.4843 (2.54 ) = | 2.4619 (1.58 ) − | 1.4091 (3.31 ) |
| | 6 | 2.4028 (2.04 ) − | 2.2333 (2.28 ) − | N/A | 1.5078 (2.87 ) − | 2.2341 (1.12 ) − | 1.2670 (3.65 ) |
| | 8 | 2.1140 (1.78 ) − | 2.1633 (1.99 ) − | N/A | 1.3969 (2.67 ) − | 2.1538 (1.37 ) − | 1.1838 (3.60 ) |
| | 10 | 2.0077 (1.70 ) − | 2.0177 (1.70 ) − | N/A | 1.2808 (2.75 ) − | 2.0416 (1.56 ) − | 9.6546 (5.06 ) |
| MaF7 | 3 | 5.9342 (9.20 ) + | 6.0456 (7.44 ) + | 8.7173 (1.20 ) = | 5.5385 (9.61 ) + | 9.4068 (4.46 ) − | 8.8519 (8.05 ) |
| | 4 | 8.9847 (1.21 ) + | 8.5943 (1.72 ) + | 1.1882 (1.72 ) = | 9.1095 (1.33 ) + | 1.2849 (4.94 ) − | 1.1682 (9.83 ) |
| | 6 | 1.6050 (4.26 ) + | 1.5978 (2.03 ) + | 1.6023 (3.35 ) = | 1.5290 (1.12 ) + | 1.9694 (8.66 ) − | 1.7763 (2.96 ) |
| | 8 | 2.7957 (1.15 ) + | 2.2782 (2.69 ) = | 2.3852 (2.34 ) = | 2.2239 (1.32 ) = | 2.5877 (7.13 ) − | 2.4003 (2.83 ) |
| | 10 | 4.6937 (1.63 ) + | 2.8617 (3.45 ) = | 3.0500 (2.86 ) = | 2.8646 (2.56 ) = | 3.2563 (1.30 ) − | 2.8807 (4.67 ) |
| +/−/= | | 11/20/4 | 7/22/6 | 2/7/11 | 9/15/11 | 3/29/3 | |

| Problem | K-RVEA | MOEA/D-EGO | KTA2 | REMO | EDN-ARMOEA | CoEGAN-BO |
|---|---|---|---|---|---|---|
| DTLZ1 | 1.4427 | 3.7621 | 3.5105 | 3.7793 | 1.3358 | 5.1477 |
| DTLZ2 | 1.5611 | 4.5886 | 8.7350 | 2.3010 | 1.3099 | 5.0543 |
| DTLZ3 | 1.8730 | 4.3973 | 9.0765 | 4.4563 | 1.6600 | 5.0490 |
| DTLZ5 | 1.3001 | 4.5142 | 9.2378 | 2.9397 | 1.5870 | 4.8810 |
| DTLZ6 | 1.3371 | 4.6383 | 8.8606 | 3.1500 | 1.4933 | 4.6461 |
| DTLZ7 | 1.4691 | 4.4943 | 8.5553 | 1.8000 | 1.5293 | 4.7112 |

| Problem | BO Avg. Train Time | BO Total Train Time | GAN Avg. Train Time | GAN Total Train Time | Total Runtime |
|---|---|---|---|---|---|
| DTLZ1 | 1.7787 | 6.9370 | 6.5291 | 2.5464 | 5.1477 |
| DTLZ2 | 1.6424 | 6.4054 | 7.6485 | 2.9829 | 5.0543 |
| DTLZ3 | 1.6385 | 6.3900 | 6.7070 | 2.6157 | 5.0490 |
| DTLZ5 | 1.5958 | 6.2237 | 6.8467 | 2.6702 | 4.8810 |
| DTLZ6 | 1.6909 | 6.5947 | 6.7778 | 2.6433 | 4.6461 |
| DTLZ7 | 1.7023 | 6.6392 | 7.5646 | 2.9502 | 4.7112 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tian, J.; Bian, H.; Zhang, Y.; Zhang, X.; Liu, H. CoEGAN-BO: Synergistic Co-Evolution of GANs and Bayesian Optimization for High-Dimensional Expensive Many-Objective Problems. Mathematics 2025, 13, 3444. https://doi.org/10.3390/math13213444
