Abstract

Sarcasm is a complex emotional expression often marked by semantic contrast and incongruity between textual and visual modalities. In recent years, multi-modal sarcasm detection (MMSD) has emerged as a vital task in affective computing. However, existing models frequently rely on superficial spurious cues, such as emojis or hashtags, during training and inference. This limits their ability to capture deeper semantic inconsistencies and undermines generalization to real-world scenarios. To tackle these challenges, we propose Multi-Modal Mixture-of-Experts (MM-MoE), a novel framework that integrates diverse expert modules through a global dynamic gating mechanism for adaptive cross-modal interaction and selective semantic fusion. This architecture allows the model to better capture modality-level incongruity. Furthermore, we introduce MMSD3.0 and MMSD4.0, two cross-dataset evaluation benchmarks derived from two open-source benchmark datasets, MMSD and MMSD2.0, to assess model robustness under varying distributions of spurious cues. Extensive experiments demonstrate that MM-MoE achieves strong performance and generalization ability, consistently outperforming state-of-the-art baselines when the distribution of superficial spurious correlations shifts between training and testing.

Keywords: multi-modal sarcasm detection; Mixture-of-Experts; cross-modal fusion; spurious cues; robust multi-modal modeling

MSC: 68T50; 68T07

1. Introduction

Sarcasm is a subtle and cognitively complex linguistic phenomenon characterized by a mismatch between surface expression and intended meaning [1,2]. With the proliferation of multi-modal content on social media, users increasingly employ both text and images to express irony, sarcasm, or satire. Accurately detecting such multi-modal sarcasm is pivotal in applications such as sentiment analysis, misinformation detection, and public opinion monitoring [3,4,5,6,7].

The core challenge in multi-modal sarcasm detection (MMSD) lies in capturing the semantic incongruity and emotional tension between textual and visual components. This incongruity may occur at the semantic level (e.g., a contradiction between the textual description and the image content) or at the affective level (e.g., cheerful text paired with a gloomy image), which makes sarcastic expressions diverse, implicit, and highly context-dependent [8,9]. Therefore, effectively modeling cross-modal interactions remains a primary challenge for MMSD.

Despite advancements in cross-modal fusion methods, two key issues remain unresolved:

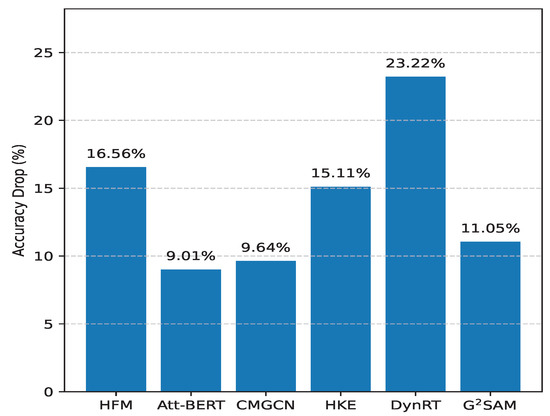

- Mainstream models often exploit superficial textual cues (e.g., emojis and hashtags) that are prevalent in noisy datasets. While prior works employ various fusion strategies, their architectures often fail to distinguish these cues from genuine semantic conflicts, inadvertently learning shortcuts that do not generalize to real-world scenarios. This fundamental weakness is exposed when such cues are removed, causing model performance to degrade significantly [10]. For example, as shown in Figure 1, traditional multi-modal models such as HFM [11], Att-BERT [12], CMGCN [13], HKE [14], DynRT [15], and G2SAM [16] perform significantly worse on the cleaned MMSD2.0 dataset [10], as the accuracy of the DynRT model drops by 23.22% from MMSD [11] to MMSD2.0.

Figure 1. Accuracy drop when removing superficial cues (MMSD → MMSD2.0).

Figure 1. Accuracy drop when removing superficial cues (MMSD → MMSD2.0). - Lack of dynamic fusion mechanisms: Most current MMSD models adopt static fusion strategies, applying the same integration method to all inputs. This uniformity ignores the varying dominance or noise in different modalities across contexts, resulting in unstable performance.

To tackle these challenges, we propose MM-MoE, a multi-modal sarcasm detection framework based on the Mixture-of-Experts (MoE) paradigm. To provide a comprehensive analysis of multi-modal incongruity, MM-MoE is explicitly designed with four functionally distinct expert types: (1) Text Self-Attention Experts to capture internal textual context, (2) Image Self-Attention Experts to model local and global visual cues, (3) Text-Guided Cross-Modal Experts to identify how text semantics guide attention over visual features, and (4) Image-Guided Cross-Modal Experts to perform the symmetric operation. This selection reflects the need to model both intra-modal consistency and inter-modal incongruity, which are central to sarcasm. These experts are managed by a global gating network that dynamically adjusts their contributions based on the input context, enabling fine-grained, adaptive fusion. Additionally, we introduce a data augmentation strategy based on in-batch negative sampling, whose loss serves as a regularization term that guides the model toward learning true semantic mismatches and reduces its reliance on spurious correlations.

To thoroughly evaluate robustness and generalization, we design two cross-dataset setups:

- MMSD3.0: Trained on noisy MMSD, tested on clean MMSD2.0.

- MMSD4.0: Trained on clean MMSD2.0, tested on noisy MMSD.

These settings simulate realistic shifts in the distribution of spurious cues and enable rigorous testing of the model’s semantic grounding. Our contributions are summarized as follows:

- We propose a new systematic evaluation protocol to assess the robustness of multi-modal sarcasm detection models against distributional shifts of spurious cues. We instantiate this protocol with two challenging benchmarks, MMSD3.0 and MMSD4.0, which are designed to rigorously measure a model’s true generalization ability beyond memorizing superficial dataset artifacts.

- We propose MM-MoE, a Mixture-of-Experts framework that integrates diverse expert modules and a dynamic gating mechanism for adaptive semantic fusion. A regularization loss based on negative sampling augmentation is further introduced to enhance semantic alignment and reduce dependence on superficial cues.

- Extensive experiments show that MM-MoE performs well in the presence of spurious cues in multi-modal sarcasm detection, consistently outperforms state-of-the-art baselines, and generalizes strongly under shifts in spurious correlations.

2. Related Work

2.1. Multi-Modal Sarcasm Detection

Multi-modal sarcasm detection (MMSD) aims to identify sarcastic meanings expressed jointly through text, images, and other modalities. This task is inherently challenging due to three core characteristics: modal collaboration, implicit expression, and strong contextual dependency. As a result, sarcasm is often difficult to detect using uni-modal information, thus necessitating advanced cross-modal modeling techniques [8].

Early studies on sarcasm detection focused predominantly on textual content, exploring features such as sentiment reversal and semantic incongruity [17,18,19,20]. With the rise of image-rich expressions on social media, researchers recognized the complementary semantic and emotional value of visual information in sarcastic communication. Schifanella et al. were among the first to introduce multi-modal sarcasm detection [21], followed by Cai et al., who constructed the MMSD dataset, paving the way for systematic research in this area [11].

Building on MMSD, various approaches have been proposed to model text–image sarcasm. For instance, graph convolutional networks (GCNs) have been used to capture ironic relationships between modalities [13,22]. Wen et al. designed a Dual Incongruity Perception (DIP) network to simultaneously detect emotional and factual mismatches [23]. Liu et al. proposed a framework that combines atomic-level consistency, based on multi-head cross-attention, with component-level consistency, based on a graph neural network, which effectively improves cross-modal sarcasm recognition [14]. Wei et al. further improved cross-modal sarcasm detection by constructing global semantic consistency features [16].

While these methods achieve promising results on in-domain datasets, recent studies have revealed a significant limitation: many models rely heavily on explicit surface-level features such as emojis and hashtags, which do not generalize well to real-world sarcastic expressions. Qin et al. highlighted the issue of spurious correlations in the original MMSD dataset and proposed a cleaned version, MMSD2.0, by removing such misleading cues [10]. On MMSD2.0, most mainstream models suffered notable performance drops (for example, the F1 score of DIP decreased by over 10% [24]), indicating insufficient modeling of semantic incongruity. Moreover, existing fusion architectures are typically static, applying the same integration strategy to all samples without considering modality dominance or the level of incongruity; this rigidity is regarded as a key reason for poor generalization. While recent approaches such as ESAM [25] improve robustness through static mechanisms like feature masking, our work introduces a different paradigm: we make the fusion process itself dynamic and input-dependent through a Mixture-of-Experts architecture that adaptively selects the most suitable analysis pathway for each sample.

2.2. Mixture-of-Experts (MoE) Architectures

Mixture-of-Experts (MoE) is a machine learning architecture that dynamically schedules and combines multiple sub-models (i.e., “experts”) to complete complex tasks. It was first proposed by Jacobs et al. [26]. Its core idea is to build a gating network that dynamically allocates the participation weight of each expert according to the characteristics of the input sample, achieving task-oriented adaptive modeling [27,28,29]. The MoE architecture divides a complex problem into several subtasks that are modeled by different experts, and activates only a subset of experts at inference time, balancing model expressivity and computational efficiency. It is one of the key technologies that have driven the development of large-scale deep learning models in recent years [30,31,32,33]. Since Google proposed the Switch Transformer [34], MoE has made significant progress in many frontier fields such as natural language processing (NLP), computer vision (CV), and multi-modal learning [35,36,37,38].
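The routing idea can be made concrete with a generic sparse MoE layer. The NumPy sketch below implements the standard top-k softmax gating used in sparse MoE models, where only k experts are evaluated per sample. It illustrates the general paradigm described above, not MM-MoE's own gate; the tanh experts, k = 2, and all function names are illustrative assumptions.

```python
import numpy as np

def top_k_gating(logits: np.ndarray, k: int) -> np.ndarray:
    """Softmax over the k largest gate logits per sample; all other experts get weight 0."""
    top_idx = np.argsort(logits, axis=-1)[:, -k:]             # indices of the k largest logits
    masked = np.full_like(logits, -np.inf)
    np.put_along_axis(masked, top_idx,
                      np.take_along_axis(logits, top_idx, axis=-1), axis=-1)
    masked = masked - masked.max(axis=-1, keepdims=True)      # numerical stability
    weights = np.exp(masked)                                  # exp(-inf) = 0 for inactive experts
    return weights / weights.sum(axis=-1, keepdims=True)

def sparse_moe(x, expert_weights, gate_weight, k=2):
    """y(x) = sum_j g_j(x) * E_j(x); experts with g_j(x) = 0 are never evaluated."""
    gates = top_k_gating(x @ gate_weight, k)                  # (batch, num_experts)
    out = np.zeros((x.shape[0], expert_weights[0].shape[1]))
    for j, W in enumerate(expert_weights):                    # toy expert: E_j(x) = tanh(x W_j)
        active = gates[:, j] > 0
        if active.any():
            out[active] += gates[active, j:j + 1] * np.tanh(x[active] @ W)
    return out, gates

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))                                   # 4 samples, 8 features
experts = [rng.normal(size=(8, 16)) for _ in range(4)]        # 4 experts, 16-dim outputs
y, gates = sparse_moe(x, experts, rng.normal(size=(8, 4)), k=2)
```

Setting k equal to the number of experts recovers dense soft gating, in which every expert contributes to every sample.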

In multi-modal learning, MoE offers unique advantages by facilitating flexible expert routing tailored to the heterogeneous nature of modalities. Previous works have explored its application in various contexts: MIMoE-FND [39] employs semantic consistency for expert selection in fake news detection, MoPE leverages prompt-level expert fusion to enhance training efficiency [40], and MoBA integrates lightweight adapters for modeling modality conflict in sarcasm detection [41]. However, these methods still face significant limitations when applied to sarcasm detection, a task that inherently requires fine-grained modeling of semantic incongruity and pragmatic tension. Specifically, (1) many existing MoE-based approaches rely on static or locally scoped gating mechanisms, lacking a global view of modality dominance, and (2) they do not explicitly model cross-modal semantic misalignment, which is central to identifying sarcastic content.

To address the above challenges, we introduce a novel dynamic Mixture-of-Experts (MoE) modeling paradigm for multi-modal sarcasm detection. Specifically, we design a fine-grained expert system consisting of four structurally unique expert types: textual self-attention experts, visual self-attention experts, text-guided cross-modal interaction experts, and image-guided cross-modal interaction experts. We develop a global dynamic gating mechanism that adaptively routes input representations to the most appropriate combination of experts based on the fused multi-modal features; this goes beyond the traditional MoE architecture, which relies on a unified expert structure and static gating. In addition, we augment the training objective with a regularization term derived from mismatched pairs (negative samples), which enhances the model’s ability to capture inter-modal sarcasm. This combination effectively reduces the model’s reliance on surface cues such as emojis and hashtags, leading to more robust sarcasm understanding.

3. Methodology

This section presents the architecture of our proposed multi-modal sarcasm detection framework, MM-MoE, detailing the task formulation, feature extraction, expert module design, dynamic gating mechanism, multi-modal fusion strategy, and loss functions.

Our model is based on the pre-trained CLIP framework [42], which provides initial semantic representations for both text and image inputs. These representations are projected into a shared latent space and then processed through a Mixture-of-Experts module composed of four expert types:

- Text Self-Attention Experts;

- Image Self-Attention Experts;

- Text-Guided Cross-Modal Experts;

- Image-Guided Cross-Modal Experts.

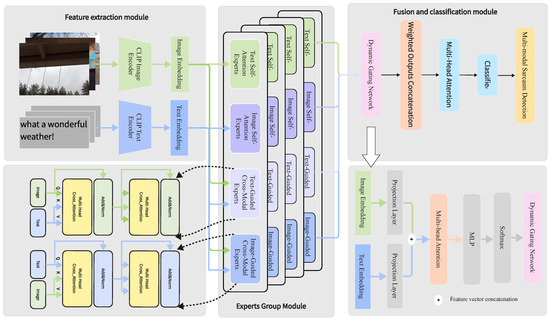

A global dynamic gating network assigns context-dependent weights to the outputs of all experts, and a dynamically fused representation is generated for the final prediction. The model is optimized using a classification loss, which is enhanced by a data augmentation strategy that treats mismatched pairs as negative samples. Figure 2 illustrates the overall workflow.

Figure 2. The overall architecture of the proposed MM-MoE framework. Blue arrows indicate the flow of textual features, green arrows represent visual features, and orange arrows show the gating mechanism’s control flow.

3.1. Task Definition

Given an image-text pair $(I, T)$, where $I$ denotes the visual input and $T$ the corresponding text, the task is to predict a sarcasm label $y \in \{0, 1\}$, where 1 represents sarcasm and 0 denotes a non-sarcastic instance. To obtain high-quality initial features, we adopt the pre-trained CLIP model, which encodes both image and text using a contrastive training paradigm, yielding powerful cross-modal alignment capabilities. Specifically, we extract

$$E_T = \mathrm{CLIP}_{\text{text}}(T), \qquad E_I = \mathrm{CLIP}_{\text{image}}(I),$$

where $E_T$ and $E_I$ represent the text and image embeddings, respectively. These are projected into a shared hidden space through learnable linear projections:

$$T' = W_T E_T, \qquad I' = W_I E_I,$$

with $W_T \in \mathbb{R}^{d \times d_T}$ and $W_I \in \mathbb{R}^{d \times d_I}$ being trainable matrices and $d$ being the hidden size. The vectors $T'$ and $I'$ are fed into the subsequent MoE module.

3.2. Mixture-of-Experts Module

To precisely capture diverse intra-modal and inter-modal semantic information, we design a Mixture-of-Experts (MoE) module composed of four functionally distinct expert types, each built upon the Transformer attention mechanism. Together, these experts provide a comprehensive toolkit for sarcasm detection. Two uni-modal expert types (Text Self-Attention and Image Self-Attention) independently model the unique context within each modality. Crucially, to capture the often asymmetric nature of sarcastic incongruity, two cross-modal expert types (Text-Guided and Image-Guided), one per direction, allow the model to analyze how one modality reframes the other.

The fundamental structure of each expert module includes a multi-head attention layer, residual connections with Layer Normalization, and a Feed-Forward Network (FFN). For the $j$-th expert, its operation on an input feature vector $x$ can be formally expressed as

$$h_j = \mathrm{LayerNorm}\big(x + \mathrm{MultiHeadAttn}_j(x)\big),$$

$$e_j = \mathrm{LayerNorm}\big(h_j + \mathrm{FFN}_j(h_j)\big).$$

In these equations, $j$ is the expert index, $x$ is the input feature vector, $h_j$ is the intermediate output from the attention sub-layer, and $e_j$ is the final output representation of the expert module. The MultiHeadAttn function is built on the standard scaled dot-product attention mechanism, $\mathrm{Attention}(Q, K, V) = \mathrm{Softmax}\big(QK^{\top}/\sqrt{d_k}\big)V$, where $Q$, $K$, and $V$ represent the Query, Key, and Value matrices, and $d_k$ is the dimensionality of the key vectors. The FFN consists of two linear layers with a GELU activation function. The inputs to the attention mechanism vary by expert type. For the self-attention experts, the input $x$ corresponds to a single modality’s feature ($T'$ or $I'$), from which $Q$, $K$, and $V$ are all derived. For the cross-modal experts, the inputs are adapted to model inter-modal relationships: the Query is derived from one modality and the Key and Value from the other (i.e., operations such as $\mathrm{MultiHeadAttn}(T', I', I')$ or $\mathrm{MultiHeadAttn}(I', T', T')$ replace the self-attention block).
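A minimal NumPy sketch of one expert block, following the two equations above, may help make the self- versus cross-attention distinction concrete. It assumes token-level features (a sequence of vectors per modality), omits LayerNorm's learnable scale and shift as well as all biases, and uses the common tanh approximation of GELU; the function names and parameter shapes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # LayerNorm without learnable scale/shift, for brevity
    return (x - x.mean(-1, keepdims=True)) / np.sqrt(x.var(-1, keepdims=True) + eps)

def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def multi_head_attn(q_in, kv_in, attn_params, num_heads):
    """Softmax(Q K^T / sqrt(d_k)) V per head; Q from q_in, K and V from kv_in."""
    Wq, Wk, Wv, Wo = attn_params
    n_q, d = q_in.shape
    d_k = d // num_heads

    def split(m):  # (n, d) -> (heads, n, d_k)
        return m.reshape(m.shape[0], num_heads, d_k).transpose(1, 0, 2)

    Q, K, V = split(q_in @ Wq), split(kv_in @ Wk), split(kv_in @ Wv)
    heads = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)) @ V   # (heads, n_q, d_k)
    return heads.transpose(1, 0, 2).reshape(n_q, d) @ Wo

def expert(x_query, x_context, params, num_heads=4):
    """h = LN(x + MHA(x)); e = LN(h + FFN(h)).
    Self-attention expert: x_context is x_query. Cross-modal expert: x_context is the other modality."""
    attn_params, (W1, W2) = params
    h = layer_norm(x_query + multi_head_attn(x_query, x_context, attn_params, num_heads))
    return layer_norm(h + gelu(h @ W1) @ W2)

def init_params(d, rng):
    attn = tuple(rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(4))
    ffn = (rng.normal(scale=d ** -0.5, size=(d, 4 * d)),
           rng.normal(scale=(4 * d) ** -0.5, size=(4 * d, d)))
    return attn, ffn

rng = np.random.default_rng(0)
d = 32
T_tok, I_tok = rng.normal(size=(5, d)), rng.normal(size=(7, d))  # 5 text tokens, 7 image patches
e_text_self = expert(T_tok, T_tok, init_params(d, rng))
e_text_guided = expert(T_tok, I_tok, init_params(d, rng))        # Query: text; Key/Value: image
e_image_guided = expert(I_tok, T_tok, init_params(d, rng))       # Query: image; Key/Value: text
```

The output keeps the Query's sequence length, so a text-guided expert yields one vector per text token even though it attends over image patches.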

The four specific types of experts we designed are as follows:

- Text Self-Attention Experts: These experts focus on capturing the internal contextual dependencies and semantic relationships within the text modality. Their input is the projected text feature T′.

- Image Self-Attention Experts: These experts are used to capture global and local visual cues within the image modality. Their input is the projected image feature I′.

- Text-Guided Cross-Modal Experts: These experts model how textual semantics attend to visual features to identify cross-modal incongruities; their Query is derived from T′ and their Key and Value from I′.

- Image-Guided Cross-Modal Experts: Symmetrical to the previous type, these experts model how visual semantics attend to textual features to enrich their representations; their Query is derived from I′ and their Key and Value from T′.

3.3. Dynamic Fusion via Gating and Attention

To adaptively integrate the outputs of various experts based on the characteristics of different input samples, we introduce a dynamic fusion mechanism. This mechanism consists of a global Dynamic Gating Network and a final Fusion Attention Layer.

Dynamic Gating Network: We first concatenate the projected text and image features to serve as the input to the gating network:

$$z = [T'; I'].$$

We then use a lightweight multi-head self-attention layer to capture the contextual information within this fused input, followed by a Multi-Layer Perceptron (MLP) to predict a weight for each of the $N$ experts. Specifically, the MLP consists of two linear layers with a GELU activation function in between. It projects the attended input feature from its input dimension to a hidden dimension, and then to the final output dimension $N$, which corresponds to the total number of experts:

$$\tilde{z} = \mathrm{MultiHeadAttn}(z, z, z), \qquad g = \mathrm{MLP}(\tilde{z}).$$

Here, $g = [g_1, g_2, \dots, g_N] \in \mathbb{R}^N$. These weights, $g_j$, reflect the importance of each expert for the current sample.
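The gating computation can be sketched as follows. This NumPy version is a simplified illustration under several assumptions that the text does not state: $T'$ and $I'$ are treated as a two-token sequence, the attention layer uses a single head without an output projection, the attended tokens are flattened before the MLP, and a softmax normalizes the $N$ weights. The parameter names and sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def gelu(x):
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def gating_network(t_proj, i_proj, p):
    """Return one weight per expert for a single (T', I') pair."""
    z = np.stack([t_proj, i_proj])                        # (2, d): z = [T'; I'] as two tokens
    Q, K, V = z @ p["Wq"], z @ p["Wk"], z @ p["Wv"]
    z_att = softmax(Q @ K.T / np.sqrt(K.shape[-1])) @ V   # single-head self-attention over z
    hidden = gelu(z_att.reshape(-1) @ p["W1"] + p["b1"])  # flatten to 2d, then Linear + GELU
    logits = hidden @ p["W2"] + p["b2"]                   # second Linear: hidden -> N
    return softmax(logits)                                # assumption: weights sum to 1

rng = np.random.default_rng(0)
d, d_hidden, N = 16, 32, 4          # hypothetical sizes; one expert per type, so N = 4 here
p = {name: rng.normal(scale=0.1, size=(d, d)) for name in ("Wq", "Wk", "Wv")}
p.update(W1=rng.normal(scale=0.1, size=(2 * d, d_hidden)), b1=np.zeros(d_hidden),
         W2=rng.normal(scale=0.1, size=(d_hidden, N)), b2=np.zeros(N))
g = gating_network(rng.normal(size=d), rng.normal(size=d), p)
```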

Multi-Modal Fusion Strategy: Instead of directly using the weighted expert outputs for classification, we design a more robust fusion strategy, as depicted in Figure 2. First, we form a combined representation, $E$, by concatenating the weighted outputs of all experts $e_j$:

$$E = [g_1 e_1; g_2 e_2; \dots; g_N e_N].$$

To further capture high-order interactions among the different expert perspectives, we then pass $E$ through a final Fusion Attention Layer (a standard multi-head self-attention module) to obtain the final multi-modal representation, $H$, for classification:

$$H = \mathrm{MultiHeadAttn}(E, E, E).$$

This design allows the model not only to dynamically select experts but also to deeply integrate the selected information, enhancing the robustness of the representation.
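The weighted concatenation and fusion attention can be sketched in NumPy as follows. The sketch assumes that each expert output $e_j$ has been pooled to a single $d$-dimensional vector, so that $E$ is a sequence of $N$ tokens. The single-head attention and the mean-pooling logistic head are hypothetical simplifications, since the classifier on top of $H$ is not specified in the text.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def fuse_experts(expert_outputs, gates, p):
    """E = [g_1 e_1; ...; g_N e_N] stacked as N tokens, then H = SelfAttn(E)."""
    E = np.stack([g * e for g, e in zip(gates, expert_outputs)])   # (N, d)
    Q, K, V = E @ p["Wq"], E @ p["Wk"], E @ p["Wv"]
    return softmax(Q @ K.T / np.sqrt(K.shape[-1])) @ V            # (N, d): fused representation H

def sarcasm_probability(H, w, b):
    """Hypothetical head: mean-pool H, then a logistic output for P(sarcastic)."""
    return float(1.0 / (1.0 + np.exp(-(H.mean(axis=0) @ w + b))))

rng = np.random.default_rng(0)
N, d = 4, 16
expert_outputs = [rng.normal(size=d) for _ in range(N)]
gates = np.array([0.4, 0.3, 0.2, 0.1])          # e.g., produced by the gating network
p = {name: rng.normal(scale=0.1, size=(d, d)) for name in ("Wq", "Wk", "Wv")}
H = fuse_experts(expert_outputs, gates, p)
prob = sarcasm_probability(H, rng.normal(size=d), 0.0)
```

Because the gate scales each expert's token before attention, an expert with a near-zero weight contributes a near-zero value vector, while the attention layer can still mix information across the remaining experts.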

3.4. Training Objective with Negative Sampling Augmentation

The model is optimized using the standard binary cross-entropy loss, $\mathcal{L}_{\text{BCE}}$. To enhance robustness and prevent the model from relying on spurious uni-modal cues, we introduce a data augmentation strategy based on in-batch negative sampling.

The core idea is to treat randomly mismatched image-text pairs as synthetic “non-sarcastic” samples. For each batch of real training data $\{(T'_i, I'_i, y_i)\}_{i=1}^{B}$, we construct a corresponding batch of augmented samples. A synthetic sample $(T'_i, I'_k)$ is created by pairing a text feature from one sample with an image feature from a different sample within the same batch (where $k \neq i$). Drawing on the idea of contrastive learning, these augmented pairs are assumed to be inherently non-sarcastic and are thus assigned a ground-truth label of 0.
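One simple way to build such mismatched pairs inside a batch is sketched below. The text does not say how the partner index $k$ is chosen; this sketch assumes a random cyclic shift of the image features, which guarantees $k \neq i$ for every sample. The function name is hypothetical.

```python
import numpy as np

def make_mismatched_batch(text_feats, image_feats, rng):
    """Pair text i with image perm[i] != i; all augmented pairs get label 0 (non-sarcastic)."""
    batch_size = text_feats.shape[0]
    if batch_size < 2:
        raise ValueError("need at least two samples to form mismatched pairs")
    shift = int(rng.integers(1, batch_size))           # shift in [1, B-1], so perm[i] != i
    perm = (np.arange(batch_size) + shift) % batch_size
    labels = np.zeros(batch_size)
    return text_feats, image_feats[perm], labels, perm

rng = np.random.default_rng(0)
T_batch = rng.normal(size=(6, 8))                      # B = 6 projected text features
I_batch = rng.normal(size=(6, 8))                      # B = 6 projected image features
T_aug, I_aug, y_aug, perm = make_mismatched_batch(T_batch, I_batch, rng)
```

A random permutation re-drawn until it has no fixed points would also satisfy $k \neq i$ and yields more varied pairings than a single shift.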

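Both the original batch and the augmented batch are passed through the model to obtain classification predictions. The total loss, $\mathcal{L}_{\text{total}}$, is a weighted sum of the classification loss on the real data ($\mathcal{L}_{\text{real}}$) and the classification loss on the augmented data ($\mathcal{L}_{\text{aug}}$):

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{real}} + \lambda \, \mathcal{L}_{\text{aug}},$$

where $\lambda$ is a hyperparameter that balances the contribution of the augmented data. Each loss component is calculated using the binary cross-entropy formula:

$$\mathcal{L} = -\frac{1}{B}\sum_{i=1}^{B}\Big[y_i \log \hat{y}_i + (1 - y_i)\log\big(1 - \hat{y}_i\big)\Big],$$

where $\hat{y}_i$ is the predicted probability of sarcasm for the $i$-th sample, and $y_i = 0$ for every augmented sample.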

This multi-task training approach compels the model not only to correctly classify authentic sarcastic expressions, but also to recognize the lack of semantic connection in mismatched pairs, thereby learning a more robust cross-modal understanding.
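The combined objective can be written compactly in code. This NumPy sketch assumes the model already outputs sarcasm probabilities for the real and augmented batches; the clipping constant and function names are illustrative.

```python
import numpy as np

def bce(probs, labels, eps=1e-7):
    """Mean binary cross-entropy: -mean(y log p + (1 - y) log(1 - p))."""
    p = np.clip(probs, eps, 1.0 - eps)                 # avoid log(0)
    return float(-np.mean(labels * np.log(p) + (1.0 - labels) * np.log(1.0 - p)))

def total_loss(p_real, y_real, p_aug, lam):
    """L_total = L_real + lam * L_aug, with every augmented (mismatched) pair labeled 0."""
    loss_real = bce(p_real, y_real)
    loss_aug = bce(p_aug, np.zeros_like(p_aug))
    return loss_real + lam * loss_aug

p_real = np.array([0.9, 0.2])      # predicted P(sarcastic) for two real pairs
y_real = np.array([1.0, 0.0])      # their ground-truth labels
p_aug = np.array([0.5, 0.5])       # predictions for two mismatched pairs
loss = total_loss(p_real, y_real, p_aug, lam=0.5)
```

With mismatched pairs predicted at 0.5, $\mathcal{L}_{\text{aug}} = \ln 2$; pushing those predictions toward 0 drives the regularization term toward zero, which is the behavior the augmentation is meant to encourage.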

4. Experiments

4.1. Datasets

We evaluate our model on cross-dataset benchmarks that target spurious cues, which we construct from two open-source benchmark datasets for multi-modal sarcasm detection: MMSD [11] and MMSD2.0 [10]. The details of the two original datasets are summarized in Table 1. Both datasets contain paired image-text posts and are widely used in multi-modal sarcasm detection research.

Table 1. Dataset statistics for MMSD and MMSD2.0.

- MMSD is constructed from English tweets, where sarcasm is weakly labeled via hashtags such as #sarcasm. It contains numerous spurious cues, such as emojis and hashtags, which may bias the learning process. The authors provide official training, validation, and test splits.

- MMSD2.0 [10] is a refined version of MMSD in which superficial cues (e.g., emojis, hashtags) are systematically removed to better expose genuine semantic incongruity between modalities. MMSD2.0 keeps the original MMSD splits, only processing the text and correcting some inappropriate labels; consequently, no training sample of one dataset appears in the test split of the other, and the cross-dataset setups involve no data leakage.

Although MMSD2.0 significantly reduces spurious correlations, it still retains residual label and lexical biases, making generalization challenging. To facilitate a rigorous analysis of model robustness, we first establish clear definitions based on these datasets. We define “noisy” data as samples from the original MMSD dataset, which contains numerous explicit spurious cues (e.g., hashtags like #sarcasm, emojis) that are highly correlated with the labels. Conversely, we define “clean” data as samples from MMSD2.0, where these specific textual cues have been systematically removed, compelling models to rely on genuine semantic incongruity. To comprehensively evaluate model robustness in realistic scenarios, we propose two cross-dataset evaluation setups:

- MMSD3.0: Trained and validated on MMSD (noisy), tested on MMSD2.0 (clean).

- MMSD4.0: Trained and validated on MMSD2.0 (clean), tested on MMSD (noisy).

These setups simulate real-world distributional shifts in the presence of spurious cues and assess the true generalization ability of the model.

4.2. Evaluation Metrics

Following previous studies [10], we use four standard metrics to evaluate sarcasm detection performance: Accuracy, Precision, Recall, and F1 score. Among these, the F1 score is the most informative under class imbalance, as it better reflects the model’s ability to distinguish between sarcastic and non-sarcastic content.
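For reference, the four metrics can be computed as below, treating the sarcastic class (label 1) as positive. Whether binary or macro-averaged precision, recall, and F1 are used is not stated here, so the binary choice is an assumption.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 with `positive` (sarcasm) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Under class imbalance, a classifier that never predicts sarcasm can still look accurate:
always_negative = binary_metrics([0, 0, 0, 0, 1], [0, 0, 0, 0, 0])   # accuracy 0.8, F1 0.0
mixed = binary_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])             # accuracy 0.6, F1 2/3
```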

4.3. Baselines

To validate the effectiveness of the proposed MM-MoE framework, we conduct comprehensive comparisons against a diverse set of strong baseline models, categorized into three groups: text-only, image-only, and multi-modal fusion methods.

- Text-Only Modality:
  - TextCNN [43]: A Convolutional Neural Network-based model for sentence classification. It utilizes convolutional filters of varying sizes to extract n-gram features, and has shown competitive performance across various text classification tasks.
  - Bi-LSTM [44]: A bidirectional long short-term memory network that captures both past and future dependencies in text sequences. It is widely used to model contextual semantics in sentiment and sarcasm detection.
  - SMSD [45]: The Self-Matching and Low-Rank Bilinear Pooling model, specifically designed for sarcasm detection. It captures intra-sentence incongruities by computing rich word-to-word interactions, and is effective in modeling subtle ironic cues.
  - BERT [46]: A Transformer-based language representation model pre-trained on large corpora. We fine-tune BERT for sarcasm classification tasks, leveraging its strong contextual modeling ability to capture implicit sentiment conflicts within text.

- Image-Only Modality:
  - ResNet [47]: A residual Convolutional Neural Network pre-trained on ImageNet. It is used as a visual backbone to extract deep hierarchical features from input images, enabling sarcasm detection based solely on visual signals.
  - ViT [48]: Vision Transformer, which splits images into patches and applies multi-head self-attention to model long-range dependencies. It provides global visual context, and has demonstrated strong performance in many vision tasks.

- Multi-Modal Fusion Modality:
  - HFM [11]: The Hierarchical Fusion Model, one of the earliest works in multi-modal sarcasm detection. It performs multi-level fusion of text, image, and visual attribute features to improve sarcasm recognition.
  - CMGCN [13]: The Cross-Modal Graph Convolutional Network constructs a graph across textual and visual modalities, capturing intricate sarcasm-related relationships via structured cross-modal interactions.
  - DIP [23]: The Dual Incongruity Perceiving Network introduces a two-branch architecture to simultaneously detect semantic and emotional incongruities between text and image, significantly enhancing multi-modal sarcasm understanding.
  - DynRT [15]: A dynamic routing transformer network that adapts fusion pathways based on input characteristics. It aims to handle semantic mismatches by dynamically routing features through different sub-networks.
  - Multi-View CLIP [10]: A multi-perspective framework based on CLIP that integrates textual, visual, and interactional views. It aggregates complementary sarcastic cues from various perspectives for robust sarcasm detection.
  - DMSD-CL [49]: A framework for debiasing multi-modal sarcasm detection with contrastive learning. It constructs positive samples with dissimilar word biases and negative samples with similar word biases via counterfactual data augmentation, improving robustness in out-of-distribution (OOD) settings.
  - G2SAM [16]: An inference paradigm that leverages graph-based global semantic awareness for multi-modal sarcasm detection. It is presented as the first work to combine global semantic congruity with label-aware contrastive learning for multi-modal classification.
  - ESAM [25]: The Enhancing Semantic Awareness Model, which addresses feature shift through a Sentiment Consistency Constraint (SCC) and Automatic Outlier Masking (AOM), improving the model’s ability to identify sarcastic sentiment in multi-modal data.
  - GPT-4o [50]: A multi-modal large language model released by OpenAI, with strong multi-modal capabilities and advanced performance in many domains.
  - Qwen [51]: A family of large language models released by Alibaba, whose multi-modal variants achieve highly competitive performance, particularly in multi-modal processing.

4.4. Experimental Setup

To ensure a fair and reproducible comparison, all baseline models were reproduced using their official code repositories with default hyperparameters and pre-trained weights, without any modification. Both our method and the baselines were implemented using PyTorch 1.13.1 [52]. Visual and textual features were extracted from the frozen CLIP-ViT-L/14-336 encoder provided by Hugging Face [42].

Our model was trained using the AdamW optimizer [53] with an initial learning rate of , weight decay of , cosine annealing scheduler, and a batch size of 64. The training was conducted for up to 100 epochs, with early stopping based on validation F1 (patience = 10). We optimized the total loss function, which is a weighted sum of the classification loss on real data and an augmentation loss on mismatched pairs, as defined in Equation (8).
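The early-stopping rule can be sketched in a few lines of framework-agnostic Python. The class name is hypothetical and the validation F1 curve is synthetic. The optimizer and schedule are omitted here; in PyTorch they would typically be `torch.optim.AdamW` and `torch.optim.lr_scheduler.CosineAnnealingLR`.

```python
class EarlyStopping:
    """Stop when validation F1 has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best_f1 = float("-inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_f1):
        """Record one epoch's validation F1; return True when training should stop."""
        if val_f1 > self.best_f1:
            self.best_f1, self.best_epoch, self.bad_epochs = val_f1, epoch, 0
            # a real training loop would save a checkpoint of the best model here
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Synthetic validation-F1 curve: improves for 5 epochs, then plateaus.
val_f1_curve = [0.50, 0.60, 0.70, 0.75, 0.78] + [0.77] * 95
stopper = EarlyStopping(patience=10)
for epoch in range(100):                       # "up to 100 epochs"
    # ... one epoch of training and validation would run here ...
    if stopper.step(epoch, val_f1_curve[epoch]):
        break
```

Here training stops at epoch 14 (zero-based), ten epochs after the best validation F1 at epoch 4.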

All experiments were performed on a single NVIDIA RTX 4090 GPU. Each result is averaged over ten random seeds; we report the mean and standard deviation.

To assess the statistical significance of the performance improvements, we conducted two-tailed paired t-tests on the F1 score between our model and each baseline model, with the significance level set at α = 0.05.
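A paired t-test of this kind can be sketched with the Python standard library. The sketch assumes that the pairs are per-seed F1 scores of two models (the pairing unit is not stated in the text) and uses made-up scores. With ten pairs there are 9 degrees of freedom, for which the two-tailed critical value at α = 0.05 is about 2.262; `scipy.stats.ttest_rel` computes the same statistic together with an exact p-value.

```python
import math
import statistics

def paired_t_statistic(scores_a, scores_b):
    """t = mean(d) / (stdev(d) / sqrt(n)) for paired differences d_i = a_i - b_i."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1                                   # statistic and degrees of freedom

T_CRIT_TWO_TAILED_DF9 = 2.262   # Student's t, alpha = 0.05 two-tailed, 9 degrees of freedom

# Made-up per-seed F1 scores for two models (ten seeds), for illustration only.
model_a = [0.81, 0.83, 0.80, 0.82, 0.84, 0.81, 0.83, 0.82, 0.80, 0.84]
model_b = [0.78, 0.79, 0.78, 0.80, 0.79, 0.78, 0.80, 0.79, 0.77, 0.80]
t_stat, dof = paired_t_statistic(model_a, model_b)
significant = dof == 9 and abs(t_stat) > T_CRIT_TWO_TAILED_DF9
```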

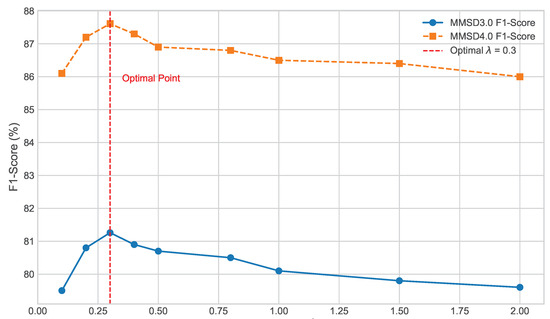

4.5. Hyperparameter Analysis

To validate our parameter choices, we performed a hyperparameter analysis focusing on the most critical component of our training objective: the weighting coefficient $\lambda$ for the augmentation loss, as defined in Equation (8). This hyperparameter controls the influence of the regularization term derived from in-batch negative sampling. We varied $\lambda$ from 0.1 to 2.0; Figure 3 shows the resulting F1 scores and the value of $\lambda$ at which the model performs best. This setting strikes the best balance between the primary classification objective on authentic pairs ($\mathcal{L}_{\text{real}}$) and the regularization task of identifying mismatched pairs ($\mathcal{L}_{\text{aug}}$), effectively guiding the model to learn a robust cross-modal understanding without destabilizing the training process. This empirically validated setting was used for all of the main experiments.

Figure 3. Effect of the augmentation loss weight on the F1 score for MMSD3.0 and MMSD4.0.

4.6. Results and Analysis

To comprehensively evaluate the robustness and generalization capabilities of our proposed model, we conducted extensive experiments on two challenging cross-dataset benchmarks, MMSD3.0 and MMSD4.0. The detailed performance comparison against a wide range of baseline models is presented in Table 2.

Table 2. Experimental results of different types of baseline models on the MMSD3.0 and MMSD4.0 datasets. The best results are highlighted in bold. Performance is reported as mean ± std (%) over ten runs.

Overall, MM-MoE achieves state-of-the-art performance on both benchmarks across all evaluation metrics (Accuracy, Precision, Recall, and F1 score). This consistently superior performance validates the effectiveness of our adaptive multi-modal fusion framework based on the Mixture-of-Experts architecture.

- Performance on MMSD3.0 (Train on Noisy, Test on Clean): This benchmark is particularly challenging, as it directly assesses a model’s ability to learn genuine semantic incongruity from a training set rife with spurious cues (e.g., hashtags, emojis) and to generalize to a clean test set devoid of them. As shown in the table, our model obtains an accuracy of 81.61% and an F1 score of 81.26%. This result significantly surpasses that of the next-best model, Multi-View CLIP, which scores an F1 of 78.07%. The improvement of 3.19 percentage points in F1 score is not only substantial in magnitude but also statistically significant (p < 0.05, paired t-test), which highlights our model’s ability to mitigate the influence of spurious correlations. In contrast, several baseline models, such as DynRT, exhibit a dramatic performance collapse on this task, with an F1 score of only 55.95%, indicating their heavy reliance on superficial cues and their failure to generalize.

- Performance on MMSD4.0 (Train on Clean, Test on Noisy): This setting evaluates the model’s robustness when faced with distracting spurious cues at test time, after having been trained on a clean dataset. Our model again demonstrates its superiority, achieving the top accuracy of 87.21% and an F1 score of 87.61%. This represents an F1 margin of 2.91 and 2.62 percentage points over other strong multi-modal methods, DIP (F1 of 84.70%) and Multi-View CLIP (F1 of 84.99%), respectively. Crucially, the gap between our model and the strongest baseline (Multi-View CLIP) is statistically significant (p < 0.05, paired t-test). This suggests that, by learning from clean data, our model builds a robust understanding of sarcasm that is not easily perturbed by the reintroduction of noisy signals. The dynamic gating mechanism in our framework likely plays a key role by adaptively focusing on meaningful cross-modal interactions while down-weighting potentially misleading uni-modal cues.

- Overall Observations: A key trend across nearly all models is that performance on MMSD4.0 is considerably higher than on MMSD3.0. Because the two settings are evaluated on different test sets, this comparison is indicative rather than controlled; nevertheless, it suggests that generalizing from a noisy training distribution is a harder problem than being robust to noise in the test distribution. It underscores the importance of clean training data or, in its absence, of models like ours that are explicitly designed to handle spurious correlations. Furthermore, multi-modal models consistently outperform their uni-modal counterparts (Text-only and Image-only methods), reaffirming the need to leverage information from both modalities for reliable sarcasm detection. Taken together, these results support the effectiveness, generalization ability, and robustness of our proposed MM-MoE model.
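
Because the significance claims above compare two models over repeated runs, the relevant check is a paired t-test. The sketch below computes the paired t statistic with only the Python standard library and compares it against the two-sided 5% critical value for 9 degrees of freedom (about 2.262). It assumes the ten-run protocol used for Tables 3 and 4 and that run i of each model is paired (for example, by random seed); the function names are illustrative, and the per-run scores behind the reported p-values are not reproduced here.

```python
import math
import statistics

# Two-sided critical value of Student's t for alpha = 0.05 and 9 degrees of
# freedom (10 paired runs), taken from a standard t table.
T_CRIT_ALPHA05_DF9 = 2.262


def paired_t_statistic(scores_a, scores_b):
    """Paired t statistic for two equal-length lists of per-run scores.

    Run i of model A is assumed to be paired with run i of model B
    (e.g., trained with the same random seed).
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences are constant; t statistic is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


def significant_two_sided(scores_a, scores_b):
    """Two-sided paired t-test at alpha = 0.05; critical value assumes 10 runs."""
    if len(scores_a) != 10:
        raise ValueError("critical value is hard-coded for 10 paired runs")
    return abs(paired_t_statistic(scores_a, scores_b)) > T_CRIT_ALPHA05_DF9
```

In practice, `scipy.stats.ttest_rel(a, b)` performs the same paired test and returns an exact p-value instead of a table lookup.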

4.7. Ablation Study

To investigate the individual contributions of the key components within our MM-MoE framework, we conducted a series of ablation studies on both the MMSD3.0 and MMSD4.0 datasets. We systematically removed one component at a time from our full model (termed BASELINE) and observed the impact on performance. The results are detailed in Table 3. To keep the table concise, we use the following abbreviations to denote the removed modules: w/o Text_Self and w/o Image_Self refer to the Text and Image Self-Attention Experts; w/o Cross_Text and w/o Cross_Image denote the Text-Guided and Image-Guided Cross-Modal Experts; w/o Gating_network signifies the Dynamic Gating Network; and w/o Aug indicates the removal of the augmentation loss term. Finally, w/o All_Cross denotes the joint removal of all cross-modal experts.
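
The leave-one-out protocol above can be expressed as a set of configuration variants. The sketch below is a hypothetical illustration: the flag names mirror the labels in Table 3, but the configuration format, and what the model does in place of a removed component (for example, uniform averaging of experts when the gating network is removed), are assumptions not specified in this section.

```python
# Hypothetical component switches; names mirror the ablation labels in Table 3.
FULL_MODEL = {
    "Text_Self": True,       # text self-attention experts
    "Image_Self": True,      # image self-attention experts
    "Cross_Text": True,      # text-guided cross-modal experts
    "Cross_Image": True,     # image-guided cross-modal experts
    "Gating_network": True,  # dynamic gating network
    "Aug": True,             # augmentation loss term
}


def ablation_variants(full=FULL_MODEL):
    """Yield (label, config) pairs for the full model and each ablation."""
    yield "BASELINE", dict(full)
    for name in full:  # remove one component at a time
        cfg = dict(full)
        cfg[name] = False
        yield f"w/o {name}", cfg
    cfg = dict(full)  # joint removal of both cross-modal expert groups
    cfg["Cross_Text"] = cfg["Cross_Image"] = False
    yield "w/o All_Cross", cfg


for label, cfg in ablation_variants():
    print(label, [k for k, on in cfg.items() if not on])
```

Each variant would then be trained and evaluated with the same ten-run protocol as the full model, so that the differences in Table 3 isolate the removed component.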

Table 3.

Ablation study results on MMSD3.0 and MMSD4.0. Performance is reported as mean ± std (%) over ten runs. BASELINE refers to our full proposed model without any components removed.

The experimental results clearly demonstrate that all proposed components are integral to the model’s final performance, as their removal invariably leads to a decrease in both F1 score and accuracy. We can draw several key insights from these results:

- The Importance of Core Mechanisms: The two most critical components of our framework are the augmentation loss (Aug) and the dynamic gating network (Gating_network). Removing the augmentation loss (w/o Aug) causes the largest drop among the single-component removals, with the F1 score decreasing by 4.46 percentage points on MMSD3.0 and 3.59 percentage points on MMSD4.0. This underscores the vital role of the negative sampling strategy in forcing the model to learn genuine cross-modal semantic alignment, which is essential for generalizing beyond spurious cues. Similarly, removing the gating network (w/o Gating_network) also causes a significant decline, indicating that the ability to dynamically weight and select experts based on the input context is crucial for robust fusion.

- The Necessity of Cross-Modal Interaction: Removing either the text-guided (w/o Cross_Text) or the image-guided (w/o Cross_Image) cross-modal experts leads to a notable drop in performance. This is expected, as sarcasm often arises from the incongruity between modalities, and it confirms that explicitly modeling these cross-modal relationships is a cornerstone of effective sarcasm detection. Their collective importance is even clearer when all cross-modal experts are removed at once (w/o All_Cross): as detailed in Table 3, this causes the largest performance drop among all expert ablations, indicating that the ability to capture inter-modal incongruity is central to the framework.

- The Role of Uni-Modal Understanding: The ablation of uni-modal self-attention experts (w/o Text_Self and w/o Image_Self) also negatively impacts the results. This indicates that a thorough understanding of each modality’s internal context is a necessary prerequisite for subsequent, more complex cross-modal reasoning. Interestingly, the performance drop from removing the image self-attention expert (w/o Image_Self) is the least pronounced on the MMSD3.0 task. This suggests that, when trained on the noisy MMSD dataset, where textual cues might be more dominant or misleading, the model learns to rely less heavily on intra-modal visual information for generalization.
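
The ablation attributes much of the gain to negative sampling in the augmentation loss. The exact form of that loss is not given in this section, so the sketch below swaps in a generic, widely used alternative: an InfoNCE-style contrastive loss with in-batch negatives, where each text is scored against its own image (positive) and the other images in the batch (negatives). The function names, the cosine similarity, and the temperature of 0.07 are illustrative assumptions, not MM-MoE’s implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0 or nv == 0:
        raise ValueError("zero vector has no direction")
    return dot / (nu * nv)


def in_batch_contrastive_loss(text_embs, image_embs, temperature=0.07):
    """Mean InfoNCE loss in the text -> image direction.

    Row i of text_embs is paired with row i of image_embs (the positive);
    every other image in the batch acts as a negative sample.
    """
    n = len(text_embs)
    total = 0.0
    for i in range(n):
        logits = [cosine(text_embs[i], image_embs[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for numerical stability
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]  # -log softmax probability of the positive
    return total / n
```

With correctly aligned pairs the loss approaches zero; shuffling the images so that every text is paired with the wrong image raises it sharply, which is the signal that pushes the encoders toward genuine cross-modal alignment.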

In summary, the ablation study systematically validates our design choices. Each component, from the specific expert types to the overarching augmentation strategy and dynamic gating mechanism, contributes meaningfully to the model’s ability to robustly detect sarcasm, especially in challenging cross-dataset scenarios.

4.8. Performance on Standard Benchmarks

To offer a more holistic evaluation of MM-MoE beyond its robustness on our constructed cross-dataset benchmarks (MMSD3.0 and MMSD4.0), we also conducted an in-domain evaluation on the two widely used standard benchmarks, MMSD and MMSD2.0. This experiment directly compares MM-MoE against existing state-of-the-art (SOTA) models in the conventional in-domain setting. The detailed results are presented in Table 4.

Table 4.

Performance comparison on the original MMSD and MMSD2.0 test sets. The best results are highlighted in bold. Performance is reported as mean ± std (%) over ten runs.

- Analysis of MMSD: The MMSD dataset, known to be “noisy” due to its inclusion of numerous spurious cues like hashtags (for example, #sarcasm) and emojis, poses a unique challenge. As observed in Table 4, our MM-MoE model achieves a comprehensive lead on this dataset, with an Accuracy of 91.12% and an F1 score of 90.63%. Both key metrics surpass all existing baselines, demonstrating that, even in an environment with superficial shortcuts, the Mixture-of-Experts architecture and dynamic fusion mechanism of MM-MoE can efficiently learn and integrate multi-modal information to achieve superior sarcasm detection.

- Analysis of MMSD2.0: The MMSD2.0 dataset, a refined version of MMSD with spurious cues removed, provides a more authentic test of a model’s ability to capture semantic incongruity. On this “clean” dataset, our MM-MoE model again achieves SOTA performance, with an F1 score of 85.87% and an accuracy of 85.89%. MM-MoE maintains a clear advantage over competing methods such as G2SAM (F1 of 74.93%) and ESAM (F1 of 84.56%), leading the latter by 1.31 percentage points in F1. This indicates that our model’s performance is not driven by spurious cues, but rather by a deeper understanding of the semantic relationships between text and images.

- Observation on Performance Shift from MMSD to MMSD2.0: A critical observation is that nearly all models, including MM-MoE, experience a performance drop when moving from MMSD to MMSD2.0. This is consistent with the core motivation of our study: many models inadvertently learn to depend on spurious correlations. For instance, the DIP model’s F1 score falls sharply from 86.18% to 77.94%, indicating a strong reliance on such cues. In contrast, although MM-MoE’s performance also declines (F1 from 90.63% to 85.87%), it remains the top-performing model after the removal of spurious cues, which suggests that its design is less dependent on them. The dynamic gating network and the augmentation loss objective likely contribute to this robustness, enabling the model to adaptively focus on genuine cross-modal conflicts when obvious cues are absent.

In summary, these in-domain results complement our cross-domain findings. MM-MoE not only excels at generalization tasks involving distribution shifts (MMSD3.0 and MMSD4.0), but also sets a new performance benchmark in standard in-domain evaluations, supporting its effectiveness and robustness as a framework for multi-modal sarcasm detection.
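
The contrast between DIP and MM-MoE can be made concrete with the F1 scores quoted above. The short calculation below computes each model’s absolute drop (in percentage points) and relative drop from MMSD to MMSD2.0; it uses only the numbers stated in this subsection, and the helper name is illustrative.

```python
# F1 scores (%) on the MMSD and MMSD2.0 test sets, as quoted in the text.
F1 = {
    "DIP": {"MMSD": 86.18, "MMSD2.0": 77.94},
    "MM-MoE": {"MMSD": 90.63, "MMSD2.0": 85.87},
}


def f1_drop(scores):
    """Absolute drop (percentage points) and relative drop (%) from MMSD to MMSD2.0."""
    absolute = scores["MMSD"] - scores["MMSD2.0"]
    relative = 100.0 * absolute / scores["MMSD"]
    return round(absolute, 2), round(relative, 2)


for model, scores in F1.items():
    print(model, f1_drop(scores))
# DIP (8.24, 9.56)
# MM-MoE (4.76, 5.25)
```

DIP loses roughly 9.6% of its MMSD F1 score, whereas MM-MoE loses about 5.3%.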

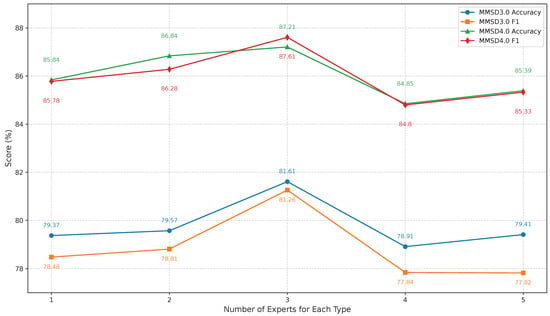

4.9. Impact of Expert Number

To determine the optimal number of experts for our MM-MoE framework, we experimented with varying the number of experts and evaluated the model’s performance on both MMSD3.0 and MMSD4.0 datasets. The results of this experiment are summarized in Figure 4.

Figure 4.

Performance analysis with varying numbers of experts for each of the four expert types on MMSD3.0 and MMSD4.0.

As illustrated in the figure, the model’s performance is sensitive to the number of experts employed. We observe a clear trend: the F1 score and accuracy on both datasets initially increase as the number of experts grows from one to three. Specifically, on MMSD3.0, the F1 score increases from 78.48% (one expert) to a peak of 81.26% (three experts). A similar pattern is evident on MMSD4.0, where the F1 score improves from 85.78% to a maximum of 87.61% with three experts. This initial improvement suggests that increasing the number of experts enhances the model’s capacity and provides a richer set of specialized functions. With more experts, the gating network has more diverse options to choose from, enabling it to learn more fine-grained and effective routing strategies for different samples.

However, increasing the number of experts beyond three leads to a noticeable decline in performance. For instance, going from three to four experts causes the F1 score to drop by 3.42 percentage points on MMSD3.0 and 2.81 percentage points on MMSD4.0. This degradation suggests that an overly complex model with too many experts may overfit the training data, especially given the size of the training sets. A larger number of experts can also make the optimization of the gating network more challenging, potentially resulting in suboptimal expert combinations and harming the model’s generalization ability.

Based on these empirical results, we conclude that setting the number of experts to three provides the best trade-off between model capacity and generalization performance. Therefore, we adopted this configuration for all other experiments reported in this paper.
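
The selection rule described above amounts to a small argmax. The sketch below uses only the F1 values stated in this subsection (the four-expert entries are derived from the reported drops of 3.42 and 2.81 points); results for other expert counts appear only in Figure 4 and are omitted. Ranking by the mean F1 across both benchmarks is an assumption; with these values, three experts is also best on each benchmark individually.

```python
# F1 (%) per number of experts per type, using only values stated in this section.
# The four-expert entries are derived from the reported drops (3.42 and 2.81 points).
F1_BY_EXPERTS = {
    1: {"MMSD3.0": 78.48, "MMSD4.0": 85.78},
    3: {"MMSD3.0": 81.26, "MMSD4.0": 87.61},
    4: {"MMSD3.0": 81.26 - 3.42, "MMSD4.0": 87.61 - 2.81},
}


def best_expert_count(results):
    """Return the expert count with the highest mean F1 across benchmarks."""
    return max(results, key=lambda k: sum(results[k].values()) / len(results[k]))


print(best_expert_count(F1_BY_EXPERTS))  # 3
```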

4.10. Computational Efficiency Analysis and Occam’s Razor

In machine learning, the principle of Occam’s Razor [54] suggests that among competing hypotheses that predict equally well, the one with the fewest assumptions or the simplest structure should be selected. In the context of deep learning, the complexity of the model is often correlated with the number of trainable parameters. A model that achieves high performance with fewer parameters is not only more computationally efficient, but is often considered more robust and better at generalization. This section analyzes our proposed MM-MoE model from this perspective.

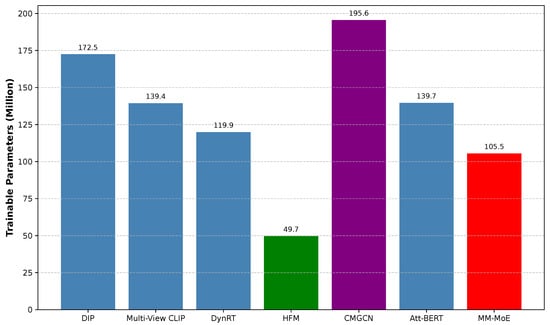

As presented in Figure 5, our MM-MoE model, with approximately 105.5 million trainable parameters, embodies this principle of simplicity and efficiency. This marks a significant reduction in complexity compared to many other high-performing multi-modal methods. For instance, our model is considerably more lightweight than complex architectures like CMGCN (195.6 M) and DIP (172.5 M). It also maintains a smaller parameter footprint than other strong baselines like Multi-View CLIP (139.4 M) and Att-BERT (139.7 M).
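
The relative savings behind this comparison follow directly from the counts in Figure 5, and the sketch below reproduces that arithmetic; the dictionary simply restates the reported numbers. In PyTorch, such counts are typically obtained with `sum(p.numel() for p in model.parameters() if p.requires_grad)`; whether the counts in Figure 5 were computed this way is an assumption.

```python
# Trainable parameters in millions, as reported in Figure 5.
PARAMS_M = {
    "MM-MoE": 105.5,
    "CMGCN": 195.6,
    "DIP": 172.5,
    "Att-BERT": 139.7,
    "Multi-View CLIP": 139.4,
}


def reduction_vs(baseline, ours="MM-MoE", params=PARAMS_M):
    """Percentage of trainable parameters saved by `ours` relative to `baseline`."""
    return round(100.0 * (1.0 - params[ours] / params[baseline]), 1)


for name in ("CMGCN", "DIP", "Att-BERT", "Multi-View CLIP"):
    print(f"{name}: {reduction_vs(name)}% fewer parameters")
# CMGCN: 46.1% fewer parameters
# DIP: 38.8% fewer parameters
# Att-BERT: 24.5% fewer parameters
# Multi-View CLIP: 24.3% fewer parameters
```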

Figure 5.

Comparison of trainable parameters across models.

The Mixture-of-Experts architecture adds a further efficiency benefit. While the model contains a pool of specialized expert modules, the dynamic gating network ensures that only a sparse subset of these experts is activated for any given input. This reduces the computation performed per input rather than the number of trainable parameters reported in Figure 5, which includes every expert. The sparse activation paradigm allows MM-MoE to maintain high model capacity and the flexibility to handle diverse inputs without incurring the full computational cost of a single, monolithic dense model of similar size. The model is large in capacity, but light in execution.
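
The difference between dense and sparse gating can be illustrated in a few lines. The sketch below contrasts dense softmax gating with top-k gating, a standard sparse-routing scheme (Switch Transformers, for instance, route each token to a single expert). Whether MM-MoE truncates its gate to the top k experts, and with which k, is not stated in this section; the experts and gate here are placeholder callables, and all names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gate_weights(logits, top_k=None):
    """Turn gate logits into mixture weights.

    top_k=None: dense softmax gating (all experts weighted).
    top_k=k:    keep only the k largest logits and renormalize over them;
                all other experts get weight 0 and need not be evaluated.
    """
    if top_k is None or top_k >= len(logits):
        return softmax(logits)
    keep = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    kept_weights = softmax([logits[i] for i in keep])
    weights = [0.0] * len(logits)
    for i, w in zip(keep, kept_weights):
        weights[i] = w
    return weights


def moe_forward(x, experts, gate, top_k=None):
    """Weighted sum of expert outputs; zero-weight experts are never called."""
    weights = gate_weights(gate(x), top_k)
    out = None
    for w, expert in zip(weights, experts):
        if w == 0.0:
            continue  # sparse activation: skip this expert's computation
        y = [w * v for v in expert(x)]
        out = y if out is None else [a + b for a, b in zip(out, y)]
    return out, weights
```

In both modes, the parameters of every expert still count toward the trainable-parameter total; only the computation performed per input changes.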

In conclusion, our MM-MoE framework illustrates the application of Occam’s Razor. It not only achieves state-of-the-art performance in robust sarcasm detection, but does so with fewer trainable parameters than the multi-modal baselines discussed above. This balance between high performance and computational feasibility makes MM-MoE a practical solution for multi-modal sarcasm detection.

4.11. Qualitative Analysis and Visualization

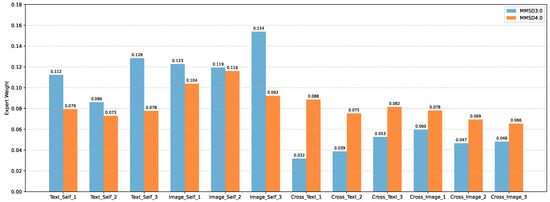

To gain a deeper, more intuitive understanding of MM-MoE’s internal mechanisms, we visualized the average activation weights assigned by the dynamic gating network to each expert. This analysis allows us to “look inside the black box” and observe how the model adapts its strategy when confronted with different distributions of spurious cues. We compare two challenging settings: MMSD3.0 (training on a noisy dataset, testing on a clean one) and MMSD4.0 (training on a clean dataset, testing on a noisy one). The distinct behavioral patterns, shown in Figure 6, provide compelling evidence for our model’s adaptive reasoning capabilities.

Figure 6.

Comparison of all expert weights on MMSD3.0 and MMSD4.0.
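
The averaged gate weights in Figure 6 can be obtained by collecting the gate’s weight vector for every test sample and taking per-expert means. The sketch below assumes three experts for each of the four expert types (the configuration selected in Section 4.9) and labels them in the style of the name Image_Self_3 that appears in the analysis that follows; these labels, the function names, and the input format are illustrative assumptions.

```python
# Hypothetical expert labels: four expert types with three experts each.
EXPERT_TYPES = ("Text_Self", "Image_Self", "Cross_Text", "Cross_Image")
EXPERT_NAMES = [f"{t}_{i}" for t in EXPERT_TYPES for i in range(1, 4)]


def average_gate_weights(per_sample_weights, names=EXPERT_NAMES):
    """Average the gate's weight vectors over a set of samples.

    per_sample_weights: one list of len(names) weights per test sample.
    Returns a dict mapping expert name -> mean weight.
    """
    n = len(per_sample_weights)
    if n == 0:
        raise ValueError("no samples")
    sums = [0.0] * len(names)
    for weights in per_sample_weights:
        if len(weights) != len(names):
            raise ValueError("weight vector length does not match expert count")
        for j, w in enumerate(weights):
            sums[j] += w
    return {name: s / n for name, s in zip(names, sums)}


def average_by_type(avg, types=EXPERT_TYPES):
    """Sum the per-expert averages within each expert type."""
    return {t: sum(v for k, v in avg.items() if k.startswith(t + "_")) for t in types}
```

Comparing `average_by_type` across the two settings gives a compact view of whether uni-modal or cross-modal experts dominate.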

- Analysis of MMSD3.0 (Train Noisy → Test Clean): When the model is trained on the noisy MMSD dataset, which is rife with spurious correlations (e.g., hashtags, emojis), it learns a “defensive” strategy. The dynamic gate assigns significantly higher weights to uni-modal experts, particularly Image_Self_3 (average weight 0.154), while suppressing the cross-modal experts. This behavior suggests that, during training, the model finds that relying on the relationship between modalities can be misleading due to noise. Instead, it learns to distrust cross-modal signals and latches onto patterns within a single modality that look reliable on the training data but are superficial, using them as predictive shortcuts. Such reliance on isolated features is a classic symptom of models exposed to spurious cues; while it reflects adaptability during training, it also helps explain why generalizing from a noisy dataset is fundamentally challenging.

- Analysis of MMSD4.0 (Train Clean → Test Noisy): In stark contrast, when MM-MoE is trained on the clean MMSD2.0 dataset, it is forced to learn the genuine semantic and emotional incongruity that defines sarcasm. Having built this “semantically grounded” understanding, its behavior at test time on noisy data is revealing. As shown in Figure 6, the model assigns substantially higher weights to the cross-modal experts (Cross_Text and Cross_Image) than in the MMSD3.0 setting. This shift is crucial: when faced with distracting signals within the text or image, the model concentrates on the interaction between the modalities. In effect, it learns to “cut through the noise” by prioritizing the cross-modal relationship, which is the most reliable path to identifying true sarcastic incongruity. This pattern reflects an input-dependent adaptation rather than a static fusion strategy, and it provides further evidence of the model’s robustness.

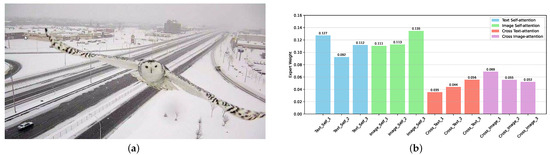

- Case Study on Nuanced Sarcasm: To provide a more granular insight beyond average weights, we present a case study on the sarcastic example shown in Figure 7. The input consists of an image of a snowy owl (Figure 7a) and the text: “snowy owl came too close to traffic camera and he is being ticketed for not reading the signs in #french”. Here, the sarcasm arises not from a direct visual-semantic conflict, but from a commonsense violation. Interestingly, the expert weight distribution in Figure 7b reveals that the gating network assigns the highest weights to uni-modal experts (both Image Self-attention and Text Self-attention) and relatively low weights to the cross-modal experts. One plausible interpretation is that the model dedicates its resources to understanding the image’s real-world context and the text’s internal absurdity independently, and that these strong uni-modal representations are what allow the higher-level, commonsense incongruity in their combination to be detected. This case illustrates the model’s flexibility in handling nuanced sarcasm, where robust uni-modal understanding is a prerequisite for revealing the irony.

Figure 7. A case study on a sarcastic example. (a) The image of an image-text pair that conveys sarcasm by violating common sense. (b) The corresponding expert weights assigned by the dynamic gating network for this sample, indicating a focus on uni-modal understanding.

5. Conclusions and Future Work

This paper addresses the critical challenge of robust multi-modal sarcasm detection, where models often fail by relying on spurious cues rather than understanding true semantic incongruity. We introduced MM-MoE, a novel Mixture-of-Experts framework designed to overcome this limitation. At its core, MM-MoE employs a synergistic approach: a global dynamic gating mechanism adaptively routes multi-modal inputs to specialized experts (uni-modal and cross-modal), while a regularization loss based on negative sampling explicitly guides the model to learn genuine cross-modal alignment. To rigorously evaluate this capability, we proposed a new cross-dataset evaluation protocol, instantiated as the MMSD3.0 and MMSD4.0 benchmarks, which simulate real-world distributional shifts. Extensive experiments demonstrate that MM-MoE achieves state-of-the-art performance, significantly outperforming existing models both when trained on noisy data and when tested on it, which supports its superior robustness.

Despite these advances, we acknowledge several limitations in our current work, which in turn point to promising directions for future research.

First, the most significant limitation lies in the model’s reliance on self-contained information. Our framework, like most existing methods, operates as a closed system and lacks access to external commonsense knowledge. This restricts its ability to comprehend sarcasm that requires a deep understanding of real-world context or cultural nuances. For instance, identifying the irony in a statement like “Enjoying my spacious new mansion” paired with an image of a cramped studio apartment requires knowledge beyond the text–image pair itself. A promising future direction is to integrate external knowledge sources, such as knowledge graphs or Large Language Models (LLMs), to equip the model with the commonsense reasoning capabilities necessary for these more complex instances.

Second, the scope of generalization, while a core focus of this work, can be further expanded. Our evaluation is primarily conducted on the Twitter-originated MMSD dataset family. The model’s performance on other domains with distinct linguistic styles and content formats, such as product reviews, news forums, or other social media platforms, remains an open question. Furthermore, our analysis of robustness is centered on distributional shifts caused by spurious cues. Another critical dimension, adversarial robustness, has not been explored. Investigating the model’s resilience to subtle, maliciously crafted perturbations in text or images would be a crucial step towards developing more secure and reliable systems.

Finally, while our visualization of expert weights offers a valuable glimpse into the model’s decision-making process at a modular level, the internal workings of each expert remain a “black box”. Future work could pursue a more fine-grained interpretability, aiming to pinpoint which specific textual words and visual regions contribute most significantly to the detection of semantic conflict. This would not only enhance the transparency of the model, but also provide deeper insights into the nature of multi-modal sarcasm itself.

To facilitate these future endeavors, our code, data setups, and experimental configurations will be made publicly available.

Author Contributions

Conceptualization, G.Z., L.L. and J.Z.; Methodology, G.Z. and L.L.; Validation, G.Z.; Formal Analysis, Y.Z. and X.Y.; Resources, X.Y., L.L. and J.Z.; Data Curation, X.Y.; Writing—Original Draft Preparation, G.Z.; Writing—Review and Editing, Y.Z., L.L. and J.Z.; Supervision, L.L. and J.Z.; Project Administration, L.L. and J.Z.; Funding Acquisition, X.Y. and L.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Strategic Priority Research Program of Chinese Academy of Sciences under Grant XDA0480301; the Informatization Project, Chinese Academy of Sciences Grant CAS-wx2022gc0304; Youth Fund of the Computer Network Information Center, Chinese Academy of Sciences (Grant No. 25YF04).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tiwari, D.; Kanojia, D.; Ray, A.; Nunna, A.; Bhattacharyya, P. Predict and Use: Harnessing Predicted Gaze to Improve Multimodal Sarcasm Detection. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; pp. 15933–15948.

- Maynard, D.G.; Greenwood, M.A. Who cares about sarcastic tweets? Investigating the impact of sarcasm on sentiment analysis. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14), Reykjavik, Iceland, 26–31 May 2014; European Language Resources Association (ELRA): Reykjavik, Iceland, 2014; pp. 4238–4243.

- Li, F.; Si, X.; Tang, S.; Wang, D.; Han, K.; Han, B.; Zhou, G.; Song, Y.; Chen, H. Contextual Distillation Model for Diversified Recommendation. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 5307–5316.

- Frenda, S. The role of sarcasm in hate speech. A multilingual perspective. In Proceedings of the Doctoral Symposium of the XXXIV International Conference of the Spanish Society for Natural Language Processing (SEPLN 2018), Seville, Spain, 18–21 September 2018; Lloret, E., Saquete, E., Martínez-Barco, P., Moreno, I., Eds.; pp. 13–17.

- Rothermich, K.; Ogunlana, A.; Jaworska, N. Change in humor and sarcasm use based on anxiety and depression symptom severity during the COVID-19 pandemic. J. Psychiatr. Res. 2021, 140, 95–100.

- Das, R.; Singh, T.D. Multimodal sentiment analysis: A survey of methods, trends, and challenges. ACM Comput. Surv. 2023, 55, 1–38.

- Alevizopoulou, S.; Koloveas, P.; Tryfonopoulos, C.; Raftopoulou, P. Social Media Monitoring for IoT Cyber-Threats. In Proceedings of the 2021 IEEE International Conference on Cyber Security and Resilience (CSR), Virtual Event, Rhodes, Greece, 26–28 July 2021; pp. 436–441.

- Farabi, S.; Ranasinghe, T.; Kanojia, D.; Kong, Y.; Zampieri, M. A Survey of Multimodal Sarcasm Detection. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, IJCAI-24, Jeju, Republic of Korea, 3–9 August 2024; Larson, K., Ed.; pp. 8020–8028.

- Dutta, P.; Bhattacharyya, C.K. Multi-Modal Sarcasm Detection in Social Networks: A Comparative Review. In Proceedings of the 2022 6th International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 29–31 March 2022; pp. 207–214.

- Qin, L.; Huang, S.; Chen, Q.; Cai, C.; Zhang, Y.; Liang, B.; Che, W.; Xu, R. MMSD2.0: Towards a Reliable Multi-modal Sarcasm Detection System. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2023, Toronto, ON, Canada, 9–14 July 2023; pp. 10834–10845.

- Cai, Y.; Cai, H.; Wan, X. Multi-Modal Sarcasm Detection in Twitter with Hierarchical Fusion Model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D., Màrquez, L., Eds.; pp. 2506–2515.

- Pan, H.; Lin, Z.; Fu, P.; Qi, Y.; Wang, W. Modeling Intra and Inter-modality Incongruity for Multi-Modal Sarcasm Detection. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; Cohn, T., He, Y., Liu, Y., Eds.; pp. 1383–1392.

- Liang, B.; Lou, C.; Li, X.; Yang, M.; Gui, L.; He, Y.; Pei, W.; Xu, R. Multi-Modal Sarcasm Detection via Cross-Modal Graph Convolutional Network. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; Muresan, S., Nakov, P., Villavicencio, A., Eds.; pp. 1767–1777.

- Liu, H.; Wang, W.; Li, H. Towards Multi-Modal Sarcasm Detection via Hierarchical Congruity Modeling with Knowledge Enhancement. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; pp. 4995–5006.

- Tian, Y.; Xu, N.; Zhang, R.; Mao, W. Dynamic Routing Transformer Network for Multimodal Sarcasm Detection. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Volume 1: Long Papers, pp. 2468–2480.

- Wei, Y.; Yuan, S.; Zhou, H.; Wang, L.; Yan, Z.; Yang, R.; Chen, M. G2SAM: Graph-Based Global Semantic Awareness Method for Multimodal Sarcasm Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 9151–9159.

- Khodak, M.; Saunshi, N.; Vodrahalli, K. A Large Self-Annotated Corpus for Sarcasm. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018; Calzolari, N., Choukri, K., Cieri, C., Declerck, T., Goggi, S., Hasida, K., Isahara, H., Maegaard, B., Mariani, J., Mazo, H., et al., Eds.

- Oprea, S.; Magdy, W. iSarcasm: A Dataset of Intended Sarcasm. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J., Eds.; pp. 1279–1289.

- Hazarika, D.; Poria, S.; Gorantla, S.; Cambria, E.; Zimmermann, R.; Mihalcea, R. CASCADE: Contextual Sarcasm Detection in Online Discussion Forums. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; Bender, E.M., Derczynski, L., Isabelle, P., Eds.; pp. 1837–1848.

- Liu, Y.; Wang, Y.; Sun, A.; Meng, X.; Li, J.; Guo, J. A Dual-Channel Framework for Sarcasm Recognition by Detecting Sentiment Conflict. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2022, Seattle, WA, USA, 10–15 July 2022; Carpuat, M., de Marneffe, M.C., Meza Ruiz, I.V., Eds.; pp. 1670–1680.

- Schifanella, R.; de Juan, P.; Tetreault, J.; Cao, L. Detecting Sarcasm in Multimodal Social Platforms. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 1136–1145.

- Liang, B.; Lou, C.; Li, X.; Gui, L.; Yang, M.; Xu, R. Multi-Modal Sarcasm Detection with Interactive In-Modal and Cross-Modal Graphs. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 4707–4715.

- Wen, C.; Jia, G.; Yang, J. DIP: Dual Incongruity Perceiving Network for Sarcasm Detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 2540–2550.

- Chen, J.; Yu, H.; Huang, S.; Liu, S.; Zhang, L. InterCLIP-MEP: Interactive CLIP and Memory-Enhanced Predictor for Multi-modal Sarcasm Detection. arXiv 2024, arXiv:2406.16464.

- Yuan, S.; Wei, Y.; Zhou, H.; Xu, Q.; Chen, M.; He, X. Enhancing Semantic Awareness by Sentimental Constraint with Automatic Outlier Masking for Multimodal Sarcasm Detection. IEEE Trans. Multimed. 2025, 27, 5376–5386.

- Jacobs, R.A.; Jordan, M.I.; Nowlan, S.J.; Hinton, G.E. Adaptive mixtures of local experts. Neural Comput. 1991, 3, 79–87.

- Masoudnia, S.; Ebrahimpour, R. Mixture of experts: A literature survey. Artif. Intell. Rev. 2014, 42, 275–293.

- Yuksel, S.E.; Wilson, J.N.; Gader, P.D. Twenty years of mixture of experts. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1177–1193.

- Cai, W.; Jiang, J.; Wang, F.; Tang, J.; Kim, S.; Huang, J. A survey on mixture of experts. arXiv 2024, arXiv:2407.06204.

- Zhou, Y.; Lei, T.; Liu, H.; Du, N.; Huang, Y.; Zhao, V.; Dai, A.; Chen, Z.; Le, Q.; Laudon, J. Mixture-of-experts with expert choice routing. Adv. Neural Inf. Process. Syst. 2022, 35, 7103–7114.

- Chen, Z.; Deng, Y.; Wu, Y.; Gu, Q.; Li, Y. Towards understanding the mixture-of-experts layer in deep learning. Adv. Neural Inf. Process. Syst. 2022, 35, 23049–23062.

- Du, N.; Huang, Y.; Dai, A.M.; Tong, S.; Lepikhin, D.; Xu, Y.; Krikun, M.; Zhou, Y.; Yu, A.W.; Firat, O.; et al. GLaM: Efficient Scaling of Language Models with Mixture-of-Experts. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; Chaudhuri, K., Jegelka, S., Song, L., Szepesvari, C., Niu, G., Sabato, S., Eds.; Volume 162, pp. 5547–5569.

- Lin, B.; Tang, Z.; Ye, Y.; Cui, J.; Zhu, B.; Jin, P.; Huang, J.; Zhang, J.; Pang, Y.; Ning, M.; et al. MoE-LLaVA: Mixture of experts for large vision-language models. arXiv 2024, arXiv:2401.15947.

- Fedus, W.; Zoph, B.; Shazeer, N. Switch Transformers: Scaling to trillion parameter models with simple and efficient sparsity. J. Mach. Learn. Res. 2022, 23, 1–39.

- Gao, Z.F.; Liu, P.; Zhao, W.X.; Lu, Z.Y.; Wen, J.R. Parameter-Efficient Mixture-of-Experts Architecture for Pre-trained Language Models. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; Calzolari, N., Huang, C.R., Kim, H., Pustejovsky, J., Wanner, L., Choi, K.S., Ryu, P.M., Chen, H.H., Donatelli, L., Ji, H., et al., Eds.; pp. 3263–3273.

- Wu, Z.; Chen, X.; Pan, Z.; Liu, X.; Liu, W.; Dai, D.; Gao, H.; Ma, Y.; Wu, C.; Wang, B.; et al. DeepSeek-VL2: Mixture-of-experts vision-language models for advanced multimodal understanding. arXiv 2024, arXiv:2412.10302.

- Mustafa, B.; Riquelme, C.; Puigcerver, J.; Jenatton, R.; Houlsby, N. Multimodal contrastive learning with LIMoE: The language-image mixture of experts. Adv. Neural Inf. Process. Syst. 2022, 35, 9564–9576.

- Goyal, A.; Kumar, N.; Guha, T.; Narayanan, S.S. A multimodal mixture-of-experts model for dynamic emotion prediction in movies. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2822–2826. [Google Scholar] [CrossRef]

- Liu, Y.; Liu, Y.; Li, Z.; Yao, R.; Zhang, Y.; Wang, D. Modality Interactive Mixture-of-Experts for Fake News Detection. arXiv 2025, arXiv:2501.12431. [Google Scholar]

- Jiang, R.; Liu, L.; Chen, C. MoPE: Mixture of Prompt Experts for Parameter-Efficient and Scalable Multimodal Fusion. arXiv 2024, arXiv:2403.10568. [Google Scholar]

- Xie, Y.; Zhu, Z.; Chen, X.; Chen, Z.; Huang, Z. MoBA: Mixture of Bi-directional Adapter for Multi-modal Sarcasm Detection. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 4264–4272.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Meila, M., Zhang, T., Eds.; Volume 139, pp. 8748–8763.

- Chen, Y. Convolutional Neural Network for Sentence Classification. Master’s Thesis, University of Waterloo, Waterloo, ON, Canada, 2015.

- Zhou, P.; Shi, W.; Tian, J.; Qi, Z.; Li, B.; Hao, H.; Xu, B. Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Erk, K., Smith, N.A., Eds.; Volume 2: Short Papers, pp. 207–212.

- Xiong, T.; Zhang, P.; Zhu, H.; Yang, Y. Sarcasm Detection with Self-matching Networks and Low-rank Bilinear Pooling. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2115–2124.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Volume 1: Long and Short Papers, pp. 4171–4186.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Jia, M.; Xie, C.; Jing, L. Debiasing Multimodal Sarcasm Detection with Contrastive Learning. Proc. AAAI Conf. Artif. Intell. 2024, 38, 18354–18362.

- Hurst, A.; Lerer, A.; Goucher, A.P.; Perelman, A.; Ramesh, A.; Clark, A.; Ostrow, A.; Welihinda, A.; Hayes, A.; Radford, A.; et al. GPT-4o system card. arXiv 2024, arXiv:2410.21276.

- Yang, A.; Li, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Gao, C.; Huang, C.; Lv, C.; et al. Qwen3 technical report. arXiv 2025, arXiv:2505.09388.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

- Rasmussen, C.; Ghahramani, Z. Occam’s Razor. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 27 November–2 December 2000; Leen, T., Dietterich, T., Tresp, V., Eds.; MIT Press: Cambridge, MA, USA, 2000; Volume 13.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).