1. Introduction

Critical edge deletion in network interdiction problems typically involves two entities, a decision-maker (defender) and a disruptor (attacker), competing in a Stackelberg game. The decision-maker operates the network to optimize an objective such as the shortest path, maximum flow, or minimum-cost flow, whereas the disruptor attempts to degrade this performance by interdicting several arcs with limited resources [1].

The interdiction problem was first applied to the shortest-path problem by Corley and Sha [2], giving rise to the shortest-path interdiction problem (denoted by SPIP). Ball et al. [3] proved that this problem is NP-hard. Israeli et al. [4] reformulated it as a bilevel mixed-integer programming problem. Khachiyan et al. [5] proved that this problem does not admit an approximation algorithm with a performance ratio of 2, which is the current best result. Chen et al. [6] provided an

algorithm for the problem on undirected networks when

. Nardelli et al. [7] improved the time complexity to $O(m\,\alpha(m,n))$, where $\alpha$ is the inverse Ackermann function, and $m$ and $n$ are the numbers of edges and nodes, respectively. Building upon classical deterministic frameworks, recent advances in SPIP have expanded into stochastic, temporal, and information-asymmetric settings while also deepening our understanding of the problem’s computational complexity and solution methods. Henke et al. [8] established the NP-hardness of the bilevel SPIP, clearly delineating its intractability. Similarly, Boeckmann et al. [9] demonstrated that the temporal version remains NP-hard even when interdicting a single edge. To address uncertainty, Punla-Green et al. [10] and Azizi et al. [11] both developed models for SPIP with incomplete information; the former employed a max–min framework for asymmetric uncertainty, while the latter adopted a robust optimization approach. Nguyen et al. [12] further enriched the stochastic domain by incorporating the conditional value-at-risk (CVaR) metric to model asymmetric risk aversion in an uncertain environment. Beyond traditional mathematical programming, Huang et al. [13] pioneered an approach that solves the SPIP using reinforcement learning, demonstrating the potential of AI-based methods. Finally, previous research has also clarified strategic choices: Holzmann et al. [14] laid a key complexity foundation by proving that the problem with randomized interdiction strategies is generally NP-hard but can be solved in pseudo-polynomial time, while Borrero et al. [15] tackled sequential decision-making in a stochastic environment, proposing an approximate dynamic programming algorithm for optimal interdiction policies. Collectively, these studies significantly broaden the modeling landscape and clarify the hardness frontiers of SPIP under various complex conditions.

Currently, the majority of research in this area focuses on the SPIP under the assumption of critical edge deletion. However, factors such as rapid system recovery mechanisms and the presence of backup pathways often make complete edge deletion difficult or even infeasible in practical scenarios. For instance, in a military network scenario, available mobile forces may only be capable of interdicting support between

K pairs of adjacent enemy positions. The objective is to choose which

K pairs to disrupt so as to maximize the enemy’s support time [

16]. This represents a typical application of the shortest-path interdiction problem in military operations. Classical interdiction models focus on completely severing support between enemy positions, that is, deleting edges between the

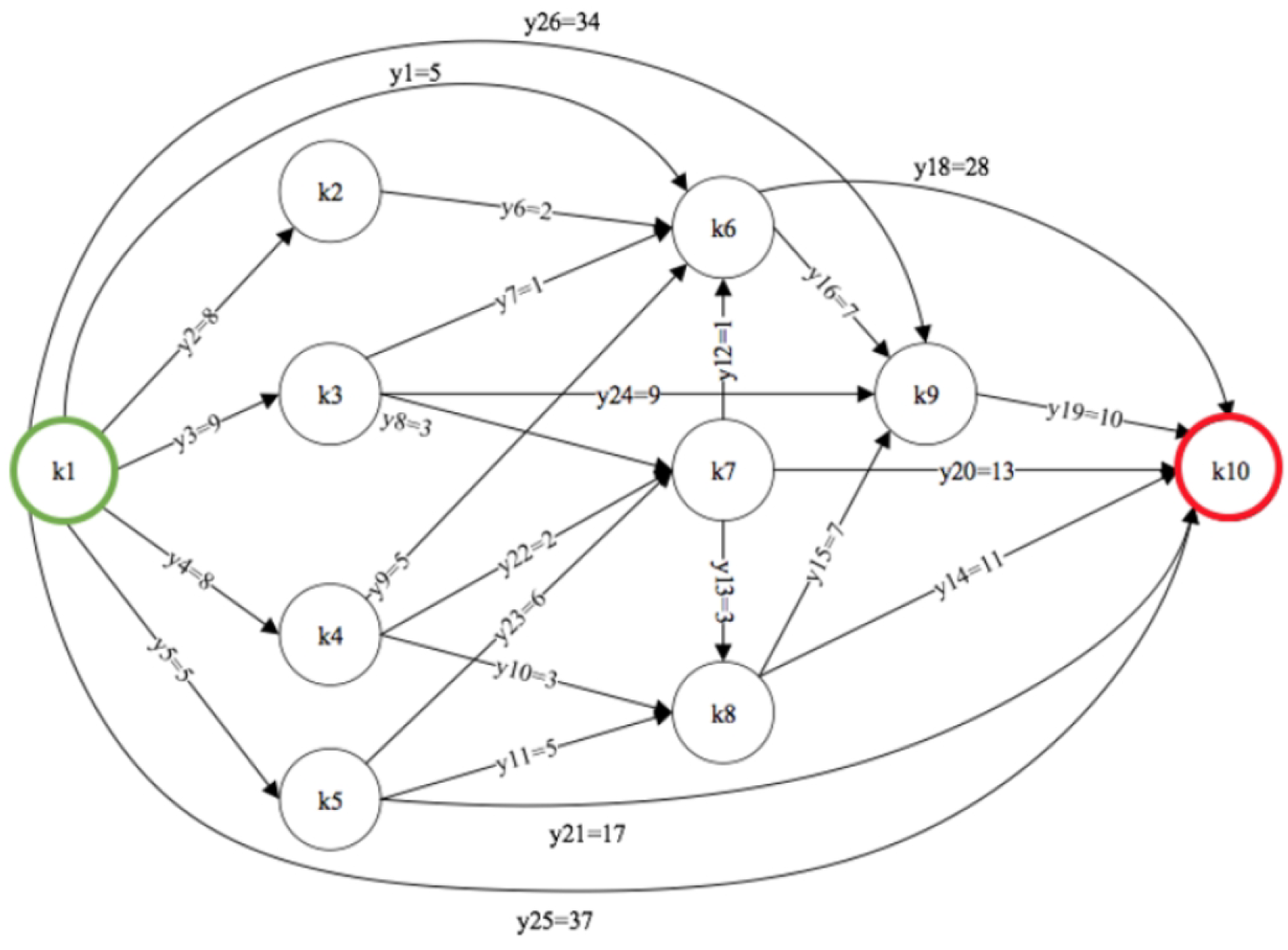

K selected pairs to make communication impossible. However, in reality, adjacent positions may maintain connectivity through multiple alternative means, such as sea, rail, or air transport. As a result, interdiction efforts may only partially disrupt certain support channels rather than entirely eliminating all connections. Another example is shown in

Figure 1 [

17]: Once the disruptor attacks node

, a fire will break out at that node. The disruptor aims to delay the fire truck’s arrival by maximizing the shortest path from node

to

with a limited interdiction budget. In this case, the disruptor can only increase the length of certain arcs rather than deleting them entirely. Correspondingly, the decision-maker must proactively identify vulnerable arcs and preemptively plan safer alternative routes to ensure the fire truck can reach the incident as quickly as possible. Recent work on the quickest path reliability problem underlines a similar focus on optimizing path performance under resource constraints [

18]. This connection highlights the broad applicability of our interdiction and upgrade framework to diverse network optimization scenarios, such as ensuring reliable flow transmission.

Therefore, the classical SPIP with critical edge deletion has inherent limitations. To overcome these, refs. [19,20] introduced the concept of upgrading edges and investigated the maximum shortest-path interdiction problem involving upgrading edges on trees (denoted by MSPIT), which can be defined as follows. Let $T=(V,E)$ be an edge-weighted tree rooted at $s$, where $V$ and $E$ are the sets of nodes and edges, respectively. Let $Y$ be the set of leaves. Let $w(e)$ and $u(e)$ be the original weight and the upper bound of the upgraded weight for an edge $e\in E$, respectively, where $w(e)\le u(e)$. Let $c(e)$ be the cost to upgrade the edge $e$, and let $\Delta(e):=u(e)-w(e)$ denote the deviation between $u(e)$ and $w(e)$. For a leaf $t\in Y$, $P_t$ denotes the unique path from $s$ to $t$ on the tree $T$, called the “root–leaf path” of the leaf node $t$ in brief. Define $f(P_t)$ as the “root–leaf distance” for a weight vector $f$ and a root–leaf path $P_t$, where

$$f(P_t):=\sum_{e\in P_t} f(e).$$

The problem MSPIT involves finding an upgrade scheme $f$ to maximize the shortest root–leaf distance of the tree on the premise that the total upgrade cost, measured by some cost-weighted norm $\|\cdot\|$, is upper bounded by a given value $M$. The mathematical model can be stated as follows.

$$\max_{f}\ \min_{t\in Y} f(P_t)\quad \text{s.t.}\quad \|f-w\|\le M,\quad w(e)\le f(e)\le u(e),\ e\in E. \tag{1}$$

The corresponding minimum-cost shortest-path interdiction problem involving upgrading edges on trees (denoted by MCSPIT) entails upgrading some edges to minimize the total cost under the same measurement on the premise that the shortest root–leaf distance of the tree is bounded below by a given value $D$. The mathematical model can be stated as follows.

$$\min_{f}\ \|f-w\|\quad \text{s.t.}\quad \min_{t\in Y} f(P_t)\ge D,\quad w(e)\le f(e)\le u(e),\ e\in E. \tag{2}$$

Using the weighted

norm, Zhang et al. [

16] proposed primal–dual algorithms with time complexity

for both problems and with the unit

norm and developed linear-time algorithms. Using the weighted Hamming distance, Zhang et al. [

21] proved that both problems are NP-hard and designed dynamic programming algorithms with time complexities

and

for the problems MSPIT and MCSPIT with unit Hamming distance, respectively. Yi et al. [

22] subsequently improved these algorithms with local optimizations for time complexities

and

, respectively. Zhang et al. [

23] also investigated the sum of the root–leaf distance interdiction problem involving upgrading edges on trees along with its corresponding minimum cost problem. Using the weighted

norm, they provided linear-time algorithms for both problems. They showed the NP-hardness of the problems with the weighted Hamming distance and unit Hamming distance and designed a linear-time greedy algorithm and an

time algorithm based on a binary search method for each problem. Furthermore, Li et al. [

24] studied the problem with cardinality constraints and its corresponding minimum cost problem. Using the weighted

norm, they provided two algorithms with time complexity

and

for the problems, respectively, where

N denotes the cardinality budget as the maximum number of edges that can be upgraded. They proposed two algorithms using the weighted bottleneck Hamming distance, both in

time.

Research on extensions of the shortest-path interdiction problem involving upgrading edges remains relatively limited. Mohammadi et al. [25] studied the maximum capacity path interdiction problem involving upgrading edges with the sum-type Hamming distance. Unlike the traditional shortest-path problem, where the weight of a path is defined as the sum of the weights of all edges along it, this problem uses the maximum edge weight along the path as its capacity. They provided a strongly polynomial-time algorithm for the case where the cost of reducing each arc’s capacity is fixed. Tayyebi et al. [26] studied the continuous maximum capacity path interdiction problem, where the capacity of each arc in the network can be reduced by any continuous amount. They proposed an efficient algorithm based on a binary search method and the discrete Newton method, solving the problem in polynomial time. Li et al. [27] introduced the minimum-cost shortest-path double interdiction problem involving upgrading edges on trees (MCDSPIT). They addressed the problem that measures the upgrade cost with both weighted

and weighted sum Hamming distances. They showed that the problem is NP-hard and proposed a pseudo-polynomial time algorithm. Research on the shortest-path interdiction problem involving upgrading edges is highly significant and greatly enriches the theory of network interdiction problems.

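In this paper, we concentrate on the problems MSPIT and MCSPIT with the weighted $l_\infty$ norm, denoted by MSPIT∞ and MCSPIT∞, respectively. They can be formulated as follows by applying the weighted $l_\infty$ norm to $\|\cdot\|$ in models (1) and (2), respectively, where $f(P_t)$ denotes the root–leaf distance of a leaf $t\in Y$ under the weight vector $f$.

$$\max_{f}\ \min_{t\in Y} f(P_t)\quad \text{s.t.}\quad \max_{e\in E} c(e)\,\bigl(f(e)-w(e)\bigr)\le M,\quad w(e)\le f(e)\le u(e),\ e\in E.$$

$$\min_{f}\ \max_{e\in E} c(e)\,\bigl(f(e)-w(e)\bigr)\quad \text{s.t.}\quad \min_{t\in Y} f(P_t)\ge D,\quad w(e)\le f(e)\le u(e),\ e\in E.$$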

We analyze their models and properties and design optimization algorithms with time complexity analysis. As summarized in Table 1, our study delineates the computational complexity of the MSPIT, MCSPIT, and MCDSPIT with various norms, including $l_1$, Hamming distance, and $l_\infty$. A key contribution of this work is the establishment of efficient algorithms with complexities of $O(n)$ and $O(n\log n)$ for the weighted $l_\infty$ norm variants, thereby resolving previously open problems.

The paper is organized as follows. In Section 2, we propose an $O(n)$ time algorithm to solve the problem MSPIT∞. In Section 3, we study the problem MCSPIT∞ and propose a greedy algorithm in $O(n\log n)$ time based on a binary search method. In Section 4, based on the problem MCSPIT∞, the problem MCDSPIT∞ is studied, and an $O(n\log n)$ time algorithm is proposed. In Section 5, we present computational experiments to show the effectiveness of the algorithms. In Section 6, we conclude and present directions for future research.

3. Solving the Problem MCSPIT∞

In this section, we address the problem MCSPIT∞. Through a series of property analyses and case discussions, we develop an efficient algorithm that leverages greedy strategies and a binary search method to achieve a time complexity of $O(n\log n)$.

We begin by sorting

in a non-decreasing order and removing any duplicates to obtain the sequence

:

Define

for

. We can then apply (

6) using the assignment

to compute the value

and the corresponding shortest root–leaf distance

. Since

is increasing, the output

is non-decreasing.
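The monotonicity used here can be checked numerically with a minimal Python sketch. It assumes that subroutine (6) amounts to raising every edge to the largest weight allowed by the weighted $l_\infty$ budget, $\min\{u(e),\, w(e)+M/c(e)\}$, which is optimal because increasing an edge weight never shortens a root–leaf path. The array layout (node 0 as root, `parent[v] < v`, edge data indexed by the child node) and the function names are illustrative assumptions, not the paper's notation.

```python
from typing import List, Sequence


def upgrade_weights(w: Sequence[float], u: Sequence[float],
                    c: Sequence[float], budget: float) -> List[float]:
    """Largest weights allowed by max_e c[e] * (f[e] - w[e]) <= budget and f <= u."""
    return [min(ue, we + budget / ce) for we, ue, ce in zip(w, u, c)]


def shortest_root_leaf_distance(parent: Sequence[int],
                                weight: Sequence[float]) -> float:
    """Minimum root-leaf distance; assumes node 0 is the root and parent[v] < v."""
    n = len(parent)
    dist = [0.0] * n
    has_child = [False] * n
    for v in range(1, n):
        dist[v] = dist[parent[v]] + weight[v]  # weight[v] belongs to edge (parent[v], v)
        has_child[parent[v]] = True
    return min(dist[v] for v in range(1, n) if not has_child[v])


# Hypothetical 4-node tree: 0 -> 1 -> 3 and 0 -> 2 (index 0 of w, u, c is a dummy).
parent = [-1, 0, 0, 1]
w = [0.0, 2.0, 3.0, 1.0]
u = [0.0, 4.0, 5.0, 3.0]
c = [1.0, 1.0, 2.0, 1.0]
distances = [shortest_root_leaf_distance(parent, upgrade_weights(w, u, c, M))
             for M in (0.0, 2.0, 4.0)]
print(distances)  # [3.0, 4.0, 5.0]
```

On this toy tree the shortest root–leaf distance grows from 3 to 5 as the budget increases, which is the non-decreasing behavior that the binary search later relies on.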

Specifically, for

and

, the processes are defined as

and

These computations yield and along with their respective distances and . Upon analysis, we derive the following two straightforward lemmas.

Lemma 1. The optimal objective value of the problem MCSPIT∞ is in the range .

Lemma 2. If is an optimal solution of the problem MCSPIT∞, then its relevant length of the shortest root–leaf distance satisfies .

According to Lemma 2, the problem MCSPIT∞ is infeasible for as all edges have been upgraded to their maximum capacity. For , the optimal solution is conversely w with an optimal value 0 since w already satisfies the feasibility conditions. We can consequently state the following theorems.

Theorem 3. (1) If , the (MCSPIT∞) is infeasible.

(2) If , the optimal solution is w, and the optimal value is 0.

We next consider the problem MCSPIT∞ when . The optimal objective value is denoted by . The optimal objective value of problem therefore satisfies . Consequently, the upgrade amount for any edge is given by . Let be the set of leaves with root–leaf distances of less than D. Then, for each path , holds. Hence, we have the following theorem.

Theorem 4. When , is the optimal objective value of problem MCSPIT∞, where .

Proof. Let be the corresponding optimal solution of the objective value as defined in the theorem.

(1) We show the feasibility of the solution

. For any

,

Hence,

is feasible.

(2) Next, we show the optimality. Suppose is not an optimal solution, but (for ) is, where is its corresponding objective value. Let be the shortest path with the weight .

(2.1) If

is also the shortest path with the weight

, then

according to the definition of

. We then have

which contradicts the fact that

is feasible.

(2.2) If

is not the shortest path with the weight

, but

is, and

, then we have

which contradicts the fact that

is optimal.

In conclusion, is an optimal solution to the problem with its relevant optimal objective value . □
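The closed form in Theorem 4 can be motivated by a short calculation. The following is a sketch under stated assumptions rather than the authors' derivation: it assumes the weighted $l_\infty$ cost $C=\max_{e} c(e)(f(e)-w(e))$ and that no edge has reached its upper bound $u(e)$ at budget $C$, and it writes $P_t$ for the root–leaf path of a leaf $t\in Y$ and $Y'$ for the leaves whose original distance $w(P_t)$ is below $D$ (both symbols are notational assumptions).

```latex
% Under budget C, every edge can be raised by at most C / c(e):
f_C(e) = w(e) + \frac{C}{c(e)}, \qquad
f_C(P_t) = w(P_t) + C \sum_{e \in P_t} \frac{1}{c(e)} .
% Feasibility of a single path gives a lower bound on C:
f_C(P_t) \ge D \iff C \ge \frac{D - w(P_t)}{\sum_{e \in P_t} 1/c(e)} .
% Every path in Y' must become feasible, so the smallest admissible budget is
C^{*} = \max_{t \in Y'} \frac{D - w(P_t)}{\sum_{e \in P_t} 1/c(e)},
\qquad Y' = \{\, t \in Y : w(P_t) < D \,\}.
```

Paths outside $Y'$ already satisfy the distance requirement and impose no lower bound on the budget.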

In the remaining case, we employ a binary search method to locate the interval of the sorted sequence that contains the optimal value of the problem MCSPIT∞. We then obtain the optimal solution by identifying the smallest cost that makes every currently infeasible path feasible, which yields an $O(n\log n)$ time algorithm to solve the problem MCSPIT∞.

Specifically, we first use a binary search method based on the ascending sequence in (

7) to determine the index

k satisfying

. We call (

6) to solve

, obtaining

and its corresponding shortest root–leaf path length

. Next, we call (

6) to solve

, obtaining

and its corresponding shortest root–leaf path length

. When

, the current cost

is just enough to satisfy the constraint, and the following theorem is obvious.

Theorem 5. If , then is the optimal value to problem MCSPIT∞, and the corresponding optimal solution is .

Then, we have the following theorem to give the optimal solution.

Theorem 6. Suppose for an index k based on the ascending sequence in (7). The optimal objective value of the problem MCSPIT∞ iswhere and . Then, the corresponding optimal solution is given by Proof. We mainly show that

is a feasible solution of the problem MCSPIT

∞. Notice that for any edge

, we have

, and

also holds. Otherwise, the case

occurs, which contradicts

, implying no values between

and

in sequence (

7). Additionally, for any edge

, we obviously have

.

For any , we have .

The proof for the optimality of follows a similar argument to that in part (2) of Theorem 4, and we omit the details here. □

Based on the analysis above, we have the following Algorithm 1 to solve the problem MCSPIT∞.

Theorem 7. The problem MCSPIT∞ can be solved with Algorithm 1 in $O(n\log n)$ time.

Proof. We analyze the time complexity of Algorithm 1 step by step to establish the $O(n\log n)$ bound. The algorithm begins by calculating the weight difference vector $u-w$ in Line 1. This involves a simple subtraction for each of the $O(n)$ edges, which requires $O(n)$ time. In Line 2, the edges are sorted by their key values. Since there are $O(n)$ edges, sorting them requires $O(n\log n)$ time.

In Lines 4–5, the algorithm calls the MSPIT∞ subroutine (6) twice, once for each extreme value of the sorted sequence, in $O(n)$ time. Lines 6–13 handle special cases regarding the feasibility and triviality of the problem. The checks in Lines 6 and 8 involve simple comparisons. The calculation in Line 11 for the case of Line 10 involves iterating over the root–leaf paths of the leaves in the relevant set, which can be obtained from a breadth-first search in $O(n)$ time. Thus, this entire block runs in $O(n)$ time.

| Algorithm 1 |
| --- |
| Input: the tree rooted at a root node, the set Y of leaf nodes, the cost vector c, two weight vectors w, u, a given value D. |
| Output: an optimal solution and its relevant objective value. |
| 1: Calculate . |
| 2: Sort edges by in ascending order (remove duplicates). |
| 3: Let the sorted distinct values be: . |
| 4: Call (6) . |
| 5: Call (6) . |
| 6: if then |
| 7: return “The problem is infeasible.” |
| 8: else if then |
| 9: return . |
| 10: else if then |
| 11: where . |
| 12: Call (6) . |
| 13: return . |
| 14: else |
| 15: Initialize , , |
| 16: if then |
| 17: |
| 18: end if |
| 19: while do |
| 20: . |
| 21: Call (6) . |
| 22: Call (6) . |
| 23: if then |
| 24: . |
| 25: else if then |
| 26: . |
| 27: else if then |
| 28: . |
| 29: end if |
| 30: end while |
| 31: . |
| 32: . |
| 33: Calculate as (8) and as (9). |
| 34: return . |
| 35: end if |

The binary search loop in Lines 15–30 is the core component of the algorithm. The loop performs a binary search over the sorted distinct values, of which there are $O(n)$, to find the critical interval whose associated shortest root–leaf distances bracket the target $D$. The number of iterations of this binary search is $O(\log n)$. In each iteration, the algorithm makes two calls to the MSPIT∞ subroutine (Lines 21 and 22) to compute the shortest root–leaf distances for the current pivot and its successor. Since each call to MSPIT∞ takes $O(n)$ time, the cost per iteration is $O(n)$. Therefore, the total time cost of the binary search loop is $O(n\log n)$.

After identifying the critical index $k$, the final solution is constructed. Lines 31–32 define the leaf set and the edge set used in (8) and (9), which can be determined by checking each leaf and each edge, taking $O(n)$ time. Line 33 calculates the optimal value and the optimal weight vector according to (8) and (9) in $O(n)$ time.

To sum up, the overall time complexity is dominated by the sorting operation and the binary search loop, both of which are $O(n\log n)$. Hence, Algorithm 1 solves the MCSPIT∞ problem in $O(n\log n)$ time. □
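The overall flow analyzed in this proof (sort the saturation costs, binary-search the critical interval, then solve a linear condition per root–leaf path) can be sketched in Python as follows. This is a simplified illustration, not a transcription of Algorithm 1: it assumes the upgraded weight at budget $C$ is $\min\{u(e), w(e)+C/c(e)\}$, it uses one subroutine evaluation per binary-search step instead of two, and the array layout (node 0 as root, `parent[v] < v`, edge data indexed by the child node) and the function name are hypothetical.

```python
from typing import Optional, Sequence


def min_budget_for_distance(parent: Sequence[int], w: Sequence[float],
                            u: Sequence[float], c: Sequence[float],
                            D: float) -> Optional[float]:
    """Smallest budget C such that the weights min(u, w + C/c) give every
    root-leaf path a length of at least D; None if even f = u fails.
    Assumes node 0 is the root, parent[v] < v, index 0 of w, u, c is a dummy."""
    n = len(parent)
    has_child = [False] * n
    for v in range(1, n):
        has_child[parent[v]] = True
    leaves = [v for v in range(1, n) if not has_child[v]]
    bp = [c[v] * (u[v] - w[v]) for v in range(n)]  # budget at which edge v saturates

    def shortest(budget: float) -> float:
        dist = [0.0] * n
        for v in range(1, n):
            dist[v] = dist[parent[v]] + min(u[v], w[v] + budget / c[v])
        return min(dist[t] for t in leaves)

    values = sorted(set(bp[1:]))              # sorted distinct saturation costs
    if shortest(values[-1]) < D:
        return None                           # infeasible even with all edges at u
    if shortest(0.0) >= D:
        return 0.0                            # the original weights already suffice
    lo, hi = 0, len(values) - 1               # invariant: shortest(values[hi]) >= D
    while lo < hi:
        mid = (lo + hi) // 2
        if shortest(values[mid]) >= D:
            hi = mid
        else:
            lo = mid + 1
    left = values[lo - 1] if lo > 0 else 0.0  # optimum lies in (left, values[lo]]
    # On that interval, edges with bp <= left sit at u; the others grow as w + C/c.
    fixed = [0.0] * n
    slope = [0.0] * n
    for v in range(1, n):
        saturated = bp[v] <= left
        fixed[v] = fixed[parent[v]] + (u[v] if saturated else w[v])
        slope[v] = slope[parent[v]] + (0.0 if saturated else 1.0 / c[v])
    return max((D - fixed[t]) / slope[t] for t in leaves if fixed[t] < D)


# Hypothetical 4-node tree: 0 -> 1 -> 3 and 0 -> 2.
parent = [-1, 0, 0, 1]
w = [0.0, 2.0, 3.0, 1.0]
u = [0.0, 4.0, 5.0, 3.0]
c = [1.0, 1.0, 2.0, 1.0]
print(min_budget_for_distance(parent, w, u, c, 4.5))  # 3.0
```

Sorting costs $O(n\log n)$ and each of the $O(\log n)$ binary-search steps performs one $O(n)$ tree pass, so the sketch stays within the bound of Theorem 7.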

4. Minimum-Cost Shortest-Path Double Interdiction Problem Involving Upgrading Edges on Trees with Weighted $l_\infty$ Norm

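In this section, we consider an extended version of the problem MCSPIT∞, referred to as the minimum-cost shortest-path double interdiction problem involving upgrading edges on trees (MCDSPIT) with the weighted $l_\infty$ norm (MCDSPIT∞). Li et al. [27] introduced the MCDSPIT problem, which has significant implications in diverse areas such as transportation networks, military strategies, and counter-terrorism efforts. The objective is to find a minimum-cost upgrade scheme such that two constraints are satisfied: the shortest root–leaf distance is at least a given value $D$, and the sum of all root–leaf distances is no less than a given value $B$. Writing $f(P_t)$ for the root–leaf distance of a leaf $t\in Y$ under the weight vector $f$, the mathematical model can be stated as follows.

$$\min_{f}\ \|f-w\|\quad \text{s.t.}\quad \min_{t\in Y} f(P_t)\ge D,\quad \sum_{t\in Y} f(P_t)\ge B,\quad w(e)\le f(e)\le u(e),\ e\in E. \tag{12}$$

When the $l_\infty$ norm applies to $\|\cdot\|$ in model (12), we obtain the problem MCDSPIT∞, which is formulated as follows.

$$\begin{aligned}
\min_{f}\ & \max_{e\in E} c(e)\,\bigl(f(e)-w(e)\bigr) && (13)\\
\text{s.t.}\ & \min_{t\in Y} f(P_t)\ge D, && (14)\\
& \sum_{t\in Y} f(P_t)\ge B, && (15)\\
& w(e)\le f(e)\le u(e),\quad e\in E. && (16)
\end{aligned}$$

It follows from the model of the problem MCDSPIT∞ that if upgrading all edges to their upper bounds still cannot satisfy condition (14) or (15), then the problem is infeasible.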

Theorem 8. If $\min_{t\in Y} u(P_t)<D$ or $\sum_{t\in Y} u(P_t)<B$, then the problem MCDSPIT∞ is infeasible.

Notice that (13), (14), and (16) constitute the problem MCSPIT∞. An optimal solution of the problem MCDSPIT∞ is thus a feasible solution of the problem MCSPIT∞. Then, we have the following conclusion for the optimal objective values of the two problems. Note that for any

,

Lemma 3. We have , where and are the optimal values of the problems MCDSPIT∞ and MCSPIT∞, respectively.

For any

, define the weight vector

as follows:

Then, we have the following lemma to show the relationship between the optimal value of the problem MCDSPIT∞ and any cost .

Lemma 4. The vector as (17) is feasible for MCDSPIT∞ if and only if , where is the optimal values of the problem MCDSPIT∞. Moreover, if , then is an optimal solution of the problem MCDSPIT∞. Proof. Necessity: Let

be defined as (

17), a feasible solution to the problem MCDSPIT

∞, and suppose

. This contradicts that

is the optimal value of the problem MCDSPIT

∞.

Sufficiency: Suppose is an optimal solution to problem MCDSPIT∞. We will prove that for any , holds. Suppose there exists , such that .

(1) If , then , which contradicts .

(2) If , then , which implies that This contradicts .

Therefore, for any

,

holds so that constraints (

14)–(

16) hold for the vector

, confirming that

is indeed feasible. □

We can solve the problem MCDSPIT∞ from the analysis above based on an optimal solution of MCSPIT∞ with its corresponding optimal cost . If the constraint for the sum of the root–leaf distance holds already, then is also optimal for the problem MCDSPIT∞.

Theorem 9. If , then is an optimal solution of the problem MCDSPIT∞, where is an optimal solution of the problem MCSPIT∞.

Otherwise, we have the case when

, which is the most significant and difficult part of solving the problem MCDSPIT∞. To address this case, we first refine the sequence (7) by removing any duplicates and the values lower than

to obtain the sequence

:

Define for . Then, the following lemma holds immediately.

Lemma 5. The optimal value of the problem MCDSPIT∞ lies in .

We can consequently determine the index

k through a binary search method such that

, that is,

, where

and

are constructed as (

17) with

and

, respectively. For convenience, we introduce the following concept

containing the leaf nodes controlled by an edge

Definition 1 ([

23]).

Define as the set of leaf nodes with root–leaf paths passing through e. If , then the leaf node is controlled by the edge . Based on the index

k with

in the non-decreasing sequence (18), we can then calculate the optimal value as stated in the following theorem.

Theorem 10. The valueis the optimal objective value of the (MCDSPIT∞), where and . Then, as (17) is an optimal solution. Proof. (1) We first prove that

is a feasible solution of the (MCDSPIT

∞). It is obvious that

for

because it is constructed as (

17). We then need to show that

satisfies the constraints (

14) and (

15).

For constraint (

14), as

, then for any

, we have

.

For constraint (

15), notice that for any edge

, we have

and

also holds. Otherwise, the case

occurs, which contradicts

, implying no values between

and

in sequence (

18). We also obviously have

for any edge

. Then, we have

Therefore,

as (

17) with

is a feasible solution of the problem MCDSPIT

∞.

(2) We next prove that

is the optimal value. Suppose that

is the optimal value of the problem MCDSPIT

∞ and

is a corresponding optimal solution; then, we have

which contradicts the feasibility of

. Hence,

is the optimal value of the problem MCDSPIT

∞. □
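The structure of the optimal value in Theorem 10 can be motivated by the following calculation, given as a sketch under stated assumptions rather than the authors' formula (19). It assumes the upgraded weight at budget $C$ is $\min\{u(e), w(e)+C/c(e)\}$, writes $L(e)$ for the set of leaves controlled by edge $e$ in the sense of Definition 1, and writes $S$ for the set of edges already at their upper bound on the critical interval (the symbols $L(e)$ and $S$ are notational assumptions).

```latex
% Each edge is counted once for every leaf it controls:
\sum_{t \in Y} f_C(P_t) = \sum_{e \in E} |L(e)|\, f_C(e)
 = \sum_{e \in S} |L(e)|\, u(e)
 + \sum_{e \notin S} |L(e)| \Bigl( w(e) + \frac{C}{c(e)} \Bigr).
% Requiring the path sum to reach B on this interval gives
C \ge \frac{B - \sum_{e \in S} |L(e)|\, u(e) - \sum_{e \notin S} |L(e)|\, w(e)}
           {\sum_{e \notin S} |L(e)| / c(e)} .
```

The smallest budget on the critical interval that meets this bound, and is no smaller than the optimal value of MCSPIT∞, satisfies both (14) and (15).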

From the above analysis, we have the following algorithm to solve the problem MCDSPIT∞.

Theorem 11. The problem MCDSPIT∞ can be solved using Algorithm 2 in $O(n\log n)$ time.

| Algorithm 2 |
| --- |
| Input: the tree rooted at a root node, the set Y of leaf nodes, the cost vector c, two weight vectors w, u, given values D and B. |
| Output: an optimal solution and its relevant objective value. |
| 1: if or then |
| 2: return “The problem is infeasible.” |
| 3: else |
| 4: Call . |
| 5: if then |
| 6: return . |
| 7: end if |
| 8: Calculate . |
| 9: Construct the sequence by removing duplicates and values lower than from sequence (7): |
| 10: Let , for . |
| 11: Initialize , . |
| 12: while do |
| 13: . |
| 14: Construct as Formula (17). |
| 15: if then |
| 16: . |
| 17: else if then |
| 18: . |
| 19: end if |
| 20: end while |
| 21: Let . |
| 22: Determine , . |
| 23: Calculate as Formula (19). |
| 24: Determine optimal value as Formula (17). |
| 25: return . |
| 26: end if |

Proof. We analyze the time complexity of Algorithm 2 step by step to establish the $O(n\log n)$ bound. The algorithm begins with a feasibility check in Lines 1–2. This involves computing the sum of path weights and finding the minimum path weight over all leaf nodes, which can be obtained from a breadth-first search in $O(n)$ time. In Line 4, the algorithm calls Algorithm 1 for MCSPIT∞. As established in Theorem 7, Algorithm 1 has a time complexity of $O(n\log n)$, which contributes significantly to the overall complexity.

Lines 5–7 perform a condition check and potentially return early. This operation involves summing path weights for all leaves, requiring $O(n)$ time. Line 8 calculates the weight difference vector $u-w$, which is a simple subtraction over $O(n)$ edges, taking $O(n)$ time. The sequence construction in Lines 9–10 involves sorting and filtering operations. Constructing sequence (18) from the original sequence (7) requires removing duplicates and values below the optimal value of MCSPIT∞. The sorting step dominates this process with $O(n\log n)$ time complexity, while the subsequent initialization of values takes $O(n)$ time.

The core of Algorithm 2 is the binary search loop in Lines 11–20. This loop performs a binary search over the sorted values of sequence (18), of which there are $O(n)$, resulting in $O(\log n)$ iterations. Within each iteration, the algorithm computes the midpoint ($O(1)$ time); constructs the weight vector using Formula (17), which processes $O(n)$ edges ($O(n)$ time); and evaluates the condition, which requires $O(n)$ time. Therefore, each iteration takes $O(n)$ time, and the entire binary search loop requires $O(n\log n)$ time.

Finally, Lines 21–24 handle the solution construction after the binary search. These steps include simple assignments ($O(1)$), set operations on edges ($O(n)$), and calculations using Formulas (19) and (17) ($O(n)$ each). The total time for these final steps is $O(n)$. To sum up, the overall time complexity is dominated by the $O(n\log n)$ operations. □
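The second stage analyzed above, raising the budget beyond the MCSPIT∞ optimum until the path-sum constraint holds, can be sketched in Python as follows. This is an illustration under stated assumptions, not a transcription of Algorithm 2: the upgraded weight at budget $C$ is taken to be $\min\{u(e), w(e)+C/c(e)\}$, the MCSPIT∞ optimum is passed in as the hypothetical argument `c_dist`, and the array layout (node 0 as root, `parent[v] < v`, edge data indexed by the child node) is an assumed convention.

```python
from typing import Optional, Sequence


def min_budget_for_path_sum(parent: Sequence[int], w: Sequence[float],
                            u: Sequence[float], c: Sequence[float],
                            B: float, c_dist: float) -> Optional[float]:
    """Smallest budget C >= c_dist whose weights min(u, w + C/c) make the sum of
    all root-leaf distances at least B; None if even f = u fails.
    c_dist is assumed to be the optimal value of the distance-only problem."""
    n = len(parent)
    has_child = [False] * n
    for v in range(1, n):
        has_child[parent[v]] = True
    cnt = [0] * n                             # cnt[v]: leaves whose path uses edge v
    for v in range(n - 1, 0, -1):             # children have larger indices than parents
        if not has_child[v]:
            cnt[v] = 1
        cnt[parent[v]] += cnt[v]
    bp = [c[v] * (u[v] - w[v]) for v in range(n)]  # budget at which edge v saturates

    def total(budget: float) -> float:
        return sum(cnt[v] * min(u[v], w[v] + budget / c[v]) for v in range(1, n))

    if total(max(bp[1:])) < B:
        return None                           # path-sum target unreachable
    if total(c_dist) >= B:
        return c_dist                         # the distance-only optimum already works
    values = sorted({b for b in bp[1:] if b > c_dist})
    lo, hi = 0, len(values) - 1               # invariant: total(values[hi]) >= B
    while lo < hi:
        mid = (lo + hi) // 2
        if total(values[mid]) >= B:
            hi = mid
        else:
            lo = mid + 1
    left = values[lo - 1] if lo > 0 else c_dist    # optimum lies in (left, values[lo]]
    fixed = sum(cnt[v] * (u[v] if bp[v] <= left else w[v]) for v in range(1, n))
    slope = sum(cnt[v] / c[v] for v in range(1, n) if bp[v] > left)
    return (B - fixed) / slope


# Hypothetical 4-node tree: 0 -> 1 -> 3 and 0 -> 2.
parent = [-1, 0, 0, 1]
w = [0.0, 2.0, 3.0, 1.0]
u = [0.0, 4.0, 5.0, 3.0]
c = [1.0, 1.0, 2.0, 1.0]
print(min_budget_for_path_sum(parent, w, u, c, 11.75, 3.0))  # 3.5
```

Because the upgraded weights are non-decreasing in the budget, any returned value of at least `c_dist` keeps the shortest root–leaf distance constraint satisfied.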

5. Computational Experiments

We used synthetic trees and enforced bit-level reproducibility within machine precision across typical environments to demonstrate the efficiency and reproducibility of our experimental results. All randomness was keyed with per-trial seeds, costs were median-normalized so that budget magnitudes do not drift with n, and all targets and path-sum budgets were calibrated on an instance-intrinsic, unit-free scale. These choices ensure that the results are comparable across sizes and that any observed differences reflect algorithmic behavior, not arbitrary units or sampling noise.

Instances were tested in the rooted recursive trees

: node

i attaches uniformly to

. Each edge

e receives a base weight

and an available slack

, with the upper bound

. Raw costs

are converted to unit-free costs via median normalization:

. For a budget

, upgrading along an edge follows

which in turn determines the minimum root–leaf distance

. Let

and

. We set the target distance at the midpoint,

and quantify the instance difficulty with

so that

measures how far the target lies between “no upgrades’’ and “maximal upgrades’’ on that specific instance. In the double-constraint setting, we analogously work with the aggregate path sum

. Let

be the MCSPIT

∞ solution that attains the chosen

D, and define

which pairs

as difficulty indices for distance and path-sum goals, respectively. Rare degeneracies in which the reachable interval collapses are resolved by a minimal backoff (e.g.,

). All feasibility checks and accept/reject outcomes were recorded so that third parties can replay the exact run list.
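The instance protocol described above (seeded random recursive trees with median-normalized costs) can be sketched in Python as follows. The experiments themselves were run in Matlab, and the sampling ranges used here for base weights, slacks, and raw costs are placeholders chosen for illustration only; the function name and the 0-based node numbering are likewise assumptions.

```python
import random
import statistics
from typing import Dict


def make_instance(n: int, seed: int) -> Dict[str, list]:
    """Random recursive tree on nodes 0..n-1 with per-edge data indexed by child."""
    rng = random.Random(seed)                 # per-trial seed makes the draw repeatable
    # Node v attaches to a uniformly chosen earlier node, so parent[v] < v.
    parent = [-1] + [rng.randrange(v) for v in range(1, n)]
    w = [0.0] + [rng.uniform(1.0, 10.0) for _ in range(1, n)]      # placeholder range
    slack = [0.0] + [rng.uniform(0.0, 5.0) for _ in range(1, n)]   # placeholder range
    u = [wi + si for wi, si in zip(w, slack)]  # upper bound = base weight + slack
    raw = [rng.uniform(1.0, 10.0) for _ in range(1, n)]            # placeholder range
    med = statistics.median(raw)
    c = [1.0] + [r / med for r in raw]        # median-normalized, unit-free costs
    return {"parent": parent, "w": w, "u": u, "c": c}


instance = make_instance(50, seed=7)
```

Dividing by the median makes the typical cost equal to one regardless of the raw scale, so budgets remain comparable across instance sizes.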

We evaluated the algorithms for the problems MSPIT

∞, MCSPIT

∞, and MCDSPIT

∞. Unless stated otherwise, problem MSPIT

∞ was parameterized by

with

in the main results. Timing isolated the algorithms: instance generation and

calibration occurred once per trial, after which inputs were frozen. Each routine received a single untimed warm-up call and was then timed with

timeit; timed functions contained no internal random draws, guaranteeing byte-identical repeats with a fixed seed. We swept

; ran 30 trials per size; and report the mean, maximum, and minimum wall-clock seconds in

Table 2. To diagnose growth rates, we regressed time on $n$ and on $n\log n$ and report $R^2$ in Table 3.
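The growth-rate diagnostics can be illustrated with a short Python sketch that fits an ordinary least-squares line against each candidate regressor and reads off $R^2$, together with a log–log slope. The helper name `fit_line` is hypothetical, and the timings below are synthetic values generated to follow $n\log n$ exactly; they are not the measurements reported in Tables 2 and 3.

```python
import math
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least squares y ~ a + b*x; returns (a, b, R^2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return a, b, 1.0 - ss_res / ss_tot


# Synthetic timings that follow n*log(n) exactly (illustrative data only).
sizes = [1000, 2000, 4000, 8000]
times = [3e-8 * s * math.log(s) for s in sizes]

_, _, r2_linear = fit_line(sizes, times)
_, _, r2_nlogn = fit_line([s * math.log(s) for s in sizes], times)
_, slope, _ = fit_line([math.log(s) for s in sizes], [math.log(t) for t in times])
```

Over a limited range of $n$, both regressors fit well and the log–log slope of an $n\log n$ curve stays close to 1, which is why the two growth rates are hard to separate empirically.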

All artifacts followed a strict provenance protocol. A manifest

MANIFEST.csv enumerated every run by

, and optional per-instance

.mat files stored

for bit-level reproduction. Experiments were conducted in

Matlab R2025a on a laptop (Intel

® Core™ i9-14900HX, 2.20 GHz; Windows 11). Absolute times naturally depend on hardware and BLAS/JIT settings, but our seeded, deterministic protocol ensures that relative comparisons and scaling trends are stable across environments. See the

Supplementary Material (“Program_Result”) for the full experiment manifest, fixed seed list, and results for all experiments and figures.

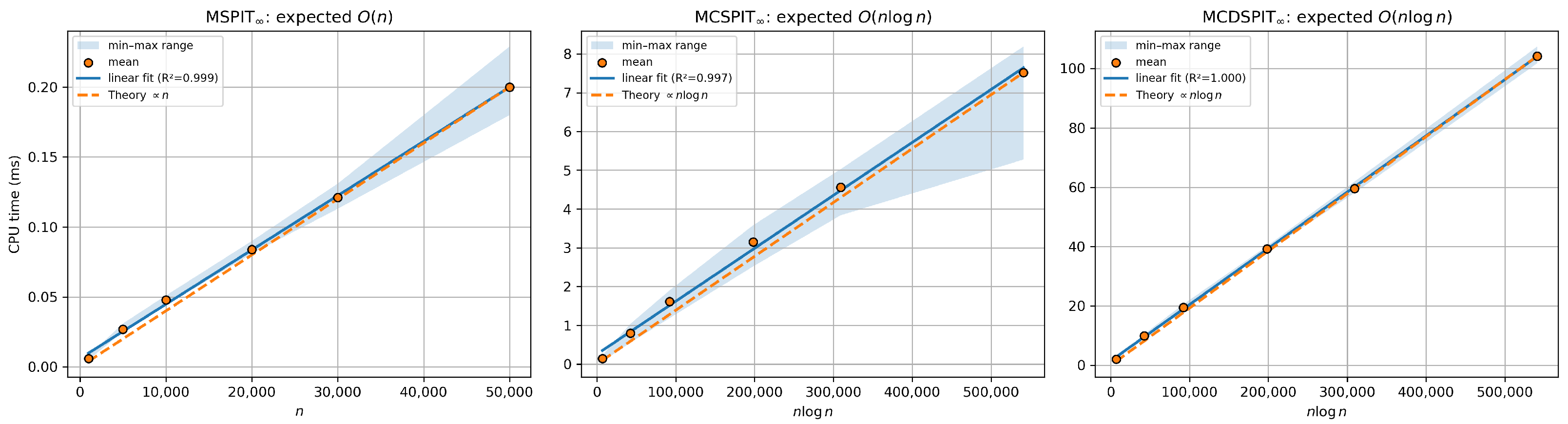

The results align with the intended complexity interpretations. Across a

increase in

n, MSPIT

∞ grows by

, MCSPIT

∞ by

, and MCDSPIT

∞ by

, indicative of near-linear behavior over our range. Least-squares fits achieve

throughout; the log–log slope is

for MSPIT

∞ and ≈1.00 for MCSPIT

∞ and MCDSPIT

∞, with the latter showing a mild finite-size preference for

in the parametric regressions (

Table 3). Together, these diagnostics suggest

versus

behavior in practice for the studied algorithms.

The empirical scaling trends are further visualized in Figure 2, where each panel reports the mean CPU time (dots), the min–max range across instances (shaded band), a least-squares linear fit (solid line with its $R^2$ value), and a reference curve proportional to the theoretical complexity (dashed line). The figure shows linear growth for MSPIT∞ and $O(n\log n)$ scaling for MCSPIT∞ and MCDSPIT∞.

Recent work studied related problems for the $l_1$ norm and Hamming distance. We do not report cross-norm runtime plots because changing the norm (for example, from the infinity norm to the $l_1$ norm or to Hamming distance) changes the problem class itself and leads to different algorithmic structures and complexity profiles. As is typical in combinatorial optimization, each norm induces its own objective geometry and constraints, which in turn invite specialized methods: for instance, decision-diagram dynamic programming for bottleneck-style objectives versus flow-based, knapsack-like, or budgeted-edit formulations for additive or Hamming-style objectives. Direct wall-clock comparisons across different norms would therefore mix modeling choices with implementation details and hardware/software constants and would not yield a fair like-for-like assessment. Our experiments instead focus on validating the predicted scaling behavior for the studied infinity-norm model using a deterministic and reproducible protocol.

Table 1 situates the related problem families and summarizes their typical algorithmic landscapes to make the scope of our comparisons explicit.

6. Conclusions and Further Research

Three interdiction problems on trees with the weighted $l_\infty$ norm were investigated in this study: the maximum shortest-path interdiction problem MSPIT∞, its corresponding minimum-cost shortest-path interdiction problem MCSPIT∞, and the double-interdiction variant MCDSPIT∞. The problems have wide applications in cybersecurity, logistics, and infrastructure resilience. For MSPIT∞, we presented a linear-time greedy algorithm. For MCSPIT∞, we developed an efficient $O(n\log n)$-time algorithm based on greedy selection and binary search. Building on this framework, we extended the methodology to handle the additional constraint in MCDSPIT∞ and proposed an algorithm with the same asymptotic complexity. Numerical experiments on synthetic instances confirmed the scalability and practical effectiveness of the proposed methods.

Further research is needed to design more advanced approaches, such as graph decomposition, that scale to extremely large graphs with millions of edges and nodes. It should also be noted that while our algorithms address the interdiction problems with the $l_\infty$ norm, they may not directly extend to other norms, a common challenge in combinatorial optimization, where tailored methods are often necessary. Nevertheless, the methodology developed in this work provides a foundation that can be adapted to related interdiction problems in alternative infinity-norm settings.

In summary, a comprehensive and efficient algorithmic framework for solving a class of critical interdiction problems on tree networks was established in this work, providing both theoretical guarantees and practical computational tools. Future research directions include extending the proposed models and algorithms to more general graph classes (e.g., series–parallel graphs or general directed acyclic graphs), as well as developing stochastic and robust variants where edge upgrade costs or capacity bounds are uncertain. Moreover, interdiction problems involving node upgrades, rather than edge upgrades, remain largely unexplored and present both theoretical and computational challenges. Finally, applying these models to real-world domains such as cybersecurity, logistics, and infrastructure resilience represents a promising avenue for empirical validation and practical impact.