1. Introduction

Remote sensing has emerged as a vital technology for observing and analyzing Earth’s surface, offering important insights for areas such as urban development, agriculture, environmental monitoring, and disaster response. Within this field, high-spatial resolution (HSR) imagery is particularly valuable due to its ability to capture detailed visual information about ground features. These images provide clear and close-up views of both natural landscapes and built environments, making them especially useful for studying human settlements, vegetation, water bodies, and various land cover types on large scales [1,2].

The value of HSR imagery lies in its practical applications, including land use classification, environmental change detection, and resource management. Despite its advantages, interpreting HSR images remains a significant challenge due to the complexity and diversity of the objects they depict. Ground elements in these images often exhibit a wide range of shapes, textures, and spectral properties. This complexity creates a growing demand for advanced and intelligent techniques that can effectively analyze and extract meaningful information from these detailed and information-rich images [3,4].

Visual Question Answering (VQA) requires generating accurate natural language answers to open-ended questions about images. While VQA has advanced significantly with natural images, its extension to remote sensing, known as Remote Sensing VQA (RSVQA), remains limited. Traditional methods rely on convolutional networks for visual feature extraction and recurrent networks for language processing, enabling basic image and text interaction but lacking strong reasoning and knowledge integration. As a result, early RSVQA systems struggle with complex contextual and relational analysis in high-resolution imagery, highlighting the need for more advanced knowledge-driven approaches [5,6,7].

The lack of interpretable reasoning in such systems presents a major challenge for RSVQA, particularly because high-spatial resolution remote sensing images are often complex and abstract. Properly understanding these images relies heavily on spatial relationships, domain-specific patterns, and multi-scale information. For example, answering questions about the number of residential buildings in a region, identifying crop types in agricultural zones, detecting flooded areas after heavy rainfall, or locating newly constructed roads requires not only recognizing features in the image but also reasoning across spatial patterns and interpreting the question in context. As a result, there is a strong need for models that not only generate accurate answers but also provide human-understandable explanations, improving transparency and reliability in geospatial decision-making [8,9].

Recent advances in large multimodal models (LMMs) such as Claude 3.7 Sonnet [10], Grok 3 [11], Gemini [12], GPT-4 [13], LLaMA 4 Scout [14], and DeepSeek-R1 [15] have significantly improved visual reasoning and cross-modal understanding by jointly processing visual and textual inputs, making them well suited for tasks that require interpreting images with natural language. In remote sensing, specialized models such as GeoChat [16], LHRSBot [17], and RSGPT [18] address domain-specific challenges in geospatial imagery and question answering. Approaches such as the Semantic Object Awareness (SOBA) framework integrate object-aware segmentation with hybrid attention mechanisms and a numerical difference loss to unify classification and regression tasks, achieving 78.14 percent accuracy on the EarthVQA dataset [1]. Other methods employ two-step fine-tuning with domain-adaptive pretraining and prompt-based adaptation to enable natural language answer generation without predefined categories, demonstrating strong performance on the RSVQAxBEN dataset [2]. Additionally, the MM-RSVQA model fuses multimodal, multi-resolution remote sensing imagery, including high-resolution RGB, multi-spectral, and SAR data, using a VisualBERT-based transformer and achieves 65.56 percent accuracy on the TAMMI dataset [19], highlighting the benefits of multimodal integration for remote sensing VQA.

Previous studies have improved accuracy and enhanced the fusion of diverse data sources, but they share key limitations. They lack interpretability, providing no clear explanation of how inputs and processing steps lead to predictions. They rely mainly on traditional metrics such as accuracy, precision, and recall, which do not fully capture the quality or trustworthiness of answers, especially for questions requiring deeper reasoning and contextual understanding. Evaluations typically focus only on the correctness of the final answer, ignoring the clarity and coherence of the reasoning process, which is critical for complex remote sensing tasks. Furthermore, robust evaluation frameworks that integrate automated metrics with human judgment are lacking, limiting the transparency and reliability of these models in real-world applications [20,21].

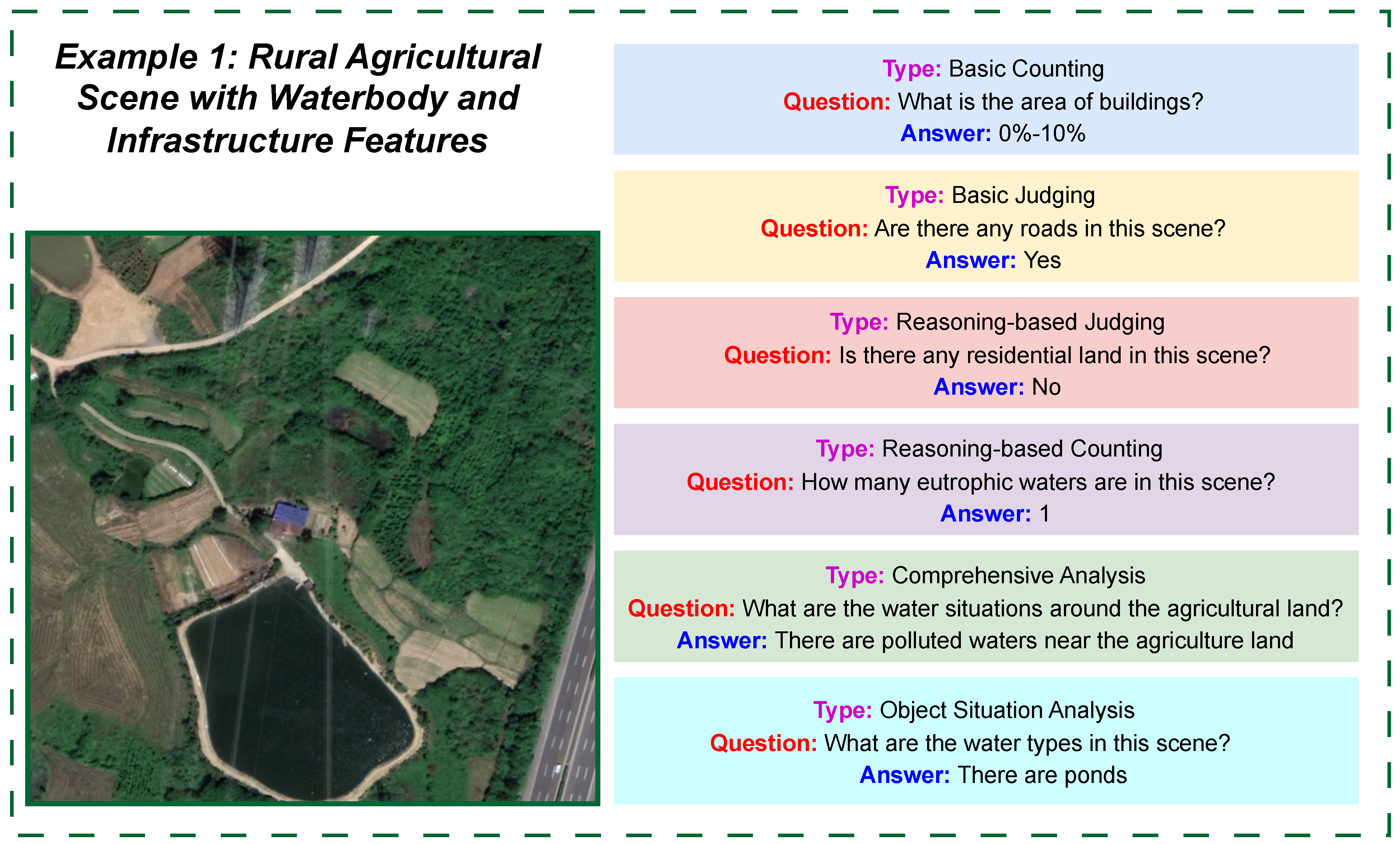

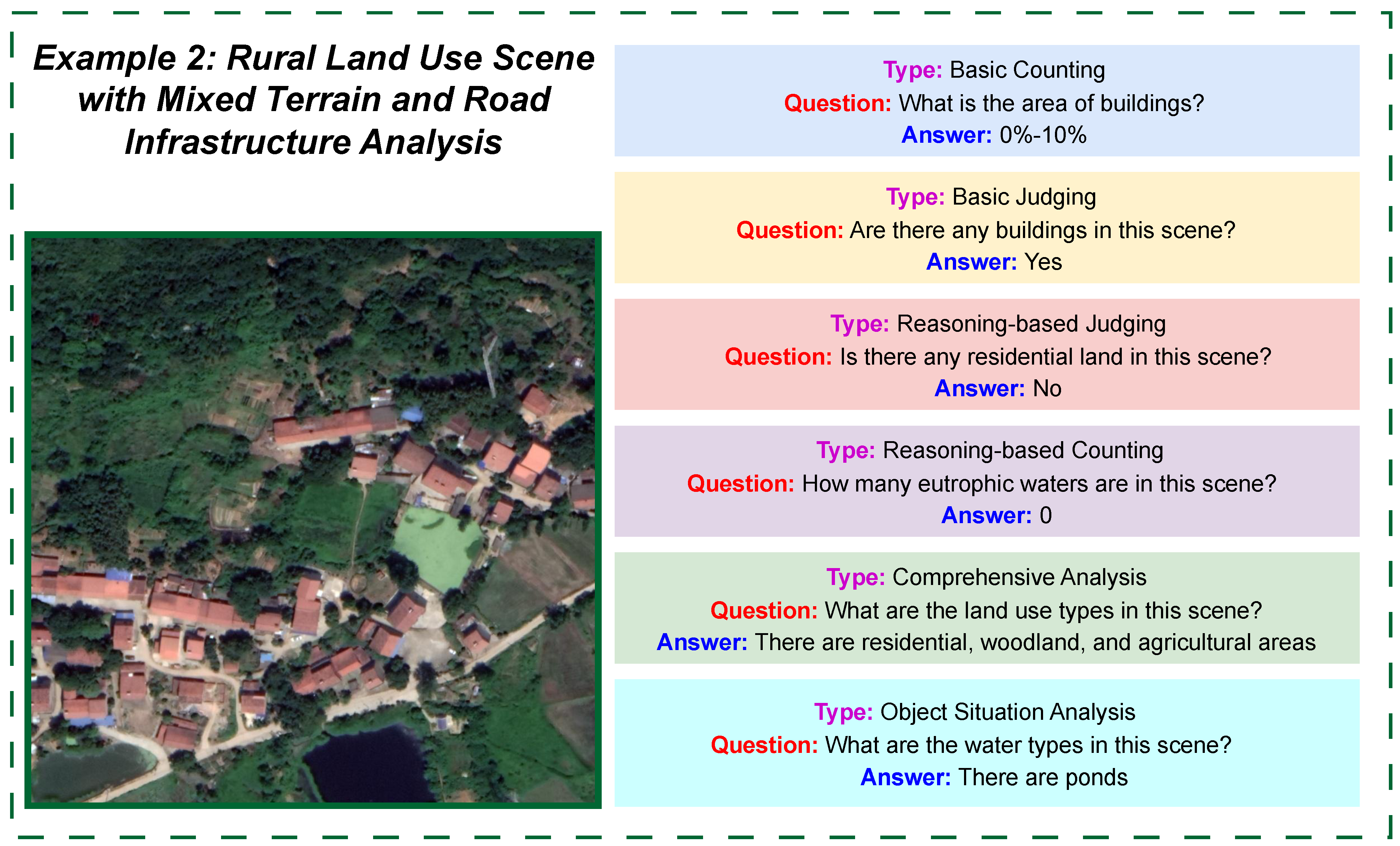

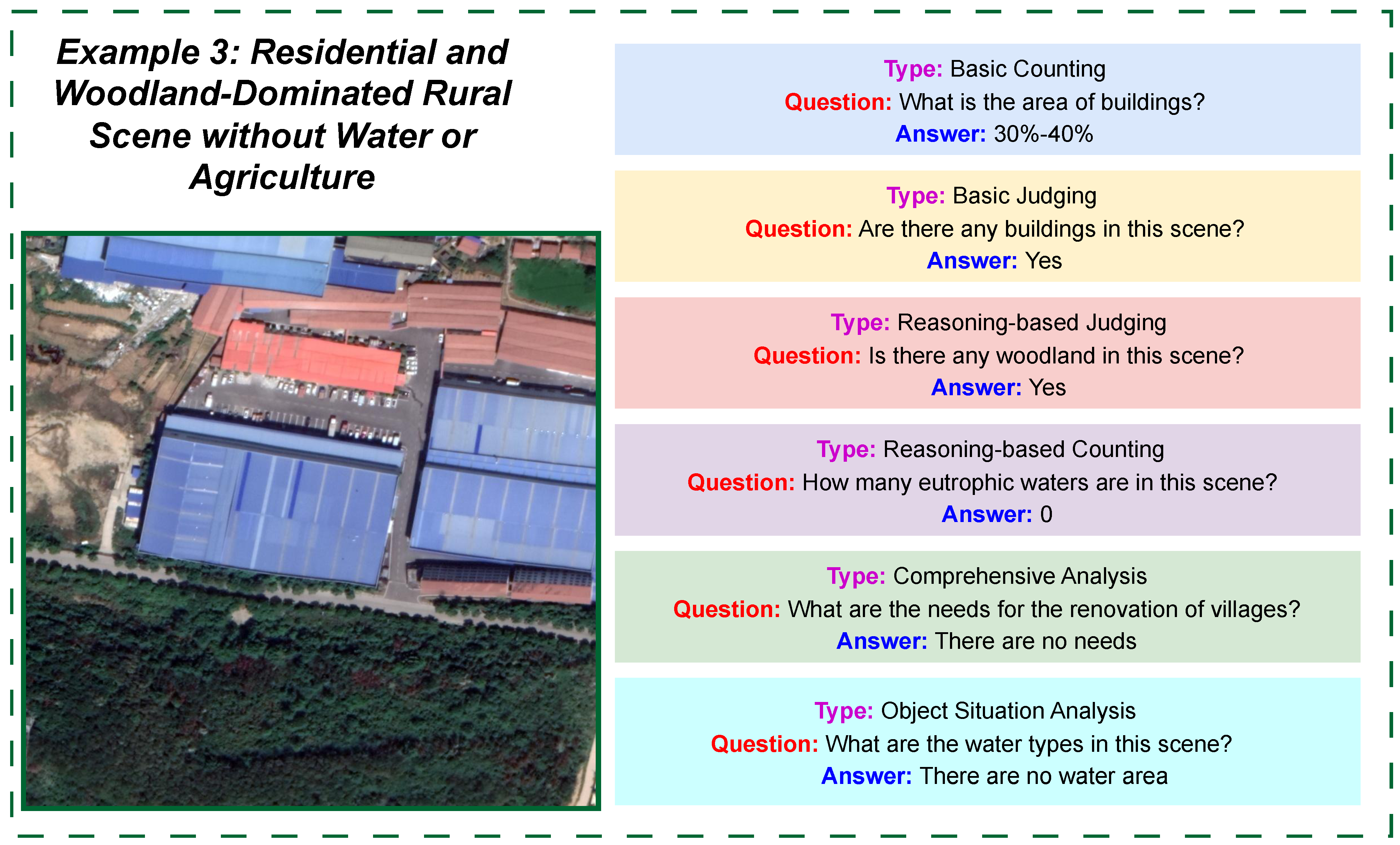

In response to these challenges, this study introduces three frameworks, Zero-GeoVision, CoT-GeoReason, and Self-GeoSense, which aim to enhance the reasoning abilities of LMMs in RSVQA without task-specific fine-tuning. Our experiments use the EarthVQA dataset, originally developed by another research group [1], which consists of 6000 high-resolution satellite images and 208,593 question–answer pairs. From this dataset, we selected a representative subset of 200 images (100 rural and 100 urban) together with their associated question–answer pairs (29 per rural image and 42 per urban image) for a focused evaluation.
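The selection procedure for the 200 images is not described here, so the sketch below is only an illustration under an assumption: a seeded random draw stratified by scene type, using a hypothetical `select_subset` helper over placeholder image IDs. It also makes the size of the evaluation set explicit: 100 × 29 + 100 × 42 = 7100 question–answer pairs.

```python
import random

# Question counts per image for the selected subset, as stated in the text.
QUESTIONS_PER_IMAGE = {"rural": 29, "urban": 42}

def select_subset(image_ids_by_type, n_per_type=100, seed=0):
    """Hypothetical stratified draw of n_per_type image IDs per scene type.

    Assumption: the paper reports only the 100 rural + 100 urban split,
    not how the images were chosen.
    """
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    return {
        scene: sorted(rng.sample(ids, n_per_type))
        for scene, ids in image_ids_by_type.items()
    }

def count_qa_pairs(subset):
    """Total question-answer pairs implied by the per-image counts."""
    return sum(len(ids) * QUESTIONS_PER_IMAGE[scene] for scene, ids in subset.items())

# Placeholder IDs, not real EarthVQA identifiers.
pool = {
    "rural": [f"rural_{i:04d}" for i in range(1000)],
    "urban": [f"urban_{i:04d}" for i in range(1000)],
}
subset = select_subset(pool)
print(len(subset["rural"]), len(subset["urban"]), count_qa_pairs(subset))  # 100 100 7100
```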

The Zero-GeoVision framework applies zero-shot prompting to draw direct answers from the pretrained knowledge of LMMs, serving as a baseline. The CoT-GeoReason framework introduces chain-of-thought prompting, guiding models step by step through feature detection, spatial analysis, and answer synthesis to improve reasoning transparency. Building upon this, the Self-GeoSense framework incorporates self-consistency by generating five independent reasoning chains per question and combining their outputs through majority voting, thereby improving robustness against ambiguous or complex inputs.

This study makes the following contributions to the field of remote sensing visual question answering:

Introduced three task-specific frameworks—Zero-GeoVision, CoT-GeoReason, and Self-GeoSense—which employed zero-shot prompting, chain-of-thought reasoning, and self-consistency, respectively, to improve LMMs’ performance without fine-tuning.

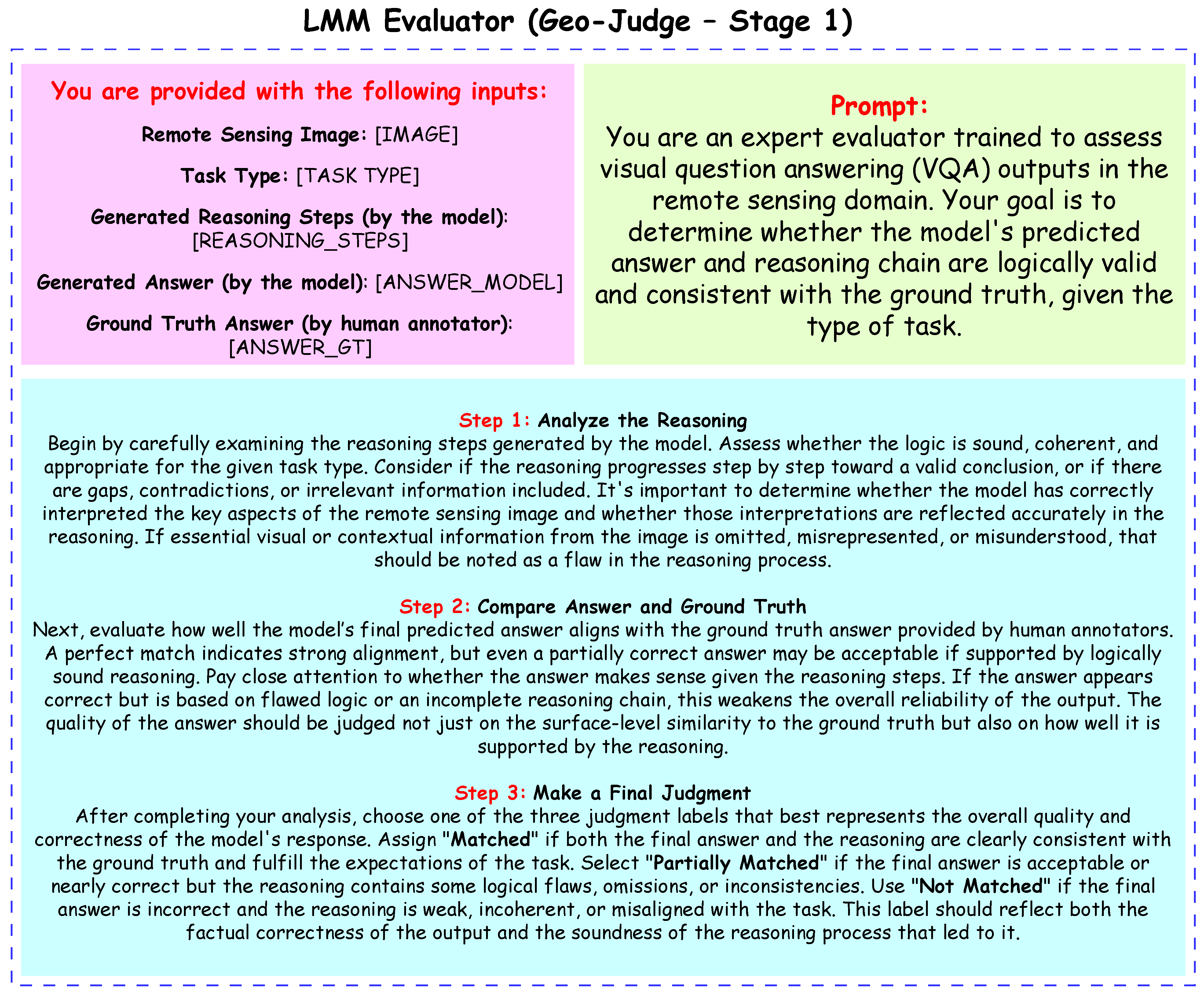

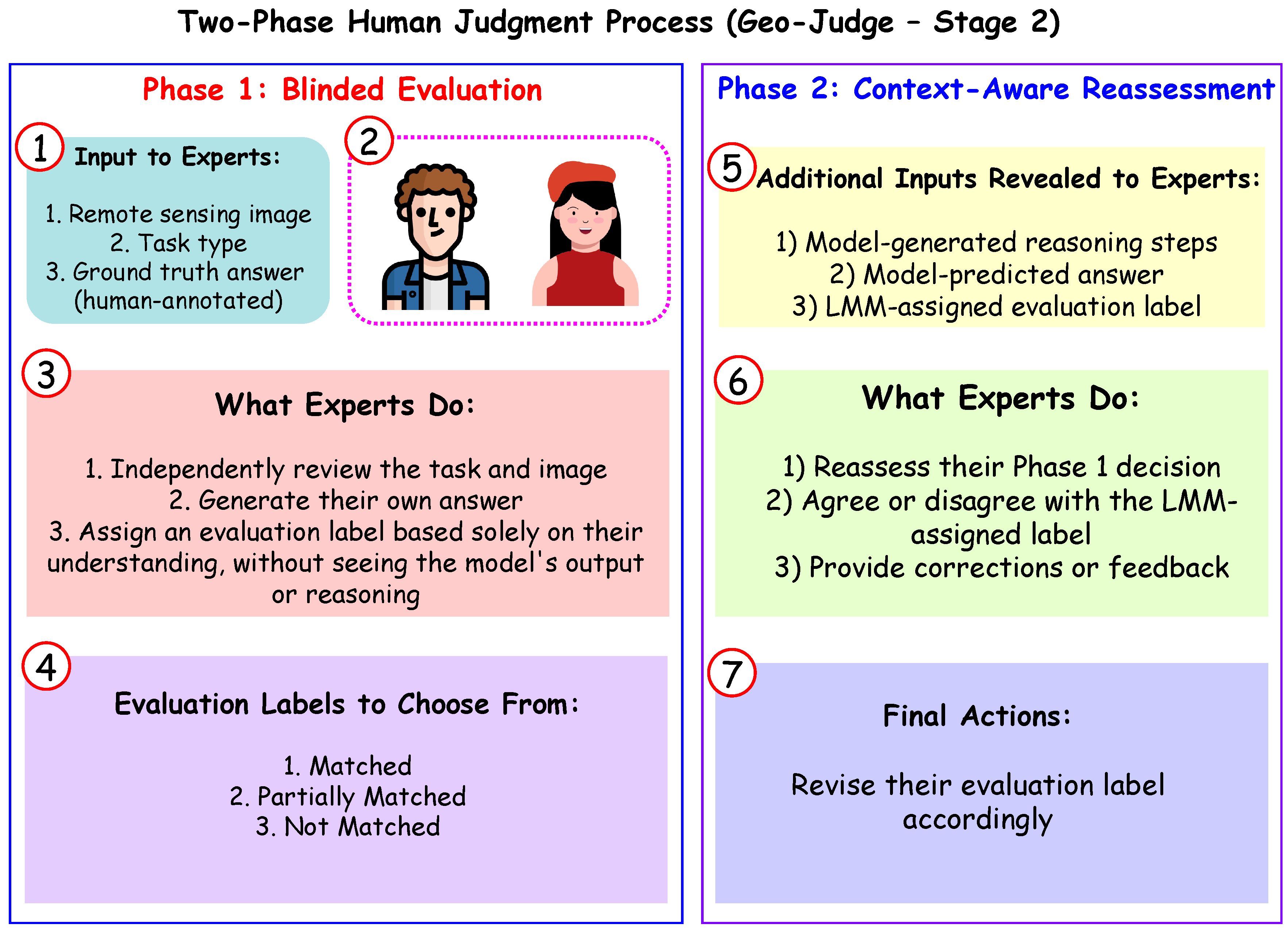

Proposed an LMM Judge that automatically evaluated model outputs for remote sensing tasks. It assessed both the final answer and reasoning chain, assigning one of three labels (Matched, Partially Matched, or Not Matched) to support the computation of strict accuracy metrics while enhancing interpretability.

Demonstrated that the Self-GeoSense framework, especially with Grok 3, outperformed the existing Semantic Object Awareness (SOBA) framework baseline across all tasks, showing the effectiveness of self-consistency and structured reasoning in complex RSVQA tasks.

Integrated human expert evaluations to align model outputs with domain knowledge, addressing the limitations of purely automated metrics and enhancing the trustworthiness of the results.
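As a sketch of how the judge's three labels can feed an accuracy figure, the snippet below assumes that "strict" accuracy counts only Matched as correct. The `weighted_accuracy` variant, which gives Partially Matched a partial credit, is a hypothetical contrast added for illustration and is not described as part of the authors' method.

```python
from collections import Counter
from enum import Enum

class JudgeLabel(Enum):
    MATCHED = "Matched"
    PARTIALLY_MATCHED = "Partially Matched"
    NOT_MATCHED = "Not Matched"

def strict_accuracy(labels):
    """Assumed strict rule: only Matched verdicts count as correct."""
    if not labels:
        raise ValueError("no judge labels")
    return sum(label is JudgeLabel.MATCHED for label in labels) / len(labels)

def weighted_accuracy(labels, partial_credit=0.5):
    """Hypothetical lenient variant: Partially Matched earns partial_credit."""
    if not labels:
        raise ValueError("no judge labels")
    counts = Counter(labels)
    score = counts[JudgeLabel.MATCHED] + partial_credit * counts[JudgeLabel.PARTIALLY_MATCHED]
    return score / len(labels)

verdicts = [JudgeLabel.MATCHED, JudgeLabel.PARTIALLY_MATCHED,
            JudgeLabel.MATCHED, JudgeLabel.NOT_MATCHED]
print(strict_accuracy(verdicts), weighted_accuracy(verdicts))  # 0.5 0.625
```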

The paper is organized as follows:

Section 2 reviews prior work on task-specific deep learning and vision-language model-based approaches for RSVQA, highlighting their strengths and limitations.

Section 3 describes the EarthVQA dataset and evaluation metrics such as accuracy, RMSE, and the Geo-Judge framework, forming the foundation for the experiments.

Section 4 details the proposed frameworks, Zero-GeoVision, CoT-GeoReason, and Self-GeoSense, including their task-specific prompt designs and inference processes.

Section 5 presents a detailed task-wise comparison of these frameworks against the SOBA baseline, analyzing the effects of different reasoning strategies and evaluation methods.

Section 6 evaluates the computational cost and efficiency of the proposed frameworks, reporting the estimated token usage, average inference cost, and their implications for real-time and resource-constrained applications.

Section 7 discusses the limitations of the proposed frameworks, including dataset biases and dependence on human evaluations.

Section 8 proposes future research directions, such as optimizing computational efficiency, expanding dataset diversity, and reducing reliance on human evaluations.

Finally, Section 9 summarizes the key findings, emphasizing the effectiveness of the proposed frameworks and their implications for RSVQA research.

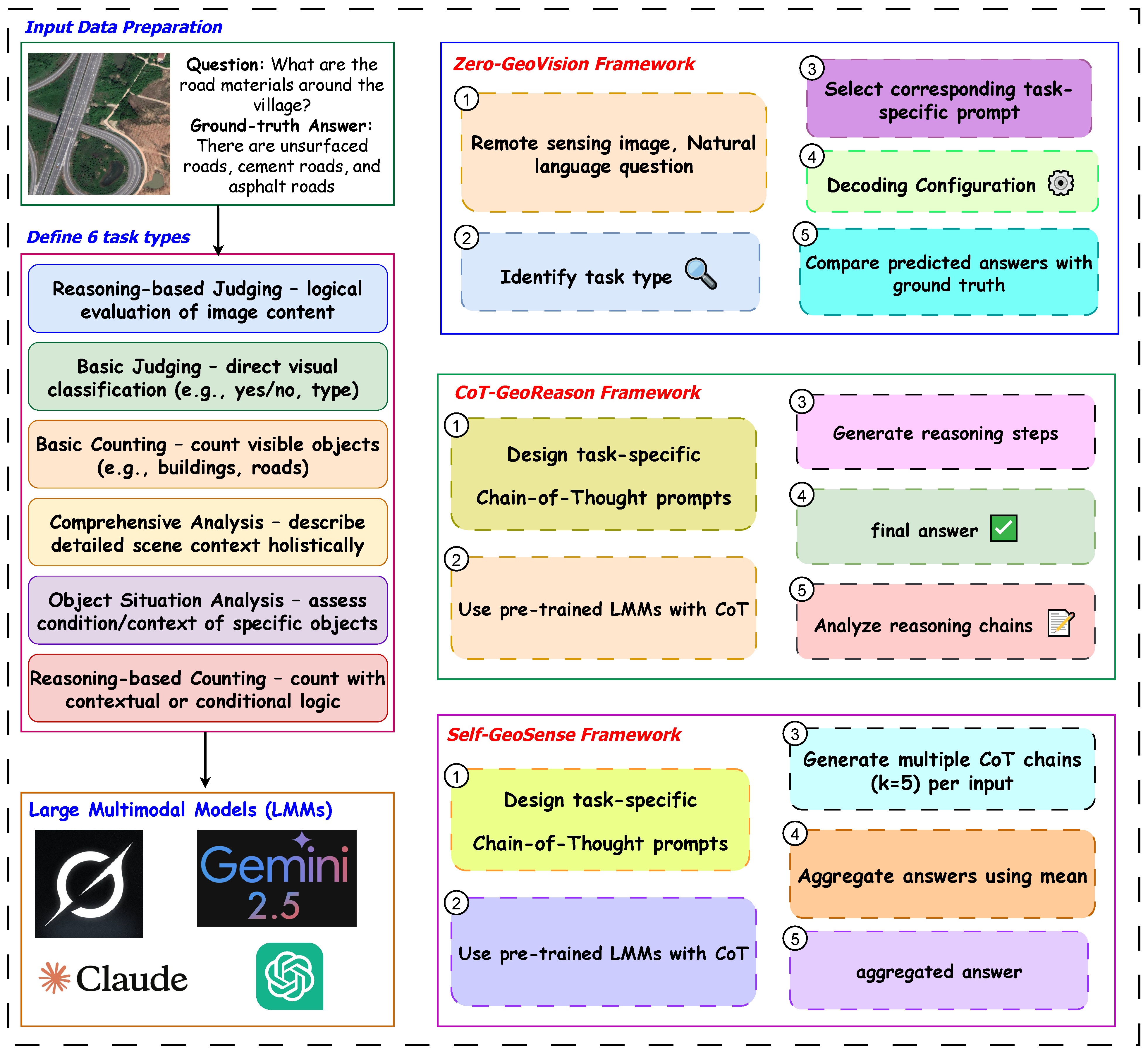

4. Proposed Methodology

In this study, we analyze high-spatial resolution remote sensing images to assess residential environments, transportation infrastructure, and renovation needs for water bodies and unsurfaced roads. The overall end-to-end process flow for this analysis is illustrated in Figure 6. We propose three frameworks integrated with LMMs:

Zero-GeoVision, which uses zero-shot prompting [13,32] for direct image interpretation;

CoT-GeoReason, which applies chain-of-thought prompting [33] to support detailed step-by-step reasoning; and

Self-GeoSense, which extends this approach with self-consistency, sampling several independent reasoning chains and aggregating their answers to validate the analysis.

4.1. Zero-GeoVision Framework

The Zero-GeoVision framework leverages the pretrained knowledge embedded within LMMs to perform remote sensing image analysis without any task-specific fine-tuning or additional training. It operates in a zero-shot setting by constructing tailored prompts that guide the model to interpret the input image and question directly based on its general understanding of visual and textual data.

In a zero-shot learning setup, model inference relies solely on prior knowledge, without any gradient updates. Formally, the predicted answer $\hat{A}$ is obtained as follows:

$$\hat{A} = M(I, T, Q, P_t)$$

where $M$ is the pretrained LMM with parameters $\theta$, $I$ is the input image, $T$ is the image type, $Q$ is the question, and $P_t$ is the task-specific prompt for task type $t$. No model parameters are updated, i.e.,

$$\Delta\theta = 0,$$

indicating zero gradient descent on the model weights $\theta$. The model responds based on its internal representations learned during pretraining.

For each task type defined in the previous section, a unique prompt template is carefully designed to emphasize the particular reasoning or analysis required. These prompts incorporate the remote sensing image I, its type T, and the question Q, explicitly instructing the model on the expected form of the response. By customizing prompts according to task characteristics, the framework maximizes the LMM’s ability to generalize across diverse remote sensing scenarios.

During inference, we adopt a consistent set of decoding parameters to ensure stable and diverse response generation. Specifically, the generation is performed using a temperature of 0.7 to balance creativity and precision, a nucleus sampling probability (top-p) of 0.9 to filter unlikely tokens, a top-k sampling value of 5 to limit token choices at each step, and a maximum token length of 512. No task-specific tuning or gradient updates are applied to the model weights, keeping the evaluation fully in the zero-shot regime. These configurations help maintain consistency while allowing the model’s pretrained reasoning capabilities to handle a wide range of question types across different remote sensing tasks.
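To make the role of these decoding parameters concrete, the sketch below applies temperature scaling, top-k truncation, and nucleus (top-p) truncation to one vector of next-token logits. It assumes one common ordering (temperature, then top-k, then top-p on the renormalized top-k set). How each commercial API applies or exposes these parameters internally is not specified in the paper and may differ. The logits are made up for illustration.

```python
import math
import random

def filter_distribution(logits, temperature=0.7, top_k=5, top_p=0.9):
    """Return {token_index: probability} after temperature, top-k and top-p filtering."""
    # Temperature: divide logits before the softmax; T < 1 sharpens the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]

    # Top-k: keep only the k highest-scoring tokens, then renormalize.
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)[:top_k]
    total = sum(weights[i] for i in ranked)
    probs = {i: weights[i] / total for i in ranked}

    # Top-p: keep the smallest high-probability prefix whose mass reaches top_p.
    kept, mass = [], 0.0
    for i in ranked:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    z = sum(probs[i] for i in kept)
    return {i: probs[i] / z for i in kept}

def sample_token(logits, rng, **decoding):
    """Draw one token index from the filtered distribution."""
    dist = filter_distribution(logits, **decoding)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]

# Illustrative logits for six candidate tokens (not real model output).
logits = [2.0, 1.0, 0.5, 0.1, -1.0, -2.0]
dist = filter_distribution(logits, temperature=0.7, top_k=5, top_p=0.9)
print(sorted(dist))  # [0, 1, 2]
print(sample_token(logits, random.Random(42), temperature=0.7, top_k=5, top_p=0.9))
```

With these Zero-GeoVision settings, only three of the six illustrative tokens remain eligible. Lowering `temperature`, `top_k`, or `top_p` narrows the candidate set further.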

Steps for Applying the Zero-GeoVision framework:

Input Preparation: Each data instance is represented as a tuple $(I, T, Q, A)$, where I is the raw remote sensing image, T denotes the image type (e.g., optical or SAR), Q is the question to be answered, and A is the ground-truth answer. The image is processed and encoded in its native format, while the type and question are formatted as natural language text.

Task-Specific Prompt Design: Distinct prompt templates $P_t$ are created for each task type $t$. Some examples include the following:

Prompting Guideline for Bas Ju: Identify and classify based on straightforward visual cues.

Prompting Guideline for Rel Ju: Evaluate the image features logically to make a reasoned decision.

Prompting Guideline for Bas Co: Count specific objects visible in the image.

Prompting Guideline for Rel Co: Combine object detection with contextual reasoning to refine counting results.

Prompting Guideline for Obj An: Focus on the condition or contextual state of particular objects.

Prompting Guideline for Com An: Describe multiple aspects of the image in detail.

These tailored prompts help the LMM focus its reasoning and interpretation aligned with the demands of each task.

Model Inference: The LMM takes the remote sensing image, its type, the associated question, and the task-specific prompt as input and maps this combination directly to a single predicted answer, without requesting intermediate reasoning steps.
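The three steps can be sketched as a small pipeline. The template wording below is a hypothetical condensation of the guidelines listed above, not the authors' prompts (their designs are shown in Figures 7 to 9). `lmm` is an assumed callable that would send an image and a prompt to a provider API and return text; no real SDK call is shown.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical one-line templates condensed from the prompting guidelines above.
ZERO_SHOT_GUIDELINES = {
    "Bas Ju": "Identify and classify based on straightforward visual cues.",
    "Rel Ju": "Evaluate the image features logically to make a reasoned decision.",
    "Bas Co": "Count specific objects visible in the image.",
    "Rel Co": "Combine object detection with contextual reasoning to refine counting results.",
    "Obj An": "Focus on the condition or contextual state of particular objects.",
    "Com An": "Describe multiple aspects of the image in detail.",
}

@dataclass
class Instance:
    image: bytes      # I: encoded remote sensing image
    image_type: str   # T: image type
    question: str     # Q: natural language question
    answer: str       # A: ground truth, used only at evaluation time
    task: str         # t: task type key, e.g. "Bas Ju"

# Assumed interface: any function that sends (image, prompt) to an LMM and returns its text.
LMMCall = Callable[[bytes, str], str]

def build_zero_shot_prompt(inst: Instance) -> str:
    """Assemble a prompt P_t from the task guideline, image type and question."""
    return (
        f"Image type: {inst.image_type}\n"
        f"Guideline: {ZERO_SHOT_GUIDELINES[inst.task]}\n"
        f"Question: {inst.question}\n"
        "Reply with the final answer only."
    )

def zero_geovision(inst: Instance, lmm: LMMCall) -> str:
    """One forward query per instance; no reasoning steps and no weight updates."""
    return lmm(inst.image, build_zero_shot_prompt(inst)).strip()

# Usage with a stub in place of a real provider client.
example = Instance(b"", "urban", "Are there any roads in this scene?", "Yes", "Bas Ju")
print(zero_geovision(example, lambda image, prompt: " Yes\n"))  # Yes
```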

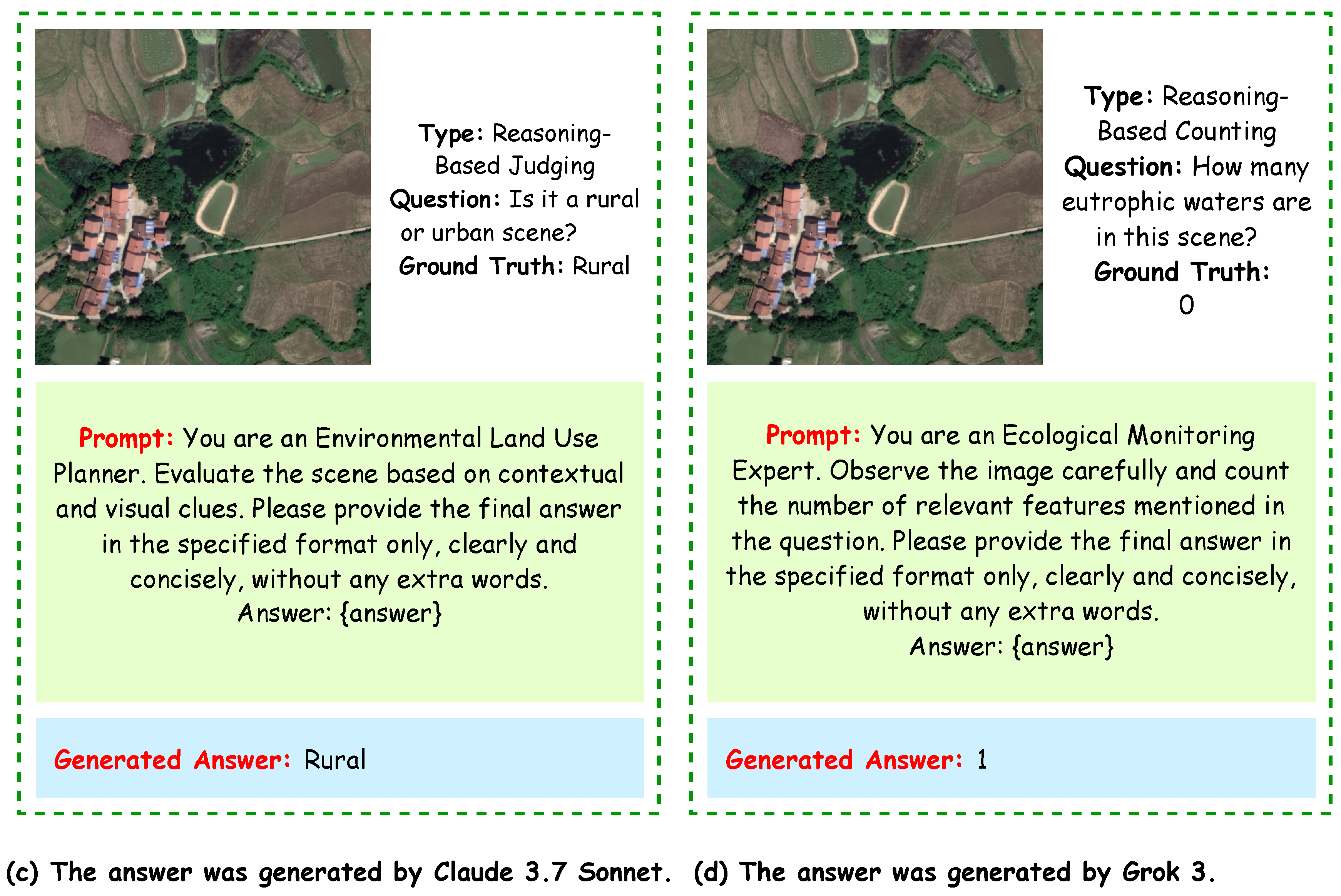

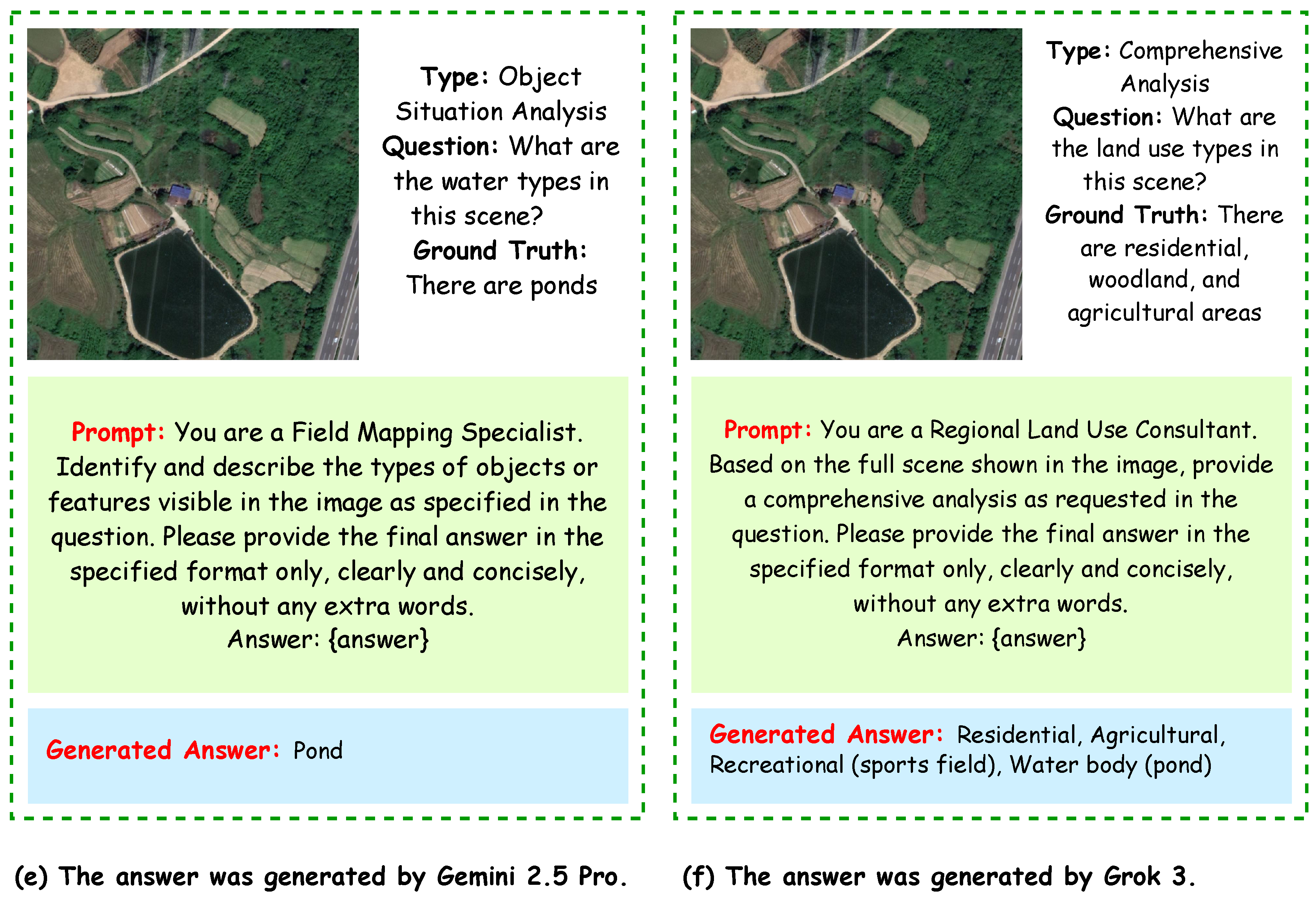

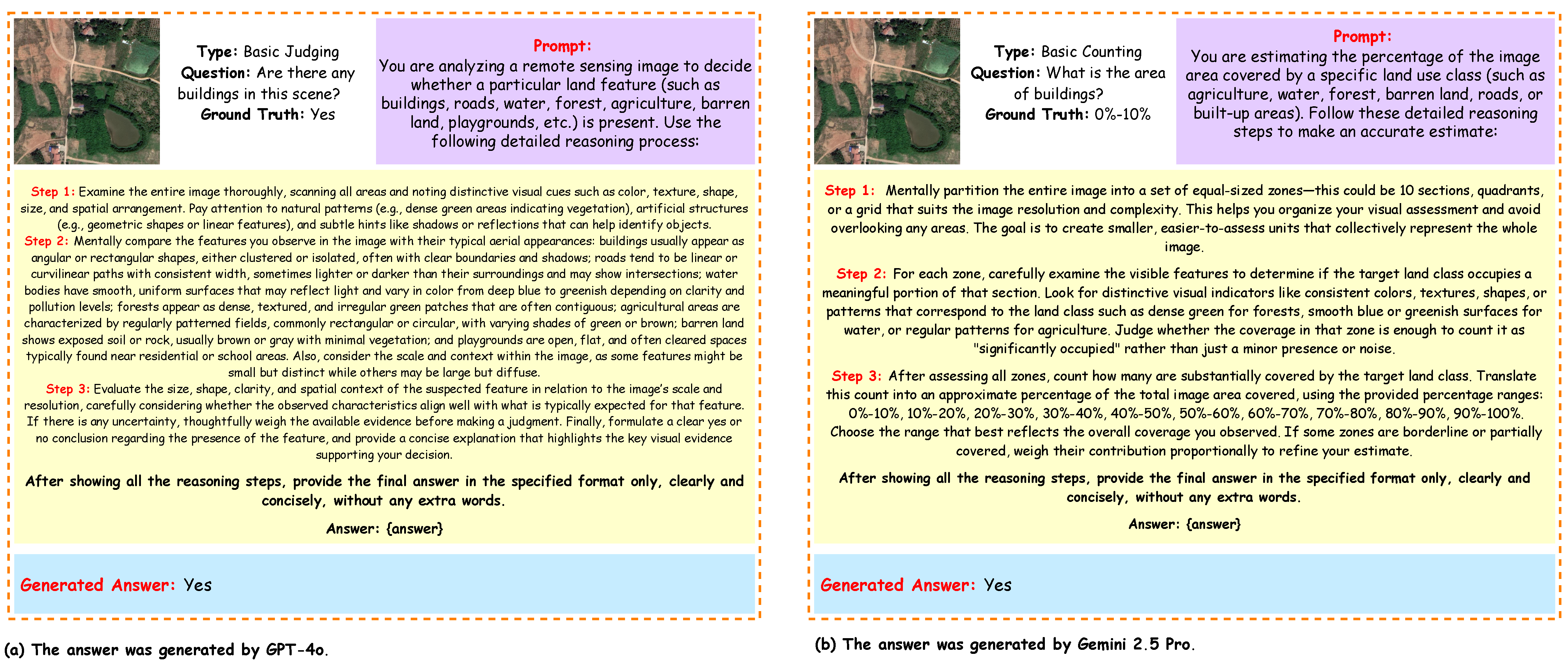

The Zero-GeoVision framework employs task-specific prompt designs tailored to each task type, which guide the LMM in interpreting the remote sensing image and question to generate accurate zero-shot responses. Figures 7, 8 and 9 illustrate these prompt designs for the six task types, grouped in pairs.

We evaluated four LMMs with the Zero-GeoVision framework: GPT-4o [13], Grok 3 [11], Gemini 2.5 Pro [12], and Claude 3.7 Sonnet [10]. We measured Accuracy for all task types and RMSE for the counting-related tasks. Unlike our reasoning-based frameworks, the Zero-GeoVision evaluation relied solely on basic zero-shot prompting without any explicit reasoning steps, so the results reflect model capabilities under straightforward prompt conditions. A summary of the evaluation results by task type and model is presented in Table 3.
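For reference, the two metrics can be sketched as follows. The exact answer normalization used for EarthVQA is not stated in this section, so the case- and whitespace-insensitive exact match in `normalise` is an assumption. RMSE is the standard root-mean-square error over predicted and ground-truth counts.

```python
import math

def normalise(answer: str) -> str:
    """Assumed normalization: case- and whitespace-insensitive comparison."""
    return " ".join(answer.strip().lower().split())

def accuracy(predictions, references):
    """Fraction of predictions that exactly match the reference after normalization."""
    if len(predictions) != len(references) or not predictions:
        raise ValueError("need equally long, non-empty lists")
    hits = sum(normalise(p) == normalise(r) for p, r in zip(predictions, references))
    return hits / len(predictions)

def rmse(predicted_counts, true_counts):
    """Root-mean-square error between predicted and ground-truth counts."""
    if len(predicted_counts) != len(true_counts) or not predicted_counts:
        raise ValueError("need equally long, non-empty lists")
    squared = [(p - t) ** 2 for p, t in zip(predicted_counts, true_counts)]
    return math.sqrt(sum(squared) / len(squared))

print(accuracy(["Yes", " no "], ["yes", "Yes"]))  # 0.5
print(rmse([3, 5, 2], [3, 3, 2]))
```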

4.2. CoT-GeoReason Framework

The CoT-GeoReason framework builds upon zero-shot capabilities by employing chain-of-thought (CoT) prompting to guide LMMs in performing structured, step-by-step reasoning without any task-specific training or fine-tuning. This approach leverages the model’s inherent general knowledge and reasoning abilities by prompting it to articulate intermediate reasoning steps before producing the final answer. For each task type, carefully crafted CoT prompts emphasize the specific reasoning process required, enabling the model to handle complex remote sensing questions more effectively.

In this framework, rather than producing a direct answer, the model is encouraged to generate a sequence of intermediate reasoning steps $R = (r_1, r_2, \ldots, r_n)$, followed by a final answer $\hat{A}$. This process can be formally modeled as the following factorized conditional generation:

$$P(R, \hat{A} \mid I, T, Q; M) = \left[\prod_{i=1}^{n} P\left(r_i \mid r_{<i}, I, T, Q; M\right)\right] \cdot P\left(\hat{A} \mid R, I, T, Q; M\right)$$

Here, the following hold:

$I$ is the input image.

$T$ is the image type (e.g., optical, SAR).

$Q$ is the natural language question.

$M$ is the fixed pretrained LMM with parameters $\theta$.

$r_i$ is the $i$-th reasoning step.

$r_{<i} = (r_1, \ldots, r_{i-1})$, and $R = (r_1, \ldots, r_n)$ is the complete sequence of reasoning steps.

No gradient updates are applied to the model parameters during inference:

$$\Delta\theta = 0$$

This enforces the zero-shot inference constraint while still enabling complex reasoning chains through prompt engineering.

During inference, decoding parameters are configured to balance output quality and diversity. We use a temperature of 0.6 to promote focused yet flexible responses, a nucleus sampling (top-p) value of 0.85 to restrict token selection to likely candidates, and a top-k value of 10 to limit token choices at each step. The maximum token length is set to 768 to allow detailed reasoning while maintaining computational efficiency. This zero-shot CoT prompting framework enables comprehensive reasoning over diverse remote sensing tasks without additional model training.

Steps for Applying the CoT-GeoReason Framework:

Input Preparation: As in the zero-shot framework, prepare the input tuple $(I, T, Q, A)$, ensuring the image I, type T, and question Q are formatted appropriately for the LMM.

Task-Specific CoT Prompt Design: Construct a prompt that instructs the model to follow a step-by-step reasoning process as follows:

Prompting Strategy for Rel Ju: Identify relevant image features, analyze their implications, and make a reasoned judgment.

Prompting Strategy for Bas Ju: Identify key visual characteristics and directly link them to a judgment.

Prompting Strategy for Bas Co: Detect objects and systematically count them.

Prompting Strategy for Com An: Segment the image, describe each region, and synthesize a comprehensive response.

Prompting Strategy for Obj An: Identify objects and analyze their condition or context.

Prompting Strategy for Rel Co: Combine object detection with reasoning about contextual factors to produce a count.

Model Inference with Reasoning Steps: The LMM generates a sequence of reasoning steps $R = (r_1, \ldots, r_n)$, culminating in the predicted answer $\hat{A}$. The overall reasoning flow can be viewed as follows:

$$\left(I, T, Q, P_t^{\text{CoT}}\right) \xrightarrow{\;M\;} r_1 \rightarrow r_2 \rightarrow \cdots \rightarrow r_n \rightarrow \hat{A}$$

where $P_t^{\text{CoT}}$ is the chain-of-thought prompt designed for task type $t$.

Output Evaluation: Compare $\hat{A}$ with the ground-truth answer A to evaluate performance. The generated reasoning chain provides insights into the model’s internal reasoning and decision-making process.

By applying task-specific CoT prompts, the CoT-GeoReason framework ensures that the reasoning process aligns with the demands of each task type, enabling a comparative analysis of performance across the six tasks.
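A minimal sketch of how a CoT-GeoReason prompt might be built and its output split into reasoning steps and a final answer. The strategy strings are abbreviated from the list above. The requested output format ("⇒"-separated steps ending in "Answer: ...") mirrors the example chains in the Self-GeoSense section, but it is an assumption about the authors' prompts. `build_cot_prompt` and `parse_chain` are hypothetical helpers.

```python
import re

# Abbreviated CoT strategies from the list above (hypothetical wording, not the authors' prompts).
COT_STRATEGIES = {
    "Bas Ju": "Identify key visual characteristics and directly link them to a judgment.",
    "Rel Ju": "Identify relevant image features, analyze their implications, and make a reasoned judgment.",
    "Bas Co": "Detect objects and systematically count them.",
    "Rel Co": "Combine object detection with reasoning about contextual factors to produce a count.",
    "Obj An": "Identify objects and analyze their condition or context.",
    "Com An": "Segment the image, describe each region, and synthesize a comprehensive response.",
}

# Final segment looks like "Answer: Yes." -> capture "Yes" (a trailing period is dropped).
ANSWER_RE = re.compile(r"Answer:\s*(.+?)\s*\.?\s*$")

def build_cot_prompt(task: str, image_type: str, question: str) -> str:
    """Hypothetical P_t^CoT: task strategy plus an assumed step-by-step output format."""
    return (
        f"Image type: {image_type}\n"
        f"Question: {question}\n"
        f"Strategy: {COT_STRATEGIES[task]}\n"
        "Reason step by step, separating steps with ' ⇒ ', "
        "and end with 'Answer: <answer>'."
    )

def parse_chain(text: str) -> tuple[list[str], str]:
    """Split model output into reasoning steps R = (r_1, ..., r_n) and the answer A_hat."""
    parts = [part.strip() for part in text.split("⇒") if part.strip()]
    if not parts:
        raise ValueError("empty reasoning chain")
    match = ANSWER_RE.search(parts[-1])
    if match is None:
        raise ValueError("no 'Answer:' segment at the end of the chain")
    return parts[:-1], match.group(1)

steps, answer = parse_chain(
    "Observe the image for linear features ⇒ Linear patterns detected, resembling paved "
    "surfaces ⇒ No vegetation covering these lines ⇒ Conclude roads are present ⇒ Answer: Yes."
)
print(len(steps), answer)  # 4 Yes
```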

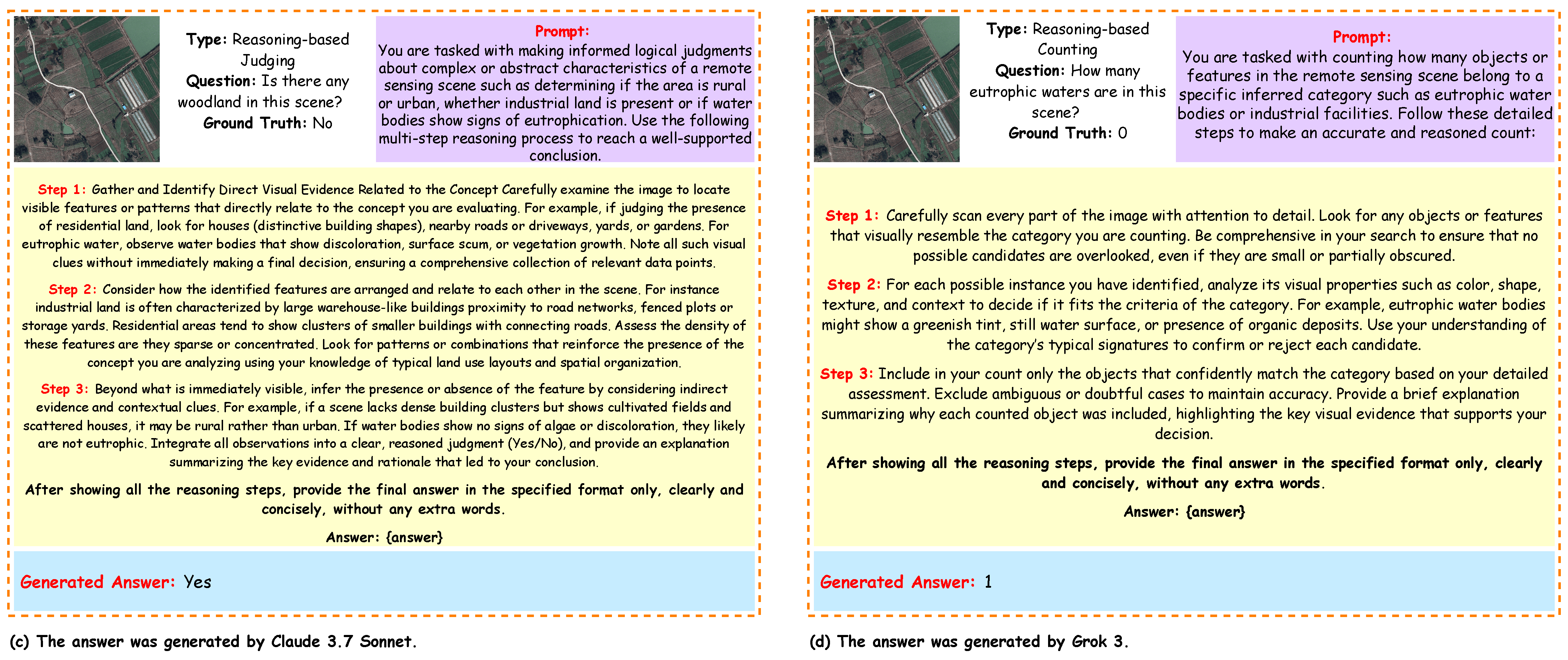

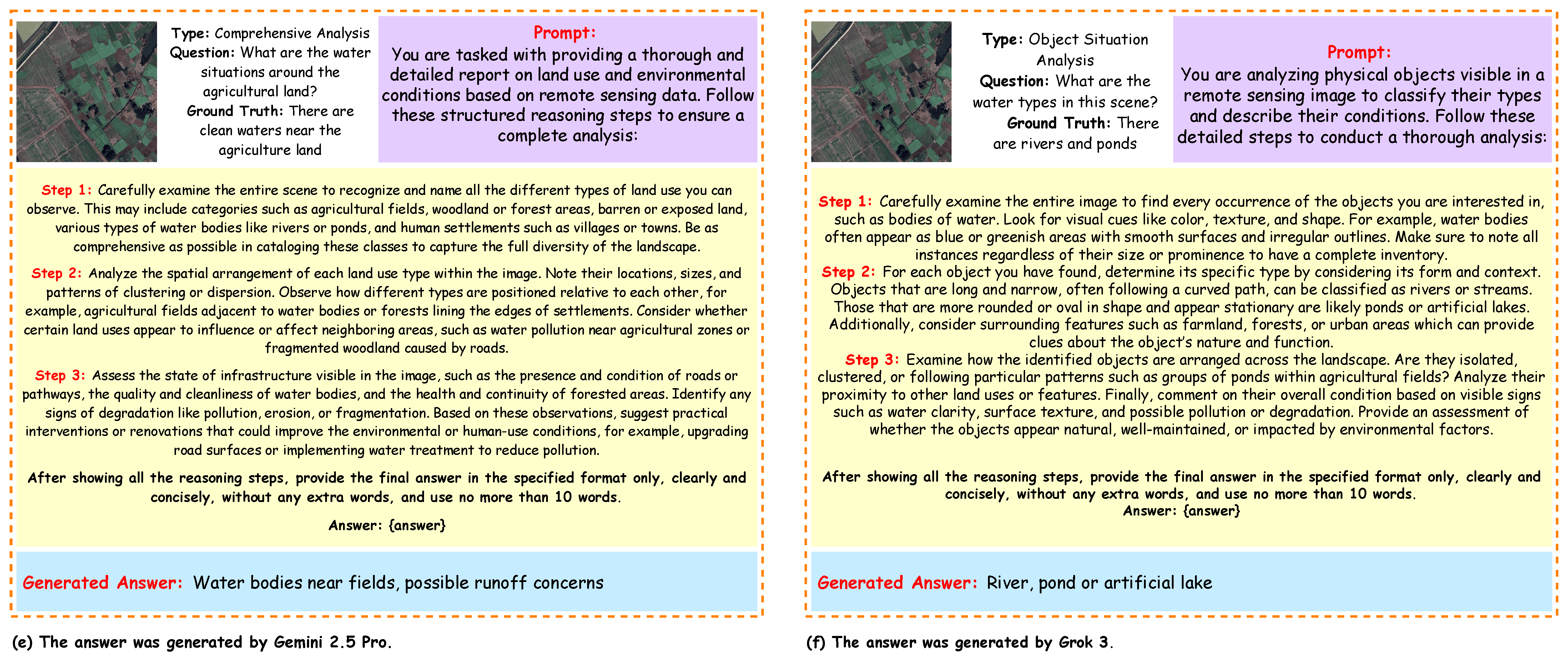

The CoT-GeoReason framework employs task-specific prompt designs tailored to each task type, which guide the LMM in interpreting the remote sensing image and question through chain-of-thought reasoning to generate accurate responses. Figures 10, 11 and 12 illustrate these prompt designs for the six task types, grouped in pairs. The performance of the LMMs within the CoT-GeoReason framework is summarized in Table 4.

We applied the CoT-GeoReason framework to evaluate four LMMs: GPT-4o, Grok 3, Gemini 2.5 Pro, and Claude 3.7 Sonnet. We measured Accuracy across all task types and RMSE for the counting-related tasks. For Basic Judging and Basic Counting, Accuracy was calculated directly by comparing model outputs against objective ground-truth labels. For the more complex tasks, namely Reasoning-Based Judging, Comprehensive Analysis, and Object Situation Analysis, Accuracy instead incorporates a semantic evaluation of the model's reasoning, conducted through a combination of human expert judgment and an LMM-as-a-judge approach. This captures subtle, context-dependent aspects of model performance that automatic metrics alone do not reflect. Detailed results broken down by task type and model are presented in Table 4.

4.3. Self-GeoSense Framework

The Self-GeoSense framework extends the CoT-GeoReason framework by generating multiple independent chains of reasoning for each input and then aggregating these outputs to produce a more reliable and robust final answer. This Self-Consistency with Chain of Thought (CoT-SC) approach effectively reduces variability in LMM outputs, which is especially important for complex remote sensing tasks where image interpretation can be ambiguous. By leveraging the same task-specific CoT prompts as the CoT-GeoReason framework, Self-GeoSense improves the consistency of results through the generation and integration of multiple reasoning paths for each task type.

Example:

| Task Type: | Basic Judging |
| --- | --- |
| Question: | Are there any roads in this scene? |
| Correct Answer: | Yes |

Example Reasoning Chains:

Chain 1: Observe the image for linear features ⇒ Linear patterns detected, resembling paved surfaces ⇒ No vegetation covering these lines ⇒ Conclude roads are present ⇒ Answer: Yes.

Chain 2: Identify straight or curved lines in the image ⇒ Lines appear man-made and consistent with roads ⇒ Confirm with surrounding context (e.g., buildings) ⇒ Roads are present ⇒ Answer: Yes.

Chain 3: Check for natural features like rivers or paths ⇒ No water or organic patterns detected ⇒ Linear features suggest infrastructure ⇒ Likely roads ⇒ Answer: Yes.

Chain 4: Look for vehicle movement or road-like structures ⇒ No vehicles visible, but linear paths exist ⇒ Paths align with road characteristics ⇒ Roads exist ⇒ Answer: Yes.

Chain 5: Analyze image for urban elements ⇒ Linear features don’t match natural terrain ⇒ Patterns suggest man-made roads ⇒ No roads detected ⇒ Answer: No.

Formal Description:

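For each input $(I, T, Q)$, the model generates $k$ independent reasoning chains as follows:

$$\left(R^{(i)}, \hat{A}_i\right) \sim P\left(R, \hat{A} \mid I, T, Q; M\right), \qquad R^{(i)} = \left(r^{(i)}_1, r^{(i)}_2, \ldots, r^{(i)}_{n_i}\right), \qquad i = 1, \ldots, k$$

where the following hold:

$R^{(i)}$ is the $i$-th reasoning chain.

$r^{(i)}_j$ is the $j$-th reasoning step in the $i$-th chain.

$\hat{A}_i$ is the answer predicted by the $i$-th chain.

The full probabilistic generation process for each chain is as follows:

$$P\left(R^{(i)}, \hat{A}_i \mid I, T, Q; M\right) = \left[\prod_{j=1}^{n_i} P\left(r^{(i)}_j \mid r^{(i)}_{<j}, I, T, Q; M\right)\right] \cdot P\left(\hat{A}_i \mid R^{(i)}, I, T, Q; M\right)$$

Each chain is generated with stochastic decoding parameters to encourage diversity: temperature = 0.8, top-p = 0.9, and top-k = 60.

After all $k$ chains are generated, their final predicted answers $\hat{A}_1, \ldots, \hat{A}_k$ are aggregated using a function $g$ to compute the final answer as follows:

$$\hat{A} = g\left(\hat{A}_1, \hat{A}_2, \ldots, \hat{A}_k\right)$$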

The aggregation function g is defined based on the task type as follows:

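For categorical tasks (e.g., Basic Judging), $g$ is majority voting:

$$\hat{A} = \arg\max_{a} \sum_{i=1}^{k} \mathbb{1}\left[\hat{A}_i = a\right]$$

For numerical tasks (e.g., counting tasks):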

Example Aggregation:

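For the example, the answers from the five chains are Yes, Yes, Yes, Yes, No. Using majority voting for this categorical task yields the following:

$$\hat{A} = \arg\max_{a \in \{\text{Yes}, \text{No}\}} \sum_{i=1}^{5} \mathbb{1}\left[\hat{A}_i = a\right] = \text{Yes} \quad (4 \text{ votes vs. } 1)$$

The final answer is Yes, as it has the majority.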

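The final output probability can be approximated by marginalizing over the chains as follows:

$$P\left(a \mid I, T, Q\right) = \sum_{R} P\left(R, a \mid I, T, Q; M\right) \approx \frac{1}{k} \sum_{i=1}^{k} \mathbb{1}\left[\hat{A}_i = a\right]$$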

This ensemble-style aggregation reduces sensitivity to any single reasoning path, enhancing consistency and reliability across tasks characterized by high ambiguity or complexity.
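The aggregation function $g$ and the empirical answer distribution can be sketched in a few lines. Majority voting for categorical tasks follows the text. The paper's numerical aggregation rule is not recoverable in this section, so `numeric_aggregate` uses the median purely as a labeled assumption, chosen here because it is robust to a single outlying chain.

```python
from collections import Counter
from statistics import median

def majority_vote(answers):
    """g for categorical tasks: the most frequent answer across the k chains.

    Ties fall to the answer seen first (Counter.most_common orders equal counts
    by first insertion); the paper does not specify a tie-breaking rule.
    """
    return Counter(answers).most_common(1)[0][0]

def numeric_aggregate(answers):
    """Assumed g for counting tasks: the median of the k predicted counts."""
    return median(float(a) for a in answers)

def answer_distribution(answers):
    """Empirical estimate P(a | I, T, Q) ~ (1/k) * #{i : A_i = a}."""
    k = len(answers)
    return {a: n / k for a, n in Counter(answers).items()}

chains = ["Yes", "Yes", "Yes", "Yes", "No"]            # answers of the five example chains
print(majority_vote(chains))                           # Yes
print(answer_distribution(chains))                     # {'Yes': 0.8, 'No': 0.2}
print(numeric_aggregate(["4", "5", "5", "6", "12"]))   # 5.0
```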

Steps for Applying the Self-GeoSense Framework:

Input Preparation: Prepare the input tuple $(I, T, Q, A)$ as described in the previous frameworks. For the example, I is the satellite image, T is Basic Judging, Q is "Are there any roads in this scene?", and A is "Yes".

Task-Specific CoT Prompt Reuse: Reuse the Basic Judging CoT prompt from the CoT-GeoReason framework to guide the model in identifying roads in the image.

Multiple Reasoning Chains: For each input, generate $k$ independent CoT reasoning chains $R^{(1)}, \ldots, R^{(k)}$, each producing a sequence of reasoning steps (separated by ⇒) and a predicted answer $\hat{A}_i$. The number of chains $k$ (five in this study) is chosen to balance robustness and computational cost.

Answer Aggregation: Aggregate the k answers using the appropriate aggregation function g. For the example, majority voting selects "Yes" as the final answer.

Output Evaluation: Compare the final answer ("Yes") with the ground-truth answer A ("Yes") to assess performance. The ensemble process approximates a more reliable response distribution across chains.
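The steps above can be sketched as a loop around an assumed `sample_chain` callable, which would wrap one stochastic LMM request (temperature 0.8, top-p 0.9, top-k 60) using the Basic Judging CoT prompt. Here it is replaced by a stub that replays abbreviated versions of the five example chains. `chain_answer` and `self_geosense` are hypothetical helpers, and the sketch covers only the categorical (majority-vote) case.

```python
import itertools
import re
from collections import Counter
from typing import Callable

ANSWER_RE = re.compile(r"Answer:\s*(.+?)\s*\.?\s*$")

def chain_answer(chain: str) -> str:
    """Read the predicted answer A_i from the last '⇒'-separated segment of a chain."""
    match = ANSWER_RE.search(chain.split("⇒")[-1].strip())
    if match is None:
        raise ValueError(f"no answer in chain: {chain!r}")
    return match.group(1)

def self_geosense(sample_chain: Callable[[], str], k: int = 5) -> tuple[str, list[str]]:
    """Draw k independent chains, then apply majority voting (categorical g)."""
    answers = [chain_answer(sample_chain()) for _ in range(k)]
    final, _votes = Counter(answers).most_common(1)[0]
    return final, answers

# Abbreviated versions of the five example chains for "Are there any roads in this scene?".
EXAMPLE_CHAINS = [
    "Observe the image for linear features ⇒ Conclude roads are present ⇒ Answer: Yes.",
    "Identify straight or curved lines ⇒ Roads are present ⇒ Answer: Yes.",
    "Check for natural features ⇒ Likely roads ⇒ Answer: Yes.",
    "Look for road-like structures ⇒ Roads exist ⇒ Answer: Yes.",
    "Analyze image for urban elements ⇒ No roads detected ⇒ Answer: No.",
]
stub = itertools.cycle(EXAMPLE_CHAINS)  # stands in for five stochastic LMM calls
print(self_geosense(lambda: next(stub), k=5))  # ('Yes', ['Yes', 'Yes', 'Yes', 'Yes', 'No'])
```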

While its prompting approach is based on the standard Chain of Thought (CoT) technique, the Self-GeoSense framework employs task-specific prompts tailored to the characteristics of each task type, so that each reasoning chain addresses the specific demands of its task. Figures 10, 11 and 12 illustrate the CoT-style prompt designs used in the Self-GeoSense framework for the six task types, grouped in pairs.

We evaluated four LMMs with the Self-GeoSense framework: GPT-4o, Grok 3, Gemini 2.5 Pro, and Claude 3.7 Sonnet. Accuracy was measured across all task types, and RMSE was calculated for the counting-related tasks. For Basic Judging and Basic Counting, Accuracy was determined directly from objective ground-truth labels. For Reasoning-Based Judging, Comprehensive Analysis, and Object Situation Analysis, Accuracy reflects a semantic evaluation that combines human expert judgment with LMM-as-a-judge assessment, capturing nuanced aspects of model performance that purely automatic metrics miss. A detailed comparison of model performance across task types within the Self-GeoSense framework is presented in Table 5.

5. Result Analysis

5.1. Detailed Task-Wise Comparison of Zero-GeoVision Framework vs. Semantic Object Awareness Framework (SOBA) Method

Table 3 presents the performance of the Zero-GeoVision framework, leveraging zero-shot prompting with LMMs (GPT-4o, Grok 3, Gemini 2.5 Pro, Claude 3.7 Sonnet), against the Semantic Object Awareness framework (SOBA) across six task categories: Basic Judging (Bas Ju), Reasoning-Based Judging (Rel Ju), Basic Counting (Bas Co), Reasoning-Based Counting (Rel Co), Object Situation Analysis (Obj An), and Comprehensive Analysis (Com An).

For Bas Ju, Grok 3 led Zero-GeoVision at 78.55%, while SOBA achieved 89.63%, outperforming the best Zero-GeoVision model by 11.08 percentage points. In Rel Ju, Grok 3 scored 73.12%, with SOBA at 82.64% (+9.52 points). For Bas Co and Rel Co, the top Zero-GeoVision models scored 78.12% (Claude 3.7 Sonnet) and 64.85% (Grok 3), respectively, while SOBA maintained higher accuracies of 80.17% and 67.86%. In Obj An and Com An, Zero-GeoVision peaked at 59.23% and 48.34%, whereas SOBA achieved 61.40% and 49.30%, highlighting the difficulty of tasks requiring relational reasoning.

Human expert analysis confirmed that Zero-GeoVision models often failed to capture relational cues and complex spatial-semantic interactions, producing superficial answers in Obj An and Com An tasks. To address this, we introduced the CoT-GeoReason and Self-GeoSense frameworks, which incorporate structured, stepwise reasoning to guide models in analyzing and synthesizing visual information, mimicking human expert workflows. As the comparisons in Sections 5.2 and 5.3 show, these reasoning-augmented frameworks substantially improve performance, particularly in Obj An and Com An, underscoring the importance of human-like reasoning for high-level remote sensing visual question answering.

5.2. Detailed Task-Wise Comparison of CoT-GeoReason Framework vs. Semantic Object Awareness Framework (SOBA) Method

Table 4 presents the results of the CoT-GeoReason framework, which applies chain-of-thought prompting to LMMs (GPT-4o, Grok 3, Gemini 2.5 Pro, and Claude 3.7 Sonnet), in comparison with the SOBA baseline across six remote sensing VQA tasks. In Bas Ju, Grok 3 achieves 88.69%, within 0.94 percentage points of SOBA’s 89.63%, while the other CoT-GeoReason models remain competitive. For Rel Ju, Grok 3 leads with 85.42%, exceeding SOBA’s 82.64% by 2.78 points, and all CoT-GeoReason models surpass the baseline. In the counting tasks, Bas Co and Rel Co, Grok 3 achieves 90.37% and 78.27%, improvements of +10.20 and +10.41 points over SOBA, with the other models also outperforming the baseline. On the reasoning-intensive tasks, Obj An and Com An, Grok 3 records 69.64% (+8.24 points) and 58.29% (+8.99 points), respectively, showing consistent gains over SOBA and confirming the strength of chain-of-thought reasoning in complex analyses.

Error analysis with RMSE further supports these findings. CoT-GeoReason models maintain lower error than SOBA, with Grok 3 achieving the best balance between accuracy and reliability (RMSE of 0.7214 in Bas Co and 0.8725 in Rel Co, compared with SOBA’s 0.7856 and 1.1457). Overall, CoT-GeoReason with Grok 3 comes within one percentage point of SOBA on Bas Ju and surpasses it on the other five tasks, demonstrating that chain-of-thought prompting enhances both accuracy and reasoning quality in multimodal remote sensing VQA. To ensure robustness, outputs from CoT-GeoReason were first evaluated by a proprietary GPT-4o-mini LMM Judge, which assessed logical coherence and correctness. These judgments, along with the detailed reasoning traces, were then independently reviewed by human domain experts. This two-tier evaluation, combining automated reasoning validation with expert review, helped quantify agreement, identify model strengths and weaknesses, and refine prompting strategies. Integrating human oversight ensured that the observed improvements from CoT-GeoReason reflect meaningful performance gains, bridging the gap between automated model outputs and expert-level understanding.

5.3. Detailed Task-Wise Comparison of Self-GeoSense Framework vs. Semantic Object Awareness Framework (SOBA) Method

Building on insights from CoT-GeoReason, we observed that multiple valid reasoning paths often lead to correct answers in complex remote sensing VQA tasks. Outputs from LMMs were first assessed by a GPT-4o-mini LMM Judge for logical coherence and then blindly reviewed by human experts. This revealed the value of self-consistency: instead of relying on a single deterministic chain, Self-GeoSense samples multiple reasoning trajectories and selects the answer most consistently supported across them. Combined with spatial grounding, this approach improves reliability and captures intricate object–context relationships.

Table 5 compares Self-GeoSense performance with the SOBA baseline. In Bas Ju, Grok 3 achieves the highest accuracy of 94.69% (+5.06 points). For Rel Ju, Grok 3 again leads with 89.42% (+6.78 points). In Bas Co, Grok 3 records the top score of 93.18% (+13.01 points). For Rel Co, Gemini 2.5 Pro achieves the best performance at 85.48% (+17.62 points).

Performance gains are particularly notable in higher-level reasoning tasks. In Obj An, Grok 3 attains the highest score of 77.64% (+16.24 points). In Com An, Grok 3 again leads with 65.29% (+15.99 points). For error sensitivity, Grok 3 obtains the lowest RMSE on Bas Co (0.7102, versus 0.7283 for Gemini 2.5 Pro), while Gemini 2.5 Pro obtains a lower Rel Co RMSE than Grok 3 (0.8387 versus 0.8551), consistent with its top Rel Co accuracy.

Overall, Self-GeoSense consistently outperforms SOBA across all tasks and error metrics, underscoring the effectiveness of integrating self-consistent reasoning with spatial grounding for robust multimodal interpretation in remote sensing VQA.
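Because the gaps in Sections 5.1 to 5.3 are quoted by hand, the short script below recomputes them from the reported scores as a consistency check. It is not a re-evaluation. The lists hold the best model per task as quoted in the text (mixed models for Zero-GeoVision, Grok 3 for CoT-GeoReason, and Grok 3 for Self-GeoSense except Gemini 2.5 Pro on Rel Co). For example, the Self-GeoSense Bas Co gap works out to 93.18 minus 80.17, or 13.01 points.

```python
# Best accuracy per task quoted in Sections 5.1-5.3, and the SOBA baseline (percent).
TASKS = ["Bas Ju", "Rel Ju", "Bas Co", "Rel Co", "Obj An", "Com An"]
SOBA = [89.63, 82.64, 80.17, 67.86, 61.40, 49.30]
BEST = {
    "Zero-GeoVision": [78.55, 73.12, 78.12, 64.85, 59.23, 48.34],  # mixed models
    "CoT-GeoReason": [88.69, 85.42, 90.37, 78.27, 69.64, 58.29],   # Grok 3
    "Self-GeoSense": [94.69, 89.42, 93.18, 85.48, 77.64, 65.29],   # Gemini 2.5 Pro on Rel Co
}

def gaps_vs_soba(scores):
    """Difference to SOBA in percentage points (positive means better than SOBA)."""
    return {task: round(s - b, 2) for task, s, b in zip(TASKS, scores, SOBA)}

for framework, scores in BEST.items():
    print(framework, gaps_vs_soba(scores))
```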

6. Computational Cost and Efficiency Analysis

This section evaluates the computational cost and efficiency of the proposed frameworks: Zero-GeoVision, CoT-GeoReason, and Self-GeoSense. We report the estimated average token usage per inference and the corresponding estimated average cost per inference in USD, derived from per-million-token API prices and accounting for the different prompt lengths of each reasoning framework. These metrics indicate the practical applicability of each framework, particularly in real-time or resource-constrained scenarios. Because the Self-GeoSense framework generates multiple independent reasoning chains, it increases both token usage and computational cost (see Table 6).

6.1. Approach to Cost Estimation

Token usage is composed of both input tokens (prompts and image embeddings) and output tokens (answers and reasoning chains). Image embeddings for high-resolution images are typically fixed-size (e.g., 512 tokens), while prompt length varies depending on the task and framework as follows:

Zero-GeoVision: concise prompts (≈100 tokens).

CoT-GeoReason: detailed prompts for step-by-step reasoning (≈500 tokens).

Self-GeoSense: comprehensive prompts for multiple independent reasoning chains (≈800 tokens).

Output tokens include short answers in Zero-GeoVision, step-by-step reasoning chains in CoT-GeoReason, and multiple reasoning chains in Self-GeoSense (five chains per input). Cost is calculated using API pricing (September 2025) as follows:

GPT-4o (OpenAI): $2.50 per 1 M input tokens and $10.00 per 1 M output tokens.

Claude 3.7 Sonnet (Anthropic): $3.00 per 1 M input tokens and $15.00 per 1 M output tokens.

Grok 3 (xAI): $3.00 per 1 M input tokens and $15.00 per 1 M output tokens.

Gemini 2.5 Pro (Google DeepMind): $1.25 per 1 M input tokens and $10.00 per 1 M output tokens.

6.2. Cost Computation

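Costs are computed using the following formula:

$$\text{Cost} = \frac{T_{\text{in}} \times P_{\text{in}} + T_{\text{out}} \times P_{\text{out}}}{10^{6}}$$

where \(T_{\text{in}}\) and \(T_{\text{out}}\) are the numbers of input and output tokens per inference, and \(P_{\text{in}}\) and \(P_{\text{out}}\) are the corresponding prices in USD per 1 M tokens.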

Representative token counts for a single high-resolution image with a complex prompt are outlined as follows:

Zero-GeoVision: Input = 100 + 512 = 612 tokens, Output = 150 tokens.

CoT-GeoReason: Input = 500 + 512 = 1012 tokens, Output = 500 tokens.

Self-GeoSense: Input = 800 + 512 = 1312 tokens, Output = 5 × 500 = 2500 tokens.
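The cost formula and the representative token counts can be checked with a short Python sketch. It only encodes the prices listed in Section 6.1 and the token counts listed in Section 6.2; the dictionary names and the `cost_per_inference` helper are illustrative and are not part of the frameworks' code.

```python
# Per-inference cost sketch: Cost = (T_in * P_in + T_out * P_out) / 1e6.
# Prices are USD per 1 M tokens (input, output), as listed in Section 6.1.
PRICES = {
    "GPT-4o": (2.50, 10.00),
    "Claude 3.7 Sonnet": (3.00, 15.00),
    "Grok 3": (3.00, 15.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
}

# Assumed fixed size of the image embedding, in tokens.
IMAGE_TOKENS = 512

# Representative (prompt tokens, output tokens) per framework, from Section 6.2.
FRAMEWORKS = {
    "Zero-GeoVision": (100, 150),
    "CoT-GeoReason": (500, 500),
    "Self-GeoSense": (800, 5 * 500),  # five chains of ~500 output tokens each
}


def cost_per_inference(input_tokens, output_tokens, price_in, price_out):
    """Return the estimated cost in USD of one inference."""
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


def cost_table():
    """Map framework -> model -> estimated cost in USD per inference."""
    table = {}
    for framework, (prompt_tokens, output_tokens) in FRAMEWORKS.items():
        # Input tokens are counted once per inference, as in Section 6.2.
        input_tokens = prompt_tokens + IMAGE_TOKENS
        table[framework] = {
            model: cost_per_inference(input_tokens, output_tokens, p_in, p_out)
            for model, (p_in, p_out) in PRICES.items()
        }
    return table


if __name__ == "__main__":
    for framework, costs in cost_table().items():
        low, high = min(costs.values()), max(costs.values())
        print(f"{framework}: ${low:.5f} to ${high:.5f} per inference")
```

Running it should reproduce, up to rounding, the per-inference ranges reported in Section 6.3. Because output tokens are priced four to eight times higher than input tokens for these models, the 2500 output tokens of the five Self-GeoSense chains account for most of its cost (about 88% for GPT-4o), so reducing the number of chains is the most direct lever. If each chain were issued as a separate API call, the input term would also scale with the number of chains.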

6.3. Observations and Implications

Zero-GeoVision remains the most cost-efficient, with estimated average costs between $0.00226 and $0.00409 per inference, making it suitable for low-latency applications.

CoT-GeoReason balances reasoning performance and cost, with estimated average costs between $0.00626 and $0.01054 per inference.

Self-GeoSense incurs the highest cost due to multiple reasoning chains, ranging from $0.02664 to $0.04144 per inference, and may be less practical for real-time scenarios.

Token usage and estimated average cost vary depending on image resolution, prompt length, and reasoning chain complexity. Optimization strategies, such as selective chain generation, parallel processing, or adaptive prompt truncation, can help reduce computational overhead for Self-GeoSense.
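One way to realize selective chain generation, assuming Self-GeoSense aggregates its chains by majority vote, is to sample chains one at a time and stop as soon as the leading answer can no longer be overtaken within the chain budget. The Python sketch below illustrates this early-stopping vote; `sample_answer` is a hypothetical stand-in for one stochastic LMM call returning the final answer of a reasoning chain, and the default budget of five mirrors the Self-GeoSense setting. It is not the authors' implementation.

```python
from collections import Counter
from typing import Callable, Tuple


def adaptive_self_consistency(
    sample_answer: Callable[[], str], max_chains: int = 5
) -> Tuple[str, int]:
    """Majority vote over sampled reasoning chains, with early stopping.

    `sample_answer` stands in for one stochastic LMM call that returns the
    final answer of a reasoning chain (hypothetical). Sampling stops once the
    leading answer has more votes than any other answer could still reach.
    Returns the winning answer and the number of chains actually sampled.
    """
    if max_chains < 1:
        raise ValueError("max_chains must be at least 1")
    votes = Counter()
    for used in range(1, max_chains + 1):
        votes[sample_answer()] += 1
        ranked = votes.most_common(2)
        leader, leader_votes = ranked[0]
        runner_up_votes = ranked[1][1] if len(ranked) > 1 else 0
        remaining = max_chains - used
        # Even if every remaining chain agreed with the runner-up (or with a
        # new answer), the leader would still win, so stop sampling.
        if leader_votes > runner_up_votes + remaining:
            return leader, used
    # Budget exhausted: plain majority vote; ties go to the first-seen answer.
    return votes.most_common(1)[0][0], max_chains


# Usage with a scripted sampler standing in for the LMM.
scripted = iter(["3", "3", "3", "4", "4"])
print(adaptive_self_consistency(lambda: next(scripted)))  # prints ('3', 3)
```

Because sampling stops only when the outcome of the full vote is already decided, the answer matches what a complete five-chain vote would return, whatever the skipped chains would have produced. When the first three chains agree, two of the five chains are skipped, saving about 40% of the Self-GeoSense output tokens for that input.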

7. Limitations

Despite the promising results of the proposed frameworks, several limitations warrant consideration. First, the computational cost of the Self-GeoSense framework, which generates multiple reasoning chains (five per input), is significantly higher than that of Zero-GeoVision or CoT-GeoReason, potentially limiting its scalability for real-time applications or resource-constrained environments. Second, the EarthVQA dataset, while diverse, is geographically limited to regions in Nanjing, Changzhou, and Wuhan, which may introduce biases and restrict generalization to other global contexts or to other imagery types (e.g., SAR or hyperspectral); its composition may also bias evaluation results toward certain land cover types or seasonal conditions. Third, the reliance on human expert evaluations in the Geo-Judge framework introduces subjectivity and time-intensive processes: although this dual-stage evaluation improves assessment accuracy, its human-in-the-loop component becomes a scalability bottleneck for large-scale datasets or continuous model monitoring and may reduce consistency across large datasets. Fourth, the large multimodal models (LMMs) tested (GPT-4o, Grok 3, Gemini 2.5 Pro, and Claude 3.7 Sonnet) operate in a zero-shot setting without fine-tuning, which may cap their performance compared with models specifically adapted for remote sensing tasks.
In addition, the effectiveness of the Self-GeoSense and CoT-GeoReason frameworks depends heavily on carefully crafted prompts and reasoning templates; this reliance on prompt engineering may reduce robustness on new or unforeseen question types and may require domain experts for optimal configuration. The frameworks also do not explicitly model or quantify uncertainty in predictions or reasoning chains, which can lead to overconfident outputs for ambiguous or low-quality imagery. Furthermore, they do not account for temporal changes in remote sensing imagery, limiting their suitability for monitoring dynamic environmental phenomena or detecting changes over time. Lastly, the LMMs may inherit biases from their pretraining data, which could skew their interpretation of remote sensing inputs and lead to culturally biased reasoning outcomes. Together, these limitations highlight the need for further optimization and broader dataset inclusion to enhance practical applicability.

8. Future Work

Future research can address the identified limitations by exploring several directions to improve the reliability and applicability of the proposed frameworks for remote sensing VQA. First, reducing the computational overhead of the Self-GeoSense framework is critical for real-time applications; this could involve dynamically adjusting the number of reasoning chains based on task complexity or leveraging efficient sampling techniques, such as adaptive top-k or top-p strategies, to balance performance and resource usage. Furthermore, developing multimodal agent architectures tailored to each task type (for example, Basic Judging, Reasoning-Based Counting, and Comprehensive Analysis) could improve performance by assigning specialized submodules for visual processing, reasoning, and answer synthesis. For instance, a dedicated spatial-analysis agent could enhance object detection in Basic Counting, while a reasoning-focused agent could improve Comprehensive Analysis. In addition, expanding the dataset beyond Nanjing, Changzhou, and Wuhan, for example with images from cities in Bangladesh, covering not only major urban centers such as Dhaka, Chittagong, and Khulna but also Sylhet, Rajshahi, Barisal, and Rangpur, would enhance geographical diversity and mitigate regional biases. Moreover, incorporating diverse types of remote sensing data, including Synthetic Aperture Radar (SAR), hyperspectral, thermal infrared, and LiDAR, could improve model generalization across varied environmental conditions and imaging modalities. This would enable the frameworks to handle complex scenarios such as nighttime imaging or cloud-covered scenes, which are critical for applications like disaster response.
Additionally, reliance on human expert evaluations within the Geo-Judge framework could be reduced by developing more advanced LMM-based judges and by incorporating inter-rater agreement metrics (for example, Cohen’s kappa) to quantify consistency between automated and human judgments, which would streamline evaluation while maintaining robustness. Finally, few-shot adaptation with carefully curated examples from the EarthVQA dataset, either as in-context examples or through lightweight fine-tuning, could enhance performance without requiring extensive retraining. For instance, providing five to ten task-specific QA pairs per task type could help models better adapt to the nuances of remote sensing imagery.
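Cohen's kappa corrects raw agreement for the agreement expected by chance, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the chance agreement implied by each rater's label frequencies. The Python sketch below compares an automated judge with a human expert, assuming both assign one categorical verdict (for example, correct or incorrect) per answer; the verdict lists are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical label throughout: kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical verdicts from an automated judge and a human expert.
judge = ["correct", "correct", "incorrect", "correct", "incorrect", "correct"]
human = ["correct", "incorrect", "incorrect", "correct", "incorrect", "correct"]
print(round(cohens_kappa(judge, human), 3))  # prints 0.667
```

Here the raters agree on five of six answers (p_o ≈ 0.833), but their label frequencies alone would produce p_e = 0.5, so κ ≈ 0.667. Values near 1 indicate agreement well beyond chance, and values near 0 indicate agreement no better than chance.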

9. Conclusions

This study advances remote sensing VQA by showing how structured reasoning strategies improve LMMs on the EarthVQA dataset, which includes 6000 satellite images and over 208,000 question–answer pairs. From this dataset, we randomly selected 200 images with 29 QA pairs each (5800 QA pairs in total). To evaluate LMM performance on diverse geospatial reasoning tasks, we proposed three hybrid frameworks (Zero-GeoVision, CoT-GeoReason, and Self-GeoSense) targeting six task types: Basic Judging, Reasoning-Based Judging, Basic Counting, Reasoning-Based Counting, Object Situation Analysis, and Comprehensive Analysis. Using LMMs such as GPT-4o, Grok 3, Gemini 2.5 Pro, and Claude 3.7 Sonnet, these frameworks were compared against the baseline Semantic Object Awareness framework (SOBA) using the Geo-Judge framework, which combines automated and expert human evaluations. Zero-GeoVision leverages the zero-shot capabilities of LMMs with task-specific prompts to interpret images and questions directly, but it underperformed SOBA in tasks requiring deeper geospatial understanding. To overcome this, the CoT-GeoReason framework incorporates chain-of-thought prompting, guiding models through step-by-step reasoning and intermediate analyses. Evaluated with the Geo-Judge framework and human experts, CoT-GeoReason outperformed Zero-GeoVision and surpassed SOBA in several reasoning-intensive tasks. Building further, the Self-GeoSense framework enhances robustness by generating multiple reasoning chains per input with stochastic decoding. Validated by Geo-Judge, Self-GeoSense achieved the highest performance across all tasks, with Grok 3 exceeding SOBA by 5.06% in Basic Judging, 6.78% in Reasoning-Based Judging, 12.99% in Basic Counting, 15.43% in Reasoning-Based Counting, 16.24% in Object Situation Analysis, and 15.99% in Comprehensive Analysis.
This work demonstrates that structured reasoning and self-consistency significantly enhance LMM performance on complex geospatial VQA tasks, with results validated through combined automated and human expert assessment.