Vision-AQ: Explainable Multi-Modal Deep Learning for Air Pollution Classification in Smart Cities

Abstract

1. Introduction

2. Literature Review

2.1. Evolution of AQI

2.2. Traditional and Sensor-Based AQM

2.3. Satellite-Based Remote Sensing for Air Quality

2.4. Image-Based Air Pollution Estimation: Visual Sensing

3. Methodology

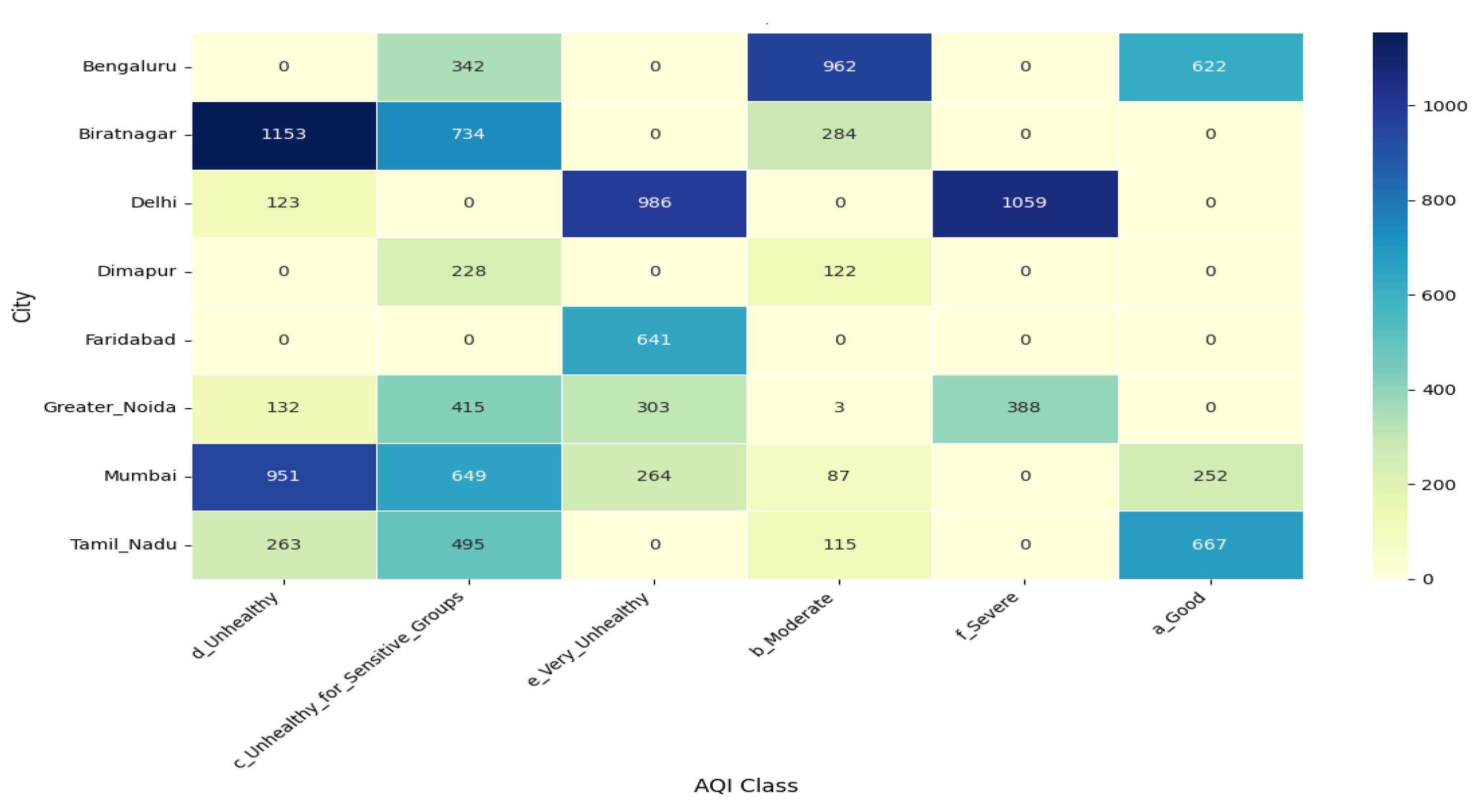

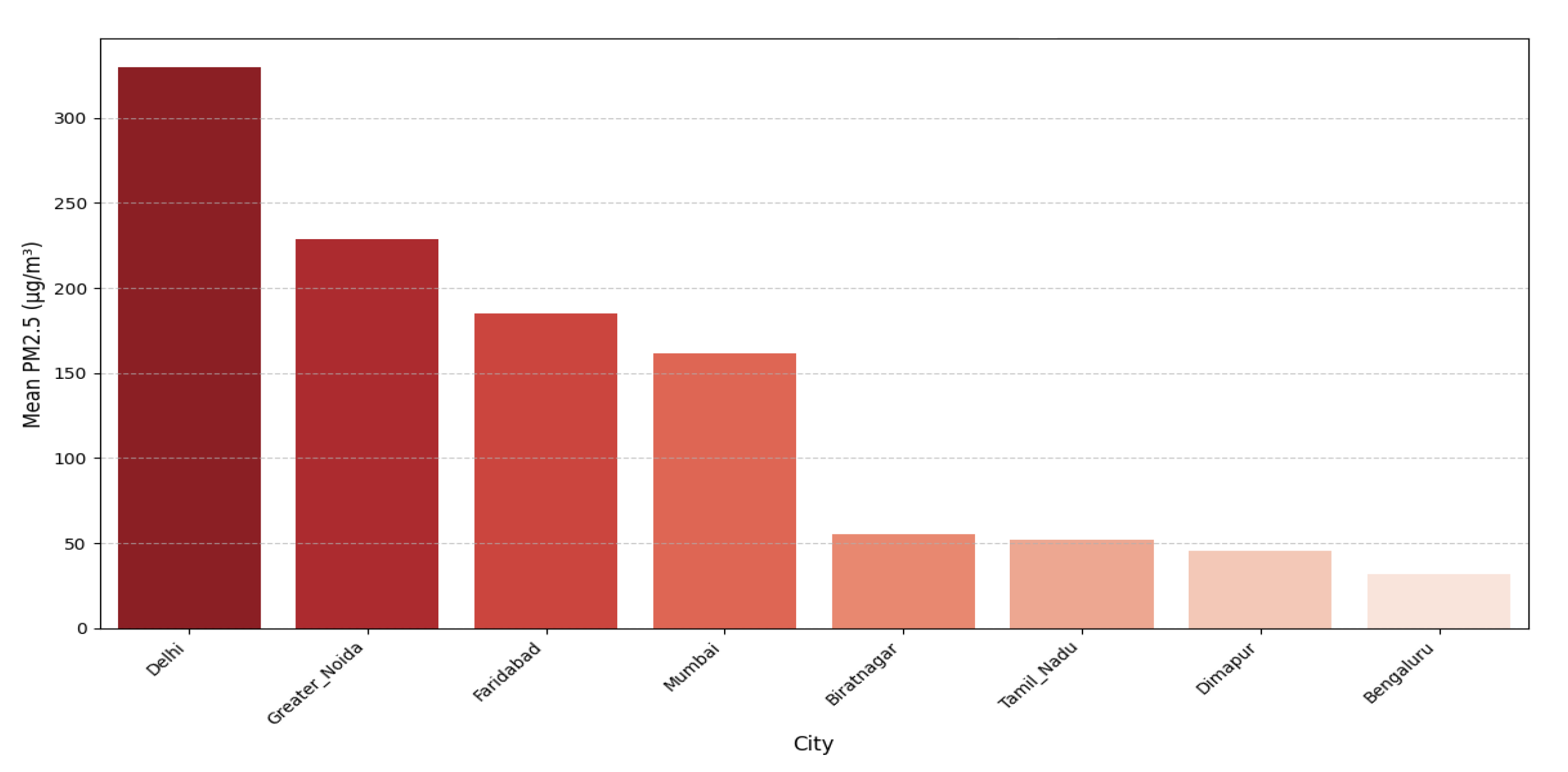

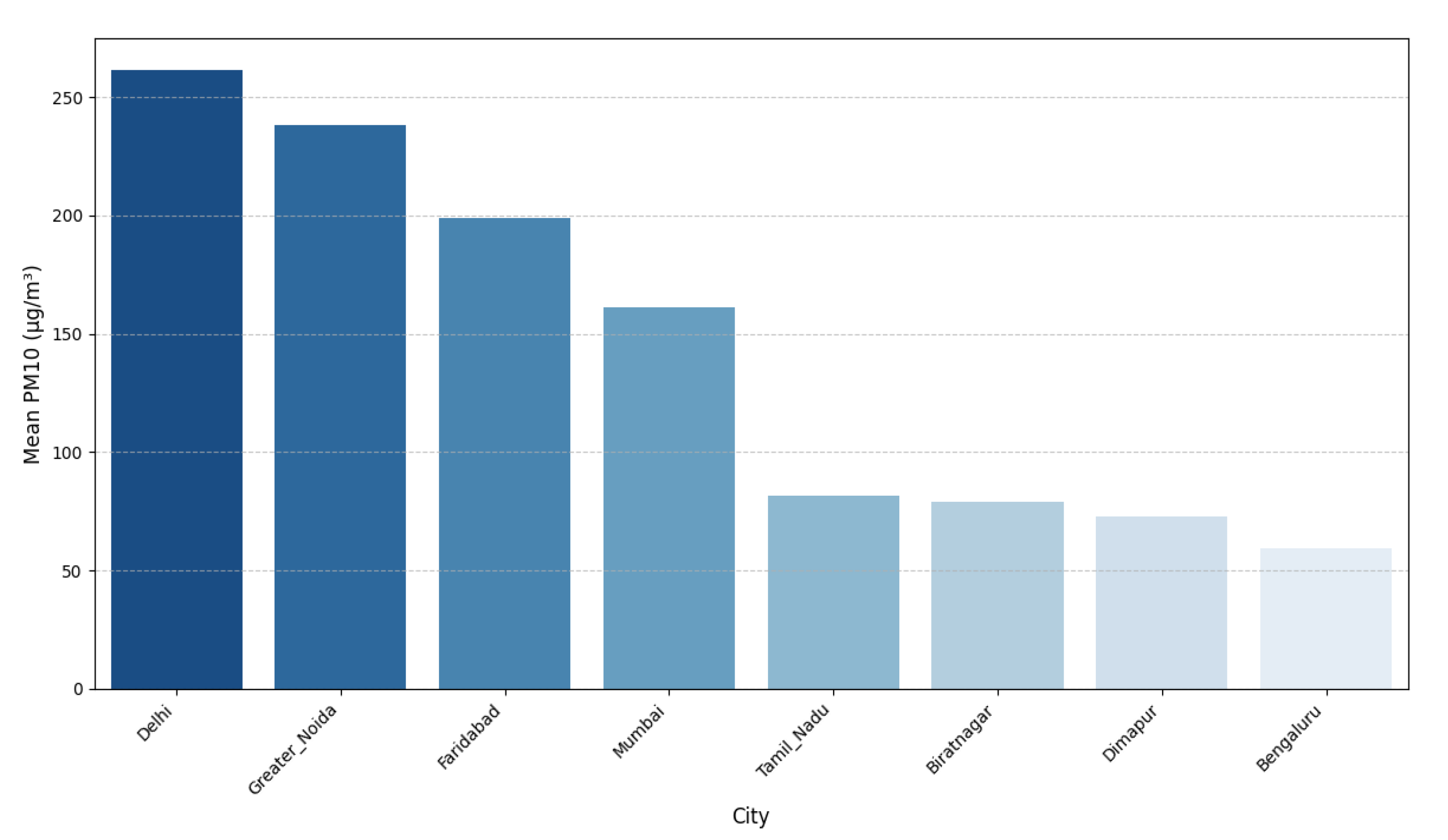

3.1. Exploratory Data Analysis

3.2. Data Acquisition and Pre-Processing

3.3. The Vision-AQ Model Architecture

3.4. Training and Fine-Tuning Strategy

3.5. Grad-CAM

4. Results

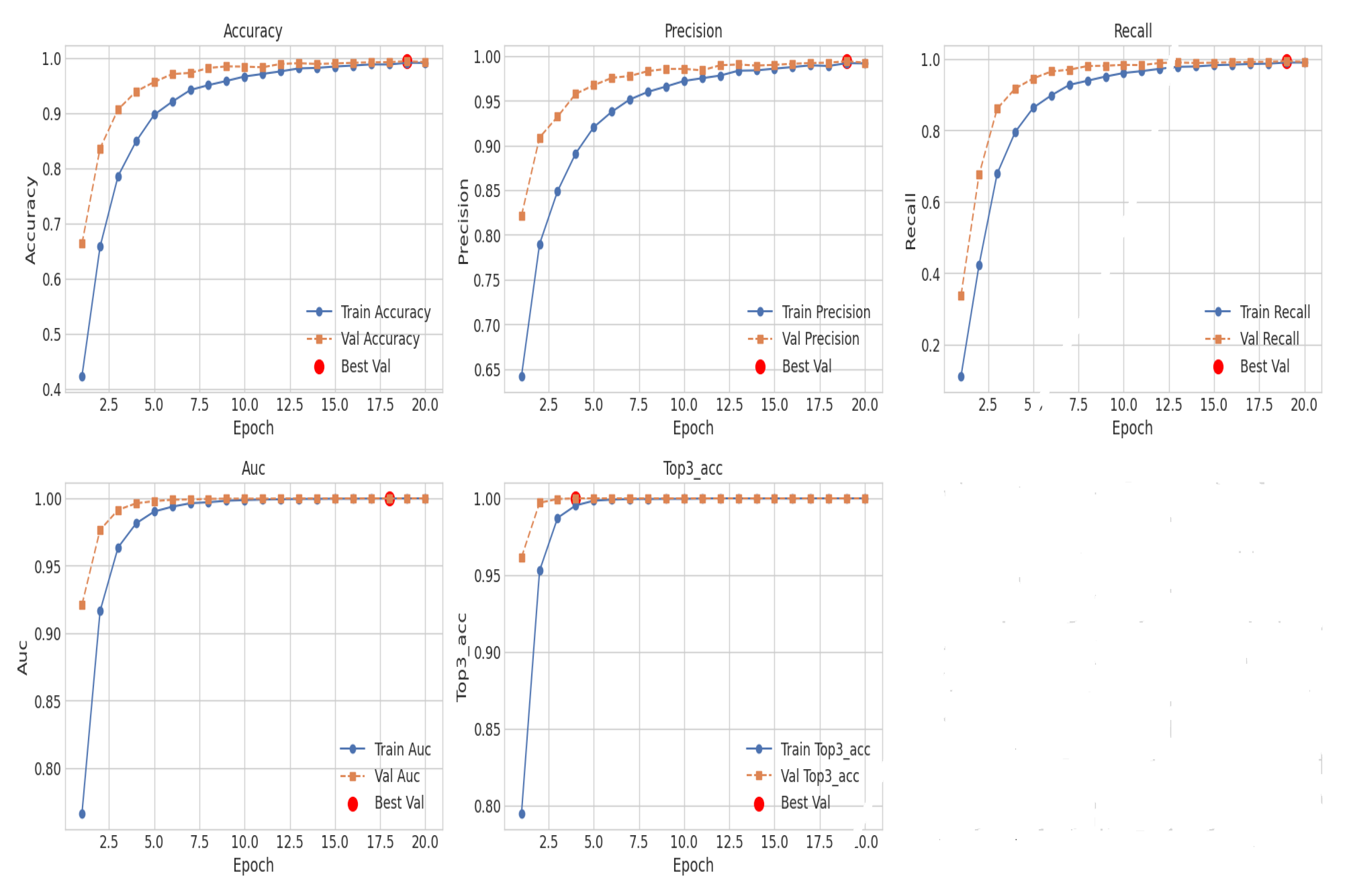

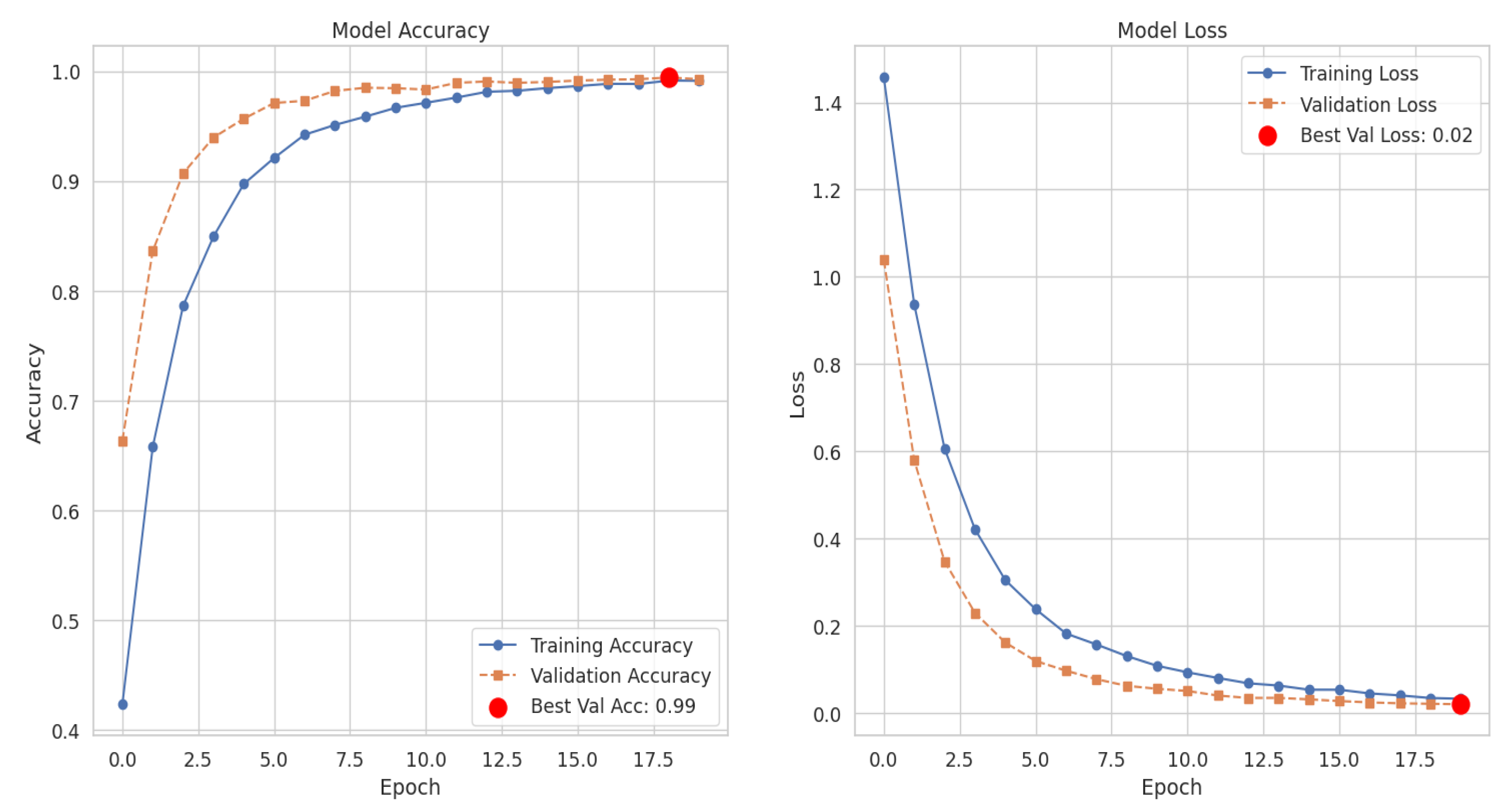

4.1. Training Performance

4.2. Evaluation Metrics

4.3. Interpreting Predictions

5. Discussion

- Real-time public health alerts via mobile applications.

- Dynamic pollution maps to help citizens avoid hotspots.

- Evidence-based urban planning informed by high-resolution environmental data.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Label Class | AQI | PM2.5 (µg/m³) | PM10 (µg/m³) |
|---|---|---|---|
| Good | 0–50 | 0–12 | 0–54 |
| Moderate | 51–100 | 12.1–35.4 | 55–154 |
| Unhealthy for Sensitive Groups | 101–150 | 35.5–55.4 | 155–254 |
| Unhealthy | 151–200 | 55.5–150.4 | 255–354 |
| Very Unhealthy | 201–300 | 150.5–250.4 | 355–424 |
| Severe | >300 | >250.4 | >424 |
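
As a rough illustration of how the breakpoints in the table above could map a pair of particulate readings to a label class, the following Python sketch looks up each reading against the upper bounds of the concentration ranges. It assumes that the two concentration columns are PM2.5 and PM10 in µg/m³, that a reading falling in the small gap between adjacent ranges (for example 12.05) belongs to the higher class, and that the final label is the worse of the two per-pollutant classes. The names `class_index` and `label_for` are hypothetical and are not taken from the paper.

```python
from bisect import bisect_left

# Label classes in increasing order of severity, as listed in the table.
LABELS = [
    "Good",
    "Moderate",
    "Unhealthy for Sensitive Groups",
    "Unhealthy",
    "Very Unhealthy",
    "Severe",
]

# Upper bounds of the first five ranges; anything above the last bound is "Severe".
# Assumption: column 3 is PM2.5 and column 4 is PM10, both in micrograms per cubic metre.
PM25_UPPER = [12.0, 35.4, 55.4, 150.4, 250.4]
PM10_UPPER = [54.0, 154.0, 254.0, 354.0, 424.0]


def class_index(concentration, upper_bounds):
    """Return the index of the first range whose upper bound is >= concentration."""
    if concentration < 0:
        raise ValueError("concentration must be non-negative")
    return bisect_left(upper_bounds, concentration)


def label_for(pm25, pm10):
    """Return the label class for a pair of readings.

    Assumption: the overall class is the worse (higher) of the two
    per-pollutant classes.
    """
    idx = max(class_index(pm25, PM25_UPPER), class_index(pm10, PM10_UPPER))
    return LABELS[idx]
```

Under these assumptions, `label_for(40.0, 40.0)` yields "Unhealthy for Sensitive Groups", because the PM2.5 reading falls in the 35.5–55.4 range even though the PM10 reading alone would be "Good".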

| Layer Block | Description | Output Shape | Params |
|---|---|---|---|
| Inputs | | | |
| image_input | Image input (224 × 224 × 3) | (None, 224, 224, 3) | 0 |
| tabular_input | Sensor input (3 features) | (None, 3) | 0 |
| Image Branch | | | |
| resnet50 | CNN base (frozen) | (None, 7, 7, 2048) | 0 |
| global_avg_pool | Feature pooling | (None, 2048) | 0 |
| image_features | Dense layer (64-dim) | (None, 64) | 131,136 |
| Tabular Branch | | | |
| MLP Layers | 64 → 32 → 16 | (None, 16) | 2800 |
| Fusion & Classifier | | | |
| feature_fusion | Concat (image+tabular) | (None, 80) | 0 |
| classifier_dense | Dense (64) | (None, 64) | 5184 |
| classifier_dropout | Dropout (0.5) | (None, 64) | 0 |
| output | Dense (6, Softmax) | (None, 6) | 390 |
| Total Params: | 23,733,510 | | |
| Trainable: | 139,510 | | |
| Non-trainable: | 23,594,000 | | |
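
The parameter counts in the table above can be cross-checked with a few lines of arithmetic. The sketch below assumes that each dense layer is an ordinary fully connected layer with a bias term, so that its parameter count is inputs × outputs + outputs. The helper name `dense_params` is hypothetical. The tabular-branch figure is taken from the table as reported rather than recomputed, since the table does not say whether those layers use biases or normalization.

```python
def dense_params(n_in, n_out, use_bias=True):
    """Parameter count of a fully connected layer (weights plus optional biases)."""
    return n_in * n_out + (n_out if use_bias else 0)


# Dense layers whose input and output sizes can be read off the table.
image_features = dense_params(2048, 64)        # pooled ResNet50 features -> 64
classifier_dense = dense_params(64 + 16, 64)   # fused 80-dim vector -> 64
output = dense_params(64, 6)                   # 64 -> 6 classes

# Reported values copied from the table, not recomputed here.
TABULAR_MLP_REPORTED = 2800
TOTAL_REPORTED = 23_733_510

# Trainable parameters are the sum of every non-frozen block.
trainable = image_features + TABULAR_MLP_REPORTED + classifier_dense + output

# Whatever remains of the reported total is attributed to the frozen part.
non_trainable = TOTAL_REPORTED - trainable

print(image_features, classifier_dense, output, trainable, non_trainable)
```

With these assumptions the recomputed values agree with the table: 131,136 for `image_features`, 5184 for `classifier_dense`, 390 for `output`, 139,510 trainable and 23,594,000 non-trainable parameters.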

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| a_Good | 0.99 | 0.97 | 0.98 | 308 |
| b_Moderate | 0.97 | 0.99 | 0.98 | 315 |
| c_Unhealthy_for_Sensitive_Groups | 1.00 | 1.00 | 1.00 | 573 |
| d_Unhealthy | 1.00 | 1.00 | 1.00 | 524 |
| e_Very_Unhealthy | 1.00 | 1.00 | 1.00 | 439 |
| f_Severe | 0.99 | 1.00 | 1.00 | 289 |
| Accuracy | | | 0.99 | 2448 |
| Macro Avg | 0.99 | 0.99 | 0.99 | 2448 |
| Weighted Avg | 0.99 | 0.99 | 0.99 | 2448 |
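
To make the relationship between the per-class rows and the two average rows explicit, the following sketch recomputes the macro and weighted averages from the values in the table above. It works from the rounded two-decimal figures, so the results are approximations of whatever the original evaluation produced. The function names `macro_avg` and `weighted_avg` are hypothetical.

```python
# Per-class rows copied from the table: (precision, recall, f1, support).
ROWS = {
    "a_Good": (0.99, 0.97, 0.98, 308),
    "b_Moderate": (0.97, 0.99, 0.98, 315),
    "c_Unhealthy_for_Sensitive_Groups": (1.00, 1.00, 1.00, 573),
    "d_Unhealthy": (1.00, 1.00, 1.00, 524),
    "e_Very_Unhealthy": (1.00, 1.00, 1.00, 439),
    "f_Severe": (0.99, 1.00, 1.00, 289),
}

PRECISION, RECALL, F1, SUPPORT = range(4)


def macro_avg(column):
    """Unweighted mean of one metric over all classes."""
    return sum(row[column] for row in ROWS.values()) / len(ROWS)


def weighted_avg(column):
    """Mean of one metric over all classes, weighted by class support."""
    total = sum(row[SUPPORT] for row in ROWS.values())
    return sum(row[column] * row[SUPPORT] for row in ROWS.values()) / total


total_support = sum(row[SUPPORT] for row in ROWS.values())

for name, column in (("precision", PRECISION), ("recall", RECALL), ("f1", F1)):
    print(name, round(macro_avg(column), 2), round(weighted_avg(column), 2))
```

The class supports sum to 2448, and every recomputed average rounds to 0.99, which is consistent with the Macro Avg and Weighted Avg rows.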

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mehmood, F.; Rehman, S.U.; Choi, A. Vision-AQ: Explainable Multi-Modal Deep Learning for Air Pollution Classification in Smart Cities. Mathematics 2025, 13, 3017. https://doi.org/10.3390/math13183017
