Abstract

Continual Learning (CL), the ability of a model to learn new tasks without forgetting previously acquired knowledge, remains a critical challenge in artificial intelligence. This is particularly true for Vision Transformers (ViTs) that utilize Multilayer Perceptrons (MLPs) for global representation learning. Catastrophic forgetting, where new information overwrites prior knowledge, is especially problematic in these models. This research proposes the replacement of MLPs in ViTs with Kolmogorov–Arnold Networks (KANs) to address this issue. KANs leverage local plasticity through spline-based activations, ensuring that only a subset of parameters is updated per sample, thereby preserving previously learned knowledge. This study investigates the efficacy of KAN-based ViTs in CL scenarios across various benchmark datasets (MNIST, CIFAR100, and TinyImageNet-200), focusing on this approach’s ability to retain accuracy on earlier tasks while adapting to new ones. Our experimental results demonstrate that KAN-based ViTs significantly mitigate catastrophic forgetting, outperforming traditional MLP-based ViTs in both knowledge retention and task adaptation. This novel integration of KANs into ViTs represents a promising step toward more robust and adaptable models for dynamic environments.

Keywords:

Kolmogorov–Arnold network; continual learning; catastrophic forgetting; Vision Transformers; deep learning

MSC:

68-04

1. Introduction

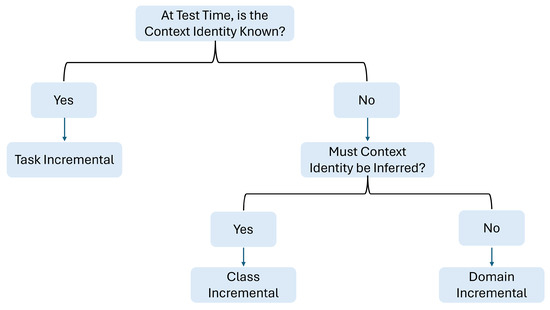

Incremental learning [1,2], also referred to as Continual Learning (CL), addresses the critical challenge of enabling Machine Learning (ML) models to adapt to new data and tasks sequentially without forgetting previously acquired knowledge (see Figure 1). This capability is essential for real-world applications where data distributions evolve over time or where it is impractical to retrain models from scratch with the entire dataset each time new information becomes available [3]. CL algorithms strive to build versatile AI agents that learn from a continuous sequence of tasks, contributing to the development of generally intelligent systems [4,5,6]. However, achieving stable and robust incremental learning is difficult because neural networks often suffer from catastrophic forgetting, whereby the acquisition of new information leads to a drastic decline in performance on old tasks [7]. To mitigate catastrophic forgetting, various strategies have been developed, which can be broadly categorized into three main scenarios—task-incremental learning, domain-incremental learning, and class-incremental learning—as shown in Figure 2. CL is an ML approach in which models are built to continuously learn from a stream of data, incorporating new knowledge while preserving and leveraging previously learned information [8], as illustrated in Figure 1 (1). Excessive plasticity can impair the retention of prior knowledge, whereas too much stability can obstruct the acquisition of new information. Instead of simply balancing these two aspects, a robust CL strategy should also promote strong generalization to effectively manage variations within and between tasks, as shown in Figure 1 (2). In recent years, a wide range of CL methods have emerged to tackle various ML challenges. These approaches can generally be grouped into five main categories, as depicted in Figure 1 (3). 
Importantly, many of these methods overlap; for example, both regularization and replay techniques affect gradient directions during training. These methods can also work synergistically, such as by improving replay efficiency through distillation of knowledge from earlier models. When applied to real-world problems, CL faces additional challenges that fall into two broad areas—scenario complexity and task-specific requirements—as illustrated in Figure 1 (4).

Figure 1.

A conceptual framework for CL. 1. CL involves adapting to incremental tasks where data distributions change over time. 2. An effective approach must balance stability (shown by the blue arrow) and plasticity (orange arrow) while maintaining strong generalization both within individual tasks (black arrow) and across different tasks (red arrow). 3. To meet the goals of CL, leading methods have focused on different ML components. 4. In real-world applications, CL is tailored to overcome specific challenges that arise from the complexity of scenarios and the unique requirements of each task.

Figure 2.

Decision tree for the three CL scenarios.

Task-incremental learning assumes that task identities are known at both training and test times, allowing the model to utilize this information to maintain task-specific knowledge [7,9]. In this scenario, the model incrementally learns a set of clearly distinguishable tasks with well-defined boundaries [10]. At inference, the model is provided with the task identity, allowing it to select the appropriate parameters or modules for that specific task. One common approach involves using separate model components or parameters for each task, as seen with dynamically expandable networks. These methods allocate new capacity for each task, preventing interference between different tasks and mitigating catastrophic forgetting. However, this kind of approach can suffer from scalability issues: as the number of tasks increases, significant memory and computational resources are required. Another strategy uses task-specific modulation, where task embeddings modulate the activations or parameters of a shared network. These modulation techniques allow the model to adapt its behavior to each task while maintaining a shared representation across all tasks. Task-incremental learning provides a simplified setting for studying CL, as the task identity provides a strong signal for guiding the learning process while preventing interference between tasks. However, in real-world scenarios, task boundaries are often ambiguous or unknown, which limits the practicality of these methods.

Domain-incremental learning [11,12] presents a more challenging scenario where the model learns the same problem but in different contexts, leading to shifts in the data distribution over time. In this setting, the task identity is not explicitly provided, but the model must adapt to changing input distributions while preserving previously learned knowledge [7]. Domain-incremental learning is relevant to many real-world applications where the environment or characteristics of the input data change over time, such as in robotics, autonomous driving, or financial modeling. A key challenge in domain-incremental learning is to detect and adapt to these distribution shifts without forgetting previously learned domains. One popular approach involves using domain adaptation techniques to align the feature distributions of different domains. These methods aim to learn domain-invariant representations that are robust to changes in the input distribution, allowing the model to generalize across different domains. Another strategy involves using techniques like replay, where data from previous domains is stored and replayed during training on new domains. By replaying old data, the model can consolidate its knowledge and prevent catastrophic forgetting. However, replay-based methods can be limited by memory constraints and privacy concerns. Alternatively, regularization-based methods add a penalty term to the loss function to prevent the model’s parameters from deviating too much from their previous values. The ability to discern which features vary across source and target datasets is crucial for reducing domain discrepancy and ensuring accurate knowledge transfer [13,14].

Class-incremental learning [15,16,17,18] poses the most challenging scenario, where the model must incrementally learn to distinguish between a growing number of classes without revisiting data from previous classes. This setting is particularly relevant in applications such as image recognition, object detection, and natural language processing, where the set of possible categories or concepts can expand over time. Class-incremental learning is challenging because the model must learn new classes without forgetting previously learned classes and without having access to the data from previous classes. This can lead to a significant bias towards the new classes, resulting in a decline in performance on old classes, also known as catastrophic forgetting. One common approach involves using techniques like knowledge distillation, where the model learns to mimic the output of a previous model trained on the old classes. This helps to preserve the knowledge learned from the old classes while adapting to the new classes. Another strategy involves using techniques like exemplar replay, where a small subset of data from previous classes is stored and replayed during training on new classes. However, exemplar replay methods can be limited by memory constraints, and representative exemplars need to be carefully selected. Generative replay, where a generative model trained on previous tasks produces synthetic data that is interleaved with data from the current task, is an alternative approach to CL [19]. When combined with methods like parameter regularization, data augmentation, or label smoothing, activation regularization techniques can improve model robustness [20]. Data augmentation, which involves applying transformations to the training data, can also improve the generalization ability of the model and prevent overfitting [14,21].

Among these three scenarios, task-based incremental learning is distinct in that it explicitly divides the learning process into discrete tasks, which offers notable advantages over the other incremental learning methodologies. This structured approach facilitates a more controlled and interpretable learning trajectory, mitigating the catastrophic forgetting that often plagues traditional ML models when exposed to sequential data streams [3]. The explicit task boundaries enable targeted strategies for preserving previously acquired knowledge, such as rehearsal-based methods that replay representative samples from past tasks or regularization techniques that constrain the model’s parameter drift, preventing it from deviating too far from previously learned representations. Furthermore, task-based incremental learning aligns well with certain real-world scenarios where tasks are naturally segmented, allowing for seamless integration of new skills or knowledge without disrupting existing capabilities [10]. By leveraging the locality of splines, Kolmogorov–Arnold Networks (KANs) can avoid catastrophic forgetting. This paradigm allows for more flexible and expressive functional approximations.

The clear task separation inherent in task-based incremental learning allows for the development of specialized learning strategies tailored to the unique characteristics of each task. This adaptability enhances the overall learning efficiency and effectiveness, enabling the model to acquire new knowledge more rapidly and accurately. For instance, a model might employ a more aggressive learning rate for tasks with abundant data and a conservative learning rate for tasks with limited data to prevent overfitting. Moreover, task-based incremental learning facilitates the evaluation and comparison of different learning algorithms in a standardized manner, providing a clear benchmark for assessing their performance on specific tasks so that a given approach’s ability to retain knowledge across multiple tasks can be quantified easily. Explicit task boundaries also enable the use of task-specific meta-learning techniques, whereby the model learns to learn new tasks more efficiently based on its experience with previous tasks [4]. When a network learns a new task, it modifies its weights to minimize the error for that specific task. However, these weight updates can significantly alter the knowledge acquired from previous tasks.

KANs offer a compelling approach to functional approximation that is underpinned by the Kolmogorov–Arnold representation theorem. This theorem posits that any multivariate continuous function can be represented as a composition of univariate functions. The theoretical robustness of KANs makes them particularly suited to complex learning scenarios. In the context of CL, where models are required to sequentially acquire knowledge across multiple tasks without forgetting previous ones, the inherent features of KANs align well with the need for both compact representations and knowledge retention [22,23].

CL presents significant challenges, particularly in mitigating catastrophic forgetting. Traditional architectures like MultiLayer Perceptrons (MLPs) often struggle to balance the trade-off between learning new tasks (plasticity) and retaining old ones (stability). Investigating KANs allows us to explore their potential to improve stability, maintain generalization, and provide more robust solutions for CL tasks, particularly in the image classification domain.

Moreover, Vision Transformers (ViTs), inspired by the success of Transformers in natural language processing, have revolutionized computer vision [24] by employing self-attention mechanisms [25] to achieve state-of-the-art performance across various tasks. The ability of transformers to model long-range dependencies and capture global context has proven particularly advantageous in image recognition, object detection [26], and semantic segmentation [27,28]. However, the inherent architecture of ViTs, particularly the reliance on MLPs within each Transformer block, presents some significant challenges, including catastrophic forgetting. Catastrophic forgetting refers to the phenomenon whereby a neural network, after being trained on a sequence of tasks, abruptly loses its ability to perform well on previously learned tasks when trained for a new task. This limitation hinders the deployment of ViTs in real-world CL scenarios where models must adapt to new data without forgetting previously acquired knowledge.

The core problem of catastrophic forgetting in MLPs arises from their global representation learning mechanism, wherein each neuron’s weights are updated for every input sample, thereby modifying large regions of the parameter space when new data are encountered. In contrast, Liu et al. [29] hypothesize that KANs can alleviate catastrophic forgetting through the local plasticity enabled by their use of spline-based activations. Under this paradigm, each sample primarily influences only a small subset of spline coefficients, leaving the majority of parameters unaffected, thereby preserving previously learned knowledge. Building on this premise, this work integrates KANs into ViTs by replacing conventional MLP blocks with KAN layers. This design leverages KANs’ selective parameter updates to reduce interference between tasks, enabling the model to acquire new knowledge while maintaining high performance on earlier tasks.

Specifically, this work investigates the replacement of MLPs in ViTs with KANs to enhance knowledge retention across multiple tasks in a way that mitigates catastrophic forgetting. In this study, we systematically evaluate KAN-based ViTs in CL settings, comparing their performance to traditional MLP-based ViTs. Our study focuses on maintaining accuracy on previously learned tasks while adapting to new information, providing insights into the role of learnable spline-based activations in improving learning capabilities. This research addresses the critical challenge of catastrophic forgetting, advancing ViTs’ applicability in dynamic environments where continuous adaptation is essential. Experimental results demonstrate that KAN-based ViTs achieve superior accuracy over their conventional MLP-based counterparts, highlighting the benefits of localized, learnable activations. The contributions of this study are stated below:

- The replacement of traditional MLP layers in ViTs with KANs to leverage their local plasticity and mitigate catastrophic forgetting;

- In-depth exploration and evaluation of different KAN configurations, shedding light on their impact on performance and scalability;

- Demonstration of superior knowledge retention and task adaptation capabilities of KAN-based ViTs in CL scenarios, validated through experiments on benchmark datasets like MNIST, CIFAR100, and TinyImageNet-200;

- Development of a robust experimental framework for simulating real-world sequential learning scenarios to enable rigorous evaluation of catastrophic forgetting and knowledge retention in ViTs.

The code is available at https://github.com/Zahid672/KAN-CL-ViT-main/tree/main?tab=readme-ov-file (accessed on 25 August 2025).

The remainder of this paper is organized as follows: Section 2 presents a comprehensive review of background knowledge to contextualize our research. Next, in Section 3, we detail the proposed methodology, including the dataset and the main architecture. We then describe our experimental setup and implementation details in Section 4. Section 5 consists of the results and discussion. Finally, in Section 6, we present the conclusion and future work.

2. Background Knowledge and Motivation

2.1. Continual Learning and Catastrophic Forgetting

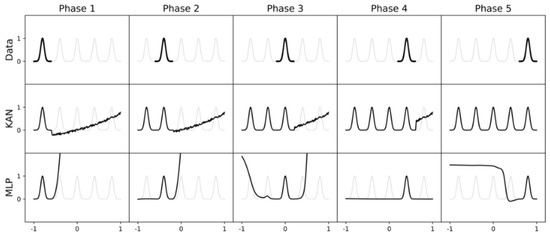

CL enables models to sequentially learn multiple tasks while retaining prior knowledge, mimicking how humans learn [7]. As shown in Figure 3, a central challenge in CL is catastrophic forgetting [30], where neural networks lose performance on earlier tasks when trained on new ones. This occurs because shared model parameters are overwritten during updates, and standard training methods lack mechanisms to mitigate this problem by preserving task-specific knowledge.

Figure 3.

Catastrophic forgetting: MLP vs. KAN. The MLP waveforms become distorted as new phases are introduced, indicating catastrophic forgetting, whereas the KAN waveforms remain consistent across subsequent phases, indicating better knowledge retention.

2.2. Vision Transformers in Continual Learning

ViTs [27] have advanced computer vision by capturing long-range dependencies via self-attention. However, they remain vulnerable to catastrophic forgetting when learning sequential tasks, especially in data-scarce domains like medical imaging [31]. To mitigate forgetting, recent work proposed replacing ViT MLP blocks with KANs, which utilize spline-based activations to selectively update parameters, thereby reducing task interference and improving knowledge retention [29].

2.3. Task-Based Incremental Learning and Replay Methods

Task-based incremental learning provides explicit task identifiers, simplifying adaptation to changing data and knowledge retention [32]. Replay-based methods mitigate forgetting by interleaving data from previous tasks during training, either via stored exemplars (experience replay) [33] or synthesized samples (generative replay) [34]. While effective, these approaches face challenges in scalability, memory efficiency, and data fidelity.

To mitigate catastrophic forgetting in CL, we integrate a replay buffer that stores a fixed number of representative samples from previous tasks. Rather than retaining the entire historical dataset, we employ reservoir sampling to maintain a balanced representation of past knowledge within a constrained memory budget. During training, each mini batch consists of a mixture of current-task samples and replayed samples from the buffer. This strategy ensures that gradient updates are influenced by both new and historical data, thereby preserving previously learned spline parameters and control-point positions in the KAN modules.
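The replay strategy described above can be sketched as follows. This is a minimal illustration rather than the implementation used in this work: it assumes the classic reservoir sampling scheme (Algorithm R), and the class name `ReservoirReplayBuffer`, the helper `mixed_batch`, the capacity, and the batch sizes are all hypothetical choices.

```python
import random


class ReservoirReplayBuffer:
    """Fixed-capacity buffer filled by reservoir sampling (Algorithm R)."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []        # stored (sample, task_id) tuples
        self.num_seen = 0      # total number of samples offered so far
        self.rng = random.Random(seed)

    def add(self, item):
        self.num_seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # The new item is kept with probability capacity / num_seen,
            # so every sample seen so far is equally likely to be stored.
            j = self.rng.randrange(self.num_seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))


def mixed_batch(current_batch, buffer, replay_size):
    """Concatenate current-task samples with replayed samples."""
    return list(current_batch) + buffer.sample(replay_size)


# Hypothetical stream: three past tasks with 1000 samples each.
buffer = ReservoirReplayBuffer(capacity=100)
for task_id in range(3):
    for i in range(1000):
        buffer.add((f"x_{task_id}_{i}", task_id))

batch = mixed_batch([("x_new", 3)] * 8, buffer, replay_size=8)
```

Because every offered sample has the same probability of residing in the buffer, the stored set stays approximately balanced across past tasks without keeping the full historical dataset.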

Given that replay introduces samples from multiple tasks, each with potentially different difficulty levels and gradient magnitudes, we adopt a fixed loss-scaling approach to stabilize optimization. Specifically, the total loss is computed as follows:

$$\mathcal{L}_{\text{total}} = \lambda_{\text{cur}}\,\mathcal{L}_{\text{cur}} + \lambda_{\text{rep}}\,\mathcal{L}_{\text{rep}}$$

where $\mathcal{L}_{\text{cur}}$ and $\mathcal{L}_{\text{rep}}$ denote losses from current-task and replayed samples, respectively. Coefficients $\lambda_{\text{cur}}$ and $\lambda_{\text{rep}}$ are empirically fixed to balance stability and plasticity, preventing the model from overfitting to the current task or forgetting prior tasks. This fixed scaling avoids oscillations in gradient magnitudes that may occur with fully adaptive weighting, leading to more consistent convergence across CL sessions.
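As a small illustration of the fixed weighting of current-task and replay losses, the sketch below combines two softmax cross-entropy terms with constant coefficients. The function names and the default weights (1.0 and 0.5) are placeholders chosen for this example; they are not the values used in the experiments.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean softmax cross-entropy for integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def total_loss(cur_logits, cur_labels, rep_logits, rep_labels,
               w_current=1.0, w_replay=0.5):
    """Fixed (non-adaptive) weighting; the default weights are placeholders."""
    loss_current = cross_entropy(cur_logits, cur_labels)
    loss_replay = cross_entropy(rep_logits, rep_labels)
    return w_current * loss_current + w_replay * loss_replay
```

Since the coefficients are constants, the relative contribution of replayed samples to the gradient does not change from one session to the next.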

2.3.1. Comparative Analysis

Replay-based methods are often contrasted with other CL strategies. Regularization-based methods, such as Elastic Weight Consolidation and Synaptic Intelligence, penalize changes to parameters critical to previous tasks, thereby reducing forgetting. Architectural approaches like progressive networks allocate separate modules for each task, avoiding interference but at the cost of scalability. Replay-based methods strike a balance between these extremes by leveraging prior task data to reinforce learning while maintaining flexibility for new tasks.

2.3.2. Existing Gaps

Despite their success, replay-based approaches face limitations, including memory efficiency, effective task representation, and robustness to distribution shifts. Additionally, previous research into task-based incremental learning scenarios has often lacked a comprehensive exploration of how replay-based strategies can enhance knowledge transfer across tasks. Addressing these gaps requires innovative mechanisms to optimize memory usage, improve generative data quality, and integrate task-specific insights into replay dynamics.

This work aims to advance the state of task-based incremental learning by proposing novel replay-based mechanisms tailored to task-specific requirements. By addressing the aforementioned challenges, this study contributes to the broader understanding and application of CL in dynamic environments. The integration of KANs into the ViT architecture represents an innovative attempt to combine the theoretical strengths of KAN with the practical successes of ViT. KAN’s ability to approximate functions efficiently and its potential for better knowledge retention complement ViT’s superior feature extraction and scalability. This hybrid approach seeks to answer several key questions, including the following: Can KANs enhance ViT’s capacity for CL by mitigating forgetting while maintaining learning efficiency? Does this combination provide a synergistic effect that improves overall task performance, especially in challenging scenarios with complex data like those found in the CIFAR100 dataset?

This research is motivated by the desire to develop architectures that not only achieve high accuracy but also exhibit resilience and adaptability in CL environments, paving the way for more advanced solutions for lifelong learning systems.

Another important direction in CL focuses not on modifying the backbone but on stabilizing the classifier layer. For instance, the Discrete Representation Classifier replaces the traditional fully connected classifier with a discrete, feature-salient classifier that emphasizes high-importance features and suppresses less relevant ones. This results in a classifier that is both efficient and effective at preserving previously learned decision boundaries without altering the backbone architecture [35]. In contrast, our KAN-based ViTs tackle forgetting by introducing localized plasticity within the network core, specifically in the MLP replacement with spline-based activations that ensure only a sparse subset of parameters is updated per sample. Thus, while the Discrete Representation Classifier operates at the output boundary to “freeze” classification behavior, our approach embeds forgetting resistance in the model’s internal dynamics, underscoring the novelty of our architectural strategy.

2.4. Summary

KANs have shown promise in image classification tasks due to their foundation in the Kolmogorov–Arnold representation theorem, which states that any continuous multivariate function can be represented as a superposition of univariate functions. This mathematical framework enables KANs to approximate complex mappings with high efficiency and compactness, making them particularly suitable for image classification, where intricate patterns and relationships need to be captured. By leveraging this ability, KANs can efficiently model non-linear relationships in image data, leading to accurate and robust classification outcomes. Moreover, their structure may provide advantages in terms of adaptability and knowledge retention, which are critical in dynamic tasks like CL. In this context, KANs can serve as standalone classifiers or be integrated into more complex architectures, such as ViTs, to enhance feature extraction and classification performance. The theoretical strengths of KANs, combined with their practical applicability, make them a valuable tool for tackling remaining challenges in modern image classification.

The central hypothesis of this research is that replacing MLPs in ViTs with KANs will lead to a reduction in catastrophic forgetting and an enhancement in the overall learning capabilities of the model in CL scenarios. For instance, Liu et al. [29] posited that KANs exhibit local plasticity and can avoid catastrophic forgetting by leveraging the locality of splines. They highlighted that spline bases are local, so a new sample will only affect a few nearby spline coefficients, leaving far-away coefficients intact. This is in contrast with MLPs, which remodel whole regions after seeing new data samples. This research aims to empirically validate this claim and explore the effectiveness of KANs in various CL settings.

ViTs represent a paradigm shift in computer vision. Since their introduction, the field has moved away from the traditional dominance of Convolutional Neural Networks (ConvNets) [36]. Unlike ConvNets, which rely on convolutional layers [36] to extract features, ViTs divide an image into patches and treat these patches as tokens, similar to words in a sentence, feeding them into a Transformer architecture [37]. This approach allows ViTs to capture global relationships between often remote image regions through self-attention mechanisms.

3. Materials and Methods

3.1. Kolmogorov–Arnold Networks

KANs, grounded in the Kolmogorov–Arnold representation theorem [38], model multivariate functions as sums of univariate continuous functions. Unlike traditional MLPs, KANs employ learnable spline-based activation functions, enabling local parameter updates that preserve prior knowledge during training [29]. Efficient variants like EfficientKAN reduce computational costs [39], making them practical for CL applications. One of the key benefits of KANs is their enhanced interpretability; they break down complex functions into simpler, single-variable components, allowing for a clearer understanding of model predictions [29,40,41].

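KANs are based on a theorem that states that any multivariate continuous function can be decomposed into sums and compositions of univariate functions. This mathematical foundation enables KANs to approximate complex mappings with high precision, even in high-dimensional spaces. The KAN equation is expressed as follows:

$$f(x_1, \ldots, x_n) = \sum_{q=1}^{2n+1} \Phi_q\left(\sum_{p=1}^{n} \phi_{q,p}(x_p)\right)$$

where $\phi_{q,p}$ represents the spline functions, while $\Phi_q$ represents the transformations.

The activation function ($\phi(x)$) is defined as follows:

$$\phi(x) = w\left(b(x) + \text{spline}(x)\right)$$

where $w$ represents a weight, $b(x)$ is the basis function (implemented as silu), and spline is the spline function. The basis function ($b(x)$) is defined as follows:

$$b(x) = \text{silu}(x) = \frac{x}{1 + e^{-x}}$$

The spline function spline is expressed as follows:

$$\text{spline}(x) = \sum_{i} c_i B_i(x)$$

where $c_i$ are learnable coefficients and $B_i$ are B-spline basis functions.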
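To make the locality of this activation concrete, the following sketch evaluates a weighted sum of a silu basis term and a B-spline term and checks which spline coefficients a single input actually touches. It is an illustrative example under stated assumptions, not the code used in this work: the uniform knot grid on [-1, 1], the cubic order, and the function names `bspline_basis` and `kan_activation` are choices made for this example.

```python
import numpy as np


def bspline_basis(x, grid, k):
    """Evaluate all degree-k B-spline basis functions at x (Cox-de Boor).

    x: array of shape (n,); grid: strictly increasing knots of shape (g,).
    Returns an array of shape (n, g - k - 1).
    """
    x = np.asarray(x, dtype=float)[:, None]
    # Degree 0: indicator of each knot interval [t_i, t_{i+1}).
    B = ((x >= grid[:-1]) & (x < grid[1:])).astype(float)
    for d in range(1, k + 1):
        left = (x - grid[:-(d + 1)]) / (grid[d:-1] - grid[:-(d + 1)])
        right = (grid[d + 1:] - x) / (grid[d + 1:] - grid[1:-d])
        B = left * B[:, :-1] + right * B[:, 1:]
    return B


def silu(x):
    return x / (1.0 + np.exp(-x))


def kan_activation(x, coef, grid, k=3, w=1.0):
    """phi(x) = w * (silu(x) + sum_i c_i B_i(x))."""
    return w * (silu(x) + bspline_basis(x, grid, k) @ coef)


# Uniform grid with G intervals on [-1, 1], extended by k knots on each side.
k, G = 3, 10
grid = np.arange(-k, G + k + 1) * (2.0 / G) - 1.0
coef = np.random.default_rng(0).normal(size=G + k)

x = np.array([0.05])
B = bspline_basis(x, grid, k)
# d(phi)/d(c_i) = w * B_i(x), so only coefficients with B_i(x) != 0 get a
# gradient: k + 1 = 4 of the G + k = 13 coefficients for this input.
active = np.nonzero(B[0])[0]
print(active)
```

Changing any coefficient outside `active` leaves the output at this input unchanged, which is the mechanism behind the local plasticity argument.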

KANs utilize a hierarchical design, where univariate functions model specific input dimensions and their interactions are synthesized through aggregation layers. This design allows for efficient representation and generalization across diverse tasks.

KANs are particularly advantageous in CL due to their ability to preserve previously learned representations. Their modular design helps mitigate catastrophic forgetting by isolating task-specific representations. KANs outperform traditional MLPs in scenarios where preserving prior knowledge while learning new tasks is critical, as demonstrated by their superior performance on incremental classification tasks.

3.2. Kolmogorov–Arnold Network-ViT

The KAN-ViT architecture combines the theoretical strengths of KAN with the powerful feature extraction capabilities of ViTs. ViTs utilize self-attention mechanisms to capture global dependencies in image data, enabling them to model relationships between image patches effectively. In KAN-ViT, the KAN framework is embedded within the ViT architecture to enhance the model’s adaptability and efficiency in learning diverse tasks. KAN modules replace or augment MLP layers in the transformer blocks, improving the model’s robustness in CL scenarios. The main advantage of this architecture comes from how the KAN’s hierarchical function approximation complements the ViT’s attention mechanisms, resulting in richer feature representations. The KAN-ViT architecture also retains knowledge better than standard ViTs in sequential tasks, especially in the early stages of incremental learning. While KAN-ViT demonstrates an initial improvement in incremental accuracy compared to standalone MLP or ViT architectures, its performance converges with ViTs in later stages, highlighting areas for further optimization.

In summary, each of these architectures (MLP, KAN, and KAN-ViT) offers distinct advantages for image classification tasks. MLPs serve as a baseline model, while KAN provides a theoretically grounded approach for efficient functional approximation and knowledge retention. KAN-ViT leverages the strengths of both KANs and ViTs, offering a hybrid solution that excels in the early CL stages. Together, these architectures represent a spectrum of strategies for tackling image classification challenges in dynamic and evolving learning environments. Integrating KANs into the ViT framework [42] involves replacing the standard MLP layers in ViTs with KAN modules. This integration leverages the theoretical advantages of KAN’s hierarchical functional approximation while maintaining the powerful global feature extraction capabilities of ViTs. Below is a detailed explanation of the integration process.

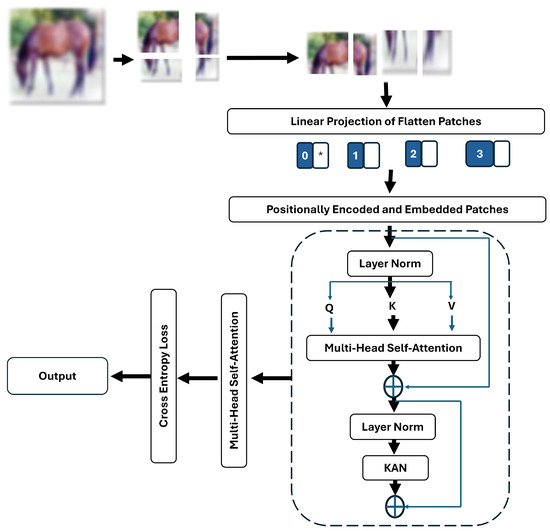

3.3. Replacement of MLP Layers with Kolmogorov–Arnold Networks

In the standard ViT architecture, the MLP layers within each transformer block play a crucial role in processing the outputs of the self-attention mechanism. These MLP layers consist of fully connected layers that perform non-linear transformations and are vital for refining feature representations. KAN replaces these MLP layers with a modular architecture inspired by the Kolmogorov–Arnold theorem, as shown in Figure 4. The KAN modules decompose complex multi-dimensional mappings into simpler univariate functions and their compositions. KANs’ hierarchical decomposition provides several advantages, such as the ability to approximate complex functions, making them highly suitable for learning intricate patterns in image data. In addition, KANs’ modular structure helps isolate representations, reducing interference between tasks in CL scenarios.

Figure 4.

The proposed methodology replaces MLP layers in the selected ViT model with KANs. * represents the special classification token ([CLS]) and 0, 1, 2, 3 denote the indices of patch embeddings.

3.4. Mathematical Formulation

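In a standard ViT encoder layer, the Feed-Forward Network (FFN) is expressed as follows:

$$\mathrm{FFN}(X) = \sigma(X W_1)\, W_2,$$

where $X \in \mathbb{R}^{n \times d}$ is the sequence of token embeddings, $d$ is the hidden dimension, $W_1 \in \mathbb{R}^{d \times d_{ff}}$, $W_2 \in \mathbb{R}^{d_{ff} \times d}$ are weight matrices, $\sigma$ is an activation function (e.g., GELU), and $d_{ff}$ is the intermediate dimension.

In our KAN-based FFN, the MLP is replaced with a kernel-based adaptive computation:

$$\mathrm{KAN\text{-}FFN}(X) = \Phi(X)\, W_k + X W_s + b,$$

where $\Phi$ is a learnable kernel expansion mapping ($\mathbb{R}^{d} \to \mathbb{R}^{m}$) to a richer feature space, $X W_s$ is a linear shortcut path, $W_k \in \mathbb{R}^{m \times d}$ and $W_s \in \mathbb{R}^{d \times d}$ are learnable projection matrices, and $b$ is a bias term. The kernel expansion is computed as follows:

$$\Phi(x) = \big[\kappa(x, c_1), \ldots, \kappa(x, c_m)\big],$$

where $\{c_j\}_{j=1}^{m}$ is a learnable set of anchor points, $m$ is the kernel dimension, and $\kappa$ is a non-linear kernel function (e.g., Gaussian RBF, spline).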
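The kernel-based computation described above can be illustrated with a minimal sketch in plain Python. It assumes a Gaussian RBF kernel with a fixed width `gamma` and operates on a single token; the helper names (`rbf_expand`, `matvec`, `kan_ffn`) and all numeric values are hypothetical and are not taken from the authors' implementation.

```python
import math


def rbf_expand(x, anchors, gamma=1.0):
    """Map a d-dimensional token x to m kernel features, one per anchor point."""
    feats = []
    for c in anchors:
        sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        feats.append(math.exp(-gamma * sq_dist))  # Gaussian RBF (assumed kernel)
    return feats


def matvec(vec, mat):
    """Multiply a row vector of length r by an r x c matrix (list of rows)."""
    return [sum(v * row[j] for v, row in zip(vec, mat)) for j in range(len(mat[0]))]


def kan_ffn(x, anchors, w_kernel, w_shortcut, bias, gamma=1.0):
    """Kernel path plus linear shortcut path plus bias, for one token."""
    phi = rbf_expand(x, anchors, gamma)   # d -> m kernel features
    kernel_path = matvec(phi, w_kernel)   # m -> d projection
    shortcut = matvec(x, w_shortcut)      # d -> d linear shortcut
    return [k + s + b for k, s, b in zip(kernel_path, shortcut, bias)]


# Toy configuration: d = 2, m = 3 (illustrative values only).
anchors = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
token = [1.0, 1.0]
features = rbf_expand(token, anchors)
output = kan_ffn(
    token,
    anchors,
    w_kernel=[[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]],
    w_shortcut=[[1.0, 0.0], [0.0, 1.0]],
    bias=[0.5, -0.5],
)
```

Because the output has the same dimension as the input token, a block of this shape can stand in for the FFN without changing the surrounding residual connections.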

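Finally, the KAN block replaces the MLP in the encoder while retaining residual connections and normalization:

$$X' = X + \mathrm{MHSA}(\mathrm{LN}(X)), \qquad Y = X' + \mathrm{KAN\text{-}FFN}(\mathrm{LN}(X')),$$

where $\mathrm{MHSA}$ denotes multi-head self-attention and $\mathrm{LN}$ denotes layer normalization.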

Algorithm 1 details the forward pass of the proposed ViT-KAN model, where the proposed approach modifies the standard ViT by replacing the conventional MLP in each Transformer encoder block with a KAN layer. The input image is first split into non-overlapping patches, linearly projected into token embeddings, and combined with positional encodings. Each encoder block applies multi-head self-attention, followed by a KAN layer. In the KAN layer, the linear projection output is passed through a spline-based activation, modeled as a weighted sum of B-spline basis functions. Unlike global activations (e.g., ReLU and GELU), B splines have compact, local support, so only coefficients corresponding to the active input interval receive gradient updates during backpropagation. This inherently restricts updates to a small subset of parameters, enforcing localized parameter adaptation and minimizing interference with previously learned representations. Such locality makes KANs particularly suitable for CL, as this local adaptation mitigates catastrophic forgetting while retaining ViTs’ representational power. Finally, the [CLS] token is passed to a classification head to produce class probabilities.

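| Algorithm 1 ViT with KANs replacing MLPs. | |
| --- | --- |
| Require: Image $I \in \mathbb{R}^{H \times W \times C}$ | |
| Ensure: Class probabilities $\hat{y}$ | |
| 1: Patch Embedding: | |
| 2: $X_p \leftarrow \mathrm{Patchify}(I)$ | |
| 3: $Z_0 \leftarrow [x_{\mathrm{cls}};\, X_p E] + E_{\mathrm{pos}}$ | |
| 4: Transformer Encoder Blocks: | |
| 5: for $l = 1$ to $N$ do | |
| 6: $A_l \leftarrow \mathrm{MHSA}(\mathrm{LN}(Z_{l-1}))$ | |
| 7: $Z'_l \leftarrow Z_{l-1} + A_l$ | |
| 8: KAN Layer replacing MLP: | |
| 9: $K_l \leftarrow \mathrm{KAN\_Layer}(\mathrm{LN}(Z'_l))$ | |
| 10: $Z_l \leftarrow Z'_l + K_l$ | |
| 11: end for | |
| 12: Classification Head: | |
| 13: $h \leftarrow \mathrm{LN}(Z_N^{\mathrm{cls}})$ | |
| 14: $\hat{y} \leftarrow \mathrm{softmax}(W_{\mathrm{head}}\, h + b_{\mathrm{head}})$ | |
| 15: function KAN_Layer(z) | |
| 16: $u \leftarrow W z + b$ | ▹ Linear projection |
| 17: $\phi(u) = \sum_{i} c_i B_i(u)$ | ▹ Spline-based activation |
| 18: $v \leftarrow \phi(u)$ | |
| 19: return v | |
| 20: end function | |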
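The locality argument behind the spline-based activation can be checked numerically. The sketch below is a minimal illustration in plain Python, assuming cubic B-splines on a uniform grid of 8 intervals over [-1, 1] and a squared-error loss on a single scalar activation; the grid size, coefficients, learning rate, and function names are illustrative assumptions, not the authors' settings.

```python
def make_knots(grid_size, degree, lo=-1.0, hi=1.0):
    """Uniform knot vector on [lo, hi], extended by `degree` knots on each side."""
    h = (hi - lo) / grid_size
    return [lo + (j - degree) * h for j in range(grid_size + 2 * degree + 1)]


def bspline_basis(i, k, t, x):
    """Cox-de Boor recursion: i-th B-spline basis of degree k on knots t, at x."""
    if k == 0:
        return 1.0 if t[i] <= x < t[i + 1] else 0.0
    left = 0.0
    if t[i + k] != t[i]:
        left = (x - t[i]) / (t[i + k] - t[i]) * bspline_basis(i, k - 1, t, x)
    right = 0.0
    if t[i + k + 1] != t[i + 1]:
        right = ((t[i + k + 1] - x) / (t[i + k + 1] - t[i + 1])
                 * bspline_basis(i + 1, k - 1, t, x))
    return left + right


def basis_values(x, knots, degree):
    n_basis = len(knots) - degree - 1
    return [bspline_basis(i, degree, knots, x) for i in range(n_basis)]


def spline_activation(coeffs, x, knots, degree):
    """phi(x) = sum_i c_i * B_i(x)."""
    return sum(c * b for c, b in zip(coeffs, basis_values(x, knots, degree)))


def active_indices(x, knots, degree):
    """Coefficients whose gradient d phi / d c_i = B_i(x) is non-zero at x."""
    return [i for i, b in enumerate(basis_values(x, knots, degree)) if b > 0.0]


def sgd_step(coeffs, x, target, knots, degree, lr=0.1):
    """One gradient step on (phi(x) - target)^2 with respect to the coefficients."""
    basis = basis_values(x, knots, degree)
    err = sum(c * b for c, b in zip(coeffs, basis)) - target
    return [c - lr * 2.0 * err * b for c, b in zip(coeffs, basis)]


DEGREE = 3
KNOTS = make_knots(8, DEGREE)
coeffs = [0.1 * i for i in range(len(KNOTS) - DEGREE - 1)]  # 11 coefficients

before = spline_activation(coeffs, 0.3, KNOTS, DEGREE)
updated = sgd_step(coeffs, -0.8, target=1.0, knots=KNOTS, degree=DEGREE)
after = spline_activation(updated, 0.3, KNOTS, DEGREE)
```

In this toy setting, an input at -0.8 touches only coefficients 0 to 3, while the activation at 0.3 depends only on coefficients 5 to 8, so the update leaves the value at 0.3 unchanged. A global activation with a shared weight would not have this property.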

3.5. Ensuring Seamless Integration of KAN Layers

To ensure smooth integration, the KAN modules are designed to be compatible with the dimensions and computational requirements of the original MLP layers. The input and output dimensions of the KAN modules align with those of the replaced MLP layers, ensuring no disruption in the Transformer block pipeline. The hierarchical structure of KAN is implemented in a way that retains a similar computational footprint, making it scalable to large datasets and high-dimensional image tasks. The integration process may involve the fine-tuning of hyperparameters, adopting a specialized optimizer, or employing regularization techniques to ensure stable and efficient training of the KAN layers within the ViT framework.

3.6. Synergy Between Kolmogorov and Vision Transformer

The combination of KANs and ViTs creates a synergistic effect; for instance, while ViT’s self-attention mechanism excels at modeling global dependencies between image patches, KANs enhance the non-linear transformations applied to these dependencies, enriching the overall feature representation. KAN’s modular design integrates seamlessly into the sequential structure of ViTs, ensuring adaptability to various datasets and tasks. Moreover, KAN’s capacity to isolate learned knowledge makes the hybrid model more resistant to catastrophic forgetting when compared to traditional MLPs.

The ViT architecture has revolutionized the field of computer vision, demonstrating remarkable performance across various tasks [31]. However, despite their success, ViTs often grapple with the challenge of catastrophic forgetting, wherein the model’s ability to retain previously learned knowledge diminishes upon exposure to new tasks. To address this limitation, this paper explores the integration of KANs as replacements for traditional MLP layers within a standard ViT architecture, aiming to enhance knowledge retention and mitigate catastrophic forgetting. ViTs inherently capture long-range dependencies and enable parallel processing yet lack inductive biases and efficiency benefits. This means they face significant computational and memory challenges that limit their real-world applicability [43]. The fundamental concept of ViTs involves partitioning an input image into a sequence of patches, which are then treated as tokens akin to words in natural language processing [44]. By replacing the MLP layers in ViTs with KANs, it is hypothesized that the resulting architecture will exhibit superior performance in CL scenarios, demonstrating improved resistance to catastrophic forgetting while maintaining or enhancing accuracy on individual tasks.

This research investigates the effects of replacing the standard MLPs in ViTs with KANs to address catastrophic forgetting and improve learning capabilities. KANs are based on the Kolmogorov–Arnold representation theorem, which posits that any continuous function with multiple variables can be expressed as a finite sum of univariate function compositions. In this work, a standard pre-trained ViT model is selected, and the MLP layers are replaced with KAN layers while ensuring smooth integration within the ViT architecture. Unlike MLPs, which use fixed activation functions, KANs employ learnable activation functions on edges, enabling flexible and expressive functional approximations. The inherent locality of spline bases in KANs ensures that each sample primarily affects only a few nearby spline coefficients, thereby minimizing the impact on distant regions and preserving previously learned knowledge.
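For reference, the representation theorem mentioned above is commonly stated as follows for a continuous function $f$ on the unit cube $[0,1]^n$. The symbols are the conventional ones from the general literature and are not notation defined elsewhere in this paper.

```latex
f(x_1, \ldots, x_n) \;=\; \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right),
\qquad
\phi_{q,p} : [0,1] \to \mathbb{R}, \quad \Phi_q : \mathbb{R} \to \mathbb{R} \ \text{continuous}.
```

Every function on the right-hand side is univariate, and the only multivariate operation is addition. KAN layers parameterize such univariate functions with learnable splines.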

The integration of KANs into ViTs represents a novel approach to CL, offering the potential to overcome the limitations of traditional MLP-based architectures. The objective of this approach is to enhance the ability of ViTs to retain knowledge across multiple tasks, reducing the impact of catastrophic forgetting, as well as providing a comprehensive analysis of various optimizers and their effects on KAN-based ViTs. Experimental results on image classification tasks reveal that KAN-based ViTs exhibit superior performance in CL scenarios, demonstrating improved resistance to catastrophic forgetting compared to traditional MLP-based ViTs. The implementation of adaptive token merging mitigates information loss through adaptive token reduction across layers and batches by adjusting layer-specific similarity thresholds [45].

The KAN-ViT architecture’s resistance to catastrophic forgetting is rigorously measured and compared against traditional MLP-based ViTs. The findings provide valuable insights into the strengths and limitations of KAN-based ViTs, paving the way for future research in CL and adaptive neural networks. B splines, which are piecewise polynomial functions used to create smooth and flexible curves, offer local control, i.e., adjustments to one part of the spline do not affect distant regions. CL approaches such as replay, parameter regularization, functional regularization, optimization-based approaches, context-dependent processing, and template-based classification are employed to further mitigate catastrophic forgetting. This investigation ultimately seeks to provide a thorough understanding of the capabilities of KAN-based ViTs in CL settings. The results highlight their potential for real-world applications where knowledge retention is paramount.

3.7. Datasets and Task Splits

To rigorously evaluate the efficacy and generalizability of the proposed methodology, we conducted experiments on three benchmark datasets widely recognized in the field of ML, namely, the MNIST (https://www.kaggle.com/datasets/hojjatk/mnist-dataset, (accessed on 18 June 2025)), CIFAR100 ([39] https://www.kaggle.com/c/cifar100-image-classification/data, (accessed on 29 June 2025)), and TinyImageNet-200 [46] datasets. These datasets were chosen due to their distinct characteristics and representational complexities, allowing for a thorough assessment of our approach under varying conditions [47]. The MNIST dataset comprises a large collection of handwritten digits ranging from 0 to 9 [48]. It serves as a foundational dataset for image classification tasks, enabling researchers to prototype and evaluate novel algorithms with relative ease. CIFAR100, on the other hand, presents a more challenging scenario with its collection of 100 distinct object classes, each containing a diverse set of images. The TinyImageNet-200 dataset is a compact version of the original ImageNet dataset, created to facilitate faster experimentation and model evaluation while maintaining diverse visual content. It contains 200 object categories, each with 500 training images, 50 validation images, and 50 test images, totaling around 120,000 images. As shown in Figure 5, all images are resized to a uniform resolution of 64 × 64 pixels, significantly reducing storage and computational requirements compared to the full ImageNet dataset. The use of publicly available datasets and benchmarks has been crucial to ML progress by allowing for quantitative comparison of new methods [49].

Figure 5.

TinyImageNet-200: 200 classes (64 × 64 images) dataset.

In the experiments conducted to evaluate the performance of MLPs and KANs, the MNIST, CIFAR100, and TinyImageNet-200 datasets were strategically divided into multiple tasks to simulate a CL scenario. This setup was designed to mimic real-world environments where models must learn and adapt to sequentially presented data without access to previously seen information. By segmenting the datasets into distinct, non-overlapping tasks, the experiments aimed to test the model’s ability to retain knowledge from earlier tasks while learning new ones, addressing the critical challenge of catastrophic forgetting.

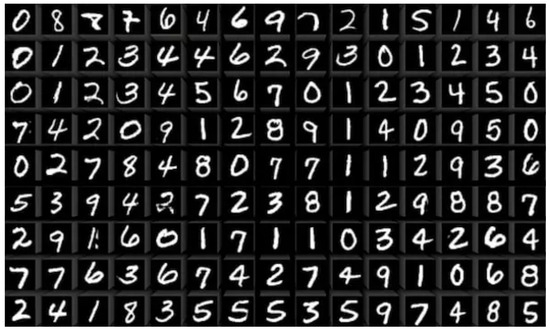

For the MNIST dataset, which contains 10 classes for digits from 0 to 9, as shown in Figure 6 (https://datasets.activeloop.ai/docs/ml/datasets/mnist/, (accessed on 29 June 2025)), the data was divided into 5 tasks, each comprising 2 classes. For example, the first task involved training the model on classes 0 and 1, the second task on classes 2 and 3, and so forth, until all 10 classes were covered. This sequential division ensured that each task introduced entirely new data, requiring the model to update its knowledge base while being unable to revisit the data from previous tasks. The training process was tailored to the incremental learning setup, with 7 epochs allocated for the first task to allow the model sufficient time to build a strong foundation. For the remaining tasks, the model was trained for 5 epochs each, testing its efficiency in integrating new information without over-relying on extended training.

Figure 6.

Images from the MNIST dataset.

The CIFAR100 dataset, a more complex and diverse dataset consisting of 100 classes, was divided into 10 tasks, with each task containing 10 distinct classes. Similar to the MNIST setup, the tasks were arranged sequentially, with the first task involving classes 0 to 9, the second task covering classes 10 to 19, and so on, until all 100 classes were utilized. This division introduced a greater challenge compared to MNIST, as the model had to handle a larger number of classes with more intricate visual features. The training schedule for CIFAR100 reflected that dataset’s complexity; 25 epochs were dedicated to the first task, enabling the model to establish a robust initial representation. For subsequent tasks, the model was trained for 10 epochs per task, emphasizing the need to adapt rapidly to new data while retaining prior knowledge. Images from the CIFAR100 dataset are shown in Figure 7 (https://datasets.activeloop.ai/docs/ml/datasets/cifar-100-dataset/, (accessed on 29 June 2025)).

In our CL setup, the TinyImageNet-200 dataset was partitioned into five sequential tasks to simulate incremental exposure to new classes over time. Each task contained 40 distinct classes (out of a total of 200), with no overlap in categories between tasks. This division preserved the dataset’s balance, ensuring that each task included the same number of training, validation, and test samples per class. By structuring the data this way, the CL experiments could evaluate how well different models retained knowledge from previously learned tasks (stability) while acquiring new information (plasticity). This multi-task configuration also allowed for direct measurement of catastrophic forgetting, average incremental accuracy, and other CL metrics in a controlled, reproducible setting.

Figure 7.

Example images from the CIFAR-100 dataset. The figure illustrates the diversity of the dataset across 100 object categories.

By structuring the datasets in this way, the experiments effectively simulated the sequential nature of CL, where the model encounters new information in stages and must balance the learning of new tasks (plasticity) with the preservation of previously acquired knowledge (stability). The absence of access to prior task data heightened the challenge, requiring the model to rely on its internal mechanisms to mitigate catastrophic forgetting. This experimental design provided a controlled and rigorous environment to assess the potential of KANs and their integration with ViTs for CL applications.
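The class-incremental splits described in this section can be sketched in a few lines of plain Python. The function and dictionary names are hypothetical. The contiguous class ordering is stated in the text for MNIST and CIFAR100 but is only an assumption for TinyImageNet-200, for which the text specifies just the number of tasks and classes per task.

```python
def split_into_tasks(num_classes, num_tasks):
    """Partition class ids 0..num_classes-1 into equal, non-overlapping tasks."""
    if num_classes % num_tasks != 0:
        raise ValueError("num_classes must be divisible by num_tasks")
    per_task = num_classes // num_tasks
    return [list(range(t * per_task, (t + 1) * per_task)) for t in range(num_tasks)]


def indices_for_task(labels, task_classes):
    """Positions of the samples whose label belongs to the given task."""
    wanted = set(task_classes)
    return [i for i, y in enumerate(labels) if y in wanted]


# (number of classes, number of tasks) as described in the text.
BENCHMARKS = {
    "MNIST": (10, 5),
    "CIFAR100": (100, 10),
    "TinyImageNet-200": (200, 5),
}

TASKS = {name: split_into_tasks(*cfg) for name, cfg in BENCHMARKS.items()}

# Toy label list, used only to show how samples are routed to a task.
toy_labels = [0, 3, 1, 2, 0, 9]
first_task_samples = indices_for_task(toy_labels, TASKS["MNIST"][0])
```

During training on task t, only the samples selected for that task would be visible to the model, which reproduces the no-revisit constraint of the setup.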

3.8. Evaluation Metrics

We used three metrics to evaluate the performance of the proposed method: last task accuracy, average incremental accuracy, and average global forgetting.

3.8.1. Last Task Accuracy

We measure the overall performance of the model on the test set of all tasks by calculating the average accuracy of the model on the test set of the classes that it has seen until the final task $T$. This metric is denoted by $A_T$.

3.8.2. Average Incremental Accuracy

We measure the average performance of the model on all tasks by computing the average accuracy of the model for each task. We then take the average of these accuracies across all tasks.

3.8.3. Average Global Forgetting

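We quantify the model’s ability to retain knowledge from previous tasks during the process of learning new tasks. This is achieved by calculating the difference in accuracy on earlier tasks before and after training on the current task. This metric is denoted as follows:

$$F_t = \frac{1}{t-1} \sum_{i=1}^{t-1} \left( a_{t-1,i} - a_{t,i} \right),$$

where $a_{t,i}$ is the test accuracy on task $i$ after training on task $t$.

Furthermore, average global forgetting is measured as follows:

$$\bar{F} = \frac{1}{T-1} \sum_{t=2}^{T} F_t,$$

where $T$ is the total number of tasks.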

3.8.4. Experimental Validation and Benefits

Empirical results from experiments on datasets such as MNIST and CIFAR100 validate the effectiveness of KAN-ViT. These experiments demonstrate that the integration of KANs enhances the model’s performance in the early stages of incremental learning by improving average incremental accuracy and knowledge retention. The hybrid model performs competitively in later stages, maintaining the advantages of both KANs’ theoretical strengths and ViTs’ powerful attention mechanisms. Replacing the MLP layers in a ViT with KAN modules transforms the architecture into a more robust and adaptive system. This integration not only preserves the strengths of ViTs but also incorporates the functional approximation and modular advantages of KANs, making the hybrid KAN-ViT model a compelling choice for complex classification tasks, particularly in CL scenarios.

4. Experimental Setup

The details of the experimental setup are described in their corresponding subsections.

4.1. Training Procedure

The training epochs for Task 1 and the remaining tasks in the MNIST and CIFAR100 experiments were carefully designed to balance the model’s ability to learn the initial task thoroughly while maintaining computational efficiency for subsequent tasks. This division reflects the unique demands of CL, where the model must acquire knowledge incrementally while mitigating catastrophic forgetting of prior tasks. Below is a detailed explanation of the training strategy:

4.1.1. Training Epochs for Task 1

1. MNIST Experiments: The first task in the MNIST experiments was allocated seven epochs, which is slightly more than the subsequent tasks. This additional training time allows the model to establish a solid foundation by thoroughly learning the features of the initial set of classes (two classes in this case). Since the model starts with no prior knowledge, extra epochs are needed to ensure the weights are optimized effectively for the initial learning process. This helps create robust feature representations that can serve as a basis for learning subsequent tasks.

2. CIFAR100 Experiments: In the CIFAR100 experiments, the first task receives 25 epochs. The larger number of epochs compared to MNIST reflects the increased complexity of the CIFAR100 dataset, which consists of higher-resolution images and more diverse classes. The model requires more training time to process and extract meaningful patterns from the more complex data to successfully recognize the first 10 classes. This ensures that the model builds a robust understanding of the dataset, reducing the risk of misrepresentation as new tasks are introduced.

4.1.2. Training Epochs for Remaining Tasks

MNIST Experiments: After the initial task, the model undergoes five epochs of training for each new task. By this stage, the model has already developed basic feature extraction capabilities. The focus shifts to integrating new class-specific knowledge while retaining previously learned information. Limiting the epochs ensures the process is computationally efficient, reducing training time without significantly compromising performance.

CIFAR100 Experiments: Each subsequent task in the CIFAR100 experiments is trained for 10 epochs. The complexity of the CIFAR100 images necessitates more epochs per task compared to MNIST to fine-tune the model for new classes. However, fewer epochs are needed compared to the first task, since that initial learning has already established general features that the model can reuse. This balanced approach prevents overfitting to new tasks while maintaining computational feasibility.

4.1.3. Balancing Training Objectives

The difference in epochs between Task 1 and the remaining tasks reflects two priorities. The extra epochs for Task 1 ensure that the model builds a strong initial representation; the reduced epochs for the remaining tasks conserve computational resources and limit overfitting to newer tasks, which helps preserve knowledge from previous tasks. This epoch distribution aligns with the CL goal of incrementally acquiring knowledge across tasks while mitigating forgetting, ensuring robust performance over sequential learning scenarios.
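The epoch schedule described in this subsection can be written as a small configuration table. The sketch below uses the epoch counts and task counts stated in the text for MNIST and CIFAR100; the dictionary layout and function names are hypothetical.

```python
# Epochs per task as described in the text (first task vs. remaining tasks).
EPOCH_SCHEDULE = {
    "MNIST": {"num_tasks": 5, "first": 7, "rest": 5},
    "CIFAR100": {"num_tasks": 10, "first": 25, "rest": 10},
}


def epochs_for_task(dataset, task_index):
    """Number of training epochs for a 0-based task index."""
    cfg = EPOCH_SCHEDULE[dataset]
    if not 0 <= task_index < cfg["num_tasks"]:
        raise IndexError("task_index out of range for " + dataset)
    return cfg["first"] if task_index == 0 else cfg["rest"]


def total_epochs(dataset):
    """Total training epochs across the whole task sequence."""
    cfg = EPOCH_SCHEDULE[dataset]
    return sum(epochs_for_task(dataset, t) for t in range(cfg["num_tasks"]))
```

Under this schedule, a full MNIST run takes 7 + 4 × 5 = 27 epochs and a full CIFAR100 run takes 25 + 9 × 10 = 115 epochs.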

The primary loss function used during training is the cross-entropy loss, which is standard for classification tasks. This loss measures the divergence between the predicted probability distribution and the true class labels, ensuring the model learns accurate classifications. Additionally, for subsequent tasks beyond Task 1, a replay mechanism is employed to mitigate catastrophic forgetting. Replay loss, which incorporates data from previous tasks, is scaled by a factor of 0.5 to balance its contribution with the primary task’s loss. This scaling ensures that while the model retains knowledge from earlier tasks, it does not overly prioritize old data at the expense of learning new information.
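The combined objective described above can be sketched as follows in plain Python. The 0.5 replay weight comes from the text; the per-batch mean reduction, the function names, and the toy logits are assumptions made for illustration.

```python
import math

REPLAY_WEIGHT = 0.5  # scaling factor for the replay loss, as stated in the text


def cross_entropy(logits, target):
    """Cross-entropy for one sample, using a numerically stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits, batch_targets):
    losses = [cross_entropy(z, y) for z, y in zip(batch_logits, batch_targets)]
    return sum(losses) / len(losses)


def total_loss(new_logits, new_targets, replay_logits=None, replay_targets=None,
               replay_weight=REPLAY_WEIGHT):
    """Current-task loss plus the down-weighted replay loss (tasks after Task 1)."""
    loss = mean_cross_entropy(new_logits, new_targets)
    if replay_logits:
        loss += replay_weight * mean_cross_entropy(replay_logits, replay_targets)
    return loss


# Toy batch: uniform logits over two classes give a loss of ln(2) per sample.
task1_loss = total_loss([[0.0, 0.0]], [0])
later_loss = total_loss([[0.0, 0.0]], [0], [[0.0, 0.0]], [1])
```

For Task 1 the replay term is absent, so the objective reduces to plain cross-entropy.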

A script calculates accuracy as the ratio of correctly predicted samples to the total number of samples in a batch. This metric is computed for each task and aggregated across all tasks learned so far. Incremental accuracy is determined as the mean of these task-specific accuracies, offering a comprehensive view of the model’s performance throughout its CL journey. Forgetting is assessed by comparing the accuracy on previous tasks before and after learning the current task. This metric quantifies the degree to which learning new tasks affects the model’s retention of earlier tasks.
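The bookkeeping described above can be sketched as follows. The sketch assumes an accuracy table `acc` in which `acc[t][i]` is the test accuracy on task `i` measured after training on task `t`, assumes equally sized test sets per task, and interprets forgetting as the drop on earlier tasks before versus after training on the current task. The function names and the toy values are illustrative and are not results from the paper.

```python
def accuracy(preds, labels):
    """Ratio of correctly predicted samples to the total number of samples."""
    correct = sum(int(p == y) for p, y in zip(preds, labels))
    return correct / len(labels)


def incremental_accuracy(acc, t):
    """Mean accuracy over tasks 0..t, measured after training on task t."""
    return sum(acc[t][: t + 1]) / (t + 1)


def average_incremental_accuracy(acc):
    """Mean of the incremental accuracies over all training stages."""
    return sum(incremental_accuracy(acc, t) for t in range(len(acc))) / len(acc)


def last_task_accuracy(acc):
    """Accuracy over all seen tasks after the final task has been learned."""
    return incremental_accuracy(acc, len(acc) - 1)


def forgetting_after_task(acc, t):
    """Mean accuracy drop on tasks 0..t-1 caused by training on task t (t >= 1)."""
    drops = [acc[t - 1][i] - acc[t][i] for i in range(t)]
    return sum(drops) / len(drops)


def average_global_forgetting(acc):
    steps = range(1, len(acc))
    return sum(forgetting_after_task(acc, t) for t in steps) / len(steps)


# Toy accuracy table for three tasks (made-up values for illustration).
toy_acc = [
    [1.0],
    [0.5, 1.0],
    [0.25, 0.5, 1.0],
]
```

With the toy table, the incremental accuracies are 1.0, 0.75, and about 0.583, and the per-step forgetting values are 0.5 and 0.375.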

Training is performed in mini-batches, a standard practice that optimizes memory usage and accelerates computations on modern hardware like GPUs. This approach splits the dataset into manageable portions, allowing the model to iteratively update its parameters. Mini-batch training ensures that gradients are calculated and applied more frequently, leading to smoother and more stable convergence.

Incremental learning strategies are embedded into the training process, where the model learns tasks sequentially while retaining knowledge from previous tasks. This is achieved through mechanisms like replay loaders and task-specific data loaders. Each task has a unique configuration for the number of epochs, replay data usage, and evaluation methods, ensuring the training process is tailored to the specific requirements of each task.
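One way a replay loader could be backed is by a small class-balanced memory. The text does not specify the buffer size or the sampling policy, so the sketch below is only an assumption: it keeps a fixed number of randomly chosen samples per class, and the class name `ReplayBuffer` and its methods are hypothetical.

```python
import random


class ReplayBuffer:
    """Class-balanced memory that keeps a few stored samples per seen class."""

    def __init__(self, per_class, seed=0):
        self.per_class = per_class
        self.rng = random.Random(seed)
        self.store = {}  # label -> list of stored samples

    def add_task(self, samples, labels):
        """After finishing a task, keep up to `per_class` samples of each class."""
        by_class = {}
        for x, y in zip(samples, labels):
            by_class.setdefault(y, []).append(x)
        for y, xs in by_class.items():
            k = min(self.per_class, len(xs))
            self.store[y] = self.rng.sample(xs, k)

    def sample(self, batch_size):
        """Draw a replay mini-batch of (sample, label) pairs without replacement."""
        pool = [(x, y) for y, xs in self.store.items() for x in xs]
        if not pool:
            return []
        return self.rng.sample(pool, min(batch_size, len(pool)))


# Toy usage with integers standing in for images.
buffer = ReplayBuffer(per_class=2, seed=0)
buffer.add_task(list(range(10)), [0] * 5 + [1] * 5)       # task with classes 0 and 1
replay_batch = buffer.sample(3)
buffer.add_task(list(range(10, 20)), [2] * 5 + [3] * 5)   # task with classes 2 and 3
```

A replay mini-batch drawn this way would be passed through the model alongside the current-task mini-batch at each step.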

4.2. Implementation Details

All the experiments were performed on the MNIST, CIFAR-100, and TinyImageNet-200 datasets using an NVIDIA RTX-3090 GPU and 3.8 GHz CPU with 64 GB RAM. The models were implemented using the PyTorch 2.7.0+cu118 framework.

5. Results and Discussion

The pursuit of adaptable and efficient ML models has led to the exploration of novel architectures that are capable of learning continuously without forgetting previously acquired knowledge. In this work, we delve into the efficacy of KANs in the context of CL for image classification, a paradigm shift from traditional ML that requires models to learn sequentially from a stream of tasks [4]. The challenge of catastrophic forgetting, wherein models experience a significant decline in performance on previously learned tasks upon learning new ones, is a central concern in CL [3]. Our investigation encompasses a comparative analysis of standalone MLPs and KAN variants, alongside an examination of KAN integration into the ViT architecture [7]. Through empirical evaluations on the MNIST, CIFAR100, and TinyImageNet-200 datasets, we aim to elucidate the strengths and limitations of KANs in mitigating catastrophic forgetting and enhancing overall CL performance [50].

Our experimental design involved partitioning the MNIST, CIFAR-100, and TinyImageNet-200 datasets into distinct tasks to simulate CL scenarios that mirror the real-world challenges of non-stationary data distributions. Specifically, the MNIST dataset, comprising 10 classes, was divided into 5 tasks, each containing 2 classes; the CIFAR100 dataset, with its 100 classes, was split into 10 tasks, each encompassing 10 classes; and the TinyImageNet-200 dataset was split into 5 tasks of 40 classes each. The rationale behind this setup was to mimic a scenario where a model encounters new information incrementally, thereby requiring it to adapt and learn without compromising its existing knowledge base. The models underwent training for a predetermined number of epochs per task, with the number of epochs for the initial task being greater than for subsequent tasks. This allowed us to observe how the models adapted to the initial learning phase versus subsequent incremental learning phases. We evaluated the performance of KAN variants against traditional MLPs and within the ViT architecture, focusing on metrics indicative of both forward learning (acquiring new knowledge) and backward transfer (retaining old knowledge).

The core objective of this research was to rigorously assess the potential of KANs as a viable alternative to traditional MLPs in CL settings, with a specific focus on image classification tasks. By comparing the performance of KAN variants with traditional MLPs and integrating KANs into the ViT architecture, we aimed to gain insights into the potential advantages and drawbacks of using KANs for CL tasks. Our findings suggest that, in standalone configurations, KAN models exhibit a notable advantage in CL scenarios, showcasing superior resistance to catastrophic forgetting compared to their MLP counterparts. The theoretical underpinnings of KANs lie in the Kolmogorov–Arnold representation theorem, which posits that any multivariate continuous function can be expressed as a finite sum of compositions of continuous univariate functions. This inherent flexibility, coupled with learnable activation functions on edges, empowers KANs to approximate complex functions with greater precision compared to MLPs with fixed activation functions.

The integration of KANs into the ViT architecture yielded a nuanced set of results, with a slight improvement in average incremental accuracy observed, particularly during the initial stages of incremental learning, suggesting that KANs can facilitate faster adaptation to new tasks early in the learning process. However, as the CL process progressed, the performance of KAN-ViT converged with that of MLP-ViT, indicating that the benefits of KANs may diminish over time, possibly due to the increasing complexity of the learned representations or optimization challenges associated with deeper architectures. One potential explanation for this convergence is the inherent capacity of ViTs to capture long-range dependencies through self-attention mechanisms, which may overshadow the representational advantages offered by KANs in deeper layers. Further investigation is warranted to explore strategies for maximizing the benefits of KANs within transformer-based architectures for CL. The use of attention modules and large-scale pre-training contributes to the robustness of ViTs [51].

The integration of KANs into ViTs aims to enhance the resulting model’s capacity to adapt to new tasks without compromising its ability to perform CL. The architecture of KANs is based on B splines, which create smooth curves using a set of control points. The results illustrate that KAN-based ViTs achieve higher accuracy than traditional MLP-based ViTs by using learnable activation functions on edges.

Overall Performance Analysis

The overall performance of the proposed technique was evaluated using various metrics. Table 1 compares the performance of three models (FastKAN, MLP, and EfficientKAN) on the CIFAR100 and MNIST datasets in terms of their accuracy, convergence speed, and computational efficiency. On CIFAR100, EfficientKAN achieves the highest test accuracy (57.5%). FastKAN, while slightly less accurate on CIFAR100 (54.6%), provides faster training and testing times (1.939 s/epoch and 0.123 s/epoch, respectively). In contrast, MLP is the weakest model on CIFAR100, with the lowest test accuracy (54.0%) and the lowest training accuracy (64.0%). On MNIST, FastKAN delivers the best overall performance, with a test accuracy of 98.3%, achieving a good balance between accuracy and computational efficiency. EfficientKAN closely follows, with 98.1% test accuracy, requiring fewer epochs (7) to converge; however, it achieves this with the highest training and testing times. MLP achieves comparable test accuracy on MNIST (98.0%) but trails both KAN variants on the more complex CIFAR100 dataset.

Table 1.

Performance comparison of FASTKAN, MLP, and EfficientKAN.

Overall, EfficientKAN excels in accuracy, particularly for more complex datasets, while FastKAN provides a good trade-off between accuracy and efficiency. The results indicate that model choice depends on the specific dataset and whether accuracy or computational speed is prioritized.

As shown in Table 2, incorporating CNN features into KAN and MLP models markedly boosts accuracy on TinyImageNet-200, with EfficientKAN achieving 51.21% test accuracy, compared to an accuracy below 12% without CNN features, at the cost of slightly increased inference time.

Table 2.

Performance comparison of FASTKAN, MLP, and EfficientKAN models with and without CNN feature integration on the TinyImageNet-200 dataset.

Table 3 presents a comparison of CL performance metrics between the MLP and EfficientKAN models on the MNIST dataset, focusing on three key indicators: average incremental accuracy, last task accuracy, and average global forgetting. EfficientKAN outperforms MLP across all metrics. It achieves a higher average incremental accuracy of 52.2% compared to MLP’s 45.8%, indicating that EfficientKAN consistently performs better across sequential tasks. For last task accuracy, EfficientKAN significantly surpasses MLP, achieving 50.9% compared to MLP’s 22.1%. This result highlights EfficientKAN’s superior ability to adapt to new tasks while retaining knowledge from earlier tasks. In terms of average global forgetting, EfficientKAN demonstrates a marked improvement, achieving 71.0%, compared to MLP’s 95.2%. Lower forgetting indicates that EfficientKAN is more effective at preserving knowledge from earlier tasks in a CL setting.

Table 3.

CL performance comparison: simple MLP vs. EfficientKAN.

In general, these results emphasize EfficientKAN’s robustness in mitigating catastrophic forgetting and its superior adaptability and retention in CL scenarios compared to MLP.

Table 4 reports the CL performance of MLP, Efficient-KAN, and Fast-KAN models on the TinyImageNet-200 dataset using Average Incremental Accuracy (AIA) and Last Task Accuracy (LTA) as evaluation metrics. The results show that the MLP baseline has the weakest performance, with an AIA of 6.53% and an LTA of only 0.50%, reflecting its susceptibility to catastrophic forgetting. In contrast, Efficient-KAN achieves improved results, with an AIA of 10.97% and an LTA of 0.57%, demonstrating that its spline-based representation is more effective at retaining knowledge across tasks. Fast-KAN delivers the highest performance, achieving an AIA of 18.06% and a substantially higher LTA of 8.46%, indicating superior task adaptability and knowledge preservation. Overall, the findings highlight the advantage of KAN-based architectures over traditional MLPs in CL, with Fast-KAN providing the most robust performance on TinyImageNet-200.

Table 4.

CL performance comparison of MLP, Efficient-KAN, and Fast-KAN models on the TinyImageNet-200 dataset.

Table 5 compares the CL performance of ViT-MLP and ViT-KAN across two datasets: MNIST and CIFAR100, focusing on three key metrics. For the MNIST dataset, ViT-KAN achieves a slightly higher average incremental accuracy (18.44%) compared to ViT-MLP (17.70%), indicating better performance in handling sequential tasks. However, in last task accuracy, ViT-MLP (6.66%) outperforms ViT-KAN (4.67%), suggesting that ViT-MLP retains better accuracy for the most recent task. In terms of average global forgetting, ViT-KAN demonstrates a marginal improvement, achieving 33.47% compared to ViT-MLP’s 35.16%, reflecting better retention of earlier tasks. For the CIFAR100 dataset, ViT-KAN again shows superior average incremental accuracy (15.49%) compared to ViT-MLP (13.63%), underscoring its effectiveness in sequential task learning. In last task accuracy, the two models perform almost identically, with ViT-KAN scoring 4.77% and ViT-MLP scoring 4.75%. However, ViT-KAN exhibits slightly higher average global forgetting (49.85%) compared to ViT-MLP (44.67%), indicating a trade-off in retention of earlier tasks in this case.

Table 5.

Performance comparison of ViT_KAN vs. ViT_MLP in CL tasks.

The results highlight ViT-KAN as a promising alternative to ViT-MLP, with noticeable improvements in incremental learning accuracy and modest benefits in catastrophic forgetting, especially on MNIST. However, the trade-offs in retention and last task accuracy on CIFAR100 suggest areas for further refinement.

Table 6 presents a performance comparison between ViT-MLP and ViT-KAN when incorporating replay mechanisms in the context of CL on the CIFAR100 dataset. ViT-KAN outperforms ViT-MLP in Average Incremental Accuracy, achieving 17.23% compared to ViT-MLP’s 16.58%. This indicates that ViT-KAN is better at learning sequential tasks while maintaining a higher overall performance across all tasks. In Last Task Accuracy, which reflects the model’s ability to retain performance on the most recent task, ViT-KAN again has an edge, scoring 6.47% compared to ViT-MLP’s 6.09%. This suggests that ViT-KAN adapts more effectively to new tasks while preserving recent knowledge. However, in terms of Average Global Forgetting, which measures the overall forgetting across all tasks, ViT-KAN shows slightly higher forgetting (57.45%) compared to ViT-MLP (55.72%). While this represents a trade-off, the increased incremental and last task accuracies suggest that ViT-KAN prioritizes learning and adapting to new tasks at a small cost to retention of knowledge from earlier tasks.

Table 6. CL performance: ViT_KAN vs. ViT_MLP with replay.

Table 7 presents the CL performance of ViT_MLP and ViT_KAN when combined with a replay strategy on the TinyImageNet-200 dataset. ViT_KAN outperforms ViT_MLP in both average incremental accuracy (12.89% vs. 10.65%) and last task accuracy (5.69% vs. 5.32%), suggesting that replacing MLP blocks with KAN blocks improves overall accuracy and adaptability across tasks. However, the average global forgetting is higher for ViT_KAN (29.27%) than for ViT_MLP (24.67%), implying that while KAN improves overall accuracy, it may also be more prone to forgetting previously learned tasks. Overall, the findings highlight the trade-off between accuracy gains and increased forgetting when using KAN-based architectures in CL with replay.

Table 7. CL performance comparison of ViT_MLP with replay vs. ViT_KAN with replay on the TinyImageNet-200 dataset.

Overall, with replay mechanisms included, ViT-KAN is the more effective model for CL on both CIFAR100 and TinyImageNet-200, combining better task adaptation and incremental performance with somewhat higher global forgetting.

We analyzed the computational and memory overhead of KANs relative to standard MLPs. KANs have a notably higher parameter count (5.84 million parameters, compared to 3.21 million in MLPs), indicating increased model complexity. This complexity results in longer training and evaluation times per epoch, and peak memory consumption during training is also higher for KANs. Although these factors imply greater computational and memory demands, we consider the overhead justified by the enhanced representational power of KANs and the resulting improvement in model performance.
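
As a rough illustration of where the extra parameters come from, the sketch below counts the weights of a single feed-forward block built from standard linear layers and from KAN layers. It assumes an EfficientKAN-style parameterization (a base weight, `grid_size + spline_order` spline coefficients, and a spline scaler per input-output edge, with no bias); the layer widths and the helper names are hypothetical and are not the configuration used in this study.

```python
def linear_params(d_in, d_out, bias=True):
    """Weights (plus optional bias) of a standard fully connected layer."""
    return d_in * d_out + (d_out if bias else 0)


def kan_linear_params(d_in, d_out, grid_size=5, spline_order=3, scaler=True):
    """Parameter count of one KAN layer under the assumed parameterization."""
    edges = d_in * d_out
    base = edges                                # base (residual) weight per edge
    spline = edges * (grid_size + spline_order) # spline coefficients per edge
    scale = edges if scaler else 0              # optional per-edge spline scaler
    return base + spline + scale


def ffn_params(layer_fn, d_model, d_hidden):
    """Two-layer feed-forward block: d_model -> d_hidden -> d_model."""
    return layer_fn(d_model, d_hidden) + layer_fn(d_hidden, d_model)


# Hypothetical widths chosen only for illustration.
d_model, d_hidden = 64, 128
mlp_count = ffn_params(linear_params, d_model, d_hidden)
kan_count = ffn_params(kan_linear_params, d_model, d_hidden)
print(mlp_count, kan_count)  # 16576 163840
```

The per-block ratio in this sketch need not match the whole-model counts reported above, since those also include attention and embedding parameters and may use different layer widths.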

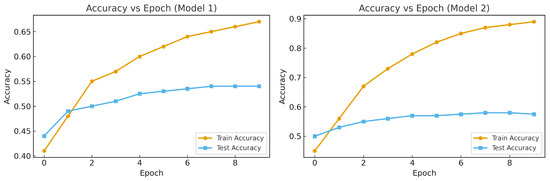

Figure 8 compares the training and testing accuracy of the MLP and EfficientKAN models on the CIFAR-100 dataset. For both models, training accuracy increases steadily with the number of epochs, indicating that both learn effectively from the training data. However, the testing accuracy of EfficientKAN is consistently higher than that of the MLP model, which suggests that EfficientKAN generalizes better to unseen data.

Figure 8. Base-level accuracy of MLP and EfficientKAN on CIFAR-100 dataset.

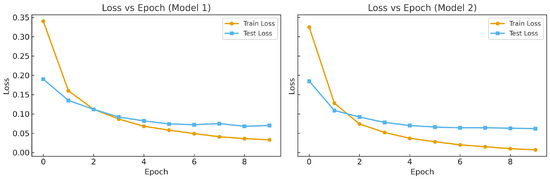

Figure 9 shows the losses of MLP and EfficientKAN over multiple epochs. The loss curves demonstrate the convergence behavior of both models. The MLP shows a gradual decrease in training loss, but the test loss remains relatively higher, indicating potential overfitting. In contrast, EfficientKAN reveals a sharper reduction in training loss, alongside a significantly lower and more stable test loss, suggesting better learning dynamics and improved generalization to test data.

Figure 9. Base-level loss of MLP and EfficientKAN on CIFAR-100 dataset.

Looking at Figure 8 and Figure 9, we can see that EfficientKAN outperforms MLP in terms of both test accuracy and test loss. The results highlight EfficientKAN’s ability to achieve superior generalization, likely due to architectural advantages that promote efficient learning and robustness in CL scenarios. These observations underscore EfficientKAN’s suitability for challenging tasks like CIFAR-100, where maintaining generalization is critical.

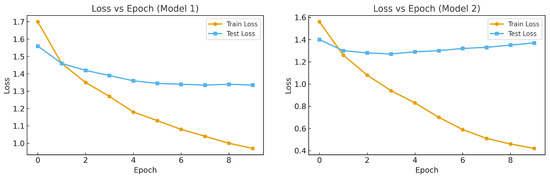

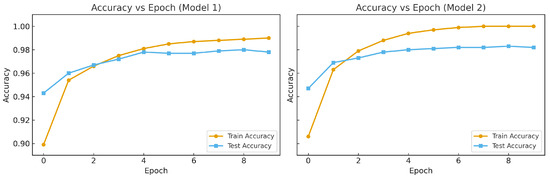

Figure 10 and Figure 11 present the comparative performance of MLP and EfficientKAN models on the MNIST dataset, evaluating their accuracy and loss over training epochs.

Figure 10. Base-level accuracy of MLP and EfficientKAN on MNIST dataset.

Figure 11. Base-level loss of MLP and EfficientKAN on MNIST dataset.

In Figure 10, both models show rapid improvement in training and test accuracy during the initial epochs, with the training accuracies nearing 100%. However, EfficientKAN consistently achieves slightly higher test accuracy compared to MLP throughout the epochs. This suggests that EfficientKAN generalizes better to unseen data, maintaining better performance across training iterations.

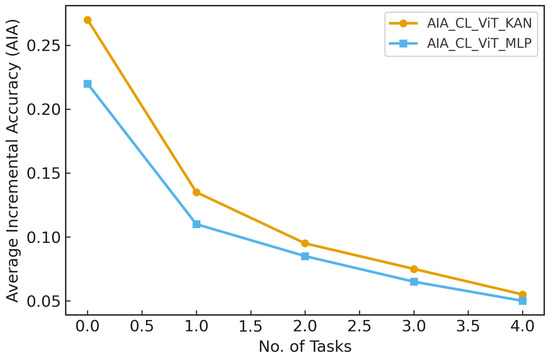

Figure 11 shows the training and test loss curves for the two models. Both models exhibit a sharp reduction in training loss within the first few epochs, indicating effective convergence. The test loss, however, is consistently lower for EfficientKAN compared to MLP, demonstrating better generalization and stability during training. Additionally, the test loss curve for EfficientKAN shows less fluctuation, highlighting its reliability. Figure 12 shows AIA across sequential tasks on TinyImageNet-200.

Figure 12. Average incremental accuracy (AIA) across sequential tasks on the TinyImageNet-200 dataset, comparing ViT_KAN and ViT_MLP models in a continual learning setting. ViT_KAN consistently outperforms ViT_MLP, indicating better knowledge retention over tasks.

In conclusion, EfficientKAN outperforms MLP in terms of both accuracy and loss on the MNIST dataset. It converges faster, achieves higher test accuracy, and exhibits lower test loss, showcasing its superior ability to extract and retain essential features while minimizing overfitting.

The performance comparison across datasets reveals that while the KAN-based model frequently achieves competitive or superior accuracy, the gains are not uniform across all evaluation metrics or datasets. This mixed performance suggests that the theoretical strengths of KANs, particularly the local plasticity, which enables fine-grained adjustments in specific regions of their input space, are most beneficial when the target task involves highly localized and complex decision boundaries. For instance, in datasets with heterogeneous feature distributions, the KAN-based model can selectively refine its responses, leading to measurable performance improvements. However, in scenarios where the data is more homogeneous or where global structure dominates the decision-making process, the advantage of localized adjustments becomes less pronounced, sometimes resulting in comparable or even slightly lower performance than that of baseline models. Furthermore, the added flexibility of KANs may introduce a risk of overfitting, particularly in smaller datasets, which could explain the observed variations in generalization performance. These findings highlight how the benefits of KAN integration are context-dependent, and the trade-off between adaptability and potential overfitting should be considered when applying KANs to different domains. Future work should investigate adaptive spline regularization, architecture tuning, and hybrid designs that integrate KAN layers selectively to balance accuracy, training stability, and efficiency.
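
The local plasticity referred to above can be illustrated with a small sketch. For a spline activation written as a weighted sum of B-spline basis functions, the gradient with respect to each coefficient is the corresponding basis value at the input, so only the coefficients whose basis functions are nonzero at that input are updated. The code below evaluates the basis with the Cox-de Boor recursion on a uniform grid; the grid size, degree, input range, and helper names are assumptions chosen for illustration and do not reproduce the implementation used in this study.

```python
def uniform_knots(grid_size, degree, lo=-1.0, hi=1.0):
    """Uniform knot vector on [lo, hi], extended by `degree` knots per side."""
    step = (hi - lo) / grid_size
    return [lo + (i - degree) * step for i in range(grid_size + 2 * degree + 1)]


def bspline_basis(x, knots, degree):
    """Values of all B-spline basis functions at x (Cox-de Boor recursion)."""
    # Degree 0: indicator of each half-open knot interval.
    basis = [
        1.0 if knots[i] <= x < knots[i + 1] else 0.0
        for i in range(len(knots) - 1)
    ]
    for d in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - d - 1):
            left_den = knots[i + d] - knots[i]
            right_den = knots[i + d + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * basis[i] if left_den > 0 else 0.0
            right = (
                (knots[i + d + 1] - x) / right_den * basis[i + 1]
                if right_den > 0
                else 0.0
            )
            nxt.append(left + right)
        basis = nxt
    return basis


def active_coefficients(x, knots, degree):
    """Indices of spline coefficients that receive a nonzero gradient at x."""
    return [i for i, b in enumerate(bspline_basis(x, knots, degree)) if b != 0.0]


degree = 3
knots = uniform_knots(grid_size=5, degree=degree)  # 8 basis functions in total
near_left = active_coefficients(-0.9, knots, degree)
near_right = active_coefficients(0.9, knots, degree)
print(near_left, near_right)  # [0, 1, 2, 3] [4, 5, 6, 7]
```

In this toy setting, inputs near opposite ends of the range touch disjoint sets of coefficients, whereas a dense MLP weight receives a gradient from every sample. Inputs that fall close together share coefficients, which is consistent with the observation that the benefit depends on how localized the task structure is.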

Table 8 compares the CL performance of ViT_MLP and ViT_KAN models on the TinyImageNet-200 dataset using two different optimizers: Adam and SGD. When trained with Adam, both models perform better than when trained with SGD. Specifically, ViT_KAN with Adam achieves the highest performance overall, with an AIA of 12.89% and an LTA of 5.69%, outperforming ViT_MLP with Adam (AIA of 10.65% and LTA of 5.32%). However, Adam also results in higher forgetting for ViT_KAN (AGF of 29.27%) compared to ViT_MLP (AGF of 24.67%). On the other hand, SGD yields consistently lower accuracies for both models, although with relatively reduced forgetting (AGF values of 13.50% and 15.37%).

Table 8. Comparison of CL performance for ViT_MLP and ViT_KAN models on the TinyImageNet-200 dataset using different optimizers.

In summary, Adam provides superior accuracy at the cost of increased forgetting, while SGD offers lower accuracy but better retention. Among all cases, ViT_KAN with Adam demonstrates the strongest overall performance in terms of incremental and last task accuracy.

6. Conclusions and Future Work

This study presents a novel approach to addressing the critical challenge of catastrophic forgetting in ViTs by replacing traditional MLP layers with KANs. Leveraging the local plasticity of spline-based activations used by KANs, the proposed architecture demonstrates superior knowledge retention and adaptability in CL settings. Rigorous experiments on benchmark datasets such as MNIST and CIFAR100 reveal that KAN-based ViTs consistently outperform their MLP-based counterparts, showing improved task accuracy and resistance to catastrophic forgetting. A comprehensive analysis of optimization techniques highlights the trade-offs between first-order optimizers; specifically, Adam was shown to provide improved computational efficiency. This analysis emphasizes the critical role of optimizer selection in maximizing the performance of KAN-based ViTs. By introducing a systematic framework for sequential learning, this work provides valuable insights into the potential of KANs to enhance the scalability and robustness of ViTs in dynamic environments. These findings not only pave the way for more effective neural network architectures in CL scenarios but also open up avenues for further exploration of spline-based methods in other domains. In conclusion, the integration of KANs into ViTs represents a promising step toward the creation of more adaptable and efficient vision models. Our results have significant implications for how researchers tackle real-world applications that require CL and robust knowledge retention in the future.

Future work will investigate adaptive spline basis selection, with the number and placement of control points dynamically optimized during training according to the input distribution. This can be realized by introducing learnable knot positions () that are updated via backpropagation or by employing online insertion and pruning strategies based on local approximation error. We also plan to incorporate regularization terms to penalize redundant spline segments, thereby reducing unnecessary parameters and improving model efficiency. Such dynamic adaptation is expected to preserve expressiveness while mitigating overfitting and computational overhead. We propose a multi-level KAN architecture in which lower-level spline modules capture fine-grained local variations while higher-level modules capture coarse, global semantic patterns. This can be achieved by stacking KAN layers with progressively increasing receptive-field sizes or by embedding KANs within a multi-scale transformer backbone. The hierarchical structure is anticipated to improve both interpretability and robustness by explicitly modeling feature dependencies at multiple resolutions. We aim to extend the use of KANs to multi-modal CL settings, where each modality (e.g., image, audio, or text) employs modality-specific spline bases but shares cross-modal attention for joint representation learning. We will design joint spline-embedding spaces () with alignment constraints to ensure semantic coherence across modalities. For streaming data scenarios, we plan to implement incremental spline adaptation methods, where control points and coefficients are updated online with a low-memory footprint, without storing full historical datasets. Potential strategies include reservoir sampling and the compression of replay buffers, combined with local plasticity constraints to mitigate catastrophic forgetting in real-time applications.
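
As an indication of how the reservoir sampling strategy mentioned above could keep a fixed-size replay buffer over a data stream, the following is a minimal sketch of the classic reservoir algorithm (Algorithm R). The class name `ReservoirBuffer`, its methods, and the integer stream are hypothetical and serve only as an illustration; this is not an implementation from the present study.

```python
import random


class ReservoirBuffer:
    """Fixed-size replay buffer that keeps a uniform sample of a stream."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0                    # number of stream items observed so far
        self.rng = random.Random(seed)   # seeded for reproducibility

    def add(self, item):
        """Observe one stream item; keep it with probability capacity / seen."""
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            j = self.rng.randrange(self.seen)  # uniform integer in [0, seen)
            if j < self.capacity:
                self.items[j] = item           # overwrite a stored slot

    def sample(self, k):
        """Draw up to k stored items without replacement for rehearsal."""
        return self.rng.sample(self.items, min(k, len(self.items)))


# Stream of 1000 placeholder samples; only 10 are ever stored.
buffer = ReservoirBuffer(capacity=10)
for sample_id in range(1000):
    buffer.add(sample_id)
replay_batch = buffer.sample(4)
```

Memory stays constant regardless of stream length, and every observed item has the same probability of being in the buffer, so earlier tasks remain represented without storing the full historical dataset.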

Author Contributions

Z.U.: conceptualization, data curation, methodology, software, formal analysis, investigation, writing—original draft, and writing—review and editing. J.K.: conceptualization, writing—review and editing, formal analysis, investigation, supervision, project administration, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the MSIT (Ministry of Science and ICT) of Korea under the ITRC (Information Technology Research Center) support program (IITP-2025-RS-2020-II201789), and the Global Research Support Program in the Digital Field (RS-2024-00426860), supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation).

Data Availability Statement

The data presented in this study are openly available on Kaggle at https://www.kaggle.com/datasets/hojjatk/mnist-dataset (accessed on 20 May 2025).

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Wang, L.; Zhang, X.; Su, H.; Zhu, J. A comprehensive survey of continual learning: Theory, method and application. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5362–5383.

- Van de Ven, G.M.; Tuytelaars, T.; Tolias, A.S. Three types of incremental learning. Nat. Mach. Intell. 2022, 4, 1185–1197.

- Gupta, R.; Gupta, S.; Parikh, R.; Gupta, D.; Javaheri, A.; Shaktawat, J.S. Personalized Artificial General Intelligence (AGI) via Neuroscience-Inspired Continuous Learning Systems. arXiv 2025, arXiv:2504.20109.

- Lesort, T.; Caccia, M.; Rish, I. Understanding continual learning settings with data distribution drift analysis. arXiv 2021, arXiv:2104.01678.

- Lesort, T.; Lomonaco, V.; Stoian, A.; Maltoni, D.; Filliat, D.; Díaz-Rodríguez, N. Continual learning for robotics: Definition, framework, learning strategies, opportunities and challenges. Inf. Fusion 2020, 58, 52–68.

- Xu, X.; Chen, J.; Thakur, D.; Hong, D. Multi-modal disease segmentation with continual learning and adaptive decision fusion. Inf. Fusion 2025, 118, 102962.

- Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual lifelong learning with neural networks: A review. Neural Netw. 2019, 113, 54–71.

- Thrun, S. Lifelong learning algorithms. In Learning to Learn; Springer: Boston, MA, USA, 1998; pp. 181–209.

- Aljundi, R.; Chakravarty, P.; Tuytelaars, T. Expert gate: Lifelong learning with a network of experts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3366–3375.