Abstract

Terrorism remains a critical global challenge, and the proliferation of social media has created new avenues for monitoring extremist discourse. This study investigates stance detection as a method to identify Arabic tweets expressing support for or opposition to specific organizations associated with extremist activities, using Hezbollah as a case study. Thousands of relevant Arabic tweets were collected and manually annotated by expert annotators. After extensive preprocessing and feature extraction using term frequency–inverse document frequency (tf-idf), we implemented traditional machine learning (ML) classifiers—Support Vector Machines (SVMs) with multiple kernels, Multinomial Naïve Bayes, and Weighted K-Nearest Neighbors. ML models were selected over deep learning (DL) approaches due to (1) limited annotated Arabic data availability for effective DL training; (2) computational efficiency for resource-constrained environments; and (3) the critical need for interpretability in counterterrorism applications. While interpretability is not a core focus of this work, the use of traditional ML models (rather than DL) makes the system inherently more transparent and readily adaptable for future integration of interpretability techniques. Comparative experiments using FastText word embeddings and tf-idf with supervised classifiers revealed superior performance with the latter approach. Our best result achieved a macro F-score of 78.62% using SVMs with the RBF kernel, demonstrating that interpretable ML frameworks offer a viable and resource-efficient approach for monitoring extremist discourse in Arabic social media. These findings highlight the potential of such frameworks to support scalable and explainable counterterrorism tools in low-resource linguistic settings.

MSC: 68T50; 68P20; 91D30; 68T05

1. Introduction

Social media has become an integral part of modern life, serving not only as a primary platform for content sharing but also as a dominant source of information. As of April 2025, over 5.3 billion people—nearly 65% of the global population—use social media, with the typical user engaging across about 6.9 platforms monthly [1]. Platforms such as Facebook, YouTube, Instagram, TikTok, and Twitter encompass diverse communication forms, including personal updates, news, and educational content. Studies report that users often engage with multiple platforms simultaneously—such as browsing Instagram or YouTube while interacting on Twitter—highlighting the multi-modal and concurrent nature of social media consumption [2,3].

Twitter, one of the most prominent microblogging platforms, allows users to share short posts (tweets) limited to 280 characters. Although the platform has been renamed X, we retain the term “Twitter” throughout this study for clarity and consistency with prior studies. Tweets are published to the user’s “followers”—subscribers to their account—who can interact by replying, retweeting, or marking them as favorites [4].

While sentiment analysis and stance detection are closely related, they address distinct objectives. Sentiment analysis determines the polarity of a text (positive, negative, or neutral) by identifying emotional cues such as “love” or “hate.” However, sentiment alone is often insufficient; understanding a user’s position toward a predefined topic—known as stance detection—is equally crucial [5]. As an emerging research area, which is particularly underexplored for Arabic-language content, stance detection seeks to classify a text as favoring, opposing, or remaining neutral toward a pre-determined target.

A key challenge in stance detection lies in the implicitness of targets. Often, the target is not explicitly mentioned within the text, requiring systems to infer stance from indirect references, rhetorical devices, or context-specific cues that assume shared knowledge with the audience. For instance, a synthetic tweet such as “They say progress is being made, but the streets tell a different story” does not explicitly name the government, yet it may reflect opposition to its policies. Conversely, “Finally, someone is taking bold steps to fix years of neglect” might imply support. Addressing such complexities, where stance must be inferred rather than directly observed, is a central objective of stance detection systems [5].

The term terrorism originates from the French word terrorisme, which was derived from the Latin term terror (“great fear” or “dread”), and is related to terrere (“to frighten”). While historically applied to state-imposed terror during revolutionary periods (e.g., the Reign of Terror), contemporary usage more commonly describes subnational violence against states [6], though state-sponsored violence against civilian populations also falls within many scholarly definitions. Despite its widespread use, no universal legal or scientific consensus on its definition exists, with over 109 distinct definitions documented [7]. Governments and legal systems employ varying interpretations, and political sensitivities have prevented international agreement (see https://en.wikipedia.org/wiki/Definition_of_terrorism, accessed on 9 June 2025). Attempts like Alex Schmid’s 1992 proposal to define terrorism as “peacetime equivalents of war crimes” based on international humanitarian law failed to gain adoption.

Nevertheless, terrorism remains a pressing global issue, with governments dedicating substantial resources to monitoring and countering terrorist activities. Motivated by this, we focus on Hezbollah as the central target of this study due to its classification as a terrorist organization by the Gulf Cooperation Council (GCC) and multiple international bodies. Hezbollah’s prominent role in regional conflicts, particularly in Syria and Lebanon, and its extensive discussion on Arabic social media make it an ideal case for examining stance detection in highly polarized and sensitive discourse.

Critically, we contend that this focused approach is a methodological strength. Unlike prior research, which often examines stance detection in broad or unspecified contexts, our deliberate emphasis on Hezbollah enables a deeper, contextually grounded analysis of the group’s unique discourse patterns, rhetorical strategies, and regional linguistic nuances. To analyze public sentiment, we compiled thousands of Arabic tweets—concise texts by nature—and developed a robust system for detecting stances specifically regarding Hezbollah’s activities in Syria. For brevity, we refer to this subject simply as “Hezbollah” throughout the paper. This specificity not only enhances the validity and reliability of our methodology for Hezbollah-related content but also provides a clear, replicable framework that can be adapted to other organizations in future work. To the best of our knowledge, this is the first stance detection study tailored to a specific organization, setting a precedent for more precise and comparable analyses in this domain.

In this work, we employed traditional machine learning (ML) classifiers, including Support Vector Machines (SVMs), Multinomial Naïve Bayes (MNB), and weighted K-Nearest Neighbors, rather than deep learning (DL) approaches. While state-of-the-art performance in Arabic stance detection is currently achieved using DL models like AraBERT, our decision was driven by three specific objectives critical to counterterrorism contexts: (i) model interpretability, as opaque “black box” predictions are unsuitable for high-stakes scenarios in which decisions carry serious consequences and must be validated and justified; (ii) computational efficiency, given that many real-world applications operate in resource-constrained environments; and (iii) effective operation with limited training data, since high-quality annotated Arabic datasets remain scarce and DL models are prone to overfitting when data are limited.

We recognize this choice may sacrifice some absolute performance compared to modern DL methods. However, it ensures broader applicability in low-resource or explainability-critical settings while establishing a clear, well-documented baseline. This foundation enables future studies to precisely measure improvements when applying DL models while currently providing policymakers with the transparent classification logic essential for counterterrorism analysis.

1.1. Problem Definition

The computational analysis of terrorist organizations on social media involves two complementary tasks: content detection and stance detection. Content detection identifies posts containing extremist material—such as propaganda, recruitment messages, hate speech, or violent imagery—using binary or multi-class classification. This supports content moderation and counterterrorism monitoring by flagging material that explicitly promotes or supports terrorist activities.

Stance detection, in contrast, provides a finer-grained analysis by determining the author’s position (supporting, opposing, or neutral) toward a specific organization like Hezbollah. Unlike content detection, which focuses on the presence of extremist themes, stance detection examines ideological alignment and rhetorical patterns to assess public sentiment. Such analysis is critical for understanding polarization, mapping societal attitudes, and informing policy decisions in conflict-prone regions.

The distinction lies in analytical granularity: content detection broadly scans for terrorism-related material, while stance detection targets individual attitudes toward predefined entities. Together, these tasks offer a comprehensive framework for monitoring terrorist organizations’ digital presence—from operational content identification to public opinion mapping.

1.2. Our Contributions

The main contributions of this work are as follows:

- Creation of a specialized Arabic dataset: A collection of 7053 tweets focused on Hezbollah’s activities was compiled and annotated, addressing the scarcity of labeled data for Arabic stance detection research. We made the dataset available for non-commercial use.

- Development of a practical stance detection system: An efficient ML-based framework for classifying stances in Arabic tweets about Hezbollah’s involvement in Syria was developed.

While interpretability is not a core focus of this work, the use of traditional ML models (rather than deep learning) makes the system inherently more transparent and easily expandable to incorporate interpretability techniques for stance analysis in future work.

The remainder of this paper is structured as follows: Section 2 provides the necessary background. Section 3 reviews related work, beginning with research in stance detection and proceeding to studies on terrorism detection. Section 4 details the proposed methodology for stance detection in Arabic tweets. Section 5 presents experimental setups and discusses the results. Finally, Section 6 concludes the study and suggests promising directions for future research.

2. Background

In this section, we provide essential background on the Arabic language, outline its inherent linguistic challenges, and present an overview of stance detection along with the key difficulties it entails.

2.1. Challenges in Handling Arabic Tweets

Twitter has emerged as a vital platform for studying contemporary public discourse, offering researchers unprecedented access to real-time public opinion and diverse societal voices [8]. While the platform’s big data capabilities complement traditional survey methods [9], scholars must navigate significant methodological challenges, including algorithmic biases, echo chambers, and the performative nature of online self-presentation. There is a growing consensus about the limitations of hashtag-centric studies and platform-specific analyses, and the field is witnessing a shift toward more robust theoretical frameworks and interdisciplinary approaches to move beyond descriptive analyses toward a deeper understanding of mediated public discourse [10].

From a computational perspective, this work is hampered by the unique nature of Twitter data itself. The platform is characterized by brevity and inherent noise, and this short length severely restricts the available word co-occurrence statistics and contextual information, which are crucial for deriving reliable semantic similarity measures [11].

These challenges are profoundly exacerbated by the intrinsic complexities of the Arabic language. Modern Standard Arabic (MSA) is typically written without diacritical markings (harakat), leading to pervasive lexical and semantic ambiguity. This issue is compounded by the language’s rich morphology, its extensive use of synonyms, and its highly inflectional and derivational structure.

The problem is further complicated by the diglossic reality of Arabic social media, where tweets are composed in a blend of MSA and various regional colloquial dialects [12]. These dialects exhibit greater variation than those typically found in European languages and lack standardized spelling rules or formal dictionaries [12]. Consequently, a single word can possess contradictory meanings across different dialects; for instance, the word بلش can mean “finished” in one dialect but “started” in another.

The core of the disambiguation problem lies in the absence of short vowel diacritics. These markings are essential for resolving a word’s precise meaning and grammatical function. While a human reader can use context to infer the correct interpretation of an unvowelized word like علم (which can mean “science,” “flag,” “taught,” “knew,” or “knowledge”), this task is exceptionally difficult for automated systems [12]. This ambiguity even extends to vowelized texts, where a word like عَام can mean either “year” or “public,” requiring deep contextual understanding for accurate disambiguation.

The intertwined challenges of ambiguity, dialectal variation, and sparse contexts are not peripheral concerns for social media analysis; they represent a core bottleneck to the progress of Arabic NLP at large. Addressing them demands not incremental refinements but domain-specific innovation and substantial resource investment. For example, the TaSbeeb judicial decision support system [13] illustrates the scale of effort required to adapt NLP to the rigor of legal discourse, while the Arabic essay evaluator in [14,15] underscores the difficulty of simultaneously assessing linguistic proficiency and semantic depth in educational settings. These cases demonstrate that overcoming the inherent linguistic complexity of Arabic is indispensable for building robust and socially impactful AI systems. Nowhere is this more critical than in computational analysis of Arabic Twitter data, where meaningful societal insights can only emerge if models effectively navigate the dense interplay between dialect, implicitness, and limited contexts.

2.2. Stance Detection

Stance detection is the task of automatically determining whether the author of a text is in favor, neutral, or against a given target (e.g., person, organization, movement, or topic). While progress has been made in detecting stance toward explicitly mentioned targets, a persistent challenge arises when the target is implicit—that is, not directly mentioned in the text but only implied through the context, allusion, or shared knowledge. This implicitness, also referred to as target latency, transforms stance detection from a surface-level classification problem into a complex reasoning task that integrates natural language understanding, commonsense inference, and knowledge retrieval.

Several interconnected factors make implicit targets particularly difficult to handle:

- Disambiguation Problem: A stance-bearing statement may not identify its target explicitly but instead reference related sub-events, policies, or entities. For instance, a tweet stating, “They finally lifted the ban!” conveys a positive stance, but without an explicit target, it could refer to (1) women’s driving rights in Saudi Arabia, (2) COVID-19 travel restrictions, or (3) the reinstatement of a suspended player. Correct interpretation requires deep contextual modeling and extensive world knowledge to resolve that the term “they” refers to a specific authority and “the ban” to a specific policy.

- Limits of Lexical and Syntactic Patterns: Traditional supervised and feature-based approaches (e.g., relying on tf-idf, n-grams, or syntactic dependencies) struggle when surface lexical cues are absent. If words such as “climate,” “global,” or “warming” never appear, these models lack anchors to tether stance predictions to the correct topic. This illustrates why implicit targets cannot be resolved by shallow statistical associations alone.

- Knowledge Gap for LLMs: Modern large language models (LLMs) encapsulate vast world knowledge, yet they are not immune to errors with implicit targets. The challenge lies in retrieval and alignment: the model must (a) recall the relevant event (e.g., that lifting the ban on Saudi women driving occurred in 2018), (b) align this knowledge to ambiguous references such as “they” and “ban,” and (c) filter this event from countless similar “ban-lifting” instances. This process is highly sensitive to context length, prompt phrasing, and model biases, which often results in inconsistent stance predictions.

- Context and Figurative Language: Implicit stance frequently co-occurs with figurative language such as irony, sarcasm, or metaphor, where the literal form diverges from the intended meaning. Statements like “What a brilliant idea!” could signal sarcasm and convey a negative stance, requiring pragmatic inference beyond lexical cues.

- Data Scarcity and Annotation Difficulty: Constructing datasets for implicit stance detection is particularly challenging. Human annotators can rely on commonsense reasoning to infer implicit targets, but for machine learning, this requires datasets labeled not only with stance but also with supporting evidence and often explicit target resolution. This is expensive, time-intensive, and hard to scale. Consequently, most benchmarks (e.g., SemEval-2016) underrepresent implicit targets, leaving models poorly trained for real-world ambiguity.

- Evaluation Dilemma: Assessing stance detection performance on implicit targets is also non-trivial. For example, if a model labels a post as “against” a policy, but the gold label associates the stance with the political party responsible for the policy, should this be considered correct? Simple accuracy or F-scores are insufficient, and evaluation frameworks must account for reasoning chains and conceptual proximity to capture model competence fairly.

The implicitness of targets magnifies the difficulty of stance detection because it requires resolving hidden references, integrating discourse-level and contextual information, and applying external world knowledge. Although LLMs have advanced the field, reliably bridging the gap between explicit utterances and implicit meaning remains a fundamental unsolved problem. This challenge is further amplified by dialectal variation when shifting focus across regional groups. For instance, while this work concentrates on Hezbollah, which is associated with a specific dialect, applying these methods to groups using other Arabic dialects (e.g., Maghrebi or Gulf Arabic) [16] introduces additional complexity due to significant linguistic variation. Effective cross-dialect stance detection thus requires more than additional training data; it demands the development of dialect-specific lexical resources to address contradictory word senses, the creation of large-scale annotated corpora capturing unique morphological and syntactic features, and the design of dialect-aware models capable of dynamically adapting to the blended MSA–dialect continuum prevalent in social media.

3. Related Works

Our review of related work follows two key strands of research: (1) studies focusing on stance detection in social media, which provide methodological foundations for analyzing user perspectives and (2) research on terrorism-related content detection, which offers domain-specific insights relevant to our study of Hezbollah-related discourse. This dual perspective allows us to situate our work at the intersection of computational methods and security applications.

3.1. Stance Detection

Stance detection, the computational task of determining a text’s position toward a specific target, has become a cornerstone of computational linguistics. The field gained significant momentum with the establishment of standardized benchmarks through the SemEval-2016 Task 6 competition [17], which continues to influence contemporary methodologies. Prior foundational work by Somasundaran and Wiebe [18] combined sentiment and argument features in supervised classifiers, achieving 63.93% accuracy on contentious topics. Similarly, Addawood et al. [19] leveraged syntactic, lexical, and argumentative features in an SVM model to analyze public reaction to a specific event, attaining a 90.4% F-score.

The SemEval-2016 shared task [17] provided a formal definition and a dataset of 4163 tweets, catalyzing further research. The best score attained in the shared task was an F-score of 67.8%. This benchmark was later advanced by Al-Ghadir et al. [5], whose pipeline combining tf-idf with sentiment features achieved a state-of-the-art macro F-score of 76.45%. The IberEval-2017 shared task [20] expanded the scope to include multilingual tweets on Catalan independence, combining stance detection with author profiling.
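For reference, a macro F-score of the kind quoted above is the unweighted mean of per-class F-scores. The sketch below is a minimal, standard-library illustration; the label names and the toy predictions are assumptions made for the example. The official SemEval-2016 Task 6 metric averages only the "favor" and "against" classes, which is why the set of labels to average over is left as a parameter.

```python
def per_class_f1(gold, pred, label):
    """F1 for one class, computed from true/false positives and false negatives."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(gold, pred, labels):
    """Unweighted mean of the per-class F1 scores over the given labels."""
    return sum(per_class_f1(gold, pred, lab) for lab in labels) / len(labels)


# Toy data (hypothetical), two tweets per class.
gold = ["favor", "favor", "against", "against", "neither", "neither"]
pred = ["favor", "against", "against", "against", "neither", "favor"]

two_class = macro_f1(gold, pred, ["favor", "against"])               # SemEval-style average
three_class = macro_f1(gold, pred, ["favor", "against", "neither"])  # all three classes
print(round(two_class, 4), round(three_class, 4))
```

Because every class contributes equally regardless of its size, a macro average penalizes a classifier that neglects the smallest class.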

The advent of LLMs has transformed the field, as analyzed in the taxonomy introduced by Pangtey et al. [21], which categorizes approaches by learning methods, data modalities, and target relationships. Methodological progress has since advanced along multiple fronts. Architecturally, Garg and Caragea [22] proposed Stanceformer, which integrates a target awareness matrix to amplify attention to target-relevant terms. In the multimodal domain, Barel et al. [23] introduced TASTE, a model that fuses transformer-based textual embeddings with a structural social context using a gated residual network (GRN). A key innovation in prompting is the “Chain of Stance” (CoS) method by Ma et al. [24], which decomposes the detection process into intermediate reasoning steps for superior zero-shot and few-shot performance.

Substantial work has also focused on improving generalization. Zhao et al. [25] designed a framework using multi-expert cooperative learning, where a gating mechanism fuses diverse semantic features to enhance transfer to unseen targets. Similarly, Ma et al. [26] pioneered a zero-shot multi-agent debate (ZSMD) framework, where opposing agents debate a text’s stance with access to external knowledge for more robust predictions.

Research in Arabic stance detection has progressed from initial studies to rich shared tasks. Abdelhade et al. [27] developed a deep neural network to classify Arabic tweets in financial and sports domains, achieving a 90.68% F-score. A significant large-scale analysis was conducted by Azmi and Al-Ghadir [8], who examined Twitter as a real-time barometer of public opinion during Saudi Arabia’s 2016–2017 reform period. Their study collected approximately 200 million Arabic tweets, identifying 50,000 unique hashtags. From this corpus, they performed a two-tiered analysis: first, a behavioral study clustered 4000 prevalent hashtags into 12 primary categories, from which five key topics—four related to gender relations (e.g., women’s driving and male guardianship)—were selected for in-depth stance detection. In the second phase, they employed a dual-labeling process to classify tweets as supportive, neutral, or opposed to these social changes. Among multiple classifiers evaluated, a weighted 9-NN model achieved the highest performance, with an F-score of 72.45%. This work highlighted Twitter’s utility as a complementary tool to traditional surveys for capturing nuanced public sentiment during socio-political transformations.

The creation of new resources like the ArabicStanceX dataset by Alkhathlan et al. [28] and the multi-dialectal corpus by Charfi et al. [29] provided valuable benchmarks for dialect-aware research. This progression culminated in StanceEval 2024 [30], the first Arabic shared task for stance detection, where fine-tuned AraBERT by Badran et al. [31] achieved the highest F-score (84.38%), underscoring the transformative impact of LLMs in Arabic NLP.

The foundational landscape of the field is thoroughly mapped in the comprehensive survey by Zhang et al. [32], which details core definitions, datasets, and the methodological progression from traditional models to LLM-based approaches, while outlining emergent challenges. For a more focused examination of recent algorithmic advances, the survey by Gera and Neal [33] provides a specialized overview of deep learning techniques within stance detection.

3.2. Terrorism Detection

Recent studies have developed various computational approaches to identify terrorism-related content on social media. Omer [34] proposed a machine learning framework to classify radical vs. non-radical tweets collected using certain ideological hashtags, accepting both Arabic and non-Arabic content. After preprocessing (removing retweet tags, URLs, and annotations), the text was lemmatized and tokenized. Experiments with stylometric, sentiment-based, and time-based features showed that AdaBoost outperformed SVM and NB classifiers when features were combined.

Alsaedi and Burnap [35] developed a robust framework for detecting disruptive events through real-time Twitter analysis. Their methodology leveraged Twitter’s streaming API to collect geolocated data using region-specific keywords and hashtags, with MongoDB employed for efficient data storage. The preprocessing pipeline incorporated stop word removal and content filtering, followed by feature extraction using tf-idf and dual-language stemming (Khoja for Arabic and Porter for English). The system achieved notable performance (85.43% F-score) through a novel combination of NB classification in R and online clustering for topic identification, culminating in a voting-based summarization mechanism. This approach demonstrated particular effectiveness in handling multilingual social media data during crisis events.

For terrorist affiliation detection, Ali [36] collected tweets via the Twitter API and preprocessed them by eliminating numbers, stop words, and punctuation. An unsupervised clustering algorithm grouped the data, with cluster numbers determined by frequency histograms. Visualization tools (R, NodeXL, and Gephi) revealed network patterns, demonstrating that combining basic data mining tools with linguistic analysis effectively identifies terrorist affiliations.

The ALT-TERROS system [37] is an AI-enabled framework designed to detect terrorist behavior in Arabic social media. It analyzed 10,000 tweets, assigning suspicion probabilities based on hostile emotions, links to terrorist organizations, and violent imagery. Microsoft Cognitive Services supported semantic tagging, while a risk-scoring mechanism generated alerts. By integrating heuristic rules with advanced analytics and visualizations, ALT-TERROS offers an end-to-end workflow for extracting and evaluating textual and visual data. The system also addresses challenges such as limited Arabic API support and the need for robust language resources to enhance sentiment analysis.

Al-Shawakfa et al. [38] proposed RADAR#, a system combining two neural network approaches—a CNN-BiLSTM model and the AraBERT transformer—using an ensemble technique to identify radicalization indicators in tweets. When tested on nearly 90,000 Arabic tweets, the system achieved high detection accuracy (98%) while revealing important insights through component analysis. Key findings show that the model struggles most with sarcastic posts (a third of the errors) and regional dialect variations (one-fifth of errors), highlighting areas needing improvement for real-world counterterrorism applications. The technical approach demonstrates how combining different AI methods can improve detection of harmful online content in Arabic language contexts.

Another AraBERT-based model for detecting extremist content in Arabic social media was proposed in [39]. The model combined AraBERT with sentiment features (SFs) and multilayer perceptrons (MLPs). The study evaluated the model on three datasets: (1) 89,816 Arabic tweets, 56% of which contain extremist content, collected via X’s APIs; (2) 24,078 ISIS-related Arabic tweets, 10,000 of which are extremist, analyzed via a 3400-tweet subset; and (3) 100 professionally translated Kazakh texts, half of which are extremist, used for cross-lingual validation. These were sourced from [40,41,42], respectively. The highest performance achieved was an F-score of 96% on the first dataset using AraBERT with SF and MLP.

3.3. Concluding Remarks

This review examined two interrelated research areas: stance detection and terrorism-related content identification in social media. Current work in both domains demonstrates a clear progression toward deep learning methods, particularly LLMs, as evidenced by recent surveys of advances in stance detection [32,33] and prominent NLP tasks such as abstractive summarization [43] and question answering [44]. However, our analysis reveals a significant research gap in stance detection specifically applied to terrorism-related Arabic tweets. The comprehensive survey by Sabri and Abdullah [45] on Arabic extremism content detection highlights two key findings: (1) most studies focused on MSA despite widespread use of regional dialects in social media, and (2) among traditional machine learning approaches (excluding deep learning), SVM classifiers consistently outperform other algorithms (e.g., Naïve Bayes and Random Forests) in detection accuracy.

4. Our Proposed System

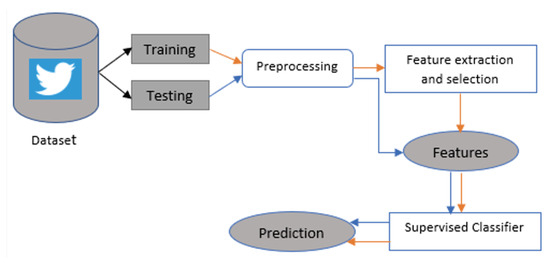

In this section, we present the key aspects of our proposed stance detection system for terrorism-related Arabic tweets. The discussion begins with the processes of data collection and annotation and concludes with the classification phase. This study focuses on terrorism as the target of interest. Recognizing that the definition of terrorism varies across individuals and governments, we adopt the organizations designated as terrorist by the Gulf Cooperation Council (GCC) as our targets of interest. Specifically, this research centers on Hezbollah-related terrorism in Syria. As noted earlier, stance detection is an emerging area of research, and this work represents one of the few studies addressing stance detection in the Arabic language. Our proposed system comprises four main components: data collection and annotation, preprocessing, feature extraction and selection, and stance classification. These components are illustrated in Figure 1.

Figure 1. Our proposed terrorism stance detection system.
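To make the feature-extraction component concrete, the following is a minimal sketch of tf-idf weighting using only the standard library. It assumes one common variant (relative term frequency multiplied by the natural log of N/df); the exact weighting and any feature selection used in the system are not specified in this section, and the placeholder tokens are hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term at least once.
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append(
            {t: (c / len(doc)) * math.log(n_docs / df[t]) for t, c in tf.items()}
        )
    return vectors


# Three tiny "tweets" made of placeholder tokens (hypothetical).
docs = [["t1", "t2"], ["t1", "t3"], ["t1", "t2", "t2"]]
vectors = tfidf_vectors(docs)
print(vectors[1])
```

A term that occurs in every document (here `t1`) receives weight zero, while a term confined to a single document (`t3`) receives the highest inverse document frequency.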

4.1. Dataset and Annotation

We collected over 8000 tweets using Twitter’s API. After filtering out redundant and irrelevant content, about 7000 usable tweets remained. The final dataset comprises 7053 tweets discussing Hezbollah, categorized as 3201 supporting, 2352 opposing, and 1500 neutral.

For supervised classification, we employed manual annotation by two native Arabic speakers who labeled tweets as “favor,” “against,” or “neither” (following the combined neutral/no-stance category of [46]). Although labor-intensive, this approach ensured reliable and trustworthy annotations given the task’s sensitivity. Crowdsourcing alternatives like ClickWorker or Amazon Mechanical Turk proved problematic for Arabic texts due to quality control issues [47], making professional annotation essential for this high-stakes domain. Table 1 shows some samples of tweets and their stance.

Table 1.

Sample tweets from the dataset, accompanied by their translations and associated stance labels.

The dataset was partitioned into training (70%) and testing (30%) subsets, preserving the original distribution of all stance categories (see Table 2). This standard split ratio, consistent with SemEval-2016’s methodology [17], ensures balanced model development and evaluation.

Table 2.

The distribution of tweets in our Hezbollah dataset across the three stance categories: in favor, against, and neutral.

4.2. Tweet Preprocessing

The preprocessing phase cleans the dataset by removing noise, reducing features, and improving classification performance. We first eliminate non-Arabic content, including special symbols (“@” and “#”), URLs, retweet markers (RTs), and end-of-line characters. Next, we normalize Arabic words to their standard forms (e.g., مرررحبا to مرحبا) and remove Arabic stop words using Python’s NLTK 3.6.5 library. Finally, we tokenize the text into individual word units using standard tokenization methods [47,48].
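The cleaning steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration rather than the pipeline used in the study: the regular expressions, the `preprocess` helper, and the tiny `STOP_WORDS` set are all assumptions made for the example (the study relies on NLTK's Arabic stop-word list), and the choice to keep diacritics attached to their words is likewise an assumption.

```python
import re

# Hypothetical, deliberately tiny stop-word set used only for illustration;
# the study uses NLTK's Arabic stop-word list instead.
STOP_WORDS = {"في", "من", "على", "إلى", "عن"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
RT_RE = re.compile(r"\bRT\b")
# Keep Arabic letters (U+0621..U+064A), diacritics (U+064B..U+0652), whitespace.
NON_ARABIC_RE = re.compile(r"[^\u0621-\u0652\s]")
# Three or more repeats of the same character (e.g., elongated letters).
ELONGATION_RE = re.compile(r"(.)\1{2,}")


def preprocess(tweet: str) -> list:
    """Clean one tweet and return its tokens."""
    text = URL_RE.sub(" ", tweet)           # drop URLs
    text = RT_RE.sub(" ", text)             # drop retweet markers
    text = NON_ARABIC_RE.sub(" ", text)     # drop @, #, Latin text, digits, ...
    text = ELONGATION_RE.sub(r"\1", text)   # collapse elongated characters
    return [tok for tok in text.split() if tok not in STOP_WORDS]


tokens = preprocess("RT @user: مرررحبا في #لبنان https://t.co/xyz")
print(tokens)
```

Collapsing only runs of three or more identical characters is a design choice for the sketch: it leaves legitimately doubled letters untouched while still normalizing elongated forms such as the one in the example.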

4.3. Stemming

Stemming reduces words to their base or root form [48]. For instance, various Arabic verb conjugations like قرأ (“he read”), قرأت (“she read”), and سيقرأ (“he will read”) all share the same root meaning of reading. A stemming system consolidates these variants into the single-root form قرأ, significantly simplifying text processing.

This study employs the ISRI stemmer from Python’s NLTK library, an Arabic root-based stemmer developed by the Information Science Research Institute [49]. Unlike dictionary-based stemmers, ISRI does not rely on a root dictionary; when it cannot extract a root, it returns a normalized form of the word rather than the original, unmodified word.
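For readers who want to try the stemmer, a minimal usage sketch follows, assuming NLTK is installed. It applies `ISRIStemmer` to the three conjugations used as examples above; the exact stems returned depend on the NLTK version, so no output is claimed here.

```python
from nltk.stem.isri import ISRIStemmer

stemmer = ISRIStemmer()

# The three verb forms used as examples in the text.
words = ["قرأ", "قرأت", "سيقرأ"]

# Map each surface form to the stem proposed by ISRI.
stems = {word: stemmer.stem(word) for word in words}

for word, stem in stems.items():
    print(word, "->", stem)
```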

5. Experiments and Discussion

In this section, we present the experimental setup and discuss the results obtained. Two distinct experimental configurations were designed for the dataset: (i) classification without the application of SMOTE and (ii) classification with SMOTE applied to address class imbalance. Across all experiments, we evaluated the performance of four SVM kernels (linear, RBF, polynomial, and sigmoid), alongside Multinomial Naïve Bayes (MNB) and weighted K-Nearest Neighbors (K-NN) classifiers.

The synthetic minority over-sampling technique (SMOTE) is a widely used method for addressing class imbalance in datasets [50]. It generates synthetic samples for the minority class by interpolating between existing minority instances and their nearest neighbors. For example, consider two minority class instances $x_1$ and $x_2$ in a two-dimensional feature space. SMOTE can create a new synthetic instance by interpolating between them as follows: $x_{\text{new}} = x_1 + \delta \, (x_2 - x_1)$, where $\delta \in [0, 1]$ is a random number. If, for example, $\delta = 0.5$, then $x_{\text{new}}$ is the midpoint of $x_1$ and $x_2$. This process reduces the bias towards the majority class during training and enhances classifier performance, particularly in imbalanced classification tasks. First, we cover the evaluation metrics.
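The interpolation step at the heart of SMOTE can be sketched as follows. The helper name `smote_sample` and the two example points are hypothetical and chosen only for illustration. The full algorithm in [50] additionally picks the second point from among the k nearest minority-class neighbors of the first, which this sketch omits.

```python
import random


def smote_sample(x1, x2, delta=None, rng=random):
    """Return x1 + delta * (x2 - x1); delta is drawn from [0, 1) if not given."""
    if delta is None:
        delta = rng.random()
    return [a + delta * (b - a) for a, b in zip(x1, x2)]


# Hypothetical 2-D minority-class instances (illustrative values only).
x1, x2 = [1.0, 2.0], [3.0, 6.0]

midpoint = smote_sample(x1, x2, delta=0.5)  # halfway between x1 and x2
random_point = smote_sample(x1, x2)         # a random point on the segment
print(midpoint, random_point)
```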

5.1. Evaluation Metrics

We evaluated classification performance using standard metrics, namely accuracy, precision (P), recall (R), and the F-score, which are derived from fundamental prediction outcomes. True positives (TPs) and true negatives (TNs) represent correct classifications of positive and negative samples, respectively, while false positives (FPs) and false negatives (FNs) indicate misclassified negative and positive samples. These measures form the basis for all subsequent performance calculations, with FPs and FNs being particularly critical for assessing model reliability in imbalanced datasets.

Precision represents the fraction of correctly predicted positives among all predicted positives: $P = \frac{TP}{TP + FP}$, while recall indicates the fraction of correctly identified positives among all actual positives: $R = \frac{TP}{TP + FN}$. The F-score provides their harmonic mean:

$$F = \frac{2 \cdot P \cdot R}{P + R}$$

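Following the evaluation protocol established in the SemEval-2016 stance detection shared tasks [17], we employed the macro-averaged F-score evaluation metric, focusing exclusively on the “favor” and “against” classes while treating “neutral” as negative. The F-average ($F_{\text{avg}}$) metric is computed as

$$F_{\text{avg}} = \frac{F_{\text{favor}} + F_{\text{against}}}{2}$$

where $F_{\text{favor}}$ and $F_{\text{against}}$ are calculated as

$$F_{\text{favor}} = \frac{2 \cdot P_{\text{favor}} \cdot R_{\text{favor}}}{P_{\text{favor}} + R_{\text{favor}}}, \qquad F_{\text{against}} = \frac{2 \cdot P_{\text{against}} \cdot R_{\text{against}}}{P_{\text{against}} + R_{\text{against}}}$$

where $P_{\text{favor}}$ and $P_{\text{against}}$ denote the precision values for the classes “favor” and “against”, respectively, while $R_{\text{favor}}$ and $R_{\text{against}}$ represent the corresponding recall values for these two classes.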

This evaluation framework aligns with established stance detection competitions and enables direct performance comparisons across systems [17].
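As an illustration of this protocol, the sketch below computes per-class precision, recall, and F-score from gold and predicted labels, then averages the F-scores of “favor” and “against” only. The function names and the six toy labels are hypothetical; they are not taken from the dataset.

```python
def prf(gold, pred, label):
    """Precision, recall, and F-score for one class, treating all others as negative."""
    pairs = list(zip(gold, pred))
    tp = sum(g == label and p == label for g, p in pairs)
    fp = sum(g != label and p == label for g, p in pairs)
    fn = sum(g == label and p != label for g, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score


def f_avg(gold, pred):
    """Macro-average of the F-scores for 'favor' and 'against' only."""
    return (prf(gold, pred, "favor")[2] + prf(gold, pred, "against")[2]) / 2


# Toy labels for illustration only.
gold = ["favor", "favor", "against", "against", "neither", "favor"]
pred = ["favor", "against", "against", "favor", "favor", "favor"]
print(round(f_avg(gold, pred), 4))
```

Note that the “neither” class still influences the score indirectly: a “neither” tweet predicted as “favor” counts as a false positive for “favor”.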

5.2. Word Embedding Using FastText

The word embedding approach represents words as real-valued vectors in a predefined space, capturing semantic relationships by assigning similar vectors to words with related meanings [51].

We employed Facebook’s FastText library, which offers pretrained models for over 290 languages and supports both supervised and unsupervised word vector generation [51]. For our experiment, we trained a supervised FastText model on the datasets, using the default vector size of 300.

Model tuning focused on two key parameters:

- Epochs: the number of times FastText sees each training instance. The standard range is 5–50.

- Learning rate (LR): how much the model changes after processing each instance. The standard range is 0.1–1.0.

The optimal configuration, LR = 0.1 with 25 epochs, yielded a peak F-score of 70.86%, as detailed in Table 3.

Table 3.

Results of word embedding using FastText. We boldface the best entry.

5.3. Supervised Classifiers

Our feature selection process began with term frequency–inverse document frequency (tf-idf) weighting, which evaluates word importance through a balance of two factors: term frequency, tf (how often a word appears in a document), and inverse document frequency, idf (how rare it is across all documents) [52,53]. It is calculated by

$$w_{i,j} = tf_{i,j} \times \log\left(\frac{D}{df_i}\right)$$

where $tf_{i,j}$ denotes the number of occurrences of word $i$ in document $j$, $df_i$ is the number of documents that contain word $i$, and $D$ is the total document count. This formula automatically boosts distinctive terms while suppressing overly common ones.
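The weighting just described can be illustrated with a short, self-contained sketch: each term's weight is its count in the document multiplied by the logarithm of the total number of documents divided by the number of documents containing the term. The `tf_idf` helper and the placeholder tokens are hypothetical, and the natural logarithm is an assumption. Library implementations such as scikit-learn's `TfidfVectorizer` apply smoothing and normalization by default, so their values will differ from this plain form.

```python
import math
from collections import Counter


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)  # raw term counts within this document
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


# Placeholder tokens for illustration only.
docs = [["a", "b", "a"], ["a", "c"], ["a", "d", "d"]]
weights = tf_idf(docs)
print(weights[0], weights[2])
```

Here the token `a` occurs in every document and therefore receives weight zero, while `d`, which occurs twice in a single document, receives the largest weight.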

When we cross-validated these tf-idf features using Chi-square selection, we found complete agreement—both methods identified the same set of meaningful features. This gave us confidence to proceed with these optimized word representations.

To determine the optimal number of features, we evaluated our system using 100, 500, 1000, 1500, and 2000 features, measuring the impact on $F_{\text{avg}}$ across three classifiers: Multinomial Naïve Bayes (MNB), an SVM with the RBF kernel, and weighted K-NN (with $K = 1$). The dataset was partitioned into 70% for training and 30% for testing, adhering to the established evaluation framework used in the SemEval-2016 stance detection shared tasks [17]. We assessed all combinations of unigrams, bigrams, and trigrams across these feature set sizes, leveraging their established effectiveness for Arabic text processing.

The results, as detailed in Table 4, reveal a clear performance trend. The SVM-RBF classifier achieved its highest $F_{\text{avg}}$ of 0.7243 using a combination of 1000 unigram and trigram features. A close second was attained by the same classifier using 1000 unigram and bigram features; both values appear as 0.724 in the table due to rounding. MNB performed fairly well, but weighted 1NN yielded the least favorable performance.

Table 4.

Performance tuples ($P_{\text{favor}}$, $R_{\text{favor}}$, $F_{\text{favor}}$, $P_{\text{against}}$, $R_{\text{against}}$, $F_{\text{against}}$, and $F_{\text{avg}}$) for MNB, the SVM with the RBF kernel, and W-1NN across different feature counts and n-gram configurations. The first six entries are the per-class precision, recall, and F-score for “favor” and “against”, respectively. N refers to the size of the n-gram, with 1 referring to unigrams, 1 + 3 referring to unigrams and trigrams (combined), and so on. For each classifier, we boldface the best $F_{\text{avg}}$.

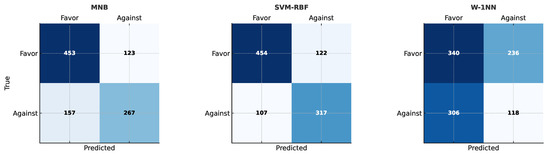

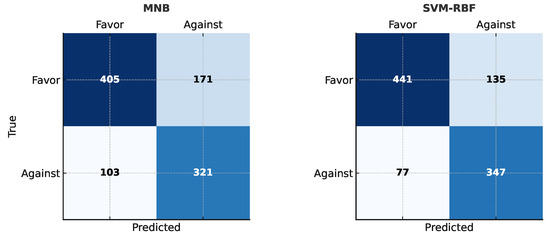

Figure 2 presents the confusion matrix heatmaps for the top-performing feature configuration (1000 unigram and trigram features, from Table 4) under imbalanced data conditions. Several observations can be made. SVM-RBF achieves the most favorable trade-off, yielding both higher TP for the favor class (454) and higher TN for the against class (317). MNB performs reasonably well but suffers from a larger number of false positives (157 cases). In contrast, W-1NN exhibits the weakest performance, misclassifying a substantial portion of both favor and against examples.

Figure 2.

The confusion matrix heatmaps for 1000 features (unigram and trigram) for three different classifiers.

Interestingly, the number of TPs for the favor class is almost identical for MNB and SVM-RBF, despite the latter being the stronger classifier overall. The key advantage of SVM-RBF lies not in identifying favor instances but in avoiding the misclassification of against examples as favor. Specifically, MNB produces 157 FPs (against predicted as favor), whereas SVM-RBF reduces this to only 107 FPs. Consequently, although both models capture approximately 79% of the favor class (similar recall), SVM-RBF achieves superior precision by minimizing false positives.

In summary, the strength of SVM-RBF is evident in its effective management of true negatives and false positives rather than true positives alone. This capability leads to consistently higher precision and $F_{\text{avg}}$ scores, underscoring its advantages over MNB in handling imbalanced stance detection tasks.

5.4. Hyper-Parameter Optimization

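The goal of a learning algorithm $A$ is to find a function $f$ that minimizes the expected loss $\mathcal{L}(x; f)$ over samples $x$ drawn from the true data distribution $\mathcal{G}_x$. The algorithm $A$ maps the training set $X^{(\text{train})}$ to $f$ and depends on hyper-parameters $\lambda$, which must be tuned to produce the best-performing model. Thus, the key challenge is selecting the optimal $\lambda^{*}$ that minimizes the generalization error:

$$\lambda^{*} = \operatorname*{arg\,min}_{\lambda \in \Lambda} \; \mathbb{E}_{x \sim \mathcal{G}_x}\left[\mathcal{L}\left(x; A_{\lambda}\left(X^{(\text{train})}\right)\right)\right]$$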

This selection process is known as hyper-parameter optimization [54].

Among the available optimization methods, grid search is particularly effective in low-dimensional spaces due to its simplicity [54]. In this study, we employed grid search to tune the hyper-parameters of the SVM classifier.

The SVM’s performance is highly sensitive to its hyper-parameters, particularly C (regularization parameter) and $\gamma$ (kernel coefficient). The C parameter controls the trade-off between maximizing training accuracy and ensuring a smooth decision boundary: higher values enforce strict classification of training samples, while lower values prioritize smoother decision surfaces [55]. The $\gamma$ parameter determines the influence range of a single training instance; higher values mean only nearby points significantly affect the decision boundary [56].

To optimize C and $\gamma$, we employed a grid search strategy combined with 10-fold cross-validation on the training dataset, searching both parameters over the same set of candidate values. The results, presented in Table 5, were evaluated in terms of classification accuracy [54].
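The mechanics of grid search with k-fold cross-validation can be sketched in plain Python. Everything named here is an assumption made for illustration: the candidate values in `grid` are hypothetical (they are not the values used in the study), and `toy_evaluate` is a stand-in scorer, not an SVM. In practice, the `evaluate` callable would train the classifier on the training indices with the given parameters and return its accuracy on the held-out fold.

```python
import math
from itertools import product
from statistics import mean


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous, near-equal folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def grid_search(evaluate, grid, n_samples, k=10):
    """Return (best_params, best_mean_score) over the Cartesian product of grid."""
    folds = k_fold_indices(n_samples, k)
    best_params, best_score = None, float("-inf")
    for values in product(*grid.values()):
        params = dict(zip(grid.keys(), values))
        scores = []
        for held_out in folds:
            held = set(held_out)
            train_idx = [i for i in range(n_samples) if i not in held]
            scores.append(evaluate(params, train_idx, held_out))
        avg = mean(scores)  # cross-validated score for this parameter pair
        if avg > best_score:
            best_params, best_score = params, avg
    return best_params, best_score


def toy_evaluate(params, train_idx, test_idx):
    # Stand-in scorer (NOT an SVM): peaks at C = 10, gamma = 0.01.
    return -abs(math.log10(params["C"]) - 1) - abs(math.log10(params["gamma"]) + 2)


grid = {"C": [0.1, 1, 10, 100], "gamma": [0.001, 0.01, 0.1, 1]}  # hypothetical
best_params, best_score = grid_search(toy_evaluate, grid, n_samples=50, k=10)
print(best_params)
```

Because every parameter pair is scored on the same folds, the cost grows with the product of the candidate-list lengths, which is why grid search is usually reserved for low-dimensional spaces such as this two-parameter case.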

Table 5.

Hyper-parameter tuning results for our dataset for the SVM classifier.

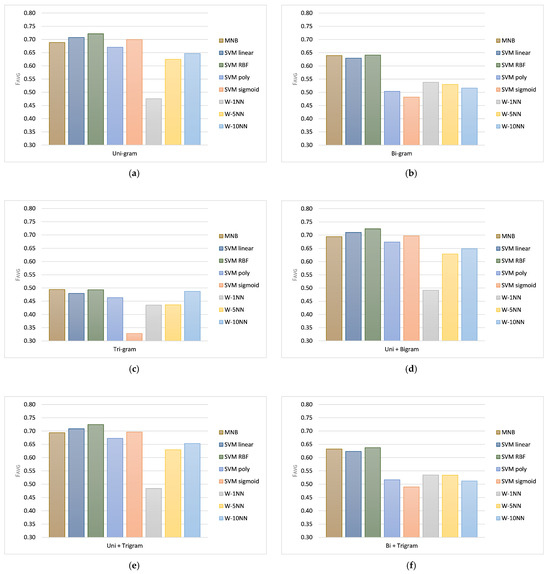

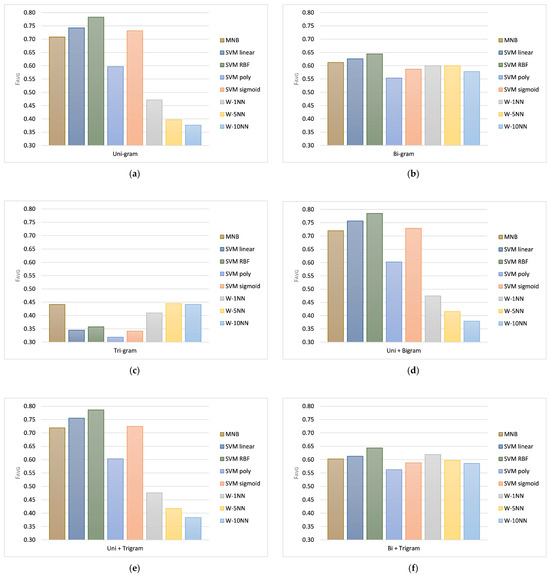

Table 6 presents a comparative analysis of stance detection performance on the Hezbollah dataset, evaluating results both without and with the application of SMOTE. The table details the precision, recall, and F-score metrics for the “favor” and “against” classes, in addition to the overall macro-average F-score ($F_{\text{avg}}$). Complementary to these results, Figure 3 and Figure 4 illustrate the comparative performance across different classifiers and n-gram feature combinations under both experimental conditions.

Table 6.

Results for our dataset without SMOTE and after applying it. N refers to the size of the N-gram combinations (same as in Table 4). For each N, we boldface the best $F_{\text{avg}}$.

Figure 3.

The behavior of $F_{\text{avg}}$ without SMOTE across different n-gram combinations and classifiers: (a) unigram, (b) bigram, (c) trigram, (d) unigram + bigram, (e) unigram + trigram, and (f) bigram + trigram.

Figure 4.

The behavior of $F_{\text{avg}}$ with SMOTE (Synthetic Minority Over-sampling Technique) for identical n-gram combinations and classifiers as in the previous figure. To enable direct comparison, the y-axis limits are kept identical.

Employing a combination of unigram and trigram features, the SVM-RBF classifier yielded the strongest performance in our experiments, achieving an $F_{\text{avg}}$ of 72.43% without SMOTE (Figure 3e; see also Table 4) and improving further to 78.62% with SMOTE (Figure 4e).

The analysis of feature combinations revealed that integrating multiple n-grams consistently equaled or exceeded the performance of any single feature type. For instance, without SMOTE, combining unigram and bigram features increased the MNB classifier’s $F_{\text{avg}}$ from 68.85% (unigrams alone) and 63.92% (bigrams alone) to 69.41%, demonstrating a clear synergistic effect. Among the classifiers we evaluated (MNB, the SVM with multiple kernels, and weighted K-NN), MNB delivered competitive results approaching the SVM’s performance while requiring significantly less computation time. The weighted K-NN, while ineffective on our dataset, demonstrated good performance on the SemEval-2016 English dataset, as reported in [5]. In kernel comparisons, RBF consistently outperformed the polynomial and sigmoid kernels on our Hezbollah dataset.

5.5. Discussion

In the context of stance detection on extremist groups, recall is a more critical metric than precision. The primary objective is to ensure that supportive or sympathetic stances are comprehensively detected, as overlooking such content (FNs) may result in missed signals of radicalization, recruitment, or propaganda dissemination, with direct implications for national security. While precision remains important for reducing false alarms and avoiding misallocation of resources, its trade-off is less consequential than recall in high-stakes domains. From an operational standpoint, it is preferable to flag more potential threats—even at the cost of some FPs—than to miss genuine supportive expressions. Thus, models for this task must be optimized for high recall while maintaining an acceptable level of precision to ensure feasibility in deployment.

The application of SMOTE underscores a critical divergence in how classifiers balance the precision–recall trade-off in stance detection, as evidenced by the confusion matrices in Figure 5. Compared with the non-SMOTE results (Figure 2), both MNB and SVM-RBF produce fewer false positives, i.e., fewer “against” tweets predicted as “favor”: FPs decrease from 157 to 103 and from 107 to 77, respectively. However, their behavior diverges sharply in terms of recall. MNB undergoes a conservative shift that severely compromises its sensitivity: TPs for the “favor” class drop from 453 to 405, while FNs increase from 123 to 171. This loss of recall, in which many genuine supportive stances are overlooked, renders MNB unsuitable for applications such as counter-extremism, where detecting subtle or early signals of radicalization depends on high recall. In such contexts, failing to capture authentic “favor” instances poses a far greater risk than misclassifying a limited number of negative examples.

Figure 5.

The confusion matrix heatmaps for unigram and trigram features using two classifiers trained with SMOTE. For comparability with the earlier results, the dataset size is normalized to 1000 instances.

At the same time, the ethical implications of FPs cannot be ignored. Each misclassification of an “against” stance as “favor” constitutes a false accusation, potentially stigmatizing individuals as sympathizers of extremist groups when in fact they are not. This risk of creating “false victims” underscores the necessity of maintaining strong precision to protect individuals from wrongful suspicion and to preserve trust in automated systems. In this respect, SVM-RBF demonstrates a more desirable profile: it maintains high recall with only a marginal decrease in TPs (454 to 441) while simultaneously achieving greater precision by substantially reducing FPs and increasing TNs (317 to 347). By balancing the need to capture supportive stances (high recall) with the responsibility to avoid wrongful accusations (high precision), SVM-RBF emerges as the more robust and socially responsible classifier. This dual consideration highlights that effective stance detection in sensitive domains must optimize not only for security imperatives but also for ethical safeguards.
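The recall shifts discussed above can be checked with a few lines of arithmetic on the counts quoted in the text. For MNB, both TPs and FNs are reported. For SVM-RBF, only TPs are reported, so the sketch assumes the same number of “favor” instances implied by the MNB counts (453 + 123 = 576); that inference is an assumption of this example, not a figure stated in the study.

```python
def recall(tp, fn):
    """Fraction of actual positives that were retrieved."""
    return tp / (tp + fn)


# "favor"-class counts for MNB as quoted in the text.
mnb_before = recall(tp=453, fn=123)  # without SMOTE
mnb_after = recall(tp=405, fn=171)   # with SMOTE

# Assumption: SVM-RBF is evaluated on the same 576 "favor" instances.
favor_total = 453 + 123
svm_before = recall(tp=454, fn=favor_total - 454)
svm_after = recall(tp=441, fn=favor_total - 441)

for name, before, after in [("MNB", mnb_before, mnb_after),
                            ("SVM-RBF", svm_before, svm_after)]:
    print(f"{name}: recall {before:.3f} -> {after:.3f}")
```

Under this assumption, MNB loses roughly eight points of “favor” recall after SMOTE, whereas SVM-RBF loses roughly two.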

We observed an interesting performance gap between FastText ($F_{\text{avg}} = 70.86\%$, Table 3) and SVM-RBF ($F_{\text{avg}} = 78.62\%$). While we included FastText for its distinct neural approach and proven effectiveness with morphologically rich languages like Arabic, two main factors contributed to this difference: (1) Our dataset size was relatively limited for optimal FastText performance, which typically benefits from larger corpora or pretrained embeddings, while SVM-RBF excels with smaller datasets when using well-engineered features like tf-idf and n-grams. (2) FastText’s shallow architecture is less capable of modeling complex decision boundaries compared to SVM-RBF’s kernel-based approach.

We maintained FastText in our comparisons to provide a comprehensive methodological perspective and demonstrate how different approaches perform under identical conditions. Although it underperformed relative to SVM-RBF in this specific context, FastText remains a valuable baseline that could potentially achieve better results with larger-scale pretraining or enhanced preprocessing techniques.

6. Conclusions

This study aims to develop an automated system for stance detection in Arabic tweets, focusing on Hezbollah’s activities in Syria as a case study. The proposed algorithm addresses the unique challenges of Arabic tweets, including dialectal variations in stance identification, as noted by the annotators. Despite these complexities, the system demonstrates strong performance by first preprocessing tweets using the ISRI stemmer, then converting them into feature vectors via tf-idf vectorization. These vectors serve as input for multiple classifiers, including MNB, SVMs, and weighted K-NN.

The experiments were conducted on a dataset of approximately 7000 tweets from Hezbollah-related discussions. The results indicate that the system performs effectively, particularly when the dataset is balanced. We achieved an $F_{\text{avg}}$ score of 78.62%, a competitive result compared to state-of-the-art performance in English tweet stance detection, where the highest reported F-average score for the SemEval-2016 dataset was 76%. This underscores the system’s capability to handle the linguistic nuances of Arabic social media content.

For future work we plan to incorporate a profiler, similar to the one in [5]. This profiler would enable the system to infer demographic attributes of users, such as gender and age group, thereby providing richer contexts for stance detection and content analysis.

Author Contributions

Conceptualization, A.K.A. and A.M.A.; methodology, A.K.A.; software, A.K.A.; validation, A.K.A.; formal analysis, A.M.A. and A.K.A.; investigation, A.K.A.; resources, A.M.A.; data curation, A.K.A.; writing—original draft preparation, A.K.A.; writing—review and editing, A.M.A.; supervision, A.M.A.; funding acquisition, A.M.A. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank the Ongoing Research Funding Program (ORFFT-2025-006-4), King Saud University, Riyadh, Saudi Arabia for financial support.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. They are available for non-commercial research purposes.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Backlinko Team. Social Media Users & Growth Statistics. 2024. Available online: https://backlinko.com/social-media-users (accessed on 9 June 2025).

2. DataReportal/Kepios. Global Social Media Statistics. 2025. Available online: https://datareportal.com/social-media-users (accessed on 12 June 2025).

3. Boczkowski, P.J.; Matassi, M.; Mitchelstein, E. How Young Users Deal With Multiple Platforms: The Role of Meaning-Making in Social Media Repertoires. J. Comput.-Mediat. Commun. 2018, 23, 245–259.

4. Zubiaga, A.; Aker, A.; Bontcheva, K.; Liakata, M.; Procter, R. Detection and resolution of rumours in social media: A survey. ACM Comput. Surv. (CSUR) 2018, 51, 1–36.

5. Al-Ghadir, A.I.; Azmi, A.M.; Hussain, A. A novel approach to stance detection in social media tweets by fusing ranked lists and sentiments. Inf. Fusion 2020, 67, 29–40.

6. Williamson, M. Terrorism, War and International Law: The Legality of the Use of Force Against Afghanistan in 2001; Routledge: London, UK, 2016.

7. Kruglanski, A.W.; Fishman, S. Terrorism between “syndrome” and “tool”. Curr. Dir. Psychol. Sci. 2006, 15, 45–48.

8. Azmi, A.M.; Al-Ghadir, A.I. Using Twitter as a digital insight into public stance on societal behavioral dynamics. J. King Saud-Univ.-Comput. Inf. Sci. 2024, 36, 102078.

9. Callegaro, M.; Yang, Y. The role of surveys in the era of “big data”. In The Palgrave Handbook of Survey Research; Palgrave Macmillan: Cham, Switzerland, 2017; pp. 175–192.

10. Bruns, A.; Stieglitz, S. Twitter Data: What do they represent? IT - Inf. Technol. 2014, 56, 240–245.

11. Alruily, M.; Manaf Fazal, A.; Mostafa, A.M.; Ezz, M. Automated Arabic long-tweet classification using transfer learning with BERT. Appl. Sci. 2023, 13, 3482.

12. Azmi, A.M.; Aljafari, E.A. Universal web accessibility and the challenge to integrate informal Arabic users: A case study. Univers. Access Inf. Soc. 2018, 17, 131–145.

13. Almuzaini, H.A.; Azmi, A.M. TaSbeeb: A judicial decision support system based on deep learning framework. J. King Saud-Univ.-Comput. Inf. Sci. 2023, 35, 101695.

14. Al-Jouie, M.F.; Azmi, A.M. Automated Evaluation of School Children Essays in Arabic. In Proceedings of the 3rd International Conference on Arabic Computational Linguistics (ACLing 2017), Dubai, United Arab Emirates, 5–6 November 2017; Volume 117, pp. 19–22.

15. Alqahtani, A.; Al-Saif, A. Automated Arabic essay evaluation. In Proceedings of the 17th International Conference on Natural Language Processing (ICON), Patna, India, 18–21 December 2020; pp. 181–190.

16. AlShenaifi, N.; Azmi, A. Arabic dialect identification using machine learning and transformer-based models. In Proceedings of the Seventh Arabic Natural Language Processing Workshop (WANLP 2022), Abu Dhabi, United Arab Emirates, 8 December 2022; pp. 464–467.

17. Mohammad, S.; Kiritchenko, S.; Sobhani, P.; Zhu, X.; Cherry, C. SemEval-2016 Task 6: Detecting stance in tweets. In Proceedings of the SemEval, San Diego, CA, USA, 16–17 June 2016; pp. 31–41.

18. Somasundaran, S.; Wiebe, J. Recognizing stances in ideological on-line debates. In Proceedings of the NAACL HLT 2010 Workshop on Computational Approaches to Analysis and Generation of Emotion in Text, Los Angeles, CA, USA, 1–6 June 2010; pp. 116–124.

19. Addawood, A.; Schneider, J.; Bashir, M. Stance Classification of Twitter Debates: The Encryption Debate as A Use Case. In Proceedings of the 8th International Conference on Social Media & Society, Toronto, ON, Canada, 28–30 July 2017.

20. Taulé, M.; Martí, A.; Rangel, F.; Rosso, P.; Bosco, C.; Patti, V. Overview of the Task on Stance and Gender Detection in Tweets on Catalan Independence at IberEval 2017. In Proceedings of the 2nd Workshop on Evaluation of Human Language Technologies for Iberian Languages, Murcia, Spain, 19 September 2017.

21. Pangtey, L.; Choudhary, R.; Mehta, P. Large Language Models Meet Stance Detection: A Survey of Tasks, Methods, Applications, Challenges and Future Directions. arXiv 2025, arXiv:2505.08464.

22. Garg, K.; Caragea, C. Stanceformer: Target-Aware Transformer for Stance Detection. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 4969–4984.

23. Barel, G.; Tsur, O.; Vilenchik, D. Acquired TASTE: Multimodal Stance Detection with Textual and Structural Embeddings. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 6492–6504.

24. Ma, J.; Wang, C.; Xing, H.; Zhao, D.; Zhang, Y. Chain of stance: Stance detection with large language models. In Natural Language Processing and Chinese Computing (NLPCC 2024); Lecture Notes in Computer Science; Wong, D.F., Wei, Z., Yang, M., Eds.; Springer: Singapore, 2024; Volume 15363, pp. 82–94.

25. Zhao, X.; Li, F.; Wang, H.; Zhang, M.; Liu, Y. Zero-Shot Stance Detection Based on Multi-Expert Collaboration. Sci. Rep. 2024, 14, 15923.

26. Ma, J.; Qian, Y.; Yang, J. Exploring Multi-Agent Debate for Zero-Shot Stance Detection: A Novel Approach. Appl. Sci. 2025, 15, 4612.

27. Abdelhade, N.; Soliman, T.; Ibrahim, H. Detecting Twitter users’ opinions of Arabic comments during various time episodes via deep neural network. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 1–3 September 2018.

28. Alkhathlan, A.; Alahmadi, F.; Kateb, F.; Al-Khalifa, H. Constructing and evaluating ArabicStanceX: A social media dataset for Arabic stance detection. Front. Artif. Intell. 2025, 8, 1615800.

29. Charfi, A.; Khene, M.; Belguith, L.H. Stance Detection in Arabic with a Multi-Dialectal Cross-Domain Stance Corpus. Soc. Netw. Anal. Min. 2024, 14, 69.

30. Alturayeif, N.; Luqman, H.; Alyafeai, Z.; Yamani, A. StanceEval 2024: The first Arabic stance detection shared task. In Proceedings of the Second Arabic Natural Language Processing Conference, Bangkok, Thailand, 16 August 2024; pp. 774–782.

31. Badran, M.; Hamdy, M.; Torki, M.; El-Makky, N.M. AlexUNLP-BH at StanceEval2024: Multiple contrastive losses ensemble strategy with multi-task learning for stance detection in Arabic. In Proceedings of the Second Arabic Natural Language Processing Conference, Bangkok, Thailand, 16 August 2024; pp. 823–827.

32. Zhang, B.; Jiang, Y.; Wang, L.; Wang, Z.; Li, X. A Survey of Stance Detection on Social Media: New Directions and Perspectives. arXiv 2024, arXiv:2409.15690.

33. Gera, P.; Neal, T. Deep Learning in Stance Detection: A Survey. ACM Comput. Surv. 2025, 58, 1–37.

34. Omer, E. Using Machine Learning to Identify Jihadist Messages on Twitter. In Proceedings of the 2015 European Intelligence and Security Informatics Conference, Manchester, UK, 7–9 September 2015.

35. Alsaedi, N.; Burnap, P. Arabic event detection in social media. In Computational Linguistics and Intelligent Text Processing (CICLing 2015); Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9041, pp. 384–401.

36. Ali, G. Identifying Terrorist Affiliations through Social Network Analysis Using Data Mining Techniques. Master’s Thesis, Valparaiso University, Valparaiso, IN, USA, 2016.

37. Alhalabi, W.; Jussila, J.; Jambi, K.; Visvizi, A.; Qureshi, H.; Lytras, M.; Malibari, A.; Adham, R. Social mining for terroristic behavior detection through Arabic tweets characterization. Future Gener. Comput. Syst. 2021, 116, 132–144.

38. Al-Shawakfa, E.M.; Alsobeh, A.M.; Omari, S.; Shatnawi, A. RADAR#: An Ensemble Approach for Radicalization Detection in Arabic Social Media Using Hybrid Deep Learning and Transformer Models. Information 2025, 16, 522.

39. Himdi, H.; Alhayan, F.; Shaalan, K. Neural Networks and Sentiment Features for Extremist Content Detection in Arabic Social Media. Int. Arab J. Inf. Technol. (IAJIT) 2025, 22, 522–534.

40. Aldera, S.; Emam, A.; Al-Qurishi, M.; Alrubaian, M.; Alothaim, A. Annotated Arabic Extremism Tweets. IEEE Dataport, 8 August 2021.

41. Fraiwan, M. Identification of Markers and Artificial Intelligence-Based Classification of Radical Twitter Data. Appl. Comput. Inform. 2022.

42. Mussiraliyeva, S.; Bolatbek, M.; Omarov, B.; Bagitova, K. Detection of extremist ideation on social media using machine learning techniques. In Proceedings of the International Conference on Computational Collective Intelligence (ICCCI 2020); Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12496, pp. 743–752.

43. Almohaimeed, N.; Azmi, A.M. Abstractive text summarization: A comprehensive survey of techniques, systems, and challenges. Comput. Sci. Rev. 2025, 57, 100762.

44. Abdel-Nabi, H.; Awajan, A.; Ali, M.Z. Deep learning-based question answering: A survey. Knowl. Inf. Syst. 2023, 65, 1399–1485.

45. Sabri, R.F.; Abdullah, N.A. A Review for Arabic Extremism Detection Using Machine Learning. Iraqi J. Sci. 2024, 65, 6617–6630.

46. Mohammad, S.; Kiritchenko, S.; Sobhani, P.; Zhu, X.; Cherry, C. A dataset for detecting stance in tweets. In Proceedings of the 10th Edition of the Language Resources and Evaluation Conference (LREC), Portorož, Slovenia, 23–28 May 2016.

47. Almuzaini, H.A.; Azmi, A.M. An unsupervised annotation of Arabic texts using multi-label topic modeling and genetic algorithm. Expert Syst. Appl. 2022, 203, 117384.

48. Al-Zyoud, A.; Al-Rabayah, W. Arabic Stemming Techniques: Comparisons and New Vision. In Proceedings of the 8th IEEE GCC Conference and Exhibition, Muscat, Oman, 1–4 February 2015.

49. Taghva, K.; Elkhoury, R.J.; Coombs, J. Arabic stemming without a root dictionary. In Proceedings of the International Conference on Information Technology: Coding and Computing (ITCC’05)-Volume II, Las Vegas, NV, USA, 4–6 April 2005; Volume 1, pp. 152–157.

50. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

51. Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

52. Sparck Jones, K. A Statistical Interpretation of Term Specificity and Its Application in Retrieval. J. Doc. 1972, 28, 11–21.

53. Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523.

54. Bergstra, J.; Bengio, Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

55. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

56. Schölkopf, B.; Burges, C.J.; Vapnik, V.N. Comparing Support Vector Machines with Gaussian Kernels to Radial Basis Function Classifiers. In Proceedings of the 9th International Conference on Artificial Neural Networks (ICANN), Edinburgh, UK, 7–10 September 1997; pp. 63–72.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).