Abstract

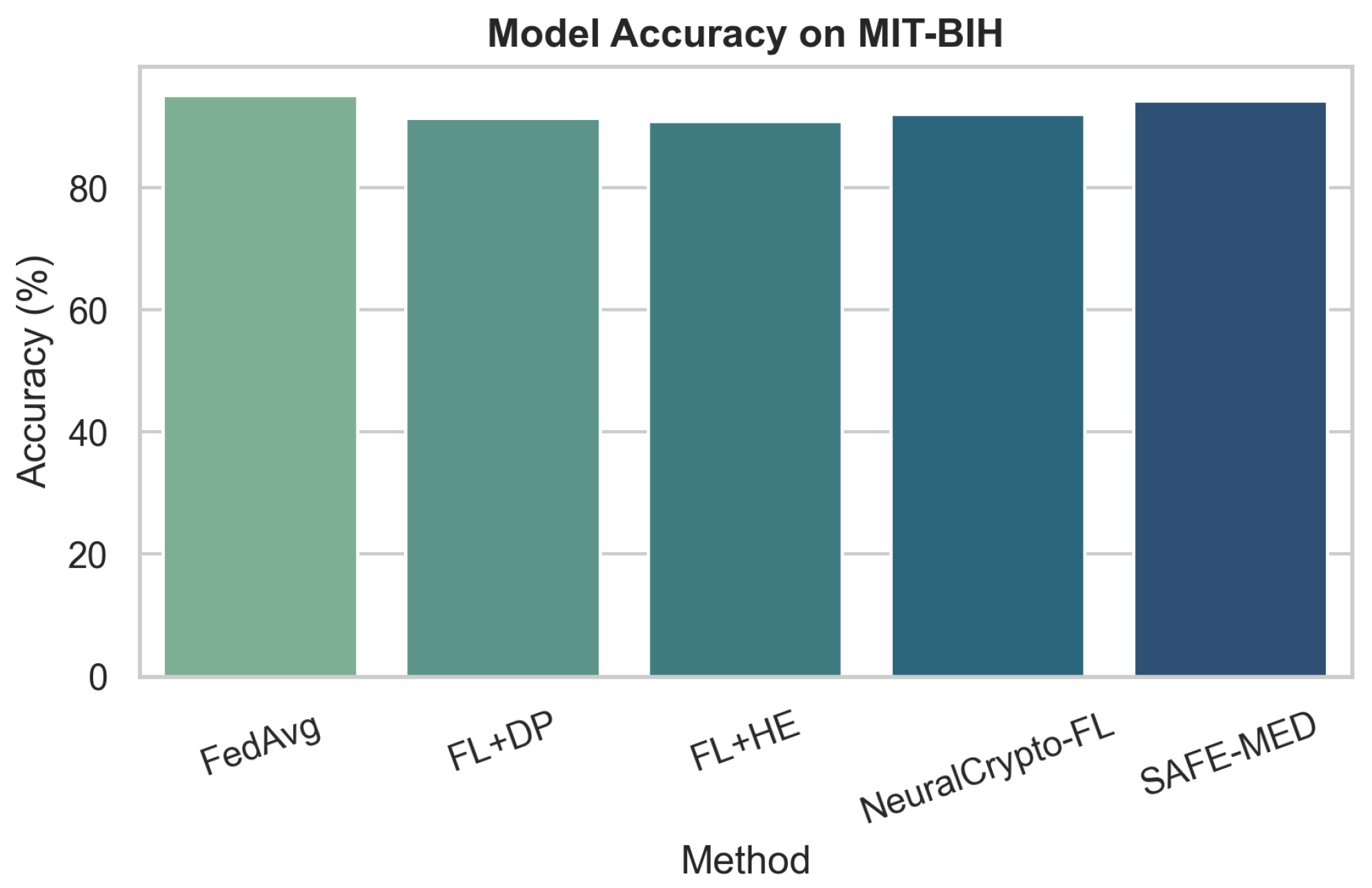

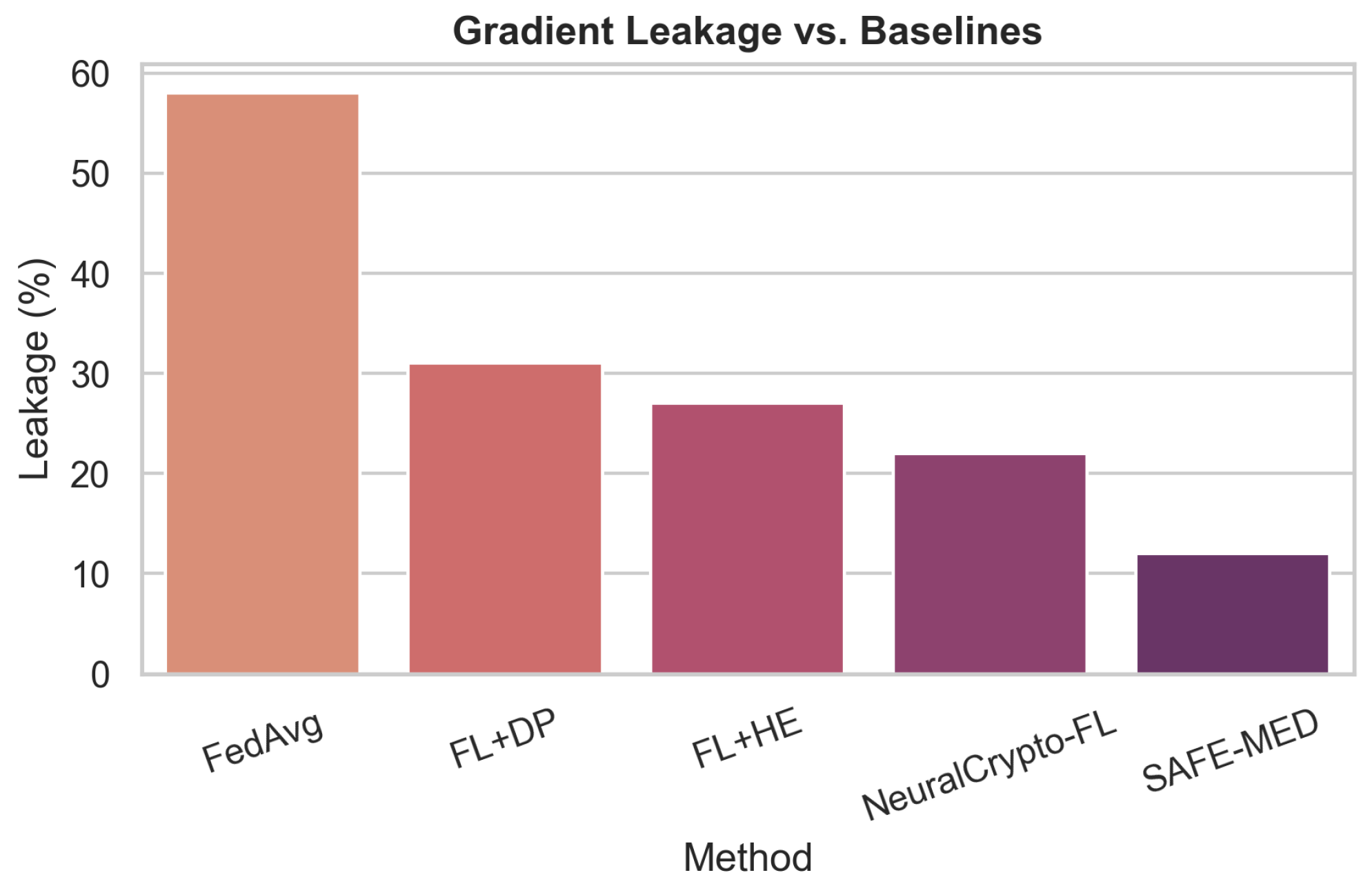

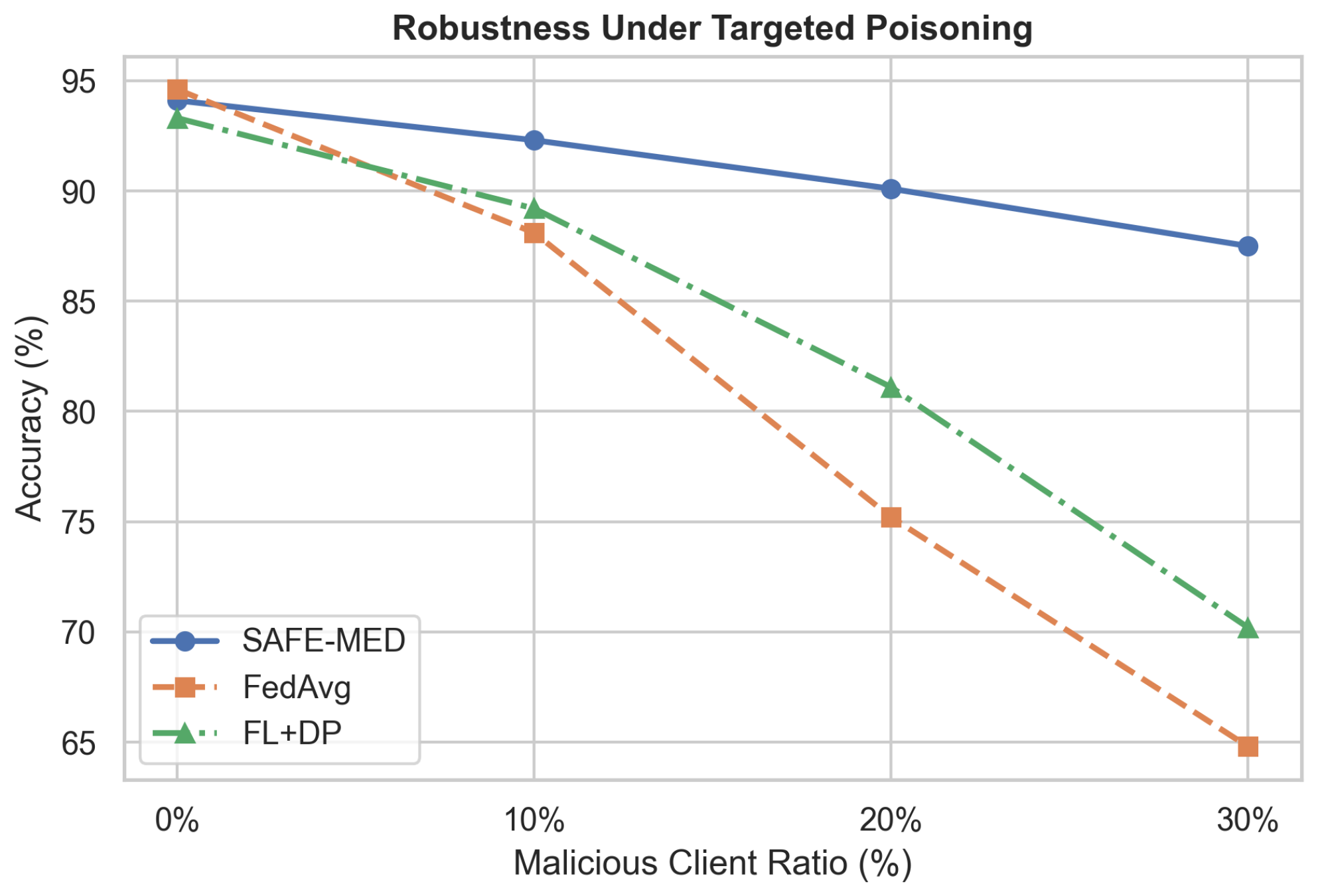

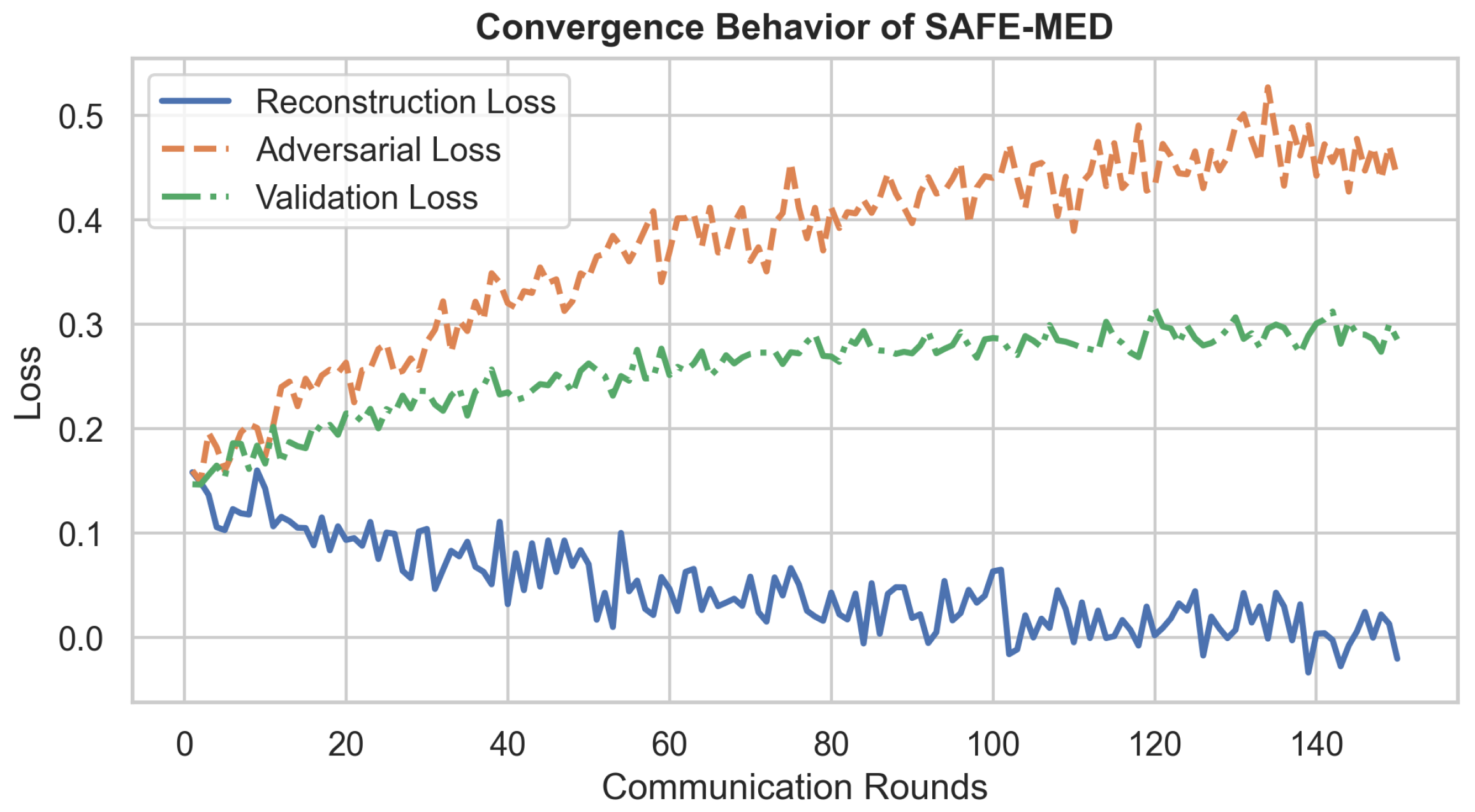

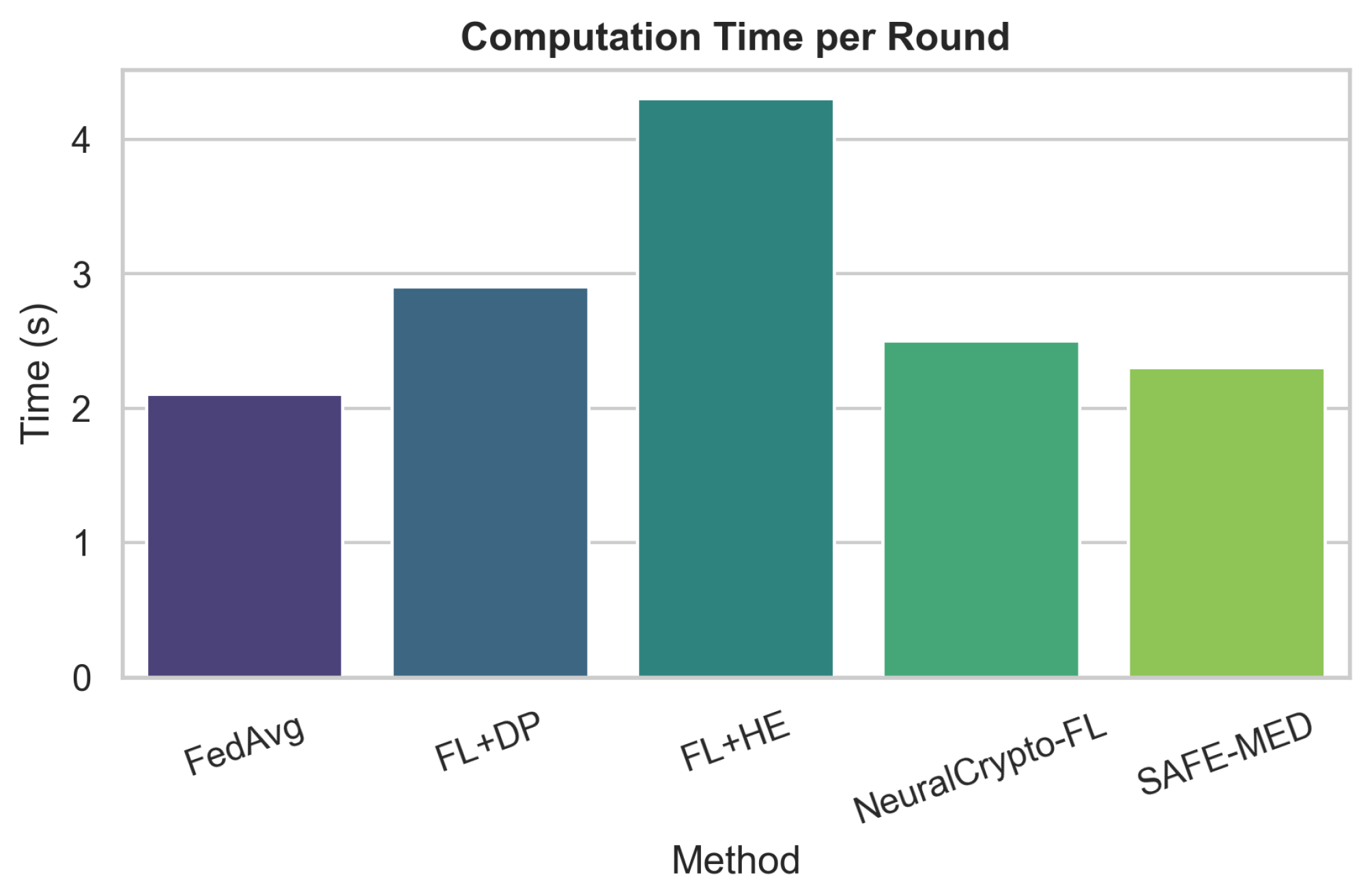

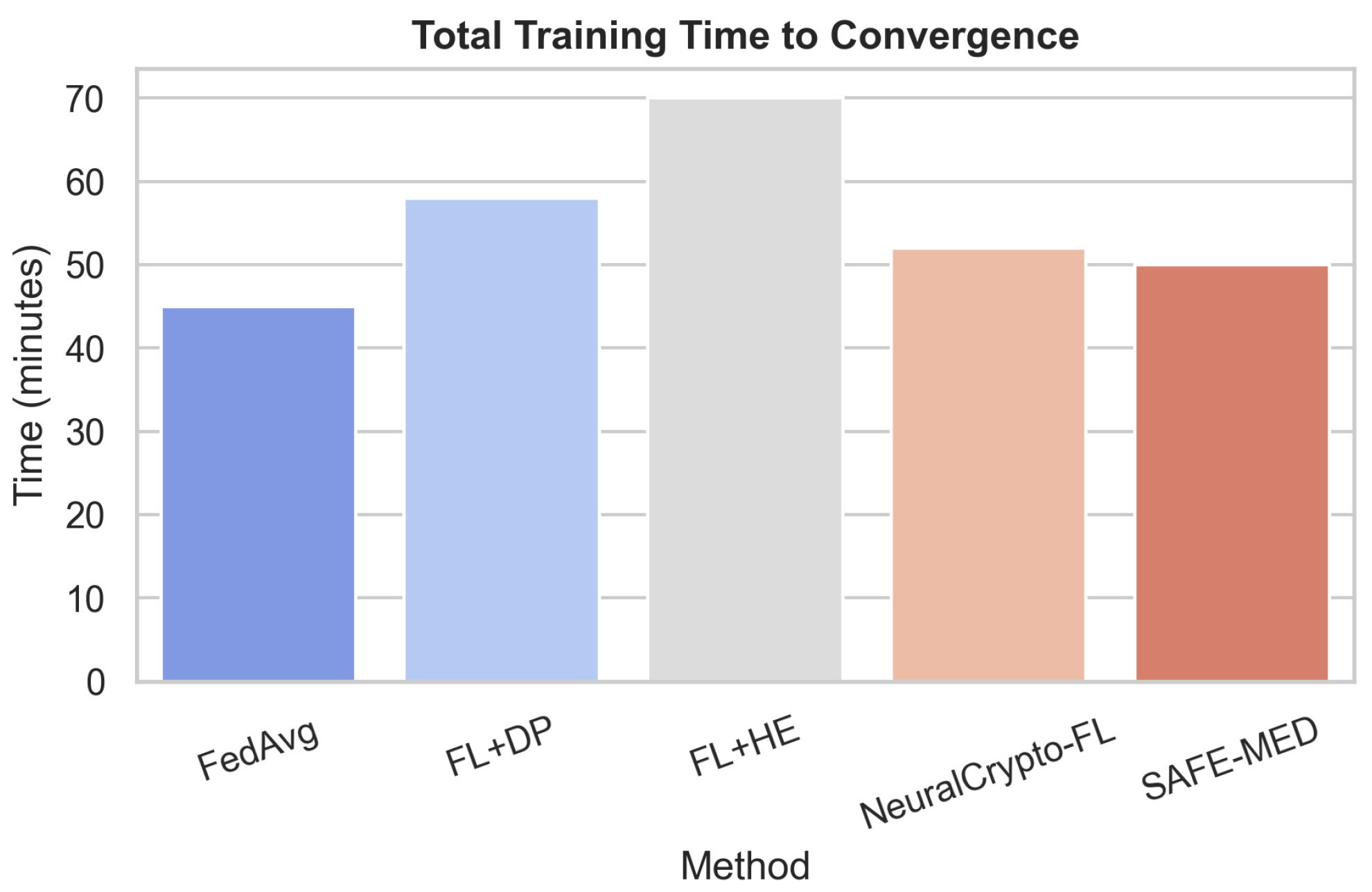

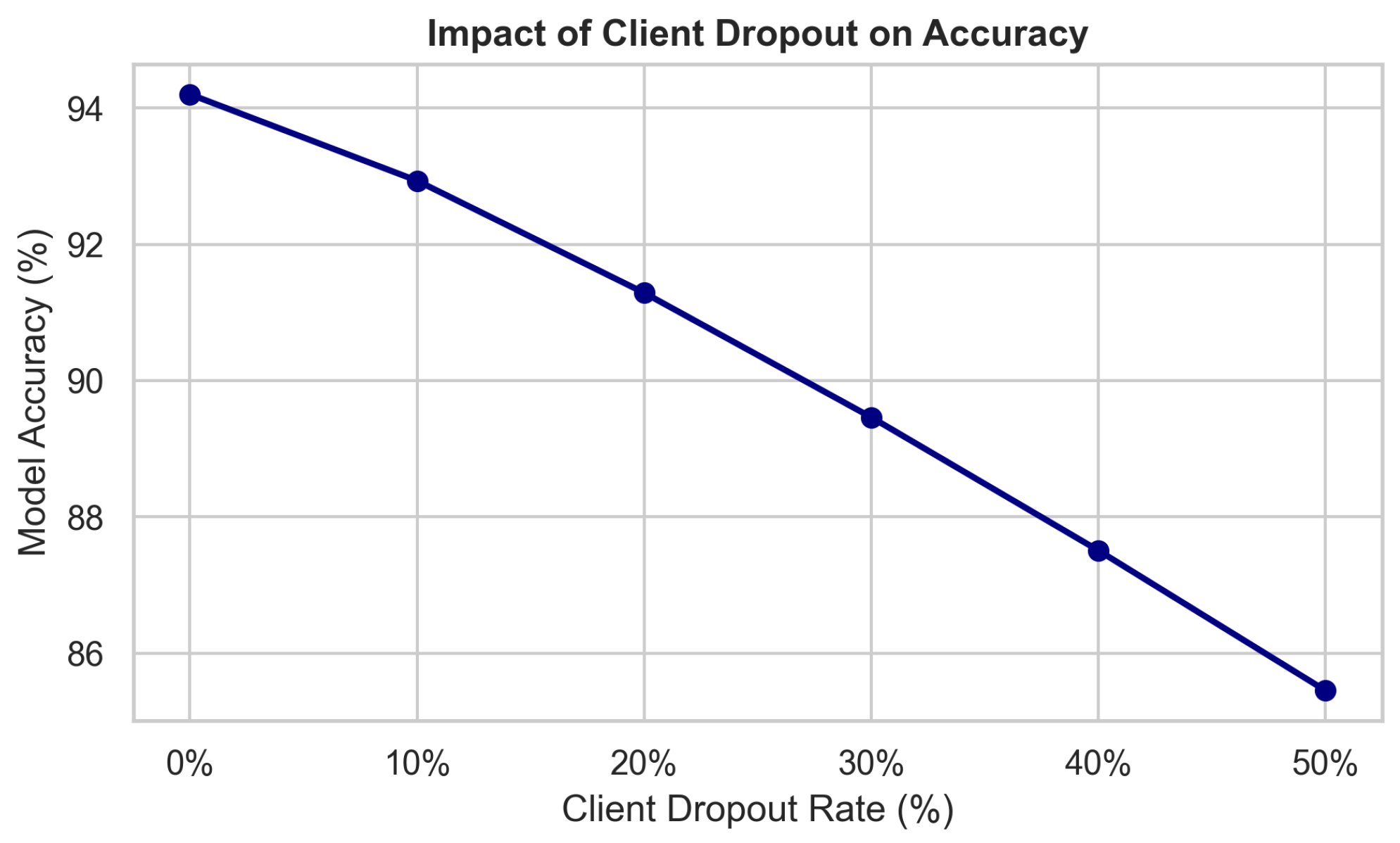

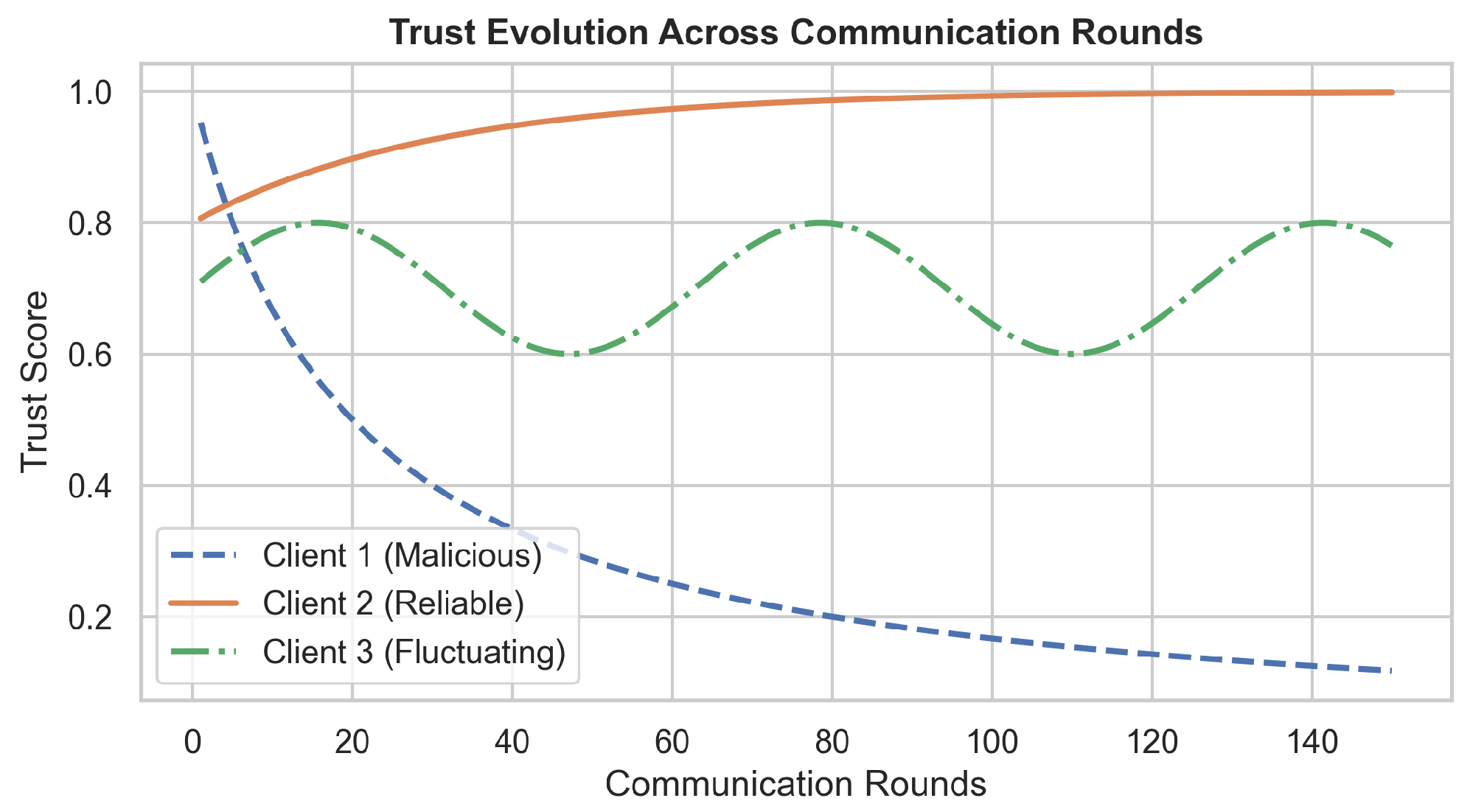

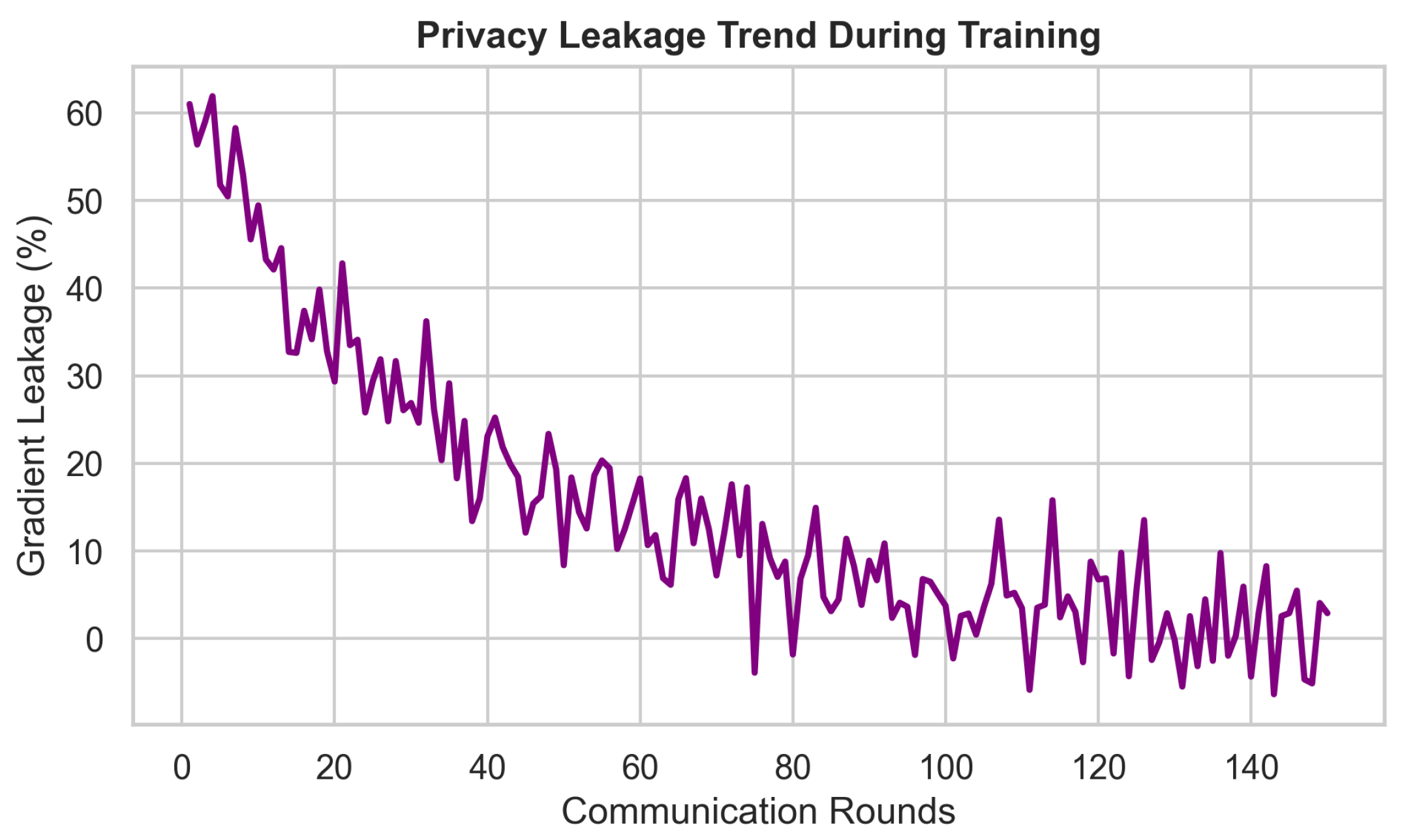

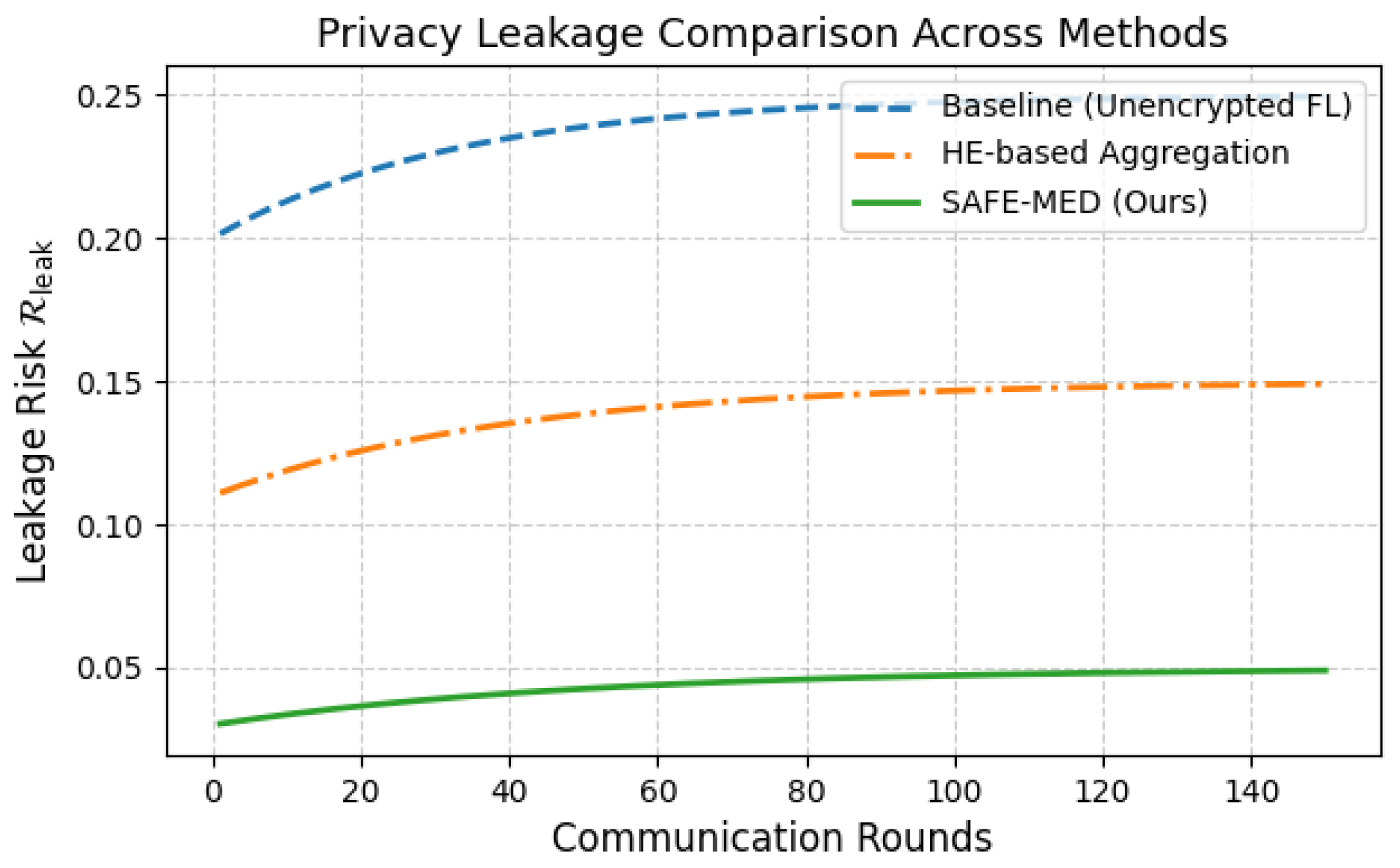

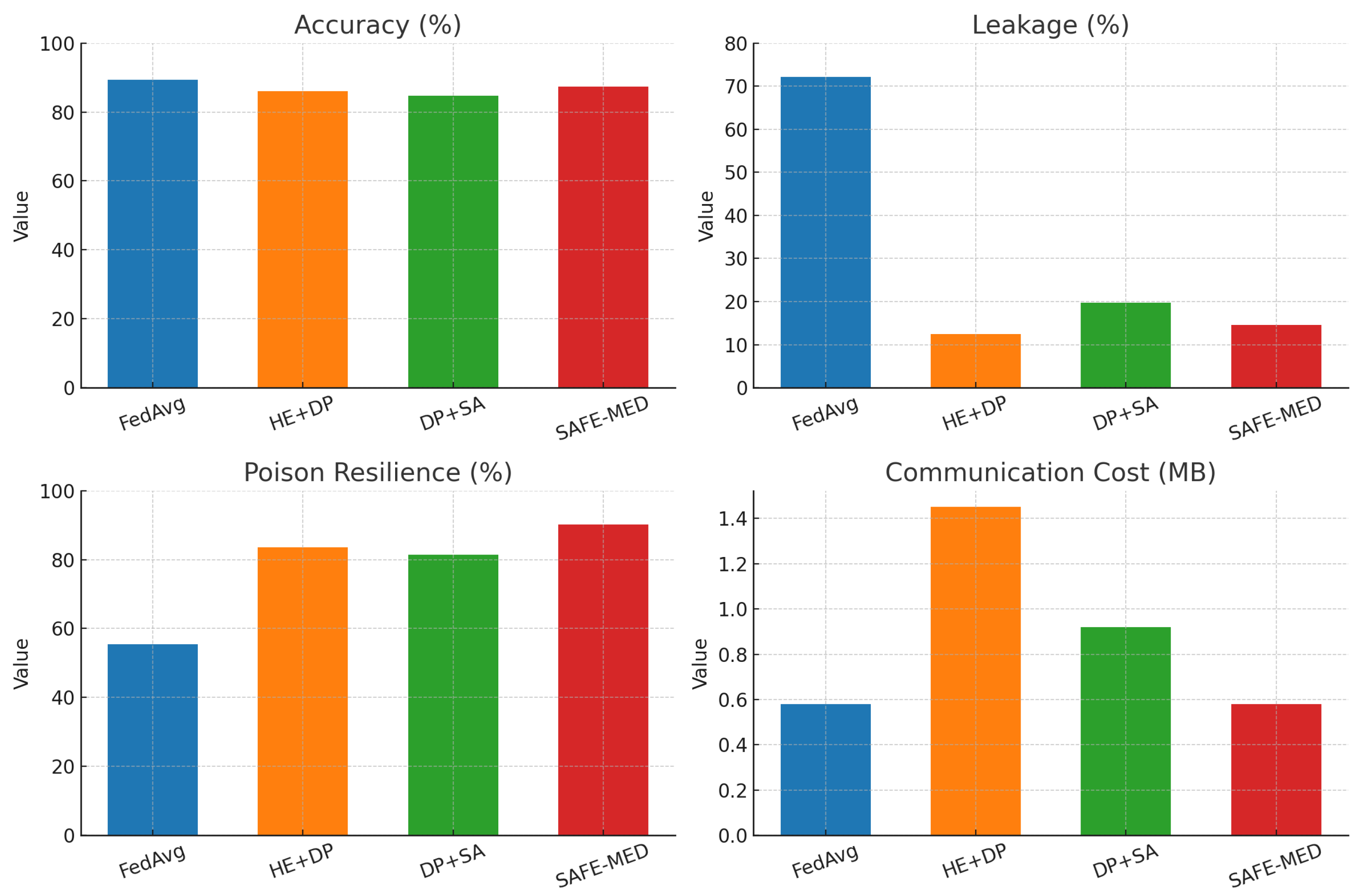

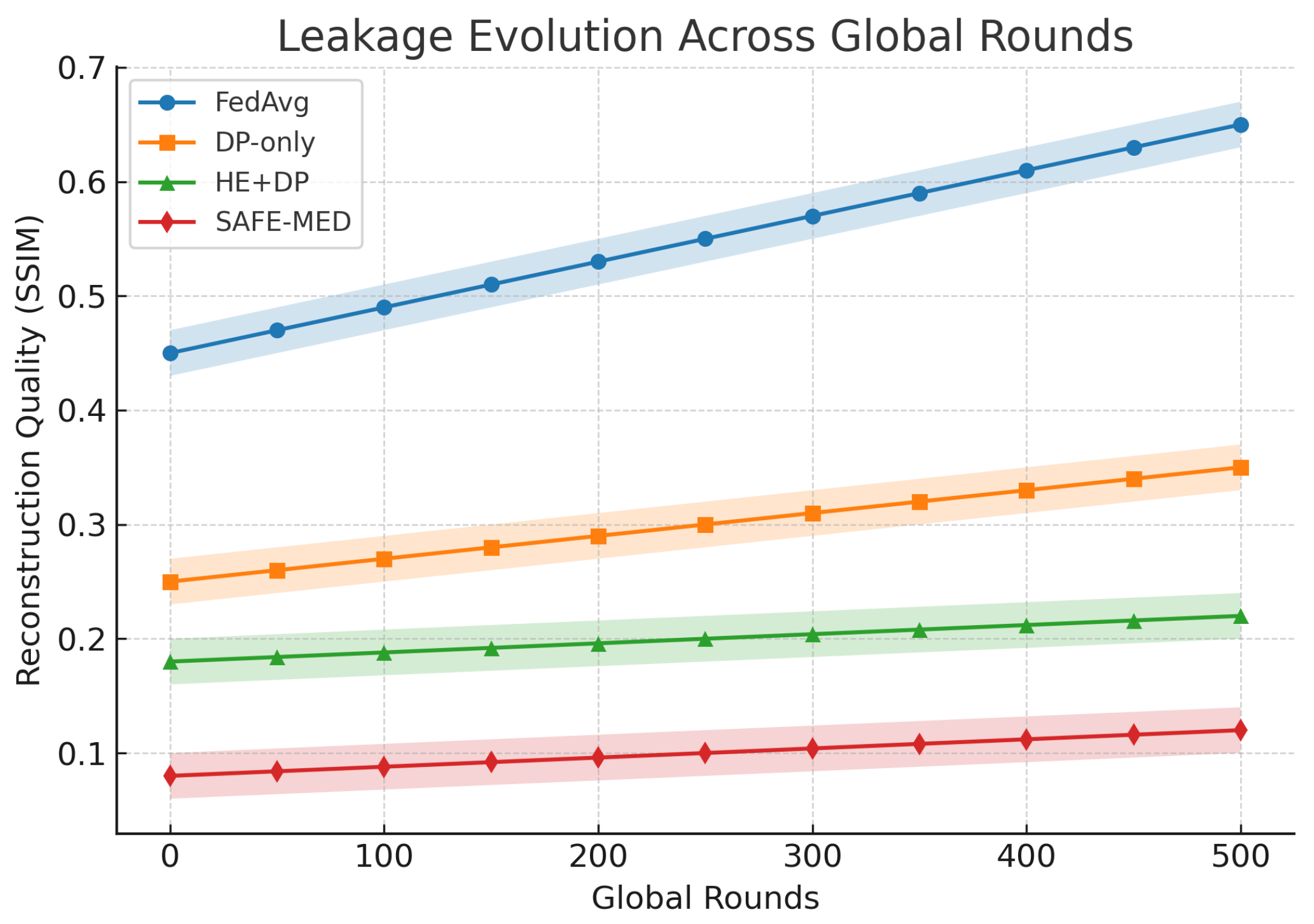

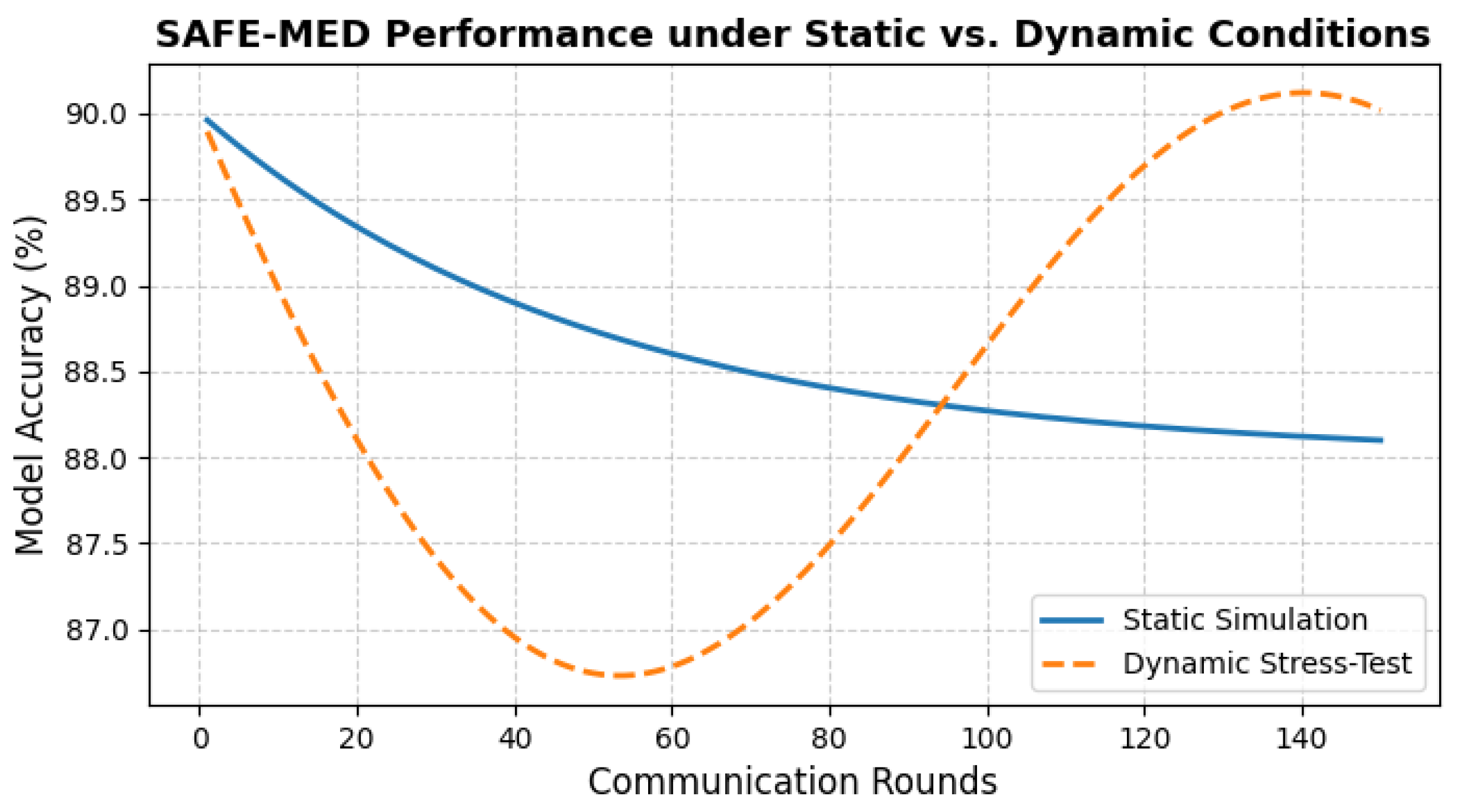

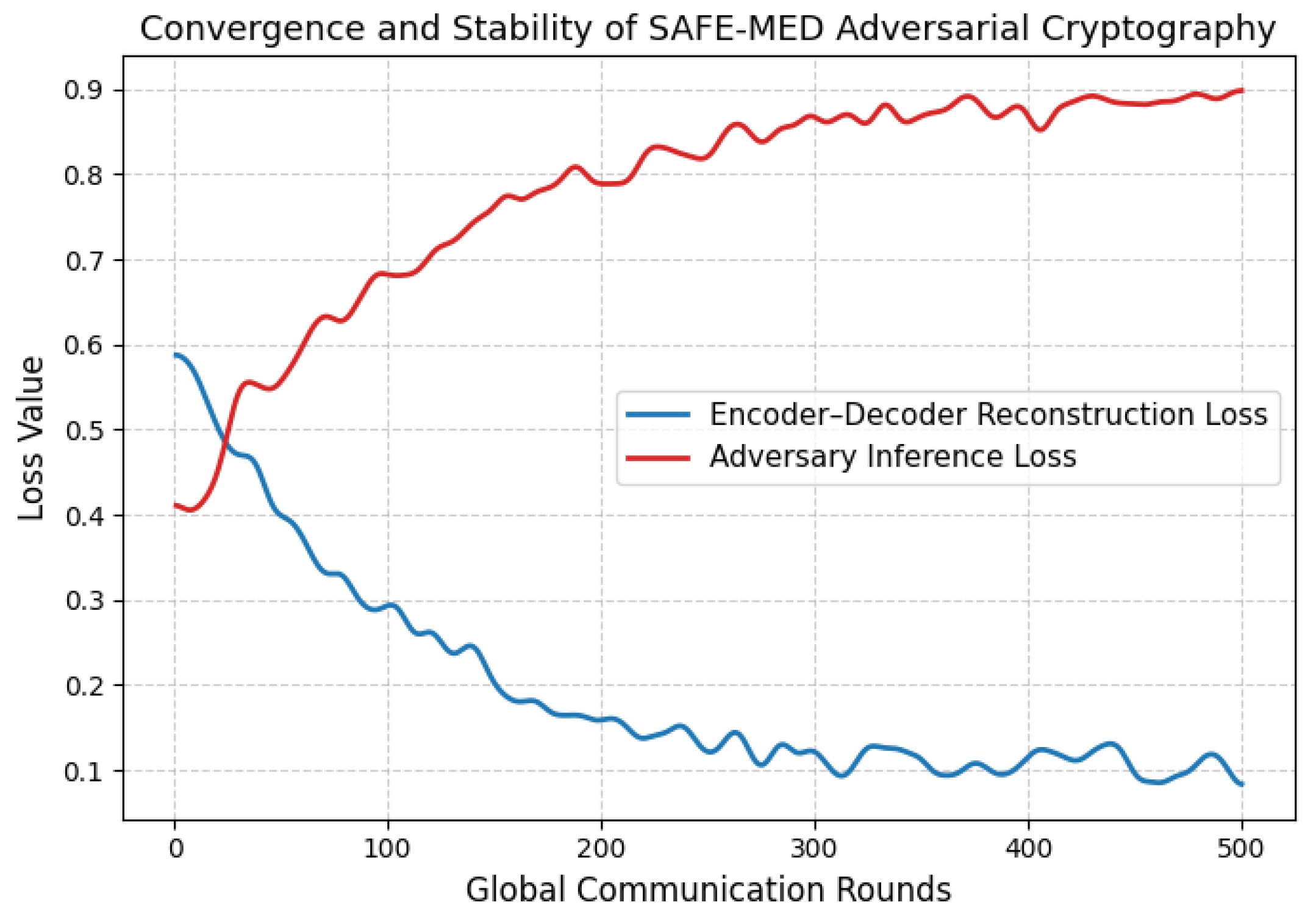

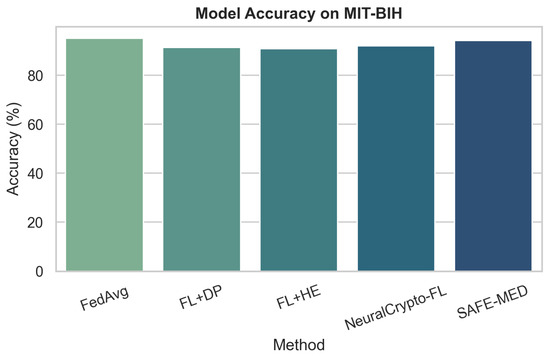

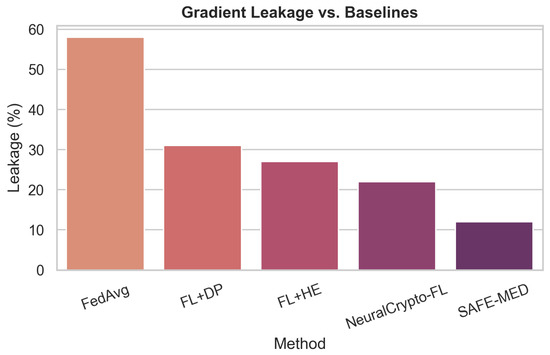

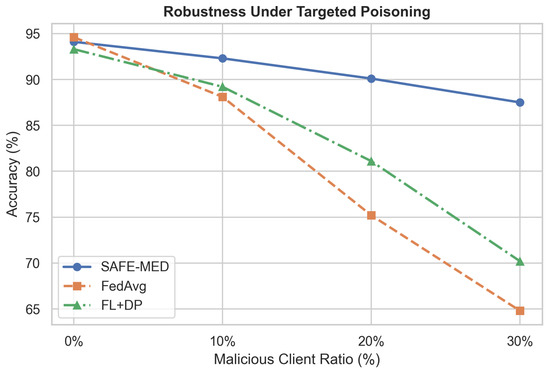

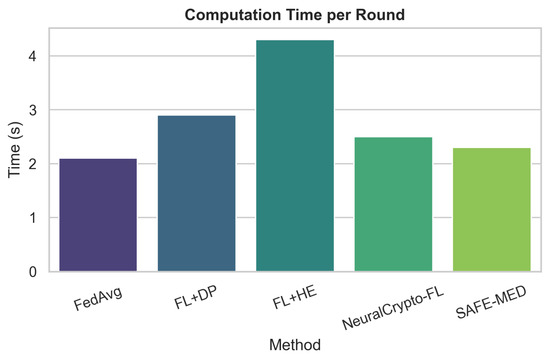

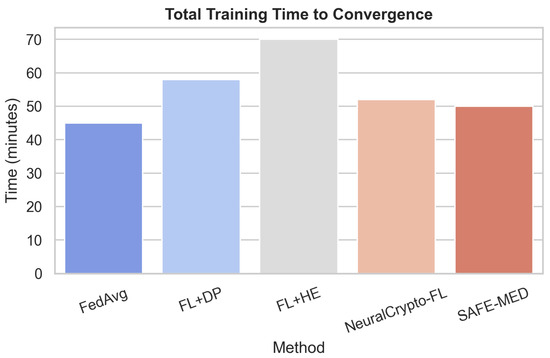

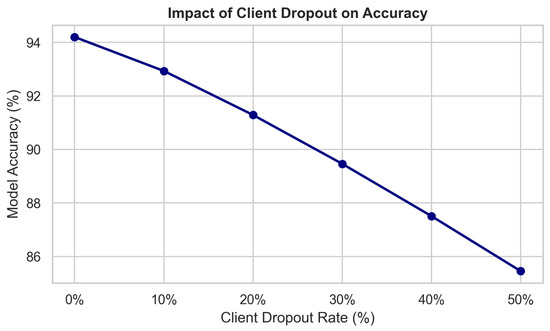

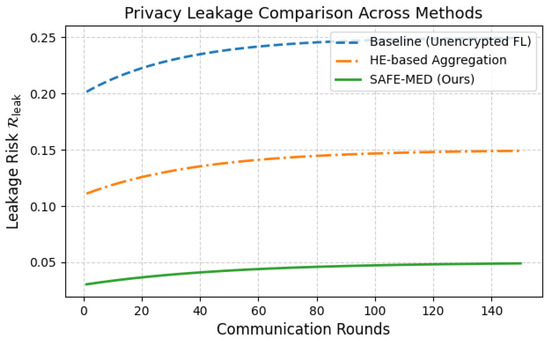

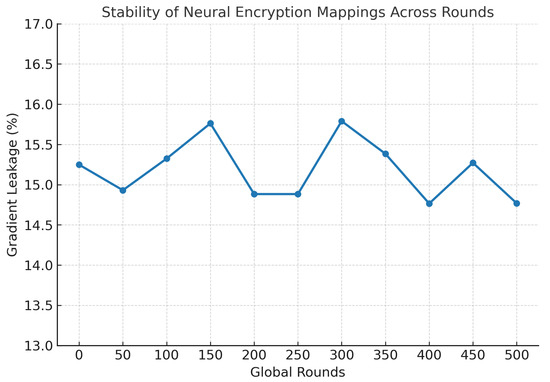

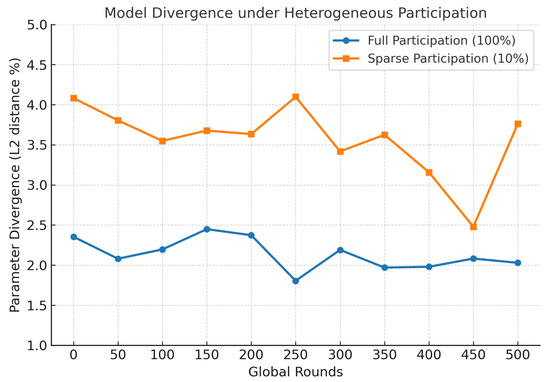

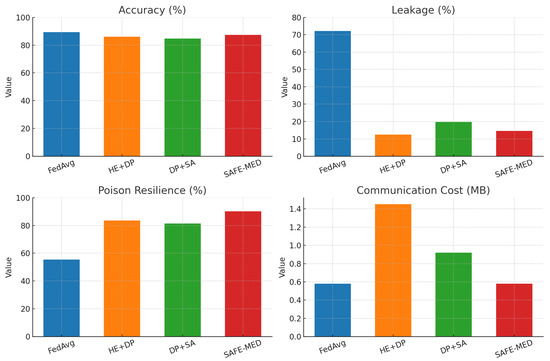

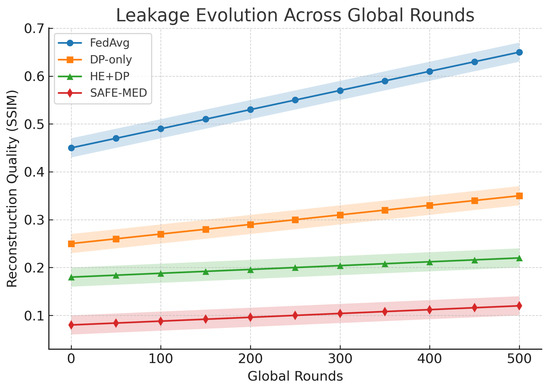

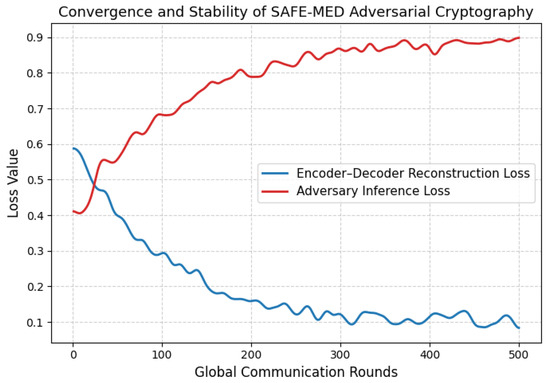

Federated learning (FL) offers a promising paradigm for distributed model training in Internet of Medical Things (IoMT) systems, where patient data privacy and device heterogeneity are critical concerns. However, conventional FL remains vulnerable to gradient leakage, model poisoning, and adversarial inference, particularly in privacy-sensitive and resource-constrained medical environments. To address these challenges, we propose SAFE-MED, a secure and adversarially robust framework for privacy-preserving FL tailored for IoMT deployments. SAFE-MED integrates neural encryption, adversarial co-training, anomaly-aware gradient filtering, and trust-weighted aggregation into a unified learning pipeline. The encryption and decryption components are jointly optimized with a simulated adversary under a minimax objective, ensuring high reconstruction fidelity while suppressing inference risk. To enhance robustness, the system dynamically adjusts client influence based on behavioral trust metrics and detects malicious updates using entropy-based anomaly scores. Comprehensive experiments are conducted on three representative medical datasets: Cleveland Heart Disease (tabular), MIT-BIH Arrhythmia (ECG time series), and PhysioNet Respiratory Signals. SAFE-MED achieves near-baseline accuracy with less than 2% degradation, while reducing gradient leakage by up to 85% compared to vanilla FedAvg and over 66% compared to recent neural cryptographic FL baselines. The framework maintains over 90% model accuracy under 20% poisoning attacks and reduces communication cost by 42% relative to homomorphic encryption-based methods. SAFE-MED demonstrates strong scalability, reliable convergence, and practical runtime efficiency across heterogeneous network conditions. These findings validate its potential as a secure, efficient, and deployable FL solution for next-generation medical AI applications.

Keywords:

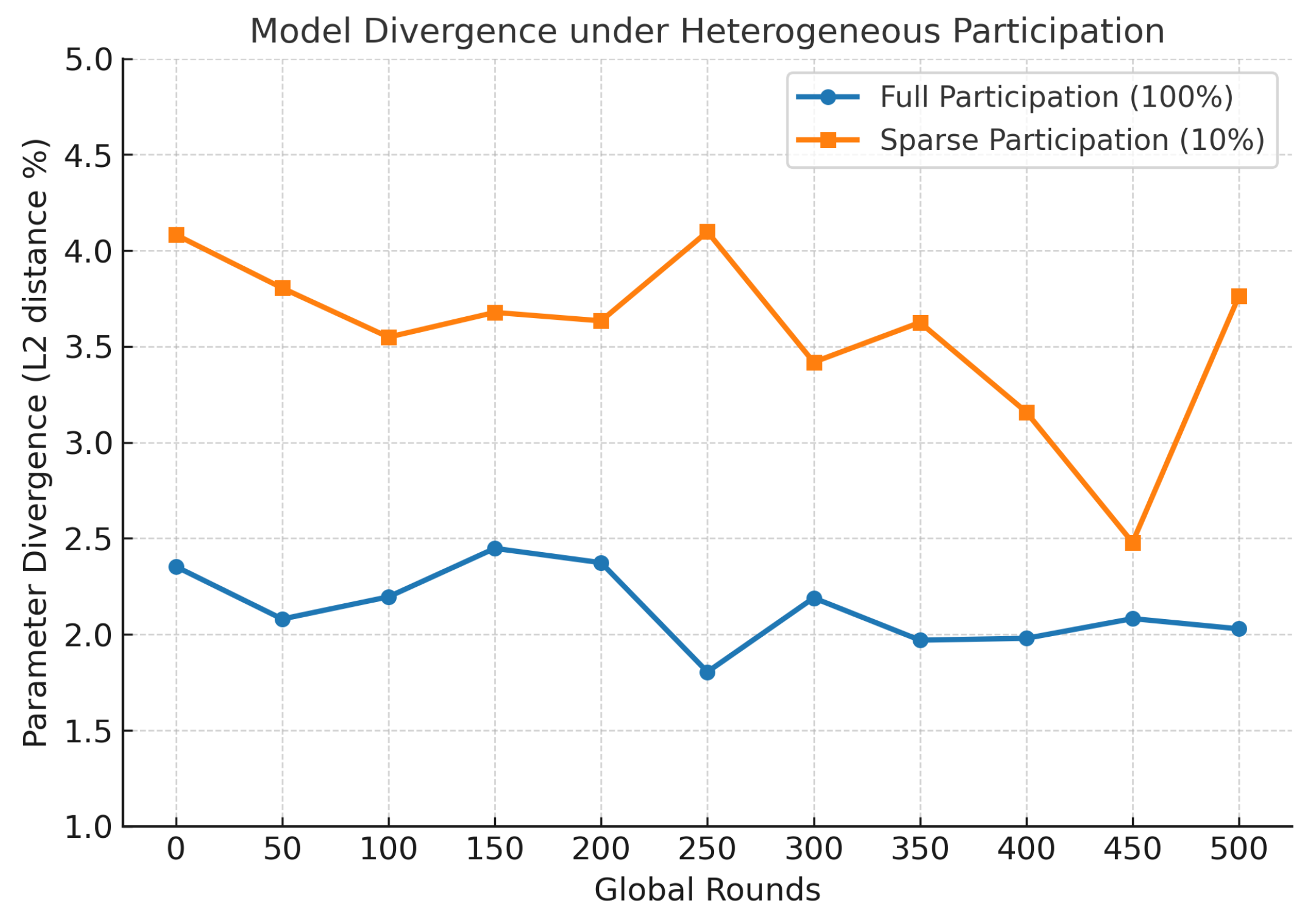

federated learning; Internet of Medical Things (IoMT); privacy preservation; neural cryptography; adversarial robustness; trust-based aggregation MSC:

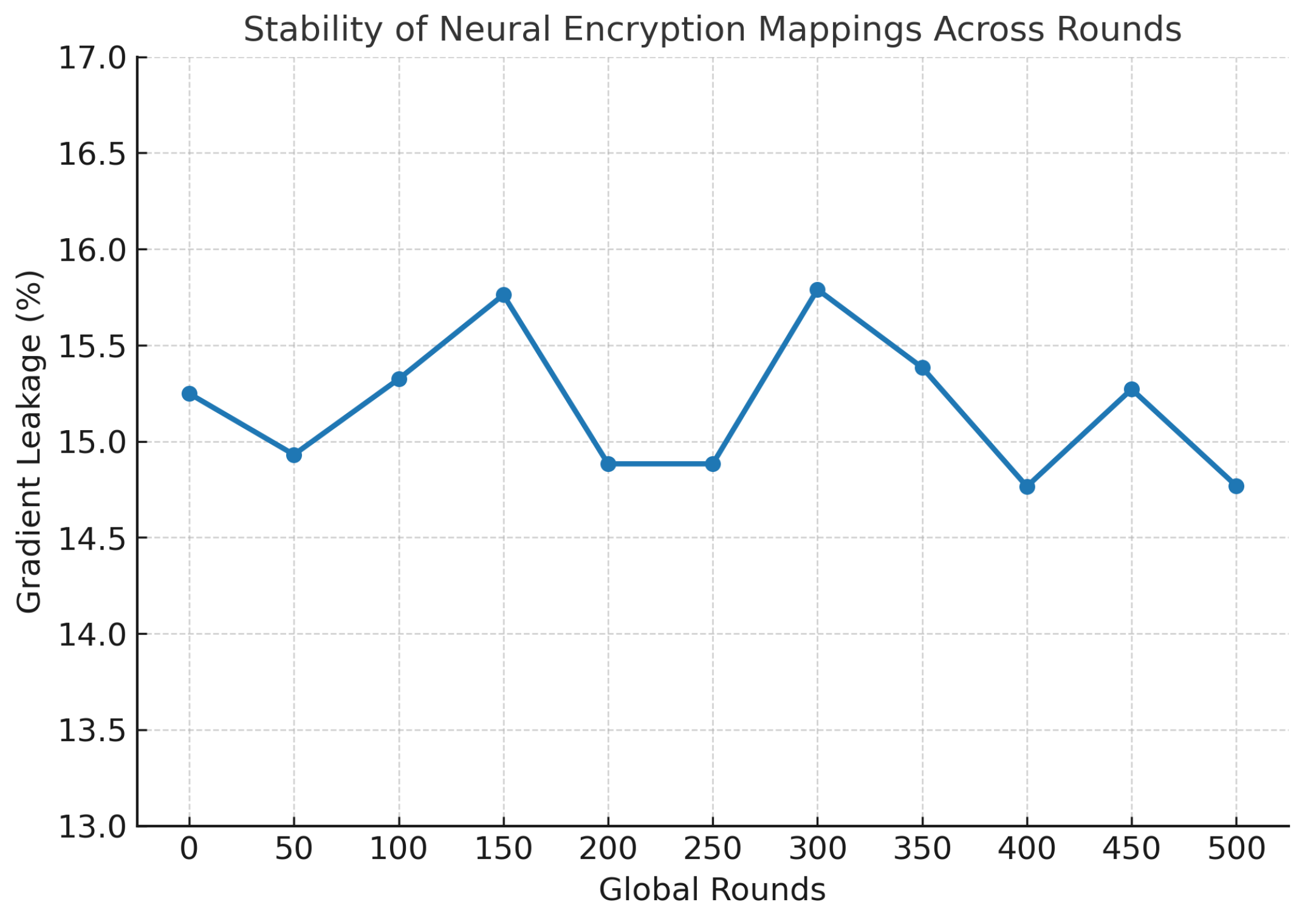

68M25; 68T07

1. Introduction

The rapid proliferation of Internet of Medical Things (IoMT) devices has led to a fundamental shift in how healthcare services are delivered, monitored, and managed. By integrating a variety of interconnected sensors, wearables, smart implants, and remote diagnostic systems, the IoMT ecosystem enables real-time monitoring and intelligent decision making [1]. This transformation is largely driven by the growing demand for personalized, remote, and intelligent healthcare solutions, particularly in the post-pandemic era, in which telemedicine, virtual care, and mobile diagnostics have gained significant traction [2]. Despite its potential, however, IoMT presents critical challenges. First, the highly sensitive nature of medical data demands stringent privacy preservation during model training and communication. Second, IoMT devices often operate under severe computational and energy constraints, limiting their ability to support complex security protocols. Third, the distributed nature of federated learning introduces new adversarial threats, such as gradient leakage, model inversion, and model poisoning; in the last of these, malicious clients manipulate gradient updates to degrade or hijack the global model [3]. Recent studies have demonstrated that gradients shared in federated learning systems can be exploited to reconstruct sensitive physiological signals, such as electrocardiograms (ECGs), through gradient inversion attacks [4]. These privacy violations pose serious risks to patient confidentiality, especially in remote diagnostic scenarios. Federated learning (FL) offers a decentralized alternative that aligns with data locality and privacy regulations in medical contexts. However, FL still suffers from critical vulnerabilities such as gradient leakage and poisoning, which are reviewed in Section 2 and addressed in this work.

To mitigate these risks, existing solutions have adopted secure aggregation, differential privacy (DP), and homomorphic encryption (HE) [5]. While secure aggregation prevents individual update inspection, it incurs high computational and communication overhead. Differential privacy introduces random noise to ensure statistical privacy, often at the cost of model accuracy, especially in healthcare applications where fidelity is critical. Homomorphic encryption enables encrypted computation but remains too resource-intensive for deployment on IoMT devices [6]. Moreover, these solutions do not inherently address adversarial threats wherein malicious actors may attempt to reconstruct sensitive features from gradients or inject adversarial perturbations to manipulate global model behavior.

To overcome these trade-offs, we propose SAFE-MED, a scalable federated learning framework for IoMT that leverages Adversarial Neural Cryptography (ANC) to dynamically encrypt gradient updates without fixed keys or static noise. Unlike traditional HE/DP or fixed-key approaches, ANC adapts to data distributions and adversarial threats while remaining lightweight [7]. SAFE-MED further integrates anomaly-aware validation and trust-weighted aggregation to defend against gradient leakage, model inversion, and poisoning attacks, achieving strong security guarantees with high model fidelity and communication efficiency across heterogeneous devices (see Section 4).

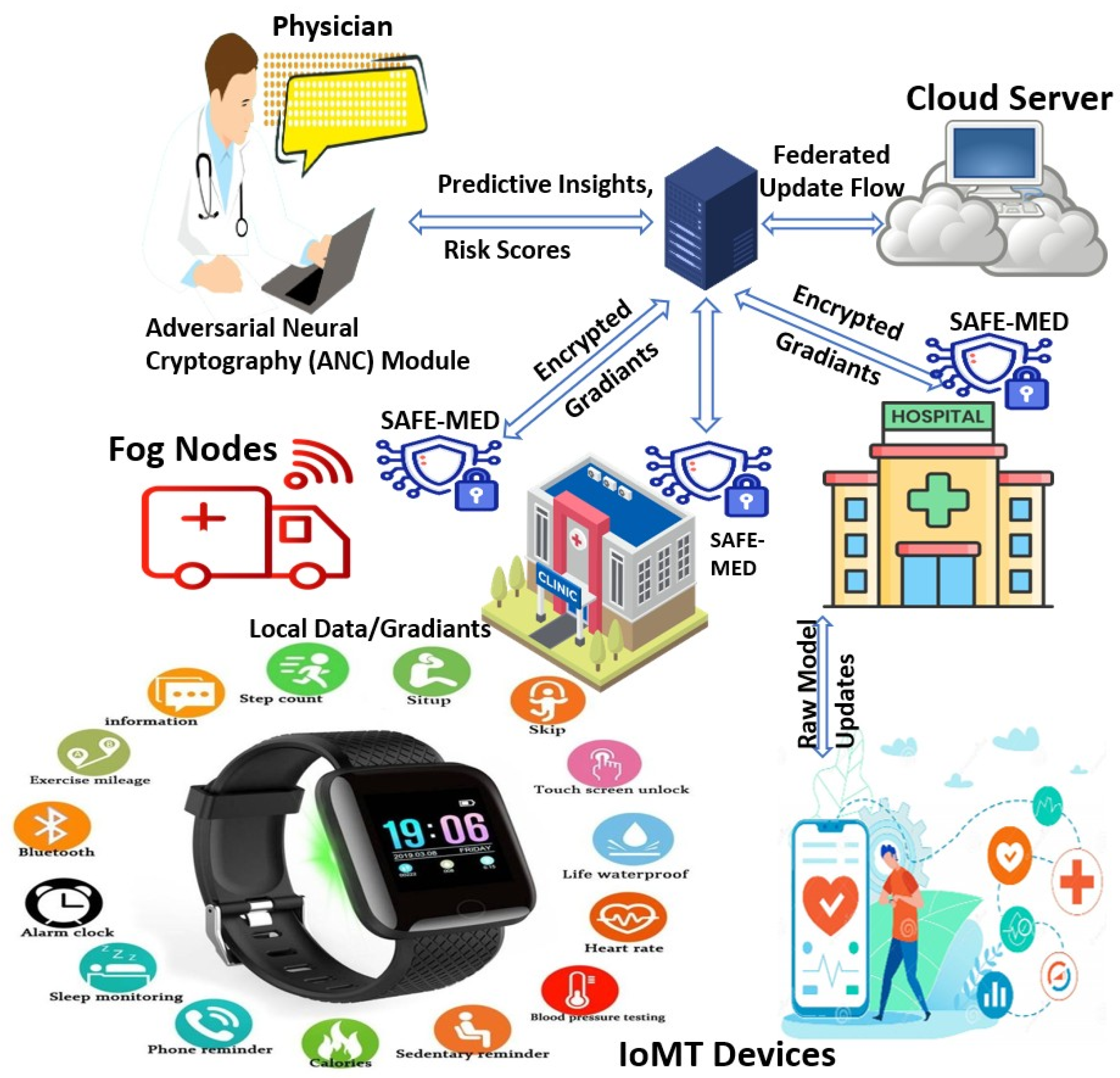

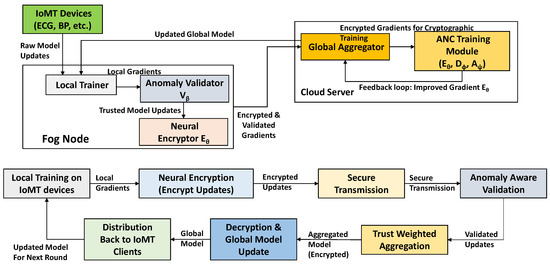

Figure 1 illustrates the overall architecture of the proposed SAFE-MED framework. The system is structured in a hierarchical manner, comprising IoMT devices, fog nodes, and a centralized cloud platform. Each fog node integrates a SAFE-MED module responsible for encrypting and validating gradient updates before forwarding them to the cloud.

Figure 1.

SAFE-MED system architecture.

To the best of our knowledge, SAFE-MED is the first framework to tightly couple adversarial neural cryptography with federated learning for privacy preservation in Internet of Medical Things environments. Unlike conventional secure aggregation or differential privacy methods, which either require substantial computational overhead or sacrifice utility for privacy, SAFE-MED introduces a trainable cryptographic architecture that learns encryption and decryption protocols through adversarial co-evolution. This provides both computational scalability and adaptability to diverse and dynamic IoMT conditions. Moreover, existing neural cryptography works have not been integrated into a federated learning pipeline, particularly in the healthcare domain. SAFE-MED uniquely bridges this gap by embedding neural cryptography directly into the federated update transmission stage, enabling real-time encryption of gradients in a lightweight, attack-resilient manner. Its capability to defend against both passive and active adversaries, while maintaining model accuracy and communication efficiency, sets it apart from existing privacy-preserving solutions.

Motivation

Even with advances in federated learning and privacy-preserving techniques, IoMT systems face unresolved challenges. Gradient updates remain vulnerable to leakage and inversion attacks, exposing sensitive patient data. Cryptographic methods such as homomorphic encryption and secure aggregation are often too resource-intensive for low-power IoMT devices, while differential privacy can severely reduce diagnostic accuracy. Moreover, conventional frameworks lack adversarial robustness, leaving them exposed to poisoning and manipulation attacks.

Existing methods thus fall short: secure aggregation adds heavy overhead, differential privacy introduces accuracy loss, and homomorphic encryption is impractical for real-time healthcare. Furthermore, none of these approaches is inherently resilient against adversarial behaviors such as model poisoning or gradient inversion.

This gap highlights the absence of a lightweight, adaptive, and adversarially robust framework that can ensure privacy preservation without compromising accuracy or efficiency in IoMT deployments. Our proposed SAFE-MED framework directly addresses this gap by embedding adversarial neural cryptography into the federated learning pipeline, combined with anomaly-aware gradient validation and trust-weighted aggregation, to deliver strong privacy, resilience, and scalability in real-world medical AI scenarios.

In response to the critical challenges of privacy leakage, resource heterogeneity, and adversarial resilience in federated IoMT systems, the key technical contributions of this work are as follows:

- We propose SAFE-MED, a novel federated learning framework that integrates adversarial neural cryptography for privacy preservation in IoMT.

- We design a lightweight, trainable tripartite architecture (encryptor, decryptor, adversary) that eliminates fixed-key cryptographic assumptions.

- We introduce anomaly-aware gradient validation and differential compression to detect malicious clients and reduce communication overhead.

- We conduct comprehensive experiments on real-world medical datasets under adversarial conditions, demonstrating significant improvements in privacy, robustness, and efficiency over state-of-the-art FL methods.

The remainder of the paper is organized as follows. Section 2 presents background knowledge and related work in federated learning, privacy-preserving techniques, and neural cryptography in the context of IoMT. Section 3 describes the overall system architecture, threat model, and design goals of the SAFE-MED framework. Section 4 details the proposed adversarial neural encryption mechanism and its integration into the federated learning pipeline. Section 5 outlines the implementation specifics, including model training, client orchestration, and experimental evaluations across multiple datasets and adversarial scenarios. Finally, Section 6 concludes the paper, discussing its limitations and future directions.

2. Related Work

The integration of Artificial Intelligence (AI) into healthcare has accelerated with the advancement of IoMT infrastructures [8]. These systems enable distributed sensing and decision making, but their privacy-sensitive nature demands robust privacy-preserving learning frameworks [9]. Federated learning (FL) has emerged as a promising approach for decentralized AI training in medical environments, driving research into secure and efficient architectures [10]. This section reviews related work across five themes: FL in IoMT, secure aggregation, cryptographic privacy mechanisms, adversarial resilience, and neural cryptography, and highlights how SAFE-MED advances beyond prior approaches.

Federated learning protects patient privacy by keeping sensitive data on local devices [11]. The original Federated Averaging algorithm [12] reduced data exposure but assumed stable connectivity and benign clients, conditions rarely met in IoMT. Extensions such as FedHealth [13] and FedIoT [14] adapted FL to health and biomedical contexts. FedHealth introduced personalization through global–local model fusion but lacked defenses against privacy leakage, while FedIoT optimized client selection and resource scheduling without cryptographic guarantees.

Secure aggregation protocols are central to protecting gradient updates in federated learning [15]. The widely used scheme by Bonawitz et al. [16] combines pairwise additive masking with threshold secret sharing to compute secure sums, but imposes high computation and communication costs, making it unsuitable for resource-limited IoMT devices. Alternatives such as SEAL-PSI and Prio [17] reduce complexity via polynomial approximations or secure multiparty computation, yet remain fragile and hardware-dependent. Differential privacy (DP) has also been applied in federated learning to provide formal guarantees [18]: client-level DP [20] adds Gaussian noise to gradients, while the moments accountant method [21] improves privacy loss tracking. Recent work has explored multi-objective optimization for secure and efficient IoMT data aggregation [19], but such approaches do not explicitly address privacy leakage or adversarial robustness, which SAFE-MED aims to overcome. Similar to recent efforts in communication systems that integrate optimization with enhanced security guarantees [22], our work emphasizes the importance of jointly addressing efficiency and privacy in IoMT environments.

Adversarial attacks pose major risks to federated learning, including poisoning (malicious gradient injection) and inference attacks such as model inversion and gradient leakage. Prior studies [23,24] showed that backdoor and model replacement attacks can compromise FL systems. Defenses such as Krum [25] and Trimmed Mean [26] filter outliers but fail against stealthy or colluding adversaries, while FLTrust [27] relies on a clean root dataset held by the server, which is impractical in IoMT. SAFE-MED takes a different approach: it encrypts updates before transmission to block inference even by a curious aggregator, and it validates and trust-weights updates to limit corruption by compromised clients.

Neural cryptography trains neural networks to learn encryption protocols through adversarial training [28]. Abadi et al. [29] first demonstrated secure neural message exchange, with later extensions applied to images and sequential data. However, most works remain theoretical and neglect real-world issues such as heterogeneity, latency, and bandwidth in IoMT [30].

For hybrid baselines, we followed the configurations reported in prior work. Homomorphic encryption (HE) combined with differential privacy (DP) has been shown to provide strong leakage reduction but typically incurs high computational and communication overheads, particularly in IoT settings [31,32,33]. Lightweight Paillier-based HE introduces ciphertext expansion of up to 2–3×, which is consistent with our observed communication cost of 1.45 MB per round. Similarly, combining differential privacy with secure aggregation (DP + SA) [16,34] reduces leakage but leads to utility loss under non-IID conditions due to compounded DP noise. The accuracy degradation (2–5%) and moderate poisoning resilience (80–82%) observed in our experiments are in line with these prior studies. To ensure fairness, all baselines were re-implemented under identical IoMT settings, and the comparative outcomes confirm that SAFE-MED achieves a more favorable privacy–utility–efficiency trade-off.

SAFE-MED also addresses scalability and heterogeneity challenges in federated learning, where devices vary in capability, connectivity, and participation. Methods such as FedAsync [35], FedProx [36], and SCAFFOLD [37] mitigate asynchrony and non-IID effects through gradient correction and adaptive aggregation, but these approaches assume plaintext communication, leaving them exposed to eavesdropping and gradient-matching attacks. Communication-efficient schemes based on sparsification, quantization, or sketching [38] reduce transmission costs but often degrade accuracy or neglect privacy. In contrast, SAFE-MED combines differential gradient compression with neural encryption, thereby reducing communication overhead while preserving security in both cross-device and cross-silo settings.

Homomorphic encryption (HE) enables computations on encrypted data [39] and has been incorporated in frameworks such as CryptoNet [40], HEFL [41], and FHE-FL [42]. While these approaches provide strong privacy guarantees, they incur high computational latency, memory overhead, and complex key management, making them impractical for real-time IoMT. Fully homomorphic schemes are particularly constrained by their incompatibility with nonlinear operations, requiring costly approximations or retraining.

Trusted Execution Environments (TEEs) such as Intel SGX, ARM TrustZone, and AMD SEV can secure FL computations by isolating sensitive data within hardware enclaves [43]. TEE-based FL solutions [44] protect gradients during aggregation, but their reliance on specialized hardware restricts deployment across heterogeneous IoMT infrastructures. Furthermore, TEEs remain vulnerable to side-channel and fault-injection attacks (e.g., Foreshadow, Plundervolt, MicroScope) and cannot protect updates during transmission. SAFE-MED addresses these challenges by employing end-to-end neural encryption, ensuring updates remain protected from device to aggregator. Unlike TEEs, it is fully software-defined, platform-agnostic, and free from hardware trust dependencies [45].

Resource constraints in IoMT devices make computational efficiency a critical requirement. Lightweight FL efforts such as FedLite [46], FedML [47], and LIFL [48] leverage pruning, quantization, or sparse encoding to reduce overhead. While these approaches improve efficiency, they provide limited or no intrinsic privacy guarantees. Hybrid defenses that combine multiple mechanisms have also been proposed, including DP-AggrFL, which integrates differential privacy with secure aggregation, and HyFed [49], which employs homomorphic encryption alongside robust aggregation. While these hybrid schemes offer composite protection, their complexity scales poorly with client heterogeneity and larger participant populations [50]. In addition, the authors of [51] proposed a privacy-preserving federated learning framework for IoMT-driven big data analytics using edge computing, reflecting growing interest in efficient, decentralized healthcare data processing.

Practical IoMT deployments must handle client churn, packet loss, noisy data, and asynchrony. While adaptive FL frameworks such as FedAdapt [52] and FedDyn [53] address variability, they lack intrinsic privacy and adversarial resilience. SAFE-MED overcomes these gaps through a decentralized orchestration layer that dynamically adapts synchronization while embedding adversarially trained neural encryption to defend against gradient trajectory and inversion attacks [54]. This ensures secure operation even in resource-limited settings, as summarized in Table 1, which contrasts SAFE-MED with state-of-the-art privacy-preserving FL approaches and highlights its unique suitability for IoMT.

Table 1.

Comparison of state-of-the-art privacy-preserving federated learning approaches with SAFE-MED.

SAFE-MED’s security evaluation distinguishes it from prior work. While most studies test defenses only against limited adversaries or in controlled settings [55], SAFE-MED provides both theoretical and empirical validation. It frames adversarial learning as a constrained minimax optimization and evaluates robustness via mutual information, adversarial accuracy, and reconstruction fidelity under gradient matching, membership inference, and poisoning attacks in realistic IoMT conditions. Overall, SAFE-MED introduces a lightweight, trainable encryption framework embedded in the learning pipeline. Its modular, hardware-agnostic design resists both passive and active threats, enabling secure and scalable healthcare AI deployments.

3. Problem Formulation

To support privacy-preserving federated learning in dynamic and resource-constrained IoMT environments, this section formalizes the underlying problem setting. We define the system architecture, threat assumptions, and design goals that shape the SAFE-MED framework. Unlike conventional formulations that assume idealized conditions, we consider real-world scenarios characterized by intermittent connectivity, heterogeneous computation, and exposure to both passive and active adversaries. The formulation aims to develop a secure and adaptive learning framework that minimizes information leakage, communication overhead, and performance degradation. It captures interactions across the client, fog, and cloud tiers and quantifies trade-offs among privacy, accuracy, and efficiency under practical deployment conditions.

3.1. Network Scenario

We consider a large-scale, heterogeneous IoMT environment comprising a dynamically changing set of medical edge devices denoted by $\mathcal{N} = \{1, 2, \ldots, N\}$. Each device $i \in \mathcal{N}$ represents a resource-constrained medical sensor, wearable monitor, implantable device, or mobile diagnostic tool operating under varying computational, energy, and connectivity constraints. Devices are grouped into logical clusters corresponding to edge gateways or regional health hubs, enabling decentralized orchestration. The connectivity between devices and the cloud aggregator is modeled as a time-varying directed graph $\mathcal{G}(t) = (\mathcal{V}, \mathcal{E}(t))$, where $\mathcal{E}(t)$ represents the communication links at time t, influenced by wireless bandwidth, latency, and energy constraints.

The federated learning process proceeds asynchronously over non-IID local datasets $\mathcal{D}_i$ residing on each device $i \in \mathcal{N}$. Clients may join or leave at any round t based on availability, denoted by a binary participation function $a_i^t \in \{0, 1\}$. The system incorporates adversarial elements, including passive eavesdroppers attempting gradient inversion and active adversaries injecting poisoned updates. These threats are modeled within a threat set $\mathcal{A}$, encompassing both internal compromised devices and external network-level adversaries.

3.2. System Model

We consider a three-tier architecture comprising a set of resource-constrained IoMT devices $\mathcal{N}$, fog nodes $\mathcal{F} = \{1, 2, \ldots, M\}$, and a centralized cloud aggregator $\mathcal{S}$. Each device $i \in \mathcal{N}$ participates in local model training using its private dataset $\mathcal{D}_i$ and periodically communicates encrypted gradient updates to an associated fog node $f(i) \in \mathcal{F}$, which then transmits aggregated representations to the cloud node $\mathcal{S}$.

Let T denote the total number of training rounds. At each round $t \in \{1, \ldots, T\}$, a subset $\mathcal{P}_t \subseteq \mathcal{N}$ of devices is randomly selected for participation. The participation of device $i \in \mathcal{N}$ is modeled by a Bernoulli random variable $a_i^t$ with success probability $\pi_i$:

$$a_i^t \sim \mathrm{Bernoulli}(\pi_i), \qquad \Pr\big[i \in \mathcal{P}_t\big] = \Pr\big[a_i^t = 1\big] = \pi_i,$$

where $\mathcal{P}_t$ denotes the set of clients selected at round t, and $\pi_i$ represents the participation probability of client i. Each client selection follows a Bernoulli distribution with parameter $\pi_i$, ensuring that the probability definition is consistent with the stochastic sampling process used in federated rounds.
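The Bernoulli participation model can be simulated in a few lines. This is a minimal sketch, assuming independent per-client draws in every round; `sample_participants` and the list `probs` of per-client participation probabilities are hypothetical names, not part of SAFE-MED.

```python
import random


def sample_participants(probs, rng):
    """Return the indices i whose Bernoulli(probs[i]) draw succeeds this round."""
    selected = []
    for i, p in enumerate(probs):
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"participation probability out of range: {p}")
        # rng.random() is uniform on [0, 1), so the event rng.random() < p has probability p
        if rng.random() < p:
            selected.append(i)
    return selected


# Usage: five clients with different availability, simulated for one round.
rng = random.Random(42)
round_clients = sample_participants([0.9, 0.5, 0.1, 1.0, 0.0], rng)
```

Because the draws are independent, the expected number of participants per round is simply the sum of the participation probabilities.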

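Each participating device $i \in \mathcal{P}_t$ trains a local model on its private data $\mathcal{D}_i$ by minimizing a local empirical risk function:

$$F_i(w) = \frac{1}{|\mathcal{D}_i|} \sum_{(x, y) \in \mathcal{D}_i} \ell\big(f(x; w), y\big),$$

where $\ell(\cdot, \cdot)$ is a supervised loss function (e.g., cross-entropy), and $f(\cdot\,; w)$ is the model parameterized by weights $w$.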
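As a concrete instance of the local empirical risk, the sketch below assumes a binary logistic-regression model f(x; w) = sigmoid(w·x) with binary cross-entropy as the loss. It returns both the average risk over the client's samples and its gradient with respect to w, which is the local gradient defined next. The model choice and function names are illustrative assumptions.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def local_risk_and_grad(w, data, eps=1e-12):
    """Average binary cross-entropy over data = [(x, y), ...] and its gradient w.r.t. w."""
    if not data:
        raise ValueError("client has no local samples")
    risk = 0.0
    grad = [0.0] * len(w)
    for x, y in data:
        prob = sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
        # eps guards against log(0) when the model is (over)confident
        risk -= y * math.log(prob + eps) + (1 - y) * math.log(1.0 - prob + eps)
        # for a sigmoid output, d(cross-entropy)/dw = (prob - y) * x
        for j, xj in enumerate(x):
            grad[j] += (prob - y) * xj
    n = len(data)
    return risk / n, [gj / n for gj in grad]


# Usage: two samples; the first feature acts as a constant bias term.
risk, grad = local_risk_and_grad([0.0, 0.0], [([1.0, 2.0], 1), ([1.0, -1.0], 0)])
```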

The local gradient of client i at round t is computed as

$$g_i^t = \nabla_w F_i(w^t) \in \mathbb{R}^p,$$

where $F_i$ denotes the client’s local loss function and p is the gradient dimension. This gradient is then passed to the neural encryption module

$$c_i^t = E_{\theta_E}(g_i^t),$$

where $E_{\theta_E}$ is a learnable encryption function parameterized by neural weights $\theta_E$. Finally, a differential compression operator $\mathcal{C}_r(\cdot)$ reduces communication overhead:

$$\tilde{c}_i^t = \mathcal{C}_r(c_i^t),$$

where $r \in (0, 1]$ denotes the compression ratio.

The encryption process is implemented using a triplet neural architecture consisting of an encryptor $E_{\theta_E}$, a decryptor $D_{\theta_D}$, and an adversary $A_{\theta_A}$. Let $g \in \mathbb{R}^p$ denote the original gradient vector computed locally by a client. The encryptor produces an encrypted gradient update $c = E_{\theta_E}(g)$, the decryptor attempts to reconstruct $g$ from $c$, and the adversary attempts to infer sensitive information from $c$.

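The training objective of this adversarial mechanism is formulated as a minimax game:

$$\min_{\theta_E, \theta_D} \max_{\theta_A} \; \mathbb{E}_{g \sim \mathcal{P}_g}\Big[ \big\| g - D_{\theta_D}\big(E_{\theta_E}(g)\big) \big\|_2^2 - \lambda \, \big\| g - A_{\theta_A}\big(E_{\theta_E}(g)\big) \big\|_2^2 \Big],$$

where $\mathcal{P}_g$ is the distribution of gradient vectors and $\lambda > 0$ is a regularization coefficient that balances reconstruction accuracy against adversarial resistance. Because the adversary’s error enters with a negative sign, the maximization over $\theta_A$ drives the adversary to minimize its own reconstruction error, while the encryptor is pushed to make that error large. The $\ell_2$ norm is used to measure both reconstruction fidelity and adversarial leakage. In practice, $\lambda$ typically lies within the range , depending on the assumed adversarial strength.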
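To make the sign convention concrete, the sketch below evaluates an empirical version of the minimax objective on a batch of gradients, assuming squared ℓ2 errors for both terms, i.e. the batch mean of ‖g − D(E(g))‖² − λ‖g − A(E(g))‖². It does not train the three networks: the decryptor and adversary outputs are passed in as plain lists, and all names are hypothetical.

```python
def sq_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("dimension mismatch")
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def minimax_objective(batch, lam):
    """Empirical objective over batch = [(g, g_dec, g_adv), ...].

    The encryptor/decryptor pair is trained to MINIMISE this value: small
    reconstruction error for the decryptor and large error for the adversary.
    The adversary is trained to MAXIMISE it, which is the same as minimising
    its own reconstruction error, because that error enters with weight -lam.
    """
    if not batch:
        raise ValueError("empty batch")
    total = 0.0
    for g, g_dec, g_adv in batch:
        total += sq_l2(g, g_dec) - lam * sq_l2(g, g_adv)
    return total / len(batch)


# Usage: perfect decryption, adversary guessing all zeros.
value = minimax_objective([([1.0, 2.0], [1.0, 2.0], [0.0, 0.0])], lam=0.5)
```

A more accurate adversary raises the objective, which is exactly what the maximization over the adversary's parameters rewards.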

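To account for bandwidth limitations, SAFE-MED applies a gradient compression function $\mathcal{C}_r(\cdot)$. Given an encrypted gradient update $c_i^t$, the compressed representation is

$$\tilde{c}_i^t = \mathcal{C}_r(c_i^t),$$

where $r$ controls the compression ratio

$$r = \frac{\|\tilde{c}_i^t\|_0}{p},$$

with $\|\cdot\|_0$ denoting the number of nonzero elements.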
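The compression ratio can be illustrated with top-k sparsification, the operator this section later names for SAFE-MED's differential compression, applied here without noise. A minimal sketch with hypothetical function names, which keeps the k largest-magnitude entries and then measures r as the fraction of nonzero entries:

```python
def top_k(vec, k):
    """Keep the k largest-magnitude entries of vec; zero out the rest."""
    if not 0 <= k <= len(vec):
        raise ValueError("k must lie in [0, len(vec)]")
    keep = set(sorted(range(len(vec)), key=lambda j: abs(vec[j]), reverse=True)[:k])
    return [v if j in keep else 0.0 for j, v in enumerate(vec)]


def compression_ratio(compressed):
    """r = ||c||_0 / p, the fraction of nonzero entries that must be transmitted."""
    nonzero = sum(1 for v in compressed if v != 0.0)
    return nonzero / len(compressed)


# Usage: keep 2 of 6 coordinates.
c = [0.1, -3.0, 0.5, 2.0, 0.0, -0.2]
c_sparse = top_k(c, 2)
r = compression_ratio(c_sparse)
```

Measuring r through the count of nonzero entries, as the definition does, can give a value below k/p when some retained coordinates happen to be exactly zero.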

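The total communication overhead in each training round is then expressed as

$$\mathcal{O}^t = \sum_{i \in \mathcal{P}_t} \kappa_i \, \big\|\tilde{c}_i^t\big\|_2,$$

where $\kappa_i$ is a link cost coefficient that reflects the bandwidth and latency characteristics of device $i$, and $\|\cdot\|_2$ is the Euclidean norm.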
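The per-round overhead expression can be transcribed directly. The sketch below is a minimal illustration in which the dictionaries and the name `round_overhead` are hypothetical; it uses the Euclidean norm exactly as the expression states.

```python
import math


def round_overhead(updates, link_costs):
    """Sum over participating clients of link_costs[i] * ||c_i||_2.

    updates: {client_id: compressed encrypted update as a list of floats}
    link_costs: {client_id: nonnegative link cost coefficient}
    """
    total = 0.0
    for cid, vec in updates.items():
        # math.hypot with several arguments returns the Euclidean norm (Python 3.8+)
        total += link_costs[cid] * math.hypot(*vec)
    return total


# Usage: two clients with different link qualities.
overhead = round_overhead({0: [3.0, 4.0], 1: [0.0, 0.0, 1.0]}, {0: 0.5, 1: 2.0})
```

If the aim were to count transmitted bytes rather than a norm-based cost, the number of nonzero entries (plus their index overhead) would be the natural quantity instead.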

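Fog nodes validate client updates using a statistical anomaly detection function $\phi(\cdot)$, which evaluates the consistency of received compressed encrypted gradient updates before aggregation:

$$s_i^t = \phi\big(\tilde{c}_i^t\big) = \big\| \tilde{c}_i^t - \bar{c}^t \big\|_2, \qquad \bar{c}^t = \frac{1}{|\mathcal{P}_t|} \sum_{j \in \mathcal{P}_t} \tilde{c}_j^t,$$

where $\tilde{c}_i^t$ is the compressed encrypted gradient update of client $i$, $\bar{c}^t$ denotes the round-wise mean update, and $s_i^t$ is a scalar outlier score measuring the divergence of client $i$’s update from this mean. The threshold $\tau$ determines whether an update is flagged as anomalous ($s_i^t > \tau$) and excluded from aggregation.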
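The fog-level check can be sketched as follows, assuming that the outlier score is the Euclidean distance from the round-wise mean and that the threshold is fixed; both the scoring function and the threshold value are assumptions, and `validate_round` is a hypothetical name.

```python
import math


def validate_round(updates, tau):
    """Flag updates whose distance from the round-wise mean exceeds tau.

    updates: {client_id: compressed encrypted update (list of floats)}
    Returns (accepted_ids, flagged_ids, scores).
    """
    if not updates:
        return [], [], {}
    ids = list(updates)
    dim = len(updates[ids[0]])
    mean = [sum(updates[i][j] for i in ids) / len(ids) for j in range(dim)]
    # math.dist computes the Euclidean distance between two points (Python 3.8+)
    scores = {i: math.dist(updates[i], mean) for i in ids}
    accepted = [i for i in ids if scores[i] <= tau]
    flagged = [i for i in ids if scores[i] > tau]
    return accepted, flagged, scores


# Usage: three similar clients and one large outlier.
acc, flg, sc = validate_round(
    {0: [1.0, 1.0], 1: [1.1, 0.9], 2: [0.9, 1.1], 3: [10.0, -10.0]}, tau=5.0
)
```

The outlier also pulls the mean toward itself (here every benign score ends up above 3), which is why a coordinate-wise median is a common, more robust choice of reference point.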

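Valid encrypted updates are aggregated at the cloud through weighted averaging of the decrypted gradients:

$$w^{t+1} = w^t - \eta \sum_{i \in \mathcal{R}_t} \omega_i^t \, D_{\theta_D}\big(\tilde{c}_i^t\big), \qquad \sum_{i \in \mathcal{R}_t} \omega_i^t = 1,$$

where $w^t$ denotes the global model parameters at round t, $\eta$ is the global learning rate, and $\omega_i^t$ is the weighting coefficient for client i, typically proportional to its local dataset size $|\mathcal{D}_i|$ or an assigned trust score and normalized over the accepted clients. The set $\mathcal{R}_t \subseteq \mathcal{P}_t$ contains the subset of client updates that successfully pass fog-level anomaly validation. The decryptor $D_{\theta_D}$ maps each compressed encrypted update back into gradient space before aggregation.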
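The aggregation step can be sketched as below, assuming that the decryptor has already mapped each accepted update back to gradient space and that the weighting coefficients are normalized over the accepted clients only. Names such as `aggregate` and `trust` are hypothetical.

```python
def aggregate(w, decrypted, trust, eta):
    """One global step: w <- w - eta * sum_i omega_i * g_i.

    decrypted: {client_id: decrypted gradient} for clients that passed validation
    trust: {client_id: nonnegative raw weight, e.g. dataset size or trust score}
    Weights are renormalised over the accepted clients so that they sum to one.
    """
    total = sum(trust[i] for i in decrypted)
    if total <= 0:
        raise ValueError("accepted clients must have positive total weight")
    step = [0.0] * len(w)
    for i, g in decrypted.items():
        omega = trust[i] / total
        for j, gj in enumerate(g):
            step[j] += omega * gj
    return [wj - eta * sj for wj, sj in zip(w, step)]


# Usage: client 1 was rejected upstream, so its large trust value is ignored.
new_w = aggregate([1.0, 1.0], {0: [1.0, 0.0], 2: [0.0, 1.0]}, {0: 3.0, 1: 100.0, 2: 1.0}, eta=0.1)
```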

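The joint optimization objective for SAFE-MED is formulated as a multi-objective minimization problem:

$$\min_{w,\, \theta_E,\, \theta_D} \; \mathcal{L}_{\mathrm{global}}(w) + \alpha \, \mathbb{E}_t\big[\mathcal{O}^t\big] + \beta \, \mathcal{L}_{\mathrm{leak}}(\theta_E), \qquad \mathcal{L}_{\mathrm{leak}}(\theta_E) = -\,\mathbb{E}_{g \sim \mathcal{P}_g}\Big[\big\| g - A_{\theta_A}\big(E_{\theta_E}(g)\big) \big\|_2^2\Big],$$

where $g$ denotes a generic local gradient vector. The three components are defined as follows:

- $\mathcal{L}_{\mathrm{global}}(w) = \sum_{i \in \mathcal{N}} F_i(w)$ is the cumulative loss of the global model across all clients.

- $\mathbb{E}_t[\mathcal{O}^t]$ captures the expected communication overhead per training round t.

- $\mathcal{L}_{\mathrm{leak}}$ quantifies adversarial leakage through the reconstruction error between the original gradient $g$ and the adversary’s estimate $A_{\theta_A}(c)$ from the encrypted gradient update $c = E_{\theta_E}(g)$; because this error enters with a negative sign, minimizing $\mathcal{L}_{\mathrm{leak}}$ drives the adversary’s reconstruction error up.

The scalar weights $\alpha$ and $\beta$ are tunable hyperparameters that control the trade-off between communication efficiency and privacy preservation, allowing SAFE-MED to adapt to different system and security requirements.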

The number of local samples $|\mathcal{D}_i|$ typically ranges from to depending on device type. Gradient vectors belong to $\mathbb{R}^p$, with p in the range of to , depending on the model. Compression ratios r are set between to to preserve gradient semantics under resource constraints. Encryption model parameters $(\theta_E, \theta_D)$ are shared across devices or fog nodes depending on the training strategy, which may involve either centralized pretraining or federated co-evolution. Trust coefficients $\omega_i^t$ may be adapted using past behavior or anomaly scores $s_i^t$. The adversarial strength $\lambda$ and regularization weights $\alpha$ and $\beta$ are empirically tuned to balance model fidelity, communication efficiency, and leakage minimization. These system-level parameters define the operational context in which privacy-preserving learning must occur, thereby directly informing the adversarial assumptions and threat models considered in the SAFE-MED framework.

3.3. Threat Modeling

In the context of IoMT-driven federated learning, threat modeling requires careful characterization of adversarial capabilities and attack surfaces due to the sensitive nature of data, the heterogeneity of devices, and the decentralized nature of learning. The SAFE-MED framework is designed to operate under a rigorous adversarial model that accounts for both passive and active threats while considering the computational and bandwidth constraints typical of IoMT environments.

We assume an honest-but-curious cloud aggregator that correctly follows the federated protocol but may attempt to infer private information from received encrypted gradients. Additionally, we model the presence of Byzantine adversaries among the participating clients. These clients may inject malicious updates or manipulate gradient values with the objective of either corrupting the global model or extracting sensitive information from other participants. The threat model also considers gradient-based inference attacks, including model inversion, membership inference, and gradient leakage, which aim to reconstruct training data or identify specific user attributes from shared gradient updates. The SAFE-MED system is built to defend against these threats while preserving system efficiency and scalability.

3.3.1. Adversary Types and Assumption Boundaries

To provide a rigorous foundation for the security analysis, we formalize the adversary types and their capabilities as follows:

1. Passive Adversary (Honest-but-Curious): An entity, such as the cloud aggregator, that correctly follows the federated protocol but attempts to infer sensitive information by analyzing received ciphertext gradients. The passive adversary has access only to encrypted updates and is not permitted access to plaintext gradients.

2. Active Adversary (Byzantine Client): A malicious client that may arbitrarily manipulate or craft its model updates, with the objective of degrading global model performance (poisoning) or extracting information through inference. The active adversary cannot directly compromise the encryption module but may attempt to exploit statistical patterns.

3. Adaptive Adversary: An adversary that evolves its strategy across training rounds by observing ciphertext distributions and outcomes. Adaptive adversaries do not have access to encryption/decryption parameters but can attempt gradient reconstruction attacks over time.

4. Insider Adversary (Malicious Fog Node): A compromised fog node may access subsets of encrypted updates but is prevented from observing plaintext gradients by the neural encryption mechanism. SAFE-MED mitigates this risk via trust-weighted aggregation and anomaly detection at both the fog and cloud layers.

5. Coordination Assumption: We assume that up to 20% of clients may collude maliciously, but that the fog nodes and the cloud aggregator are never all compromised simultaneously. This boundary sets the scope of the threat model with respect to collusion and coordination.

To prevent long-term pattern leakage, SAFE-MED incorporates randomized nonces and stochastic gradient perturbations so that encrypted gradients remain unpredictable across rounds, even when the underlying updates are identical. This is intended to thwart adaptive adversarial inference while preserving stable mappings for the legitimate decryptor. Beyond adversarial threats, SAFE-MED also addresses divergence from heterogeneous participation by applying trust-weighted aggregation and fog-level clustering, thereby limiting the impact of biased or anomalous clients on the global model.

The adversarial objective in neural cryptographic systems is mathematically captured through a minimax optimization framework. Consider a client i that generates a local gradient update $g_i^t$ at round t. The SAFE-MED framework employs three neural modules: an encryptor $E$, a decryptor $D$, and an adversary $A$, parameterized by weights $\theta_E$, $\theta_D$, and $\theta_A$, respectively. The encryptor transforms the raw gradient into an encrypted gradient update

$$c_i^t = E_{\theta_E}(g_i^t),$$

which is then transmitted to the aggregator. The decryptor attempts to reconstruct the original gradient

$$\hat{g}_i^t = D_{\theta_D}(c_i^t),$$

while the adversary simultaneously attempts to infer the same gradient

$$\tilde{g}_i^t = A_{\theta_A}(c_i^t).$$

The interaction between these modules is modeled as a minimax optimization problem, with the goal of minimizing the reconstruction loss while maximizing the adversary’s difficulty in recovering the gradients:

$$\min_{\theta_E, \theta_D} \max_{\theta_A} \; \mathbb{E}_{g \sim \mathcal{P}_g}\Big[ \mathcal{L}_{\mathrm{rec}}\big(g, D_{\theta_D}(E_{\theta_E}(g))\big) + \lambda \, \mathcal{L}_{\mathrm{adv}}\big(g, A_{\theta_A}(E_{\theta_E}(g))\big) \Big],$$

where $\mathcal{P}_g$ denotes the distribution of gradient vectors, $\mathcal{L}_{\mathrm{rec}}$ is the reconstruction loss (typically mean squared error), and $\mathcal{L}_{\mathrm{adv}}$ quantifies the adversary’s success in approximating $g$ (for example, the negative mean squared error of its estimate). The hyperparameter $\lambda$ controls the trade-off between utility and privacy. This formulation trains the decryptor to reconstruct gradients from their encryptions for benign aggregation, while the encryptor simultaneously learns to thwart adversarial inference, yielding a dynamically obfuscated encryption mechanism.

The adversary’s inference objective can be defined explicitly. A model inversion attack aims to reconstruct an approximation $\hat{x}$ of the original input by solving the following optimization problem:

$$\hat{x} = \arg\min_{x'} \Big\| \nabla_{\theta}\, \ell\big(f_{\theta}(x'), y\big) - \tilde{c} \Big\|_2^2.$$

In this expression, $f_{\theta}$ is the local model with parameters $\theta$, $\ell$ is the training loss function, $y$ is the label, and $\tilde{c}$ represents the encrypted or compressed gradient observed by the adversary. By minimizing the discrepancy between the observed gradient and the gradient generated from a guessed input, the adversary attempts to reconstruct training samples. SAFE-MED counters this by introducing adversarial noise and non-linear mappings via $E_{\theta_E}$, thereby rendering such optimization ineffective.
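The optimization above is the general form of a gradient-inversion attack. A simpler, closed-form special case shows why plaintext gradients leak: for a single linear unit with a bias trained under squared loss, the weight gradient equals the input scaled by the bias gradient, so the private input can be read off by division. The sketch below is an illustrative example, not part of SAFE-MED, and its function names are hypothetical; the encryptor $E_{\theta_E}$ is intended to break exactly this kind of direct algebraic relationship.

```python
from typing import List, Sequence, Tuple


def linear_grads(w: Sequence[float], b: float, x: Sequence[float],
                 y: float) -> Tuple[List[float], float]:
    """Gradients of the loss 0.5 * (w.x + b - y)**2 w.r.t. the weights and the bias."""
    residual = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [residual * xi for xi in x]   # dL/dw_j = residual * x_j
    grad_b = residual                      # dL/db   = residual
    return grad_w, grad_b


def invert_from_gradient(grad_w: Sequence[float], grad_b: float,
                         eps: float = 1e-12) -> List[float]:
    """Recover the private input from a *plaintext* gradient: x_j = (dL/dw_j) / (dL/db)."""
    if abs(grad_b) < eps:
        raise ValueError("bias gradient is (near) zero; input is not identifiable")
    return [gw / grad_b for gw in grad_w]


if __name__ == "__main__":
    x_private = [0.3, -1.2, 2.5]
    gw, gb = linear_grads(w=[0.5, 0.1, -0.4], b=0.2, x=x_private, y=1.0)
    print(invert_from_gradient(gw, gb))  # approximately [0.3, -1.2, 2.5]
```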

Additionally, the SAFE-MED framework includes a defense mechanism against malicious updates submitted by compromised clients. Such updates may be intentionally crafted to poison the global model or to introduce distributional drift. To address this threat, an update validation mechanism is employed at the aggregator. For each received encrypted gradient $c_i^t$, the system computes an anomaly score $s_i^t$ using a statistical consistency function:

$$s_i^t = \big\| c_i^t - \bar{c}^t \big\|_2,$$

where $\bar{c}^t$ is the reference update, computed either as an aggregate of trusted gradients or as the mean of previously validated updates. The update is accepted into the aggregation only if $s_i^t$ lies within a tolerance threshold $\tau^t$:

$$c_i^t \text{ is accepted} \iff s_i^t \le \tau^t.$$

The threshold is dynamically adjusted based on observed variance in previous rounds. This mechanism ensures that malicious updates with high divergence are detected and excluded from model aggregation, enhancing robustness against Byzantine behaviors.
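A minimal sketch of this validation step is given below. It assumes, as illustrative choices not fixed by the text, that the reference $\bar{c}^t$ is the running mean of accepted updates and that the dynamic threshold is the mean plus $k$ standard deviations of recently accepted scores; the class name and the warm-up rule (accept everything until enough statistics exist) are hypothetical, and a deployment would instead seed the reference from trusted rounds.

```python
import math
from collections import deque
from typing import Deque, List, Optional, Sequence, Tuple


class UpdateValidator:
    """Illustrative anomaly filter: distance to a reference update vs. a variance-adaptive threshold."""

    def __init__(self, k: float = 2.5, window: int = 20, warmup: int = 5) -> None:
        self.k = k
        self.warmup = warmup
        self.scores: Deque[float] = deque(maxlen=window)  # scores of recently accepted updates
        self.reference: Optional[List[float]] = None      # running mean of accepted updates
        self.n_accepted = 0

    def score(self, update: Sequence[float]) -> float:
        if self.reference is None:
            return 0.0
        return math.dist(update, self.reference)  # L2 distance to the reference

    def threshold(self) -> float:
        if len(self.scores) < self.warmup:
            return math.inf  # not enough statistics yet: accept
        mean = sum(self.scores) / len(self.scores)
        var = sum((s - mean) ** 2 for s in self.scores) / len(self.scores)
        return mean + self.k * math.sqrt(var)

    def validate(self, update: Sequence[float]) -> Tuple[bool, float]:
        s = self.score(update)
        accepted = s <= self.threshold()
        if accepted:
            self.scores.append(s)
            self.n_accepted += 1
            if self.reference is None:
                self.reference = list(update)
            else:  # incremental mean of previously validated updates
                self.reference = [r + (u - r) / self.n_accepted
                                  for r, u in zip(self.reference, update)]
        return accepted, s
```

Feeding ten near-identical benign updates and then `[50.0, -50.0]` makes the last call return `accepted == False`, because its distance to the reference far exceeds the adaptive threshold.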

To reduce the communication cost of encrypted gradient transmission in bandwidth-constrained environments, SAFE-MED incorporates a differential gradient compression mechanism. Each encrypted gradient update is compressed as follows:

$$\tilde{c}_i^t = \mathrm{TopK}_k\big(c_i^t\big) + n_i^t, \qquad n_i^t \sim \mathcal{N}\big(0, \sigma^2 I\big),$$

where $\tilde{c}_i^t$ denotes the compressed encrypted gradient, $\mathrm{TopK}_k(\cdot)$ selects the $k$ largest-magnitude components of the encrypted vector, and $n_i^t$ is Gaussian noise added to enforce $(\epsilon, \delta)$-differential privacy. The compression ratio (i.e., $k/d$) and the noise variance $\sigma^2$ are tunable hyperparameters set according to device capacity and privacy requirements.
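The compression step can be sketched as top-$k$ selection followed by Gaussian perturbation. This is an illustrative sketch under two assumptions not stated in the text: noise is added only to the retained coordinates, and the update is sent as (index, value) pairs so that only $k$ entries cross the network. It does not by itself establish a formal privacy guarantee, which would additionally require bounded sensitivity (clipping) and accounting for the data-dependent index selection. The function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def compress_and_perturb(enc_grad: Sequence[float], k: int, sigma: float,
                         rng: random.Random) -> List[Tuple[int, float]]:
    """Top-k sparsification of an encrypted gradient, then Gaussian noise on the kept entries.

    Returns (index, value) pairs so that only k entries need to be transmitted.
    """
    if not 0 < k <= len(enc_grad):
        raise ValueError("k must satisfy 0 < k <= len(enc_grad)")
    # Indices of the k largest-magnitude entries (stable sort breaks ties by position).
    keep = sorted(range(len(enc_grad)), key=lambda j: abs(enc_grad[j]), reverse=True)[:k]
    return [(j, enc_grad[j] + rng.gauss(0.0, sigma)) for j in sorted(keep)]


def densify(pairs: Sequence[Tuple[int, float]], dim: int) -> List[float]:
    """Rebuild a dense vector of length dim from (index, value) pairs."""
    out = [0.0] * dim
    for j, v in pairs:
        out[j] = v
    return out
```

With `sigma=0.0`, `compress_and_perturb([0.1, -0.9, 0.5, 0.05], 2, 0.0, random.Random(0))` keeps only the two largest-magnitude entries, `[(1, -0.9), (2, 0.5)]`.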

3.3.2. Neural Cryptographic Architecture

For reproducibility, we now provide the detailed configurations of the encoder, decoder, and adversary networks used in SAFE-MED.

- Encoder (encryptor $E_{\theta_E}$): Implemented as a three-layer MLP with hidden sizes [256, 128, 64]. Each hidden layer is followed by a ReLU activation and batch normalization. The final layer uses a tanh activation to bound ciphertext representations within $[-1, 1]$. Weights are initialized using Xavier uniform initialization.

- Decoder (decryptor $D_{\theta_D}$): Configured as a symmetric three-layer MLP with hidden sizes [64, 128, 256]. ReLU activations and batch normalization are applied after each hidden layer, while the output layer uses a linear activation to reconstruct the gradient vector.

- Adversary $A_{\theta_A}$: Designed as a two-layer MLP with hidden sizes [256, 128]. Each hidden layer applies a ReLU activation and dropout for regularization. The final output layer is linear, producing gradient reconstructions.

- Training setup: All networks are trained with the Adam optimizer (learning rate = , , ), using mean squared error (MSE) as both reconstruction and adversarial loss. A batch size of 256 is used, with early stopping based on validation loss (patience = 10 rounds).
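The patience-based early stopping in the training setup above (patience = 10 rounds on validation loss) can be sketched as follows. The class name and the optional `min_delta` improvement tolerance are illustrative assumptions rather than details taken from SAFE-MED.

```python
class EarlyStopping:
    """Stop once validation loss has not improved for `patience` consecutive rounds."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta      # minimum decrease that counts as an improvement
        self.best = float("inf")
        self.bad_rounds = 0

    def step(self, val_loss: float) -> bool:
        """Record one round's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_rounds = 0
        else:
            self.bad_rounds += 1
        return self.bad_rounds >= self.patience
```

For the loss sequence `[1.0, 0.8, 0.7]` followed by ten rounds at `0.75`, `step` first returns `True` at index 12, the tenth round without improvement.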

The threat model further accounts for asynchronous behavior and system heterogeneity. Clients may be offline, slow, or intermittently connected. This exposes the system to attack vectors that exploit client drop-out, in which colluding clients perform delayed poisoning. To defend against this, SAFE-MED employs a decentralized consistency check across successive rounds: clients maintain short-term hashes of the model state and verify the integrity of the aggregated model by comparing it against predicted update trajectories. Divergence beyond statistical expectations triggers model rollback or quorum-based validation procedures.
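The consistency check can be illustrated with a small client-side sketch. The concrete rules are assumptions made for illustration only: the received model's SHA-256 hash is compared with the announced one (a mismatch triggers rollback), the next model is predicted by extrapolating the mean recent step, and a prediction error above the mean plus $k$ standard deviations of past errors triggers quorum-based validation. All names are hypothetical.

```python
import hashlib
import math
import struct
from typing import List, Optional, Sequence


def model_hash(params: Sequence[float]) -> str:
    """SHA-256 hex digest of a flat parameter vector packed as little-endian float64."""
    return hashlib.sha256(struct.pack(f"<{len(params)}d", *params)).hexdigest()


class TrajectoryChecker:
    """Verify an announced hash, then compare the received global model with a
    linear extrapolation of the recent model trajectory."""

    def __init__(self, k: float = 3.0, window: int = 5, min_history: int = 3) -> None:
        self.k = k
        self.window = window
        self.min_history = min_history
        self.models: List[List[float]] = []   # recent accepted global models
        self.deviations: List[float] = []     # recent prediction errors
        self.hashes: List[str] = []           # short-term hash log

    def _predict(self) -> Optional[List[float]]:
        if len(self.models) < 2:
            return None
        steps = [[b - a for a, b in zip(m0, m1)]
                 for m0, m1 in zip(self.models, self.models[1:])]
        mean_step = [sum(col) / len(steps) for col in zip(*steps)]
        return [p + s for p, s in zip(self.models[-1], mean_step)]

    def check(self, params: Sequence[float], announced_hash: str) -> str:
        """Return 'ok', 'rollback' (integrity failure) or 'quorum' (divergent trajectory)."""
        if model_hash(params) != announced_hash:
            return "rollback"
        pred = self._predict()
        if pred is not None:
            dev = math.dist(params, pred)
            if len(self.deviations) >= self.min_history:
                mu = sum(self.deviations) / len(self.deviations)
                sd = math.sqrt(sum((d - mu) ** 2 for d in self.deviations) / len(self.deviations))
                if dev > mu + self.k * max(sd, 1e-8):
                    return "quorum"
            self.deviations = (self.deviations + [dev])[-self.window:]
        self.models = (self.models + [list(params)])[-self.window:]
        self.hashes = (self.hashes + [announced_hash])[-self.window:]
        return "ok"
```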

SAFE-MED combines adversarial training, encrypted gradient obfuscation, anomaly detection, and adaptive compression into a multi-layered defense aligned with distinct IoMT threat vectors. This holistic design justifies the framework’s architectural complexity and ensures resilience in decentralized, adversary-prone medical environments. The next section details its system architecture and workflow across client, fog, and cloud layers. Table 2 summarizes the mathematical notations used throughout this work, including variables related to federated optimization, cryptographic modules, and system parameters.

Table 2.

List of notations.

4. Proposed SAFE-MED Framework

The SAFE-MED framework is developed to address the growing demand for secure, privacy-preserving, and efficient collaborative learning across IoMT environments. By integrating adversarial neural cryptography within a federated learning paradigm, SAFE-MED enables encrypted gradient exchange without the need for pre-shared keys or centralized trust. The framework is designed to withstand gradient leakage, model inversion, and poisoning attacks, while remaining compatible with heterogeneous and resource-constrained medical devices. In the following subsections, we detail the architecture that underpins SAFE-MED, outlining the computational roles, data flow, and collaborative cryptographic training procedures that span the device, fog, and cloud layers of the system.

4.1. System Architecture

To implement SAFE-MED in a scalable and privacy-preserving manner, we adopt a three-tier architecture comprising device, fog, and cloud layers, tailored for dynamic IoMT environments. The design is modular, communication-efficient, and resilient to adversarial behavior while adapting to heterogeneous device capabilities and intermittent connectivity. At the device layer, medical sensors and wearables (e.g., ECG, respiration, glucose, and blood pressure monitors) locally train lightweight models and compute gradients at each round. The gradient vector $g_i^t$ lies in $\mathbb{R}^d$, where $d$ is the number of model parameters, and its norm is bounded by a clipping constant ($\|g_i^t\|_2 \le C_{\mathrm{clip}}$) to satisfy differential privacy constraints. To ensure confidentiality, each device passes $g_i^t$ through an adversarially trained neural encryptor $E_{\theta_E}$, yielding an encrypted gradient update:

$$c_i^t = E_{\theta_E}\big(g_i^t\big).$$

This encrypted gradient update is then compressed using a lossy compression function $\mathcal{C}(\cdot)$, such as top-k sparsification or quantization, and differentially perturbed with a calibrated noise vector $n_i^t$, ensuring compliance with a local privacy budget $\epsilon_i$. The transmitted payload is bounded in size, typically between 512 and 2048 bytes, depending on the compression ratio and network conditions.

The fog layer acts as a semi-trusted intermediary that receives encrypted and compressed updates from nearby IoMT devices. Each fog node performs statistical integrity validation on received updates by computing an anomaly score $s_i^t$, derived as

$$s_i^t = \frac{\big\| \tilde{c}_i^t - \bar{c}^t \big\|_2}{\sigma^t},$$

where $\bar{c}^t$ is a reference gradient estimated from previous trusted rounds and $\sigma^t$ is a moving-window standard deviation. If $s_i^t > \tau$, where $\tau$ is the poisoning tolerance threshold (typically between 2 and 3 standard deviations), the update is flagged and discarded. This detection mechanism enables early rejection of potentially corrupted or adversarial inputs without compromising the overall training dynamics.

In latency-sensitive contexts or under intermittent connectivity, fog nodes may also attempt partial decryption using a locally cached decryptor model $D_{\theta_D}$, producing an approximate gradient $\hat{g}_i^t = D_{\theta_D}(\tilde{c}_i^t)$. Although the fidelity of this approximation is bounded only by $\mathcal{L}_{\mathrm{rec}}(g_i^t, \hat{g}_i^t) \le \epsilon_{\mathrm{rec}}$, where $\epsilon_{\mathrm{rec}}$ is an empirically derived reconstruction-loss threshold, this fallback mechanism enables emergency decisions and local model inference when real-time synchronization with the cloud is infeasible.

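The cloud layer serves as the global coordinator for federated learning, cryptographic model training, and anomaly auditing. It aggregates decrypted gradients from validated updates using a weighted averaging scheme:

$$w^{t+1} = w^t - \eta \sum_{i \in \mathcal{S}_t} \alpha_i^t\, \hat{g}_i^t,$$

where $\eta$ is the learning rate, $\alpha_i^t$ are contribution weights based on trust scores, and $\mathcal{S}_t$ is the set of valid clients in round $t$. To maintain secure model evolution, the cloud orchestrates a joint adversarial training loop between the encryptor $E_{\theta_E}$, the decryptor $D_{\theta_D}$, and the adversary model $A_{\theta_A}$, optimized through the objective:

$$\min_{\theta_E, \theta_D} \max_{\theta_A} \; \mathbb{E}_{g \sim \mathcal{G}}\Big[ \mathcal{L}_{\mathrm{rec}}\big(g, D_{\theta_D}(E_{\theta_E}(g))\big) + \lambda\, \mathcal{L}_{\mathrm{adv}}\big(g, A_{\theta_A}(E_{\theta_E}(g))\big) \Big].$$

Here, $\mathcal{L}_{\mathrm{rec}}$ quantifies reconstruction fidelity (e.g., using mean squared error), and $\mathcal{L}_{\mathrm{adv}}$ measures the adversary’s ability to infer plaintext gradients. The balancing coefficient $\lambda$ is tuned to regulate the privacy–utility trade-off. By training over batches of real-world gradients $\{g_i^t\}$, the system simulates worst-case attack scenarios and proactively hardens the encryption model.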
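The trust-weighted aggregation step $w^{t+1} = w^t - \eta \sum_{i \in \mathcal{S}_t} \alpha_i^t \hat{g}_i^t$ can be sketched as follows. The sketch assumes that $\alpha_i^t$ is client $i$'s trust score normalized over the valid set $\mathcal{S}_t$; the exact composition of the trust score is not modeled, and the function name is hypothetical.

```python
from typing import Dict, List, Sequence


def trust_weighted_step(w: Sequence[float], grads: Dict[str, Sequence[float]],
                        trust: Dict[str, float], lr: float) -> List[float]:
    """w_next = w - lr * sum_i alpha_i * g_i, with alpha_i = trust_i / sum_j trust_j over valid clients."""
    total = sum(trust[cid] for cid in grads)
    if total <= 0:
        return list(w)  # no trusted contribution this round: keep the model unchanged
    agg = [0.0] * len(w)
    for cid, g in grads.items():
        alpha = trust[cid] / total
        for j, gj in enumerate(g):
            agg[j] += alpha * gj
    return [wj - lr * aj for wj, aj in zip(w, agg)]
```

With trust scores 3.0 and 1.0, the first client contributes 75% of the aggregated direction, so `trust_weighted_step([1.0, 1.0], {"a": [1.0, 0.0], "b": [0.0, 1.0]}, {"a": 3.0, "b": 1.0}, 0.5)` yields `[0.625, 0.875]`.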

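To ensure secure and synchronized cryptographic models across heterogeneous clients, the system employs a trust-weighted federated ensemble update for $\theta_E$ and $\theta_D$:

$$\theta_E^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t\, \theta_{E,i}^t, \qquad \theta_D^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t\, \theta_{D,i}^t,$$

where $\mathcal{T}_t$ denotes a trusted subset of clients at round $t$, selected based on historical anomaly scores, bandwidth availability, and consistency of participation. The trust score $T_i^t$ is dynamically normalized such that $\sum_{i \in \mathcal{T}_t} T_i^t = 1$, which makes each update a convex combination of client models and, together with trust-based selection, promotes fairness and robustness against Sybil attacks.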
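The ensemble update $\theta^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t \theta_i^t$ can be sketched as below and applied separately to the encryptor and decryptor parameter vectors. The rule used to form the trusted subset (a minimum trust score) is an illustrative assumption; the text selects $\mathcal{T}_t$ from anomaly history, bandwidth, and participation, which are not modeled here.

```python
from typing import Dict, List, Sequence


def ensemble_average(client_params: Dict[str, Sequence[float]], trust: Dict[str, float],
                     min_trust: float = 0.5) -> List[float]:
    """Trust-weighted average over the trusted subset {i : trust_i >= min_trust}.

    Weights are renormalized over that subset so that they sum to 1 (a convex combination).
    """
    trusted = [cid for cid in client_params if trust.get(cid, 0.0) >= min_trust]
    total = sum(trust[cid] for cid in trusted)
    if total <= 0:
        raise ValueError("trusted subset is empty or has zero total trust")
    dim = len(client_params[trusted[0]])
    avg = [0.0] * dim
    for cid in trusted:
        weight = trust[cid] / total
        for j, v in enumerate(client_params[cid]):
            avg[j] += weight * v
    return avg
```

A low-trust client holding outlying parameters (here `"c"`) is excluded entirely, so `ensemble_average({"a": [0.0, 0.0], "b": [2.0, 4.0], "c": [100.0, 100.0]}, {"a": 1.0, "b": 1.0, "c": 0.1})` returns `[1.0, 2.0]`.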

The SAFE-MED framework operates in a multi-tier IoMT ecosystem with device, fog, and cloud layers. Devices collect physiological signals (e.g., ECG, glucose) and locally train models, producing gradients that are encrypted by $E_{\theta_E}$, compressed, and perturbed via $\mathcal{C}(\cdot)$, yielding $\tilde{c}_i^t$ for secure, efficient transmission. Fog nodes validate updates with the anomaly detector and manage delay-tolerant queues with time-to-live (TTL) counters, filtering malicious updates and ensuring timely forwarding. At the cloud, federated aggregation, adversarial retraining, and anomaly detection are performed. This modular, scalable design supports secure, real-time operation and adapts to non-i.i.d. data, client churn, and communication variability without relying on static topologies or central key authorities.

In addition to anomaly validation, the fog layer may deploy localized decryptors $D_{\theta_D}$ to approximate gradients $\hat{g}_i^t$, enabling hybrid operation in which urgent healthcare decisions can be made autonomously under bandwidth or latency constraints. Validated encrypted updates are then forwarded to the cloud, which hosts $D_{\theta_D}$ and the adversary $A_{\theta_A}$, co-trained with the encryptor in an adversarial loop. Acting as an honest-but-curious entity, the cloud coordinates global aggregation via weighted averaging, redistributes the updated model, and simulates game-theoretic dynamics on rotating client gradients to strengthen privacy through adversarial neural cryptography.

This centralized adversarial training enables the encryption model to evolve continuously against simulated attacks, strengthening privacy guarantees without relying on explicit cryptographic key distribution. Learned parameters $\theta_E$ and $\theta_D$ are periodically broadcast to fog nodes and IoMT clients, while an asynchronous update protocol with TTL counters and delay-tolerant queues at fog nodes accommodates sporadic client participation and network dropouts, ensuring robustness in mobile or rural deployments. To support secure synchronization without a traditional PKI, SAFE-MED employs adversarial synchronization with trust-weighted validation: the encryptor and decryptor networks are treated as stochastic models trained on non-overlapping data shards and periodically re-aligned using federated ensemble averaging, denoted by

$$\theta_E^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t\, \theta_{E,i}^t, \qquad \theta_D^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t\, \theta_{D,i}^t,$$

where $\mathcal{T}_t$ denotes the selected set of trustworthy clients and $T_i^t$ are weight factors computed from historical anomaly scores, bandwidth contribution, and participation consistency. This adaptive trust modeling ensures that clients exhibiting stable, privacy-preserving behavior gain more influence over the evolution of the cryptographic models.

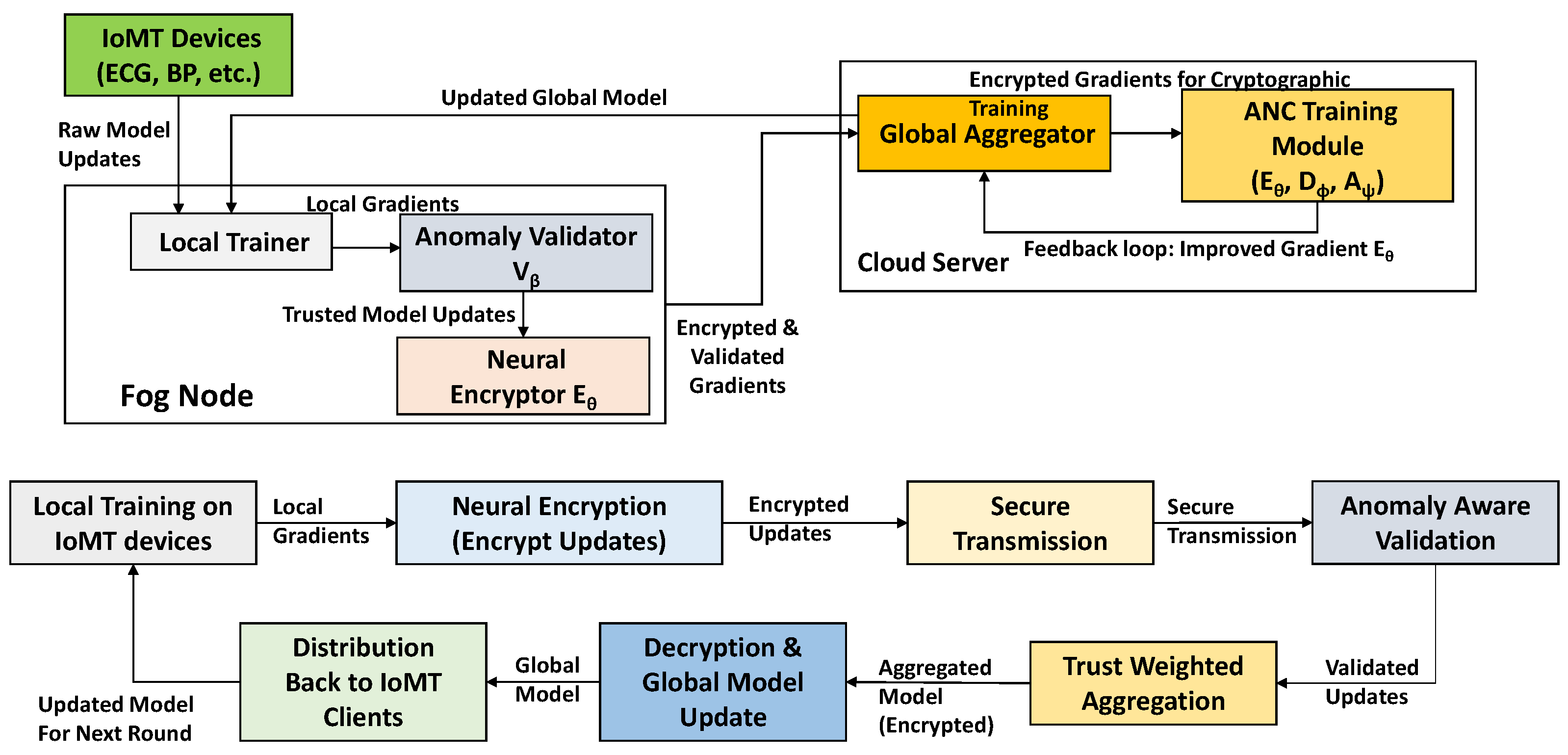

The architecture forms a unified pipeline for privacy-preserving, robust, and efficient federated learning, with client-side encryption, fog-level validation, and cloud adversarial training. Its dynamic design adapts to heterogeneous devices and unstable networks without compromising security or model performance. Figure 2 illustrates the internal data flow and component interactions within the SAFE-MED framework, highlighting how federated learning and neural encryption are jointly realized across the fog and cloud layers.

Figure 2.

Functional block diagram of the SAFE-MED framework.

4.2. Neural Cryptographic Protocol Design

The cryptographic core of the SAFE-MED framework is grounded in Adversarial Neural Cryptography (ANC), where a tripartite network comprising an encryptor $E_{\theta_E}$, a decryptor $D_{\theta_D}$, and an adversary $A_{\theta_A}$ engages in a competitive learning process. The goal is to enable IoMT clients to encrypt their gradient updates such that they can be accurately decrypted by authorized aggregators (e.g., fog nodes or the cloud) while remaining indecipherable to unauthorized entities or attackers. This design obviates the need for classical key exchange mechanisms, making it particularly suited for decentralized, heterogeneous, and intermittently connected medical networks.

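Let $g_i^t \in \mathbb{R}^d$ represent the local model gradient computed by client $i$ during round $t$. The encryptor $E_{\theta_E}$ transforms this gradient into an encrypted gradient update $c_i^t$:

$$c_i^t = E_{\theta_E}\big(g_i^t\big).$$

The decryptor $D_{\theta_D}$ then reconstructs the original gradient from the encrypted gradient update:

$$\hat{g}_i^t = D_{\theta_D}\big(c_i^t\big).$$

Simultaneously, an adversarial network $A_{\theta_A}$ attempts to infer the plaintext gradient from the same encrypted gradient update:

$$\tilde{g}_i^t = A_{\theta_A}\big(c_i^t\big).$$

The training objective is structured as a minimax optimization problem, in which the encryptor and decryptor cooperate to minimize the reconstruction loss while simultaneously maximizing the adversary’s error:

$$\min_{\theta_E, \theta_D} \max_{\theta_A} \; \mathbb{E}_{g_i^t}\Big[ \mathcal{L}_{\mathrm{rec}}\big(g_i^t, \hat{g}_i^t\big) + \lambda\, \mathcal{L}_{\mathrm{adv}}\big(g_i^t, \tilde{g}_i^t\big) \Big],$$

where $\hat{g}_i^t$ is the decrypted gradient, $\tilde{g}_i^t$ is the adversary’s reconstruction, and $\mathcal{L}_{\mathrm{rec}}$ and $\mathcal{L}_{\mathrm{adv}}$ denote scalar reconstruction and adversarial loss functions, respectively, with $\mathcal{L}_{\mathrm{adv}}$ measuring the adversary’s inference success, so that maximizing the adversary’s error corresponds to minimizing $\mathcal{L}_{\mathrm{adv}}$.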

The training dynamics follow a federated process where, at each round, a subset of clients encrypts local gradients and transmits them to the cloud for adversarial optimization of $(\theta_E, \theta_D, \theta_A)$ using synthetic or historical gradients. Updated parameters are periodically broadcast to fog nodes and clients for synchronization. Both the encryptor and decryptor are lightweight, dimension-preserving MLPs with two fully connected layers, ReLU activations, and batch normalization, ensuring negligible overhead on IoMT devices.

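To prevent mode collapse and ensure diversity in the encrypted gradient space, random noise vectors $r_i^t$ are concatenated with the gradient vectors during encryption:

$$c_i^t = E_{\theta_E}\big([\, g_i^t \,\|\, r_i^t \,]\big),$$

where $[\cdot \,\|\, \cdot]$ denotes vector concatenation.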

This stochasticity improves generalization, mitigates deterministic leakage, and adds a layer of defense against statistical inference attacks.
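The effect of concatenating a random vector before encryption can be seen with a toy stand-in for $E_{\theta_E}$: a fixed random linear map on $[g \,\|\, r]$ followed by tanh (matching the bounded ciphertext range). This is a hypothetical, untrained illustration, not SAFE-MED's trained encryptor. It shows that the same gradient yields different ciphertexts under different noise draws, while the mapping stays deterministic for a fixed $r$.

```python
import math
import random
from typing import Callable, List, Sequence


def make_toy_encryptor(d: int, r_dim: int, m: int, seed: int = 0
                       ) -> Callable[[Sequence[float], Sequence[float]], List[float]]:
    """Toy stand-in for E: a fixed random linear map on [g || r] followed by tanh."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(d + r_dim)
    W = [[rng.gauss(0.0, scale) for _ in range(d + r_dim)] for _ in range(m)]

    def encrypt(g: Sequence[float], r: Sequence[float]) -> List[float]:
        if len(g) != d or len(r) != r_dim:
            raise ValueError("unexpected input sizes")
        x = list(g) + list(r)  # concatenation [g || r]
        return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in W]

    return encrypt
```

Encrypting `g = [0.1, -0.2, 0.3, 0.0]` with two different noise vectors produces two different ciphertexts, while repeating the same noise vector reproduces the same ciphertext.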

The adversary is designed to simulate strong attackers by employing deeper networks (e.g., 3 to 5 layers) with skip connections and attention modules, thereby raising the cryptographic challenge for the encryptor. This arms-race style training leads to more robust encryption mechanisms that are resilient against a wide range of inference attacks, including gradient matching, reconstruction via autoencoders, and GAN-based attacks.

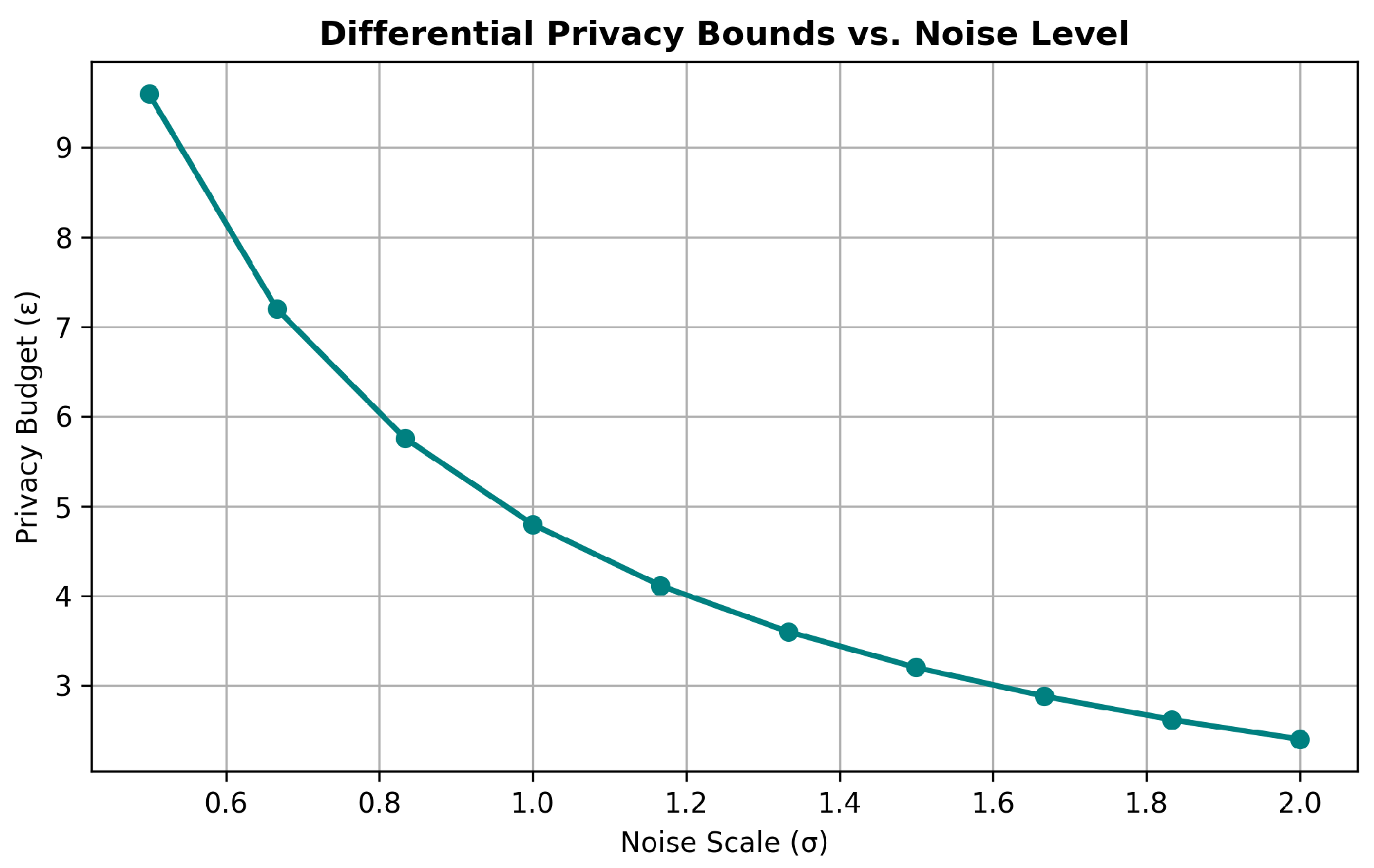

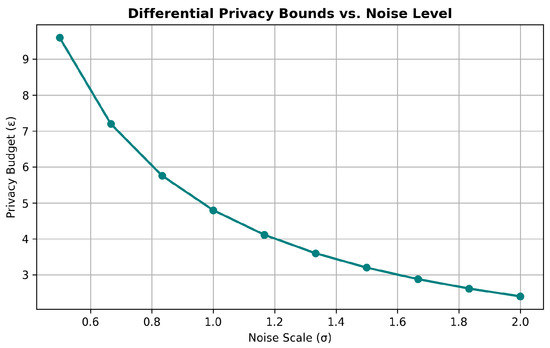

All cryptographic modules are trained under privacy-preserving federated protocols, ensuring that plaintext gradients never leave client devices. To further enhance privacy, Gaussian noise is optionally added to the encrypted gradient updates before transmission, achieving $(\epsilon, \delta)$-differential privacy. The noise scale $\sigma$ is calibrated based on the sensitivity of the encryption function and the desired privacy budget, using the relation:

$$\sigma = \frac{\Delta_E \sqrt{2 \ln(1.25/\delta)}}{\epsilon},$$

where $\Delta_E$ denotes the global $\ell_2$-sensitivity of the encryptor output with respect to gradient inputs.
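The calibration relation $\sigma = \Delta \sqrt{2\ln(1.25/\delta)}/\epsilon$ is the classical Gaussian mechanism, whose standard analysis is stated for $0 < \epsilon < 1$. A minimal sketch follows; the function name is hypothetical, and the sensitivity $\Delta$ is taken as a given input rather than derived from the encryptor.

```python
import math


def gaussian_sigma(sensitivity: float, epsilon: float, delta: float) -> float:
    """Classical Gaussian mechanism: sigma = Delta * sqrt(2 ln(1.25/delta)) / epsilon."""
    if not (0 < epsilon < 1):
        raise ValueError("the classical bound is stated for 0 < epsilon < 1")
    if not (0 < delta < 1):
        raise ValueError("delta must lie in (0, 1)")
    if sensitivity < 0:
        raise ValueError("sensitivity must be non-negative")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon
```

For $\Delta = 1$, $\epsilon = 0.5$, and $\delta = 10^{-5}$, this gives $\sigma \approx 9.69$; halving $\epsilon$ doubles $\sigma$, which makes the privacy–utility trade-off explicit.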

This adversarial cryptographic pipeline enables SAFE-MED to maintain high model accuracy while offering formal privacy guarantees and resilience against malicious clients and curious aggregators. By embedding cryptographic functionality into learnable neural networks, the framework achieves flexible, scalable, and keyless encryption that is suitable for deployment in evolving real-world healthcare environments.

4.3. Federated Training and Validation Pipeline

The federated training and validation pipeline in SAFE-MED is designed to ensure robust, privacy-preserving learning under real-world IoMT constraints, including intermittent connectivity, unbalanced data, and adversarial client behavior. The pipeline orchestrates local training, neural encryption, differential compression, anomaly detection, and global aggregation across three network layers in a coordinated manner, while respecting the computational and communication limitations of the participating devices.

At the onset of each global round $t$, the cloud coordinator selects a subset $\mathcal{S}_t$ of eligible clients based on availability, trust score, and network latency profile. Each selected client locally trains its model using its private dataset $\mathcal{D}_i$, typically over a few local epochs. The resulting local gradient $g_i^t = \nabla_{w} \mathcal{L}_i(w^t; \mathcal{D}_i)$, where $\mathcal{L}_i$ denotes the client’s local loss function, is then processed through the neural encryptor to obtain the encrypted gradient update:

$$\tilde{c}_i^t = \mathcal{C}_{\rho}\Big(E_{\theta_E}\big([\, g_i^t \,\|\, r_i^t \,]\big)\Big) + n_i^t.$$

Here, $\mathcal{C}_{\rho}(\cdot)$ represents the gradient compression function parameterized by the compression ratio $\rho$, $r_i^t$ is the noise input for stochastic encryption, and $n_i^t \sim \mathcal{N}(0, \sigma^2 I)$ is the calibrated Gaussian noise vector for differential privacy. The output $\tilde{c}_i^t$ is a compressed, encrypted, and privacy-perturbed update.

Each client transmits its encrypted update to its associated fog node. Upon reception, the fog node performs integrity and trustworthiness checks before forwarding the update to the cloud. These checks involve anomaly scoring against a temporal reference model , which is maintained for each client as an exponentially smoothed history of past updates. The anomaly score is computed as

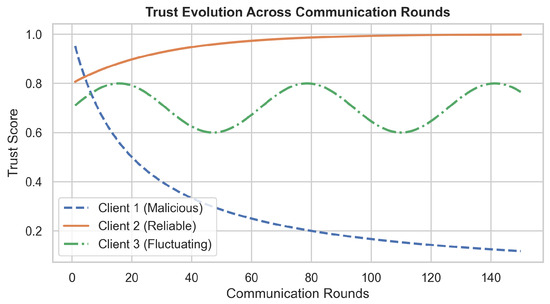

where denotes the fog-level decryptor and is the expected variance in the client i’s update distribution. If , where is a predefined anomaly threshold, the update is discarded and the client is penalized in its trust score . The trust score is updated using a fading memory model:

where is the memory factor and is the indicator function. This dynamic trust score regulates the client’s future participation and directly influences its weighting coefficient in the global aggregation step.
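The fading-memory trust update $T_i^{t+1} = \beta T_i^t + (1-\beta)\,\mathbb{1}[s_i^t \le \tau]$ is small enough to state directly in code. The function name and the default $\beta = 0.9$ below are illustrative assumptions.

```python
def update_trust(trust: float, score: float, tau: float, beta: float = 0.9) -> float:
    """Fading-memory trust: T_next = beta * T + (1 - beta) * 1[score <= tau]."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    passed = 1.0 if score <= tau else 0.0  # indicator of passing validation
    return beta * trust + (1.0 - beta) * passed
```

With $\beta = 0.5$, three consecutive rejections take a fully trusted client from 1.0 to 0.125, and a single subsequent pass raises it only to 0.5625, so trust is lost quickly and regained gradually.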

Validated encrypted gradient updates are then forwarded to the cloud, which decrypts them using the global decryptor $D_{\theta_D}$ and aggregates them into the global model $w^{t+1}$. SAFE-MED supports both synchronous and asynchronous aggregation modes. In the synchronous setting, updates are aggregated as

$$w^{t+1} = w^t - \eta \sum_{i \in \mathcal{V}_t} \alpha_i^t\, D_{\theta_D}\big(\tilde{c}_i^t\big),$$

where $\mathcal{V}_t$ denotes the subset of clients whose updates passed fog-level validation, $\alpha_i^t$ is the trust-based weight associated with client $i$, and $\eta$ is the global learning rate.

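In asynchronous mode, each client update is incorporated immediately upon arrival, with staleness-aware decay applied as

$$w \leftarrow w - \eta\, \alpha_i^t\, \gamma^{\Delta_i}\, D_{\theta_D}\big(\tilde{c}_i^t\big),$$

where $\Delta_i$ is the delay since the last global update involving client $i$, and $\gamma \in (0, 1)$ is a decay factor that down-weights stale gradients.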
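A single asynchronous, staleness-decayed update $w \leftarrow w - \eta\,\alpha_i\,\gamma^{\Delta_i}\,\hat{g}_i$ can be sketched as below. The function name and the default $\gamma = 0.5$ are illustrative; in practice $\gamma$ would be tuned to the expected delay distribution.

```python
from typing import List, Sequence


def async_apply(w: Sequence[float], g_hat: Sequence[float], alpha: float,
                staleness: int, lr: float, gamma: float = 0.5) -> List[float]:
    """Apply one decrypted update immediately, scaled by lr * alpha * gamma**staleness."""
    if not 0.0 < gamma < 1.0:
        raise ValueError("gamma must lie in (0, 1)")
    scale = lr * alpha * (gamma ** staleness)  # older updates get geometrically smaller steps
    return [wj - scale * gj for wj, gj in zip(w, g_hat)]
```

With $\gamma = 0.5$, an update that is two rounds stale moves the model by only a quarter of a fresh update's step: starting from `[1.0]` with gradient `[1.0]`, a fresh update yields `[0.0]` and a two-round-stale one yields `[0.75]`.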

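The cloud also uses a portion of the decrypted gradients to update the neural cryptographic models $E_{\theta_E}$, $D_{\theta_D}$, and $A_{\theta_A}$ via adversarial training, as defined in Equation (36). To prevent overfitting to, or memorization of, client-specific patterns, a replay buffer $\mathcal{B}$ is maintained with anonymized gradient samples, where anonymization is performed through randomized permutation and Gaussian masking layers. The adversarial training objective remains:

$$\min_{\theta_E, \theta_D} \max_{\theta_A} \; \mathbb{E}_{g \sim \mathcal{B}}\Big[ \mathcal{L}_{\mathrm{rec}}\big(g, D_{\theta_D}(E_{\theta_E}(g))\big) + \lambda\, \mathcal{L}_{\mathrm{adv}}\big(g, A_{\theta_A}(E_{\theta_E}(g))\big) \Big].$$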

To further secure the pipeline, the fog and cloud layers apply consensus-based gradient filtering, in which candidate updates are compared across fog nodes and only updates consistent with the median or majority trend are forwarded. Sparse decoding is applied to suppress rare spikes and noise-amplified entries. To tolerate straggler devices or delayed gradients, each fog node maintains a bounded temporal buffer and a priority queue for late arrivals. Updates received within the TTL window are conditionally aggregated based on both their anomaly score and communication timestamp, balancing timeliness and robustness in asynchronous environments.
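Consensus-based filtering against the median trend can be sketched as follows. The specific rule (keep candidates whose $\ell_2$ distance to the coordinate-wise median is at most a factor times the median of all such distances) is a hypothetical choice made for illustration; the text does not fix the exact consensus criterion.

```python
import math
import statistics
from typing import Dict, Sequence


def consensus_filter(candidates: Dict[str, Sequence[float]],
                     factor: float = 2.0) -> Dict[str, Sequence[float]]:
    """Keep updates close to the coordinate-wise median of all candidate updates."""
    ids = list(candidates)
    # Coordinate-wise median across candidates: robust to a minority of outliers.
    med = [statistics.median(col) for col in zip(*(candidates[i] for i in ids))]
    dist = {i: math.dist(candidates[i], med) for i in ids}
    cutoff = factor * statistics.median(dist.values())
    return {i: candidates[i] for i in ids if dist[i] <= cutoff}
```

For three similar updates and one outlier `[10.0, -10.0]`, the coordinate-wise median stays near the benign cluster, so only the outlier is dropped.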

The federated training pipeline thus embodies secure multi-party learning under cryptographic transformation, ensuring confidentiality, robustness, and efficiency. Each component, including client encryption, fog validation, and cloud adversarial coordination, operates under mathematical guarantees that align with the goals set forth in the problem formulation and architectural design.

4.4. Security and Privacy Analysis

The SAFE-MED framework is designed to provide robust security and strong privacy guarantees against a wide range of adversarial threats in federated IoMT systems. Its design integrates adversarial neural cryptography, differential privacy, anomaly-aware validation, and decentralized trust modeling to collectively safeguard sensitive medical data during collaborative training. This section rigorously analyzes the framework’s defense mechanisms across various threat models, quantifies privacy risks, and evaluates the impact of adversarial behaviors on system reliability.

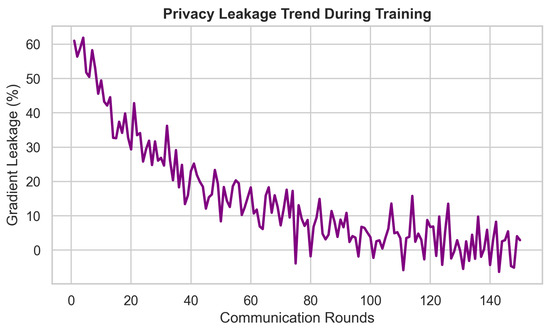

We begin by analyzing confidentiality preservation. Unlike conventional federated learning, where raw gradients are transmitted, SAFE-MED encrypts each local gradient $g_i^t$ using a neural encryptor $E_{\theta_E}$ that does not rely on static cryptographic keys. The encrypted gradient update $c_i^t$ is generated using randomly sampled noise $r_i^t$, and the encryptor is trained adversarially to resist reconstruction by an adversary network $A_{\theta_A}$. This encryption model is dynamically updated to evolve with potential threats, thereby offering adaptive defense against reconstruction attacks. To quantify resistance, we define the adversarial leakage risk as follows.

A low leakage risk indicates high resistance to model inversion and gradient leakage, both of which are mitigated by the joint minimax objective and the adversarial retraining loop.

In addition to confidentiality, the framework enforces differential privacy by injecting calibrated noise $n_i^t$ into the encrypted gradient before transmission. For each client update, the noise is sampled from a zero-mean Gaussian distribution with variance $\sigma^2$ chosen to satisfy $(\epsilon, \delta)$-differential privacy, where $\epsilon$ bounds the privacy loss, i.e., how much the output distribution can change when a single sample changes, and $\delta$ denotes the tolerated probability of violating this bound. The client-level privacy budget accumulates over rounds $t$ as

This formulation ensures that even if an attacker intercepts encrypted updates across multiple rounds, the ability to infer sensitive input data remains strictly bounded under a formal privacy guarantee.

SAFE-MED also addresses active adversaries, including clients that aim to poison the global model by submitting crafted or corrupted updates. The anomaly-aware fog validation mechanism computes a per-round anomaly score $s_i^t$ using reference gradient statistics and filters out updates that exhibit statistically implausible behavior. To evaluate detection robustness, we define the poisoning resilience rate as

$$\mathrm{PRR}_t = 1 - \frac{\lvert \mathcal{P}_t \cap \mathcal{V}_t \rvert}{\lvert \mathcal{P}_t \rvert},$$

where $\mathcal{P}_t$ is the set of poisoned updates and $\mathcal{V}_t$ the set of updates accepted for aggregation at round $t$. A high $\mathrm{PRR}_t$ reflects effective exclusion of adversarial gradients without degrading overall model convergence.
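Computing the poisoning resilience rate $1 - |\mathcal{P}_t \cap \mathcal{V}_t| / |\mathcal{P}_t|$ from sets of update identifiers is straightforward. Returning 1.0 when no update was poisoned is an illustrative convention adopted here (the ratio is otherwise undefined), and the function name is hypothetical.

```python
from typing import Hashable, Iterable


def poisoning_resilience_rate(poisoned: Iterable[Hashable],
                              accepted: Iterable[Hashable]) -> float:
    """PRR = 1 - |P ∩ V| / |P|: the fraction of poisoned updates kept out of aggregation."""
    p, v = set(poisoned), set(accepted)
    if not p:
        return 1.0  # convention: nothing to exclude
    return 1.0 - len(p & v) / len(p)
```

If four updates are poisoned and one of them slips into the accepted set, the rate is 0.75.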

To maintain integrity and availability in asynchronous settings, SAFE-MED employs delay-tolerant aggregation queues and trust-weighted model synchronization. Fog nodes track stale updates using a time-to-live (TTL) mechanism, discarding excessively delayed gradients to prevent replay attacks. Furthermore, each client’s influence on the cryptographic model parameters $\theta_E$ and $\theta_D$ is modulated by a dynamic trust score $T_i^t$, which penalizes anomalous, bursty, or unresponsive behavior. The trust-weighted synchronization rule is expressed as follows:

$$\theta_E^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t\, \theta_{E,i}^t, \qquad \theta_D^{t+1} = \sum_{i \in \mathcal{T}_t} T_i^t\, \theta_{D,i}^t.$$

This mechanism ensures that only consistently reliable clients have a significant influence on the evolution of the encryption and decryption networks, thereby reducing the risk of collusion or Sybil attacks within the cryptographic training process.

The neural encryption approach also protects against external eavesdropping attacks, as intercepted encrypted gradient updates lack semantic interpretability and cannot be decrypted without access to the synchronized parameters $(\theta_E, \theta_D)$ and the corresponding stochastic keys $r_i^t$. This approach eliminates the risks associated with key management infrastructures such as PKI, making the system suitable for resource-constrained and mobile IoMT deployments.

From a system-wide perspective, SAFE-MED achieves a fine-grained balance between security, privacy, and model utility. Each encrypted update maintains fidelity through the reconstruction loss $\mathcal{L}_{\mathrm{rec}}$ while maximizing adversarial uncertainty via $\mathcal{L}_{\mathrm{adv}}$. Additionally, compression ensures bandwidth efficiency without compromising the cryptographic integrity of updates. To formally express this trade-off, we define the effective utility metric as follows.

where the two weighting constants reflect system priorities. A high effective utility denotes secure, private, and communication-aware learning with minimal compromise on task performance.

SAFE-MED provides formal and empirical guarantees for privacy preservation, confidentiality, and adversarial robustness. Its neural cryptographic backbone, decentralized validation, and adaptive synchronization constitute a layered defense strategy that aligns with the stringent security requirements of medical cyber-physical systems. The security model is extensible to new threat vectors via retrainable adversarial modules and tunable trust mechanisms, ensuring long-term resilience in volatile IoMT environments.

4.5. Optimization Objective

The SAFE-MED framework employs an adversarial optimization paradigm to jointly learn privacy-preserving gradient encryption and robust federated aggregation in IoMT environments. This section details the problem formulation, objective function, solution methodology, and practical optimization steps undertaken to achieve secure and efficient learning.

4.5.1. Objective Formulation

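The training process of SAFE-MED is cast as a constrained minimax optimization problem involving three neural networks: the encryptor $E_{\theta_E}$, the decryptor $D_{\theta_D}$, and the adversary $A_{\theta_A}$. Each IoMT client computes a local gradient $g_i^t$ at communication round $t$, which is encrypted before transmission. The goal is to train $E_{\theta_E}$ and $D_{\theta_D}$ such that $D_{\theta_D}$ can accurately reconstruct the original gradient from the encrypted signal, while $A_{\theta_A}$ is unable to infer any sensitive information from the encrypted gradient update.

We define the optimization objective through the following expression:

$$\min_{\theta_E, \theta_D} \max_{\theta_A} \; \mathbb{E}_{i, t}\Big[ \mathcal{L}_{\mathrm{rec}}\big(g_i^t, D_{\theta_D}(E_{\theta_E}(g_i^t))\big) + \lambda\, \mathcal{L}_{\mathrm{adv}}\big(g_i^t, A_{\theta_A}(E_{\theta_E}(g_i^t))\big) \Big].$$

Here, $\mathcal{L}_{\mathrm{rec}}$ denotes the reconstruction loss between the original gradient and the output of the decryptor, while $\mathcal{L}_{\mathrm{adv}}$ represents the adversarial loss quantifying the adversary’s inference success. The hyperparameter $\lambda$ controls the trade-off between decryption fidelity and privacy.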

The optimization is subject to several practical constraints inherent to IoMT environments. First, encrypted gradients must be compressible under bandwidth limitations using a lossy compression function $\mathcal{C}(\cdot)$, such that the transmission cost satisfies $\lvert \mathcal{C}(c_i^t) \rvert \le B$, where $B$ denotes the allowable bandwidth per training round. Second, clients exhibiting anomalous behavior are excluded based on a dynamic anomaly score $s_i^t$, and an update is accepted only if $s_i^t \le \tau$, thereby preventing poisoned or erratic data from disrupting the optimization process. Third, given the heterogeneous computational capacity of edge devices, the encryptor must be lightweight enough to operate within hardware constraints: its computational load on device $i$, relative to the device’s CPU frequency $f_i$, must stay below a predefined utilization threshold. These constraints are enforced through selective client participation, model pruning, and bandwidth-aware scheduling in the SAFE-MED framework.

4.5.2. Optimization Methodology

The non-convex adversarial optimization problem in Equation (42) is solved using alternating stochastic gradient descent (SGD) with decoupled update steps. In each iteration, the adversary’s parameters $\theta_A$ are first updated to maximize $\mathcal{L}_{\mathrm{adv}}$, thereby enhancing its ability to infer sensitive information from encrypted gradients. Subsequently, the parameters $\theta_E$ and $\theta_D$ of the encryptor and decryptor are updated to minimize $\mathcal{L}_{\mathrm{rec}}$ while suppressing $\mathcal{L}_{\mathrm{adv}}$, ensuring that the learned encryption remains robust against adversarial inference.

To further regularize the adversary’s behavior and prevent overfitting, its objective is augmented with an entropy term that promotes output uncertainty:

$$\mathcal{L}_{\mathrm{adv}}^{\mathrm{reg}} = \mathcal{L}_{\mathrm{adv}} + \mu\, \mathcal{H}\big(A_{\theta_A}(c_i^t)\big).$$

Here, $\mathcal{H}(\cdot)$ denotes the entropy of the adversary’s prediction distribution, and $\mu$ is a tunable hyperparameter. This encourages uncertainty in the adversary’s outputs, increasing resilience to inference attacks. At each global round, a mini-batch of client gradients is selected and encrypted. The adversary and decryptor are trained using backpropagation, with the following loss functions applied to guide their updates.

where $\hat{g}_i^t = D_{\theta_D}(c_i^t)$ and $\tilde{g}_i^t = A_{\theta_A}(c_i^t)$. BCE denotes binary cross-entropy for classification-based adversaries.
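The entropy term in the regularized adversary objective $\mathcal{L}_{\mathrm{adv}} + \mu\,\mathcal{H}(\cdot)$ can be sketched as follows. The sketch assumes a classification-style adversary whose output is a discrete distribution; the names `entropy` and `regularized_adversary_objective` and the weight `mu` are illustrative.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete distribution given as non-negative weights."""
    total = sum(probs)
    if total <= 0:
        raise ValueError("probabilities must have positive mass")
    return -sum((p / total) * math.log(p / total) for p in probs if p > 0)


def regularized_adversary_objective(adv_success: float, probs: Sequence[float],
                                    mu: float) -> float:
    """Value the adversary maximizes: L_adv + mu * H(p); mu > 0 rewards less confident outputs."""
    return adv_success + mu * entropy(probs)
```

A uniform output over two classes contributes $\mu \ln 2$, whereas a fully confident (one-hot) output contributes nothing, so a positive $\mu$ discourages overconfident adversaries.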

While adversarial training enhances gradient obfuscation, it may not fully prevent information leakage under strong inference attacks. To further strengthen privacy guarantees, SAFE-MED incorporates calibrated differential privacy through noise injection at the encryption stage.

4.5.3. Differential Privacy Noise Calibration

To enhance privacy guarantees and mitigate gradient leakage, SAFE-MED applies calibrated Gaussian noise to the encrypted gradients prior to adversarial training. This mechanism follows the Gaussian mechanism of differential privacy, where noise is sampled from $\mathcal{N}(0, \sigma^2 I)$ and the noise variance $\sigma^2$ is tuned based on the desired privacy level.

Definition 1

(Gaussian Mechanism for Differential Privacy). Let $\Delta_2$ denote the $\ell_2$-sensitivity of the gradient-update function $f$, i.e.,

$$\Delta_2 = \max_{D, D'} \big\| f(D) - f(D') \big\|_2,$$

where $D$ and $D'$ are neighboring datasets differing in a single client’s data. The Gaussian mechanism adds zero-mean Gaussian noise with standard deviation σ to each coordinate of the clipped gradient update.

Theorem 1

(Privacy Guarantee). Under the Gaussian mechanism with $\epsilon \in (0, 1)$, the federated update process satisfies $(\epsilon, \delta)$-differential privacy if

$$\sigma \ge \frac{\Delta_2 \sqrt{2 \ln(1.25/\delta)}}{\epsilon}.$$

Here, ϵ controls the privacy–utility trade-off, while δ bounds the probability of privacy leakage. In practice, $\Delta_2$ is enforced via per-client gradient clipping before noise addition, ensuring bounded sensitivity. This formulation enables tunable noise calibration, allowing practitioners to balance accuracy and privacy guarantees by scaling σ according to the target $(\epsilon, \delta)$.
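Per-client clipping followed by Gaussian noise can be sketched as below. Two assumptions are made explicit: the sensitivity is taken to equal the clipping norm, which holds under add/remove-one adjacency (under replace-one adjacency it would be twice the clipping norm), and the classical bound is applied only for $0 < \epsilon < 1$. The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def clip_l2(g: Sequence[float], clip_norm: float) -> List[float]:
    """Scale g so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in g))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in g]


def privatize(g: Sequence[float], clip_norm: float, epsilon: float, delta: float,
              rng: random.Random) -> Tuple[List[float], float]:
    """Clip, then add N(0, sigma^2) per coordinate with sigma from the Gaussian-mechanism bound.

    Assumes Delta_2 = clip_norm (add/remove-one adjacency) and 0 < epsilon < 1.
    """
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("requires 0 < epsilon < 1 and 0 < delta < 1")
    clipped = clip_l2(g, clip_norm)
    sigma = clip_norm * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon
    return [x + rng.gauss(0.0, sigma) for x in clipped], sigma
```

Clipping `[3.0, 4.0]` to norm 1.0 gives approximately `[0.6, 0.8]`, while vectors already inside the ball are left unchanged; the returned σ depends only on the clipping norm and the target $(\epsilon, \delta)$, not on the data.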

Following noise calibration and adversarial obfuscation, the decrypted gradients are processed for global aggregation using a trust-weighted scheme.

4.5.4. Gradient Aggregation with Trust Weighting

Once decrypted, valid gradients are aggregated using a trust-weighted scheme to compute the global model update:

$$w^{t+1} = w^t - \eta \sum_{i \in \mathcal{V}_t} \alpha_i^t\, \hat{g}_i^t.$$

Here, $\eta$ is the learning rate and $\alpha_i^t$ are dynamic trust weights computed from a composite score combining anomaly detection, past accuracy, and participation frequency. This ensures that the global model is influenced predominantly by trustworthy devices. To accommodate asynchronous client participation, SAFE-MED integrates a time-to-live (TTL) mechanism at the fog and aggregator layers. Each update from client $i$ is tagged with a staleness counter $\Delta_i^t$, representing the number of rounds by which it is delayed relative to the current aggregation round $t$. If an update arrives within the allowable TTL window $\Delta_{\max}$, it is accepted for aggregation but down-weighted using a staleness-aware trust factor. Specifically, the aggregation weight is computed from the client’s trust score and local data contribution.

This ensures that fresher, timely updates exert more influence on the global model, while delayed updates contribute less to model evolution. Updates that exceed the TTL threshold are discarded to prevent outdated or misaligned gradients from affecting model performance. This strategy preserves optimization stability while enabling participation from intermittently available IoMT clients.
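A possible form of the TTL-filtered, staleness-aware weighting is sketched below. The exact weight formula is not reproduced in this section, so the product form (trust × local sample count × $\gamma^{\text{staleness}}$, normalized over surviving updates) is a hypothetical choice that is merely consistent with the description: fresher, more trusted, data-rich updates weigh more, and updates past the TTL window are discarded.

```python
from typing import Dict, Tuple


def staleness_weights(updates: Dict[str, Tuple[float, int, int]], ttl: int,
                      gamma: float = 0.5) -> Dict[str, float]:
    """updates maps client_id -> (trust, n_samples, staleness_in_rounds).

    Updates older than ttl are dropped; the rest get weight proportional to
    trust * n_samples * gamma**staleness, normalized to sum to 1.
    """
    raw = {cid: t * n * gamma ** s for cid, (t, n, s) in updates.items() if s <= ttl}
    total = sum(raw.values())
    return {cid: v / total for cid, v in raw.items()} if total > 0 else {}
```

With equal trust and data size, a one-round-stale update receives half the weight of a fresh one (1/3 versus 2/3), and an update five rounds old is dropped when the TTL is two rounds.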

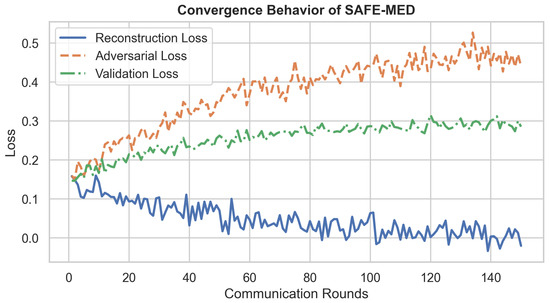

To ensure stable convergence, SAFE-MED employs a two-time-scale learning rate (slower adaptation for the encryptor), gradient clipping to prevent exploding updates, and entropy regularization in $\mathcal{L}_{\mathrm{adv}}$ to avoid overconfident adversaries. These techniques enable faster convergence than standard federated GANs, while jointly ensuring privacy, robustness, efficiency, and adaptability in heterogeneous IoMT environments. The following procedure outlines the training steps used to optimize the adversarial components of SAFE-MED under this joint objective.

4.6. Adversarial Optimization Procedure

To solve the minimax optimization problem introduced in Equation (3), we adopt an alternating gradient descent–ascent strategy that iteratively updates the parameters of the encryptor $E_{\theta_E}$, the decryptor $D_{\theta_D}$, and the adversary $A_{\theta_A}$. This approach follows standard adversarial training paradigms and drives the encryptor toward obfuscated representations that the decryptor can reconstruct but the adversary cannot exploit for inference. The optimization proceeds in a federated, privacy-preserving manner that respects the decentralized nature of the IoMT environment and the communication constraints of the network layers.

The procedure begins by initializing the encryptor, decryptor, and adversary parameters $\theta_E$, $\theta_D$, and $\theta_A$ with Xavier (Glorot) uniform initialization. At each round $t$, a subset $S_t$ of trustworthy clients is selected based on anomaly scores, historical participation, and bandwidth availability. Each client $i \in S_t$ computes its local gradient $g_i^t$ and encrypts it with the current encryptor to produce $c_i^t = E_{\theta_E}(g_i^t)$. The encrypted update is then transmitted to the cloud server via the fog layer, subject to validation and compression.

Upon receiving the encrypted updates, the cloud executes three sequential steps. In the first step, it updates the adversary parameters $\theta_A$ to maximize the adversarial loss $\mathcal{L}_{\mathrm{adv}}$, thereby training the adversary to infer private gradients from encrypted data. This maximization is performed by stochastic gradient ascent with learning rate $\eta_A$:

$$\theta_A \leftarrow \theta_A + \eta_A \nabla_{\theta_A} \mathcal{L}_{\mathrm{adv}}(\theta_E, \theta_A).$$

In the second step, the decryptor parameters $\theta_D$ are updated to minimize the reconstruction loss $\mathcal{L}_{\mathrm{rec}}$ via stochastic gradient descent with learning rate $\eta_D$. This ensures that the decrypted gradients approximate the original ones and preserves model fidelity:

$$\theta_D \leftarrow \theta_D - \eta_D \nabla_{\theta_D} \mathcal{L}_{\mathrm{rec}}(\theta_E, \theta_D).$$

In the third step, the encryptor parameters $\theta_E$ are updated to jointly minimize the reconstruction loss and maximize adversarial confusion, as defined by the objective in Equation (3). The gradient descent update for the encryptor incorporates a trade-off coefficient $\lambda$ to balance privacy and utility:

$$\theta_E \leftarrow \theta_E - \eta_E \nabla_{\theta_E} \left[ \mathcal{L}_{\mathrm{rec}}(\theta_E, \theta_D) + \lambda\, \mathcal{L}_{\mathrm{adv}}(\theta_E, \theta_A) \right].$$
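To make the order and the signs of the three updates concrete, the sketch below runs them on a deliberately tiny scalar toy problem. Everything about the toy is an assumption for illustration: the encryptor multiplies a gradient `g` by a parameter `e`, the decryptor and the adversary multiply the ciphertext by `d` and `a`, the reconstruction loss is the squared decryption error, and the adversarial loss is the adversary's negative squared error (so the adversary maximizes it). Because the toy is linear, the adversary could in principle invert the encryption as easily as the decryptor, so this shows only the mechanics of alternating descent–ascent, not SAFE-MED's privacy guarantee. Clipping and a smaller encryptor step size mirror the stabilization measures mentioned earlier.

```python
from typing import Tuple


def clip(x: float, bound: float) -> float:
    """Clip a scalar gradient to [-bound, bound] to prevent exploding updates."""
    return max(-bound, min(bound, x))


def losses(e: float, d: float, a: float, g: float) -> Tuple[float, float]:
    """Toy losses: L_rec = (d*e*g - g)^2 and L_adv = -(a*e*g - g)^2."""
    l_rec = (d * e * g - g) ** 2
    l_adv = -((a * e * g - g) ** 2)
    return l_rec, l_adv


def alternating_step(e: float, d: float, a: float, g: float,
                     lr_e: float = 0.01, lr_d: float = 0.05, lr_a: float = 0.05,
                     lam: float = 0.5, bound: float = 1.0) -> Tuple[float, float, float]:
    """One round of the three sequential updates: adversary, decryptor, encryptor."""
    # Step 1: gradient ascent on L_adv with respect to the adversary parameter a.
    grad_a = -2.0 * (a * e * g - g) * e * g
    a = a + lr_a * clip(grad_a, bound)
    # Step 2: gradient descent on L_rec with respect to the decryptor parameter d.
    grad_d = 2.0 * (d * e * g - g) * e * g
    d = d - lr_d * clip(grad_d, bound)
    # Step 3: gradient descent on L_rec + lam * L_adv with respect to e, using the
    # freshly updated d and a; the smaller lr_e plays the role of the slow time scale.
    grad_e = 2.0 * (d * e * g - g) * d * g - lam * 2.0 * (a * e * g - g) * a * g
    e = e - lr_e * clip(grad_e, bound)
    return e, d, a
```

Each call advances the adversary, then the decryptor, then the encryptor, matching the sequence described in the text.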

This cycle is repeated for a fixed number of adversarial iterations or until a convergence threshold is reached, where convergence is defined by the stabilization of the validation loss and the adversarial success rate across multiple rounds. Because the objective is non-convex, the optimization uses adaptive optimizers such as Adam or RMSProp, whose per-parameter step scaling (and, for Adam, momentum) helps it move past saddle points and shallow local minima.
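The stabilization criterion above can be expressed as a simple plateau test. This is a sketch under assumptions: the window length, the tolerance, and the "max minus min within the window" definition of stability are illustrative choices, not values given in the text.

```python
from typing import Sequence


def has_converged(val_losses: Sequence[float], adv_success: Sequence[float],
                  window: int = 5, tol: float = 1e-3) -> bool:
    """Return True when both histories have plateaued over the last `window` rounds.

    A history counts as stable when max - min over its last `window` entries is
    at most `tol` (both defaults are illustrative).
    """
    if len(val_losses) < window or len(adv_success) < window:
        return False

    def stable(history: Sequence[float]) -> bool:
        recent = history[-window:]
        return max(recent) - min(recent) <= tol

    return stable(val_losses) and stable(adv_success)
```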

To ensure fairness and reduce client-side computation, model synchronization is conducted periodically using a secure federated averaging protocol. Let $\theta_{E,i}$ and $\theta_{D,i}$ denote the local copies of the encryptor and decryptor parameters at client $i$. The global parameters are updated via trust-weighted aggregation:

$$\theta_E \leftarrow \sum_{i \in S_t} \alpha_i^{t}\, \theta_{E,i}, \qquad \theta_D \leftarrow \sum_{i \in S_t} \alpha_i^{t}\, \theta_{D,i}, \qquad \sum_{i \in S_t} \alpha_i^{t} = 1.$$

The weights $\alpha_i^{t}$ are computed dynamically based on each client's anomaly score history, training consistency, and bandwidth contribution. This adaptive weighting mechanism ensures that clients behaving reliably and preserving data privacy are given greater influence in shaping the cryptographic model.
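A composite trust weight and the resulting parameter average might look like the following. The text names the three signals but not how they are combined, so the linear combination, its coefficients `(0.5, 0.3, 0.2)`, and the convention that anomaly scores lie in [0, 1] with higher meaning more anomalous are all assumptions made for this sketch; `trust_weights` and `average_params` are hypothetical names.

```python
from typing import Dict, List, Sequence, Tuple


def trust_weights(anomaly_history: Dict[str, Sequence[float]],
                  consistency: Dict[str, float],
                  bandwidth_share: Dict[str, float],
                  coeffs: Tuple[float, float, float] = (0.5, 0.3, 0.2)) -> Dict[str, float]:
    """Combine three behavioural signals (each in [0, 1]) into normalized trust weights."""
    c_anom, c_cons, c_bw = coeffs
    raw: Dict[str, float] = {}
    for cid, scores in anomaly_history.items():
        mean_anomaly = sum(scores) / len(scores) if scores else 0.0
        raw[cid] = (c_anom * (1.0 - mean_anomaly)   # low anomaly -> more trust
                    + c_cons * consistency[cid]     # consistent training -> more trust
                    + c_bw * bandwidth_share[cid])  # bandwidth contribution
    total = sum(raw.values())
    if total <= 0:
        # Degenerate case: fall back to uniform weights.
        return {cid: 1.0 / len(raw) for cid in raw}
    return {cid: r / total for cid, r in raw.items()}


def average_params(local_params: Dict[str, List[float]],
                   weights: Dict[str, float]) -> List[float]:
    """Trust-weighted average of flattened encryptor (or decryptor) parameters."""
    dim = len(next(iter(local_params.values())))
    avg = [0.0] * dim
    for cid, params in local_params.items():
        for j, p in enumerate(params):
            avg[j] += weights[cid] * p
    return avg
```

Because the weights are normalized, the same routine applies unchanged to the encryptor and decryptor parameter vectors.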

To enhance robustness, differential privacy noise is injected into the encrypted gradients before adversarial training to prevent overfitting to private information. The framework also monitors the adversarial success rate as an empirical privacy metric, defined as the accuracy with which the adversary recovers sensitive gradient components. A decline in adversarial accuracy over epochs indicates improving encryption strength.
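The adversarial success rate can be computed per round and tracked across epochs. The text defines it as the accuracy with which the adversary recovers sensitive gradient components but does not say when a component counts as recovered, so the absolute tolerance `tol` below is an assumption of this sketch.

```python
from typing import Sequence


def adversarial_success_rate(true_grad: Sequence[float],
                             adv_estimate: Sequence[float],
                             tol: float = 0.05) -> float:
    """Fraction of gradient components the adversary recovers to within `tol`.

    The tolerance-based notion of "recovered" is an illustrative assumption.
    """
    if len(true_grad) != len(adv_estimate):
        raise ValueError("gradient and estimate must have the same length")
    if not true_grad:
        return 0.0
    hits = sum(1 for g, a in zip(true_grad, adv_estimate) if abs(g - a) <= tol)
    return hits / len(true_grad)
```

A falling sequence of these values over epochs corresponds to the improving encryption strength described above.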

This optimization procedure balances fidelity and privacy through alternating adversarial updates, trust-weighted aggregation, and privacy-enhancing noise injection. It is computationally efficient, scalable to large client populations, and robust to real-world threats such as gradient leakage, model inversion, and poisoning attacks, and the resulting model parameters yield an encrypted federated learning pipeline suitable for critical medical applications. Algorithm 1 presents the full procedure for adversarial optimization and trust-aware aggregation in SAFE-MED. Its theoretical foundation is summarized in Theorems 2 and 3, which formalize, respectively, the confidentiality guarantee of the encryption scheme and the convergence of the learning process under bounded distortion. Detailed proofs are provided in Appendix A.

Algorithm 1.

Adversarial optimization in SAFE-MED.

Theorem 2

(Confidentiality Guarantee). Let $g_i^t$ denote the local gradient of client $i$ at round $t$, and let $c_i^t = E_{\theta_E}(g_i^t)$ be the corresponding encrypted update produced by the SAFE-MED encryptor. Assume that the adversary $A_{\theta_A}$ is bounded by polynomial-time computation and is trained adversarially against the encryptor–decryptor pair. Then, at equilibrium of Algorithm 1, the adversary's expected reconstruction error satisfies

$$\mathbb{E}\left[ \left\| A_{\theta_A}(c_i^t) - g_i^t \right\|_2^2 \right] \ge \delta$$

for some non-trivial constant $\delta > 0$ that depends on the capacity of $A_{\theta_A}$ and the encryption complexity. In particular, the adversary's success probability in recovering $g_i^t$ is no better than random guessing up to a negligible advantage.

Theorem 3

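(Convergence Under Encryption). Consider the federated optimization problem

$$\min_{w} F(w) = \sum_{i=1}^{N} p_i F_i(w),$$

where $N$ is the number of clients, $F_i$ is the local objective of client $i$, and $p_i \ge 0$ are sampling weights with $\sum_{i=1}^{N} p_i = 1$. Let $g_i^t$ denote the local stochastic gradients and $c_i^t = E_{\theta_E}(g_i^t)$ their encrypted form. Suppose the decryptor satisfies

$$\mathbb{E}\left[ \left\| D_{\theta_D}(c_i^t) - g_i^t \right\|_2^2 \right] \le \epsilon^2$$

for some bounded distortion $\epsilon \ge 0$. Further assume that $F$ is $L$-smooth and that the stochastic gradients have bounded variance. Then the global model update in Algorithm 1 converges to a stationary point of $F$ with the same asymptotic rate as standard FedAvg, up to an additive error term, governed by $\epsilon$, that is introduced by the encryption–decryption process.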

In addition to confidentiality and convergence, SAFE-MED must remain computationally and communicationally feasible for large-scale IoMT deployments. To this end, Theorem 4 analyzes the computational and communication complexity of one training round and formally bounds its per-round cost, complementing the confidentiality and convergence results established earlier.

Theorem 4

(Complexity Bound). Let $d$ denote the model gradient dimension, $m$ the number of participating clients in round $t$, and $n_i$ the number of local samples at client $i$. Then one round of SAFE-MED adversarial optimization (Algorithm 1) has

- Local computation cost: $O(n_i d)$ per client, dominated by gradient evaluation.

- Encryption/decryption cost: $O(d)$ per update, due to the linear complexity of the neural modules $E_{\theta_E}$ and $D_{\theta_D}$.

- Adversary update cost: $O(d)$ per gradient.

- Communication cost: $O(d)$ per client, reducible to $O(\rho d)$ under compression ratio $\rho \in (0, 1]$.

- Aggregation cost: $O(md)$ at the server for weighted averaging.

Therefore, the total complexity per round is $O(m \bar{n} d)$ in computation, where $\bar{n}$ is the average number of local samples per participating client, and $O(m \rho d)$ in communication. This is asymptotically linear in the gradient dimension $d$ and the number of selected clients $m$, a significant improvement over homomorphic encryption-based schemes, which often incur $O(d^2)$ or worse.

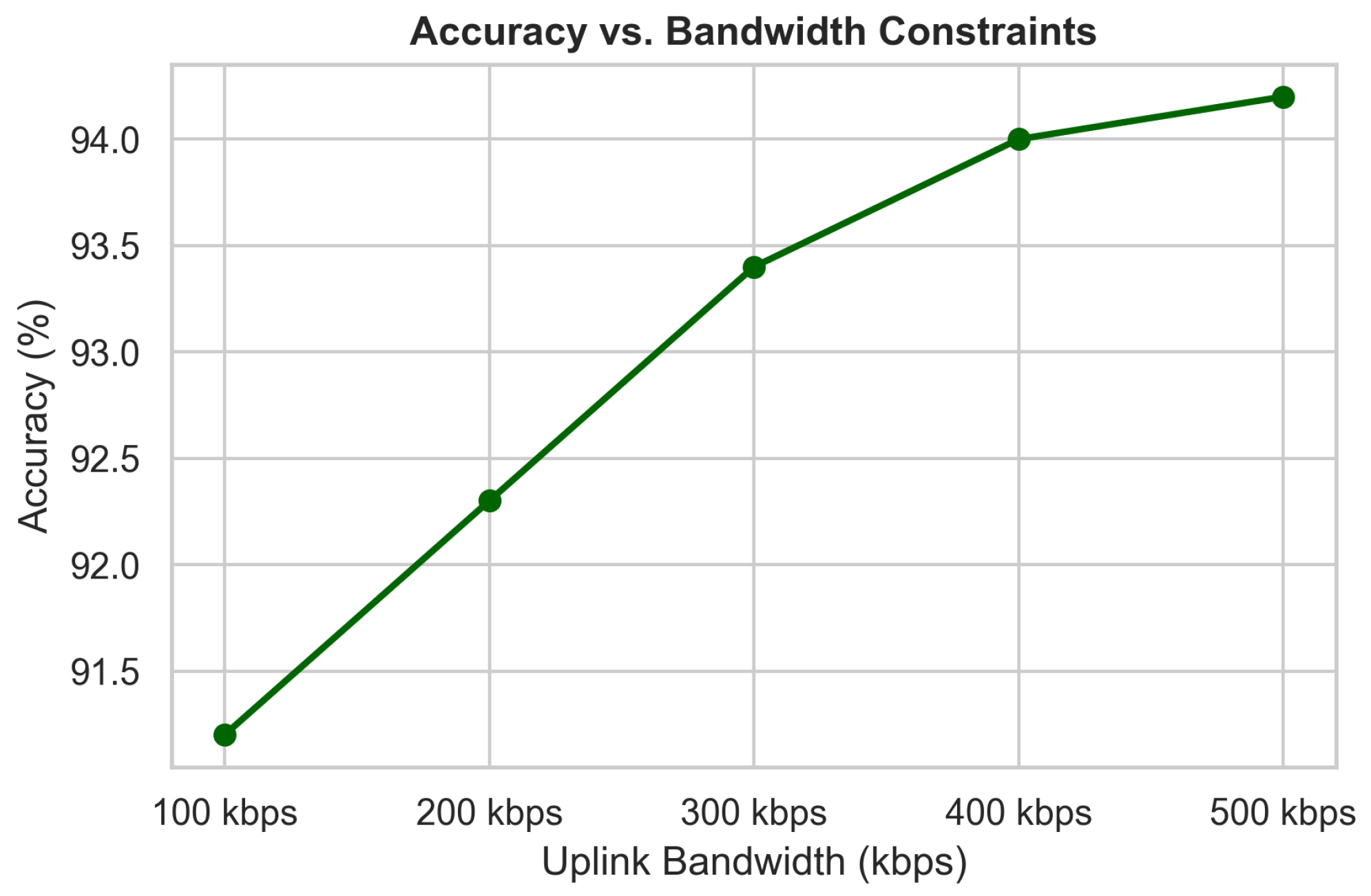

SAFE-MED's complexity scales linearly with the gradient dimension $d$, making its $O(d)$ encryption and decryption overhead negligible compared with local training, which costs $O(n_i d)$. With a per-client communication cost of $O(\rho d)$, it remains practical under the 100–500 kbps uplink bandwidth typical of IoMT devices. Unlike homomorphic encryption or MPC, which incur quadratic costs and large ciphertext expansion, SAFE-MED's linear-time design ensures efficiency on low-power processors while preserving security.
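A back-of-the-envelope check of the bandwidth claim is straightforward. The values below are assumptions for illustration only: a hypothetical gradient dimension, 32-bit floats, a compression ratio interpreted as the fraction of the payload kept, an encrypted update assumed to have the same length as the gradient, and no protocol overhead.

```python
def upload_seconds(d: int, rho: float, bandwidth_kbps: float,
                   bits_per_value: int = 32) -> float:
    """Seconds to upload one compressed update of d values.

    rho is the fraction of the payload kept after compression (assumed meaning);
    1 kbps is taken as 1000 bits per second; protocol overhead is ignored.
    """
    payload_bits = d * bits_per_value * rho
    return payload_bits / (bandwidth_kbps * 1000.0)
```

With a hypothetical `d = 10_000` and `rho = 0.25`, the payload is 80,000 bits, which takes 0.8 s at 100 kbps and 0.16 s at 500 kbps. Because the cost is linear in `d`, doubling the model size doubles the upload time rather than squaring it.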

4.7. Solution Strategy for Minimax Objectives

The minimax formulations in Equations (6), (16), and (21) are optimized using an alternating stochastic gradient descent (SGD) procedure. Specifically, in each communication round, the adversary network is updated to maximize the adversarial loss, while the encryptor and decryptor are updated to minimize the reconstruction loss while penalizing adversarial success. This procedure follows the standard adversarial training paradigm and converges empirically to a Nash equilibrium where the adversary cannot improve beyond random guessing.

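To ensure consistency of parameters across the distributed IoMT environment, updated encryptor and decryptor weights are synchronized at the cloud aggregator using trust-weighted federated averaging:

$$\theta_E \leftarrow \sum_{i \in S_t} \alpha_i^{t}\, \theta_{E,i}, \qquad \theta_D \leftarrow \sum_{i \in S_t} \alpha_i^{t}\, \theta_{D,i},$$

where $S_t$ is the selected client set, $\theta_{E,i}$ and $\theta_{D,i}$ are the local encryptor and decryptor parameters of client $i$, and $\alpha_i^{t}$ are trust weights. This keeps the global encryption–decryption pipeline synchronized despite client heterogeneity and partial participation.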

To evaluate the practical feasibility of SAFE-MED in real-world deployments, we analyze its computational and communication complexity across the client, fog, and cloud layers. Let $m$ denote the number of participating clients per round, $d$ the dimensionality of the gradient vector, $n_i$ the number of local samples at client $i$, and $\rho$ the compression ratio applied prior to transmission.

SAFE-MED maintains low overhead, with client-side complexity $O(n_i d)$, adversary training $O(d)$ per update, communication $O(\rho d)$ per client, and aggregation $O(md)$, all significantly lighter than in homomorphic encryption systems. To stabilize its non-convex adversarial training, it employs two-time-scale learning rates, entropy regularization, and gradient clipping, enabling convergence within 100 global rounds. These design choices ensure efficiency, robustness, and privacy preservation under diverse IoMT threat and deployment conditions.

5. Experimental Evaluation

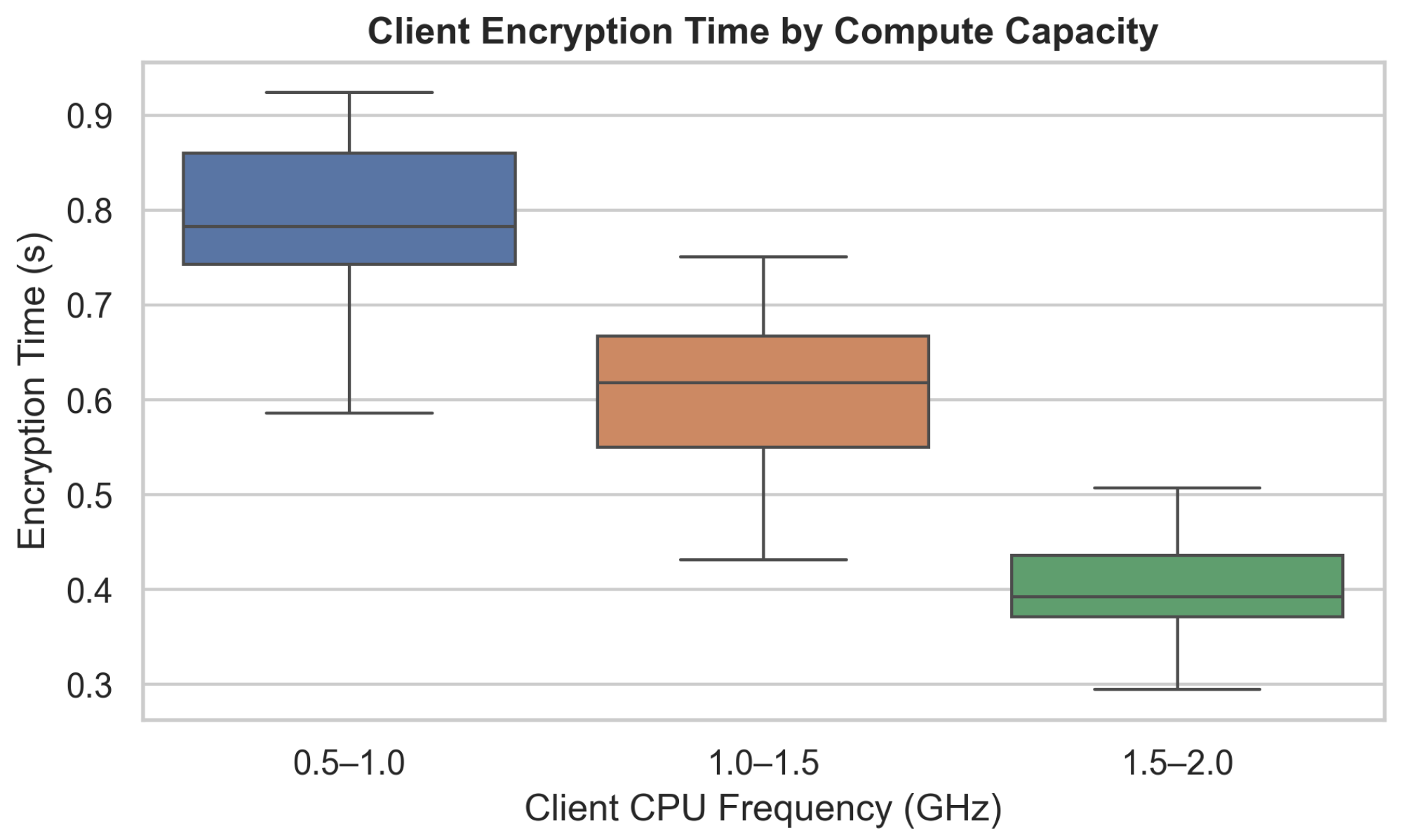

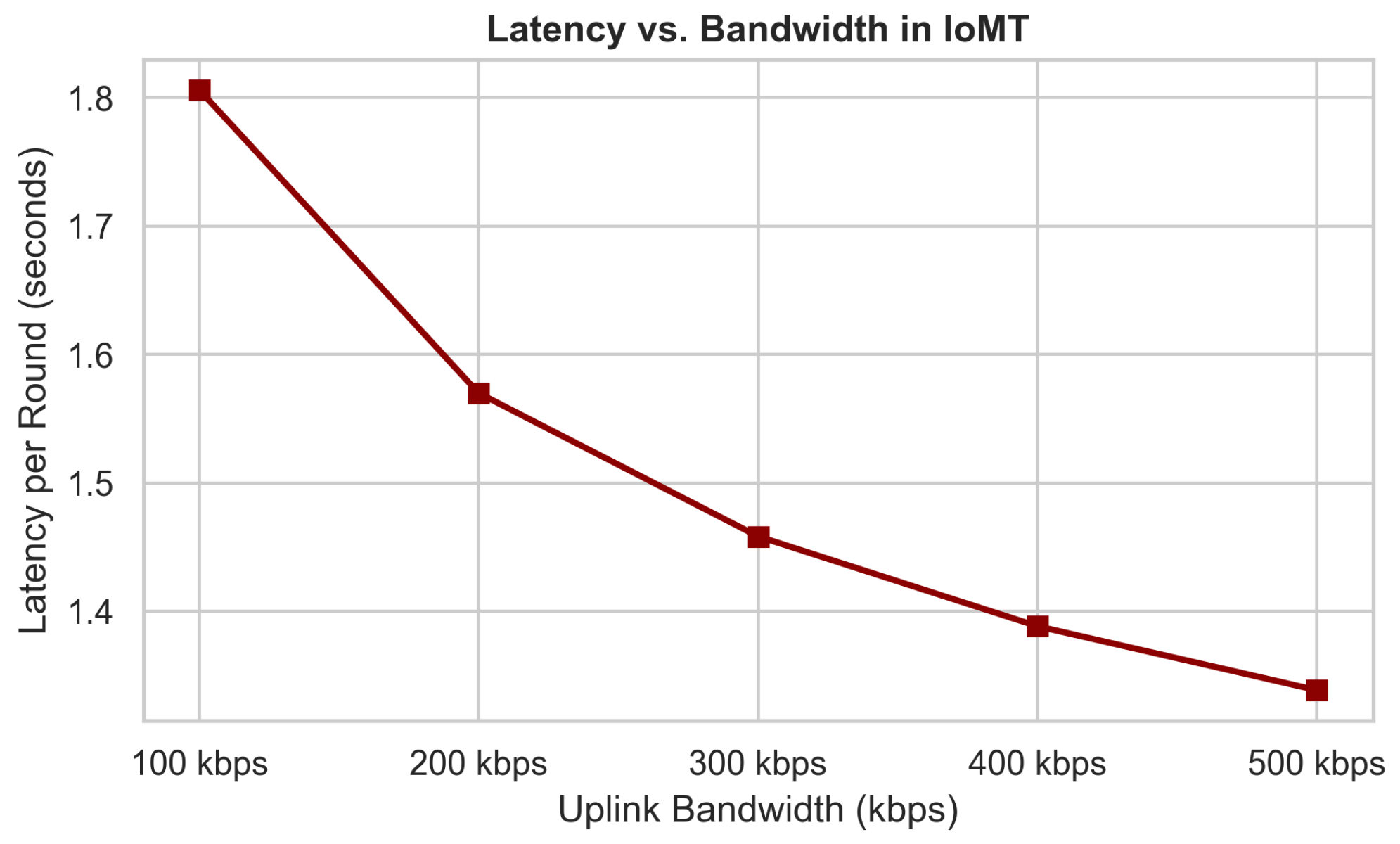

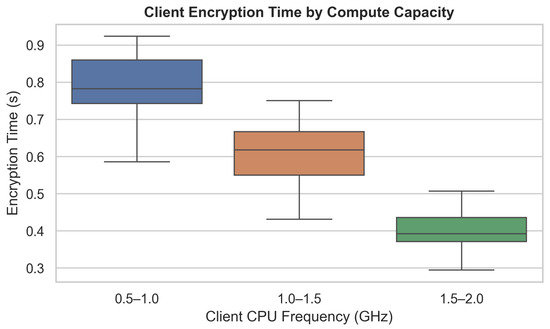

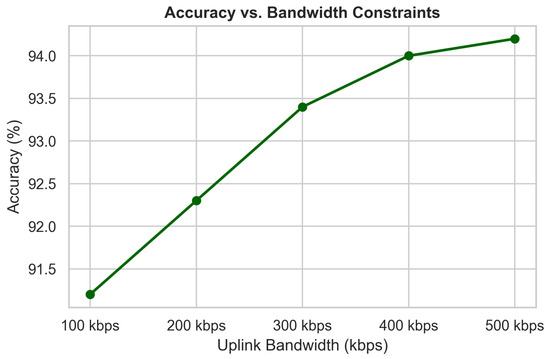

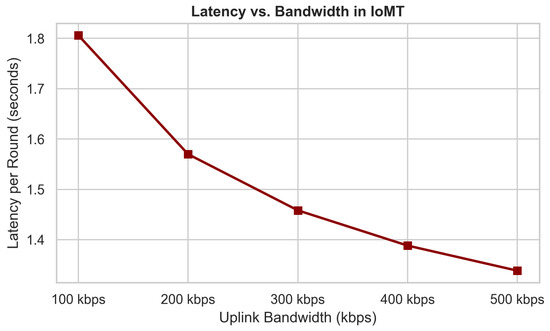

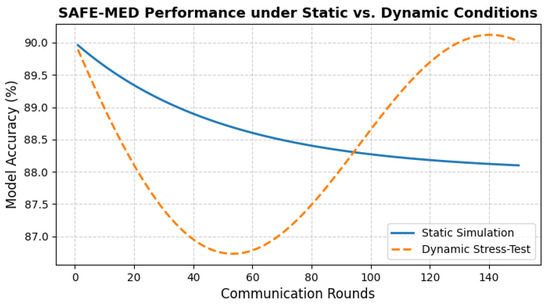

To evaluate the performance, scalability, and privacy-preserving capabilities of SAFE-MED, we conduct experiments under realistic IoMT conditions. The evaluation covers accuracy, privacy leakage, adversarial resilience, communication efficiency, and computational overhead. The framework is implemented in PyTorch (v2.8.0) and TensorFlow Federated (v0.87.0), with adversarial routines integrated via PySyft (v0.9.5), and executed on a workstation with an AMD Ryzen 7950X CPU, 128 GB RAM, and four NVIDIA RTX A6000 GPUs. We simulate 100 heterogeneous IoMT clients with processing frequencies ranging from 0.5 to 2.0 GHz and uplink bandwidths ranging from 100 to 500 kbps, connected to 10 fog nodes emulated as resource-constrained Docker containers (256 MB memory, limited CPU). Fog nodes forward encrypted updates to a multi-threaded cloud aggregator. Asynchronous communication and delays are modeled using event-driven queues. Fixed random seeds ensure deterministic and reproducible experiments.
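The event-driven delay model can be illustrated with a priority queue keyed by arrival time. This is a sketch under assumptions: each client's delay is taken as compute time plus transmission time, the per-update workload `cycles_per_update` is a hypothetical constant, and only the frequency and bandwidth ranges come from the testbed description.

```python
import heapq
import random
from typing import List, Tuple


def simulate_arrivals(num_clients: int, payload_bits: float,
                      seed: int = 0) -> List[Tuple[float, int]]:
    """Return (arrival_time_s, client_id) pairs in the order the aggregator receives them.

    Each client draws a CPU frequency in [0.5, 2.0] GHz and an uplink bandwidth in
    [100, 500] kbps; the compute workload in cycles is a hypothetical constant.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    cycles_per_update = 1e9    # hypothetical local-training workload
    events: List[Tuple[float, int]] = []
    for cid in range(num_clients):
        freq_hz = rng.uniform(0.5e9, 2.0e9)
        bandwidth_bps = rng.uniform(100e3, 500e3)
        arrival = cycles_per_update / freq_hz + payload_bits / bandwidth_bps
        heapq.heappush(events, (arrival, cid))
    # Pop events in time order, as an event-driven aggregator would process them.
    return [heapq.heappop(events) for _ in range(len(events))]
```

Arrival times produced this way can be compared with round deadlines to derive the staleness counters used by the TTL mechanism.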

The testbed uses a three-tier hierarchical topology with 100 IoMT clients organized into 10 fog clusters, each connected to a central cloud server. Patient devices send their encrypted local updates to nearby fog nodes for intermediate processing and secure aggregation, and the fog nodes forward the encrypted updates to the cloud. This setup reflects real-world healthcare deployments, supporting decentralized learning while minimizing communication latency and preserving patient privacy.

SAFE-MED is evaluated on three publicly available healthcare datasets representing diverse diagnostic modalities. The Cleveland Heart Disease dataset [56] contains 303 patient records with 14 attributes (13 clinical features and a diagnosis label) for binary heart disease classification, making it suitable for edge-based clinical decision support. The MIT-BIH Arrhythmia dataset [57] includes over 100,000 annotated ECG segments drawn from 48 half-hour recordings of 47 subjects for multi-class arrhythmia classification, presenting a challenging time-series scenario with class imbalance. The PhysioNet Respiratory Database [58] provides biosignal time series from plethysmography and airflow sensors for respiratory pattern classification in continuous monitoring. Together, these datasets cover varied data types, task complexities, and medical conditions, enabling a comprehensive assessment of SAFE-MED under realistic and heterogeneous IoMT workloads.

Datasets are partitioned non-IID using Dirichlet sampling with concentration parameter $\beta$ to capture realistic patient heterogeneity. All features are normalized before training. For time-series data (e.g., ECG and respiratory signals), sequences are truncated or zero-padded into fixed-length windows, and models are built with lightweight 1D CNNs (three convolutional layers with ReLU activations and a fully connected head). For the tabular Cleveland dataset, we use a three-layer MLP with ReLU activations for binary classification. These architectures balance efficiency and representational capacity, enabling deployment on resource-constrained IoMT devices.
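Dirichlet-based label partitioning is a standard way to create non-IID client splits, and the sketch below shows one common variant: for each class, client proportions are drawn from a Dirichlet distribution (sampled here as normalized Gamma draws) and the shuffled class indices are cut accordingly. The concentration value used in the experiments is not stated in this section, so the `beta` argument and the function name `dirichlet_partition` are illustrative; smaller `beta` yields more skewed per-client label distributions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence


def dirichlet_partition(labels: Sequence[int], num_clients: int, beta: float,
                        seed: int = 0) -> Dict[int, List[int]]:
    """Split sample indices across clients with per-class proportions ~ Dir(beta)."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    parts: Dict[int, List[int]] = {c: [] for c in range(num_clients)}
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # Dirichlet sample via normalized Gamma(beta, 1) draws.
        draws = [rng.gammavariate(beta, 1.0) for _ in range(num_clients)]
        total = sum(draws)
        props = [x / total for x in draws] if total > 0 else [1.0 / num_clients] * num_clients
        # Turn cumulative proportions into cut points over this class's indices.
        cuts, acc = [], 0.0
        for p in props[:-1]:
            acc += p
            cuts.append(int(round(acc * len(idxs))))
        bounds = [0] + cuts + [len(idxs)]
        for c in range(num_clients):
            parts[c].extend(idxs[bounds[c]:bounds[c + 1]])
    return parts
```

Every sample index is assigned to exactly one client, so the union of all client shards reproduces the original dataset.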

5.1. Simulation Parameters and Training Settings

To emulate a realistic federated IoMT environment, we simulate a three-tier architecture consisting of 100 heterogeneous client devices, 10 fog nodes, and a centralized cloud aggregator. Each fog node manages a cluster of 10 clients, with device-specific compute frequencies and bandwidths sampled from predefined distributions to reflect deployment variability. At each global communication round, 10% of clients are randomly selected to participate, capturing the effects of intermittent connectivity and limited availability typically observed in medical IoT scenarios. To stabilize convergence under sparse participation, the server applies an adaptive learning rate schedule that scales updates based on client availability. Combined with fog-level clustering and trust-weighted aggregation, this mechanism prevents significant parameter drift even when client updates are highly heterogeneous or biased.
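The client-to-fog layout and per-round sampling described above can be sketched as follows. The contiguous block assignment of clients to fog nodes is an assumption (the text states only that each fog node manages 10 clients), and the function names are hypothetical.

```python
import random
from typing import Dict, List, Optional


def build_fog_clusters(num_clients: int = 100, num_fogs: int = 10) -> Dict[int, List[int]]:
    """Assign clients to fog nodes in contiguous blocks (assumes even divisibility)."""
    if num_clients % num_fogs != 0:
        raise ValueError("num_clients must be divisible by num_fogs in this sketch")
    per_fog = num_clients // num_fogs
    return {f: list(range(f * per_fog, (f + 1) * per_fog)) for f in range(num_fogs)}


def sample_round(num_clients: int = 100, fraction: float = 0.1,
                 rng: Optional[random.Random] = None) -> List[int]:
    """Uniformly sample a fraction of clients to participate in one global round."""
    rng = rng or random.Random()
    k = max(1, int(round(fraction * num_clients)))
    return sorted(rng.sample(range(num_clients), k))
```

With the defaults, each round selects 10 of the 100 clients, and a selected client `c` reports to fog node `c // 10`.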

Each client performs local training for two epochs with a batch size of 16. Task-specific models are used: a three-layer multilayer perceptron (MLP) for tabular datasets and gradient-based tasks, and a lightweight 1D CNN for time-series inputs (e.g., ECG and respiration). The federated encryption pipeline consists of a three-layer MLP encryptor and decryptor (128 hidden units, LeakyReLU activations), trained jointly with an adversary modeled as a symmetric MLP with a sigmoid output. Training employs the Adam optimizer with separately tuned learning rates for the encryptor, the decryptor, and the adversary, with a lower rate for the adversary, to ensure adversarial stability. Gradient clipping with norm threshold $C$ is applied before encryption, and differential privacy is provided via Gaussian noise with standard deviation $\sigma$. To emulate IoMT bandwidth constraints, encrypted gradients are further compressed using a fixed ratio $\rho$. Training proceeds for 150 global communication rounds, and model performance is validated on a held-out test set every fifth round to track convergence and generalization. Each experiment is repeated five times with different random seeds, and reported results are averaged across runs to ensure statistical reliability. A comprehensive summary of the system parameters, model hyperparameters, and client-specific settings is provided in Table 3.
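The client-side preprocessing order described above (clip, encrypt, add Gaussian noise, compress) can be sketched as a single function. Several parts are assumptions: the identity default stands in for the neural encryptor, top-k magnitude sparsification is a swapped-in compression method (the text only says "a fixed ratio"), and no calibration of `sigma` to a formal privacy budget is shown. `prepare_update` is a hypothetical name.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple


def prepare_update(grad: Sequence[float], clip_norm: float, sigma: float, rho: float,
                   encrypt: Callable[[List[float]], List[float]] = lambda v: v,
                   rng: Optional[random.Random] = None) -> List[Tuple[int, float]]:
    """Clip, encrypt, add Gaussian noise, then keep the top rho-fraction of entries."""
    rng = rng or random.Random(0)  # deterministic default for reproducibility
    # 1) L2 clipping to norm clip_norm, applied before encryption.
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [g * scale for g in grad]
    # 2) Encryption; the identity default is a placeholder for the neural encryptor.
    enc = encrypt(clipped)
    # 3) Gaussian noise with standard deviation sigma on the encrypted update.
    noisy = [x + rng.gauss(0.0, sigma) for x in enc]
    # 4) Top-k sparsification: send (index, value) pairs for the k largest magnitudes.
    k = max(1, int(rho * len(noisy)))
    top = sorted(range(len(noisy)), key=lambda j: abs(noisy[j]), reverse=True)[:k]
    return [(j, noisy[j]) for j in sorted(top)]
```

Sending index and value pairs keeps the payload proportional to `rho * d`, in line with the linear communication cost discussed in Section 4.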

Table 3.

Simulation parameters and system settings.

5.2. Deployment Benchmarking on IoMT-Grade Hardware

In addition to large-scale simulations, we validate the practical feasibility of SAFE-MED on resource-constrained embedded devices representative of real-world IoMT nodes. Specifically, we emulate SAFE-MED components on an ARM Cortex-M4 MCU (80 MHz clock, 64 KB SRAM, 256 KB Flash), a widely deployed platform in wearable and bedside medical devices.