Two-Stage Mining of Linkage Risk for Data Release

Abstract

1. Introduction

- Main Research Question: How can the latent linkage risk of releasing multiple heterogeneous datasets be measured systematically and quantitatively, in a way that is both scalable and robust to real-world data inconsistencies?

- RQ1: Integration of Risk Levels: How can a unified framework effectively integrate both inter-dataset (global) structural correlations and intra-dataset (local) record-level similarities to produce a comprehensive risk score?

- RQ2: Semantic Heterogeneity: How can the framework automatically account for semantic drift in attribute schemas (e.g., ‘salary’ vs. ‘income’, ‘birthdate’ vs. ‘age’) without relying on manual pre-processing?

- RQ3: Performance and Efficiency: Does a hierarchical, two-stage approach offer superior accuracy and computational efficiency in detecting linkage risks compared to traditional, single-stage matching methodologies?

- We propose a unified two-stage linkability detection framework that systematically quantifies linkage risks at both global (inter-dataset) and local (intra-dataset) levels, enabling comprehensive measurement of linkage vulnerabilities across heterogeneous data releases.

- We introduce a novel linkability risk score computation method that fuses global attribute distribution similarities with local record-level overlaps, providing interpretable and fine-grained metrics that reflect both structural and value-based privacy exposures.

- We develop a generalizable dataset construction strategy tailored for linkage risk assessment, which facilitates robust benchmarking and supports diverse linkage scenarios beyond traditional static settings.

- We design an unsupervised clustering algorithm that jointly adapts to attribute schemas and record values, eliminating the need for ground-truth labels and scaling better than prior approaches in dynamic and heterogeneous data environments.

2. Related Work

2.1. Privacy Attacks and Linkage Attacks

2.2. Defence Mechanisms Against Linkage Attacks

- Syntactic anonymization methods, such as k-anonymity [27], l-diversity [28], and t-closeness [29], which generalize or suppress quasi-identifiers to limit record uniqueness. While effective against simple re-identification, these methods often fail to address attribute disclosure and are vulnerable to adversaries with background knowledge [20,30];

- Probabilistic models, most notably differential privacy [31], which inject calibrated noise into query results or data releases, providing strong theoretical guarantees against individual re-identification. However, these models may significantly reduce data utility and lack fine-grained interpretability for specific linkage risks, making it difficult for practitioners to assess concrete threats in real-world scenarios [32];

- Data perturbation and synthetic data generation techniques [33,34], which transform or generate new datasets to mask original records. Despite their promise, recent studies have shown that synthetic data can still leak sensitive information through distributional similarities or model inversion attacks [35,36]. In particular, publishing synthetic data does not fundamentally resolve the risk of linkage, as adversaries may exploit residual correlations between synthetic and real data.

2.3. Privacy Linkability Detection Methods

2.4. Positioning of Our Work

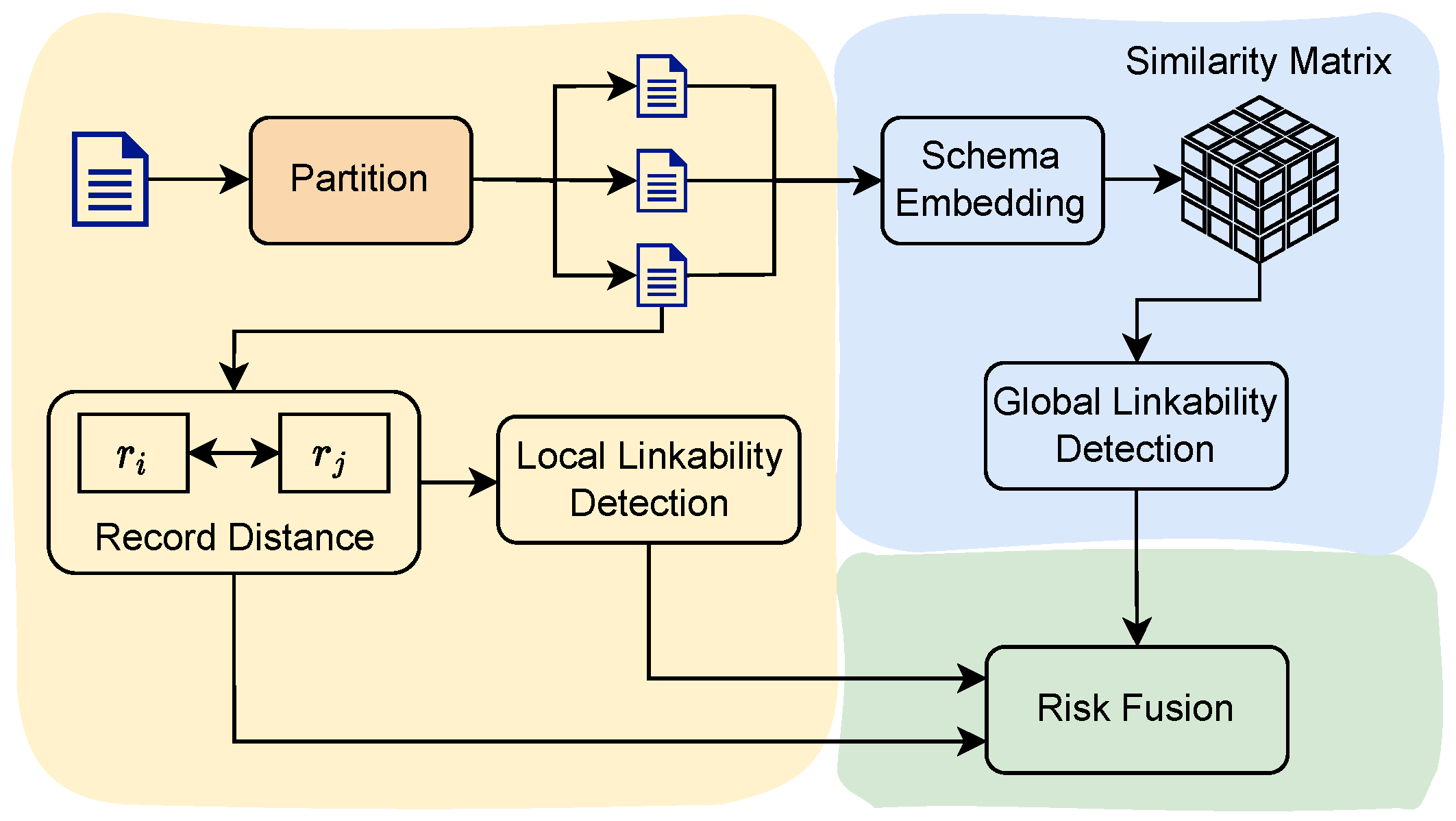

3. Privacy Risk Mining Framework

3.1. Basic Notions of Linkability

- Global linkability represents the possibility of linking datasets that contain records corresponding to the same data subjects.

- Local linkability represents the possibility of linking records corresponding to the same data subject.

3.2. Two-Stage Mining Framework

- Global Linkability Detector: Computes inter-dataset correlations using attribute distribution divergence.

- Local Linkability Detector: Identifies intra-dataset vulnerable record clusters via value intersection patterns.

- Risk Fusion: Synthesizes multi-dimensional linkability risks into a normalized metric space.
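As a rough illustration of how the three components fit together, the Python sketch below runs a global detector, skips the local stage when global linkability falls below a gate, and fuses the two normalized scores. The detector callables, the `gl_threshold` gate, the `fusion_weight` parameter, and the convex-combination fusion rule are all hypothetical stand-ins chosen for illustration; they are not taken from Algorithm 1.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Dataset = List[Dict[str, Any]]
# Hypothetical detector signature: maps a pair of datasets to a score in [0, 1].
Detector = Callable[[Dataset, Dataset], float]


@dataclass(frozen=True)
class RiskReport:
    global_linkability: float  # GL from the global detector
    local_linkability: float   # LL from the local detector (0.0 if skipped)
    unified_risk: float        # R, the fused score in [0, 1]


def _check_unit(name: str, value: float) -> None:
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"{name} must lie in [0, 1], got {value}")


def assess_pair(
    d1: Dataset,
    d2: Dataset,
    global_detector: Detector,
    local_detector: Detector,
    fusion_weight: float = 0.5,  # HYPOTHETICAL weight on GL
    gl_threshold: float = 0.0,   # HYPOTHETICAL gate for running the local stage
) -> RiskReport:
    _check_unit("fusion_weight", fusion_weight)
    gl = global_detector(d1, d2)  # stage 1: dataset-level linkability
    _check_unit("GL", gl)
    # Stage 2 runs only for dataset pairs that stage 1 flags as linkable.
    ll = local_detector(d1, d2) if gl >= gl_threshold else 0.0
    _check_unit("LL", ll)
    # HYPOTHETICAL fusion: a convex combination keeps R inside [0, 1].
    unified = fusion_weight * gl + (1.0 - fusion_weight) * ll
    return RiskReport(gl, ll, unified)


# Usage with constant stand-in detectors.
report = assess_pair([], [], lambda a, b: 0.6, lambda a, b: 1.0)
print(report)
```

The gate mirrors the coarse-to-fine idea of the two stages: expensive record-level work is spent only where dataset-level evidence suggests it is worthwhile.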

Algorithm 1. Unified privacy risk computation.

3.3. Measuring Global Linkability

3.3.1. Semantic Attribute Embedding

3.3.2. Aligned Attribute Representations

3.3.3. Attribute Weighting Scheme

3.3.4. Algorithm for Datasets Clustering

3.3.5. Global Linkability Computation

- Distribution Divergence: We use the Jensen–Shannon (JS) divergence to measure how far apart the value distributions $P$ and $Q$ of an aligned attribute in the two datasets are. The JS divergence is defined as $\mathrm{JS}(P \parallel Q) = \frac{1}{2}\mathrm{KL}(P \parallel M) + \frac{1}{2}\mathrm{KL}(Q \parallel M)$, where $M = \frac{1}{2}(P + Q)$ is the average distribution, and $\mathrm{KL}(\cdot \parallel \cdot)$ denotes the Kullback–Leibler divergence, defined as $\mathrm{KL}(P \parallel Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$. We selected this metric over others because its well-established mathematical properties are highly advantageous for our task: it is symmetric (i.e., $\mathrm{JS}(P \parallel Q) = \mathrm{JS}(Q \parallel P)$), it is always finite and bounded (between 0 and 1 when using log base 2), and it remains well defined for sparse empirical distributions that contain zero-probability events, since $M(x) > 0$ wherever $P(x) > 0$ or $Q(x) > 0$ [48]. These properties ensure a stable and consistent comparison of attribute distributions, which is critical in a heterogeneous data environment.

- Value Set Overlap: To capture the concrete linkage potential, we compute the Jaccard similarity between the sets of observed values for the aligned attributes as $J(V_i^a, V_j^a) = \frac{|V_i^a \cap V_j^a|}{|V_i^a \cup V_j^a|}$, where $V_i^a$ and $V_j^a$ are the sets of unique values for attribute $a$ in datasets $D_i$ and $D_j$, respectively. This metric reflects the proportion of shared values, indicating the likelihood of direct record linkage based on attribute values.

- Complementary Risk Dimensions: The two metrics capture different but equally critical facets of linkage risk. The Jaccard similarity identifies immediate, direct linkage opportunities, while the JS divergence reveals latent, structural relationships between the underlying populations, which is crucial for detecting risk even with sparse value overlap.

- Unbiased Default (Non-Informative Prior): In the absence of domain-specific knowledge or labeled validation data, there is no objective basis to favor one risk dimension over the other. Weighting the two dimensions equally therefore represents a conservative and unbiased stance, preventing the model from becoming myopic (i.e., over-focusing on direct overlaps while ignoring structural clues, or vice versa).

- Robustness and Generalizability: This balanced approach ensures generalizability across diverse datasets and linkage scenarios, making the framework robust without requiring per-dataset tuning, which would violate its unsupervised design principle.
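The two metrics, and an equally weighted combination of them, can be sketched in Python as follows. The JS divergence uses log base 2 so that it stays in [0, 1]. The `attribute_score` function is a hypothetical fusion rule (weight times Jaccard plus (1 − weight) times (1 − JS)), assumed here only because it rewards value overlap and penalizes divergence; the exact formula used by the framework is not given in this section.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Iterable, Sequence

Distribution = Dict[Hashable, float]


def empirical_distribution(values: Iterable[Hashable]) -> Distribution:
    """Relative frequency of each observed value."""
    counts = Counter(values)
    total = sum(counts.values())
    return {v: c / total for v, c in counts.items()}


def kl_divergence(p: Distribution, q: Distribution) -> float:
    """KL(P || Q) in bits; assumes q[x] > 0 wherever p[x] > 0."""
    return sum(px * math.log2(px / q[x]) for x, px in p.items() if px > 0)


def js_divergence(p: Distribution, q: Distribution) -> float:
    """Jensen-Shannon divergence in bits, bounded in [0, 1]."""
    support = set(p) | set(q)
    # The mixture M is positive wherever P or Q is, so both KL terms are finite.
    m = {x: 0.5 * (p.get(x, 0.0) + q.get(x, 0.0)) for x in support}
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def jaccard(a: Iterable[Hashable], b: Iterable[Hashable]) -> float:
    """|A & B| / |A | B| over unique values; 0.0 if both sets are empty."""
    sa, sb = set(a), set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def attribute_score(col_a: Sequence[Hashable], col_b: Sequence[Hashable],
                    weight: float = 0.5) -> float:
    """HYPOTHETICAL fusion: weight * Jaccard + (1 - weight) * (1 - JS)."""
    js = js_divergence(empirical_distribution(col_a), empirical_distribution(col_b))
    return weight * jaccard(col_a, col_b) + (1 - weight) * (1 - js)
```

With `weight=0.5` neither dimension dominates: a pair with disjoint values but similar distributions, or identical values with very different frequencies, both land in the middle of the scale.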

3.4. Measuring Local Linkability

3.4.1. Unsupervised Record Clustering

3.4.2. Importance-Aware Record Distance

3.4.3. Algorithm for Records Clustering

3.4.4. Local Linkability Computation

3.5. Risk Quantification Model

3.5.1. Unified Risk Score

3.5.2. Interpretation of the Unified Risk Score

3.5.3. Consistency and Comparability of Risk Measurement

- Standardized Semantic Space: By mapping all attribute schemas to a single, external reference (a pre-trained Word2Vec model), we ensure that semantic similarity is evaluated against a fixed, universal standard. This allows for a fair and consistent comparison of schema relatedness, regardless of the specific datasets involved.

- Normalized Scoring: All components of our risk score, from the global GL to the local LL, are normalized. The final unified risk score R is bounded within the [0, 1] range, making the risk levels directly comparable across different dataset pairs. A score of 0.7 has the same interpretation of risk severity, irrespective of which datasets generated it.

- Temporal Stability: As long as the external reference models remain constant, the framework provides a stable baseline for risk assessment over time. When new datasets are introduced into an ecosystem, their linkage risk can be measured against the same consistent standard, allowing for meaningful tracking of risk evolution.
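To make the idea of a fixed semantic reference concrete, the sketch below aligns attribute names across two schemas by the cosine similarity of their embeddings. The three-dimensional vectors in `TOY_EMBEDDINGS`, the 0.9 threshold, and the greedy best-match rule are hypothetical. In practice the vectors would come from a pre-trained model (for example, one loaded with gensim's `KeyedVectors`), and multi-token names such as `postal_code` would need tokenizing and averaging, which this sketch omits.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]

# HYPOTHETICAL toy vectors standing in for a pre-trained Word2Vec model.
TOY_EMBEDDINGS: Dict[str, Vector] = {
    "salary": (0.9, 0.1, 0.0),
    "income": (0.85, 0.15, 0.05),
    "zip": (0.0, 0.9, 0.1),
    "postal_code": (0.05, 0.88, 0.12),
    "gender": (0.1, 0.0, 0.95),
    "sex": (0.12, 0.05, 0.9),
}


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def align_attributes(schema_a: Sequence[str], schema_b: Sequence[str],
                     embeddings: Dict[str, Vector],
                     threshold: float = 0.9) -> List[Tuple[str, str, float]]:
    """Pair each attribute of schema_a with its most similar attribute in schema_b.

    Out-of-vocabulary names and best matches below `threshold` are dropped.
    Greedy: two attributes of schema_a may map to the same attribute of schema_b.
    """
    aligned = []
    for a in schema_a:
        if a not in embeddings:
            continue
        candidates = [(cosine(embeddings[a], embeddings[b]), b)
                      for b in schema_b if b in embeddings]
        if not candidates:
            continue
        sim, best = max(candidates)
        if sim >= threshold:
            aligned.append((a, best, sim))
    return aligned


pairs = align_attributes(["salary", "zip", "gender", "diagnosis"],
                         ["income", "postal_code", "sex"], TOY_EMBEDDINGS)
print([(a, b) for a, b, _ in pairs])
```

Because the embedding table is fixed and external, the same attribute pair always receives the same similarity, regardless of which datasets it appears in.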

3.5.4. Algorithmic Implementation

4. Experiments and Insights

4.1. Horizontal–Vertical Partitioning Procedure

- Vertical Partitioning (Schema-Level): First, vertical partitioning is performed on the attribute schema to generate sub-datasets that share overlapping attributes. For example, the Adult dataset, with 14 attributes, can be vertically sliced into two sub-datasets containing 10 and 12 attributes, respectively, with 8 attributes overlapping (10 + 12 − 14).

- Horizontal Partitioning (Record-Level): Horizontal partitioning is then applied to the records to produce sub-datasets with overlapping instances. Given that the Adult dataset contains 45,000 records, it is horizontally sliced into two sub-datasets of 25,000 and 20,000 records, respectively, with 5000 records in common. These overlapping records represent 11.11% of the original dataset.

- Semantic Schema Drift: We intentionally altered attribute names in the partitioned sub-datasets to simulate curation by different organizations. For example, in one dataset, an attribute might be named ‘income’, while in another, it was changed to ‘salary’. Similarly, ‘work-class’ was mapped to ‘employment_type’ and ‘education’ to ‘edu_level’. This directly tested our framework’s core capability of using semantic embeddings to identify substantively identical attributes despite syntactic differences.

- Structural and Format Transformation: We also simulated deeper structural differences. For instance, an attribute like ‘birthdate’ (e.g., ‘1990-05-15’) in one dataset was transformed into a numerical ‘age’ (e.g., 35) in another. This moved beyond simple name changes and tested the robustness of our combined global and local risk metrics.
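The partitioning and drift-injection steps above might be scripted roughly as follows. The function names, the random seed, the reference date for the age conversion, and the choice to give one sub-dataset the leading attributes and the other the trailing ones are illustrative assumptions; the rename mapping reuses the examples given in the text. When two vertical slices jointly cover a schema of n attributes, their overlap is fixed by the slice sizes (size_a + size_b − n).

```python
import random
from datetime import date
from typing import Dict, Hashable, List, Sequence, Tuple


def vertical_split(attributes: Sequence[str], size_a: int,
                   size_b: int) -> Tuple[List[str], List[str]]:
    """Split a schema into two overlapping attribute lists that together cover it.

    A takes the first size_a attributes and B the last size_b, so the shared
    middle block has size_a + size_b - len(attributes) attributes.
    """
    n = len(attributes)
    if not (0 < size_a <= n and 0 < size_b <= n and size_a + size_b >= n):
        raise ValueError("sizes must each be at most n and jointly cover the schema")
    return list(attributes[:size_a]), list(attributes[n - size_b:])


def horizontal_split(record_ids: Sequence[Hashable], size_a: int, size_b: int,
                     overlap: int, seed: int = 0) -> Tuple[list, list]:
    """Randomly draw two record subsets of the given sizes sharing exactly `overlap` records."""
    if not 0 <= overlap <= min(size_a, size_b) or size_a + size_b - overlap > len(record_ids):
        raise ValueError("infeasible sizes for the given number of records")
    ids = list(record_ids)
    random.Random(seed).shuffle(ids)
    shared = ids[:overlap]
    only_a = ids[overlap:size_a]                      # size_a - overlap records
    only_b = ids[size_a:size_a + size_b - overlap]    # size_b - overlap records
    return shared + only_a, shared + only_b


# Semantic schema drift: rename columns in one sub-dataset (mappings from the text).
RENAMES = {"income": "salary", "work-class": "employment_type", "education": "edu_level"}


def rename_columns(record: Dict[str, object], renames: Dict[str, str]) -> Dict[str, object]:
    return {renames.get(k, k): v for k, v in record.items()}


def birthdate_to_age(iso_birthdate: str, reference: date) -> int:
    """Format transformation: ISO birthdate -> age in whole years at `reference`."""
    born = date.fromisoformat(iso_birthdate)
    before_birthday = (reference.month, reference.day) < (born.month, born.day)
    return reference.year - born.year - int(before_birthday)


attrs = [f"a{i}" for i in range(14)]
part_a, part_b = vertical_split(attrs, 10, 12)
rec_a, rec_b = horizontal_split(range(45000), 25000, 20000, 5000)
print(len(set(part_a) & set(part_b)), len(set(rec_a) & set(rec_b)))
```

The hypothetical reference date matters for the format transformation: `1990-05-15` yields 35 only for reference dates from 15 May 2025 up to 14 May 2026.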

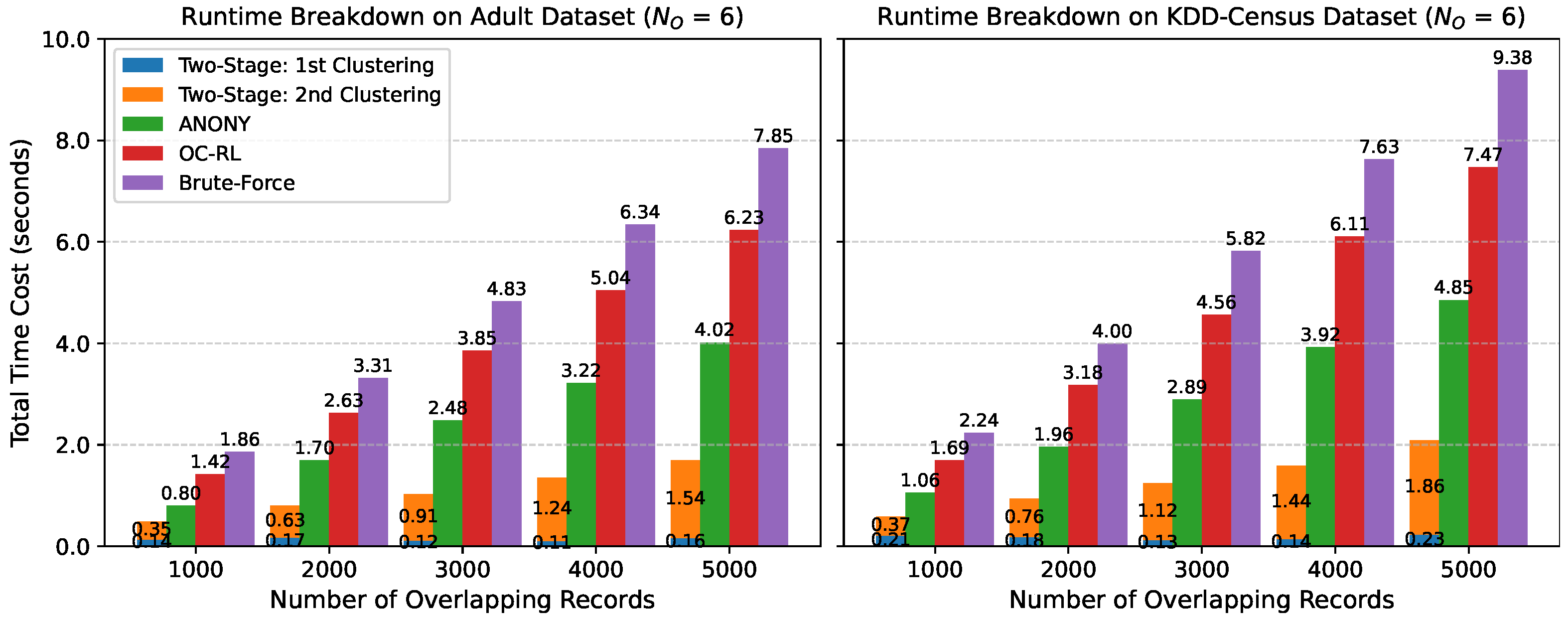

4.2. Quantitative Comparison with Prior Work

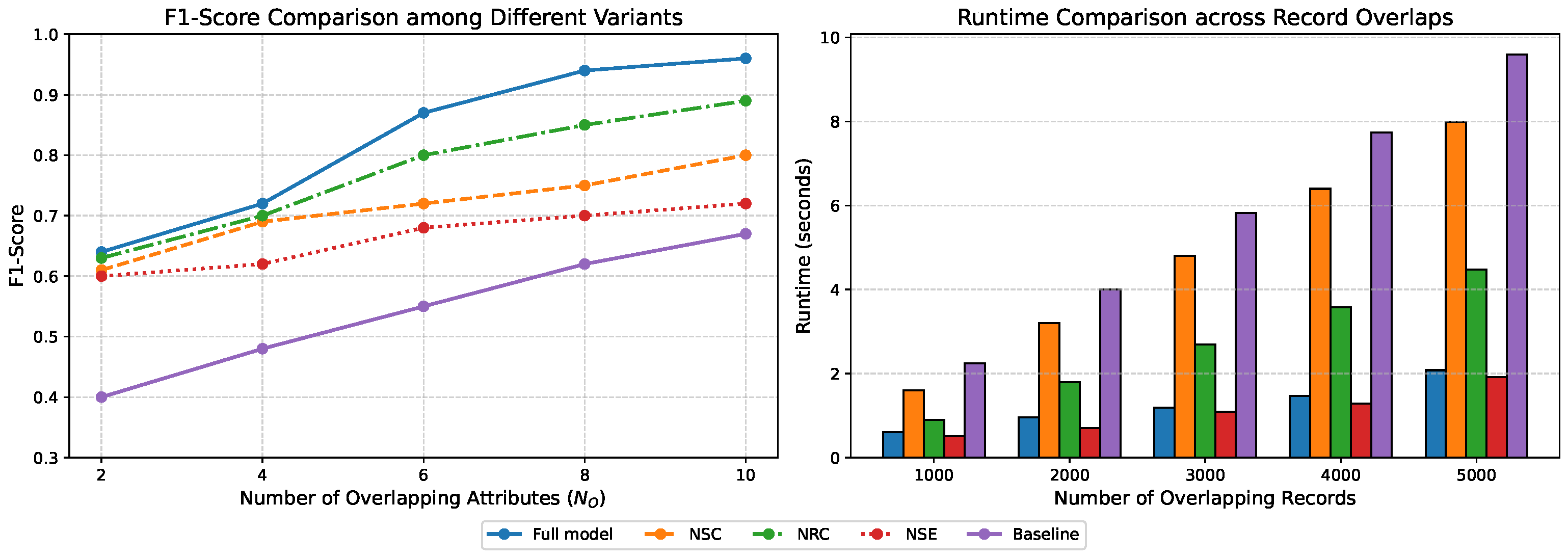

4.3. Ablation Study

4.3.1. Experimental Setup

- Full model (two-stage clustering): Our proposed method combining schema-aware dataset clustering and record-level k-member clustering.

- No schema clustering (NSC): Skips the first stage and performs record-level clustering directly on the union of all datasets.

- No record clustering (NRC): Applies only schema-level dataset grouping, followed by brute-force matching across all records within grouped datasets.

- No schema embedding (NSE): Replaces semantic-aware attribute comparison (e.g., word embedding-based similarity) with exact attribute name matching in the schema clustering stage.

- Flat matching (Baseline): Removes both clustering stages and performs global record-level matching across all datasets.

4.3.2. Results and Analysis

- The full model consistently outperformed all ablated variants, confirming that both schema-level and record-level clustering contribute synergistically to risk detection accuracy.

- Removing schema clustering (NSC) led to a noticeable drop in F1-score, especially when the number of overlapping attributes was small. This illustrates the value of coarse-grained clustering in guiding fine-grained linkage.

- Eliminating record-level clustering (NRC) reduced precision, as brute-force matching introduced more false positives due to lack of localized filtering.

- Using exact attribute names (NSE) instead of semantic similarity significantly weakened the model’s generalization to real-world scenarios, where synonymous or heterogeneously formatted attributes are common.

- The baseline flat matching method performed the worst across all conditions, reaffirming the limitations of monolithic matching in complex, cross-domain datasets.

4.3.3. Efficiency Comparison

4.3.4. Interpretation and Significance

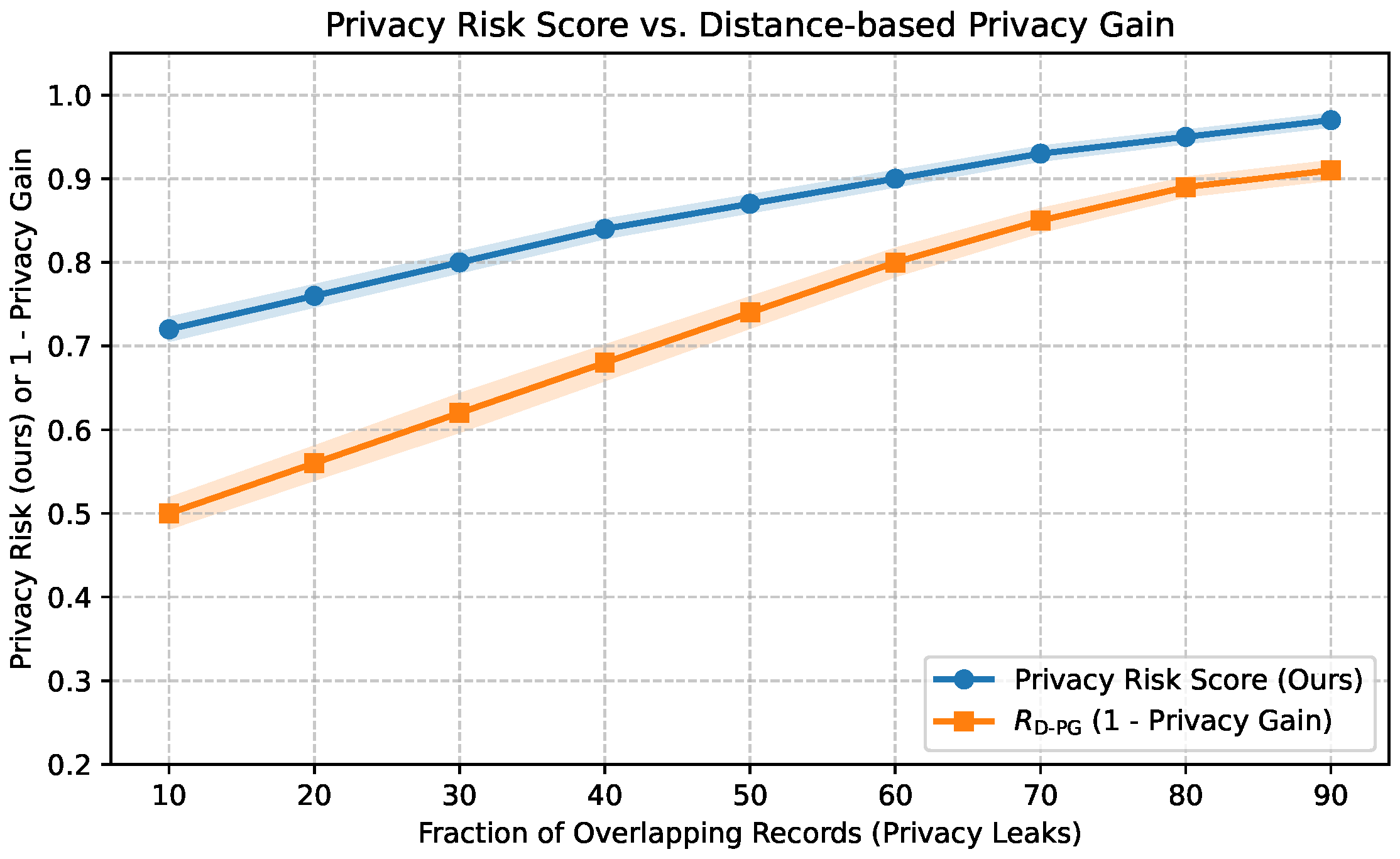

4.4. Evaluation of Privacy Risk Score

4.4.1. Distance-Based Privacy Gain (D-PG)

4.4.2. Experimental Design

4.4.3. Results and Analysis

4.5. Hyperparameter Sensitivity Analysis

4.5.1. Sensitivity of Linkage Detection to Parameter k

4.5.2. Sensitivity of the Risk Score to the Scoring Parameters

Analysis of Fusion Parameter

Analysis of Global Score Weight

4.6. Case Studies

4.6.1. A Computational Case Study: Step-by-Step Risk Calculation

Step 1: Global Linkability (GL) Calculation

- For {zip, postal_code}:

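- Jaccard Similarity: The set of unique values for zip is {`90*10`, `94*03`, `10*01`}. For postal_code, it is {`90*10`, `10*01`, `80*02`}. The intersection is {`90*10`, `10*01`} (2 values), and the union contains 4 values. The Jaccard similarity is therefore 2/4 = 0.5.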

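- JS Divergence: Over the union of values, ordered as (`90*10`, `94*03`, `10*01`, `80*02`), the probability distributions are $P = (1/3, 1/3, 1/3, 0)$ for zip and $Q = (1/3, 0, 1/3, 1/3)$ for postal_code. Based on these, the Jensen–Shannon divergence (log base 2) is $\mathrm{JS}(P \parallel Q) = 1/3 \approx 0.333$.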

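- Attribute Score: the Jaccard similarity and JS divergence above are fused into a single attribute score for {zip, postal_code}.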

- For {birth_year, age}: After standardizing birth_year to age, the value sets and distributions become identical: the Jaccard similarity is 1 and the JS divergence is 0, so this pair matches as strongly as possible on both metrics.

- For {gender, sex}: The value sets are identical ({F, M}, so the Jaccard similarity is 1), but the distributions differ (F:M is 1:2 in one dataset and 2:1 in the other), so the JS divergence is nonzero.
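Step 1's set and distribution computations can be checked against the two example tables for this case study (columns p_id, birth_year, gender, zip and c_id, age, sex, postal_code). The sketch assumes a reference year of 2025 for converting birth_year to age, which reproduces the census ages (1985 maps to 40, 1992 maps to 33), and uses log base 2 for the JS divergence. It reports only the two component metrics, not the fused attribute score, whose exact formula is not given here.

```python
import math
from collections import Counter

# The two example tables from this case study (quasi-identifiers only).
MEDICAL = [
    {"p_id": 101, "birth_year": 1985, "gender": "F", "zip": "90*10"},
    {"p_id": 102, "birth_year": 1992, "gender": "M", "zip": "94*03"},
    {"p_id": 103, "birth_year": 1985, "gender": "M", "zip": "10*01"},
]
CENSUS = [
    {"c_id": 5534, "age": 40, "sex": "F", "postal_code": "90*10"},
    {"c_id": 5535, "age": 33, "sex": "M", "postal_code": "10*01"},
    {"c_id": 5536, "age": 40, "sex": "F", "postal_code": "80*02"},
]
REFERENCE_YEAR = 2025  # ASSUMPTION: maps birth_year onto the census ages


def distribution(values):
    """Relative frequency of each value in a list."""
    counts = Counter(values)
    return {v: c / len(values) for v, c in counts.items()}


def js_bits(p, q):
    """Jensen-Shannon divergence with log base 2."""
    m = {x: 0.5 * (p.get(x, 0.0) + q.get(x, 0.0)) for x in set(p) | set(q)}

    def kl_to_m(a):
        return sum(ax * math.log2(ax / m[x]) for x, ax in a.items() if ax > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


def compare(col_a, col_b):
    """Return (Jaccard similarity, JS divergence in bits) for two aligned columns."""
    sa, sb = set(col_a), set(col_b)
    return len(sa & sb) / len(sa | sb), js_bits(distribution(col_a), distribution(col_b))


results = {
    "zip~postal_code": compare([r["zip"] for r in MEDICAL],
                               [r["postal_code"] for r in CENSUS]),
    "birth_year~age": compare([REFERENCE_YEAR - r["birth_year"] for r in MEDICAL],
                              [r["age"] for r in CENSUS]),
    "gender~sex": compare([r["gender"] for r in MEDICAL],
                          [r["sex"] for r in CENSUS]),
}
for pair, (jac, js) in results.items():
    print(f"{pair}: Jaccard={jac:.3f}, JS={js:.4f}")
# zip~postal_code: Jaccard=0.500, JS=0.3333
# birth_year~age: Jaccard=1.000, JS=0.0000
# gender~sex: Jaccard=1.000, JS=0.0817
```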

Step 2: Local Linkability (LL) Calculation

- The normalized distance between these perfectly matching records is 0.

- Thus, the local linkability (LL) score is 1.0.
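A minimal sketch of Step 2 follows. It assumes a normalized record distance equal to the (optionally weighted) share of aligned attributes whose standardized values disagree, and takes LL as one minus the distance of the closest cross-dataset pair. This reproduces the step's outcome (distance 0, LL = 1.0) but is a hypothetical stand-in for the importance-aware distance, not the framework's exact definition; the `weights` argument and the 2025 reference year are likewise assumptions.

```python
from typing import Any, Dict, List, Optional, Tuple

Record = Dict[str, Any]

# The two example tables from this case study (quasi-identifiers only).
MEDICAL: List[Record] = [
    {"p_id": 101, "birth_year": 1985, "gender": "F", "zip": "90*10"},
    {"p_id": 102, "birth_year": 1992, "gender": "M", "zip": "94*03"},
    {"p_id": 103, "birth_year": 1985, "gender": "M", "zip": "10*01"},
]
CENSUS: List[Record] = [
    {"c_id": 5534, "age": 40, "sex": "F", "postal_code": "90*10"},
    {"c_id": 5535, "age": 33, "sex": "M", "postal_code": "10*01"},
    {"c_id": 5536, "age": 40, "sex": "F", "postal_code": "80*02"},
]
REFERENCE_YEAR = 2025  # ASSUMPTION: reproduces the census ages from birth_year
ALIGNED = [("birth_year", "age"), ("gender", "sex"), ("zip", "postal_code")]


def standardize(column: str, value: Any) -> Any:
    """Map medical-side values onto the census scale (birth year -> age)."""
    return REFERENCE_YEAR - value if column == "birth_year" else value


def record_distance(rec_a: Record, rec_b: Record,
                    weights: Optional[Dict[str, float]] = None) -> float:
    """HYPOTHETICAL distance: weighted share of aligned attributes that disagree."""
    weights = weights or {col_a: 1.0 for col_a, _ in ALIGNED}
    total = sum(weights[col_a] for col_a, _ in ALIGNED)
    mismatched = sum(
        weights[col_a]
        for col_a, col_b in ALIGNED
        if standardize(col_a, rec_a[col_a]) != rec_b[col_b]
    )
    return mismatched / total


def local_linkability(ds_a: List[Record], ds_b: List[Record]) -> Tuple[float, int, int]:
    """HYPOTHETICAL LL: 1 - distance of the closest pair, plus that pair's ids."""
    dist, p_id, c_id = min(
        (record_distance(a, b), a["p_id"], b["c_id"]) for a in ds_a for b in ds_b
    )
    return 1.0 - dist, p_id, c_id


print(local_linkability(MEDICAL, CENSUS))  # (1.0, 101, 5534)
```

The closest pair the sketch finds is p_id 101 and c_id 5534, the only pair that agrees on all three aligned attributes once birth_year is converted to age.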

Step 3: Unified Risk Score (R) Calculation

4.6.2. Applied Case Studies: Deployment Scenarios in Healthcare and Smart Cities

Healthcare Data Sharing Scenario

Smart City Mobility Analysis Scenario

5. Conclusions and Future Work

5.1. Discussion and Conclusions

5.2. Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abouelmehdi, K.; Beni-Hessane, A.; Khaloufi, H. Big healthcare data: Preserving security and privacy. J. Big Data 2018, 5, 1.

- Ullah, I.; Boreli, R.; Kanhere, S.S. Privacy in targeted advertising: A survey. arXiv 2020, arXiv:2009.06861.

- Eckhoff, D.; Wagner, I. Privacy in the smart city—Applications, technologies, challenges, and solutions. IEEE Commun. Surv. Tutor. 2017, 20, 489–516.

- Zeng, W.; Zhang, C.; Liang, X.; Xia, J.; Lin, Y.; Lin, Y. Intrusion detection-embedded chaotic encryption via hybrid modulation for data center interconnects. Opt. Lett. 2025, 50, 4450–4453.

- Samarati, P.; Sweeney, L. Protecting Privacy When Disclosing Information: k-Anonymity and Its Enforcement Through Generalization and Suppression; Technical Report SRI-CSL-98-04; SRI International: Menlo Park, CA, USA, 1998.

- Narayanan, A.; Shmatikov, V. How to break anonymity of the Netflix prize dataset. arXiv 2006, arXiv:cs/0610105.

- Mercorelli, L.; Nguyen, H.; Gartell, N.; Brookes, M.; Morris, J.; Tam, C.S. A framework for de-identification of free-text data in electronic medical records enabling secondary use. Aust. Health Rev. 2022, 46, 289–293.

- Wairimu, S.; Iwaya, L.H.; Fritsch, L.; Lindskog, S. On the Evaluation of Privacy Impact Assessment and Privacy Risk Assessment Methodologies: A Systematic Literature Review. IEEE Access 2024, 12, 19625–19650.

- Wu, N.; Tamilselvan, R. A Personal Privacy Risk Assessment Framework Based on Disclosed PII. In Proceedings of the 2023 7th International Conference on Cryptography, Security and Privacy (CSP), Tianjin, China, 21–13 April 2023; pp. 86–91.

- European Commission. General Data Protection Regulation. Off. J. Eur. Union 2016, L119, 1–88.

- Wuyts, K.; Sion, L.; Joosen, W. LINDDUN GO: A Lightweight Approach to Privacy Threat Modeling. In Proceedings of the 2020 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Genoa, Italy, 7–11 September 2020; pp. 302–309.

- Shin, S.; Seto, Y.; Hasegawa, K.; Nakata, R. Proposal for a Privacy Impact Assessment Manual Conforming to ISO/IEC 29134:2017. In Proceedings of the International Conference on Computer Information Systems and Industrial Management Applications, Olomouc, Czech Republic, 27–29 September 2018.

- Wuyts, K.; Landuyt, D.V.; Hovsepyan, A.; Joosen, W. Effective and efficient privacy threat modeling through domain refinements. In Proceedings of the 33rd Annual ACM Symposium on Applied Computing, Pau, France, 9–13 April 2018.

- Powar, J.; Beresford, A.R. SoK: Managing Risks of Linkage Attacks on Data Privacy. Proc. Priv. Enhancing Technol. 2023, 2023, 97–116.

- Data Protection Working Party. Opinion 05/2014 on Anonymisation Techniques. 2014. Available online: https://ec.europa.eu/justice/article-29/documentation/opinion-recommendation/files/2014/wp216_en.pdf (accessed on 21 August 2025).

- Nentwig, M.; Hartung, M.; Ngomo, A.C.N.; Rahm, E. A survey of current Link Discovery frameworks. Semant. Web 2016, 8, 419–436.

- Vaidya, J.; Zhu, Y.; Clifton, C. Privacy Preserving Data Mining; Advances in Information Security; Springer: Berlin/Heidelberg, Germany, 2015.

- Verykios, V.S.; Bertino, E.; Fovino, I.N.; Provenza, L.P.; Saygin, Y.; Theodoridis, Y. State-of-the-art in privacy preserving data mining. SIGMOD Rec. 2004, 33, 50–57.

- Sweeney, L. Simple Demographics Often Identify People Uniquely; Technical Report Working Paper 3; Carnegie Mellon University, Data Privacy Laboratory: Pittsburgh, PA, USA, 2000.

- Narayanan, A.; Shmatikov, V. Robust De-anonymization of Large Sparse Datasets. In Proceedings of the 2008 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 18–22 May 2008; IEEE: New York, NY, USA, 2008; pp. 111–125.

- Golle, P.; Partridge, K. On the Anonymity of Home/Work Location Pairs. In Proceedings of the International Conference on Pervasive Computing, Nara, Japan, 11–14 May 2009.

- Farzanehfar, A.; Houssiau, F.; de Montjoye, Y.A. The risk of re-identification remains high even in country-scale location datasets. Patterns 2021, 2, 100204.

- Narayanan, A.; Shmatikov, V. Myths and fallacies of “Personally Identifiable Information”. Commun. ACM 2010, 53, 24–26.

- Sánchez, P.M.S.; Valero, J.M.J.; Celdrán, A.H.; Bovet, G.; Pérez, M.G.; Pérez, G.M. A Survey on Device Behavior Fingerprinting: Data Sources, Techniques, Application Scenarios, and Datasets. IEEE Commun. Surv. Tutor. 2020, 23, 1048–1077.

- Fellegi, I.P.; Sunter, A.B. A theory for record linkage. J. Am. Stat. Assoc. 1969, 64, 1183–1210.

- Goga, O.; Lei, H.; Parthasarathi, S.H.K.; Friedland, G.; Sommer, R.; Teixeira, R. Exploiting innocuous activity for correlating users across sites. In Proceedings of the 22nd International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013.

- Sweeney, L. k-anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness-Knowl. Based Syst. 2002, 10, 557–570.

- Machanavajjhala, A.; Kifer, D.; Gehrke, J.; Venkitasubramaniam, M. ℓ-Diversity: Privacy Beyond k-Anonymity. ACM Trans. Knowl. Discov. Data 2007, 1, 3.

- Li, N.; Li, T.; Venkatasubramanian, S. t-Closeness: Privacy Beyond k-Anonymity and ℓ-Diversity. In Proceedings of the 23rd IEEE International Conference on Data Engineering, Istanbul, Turkey, 15–20 April 2007; pp. 106–115.

- de Montjoye, Y.; Hidalgo, C.A.; Verleysen, M.; Blondel, V.D. Unique in the Crowd: The Privacy Bounds of Human Mobility. Sci. Rep. 2013, 3, 1376.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. In Proceedings of the Third Theory of Cryptography Conference, New York, NY, USA, 4–7 March 2006; Lecture Notes in Computer Science, Volume 3876, pp. 265–284.

- Ding, Z.; Wang, Y.; Wang, G.; Zhang, D.; Kifer, D. Detecting violations of differential privacy. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 475–489.

- Turgay, S.; İlter, İ. Perturbation methods for protecting data privacy: A review of techniques and applications. Autom. Mach. Learn. 2023, 4, 31–41.

- Zhang, Z.; Wang, T.; Li, N.; Honorio, J.; Backes, M.; He, S.; Chen, J.; Zhang, Y. PrivSyn: Differentially private data synthesis. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), online, 11–13 August 2021; pp. 929–946.

- Stadler, T.; Oprisanu, B.; Troncoso, C. Synthetic data-A privacy mirage. arXiv 2020, arXiv:2011.07018.

- Dibbo, S.V. SoK: Model Inversion Attack Landscape: Taxonomy, Challenges, and Future Roadmap. In Proceedings of the 2023 IEEE 36th Computer Security Foundations Symposium (CSF), Dubrovnik, Croatia, 10–14 July 2023; pp. 439–456.

- Giomi, M.; Boenisch, F.; Wehmeyer, C.; Tasnádi, B. A Unified Framework for Quantifying Privacy Risk in Synthetic Data. arXiv 2023, arXiv:2211.10459.

- Heng, Y.; Armknecht, F.; Chen, Y.; Schnell, R. On the Effectiveness of Graph Matching Attacks Against Privacy-Preserving Record Linkage. PLoS ONE 2022, 17, e0267893.

- Carey, C.J.; Dick, T.; Epasto, A.; Munoz Medina, A.; Mirrokni, V.; Vassilvitskii, S.; Zhong, P. Measuring Re-identification Risk. arXiv 2023, arXiv:2304.07210.

- Jiang, Y.; Mosquera, L.; Jiang, B.; Kong, L.; Emam, K.E. Measuring re-identification risk using a synthetic estimator to enable data sharing. PLoS ONE 2022, 17, e0269097.

- Hu, R.; Stalla-Bourdillon, S.; Yang, M.; Schiavo, V.; Sassone, V. Bridging Policy, Regulation, and Practice? A Techno-Legal Analysis of Three Types of Data in the GDPR; Hart Publishing: Oxford, UK, 2017.

- Mikolov, T.; Chen, K.; Corrado, G.S.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the International Conference on Learning Representations, Scottsdale, AZ, USA, 2–4 May 2013.

- Zhou, K.; Ethayarajh, K.; Card, D.; Jurafsky, D. Problems with Cosine as a Measure of Embedding Similarity for High Frequency Words. arXiv 2022, arXiv:2205.05092.

- Chen, X.; Yin, W.; Tu, P.; Zhang, H. Weighted k-Means Algorithm Based Text Clustering. In Proceedings of the 2009 International Symposium on Information Engineering and Electronic Commerce, Washington, DC, USA, 16–17 May 2009; pp. 51–55.

- Christen, P. Data Matching: Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Vatsalan, D.; Christen, P. Scalable Privacy-Preserving Record Linkage for Multiple Databases. In Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management, Shanghai, China, 3–7 November 2014.

- Guha, R.V.; Brickley, D.; Macbeth, S. Schema.org: Evolution of Structured Data on the Web. Queue 2015, 13, 10–37.

- Lin, J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 1991, 37, 145–151.

- Abril, D.; Navarro-Arribas, G.; Torra, V. Improving record linkage with supervised learning for disclosure risk assessment. Inf. Fusion 2012, 13, 274–284.

- Byun, J.W.; Kamra, A.; Bertino, E.; Li, N. Efficient k-anonymization using clustering techniques. In Proceedings of the International Conference on Database Systems for Advanced Applications, Bangkok, Thailand, 9–12 April 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 188–200.

- Fung, B.C.M.; Wang, K.; Chen, R.; Yu, P.S. Privacy-preserving data publishing: A survey of recent developments. ACM Comput. Surv. 2010, 42, 14:1–14:53.

- Becker, B.; Kohavi, R. Adult. UCI Mach. Learn. Repos. 1996.

- Dataset. Census-Income (KDD). UCI Mach. Learn. Repos. 2000.

- Wolberg, W.; Mangasarian, O.; Street, N.; Street, W. Breast Cancer Wisconsin (Diagnostic). UCI Mach. Learn. Repos. 1993.

- De Bruin, J. Python Record Linkage Toolkit: A toolkit for record linkage and duplicate detection in Python (v0.14). Zenodo 2019.

- Bleiholder, J.; Naumann, F. Data fusion. ACM Comput. Surv. 2009, 41, 1:1–1:41.

- Stadler, T.; Oprisanu, B.; Troncoso, C. Synthetic Data—Anonymisation Groundhog Day. In Proceedings of the USENIX Security Symposium, Boston, MA, USA, 12–14 August 2020.

| p_id | birth_year | gender | zip | diagnosis |
|---|---|---|---|---|
| 101 | 1985 | F | 90*10 | Hypertension |
| 102 | 1992 | M | 94*03 | Diabetes |
| 103 | 1985 | M | 10*01 | Asthma |

| c_id | age | sex | postal_code | occupation |
|---|---|---|---|---|
| 5534 | 40 | F | 90*10 | Engineer |
| 5535 | 33 | M | 10*01 | Teacher |
| 5536 | 40 | F | 80*02 | Doctor |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, R.; Lin, Y.; Yang, M.; Yu, Y.; Sassone, V. Two-Stage Mining of Linkage Risk for Data Release. Mathematics 2025, 13, 2731. https://doi.org/10.3390/math13172731
