The four extracted principal components were used as input features for the model, while the closing price served as the prediction target. To avoid gradient instability or impaired convergence caused by inconsistent feature scales, the principal components were further normalized to the range $[0, 1]$. The normalization formula is as follows:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x$ is the original feature value, and $x_{\min}$ and $x_{\max}$ denote the minimum and maximum values of the corresponding feature, respectively. After normalization, the dataset was split into training and testing sets using an 8:2 ratio. The training set was used for model learning and hyperparameter tuning, while the testing set was employed to evaluate the model’s generalization and predictive performance on unseen data.
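The normalization and split described above can be illustrated with a minimal sketch. It assumes a $[0, 1]$ target range and a chronological (unshuffled) 8:2 split, neither of which is spelled out in the text; the function names and toy values are hypothetical.

```python
def min_max_scale(column):
    """Rescale a list of floats to [0, 1] using its own minimum and maximum."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # Constant feature: map everything to 0.0 to avoid division by zero.
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def chronological_split(rows, train_fraction=0.8):
    """Split time-ordered rows into a leading training part and a trailing test part."""
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


# Toy values standing in for one principal component (hypothetical data).
pc1 = [2.0, 4.0, 6.0, 8.0, 10.0]
scaled = min_max_scale(pc1)
train, test = chronological_split(scaled)
```

Keeping the split chronological means the test set contains only observations that come after the training period, which matches the forecasting setting.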

4.1. Parameters Selection

(1) Wavelet denoising parameters configuration

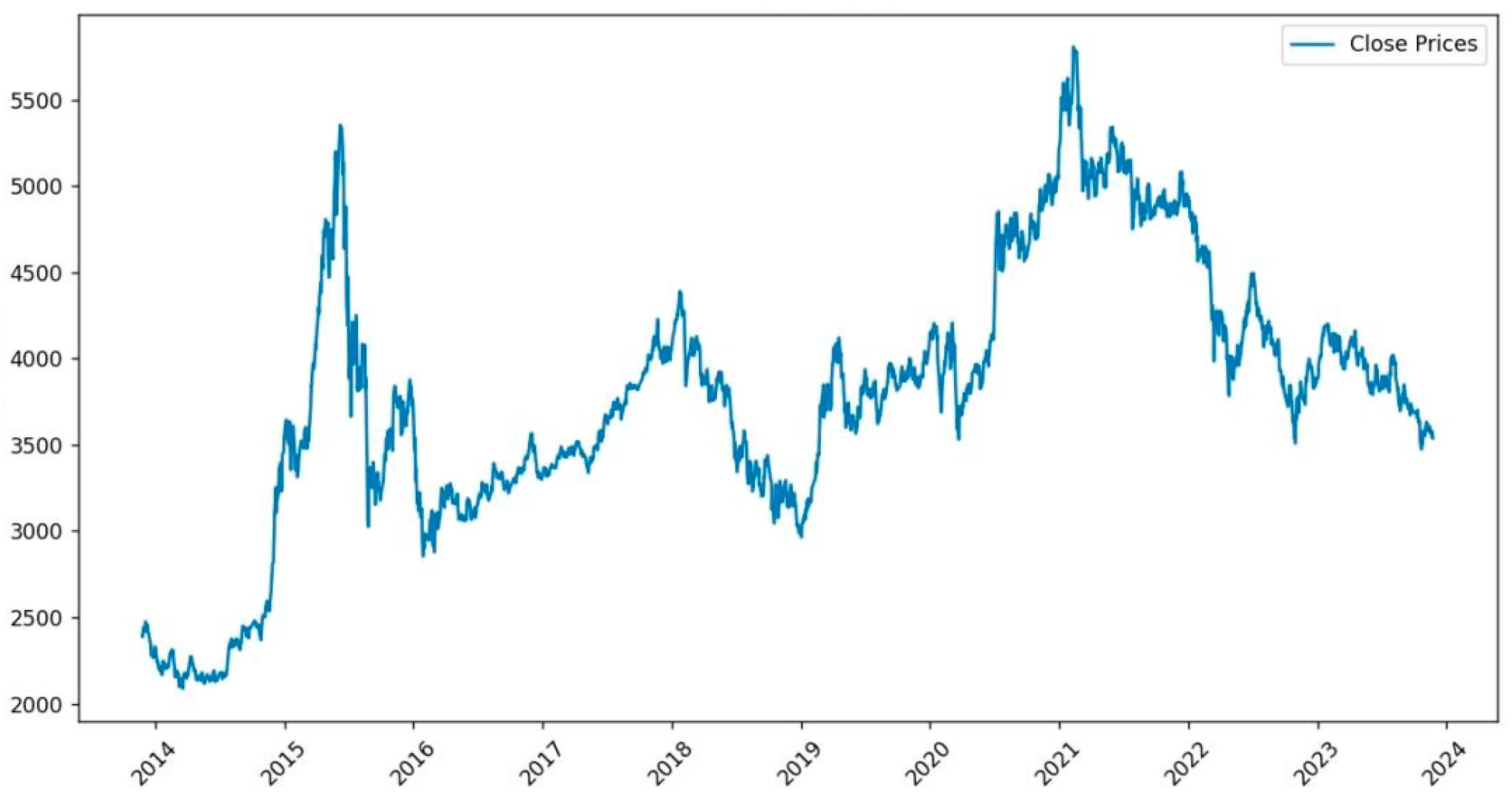

To improve the stability and effectiveness of data modeling, this study first conducted a statistical examination of the CSI 300 Index closing price. As shown in Figure 4, the distribution of the data exhibits multimodal characteristics and deviates from normality, indicating the presence of notable volatility and noise. Accordingly, denoising is necessary to enhance the quality of the input data.

To better preserve the integrity of stock price waveforms, wavelet bases with higher symmetry should be selected. Among various types, Coiflets wavelets offer notable advantages in signal smoothing and detail extraction. When processing signals with sharp discontinuities, Coiflets can provide a smooth approximation while accurately retaining edge features, effectively minimizing phase distortion during signal reconstruction [35]. This ensures that the denoised data more faithfully reflects actual market trends.

Given that stock price series exhibit both stationary trends and high-frequency fluctuations, this study adopts Coiflets wavelets with vanishing moments of order 4, which balances filter length against reconstruction accuracy. Compared to higher-order configurations, this choice reduces noise while preserving features and avoids the risk of overfitting high-frequency noise. Additionally, the strong symmetry of Coiflets helps reduce phase shifts during reconstruction, enabling the denoised signal to better preserve local discontinuities and align more closely with real market dynamics.

In practice, a single-level discrete wavelet transform is applied, with a fixed thresholding rule and hard thresholding used for high-frequency coefficients. This configuration outperforms the soft thresholding scheme in terms of RMSE, better retaining the original structural information. After wavelet denoising, local high-frequency disturbances in the original series are effectively suppressed, and the overall trend becomes smoother, meeting the input stability and interpretability requirements of subsequent modeling.
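The difference between hard and soft thresholding of the high-frequency coefficients can be sketched as follows. This is an illustration only: it assumes the "fixed thresholding rule" refers to the universal threshold $\sigma\sqrt{2\ln n}$ with $\sigma$ estimated from the median absolute coefficient, which the text does not state explicitly. The wavelet decomposition and reconstruction steps are left out, and the coefficient values and the threshold of 1.0 are hypothetical.

```python
import math
import statistics


def universal_threshold(detail):
    """Fixed-form threshold sigma * sqrt(2 ln n), with sigma = median(|d|) / 0.6745."""
    sigma = statistics.median(abs(c) for c in detail) / 0.6745
    return sigma * math.sqrt(2.0 * math.log(len(detail)))


def hard_threshold(detail, thr):
    """Keep coefficients whose magnitude exceeds thr unchanged; zero the rest."""
    return [c if abs(c) > thr else 0.0 for c in detail]


def soft_threshold(detail, thr):
    """Zero small coefficients and shrink the remaining ones toward zero by thr."""
    return [math.copysign(max(abs(c) - thr, 0.0), c) for c in detail]


detail = [0.1, -0.2, 3.0, 0.05, -2.5, 0.15]  # hypothetical detail coefficients
kept_hard = hard_threshold(detail, 1.0)      # toy threshold for illustration
kept_soft = soft_threshold(detail, 1.0)
```

Hard thresholding leaves the surviving coefficients at their original magnitude, whereas soft thresholding shrinks them. That difference is one plausible reason hard thresholding retains more of the original structure.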

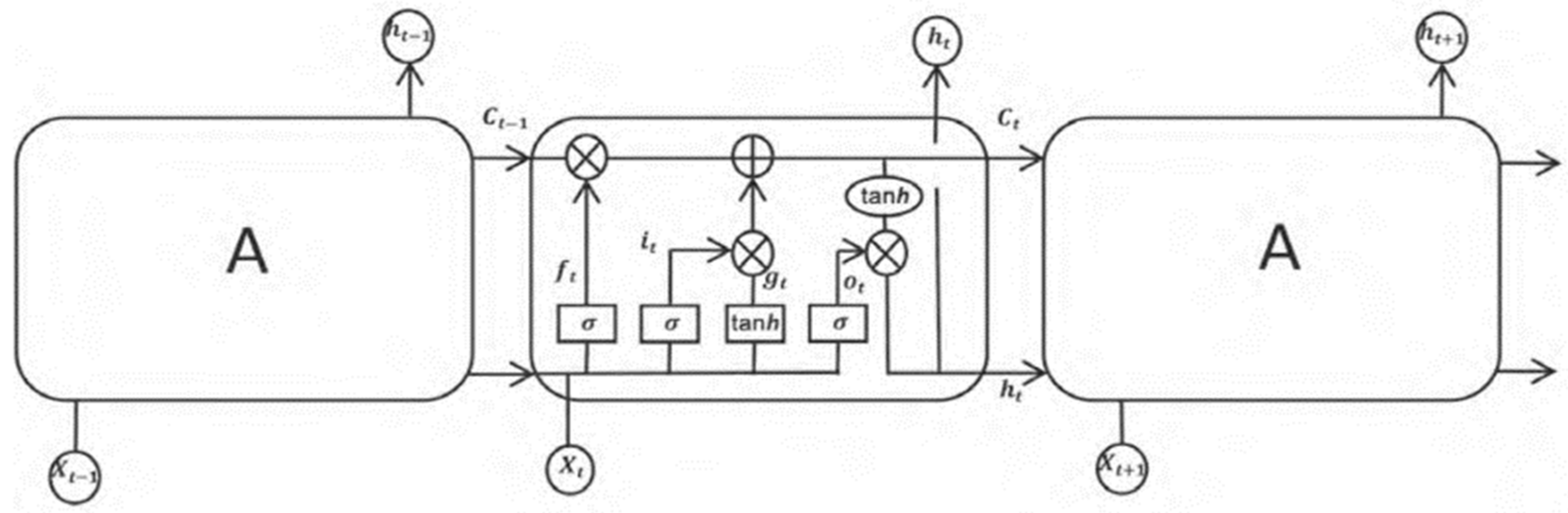

(2) MHABiLSTM model parameters configuration

The MHABiLSTM model enhances the standard BiLSTM architecture by incorporating a multi-head attention mechanism with 8 attention heads, aiming to strengthen the model’s ability to learn long-term dependencies.

The model uses Mean Squared Error (MSE) as the loss function, the Adam optimizer for parameter updates, and the sigmoid function as the activation function. Considering the memory effect of the market while avoiding noise accumulation caused by an excessively long window, the time step was set to 60 trading days in accordance with the characteristics of financial time series. The learning rate was set to 0.001, with a maximum of 100 training epochs and a batch size of 10. To prevent overfitting, early stopping was applied during training. The detailed parameter settings are shown in Table 2.

(3) ARIMA model parameters configuration

To correct for the linear trends remaining in the residuals of the MHABiLSTM model, an ARIMA model was introduced for error modeling. The Augmented Dickey–Fuller (ADF) test confirmed that the residual series was stationary, and the Ljung–Box test rejected the null hypothesis of white noise, indicating that the residuals still contained autocorrelation that could be modeled.

By analyzing the Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF) plots, the AR and MA orders were determined to be $p = 3$ and $q = 7$, respectively. Accordingly, an ARIMA(3,0,7) model was constructed and applied in a rolling prediction manner to iteratively correct the residual errors of MHABiLSTM, improving the model’s capacity to capture short-term fluctuations.

(4) XGBoost model parameters configuration

The XGBoost model’s hyperparameters were optimized using grid search, and the best configuration was selected based on 5-fold cross-validation, jointly minimizing the Root Mean Square Error (RMSE) and Mean Absolute Error (MAE).

The final model used gbtree as the base learner and reg:squarederror as the objective function. The optimal parameters are summarized in Table 3.

4.2. Evaluation Metrics

To assess the predictive performance of the models, four commonly used evaluation metrics were employed in this study:

(1) Root Mean Square Error (RMSE)

RMSE measures the magnitude of deviation between predicted and actual values. Because errors are squared before averaging, larger errors receive more weight in the total error. The formula is defined as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2}$$

where $\hat{y}_i$ is the predicted value, $y_i$ is the actual value, and $n$ is the total number of samples.

(2) Mean Absolute Percentage Error (MAPE)

MAPE reflects the prediction error as a percentage of the actual value, providing an intuitive interpretation. It is computed as:

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{\hat{y}_i - y_i}{y_i}\right|$$

(3) Mean Absolute Error (MAE)

MAE evaluates the average absolute difference between predicted and actual values. It is defined as:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i - y_i\right|$$

(4) Coefficient of Determination ($R^2$)

$R^2$ measures the proportion of variance in the dependent variable that is predictable from the independent variables. Its value typically ranges from 0 to 1, and the closer it is to 1, the better the model fits the data. It is calculated as:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $\bar{y}$ is the mean of the actual values $y_i$.
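For reference, the four metrics can be computed with a few lines of standard-library Python. This is a minimal sketch using the conventional definitions, with MAPE expressed in percent; the function names and toy values are illustrative and not taken from the study.

```python
import math


def rmse(actual, predicted):
    """Root mean square error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be non-zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def r2(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical toy values, each prediction off by exactly 10.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]
scores = (rmse(actual, predicted), mae(actual, predicted),
          mape(actual, predicted), r2(actual, predicted))
```

In this toy case every error has the same magnitude, so RMSE and MAE coincide. RMSE exceeds MAE whenever the error magnitudes differ.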

4.3. Predictive Performance

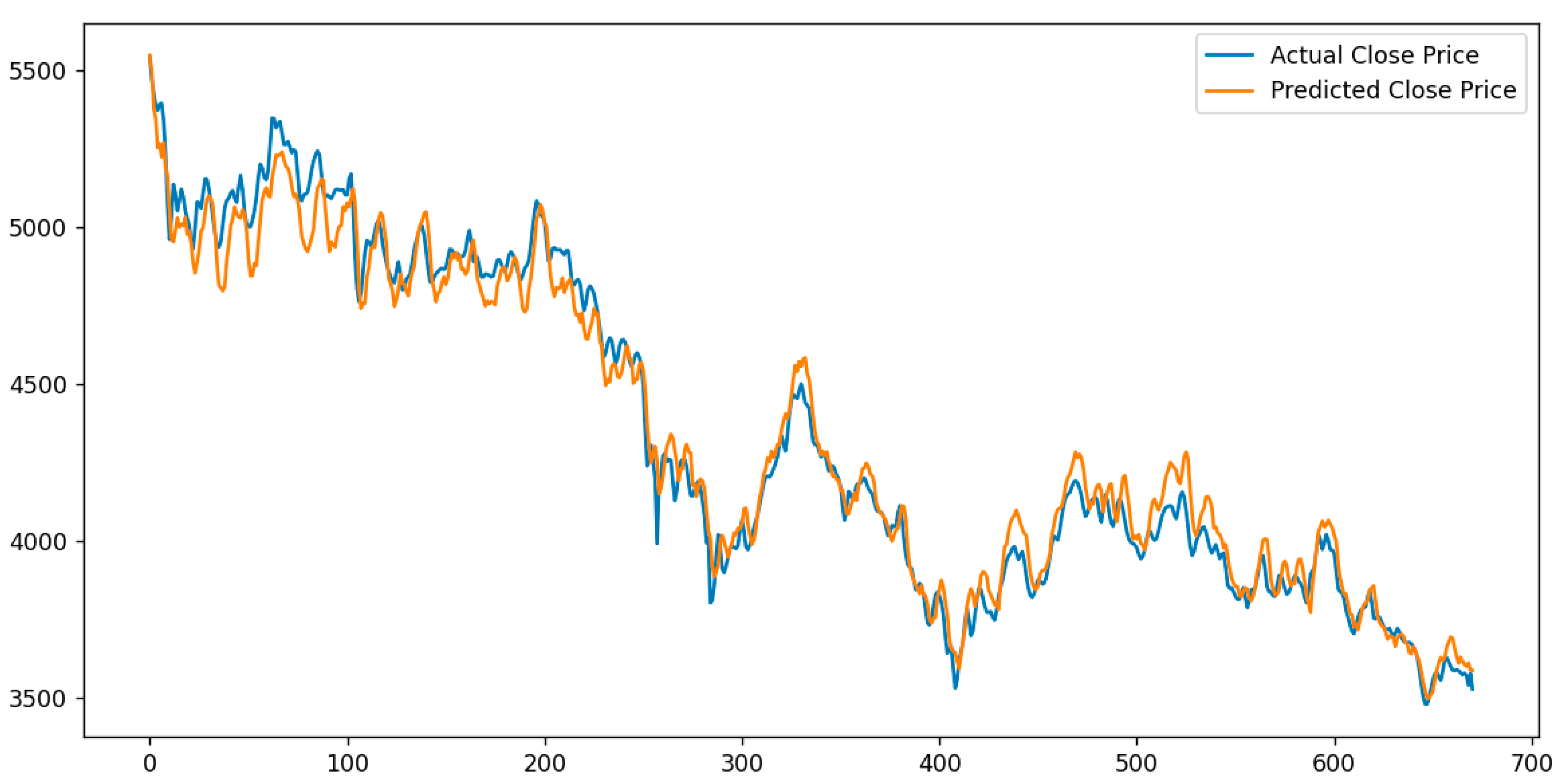

The MHABiLSTM model uses a sliding window approach with a time window of 60 trading days to construct training samples. This design enables the model to effectively capture and fit the long-term trend of stock prices (see Figure 5).
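The sliding-window construction can be sketched as below. For brevity the sketch uses a single series and a one-step-ahead target, both of which are assumptions; in the setting described here each window element would be a vector of the four principal components, and the target would be the closing price. The function name and toy data are hypothetical.

```python
def make_windows(series, window=60):
    """Pair each run of `window` consecutive values with the value that follows it."""
    inputs, targets = [], []
    for end in range(window, len(series)):
        inputs.append(series[end - window:end])  # the preceding `window` observations
        targets.append(series[end])              # the next observation to predict
    return inputs, targets


prices = [float(i) for i in range(100)]  # hypothetical stand-in for a price series
X, y = make_windows(prices)
```

A series of length $N$ yields $N - 60$ samples, so the first 60 observations serve only as context and never as targets.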

In the testing set, the predicted values of the MHABiLSTM model closely followed the actual stock price trends, demonstrating satisfactory alignment in overall movement (see Table 4). The model achieved an RMSE of 45.883 and an $R^2$ of 0.959, indicating robust nonlinear modeling capabilities. However, certain discrepancies were observed in local short-term fluctuations, suggesting that the model may still have limitations in capturing rapid, high-frequency variations.

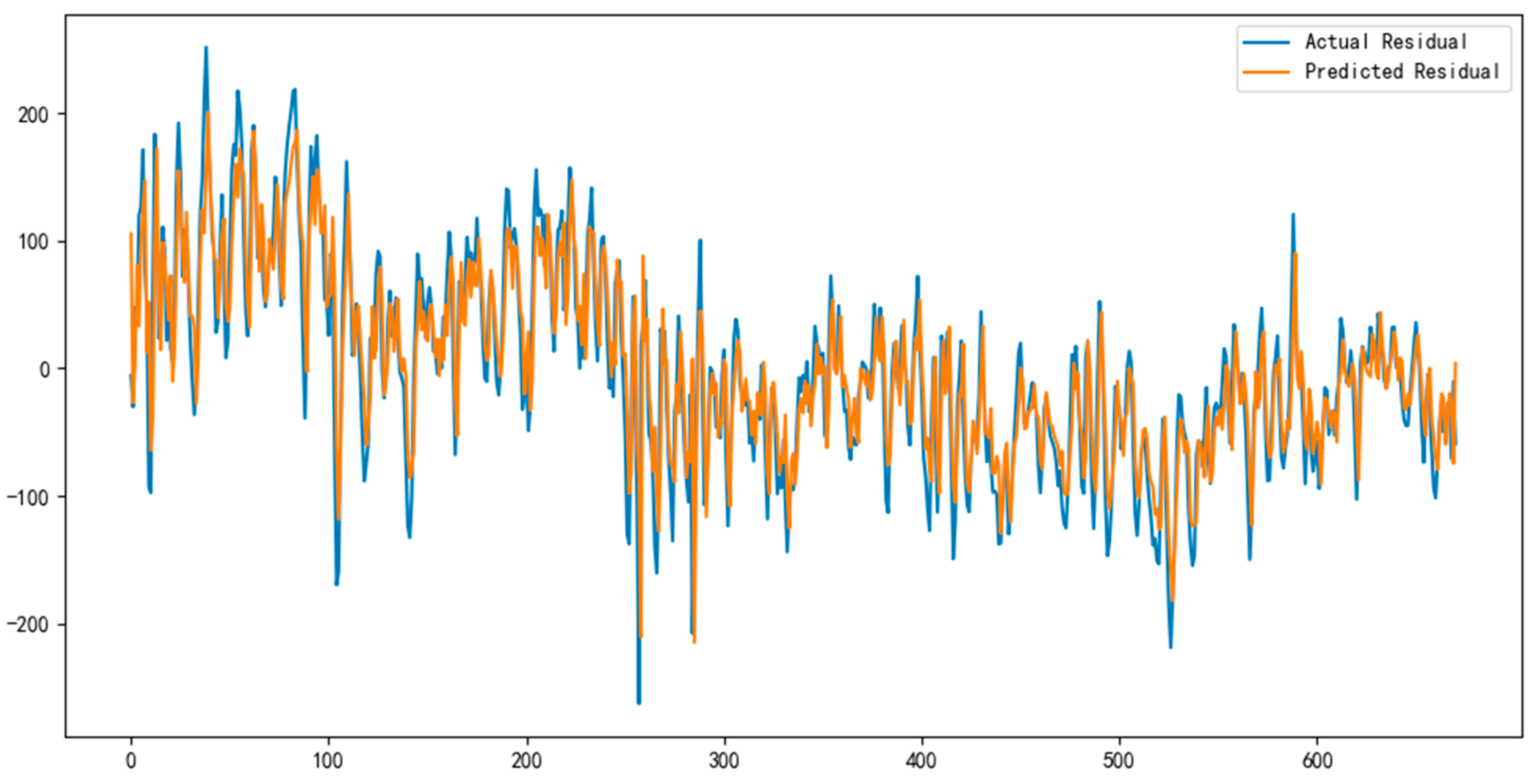

To further address the MHABiLSTM model’s limitations in capturing linear trends, an ARIMA model was applied to its residual sequence (see Figure 6). By extracting the linear trend components from the residuals, the ARIMA model served to correct systematic errors and enhance the overall prediction accuracy.

After training and fitting the ARIMA model, its predicted residuals were added to the MHABiLSTM predictions to obtain the final forecasts of the MHABiLSTM-ARIMA hybrid model. A comparison between the predicted values and the actual values on the test set is illustrated in Figure 7.
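The additive correction can be written compactly as follows. The notation is introduced here for illustration and is not taken from the original: $y_t$ is the actual closing price, $\hat{y}_t^{\mathrm{M}}$ the MHABiLSTM prediction, $e_t$ its residual, and $\hat{e}_t$ the ARIMA forecast of that residual.

$$e_t = y_t - \hat{y}_t^{\mathrm{M}}, \qquad \hat{y}_t^{\mathrm{hybrid}} = \hat{y}_t^{\mathrm{M}} + \hat{e}_t$$

If the ARIMA forecast matched the residual exactly ($\hat{e}_t = e_t$), the hybrid forecast would equal $y_t$. In practice the correction removes only the part of the residual that is linearly predictable from its own past.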

The results in Table 5 indicate that the MHABiLSTM-ARIMA hybrid model demonstrates clear improvements over the standalone MHABiLSTM model in metrics such as RMSE and MAE. Specifically, the RMSE decreased to 41.750, and the $R^2$ increased to 0.966, suggesting that the ARIMA component effectively compensates for the deep learning model’s limitations in capturing linear structures.

By linearly correcting the prediction errors of the MHABiLSTM model using ARIMA and fusing the results, the RMSE was reduced by 9.01% relative to the standalone MHABiLSTM model (from 45.883 to 41.750), and the $R^2$ moved closer to 1. This confirms that ARIMA effectively compensates for MHABiLSTM’s deficiencies in modeling linear trends.
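The reported reduction follows directly from the two RMSE values given above:

$$\frac{45.883 - 41.750}{45.883} \times 100\% = \frac{4.133}{45.883} \times 100\% \approx 9.01\%$$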

The XGBoost model also demonstrated promising performance in capturing long-term trends. A comparison between the predicted and actual stock prices of the CSI 300 Index on the test set is shown in Figure 8.

Table 6 presents the predictive performance of the XGBoost model on the test dataset. The model achieved an $R^2$ of 0.988, demonstrating effective capability in feature extraction. However, due to the lack of explicit temporal structure modeling, the model showed limited ability to capture short-term fluctuations, resulting in a relatively higher RMSE of 60.793.

The XGBoost model captured the overall directional movement of CSI 300 stock prices well, with strong long-term trend fitting, but it exhibited noticeable bias in modeling short-term fluctuations. Its MAE of 32.107 indicates that single-point prediction errors were generally within an acceptable range, whereas the RMSE of 60.793 points to relatively large deviations in certain periods, especially during sharp price swings. Because RMSE weights large errors more heavily than MAE, the gap between the two reflects a limited number of large misses rather than uniformly poor predictions. Therefore, integrating sequence-based models to enhance short-term fluctuation modeling, or employing ensemble learning to capture nonlinear trend segments, can further improve forecasting performance.

To this end, the MHABiLSTM-ARIMA and XGBoost model outputs were integrated using the error reciprocal weighting method [36]. This method dynamically allocates greater weight to the model with smaller prediction error on the training set, offering simplicity, adaptability, and effective generalization.
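A common form of error reciprocal weighting sets each model's weight proportional to the inverse of its error, $w_i = (1/e_i) / \sum_j (1/e_j)$. The sketch below assumes this form; the exact error measure and formula used in [36] are not given in the text, and the function names, error values, and predictions are hypothetical.

```python
def inverse_error_weights(errors):
    """Weights proportional to 1 / error, normalized to sum to 1 (errors must be > 0)."""
    inverse = [1.0 / e for e in errors]
    total = sum(inverse)
    return [v / total for v in inverse]


def fuse(predictions, weights):
    """Weighted sum of the models' predictions at each time step."""
    return [sum(w * p for w, p in zip(weights, step)) for step in zip(*predictions)]


# Hypothetical training errors for two models: the first is three times more accurate.
weights = inverse_error_weights([2.0, 6.0])
# One list of predictions per model, aligned by time step (toy values).
fused = fuse([[100.0, 104.0], [108.0, 112.0]], weights)
```

With these toy errors the weights are 0.75 and 0.25, so the fused forecast lies closer to the more accurate model at every step.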

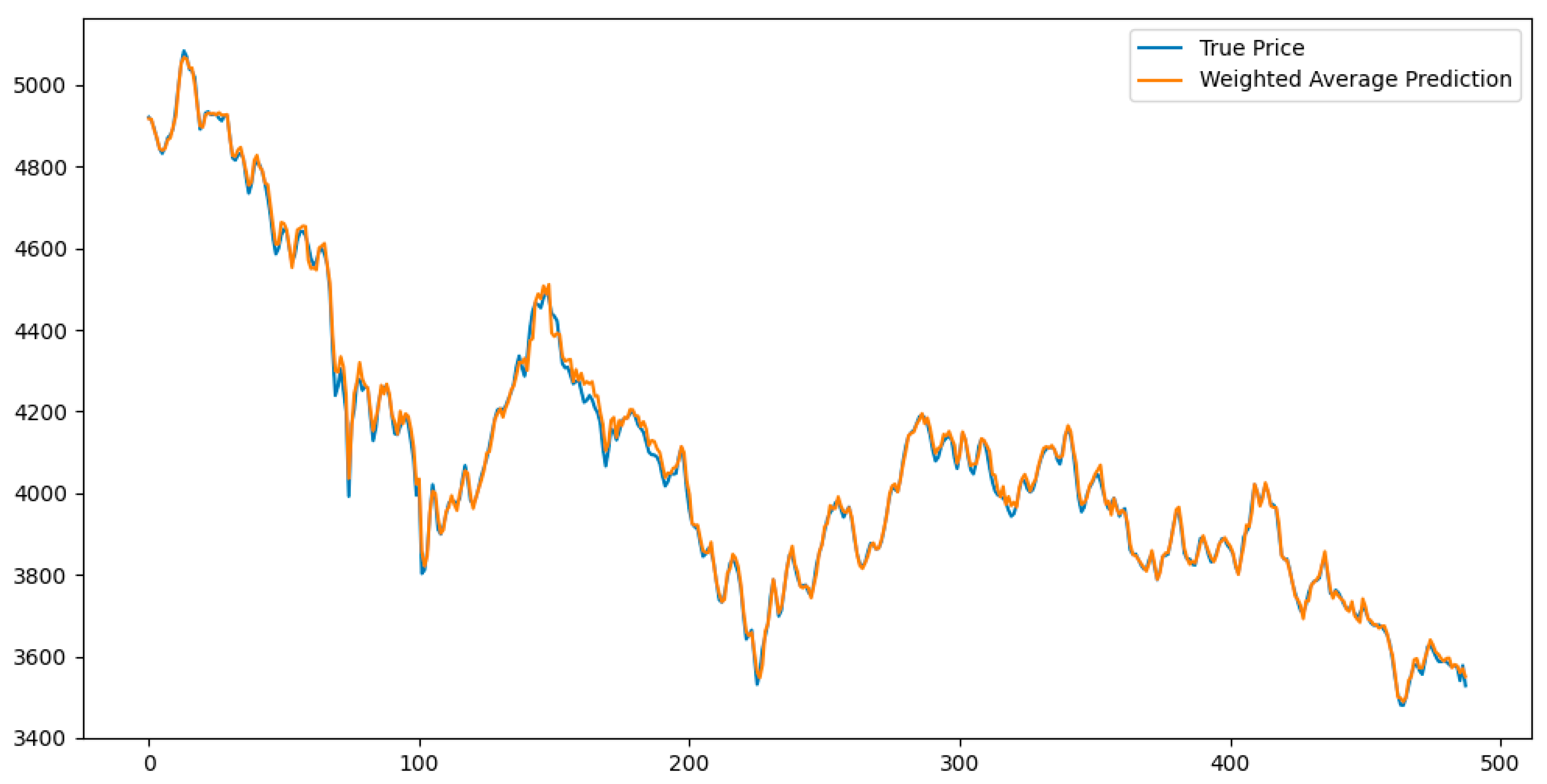

By applying this inverse-error-weighted fusion strategy, the final forecast for CSI 300 stock prices was obtained. Figure 9 illustrates the comparison between the fused predictions and the actual values on the test set, validating the effectiveness of the ensemble strategy in enhancing forecast accuracy and variance explanation.

Table 7 presents the predictive performance of the MHABiLSTM-ARIMA-XGBoost ensemble model on the test dataset. The results show that the model achieves satisfactory accuracy, with an RMSE of 20.664, MAE of 21.810, MAPE of 0.488, and an $R^2$ of 0.998, indicating that the ensemble strategy effectively enhances both prediction precision and variance explanation capacity.

The predictions of the MHABiLSTM-ARIMA-XGBoost ensemble model are highly consistent with the actual trends of the CSI 300 Index, capturing both long-term movements and short-term fluctuations. The model achieved an RMSE of 20.664, MAE of 21.810, MAPE of 0.488, and an $R^2$ approaching 1, indicating a substantial improvement in prediction accuracy. The improved performance stems from the complete modeling pipeline: wavelet-denoised data were first processed by MHABiLSTM to extract nonlinear patterns, ARIMA was then applied to correct the residual linear trend, and finally the XGBoost predictions were fused with the MHABiLSTM-ARIMA outputs through inverse-error weighting. This combined approach outperforms each individual model, supporting the accuracy and robustness of the proposed ensemble framework.

The prediction results for the CSI 300 Index further demonstrate notable performance differences among the tested models. A comparative analysis (see Table 8) clearly shows that the MHABiLSTM-ARIMA-XGBoost ensemble yields the highest $R^2$ and the lowest values for RMSE, MAE, and MAPE, highlighting its superior predictive capabilities. By combining the nonlinear modeling strength of MHABiLSTM, the linear trend-capturing ability of ARIMA, and the feature learning power of XGBoost, the ensemble model effectively leverages the strengths of each component. This hybrid integration clearly enhances its ability to forecast both long-term trends and short-term volatility, delivering better overall performance than any single model.

4.5. Generalization Capability Validation

To evaluate the proposed ensemble model’s applicability across different financial assets and further verify its stability and generalization capability, an empirical analysis was conducted using individual stock data from Fuyao Glass (600660.SH), in addition to the CSI 300 Index. The dataset spans from 25 November 2013 to 24 November 2023, covering a total of 2436 trading days. Following the same preprocessing pipeline, all models were trained and tested using features derived from wavelet denoising and PCA.

Table 10 presents the predictive results of each model on the Fuyao Glass test set. Compared to the MHABiLSTM model, the MHABiLSTM-ARIMA-XGBoost ensemble reduced RMSE, MAE, and MAPE by 43.6%, 46.1%, and 46.1%, respectively. Relative to XGBoost, the reductions were 22.2%, 34.5%, and 24.7%, respectively. The model also achieved an $R^2$ of 0.971, indicating satisfactory fitting performance.

The results demonstrate that the MHABiLSTM-ARIMA-XGBoost model not only performs well on index-level data, but also consistently achieves reliable and stable predictive performance at the individual stock level.

By integrating the strengths of multiple model types, the ensemble effectively captures nonlinear patterns, linear trends, and complex feature interactions in financial time series, validating its robustness and broad applicability across diverse asset classes.