1. Introduction

Batteries are a crucial energy carrier in diverse fields such as consumer electronics, electric vehicles, and smart grids [1,2,3,4]. Currently, the commonly used types of batteries include lead–acid, nickel–metal hydride, and lithium-ion batteries [5,6,7,8]. Lithium-ion batteries are highly sought after owing to their long lifespan and high energy density [9,10,11,12].

In battery systems, the state of charge (SOC) is a crucial indicator that represents a battery’s available capacity [13]. However, the SOC is difficult to measure directly because of the battery’s chemical complexity, nonlinear response, and potential measurement errors [14,15]. Inaccurate SOC estimation can result in overcharging or over-discharging of the battery, potentially leading to thermal runaway, fire, or even explosion in severe cases [16,17,18].

Various SOC estimation methods have been proposed. Traditional methods include the Coulomb counting (CC) method [19] and the open-circuit voltage (OCV) method [20]. The CC method integrates the current over time; however, current measurement errors accumulate with this approach, reducing estimation accuracy. The OCV method establishes a look-up table from which a precise SOC estimate is obtained by matching the measured OCV with the corresponding SOC value. However, during operation, the OCV is difficult to measure or calculate because of varying currents [20].

Model-based filtering methods are a reliable alternative, including the sliding mode observer [21], the H-infinity observer [22,23], the Kalman filter (KF) [24,25], and others. The KF is widely used among these methods owing to its recursive structure and strong state estimation ability. However, the standard KF is limited to linear models, whereas battery models are nonlinear. To overcome this limitation, the extended Kalman filter (EKF) has been employed for SOC estimation [24]. However, the EKF linearizes the system with a first-order Taylor expansion and discards the higher-order terms, which reduces filtering accuracy. The unscented Kalman filter (UKF) has therefore been adopted as a substitute. However, the fixed noise covariances set at initialization do not adjust to the battery’s operating dynamics, which can cause significant fluctuations in the estimation results. To update the noise adaptively, Wang et al. [25] employed an adaptive algorithm that allows the noise to vary with battery operation. Validation on a third-order resistance–capacitance model showed that this method achieved better accuracy and robustness than the UKF. Various modifications of model-based filtering methods continue to be proposed to enhance the robustness of the results. However, building an accurate and universal battery model is challenging because of the inherent complexity of the chemical reactions and dynamic environments within batteries [26].

Compared with model-based filtering methods, data-driven approaches use extensive relevant data to establish mathematical relationships between the SOC and input parameters, avoiding the need to construct intricate mechanistic models [27]. Common data-driven methods include support vector machines [28], neural networks [29,30,31,32,33], and enhanced networks [34]. For instance, Deng et al. [30] proposed a data-driven approach based on Gaussian process regression (GPR) to address SOC estimation challenges in battery packs, and the results verified that the proposed model outperformed the regular model. Chaoui et al. [31] and Hong et al. [33] applied a recurrent neural network (RNN) and a long short-term memory (LSTM) network to online electric vehicle battery analysis. The results demonstrated that the LSTM network exhibited stronger and more efficient prediction capabilities. To achieve optimal performance in deep learning methods, optimization algorithms are used to tune the network. Mao et al. [34] used particle swarm optimization to optimize a neural network for more precise SOC estimation; however, the results did not meet expectations, suggesting that a more effective optimization algorithm could yield better outcomes. Abualigah et al. [35] introduced the Arithmetic Optimization Algorithm (AOA) and evaluated its performance on real engineering problems.

Existing data-driven methods primarily rely on neural networks for SOC estimation and enhance accuracy through optimization algorithms; with suitable networks and optimization algorithms, improved estimates can be achieved. However, data-driven methods require a substantial amount of data, and noise in the dataset can cause significant estimation errors [36]. In response, researchers have designed hybrid methods that leverage the advantages of different approaches. Common hybrid methods include segmented models that combine traditional SOC estimation methods with model-based filtering methods [37] and fusion models that integrate data-driven methods with model-based filtering methods [38,39,40,41]. For instance, Misyris et al. [37] combined the OCV method with a linear Kalman filter to achieve both short-term and long-term SOC estimation. However, this method relies on an accurate equivalent battery model and struggles to estimate the SOC of nonlinear battery systems effectively. Charkhgard et al. [38] presented a hybrid model combining a neural network (NN) and an EKF, in which the NN performed an initial SOC estimation using historical battery data and the EKF refined the estimates based on the battery’s terminal voltage. This approach improved estimation accuracy and reduced disturbances compared with using either the NN or the EKF alone. However, the improvement was limited by the constituent methods: the traditional NN had difficulty predicting time-series data effectively, and the EKF struggled to achieve high-precision filtering for nonlinear systems such as batteries. He et al. [39] and Cui et al. [40] therefore developed hybrid methods combining neural networks with the UKF. However, the neural networks used in [39,40] do not fully exploit historical data to strengthen the relationship between the input data and the SOC. Moreover, the fixed noise statistics of the UKF, determined from prior knowledge, can cause filtering divergence and thus errors in system state estimation. Zhang et al. [41] combined an LSTM network enhanced by an attention mechanism with the KF for SOC estimation. The LSTM network handled time-series data effectively, the attention mechanism strengthened the correlations among the input data, and the KF refined the final SOC estimate. However, this model requires manual tuning of the LSTM hyperparameters, which is highly time-consuming. In summary, existing hybrid methods typically combine neural networks with Kalman filtering algorithms, but current research lacks suitable algorithms for optimizing network hyperparameters, and the filtering algorithms employed must handle nonlinear systems while adjusting the noise adaptively. There is thus a need for a combined framework that achieves two goals: optimizing the initial hyperparameters of the data-driven method and adaptively updating the noise to ensure precise and consistent SOC estimation.

In this paper, a combined framework is presented that integrates an LSTM network optimized by an Improved Arithmetic Optimization Algorithm (IAOA) with an Adaptive Unscented Kalman Filter (AUKF). The framework addresses three challenges, namely optimizing the LSTM hyperparameters, handling nonlinear systems, and adapting the noise covariance, thus facilitating accurate SOC estimation. The LSTM network provides a preliminary SOC estimate, the IAOA determines the network’s optimal hyperparameters, and the AUKF produces the final SOC estimate. The main contributions are summarized as follows:

- (a) This study presents a hybrid framework that combines a data-driven approach (LSTM) with a model-based filtering method (AUKF) for estimating the state of charge (SOC). The framework automates the time-consuming hyperparameter tuning process and combines the predictive strength of deep learning with the error-correction strength of the AUKF, yielding an efficient and robust solution for high-accuracy SOC estimation that does not require complex battery modeling.

- (b) An IAOA is incorporated within the proposed framework to efficiently optimize the LSTM network’s hyperparameters. The IAOA enhances the standard Arithmetic Optimization Algorithm (AOA) in two key aspects: an adaptive iteration mechanism that improves computational efficiency by terminating early once a fitness threshold is met, and a genetic mutation strategy that helps the algorithm escape local optima, thereby enhancing its global search capability.

- (c) The AUKF adaptively updates its noise covariance in real time based on the battery’s operating state, thereby reducing estimation errors. Its integration further improves the accuracy and robustness of the estimation, especially under the complex and dynamic conditions found in real-world applications.

- (d) The proposed framework is rigorously validated not only on a public dataset under various standard conditions but also on operational data from two distinct real-world electric vehicle battery packs, confirming its practical effectiveness and generalization capability.

The remainder of this paper is organized as follows. Section 2 provides a detailed description of the proposed method. Section 3 analyzes its performance and practicality. Finally, Section 4 summarizes the paper.

2. Methodology

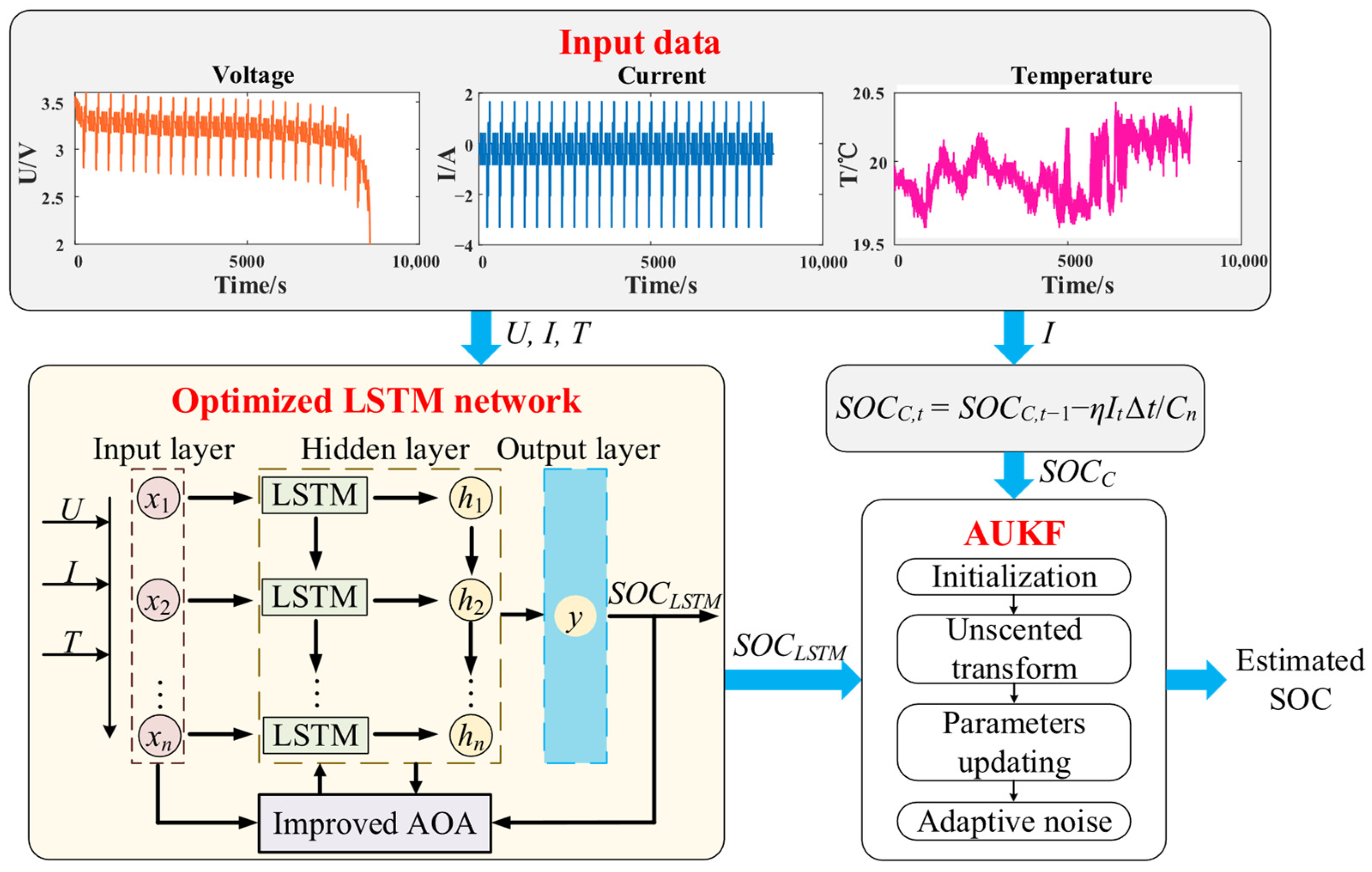

Figure 1 depicts the combined framework for SOC estimation. It comprises three main components: LSTM construction, IAOA for parameter optimization, and AUKF correction. The IAOA is utilized to determine the initial hyperparameters for the LSTM network. The SOC estimation from the optimized LSTM network serves as the output of the measurement function, while the SOC estimates from Coulomb counting (CC) are selected as the output of the state function. By incorporating the AUKF and combining these two outputs, an accurate SOC estimation is ultimately obtained.

2.1. Structure of the LSTM Network

RNNs have been widely applied to model sequential data with nonlinear features over time. However, RNNs struggle to capture long-term dependencies within time series because gradients tend to vanish or explode during backpropagation through time, which can cause training to fail. The LSTM was developed as an enhanced RNN specifically to overcome this challenge and handle long-term dependencies.

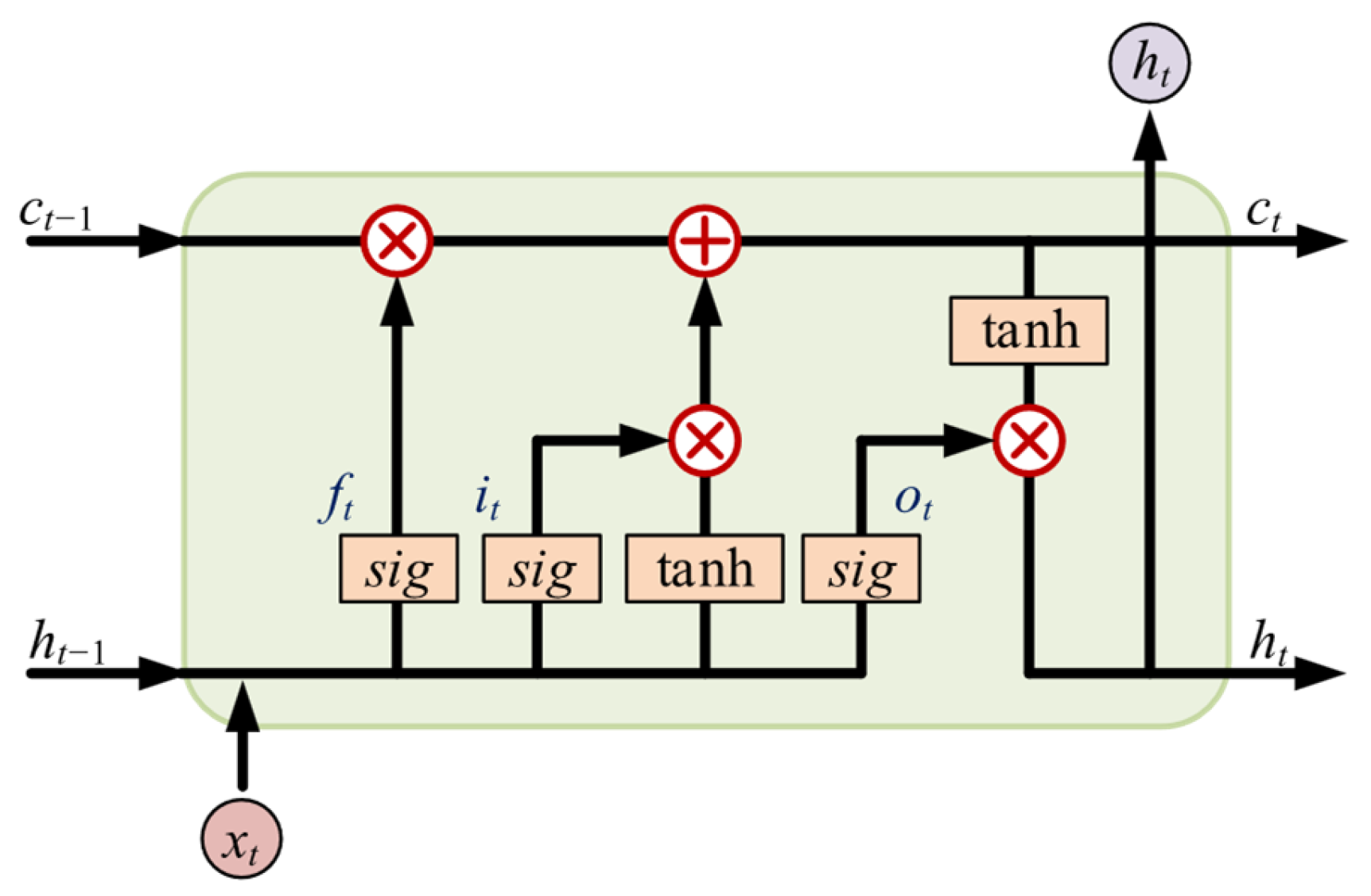

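The structure of an LSTM cell is depicted in Figure 2. The LSTM cell comprises three gates: the forget gate, the input gate, and the output gate. These gates collaborate to determine whether to retain or discard newly received information. Specifically, the input gate decides how much of the current input should be saved to the cell state; the forget gate determines how much of the previous cell state is retained in the current cell state; and the output gate controls how much of the current cell state is passed on as the current output value. The LSTM unit is described by (1):

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
\tag{1}
$$

where $W_f$, $W_i$, $W_c$, $W_o$ and $b_f$, $b_i$, $b_c$, $b_o$ represent the weights and biases; $x_t$, $h_t$, and $c_t$ denote the input sequence, output data, and memory of the hidden cell at time $t$; $\odot$ denotes element-wise multiplication; and $\sigma$ denotes the sigmoid activation function.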

Within the LSTM unit, the input data $x_t$ is combined with the output $h_{t-1}$ from the previous time step; each is multiplied by its respective weights and added to a bias to obtain the forget gate factor $f_t$. The forget gate factor determines how much of the previous memory cell $c_{t-1}$ is carried into the new memory cell $c_t$, and thereby influences the output of the current unit.

Simultaneously, the input gate factor $i_t$ participates in updating the memory cell, so that $c_t$ depends on both the previous memory cell and the current input factor $\tilde{c}_t$. Finally, the output gate factor $o_t$ regulates the value of the output data $h_t$.
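To make the gate interactions concrete, the NumPy sketch below performs one forward step of a standard LSTM cell following the gate equations in (1). The helper names `sigmoid` and `lstm_cell_step`, the dictionary weight layout, and the random weights are illustrative assumptions; in practice such a network would be built with a deep learning framework rather than by hand.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid, the activation denoted by sigma."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_cell_step(x_t, h_prev, c_prev, W, b):
    """One forward step of a standard LSTM cell.

    W maps each gate name ('f', 'i', 'c', 'o') to a weight matrix of shape
    (hidden, hidden + n_in); b maps each gate name to a bias of shape (hidden,).
    """
    z = np.concatenate([h_prev, x_t])       # stacked [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])      # forget gate factor
    i_t = sigmoid(W["i"] @ z + b["i"])      # input gate factor
    c_hat = np.tanh(W["c"] @ z + b["c"])    # current input (candidate) factor
    c_t = f_t * c_prev + i_t * c_hat        # new memory cell
    o_t = sigmoid(W["o"] @ z + b["o"])      # output gate factor
    h_t = o_t * np.tanh(c_t)                # output data
    return h_t, c_t


# Usage: inputs (V, I, T), hidden size 8, random weights for illustration only.
rng = np.random.default_rng(0)
n_in, hidden = 3, 8
W = {g: rng.normal(scale=0.1, size=(hidden, hidden + n_in)) for g in "fico"}
b = {g: np.zeros(hidden) for g in "fico"}
h, c = np.zeros(hidden), np.zeros(hidden)
for x in rng.normal(size=(5, n_in)):        # a short sequence of 5 samples
    h, c = lstm_cell_step(x, h, c, W, b)
print(h.shape)  # (8,)
```

Because $h_t$ is the product of a sigmoid and a tanh, every component of the output stays strictly inside (−1, 1), regardless of the weights.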

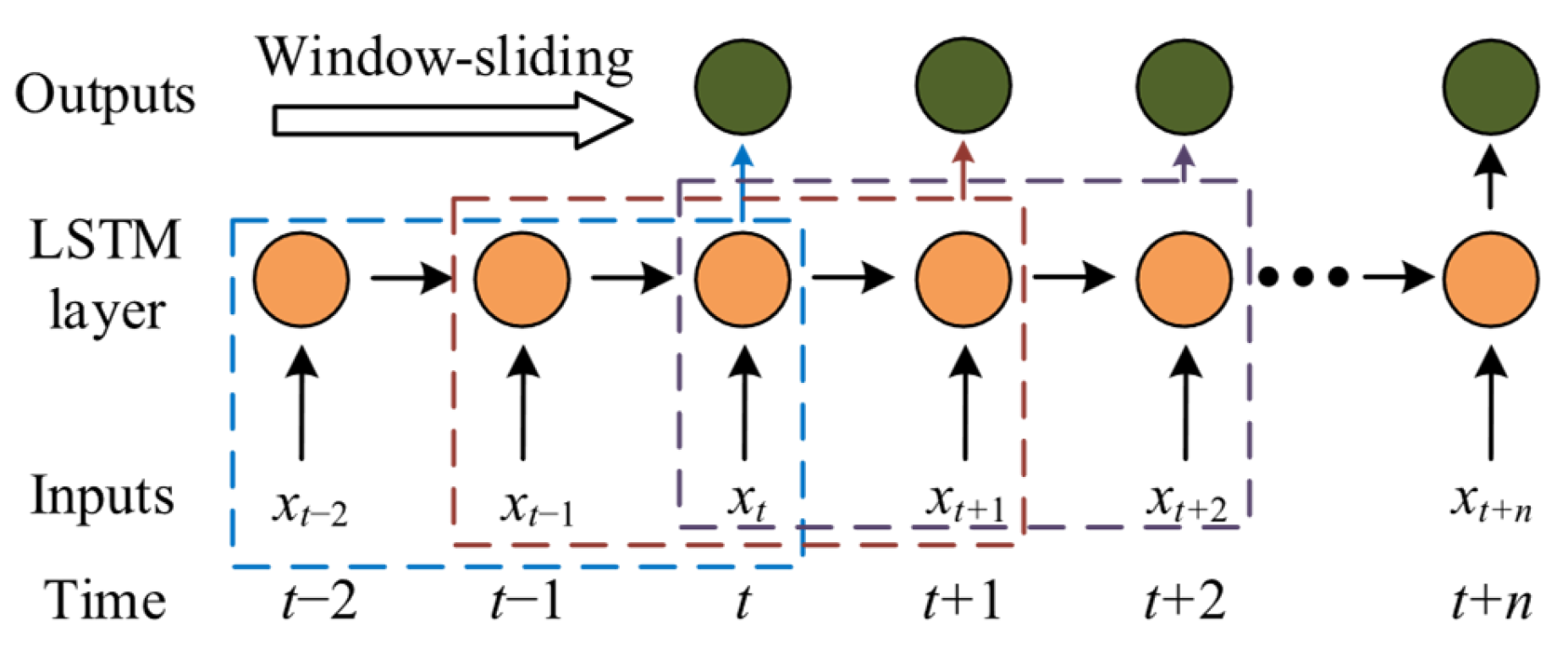

The LSTM network takes battery voltage, current, and temperature as inputs, represented as $x_t = [V_t, I_t, T_t]$, and outputs the corresponding SOC value $\mathrm{SOC}_t$. To make better use of the historical information in the input data, an information enhancement unit was designed, whose architecture is illustrated in Figure 3. This unit employs a sliding window with interval $s$ to reconstruct each input as a sequence of $k$ historical samples, where $k$ represents the number of time steps.
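The information enhancement unit can be sketched as below, under the assumption (ours, not stated explicitly in the text) that each window holds k samples spaced s steps apart and is labeled with the SOC at its last sample; s = 4 and k = 30 are the values reported in Section 3.1. The function name `make_windows` and the synthetic data are hypothetical.

```python
import numpy as np


def make_windows(features, soc, k=30, s=4):
    """Build LSTM input sequences of k samples spaced s steps apart.

    features: array of shape (N, 3) with columns (V, I, T).
    soc:      array of shape (N,) with the reference SOC.
    Returns X of shape (M, k, 3) and y of shape (M,), where y[m] is the
    SOC at the last (most recent) sample of window X[m].
    """
    span = (k - 1) * s                        # distance from first to last sample
    ends = np.arange(span, len(features))     # valid window end indices
    offsets = np.arange(-span, 1, s)          # k offsets: -span, ..., -s, 0
    idx = ends[:, None] + offsets[None, :]    # (M, k) index matrix
    return features[idx], soc[ends]


# Usage with synthetic data (1000 samples).
N = 1000
feats = np.column_stack([np.linspace(4.2, 3.0, N), np.ones(N), np.full(N, 25.0)])
soc = np.linspace(1.0, 0.0, N)
X, y = make_windows(feats, soc, k=30, s=4)
print(X.shape, y.shape)  # (884, 30, 3) (884,)
```

Each window covers (k − 1)·s + 1 = 117 raw samples while feeding only 30 steps to the LSTM, which is one way to reach further back in history without lengthening the recurrent sequence.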

To fully leverage the capabilities of the LSTM for accurate SOC estimation, several hyperparameters require careful configuration during training: the number of hidden layers, the number of neurons, the maximum epoch, the initial learning rate, and the dropout rate. Although increasing the number of hidden layers can enhance LSTM performance to some extent, it substantially increases computational complexity and the risk of overfitting. To balance computational efficiency against overfitting, a single hidden layer is chosen.

Regarding the optimization of remaining hyperparameters, manual tuning through repetitive experiments proves to be time-consuming and unlikely to yield optimal configurations. To address this challenge, we have designed IAOA to systematically search for and identify the optimal combination of these hyperparameters, thereby enhancing the overall efficiency of the LSTM network.

2.2. Improved Arithmetic Optimization Algorithm

The AOA is a meta-heuristic optimization approach that leverages arithmetic operators to achieve global optimization. It possesses notable advantages such as rapid convergence speed and high precision.

The standard AOA’s optimization process consists of three stages: initialization, exploration, and development (i.e., exploitation). First, the math optimizer accelerated (MOA) function is initialized to select the search stage, and the math optimizer probability (MOP) is set to guide the search toward the optimal position. MOA and MOP are calculated by Equations (2) and (3), respectively:

$$\mathrm{MOA}(C_t) = \mathrm{Min} + C_t \times \frac{\mathrm{Max} - \mathrm{Min}}{M_t} \tag{2}$$

$$\mathrm{MOP}(C_t) = 1 - \frac{C_t^{1/\alpha}}{M_t^{1/\alpha}} \tag{3}$$

where $C_t$ represents the current iteration, $M_t$ represents the maximum number of iterations, Min and Max represent the lower and upper bounds of the MOA, and $\alpha$ denotes the sensitive parameter defining the convergence accuracy during the iterative process.
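A minimal sketch of the two schedules in Equations (2) and (3) follows. The bounds Min = 0.2 and Max = 1.0 are values commonly used with the standard AOA and are assumptions here; alpha = 5 corresponds to the sensitive parameter reported in Section 3.1.

```python
def moa(ct, mt, moa_min=0.2, moa_max=1.0):
    """Math optimizer accelerated, Equation (2): rises linearly from moa_min to moa_max."""
    return moa_min + ct * (moa_max - moa_min) / mt


def mop(ct, mt, alpha=5.0):
    """Math optimizer probability, Equation (3): decays from 1 toward 0."""
    return 1.0 - ct ** (1.0 / alpha) / mt ** (1.0 / alpha)


# Schedules over Mt = 100 iterations: MOA grows, so the test r1 > MOA selects
# exploration less often and development more often as iterations proceed,
# while MOP shrinks, reducing the step size of the arithmetic operators.
for ct in (1, 25, 50, 75, 100):
    print(ct, round(moa(ct, 100), 3), round(mop(ct, 100), 3))
```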

Next, a random number r1 between 0 and 1 is generated to determine whether the algorithm enters the exploration stage (r1 > MOA) or the development stage. During exploration, the AOA conducts a global search within the search interval using division- and multiplication-based strategies. The development stage instead performs high-precision local search using subtraction and addition operations. Random numbers r2 (exploration) and r3 (development) determine which of these operations is employed.

Lastly, a fitness function evaluates the optimality of each individual in every iteration. After $M_t$ iterations, the optimal individual gives the combination of hyperparameters (the number of neurons, the maximum epoch, the initial learning rate, and the dropout rate). The RMSE is adopted as the fitness function, as given by (4):

$$\mathrm{RMSE}_j = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_{j,i} - y_i\right)^2} \tag{4}$$

where $N$ represents the data sample size, $j$ represents the current individual number, $\hat{y}_{j,i}$ represents the SOC value estimated with individual $j$, and $y_i$ represents the actual SOC value.
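The fitness evaluation in (4) reduces to an RMSE between estimated and reference SOC sequences. The sketch below assumes SOC is stored as a fraction in [0, 1], so the threshold FF = 0.01 from Section 3.1 corresponds to an RMSE of 1%. Producing `soc_est` for an individual (training and validating an LSTM with its hyperparameters) is not shown.

```python
import numpy as np


def rmse(soc_est, soc_true):
    """Root-mean-square error between estimated and reference SOC (the fitness value)."""
    soc_est = np.asarray(soc_est, dtype=float)
    soc_true = np.asarray(soc_true, dtype=float)
    return float(np.sqrt(np.mean((soc_est - soc_true) ** 2)))


print(rmse([0.98, 0.90, 0.81], [1.00, 0.90, 0.80]))  # about 0.0129, i.e. 1.29%
```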

However, the standard AOA has two notable issues. First, it iterates until $C_t$ reaches $M_t$ even if the fitness function value is already sufficiently small, which incurs additional computational cost. Second, it may converge to a local optimum during iteration, so the optimal individual may fail to meet the desired requirements. Two enhancements address these problems. First, an adaptive iteration method dynamically adjusts the number of iterations based on the convergence status, reducing unnecessary computation and improving efficiency. Second, a genetic mutation technique is integrated into the AOA, enhancing its global search capability and mitigating the risk of becoming trapped in local optima.

In contrast to the standard iteration process, the adaptive iteration approach uses the optimal fitness function value (OFF) to decide whether to terminate the iteration before $C_t$ reaches $M_t$. If the OFF drops below the fitness function threshold FF, the iteration concludes early; otherwise, it continues until $C_t$ reaches $M_t$. This strategy enables rapid acquisition of the optimal individual. To avoid terminating before an acceptable solution is found, FF must be set to a small value, typically the target optimum value, so that the iteration continues until an acceptable level of optimization is reached. This prevents premature convergence to suboptimal solutions.

To prevent the algorithm from becoming trapped in local optima, a genetic mutation operator is incorporated into the AOA. This operator introduces random variations by occasionally reinitializing individuals during the update process. Such a mechanism enables the algorithm to explore new regions of the search space, thereby increasing the probability of discovering globally superior solutions.

In summary, the IAOA is employed to optimize the remaining hyperparameters of the LSTM network. The search ranges were set to [32, 50, 0.001, 0.1] for the lower bounds and [256, 300, 0.01, 0.5] for the upper bounds, corresponding to the number of neurons, maximum epochs, initial learning rate, and dropout rate, respectively. The process involves initializing the parameters, updating individuals using the four arithmetic operators, and calculating fitness function values. The OFF is continuously updated during the iteration and compared with a predefined threshold to determine whether to terminate. At the end of each iteration, the individuals undergo genetic mutation. The optimal individual is then assigned to the corresponding hyperparameters, completing the construction of the LSTM network. The operational procedure of the IAOA is detailed in Algorithm 1.
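One practical detail of searching these ranges is mapping a continuous IAOA position to valid hyperparameters. The sketch below clips each position to the stated bounds and rounds the neuron count and epoch count to integers; the rounding rule, the names `decode` and `population`, and the population size of 10 are assumptions rather than details given in the text.

```python
import numpy as np

# Search bounds from the text: neurons, max epochs, initial learning rate, dropout.
LB = np.array([32, 50, 0.001, 0.1])
UB = np.array([256, 300, 0.01, 0.5])


def decode(individual):
    """Clip a continuous IAOA position to the bounds and map it to hyperparameters."""
    x = np.clip(individual, LB, UB)
    return {
        "num_neurons": int(round(x[0])),   # integer-valued
        "max_epochs": int(round(x[1])),    # integer-valued
        "learning_rate": float(x[2]),
        "dropout": float(x[3]),
    }


rng = np.random.default_rng(1)
population = LB + rng.random((10, 4)) * (UB - LB)   # 10 random initial individuals
print(decode(population[0]))
print(decode([300, 20, 0.05, 0.3]))  # out-of-range values are clipped to the bounds
```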

| Algorithm 1 Improved Arithmetic Optimization Algorithm |
| --- |
| Input: Current iteration and maximum iterations Ct, Mt, fitness function threshold FF, upper and lower limits of hyperparameters UB, LB, sensitive parameter α, and initial individual x. |
| Output: Optimal individual xbest. |
| 1: While OFF > FF && Ct < Mt % adaptive iteration |
| 2: Calculate the updated MOA and MOP. |
| 3: for i = 1: size(x, 1) |
| 4: for j = 1: size(x, 2) |
| 5: Set random numbers r1, r2, r3. |
| 6: if r1 > MOA |
| 7: Entering the exploration stage. |
| 8: Choose multiplication or division based on r2. |
| 9: else |
| 10: Entering the development stage. |
| 11: Choose addition or subtraction based on r3. |
| 12: end |
| 13: end |
| 14: Determine and update the optimal individual. |
| 15: end |
| 16: Ct = Ct + 1 |
| 17: Genetic mutation algorithm. |
| 18: end |
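Algorithm 1 can be sketched end to end in Python as follows. This is a minimal, assumed implementation: the division/multiplication and subtraction/addition updates follow the standard AOA formulation as we understand it, with control parameter mu = 0.499, Min = 0.2, and Max = 1.0 taken as assumed defaults; `alpha` plays the role of the sensitive parameter (listed as μ = 5 in Section 3.1); genetic mutation is realized as random reinitialization of each individual with probability `pm`; and `toy_fitness` is a hypothetical stand-in for training the LSTM and returning its validation RMSE.

```python
import numpy as np


def iaoa(fitness, lb, ub, pop_size=20, mt=100, ff=0.01, alpha=5.0, mu=0.499,
         pm=0.1, moa_min=0.2, moa_max=1.0, seed=0):
    """Sketch of Algorithm 1: AOA operators, adaptive early stop, random-reset mutation."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    eps = np.finfo(float).eps
    x = lb + rng.random((pop_size, lb.size)) * (ub - lb)       # initial individuals
    fit = np.array([fitness(xi) for xi in x])
    best, off = x[fit.argmin()].copy(), float(fit.min())      # OFF: best fitness so far
    ct = 0
    while off > ff and ct < mt:                                # adaptive iteration
        ct += 1
        moa = moa_min + ct * (moa_max - moa_min) / mt          # Equation (2)
        mop = 1.0 - ct ** (1.0 / alpha) / mt ** (1.0 / alpha)  # Equation (3)
        scale = (ub - lb) * mu + lb
        for i in range(pop_size):
            r1, r2, r3 = rng.random((3, lb.size))              # one draw per dimension
            new = np.where(
                r1 > moa,
                # exploration: division (r2 > 0.5) or multiplication
                np.where(r2 > 0.5, best / (mop + eps) * scale, best * mop * scale),
                # development: subtraction (r3 > 0.5) or addition
                np.where(r3 > 0.5, best - mop * scale, best + mop * scale),
            )
            new = np.clip(new, lb, ub)
            f_new = fitness(new)
            if f_new < fit[i]:                                 # keep improvements only
                x[i], fit[i] = new, f_new
            if f_new < off:
                best, off = new.copy(), float(f_new)
        # Genetic mutation: reinitialize each individual with probability pm.
        mutate = rng.random(pop_size) < pm
        if mutate.any():
            x[mutate] = lb + rng.random((int(mutate.sum()), lb.size)) * (ub - lb)
            fit[mutate] = [fitness(xi) for xi in x[mutate]]
            if fit.min() < off:
                best, off = x[fit.argmin()].copy(), float(fit.min())
    return best, off, ct


# Toy stand-in for "train the LSTM and return its validation RMSE".
lb = [32, 50, 0.001, 0.1]
ub = [256, 300, 0.01, 0.5]
target = np.array([128, 150, 0.005, 0.2])                      # hypothetical optimum


def toy_fitness(p):
    z = (np.asarray(p) - target) / (np.asarray(ub) - np.asarray(lb))
    return float(np.sqrt(np.mean(z ** 2)))


best, off, ct = iaoa(toy_fitness, lb, ub)
print(f"stopped after {ct} iterations, OFF = {off:.4f}, best = {np.round(best, 4)}")
```

Raising `ff` shortens the run through the early-termination path; if `ff` exceeds the best initial fitness, the loop body never executes and `ct` stays 0.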

2.3. Adaptive Unscented Kalman Filter

In the UKF, the noise covariances are treated as constant. However, noise values set from prior knowledge may not represent the actual, time-varying noise generated during battery operation, and estimating the system state with constant noise can lead to significant errors. To address this issue and improve estimation accuracy, an adaptive noise covariance method is employed. First, the system parameters are initialized and the mean and covariance of the state variables are computed. Then, the state variables are sampled using a sigma-point strategy, and the updated parameters are calculated through the unscented transformation. Subsequently, the cross-covariance and Kalman gain are updated based on the modified parameters. Finally, the noise covariances are tuned online to better match the noise characteristics observed during battery operation. By incorporating this adaptive noise covariance method into the UKF framework, the AUKF improves accuracy by accounting for the time-varying characteristics of the noise.

In this paper, the initial SOC estimate is produced by the IAOA-LSTM method, yielding a set of measured values. The AUKF then refines these measured values by integrating them with the Coulomb counting method, thereby achieving high-precision estimation. The proposed model is given by (5):

$$
\begin{cases}
x_t = x_{t-1} - \dfrac{\eta\, I_t\, \Delta t}{C_n} + w_t \\[4pt]
y_t = \mathrm{LSTM}\left(I_t, V_t, T_t\right) + v_t
\end{cases}
\tag{5}
$$

where $\eta$ indicates the coulombic efficiency; $I_t$, $V_t$, and $T_t$ represent the current, voltage, and temperature at time $t$, respectively; $\Delta t$ denotes the sampling interval; $C_n$ is the nominal capacity; $w_t$ and $v_t$ are the process and measurement noises with variances $Q_t$ and $R_t$; and $x_t$ and $y_t$ represent the SOC calculated by Coulomb counting and the SOC measured by the IAOA-LSTM network, respectively.
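The state equation in (5) is plain Coulomb counting. A minimal sketch of one propagation step is shown below; the conventions (SOC as a fraction, current in amperes with discharge positive, capacity in ampere-hours converted to ampere-seconds) and the 1.1 Ah example cell are assumptions for illustration. Within the filter, this function propagates each sigma point, while the IAOA-LSTM output serves as the measurement.

```python
def coulomb_counting_step(soc_prev, current, dt, capacity_ah, eta=1.0):
    """State equation of the SOC model, without the process-noise term w_t.

    Assumed conventions (not stated in the paper): SOC as a fraction in [0, 1],
    current in A with discharge positive, dt in s, capacity in Ah.
    """
    return soc_prev - eta * current * dt / (capacity_ah * 3600.0)  # Ah -> A*s


# Hypothetical 1.1 Ah cell discharged at a constant 1.1 A (1C) for one hour.
soc = 1.0
for _ in range(3600):
    soc = coulomb_counting_step(soc, current=1.1, dt=1.0, capacity_ah=1.1)
print(f"SOC after 1 h at 1C: {soc:.4f}")
```

A negative current under this convention charges the cell, so the same function covers both directions.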

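Then, the unscented transformation (UT) is applied to obtain new state variables and the joint covariance through point transformation. Sigma points are constructed from the state mean $\hat{x}_{t-1}$ and covariance $P_{x,t-1}$, and transformed sigma points $\chi_{i,t|t-1}$ and $Y_{i,t|t-1}$ are generated for the state variable and the output variable, respectively. Subsequently, the joint covariance $P_{xy,t}$ and the Kalman gain $K_{kal,t}$ of the state and output variables are calculated by (6) and (7):

$$P_{xy,t} = \sum_{i=0}^{2L} W_i^{c}\left(\chi_{i,t|t-1} - \hat{x}_{t|t-1}\right)\left(Y_{i,t|t-1} - \bar{y}_t\right)^{\mathsf{T}} \tag{6}$$

$$K_{kal,t} = P_{xy,t}\, P_{y,t}^{-1} \tag{7}$$

where $i$ represents the sample number, $L$ is the state dimension (giving $2L+1$ sigma points), $W_i^{c}$ represents the covariance weight, $\bar{y}_t$ represents the mean of the output variable, and $P_{y,t}$ indicates the covariance of the output variable.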

Finally, the noise covariances are corrected online using the error sequence between the actual output and the measurement output, as given by (8)–(10), and the corrected noise covariances are fed back into the state-space model. The AUKF procedure is summarized in Algorithm 2.
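The specific update rules (8)–(10) are not reproduced in this text. As an assumed stand-in, the sketch below uses a common innovation-based adaptive estimation (IAE) rule for the scalar case with a direct SOC measurement: the innovation covariance is estimated over a sliding window, and R is set to that estimate minus the spread of the predicted measurement, floored at a small positive value. Only R is adapted here; estimating Q from the same innovation window as well can, in this simple form, drive R toward zero, so the sketch holds Q fixed. The class name `AdaptiveR` and its defaults are hypothetical.

```python
from collections import deque

import numpy as np


class AdaptiveR:
    """Windowed innovation-based estimate of the measurement-noise variance R.

    Scalar case with measurement y = x + v (LSTM SOC treated as a direct measurement).
    """

    def __init__(self, window=30, r_min=1e-8):
        self.innov = deque(maxlen=window)   # keeps only the most recent innovations
        self.r_min = r_min

    def update(self, innovation, p_y_no_r):
        """innovation: y_t minus the predicted measurement mean;
        p_y_no_r: spread of the predicted measurement sigma points (P_y without R)."""
        self.innov.append(innovation)
        c = float(np.mean(np.square(self.innov)))   # sample innovation covariance
        return max(c - p_y_no_r, self.r_min)        # R_t = C_t - P_y^-, floored


est = AdaptiveR(window=3)
print(est.update(0.1, 0.001))  # approximately 0.009
```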

The above analysis shows that the SOC calculated by Coulomb counting is treated as the state variable and propagated through the unscented transformation to yield a predicted value. This predicted value is then combined with the measured value from the LSTM network to update the Kalman gain, state variable, and covariance, resulting in an improved SOC estimate.

| Algorithm 2 Adaptive Unscented Kalman Filter |
| --- |
| Input: Initial value of state variable x0, and number of samples n. |
| Output: State after filtering Xnew. |
| 1: Building the state space model. |
| 2: Calculate state mean x0 and covariance P0\|x. |
| 3: for t = 1: n − 1 |
| 4: Sampling sigma points. |
| 5: Unscented transform. |
| 6: Calculate joint covariance Pxy,t and Kalman gain Kkal,t. |
| 7: Update state variable Xnew,t = xt, and state covariance Px,t. |
| 8: Noise variances Qt and Rt adaptive correction. |
| 9: Update noise w(t) and v(t). |
| 10: end |
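For a scalar SOC state, Algorithm 2 can be sketched as follows: Coulomb counting propagates the sigma points, the measurement function is the identity (the LSTM output is treated as a direct, noisy SOC measurement), and R is re-estimated from a sliding window of innovations while Q is held fixed. The UT weights (alpha = 1, beta = 0, kappa = 2), the initial noise values, the synthetic 1C discharge, and the Gaussian noise standing in for LSTM errors are all assumptions; the starting value x0 = 0.7 mimics the wrong-initial-SOC test of Section 3.5.

```python
from collections import deque

import numpy as np


def aukf_soc(current, soc_meas, dt, capacity_ah, x0, p0=0.1, q=1e-7, r0=1e-3,
             eta=1.0, window=30):
    """Scalar AUKF sketch: Coulomb counting is the state model and the LSTM SOC
    output is a direct measurement (h(x) = x). Only R is adapted; Q is fixed."""
    n, alpha, beta, kappa = 1, 1.0, 0.0, 2.0
    lam = alpha ** 2 * (n + kappa) - n
    wm = np.array([lam, 0.5, 0.5]) / (n + lam)              # mean weights
    wc = wm.copy()
    wc[0] += 1.0 - alpha ** 2 + beta                         # covariance weights
    x, p, r = float(x0), p0, r0
    innovations = deque(maxlen=window)
    est = np.empty(len(soc_meas))
    for t in range(len(soc_meas)):
        # 1) Sigma points of the prior, propagated by Coulomb counting.
        s = np.sqrt((n + lam) * p)
        chi = np.array([x, x + s, x - s]) - eta * current[t] * dt / (capacity_ah * 3600.0)
        x_pred = wm @ chi
        p_pred = wc @ (chi - x_pred) ** 2 + q
        # 2) Redrawn sigma points passed through the measurement function.
        s = np.sqrt((n + lam) * p_pred)
        chi = np.array([x_pred, x_pred + s, x_pred - s])
        y_sig = chi                                          # h(x) = x
        y_pred = wm @ y_sig
        p_y_no_r = wc @ (y_sig - y_pred) ** 2
        p_y = p_y_no_r + r                                   # output covariance
        p_xy = wc @ ((chi - x_pred) * (y_sig - y_pred))      # joint covariance
        k = p_xy / p_y                                       # Kalman gain
        # 3) Correction with the LSTM measurement.
        innov = soc_meas[t] - y_pred
        x = x_pred + k * innov
        p = max(p_pred - k * p_y * k, 1e-12)
        # 4) Innovation-based adaptation of R over a sliding window.
        innovations.append(innov)
        r = max(float(np.mean(np.square(innovations))) - p_y_no_r, 1e-8)
        est[t] = x
    return est


def rmse(a, b):
    return float(np.sqrt(np.mean((np.asarray(a) - np.asarray(b)) ** 2)))


# Synthetic test: hypothetical 1.1 Ah cell, 1 s sampling, constant 1C discharge.
rng = np.random.default_rng(0)
N, dt, cap = 3600, 1.0, 1.1
current = np.full(N, 1.1)
soc_true = 1.0 - np.cumsum(current) * dt / (cap * 3600.0)
soc_lstm = soc_true + rng.normal(0.0, 0.02, N)      # stand-in for noisy LSTM output
est = aukf_soc(current, soc_lstm, dt, cap, x0=0.7)  # deliberately wrong initial SOC
print(f"LSTM-only RMSE: {rmse(soc_lstm, soc_true):.4f}")
print(f"AUKF RMSE after 100 s: {rmse(est[100:], soc_true[100:]):.4f}")
```

With a linear state model and an identity measurement, the UT reproduces the ordinary Kalman filter exactly; the sigma-point machinery pays off when the state or measurement function is nonlinear.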

3. Experiment Results and Analysis

This section provides a comprehensive description of the collected experimental data, encompassing a public battery test dataset and a set of operational data from batteries in practical engineering applications. Based on the experimental data, we have designed a series of comparative experiments to rigorously validate the superiority and generalizability of the proposed framework.

3.1. Datasets and Parameters Setting

The experimental data consists of two parts: the first part uses a public battery cell dataset to verify the predictive performance of the method, and the second part uses the battery pack data from actual engineering vehicles to verify the robustness and generalization performance of the method.

The battery cell dataset is derived from the A123 battery subset of the University of Maryland open-source battery dataset [42], with detailed information available at https://calce.umd.edu/battery-data#INR (accessed on 13 December 2023). The A123 battery cell dataset contains voltage, current, and temperature measurements under various operating conditions at multiple temperatures (0 °C, 20 °C, 30 °C, and 50 °C). It includes diverse operating profiles, specifically the Dynamic Stress Test (DST), US06 (United States Government’s Light Vehicle Drive Cycle), and the Federal Urban Driving Schedule (FUDS). This dataset is used to validate the effectiveness of the proposed framework across different operating conditions, and it was partitioned into training, validation, and test sets in a 50%/10%/40% split.

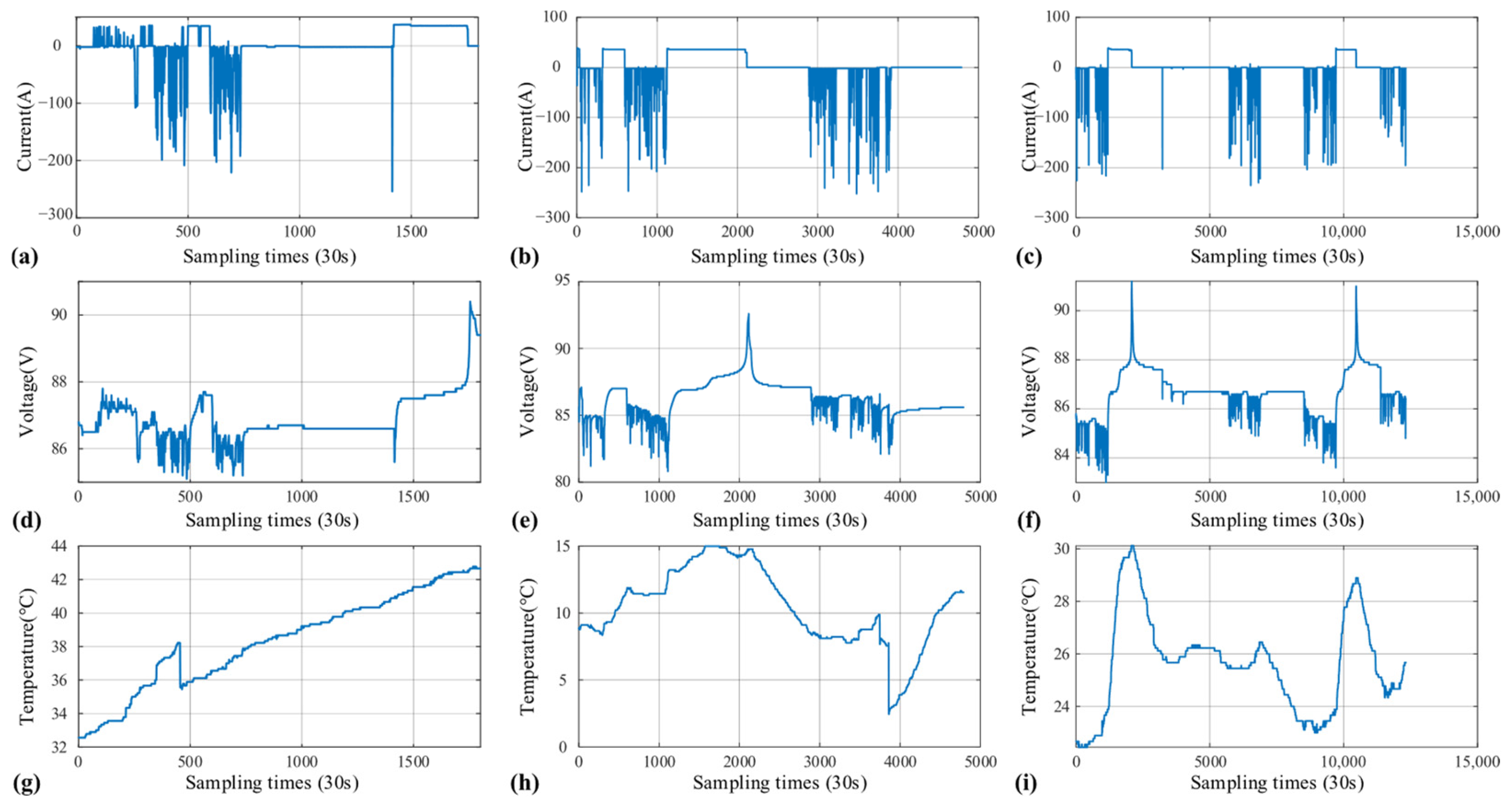

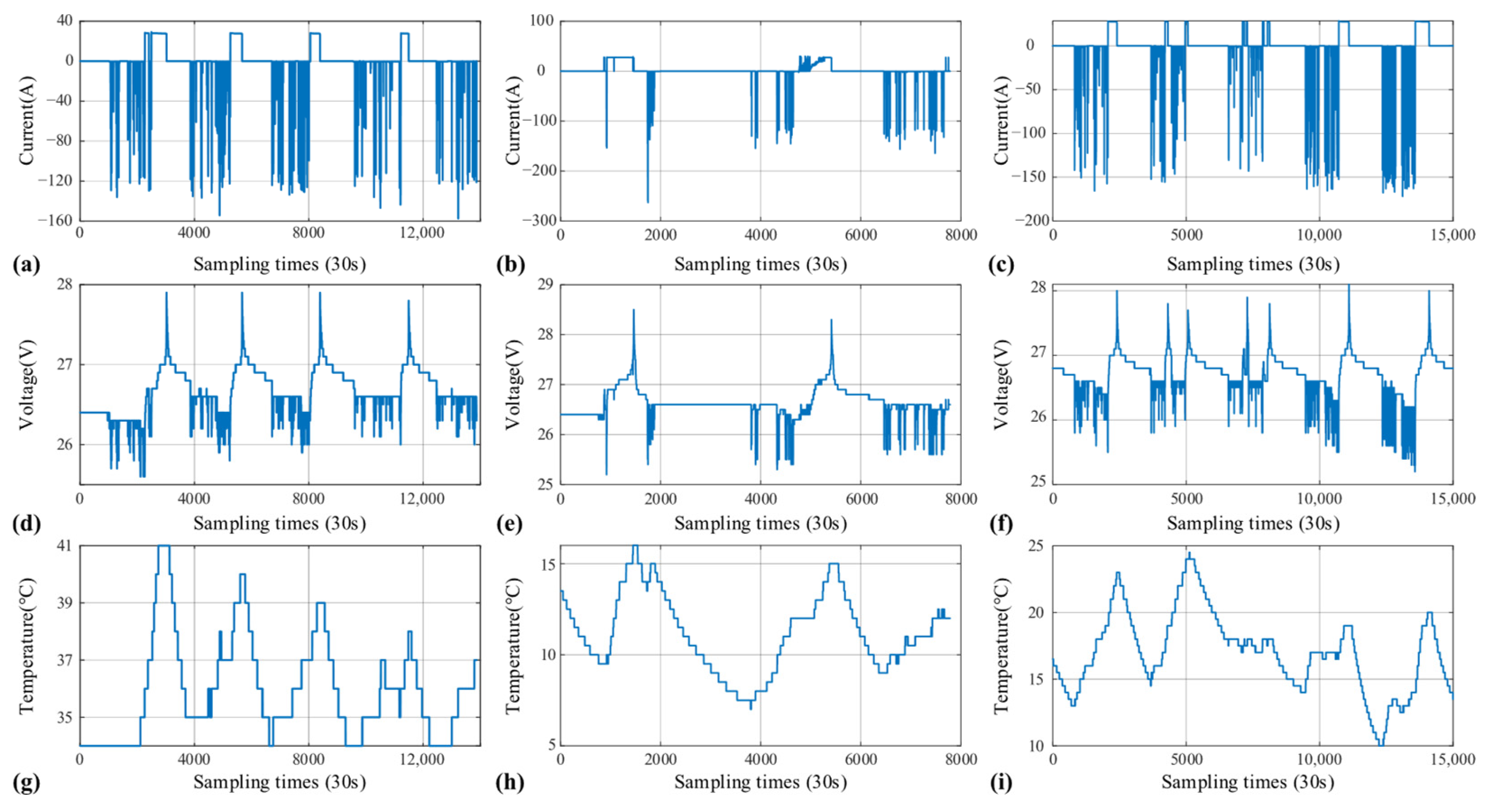

The battery pack dataset is derived from the operational data of two distinct engineering vehicles and comprises current, voltage, and temperature measurements from two vehicle battery packs in Shanghai and Hefei. The data were collected in different months of the year to evaluate the method under different temperature conditions. The historical electric vehicle data are used as the training/validation set, while the data from August 2022, December 2022, and April 2023 are used as test sets.

Table 1 summarizes the fundamental parameters of the battery cells and packs in the datasets. Additionally, Table 2 lists the initial parameters of the algorithms employed in the proposed combined framework; their settings were determined based on the following considerations:

Sliding Window Parameters (s and k): The sliding window interval (s = 4) and the number of time steps (k = 30) were chosen to provide the LSTM network with sufficient historical context for accurate time-series prediction. These values represent a trade-off between capturing long-term battery dynamics and maintaining manageable computational complexity.

IAOA Parameters (Mt, FF, μ, and Pm): The maximum number of iterations (Mt = 100) was set to give the IAOA adequate opportunity to converge to an optimal solution without incurring excessive computational time. The fitness function threshold (FF = 0.01) serves as an early stopping criterion, allowing the optimization to terminate once a sufficiently low error (RMSE < 1%) is achieved, thereby improving efficiency. The mutation probability (Pm = 0.1) maintains sufficient diversity for escaping local optima without compromising convergence through excessive mutation. The sensitive parameter (μ = 5) is the standard value recommended for the AOA, chosen to balance the algorithm’s exploration and exploitation phases.

To improve convergence, enhance model performance, prevent numerical overflow, and reduce the risk of overfitting, the data are normalized into the range [−1, 1] using (11):

$$x_i' = \frac{x_i - \bar{x}}{x_{\max} - x_{\min}} \tag{11}$$

where $x_{\max}$ and $x_{\min}$ denote the maximum and minimum values of the data, $\bar{x}$ denotes the average value, $x_i$ denotes an individual data point, and $x_i'$ is its normalized value.
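Equation (11) is a column-wise mean normalization; a minimal NumPy sketch follows. The function name `mean_normalize` is hypothetical, and computing the statistics on the training split and reusing them for validation and test data is an assumed (though standard) practice that the text does not spell out.

```python
import numpy as np


def mean_normalize(x):
    """Column-wise mean normalization: (x - mean) / (max - min), bounded in [-1, 1].

    Returns the normalized array and the (min, max, mean) statistics so the same
    transform can be reapplied to unseen data.
    """
    x = np.asarray(x, dtype=float)
    x_min, x_max, x_mean = x.min(axis=0), x.max(axis=0), x.mean(axis=0)
    return (x - x_mean) / (x_max - x_min), (x_min, x_max, x_mean)


data = np.array([[3.0, -1.0, 20.0],
                 [3.6,  0.0, 25.0],
                 [4.2,  1.0, 30.0]])   # columns: V, I, T
z, stats = mean_normalize(data)
print(z)
```

Since both $x_i - \bar{x}$ and $\bar{x} - x_i$ are at most $x_{\max} - x_{\min}$ in magnitude, the output never leaves [−1, 1]; for evenly spread data like the example, it occupies [−0.5, 0.5].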

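RMSE and the maximum absolute error (MAX) are selected as the performance indicators, calculated by (4) and (12), respectively:

$$\mathrm{MAX} = \max_{1 \le i \le N}\left|\hat{y}_i - y_i\right| \tag{12}$$

where $N$, $\hat{y}_i$, and $y_i$ are the sample size, estimated SOC, and actual SOC, as in (4).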
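The MAX indicator in (12) is the largest absolute error over a sequence. The helper below is a minimal sketch; expressing the result as a percentage assumes SOC is stored as a fraction in [0, 1].

```python
import numpy as np


def max_abs_error(soc_est, soc_true):
    """MAX indicator: largest absolute SOC error over the evaluated sequence."""
    err = np.asarray(soc_est, dtype=float) - np.asarray(soc_true, dtype=float)
    return float(np.max(np.abs(err)))


est = [0.98, 0.90, 0.81]
ref = [1.00, 0.90, 0.80]
print(f"MAX = {100 * max_abs_error(est, ref):.2f}%")  # MAX = 2.00%
```

Unlike RMSE, MAX is driven by a single worst sample, which is why it exposes the short fluctuations that an averaged metric can hide.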

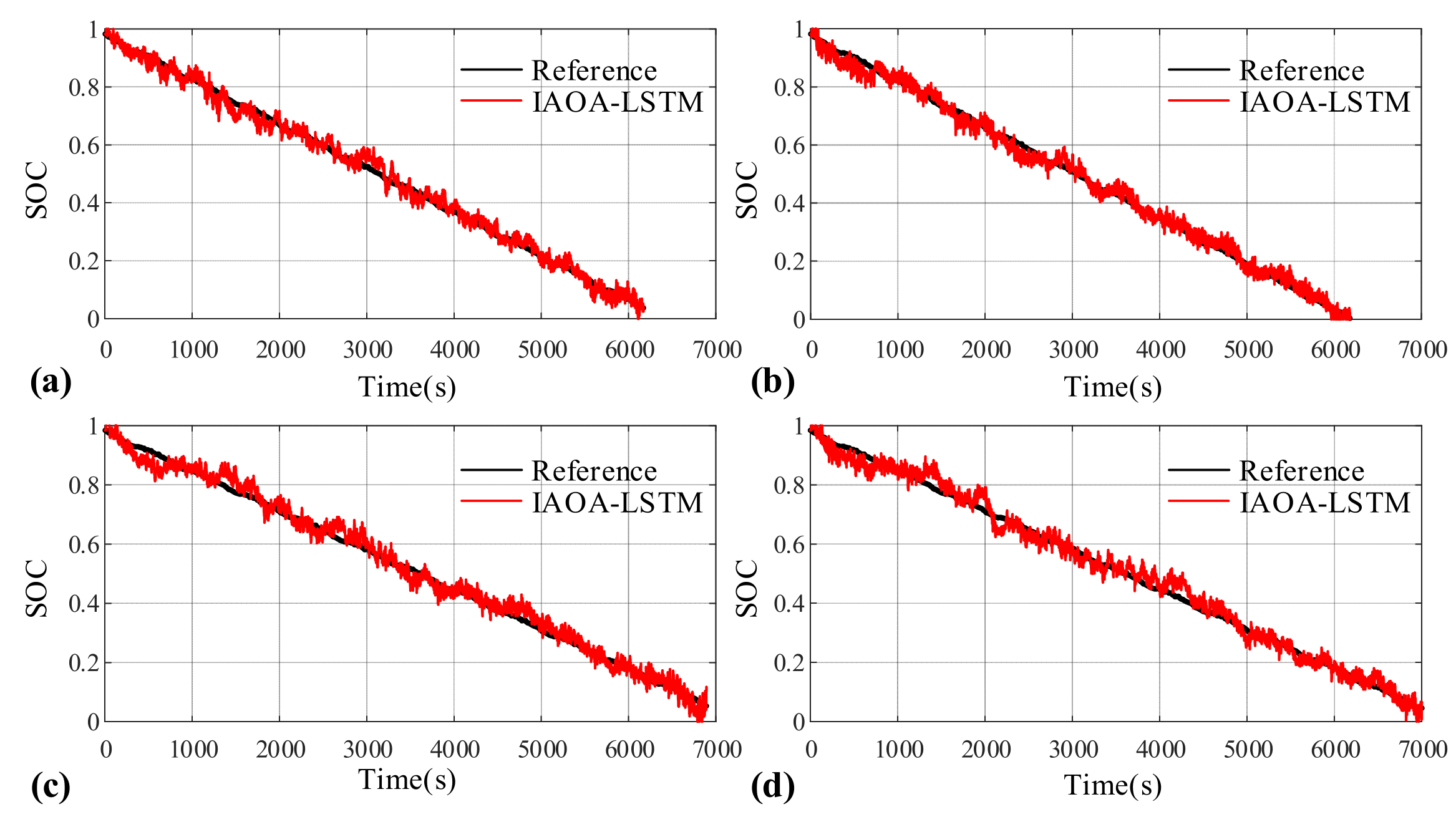

3.2. SOC Estimation with the IAOA-LSTM Network

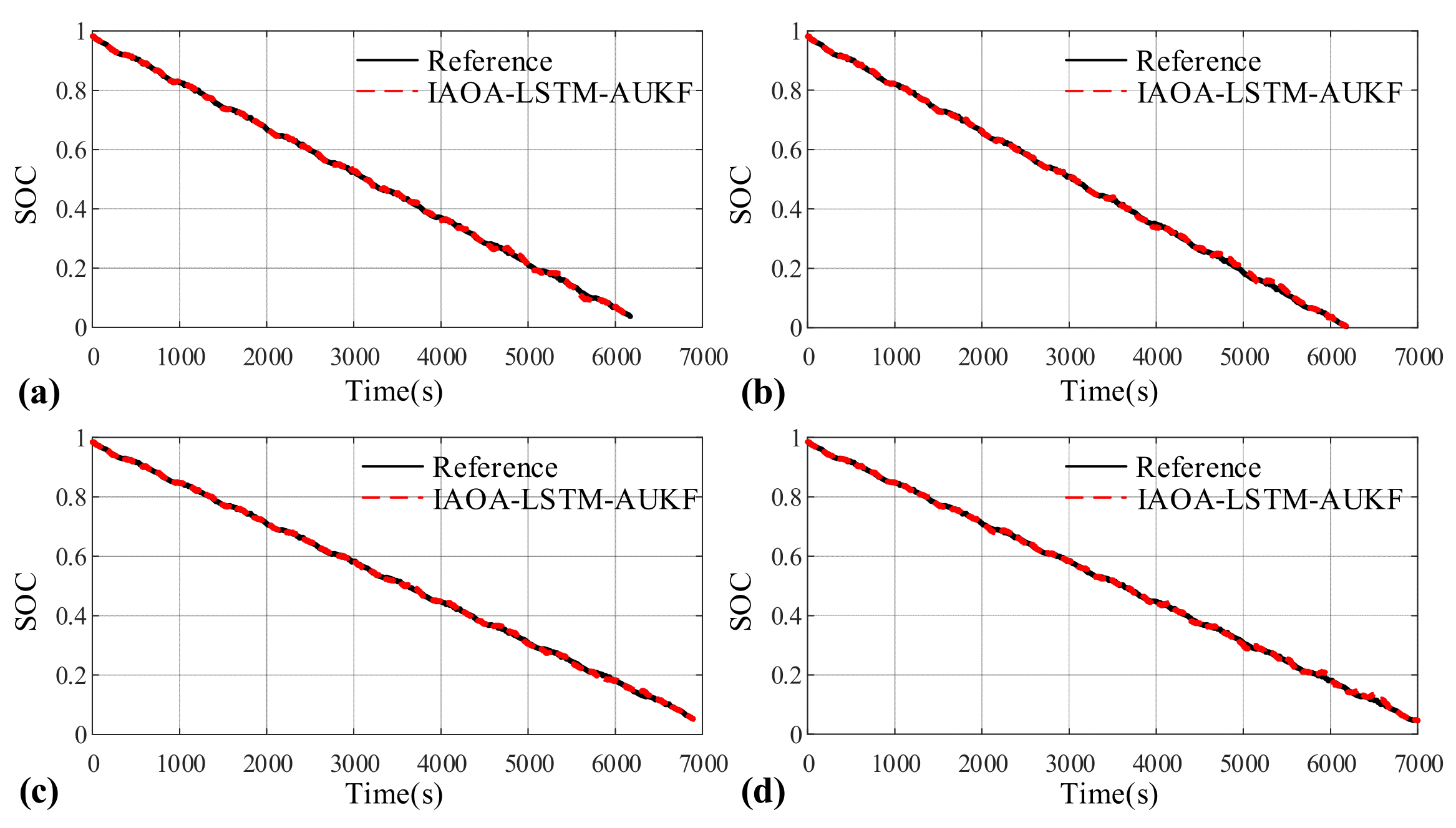

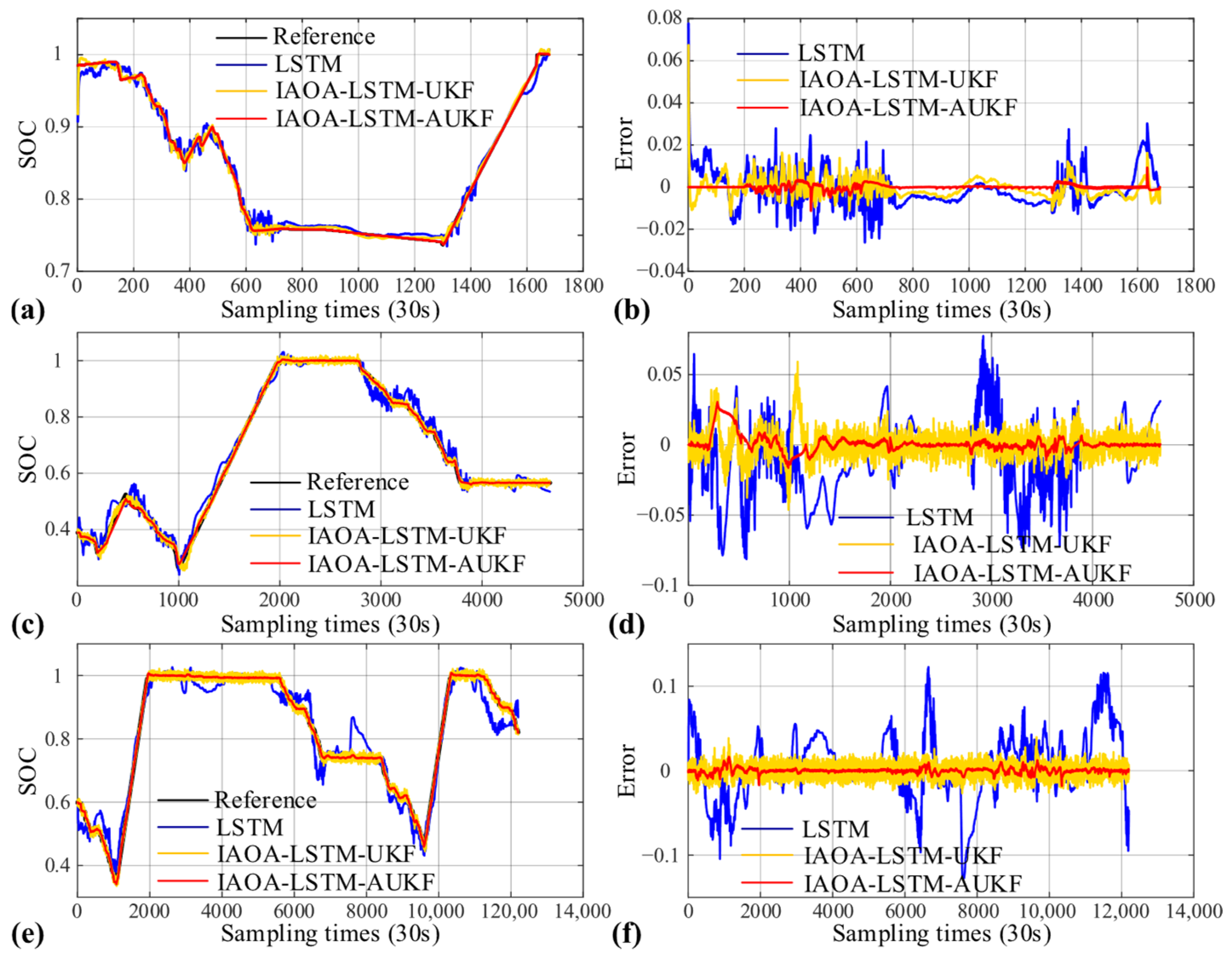

In this subsection, the US06, FUDS, and DST datasets of the A123 battery cells are used to validate the accuracy of the method. The results under the US06 cycle are depicted in Figure 4. In this study, the discharge processes are assumed to begin at 100% SOC. Additionally, given the precision of the measuring instruments and the data preprocessing, the reference SOC values are calculated using the CC method.

The results demonstrate that the IAOA-LSTM network can generally estimate the SOC trend with an RMSE of around 2.5%. However, the MAX of the results for both testing cycles is within the range of 7% to 10%. This indicates that while the IAOA-LSTM network can achieve SOC estimation to a certain extent, there is room for improving the results’ precision and resilience. To tackle this challenge, the proposed method incorporates AUKF to mitigate fluctuations and enhance the precision of the results.

3.3. SOC Estimation with the Proposed Combined Framework

The AUKF, which incorporates adaptive noise correction to compensate for the UKF’s limitations, is used to refine the outcomes of the IAOA-LSTM network. The results of the proposed method under the US06 cycle are illustrated in Figure 5. The proposed method achieves precise SOC estimation across different temperatures and yields smooth results. The comparative experimental results are summarized in Table 3, Table 4 and Table 5.

The results indicate that the proposed method achieves significantly lower values of both RMSE and MAX for SOC estimation compared to the other three methods. The proposed combined method exhibits significant improvements compared to the IAOA-LSTM-UKF method. Under the US06 cycle at 30 °C, the RMSE is reduced from 1.09% to 0.53%, and under the FUDS cycle at 30 °C, it decreases from 1.60% to 0.47%. The MAX is significantly reduced from 4.22% to 2.19% under the US06 cycle at 30 °C and from 7.31% to 2.21% under the FUDS cycle at 30 °C. Overall, the proposed combined method maintains an RMSE within 0.7% and a MAX within 2.5% for SOC estimation. It exhibits high accuracy in SOC estimation for battery cells.

To evaluate the proposed model’s estimation performance, it is compared with several advanced existing methods, including LSTM-EKF [43], LTG-SABO-GRU [44], RNN with small sequence [45], and unidirectional LSTM [46].

Table 6 shows the RMSE of various models on the FUDS test condition at 20 °C.

The proposed IAOA-LSTM-AUKF exhibits the best estimation performance, with an RMSE of 0.43%. The proposed model integrates an optimization algorithm, a data-driven method, and a model-based filtering method, combining the advantages of these approaches. Moreover, it optimizes the hyperparameters of the data-driven model and handles nonlinear problems effectively. As a result, it outperforms the other methods in SOC estimation.

3.4. Discussion for Computational Burden

While the proposed method significantly enhances SOC estimation accuracy, it must not incur an excessive computational burden. To evaluate the computational load of the proposed model, it is compared with the back-propagation neural network (BPNN), LSTM, IAOA-LSTM, and LSTM-UKF methods. All experiments were conducted on a desktop workstation equipped with an Intel Core i7-8700K CPU at 3.70 GHz, 32 GB of DDR4 RAM, and an NVIDIA GeForce GTX 1050 Ti GPU with 4 GB of VRAM.

Table 7 presents the results for SOC estimation under FUDS condition. The results contain the estimation errors and computation times of the BPNN, LSTM, IAOA-LSTM, LSTM-UKF, and proposed IAOA-LSTM-AUKF methods. Compared to the LSTM network, the proposed method exhibits superior performance with highly accurate results, albeit requiring a longer computation time. At 30 °C, the LSTM network yields estimation results that exhibit significant fluctuations, as evidenced by an RMSE of 2.77%. In contrast, the proposed hybrid method delivers highly accurate and smooth results, with an RMSE of only 0.48%. Although the proposed method takes less than twice the computation time of the LSTM network, it produces errors that are 4–5 times smaller. Overall, the proposed model demonstrates better performance than the BPNN, LSTM, IAOA-LSTM and LSTM-UKF regarding the RMSE while still maintaining an acceptable computation time.

3.5. Assessment of the Convergence Rate

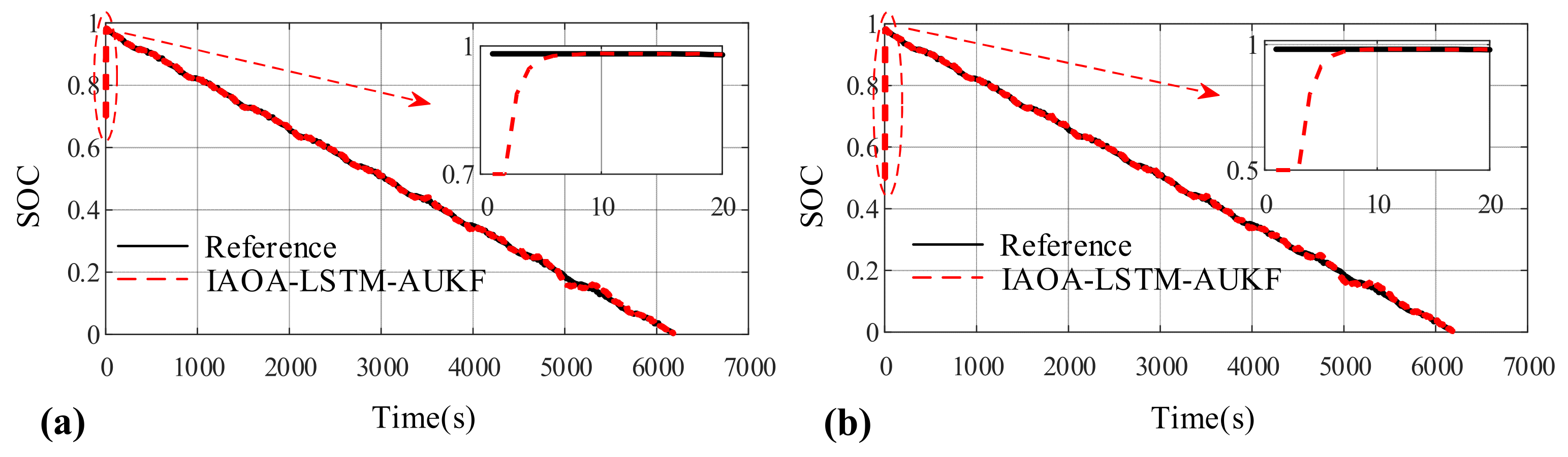

Estimating the SOC with the CC method in the AUKF requires an accurate initial SOC value. In practical applications, however, obtaining such a value is challenging, because the measured initial SOC may be affected by temperature and sensor performance, leading to errors. A reliable SOC estimation method should therefore correct any error in the initial value quickly. In this subsection, the initial SOC estimate is set to 70% and 50% under the US06 cycle at 20 °C, whereas the actual initial SOC is 100%.
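The following worked equation shows why a measurement-based correction is needed. It uses generic notation that may differ from the paper's own symbols: $\eta$ is the coulombic efficiency, $Q_n$ the nominal capacity in ampere-seconds, $\Delta t$ the sampling interval, and $I_j > 0$ a discharge current, assumed here to be measured without error. Under open-loop Coulomb counting, the estimate and the true SOC are driven by the same current sum, so the initial offset is carried forward unchanged:

```latex
\widehat{\mathrm{SOC}}_k = \widehat{\mathrm{SOC}}_0 - \frac{\eta\,\Delta t}{Q_n}\sum_{j=0}^{k-1} I_j ,
\qquad
\mathrm{SOC}_k = \mathrm{SOC}_0 - \frac{\eta\,\Delta t}{Q_n}\sum_{j=0}^{k-1} I_j
\;\Longrightarrow\;
\widehat{\mathrm{SOC}}_k - \mathrm{SOC}_k = \widehat{\mathrm{SOC}}_0 - \mathrm{SOC}_0 .
```

With a 50% initialization and a true initial SOC of 100%, pure Coulomb counting would therefore remain 50 percentage points low for the entire cycle; it is the voltage-based measurement update of the filter that removes this offset.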

Figure 6 displays the SOC estimation results obtained using the proposed method. The solid line represents the actual SOC, which decreases gradually from 100% to 0%. The dashed line represents the estimated SOC, which starts from either 70% or 50%, converges to the actual values, and eventually reaches 0%.

With the proposed method, the estimated SOC converges to its actual value within 10 s, whether the initial SOC is set to 70% or 50%. This demonstrates the ability of the proposed method to correct errors in the initial value: even if the initial SOC deviates substantially because of environmental or other factors, the method converges quickly and accurately estimates the true SOC.
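To illustrate the mechanism behind this fast correction, the sketch below runs a plain scalar unscented Kalman filter whose state equation is Coulomb counting and whose output equation is a terminal-voltage model, started from 70% and 50% against a true initial SOC of 100%. It is a simplified stand-in, not the authors' IAOA-LSTM-AUKF: it omits the LSTM, the IAOA hyperparameter search, and the adaptive noise update. The OCV curve, the 2 Ah capacity, the 50 mΩ ohmic resistance, the constant 2 A current, the noise levels, and all function names are hypothetical values chosen only for illustration.

```python
import numpy as np


def ocv(soc):
    # Hypothetical monotone OCV-SOC curve (volts); a real cell uses a fitted table.
    return 3.0 + 1.2 * soc - 0.3 * soc ** 2


def ukf_soc(v_meas, current, dt, capacity_as, soc0, p0, q, r, r0,
            alpha=1.0, beta=2.0, kappa=2.0):
    """Scalar UKF: Coulomb-counting state equation, terminal-voltage output equation.

    current > 0 means discharge; capacity_as is the capacity in ampere-seconds.
    """
    n = 1
    lam = alpha ** 2 * (n + kappa) - n
    wm = np.array([lam / (n + lam), 0.5 / (n + lam), 0.5 / (n + lam)])
    wc = wm.copy()
    wc[0] += 1.0 - alpha ** 2 + beta
    x, p = soc0, p0
    estimates = []
    for v, i in zip(v_meas, current):
        # Predict: push sigma points through the Coulomb-counting step.
        s = np.sqrt((n + lam) * p)
        chi = np.array([x, x + s, x - s]) - i * dt / capacity_as
        x_pred = wm @ chi
        p_pred = wc @ (chi - x_pred) ** 2 + q
        # Update: redraw sigma points and map them to terminal voltage.
        s = np.sqrt((n + lam) * p_pred)
        chi = np.array([x_pred, x_pred + s, x_pred - s])
        y = ocv(chi) - r0 * i
        y_pred = wm @ y
        p_yy = wc @ (y - y_pred) ** 2 + r
        p_xy = wc @ ((chi - x_pred) * (y - y_pred))
        k = p_xy / p_yy
        x = x_pred + k * (v - y_pred)
        p = p_pred - k * p_yy * k
        estimates.append(x)
    return np.array(estimates)


# Hypothetical test profile: 2 Ah cell, 50 mOhm, constant 2 A discharge, 1 s steps.
rng = np.random.default_rng(0)
dt, capacity_as, r0 = 1.0, 2.0 * 3600.0, 0.05
current = np.full(600, 2.0)
soc_true = 1.0 - np.cumsum(current) * dt / capacity_as
v_meas = ocv(soc_true) - r0 * current + rng.normal(0.0, 0.005, soc_true.size)

errors_at_10s = {}
for soc0 in (0.7, 0.5):
    est = ukf_soc(v_meas, current, dt, capacity_as, soc0,
                  p0=0.1, q=1e-8, r=0.005 ** 2, r0=r0)
    errors_at_10s[soc0] = abs(est[9] - soc_true[9])  # after the 10th 1 s step
    print(f"initial SOC {soc0:.0%}: |error| after 10 s = {errors_at_10s[soc0]:.4f}")
```

A large initial covariance `p0` encodes low confidence in the initial SOC, so the first voltage measurement can move the estimate most of the way toward the true value; a smaller `p0` yields a smaller gain and slower convergence.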

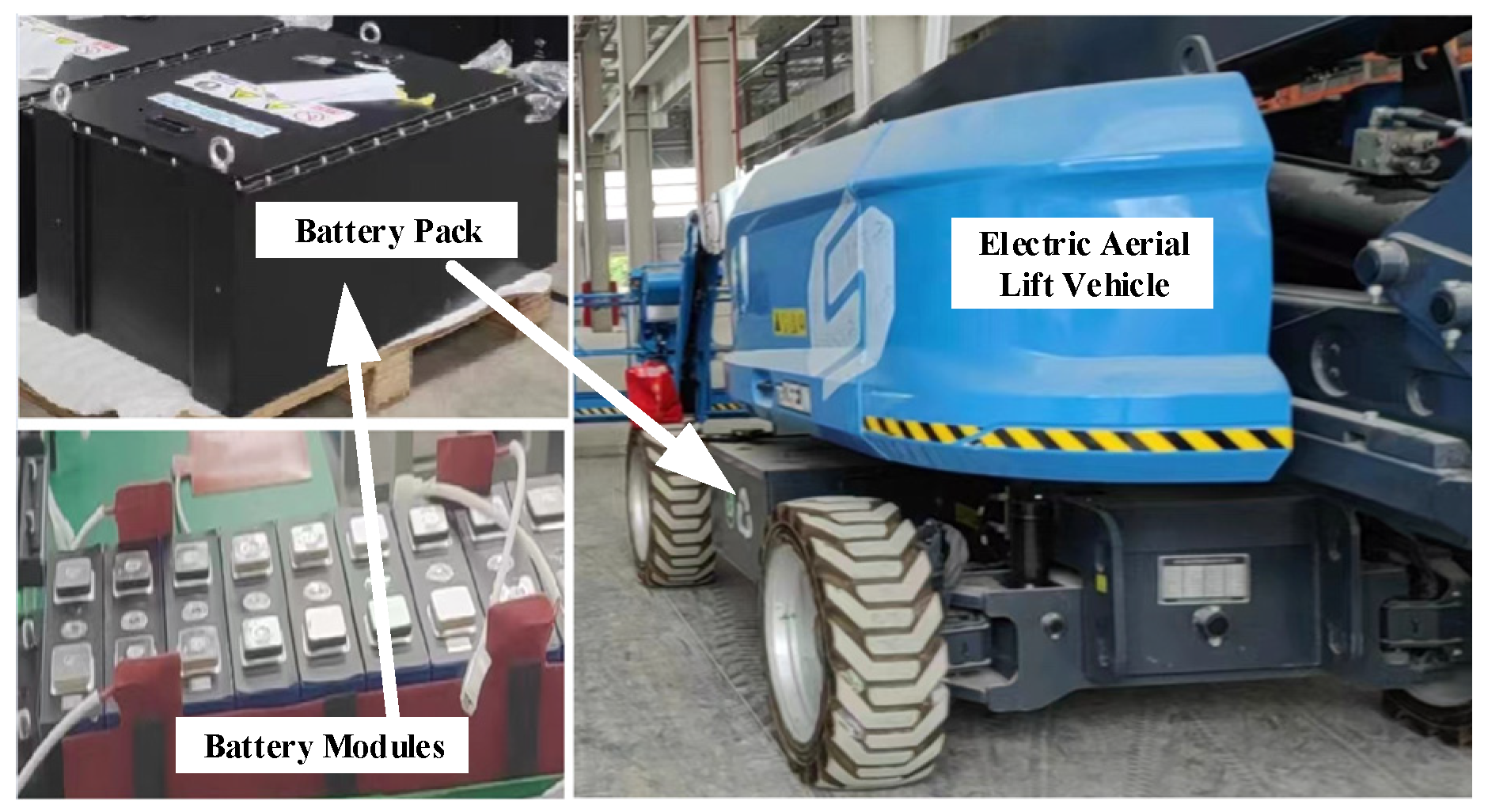

3.6. Verification by Electric Vehicle Battery Pack

In practical engineering applications, batteries are typically deployed as battery packs in electric vehicles. To validate the effectiveness of the proposed method in such applications, it is tested on actual operating data from electric vehicles. Specifically, the practicality of the proposed framework in real-world scenarios is evaluated using operating data collected from two electric aerial lift vehicle (EALV) battery packs, as illustrated in Figure 7.

The operating conditions of battery packs 1 and 2, from EALV models G01BB03 and G01JB01, are illustrated in Figure 8 and Figure 9, respectively. The operating-condition data were collected from the complete battery pack assembly. Minor inconsistencies between individual cells can create a small self-balancing current (100–300 mA), but its effect on SOC estimation is negligible: because the rated current of the packs exceeds 200 A, the balancing current accounts for less than 0.15% of the total current and does not significantly affect the results.
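The 0.15% bound follows from pairing the largest balancing current with the smallest rated current. With $I_{\text{bal}}$ and $I_{\text{rated}}$ used here as illustrative labels:

```latex
\frac{I_{\text{bal}}}{I_{\text{rated}}}
< \frac{300\ \text{mA}}{200\ \text{A}}
= \frac{0.3\ \text{A}}{200\ \text{A}}
= 1.5 \times 10^{-3}
= 0.15\% .
```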

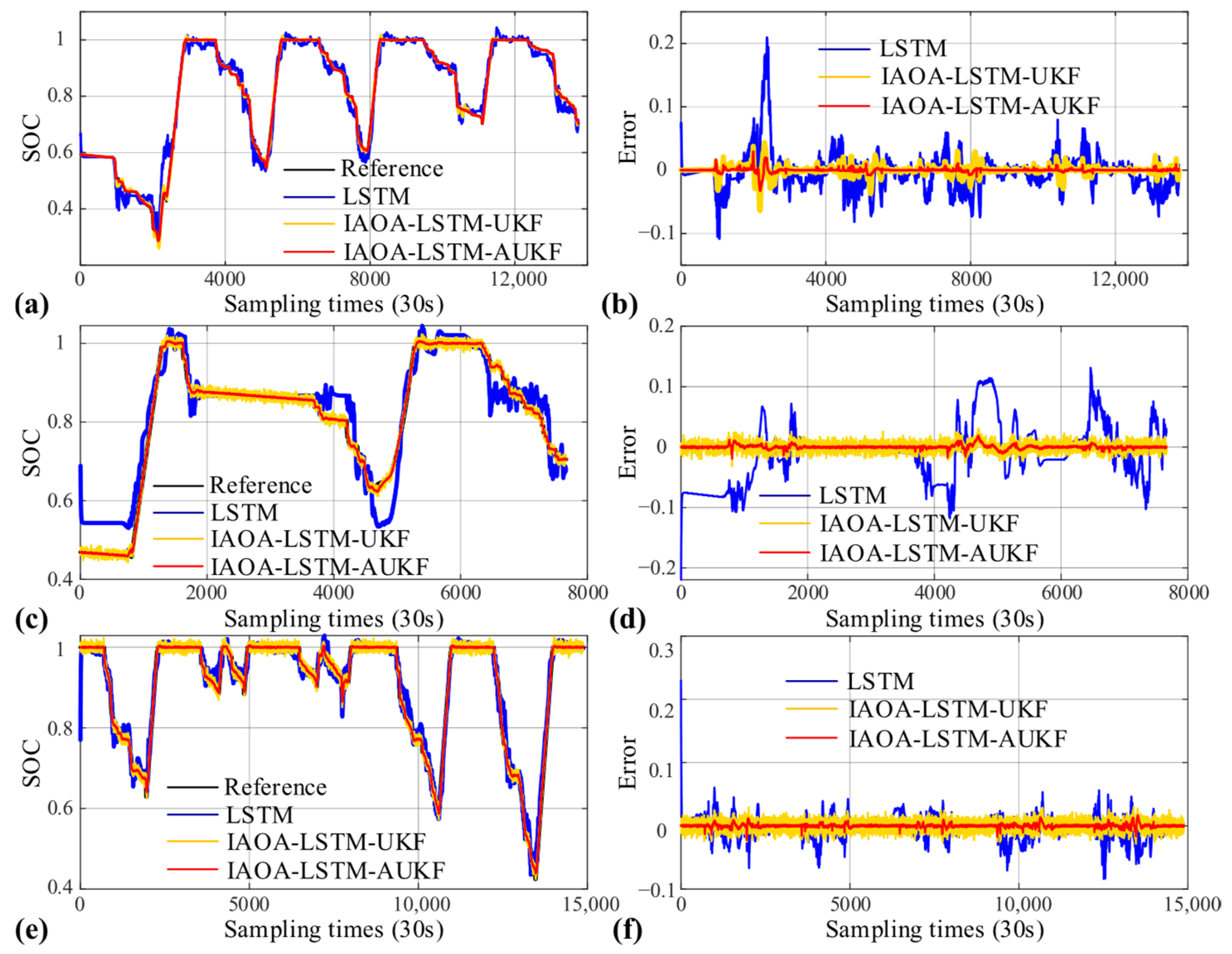

The results and errors obtained from the experimental tests are presented in Figure 10 and Figure 11 and summarized in Table 8 and Table 9. For battery pack 1, the proposed method is more accurate than the other two methods, with an RMSE of 0.6% and a MAX of 3.05% in December 2022, and an RMSE of 0.31% and a MAX of 1.82% in April 2023. For battery pack 2, the proposed method achieves an RMSE of 0.24% and a MAX of 2.25% for the actual battery SOC estimation, demonstrating its applicability. These results show that the proposed method provides accurate SOC estimates for battery packs across the various temperature conditions encountered throughout the year.
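For reference, the two error metrics reported above can be computed as in the following minimal sketch. It assumes that SOC traces are stored as fractions in [0, 1] sampled at the same instants and that MAX denotes the maximum absolute error; the function name `soc_error_metrics` is hypothetical.

```python
import numpy as np


def soc_error_metrics(soc_true, soc_est):
    """RMSE and maximum absolute error of an SOC trace, in percentage points.

    Both inputs are SOC fractions in [0, 1] sampled at the same instants.
    """
    true = np.asarray(soc_true, dtype=float)
    est = np.asarray(soc_est, dtype=float)
    if true.shape != est.shape:
        raise ValueError("soc_true and soc_est must have the same shape")
    err_pct = 100.0 * (est - true)  # signed error in percentage points
    rmse = float(np.sqrt(np.mean(err_pct ** 2)))
    max_abs = float(np.max(np.abs(err_pct)))
    return rmse, max_abs


rmse, max_abs = soc_error_metrics([0.50, 0.60, 0.70], [0.51, 0.58, 0.70])
print(f"RMSE = {rmse:.3f}%, MAX = {max_abs:.2f}%")
```

Because the RMSE averages squared errors over the whole profile while MAX captures the single worst sample, a method can show a small RMSE alongside a noticeably larger MAX, as in the pack-level results above.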

These results demonstrate that the proposed method estimates the SOC of battery cells accurately and smoothly. It effectively corrects errors arising from an offset in the initial SOC and achieves accurate SOC estimation for actual electric vehicle battery packs. These findings highlight the potential of the proposed method for practical applications, where accurate SOC estimation is essential for optimizing battery management and improving the overall performance and reliability of electric vehicle systems.