1. Introduction

Experience replay is a core technique in deep reinforcement learning (DRL) that enables efficient use of past interactions by storing transitions in a replay buffer [1]. By breaking the temporal correlation between sequential experiences, uniform sampling from this memory improves the stability and efficiency of neural network training. This strategy has been fundamental to the success of DRL in various domains [2,3,4,5,6,7,8,9]. However, the performance and convergence speed of reinforcement learning (RL) algorithms are highly sensitive to how experiences are sampled and replayed [10]. Prioritized Experience Replay (PER) [11] addresses this by sampling transitions with higher temporal difference errors more frequently, which has led to significant performance improvements in various DRL techniques [12,13,14,15,16,17,18]. Nevertheless, PER suffers from outdated priority estimates and may not ensure sufficient coverage of the experience space, problems that usually arise in complex, real-world tasks [19,20].

One such challenging domain is piano fingering estimation. This problem has drawn interest from both pianists and computational researchers [21,22,23,24,25,26,27]. As proper fingering directly influences performance quality, speed, and articulation, it is a key aspect of expressive music performance [28,29,30,31,32]. From a computational standpoint, fingering estimation is a combinatorial problem with many valid solutions, depending on the passage and the player’s interpretation. The authors of [33] introduced a model-free reinforcement learning framework for monophonic piano fingering, modeling each piece as an environment and demonstrating that value-based methods outperform probability-based approaches. In this paper, we extend that approach to the more complex polyphonic case and propose a novel training strategy to improve learning efficiency. Specifically, we estimate the piano fingering for polyphonic passages with a Finger Dueling Deep Q-Network (Finger DuelDQN), which approximates multi-output Q-values, where each output corresponds to a candidate fingering configuration.

Furthermore, to address the slow convergence commonly observed in sparse reward reinforcement learning tasks, we propose a new memory replay strategy called Elite Episode Replay (EER). This method selectively replays the highest-performing episodes once per episode update, providing a more informative learning signal in early training. This strategy enhances sample efficiency while preserving overall performance.

Hence, the main contributions of this paper are threefold:

We present an online model-free reinforcement learning framework for polyphonic fingering, detailing both the architecture and strategy.

We introduce a novel training strategy, Elite Episode Replay (EER), which accelerates convergence speed and improves learning efficiency by prioritizing high-quality episodic experiences.

We conduct an empirical analysis to evaluate how elite memory size affects learning performance and convergence speed.

The rest of the paper is organized as follows:

Section 2 explores the background of experience replay and monophonic piano fingering in reinforcement learning.

Section 3 details the configuration of RL for the polyphonic piano fingering estimation.

Section 4 details the proposed Elite Episode Replay.

Section 5 presents the experimental settings, results, and discussion.

Section 6 concludes the paper.

3. Polyphonic Piano Fingering with Reinforcement Learning

In music, monophony refers to a musical texture characterized by a single melody or notes played individually, whereas polyphony involves multiple notes played simultaneously, such as chords. The monophonic representation in [33] cannot be directly extended to polyphonic music due to the presence of multiple simultaneous notes. Therefore, we define the state at time t as the collection of notes at time t together with the preceding note-fingering pairs and the notes that follow, as shown in Equation (6). The state is formulated from the last two note-action pairs, which represent the hand’s recent position. With this context, along with the current notes and the upcoming notes, the agent must learn the optimal action. In addition, each action is constrained by the number of notes: a single note may only be played with one finger, two notes with two fingers, and so on.

In this setting, we define the note count as the scalar number of notes played at one musical step at time t. With the ε-greedy method, the greedy action is therefore the one with the highest estimated Q among the actions that use that number of fingers. Moreover, the number of available actions at time t is determined by the number of concurrent notes.
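The note-count constraint can be illustrated with a short sketch. It assumes (our reading, not stated explicitly in this section) that an action for k simultaneous notes is an unordered choice of k of the five fingers, which yields 5, 10, 10, 5, and 1 actions for one to five notes and agrees with the output head sizes reported in Section 5.1. The names `FINGERS`, `actions_for`, and `epsilon_greedy` are hypothetical.

```python
import random
from itertools import combinations

FINGERS = (1, 2, 3, 4, 5)  # thumb = 1 ... little finger = 5


def actions_for(note_count):
    """All finger sets usable for `note_count` simultaneous notes (assumed encoding)."""
    return list(combinations(FINGERS, note_count))


def epsilon_greedy(q_values, note_count, epsilon, rng=random):
    """Pick an action index among the actions valid for this note count.

    `q_values` holds one estimate per valid action, i.e., the output of the
    head that matches `note_count`.
    """
    n_actions = len(actions_for(note_count))
    assert len(q_values) == n_actions
    if rng.random() < epsilon:
        return rng.randrange(n_actions)  # explore: uniform over valid actions
    return max(range(n_actions), key=q_values.__getitem__)  # exploit


print([len(actions_for(k)) for k in range(1, 6)])  # [5, 10, 10, 5, 1]
```

Restricting both the random and the greedy choice to the valid actions means the agent never has to learn that, for example, a three-finger action is illegal for a single note.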

The reward function is then evaluated as the negative fingering difficulty in Equation (4). Furthermore, as illustrated in Figure 1, the state of the environment is encoded into a 1 × 362 vector that becomes the input of the network. The output is the state-action value approximation for each fingering combination. The Q update for one run is then constrained by the note counts at the current and next steps, as follows:

As the rewarding process depends on the fingering rules, we use the definition of fingering distance given by [29]. Let f and g denote a pair of fingers, with the hand moving from finger f to finger g. MaxPrac(f, g) is the maximum distance that the two fingers can practically stretch, MaxComf(f, g) is the maximum distance at which the two fingers can play comfortably, and MaxRel(f, g) is the maximum distance at which the two fingers can play completely relaxed. Using a piano with an invisible black key [40], and assuming the pianist has a big hand, we use the distance matrix defined by [25] in Equation (8) (Table 1).

Table 1. Piano fingering rules from [25]; all rules were adjusted to the RL setting.


| No. | Type | Description | Score | Source |
|---|---|---|---|---|
| For general case | | | | |
| 1 | All | add 2 points per each unit difference if the interval of each note in and is below MinComf or larger than MaxComf | 2 | [29] |
| 2 | All | add 1 point per each unit difference if the interval of each note in and is below MinRel or larger than MaxRel | 1 | [29,40] |
| 3 | All | add 10 points per each unit difference the interval of each note in and is below MinPrac or larger than MaxPrac | 10 | [25] |
| 4 | All | add 1 point if is identical to but played with different fingering | 1 | [25] |
| 5 | Monophonic Action | add 1 point if is monophonic and is played with finger 4 | 1 | [29,40] |
| 6 | Polyphonic Action | Apply the rule 1,2,3 with a double scores within one chord | | [25] |
| For three consecutive monophonic case | | | | |
| 7 | Monophonic | (a) add 1 point if note distance between the and the is below MinComf or above their MaxComf | 1 | [29] |
| | | (b) add 1 more point if , is finger 1, and the note distance between the and the is below MinPrac or above their MaxPrac | 1 | |
| | | (c) add 1 more point if , but not equals to | 1 | |
| 8 | Monophonic | add 1 point per each unit difference if the interval of each note in and is below MinComf or larger than MaxComf | 1 | [29] |
| 9 | Monophonic | add 1 point if not equals to , finger same as , and | 1 | [29,40] |
| For two consecutive monophonic case | | | | |
| 10 | Monophonic | Add 1 point if finger and is the finger 3 and finger 4 or its combination consecutively | 1 | [29] |
| 11 | Monophonic | Add 1 point if fingers 3 and 4 are played consecutively with 3 in a black key and 4 in a white key | 1 | [29] |
| 12 | Monophonic | Add 2 point if is white key played not by finger 1, and is black key played with finger 1 | 2 | [29] |
| 13 | Monophonic | Add 1 point if is played by not by finger 1 and is played with finger 1 | 1 | [29] |
| For three consecutive monophonic case | | | | |
| 14 | Monophonic | (a) add 0.5 point if is black key and played with finger 1 | 0.5 | [29] |
| | | (b) add 1 more point if is white key | 1 | |
| | | (c) add 1 more point if is white key | 1 | |
| 15 | Monophonic | if the is black key and played with finger 5 | | [29] |
| | | (a) add 1 point if is white key | 1 | |
| | | (b) add 1 more point if is white key | 1 | |

The matrices above describe the maximum stretch in note distance, where a positive distance is the maximum stretch from finger f to finger g in consecutive ascending notes, and a negative distance is the maximum stretch in consecutive descending notes. The minimum practical stretch, MinPrac, can be defined as MinPrac(f, g) = −MaxPrac(g, f), and the rest can be defined similarly.

Since these matrices describe the distances for the right hand, the left-hand matrices can be retrieved, as in [24,25], simply by swapping the order of the finger pair; for example, the left-hand value for the pair (f, g) is the right-hand value for the pair (g, f). These matrices serve as the basis for computing fingering difficulty scores in Equation (3) and the reward function in Equation (4). We use the fingering rules implemented by [25], which combine heuristics from [22,29,40], with minor adjustments tailored to our implementation.
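As a rough illustration of how the stretch rules (rules 1 to 3 in Table 1) and the left-hand mirroring could be computed, consider the sketch below. The limits in `RIGHT_MAX_COMF` are hypothetical placeholders rather than the values of [25], and the function names `stretch_penalty` and `left_hand` are ours.

```python
# Hypothetical right-hand limits for two ordered finger pairs; these numbers
# are placeholders for illustration, not the matrix values used in [25].
RIGHT_MAX_COMF = {(1, 2): 8, (2, 1): 3}


def stretch_penalty(interval, lower, upper, weight):
    """Return `weight` points per unit that `interval` lies outside [lower, upper]."""
    if interval < lower:
        return weight * (lower - interval)
    if interval > upper:
        return weight * (interval - upper)
    return 0


def left_hand(right_table):
    """Mirror a right-hand table by swapping the finger order of each pair."""
    return {(g, f): value for (f, g), value in right_table.items()}


# Rule 1 uses a weight of 2: an interval of 10 against a comfortable
# range of [-3, 8] exceeds the upper limit by 2 units.
print(stretch_penalty(10, -3, 8, weight=2))   # 4
print(left_hand(RIGHT_MAX_COMF)[(2, 1)])      # 8
```

The same `stretch_penalty` helper would serve rules 2 and 3 by passing the relaxed or practical limits with weights 1 and 10, respectively.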

Figure 1 presents the Finger DuelDQN, whose basic structure is similar to that of the dueling network [12]. The note-count condition described above turns the last part of the final layer into a multi-output Q-value. The same agent architecture is used for all pieces. The input to the neural network is a 1 × 362 vector produced by the state encoding process. The network consists of three fully connected layers with 1024, 512, and 256 units, respectively, each followed by a ReLU activation function. The final layer then splits into five output heads representing the advantage functions, one for each note count from one to five, with 5, 10, 10, 5, and 1 output units, respectively. An additional head computes the value function, and the final Q-values are computed using Equation (2).
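The head selection and aggregation step can be sketched in a few lines. Equation (2) is not reproduced in this section, so the sketch assumes it is the standard mean-subtracted dueling aggregation of [12]; `HEAD_SIZES`, `dueling_q`, and `q_for_state` are hypothetical names, and the network outputs are stood in for by plain Python lists.

```python
HEAD_SIZES = {1: 5, 2: 10, 3: 10, 4: 5, 5: 1}  # note count -> output units


def dueling_q(value, advantages):
    """Combine the state value with one head's advantages (mean subtraction)."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def q_for_state(value, advantage_heads, note_count):
    """Select the advantage head matching the note count and return its Q-values."""
    advantages = advantage_heads[note_count]
    assert len(advantages) == HEAD_SIZES[note_count]
    return dueling_q(value, advantages)


print(dueling_q(1.0, [1.0, 2.0, 3.0]))  # [0.0, 1.0, 2.0]
```

Only the head that matches the current note count contributes to the Q-values, so the other four heads receive no gradient from that transition.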

4. Elite Episode Replay

Human behavior develops through interaction with the environment and is shaped by experience. From the perspective of learning theory, behavior is influenced by instrumental conditioning, where reinforcement and punishment govern the likelihood of actions being repeated. Positive reinforcement strengthens behavior by associating it with rewarding outcomes [41,42]. In neuroscience, such learning mechanisms are linked to experience-dependent neural plasticity, in which behavior is shaped and stabilized through repeated, salient experiences that induce long-term neural changes [43,44,45]. Repetition of actions is critical for reinforcing learned or relearned behaviors, and it enhances memory performance and long-term retention [45,46]. However, the effectiveness of repetition is influenced by timing and spacing. From the optimization perspective, high-reward episodes serve as near-optimal trajectories that encode valuable information and act as strong priors in parameter updates. Repeating this high-value information early mitigates the noise from uninformative or random transitions that can dominate early learning. Motivated by this, we propose a new replay strategy, called Elite Episode Replay (EER), that builds agent behavior by replaying the best episodes (elite episodes) once per episode.

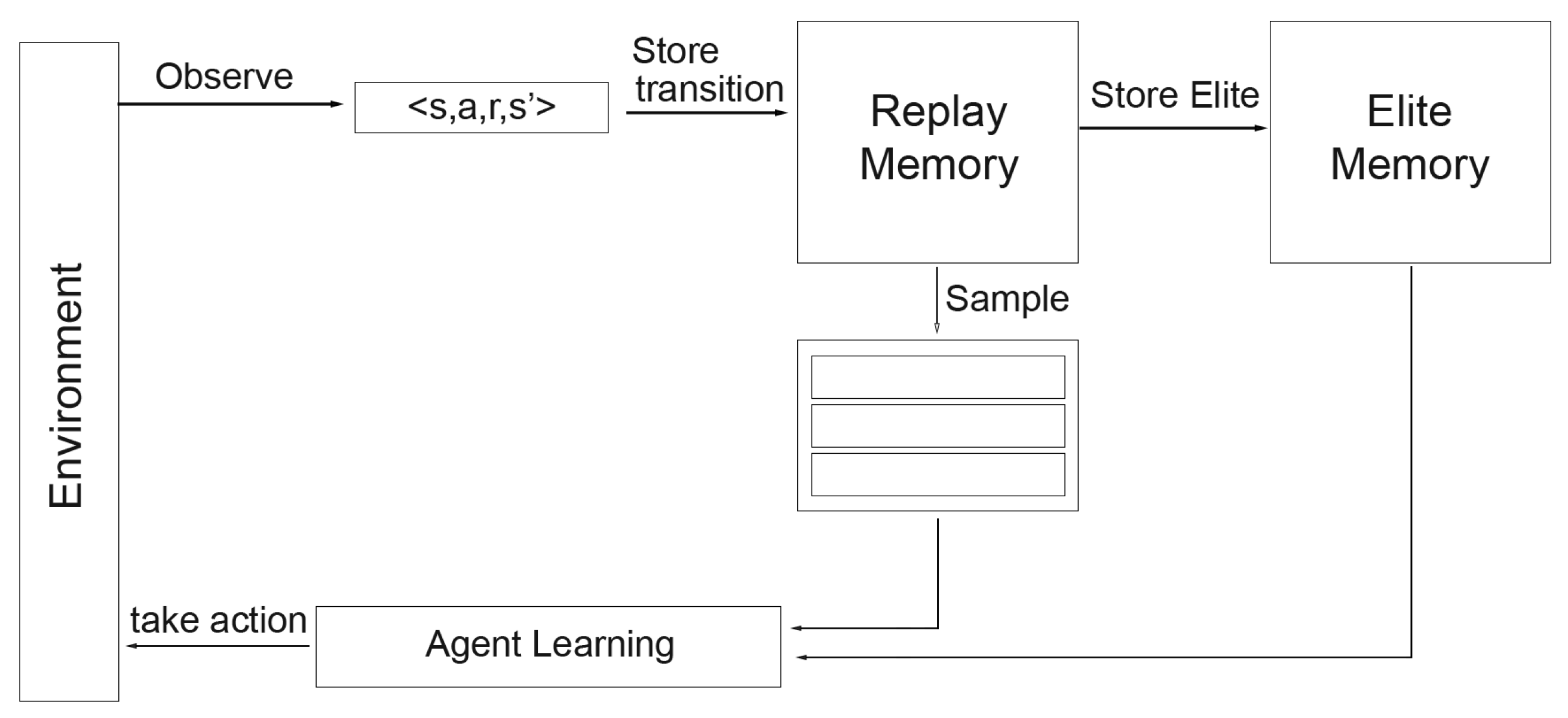

Figure 2 shows the overview of our proposed framework. We introduce an additional elite memory that stores high-quality experiences from the current run, separate from the standard replay memory. After each episode, the episode is scored by its total reward. If the elite memory has not reached its capacity of E episodes, the episode is added directly. Otherwise, it is compared against the lowest-scoring episode in the elite memory, and a replacement is made only if the new episode achieves a higher score. This design ensures that the elite memory holds the top-performing episodes throughout training. During learning, a mini-batch of size B is sampled as follows: we first attempt to draw transitions from the elite memory. If every elite transition has already been trained on, or the untrained elite transitions number fewer than B in total, the remaining transitions are sampled from the standard replay memory. Because the fallback sampler is unconstrained, this design is modular and compatible with arbitrary sampling strategies.

The elite memory can be implemented as a doubly linked list sorted in descending order by episode score. Training proceeds sequentially through the list, starting from the best-performing episode and continuing toward lower-ranked episodes. The process of storing experiences in elite memory and experience sampling with elite memory is outlined in Algorithms 1 and 2, respectively. The concept of elites and elitism also appears in the field of evolutionary algorithms, where top individuals are preserved across generations to ensure performance continuity [47,48]. However, despite the similar terminology, we do not operate on a population or use crossover or mutation mechanisms. Instead, our elitism refers solely to selecting top-performing episodes for prioritized replay.

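| Algorithm 1 Storing Experience with Elite Memory. |
|---|
| Input: new experience X, end-of-episode status, replay memory, elite memory with capacity E, temporary list L with total reward T |
| Output: the updated replay memory, elite memory, and L |
| Append experience X to the replay memory and to L ▹ Store the experience |
| Add the reward of X to T |
| if the end-of-episode status is True then ▹ End of episode, update the elite memory |
| if the elite memory holds fewer than E episodes then |
| Append L with score T to the elite memory |
| else |
| Find the lowest-scoring episode in the elite memory |
| if T is higher than the score of that episode then |
| Replace that episode with L and score T |
| end if |
| end if |
| Set T to 0 and L to None |
| end if |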

| Algorithm 2 Sampling with Elite Memory. |
|---|
| Input: replay memory, elite memory with elite size E, batch size B |
| Output: a batch of B experiences |
| Initialize an empty batch and a temporary elite list L |
| Let m be the number of experiences in the elite memory that have not been trained |
| Preserve the current untrained elite experiences in L |
| while L holds fewer than B experiences and a next elite exists in the elite memory do |
| Load the next elite into L |
| end while |
| if m is at least B then |
| Sample B experiences from L and append them to the batch |
| Mark the experiences sampled from L as trained in the elite memory |
| else if m is greater than 0 then |
| Take the m experiences from L and append them to the batch |
| Mark the experiences sampled from L as trained in the elite memory |
| Sample B − m experiences from the replay memory and append them to the batch |
| else |
| Sample B experiences from the replay memory and append them to the batch |
| end if |
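Algorithms 1 and 2 can be sketched together as a small Python class. This is a minimal illustration under several assumptions of ours: transitions are opaque objects, the elite memory is a sorted Python list rather than a doubly linked list, the trained marks are reset at the end of each episode, and the names `EliteReplay`, `store`, and `sample` are hypothetical.

```python
import random
from collections import deque


class EliteReplay:
    """Replay memory plus an elite memory of the best-scoring episodes."""

    def __init__(self, capacity, elite_size, seed=None):
        self.replay = deque(maxlen=capacity)  # standard replay memory
        self.elite_size = elite_size          # capacity E, in episodes
        self.elites = []                      # (score, transitions), best first
        self.untrained = []                   # per elite: indices not yet replayed
        self.episode, self.total = [], 0.0    # temporary list L and total reward T
        self.rng = random.Random(seed)

    def store(self, transition, reward, done):
        """Algorithm 1: store a transition; update the elites at episode end."""
        self.replay.append(transition)
        self.episode.append(transition)
        self.total += reward
        if not done:
            return
        candidate = (self.total, self.episode)
        if len(self.elites) < self.elite_size:
            self.elites.append(candidate)
        elif self.elites and candidate[0] > self.elites[-1][0]:
            self.elites[-1] = candidate       # replace the lowest-scoring elite
        self.elites.sort(key=lambda e: e[0], reverse=True)
        # A new episode starts: every elite transition is untrained again.
        self.untrained = [set(range(len(ts))) for _, ts in self.elites]
        self.episode, self.total = [], 0.0

    def sample(self, batch_size):
        """Algorithm 2: untrained elite transitions first, then uniform replay."""
        pool = []                             # temporary elite list
        for rank, left in enumerate(self.untrained):
            pool.extend((rank, i) for i in sorted(left))
            if len(pool) >= batch_size:
                break                         # no need to load the next elite
        picked = self.rng.sample(pool, min(batch_size, len(pool)))
        for rank, i in picked:
            self.untrained[rank].discard(i)   # mark as trained
        batch = [self.elites[rank][1][i] for rank, i in picked]
        missing = batch_size - len(batch)
        if missing > 0:
            k = min(missing, len(self.replay))
            batch += self.rng.sample(list(self.replay), k)
        return batch
```

Keeping the elites in a list that is re-sorted once per episode costs little for small E; a linked list, as described in the text, would avoid the re-sort for larger elite sizes.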

In this implementation of EER, all sampling is performed uniformly within each memory. Specifically, for each training step, we construct a mini-batch of size B by first sampling as many transitions as are available from the elite memory. The remaining transitions are then sampled from the replay memory. Once the batch is formed, we compute the loss as the average squared TD error over the combined transitions.
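Written out, the average squared TD error over a batch of B transitions takes the following form. The notation is ours and assumes the standard DQN target computed with a target network; the symbols $y_i$, $\gamma$, $\theta$, and $\theta^{-}$ are not taken from the original equation, and the maximum is assumed to run over the actions valid for the note count of the next state $s'_i$.

```latex
L(\theta) = \frac{1}{B} \sum_{i=1}^{B} \bigl( y_i - Q(s_i, a_i; \theta) \bigr)^2,
\qquad
y_i = r_i + \gamma \max_{a'} Q(s'_i, a'; \theta^{-})
```

Elite and replay transitions enter the sum with equal weight, so the elite memory changes which transitions are seen rather than how each one is weighted.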

Since EER focuses on replaying elite experiences, the agent’s exploration capacity is affected by the size of the elite memory, E. Suppose there are M transitions per episode, with B experiences being sampled per batch, so that M × B transitions are sampled per episode in total. Then the total number of transitions sampled per episode from the elite memory is E × M, and the total number sampled from the replay memory is M × (B − E). Thus, E directly influences the exploration level: increasing E emphasizes exploitation (learning from successful experience), while decreasing E increases exploration via more diverse sampling from the replay memory. Moreover, setting E = 0 implies purely exploratory training (i.e., all samples come from the replay memory), while E = B results in fully exploitative training using only elite experiences (Algorithm 3).
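The trade-off can be checked numerically with a small helper. The sketch assumes one batch per step, elite episodes of the same length M as the current episode, and each elite transition replayed exactly once per episode; `sample_split` is a hypothetical name.

```python
def sample_split(steps_per_episode, batch_size, elite_size):
    """Return (elite_samples, replay_samples) drawn during one episode."""
    total = steps_per_episode * batch_size
    elite = min(elite_size * steps_per_episode, total)
    return elite, total - elite


print(sample_split(100, 32, 1))   # (100, 3100)
print(sample_split(100, 32, 32))  # (3200, 0)
```

With a batch size of 32, a single elite episode accounts for only 1/32 of the samples, whereas 32 elite episodes leave no room for replay-memory samples.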

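| Algorithm 3 Finger DuelDQN with Elite Episode Replay |
|---|
| Initialize the replay memory and the elite memory with its total reward |
| Initialize the action-value function Q with its weights, and the temporary elite L with its total reward |
| Initialize the target action-value function with its weights |
| for episode = 1 to M do |
| for t = 1 to N do |
| Observe the state and extract the note condition |
| With probability ε take a random action; otherwise take the action with the highest Q under the note condition |
| Retrieve the reward and the next state, and add the reward to the total episode score |
| Store the experience to memory using Algorithm 1 ▹ Store experience |
| Sample a mini-batch of experiences using Algorithm 2 ▹ Sample experience |
| for all experiences in the mini-batch do |
| Compute the TD target using the target action-value function |
| Perform a gradient descent step on the squared TD error with respect to the weights of Q |
| end for |
| Mark experiences in the elite memory as learned if the mini-batch sampled them from the elite memory |
| Every M steps, copy Q to the target action-value function |
| end for |
| Unmark learned for all elites in the elite memory |
| Replace the elite with L, and its total reward with that of L, if L has the higher total reward |
| Set L to None and its total reward to 0 |
| end for |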

Computational Complexity Analysis of EER

This section analyzes the computational complexity of the proposed Elite Episode Replay (EER) algorithm in terms of sampling, insertion, priority update, elite memory update, and space complexity, in comparison to the uniform and PER approaches. Consider an experience replay memory with a buffer size of N, where each episode consists of M transitions, each transition has a fixed dimension d, and B experiences are sampled every step. In the uniform strategy, insertion and sampling are efficient, with complexities of O(1) and O(B), respectively. The overall space complexity is O(N · d).

Assume that the experience memory in PER is implemented using a sum-tree structure [11], which supports logarithmic-time operations. Each insertion requires O(log N) time per update. Since B transitions are sampled and updated per step, both the sampling and priority update complexities are O(B log N) per step. Additionally, the space complexity for PER is O(N · d).
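To make the logarithmic cost concrete, the following is a generic sum-tree sketch, not the implementation used in the experiments. Leaves hold priorities, each internal node holds the sum of its children, and both an update and a proportional draw walk a single root-to-leaf path. The class and method names are hypothetical.

```python
import random


class SumTree:
    """Binary tree whose internal nodes store the sum of their children."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.nodes = [0.0] * (2 * capacity)  # leaves live at [capacity, 2 * capacity)

    def update(self, index, priority):
        """Set the priority of leaf `index` and refresh its ancestors: O(log N)."""
        pos = index + self.capacity
        self.nodes[pos] = priority
        pos //= 2
        while pos >= 1:
            self.nodes[pos] = self.nodes[2 * pos] + self.nodes[2 * pos + 1]
            pos //= 2

    def total(self):
        """Sum of all priorities, stored at the root."""
        return self.nodes[1]

    def find(self, mass):
        """Return the leaf index whose cumulative range contains `mass`: O(log N)."""
        pos = 1
        while pos < self.capacity:
            left = 2 * pos
            if mass < self.nodes[left]:
                pos = left
            else:
                mass -= self.nodes[left]
                pos = left + 1
        return pos - self.capacity

    def sample(self, batch_size, rng=random):
        """Draw `batch_size` leaf indices with probability proportional to priority."""
        return [self.find(rng.random() * self.total()) for _ in range(batch_size)]
```

Drawing B indices and then updating their B priorities therefore costs O(B log N) per step, which is the overhead that uniform sampling, and hence EER, avoids.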

In the EER method, we have an additional elite memory with a size of E episodes. During training, we prioritize sampling from the elite memory and fall back to the replay memory once the elite memory is exhausted. Assuming uniform sampling from both memories, the sampling complexity remains O(B). Insertion into the replay memory remains O(1), while elite memory updates occur only once per episode, by checking whether the new episode outperforms the stored ones. This requires scanning the elite memory once, resulting in a per-episode update complexity of O(E). The total space complexity is O(N · d + E · M · d), accounting for both the replay memory and the elite memory (Table 2).

5. Experiment

5.1. Experiment Setting

The training was conducted on a single 8 GB NVIDIA RTX 2080 Ti GPU with a quad-core CPU and 16 GB RAM. We used 150 musical pieces from the piano fingering dataset compiled from 8 pianists [21]. Out of these, 69 pieces were selected for training based on the following criteria:

All human fingering sequences must start with the same finger.

No finger substitution is allowed (e.g., 3 then switch 1 transitions are excluded).

Following the setup in [33], each music piece is treated as a separate environment to find the optimal fingering for that piece. Performance is evaluated using the fingering difficulty metric defined in Equation (5). The remaining settings are listed in Table 3.

The 1 × 362 input is fed through three fully connected layers with 1024, 512, and 256 units, respectively, each followed by a ReLU layer. The last layer consists of five output heads, one for each note-count condition, with 5, 10, 10, 5, and 1 units. Training was run for 500 episodes without early stopping, and the fingering sequence with the highest return was selected as the final result. Training began only after a minimum of 10 times the piece length or 1000 steps, whichever was larger.
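The warm-up rule amounts to a one-line computation, sketched here with a hypothetical helper `warmup_steps` and parameter names of our choosing.

```python
def warmup_steps(piece_length, factor=10, floor=1000):
    """Steps collected before learning starts: max(factor * length, floor)."""
    return max(factor * piece_length, floor)


print(warmup_steps(50))    # 1000
print(warmup_steps(1675))  # 16750
```

For short pieces the 1000-step floor dominates, while for a long piece such as the 1675-step piece No. 033 the warm-up spans ten full passes through the score.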

5.2. Experimental Results

Table 4 presents the comparative evaluation of uniform replay [3], prioritized experience replay (PER) [11], and our proposed Elite Episode Replay (EER). Each method was trained across 69 piano pieces, and its performance was assessed using Equation (5), training time, and maximum episode reward. The win column indicates how often each method produced the lowest fingering difficulty among all methods.

To evaluate the performance across different training horizons, we conducted a pairwise Wilcoxon signed-rank test with 95% confidence interval on the initial 200 episodes and the full 500 episodes. In the early phase (first 200 episodes), no statistically significant differences were found among the algorithms. This indicates that all methods exhibited comparable initial learning behavior. In contrast, over the full training horizon, both Uniform and EER significantly outperformed PER with p-values of and , respectively. However, no significant difference was found between Uniform and EER, suggesting that both converge to a similar level of performance.

In terms of computational efficiency, EER reduced the average training time per step by 21% compared to Uniform replay (p < 0.01) and by 34% compared to PER (p < 0.01). In terms of learning efficiency, EER reaches the maximum reward 18% faster than Uniform and 30% faster than PER, although this latter difference was not statistically significant. Although EER and Uniform converge to a similar difficulty level, EER reached this performance significantly faster. This highlights EER’s ability to accelerate learning without sacrificing fingering quality.

Table 5 further compares EER and its baselines with the FHMM approach [21] on a 30-piece subset using four match-rate metrics: general, high-confidence, soft match, and recovery match. EER maintained competitive match rates across these metrics while offering lower difficulty scores than all HMM variants. Although HMM-based methods, particularly FHMM2 and FHMM3, performed slightly better in exact match-rate metrics, the deep RL methods, especially EER, achieved greater reductions in fingering difficulty. Overall, these results demonstrate that Elite Episode Replay provides an effective and computationally efficient mechanism for learning optimal piano fingerings using reinforcement learning. Additionally, when evaluated against human-labeled fingerings, EER produced more optimal fingerings in 59 out of 69 pieces.

Despite being built on the base algorithm, EER achieves faster training by prioritizing learning from elite episodes. These episodes offer a more informative transition, resulting in the agent receiving more informative gradient signals in early training, reducing the total number of updates required to achieve convergence. Moreover, EER retains the same sampling complexity as Uniform replay and introduces minimal overhead for managing the elite buffer, thereby preserving computational efficiency while significantly improving learning speed.

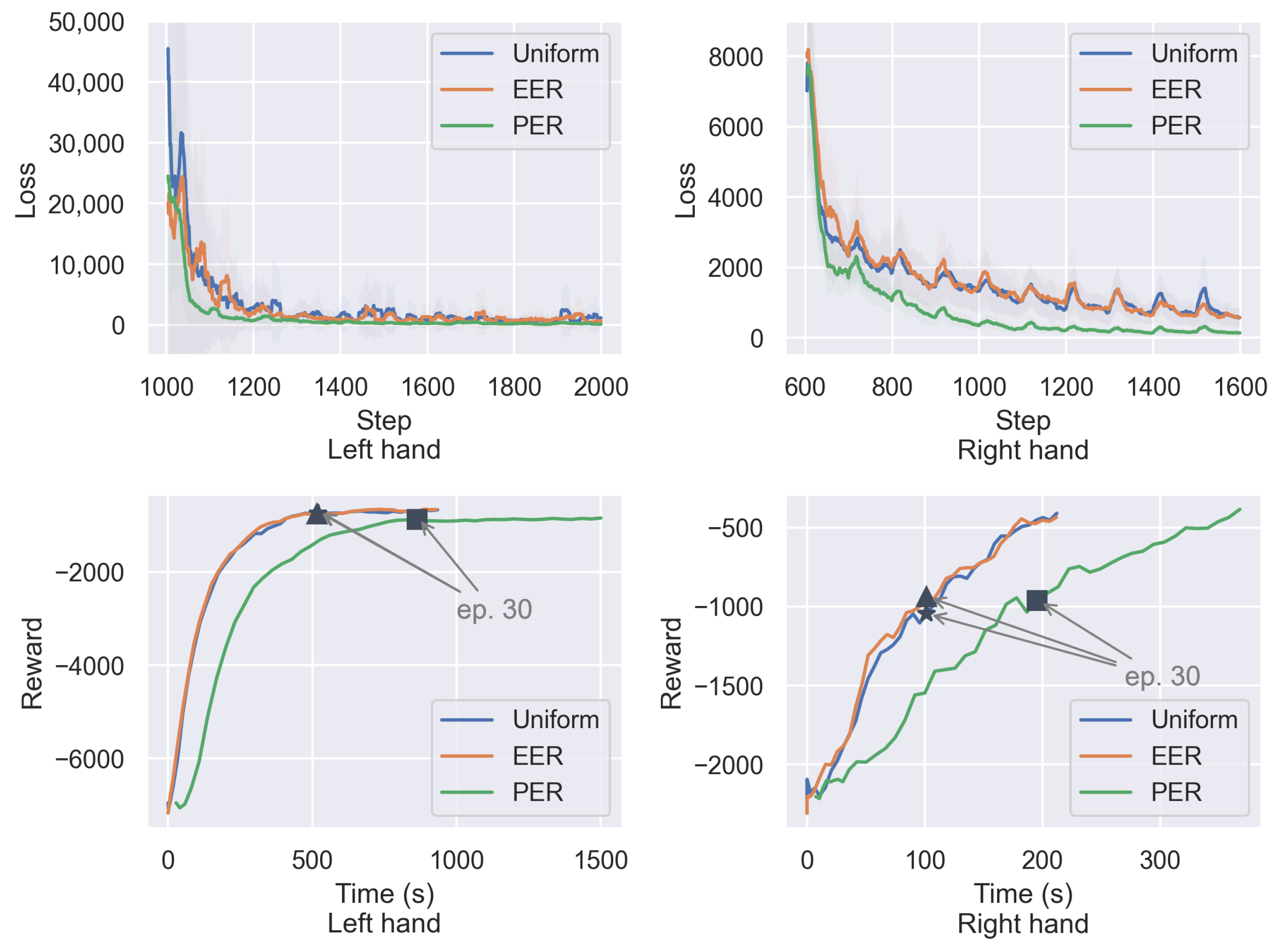

5.3. Error and Training Speed Analysis

Since our model approximates five Q-values in a single run, we examine whether polyphonic and monophonic passages affect TD error and convergence speed. To investigate this, we trained on piece No. 107, Rachmaninoff’s Moments Musicaux Op. 16 No. 4, bars 1–9, which features a polyphonic right hand and a monophonic left hand. We analyzed the loss per step and the reward progression over time in Figure 3.

The results indicate that PER achieves a lower loss per learning step by emphasizing transitions with high TD-error. Our method (EER) also attains a lower per-step error than Uniform. In polyphonic passages, EER yields more stable learning compared to Uniform. In monophonic settings, although PER maintains the lowest TD error, it suffers from significantly slower training speeds. Across both types of passages, EER outperforms the other methods in terms of training speed and reaches higher total rewards earlier in training. Furthermore, EER exhibits a loss pattern similar to that of Uniform, reflecting our hybrid sampling by retaining elite experiences while sampling the rest uniformly. This combination allows EER to balance convergence speed with exploration and improves both training stability and overall efficiency.

Since we use an elite size of one, a simple implementation of the elite memory could be based on either a list or a linked list. To evaluate sampling speed, we compared both data structures by measuring the time from the start to the first sampled transition on piece No. 077, Chopin’s Waltz Op. 69 No. 2. As shown in Table 6, the sampling times for the list and linked list implementations are nearly identical, with the linked list being slightly faster in some cases. This suggests that both structures are interchangeable when the elite size is kept minimal.

5.4. Elite Studies

To examine the impact of elite replay, we trained a subset of 30 music pieces (see Appendix A) with various elite sizes and analyzed the corresponding training time and reward per episode. We implemented the elite memory using a queue based on a doubly linked list. In each iteration, the episode with the highest reward was prioritized first, followed by subsequent elites in descending order of reward. Once all elites were replayed, the remaining samples were drawn uniformly from the replay memory.

Table 7 shows the difficulty and training time per step (in seconds) for different elite sizes. With an elite size of

, the average difficulty increases by 0.1 points, while the training time per step decreases by 20.1%. However, further increasing the elite size tends to raise the difficulty, but does not result in significant training speed improvements.

Figure 4 illustrates that using elite memory can enhance reward acquisition during the early stages of learning.

To further analyze the effect of elite memory, we visualize the training process for piece No. 077 (Chopin’s Waltz Op. 69 No. 2). As shown in Figure 5, when the elite size reaches 32 (equal to the batch size), the agent learns exclusively from the elite memory. In this condition, exploration becomes limited as ε decreases, and learning quality gradually deteriorates after episode 64. This observation aligns with Equation (10), which implies that a higher number of elites reduces the diversity of sampled experiences and limits the model’s learning flexibility.

Furthermore, we also observe from Figure 4 and Figure 5 that introducing elite memory improves convergence speed. Increasing E initially improves performance, as seen in the polyphonic setting where expanding E from 1 to 4 leads to higher rewards. However, an excessively large number of elites can result in premature convergence and suboptimal performance due to reduced exploration. This effect is particularly noticeable when the number of stored elite episodes approaches the batch size, potentially leading the agent to overfit to recent experiences and fall into a local optimum. Statistical analysis reveals that configurations with

do not show a significant difference in performance, while

and

yield significantly lower rewards (

p < 0.01), suggesting a threshold effect where performance degrades only beyond a certain elite memory size. This finding suggests that elite memory plays a meaningful role in reinforcing successful episodes and guiding learning. Identifying the optimal size of

E for different environments and balancing it with exploration remains an open and promising direction for future work.

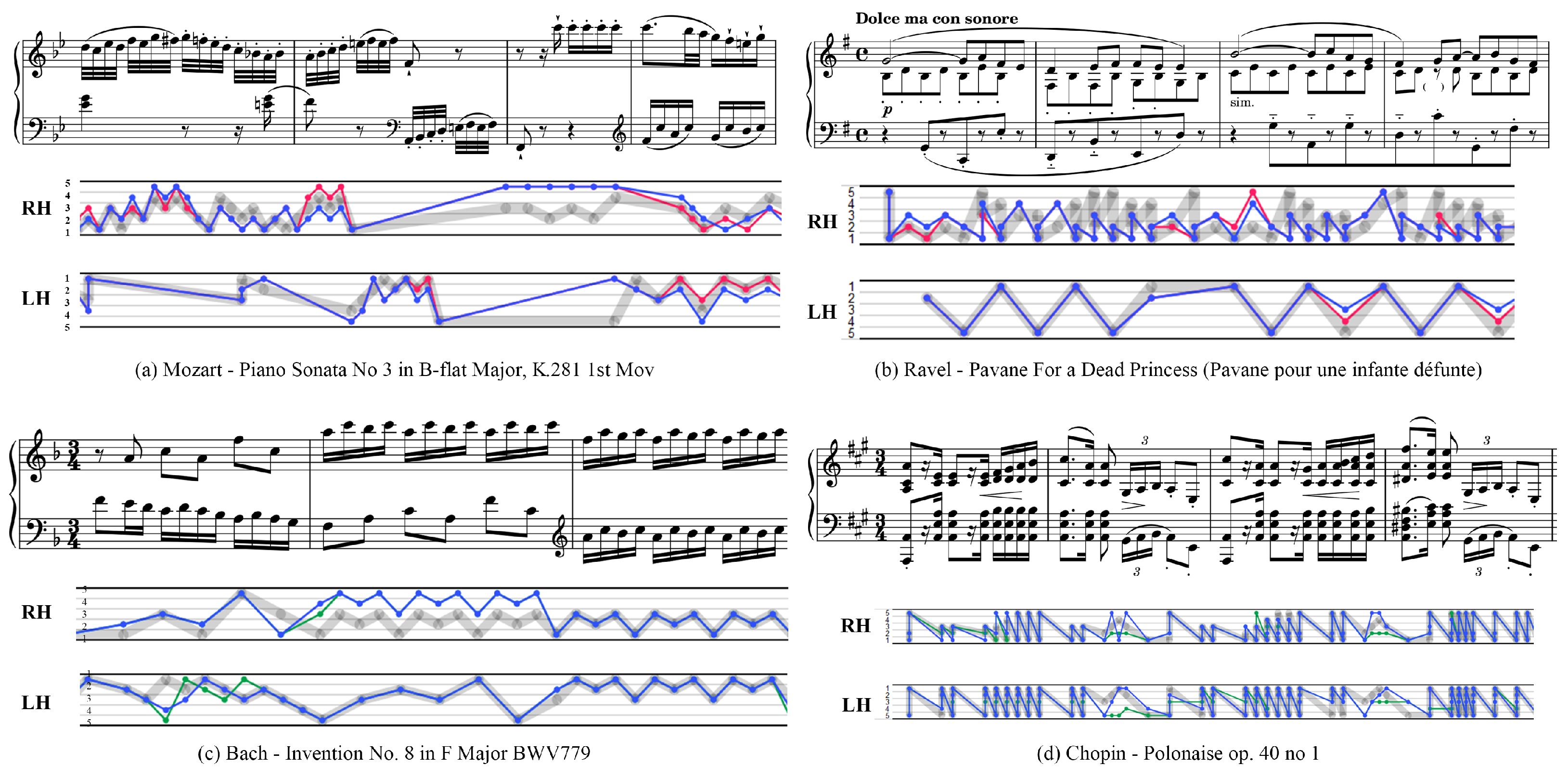

5.5. Analysis of Piano Fingering

We analyze the piano fingering generated by different agents by visualizing the fingering transitions for EER-Uniform and EER-PER pairs, as shown in Figure 6 [21]. In Figure 6c, our reinforcement learning (RL) formulation generates the right-hand (RH) fingering for the passage A5–C5–B♭5–C5 as 3/4–5–4–5, which is relatively easier to perform than an alternative such as 1–3–2–3 that requires full-hand movement.

In Figure 6a, the EER-generated fingering 1–2–3–2–3–1 for D5–E5–F5–E5–F5–F4 results in smoother transitions than the uniform agent’s 1–4–5–4–5–1, which involves a larger and more awkward movement between D5 and E5 using fingers 1 and 4. Similarly, in Figure 6c (left hand), although both EER and PER produce fingering close to human standards, the PER agent’s decision to use 3–5–1 for the D5–C5–D5 passage in the first bar results in awkward fingering due to the small note interval. In contrast, both human and EER agents tend to perform the passage with seamless turns such as 1–2–1 or 3–4–1, allowing for smoother motion. In polyphonic settings, the EER fingering (5, 2, 1) for the chord (A2, E3, A3) shown in Figure 6d (left hand) is more natural and consistent with human fingering than PER’s choice of (3, 2, 1). Furthermore, for a one-octave interval (A2, A3), the use of (3, 1) is often considered awkward in real performance, whereas EER’s (5, 1) fingering is more conventional and widely used by human players.

Despite these promising results, Figure 6 also reveals that all agents struggle with passages involving significant leaps, as illustrated in Figure 7. Such jumps occur when two consecutive notes or chords are far apart, such as the left-hand F2 to F4 or the right-hand F4 to C6 transitions in Figure 6a. Additionally, the agents face difficulty in handling dissonant harmonies over sustained melodies, as in Figure 6b, where the base melody must be held with one finger while simultaneously voicing a second melodic line. These findings indicate that while the agent can learn effectively under the current constraints, achieving pianist-level fluency likely requires more context-aware reward design. We view these as important indicators of where the current reward formulation falls short and as valuable benchmarks for guiding future improvement.

5.6. Limitation and Future Works

While our study provides valuable insights into piano fingering generation and reinforcement learning strategies, it also has several limitations. First, since we formulate each musical piece as a separate environment, training time depends on the length of the score. Longer pieces inherently require more time per episode. For instance, training on piece No. 033, which has 1675 steps per episode, takes 3.5 min per episode for Uniform, 3.0 min for EER, and 4.7 min for PER. This suggests that optimizing the training pipeline for longer compositions remains an important area for improvement. Moreover, solving piano fingering estimation through per-piece training limits scalability for real-world deployment. As such, an important direction for future work is to extend our approach towards a generalized model using curriculum learning or sequential transfer learning with reinforcement learning. This would enable the training of a shared policy across multiple environments.

Second, the quality of the generated fingering is heavily influenced by the rule-based reward design. As discussed in Section 5.5, the model still struggles with advanced piano techniques such as large leaps and dissonant harmonies. Enhancing the reward structure or incorporating more nuanced rules could lead to improved generalization in such cases. Furthermore, since piano fingering rule development is an ongoing research area [32], our framework can serve as a testbed to validate the effectiveness of newly proposed rules. Future work could involve dynamically adapting reward functions based on performance context, style, or user feedback to further improve the quality and realism of generated fingerings.

6. Conclusions

We presented an experience-based training strategy that leverages elite memory, which stores high-reward episodes for prioritized learning by the agent. The agent was trained to interpret and respond to musical passages formulated as a reinforcement learning problem. We proposed a Finger DuelDQN that is capable of producing multiple action Q-values per state. Our results show that the Elite Episode Replay (EER) method improves both convergence speed and fingering quality compared to standard experience replay. We also investigated the impact of elite memory size on training performance. Our experiments reveal that incorporating a small number of elite episodes can guide the agent toward early-stage success by reinforcing effective past behaviors. Compared to uniform and prioritized experience replay, our method achieved lower training error and yielded higher rewards per unit time, demonstrating its efficiency.

This study highlights the potential of elite memory in structured decision-making tasks like piano fingering. However, further research is needed to generalize its effectiveness across different domains and to explore combinations of elite memory with other sampling strategies. Moreover, human pianists typically develop fingering skills progressively through foundational exercises, such as scales, cadences, arpeggios, and études, before tackling complex musical pieces. Therefore, future work could investigate transfer learning frameworks that incorporate such pedagogical knowledge into deep reinforcement learning-based fingering systems.