CPEL: A Causality-Aware, Parameter-Efficient Learning Framework for Adaptation of Large Language Models with Case Studies in Geriatric Care and Beyond

Abstract

1. Introduction

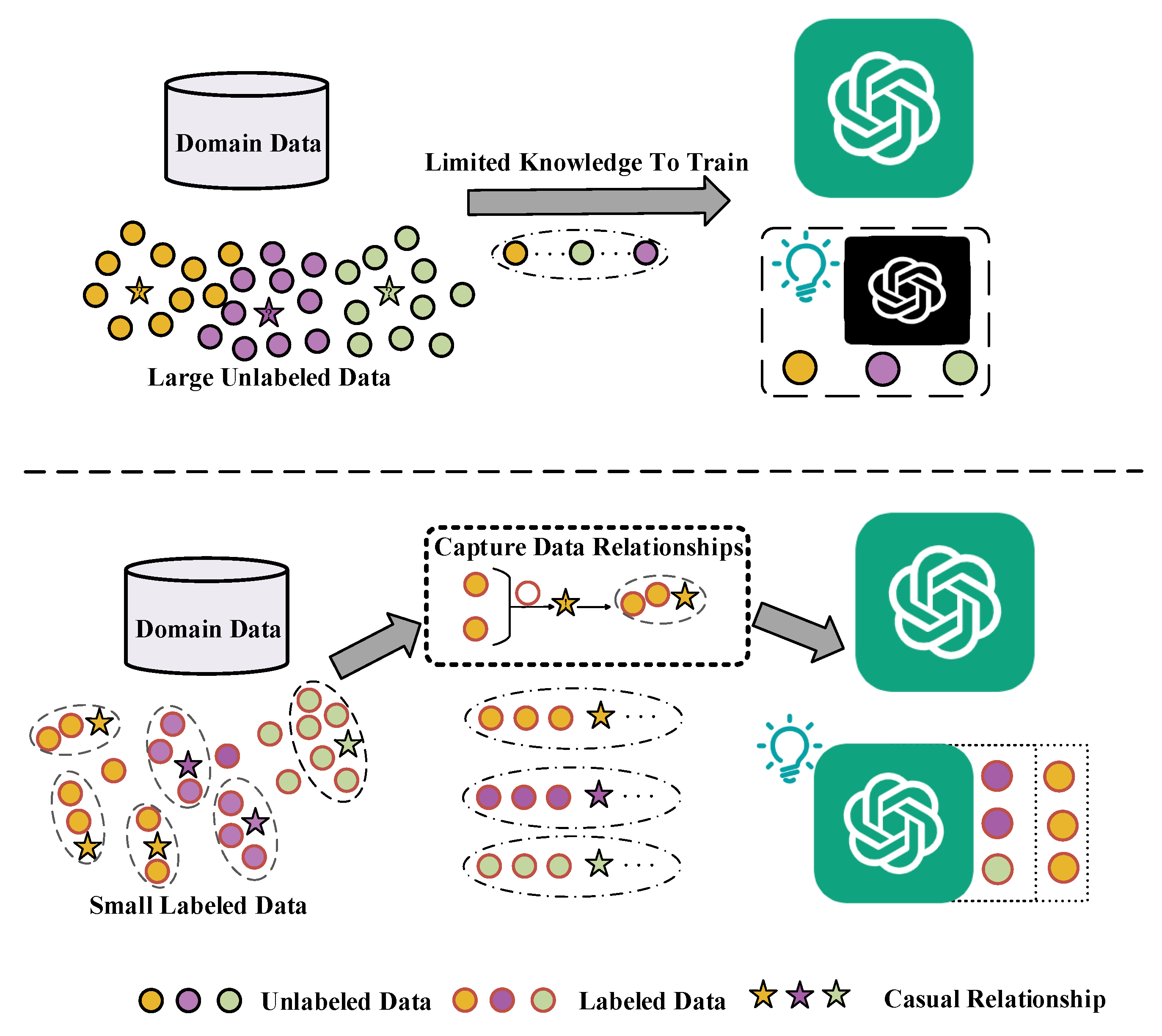

- This study identifies a critical challenge in adapting large language models (LLMs) to specialized, resource-constrained domains like geriatric care: the underutilization of causal information amidst data scarcity and limited computation. We propose enhancing parameter-efficient prompt learning with counterfactual reasoning and causal insights to improve the adaptability of LLMs in low-resource settings without extensive retraining.

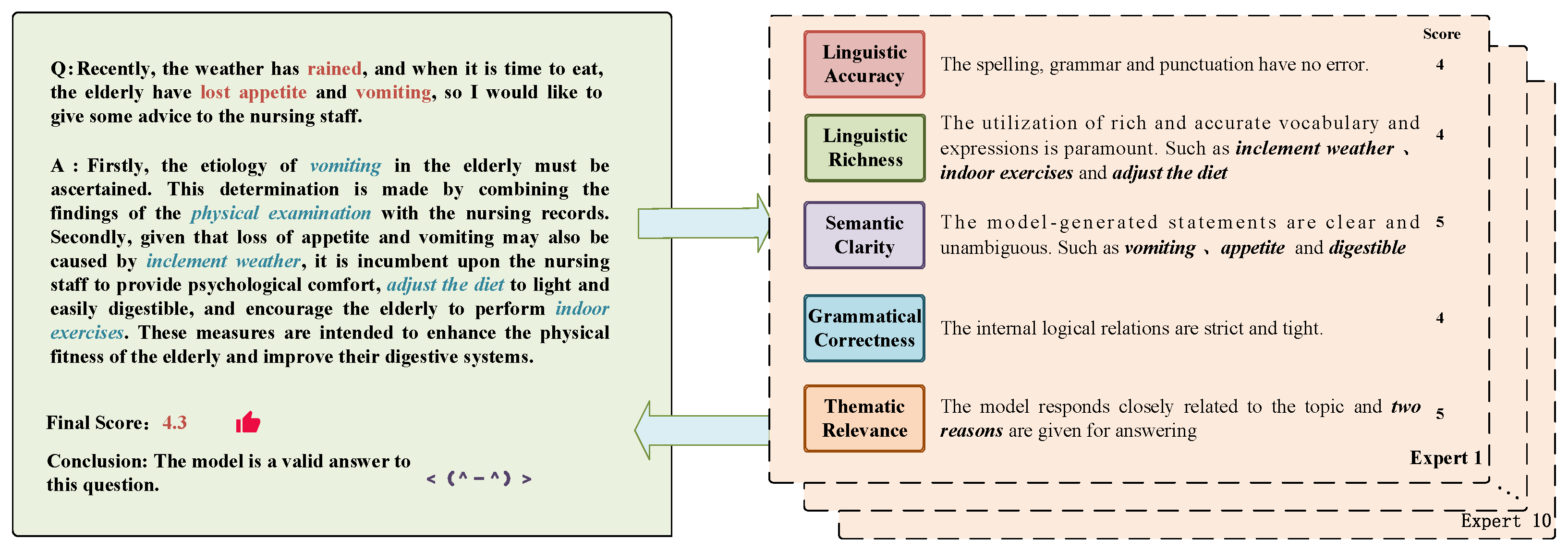

- We introduce Causality-Aware Parameter-Efficient Learning (CPEL), a novel two-stage pipeline. Specifically, The Parameter-Efficient Fine-Tuning (MPEFT) module employs a two-step LoRA-Adapter routine for lightweight adaptation. The Causal Prompt Generator (CPG) further generates counterfactual prompts to enhance task performance. This framework enables efficient LLM specialization in low-resource settings.

- The effectiveness of the proposed CPEL framework is rigorously validated through extensive experiments, and the results demonstrate that CPEL achieves significant improvements compared to mainstream LLMs and outperforms frontier models by 1–2 points in auto-scoring tasks. Moreover, it reduces training parameters to approximately 0.001% of those used by the original model, significantly lowering computational costs and training time.

2. Related Works

2.1. The Specialized Field Large Language Model

2.2. Causal Representation Learning in Large Language Models

2.3. Research on Model Domain Adaptation Strategies

3. Methodology

3.1. Problem Statement

3.1.1. Step 1: The Learning Phase—Domain Adaptation

3.1.2. Step 2: The Fine-Tuning Stage—Instruction in Downstream Tasks

3.1.3. Definitions of Tasks Related to Geriatric Care

- Health-Knowledge Question Answering (HKQA): This task involves answering domain-specific questions related to health management for the elderly, care in the context of chronic disease, nutrition, medication, and mental well-being. The goal is to provide concise and accurate responses grounded in nursing knowledge.

- Health-Event Causal Reasoning (HECR): This involves identifying cause–effect relationships from user descriptions or care records, with aims such as linking symptoms to underlying conditions or recognizing causal chains in events of daily life (e.g., “Poor sleep leads to high blood pressure”).

- Care-Plan Generation (CPG): Based on the user’s current state, historical records, or specific queries, the model generates personalized care suggestions or daily routines that align with clinical guidelines and individual needs.

3.2. Causality-Aware Parameter-Efficient Learning Framework

3.2.1. MPEFT Module

3.2.2. Causal Prompt Generator

4. Experiment

4.1. Dataset

- mental_health_counseling_conversations was curated by aggregating questions and responses from various online counseling and therapy platforms. Comprising 3510 entries, the content of this dataset is predominantly centered around mental health concerns. Importantly, the responses were contributed by certified mental health counselors, ensuring the high quality of the data.

- Medical_Customer_care comprises 207 K entries primarily focused on health-related knowledge and information. Each entry offers comprehensive details and recommendations.

- The HCaring dataset is a curated corpus designed to support domain adaptation and evaluation in elder-care scenarios. It is built from a diverse collection of video materials sourced from public platforms such as YouTube, Bilibili, and TikTok, covering topics like nursing procedures, rehabilitation training, psychological counseling, daily care routines, and promotion of a healthy lifestyle. To enable structured training of language models, the dataset is transformed into a set of high-quality QA pairs. Each entry follows a standardized schema consisting of the following: (1) an instruction field—an optional category or scenario tag describing the caregiving context; (2) an input field—a natural-language question derived from real-world situations related to geriatric care; (3) an output field—a reference answer crafted by human annotators based on domain-specific guidelines, expert consensus, or verified clinical resources.All QA pairs were constructed and reviewed following task-specific annotation protocols to ensure consistency, domain coverage, and quality control. A representative sample is provided in Appendix B to illustrate the data format and annotation style.

4.2. Domain-Adaptive Training

4.2.1. Experimental Setup

4.2.2. Experimental Results

4.2.3. Experimental Analysis

4.3. Downstream Task-Instruction Fine-Tuning

4.3.1. Experimental Results

4.3.2. Experimental Analysis

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

| Task | Prompt Type | Prompt | Output Behavior |

|---|---|---|---|

| HKQA | Base Prompt | Why should caregivers help elderly individuals brush their teeth every day? | General reasoning; hygiene-focused |

| Causal Prompt | Given that lack of oral hygiene can lead to tooth decay and systemic infection, why is it important for caregivers to help elderly individuals brush their teeth daily? | More specific causal reasoning (cause–effect-prevention) | |

| HECR | Base Prompt | After successful CPR, how to place the elderly? | Describes position; lacks rationale |

| Causal Prompt | Given that the elderly patient has just been resuscitated from cardiac arrest, what is the proper placement to ensure airway patency and vital sign stability while waiting for emergency services? | Emphasizes causal consequence of improper posture, safety relevance |

Appendix A.2

| Task | Base Prompt and Output | Causal Prompt and Output |

|---|---|---|

| Example 1—HKQA | Prompt: Why should caregivers help elderly individuals brush their teeth every day? Output: “To maintain oral hygiene and prevent dental problems.” | Prompt: Given that lack of oral hygiene can lead to tooth decay and systemic infection, why is it important for caregivers to help elderly individuals brush their teeth daily? Output: “Because inadequate oral hygiene can result in systemic infections such as aspiration pneumonia, it is crucial for caregivers to assist elderly individuals in daily brushing to ensure both oral and overall health.” |

| Example 2—HECR | Prompt: After successful CPR, how to place the elderly? Output: “Place in supine position and monitor vital signs.” | Prompt: Given that the elderly patient has just been resuscitated from cardiac arrest, what is the proper placement to ensure airway patency and vital sign stability while waiting for emergency services? Output: “To prevent airway obstruction and maintain adequate blood circulation after resuscitation, the elderly should be laid in a supine position with the airway open and continuously monitored until emergency staff arrives.” |

| Example 3—CPG | Prompt: What is the standard procedure for administering insulin? Output: “Follow steps to inject insulin under the skin.” | Prompt: Considering that improper insulin administration can lead to hypoglycemia or hyperglycemia, what is the correct step-by-step procedure for safely administering insulin to elderly patients? Output: “To avoid dangerous glucose fluctuations, caregivers must ensure correct dose selection, site rotation, and post-injection monitoring during insulin administration in elderly patients.” |

Appendix B

Appendix C

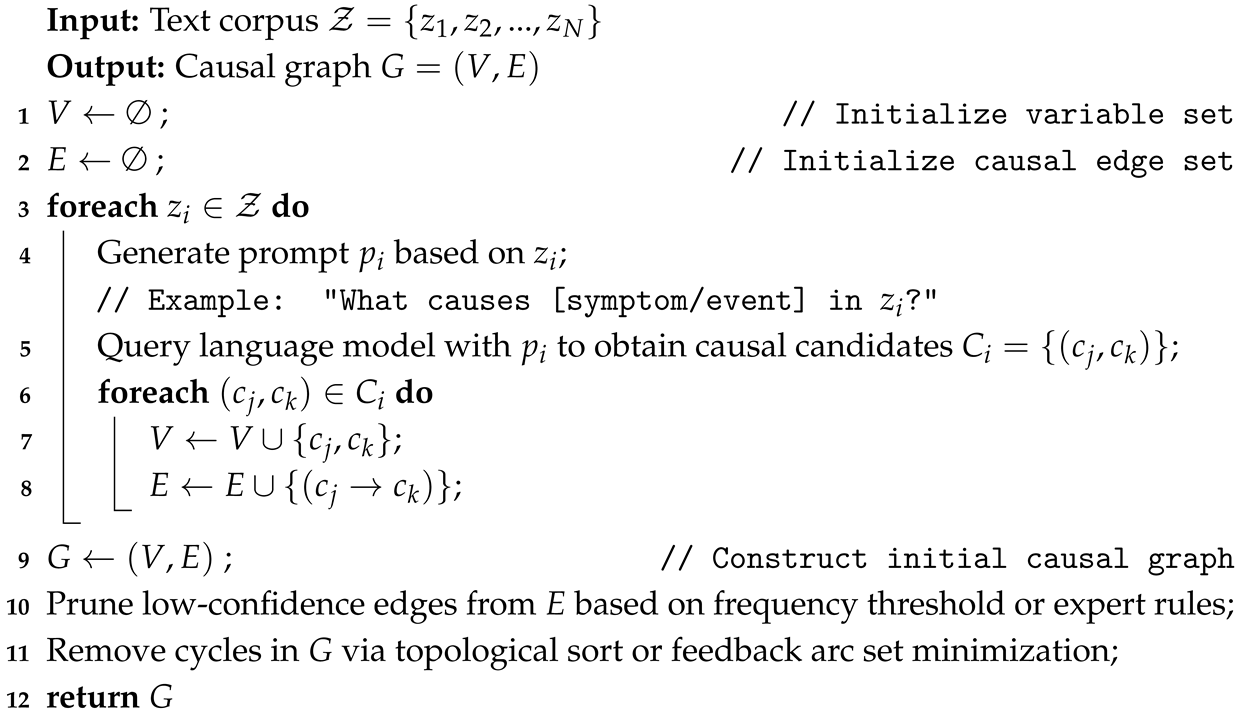

| Algorithm A1: Prompt-Based Causal Relation Discovery |

|

Appendix D

References

- Shim, J.-Y.; Kang, B.-Y.; Yun, T.-J.; Lee, B.-R.; Kim, I.-S. The Present Situation of the Research and Development of the Electromagnetic Pulse Technology. Mater. Today Proc. 2020, 10, 142–149. [Google Scholar] [CrossRef]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223. [Google Scholar] [PubMed]

- Minaee, S.; Mikolov, T.; Nikzad, N.; Chenaghlu, M.; Socher, R.; Amatriain, X.; Gao, J. Large Language Models: A Survey. arXiv 2024, arXiv:2402.06196. [Google Scholar]

- Xu, M.; Yin, W.; Cai, D.; Yi, R.; Xu, D.; Wang, Q.; Wu, B.; Zhao, Y.; Yang, C.; Wang, S. A Survey of Resource-Efficient LLM and Multimodal Foundation Models. arXiv 2024, arXiv:2401.08092. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Luo, Z.; Xu, C.; Zhao, P.; Geng, X.; Tao, C.; Ma, J.; Lin, Q.; Jiang, D. Augmented Large Language Models with Parametric Knowledge Guiding. arXiv 2023, arXiv:2305.04757. [Google Scholar]

- Ling, C.; Zhao, X.; Lu, J.; Deng, C.; Zheng, C.; Wang, J.; Chowdhury, T.; Li, Y.; Cui, H.; Zhang, X.; et al. Domain Specialization as the Key to Make Large Language Models Disruptive: A Comprehensive Survey. arXiv 2023, arXiv:2305.18703. [Google Scholar]

- Aduragba, O.T.; Yu, J.; Cristea, A.; Long, Y. Improving Health Mention Classification Through Emphasising Literal Meanings: A Study Towards Diversity and Generalisation for Public Health Surveillance. In Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April–4 May 2023; pp. 3928–3936. [Google Scholar]

- Huang, L.; Yu, W.; Ma, W.; Zhang, Y.; Li, S.; Liu, J. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 1–55. [Google Scholar] [CrossRef]

- Yang, R.; Tan, T.F.; Lu, W.; Thirunavukarasu, A.J.; Ting, D.S.W.; Liu, N. Large Language Models in Health Care: Development, Applications, and Challenges. Health Care Sci. 2023, 2, 255–263. [Google Scholar] [CrossRef]

- Zhu, Z.; Lin, K.; Jain, A.K.; Zhou, J. Transfer Learning in Deep Reinforcement Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13344–13362. [Google Scholar] [CrossRef]

- Singhal, P.; Walambe, R.; Ramanna, S.; Kotecha, K. Domain Adaptation: Challenges, Methods, Datasets, and Applications. IEEE Access 2023, 11, 6973–7020. [Google Scholar] [CrossRef]

- Li, J.; Yu, Z.; Du, Z.; Zhu, L.; Shen, H. A Comprehensive Survey on Source-Free Domain Adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5743–5762. [Google Scholar] [CrossRef] [PubMed]

- Yang, Y.; Sun, H.; Li, J.; Liu, R.; Li, Y.; Liu, Y.; Gao, Y.; Huang, H. MindLLM: Lightweight Large Language Model Pre-Training, Evaluation and Domain Application. AI Open 2024, 5, 155–180. [Google Scholar] [CrossRef]

- Hu, L.; Liu, Z.; Zhao, Z.; Hou, L.; Nie, L.; Li, J. A Survey of Knowledge Enhanced Pre-Trained Language Models. IEEE Trans. Knowl. Data Eng. 2023, 6, 1413–1430. [Google Scholar] [CrossRef]

- Ding, N.; Qin, Y.; Yang, G.; Wei, F.; Yang, Z.; Su, Y.; Hu, S.; Chen, Y.; Chan, C.-M.; Chen, W. Parameter-Efficient Fine-Tuning of Large-Scale Pre-Trained Language Models. Nat. Mach. Intell. 2023, 5, 220–235. [Google Scholar] [CrossRef]

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Halgamuge, M.N. Leveraging Deep Learning to Strengthen the Cyber-Resilience of Renewable Energy Supply Chains: A Survey. IEEE Commun. Surv. Tutor. 2024, 26, 2146–2175. [Google Scholar] [CrossRef]

- Wu, M.; Subramaniam, G.; Zhu, D.; Li, C.; Ding, H.; Zhang, Y. Using Machine Learning-Based Algorithms to Predict Academic Performance—A Systematic Literature Review. In Proceedings of the 2024 4th International Conference on Innovative Practices in Technology and Management (ICIPTM), Noida, India, 21–23 February 2024; pp. 1–8. [Google Scholar] [CrossRef]

- Yang, F.; Li, X.; Duan, H.; Xu, F.; Huang, Y.; Zhang, X.; Long, Y.; Zheng, Y. MRL-Seg: Overcoming Imbalance in Medical Image Segmentation with Multi-Step Reinforcement Learning. IEEE J. Biomed. Health Inform. 2024, 28, 858–869. [Google Scholar] [CrossRef]

- Han, Z.; Gao, C.; Liu, J.; Zhang, J.; Zhang, S.Q. Parameter-Efficient Fine-Tuning for Large Models: A Comprehensive Survey. arXiv 2024, arXiv:2403.14608. [Google Scholar]

- Zhang, Y.; Wang, L.; Zhong, G. Design and Analysis of a Variable-Parameter Noise-Tolerant ZNN for Solving Time-Variant Nonlinear Equations and Applications. Appl. Intell. 2025, 55, 460. [Google Scholar] [CrossRef]

- Wang, J.; Sun, Q.; Li, X.; Gao, M. Boosting Language Models Reasoning with Chain-of-Knowledge Prompting. arXiv 2023, arXiv:2306.06427. [Google Scholar]

- Xu, L.; Zhang, J.; Li, B.; Wang, J.; Cai, M.; Zhao, W.X.; Wen, J.-R. Prompting Large Language Models for Recommender Systems: A Comprehensive Framework and Empirical Analysis. arXiv 2024, arXiv:2401.04997. [Google Scholar]

- Petruzzelli, A.; Musto, C.; Laraspata, L.; Rinaldi, I.; de Gemmis, M.; Lops, P.; Semeraro, G. Instructing and Prompting Large Language Models for Explainable Cross-Domain Recommendations. In Proceedings of the 18th ACM Conference on Recommender Systems, Bari, Italy, 14–18 October 2024; pp. 298–308. [Google Scholar]

- Li, X.; Peng, S.; Yada, S.; Wakamiya, S.; Aramaki, E. GenKP: Generative Knowledge Prompts for Enhancing Large Language Models. Appl. Intell. 2025, 55, 464. [Google Scholar] [CrossRef]

- Liu, J.M.; Li, D.; Cao, H.; Ren, T.; Liao, Z.; Wu, J. ChatCounselor: A Large Language Models for Mental Health Support. arXiv 2023, arXiv:2309.15461. [Google Scholar]

- Yang, S.; Zhao, H.; Zhu, S.; Zhou, G.; Xu, H.; Jia, Y.; Zan, H. Zhongjing: Enhancing the Chinese Medical Capabilities of Large Language Model through Expert Feedback and Real-World Multi-Turn Dialogue. Proc. AAAI Conf. Artif. Intell. 2024, 38, 19368–19376. [Google Scholar] [CrossRef]

- Xiong, H.; Wang, S.; Zhu, Y.; Zhao, Z.; Liu, Y.; Huang, L.; Wang, Q.; Shen, D. DoctorGLM: Fine-Tuning Your Chinese Doctor Is Not a Herculean Task. arXiv 2023, arXiv:2304.01097. [Google Scholar]

- Zhang, H.; Chen, J.; Jiang, F.; Yu, F.; Chen, Z.; Li, J.; Chen, G.; Wu, X.; Zhang, Z.; Xiao, Q. HuaTuoGPT: Towards Taming Language Model to Be a Doctor. arXiv 2023, arXiv:2305.15075. [Google Scholar]

- Dai, Y.; Feng, D.; Huang, J.; Jia, H.; Xie, Q.; Zhang, Y.; Han, W.; Tian, W.; Wang, H. LAiW: A Chinese Legal Large Language Models Benchmark (A Technical Report). arXiv 2023, arXiv:2310.05620. [Google Scholar]

- Zhang, X.; Yang, Q. Xuanyuan 2.0: A Large Chinese Financial Chat Model with Hundreds of Billions Parameters. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 4435–4439. [Google Scholar]

- Yang, H.; Liu, X.-Y.; Wang, C.D. FinGPT: Open-Source Financial Large Language Models. arXiv 2023, arXiv:2306.06031. [Google Scholar] [CrossRef]

- Dan, Y.; Lei, Z.; Gu, Y.; Li, Y.; Yin, J.; Lin, J.; Ye, L.; Tie, Z.; Zhou, Y.; Wang, Y. EduChat: A Large-Scale Language Model-Based Chatbot System for Intelligent Education. arXiv 2023, arXiv:2308.02773. [Google Scholar]

- Luo, Y.; Zhang, J.; Fan, S.; Yang, K.; Wu, Y.; Qiao, M.; Nie, Z. BiomedGPT: Open Multimodal Generative Pre-Trained Transformer for Biomedicine. arXiv 2023, arXiv:2308.09442. [Google Scholar]

- Arora, A.; Jurafsky, D.; Potts, C. CausalGym: Benchmarking Causal Interpretability Methods on Linguistic Tasks. arXiv 2024, arXiv:2402.12560. [Google Scholar]

- Zeng, J.; Wang, R. A Survey of Causal Inference Frameworks. arXiv 2022, arXiv:2209.00869. [Google Scholar]

- Xie, B.; Chen, Q.; Wang, Y.; Zhang, Z.; Jin, X.; Zeng, W. Graph-Based Unsupervised Disentangled Representation Learning via Multimodal Large Language Models. arXiv 2024, arXiv:2407.18999. [Google Scholar]

- Xu, Z.; Ichise, R. FinCaKG-Onto: The Financial Expertise Depiction via Causality Knowledge Graph and Domain Ontology. Appl. Intell. 2025, 55, 4617. [Google Scholar] [CrossRef]

- Mu, F.; Li, W. A Causal Approach for Counterfactual Reasoning in Narratives. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 6556–6569. [Google Scholar]

- Miao, X.; Li, Y.; Qian, T. Generating Commonsense Counterfactuals for Stable Relation Extraction. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP), Singapore, 6–10 December 2023; pp. 5654–5668. [Google Scholar]

- Zhang, C.; Zhang, L.; Wu, J.; Zhou, D.; He, Y. Causal Prompting: Debiasing Large Language Model Prompting Based on Front-Door Adjustment. arXiv 2024, arXiv:2401.09042. [Google Scholar] [CrossRef]

- Farahmand, F. Commonsense for AI: An Interventional Approach to Explainability and Personalization. AI Soc. 2024, 39, 3673–3681. [Google Scholar] [CrossRef]

- Viswanathan, V.; Zhao, C.; Bertsch, A.; Wu, T.; Neubig, G. Prompt2Model: Generating Deployable Models from Natural Language Instructions. arXiv 2023, arXiv:2308.12261. [Google Scholar]

- Shi, Z.; Lipani, A. DEPT: Decomposed Prompt Tuning for Parameter-Efficient Fine-Tuning. arXiv 2023, arXiv:2309.05173. [Google Scholar]

- Li, X.; Lin, L.; Wang, S.; Qian, C. Unlock the Power: Competitive Distillation for Multi-Modal Large Language Models. arXiv 2023, arXiv:2311.08213. [Google Scholar]

- Wu, T.; Fan, Z.; Liu, X.; Zheng, H.-T.; Gong, Y.; Jiao, J.; Li, J.; Guo, J.; Duan, N.; Chen, W. AR-Diffusion: Auto-Regressive Diffusion Model for Text Generation. Adv. Neural Inf. Process. Syst. 2023, 36, 39957–39974. [Google Scholar]

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y. A Survey on Evaluation of Large Language Models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45. [Google Scholar] [CrossRef]

| Base Model | Method | Trainable Params | Train Time (h:m:s) | LLM Score (0–9) |

|---|---|---|---|---|

| LLaMA3.2-1B | LoRA | 1.02 M | 2:37:42 | 4.3 |

| LLaMA3.2-3B | LoRA | 3.07 M | 5:29:01 | 4.4 |

| LLaMA2-7B | LoRA | 7.18 M | 8:40:39 | 3.2 |

| LLaMA3.1-8B | LoRA | 8.20 M | 8:53:18 | 6.2 |

| Model | ROUGE (%) | BLEU-4 (%) | LLM Score (0–9) |

|---|---|---|---|

| DeepSeek-R1-Distill-Qwen-7B | |||

| + Zero-shot | 8.49 | 3.46 | 4.64 |

| + Zero-shot * | 14.51 (+6.02) | 13.30 (+9.84) | 5.47 (+0.83) |

| Gemini-pro | |||

| + Zero-shot | 10.56 | 4.21 | 5.01 |

| + Zero-shot * | 14.63 (+3.80) | 13.82 (+9.61) | 5.98 (+0.97) |

| GPT-4o-mini | |||

| + Zero-shot | 16.09 | 9.08 | 5.33 |

| + Zero-shot * | 20.42 (+4.33) | 15.48 (+6.40) | 6.74 (+1.41) |

| LLaMA-70B | |||

| + Zero-shot | 11.74 | 5.08 | 4.92 |

| + Zero-shot * | 16.34 (+4.60) | 10.37 (+5.29) | 5.48 (+0.56) |

| LLaMA-8B | 8.60 | 3.02 | 4.00 |

| Caring LLaMA-LoRA | 12.25 (+3.65) | 5.21 (+2.19) | 5.14 (+1.14) |

| Downstream LLaMA-LoRA | 29.41 (+17.16) | 20.91 (+15.7) | 6.57 (+1.43) |

| Downstream LLaMA-LoRA * | 34.96 (+5.55) | 25.41 (+4.50) | 7.06 (+0.49) |

| Q1: In the early morning, an old man suffering from Alzheimer’s disease got up early and sat on the bedside chair, looking out of the window. At this time, the nursing staff came in. What should be done next? |

| AI (ChatGPT): The nurse approached softly and greeted the old man with a smile: “Good morning, did you sleep well?” Then gently guided the old man out of bed, helped him to groom and prepare breakfast. |

| AI (Gemini): Nursing staff should help the agedly start the day safely and smoothly through cordial communication, clear guidance, and careful care. |

| AI (WenXinYiYan): The nursing staff would first engage in a brief conversation with the old man to understand his emotions and needs. They would then help him tidy up the bed, check his physical condition to ensure safety and wellbeing, and assist with daily activities such as washing up, dressing, or preparing breakfast. |

| AI (Geriatric Care LLaMA): Walk into the room gently, avoiding loud noises so as not to scare the agedly. Approach, crouch down, and talk in a soft, friendly tone. While communicating, observe the emotional state, posture, and eye contact to assess willingness to engage. If the person shows confusion or discomfort, first ask about their condition and whether they miss their children, and offer comfort and encouragement. Once emotionally stable and willing to cooperate, suggest washing and gargling. When aiding gargling, prepare toiletries with appropriate water temperature. Initially provide assistance, encourage imitation, and promote self-confidence and sense of participation. |

| Q2: At 11:30 a.m., an agedly man lying in bed with aphasia suddenly stuttered and spoke unclearly. The nursing staff happened to pass by and noticed. How should this be handled? |

| AI (ChatGPT): The nursing staff should immediately assess the state of consciousness, check respiration and pulse, keep the agedly lying flat, and call emergency services. Monitor vital signs and avoid movement or feeding to prevent asphyxia. |

| AI (Gemini): The priority is to initiate an emergency call. Press the emergency button and communicate briefly and clearly with the agedly to assess the situation. Keep them calm and observe respiration and consciousness until medical staff arrive. Use pen, paper, or gestures to understand needs. |

| AI (WenXinYiYan): If speech is unclear, nursing staff should observe closely and notify the medical team to ensure airway safety and prevent asphyxia. While waiting for the team, soothe the patient, assist with follow-up examinations, and document the event. |

| AI (Geriatric Care LLaMA): If the health status is stable, consider other causes. At 11:30, it may be lunchtime for the agedly. Consider if they made noise due to hunger, and patiently guide communication. Closely monitor their mood and state to understand intentions. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, J.; Gao, J.; Liu, X.; Yang, G.; Liu, J.; Long, Y.; Huang, Z.; Yang, K. CPEL: A Causality-Aware, Parameter-Efficient Learning Framework for Adaptation of Large Language Models with Case Studies in Geriatric Care and Beyond. Mathematics 2025, 13, 2460. https://doi.org/10.3390/math13152460

Xu J, Gao J, Liu X, Yang G, Liu J, Long Y, Huang Z, Yang K. CPEL: A Causality-Aware, Parameter-Efficient Learning Framework for Adaptation of Large Language Models with Case Studies in Geriatric Care and Beyond. Mathematics. 2025; 13(15):2460. https://doi.org/10.3390/math13152460

Chicago/Turabian StyleXu, Jinzhong, Junyi Gao, Xiaoming Liu, Guan Yang, Jie Liu, Yang Long, Ziyue Huang, and Kai Yang. 2025. "CPEL: A Causality-Aware, Parameter-Efficient Learning Framework for Adaptation of Large Language Models with Case Studies in Geriatric Care and Beyond" Mathematics 13, no. 15: 2460. https://doi.org/10.3390/math13152460

APA StyleXu, J., Gao, J., Liu, X., Yang, G., Liu, J., Long, Y., Huang, Z., & Yang, K. (2025). CPEL: A Causality-Aware, Parameter-Efficient Learning Framework for Adaptation of Large Language Models with Case Studies in Geriatric Care and Beyond. Mathematics, 13(15), 2460. https://doi.org/10.3390/math13152460