Abstract

Deep learning (DL), a cutting-edge technology in artificial intelligence, has significantly impacted fields such as computer vision and natural language processing. The loss function determines the convergence speed and accuracy of a DL model and therefore has a crucial impact on algorithm quality and model performance. However, most existing studies focus on improving loss functions for specific problems and lack a systematic summary and comparison, especially across computer vision and natural language processing tasks. Therefore, this paper reclassifies and summarizes the loss functions used in DL and proposes a new category, metric loss. Furthermore, it divides regression loss, classification loss, and metric loss into finer-grained subcategories and elaborates on their existing problems and improvements. Finally, it anticipates emerging trends in compound loss and generative loss. This paper provides a new perspective for dividing loss functions and a systematic reference for researchers in the DL field.

MSC:

68T05; 68T07

1. Introduction

Deep learning (DL) is a multi-layer neural network architecture that adopts an end-to-end learning mechanism to learn data features and patterns [1,2,3]. DL has been widely applied in numerous fields including computer vision and natural language processing [4,5,6].

In DL, the loss function, as the core component of the model, quantifies the deviation between the model’s predicted results and the ground truth (GT) and guides the adjustment of model parameters during optimization [7,8,9,10]. In essence, it is a mathematical tool that maps the prediction error to a non-negative value, thereby providing an objective function for gradient-based optimization [11,12,13]. Formally, let the sample set be $D=\{(x_i, y_i)\}_{i=1}^{N}$, where $x_i$ is the input sample, $y_i$ is the GT, and $N$ is the number of samples, and let the model be $f(x;\theta)$, where $\theta$ denotes the trainable parameters. The loss function $L(\theta)$ is shown in Equation (1):

$$L(\theta)=\frac{1}{N}\sum_{i=1}^{N}\ell\big(f(x_i;\theta),\,y_i\big) \tag{1}$$

where $\ell\big(f(x_i;\theta), y_i\big)$ provides an objective measurement of the model by calculating the deviation between the predicted value $f(x_i;\theta)$ and the GT $y_i$.
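To make Equation (1) concrete, the following is a minimal sketch in plain Python, assuming a scalar parameter $\theta$ and a user-supplied per-sample loss $\ell$. The names `empirical_risk`, `model`, and `per_sample_loss` are hypothetical and chosen only for illustration.

```python
from typing import Callable, Sequence, Tuple


def empirical_risk(
    model: Callable[[float, float], float],
    theta: float,
    data: Sequence[Tuple[float, float]],
    per_sample_loss: Callable[[float, float], float],
) -> float:
    """Average of the per-sample losses l(f(x_i; theta), y_i) over the sample set (Equation (1))."""
    if not data:
        raise ValueError("the sample set must not be empty")
    total = 0.0
    for x_i, y_i in data:
        total += per_sample_loss(model(x_i, theta), y_i)
    return total / len(data)


# Hypothetical example: a linear model f(x; theta) = theta * x with a squared-error l.
linear_model = lambda x, theta: theta * x
squared_error = lambda pred, y: (pred - y) ** 2
samples = [(1.0, 2.0), (2.0, 4.0)]
print(empirical_risk(linear_model, 1.5, samples, squared_error))  # 0.625
print(empirical_risk(linear_model, 2.0, samples, squared_error))  # 0.0
```

Swapping `squared_error` for another per-sample loss changes the training objective without touching the rest of the computation, which is exactly the role Equation (1) assigns to $\ell$.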

From Equation (1), it is clear that the loss function directs the model’s learning and optimization, which makes it a core component of DL. Choosing an inappropriate loss function can degrade the model’s effectiveness [14,15]. The mean squared error (MSE) and cross-entropy (CE) losses, which were proposed early on, laid the foundation for loss functions [10,11]. As task complexity has increased, new functions have been successively proposed, such as adversarial loss, triplet loss, and center loss [16,17,18].

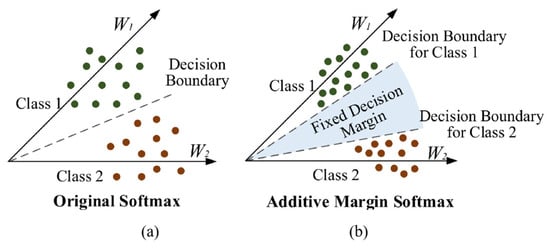

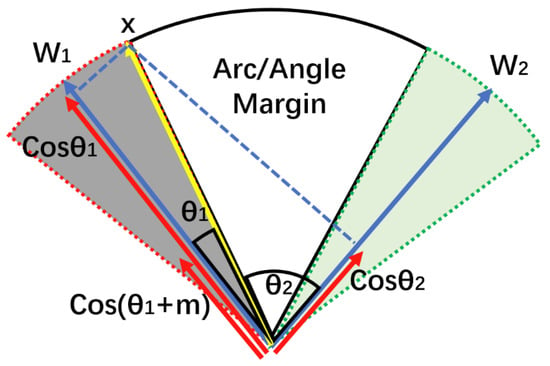

However, most research focuses on improving existing loss functions or constructing novel ones [9]. Traditionally, loss functions are divided into regression loss and classification loss [7,8,9,19]. This division does not consider the relationships between samples, so it is necessary to propose a new loss category, called metric loss. Unlike classification loss and regression loss, which directly minimize the deviation between predicted values and GT, metric loss aims to construct a highly discriminative embedding space through the geometric relationships between samples [20,21,22]. For example, contrastive loss pulls similar samples together in the embedding space and pushes dissimilar samples apart [18], while large-margin softmax increases the inter-class angular margin to enhance the discriminability of the embeddings [23]. Furthermore, regression loss and classification loss compute the gradient of each sample solely from that sample’s continuous or discrete label. In contrast, metric loss must be computed jointly over several samples, since it depends on the similarity relationships within sample pairs. Metric loss should therefore stand alongside regression loss and classification loss, jointly supporting the optimization requirements of DL models in complex tasks.

Therefore, this paper reclassifies and summarizes the loss functions of DL and incorporates metric loss into the division. It also divides regression, classification, and metric loss into finer-grained subcategories and elaborates on the improvement paths of these losses. Finally, it anticipates new trends in loss functions, such as compound loss and generative loss.

The main contributions of the proposed paper are as follows:

- This paper reclassifies and summarizes the loss functions of DL and incorporates metric loss into the division of loss functions.

- This paper makes a more fine-grained division of regression, classification, and metric loss.

- This paper looks forward to new trends in loss functions, including compound loss and generative loss.

The structure of this paper is as follows: this section introduces the main background, the problems with existing surveys, and the main contributions. Section 2 describes the motivation, materials, and methodology; Section 3 introduces regression loss; Section 4 introduces classification loss; Section 5 introduces metric loss; and the final section presents the conclusions and prospects.

2. Motivation, Materials, and Methodology

This research searched three literature databases: Web of Science, ACM Digital Library, and ScienceDirect. The search query used in Web of Science is “TS = (“loss function*” OR “cost function*”) AND TS = (review OR survey) AND PY = (2015–2025).” TS denotes the Topic field, and the publication year (PY) is restricted to 2015–2025. In total, 1023 papers were obtained with this query. Because the results included papers in engineering, automation and control, and other fields, the research field was restricted to computer science, which left 528 papers. A brief browse of these 528 articles showed that most of them are not surveys. Therefore, this study added the condition “DT = (Review)”, which restricts the results to papers whose document type is marked “Review” by Web of Science. The preliminary screening reads only the title and abstract; it excludes papers that are not surveys or whose topic is something else (for example, a general deep learning survey) and includes surveys whose topic is the loss function. Applying these criteria to the resulting 138 articles yielded 6 papers. The secondary screening reads the full text; it excludes loss function surveys limited to a single field, such as image segmentation, and includes loss function surveys covering deep learning in general. Applying these criteria to the 6 articles yielded 2 papers. The inclusion and exclusion criteria of the preliminary and secondary screening are shown in Table 1.

Table 1.

Inclusion criteria and exclusion criteria.

Because the ACM Digital Library has no topic field, we searched across all fields with the query “(“loss function *” OR “cost function *”) AND (“review” OR “survey”)”. The publication year is restricted to 2015–2025. A total of 12477 papers were obtained. As with Web of Science, most of these are not surveys. Therefore, this study restricted the content type to “Review”, which left 533 papers. Applying criteria similar to those in Table 1, the preliminary screening of these 533 articles yielded 2 papers, but none of them satisfied the secondary screening.

In the “Advanced Search” interface of ScienceDirect, we searched the “Title, abstract, keywords” field with the query “(“loss function” or “cost function”) AND (review OR survey)”. A total of 1277 papers were obtained. Because the results included papers in engineering, mathematics, and other fields, the research field was restricted to computer science, which left 335 papers. We then checked “Review article” under “Article type” to ensure that only surveys were retained, which left 72 papers. Applying the criteria in Table 1, the preliminary screening of these 72 papers yielded 3 papers, but none of them satisfied the secondary screening. The search and screening results for the three databases are shown in Table 2.

Table 2.

Search and screening results.

Most existing research on loss functions proposes a novel loss function or improves an existing one, and surveys of loss functions in deep learning are few and not comprehensive. Existing loss function surveys focus on a specific field, such as image segmentation. Jurdi et al. [10] summarized the loss functions for CNN-based semantic segmentation. Similarly, Shruti et al. [24] summarized several well-known loss functions widely used in image segmentation and listed the situations in which they helped the model converge faster. On the other hand, some more comprehensive surveys introduced loss functions in multiple fields. For instance, Tian et al. [11] conducted an in-depth analysis and discussion of the loss functions in image segmentation, face recognition, and object detection within computer vision. However, organizing loss functions by application field fragments the picture of their technological development and tends to miss loss functions that are used across fields.

Meanwhile, other surveys divide loss functions into regression loss and classification loss. Qi et al. [7] summarized and analyzed 31 classic loss functions in deep learning, classifying them into regression and classification losses. Lorenzo et al. [9] classified loss functions in the same way. Jadon et al. [8] summarized 14 regression loss functions commonly used in time-series prediction. Terven et al. [15] conducted a comprehensive survey of loss functions and performance metrics in deep learning, dividing loss functions into regression loss and classification loss, and listed loss functions in the fields of image segmentation, face recognition, and image generation. However, this binary division does not consider the relationships between samples, and contrastive loss and a series of face recognition losses are difficult to fit into either category. Therefore, this paper proposes a new loss category called metric loss, which constructs the loss from the similarity between samples, measured by distance or angle.

Specifically, this study was inspired by existing surveys: it retains some of the loss functions covered in these papers and supplements them with papers searched and screened on Web of Science. Papers [7,8,9,15] summarize regression loss. Among them, paper [15] categorizes MSE, mean absolute error loss (MAE), Huber loss, log-cosh loss, and quantile loss as regression losses. Paper [7] also introduces these 5 regression losses. Paper [8] introduces other regression losses derived from MSE and MAE, such as root mean squared error loss (RMSE) and root mean squared logarithmic error loss (RMSLE). RMSE takes the square root of MSE, while RMSLE applies a logarithm to the predicted value and the GT before computing the error. Paper [9] introduces the above 7 losses, as well as the smooth L1 loss and balanced L1 loss, which are improvements on Huber loss. The smooth L1 loss is a special form of Huber loss used in the R-CNN model, while the balanced L1 loss is an improvement on the smooth L1 loss used for bounding box regression in object detection. Furthermore, MSE and MAE originate from the basic mean bias error loss (MBE), which this study briefly introduces. In essence, these 10 losses are quantity difference losses, which optimize the model by minimizing the point-wise error between the predicted value and the GT.

Regression loss is commonly used for bounding box regression in object detection. However, bounding boxes are geometric shapes rather than single coordinate points. Quantity difference loss penalizes the numerical error of each coordinate independently, which results in poor regression performance for bounding boxes. Therefore, researchers first proposed the Intersection over Union (IoU) loss, which optimizes the model by calculating the overlap between the predicted bounding box and the GT bounding box [25]. Subsequently, many researchers have made improvements based on IoU loss.

This study searched the Web of Science database with the query TS = (“Loss Function” AND “IoU” AND “Bounding Box Regression”) and obtained a total of 86 papers. The final papers were determined through two rounds of screening. The preliminary screening read the titles, keywords, and abstracts and eliminated papers that merely cited other people’s works without proposing new losses, leaving 33 papers. The secondary screening read the full texts carefully and retained 8 papers. During the secondary screening, losses specific to particular scenarios were eliminated because they generalize poorly, and losses with only minor improvements were also eliminated. Because these losses consider the geometric properties of bounding boxes, unlike quantity difference loss, we name them geometric difference loss. The search and screening results for geometric difference loss are shown in the first row of Table 3.

Table 3.

The result of searching and screening in Web of Science.

Correspondingly, papers [7,9,15] summarize classification loss. Paper [15] categorizes binary cross-entropy loss (BCE), categorical cross-entropy loss (CCE), sparse CCE, weighted cross-entropy loss (WCE), label smoothing CE loss, poly loss, and Hinge loss as classification losses. Paper [7] categorizes 0–1 loss, Hinge loss, exponential loss, and Kullback–Leibler divergence loss as classification losses. Paper [9] is more comprehensive, covering 0–1 loss, Hinge loss, and smoothed Hinge loss, as well as BCE and CCE; it also categorizes focal loss, dice loss, and Tversky loss as classification losses. However, losses such as Hinge loss and exponential loss differ from cross-entropy-based losses in their mathematical form. This study categorizes the former as margin loss, which introduces a margin parameter to quantify the difference between the predicted value and the GT. The most typical margin loss is Hinge loss, and its improved variants, namely the smoothed Hinge loss, quadratic smoothed Hinge loss, and modified Huber loss, also belong to margin loss.

Functions such as BCE, CCE, and focal loss are designed to bring the predicted probability distribution closer to the GT distribution; this study names them probability loss. The most typical probability losses are those based on cross-entropy. In this study, BCE, CCE, sparse CCE, WCE, BaCE, and label smoothing CE loss are categorized as probability loss. In addition, focal loss, dice loss, and Tversky loss, as well as their improvements (log-cosh dice loss, generalized dice loss, and focal Tversky loss), are also included in this category. Probability loss also includes the poly loss and Kullback–Leibler divergence loss mentioned in [7,15].

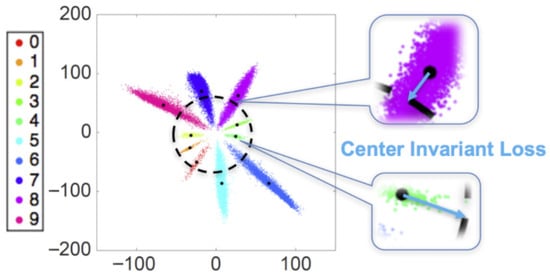

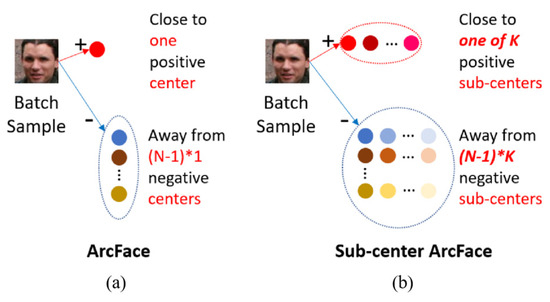

This study proposes metric loss, which constructs an optimization objective from the similarity between samples based on their distance or angular relationship. This study divides metric loss into Euclidean distance loss and angular margin loss. The classic contrastive loss, triplet loss, and center loss naturally belong to Euclidean distance loss. We searched Web of Science with the query TS = (“Center Loss” AND “Improvement”) and obtained a total of 26 papers. The preliminary screening eliminated papers that merely cited others’ improvements, leaving 8 papers. The secondary screening eliminated papers whose methods lack generality, leaving 2 papers. These 2 typical works are range loss [26] and center-invariant loss [27], which can be categorized as Euclidean distance loss. The search and screening results are shown in the second row of Table 3.

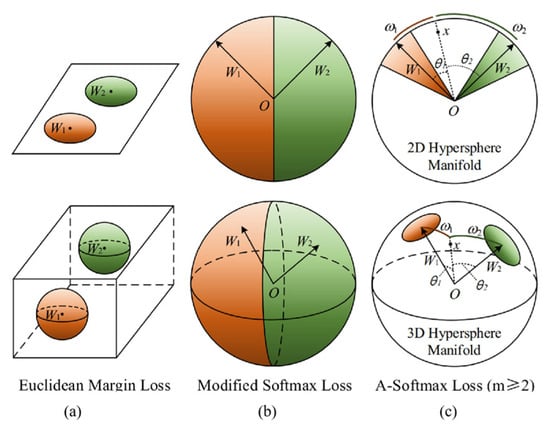

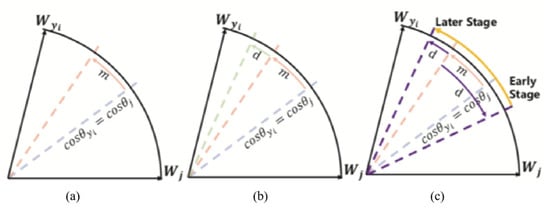

Angular margin loss is an improvement on softmax loss that is commonly used in face recognition. This study searched Web of Science with the query TS = (“Loss Function” AND “Face recognition” AND “Softmax”) and obtained a total of 70 papers. The preliminary screening eliminated articles that were irrelevant to the topic or merely cited existing works, leaving 21 papers. The secondary screening selected the more typical and pioneering papers, leaving 9 papers. The search and screening results for angular margin loss are shown in the third row of Table 3.

3. Regression Loss

A regression model predicts a dependent variable from the values of one or more independent variables [28,29]. Let $f(\mathbf{x};\theta)$ be a regression model governed by parameters $\theta$, which maps independent variables $\mathbf{x}=(x_1,\dots,x_m)$, where $\mathbf{x}\in\mathbb{R}^m$, to a dependent variable $y\in\mathbb{R}$. The model estimates $\theta$ by minimizing the loss function so that its predictions $\hat{y}=f(\mathbf{x};\theta)$ are as close as possible to the GT.

Loss functions for regression tasks are all based on the residual, that is, they are constructed from the difference between the GT $y_i$ and the predicted value $\hat{y}_i$. This section introduces classic quantity difference losses, such as mean squared error loss (MSE) and mean absolute error loss (MAE), and their improvements. Furthermore, it introduces a series of IoU-based losses for bounding box regression in object detection, named geometric difference loss.

3.1. Quantity Difference Loss

Quantity difference loss directly measures the point-wise error between the predicted values and the GT and guides the model to approximate the target values by minimizing this error. Typical representatives include MSE and MAE, which are applicable to general regression tasks.

3.1.1. Mean Bias Error Loss (MBE)

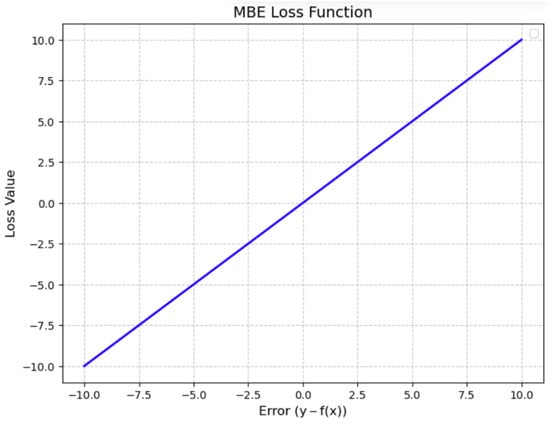

The mean bias error loss (MBE) is a basic numerical loss function [30] that captures the average deviation of the predictions. However, it is rarely used to train regression models because positive errors can offset negative ones, potentially leading to incorrect parameter estimates. It serves as the starting point for the mean absolute error loss and is commonly used to evaluate model performance. The mathematical formula of MBE is shown in Equation (2):

$$\mathrm{MBE}=\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right) \tag{2}$$

where $N$ is the number of samples, $y_i$ is the GT, and $\hat{y}_i$ is the predicted value. The MBE loss function curve is shown in Figure 1.

Figure 1.

The function curve of MBE.
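The cancellation problem described above is easy to reproduce. The sketch below, in plain Python, computes Equation (2) with the residual convention $y_i-\hat{y}_i$ (an assumption; using $\hat{y}_i-y_i$ only flips the sign). The helper name `mbe` is hypothetical.

```python
def mbe(y_true, y_pred):
    """Mean bias error: the average signed residual y_i - y_hat_i (Equation (2))."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(y - p for y, p in zip(y_true, y_pred)) / len(y_true)


# One under-prediction by 2 and one over-prediction by 2 cancel exactly,
# so MBE reports zero bias even though every prediction is wrong.
print(mbe([3.0, 5.0], [1.0, 7.0]))  # 0.0
# Consistent under-prediction shows up as a positive bias.
print(mbe([3.0, 5.0], [1.0, 3.0]))  # 2.0
```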

3.1.2. Mean Absolute Error Loss (MAE)

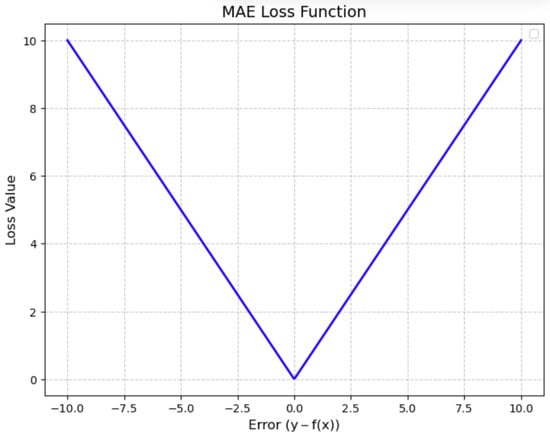

Mean absolute error loss (MAE), also known as L1 loss, is one of the most fundamental loss functions in regression tasks. It measures the average absolute deviation of the predictions [16]. Taking the absolute value overcomes the problem in MBE of positive errors offsetting negative ones. Like MBE, MAE is also used to evaluate model performance. The mathematical formula of MAE is shown in Equation (3):

$$\mathrm{MAE}=\frac{1}{N}\sum_{i=1}^{N}\left|y_i-\hat{y}_i\right| \tag{3}$$

Note that each error contributes linearly to the loss, so every unit of error is weighted equally whether it belongs to a small or a large error; this makes the model less sensitive to outliers. The MAE function curve is shown in Figure 2.

Figure 2.

The function curve of MAE.

Furthermore, Figure 2 shows that when the predicted value equals the GT, the MAE function has no unique tangent. The slope to the left of this point is −1, while it suddenly changes to +1 on the right. The function is therefore non-differentiable at this point and has no single well-defined gradient, so the gradient is discontinuous. The subgradient method allows any value between −1 and 1 to be used as a subgradient at this point, so parameter updates can continue.

Since the residual $e=y_i-\hat{y}_i$ is the basic quantity measured by the loss, we use $e$ in the following content.
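The following plain-Python sketch computes Equation (3) together with one valid subgradient with respect to each prediction $\hat{y}_i$. At zero error it returns a user-chosen value `g0` from $[-1,1]$ (0 by default, an assumption; deep learning frameworks make their own choice). The helper names are hypothetical.

```python
def mae(y_true, y_pred):
    """Mean absolute error (Equation (3))."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def mae_subgradient(y_true, y_pred, g0=0.0):
    """One subgradient of MAE with respect to each prediction y_hat_i.

    Away from zero error, d|y - y_hat|/dy_hat = -sign(y - y_hat).
    At zero error any value in [-1, 1] is a valid subgradient; g0 is used.
    """
    if not -1.0 <= g0 <= 1.0:
        raise ValueError("g0 must lie in [-1, 1]")
    n = len(y_true)
    grads = []
    for y, p in zip(y_true, y_pred):
        r = y - p
        if r > 0:        # under-prediction: increasing y_hat lowers the loss
            grads.append(-1.0 / n)
        elif r < 0:      # over-prediction: decreasing y_hat lowers the loss
            grads.append(1.0 / n)
        else:            # kink of |r|: pick g0 from the subdifferential
            grads.append(g0 / n)
    return grads


print(mae([1.0, 2.0, 3.0], [2.0, 2.0, 1.0]))              # 1.0
print(mae_subgradient([1.0, 2.0, 3.0], [2.0, 2.0, 1.0]))  # [1/3, 0.0, -1/3]
```

Note that the subgradient magnitude is $1/N$ regardless of how large the error is, which is the gradient-level view of MAE's robustness to outliers.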

3.1.3. Mean Squared Error Loss (MSE)

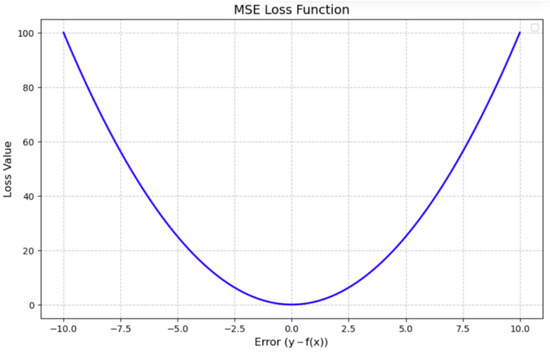

Mean squared error loss (MSE), also known as L2 loss, is the average of the squared differences between the predicted values and the GT [16]. Squaring avoids the cancellation of positive and negative errors. Meanwhile, the squared form magnifies larger errors, making the model pay more attention to correcting them. Furthermore, its derivative is continuous and smooth, which is convenient for gradient descent. The mathematical formula of MSE is shown in Equation (4):

$$\mathrm{MSE}=\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right)^2 \tag{4}$$

However, MSE can be overly sensitive to outliers. Large errors can significantly increase the loss value, making the model prone to overfitting outliers. The function curve of MSE is shown in Figure 3.

Figure 3.

The function curve of MSE.
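To illustrate the outlier sensitivity noted above, this sketch computes Equation (4) and its gradient $\partial\,\mathrm{MSE}/\partial\hat{y}_i=-2(y_i-\hat{y}_i)/N$ on a toy batch in which one prediction is an outlier. The data and helper names are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error (Equation (4))."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def mse_gradient(y_true, y_pred):
    """Gradient of MSE w.r.t. each prediction: -2 (y_i - y_hat_i) / N.

    It is continuous and grows linearly with the residual.
    """
    n = len(y_true)
    return [-2.0 * (y - p) / n for y, p in zip(y_true, y_pred)]


y_true = [0.0, 0.0, 0.0, 0.0]
y_pred = [1.0, 1.0, 1.0, 10.0]  # the last prediction is an outlier
print(mse(y_true, y_pred))           # 25.75; the outlier contributes 100 of the 103 summed squares
print(mse_gradient(y_true, y_pred))  # [0.5, 0.5, 0.5, 5.0]
```

The single outlier dominates both the loss value and the gradient, so each update is pulled mostly toward fitting that one point.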

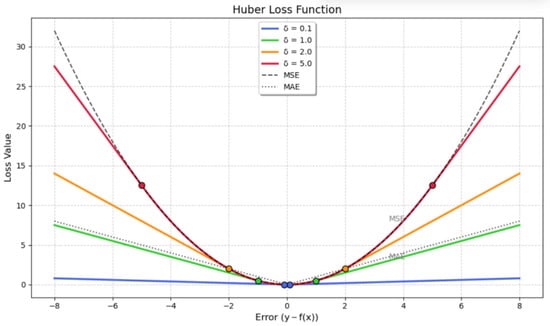

3.1.4. Huber Loss

Huber loss combines the advantages of MSE and MAE by using a threshold $\delta$ to determine which form of loss to use [30]. When the absolute error is less than or equal to $\delta$, the MSE form is used; when it is greater than $\delta$, the MAE form is used. The loss therefore grows linearly when the difference between the model’s predicted values and the GT is large, which makes Huber loss less sensitive to outliers. Conversely, when the error is small, Huber loss follows MSE, which speeds up convergence and keeps the loss differentiable at 0. The threshold $\delta$ thus enhances the model’s robustness to outliers.

The mathematical formula of Huber loss is shown in Equation (5):

$$L_{\delta}(e)=\begin{cases}\dfrac{1}{2}e^{2}, & |e|\le\delta\\[4pt] \delta\left(|e|-\dfrac{1}{2}\delta\right), & |e|>\delta\end{cases} \tag{5}$$

where $e=y_i-\hat{y}_i$ is the error. The choice of $\delta$ is crucial, and it may be adjusted during training based on the results. The Huber loss function curve is shown in Figure 4, which shows four cases with $\delta$ = 0.1, 1.0, 2.0, and 5.0. The marked points are the junctions where $|e|=\delta$, i.e., where the quadratic and linear segments meet. The two gray curves are MAE and MSE.

Figure 4.

The function curve of Huber loss.
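A minimal plain-Python sketch of Equation (5), applied to a single residual $e$ and averaged over a batch. The helper names and the default $\delta=1.0$ are illustrative assumptions.

```python
def huber(e, delta=1.0):
    """Huber loss of one residual e (Equation (5))."""
    if delta <= 0:
        raise ValueError("delta must be positive")
    a = abs(e)
    if a <= delta:
        return 0.5 * e * e              # quadratic (MSE-like) region
    return delta * (a - 0.5 * delta)    # linear (MAE-like) region with slope delta


def huber_mean(y_true, y_pred, delta=1.0):
    """Average Huber loss over a batch."""
    return sum(huber(y - p, delta) for y, p in zip(y_true, y_pred)) / len(y_true)


print(huber(0.5))             # 0.125
print(huber(3.0))             # 2.5
print(huber(2.0, delta=2.0))  # 2.0 (both branches agree at |e| = delta)
```

Because the linear branch has slope $\delta$, no single residual can contribute a gradient larger than $\delta$ in magnitude, which is where the robustness to outliers comes from.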

3.1.5. Smooth L1 Loss

Smooth L1 loss (also known as Huber-like loss) is a special case of Huber loss obtained when $\delta=1$. Smooth L1 loss was originally applied in the R-CNN framework [31], where it is less sensitive to outliers than MSE while remaining differentiable at 0. For the regression of a single coordinate, its mathematical formula is shown in Equation (6):

$$\mathrm{smooth}_{L_1}(t,\hat{t})=\begin{cases}\dfrac{0.5\,(t-\hat{t})^{2}}{\beta}, & |t-\hat{t}|<\beta\\[4pt] |t-\hat{t}|-0.5\,\beta, & |t-\hat{t}|\ge\beta\end{cases} \tag{6}$$

where $t$ is the true coordinate, $\hat{t}$ is the predicted coordinate, and $\beta$ is the threshold that controls the transition between the squared and linear error components.

When $|t-\hat{t}|<\beta$, the loss adopts the squared error form, making it smoother and more optimization-friendly for small errors; when $|t-\hat{t}|\ge\beta$, it switches to the linear error form, providing more stability for large errors and preventing the sharp increase in loss that occurs with traditional MSE. When $\beta=1$, Equation (6) reduces to the original smooth L1 loss, which coincides with Huber loss with $\delta=1$.

The smooth L1 loss function curve is illustrated by the green line in Figure 4.
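The sketch below implements Equation (6) for a single coordinate and sums it over the four coordinates of a box, which is one common way to apply it in box regression (an assumption here; detectors differ in how they parameterize and normalize the coordinates). The helper names are hypothetical.

```python
def smooth_l1(t, t_hat, beta=1.0):
    """Smooth L1 loss of one coordinate (Equation (6)); beta > 0 is the transition point."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    x = t - t_hat
    a = abs(x)
    if a < beta:
        return 0.5 * x * x / beta   # squared branch for small errors
    return a - 0.5 * beta           # linear branch for large errors


def smooth_l1_box(box, box_hat, beta=1.0):
    """Sum of per-coordinate smooth L1 losses over the four box coordinates."""
    return sum(smooth_l1(t, t_hat, beta) for t, t_hat in zip(box, box_hat))


print(smooth_l1(1.0, 0.5))  # 0.125
print(smooth_l1(3.0, 1.0))  # 1.5
print(smooth_l1_box([0.0, 0.0, 1.0, 1.0], [0.5, 0.0, 1.0, 3.0]))  # 1.625
```

Dividing by $\beta$ in the squared branch keeps the two branches continuous at $|t-\hat{t}|=\beta$; multiplying the whole expression by $\beta$ gives Huber loss with $\delta=\beta$.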

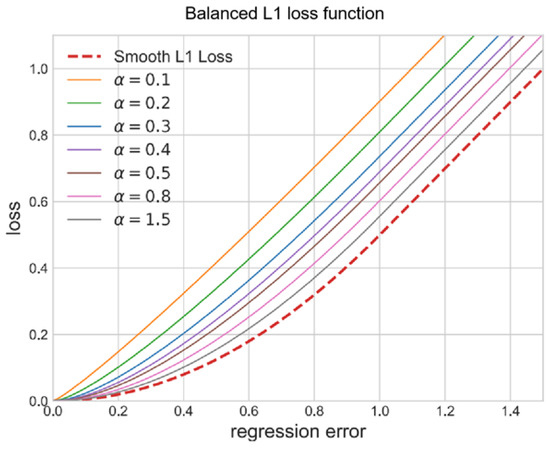

3.1.6. Balanced L1 Loss

Balanced L1 loss is an enhanced version of the standard L1 loss for bounding box regression in object detection. It aims to address the limitations of smooth L1 loss in handling sample imbalance [32]. The key idea is to introduce an inflection point that separates inliers from outliers: a logarithmic function smooths the loss gradients of inliers, while a linear function limits the gradient impact of outliers. This balances the contributions of easy and hard samples and improves training effectiveness. The mathematical formula of balanced L1 loss is shown in Equation (7):

$$L_b(x)=\begin{cases}\dfrac{\alpha}{b}\left(b|x|+1\right)\ln\left(b|x|+1\right)-\alpha|x|, & |x|<1\\[4pt] \gamma|x|+C, & |x|\ge1\end{cases} \tag{7}$$

where $x$ is the regression error; $\alpha$ controls the gradient boost for inliers, and a smaller $\alpha$ enhances the gradient for inliers without affecting the gradient for outliers; $\gamma$ limits the maximum gradient for outliers; $b$ is a constant determined by $\alpha$ and $\gamma$, which must satisfy $\alpha\ln(b+1)=\gamma$; and $C$ is a constant term that ensures the function is continuous at $|x|=1$. The function curve of balanced L1 loss is shown in Figure 5.

Figure 5.

The function curve of balanced L1 loss with different α.
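Equation (7) can be sketched in plain Python as follows. The sketch derives $b$ from the constraint $\alpha\ln(b+1)=\gamma$ and sets $C=\gamma/b-\alpha$, which is the value that makes the two branches meet at $|x|=1$ under that constraint. The defaults $\alpha=0.5$ and $\gamma=1.5$ and the helper name are illustrative assumptions.

```python
import math


def balanced_l1(x, alpha=0.5, gamma=1.5):
    """Balanced L1 loss of a regression error x (Equation (7))."""
    b = math.exp(gamma / alpha) - 1.0   # solves alpha * ln(b + 1) = gamma
    a = abs(x)
    if a < 1.0:
        # Inlier branch: its gradient alpha * ln(b|x| + 1) is boosted for small errors.
        return alpha / b * (b * a + 1.0) * math.log(b * a + 1.0) - alpha * a
    # Outlier branch: constant gradient gamma; C = gamma / b - alpha keeps the loss continuous.
    c = gamma / b - alpha
    return gamma * a + c


for err in (0.0, 0.1, 0.5, 1.0, 2.0):
    print(err, round(balanced_l1(err), 4))
```

Differentiating the inlier branch gives $\alpha\ln(b|x|+1)$, which equals $\gamma$ at $|x|=1$, so the gradient is continuous as well as the loss.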

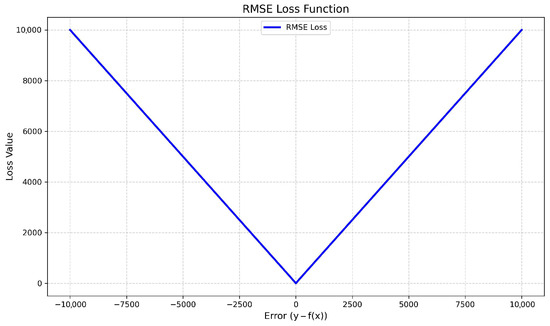

3.1.7. Root Mean Squared Error Loss (RMSE)

Root mean squared error loss (RMSE) is the square root of MSE [16]. Because it is expressed in the same unit as the target, it measures the typical magnitude of the errors. The square root compresses the loss value: when MSE exceeds 1, RMSE is smaller than MSE, so large errors inflate RMSE less than they inflate MSE. The mathematical formula of RMSE is shown in Equation (8):

$$\mathrm{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right)^2} \tag{8}$$

However, RMSE grows linearly with the error magnitude (for a single sample it reduces to $|y_i-\hat{y}_i|$), so, like MAE, its gradient changes abruptly near the minimum. The function curve of RMSE is shown in Figure 6.

Figure 6.

The function curve of RMSE.
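The sketch below computes Equation (8) in plain Python; with a single sample it returns $|y_i-\hat{y}_i|$, which shows the linear growth mentioned above. The helper name is hypothetical.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error (Equation (8)), in the same unit as the target."""
    mse = sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


print(rmse([3.0], [5.0]))            # 2.0, i.e. |e| for a single sample
print(rmse([0.0, 0.0], [3.0, 4.0]))  # sqrt(12.5)
```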

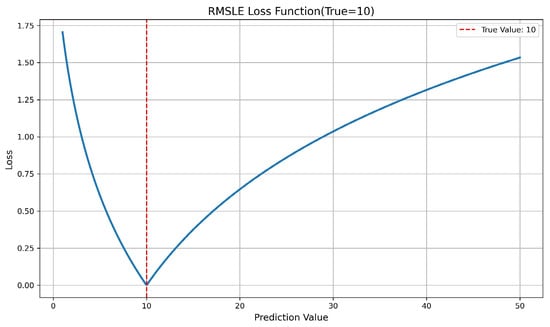

3.1.8. Root Mean Squared Logarithmic Error Loss (RMSLE)

Root mean squared logarithmic error loss (RMSLE) applies a logarithmic transformation before taking the difference between the predicted value and the GT. It is suitable for scenarios where the data have a skewed distribution or contain outliers [33]. The logarithm compresses the numerical range of large errors and reduces the impact of outliers on the overall error. Furthermore, it penalizes errors asymmetrically: for the same absolute error, the penalty is more severe when the predicted value is lower than the GT and lighter when it is higher. Its mathematical formula is shown in Equation (9):

$$\mathrm{RMSLE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\log\left(y_i+1\right)-\log\left(\hat{y}_i+1\right)\right)^2} \tag{9}$$

where 1 is added before the logarithm to avoid taking the logarithm of 0, which may occur when the GT or the predicted value is 0.

The function curve of RMSLE is shown in Figure 7. The GT is fixed at 10, marked with a dashed red line, while the horizontal axis represents the predicted value.

Figure 7.

The function curve of RMSLE.
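The asymmetry can be checked numerically with the following plain-Python sketch of Equation (9), using the same setting as Figure 7 (GT fixed at 10). `math.log1p(v)` computes $\log(1+v)$ accurately and matches the +1 shift in the formula; the helper name is hypothetical.

```python
import math


def rmsle(y_true, y_pred):
    """Root mean squared logarithmic error (Equation (9)); values must be > -1."""
    sq = [(math.log1p(y) - math.log1p(p)) ** 2 for y, p in zip(y_true, y_pred)]
    return math.sqrt(sum(sq) / len(sq))


# GT fixed at 10: an under-prediction and an over-prediction,
# both with the same absolute error of 5.
under = rmsle([10.0], [5.0])   # |log(11) - log(6)|  ~ 0.606
over = rmsle([10.0], [15.0])   # |log(11) - log(16)| ~ 0.375
print(under > over)            # True
```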

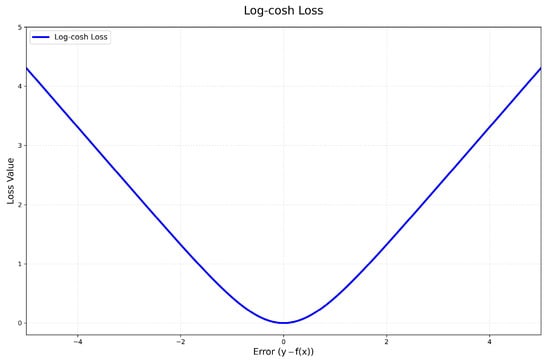

3.1.9. Log-Cosh Loss

The core idea of log-cosh loss is to pass the prediction errors through the hyperbolic cosine function and then take the logarithm of the result as the loss value [34]. It serves a similar purpose to MSE but is less affected by large prediction errors. The mathematical formula of log-cosh loss is shown in Equation (10):

$$L_{\text{log-cosh}}=\frac{1}{N}\sum_{i=1}^{N}\log\left(\cosh\left(\hat{y}_i-y_i\right)\right) \tag{10}$$

where $\cosh(x)=\dfrac{\exp(x)+\exp(-x)}{2}$ is the hyperbolic cosine function.

When the error $e=y_i-\hat{y}_i$ is small ($|e|\ll1$), the loss is approximately $\dfrac{e^{2}}{2}$, which is similar to MSE and emphasizes precise fitting. When the error is large ($|e|\gg1$), the loss is approximately $|e|-\log 2$, which is similar to MAE and reduces sensitivity to outliers. Log-cosh loss thus combines the smoothness of MSE with the robustness of MAE to outliers, avoiding excessive punishment of large errors while keeping the loss function easy to optimize. The function curve of log-cosh loss is shown in Figure 8.

Figure 8.

The function curve of log-cosh loss.
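A direct implementation of Equation (10) overflows for large errors because $\cosh$ grows exponentially. The plain-Python sketch below uses the identity $\log\cosh(e)=|e|+\log\left(1+\exp(-2|e|)\right)-\log 2$, which is exact and also makes the large-error approximation $|e|-\log 2$ visible. The helper names are hypothetical.

```python
import math


def log_cosh(e):
    """log(cosh(e)) evaluated without overflow.

    Uses log(cosh(e)) = |e| + log(1 + exp(-2|e|)) - log(2), which avoids
    computing cosh(e) itself (math.cosh overflows for |e| above about 710).
    """
    a = abs(e)
    return a + math.log1p(math.exp(-2.0 * a)) - math.log(2.0)


def log_cosh_loss(y_true, y_pred):
    """Average log-cosh loss over a batch (Equation (10))."""
    return sum(log_cosh(p - y) for y, p in zip(y_true, y_pred)) / len(y_true)


print(log_cosh(1e-3))    # close to e**2 / 2 = 5e-07 (MSE-like regime)
print(log_cosh(1000.0))  # close to 1000 - log(2) (MAE-like regime)
```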

3.1.10. Quantile Loss

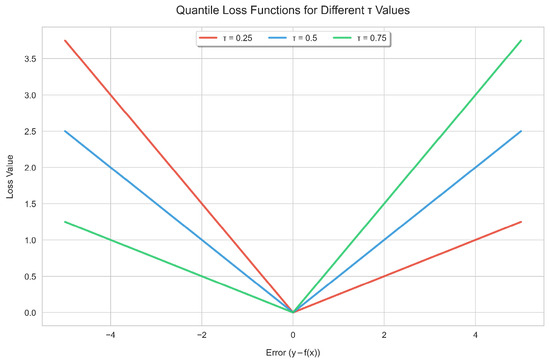

Quantile loss is designed to predict specific quantiles of the target variable (such as the median or the 0.25 quantile) rather than its mean [35]. It assigns different penalty weights depending on the direction of the deviation between the predicted value and the GT. The mathematical formula of quantile loss is shown in Equation (11):

$$L_{\tau}=\frac{1}{N}\sum_{i=1}^{N}\left[\tau\max\left(y_i-\hat{y}_i,0\right)+\left(1-\tau\right)\max\left(\hat{y}_i-y_i,0\right)\right] \tag{11}$$

where $\tau\in(0,1)$ is the target quantile, such as $\tau=0.5$ for the median. When the predicted value is lower than the GT, the loss weight is $\tau$; otherwise, it is $1-\tau$.

This asymmetry lets the model flexibly shift its focus between overestimation and underestimation. In the case of underestimation, only the first term of the formula is nonzero, and in the case of overestimation, only the second term is nonzero. Over-prediction and under-prediction are therefore penalized differently according to the chosen quantile. The function curves of quantile loss for different $\tau$ values are shown in Figure 9, with $\tau$ = 0.25, 0.5, and 0.75 as examples.

Figure 9.

The function curve of quantile loss.
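A minimal plain-Python sketch of Equation (11), often called the pinball loss. The helper name and the example values are illustrative assumptions.

```python
def quantile_loss(y_true, y_pred, tau=0.5):
    """Quantile (pinball) loss, Equation (11).

    Under-prediction (y > y_hat) is weighted by tau and over-prediction by 1 - tau.
    """
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie in (0, 1)")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        r = y - p
        # For r < 0, (tau - 1) * r equals (1 - tau) * |r|.
        total += tau * r if r >= 0 else (tau - 1.0) * r
    return total / len(y_true)


# With tau = 0.9, under-predicting by 2 costs 9 times more than over-predicting by 2.
print(quantile_loss([10.0], [8.0], tau=0.9))   # about 1.8
print(quantile_loss([10.0], [12.0], tau=0.9))  # about 0.2
```

With $\tau=0.5$ both directions get weight 0.5, so the loss is half of MAE and its minimizer is the median.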

3.2. Geometric Difference Loss

In object detection, the goal of bounding box regression is to geometrically align the predicted bounding box with the GT bounding box. Quantity difference loss penalizes the numerical deviation of each coordinate independently, ignoring the inherent geometric properties of the box. Therefore, researchers have proposed geometric difference loss [25]. This loss directly optimizes the Intersection over Union (IoU) between the predicted box and the GT box, which is also the core metric for evaluating detection accuracy. Geometric difference loss no longer treats the coordinates in isolation but considers them as a whole geometric entity, optimizing the geometric relationship between the predicted box and the GT box.

3.2.1. Intersection over Union Loss

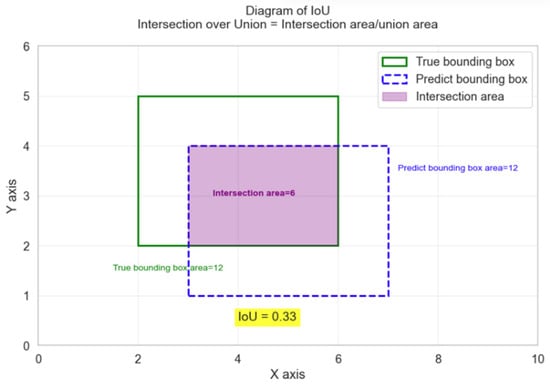

Intersection over Union (IoU) is a metric used in object detection to measure the overlap between two bounding boxes [36]. The mathematical formula of IoU is shown in Equation (12):

$$\mathrm{IoU}=\frac{\left|B\cap B^{gt}\right|}{\left|B\cup B^{gt}\right|} \tag{12}$$

where $B$ is the predicted bounding box and $B^{gt}$ is the GT bounding box; the intersection $|B\cap B^{gt}|$ is the area of the overlapping part of $B$ and $B^{gt}$, and the union $|B\cup B^{gt}|$ is the total area covered by $B$ and $B^{gt}$. The schematic diagram of IoU is shown in Figure 10.

Figure 10.

The schematic diagram of IoU.

IoU loss is widely used in object detection and image segmentation tasks. It optimizes the model according to the degree of overlap between the area covered by the predicted bounding box and that covered by the GT bounding box [25]. The IoU-based loss function is shown in Equation (13):

$$L_{IoU}=1-\mathrm{IoU} \tag{13}$$

This function encourages the predicted bounding box to overlap highly with the GT bounding box. A smaller IoU loss value means that the predicted box is closer to the GT box, and a larger IoU loss value means that the predicted bounding box is farther from the GT bounding box. IoU loss is commonly used in single-stage detectors as part of a multi-task loss function that also includes a classification loss [31,37].

However, IoU loss has some limitations. When the predicted box and the GT box do not overlap, IoU is 0 regardless of how far apart the boxes are, so the loss cannot provide gradient information, which makes optimization difficult. In addition, when one box completely contains the other, IoU loss cannot distinguish differences in the relative position of the two boxes. When the boxes have irregular shapes and sizes, IoU cannot effectively reflect their relative positions. As a result, many loss functions that improve on IoU loss have emerged.
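The sketch below computes Equations (12) and (13) for axis-aligned boxes in the (x1, y1, x2, y2) corner format (an assumed convention) and reproduces both limitations just described: the loss is stuck at 1 for any non-overlapping pair, and two different positions inside a larger GT box give the same IoU. The helper names are hypothetical.

```python
def box_area(box):
    """Area of an axis-aligned box (x1, y1, x2, y2); degenerate boxes have area 0."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def iou(b, b_gt):
    """IoU of a predicted box b and a GT box b_gt (Equation (12)).

    Assumes x1 < x2 and y1 < y2 for both boxes, so the union is positive.
    """
    inter_w = max(0.0, min(b[2], b_gt[2]) - max(b[0], b_gt[0]))
    inter_h = max(0.0, min(b[3], b_gt[3]) - max(b[1], b_gt[1]))
    inter = inter_w * inter_h
    union = box_area(b) + box_area(b_gt) - inter
    return inter / union


def iou_loss(b, b_gt):
    """IoU loss (Equation (13)): 1 - IoU."""
    return 1.0 - iou(b, b_gt)


gt = (0.0, 0.0, 1.0, 1.0)
print(iou_loss((2.0, 2.0, 3.0, 3.0), gt))  # 1.0
print(iou_loss((5.0, 5.0, 6.0, 6.0), gt))  # 1.0: farther away, same loss, no gradient
big_gt = (0.0, 0.0, 4.0, 4.0)
print(iou((0.0, 0.0, 1.0, 1.0), big_gt), iou((3.0, 3.0, 4.0, 4.0), big_gt))  # 0.0625 0.0625
```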

3.2.2. Generalized IoU (GIoU) Loss

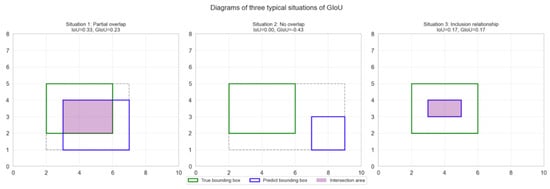

Generalized IoU (GIoU) loss solves the vanishing gradient problem of IoU loss for non-overlapping boxes by introducing the minimum enclosing rectangle, so that the loss function reflects the difference between the predicted box and the GT box more comprehensively [38]. The mathematical formula of GIoU loss is shown in Equation (14):

$$L_{GIoU}=1-\mathrm{IoU}+\frac{\left|C\setminus\left(B\cup B^{gt}\right)\right|}{\left|C\right|} \tag{14}$$

where $C$ is the minimum enclosing rectangle that covers both $B$ and $B^{gt}$, and $|C\setminus(B\cup B^{gt})|$ is the area of the part of $C$ not covered by $B$ or $B^{gt}$.

Compared with the standard IoU loss, the GIoU loss provides meaningful gradients even when the two bounding boxes do not overlap at all, whereas IoU is then 0 and the IoU loss stays constant.

However, GIoU loss has a drawback in that it does not consider the aspect ratio differences between bounding boxes. This leads to situations where two boxes have a high IoU but significantly different aspect ratios, resulting in suboptimal bounding box regression, especially when objects have different shapes.

Figure 11 illustrates the differences between GIoU and IoU in various scenarios. In Figure 11, the green line indicates the GT box, the blue line indicates the predicted box, and the purple region shows the overlapping area. The gray dashed line denotes the enclosing box. The figure gives the IoU and GIoU values in three scenarios: partial overlap, non-overlap, and complete overlap. When the predicted box and the GT box do not overlap, IoU is 0, but GIoU still takes a distance-dependent value and can therefore provide gradients. Conversely, when the predicted box contains the GT box or vice versa, GIoU degenerates to IoU.

Figure 11.

The differences between GIoU and IoU in various scenarios.
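The plain-Python sketch below computes Equation (14) for boxes in the assumed (x1, y1, x2, y2) format and reproduces two of the scenarios in Figure 11: for non-overlapping boxes the loss grows with distance, while for a box inside a larger box the enclosing rectangle equals the larger box and GIoU loss collapses to IoU loss. The helper name is hypothetical.

```python
def giou_loss(b, b_gt):
    """GIoU loss (Equation (14)) for boxes given as (x1, y1, x2, y2)."""
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    area_gt = (b_gt[2] - b_gt[0]) * (b_gt[3] - b_gt[1])
    inter_w = max(0.0, min(b[2], b_gt[2]) - max(b[0], b_gt[0]))
    inter_h = max(0.0, min(b[3], b_gt[3]) - max(b[1], b_gt[1]))
    inter = inter_w * inter_h
    union = area_b + area_gt - inter
    # C: the smallest axis-aligned rectangle enclosing both boxes.
    c_w = max(b[2], b_gt[2]) - min(b[0], b_gt[0])
    c_h = max(b[3], b_gt[3]) - min(b[1], b_gt[1])
    c_area = c_w * c_h
    return 1.0 - inter / union + (c_area - union) / c_area


gt = (0.0, 0.0, 1.0, 1.0)
print(giou_loss((2.0, 2.0, 3.0, 3.0), gt))  # about 1.778
print(giou_loss((5.0, 5.0, 6.0, 6.0), gt))  # about 1.944: grows with distance
big_gt = (0.0, 0.0, 4.0, 4.0)
print(giou_loss((0.0, 0.0, 1.0, 1.0), big_gt), giou_loss((3.0, 3.0, 4.0, 4.0), big_gt))  # 0.9375 0.9375
```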

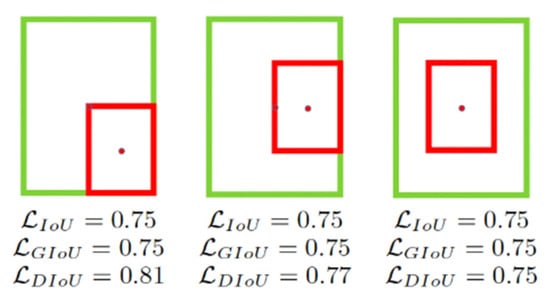

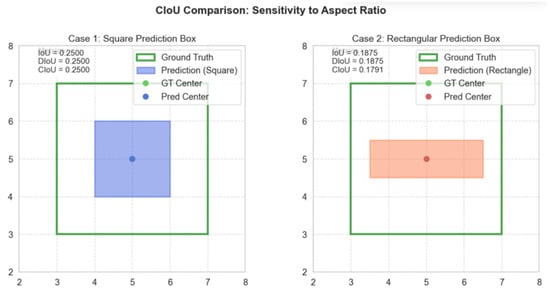

3.2.3. Distance IoU (DIoU) Loss and Complete IoU (CIoU) Loss

To address the limitations of GIoU loss, the distance IoU (DIoU) loss adds a penalty based on the distance between the center points of the predicted box and the GT box, normalized by the diagonal length of their minimum enclosing rectangle [39]. Even when the GT box contains the predicted box, DIoU loss still directly measures the distance between the two boxes, which makes convergence faster. DIoU loss is defined in Equation (15):

$$L_{DIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} \tag{15}$$

where $\rho(b, b^{gt})$ is the Euclidean distance between the center $b$ of the predicted box and the center $b^{gt}$ of the GT box, and $c$ is the diagonal length of the minimum bounding rectangle enclosing both boxes.

By incorporating the distance term, DIoU loss reflects the spatial relationship between the predicted box and the GT box. This is particularly useful when one box contains the other, because DIoU loss can still distinguish between such configurations. However, DIoU loss only optimizes the center distance and the overlapping area and does not consider the aspect ratio of the predicted box.

Figure 12 shows the losses of IoU, GIoU, and DIoU when the GT box contains the predicted box. In this case, DIoU can distinguish between the two configurations because it considers the center distance, while GIoU and IoU cannot.

Figure 12.

Comparison of IoU loss, GIoU loss, and DIoU loss.

The same work [39] also proposed the complete IoU (CIoU) loss, which further improves DIoU loss by introducing an aspect-ratio constraint, since DIoU loss ignores the aspect ratio [40]. CIoU loss adds a penalty term to DIoU loss, as defined in Equation (16):

$$L_{CIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v \tag{16}$$

where $v$ is the aspect-ratio penalty term, which measures the aspect-ratio difference between the predicted box and the GT box, and $\alpha$ is a positive trade-off factor that controls the weight of the aspect-ratio penalty. The mathematical formula of $v$ is shown in Equation (17):

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2 \tag{17}$$

where $w^{gt}$ and $h^{gt}$ are the width and height of the GT box, and $w$ and $h$ are the width and height of the predicted box.

In Equation (17), $v$ measures the shape difference between the predicted box and the GT box. Because it quantifies the aspect-ratio deviation through the difference of arctangent angles, it overcomes the limitation that DIoU cannot distinguish different shapes when the centers coincide. The greater the difference in aspect ratio between the predicted box and the GT box, the larger $v$ and the stronger the penalty. The mathematical formula of $\alpha$ is shown in Equation (18):

$$\alpha = \frac{v}{(1 - IoU) + v} \tag{18}$$

Here, $\alpha$ dynamically adjusts the contribution of the aspect-ratio term. In the early stage of training, when the IoU is low, $\alpha$ is small and the center distance is optimized first; later, as the IoU approaches 1, $\alpha$ grows and the focus shifts to adjusting the aspect ratio. Figure 13 shows a schematic diagram of CIoU for predicted boxes with different aspect ratios: CIoU is sensitive to the aspect ratio, while IoU and DIoU are not.
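The sketch below evaluates Equations (15) to (18) for a single pair of axis-aligned boxes in the same hypothetical `(x1, y1, x2, y2)` format. The small `eps` guard against division by zero is an added assumption; a training implementation would use a tensor library so that gradients flow through every term.

```python
import math

def _iou(pred, gt):
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_g = (gt[2] - gt[0]) * (gt[3] - gt[1])
    return inter / (area_p + area_g - inter)

def diou_ciou_loss(pred, gt, eps=1e-9):
    """Return (DIoU loss, CIoU loss) following Equations (15)-(18)."""
    iou = _iou(pred, gt)
    # rho^2: squared distance between the two box centers.
    pcx, pcy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    rho2 = (pcx - gcx) ** 2 + (pcy - gcy) ** 2
    # c^2: squared diagonal of the minimum enclosing rectangle.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    l_diou = 1.0 - iou + rho2 / c2
    # v (Eq. 17): aspect-ratio discrepancy measured through arctan.
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    # alpha (Eq. 18): small while IoU is low, approaching 1 as IoU -> 1.
    alpha = v / ((1.0 - iou) + v + eps)
    return l_diou, l_diou + alpha * v

gt = (0.0, 0.0, 4.0, 4.0)
print(diou_ciou_loss((1.0, 1.0, 3.0, 3.0), gt))  # centered inside the GT box
print(diou_ciou_loss((0.0, 0.0, 2.0, 2.0), gt))  # same IoU, shifted to a corner
print(diou_ciou_loss((0.0, 1.0, 4.0, 3.0), gt))  # same center, different aspect ratio
```

The first two predictions have the same IoU (0.25), so IoU and GIoU cannot separate them, but the DIoU loss is larger for the off-center box. The third prediction shares the GT center, so its center term is zero and only the CIoU aspect-ratio term distinguishes it.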

Figure 13.

Comparison of CIoU’s sensitivity to aspect ratio.

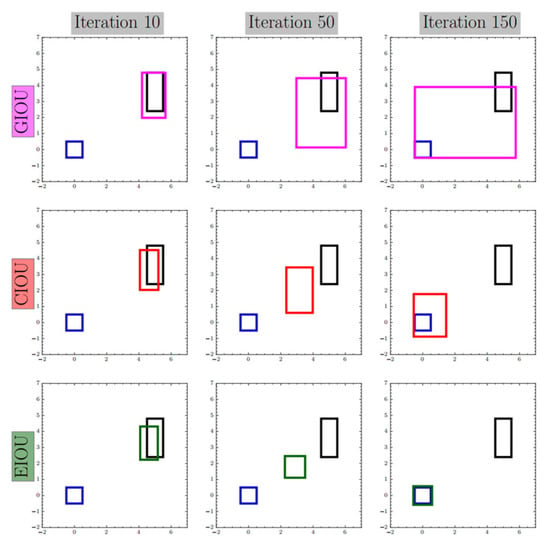

3.2.4. Efficient IoU (EIoU) Loss

Efficient IoU (EIoU) loss builds on the penalty term of CIoU loss. Instead of a single aspect-ratio term, it penalizes the width difference and the height difference between the predicted box and the GT box separately, and it is further combined with focal loss to focus on high-quality bounding boxes, which addresses the problems of CIoU and accelerates convergence [41]. The mathematical formula of EIoU loss is shown in Equation (19):

$$L_{EIoU} = L_{IoU} + L_{dis} + L_{asp} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \frac{\rho^2(w, w^{gt})}{C_w^2} + \frac{\rho^2(h, h^{gt})}{C_h^2} \tag{19}$$

where $L_{IoU} = 1 - IoU$ is the standard IoU loss; $L_{dis} = \rho^2(b, b^{gt})/c^2$ is the center-distance loss, in which $\rho^2(b, b^{gt})$ is the squared Euclidean distance between the center points of the predicted box and the GT box and $c$ is the diagonal length of the minimum enclosing box; and $L_{asp} = \rho^2(w, w^{gt})/C_w^2 + \rho^2(h, h^{gt})/C_h^2$ is the aspect loss, which measures the shape difference between the two boxes, in which $\rho^2(w, w^{gt})$ and $\rho^2(h, h^{gt})$ are the squared differences in width and height between the predicted box and the GT box, and $C_w$ and $C_h$ are the width and height of the minimum enclosing box.

Furthermore, EIoU loss directly minimizes the differences in width and height between the GT box and the predicted box, thereby achieving faster convergence and better localization. By optimizing width and height independently, EIoU loss avoids the gradient oscillation caused by the arctan function in CIoU loss. As shown in Figure 14, EIoU makes the width and height of the predicted box approach those of the GT box quickly.
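A minimal Python sketch of Equation (19), again assuming hypothetical `(x1, y1, x2, y2)` boxes, shows how the separate width and height terms react to a pure scale mismatch. The focal weighting mentioned above is deliberately left out, and `eps` is an added numerical guard.

```python
def eiou_loss(pred, gt, eps=1e-9):
    """EIoU loss of Equation (19) for boxes given as (x1, y1, x2, y2)."""
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    # IoU term.
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    iou = inter / (w * h + wg * hg - inter)
    # C_w and C_h: width and height of the minimum enclosing box.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    # L_dis: squared center distance over the squared enclosing diagonal c^2.
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    l_dis = (dx ** 2 + dy ** 2) / (cw ** 2 + ch ** 2 + eps)
    # L_asp: width and height differences, each normalized separately.
    l_asp = (w - wg) ** 2 / (cw ** 2 + eps) + (h - hg) ** 2 / (ch ** 2 + eps)
    return 1.0 - iou + l_dis + l_asp

gt = (0.0, 0.0, 4.0, 4.0)
print(eiou_loss((1.0, 1.0, 3.0, 3.0), gt))  # same center and aspect ratio, half the size
print(eiou_loss((0.5, 0.5, 3.5, 3.5), gt))  # same center and aspect ratio, closer in size
```

Both predictions share the GT center and aspect ratio, so the CIoU penalty terms vanish for them, while the EIoU width and height terms still decrease as the predicted size approaches the GT size.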

Figure 14.

Comparison of iteration speeds among GIoU loss, CIoU loss, and EIoU loss.

3.2.5. SIoU Loss

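Existing IoU-based losses mainly consider the distance, the overlapping area, and the aspect ratio, but ignore the direction of the mismatch between the predicted box and the GT box. This directional deviation slows the convergence of the predicted box during training and affects model accuracy. SIoU introduces a direction-aware penalty term that guides the predicted box to approach the GT box quickly, preferentially along the nearest coordinate axis [42]. The SIoU loss consists of four parts: angle cost, distance cost, shape cost, and IoU cost. The mathematical formula for SIoU loss is shown in Equation (20):

$$L_{SIoU} = 1 - IoU + \frac{\Delta + \Omega}{2} \tag{20}$$

where $\Delta$ is the distance cost, which measures the distance between the center points of the predicted box and the GT box and incorporates the angle cost; and $\Omega$ is the shape cost, which measures the width and height differences between the predicted box and the GT box and strengthens the constraint on shape similarity.

The mathematical formula for distance cost is shown in Equation (21):

$$\Delta = \sum_{t = x, y}\left(1 - e^{-\gamma \rho_t}\right), \qquad \rho_x = \left(\frac{b^{gt}_{c_x} - b_{c_x}}{c_w}\right)^2, \qquad \rho_y = \left(\frac{b^{gt}_{c_y} - b_{c_y}}{c_h}\right)^2, \qquad \gamma = 2 - \Lambda \tag{21}$$

where $\gamma$ is the scaling factor derived from the angle cost $\Lambda$; $c_w$ and $c_h$ are the width and height of the minimum enclosing box of the two boxes; and $b^{gt}_{c_x} - b_{c_x}$ and $b^{gt}_{c_y} - b_{c_y}$ are the distances between the centers of the GT box and the predicted box along the x-axis and the y-axis. The mathematical formula for shape cost is shown in Equation (22):

$$\Omega = \sum_{t = w, h}\left(1 - e^{-\omega_t}\right)^{\theta}, \qquad \omega_w = \frac{|w - w^{gt}|}{\max(w, w^{gt})}, \qquad \omega_h = \frac{|h - h^{gt}|}{\max(h, h^{gt})} \tag{22}$$

where $w$ and $h$ are the width and height of the predicted box, $w^{gt}$ and $h^{gt}$ are those of the GT box, and $\theta$ is the shape penalty factor that controls the strength of the shape constraint; common implementations set it to 4.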
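The following Python sketch puts Equations (20) to (22) together. The angle cost formula $\Lambda = 1 - 2\sin^2(\arcsin x - \pi/4)$, where $x$ is the sine of the smaller angle between the center offset and a coordinate axis, is taken from the common SIoU formulation and is an assumption here, since the text does not spell it out; the default `theta=4.0` and the `eps` guard are likewise assumptions.

```python
import math

def angle_cost(dx, dy, eps=1e-9):
    """Lambda = 1 - 2 sin^2(arcsin(x) - pi/4): 0 for axis-aligned offsets, 1 at 45 degrees."""
    sigma = math.hypot(dx, dy) + eps            # distance between the two centers
    x = min(abs(dx), abs(dy)) / sigma           # sine of the smaller angle to a coordinate axis
    return 1.0 - 2.0 * math.sin(math.asin(x) - math.pi / 4) ** 2

def siou_loss(pred, gt, theta=4.0, eps=1e-9):
    """SIoU loss (Eqs. 20-22) for boxes given as (x1, y1, x2, y2)."""
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    iou = inter / (w * h + wg * hg - inter)
    # Center offsets along x and y (GT center minus predicted center).
    dx = (gt[0] + gt[2]) / 2 - (pred[0] + pred[2]) / 2
    dy = (gt[1] + gt[3]) / 2 - (pred[1] + pred[3]) / 2
    gamma = 2.0 - angle_cost(dx, dy, eps)
    # Distance cost (Eq. 21), normalized by the minimum enclosing box.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    rho_x, rho_y = (dx / (cw + eps)) ** 2, (dy / (ch + eps)) ** 2
    distance = (1.0 - math.exp(-gamma * rho_x)) + (1.0 - math.exp(-gamma * rho_y))
    # Shape cost (Eq. 22).
    omega_w = abs(w - wg) / max(w, wg)
    omega_h = abs(h - hg) / max(h, hg)
    shape = (1.0 - math.exp(-omega_w)) ** theta + (1.0 - math.exp(-omega_h)) ** theta
    return 1.0 - iou + (distance + shape) / 2.0

gt = (0.0, 0.0, 4.0, 4.0)
print(angle_cost(1.0, 0.0), angle_cost(1.0, 1.0))
print(siou_loss((1.0, 0.0, 5.0, 4.0), gt))
```

The angle cost changes the exponent $\gamma$ of the distance cost, so two predictions at the same center distance but in different directions receive different distance penalties.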

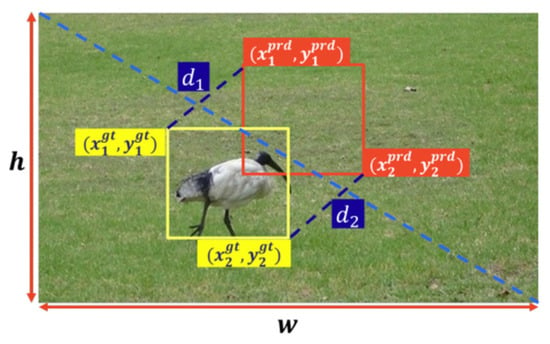

3.2.6. Minimum Point Distance (MPD) IoU Loss

When the predicted box and GT box have the same aspect ratio but different sizes, CIoU cannot distinguish the optimization direction. Minimum point distance (MPD) IoU loss, motivated by a geometric analysis of the two boxes, addresses this by directly minimizing the distance between the top-left corners and the distance between the bottom-right corners of the predicted and GT boxes [43]. It introduces a new IoU measure based on these point distances and converts it into a loss function. MPD IoU is defined in Equation (23):

$$MPDIoU = IoU - \frac{d_1^2}{w^2 + h^2} - \frac{d_2^2}{w^2 + h^2} \tag{23}$$

where $d_1$ and $d_2$ are the Euclidean distances between the top-left corner points and between the bottom-right corner points of the predicted box and the GT box, respectively; and $w$ and $h$ are the width and height of the input image, which normalize the distance terms. Figure 15 illustrates $d_1$, $d_2$, $w$, and $h$.

Figure 15.

Illustration of $d_1$, $d_2$, $w$, and $h$.

The MPD IoU loss directly optimizes the coordinates of the top-left and bottom-right corners of the predicted box and the GT box and achieves more accurate geometric alignment by minimizing the Euclidean distances between the two pairs of key points. The mathematical formula of the MPD IoU loss is shown in Equation (24):

$$L_{MPDIoU} = 1 - MPDIoU \tag{24}$$

MPD IoU only needs the Euclidean distances between the top-left and bottom-right corners of the predicted box and the GT box. It requires neither the separate width and height penalty terms of EIoU nor the arctan-based aspect-ratio term of CIoU, which reduces the computational cost. Meanwhile, when the predicted box and GT box share the same aspect ratio but differ in scale, the aspect-ratio term of CIoU vanishes and cannot guide the size adjustment, whereas MPD IoU still reflects the size difference through the corner-point distances. These properties make it well suited to real-time detection models.
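A minimal Python sketch of Equations (23) and (24), assuming hypothetical `(x1, y1, x2, y2)` boxes in pixel coordinates and an input image of size `img_w` by `img_h`, shows that only two corner distances are needed beyond the IoU.

```python
def mpdiou_loss(pred, gt, img_w, img_h):
    """Equations (23)-(24): 1 - (IoU - d1^2 / (w^2 + h^2) - d2^2 / (w^2 + h^2))."""
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_g = (gt[2] - gt[0]) * (gt[3] - gt[1])
    iou = inter / (area_p + area_g - inter)
    d1_sq = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # top-left corners
    d2_sq = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2  # bottom-right corners
    norm = img_w ** 2 + img_h ** 2                            # squared image diagonal
    return 1.0 - (iou - d1_sq / norm - d2_sq / norm)

gt = (0.0, 0.0, 4.0, 4.0)
# Same center and aspect ratio as the GT box, but different scales.
print(mpdiou_loss((1.0, 1.0, 3.0, 3.0), gt, 10, 10))
print(mpdiou_loss((0.5, 0.5, 3.5, 3.5), gt, 10, 10))
```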

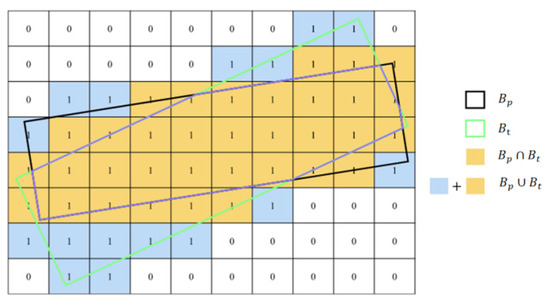

3.2.7. Pixel-IoU (PIoU) Loss

Pixel-IoU (PIoU) loss is designed for oriented (rotated) object detection and aims to solve the problem of angle error in conventional rotated-box regression [44]. PIoU loss takes a pixel-level IoU as the optimization objective and makes it differentiable through an approximation. This avoids the complex IoU computation for rotated boxes and increases the sensitivity of the loss to shape and angle. The mathematical formula for PIoU loss is shown in Equation (25):

$$L_{PIoU} = -\frac{1}{|M|}\sum_{(b, b') \in M}\ln PIoU(b, b') \tag{25}$$

where $PIoU(b, b')$ is the pixel-level IoU between the predicted box $b$ and the GT box $b'$, and $M$ is the set of positive sample pairs, defined as the matching pairs whose IoU is at least 0.5. An example of PIoU loss is presented in Figure 16.
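To show what a pixel-level IoU measures, the sketch below counts pixel centers inside two rotated rectangles on a small grid and plugs the result into Equation (25). This hard counting is not differentiable; it stands in for the smooth approximation used by PIoU loss, and the box format `(cx, cy, w, h, angle_rad)` and the grid extent are assumptions for illustration.

```python
import math

def inside_rotated(px, py, box):
    """True if point (px, py) lies inside the rotated rectangle (cx, cy, w, h, angle_rad)."""
    cx, cy, w, h, angle = box
    dx, dy = px - cx, py - cy
    # Express the offset in the box's own axes by rotating it by -angle.
    u = dx * math.cos(angle) + dy * math.sin(angle)
    v = -dx * math.sin(angle) + dy * math.cos(angle)
    return abs(u) <= w / 2 and abs(v) <= h / 2

def pixel_iou(box_a, box_b, lo=-20, hi=20):
    """Hard pixel-level IoU over the pixel grid [lo, hi) x [lo, hi)."""
    inter = union = 0
    for x in range(lo, hi):
        for y in range(lo, hi):
            px, py = x + 0.5, y + 0.5  # center of pixel (x, y)
            in_a = inside_rotated(px, py, box_a)
            in_b = inside_rotated(px, py, box_b)
            inter += in_a and in_b
            union += in_a or in_b
    return inter / union if union else 0.0

def piou_loss(positive_pairs, eps=1e-9):
    """Equation (25): mean of -ln PIoU over matched (pred, gt) pairs, assumed to have IoU >= 0.5."""
    logs = [math.log(max(pixel_iou(p, g), eps)) for p, g in positive_pairs]
    return -sum(logs) / len(logs)

rect = (0.0, 0.0, 8.0, 4.0, 0.0)
print(pixel_iou(rect, (0.0, 0.0, 8.0, 4.0, math.pi / 2)))  # 8x4 vs 4x8 cross: 16 / 48
```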

Figure 16.

An example of PIoU loss.

3.2.8. Alpha-IoU Loss

Alpha-IoU loss adjusts the loss weights for different IoU values through the power parameter $\alpha$, to enhance the detection ability of the model for high-IoU and low-IoU targets [45]. For instance, when $\alpha > 1$, the loss function focuses more on high-IoU targets, whereas when $0 < \alpha < 1$, it becomes more sensitive to low-IoU targets. The mathematical formula for Alpha-IoU loss is shown in Equation (26):

$$L_{\alpha\text{-}IoU} = 1 - IoU^{\alpha} \tag{26}$$

where $\alpha > 0$ is the power parameter that controls the sensitivity of the loss to high-IoU targets. When $\alpha > 1$, both the loss and the gradient of high-IoU targets are relatively enhanced, which improves localization accuracy.
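The effect of $\alpha$ follows from the derivative of Equation (26), $|\partial L / \partial IoU| = \alpha \, IoU^{\alpha - 1}$, whereas plain IoU loss has a constant gradient magnitude of 1. The short Python sketch below tabulates this; $\alpha = 3$ is only an illustrative value.

```python
def iou_loss(iou):
    return 1.0 - iou

def alpha_iou_loss(iou, alpha=3.0):
    """Equation (26): power generalization of IoU loss."""
    return 1.0 - iou ** alpha

def alpha_iou_grad(iou, alpha=3.0):
    """Gradient magnitude |d loss / d IoU| = alpha * IoU^(alpha - 1)."""
    return alpha * iou ** (alpha - 1.0)

for iou in (0.2, 0.5, 0.9):
    print(iou, alpha_iou_loss(iou), alpha_iou_grad(iou))
```

With $\alpha = 3$, the gradient magnitude is 2.43 at IoU 0.9 but only 0.12 at IoU 0.2, so well-localized boxes receive the stronger push, and $\alpha = 1$ recovers the ordinary IoU loss.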

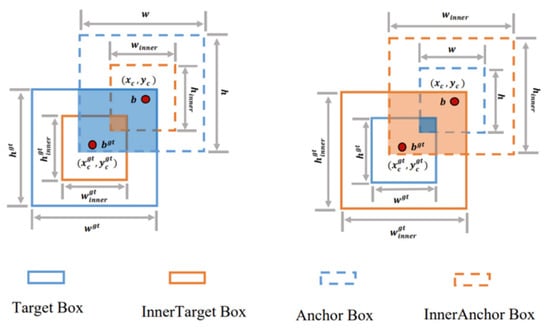

3.2.9. Inner-IoU Loss

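The Inner-IoU loss adaptively optimizes the regression of samples with different IoU values by dynamically adjusting the scale of auxiliary bounding boxes [46]. It generates auxiliary bounding boxes scaled by a factor $ratio$ and uses them instead of the original bounding boxes for the IoU calculation. First, $ratio$ is introduced to generate the boundary coordinates of the auxiliary boxes, as shown in Equation (27):

$$\begin{aligned}
b_l^{gt} &= x_c^{gt} - \frac{w^{gt} \cdot ratio}{2}, & b_r^{gt} &= x_c^{gt} + \frac{w^{gt} \cdot ratio}{2}, & b_t^{gt} &= y_c^{gt} - \frac{h^{gt} \cdot ratio}{2}, & b_b^{gt} &= y_c^{gt} + \frac{h^{gt} \cdot ratio}{2}, \\
b_l &= x_c - \frac{w \cdot ratio}{2}, & b_r &= x_c + \frac{w \cdot ratio}{2}, & b_t &= y_c - \frac{h \cdot ratio}{2}, & b_b &= y_c + \frac{h \cdot ratio}{2}
\end{aligned} \tag{27}$$

where $(x_c^{gt}, y_c^{gt})$ is the center point of the GT box, whose width and height are $w^{gt}$ and $h^{gt}$; $(x_c, y_c)$ is the center point of the predicted box, whose width and height are $w$ and $h$; and $b_l$, $b_r$, $b_t$, and $b_b$ are the left, right, top, and bottom boundaries of the predicted auxiliary box.

Similarly, $b_l^{gt}$, $b_r^{gt}$, $b_t^{gt}$, and $b_b^{gt}$ are the boundaries of the GT auxiliary box. A value $ratio > 1$ enlarges the auxiliary boxes, and $ratio < 1$ shrinks them. The meanings of the symbols of Inner-IoU are illustrated in Figure 17.

Figure 17.

Description of Inner-IoU.

The formula of Inner-IoU is shown in Equation (28):

$$IoU^{inner} = \frac{inter}{union} \tag{28}$$

where $inter$ is shown in Equation (29):

$$inter = \left(\min(b_r^{gt}, b_r) - \max(b_l^{gt}, b_l)\right) \times \left(\min(b_b^{gt}, b_b) - \max(b_t^{gt}, b_t)\right) \tag{29}$$

and $union$ is shown in Equation (30):

$$union = w^{gt} h^{gt} \cdot ratio^2 + w h \cdot ratio^2 - inter \tag{30}$$

Inner-IoU loss can be seamlessly integrated into existing IoU-based losses. Taking CIoU as an example, the formula of the Inner-CIoU loss is shown in Equation (31):

$$L_{Inner\text{-}CIoU} = L_{CIoU} + IoU - IoU^{inner} \tag{31}$$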

The Inner-IoU loss can achieve dynamic gradient adjustment. For high IoU samples with nearly completed target positioning, a smaller auxiliary box is used to increase the absolute value of the gradient and accelerate convergence. For low IoU samples with large initial positioning deviations, a larger auxiliary box is used to expand the effective regression range and avoid gradient vanishing. Furthermore, Inner-IoU does not require modification of the model structure and can directly replace the existing IoU losses, achieving plug-and-play.
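A minimal Python sketch of Equations (27) to (30) follows, assuming boxes are given as hypothetical `(xc, yc, w, h)` tuples. One deviation from Equation (29) is labeled in the code: each intersection side is clamped at 0, so that auxiliary boxes that do not overlap yield zero intersection instead of a spurious positive product.

```python
def inner_iou(pred, gt, ratio=1.0):
    """Equations (27)-(30): IoU of auxiliary boxes scaled by `ratio` around each box center.

    Boxes are (xc, yc, w, h); ratio < 1 shrinks and ratio > 1 enlarges the auxiliary boxes.
    """
    def edges(box):
        xc, yc, w, h = box
        half_w, half_h = w * ratio / 2.0, h * ratio / 2.0
        return xc - half_w, xc + half_w, yc - half_h, yc + half_h  # left, right, top, bottom

    b_l, b_r, b_t, b_b = edges(pred)
    g_l, g_r, g_t, g_b = edges(gt)
    # Clamping at 0 is an addition to Equation (29) for non-overlapping auxiliary boxes.
    inter = max(0.0, min(g_r, b_r) - max(g_l, b_l)) * max(0.0, min(g_b, b_b) - max(g_t, b_t))
    union = gt[2] * gt[3] * ratio ** 2 + pred[2] * pred[3] * ratio ** 2 - inter
    return inter / union

def inner_iou_loss(pred, gt, ratio):
    return 1.0 - inner_iou(pred, gt, ratio)

pred, gt = (1.0, 1.0, 2.0, 2.0), (1.5, 1.0, 2.0, 2.0)
for r in (0.5, 1.0, 1.5):
    print(r, inner_iou(pred, gt, r))
```

For a fixed center offset, the shrunken auxiliary boxes ($ratio = 0.5$) give a lower IoU than the original boxes and the enlarged ones ($ratio = 1.5$) a higher IoU, while $ratio = 1$ reproduces the standard IoU.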

4. Classification Loss

The goal of classification is to assign the input a class label from a set of discrete classes [47,48,49]. As in regression, the model parameters are trained by minimizing a loss function. Classification includes binary classification and multi-class classification. For binary classification, the label $y \in \{0, 1\}$, where 0 represents the negative class and 1 represents the positive class, and the sigmoid output $p \in (0, 1)$ represents the probability predicted by the model that the sample belongs to the positive class. For multi-class classification, $y_c = 1$ indicates that the sample belongs to category $c$ and $y_c = 0$ that it does not. Similarly, the softmax output $p_c$, normalized so that $\sum_c p_c = 1$, represents the probability predicted by the model that the sample belongs to category $c$.

Classification losses can be divided into margin losses and probability losses. Margin losses introduce a margin parameter to quantify the difference between the predicted value and the GT, forcing the model not only to classify correctly but also to keep a safe distance between the predicted value and the decision boundary [50,51]. Probability losses improve the generalization ability of the classification model by optimizing the accuracy of the predicted probabilities [52,53].

4.1. Margin Loss

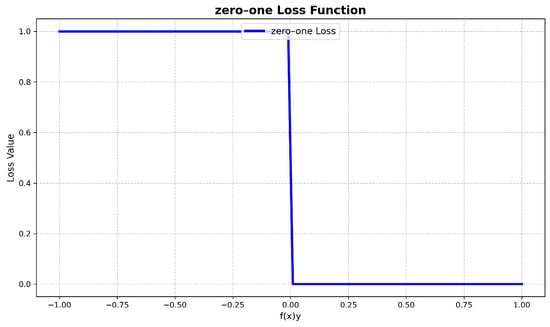

4.1.1. Zero-One Loss

The most fundamental and intuitive margin loss is the zero-one loss [54]. It takes the value 1 when the predicted label differs from the GT and 0 otherwise, as shown in Equation (32):

$$L_{0\text{-}1}(y, f(x)) = \begin{cases} 1, & f(x) \neq y \\ 0, & f(x) = y \end{cases} \tag{32}$$

This loss directly reflects the classification error rate, but it is non-convex and discontinuous, and its gradient is zero almost everywhere, so it cannot be optimized directly by gradient descent. Usable surrogate losses can, however, be derived from it, so the zero-one loss is the basis of the other margin losses. The function curve of the zero-one loss is shown in Figure 18.

Figure 18.

The function curve of zero–one loss.

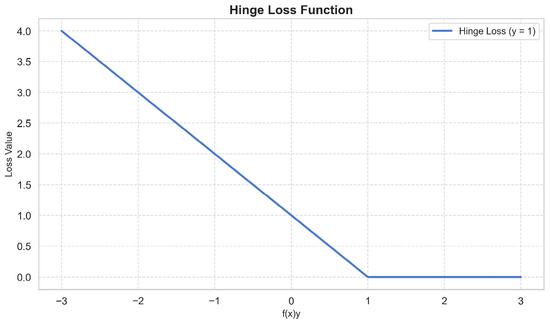

4.1.2. Hinge Loss

Hinge loss is often used in support vector machines (SVMs) [54]; it optimizes the model by penalizing predictions that fall on the wrong side of, or too close to, the decision boundary. Its mathematical formula is shown in Equation (33):

$$L_{hinge}(y, f(x)) = \max\left(0,\ 1 - y f(x)\right) \tag{33}$$

where the label $y \in \{-1, +1\}$ (a $\{0, 1\}$ label is first mapped to $\{-1, +1\}$) and $f(x)$ is the real-valued score predicted by the model.

This formula shows that the loss is nonzero only when the product $y f(x)$ of the GT and the predicted value is less than 1. This forces the model to keep a margin of at least 1 in addition to classifying correctly. Penalties are imposed only on samples that are misclassified or have an insufficient margin, so the model concentrates on the samples near the decision boundary rather than on those already classified with a sufficient margin. However, the loss is non-differentiable at $y f(x) = 1$, where its gradient is discontinuous. The function curve of hinge loss is shown in Figure 19.
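The sketch below contrasts Equations (32) and (33) in Python with labels mapped to $\{-1, +1\}$. Treating a score of exactly 0 as a positive prediction is an arbitrary tie-breaking assumption.

```python
def to_signed(y01):
    """Map a {0, 1} label to the {-1, +1} convention used by margin losses."""
    return 2 * y01 - 1

def zero_one_loss(y, score):
    """Equation (32): 1 if the predicted label sign(score) differs from y in {-1, +1}, else 0."""
    predicted = 1 if score >= 0 else -1
    return 0.0 if predicted == y else 1.0

def hinge_loss(y, score):
    """Equation (33): zero only when the margin y * score is at least 1."""
    return max(0.0, 1.0 - y * score)

for y, s in [(1, 2.0), (1, 0.5), (-1, 0.5)]:
    print(y, s, zero_one_loss(y, s), hinge_loss(y, s))
```

The middle case is classified correctly, so its zero-one loss is 0, yet its margin of 0.5 is below 1 and the hinge loss still penalizes it.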

Figure 19.

The function curve of hinge loss.

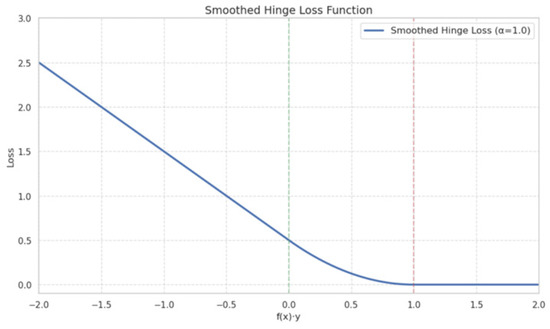

4.1.3. Smoothed Hinge Loss

To address the non-smoothness of hinge loss, smoothed hinge loss makes the loss continuously differentiable through smoothing, which yields more stable gradient descent and improves training stability [55]. Its mathematical formula is shown in Equation (34), where $z = y f(x)$:

$$L_{smooth}(z) = \begin{cases} 0, & z \geq 1 \\ \dfrac{(1 - z)^2}{2\sigma}, & 1 - \sigma < z < 1 \\ 1 - z - \dfrac{\sigma}{2}, & z \leq 1 - \sigma \end{cases} \tag{34}$$

where $\sigma > 0$ is the smoothing parameter, which controls the width of the smooth region. The loss is continuous and differentiable at $z = 1$ and at $z = 1 - \sigma$: it is zero for $z \geq 1$, transitions smoothly through a quadratic function for $1 - \sigma < z < 1$, and keeps a linear penalty for $z \leq 1 - \sigma$, similar to the original hinge loss.

The function curve of smoothed hinge loss is shown in Figure 20, with $\sigma = 1.0$. The green line and the red line mark the boundaries between the pieces.
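A short Python sketch of Equation (34) makes the smoothness claim checkable: it evaluates the loss and its derivative just below and just above the two breakpoints $z = 1 - \sigma$ and $z = 1$.

```python
def hinge(z):
    return max(0.0, 1.0 - z)

def smoothed_hinge(z, sigma=1.0):
    """Equation (34) with z = y * f(x)."""
    if z >= 1.0:
        return 0.0
    if z > 1.0 - sigma:
        return (1.0 - z) ** 2 / (2.0 * sigma)  # quadratic transition region
    return 1.0 - z - sigma / 2.0               # linear part, parallel to the hinge loss

def smoothed_hinge_grad(z, sigma=1.0):
    """Derivative of Equation (34) with respect to z."""
    if z >= 1.0:
        return 0.0
    if z > 1.0 - sigma:
        return -(1.0 - z) / sigma
    return -1.0

sigma = 1.0
for b in (1.0 - sigma, 1.0):
    print(b, smoothed_hinge(b - 1e-9, sigma), smoothed_hinge(b + 1e-9, sigma),
          smoothed_hinge_grad(b - 1e-9, sigma), smoothed_hinge_grad(b + 1e-9, sigma))
```

Both the value and the slope match on either side of each breakpoint, and in the linear region the loss is the hinge loss shifted down by $\sigma / 2$.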

Figure 20.

The function curve of smoothed hinge loss.

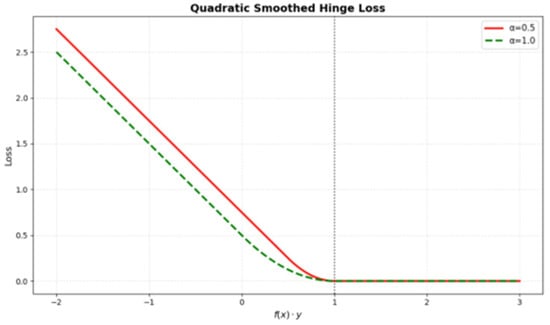

4.1.4. Quadratic Smoothed Hinge Loss

Another common variant of hinge loss is the quadratically smoothed hinge loss, which is continuously differentiable everywhere [56]. Its mathematical formula is shown in Equation (35):

$$L_{\gamma}(z) = \begin{cases} \dfrac{1}{2\gamma}\max(0,\ 1 - z)^2, & z \geq 1 - \gamma \\ 1 - \dfrac{\gamma}{2} - z, & \text{otherwise} \end{cases} \tag{35}$$

where $z = y f(x)$ and the hyperparameter $\gamma > 0$ determines the degree of smoothness.

As $\gamma$ approaches 0, the loss approaches the original hinge loss. The function curve of the quadratically smoothed hinge loss is shown in Figure 21 for two values of $\gamma$.

Figure 21.

The function curve of quadratic smoothed hinge loss.

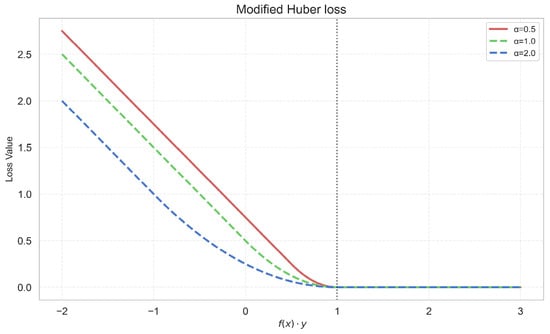

4.1.5. Modified Huber Loss

Modified Huber loss is a minor variation of the Huber loss used in regression and is a special case of the quadratically smoothed hinge loss with $\gamma = 2$: it equals $4L_2(z)$, that is, $\max(0, 1 - z)^2$ for $z \geq -1$ and $-4z$ otherwise [56]. The function curve of the modified Huber loss is shown in Figure 22. In this figure, the red line represents the modified Huber loss, and the green line and blue line are the same as the lines in Figure 21.
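The relationship between Equation (35) and the modified Huber loss can be verified numerically. The Python sketch below checks that the modified Huber loss equals $4L_2(z)$ and that a very small $\gamma$ approaches the hinge loss.

```python
def quad_smoothed_hinge(z, gamma):
    """Equation (35) with z = y * f(x)."""
    if z >= 1.0 - gamma:
        return max(0.0, 1.0 - z) ** 2 / (2.0 * gamma)
    return 1.0 - gamma / 2.0 - z

def modified_huber(z):
    """Modified Huber loss: quadratic for z >= -1, linear (-4z) below."""
    return max(0.0, 1.0 - z) ** 2 if z >= -1.0 else -4.0 * z

for z in (-3.0, -1.0, 0.0, 0.5, 2.0):
    print(z, modified_huber(z), 4.0 * quad_smoothed_hinge(z, 2.0))
```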

Figure 22.

The function curve of modified Huber loss.

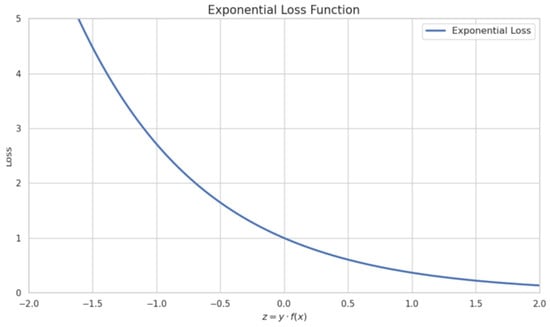

4.1.6. Exponential Loss

Exponential loss originates from the AdaBoost algorithm. AdaBoost dynamically adjusts the sample weights so that, in each round, higher weights are assigned to misclassified samples, and subsequent weak learners pay more attention to these hard samples. Exponential loss, the objective function minimized by AdaBoost, amplifies the influence of classification errors through an exponential penalty [57] and thereby guides the model to improve its classification performance gradually. Because the penalty grows exponentially as the margin becomes more negative, however, it also makes the model sensitive to label noise and outliers. The mathematical formula of exponential loss is shown in Equation (36):

$$L_{exp}(y, f(x)) = e^{-y f(x)} \tag{36}$$

where $y \in \{-1, +1\}$. The function curve of the exponential loss is shown in Figure 23.
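In discrete AdaBoost, the weight of sample $i$ before a new round is proportional to $e^{-y_i F(x_i)}$, where $F$ is the current ensemble score, so the sample weights are simply normalized exponential losses. The Python sketch below illustrates this link; the scores are made-up values for illustration.

```python
import math

def exponential_loss(y, score):
    """Equation (36) with y in {-1, +1}."""
    return math.exp(-y * score)

def adaboost_sample_weights(labels, scores):
    """Normalized weights proportional to exp(-y F(x)), F being the current ensemble score."""
    raw = [exponential_loss(y, s) for y, s in zip(labels, scores)]
    total = sum(raw)
    return [r / total for r in raw]

# Sample 0 is confidently correct, sample 1 is misclassified, sample 2 is correct with a small margin.
print(adaboost_sample_weights([1, 1, -1], [2.0, -0.5, -1.0]))
```

The misclassified sample receives the largest weight, $e^{2.5}$ times that of the confidently correct one, which shows both how AdaBoost emphasizes hard samples and why a mislabeled sample can dominate training.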

Figure 23.

The function curve of the exponential loss.

4.2. Probability Loss

The probability loss function quantifies the difference between the predicted probability distribution and the true data distribution, and optimization drives the predicted distribution toward the true one. Given the observational data $D = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i$ is the input and $y_i$ is the true output, a discrete class label, let the model $f(x; \theta)$ be parameterized by $\theta$, and let $p(y = 1 \mid x; \theta)$ be the predicted probability that the sample belongs to the positive category. The goal of the probability loss function is to find the optimal parameters $\theta^*$ that minimize the difference between the probability distribution predicted by the model and the true data distribution.

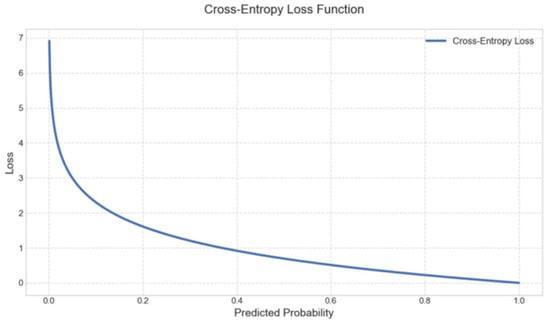

4.2.1. Binary Cross-Entropy Loss (BCE)

Cross-entropy (CE) originates from information theory and measures the difference between the predicted probability distribution and the GT probability distribution [17,58]. CE is well suited to probabilistic models because it turns the intuitive idea “give high probability to what actually happened” into a concrete number: when the predicted probability of the correct outcome is already high, the loss stays low, and when it is low, the loss rises sharply. Therefore, CE is widely used for classification tasks in deep neural networks.

BCE is the application of cross-entropy to binary classification [59] and is applicable to tasks with labels of 0 or 1. The formula of BCE is shown in Equation (37):

$$L_{BCE} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] \tag{37}$$

where $N$ is the sample size, $y_i \in \{0, 1\}$ is the GT, and $p_i \in (0, 1)$ is the probability predicted by the model that sample $i$ belongs to the positive class.

BCE is intuitive and easy to implement. As a maximum likelihood objective combined with a sigmoid output, BCE has a gradient with respect to the logit equal to $p_i - y_i$ for each sample, that is, proportional to the prediction error: the larger the error, the stronger the gradient. This helps to alleviate gradient vanishing and provides stable gradient updates during training, which favors convergence. However, on datasets with class imbalance, BCE biases the model toward the majority class and causes it to neglect the features of minority classes. Assuming the GT is category 1, the loss function curve of BCE is shown in Figure 24.
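The Python sketch below implements Equation (37) in two ways, on probabilities (with clipping) and on logits (a numerically stable rewrite), and checks by finite differences that the gradient with respect to the logit is $p - y$. The clipping constant is an assumption.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def bce(y, p, eps=1e-12):
    """Per-sample term of Equation (37); p is clipped so that log stays finite."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def bce_with_logits(y, z):
    """Same loss in terms of the logit z: log(1 + e^z) - y z, computed without overflow."""
    return max(z, 0.0) - y * z + math.log1p(math.exp(-abs(z)))

def mean_bce(labels, probs):
    """Equation (37): average over the N samples."""
    return sum(bce(y, p) for y, p in zip(labels, probs)) / len(labels)

# Central finite difference of the loss with respect to the logit.
z, y, h = 0.3, 1, 1e-6
numeric = (bce_with_logits(y, z + h) - bce_with_logits(y, z - h)) / (2 * h)
analytic = sigmoid(z) - y
print(numeric, analytic)
```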

Figure 24.

The function curve of BCE.

4.2.2. Categorical Cross-Entropy Loss (CCE)

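CCE extends cross-entropy from binary classification to multi-class problems [60]. Let $p_i = (p_{i1}, \ldots, p_{iC})$ be the predicted probability distribution of sample $i$, with $\sum_{c=1}^{C} p_{ic} = 1$. The mathematical formula of CCE is shown in Equation (38):

$$L_{CCE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{ic}\log p_{ic} \tag{38}$$

where $C$ is the number of categories; $N$ is the total number of samples; $y_{ic}$ is the GT, equal to 1 if sample $i$ belongs to category $c$ and 0 otherwise; and $p_{ic}$ is the probability predicted by the model that sample $i$ belongs to category $c$. Minimizing $L_{CCE}$ during training pushes $p_{ic}$ toward 1 for the true category and toward 0 for the other categories.

In neural networks, models usually normalize their outputs with the softmax function, shown in Equation (39):

$$p_j = \frac{e^{z_j}}{\sum_{k=1}^{C} e^{z_k}} \tag{39}$$

where $z_j$ and $z_k$ are the $j$-th and $k$-th elements of the output vector (logits) fed into the softmax.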
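A plain-Python sketch of Equations (38) and (39) follows. Subtracting the maximum logit before exponentiating is a standard stabilization that leaves the softmax output unchanged, and skipping zero targets avoids evaluating $\log 0$ without changing the sum.

```python
import math

def softmax(logits):
    """Equation (39); subtracting the max logit avoids overflow without changing the result."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def cce(targets, probs):
    """Equation (38): targets and probs are per-sample lists over the C categories."""
    total = 0.0
    for y_row, p_row in zip(targets, probs):
        # Terms with y_ic = 0 contribute nothing, so they are skipped (this also avoids log(0)).
        total -= sum(y * math.log(p) for y, p in zip(y_row, p_row) if y > 0)
    return total / len(targets)

p = softmax([1.0, 2.0, 3.0])
print(p, cce([[0, 1, 0]], [p]))
```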

4.2.3. Sparse Categorical Cross-Entropy Loss

Sparse categorical cross-entropy loss (sparse CCE) applies when the category label is an integer index rather than a one-hot vector [60]. Its mathematical formula is shown in Equation (40):

$$L_{SCCE} = -\frac{1}{N}\sum_{i=1}^{N}\log p_{i, y_i} \tag{40}$$

where $p_{i, y_i}$ is the probability assigned to the correct class $y_i$ of sample $i$.

For hard labels, sparse CCE gives exactly the same value as CCE with one-hot labels, because every term of Equation (38) except the one for the true class vanishes; the two differ only in the label format. Sparse CCE stores each label as a single integer (such as 2) instead of an entire one-hot vector (such as [0, 0, 1, 0]), which saves memory and computation, a benefit that grows with the number of categories. The integer is only an index into the $C$-dimensional predicted distribution, so sparse CCE does not limit the number of categories that can be distinguished. Dense (one-hot or soft) labels remain necessary when a target is not a single class, for example with the soft labels produced by label smoothing.
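The equivalence of sparse and dense labels can be checked directly. The Python sketch below evaluates Equation (40) with integer labels and Equation (38) with the corresponding one-hot vectors on the same made-up predictions.

```python
import math

def sparse_cce(labels, probs):
    """Equation (40): labels are integer class indices."""
    return -sum(math.log(p_row[y]) for y, p_row in zip(labels, probs)) / len(labels)

def one_hot(label, num_classes):
    return [1.0 if c == label else 0.0 for c in range(num_classes)]

def dense_cce(targets, probs):
    """Equation (38) with dense targets, for comparison."""
    total = 0.0
    for y_row, p_row in zip(targets, probs):
        total -= sum(y * math.log(p) for y, p in zip(y_row, p_row) if y > 0)
    return total / len(targets)

probs = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
labels = [0, 2]
print(sparse_cce(labels, probs), dense_cce([one_hot(y, 3) for y in labels], probs))
```

Both calls return the same value; the sparse form simply looks up one probability per sample instead of multiplying by a vector of zeros.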

4.2.4. Weighted Cross-Entropy Loss (WCE)

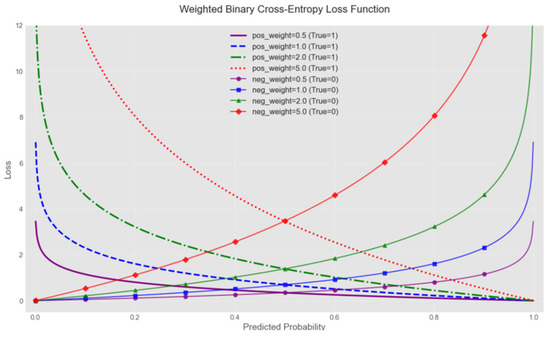

BCE and CCE are sensitive to class imbalance. When one category has many more samples than the others, the model may ignore the minority categories [60]. WCE introduces category weights into CE to adjust the contribution of different categories to the total loss [61]. By allocating weights to categories, WCE strengthens the model's focus on minority or key categories, which makes it particularly suitable for imbalanced data. The mathematical formula of binary WCE is shown in Equation (41):

$$L_{WCE} = -\frac{1}{N}\sum_{i=1}^{N}\left[\beta y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] \tag{41}$$

where $\beta$ is the weight applied to positive samples; $y_i \in \{0, 1\}$ is the GT, with $y_i = 0$ representing the negative category and $y_i = 1$ the positive category; and $p_i \in (0, 1)$ is the probability predicted by the model.

In multi-class scenarios, each label is assigned a weight based on its frequency or importance; a binary WCE term is then computed for each label, and the terms are summed. The mathematical formula of multi-class WCE is shown in Equation (42):

$$L_{WCE} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} w_c\left[y_{ic}\log p_{ic} + (1 - y_{ic})\log(1 - p_{ic})\right] \tag{42}$$

where $C$ is the total number of categories and $w_c$ is the weight of category $c$.

WCE helps ensure that a minority category has sufficient influence on the gradient during the training process and reduces the model’s bias towards the majority classes.

Figure 25 shows the function curve of binary WCE in two graphs. The left graph shows the loss curves for different positive-sample weights when the GT is 1, and the right graph shows the loss curves for different negative-sample weights when the GT is 0. Four weight values are used in each graph: 0.5, 1.0, 2.0, and 5.0.
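A per-sample Python sketch of Equation (41) follows. Choosing $\beta$ as the ratio of negative to positive samples is one common heuristic and is an assumption here, not a prescription from the text.

```python
import math

def binary_wce(y, p, beta, eps=1e-12):
    """Per-sample term of Equation (41): beta scales only the positive-class term."""
    p = min(max(p, eps), 1.0 - eps)
    return -(beta * y * math.log(p) + (1 - y) * math.log(1.0 - p))

def ratio_weight(labels):
    """Assumed heuristic: beta = #negatives / #positives."""
    pos = sum(labels)
    return (len(labels) - pos) / pos

labels = [1, 0, 0, 0, 0]
beta = ratio_weight(labels)  # 4.0: each positive counts as much as four negatives
print(beta, binary_wce(1, 0.8, beta), binary_wce(0, 0.8, beta))
```

Only the loss of positive samples is rescaled; the loss of a negative sample is the same as in plain BCE for any $\beta$.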

Figure 25.

The function curve of the binary weighted cross-entropy loss.

4.2.5. Balanced Cross-Entropy Loss (BaCE)

BaCE [61] is similar to WCE, but it argues that not only positive samples but also negative samples should be weighted. The formula of balanced cross-entropy for binary classification is shown in Equation (43):

$$L_{BaCE} = -\frac{1}{N}\sum_{i=1}^{N}\left[\beta y_i \log p_i + (1 - \beta)(1 - y_i)\log(1 - p_i)\right] \tag{43}$$

where $\beta$ is the weight of the positive class, and $1 - \beta$ is the weight of the negative class.
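The following per-sample Python sketch of Equation (43) shows how a single $\beta$ trades off the two classes. Setting $\beta$ to the fraction of negative samples, so that rare positives get a weight close to 1, is an assumed heuristic for illustration.

```python
import math

def balanced_ce(y, p, beta, eps=1e-12):
    """Per-sample term of Equation (43): beta weights positives, 1 - beta weights negatives."""
    p = min(max(p, eps), 1.0 - eps)
    return -(beta * y * math.log(p) + (1.0 - beta) * (1 - y) * math.log(1.0 - p))

def negative_fraction(labels):
    """Assumed heuristic for beta: the fraction of negative samples."""
    return (len(labels) - sum(labels)) / len(labels)

beta = negative_fraction([1, 0, 0, 0])  # 0.75
print(balanced_ce(1, 0.8, beta), balanced_ce(0, 0.3, beta))
```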

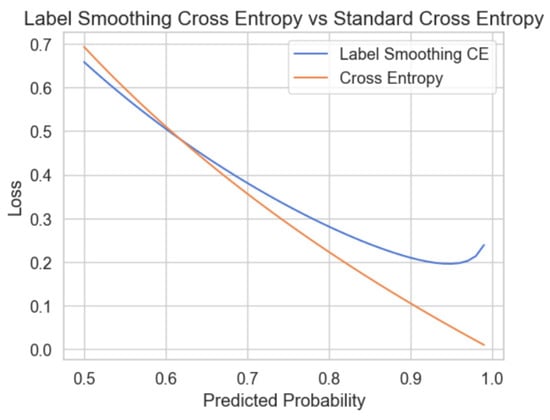

4.2.6. Label Smoothing Cross-Entropy Loss (Label Smoothing CE Loss)

Label smoothing CE loss improves the traditional cross-entropy loss with the label smoothing technique [62]. It replaces the one-hot ground-truth labels with soft labels by transferring part of the probability mass from the GT category to the other categories, which reduces the model's overconfidence in a single category. The smoothed label is given by Equation (44):

$$y_c^{LS} = (1 - \varepsilon)\, y_c + \frac{\varepsilon}{C} \tag{44}$$

where $\varepsilon \in [0, 1)$ is the smoothing factor and $C$ is the number of categories. Typical values of $\varepsilon$ range from 0.05 to 0.2 and can be tuned on a validation set.

The mathematical formula of label smoothing CE is shown in Equation (45):

$$L_{LS} = -\sum_{c=1}^{C} y_c^{LS}\log p_c \tag{45}$$

After smoothing, the target no longer assigns a probability of 1 to any single category, which prevents overconfident predictions. Empirical evidence indicates that label smoothing helps the model avoid overfitting to noisy data or unrepresentative training samples. Figure 26 compares the function curves of label smoothing CE and standard CE for predicted probabilities above 0.5. As the predicted probability of the true class approaches 1, standard CE approaches 0, whereas label smoothing CE does not: it reaches its minimum at $p = 1 - \varepsilon + \varepsilon/C$, the smoothed target value, and stays above 0.
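The Python sketch below implements Equations (44) and (45) for one sample and checks where the smoothed loss is minimized in the binary case. The default $\varepsilon = 0.1$ is simply a value inside the typical range quoted above.

```python
import math

def smooth_labels(one_hot, eps):
    """Equation (44): move probability mass eps uniformly across the C categories."""
    c = len(one_hot)
    return [(1.0 - eps) * y + eps / c for y in one_hot]

def label_smoothing_ce(one_hot, probs, eps=0.1):
    """Equation (45) for one sample; probs is the softmax output over C categories."""
    targets = smooth_labels(one_hot, eps)
    return -sum(t * math.log(p) for t, p in zip(targets, probs))

# Binary case (C = 2, eps = 0.1): the smoothed target for the true class is 0.95.
for p in (0.9, 0.95, 0.999):
    print(p, label_smoothing_ce([0.0, 1.0], [1.0 - p, p]))
```

The loss is smallest at $p = 0.95$ and rises again as $p$ approaches 1, which is how label smoothing discourages overconfident outputs.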

Figure 26.

Comparison of the function curves of label smoothing CE and standard CE.

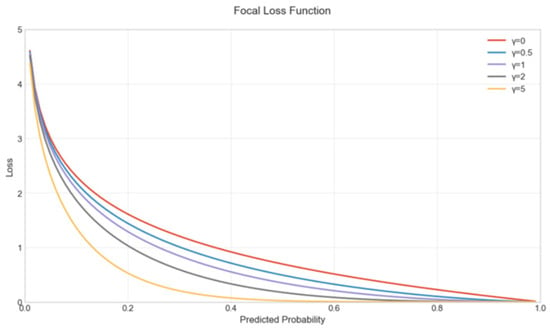

4.2.7. Focal Loss

Focal loss is designed for class imbalance, in particular the imbalance between the numbers of positive and negative samples. It down-weights easy-to-classify samples through a modulating factor so that the model focuses on learning hard-to-classify samples [63]. For binary classification, the mathematical formula of focal loss is shown in Equation (46):

$$L_{FL} = -\alpha_t (1 - p_t)^{\gamma}\log p_t \tag{46}$$

where $p_t$ is the probability predicted by the model for the correct category, that is, $p_t = p$ if $y = 1$ and $p_t = 1 - p$ otherwise; $\alpha_t$ is the class-balance factor, a hyperparameter that balances the weights of positive and negative samples ($\alpha$ for positive samples and $1 - \alpha$ for negative samples) and can be set to increase the loss contribution of positive samples when they are relatively rare; and $\gamma \geq 0$ is the focusing parameter. When $\gamma > 0$, the factor $(1 - p_t)^{\gamma}$ controls the relative weights of easy and hard samples; when $\gamma = 0$, focal loss degenerates into $\alpha$-balanced cross-entropy, which has the same form as BaCE. As $\gamma$ increases, the loss contribution of easy samples is suppressed more strongly, forcing the model to focus on hard samples.

The function curves of focal loss are shown in Figure 27 for $\gamma$ = 0, 0.5, 1, 2, and 5. As $\gamma$ increases, the loss of easy samples is compressed, the relative weight of hard samples increases, and the curve becomes steeper in the low-probability region.
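A per-sample Python sketch of Equation (46) follows; the defaults $\alpha = 0.25$ and $\gamma = 2$ are values commonly used with focal loss, and the clipping constant is an assumption.

```python
import math

def focal_loss(y, p, alpha=0.25, gamma=2.0, eps=1e-12):
    """Equation (46) for one sample, y in {0, 1}, p = predicted positive-class probability."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p                # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha    # class-balance factor
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# Ratio of focal loss (alpha = 1) to plain CE for an easy and a hard positive sample.
for p in (0.9, 0.1):
    print(p, focal_loss(1, p, alpha=1.0) / -math.log(p))
```

With $\gamma = 2$, an easy sample with $p_t = 0.9$ keeps only $(1 - 0.9)^2 = 1\%$ of its cross-entropy, while a hard sample with $p_t = 0.1$ keeps 81%, which is the re-weighting described above.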

Figure 27.

The function graph of focal loss.

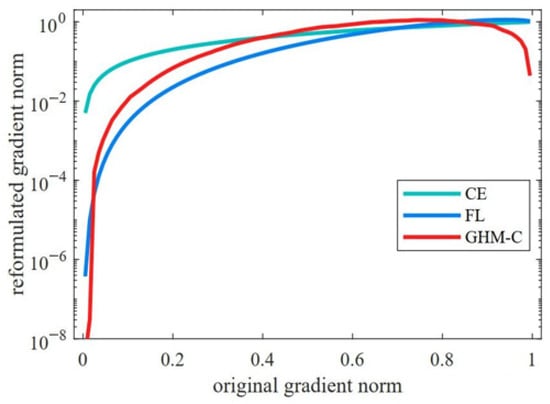

4.2.8. Gradient Harmonizing Mechanism (GHM) Loss

In one-stage object detectors, the gradient distribution over easy and hard samples is imbalanced: the large number of easy samples dominates the gradient update. Conventional CE-based losses only alleviate class imbalance by adjusting loss weights and do not fully consider the balance at the gradient level. To address this, Li et al. proposed GHM loss [64]. GHM loss dynamically adjusts the loss weight of each sample according to the gradient density. It has a classification version (GHM-C) and a regression version (GHM-R). The mathematical formula of GHM-C is shown in Equation (47):

$$L_{GHM\text{-}C} = \frac{1}{N}\sum_{i=1}^{N}\frac{N}{GD(g_i)}L_{CE}(p_i, p_i^*) = \sum_{i=1}^{N}\frac{L_{CE}(p_i, p_i^*)}{GD(g_i)} \tag{47}$$

where $g_i = |p_i - p_i^*|$ is the gradient norm, representing the deviation between the predicted probability $p_i$ and the GT $p_i^* \in \{0, 1\}$, and $GD(g)$ is the gradient density, defined as the number of samples per unit length of gradient norm around $g$; it measures how densely the gradient norms are distributed.

The gradient density is obtained by counting the samples whose gradient norms fall in an interval around $g$, as shown in Equation (48):

$$GD(g) = \frac{1}{l_{\epsilon}(g)}\sum_{k=1}^{N}\delta_{\epsilon}(g_k, g), \qquad \delta_{\epsilon}(g_k, g) = \begin{cases} 1, & g - \frac{\epsilon}{2} \leq g_k < g + \frac{\epsilon}{2} \\ 0, & \text{otherwise} \end{cases} \tag{48}$$

where $\delta_{\epsilon}$ is the indicator function that determines whether the gradient norm of sample $k$ falls in the interval, and $l_{\epsilon}(g) = \min(g + \frac{\epsilon}{2}, 1) - \max(g - \frac{\epsilon}{2}, 0)$ is the length of the interval.

Based on the gradient density, GHM automatically reduces the weights of the numerous easy samples (gradient norm close to 0) and of very hard samples that are likely outliers (gradient norm close to 1), while enhancing the relative contribution of moderately hard samples. This prevents training from being dominated by a large number of easy samples and keeps the gradient contributions balanced.

Figure 28 shows the gradient-norm adjustment of GHM-C, CE, and focal loss. The horizontal axis is the original gradient norm, that is, the gradient magnitude caused by the difference between the model's prediction and the GT; the vertical axis is the reweighted gradient norm produced by GHM-C, CE, and focal loss. Figure 28 shows that GHM-C suppresses the overly strong cumulative gradient of easy samples and down-weights outliers, which reduces the risk of fitting noise, while retaining the key gradient signals of hard samples to keep training stable.
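The following Python sketch approximates Equations (47) and (48) by splitting $[0, 1]$ into equal-width intervals and counting gradient norms per interval, instead of centering an interval on each sample. This binning, the default of 10 intervals, and the clipping constant are assumptions made for illustration.

```python
import math

def ghm_c_weights(probs, labels, bins=10):
    """Approximate beta_i = N / GD(g_i) by counting gradient norms in equal-width intervals of [0, 1]."""
    n = len(probs)
    width = 1.0 / bins                                   # interval length (epsilon in Eq. 48)
    grads = [abs(p - y) for p, y in zip(probs, labels)]  # g_i = |p_i - p_i*|
    idx = [min(int(g / width), bins - 1) for g in grads]
    counts = [0] * bins
    for k in idx:
        counts[k] += 1
    # GD(g_i) is approximated by (samples in g_i's interval) / width.
    return [n / (counts[k] / width) for k in idx]

def ghm_c_loss(probs, labels, bins=10, eps=1e-12):
    """Equation (47): density-weighted binary cross-entropy averaged over N samples."""
    weights = ghm_c_weights(probs, labels, bins)
    total = 0.0
    for w, p, y in zip(weights, probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total += w * -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)

# Eight easy positives (g = 0.05) and two harder positives (g = 0.55).
probs, labels = [0.95] * 8 + [0.45] * 2, [1] * 10
print(ghm_c_weights(probs, labels), ghm_c_loss(probs, labels))
```

Each easy sample receives weight 0.125 and each harder sample 0.5, so the two groups contribute the same total weight even though the easy group is four times larger.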

Figure 28.

The gradient norm adjustment effect using GHM-C, CE, and focal loss.

4.2.9. Class-Balanced Loss

Cui et al. proposed the theory of effective number of samples, introduced the category balance term, and dynamically adjusted the loss weights to solve the category imbalance problem in long-tail datasets [65]. The category balance term is defined in Equation (49):

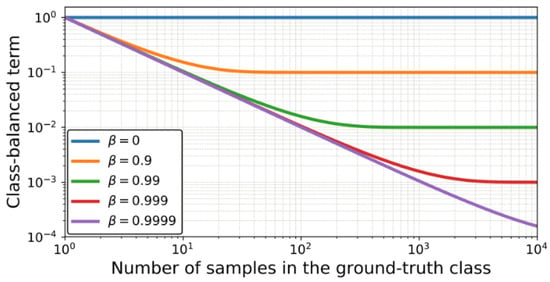

where is the number of samples in the true category, and is a hyperparameter to control the attenuation rate of data coverage. From this formula, it can be known that when , valid samples , that is, all samples are independent information. Similarly, when the valid samples , that is, all samples were completely overlapping, which is equivalent to the traditional weighting strategy. The meaning of this formula is that as the number of samples increases, the information gain brought by the new samples gradually decreases.

Figure 29 shows the relationship between the number of samples in the true category and the category balance term under different values. As can be seen from Figure 29, with the increase in , the curve begins to tilt downward. This indicates that the category balance term corresponding to the category with a large number of samples decreases, thereby reducing the weight of the large sample category when calculating the loss. In particular, when is approaching 1, the category equilibrium term of the curve approaches 1 when the sample size is very small, but as the sample size increases, the category equilibrium term drops sharply, indicating a strong inhibitory effect on categories with a large sample size.

Figure 29.

Effect of values on class-balanced term vs. sample size.

The class-balanced loss adjusts the weights inversely proportional to the number of valid samples. Its mathematical formula is shown in Equation (50):

where is the basic loss function, such as CE or focal loss.

The class-balanced loss is modeled through the effective sample size to avoid the extreme imbalance caused by simple inverse frequency weighting and is more in line with the actual coverage of the data distribution. Furthermore, the class-balanced loss can be integrated into multiple loss functions.
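The Python sketch below computes the effective number of Equation (49) and the resulting per-class weights. The optional rescaling so that the weights sum to the number of classes follows common practice and is an assumption here; Equation (50) then multiplies each sample's base loss by the weight of its class.

```python
def effective_number(n, beta):
    """E_n = (1 - beta^n) / (1 - beta), for 0 <= beta < 1."""
    return (1.0 - beta ** n) / (1.0 - beta)

def class_balanced_weights(counts, beta, normalize=True):
    """Per-class weights 1 / E_n (Eq. 49), optionally rescaled to sum to the number of classes."""
    weights = [(1.0 - beta) / (1.0 - beta ** n) for n in counts]
    if normalize:
        total = sum(weights)
        weights = [w * len(counts) / total for w in weights]
    return weights

counts = [10, 100, 1000]  # a long-tailed class distribution
for beta in (0.0, 0.9, 0.999):
    print(beta, class_balanced_weights(counts, beta))
```

With $\beta = 0$ all classes get the same weight, and as $\beta$ approaches 1 the weights approach inverse class frequency, matching the two limiting cases described above.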

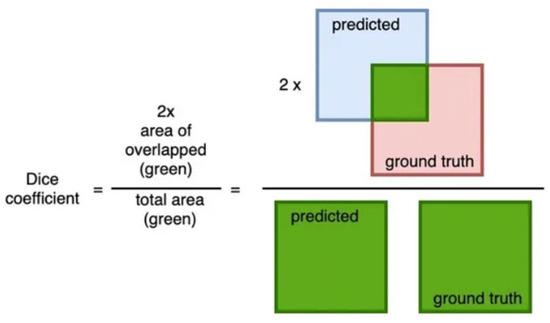

4.2.10. Dice Loss

Dice loss originates from the dice coefficient, which measures the similarity between two sets and is often used in image segmentation. For clarity, this subsection takes image segmentation as an example. In image segmentation, the dice coefficient evaluates the degree of overlap between the predicted segmentation mask and the true segmentation mask. It is defined as twice the size of the intersection of the two masks divided by the sum of their sizes [66], as shown in Equation (51):

$$Dice(A, B) = \frac{2|A \cap B|}{|A| + |B|} \tag{51}$$

where $A$ is the binary segmentation mask predicted by the model, in which 1 represents the target region and 0 the background, so that $A$ represents the predicted segmentation region; and $B$ is the binary GT segmentation mask, representing the true segmentation region. The dice coefficient ranges from 0 to 1, where 0 indicates no overlap between the two regions and 1 indicates complete overlap.

The visualization of dice coefficient is shown in Figure 30.

Figure 30.

The visualization of dice coefficient.

Dice loss is derived from this metric [67] and directly measures the overlap between the predicted region and the true region. In tasks where foreground pixels are scarce, such as lesion detection and other small-target scenarios, dice-based losses optimize this spatial overlap directly and therefore often perform better than pixel-level classification losses. The mathematical formula of dice loss is shown in Equation (52):

$$\mathcal{L}_{\mathrm{Dice}} = 1 - \frac{2\sum_{i} p_i g_i + \epsilon}{\sum_{i} p_i + \sum_{i} g_i + \epsilon} \tag{52}$$

where $p_i$ is the model's predicted probability for the $i$-th pixel, $g_i$ is the true probability value (the GT label) of the $i$-th pixel, and $\epsilon$ is a smoothing coefficient that keeps the denominator from being zero, which prevents numerical instability and also alleviates vanishing gradients for extreme predictions. $\sum_i p_i g_i$ is the intersection of the predicted region and the GT region, and $\sum_i p_i + \sum_i g_i$ is the sum of the sizes of the predicted region and the GT region.
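A minimal sketch of the hard dice coefficient on flattened binary masks, $2|P \cap G|/(|P| + |G|)$, and of the smoothed soft dice loss $1 - (2\sum_i p_i g_i + \epsilon)/(\sum_i p_i + \sum_i g_i + \epsilon)$ follows. It is written in plain Python so that each sum is visible; the function names, the default $\epsilon = 10^{-6}$, and the convention of returning 1.0 when both masks are empty are assumptions for illustration.

```python
def dice_coefficient(pred_mask, gt_mask):
    """Hard dice on flattened binary masks: 2|P ∩ G| / (|P| + |G|)."""
    intersection = sum(p * g for p, g in zip(pred_mask, gt_mask))
    total = sum(pred_mask) + sum(gt_mask)
    # Assumed convention: two empty masks count as a perfect match.
    return 2.0 * intersection / total if total > 0 else 1.0


def soft_dice_loss(probs, targets, eps=1e-6):
    """Soft dice loss on flattened predictions p_i in [0, 1] and GT g_i in {0, 1}."""
    intersection = sum(p * g for p, g in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


pred = [1, 1, 0, 0]   # predicted mask: two foreground pixels
gt = [1, 0, 0, 0]     # GT mask: one foreground pixel
print(dice_coefficient(pred, gt))                # overlap 1, sizes 2 + 1
print(soft_dice_loss([0.9, 0.6, 0.1, 0.0], gt))  # soft probabilities instead of a mask
```

With `eps=0` and binary predictions, the soft dice loss reduces to one minus the hard dice coefficient; the smoothing term mainly matters when both sums are close to zero.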

For binary classification problems, the dice coefficient is mathematically equivalent to the F1-score and can be rewritten as Equation (53):

$$\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \tag{53}$$

where TP (true positives) is the number of positive examples correctly identified by the model, FP (false positives) is the number of negative examples that the model mistakenly classifies as positive, TN (true negatives) is the number of negative examples correctly identified by the model, and FN (false negatives) is the number of positive examples that the model misses. Together, these four counts make up the confusion matrix; note that TN does not appear in Equation (53).
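The equivalence between the dice coefficient and the F1-score can be checked numerically from the confusion-matrix counts. The sketch below assumes binary labels in {0, 1} and at least one true positive (otherwise precision and recall are zero or undefined); the helper names are hypothetical.

```python
def confusion_counts(pred, gt):
    """Return (TP, FP, FN, TN) for binary predictions and labels in {0, 1}."""
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)
    tn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 0)
    return tp, fp, fn, tn


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall (assumes tp > 0)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def dice_from_counts(tp, fp, fn):
    """Dice written with confusion-matrix counts: 2TP / (2TP + FP + FN)."""
    return 2 * tp / (2 * tp + fp + fn)


pred = [1, 1, 0, 1, 0, 0]
gt = [1, 0, 0, 1, 1, 0]
tp, fp, fn, tn = confusion_counts(pred, gt)
print(tp, fp, fn, tn)
print(f1_score(tp, fp, fn), dice_from_counts(tp, fp, fn))
```

TN is computed but used by neither score, which is why both metrics are insensitive to a large, easily classified background.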

The F1-score balances precision and recall and is well suited to class-imbalanced problems. Likewise, the dice loss measures overlap as twice the intersection of the two sets divided by the sum of their sizes, so it also balances precision and recall.

4.2.11. Log-Cosh Dice Loss

Dice loss is non-convex, which may make optimization unstable when training on imbalanced datasets. Therefore, Jadon et al. proposed the log-cosh dice loss [24], which improves smoothness and robustness by wrapping the dice loss in the log-cosh function. The mathematical formula of log-cosh dice loss is shown in Equation (54):

$$\mathcal{L}_{\mathrm{lcDice}} = \log\left(\cosh\left(\mathcal{L}_{\mathrm{Dice}}\right)\right) \tag{54}$$

where $\cosh(x) = \frac{e^{x} + e^{-x}}{2}$ is the hyperbolic cosine function. The derivative of the log-cosh function is $\tanh(x)$, a smooth function with range $(-1, 1)$.

Introducing the log-cosh function smooths the loss when the predictions are far from the GT, avoids sharp fluctuations of the dice loss in extreme cases, and improves robustness to outliers and noise. Because of this smoothness, the log-cosh dice loss is also well suited to gradient-based optimizers such as gradient descent, which can improve the convergence speed and stability of the model.
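A minimal sketch of the log-cosh dice loss, assuming the form $\log(\cosh(\mathcal{L}_{\mathrm{Dice}}))$ applied to a smoothed soft dice loss; the function names and the default smoothing value are illustrative assumptions. Because $\log\cosh(x) \approx x^2/2$ for small $x$, the wrapped loss is flatter than the raw dice loss near a perfect prediction.

```python
import math


def soft_dice_loss(probs, targets, eps=1e-6):
    """Smoothed soft dice loss on flattened predictions and binary GT."""
    intersection = sum(p * g for p, g in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def log_cosh_dice_loss(probs, targets, eps=1e-6):
    """Wrap the dice loss in log(cosh(.)); its derivative w.r.t. the dice loss is tanh."""
    return math.log(math.cosh(soft_dice_loss(probs, targets, eps)))


gt = [1, 1, 0, 0]
# A good, an uninformative, and a badly wrong prediction.
for probs in ([0.9, 0.8, 0.1, 0.0], [0.5, 0.5, 0.5, 0.5], [0.0, 0.1, 0.9, 1.0]):
    print(round(soft_dice_loss(probs, gt), 4), round(log_cosh_dice_loss(probs, gt), 4))
```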

4.2.12. Generalized Dice Loss

Although the dice loss alleviates class imbalance to some extent, it is not suitable for severe class imbalance. Generalized dice loss extends the traditional dice loss to address this shortcoming: Sudre et al. [68] introduced per-class weights that adjust the contribution of each category, thereby handling imbalanced datasets more effectively. The generalized dice loss is shown in Equation (55):

$$\mathcal{L}_{\mathrm{GDL}} = 1 - 2\,\frac{\sum_{l} w_l \sum_{n} g_{ln}\, p_{ln}}{\sum_{l} w_l \sum_{n} \left(g_{ln} + p_{ln}\right)} \tag{55}$$

where $l$ indexes the classes, $n$ indexes the pixels, $p_{ln}$ and $g_{ln}$ are the predicted probability and the GT value of pixel $n$ for class $l$, and $w_l$ is the weight of class $l$, usually defined as $w_l = 1 / \left(\sum_{n} g_{ln}\right)^2$.
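A minimal sketch of the generalized dice loss with weights $w_l = 1/(\sum_n g_{ln})^2$, with predictions and one-hot GT stored per class as lists of pixel values. It assumes every class has at least one GT pixel (an absent class would get an infinite weight and would need special handling), and the small constant added to the denominator is our own safeguard; the function name is hypothetical.

```python
def generalized_dice_loss(probs, targets, eps=1e-6):
    """Generalized dice loss.

    probs, targets: lists indexed [class][pixel]; targets are one-hot GT values.
    Each class is weighted by the inverse square of its GT volume, so small
    structures are not swamped by large ones.
    """
    numerator = 0.0
    denominator = 0.0
    for p_l, g_l in zip(probs, targets):
        volume = sum(g_l)
        if volume == 0:
            raise ValueError("every class needs at least one GT pixel in this sketch")
        w_l = 1.0 / volume ** 2
        numerator += w_l * sum(p * g for p, g in zip(p_l, g_l))
        denominator += w_l * sum(p + g for p, g in zip(p_l, g_l))
    return 1.0 - 2.0 * numerator / (denominator + eps)


# Two classes over 8 pixels: a large background and a 2-pixel foreground.
targets = [[1, 1, 1, 1, 1, 1, 0, 0],
           [0, 0, 0, 0, 0, 0, 1, 1]]
# A prediction that labels everything as background misses the small class entirely.
all_background = [[1, 1, 1, 1, 1, 1, 1, 1],
                  [0, 0, 0, 0, 0, 0, 0, 0]]
print(generalized_dice_loss(targets, targets))
print(generalized_dice_loss(all_background, targets))
```

For the all-background prediction the weighted loss is about 0.625, whereas setting every $w_l = 1$ would give $1 - 12/16 = 0.25$; the inverse-volume weights make missing the small class far more costly.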

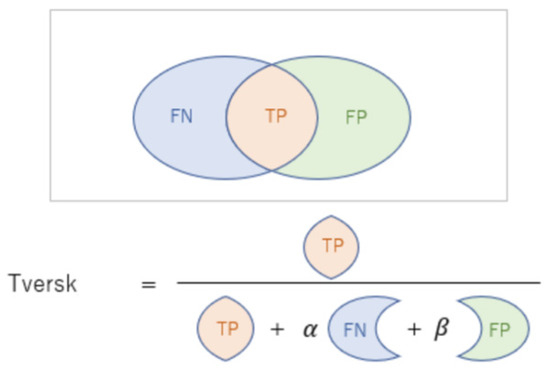

4.2.13. Tversky Loss

Tversky loss is designed for class imbalance and performs particularly well in image segmentation. It allows flexible control over different misclassification costs by adjusting the penalty weights of FP and FN [69]. It is based on the Tversky index (TI), whose basic form is shown in Equation (56):

$$TI(P, G) = \frac{|P \cap G|}{|P \cap G| + \alpha\,|P \setminus G| + \beta\,|G \setminus P|} \tag{56}$$

where $P$ is the predicted mask of the model and $G$ is the GT mask; $|P \cap G|$ is TP, the number of pixels where the predicted region overlaps the GT region; $|P \setminus G|$ is FP, the number of pixels predicted as positive but actually negative; $|G \setminus P|$ is FN, the number of pixels predicted as negative but actually positive; and $\alpha$ and $\beta$ are hyperparameters that weight FP and FN, respectively. An equivalent expression is shown in Figure 31.

Figure 31.

Another definition of the Tversky index.

The Tversky loss is defined in Equation (57):

$$\mathcal{L}_{\mathrm{Tversky}} = 1 - TI(P, G) \tag{57}$$

When the FP weight $\alpha$ and the FN weight $\beta$ both equal 0.5, the Tversky loss is equivalent to the dice loss. The loss is applicable when false positives and false negatives need to be balanced. For highly imbalanced datasets, $\alpha$ and $\beta$ are tuned to prioritize reducing one type of error: if FN has the greater impact, set $\beta > \alpha$ to increase the penalty on false negatives; if FP has the greater impact, set $\alpha > \beta$ to increase the penalty on false positives.
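A minimal sketch of the Tversky index and Tversky loss on soft predictions, assuming $\alpha$ weights FP and $\beta$ weights FN, with TP, FP, and FN computed as the soft counts $\sum_i p_i g_i$, $\sum_i p_i(1-g_i)$, and $\sum_i (1-p_i) g_i$. The helper names and defaults are illustrative.

```python
def tversky_index(probs, targets, alpha=0.5, beta=0.5, eps=1e-6):
    """Soft Tversky index: TP / (TP + alpha*FP + beta*FN), smoothed by eps."""
    tp = sum(p * g for p, g in zip(probs, targets))
    fp = sum(p * (1 - g) for p, g in zip(probs, targets))
    fn = sum((1 - p) * g for p, g in zip(probs, targets))
    return (tp + eps) / (tp + alpha * fp + beta * fn + eps)


def tversky_loss(probs, targets, alpha=0.5, beta=0.5, eps=1e-6):
    """Tversky loss: 1 - TI."""
    return 1.0 - tversky_index(probs, targets, alpha, beta, eps)


gt = [1, 1, 0, 0]
missed = [1, 0, 0, 0]   # one FN, no FP
print(tversky_loss(missed, gt, alpha=0.3, beta=0.7))  # FN weighted more heavily: larger loss
print(tversky_loss(missed, gt, alpha=0.7, beta=0.3))
```

With `alpha = beta = 0.5` and `eps = 0`, the index equals $2TP/(2TP + FP + FN)$, i.e., the dice coefficient, which matches the equivalence stated above.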

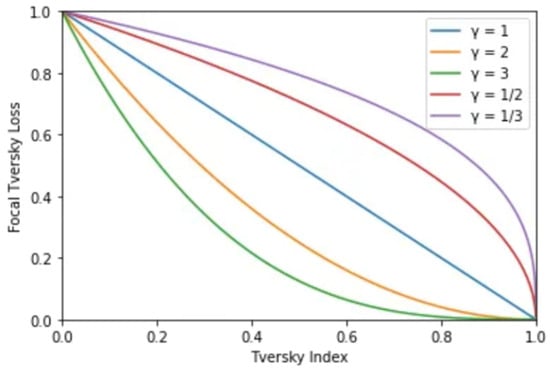

4.2.14. Focal Tversky Loss

Focal Tversky loss combines Tversky loss with the idea of focal loss [70] to strengthen the model's focus on small targets and hard samples. The mathematical formula of focal Tversky loss is shown in Equation (58):

$$\mathcal{L}_{\mathrm{FT}} = \left(1 - TI\right)^{\gamma} \tag{58}$$

where $TI$ is the Tversky index and $\gamma$ is the modulation parameter that controls the degree of focus on hard samples. Larger values of $\gamma$ make the model pay more attention to samples with a lower Tversky index, improving recognition of hard and under-represented samples.

Figure 32 shows the influence of different $\gamma$ values on the focal Tversky loss. As the Tversky index increases, the overlap between the prediction and the GT increases and the loss decreases. The larger $\gamma$ is, the faster the curve drops, meaning that samples with a high Tversky index (i.e., better predictions) are suppressed more strongly, so that training concentrates on hard samples.

Figure 32.

Focal Tversky loss versus the Tversky index for different values of $\gamma$.
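A minimal sketch of the focal Tversky loss, assuming the form $(1 - TI)^{\gamma}$, which matches the behavior described for Figure 32 (a larger $\gamma$ suppresses well-predicted samples more strongly). Exponent conventions differ between sources, so the form, the helper names, and the defaults ($\alpha = 0.3$ on FP, $\beta = 0.7$ on FN, $\gamma = 2$) are assumptions.

```python
def tversky_index(probs, targets, alpha=0.3, beta=0.7, eps=1e-6):
    """Soft Tversky index with alpha weighting FP and beta weighting FN."""
    tp = sum(p * g for p, g in zip(probs, targets))
    fp = sum(p * (1 - g) for p, g in zip(probs, targets))
    fn = sum((1 - p) * g for p, g in zip(probs, targets))
    return (tp + eps) / (tp + alpha * fp + beta * fn + eps)


def focal_tversky_from_index(ti, gamma=2.0):
    """(1 - TI) ** gamma: gamma > 1 shrinks the loss of well-predicted samples."""
    return (1.0 - ti) ** gamma


def focal_tversky_loss(probs, targets, alpha=0.3, beta=0.7, gamma=2.0, eps=1e-6):
    return focal_tversky_from_index(tversky_index(probs, targets, alpha, beta, eps), gamma)


# Loss of an easy sample (TI = 0.9) and a hard sample (TI = 0.3) as gamma grows.
for gamma in (1.0, 2.0, 3.0):
    easy = focal_tversky_from_index(0.9, gamma)
    hard = focal_tversky_from_index(0.3, gamma)
    print(gamma, round(easy, 4), round(hard, 4), round(hard / easy, 1))
```

The hard-to-easy loss ratio grows from roughly 7 to 49 to 343 as $\gamma$ goes from 1 to 3, which is the stronger focus on hard samples described above.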

4.2.15. Sensitivity Specificity Loss

Sensitivity-specificity loss addresses class imbalance by balancing the penalty weights of FN and FP [71]. The core idea is to combine sensitivity (also known as recall) and specificity, and to adjust, through a weighting mechanism, how much the model cares about missed detections versus false detections. In image segmentation, sensitivity is defined in Equation (59):

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{59}$$

Specificity is defined in Equation (60):

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{60}$$

The sensitivity-specificity loss is defined in Equation (61):

$$\mathcal{L}_{\mathrm{SS}} = w\,\frac{\sum_{i} (g_i - p_i)^2\, g_i}{\sum_{i} g_i + \epsilon} + (1 - w)\,\frac{\sum_{i} (g_i - p_i)^2\,(1 - g_i)}{\sum_{i} (1 - g_i) + \epsilon} \tag{61}$$

where $p_i$ and $g_i$ are the predicted probability and the GT value of the $i$-th pixel, $w$ is the weight parameter that controls the trade-off between sensitivity and specificity, and $\epsilon$ is the smoothing coefficient. When $w$ approaches 0, the model focuses on improving specificity; when $w$ approaches 1, it focuses on improving sensitivity.
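A minimal sketch of the sensitivity-specificity loss, assuming the squared-error form in which the first term averages $(g_i - p_i)^2$ over GT foreground pixels (penalizing missed detections) and the second averages it over GT background pixels (penalizing false detections), mixed by $w$. The function name and defaults are illustrative.

```python
def sensitivity_specificity_loss(probs, targets, w=0.5, eps=1e-6):
    """w * (foreground squared error) + (1 - w) * (background squared error)."""
    sq_err = [(g - p) ** 2 for p, g in zip(probs, targets)]
    # Sensitivity term: mean squared error over GT foreground pixels (penalizes FN).
    sens_term = sum(e * g for e, g in zip(sq_err, targets)) / (sum(targets) + eps)
    # Specificity term: mean squared error over GT background pixels (penalizes FP).
    background = [1 - g for g in targets]
    spec_term = sum(e * b for e, b in zip(sq_err, background)) / (sum(background) + eps)
    return w * sens_term + (1 - w) * spec_term


gt = [1, 1, 0, 0]
missed = [1.0, 0.0, 0.0, 0.0]        # one foreground pixel missed (FN)
false_alarm = [1.0, 1.0, 1.0, 0.0]   # one background pixel flagged (FP)
for w in (0.1, 0.5, 0.9):
    print(w, round(sensitivity_specificity_loss(missed, gt, w), 4),
          round(sensitivity_specificity_loss(false_alarm, gt, w), 4))
```

As $w$ moves toward 1, the missed detection dominates the loss; as $w$ moves toward 0, the false alarm does.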

4.2.16. Poly Loss

Poly loss is a loss function framework designed from the perspective of polynomial expansion and inspired by the Taylor expansion [72]. By representing the loss as a linear combination of polynomial bases, the framework allows the importance of each basis to be adjusted flexibly, so that it can adapt to the requirements of different tasks and datasets. The traditional CE and focal loss are special cases of poly loss, which provides a more general perspective for understanding and redesigning these losses. The general form of poly loss is expressed in Equation (62):

$$\mathcal{L}_{\mathrm{Poly}} = \sum_{j=1}^{\infty} \alpha_j \left(1 - P_t\right)^{j} \tag{62}$$

where $P_t$ is the predicted probability of the true category of a single sample, and $\alpha_j$ is the coefficient of the $j$-th polynomial term, controlling the contribution of $(1 - P_t)^j$. A larger $\alpha_j$ makes the loss more sensitive to small deviations of $P_t$. For example, CE corresponds to $\alpha_j = 1/j$, since $-\log P_t = \sum_{j=1}^{\infty} (1 - P_t)^j / j$.

The simplest variant of poly loss is the Poly-1 loss, expressed in Equation (63):

$$\mathcal{L}_{\mathrm{Poly1}} = -\log(P_t) + \epsilon_1 \left(1 - P_t\right) \tag{63}$$

where $-\log(P_t)$ is the basic cross-entropy loss and $\epsilon_1$ is a hyperparameter. Setting $\epsilon_1 = 0$ recovers the basic cross-entropy loss. When $\epsilon_1 > 0$, the term $\epsilon_1(1 - P_t)$ imposes a greater penalty on confident incorrect predictions, which can alleviate overfitting.
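The relationship between CE, Poly-1, and the general poly loss can be checked numerically. This sketch truncates the infinite series after a fixed number of terms (an assumption needed for computation) and uses the expansion $-\log P_t = \sum_{j \ge 1} (1 - P_t)^j / j$, so CE corresponds to $\alpha_j = 1/j$ and Poly-1 adds $\epsilon_1$ to the first coefficient. The function names and the truncation length of 200 terms are hypothetical.

```python
import math


def poly_loss(pt, alphas):
    """Truncated poly loss: sum over j of alphas[j-1] * (1 - pt) ** j."""
    return sum(a * (1.0 - pt) ** j for j, a in enumerate(alphas, start=1))


def poly1_loss(pt, epsilon=1.0):
    """Poly-1: cross-entropy plus epsilon * (1 - pt)."""
    return -math.log(pt) + epsilon * (1.0 - pt)


pt = 0.6                                              # predicted probability of the true class
ce_alphas = [1.0 / j for j in range(1, 201)]          # CE coefficients alpha_j = 1/j, truncated
poly1_alphas = [ce_alphas[0] + 1.0] + ce_alphas[1:]   # epsilon_1 = 1 added to the first term

print(-math.log(pt), poly_loss(pt, ce_alphas))        # the series approximates CE
print(poly1_loss(pt, 1.0), poly_loss(pt, poly1_alphas))
```

Only the first coefficient differs between the two coefficient lists, which is exactly the "vertical" adjustment of a single polynomial term that Poly-1 makes.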

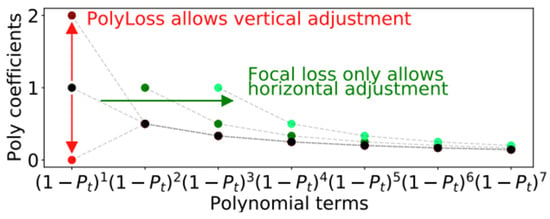

Figure 33 illustrates how poly loss and focal loss differ in loss design. The black dotted line shows the trend of the polynomial coefficients. Within the poly loss framework, focal loss can only shift the polynomial coefficients horizontally (green arrow), whereas poly loss is more general: it also allows the coefficient of each polynomial term to be adjusted vertically (red arrow).

Figure 33.

Polynomial coefficients of different losses in the bases of $(1 - P_t)^j$.

4.2.17. Kullback–Leibler Divergence Loss

Kullback–Leibler divergence (KL divergence) loss measures the difference between two probability distributions and is often applied in generative models, such as generative adversarial networks (GANs) and variational autoencoders (VAEs), as well as in reinforcement learning [73]. For example, in VAEs, the KL divergence term penalizes the distance between the latent distribution produced by the encoder and the prior distribution. For discrete distributions, the KL divergence loss is defined in Equation (64):

$$D_{\mathrm{KL}}(P \,\|\, Q) = \sum_{i} P(i) \log \frac{P(i)}{Q(i)} \tag{64}$$

where $P$ is the true distribution and $Q$ is the distribution predicted by the model, that is, the distribution to be optimized.

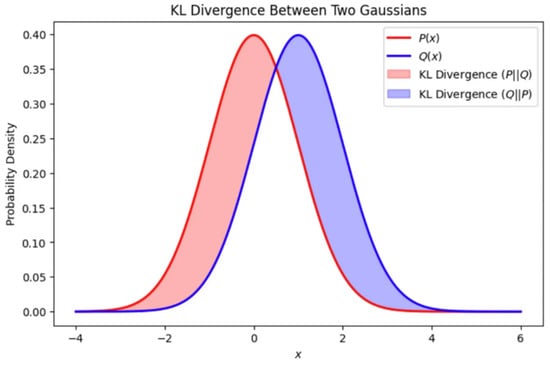

Note that the KL divergence is not symmetric: in general, $D_{\mathrm{KL}}(P \,\|\, Q) \neq D_{\mathrm{KL}}(Q \,\|\, P)$. Therefore, in practical applications, which distribution plays the role of the true distribution must be decided based on the specific problem. Figure 34 shows the KL divergence between two Gaussian distributions; the horizontal axis represents the values of the random variable and the vertical axis represents the probability density. The red and blue curves represent the two distributions, and the pink and blue shaded areas illustrate the two directions of the divergence. The asymmetry of the KL divergence can be seen from Figure 34.
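The asymmetry can be checked on two small discrete distributions with a direct implementation of the discrete definition. This is a minimal sketch; it assumes $Q(i) > 0$ wherever $P(i) > 0$ (otherwise the divergence is infinite) and uses the convention that terms with $P(i) = 0$ contribute zero. The function name and the example distributions are illustrative.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) = sum_i p_i * log(p_i / q_i); terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.8, 0.15, 0.05]   # "true" distribution
q = [0.4, 0.4, 0.2]     # "predicted" distribution
print(kl_divergence(p, q), kl_divergence(q, p))  # the two directions differ
```

In PyTorch, `torch.nn.functional.kl_div(input, target)` expects `input` to contain log-probabilities of the predicted distribution and `target` to contain the true distribution, so the argument order fixes which direction of the divergence is computed.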

Figure 34.

Visualization of KL divergence asymmetry between Gaussian distributions.

5. Metric Loss

Metric learning maps the input data into an embedding space by learning a customized distance metric, so that similar samples are as close as possible and dissimilar samples are as far apart as possible in this space. Correspondingly, metric loss is the loss used in metric learning: it guides the model to learn a high-quality, generalizable embedding space or feature representation in which the feature vectors of similar samples are highly similar (i.e., the distance between them is small) and those of dissimilar samples differ significantly (i.e., the distance between them is large). Such losses usually serve similarity-based tasks such as face verification, image retrieval, and anomaly detection [74,75,76]. The final prediction is usually made by computing the similarity between feature vectors and comparing it with a threshold. This similarity can be computed directly from the distance between the vectors or from the angle between them, the latter commonly measured by cosine similarity. According to the similarity measure used in the embedding space, losses based on feature embeddings can be divided into two categories: Euclidean distance losses and angular margin losses.

5.1. Euclidean Distance Loss

Losses based on Euclidean distance are a metric learning approach [75] that directly takes the geometric distance in the embedding space as the optimization target. They decrease the distance between similar samples and increase the distance between dissimilar samples in the embedding space, thereby driving the training and parameter adjustment of the model. The Euclidean distance is given in Equation (65):

$$d(x_i, x_j) = \left\| x_i - x_j \right\|_2 = \sqrt{\sum_{k} \left(x_{ik} - x_{jk}\right)^2} \tag{65}$$

where $x_i$ and $x_j$ are the feature vectors of two samples in the embedding space and $k$ indexes their components.

Commonly used Euclidean distance losses include contrastive loss, triplet loss, and center loss.

5.1.1. Contrastive Loss

The core idea of contrastive loss is to enhance the discriminative ability of the model by optimizing the distances between samples. Specifically, contrastive loss pulls positive pairs (samples of the same category) as close together as possible in the embedding space and pushes negative pairs (samples of different categories) as far apart as possible [18,76,77]. The mathematical formula of contrastive loss is shown in Equation (66):

$$\mathcal{L}_{\mathrm{con}} = \frac{1}{N} \sum_{n=1}^{N} \left[ y_n\, d_n^2 + \left(1 - y_n\right) \max\left(0,\, m - d_n\right)^2 \right] \tag{66}$$

where $N$ is the number of sample pairs, $d_n$ is the Euclidean distance between the two samples $(x_i, x_j)$ of the $n$-th pair, and $y_n \in \{0, 1\}$ is a label indicating whether the pair belongs to the same category: $y_n = 1$ if it does and $y_n = 0$ otherwise. $m > 0$ is the margin. For a positive pair, the loss drives the Euclidean distance to be as small as possible; for a negative pair, it drives the Euclidean distance to exceed the margin.

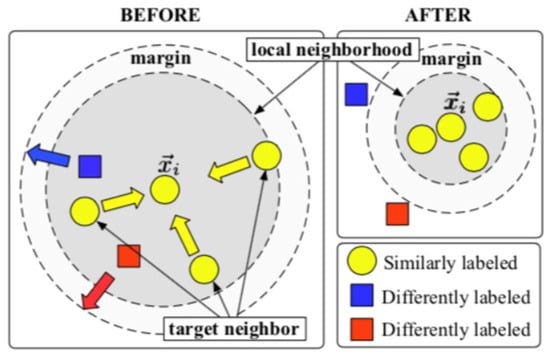

Contrastive loss is trained on pairwise inputs. For example, in face recognition, the model receives two images at a time. When the two images come from the same person, the pair is labeled $y = 1$; otherwise, it is labeled $y = 0$. With $d$ denoting the Euclidean distance between the pair and $m$ the margin, a pair with $y = 1$ contributes $d^2$ to the loss, and a pair with $y = 0$ contributes $\max(0, m - d)^2$. On the one hand, the smaller the Euclidean distance between samples of the same person in the embedding space, the smaller the loss, which keeps samples from the same person similar. On the other hand, the larger the Euclidean distance between samples of different people (up to the margin $m$), the smaller the loss, which keeps samples from different people distinct. In particular, only negative pairs whose distance falls within the margin produce a loss, which pushes those pairs apart; negative pairs already farther apart than $m$ contribute nothing. Contrastive loss therefore both measures how well pairs match and effectively trains the feature extraction model. Figure 35 shows the effect of contrastive loss: similar samples are pulled closer and dissimilar samples are pushed farther apart.

Figure 35.

The effect of the contrastive loss.
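A minimal sketch of the pairwise contrastive loss, assuming the averaged form $\frac{1}{N}\sum_n [y_n d_n^2 + (1 - y_n)\max(0, m - d_n)^2]$ with $y_n = 1$ for same-identity pairs and the Euclidean distance of Equation (65). Some formulations add a factor of 1/2; the helper names and the default margin are illustrative.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(pairs, labels, margin=1.0):
    """Mean over pairs of y*d^2 + (1-y)*max(0, margin-d)^2 (y=1: same identity)."""
    total = 0.0
    for (a, b), y in zip(pairs, labels):
        d = euclidean(a, b)
        # Positive pairs are pulled together; negative pairs inside the margin are pushed apart.
        total += y * d ** 2 + (1 - y) * max(0.0, margin - d) ** 2
    return total / len(pairs)


pairs = [((0.0, 0.0), (0.1, 0.0)),   # same identity, close together
         ((0.0, 0.0), (0.3, 0.4)),   # different identities, inside the margin
         ((0.0, 0.0), (3.0, 4.0))]   # different identities, beyond the margin
labels = [1, 0, 0]
print(contrastive_loss(pairs, labels, margin=1.0))
```

Only the second pair is penalized as a negative pair, because the third is already farther apart than the margin.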

A classic application of contrastive loss is the DeepID series of networks. DeepID2 [18] uses softmax loss to enlarge inter-class differences and introduces contrastive loss to reduce intra-class differences within the same identity. DeepID2+ [76] builds on DeepID2 by increasing the dimension of the hidden representations and adding supervision to the early convolutional layers, and DeepID3 [78] further adopts architectures based on VGGNet and GoogLeNet.

However, in contrastive loss, the margin parameter is often difficult to select. Furthermore, because negative pairs vastly outnumber positive pairs, selecting appropriate negative pairs is also a research challenge.

5.1.2. Triplet Loss

Unlike contrastive loss, which considers the absolute distances of matched and unmatched pairs, triplet loss considers the relative difference between the distance of a matched pair and that of an unmatched pair. With Google's proposal of FaceNet [79], triplet loss [80,81] was introduced into face recognition. The mathematical formula of triplet loss is shown in Equation (67):