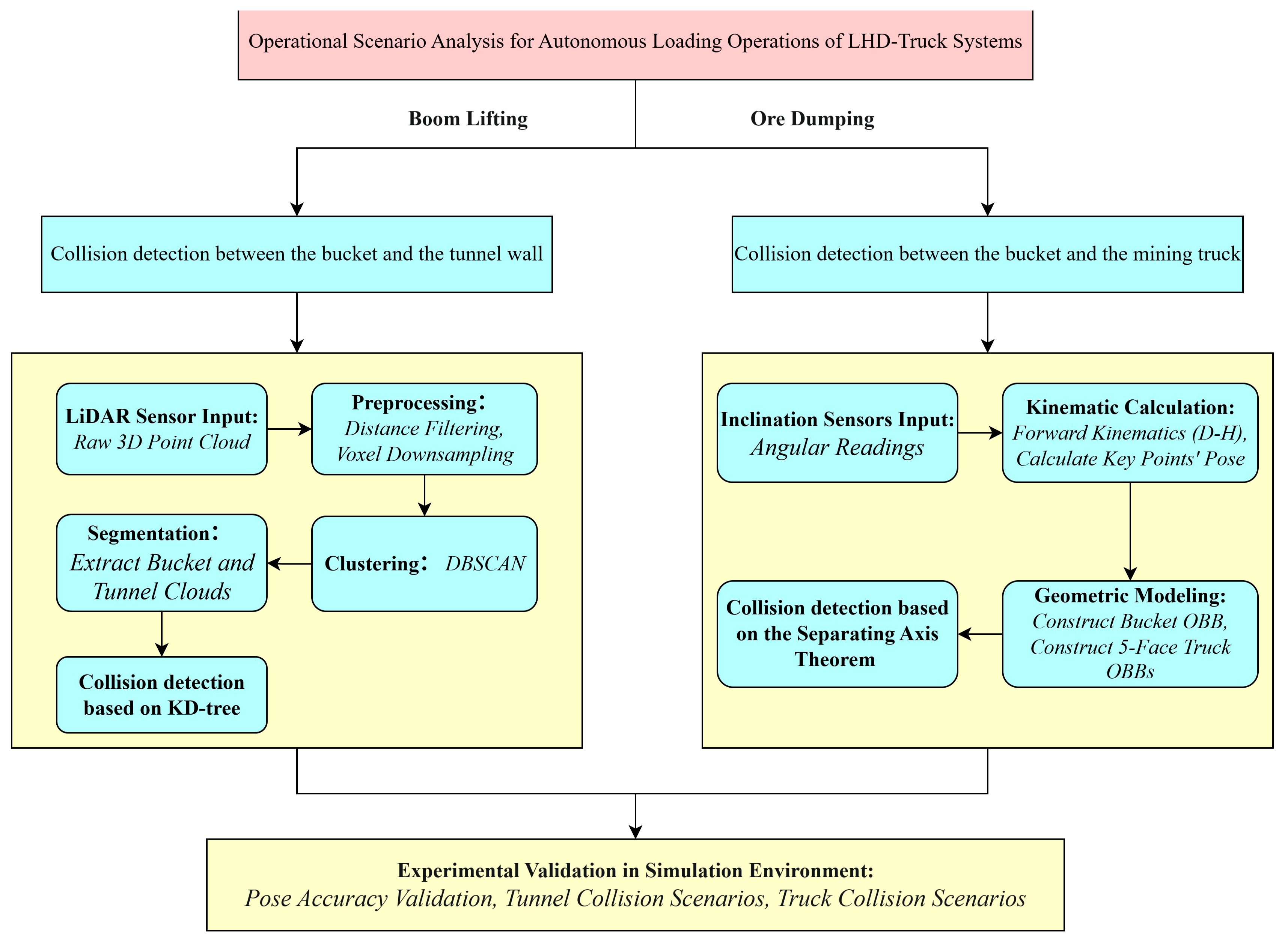

Collision Detection Algorithms for Autonomous Loading Operations of LHD-Truck Systems in Unstructured Underground Mining Environments

Abstract

1. Introduction

2. Materials and Methods

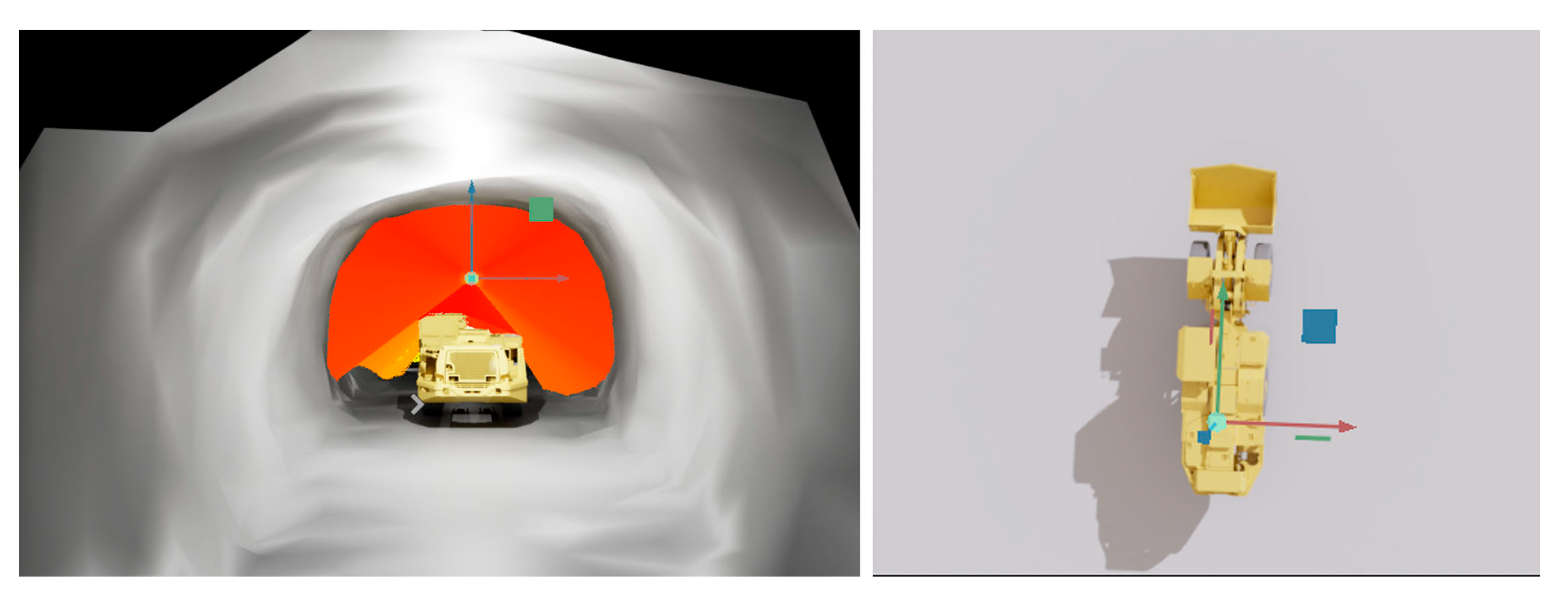

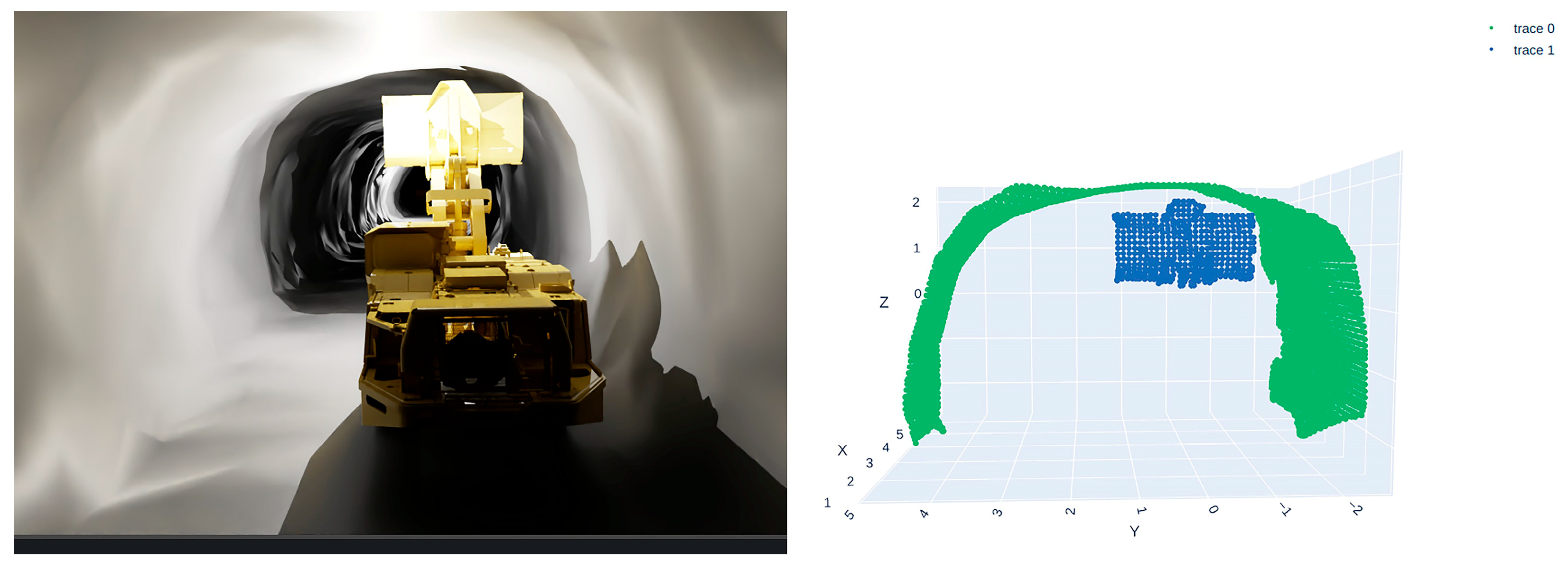

2.1. Construction of a Simulation Environment for Ore Loading Operations in Underground Metal Mines

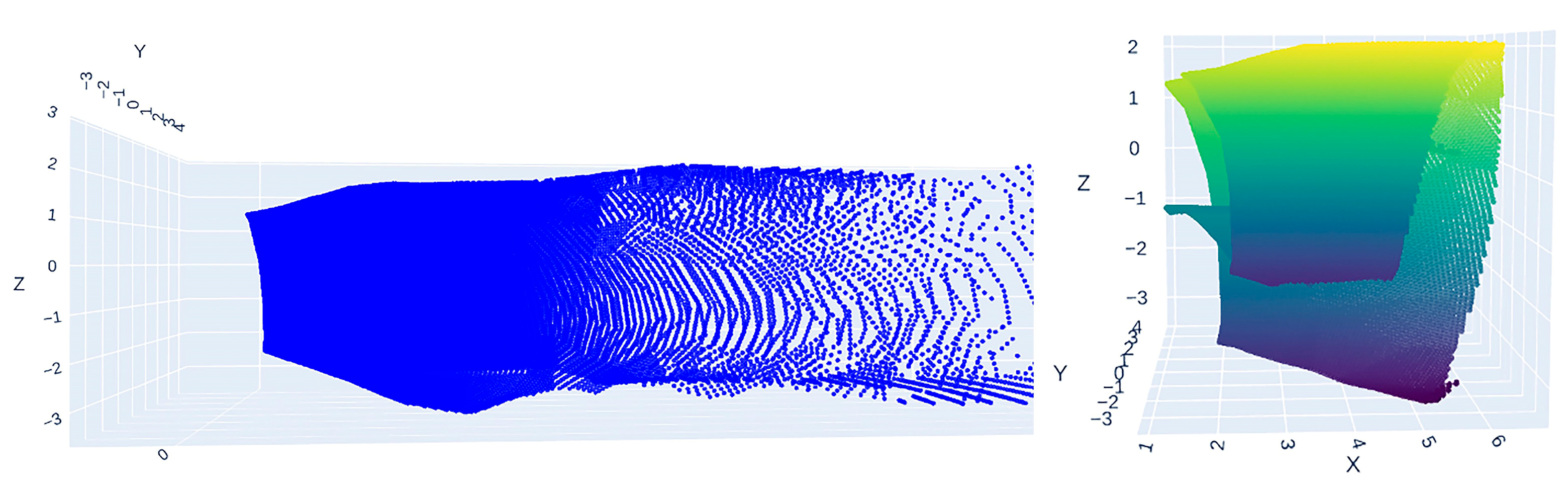

2.2. LiDAR-Based Collision Detection Between the Bucket and the Tunnel

2.2.1. LiDAR Installation Position and Data Acquisition

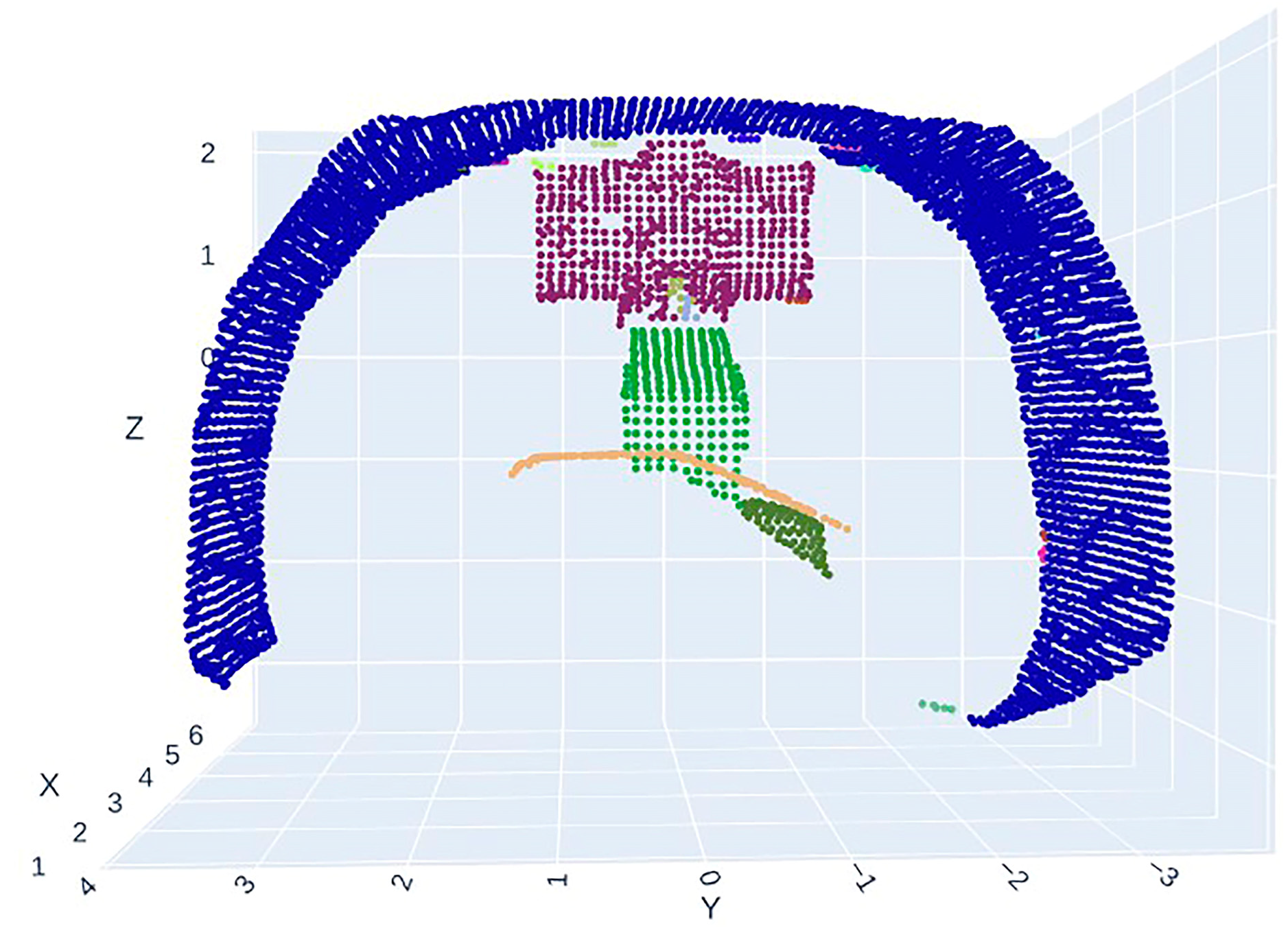

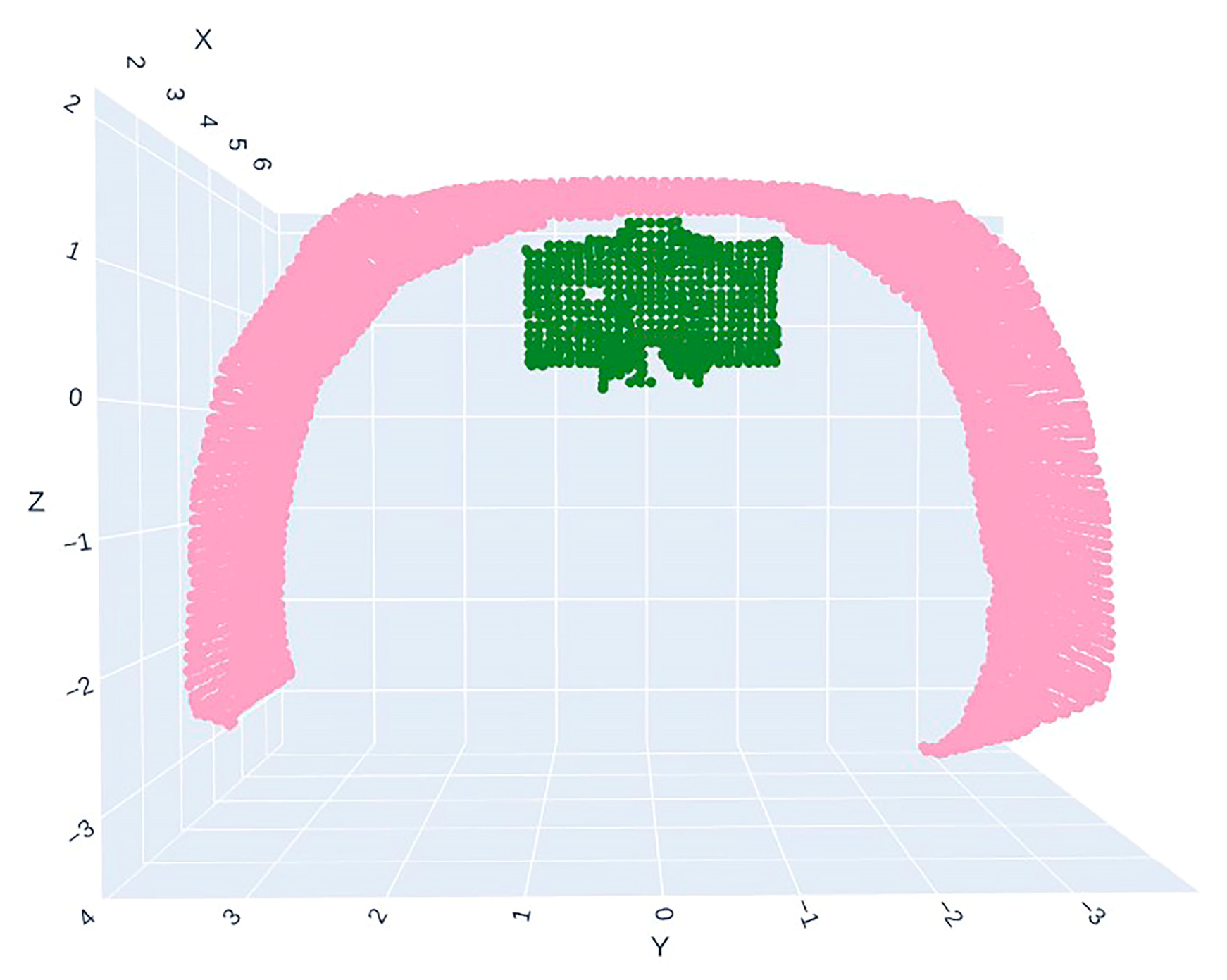

2.2.2. Point Cloud Preprocessing

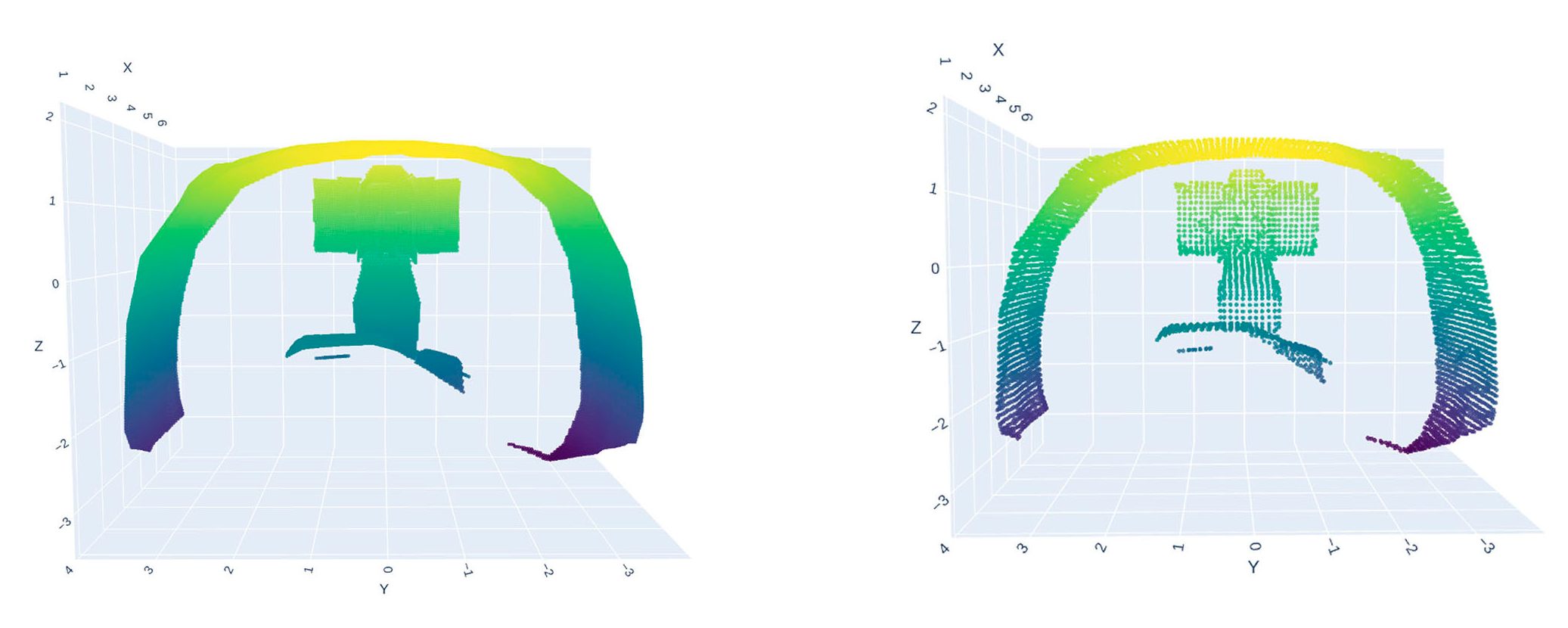

2.2.3. Point Cloud Clustering and Segmentation

1. Core point: A point is a core point if its $\varepsilon$-neighborhood (i.e., all points within radius $\varepsilon$) contains at least $MinPts$ points. In other words, the local density around the point is greater than or equal to $MinPts$;
2. Border point: A point that lies within the $\varepsilon$-neighborhood of a core point but is not itself a core point. Its own neighborhood contains fewer than $MinPts$ points;
3. Noise point: A point that is neither a core point nor a border point. It does not belong to any cluster and is treated as an outlier;
4. Neighborhood: Given a point $p$, its $\varepsilon$-neighborhood $N_\varepsilon(p)$ is the set of all points within distance $\varepsilon$ of $p$.
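The four definitions above translate almost directly into code. The following is a minimal sketch, not the authors' implementation. It assumes points are 3-D tuples and finds each $\varepsilon$-neighborhood by brute force (O(n²)). That is fine for illustration, but a full LiDAR frame would use a spatial index instead. The function names are hypothetical. As in the original DBSCAN formulation, a point's neighborhood includes the point itself. `eps` and `min_pts` correspond to the parameters of the same name in Algorithm 2.

```python
import math
from collections import deque


def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i] (its eps-neighborhood, including i)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise.

    Brute-force neighborhood search; for illustration only.
    """
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE            # may later be relabelled as a border point
            continue
        labels[i] = cluster_id           # i is a core point: start a new cluster
        queue = deque(neighbors)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:
                labels[j] = cluster_id   # border point reached from a core point; not expanded
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also a core point: keep growing the cluster
        cluster_id += 1
    return labels
```

In the usage pattern of Algorithm 2, the returned labels are grouped by value, and label -1 (noise) is discarded before the largest clusters are selected.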

2.2.4. Collision Detection Based on KD-Tree

1. Constructing the KD-tree: Build a KD-tree for the tunnel point cloud. This partitions the space and organizes the tunnel points into a binary tree that supports fast nearest-neighbor queries;
2. Setting the collision threshold: Define a distance threshold $\tau$ below which two points are considered to be in collision;
3. Iterating through the bucket point cloud: For each point in the bucket point cloud, use the KD-tree to query the nearest point in the tunnel point cloud;
4. Computing the Euclidean distance: Calculate the Euclidean distance between the query point and its nearest neighbor in the tunnel point cloud using the formula
   $$d = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2 + (z_1 - z_2)^2},$$
   where $(x_1, y_1, z_1)$ and $(x_2, y_2, z_2)$ are the coordinates of the two points;
5. Querying the nearest neighbor: The nearest-neighbor search in Step 3 uses the KD-tree to find, for each bucket point $p_i$, the closest point in the tunnel point cloud $Q$:
   $$q_i^{*} = \arg\min_{q \in Q} d(p_i, q);$$
6. Collision determination: If the computed distance is smaller than the predefined threshold $\tau$, a collision is considered to have occurred:
   $$d(p_i, q_i^{*}) < \tau,$$
   where $d(p_i, q_i^{*})$ represents the Euclidean distance between $p_i$ and $q_i^{*}$, and $\tau$ is the predefined distance threshold.
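The six steps above can be sketched with a small pure-Python KD-tree. This is an illustrative sketch only. The tree is built by median splits that cycle through the x, y, and z axes. The search visits the far subtree only when the splitting plane is closer than the best distance found so far. The function names are hypothetical. In practice, a compiled KD-tree (for example SciPy's `cKDTree`) would replace the hand-written one.

```python
import math


def build_kdtree(points, depth=0):
    """Recursively build a KD-tree as nested tuples (point, axis, left, right)."""
    if not points:
        return None
    axis = depth % 3                              # cycle through x, y, z
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2                           # median split keeps the tree balanced
    return (pts[mid], axis,
            build_kdtree(pts[:mid], depth + 1),
            build_kdtree(pts[mid + 1:], depth + 1))


def nearest_distance(node, query, best=math.inf):
    """Euclidean distance from query to its nearest neighbor stored in the tree."""
    if node is None:
        return best
    point, axis, left, right = node
    best = min(best, math.dist(query, point))
    diff = query[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    best = nearest_distance(near, query, best)
    if abs(diff) < best:                          # splitting plane closer than current best:
        best = nearest_distance(far, query, best)  # the far side may hold a closer point
    return best


def detect_collision(bucket_points, tunnel_points, tau):
    """True if any bucket point lies closer than tau to the tunnel point cloud."""
    tree = build_kdtree(list(tunnel_points))
    return any(nearest_distance(tree, p) < tau for p in bucket_points)
```

`detect_collision` returns as soon as one bucket point violates the threshold, which matches the early exit in Algorithm 2.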

| Algorithm 1: PointCloudPreprocessor | |
|---|---|
| Input: P_raw, the raw point cloud from the LiDAR sensor; d_max, the maximum distance threshold for filtering (e.g., 7 m); v_size, the voxel size for downsampling (e.g., 0.1 m). | |
| Output: P_processed, the preprocessed (filtered and downsampled) point cloud. | |
| 1 | function PreprocessPointCloud(P_raw, d_max, v_size): |
| 2 | // Step 1: Filter by Distance |
| 3 | P_filtered ← an empty point cloud |
| 4 | for each point p in P_raw: |
| 5 | dist ← Calculate Euclidean distance of p from the sensor origin |
| 6 | if dist < d_max then |
| 7 | P_filtered ← P_filtered ∪ {p} |
| 8 | end if |
| 9 | end for |
| 10 | // Step 2: Voxel Grid Downsampling |
| 11 | P_processed ← VoxelGridDownsample(P_filtered, v_size) |
| 12 | return P_processed |
| 13 | |
| 14 | function VoxelGridDownsample(P_in, voxel_size): |
| 15 | Create a voxel grid with resolution voxel_size over P_in |
| 16 | P_out ← an empty point cloud |
| 17 | for each non-empty voxel v in the grid: |
| 18 | p_centroid ← Compute the centroid of all points within v |
| 19 | P_out ← P_out ∪ {p_centroid} |
| 20 | end for |
| 21 | return P_out |
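Algorithm 1 can be made executable in a few lines. The sketch below assumes the sensor sits at the origin of the point coordinates. It assigns each point to a voxel by flooring its coordinates divided by the voxel size, then replaces every occupied voxel with the centroid of its points. The function names are hypothetical counterparts of the pseudocode, and points are plain 3-D tuples.

```python
import math
from collections import defaultdict


def voxel_grid_downsample(points, voxel_size):
    """Replace the points in each occupied voxel by their centroid."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)   # integer voxel index
        voxels[key].append(p)
    return [tuple(sum(coords) / len(members) for coords in zip(*members))
            for members in voxels.values()]


def preprocess_point_cloud(raw_points, d_max, voxel_size):
    """Distance filtering followed by voxel-grid downsampling (mirrors Algorithm 1)."""
    origin = (0.0, 0.0, 0.0)                                  # assumed sensor position
    filtered = [p for p in raw_points if math.dist(p, origin) < d_max]
    return voxel_grid_downsample(filtered, voxel_size)
```

With `d_max = 7.0` and `voxel_size = 0.1`, a point 10 m away is discarded. Two points that fall in the same 0.1 m voxel collapse into one centroid.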

| Algorithm 2: DBSCAN Clustering and Collision Detection | |
|---|---|
| Input: P_in, a preprocessed point cloud; eps, DBSCAN radius; min_pts, DBSCAN minimum points; N, number of clusters to select; τ, collision threshold. | |
| Output: CollisionAlert, a boolean value. | |
| 1 | function ClusterAndCheckCollision(P_in, eps, min_pts, N, τ): |
| 2 | // Step 1: Point Cloud Clustering |
| 3 | labels ← DBSCAN(P_in, eps, min_pts) |
| 4 | |
| 5 | // Step 2: Select Largest Clusters |
| 6 | all_clusters ← Group points from P_in by labels (excluding noise) |
| 7 | Sort all_clusters by size in descending order |
| 8 | largest_clusters ← Select top N clusters from all_clusters |
| 9 | C_tunnel ← largest_clusters[0] |
| 10 | C_bucket ← largest_clusters[1] |
| 11 | |
| 12 | // Step 3: Collision Detection |
| 13 | kd_tree ← BuildKDTree(C_tunnel) |
| 14 | for each point p in C_bucket: |
| 15 | dist ← FindNearestNeighborDistance(p, kd_tree) |
| 16 | if dist < τ then |
| 17 | return True // Collision detected |
| 18 | end if |
| 19 | end for |
| 20 | |
| 21 | return False // No collision detected |
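Step 2 of Algorithm 2 assumes that the largest cluster is the tunnel and the second largest is the bucket. Only the first two of the top-N clusters are then used. The sketch below isolates that selection step and adds a guard for frames with fewer than two clusters. It is a hypothetical helper, not part of the published method. To keep the block self-contained, it uses a brute-force nearest-distance search where Algorithm 2 uses a KD-tree. Both give the same minimum distance.

```python
import math
from collections import Counter


def select_tunnel_and_bucket(points, labels):
    """Return (tunnel, bucket): the largest and second-largest non-noise clusters."""
    sizes = Counter(lab for lab in labels if lab != -1)     # -1 marks DBSCAN noise
    ranked = [lab for lab, _ in sizes.most_common()]        # labels by descending size
    if len(ranked) < 2:
        raise ValueError("need at least two clusters (tunnel and bucket)")
    tunnel = [p for p, lab in zip(points, labels) if lab == ranked[0]]
    bucket = [p for p, lab in zip(points, labels) if lab == ranked[1]]
    return tunnel, bucket


def collision_alert(points, labels, tau):
    """True if any bucket point is closer than tau to the tunnel cluster."""
    tunnel, bucket = select_tunnel_and_bucket(points, labels)
    # Brute-force nearest distance; Algorithm 2 uses a KD-tree for this query.
    return any(min(math.dist(b, t) for t in tunnel) < tau for b in bucket)
```

The size-based assignment works only while the tunnel cluster outnumbers the bucket cluster in every frame, so it is worth checking that assumption against the LiDAR field of view.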

2.3. Collision Detection Algorithm Based on the Pose of Key Points on the Bucket

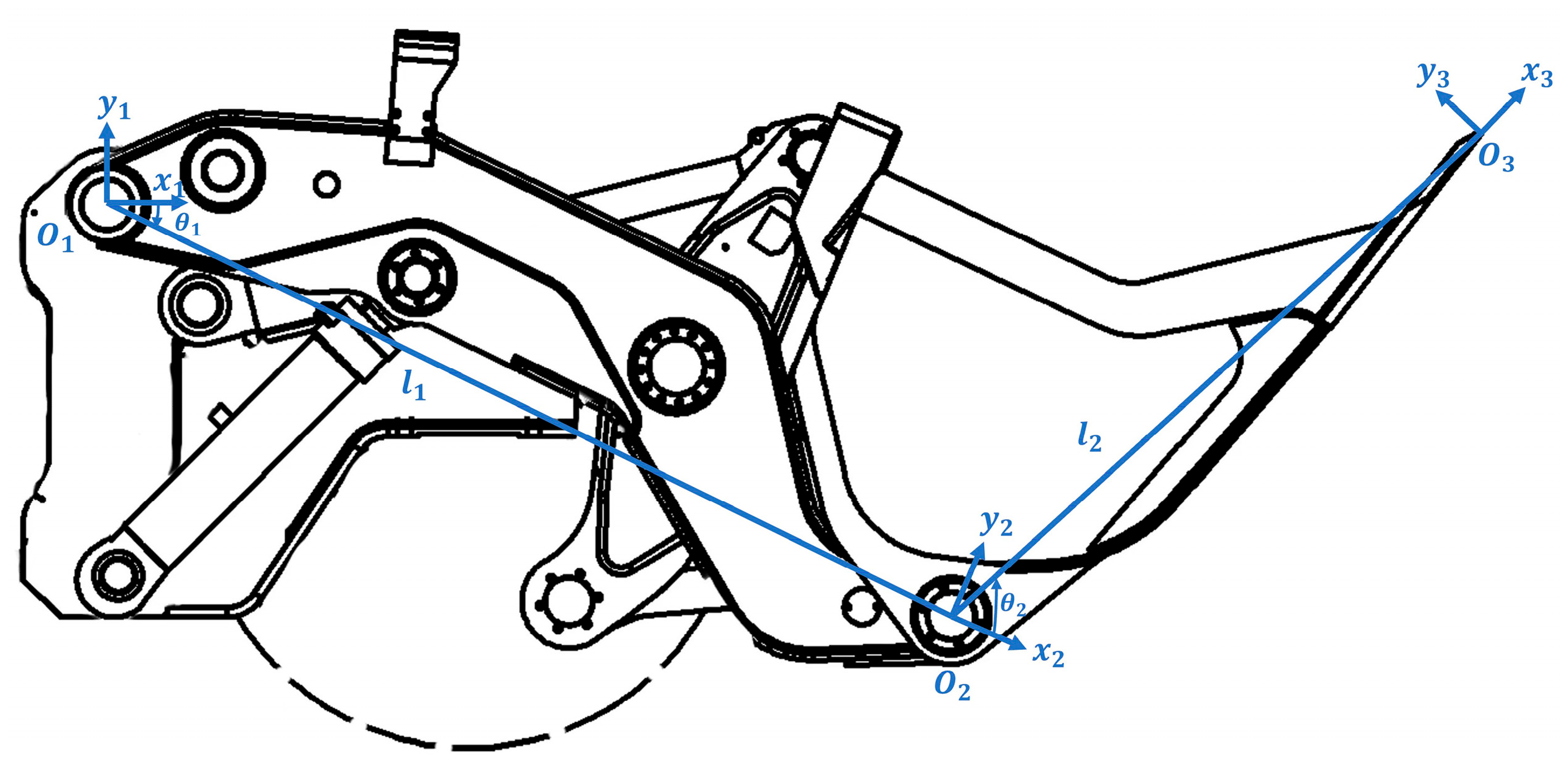

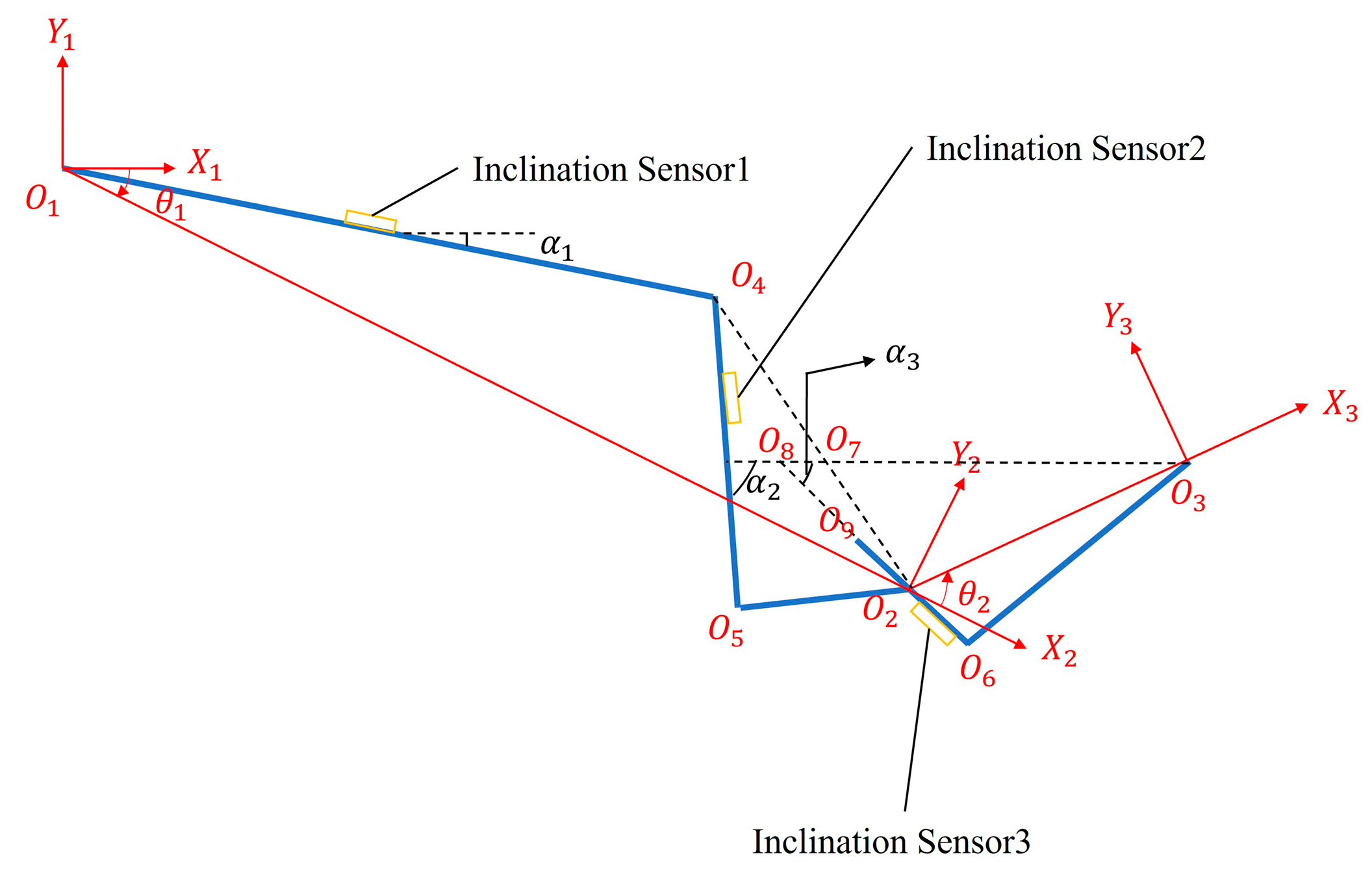

2.3.1. Kinematic Model of the LHD’s Working Device

1. $\theta_i$: Joint angle, the rotation about the $z_{i-1}$ axis that carries the $x_{i-1}$ axis onto the $x_i$ axis. It corresponds to the rotation of the joint, with clockwise rotation defined as negative and counterclockwise rotation as positive;
2. $d_i$: Link offset, the displacement along the $z_{i-1}$ axis. It corresponds to the translation of a prismatic joint and is constant for a revolute joint;
3. $a_i$: Link length, the distance between the $z_{i-1}$ and $z_i$ axes measured along the $x_i$ axis. It describes the fixed length between adjacent joints;
4. $\alpha_i$: Link twist angle, the rotation about the $x_i$ axis that carries the $z_{i-1}$ axis onto the $z_i$ axis.

1. $O_0$, $O_1$, and $O_2$ represent the origins of the three coordinate systems: $O_0$ is the articulation point between the boom and the base, $O_1$ is the articulation point between the bucket and the boom, and $O_2$ is located at the tip of the bucket;
2. The $z_0$, $z_1$, and $z_2$ axes are parallel to one another and perpendicular to the plane in which the working device moves;
3. The $x_0$ axis lies along the reference horizontal plane, the $x_1$ axis points from $O_0$ toward $O_1$, and the $x_2$ axis points from $O_1$ toward $O_2$.
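The sketch below assumes the standard (distal) DH convention, with joint angle $\theta_i$, link offset $d_i$, link length $a_i$, and twist $\alpha_i$. Under that assumption, the homogeneous transform from frame $i-1$ to frame $i$ is the standard DH matrix. Chaining two such transforms maps the bucket-tip frame into the base frame:

$$
{}^{i-1}T_{i} =
\begin{bmatrix}
\cos\theta_i & -\sin\theta_i\cos\alpha_i & \sin\theta_i\sin\alpha_i & a_i\cos\theta_i \\
\sin\theta_i & \cos\theta_i\cos\alpha_i & -\cos\theta_i\sin\alpha_i & a_i\sin\theta_i \\
0 & \sin\alpha_i & \cos\alpha_i & d_i \\
0 & 0 & 0 & 1
\end{bmatrix},
\qquad
{}^{0}T_{2} = {}^{0}T_{1}\,{}^{1}T_{2}.
$$

The last column of ${}^{0}T_{2}$ holds the position of $O_2$ in frame 0. The upper-left $3\times 3$ block holds the orientation of the bucket-tip frame.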

2.3.2. Forward Kinematics Analysis

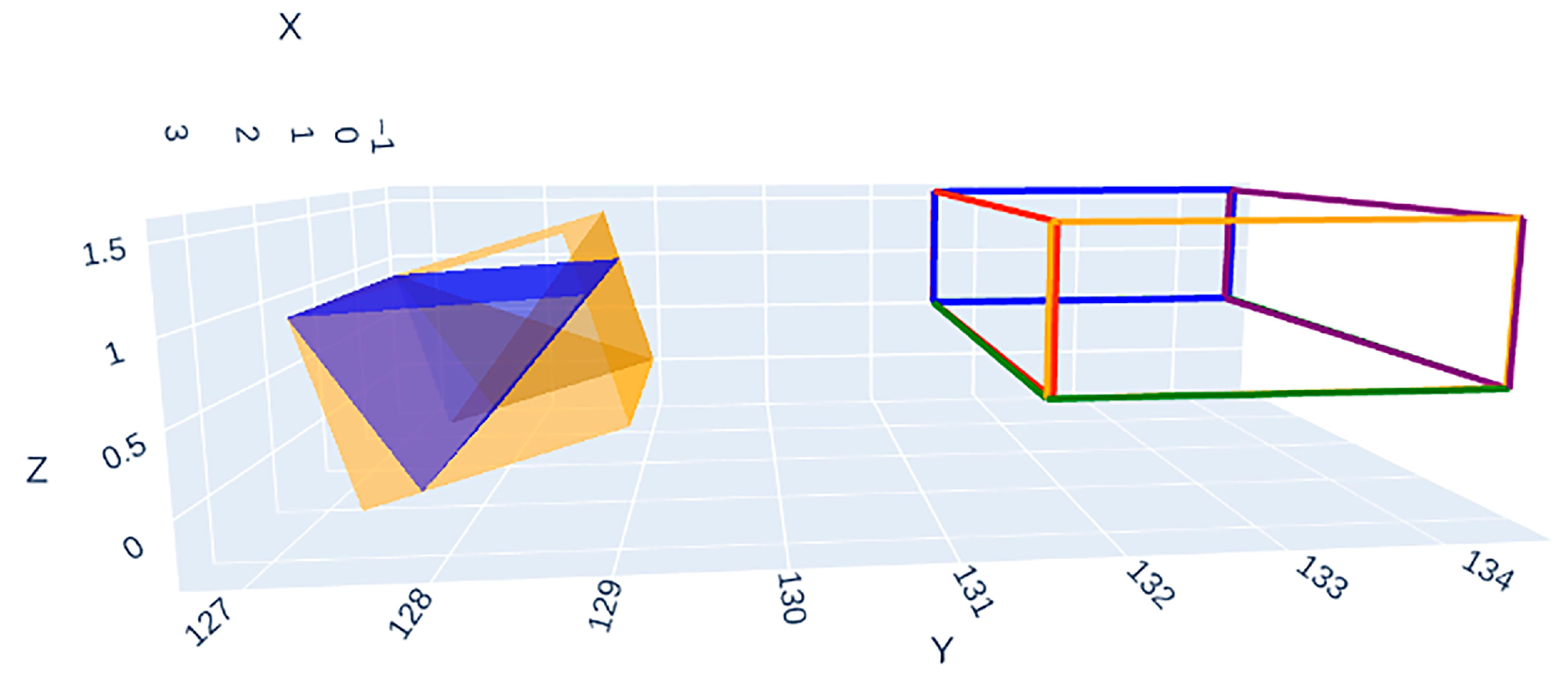
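The forward kinematics can be checked numerically. The sketch below is for illustration only. It assumes the standard DH convention and a planar two-link chain $O_0 \to O_1 \to O_2$ with $d_i = \alpha_i = 0$, which is consistent with the parallel $z$ axes of the working device. The link lengths `a1` (boom) and `a2` (bucket) are hypothetical inputs. Under these assumptions, the tooth-tip position reduces to $x = a_1\cos\theta_1 + a_2\cos(\theta_1+\theta_2)$ and $y = a_1\sin\theta_1 + a_2\sin(\theta_1+\theta_2)$. The test checks the matrix product against this closed form.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Homogeneous transform from frame i-1 to frame i (standard DH), as nested lists."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca, st * sa, a * ct],
            [st, ct * ca, -ct * sa, a * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def tooth_tip_position(theta1, theta2, a1, a2):
    """Position of O2 (bucket tip) in frame 0, assuming d_i = alpha_i = 0 for both links."""
    T02 = matmul4(dh_transform(theta1, 0.0, a1, 0.0),
                  dh_transform(theta2, 0.0, a2, 0.0))
    return T02[0][3], T02[1][3], T02[2][3]   # translation column of the 0 -> 2 transform
```

In a real system, `theta1` and `theta2` would come from the inclination sensors, after any offsets between the sensor readings and the DH joint angles have been applied.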

2.3.3. Constructing the OBB

1. Compute the principal directions of the point cloud: The first step in constructing an OBB is to determine the principal directions of the point cloud. This is typically done with principal component analysis (PCA), which derives the principal directions from the covariance matrix of the point cloud. The first principal direction is the direction of highest variance in the data;
2. Steps of PCA: Compute the mean of the point cloud,
   $$\bar{p} = \frac{1}{n}\sum_{i=1}^{n} p_i,$$
   where $p_i$ represents the $i$-th point in the point cloud, $n$ is the number of points, and $\bar{p}$ is the mean of all points. Then compute the covariance matrix
   $$C = \frac{1}{n}\sum_{i=1}^{n} (p_i - \bar{p})(p_i - \bar{p})^{\mathsf T},$$
   whose eigenvectors, ordered by decreasing eigenvalue, give the principal directions;
3. Rotating the point cloud: Using the principal directions obtained from PCA, a rotation matrix $R$ is constructed so that, after the point cloud is transformed into the new coordinate system, the principal directions align with the coordinate axes;
4. Calculating the dimensions of the point cloud: After rotation, the bounding box is aligned with the three new axes. Its dimensions (length, width, and height) are obtained from the maximum and minimum coordinates of the rotated point cloud along each axis;
5. Determining the center point and rotation of the bounding box: The center $c$ of the OBB is the mean of the rotated point cloud,
   $$c = \frac{1}{n}\sum_{i=1}^{n} p'_i,$$
   where $p'_i$ is the $i$-th point after rotation, and the orientation of the OBB is given by $R$.
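The PCA construction above can be sketched in pure Python. This is a hypothetical helper, not the authors' code. A small cyclic Jacobi eigen-solver for the symmetric 3×3 covariance matrix stands in for a library routine. One deliberate difference from step 5: this sketch takes the box center as the midpoint of the minimum and maximum extents in the PCA frame, not the mean. For an asymmetric point distribution, that choice guarantees that a box with these half-extents encloses every point.

```python
import math


def _matmul3(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def _transpose3(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]


def jacobi_eigen(S, max_sweeps=50, tol=1e-24):
    """Eigen-decomposition of a symmetric 3x3 matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, V); column k of V is the eigenvector of eigenvalue k.
    """
    A = [row[:] for row in S]
    V = [[float(i == j) for j in range(3)] for i in range(3)]
    for _ in range(max_sweeps):
        if sum(A[i][j] ** 2 for i in range(3) for j in range(3) if i != j) < tol:
            break
        for p, q in ((0, 1), (0, 2), (1, 2)):
            if A[p][q] == 0.0:
                continue
            phi = 0.5 * math.atan2(2.0 * A[p][q], A[q][q] - A[p][p])  # zeroes A[p][q]
            c, s = math.cos(phi), math.sin(phi)
            J = [[float(i == j) for j in range(3)] for i in range(3)]
            J[p][p], J[q][q], J[p][q], J[q][p] = c, c, s, -s
            A = _matmul3(_transpose3(J), _matmul3(A, J))   # A <- J^T A J
            V = _matmul3(V, J)                             # accumulate eigenvectors
    return [A[k][k] for k in range(3)], V


def oriented_bounding_box(points):
    """PCA-based OBB: returns (center, axes, half_extents), principal axis first."""
    n = len(points)
    mu = [sum(p[k] for p in points) / n for k in range(3)]                  # mean
    C = [[sum((p[r] - mu[r]) * (p[k] - mu[k]) for p in points) / n          # covariance
          for k in range(3)] for r in range(3)]
    evals, V = jacobi_eigen(C)
    order = sorted(range(3), key=lambda k: evals[k], reverse=True)
    axes = [[V[r][k] for r in range(3)] for k in order]                     # unit vectors
    local = [[sum((p[r] - mu[r]) * ax[r] for r in range(3)) for ax in axes] for p in points]
    lo = [min(q[k] for q in local) for k in range(3)]
    hi = [max(q[k] for q in local) for k in range(3)]
    half_extents = [(hi[k] - lo[k]) / 2.0 for k in range(3)]
    mid = [(hi[k] + lo[k]) / 2.0 for k in range(3)]                         # center, PCA frame
    center = [mu[r] + sum(mid[k] * axes[k][r] for k in range(3)) for r in range(3)]
    return center, axes, half_extents
```

The rows of `axes` form the rotation $R^{\mathsf T}$ used in step 3, so a point's local coordinates are its dot products with these axes.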

2.3.4. SAT Method for Collision Detection

1. Generation of candidate separating axes:
2. Projection onto separating axes:
3. Collision determination:
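For two OBBs, the standard SAT test uses 15 candidate axes: the three face normals of each box and the nine cross products of one edge direction from each box. The test skips cross products that nearly vanish because their edges are parallel. On each axis, it compares the distance between the projected centers with the sum of the projected radii. The sketch below returns a signed value that follows the convention described in Section 3.1: the maximum gap over all axes. A positive value means a separating axis exists. A non-positive value means the boxes overlap on every axis, and its magnitude is the smallest overlap (the penetration estimate). The box representation `(center, axes, half_extents)` and the function name are assumptions of this sketch.

```python
import itertools
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def sat_signed_distance(box_a, box_b, eps=1e-9):
    """Signed SAT value for two OBBs given as (center, three orthonormal axes, half-extents).

    > 0: separated (largest gap over the candidate axes);
    <= 0: overlapping on every axis (magnitude = smallest overlap).
    """
    (ca, axes_a, ha), (cb, axes_b, hb) = box_a, box_b
    candidates = list(axes_a) + list(axes_b)                 # 6 face normals
    for u, v in itertools.product(axes_a, axes_b):           # 9 edge-edge cross products
        w = cross(u, v)
        norm = math.sqrt(dot(w, w))
        if norm > eps:                                       # skip (nearly) parallel edges
            candidates.append(tuple(x / norm for x in w))
    t = [b - a for a, b in zip(ca, cb)]                      # vector between box centers
    best = -math.inf
    for axis in candidates:
        ra = sum(h * abs(dot(ax, axis)) for h, ax in zip(ha, axes_a))   # projected radius of A
        rb = sum(h * abs(dot(bx, axis)) for h, bx in zip(hb, axes_b))   # projected radius of B
        best = max(best, abs(dot(t, axis)) - (ra + rb))      # gap on this axis
    return best
```

A positive result is the gap along the best separating axis. It never exceeds the true minimum distance between the boxes, so it is a conservative clearance estimate.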

3. Results

3.1. Experimental Setup

1. LiDAR-based algorithm (Algorithms 1 and 2) parameters:
   - LiDAR sensor: 120° horizontal FoV, 60° vertical FoV.
   - Voxel downsampling: voxel size = 0.1 m.
   - DBSCAN: neighborhood radius ($\varepsilon$) = 0.11 m, minimum points ($MinPts$) = 4. These parameters were tuned empirically to balance sensitivity and noise rejection.
   - Collision threshold: 0.15 m. An alarm is triggered if the minimum distance between the bucket and tunnel point clouds is less than this value.
2. Kinematics-based algorithm parameters:
   - Sensor model: The inclination sensors were simulated to provide ideal, noise-free measurements. This approach was chosen to validate the intrinsic accuracy of the kinematic model itself.
   - Evaluation metric: The signed SAT output. A negative value indicates a collision (penetration), with its magnitude representing the penetration depth. A positive value indicates separation, with its magnitude representing the clearance between the OBBs along the best separating axis (a lower bound on their minimum distance).
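The reported settings can be gathered into one configuration object. The sketch below is hypothetical: the class, field, and function names are ours, and only the numeric values come from the parameter list above. The helper applies the stated SAT sign convention. Treating a value of exactly zero (touching boxes) as a collision is a conservative choice made in this sketch, not one stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LidarCollisionParams:
    """Reported parameters of the LiDAR-based bucket-tunnel check (names are illustrative)."""
    horizontal_fov_deg: float = 120.0
    vertical_fov_deg: float = 60.0
    voxel_size_m: float = 0.1
    dbscan_eps_m: float = 0.11
    dbscan_min_pts: int = 4
    collision_threshold_m: float = 0.15


def interpret_sat_output(value):
    """Map the signed SAT output to (status, magnitude) using the stated sign convention."""
    if value <= 0:                     # negative: penetration; zero treated as contact here
        return "collision", -value     # penetration depth
    return "clear", value              # clearance along the best separating axis
```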

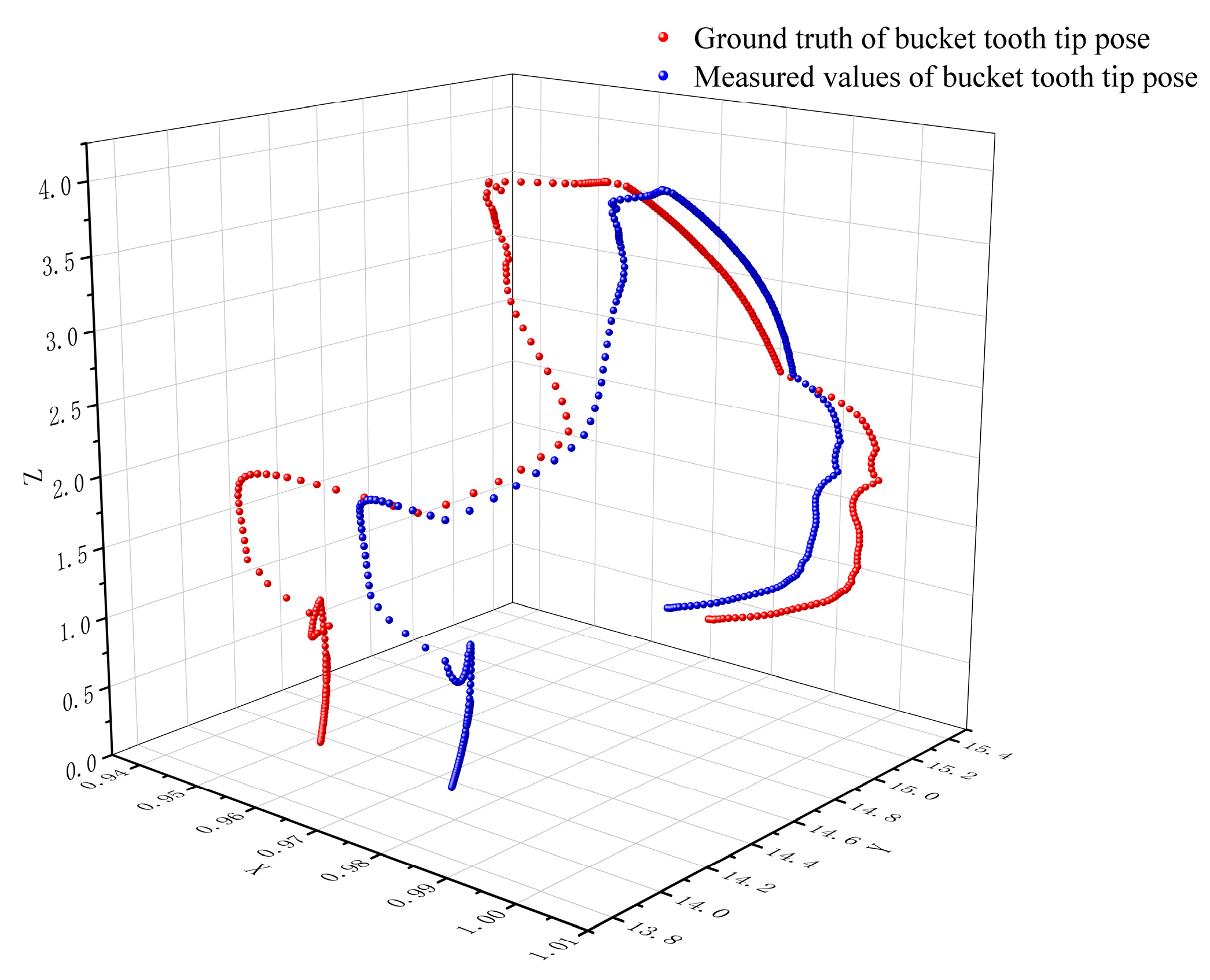

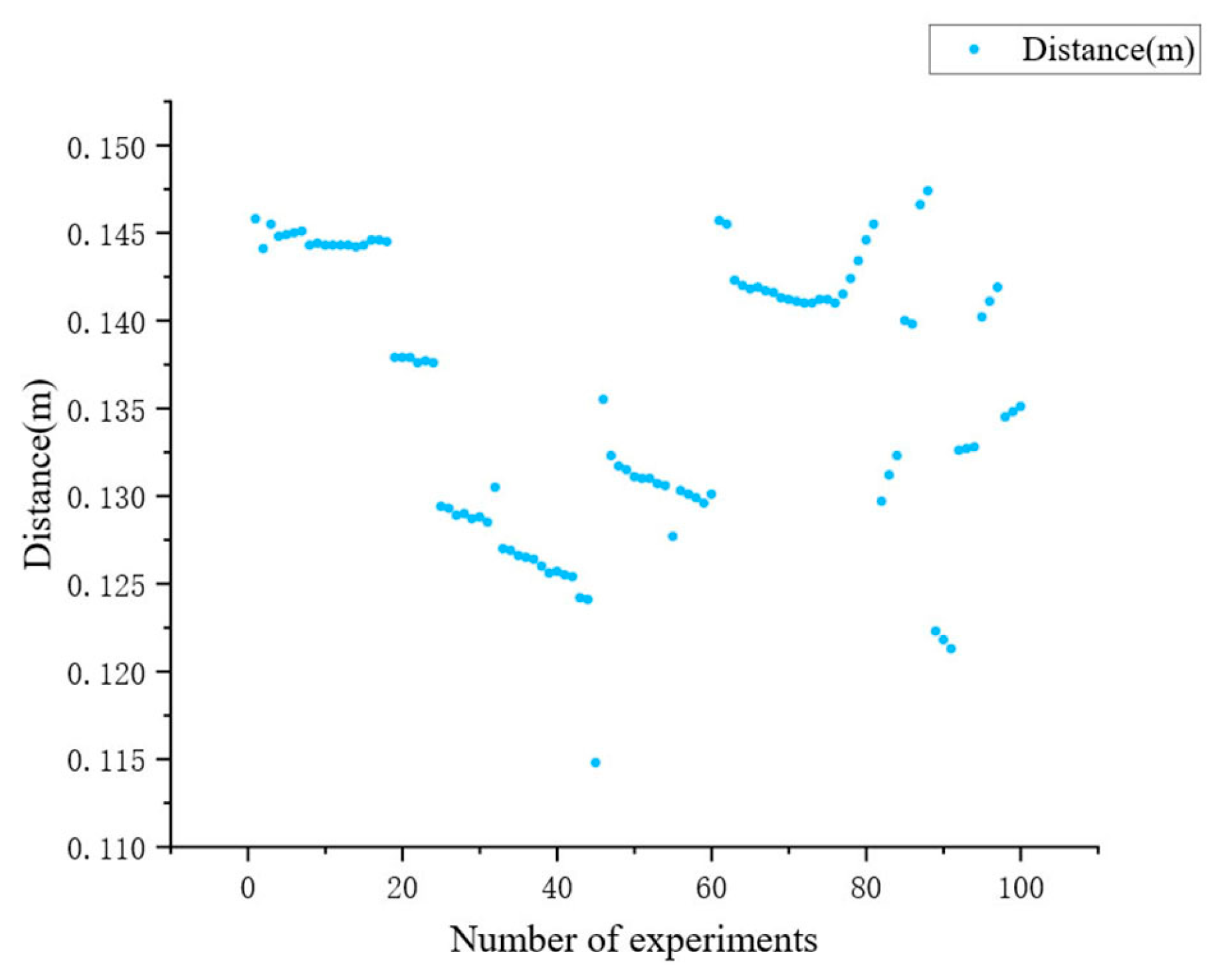

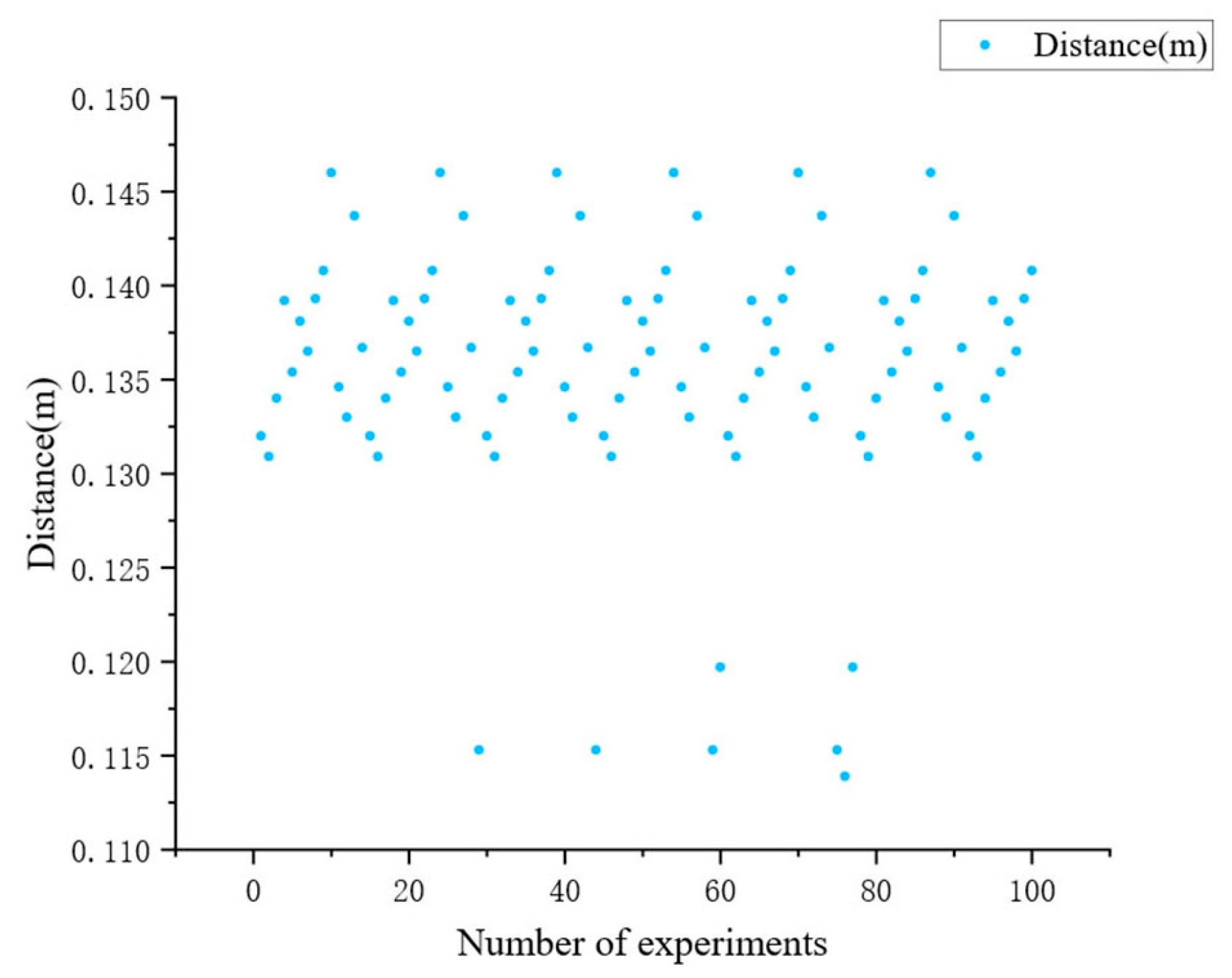

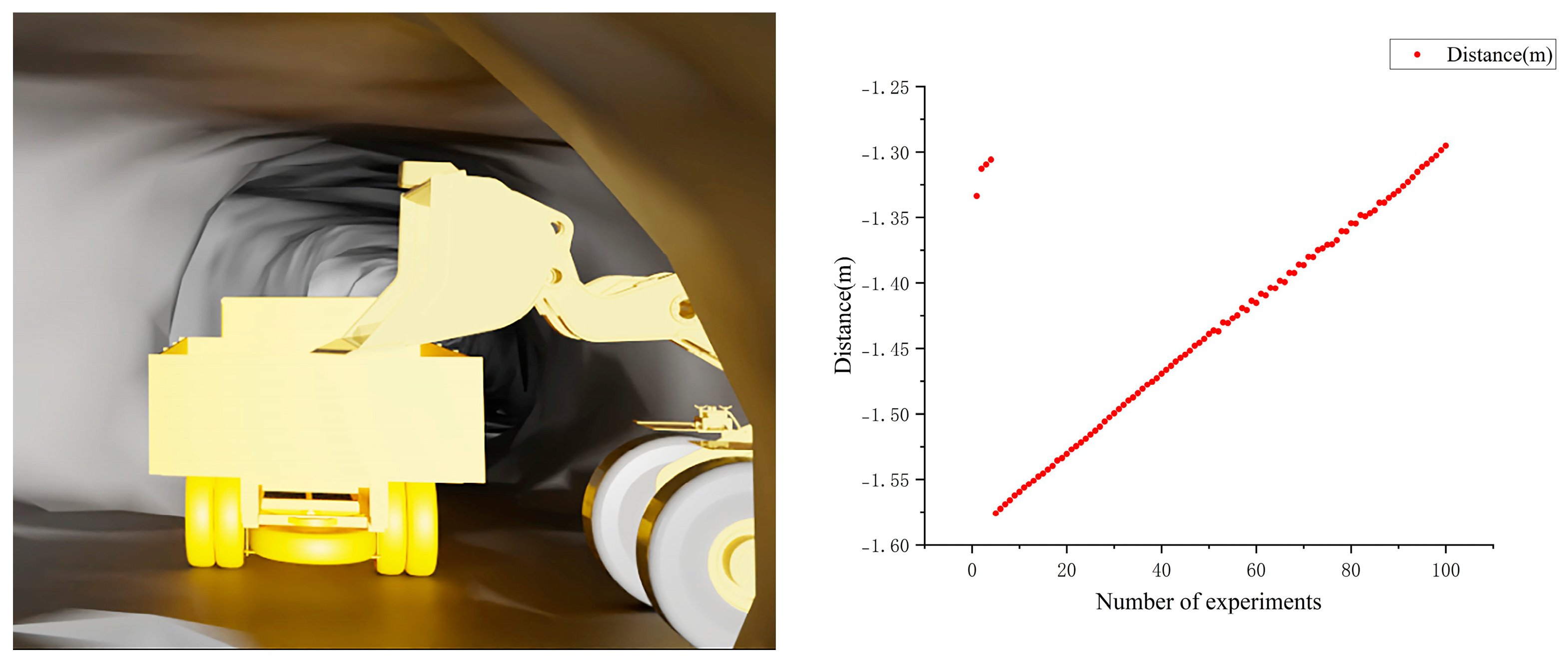

3.2. Verification of the Bucket Tooth Tip Pose

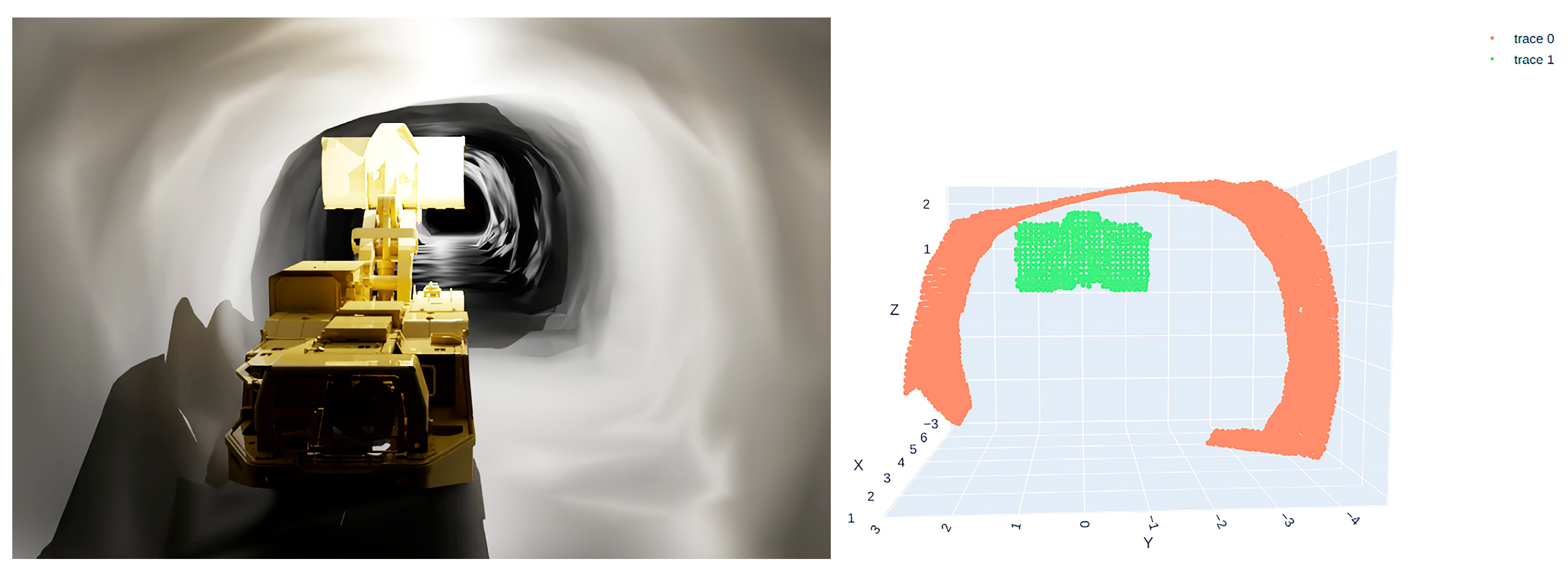

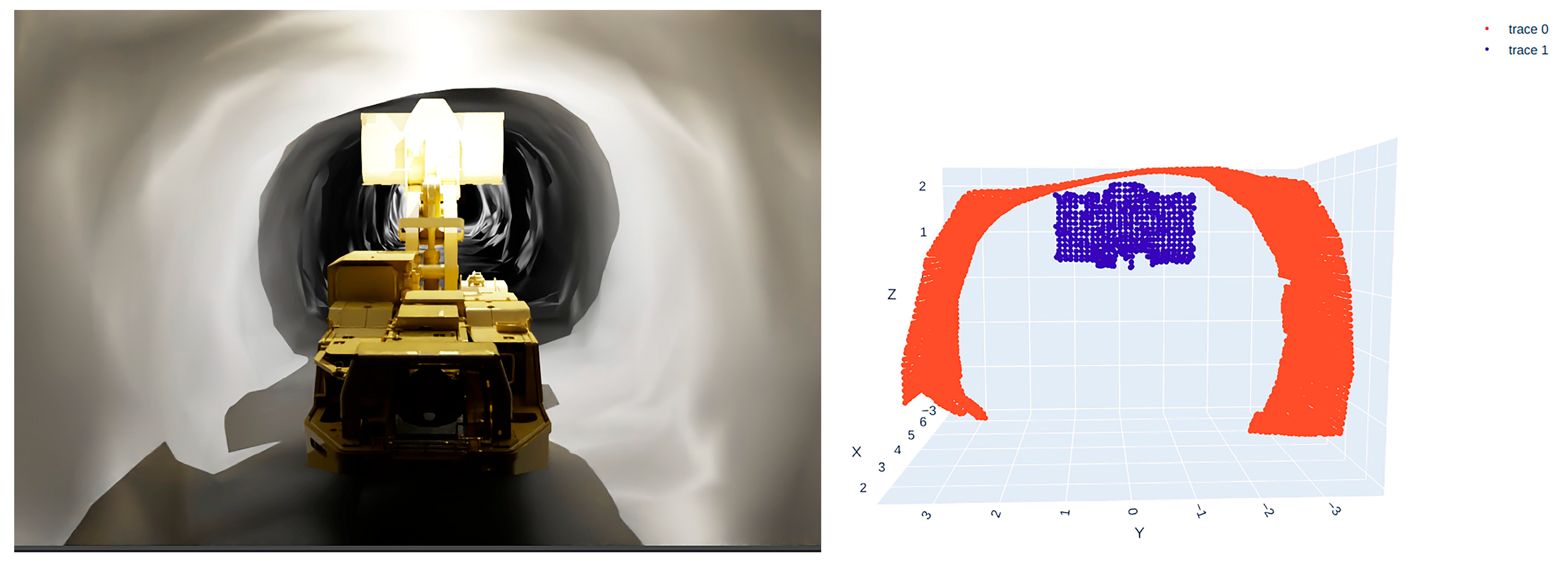

3.3. Validation of Collision Detection Algorithm in Different Scenarios

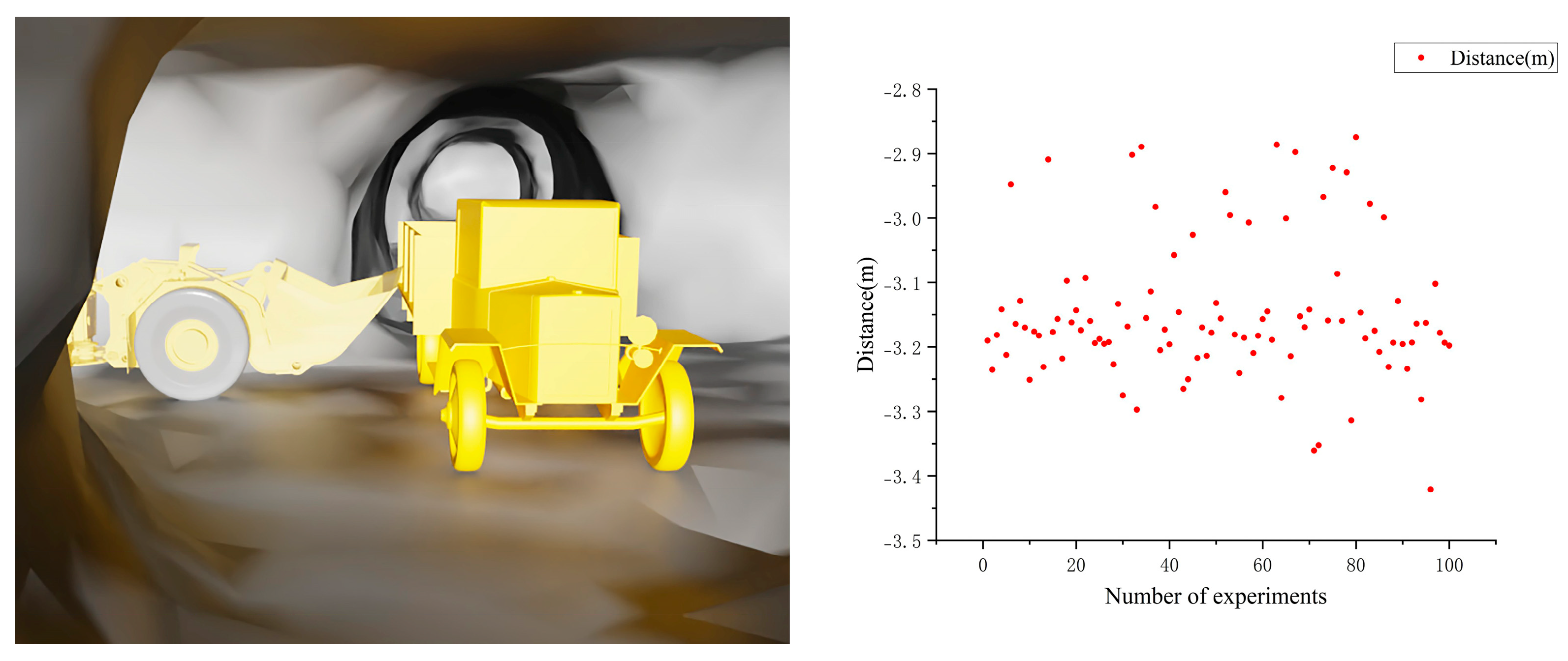

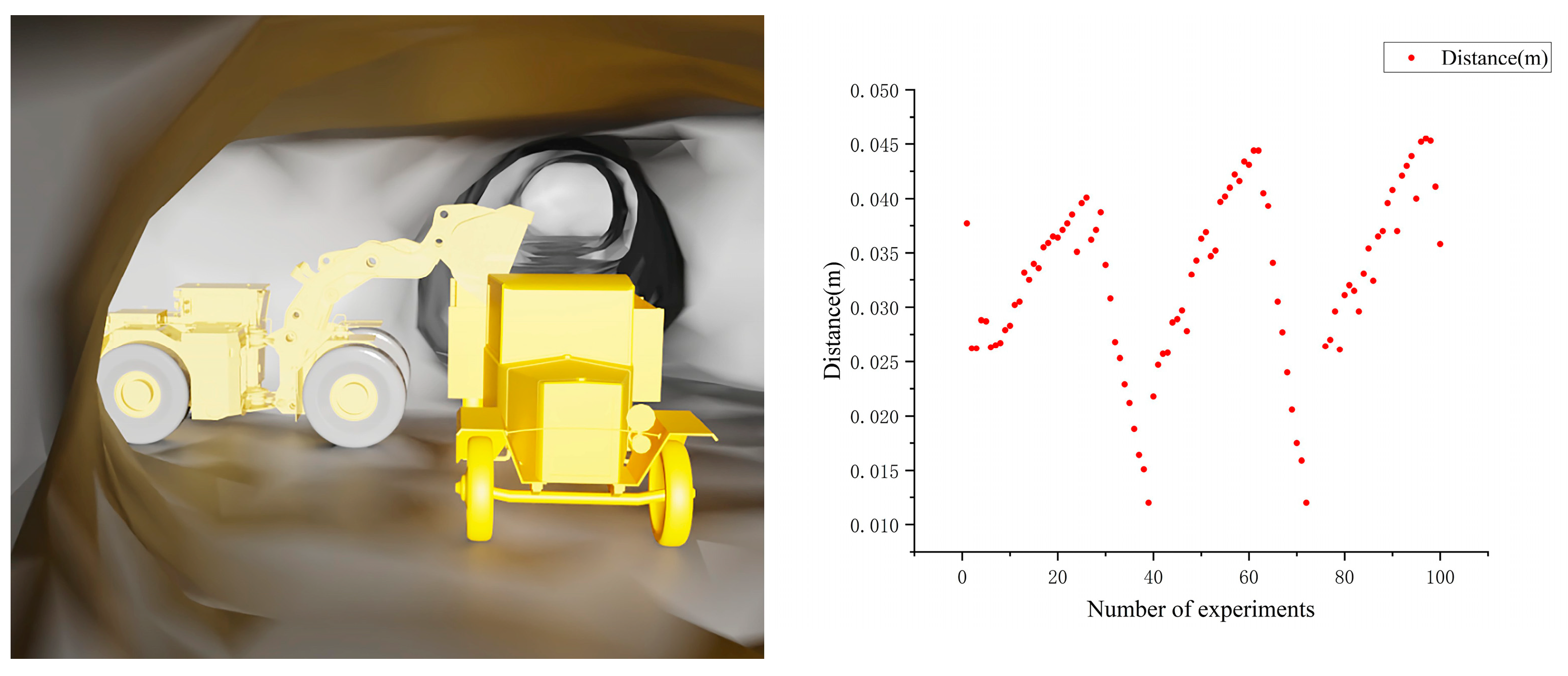

3.3.1. Validation of Bucket and Tunnel Wall Collision Detection Algorithm

- Scenario 1: LHD positioned close to the left tunnel wall (Figure 14);

- Scenario 2: LHD positioned in the center of the tunnel (Figure 15);

- Scenario 3: LHD positioned close to the right tunnel wall (Figure 16).

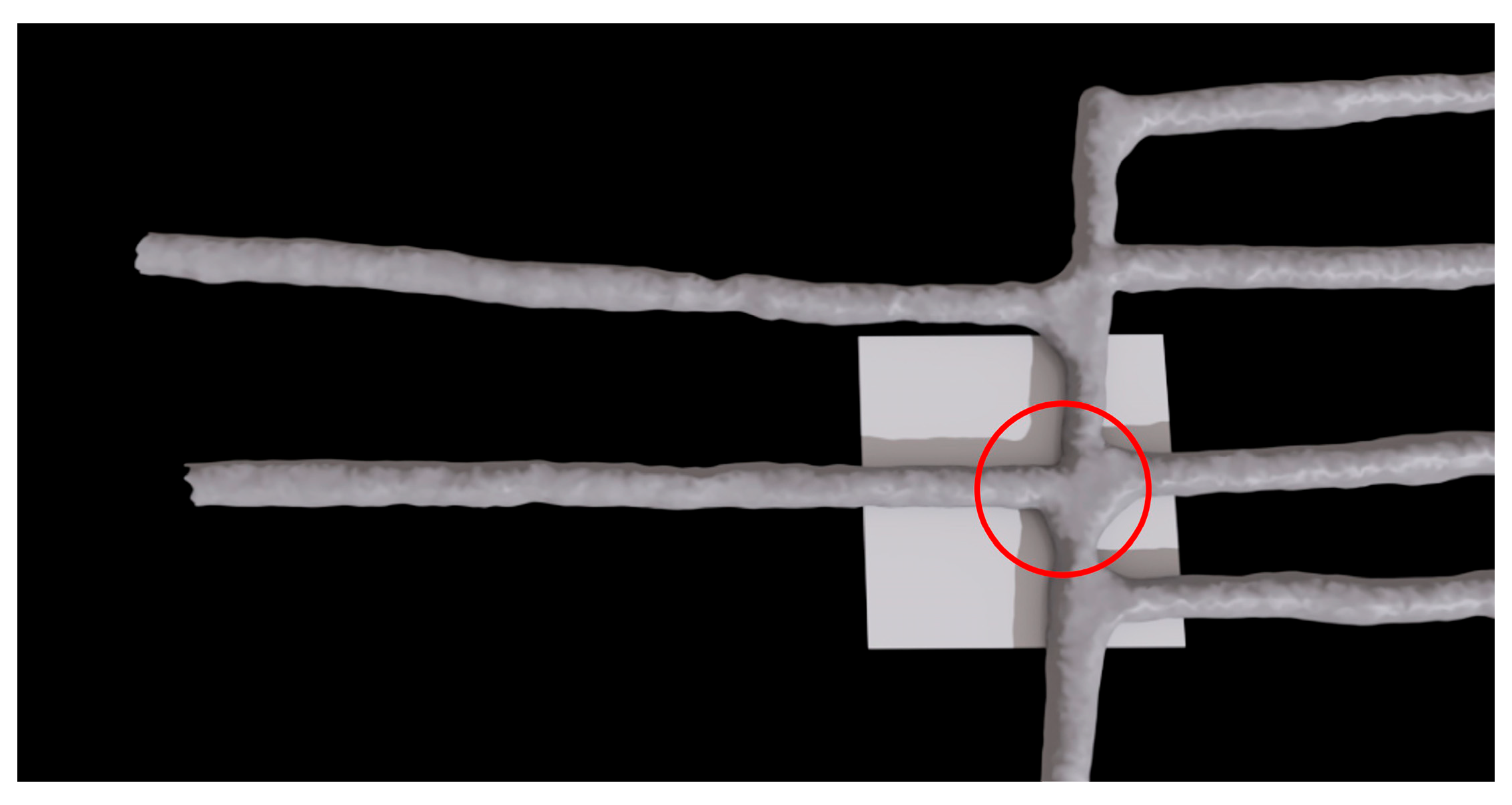

3.3.2. Verification of the Collision Detection Algorithm for the Bucket and Mining Truck

- The kinematic pose formula achieves centimeter-level accuracy (average error 1.41 cm, maximum 2.52 cm), providing a reliable foundation for the subsequent collision detection;

- The LiDAR-based algorithm for bucket-and-tunnel collision detection achieved a 100% success rate in all tested scenarios while running in real time. Its robustness stems from DBSCAN, which kept the bucket and tunnel clusters separate even when the two objects were in very close proximity;

- The kinematics-based algorithm for bucket-and-truck collision detection also achieved a 100% success rate in the tested scenarios. Because it relies on joint-angle measurements rather than a line of sight to the truck, it is unaffected by sensor occlusion, and it avoids the false alarms produced by naive models of the open-top truck.

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LHD | Load-haul-dump |
| DH | Denavit–Hartenberg |
| OBB | Oriented bounding box |
| SAT | Separating axis theorem |
| AABB | Axis-aligned bounding box |
| DBSCAN | Density-based spatial clustering of applications with noise |
| PCA | Principal component analysis |
| TSA | Time-series analysis |
| BVHs | Bounding volume hierarchies |
| EBBs | Ellipsoidal bounding boxes |
| SDFs | Signed distance functions |
| GJK | Gilbert–Johnson–Keerthi |


| Link | | | | |
|---|---|---|---|---|
| 2 | 0 | 0 | | |
| 3 | 0 | 0 | | |

| Maximum Distance (m) | Minimum Distance (m) | Average Distance (m) |
|---|---|---|
| 0.0252 | 0 | 0.0141 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lei, M.; Peng, P.; Wang, L.; Liu, Y.; Lei, R.; Zhang, C.; Zhang, Y.; Liu, Y. Collision Detection Algorithms for Autonomous Loading Operations of LHD-Truck Systems in Unstructured Underground Mining Environments. Mathematics 2025, 13, 2359. https://doi.org/10.3390/math13152359
