Abstract

The rapid densification of wireless networks demands efficient evaluation of the special functions that underpin system-level performance metrics. To this end, we introduce a computational framework tailored to the zero-balanced Gauss hypergeometric function ${}_2F_1(1, x; 1+x; -y)$, a fundamental mathematical kernel in Signal-to-Interference-plus-Noise Ratio (SINR) coverage analysis of non-uniform cellular deployments. Specifically, we propose a novel Reciprocal-Argument Transformation Algorithm (RTA), derived rigorously from a Mellin–Barnes reciprocal-argument identity, which converges geometrically with ratio $1/y$ for $y > 1$. By integrating RTA with a Pfaff-series solver into a hybrid algorithm guided by a golden-ratio switching criterion, our approach ensures optimal efficiency and numerical stability. Comprehensive validation demonstrates that the hybrid algorithm reliably attains machine-precision accuracy (on the order of $10^{-16}$) within 1 μs per evaluation, accelerating calculations in realistic scenarios from hours to fractions of a second. Consequently, our method makes tractable optimization feasible in ultra-dense non-uniform cellular networks, bridging the computational gap in large-scale wireless performance modeling.

Keywords:

Gauss hypergeometric functions; reciprocal-argument transformation; SINR coverage; golden-ratio threshold; Pfaff transformation

MSC:

33C05

1. Introduction

The Gauss hypergeometric function ${}_2F_1(a, b; c; z)$ is one of the most ubiquitous special functions in mathematics and physics. Since Gauss’s classical work established its fundamental properties [1], ${}_2F_1$ has been recognized as a “universal” function encompassing a wide array of elementary and special functions. In modern applications, it plays a crucial role across disciplines, from quantum mechanics and quantum field theory [2,3] to finance and statistics [4]. In wireless communications theory, ${}_2F_1$ frequently appears in closed-form expressions for performance metrics such as signal coverage probability, connection reliability, and outage probability [5,6,7].

A particularly significant case is the zero-balanced form ${}_2F_1(1, x; 1+x; -y)$, which naturally arises when evaluating integrals in stochastic-geometry models of cellular networks [5,7]. As highlighted by Cai et al. [5] and others [6,7], it is central to formulas for coverage and link reliability in 5G and heterogeneous networks. In composite fading channels, closed-form solutions for symbol-error rates and outage probabilities reduce to this function, with y proportional to the instantaneous Signal-to-Noise Ratio (SNR) [6]. In secrecy and covert communication systems, certain interference integrals evaluate exactly to this function, linking eavesdropper coverage directly to it [8]. In stochastic-geometry modeling of cellular networks in which base stations follow a non-uniform Poisson point process within a finite disk, the Laplace transform of the aggregate interference involves hypergeometric terms that depend on an integer k (taking values such as 2, 3, and 4) in its angular integral. A notable example is the coverage-probability framework introduced by Pratt et al. [9], where position-dependent Signal-to-Interference-plus-Noise Ratio (SINR) coverage in ultra-dense regions is expressed through repeated evaluations of ${}_2F_1(1, x; 1+x; -y)$, embedded in a double integral over user location and nearest-neighbor distance. These applications highlight the pivotal role of this function as an essential mathematical tool for analyzing wireless network performance.

Despite its importance, the computational evaluation of Gauss hypergeometric functions remains challenging. The defining series converges only for $|z| < 1$, so direct summation for ${}_2F_1(1, x; 1+x; -y)$ works only when $y < 1$ and diverges when $y > 1$ [5]. Even inside the unit disc, convergence may be slow if the parameters are large or y is close to 1. Efficient evaluation therefore relies on analytic continuation, transformation formulas, and asymptotics [4,10]. Classical linear transformations are invaluable here. Using Pfaff’s transformation [11], Cai et al. [5] rewrite the function as a series in $y/(1+y)$, which lies in $[0, 1)$ and therefore converges for every $y \ge 0$, including $y > 1$. However, even with Pfaff’s transformation, significant inefficiency persists when $y \gg 1$. As y increases, the transformed argument $y/(1+y)$ approaches unity, placing the Gauss hypergeometric series at the boundary of its convergence domain. Consequently, the series converges very slowly, and the number of terms needed to attain a fixed precision grows rapidly. The practical effect is a steep increase in computational cost, while the rounding errors accumulated from summing many terms degrade numerical stability.

These issues become particularly acute in large-scale simulations and iterative computations typical of wireless communications and other fields, where the function may be evaluated millions of times. In such settings, the slow convergence of the Pfaff-transformed series can dominate the overall runtime and propagate errors across evaluations, undermining both efficiency and accuracy. Motivated by these limitations, this paper develops a new algorithm for efficiently evaluating ${}_2F_1(1, x; 1+x; -y)$ in the large-y regime ($y > 1$). The proposed method employs a novel series representation that remains rapidly convergent even as y becomes large, thereby overcoming the shortcomings of existing approaches. This work provides the theoretical framework and numerical analysis demonstrating the improved performance of the new algorithm compared with classical transformations. Specifically, our contributions are as follows:

- (1) We derive a reciprocal-argument series for ${}_2F_1(1, x; 1+x; -y)$ from the Mellin–Barnes integral and construct a fast solver that eliminates the slow-convergence bottleneck of the Pfaff series for large y.

- (2) We analytically determine the optimal switch between the reciprocal and Pfaff forms, producing a hybrid algorithm that is uniformly efficient for all $y \ge 0$ and keeps the error near double-precision machine epsilon.

- (3) We prove geometric convergence and rigorously bound round-off propagation, thereby guaranteeing numerical stability across the entire parameter space.

- (4) Through extensive experiments, we demonstrate substantial speed-ups over widely used built-in implementations and at least a thousandfold reduction in runtime for ultra-dense network simulations while preserving high numerical precision.

2. Related Works

The Gauss hypergeometric function ${}_2F_1$ is a classical special function with significant applications across pure and applied mathematics [12,13]. Its fundamental properties, including the second-order differential equation it satisfies, are extensively documented in standard references [12,14]. Historically, Gauss used ${}_2F_1$ extensively in the theory of elliptic integrals, where the complete elliptic integral of the first kind is compactly represented as $K(k) = \frac{\pi}{2}\,{}_2F_1\!\left(\tfrac12, \tfrac12; 1; k^2\right)$ [12,14]. More broadly, ${}_2F_1$ subsumes numerous elementary and special functions, such as binomial series and Legendre functions, underscoring its ubiquity in theoretical physics and engineering [13,14]. Indeed, hypergeometric functions frequently appear when integrals or series require analytic continuation beyond elementary forms; solutions of Laplace’s equation in spherical and cylindrical coordinates often involve ${}_2F_1$ or its confluent limits [13,14]. Beyond classical scalar settings, recent work has significantly generalized hypergeometric functions. Cuchta et al. systematically developed discrete and matrix-valued hypergeometric series, elucidating the convergence and divergence criteria essential for numerical stability and practical computation [15,16,17]. In particular, Cuchta’s work has advanced discrete hypergeometric series, highlighting their applicability to difference equations and discrete analogues of classical special functions [15,16]. Distinct from these generalized formulations, the two-variable function $\Psi(x, y)$ has also attracted attention in applied analysis. Originally arising in analytic number theory as the count of y-smooth integers up to x, its asymptotic behavior was first studied by Dickman [18], refined by de Bruijn [19], and further advanced by Hildebrand and Tenenbaum [20]. This function is also central to integer factorization algorithms, particularly in analyzing the distribution of smooth numbers [18,20]. 
The zero-balanced function ${}_2F_1(1, x; 1+x; -y)$, by contrast, prominently appears in SINR coverage analysis in wireless communications, especially for non-uniform cellular deployments, where efficient numerical computation is essential. Thus, while Cuchta’s contributions emphasize broad generalizations and theoretical frameworks, our work provides algorithms tailored specifically to rapid, numerically stable evaluation of ${}_2F_1(1, x; 1+x; -y)$ in practical, large-scale wireless scenarios. By delineating these parallel yet complementary research streams, our study bridges classical analytic theory and modern computational demands, making tractable optimization feasible in real-world applications.

Special functions, notably the Gauss hypergeometric function ${}_2F_1$, frequently appear in closed-form performance metrics for wireless communications, underpinning critical quantities such as coverage, connection probability, outage, ergodic capacity, and error rates [5,21]. For instance, ${}_2F_1$ arises naturally in cellular coverage and outage probability analyses [5]. Coverage probabilities and SINR thresholds often involve integrals that can be expressed in closed form through ${}_2F_1$ [21,22], a pattern common in stochastic-geometry analyses of wireless networks. Notably, Jo et al. employed hypergeometric functions in downlink evaluations of heterogeneous networks [22], while Zhang et al. used similar methods to establish coverage bounds for multi-slope path-loss models [21]. Recent studies of ultra-dense networks (UDNs) further underline the importance of managing interference, allocating resources efficiently, and using energy well under extremely dense conditions, suggesting avenues where special functions like ${}_2F_1$ could contribute to analytical simplification and computational efficiency. Moghaddam and Ashoor [23] developed a Kuhn–Munkres-based resource allocation algorithm that improves throughput and user connectivity, while Stoynov et al. [24] provided a comprehensive taxonomy emphasizing key performance indicators and interference mitigation. Cho et al. [25] discussed critical challenges and emerging technologies required for 6G densification, highlighting the need for intelligent interference control. Furthermore, Kwong et al. [26] introduced a reinforcement learning framework for optimizing handover parameters, and Kim and Kim [27] proposed coordinated multi-point transmission methods that significantly reduce interference in UDNs. 
Thus, the role of special functions such as ${}_2F_1$ extends beyond mathematical convenience, potentially offering vital analytical tools for managing and optimizing performance in increasingly complex ultra-dense network environments.

The numerical evaluation of ${}_2F_1$ and related functions has been the subject of extensive research. Classic references present numerous linear and quadratic transformations (Euler, Pfaff, etc.) and contiguous relations [12,14]. In particular, the Pfaff transformation and its variants are widely used to move the argument z of ${}_2F_1(a, b; c; z)$ into regions of convergence [12,14]. Using these identities, Cai et al. [5] accelerate the evaluation of ${}_2F_1(1, x; 1+x; -y)$ with a Pfaff-transformed algorithm, yet their sole reliance on this form causes slow convergence and rising cost as $y \to \infty$. Earlier, Forrey developed a general “transformation theory” for ${}_2F_1$ (restricted to real parameters) to avoid cancellation and slow convergence [28]. Michel and Stoitsov extended such ideas to complex parameters, combining series expansions with analytic continuation to compute ${}_2F_1$ reliably [3]. These works show that applying Pfaff and Euler transformations is a key computational strategy. Despite these advances, existing methods often target particular parameter ranges. For arbitrary parameters, the algorithms of Michel and Stoitsov [3] and Forrey [28] remain among the most general but can become unstable when parameters are large or near singularities. These gaps motivate our study of the efficient and robust evaluation of ${}_2F_1(1, x; 1+x; -y)$.

3. Preliminaries

3.1. Gauss Hypergeometric Function and the Special Case ${}_2F_1(1, x; 1+x; -y)$

The Gauss hypergeometric function is a classical special function defined by the power series

$$ {}_2F_1(a, b; c; z) = \sum_{n=0}^{\infty} \frac{(a)_n\,(b)_n}{(c)_n}\,\frac{z^n}{n!}, \tag{1} $$

where $(q)_n = \Gamma(q+n)/\Gamma(q)$ is the Pochhammer symbol. This function generalizes many elementary and special functions and satisfies the Gauss hypergeometric differential equation. The series converges for $|z| < 1$, and the function admits analytic continuation to $\mathbb{C} \setminus [1, \infty)$ via identities such as Euler’s and Pfaff’s transformations. In particular, for $|z| > 1$ the series does not converge, but transformation formulas (e.g., Pfaff’s transformation) or the Euler integral representation below recast the function into a convergent form.

Lemma 1

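(Pfaff’s transformation [11]). For $z \notin [1, \infty)$ with parameters a, b and $c \notin \{0, -1, -2, \dots\}$, the following transformation holds:

$$ {}_2F_1(a, b; c; z) = (1-z)^{-a}\,{}_2F_1\!\left(a,\, c-b;\, c;\, \frac{z}{z-1}\right), \tag{2} $$

where the argument is shifted to $z/(z-1)$; the transformed series converges for $\operatorname{Re} z < 1/2$, a domain that contains the entire negative real axis.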

Lemma 2

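(Euler integral representation [29]). If $\operatorname{Re} c > \operatorname{Re} b > 0$ and $z \notin [1, \infty)$, the Gauss hypergeometric function can be represented as

$$ {}_2F_1(a, b; c; z) = \frac{\Gamma(c)}{\Gamma(b)\,\Gamma(c-b)} \int_0^1 t^{b-1}(1-t)^{c-b-1}(1-zt)^{-a}\,dt. \tag{3} $$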

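In this work, we focus on the zero-balanced special case with parameters $a = 1$, $b = x > 0$, and $c = 1 + x$ (so that $c = a + b$), and argument $z = -y$ with $y \ge 0$. For $0 \le y < 1$, its series expansion becomes

$$ {}_2F_1(1, x; 1+x; -y) = \sum_{n=0}^{\infty} \frac{x}{x+n}\,(-y)^n. $$

When either parameter a or b of Equation (1) is a non-positive integer, the series terminates into a polynomial. Here, $a = 1$ and $b = x > 0$ prevent truncation, so the function is generally non-elementary. Applying Euler’s formula (3) with $b = x$ and $c = 1 + x$ gives the integral representation

$$ {}_2F_1(1, x; 1+x; -y) = x \int_0^1 \frac{t^{x-1}}{1+yt}\,dt, $$

which shows that the function decays for large y (roughly like $\frac{\pi x}{\sin(\pi x)}\,y^{-x}$ when $0 < x < 1$) and provides a basis for numerical evaluation in that regime.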
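A quick way to sanity-check the power series and the Euler representation is sketched below in Python; the function names `f_series` and `f_euler` are hypothetical. The first routine sums $\sum_{n\ge 0} \frac{x}{x+n}(-y)^n$ for $0 \le y < 1$. The second evaluates the Euler integral after the substitution $t = u^{1/x}$, which turns $x\int_0^1 t^{x-1}/(1+yt)\,dt$ into the singularity-free integral $\int_0^1 du/(1 + y\,u^{1/x})$. The composite Simpson quadrature is only a slow reference and is assumed adequate for $0 < x \le 1$ and moderate y.

```python
import math


def f_series(x: float, y: float, tol: float = 1e-16, max_terms: int = 100_000) -> float:
    """Direct power series sum_{n>=0} x/(x+n) * (-y)^n, valid only for 0 <= y < 1."""
    if not 0.0 <= y < 1.0:
        raise ValueError("the direct series requires 0 <= y < 1")
    total, power = 0.0, 1.0  # power holds (-y)^n
    for n in range(max_terms):
        term = x / (x + n) * power
        total += term
        if abs(term) <= tol * abs(total):
            break
        power *= -y
    return total


def f_euler(x: float, y: float, panels: int = 2000) -> float:
    """Euler integral written as int_0^1 du / (1 + y * u**(1/x)), via composite Simpson's rule.

    The substitution t = u**(1/x) removes the t**(x-1) endpoint singularity; the
    integrand is smooth for 0 < x <= 1 (accuracy degrades for x > 1 or very large y).
    """
    if panels % 2:
        raise ValueError("Simpson's rule needs an even number of panels")
    h = 1.0 / panels

    def g(u: float) -> float:
        return 1.0 / (1.0 + y * u ** (1.0 / x))

    s = g(0.0) + g(1.0)
    for k in range(1, panels):
        s += (4.0 if k % 2 else 2.0) * g(k * h)
    return s * h / 3.0


# Closed forms used as targets:
#   2F1(1, 1; 2; -y)     = ln(1 + y) / y
#   2F1(1, 1/2; 3/2; -y) = arctan(sqrt(y)) / sqrt(y)
print(f_series(1.0, 0.5), math.log(1.5) / 0.5)
print(f_euler(0.5, 3.0), math.atan(math.sqrt(3.0)) / math.sqrt(3.0))
```

The two elementary special cases in the final comment give exact targets against which both routines can be compared.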

3.2. The Pfaff-Based Fast Algorithm for ${}_2F_1(1, x; 1+x; -y)$

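In many simulations (e.g., the scenario described in Section 3.3), one must evaluate ${}_2F_1(1, x; 1+x; -y)$ millions of times. Cai et al. [5] observed that this becomes a computational bottleneck and proposed a specialized Fast Pfaff Transformation Algorithm (FPT) tailored to this zero-balanced case. The core idea is to apply Pfaff’s transformation (Lemma 1 with $a = 1$, $b = x$, $c = 1 + x$, and $z = -y$), which gives

$$ {}_2F_1(1, x; 1+x; -y) = \frac{1}{1+y}\,{}_2F_1\!\left(1, 1; 1+x; \frac{y}{1+y}\right), $$

and maps the argument $-y$ to $y/(1+y)$. After this change of variable, the inner function has parameters $a = b = 1$ and $c = 1 + x$, and its Taylor series converges for every $y \ge 0$ because $0 \le y/(1+y) < 1$.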

In essence, FPT applies a Pfaff relation to write ${}_2F_1(1, x; 1+x; -y)$ in a form whose series coefficients decrease rapidly when y is modest. The resulting series can then be summed term by term until convergence. Cai et al. [5] show that the partial-sum error after m terms is bounded by a factor involving the Beta function $B(\cdot, \cdot)$ multiplied by the geometric factor $\left(y/(1+y)\right)^m$. Crucially, when $y \gg 1$, the factor $y/(1+y)$ is close to 1, so the series converges very slowly. In other words, the Pfaff-based expansion is efficient for moderate y but becomes inefficient when y is very large. Comparing the bound for consecutive values of m shows that the per-term convergence rate is approximately $y/(1+y)$, which approaches 1 as $y \to \infty$, indicating poor performance. This weakness significantly restricts the performance of FPT in real-world applications.
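The slowdown described above can be reproduced with a short Python sketch. It sums the Pfaff form $(1+y)^{-1}\,{}_2F_1(1, 1; 1+x; w)$ with $w = y/(1+y)$, i.e., Lemma 1 applied with $a = 1$; this is an illustrative re-implementation under that assumption (the name `f_pfaff` and the stopping rule are hypothetical), not the FPT code of Cai et al. [5]. Because the inner coefficients $n!/(1+x)_n$ are non-increasing, the tail after any term is at most $\text{term}\cdot w/(1-w) = \text{term}\cdot y$, which gives a simple rigorous stopping rule.

```python
import math


def f_pfaff(x: float, y: float, tol: float = 1e-16, max_terms: int = 10_000_000):
    """Sum (1 + y)^(-1) * 2F1(1, 1; 1 + x; w) with w = y / (1 + y), for x > 0 and y >= 0.

    Inner coefficients c_n = n! / (1 + x)_n satisfy c_{n+1} = c_n * (n + 1) / (n + 1 + x)
    and are non-increasing, so the tail after a term is at most term * w / (1 - w) = term * y.
    Returns (value, number_of_terms_summed).
    """
    w = y / (1.0 + y)
    total, coeff, power = 0.0, 1.0, 1.0  # coeff = c_n, power = w^n
    n = 0
    while n < max_terms:
        term = coeff * power
        total += term
        n += 1
        if term * y <= tol * total:  # remaining tail is below tol * total
            break
        coeff *= n / (n + x)
        power *= w
    return total / (1.0 + y), n


for y in (1.0, 10.0, 100.0, 1000.0):
    value, terms = f_pfaff(0.5, y)
    print(f"y = {y:7.1f}  value = {value:.16f}  terms = {terms}")
```

Since $\ln(1/w) = \ln(1 + 1/y) \approx 1/y$ for large y, the printed term count grows roughly linearly in y, which is exactly the bottleneck that motivates a reciprocal-argument expansion.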

3.3. Application in Non-Uniform Cellular Networks

The function ${}_2F_1(1, x; 1+x; -y)$ arises naturally in a range of applied contexts, including probability theory, statistical distributions, and wireless network coverage analysis. A representative case is the study by Pratt et al. [9], who investigated coverage optimization in ultra-dense cellular networks over a finite region with non-uniform node density. They considered a circular domain of radius R, where access points (APs) are distributed according to a radially symmetric Poisson point process with intensity $\lambda(r)$ for $0 \le r \le R$, while mobile users follow a non-uniform spatial distribution. Their objective was to determine the optimal AP deployment strategy that maximizes the average SINR coverage probability across the user distribution. By applying tools from stochastic geometry, the analysis yielded closed-form expressions for both the nearest-AP distance distribution and the SINR coverage probability at an arbitrary location r, in which ${}_2F_1(1, x; 1+x; -y)$ plays a central computational role.

In practice, the coverage probability developed by Pratt et al. [9] relies on the Laplace transform of the interference from the other APs. Under Rayleigh fading, this probability depends on the distance to the serving AP, the path-loss exponent, the path-loss attenuation, the common transmit power of each AP, the average thermal noise power, and the Laplace transform of the aggregate interference.

For the non-uniform Poisson point process (PPP), Pratt et al. [9] show that this Laplace transform can be expressed in closed form by integrating over the finite domain, parameterized by the angle of intersection and the radial distance. After separating the radial and angular integrals, they arrive at an intermediate function involving terms of the form ${}_2F_1(1, x; 1+x; -y)$.

Even in the special case of a user at the center ($r = 0$), the resulting closed form still involves this function.

Thus, the Gauss hypergeometric function naturally emerges as a fundamental analytical tool for evaluating interference integrals in coverage analyses. As demonstrated by Pratt et al. [9], optimizing finite-domain cellular network coverage leads directly to formulas involving this special function: starting from the network model, through the nearest-neighbor statistics and coverage probabilities, to the interference Laplace transform over a two-dimensional area, the evaluation culminates in terms of ${}_2F_1(1, x; 1+x; -y)$. Consequently, efficient numerical algorithms, such as the Pfaff-based fast algorithm above, are crucial for practical and rapid optimization of wireless network performance.

4. Methodology and Analysis

We now present two high-precision series solvers for ${}_2F_1(1, x; 1+x; -y)$ and their hybridization. First, we develop a Reciprocal-Argument Transformation Algorithm (RTA) based on a Mellin–Barnes reciprocal-argument identity. Then, we describe the hybrid method that chooses between the Pfaff-based solver (suitable for small y) and RTA (suitable for large y) according to a golden-ratio threshold. Finally, we analyze the convergence and numerical stability of these algorithms.

4.1. Reciprocal-Argument Transformation Algorithm (RTA)

To overcome the slow convergence encountered by the method of Cai et al. [5] when evaluating ${}_2F_1(1, x; 1+x; -y)$ for large y, we propose a novel method called the Reciprocal-Argument Transformation Algorithm (RTA). This approach exploits a reciprocal-argument linear transformation derived from the Mellin–Barnes integral representation, effectively removing the convergence bottleneck.

Lemma 3

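(Mellin–Barnes Integral Representation [29]). For parameters satisfying suitable convergence conditions (e.g., $|\arg(-z)| < \pi$ with $a, b \notin \{0, -1, -2, \dots\}$), the Gauss hypergeometric function admits the representation

$$ {}_2F_1(a, b; c; z) = \frac{\Gamma(c)}{\Gamma(a)\,\Gamma(b)}\,\frac{1}{2\pi i}\int_{-i\infty}^{i\infty} \frac{\Gamma(a+s)\,\Gamma(b+s)\,\Gamma(-s)}{\Gamma(c+s)}\,(-z)^{s}\,ds, $$

where the integration contour separates the poles of $\Gamma(a+s)\Gamma(b+s)$ from those of $\Gamma(-s)$.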

Leveraging Lemma 3, we establish the following theorem for ${}_2F_1(1, x; 1+x; -y)$:

Theorem 1

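(Reciprocal-Argument Expansion). For all non-integer $x > 0$ and $y > 1$, ${}_2F_1(1, x; 1+x; -y)$ can be expressed as an infinite series involving elementary functions:

$$ {}_2F_1(1, x; 1+x; -y) = \frac{\pi x}{\sin(\pi x)}\,y^{-x} + x\sum_{n=1}^{\infty} \frac{(-1)^n\,y^{-n}}{n-x}. \tag{8} $$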

Proof.

Equation (8) significantly enhances computational efficiency by replacing the Gamma-function prefactor with an elementary trigonometric one (via $\Gamma(1+x)\,\Gamma(1-x) = \pi x/\sin(\pi x)$), enabling fast and numerically stable evaluation. However, for integer values of x, the formula exhibits removable singularities: the denominator $\sin(\pi x)$ vanishes, and so does the denominator $n - x$ of the $n = x$ series term. We resolve these singularities explicitly via limit analysis in the following theorem.
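A direct transcription of (8) is sketched below in Python for non-integer $x > 0$ and $y > 1$; the name `f_rta` and the stopping rule are illustrative assumptions. Each new term costs one division by $-y$ and one division by $n - x$. For $n > x$ the terms alternate in sign with decreasing magnitude, so stopping only once $n > x$ guarantees that the first omitted term bounds the truncation error. The sketch deliberately ignores the integer case and the cancellation that arises when x is very close to an integer.

```python
import math


def f_rta(x: float, y: float, tol: float = 1e-16, max_terms: int = 10_000):
    """Reciprocal-argument series (8) for non-integer x > 0 and y > 1.

    Returns (value, number_of_series_terms). For n > x the terms alternate in sign
    with decreasing magnitude, so stopping there bounds the error by |term|.
    """
    if y <= 1.0 or x <= 0.0 or float(x).is_integer():
        raise ValueError("requires y > 1 and non-integer x > 0")
    total = math.pi * x / math.sin(math.pi * x) * y ** (-x)  # leading y^(-x) term
    power = 1.0  # holds (-1/y)^n
    for n in range(1, max_terms + 1):
        power /= -y
        term = x * power / (n - x)
        total += term
        if n > x and abs(term) <= tol * abs(total):
            return total, n
    return total, max_terms


# 2F1(1, 1/2; 3/2; -y) = arctan(sqrt(y)) / sqrt(y); here y = 4.
value, terms = f_rta(0.5, 4.0)
print(value, math.atan(2.0) / 2.0, terms)
```

Unlike the Pfaff form, the number of terms here shrinks as y grows, since each term is smaller than the previous one by roughly a factor of $1/y$.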

Theorem 2

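(Integer-Argument Case). For integer $x \ge 1$ and $y > 1$, ${}_2F_1(1, x; 1+x; -y)$ can be simplified to:

$$ {}_2F_1(1, x; 1+x; -y) = (-1)^{x+1}\,x\,y^{-x}\ln y + x\sum_{\substack{n \ge 1\\ n \ne x}} \frac{(-1)^n\,y^{-n}}{n-x}. $$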

Proof.

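For integer x, we analyze the limit expression (11), obtained from (8) by letting a non-integer parameter tend to x, and then isolate the singular terms, namely the leading trigonometric term and the $n = x$ term of the series.

Applying L’Hôpital’s rule to this singular pair yields the finite logarithmic term $(-1)^{x+1}\,x\,y^{-x}\ln y$, while the remaining terms with $n \ne x$ are continuous in the limit.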
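One way to carry out this limit, given here as a sketch rather than the exact sequence of steps used in the proof, is to set $x = N + \varepsilon$ with integer $N \ge 1$ and expand the two singular contributions of (8) to first order in $\varepsilon$, using $\sin(\pi(N+\varepsilon)) = (-1)^N \sin(\pi\varepsilon)$:

```latex
\begin{aligned}
\frac{\pi x}{\sin(\pi x)}\,y^{-x}
  &= \frac{(-1)^N (N+\varepsilon)\,y^{-N} e^{-\varepsilon \ln y}}{\varepsilon}\bigl(1 + O(\varepsilon^2)\bigr),\\
\frac{x\,(-1)^N y^{-N}}{N - x}
  &= -\frac{(-1)^N (N+\varepsilon)\,y^{-N}}{\varepsilon},\\
\text{sum of the two}
  &= (-1)^N (N+\varepsilon)\,y^{-N}\,\frac{e^{-\varepsilon \ln y} - 1}{\varepsilon} + O(\varepsilon)
  \;\xrightarrow{\;\varepsilon \to 0\;}\; (-1)^{N+1} N\,y^{-N} \ln y,\\
{}_2F_1(1, N; 1+N; -y)
  &= (-1)^{N+1} N\,y^{-N} \ln y + N \sum_{\substack{n \ge 1\\ n \ne N}} \frac{(-1)^n\,y^{-n}}{n - N}.
\end{aligned}
```

For $N = 1$ this reproduces $\ln(1+y)/y = y^{-1}\ln y + \sum_{n \ge 2} (-1)^n y^{-n}/(n-1)$, which also follows from writing $\ln(1+y) = \ln y + \ln(1 + 1/y)$ and expanding the second logarithm.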

Guided by these theoretical developments, we introduce the Reciprocal-Argument Transformation Algorithm (RTA), presented formally in Algorithm 1, providing robust and highly efficient computation for large arguments y.

Algorithm 1 Reciprocal-Argument Transformation Algorithm (RTA)

Require: $x > 0$, $y > 1$, tolerance $\epsilon$
Ensure: ${}_2F_1(1, x; 1+x; -y)$

This formal algorithmic treatment provides a comprehensive and numerically stable solution to efficiently evaluate the special function across a broad range of parameter values, thus overcoming previously identified limitations and significantly enhancing computational performance.
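One plausible way to realize Algorithm 1 in code is sketched below in Python. It combines (8) for non-integer x with the logarithmic limit of the integer case (Theorem 2) and, as an assumption of this sketch, stops once $n > x$ and the latest term falls below `tol` times the running sum; the exact stopping rule of Algorithm 1 may differ, and the name `rta` is hypothetical.

```python
import math


def rta(x: float, y: float, tol: float = 1e-16, max_terms: int = 10_000) -> float:
    """Sketch of RTA: evaluates 2F1(1, x; 1 + x; -y) for x > 0 and y > 1."""
    if x <= 0.0 or y <= 1.0:
        raise ValueError("this RTA sketch requires x > 0 and y > 1")
    if float(x).is_integer():
        # Integer case: the 1/sin(pi x) term and the n = x term merge into
        # (-1)^(x+1) * x * y^(-x) * ln(y); the n = x term is skipped below.
        skip = int(x)
        total = (-1) ** (skip + 1) * x * y ** (-x) * math.log(y)
    else:
        skip = -1  # no series index is skipped
        total = math.pi * x / math.sin(math.pi * x) * y ** (-x)
    power = 1.0  # holds (-1/y)^n
    for n in range(1, max_terms + 1):
        power /= -y
        if n == skip:
            continue
        term = x * power / (n - x)
        total += term
        # For n > x the terms alternate with shrinking magnitude, so the
        # first omitted term bounds the truncation error.
        if n > x and abs(term) <= tol * abs(total):
            break
    return total


print(rta(1.0, 4.0), math.log(5.0) / 4.0)  # 2F1(1, 1; 2; -y) = ln(1 + y) / y
```

Handling the integer case by skipping a single index keeps the loop identical for both branches, at the cost of one extra comparison per term.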

4.2. Analysis of Convergence and Numerical Stability

In this subsection, we analyze the convergence and numerical stability of Algorithm 1, establishing sharp upper bounds on its truncation error and confirming its geometric convergence rate. We begin with a key property that provides a uniform bound for the difference between fractional terms, as shown in Property 1.

Property 1.

Let and define . Then, for any integer and , the following bound holds:

Proof.

Let with . Define . Since , we have

For , set with . We have . Thus, is strictly decreasing with n, and

For , we have for , for , and always. Thus, , which is the bound; for , is monotonically decreasing, and its maximum is . Therefore, holds for all . Substituting into (13) yields the desired inequality (12). □

The above property provides a crucial uniform bound, which is essential for establishing the uniform convergence and numerical stability of the RTA. Building on this, we present a theorem that characterizes the geometric decay of the truncation error.

Theorem 3

(Error Bound of RTA). Let $y > 1$, and let $R_m$ denote the truncation error after m iterations of Algorithm 1. Then:

- If and ,

- If ,

In either case, the error decays geometrically with ratio $1/y$ as m increases.

Proof.

For non-integer x, Equation (8) implies that the truncation error after m iterations is

$$ R_m = x\sum_{n=m+1}^{\infty} \frac{(-1)^n\,y^{-n}}{n-x}. \tag{16} $$

Setting in (16) as k, Property 1 ensures Therefore, The geometric series sum is This establishes (14).

For , we analyze with . Observe that when or , the corresponding term is absent from the expression. For , the expression reduces to ; for , it reduces to . For , it is straightforward to check that For , we have In all cases, the magnitude is always bounded by 1. Therefore, every term in the remainder sum is uniformly bounded, so that which proves (15). □

Theorem 3 establishes that RTA achieves geometric error decay with ratio $1/y$, so only a few iterations suffice to reach high accuracy when y is moderate or large. The explicit bounds keep the truncation error tightly controlled even in high-precision settings, and because they are in closed form, they directly determine the number of iterations required for any target accuracy. These properties make RTA well suited to precision-demanding scientific computations, combining theoretical reliability with practical efficiency.
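The idea of fixing the iteration count in advance can be illustrated with a simpler, looser bound that follows directly from (8); it is not the sharper bound of Theorem 3. For non-integer x, let d be the distance from x to the nearest integer. Then $|x/(n-x)| \le x/d$ for every integer n, so the tail after the series terms $n = 1, \dots, m$ satisfies $|R_m| \le (x/d)\sum_{n>m} y^{-n} = (x/d)\,y^{-m}/(y-1)$. Solving for m gives the count below; the function names are hypothetical.

```python
import math


def rta_terms_needed(x: float, y: float, tol: float) -> int:
    """Smallest m >= 0 with (x / d) * y**(-m) / (y - 1) <= tol, where d = distance(x, Z)."""
    d = abs(x - round(x))
    if y <= 1.0 or x <= 0.0 or d == 0.0:
        raise ValueError("requires y > 1 and non-integer x > 0")
    const = x / (d * (y - 1.0))
    return max(0, math.ceil(math.log(const / tol) / math.log(y)))


def rta_partial_sum(x: float, y: float, m: int) -> float:
    """Formula (8) truncated after the series terms n = 1..m."""
    total = math.pi * x / math.sin(math.pi * x) * y ** (-x)
    power = 1.0  # holds (-1/y)^n
    for n in range(1, m + 1):
        power /= -y
        total += x * power / (n - x)
    return total


m = rta_terms_needed(0.5, 4.0, 1e-12)
print(m, abs(rta_partial_sum(0.5, 4.0, m) - math.atan(2.0) / 2.0))
```

Because this bound uses the worst case over all n, it becomes pessimistic when x is close to an integer, which is one reason a sharper, case-by-case bound is worthwhile.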

4.3. Hybrid Algorithm for Solving ${}_2F_1(1, x; 1+x; -y)$

Both the FPT algorithm proposed by Cai et al. [5] and Algorithm 1 converge rapidly when computing ${}_2F_1(1, x; 1+x; -y)$, although each is most efficient in a different parameter regime. Specifically, FPT is more efficient for small values of y, whereas RTA excels as y grows large. To exploit these complementary strengths uniformly, we introduce a hybrid approach that adaptively selects the better solver based on y.

The convergence rate of each method is governed by y. For FPT [5], the error diminishes by a factor of $y/(1+y)$ per iteration, while for RTA, the error is reduced by a factor of $1/y$ per iteration. Selecting the more efficient algorithm thus corresponds to solving the inequality $1/y < y/(1+y)$, which for $y > 0$ is equivalent to $y^2 - y - 1 > 0$. This quadratic inequality identifies its positive root, the golden ratio $\varphi = (1+\sqrt{5})/2 \approx 1.618$, as a precise theoretical threshold. Hence, RTA has the faster asymptotic convergence for $y > \varphi$, whereas the FPT algorithm is preferable for $y < \varphi$.

Unlike empirically tuned thresholds, which are sensitive to practical conditions and implementation-specific factors, the threshold above follows from an analysis of the intrinsic convergence rates of the two algorithms. It therefore offers a principled and broadly applicable basis for the hybrid strategy, ensuring robustness and consistency across a wide range of computational scenarios. Building on this foundation, we present a hybrid solver for ${}_2F_1(1, x; 1+x; -y)$ that adaptively selects the more efficient algorithm according to the parameter regime, as shown in Algorithm 2.

Algorithm 2 Hybrid Algorithm

Require: $x > 0$, $y \ge 0$
Ensure: ${}_2F_1(1, x; 1+x; -y)$

Algorithm 2 synthesizes the complementary strengths of FPT [5] and our RTA method, guided by a theoretically established golden-ratio threshold. This unified framework achieves optimal iteration complexity and reliability across all parameter regimes, making it especially suitable for large-scale, high-precision applications where robust and efficient evaluation of ${}_2F_1(1, x; 1+x; -y)$ is critical.
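A compact Python sketch of the hybrid rule follows. It pairs the Pfaff form with $a = 1$ and the reciprocal-argument series (including its integer-x limit), both written as illustrative re-implementations with assumed stopping rules and hypothetical function names rather than the authors’ released code, and switches at $\varphi = (1+\sqrt{5})/2$, where the per-term ratios $1/y$ and $y/(1+y)$ coincide.

```python
import math

PHI = (1.0 + math.sqrt(5.0)) / 2.0  # positive root of y^2 - y - 1 = 0


def pfaff_series(x: float, y: float, tol: float) -> float:
    """(1 + y)^(-1) * 2F1(1, 1; 1 + x; y / (1 + y)); per-term ratio tends to y / (1 + y)."""
    w = y / (1.0 + y)
    total, coeff, power, n = 0.0, 1.0, 1.0, 0
    while True:
        term = coeff * power
        total += term
        n += 1
        if term * y <= tol * total:  # tail of the inner series is at most term * y
            return total / (1.0 + y)
        coeff *= n / (n + x)
        power *= w


def reciprocal_series(x: float, y: float, tol: float) -> float:
    """Reciprocal-argument series; per-term ratio tends to 1 / y. Requires y > 1."""
    if float(x).is_integer():
        skip = int(x)  # logarithmic limit replaces the singular pair
        total = (-1) ** (skip + 1) * x * y ** (-x) * math.log(y)
    else:
        skip = -1
        total = math.pi * x / math.sin(math.pi * x) * y ** (-x)
    power, n = 1.0, 0
    while True:
        n += 1
        power /= -y
        if n == skip:
            continue
        term = x * power / (n - x)
        total += term
        if n > x and abs(term) <= tol * abs(total):
            return total


def hybrid(x: float, y: float, tol: float = 1e-16) -> float:
    """Evaluate 2F1(1, x; 1 + x; -y) for x > 0, y >= 0 with a golden-ratio switch."""
    if x <= 0.0 or y < 0.0:
        raise ValueError("requires x > 0 and y >= 0")
    return reciprocal_series(x, y, tol) if y > PHI else pfaff_series(x, y, tol)


for y in (0.5, PHI, 10.0, 1e6):
    print(y, hybrid(0.5, y), math.atan(math.sqrt(y)) / math.sqrt(y))
```

The switch compares only asymptotic per-term ratios and ignores constant factors such as the cost of `math.sin` and `math.log`, so in practice the measured crossover may sit slightly away from $\varphi$.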

5. Experimental Evaluation

In this section, we evaluate the proposed RTA and Hybrid algorithms for computing ${}_2F_1(1, x; 1+x; -y)$, benchmarking them against the built-in Gauss hypergeometric implementations of MATLAB R2024a (MatHyp, implemented by the function ‘hypergeom’) and Python 3.12.10 (PyHyp, implemented by SciPy’s ‘hyp2f1’). The experiments cover runtime efficiency, numerical accuracy, robustness across representative ranges of x and y, and practical performance in the realistic application scenario described in Section 3.3. All experiments were conducted on a platform with an Intel Core i9-12900H CPU @ 2.50 GHz and 32 GB RAM. The experimental code has been made publicly available at https://github.com/imcjp/GaussRTA (accessed on 17 July 2025).

5.1. Convergence and Runtime Evaluation

We systematically examine the numerical convergence and runtime performance of the proposed RTA and Hybrid algorithms, comparing them against MatHyp, PyHyp, and the FPT method [5]. Representative values of x and y are selected to cover various practical regimes. Each case is repeated 1000 times, and the averaged results are reported.

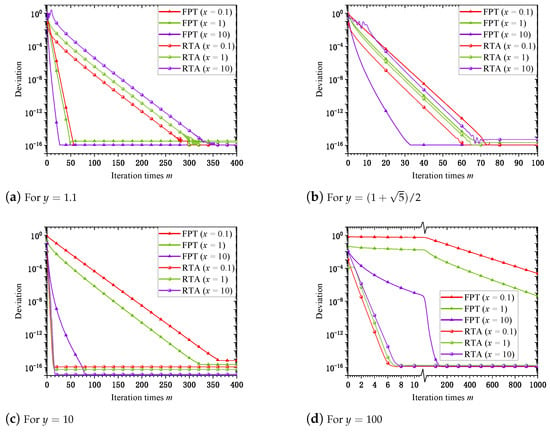

Figure 1 illustrates the convergence behavior of the proposed RTA and the existing FPT algorithm as a function of the iteration count. We select representative values of y covering moderate to large regimes that satisfy the condition $y > 1$ required by RTA. The convergence metric is the deviation, defined as the absolute difference from MatHyp, which serves as a high-precision numerical reference. Both RTA and FPT eventually reach deviations on the order of $10^{-16}$, i.e., double-precision machine epsilon, demonstrating complete numerical convergence. At the critical point $y = (1+\sqrt{5})/2 \approx 1.618$, the convergence rates of RTA and FPT closely match, supporting the choice of this value as the switching threshold in the Hybrid algorithm. At larger values of y, RTA clearly outperforms FPT, converging within substantially fewer iterations. At the largest values of y tested, FPT fails to reach satisfactory accuracy even after 1000 iterations, whereas RTA converges within far fewer iterations, highlighting its numerical stability and computational advantage for large y. These results confirm that RTA is well suited to numerically challenging applications involving large y.

Figure 1.

Deviation of the algorithms from the MatHyp reference across different y.
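The qualitative trend in Figure 1 can be reproduced by counting how many terms each series needs before its own stopping rule fires. The Python sketch below does this for x = 0.5 and a few values of y chosen here purely for illustration (they are not the values used in the experiments), using the Pfaff form with $a = 1$ and the reciprocal-argument series (8), each with an assumed stopping rule. It measures term counts, not wall-clock time, and the function names are hypothetical.

```python
import math


def pfaff_terms(x: float, y: float, tol: float = 1e-16) -> int:
    """Terms needed by the Pfaff-form series in w = y / (1 + y)."""
    w = y / (1.0 + y)
    total, coeff, power, n = 0.0, 1.0, 1.0, 0
    while True:
        term = coeff * power
        total += term
        n += 1
        if term * y <= tol * total:  # tail bound for non-increasing coefficients
            return n
        coeff *= n / (n + x)
        power *= w


def rta_terms(x: float, y: float, tol: float = 1e-16) -> int:
    """Series terms needed by (8) for non-integer x and y > 1."""
    total = math.pi * x / math.sin(math.pi * x) * y ** (-x)
    power, n = 1.0, 0
    while True:
        n += 1
        power /= -y
        term = x * power / (n - x)
        total += term
        if n > x and abs(term) <= tol * abs(total):
            return n


print(f"{'y':>8} {'Pfaff':>8} {'RTA':>6}")
for y in (1.2, (1 + math.sqrt(5)) / 2, 10.0, 100.0, 1000.0):
    print(f"{y:8.3f} {pfaff_terms(0.5, y):8d} {rta_terms(0.5, y):6d}")
```

Below the golden-ratio threshold the Pfaff form needs fewer terms, and above it the reciprocal-argument series wins by a margin that widens rapidly with y.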

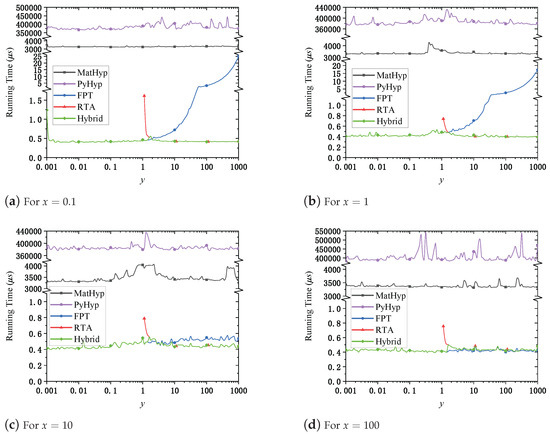

Figure 2 evaluates the runtime of the algorithms as a function of y for representative fixed values of x, covering both small and large magnitudes frequently encountered in practical simulations. MatHyp and PyHyp serve as reference baselines and consistently incur higher computational overhead than our methods: MatHyp requires approximately 3–4 ms per evaluation, while PyHyp is even more costly, reaching up to 0.4 s. As y increases beyond unity, the runtime of FPT increases markedly, especially for smaller values of x, revealing FPT’s limited scalability for large y. In contrast, RTA maintains consistently low runtimes (typically below 1 μs) once y exceeds the threshold $(1+\sqrt{5})/2 \approx 1.618$, confirming its complementary advantage for large y. The Hybrid algorithm exploits this complementarity by switching between RTA and FPT according to y, achieving uniformly low runtime across all evaluated conditions and substantial speedups over the built-in solvers, which enables rapid and reliable large-scale simulations. The relative advantage of RTA does, however, diminish somewhat with increasing x.

Figure 2.

Algorithmic running time versus y growth for different x.

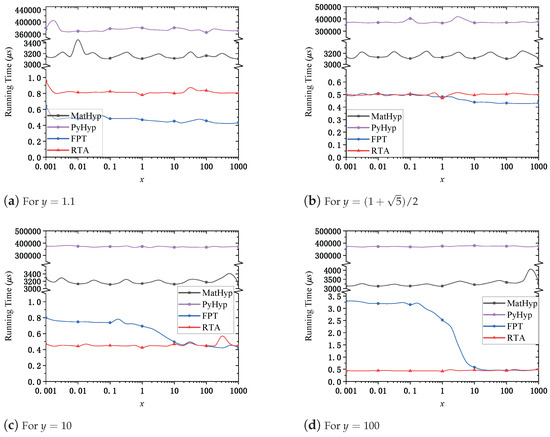

Figure 3 examines how runtime varies with increasing x at selected fixed values of y, again chosen to represent critical operating regimes. MatHyp and PyHyp remain stable but slow across all examined values of x. RTA shows stable, low, and predictable runtime over the entire range of x. For small x, RTA is consistently faster than FPT, an advantage that is most pronounced at larger y. As x increases, however, the gap between RTA and FPT narrows, indicating a diminishing relative benefit of RTA in this regime. The Hybrid algorithm combines the strengths of both methods by selecting the faster algorithm for each $(x, y)$ pair, so its performance stays consistently close to the better of the two regardless of the magnitudes of x and y, demonstrating its practical value in adaptive computation.

Figure 3.

Algorithmic running time versus x growth for different y.

Collectively, these experiments confirm that RTA converges rapidly and remains computationally stable, which is particularly advantageous for large y. This evaluation highlights the effectiveness and practical value of the proposed approaches for computing ${}_2F_1(1, x; 1+x; -y)$.

5.2. Performance in Non-Uniform Cellular Coverage Simulation

To further validate the practical efficacy and accuracy of the proposed Hybrid algorithm, we evaluate SINR coverage calculation in the non-uniform cellular network scenario introduced in Section 3.3. In this context, accurate and rapid computation of ${}_2F_1(1, x; 1+x; -y)$ is crucial, because the function appears directly in the interference integrals that determine the SINR coverage probability. Given PyHyp’s excessive runtime in Section 5.1, we compare the following three algorithmic settings:

- MatHyp: MATLAB’s built-in solver, serving as the accurate but computationally intensive reference;

- FPT Only: only the Pfaff transformation-based algorithm FPT [5];

- Hybrid: the proposed Hybrid algorithm (Algorithm 2), which integrates RTA with FPT.

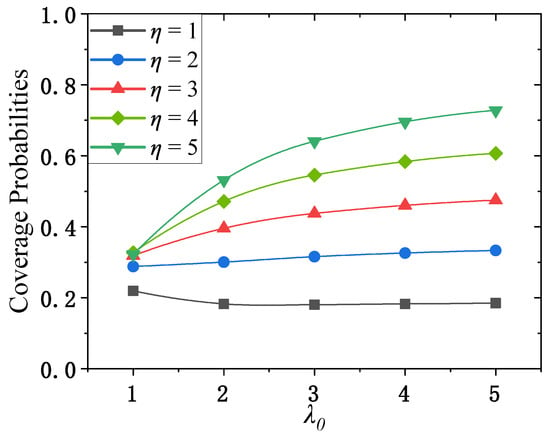

Experimental parameters were selected to reflect realistic cellular network scenarios. All simulation and numerical-analysis parameters, along with their values or sampling ranges, are summarized in Table 1. Particular emphasis was placed on the path-loss exponent and the average AP density, which were varied systematically to assess the computational performance of evaluating ${}_2F_1(1, x; 1+x; -y)$ under different propagation and density conditions. For each (path-loss exponent, AP density) pair, coverage probabilities were computed over uniformly sampled reliable-link probabilities P from 0 to 1, and the runtimes and relative errors were averaged. Figure 4 shows the coverage probabilities computed by MatHyp, which serve as the benchmark and are consistent with the results of Pratt et al. [9].

Table 1.

Simulation and numerical analysis parameters.

Figure 4.

SINR coverage simulation for different path-loss exponents and AP densities.

Table 2 compares the accuracy of the FPT Only approach and the proposed Hybrid algorithm, using MatHyp as the benchmark. The Hybrid approach not only improves computational efficiency substantially but also outperforms FPT Only in numerical precision. Specifically, its relative errors remain consistently at , indicating near-perfect agreement with MatHyp. In contrast, FPT Only exhibits slightly higher maximum relative errors ( ). Integrating RTA within the Hybrid framework therefore improves both efficiency and accuracy.

Table 2.

Relative error of coverage probability.

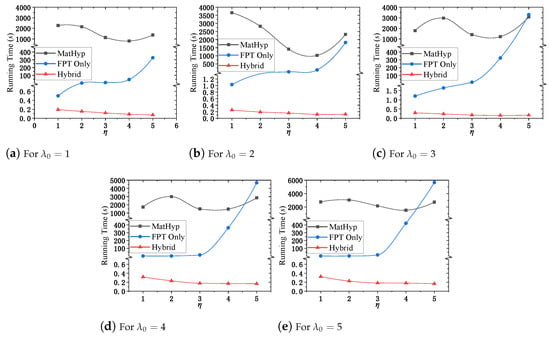

Figure 5 shows the computational runtime as the path-loss exponent varies, for fixed AP densities . MatHyp, although accurate, requires excessively long runtimes that make it impractical for extensive simulation campaigns. FPT Only is significantly faster than MatHyp, but its runtime still grows substantially at higher , reflecting the increased cost of evaluating under severe propagation conditions (e.g., Figure 5e, ). In sharp contrast, Hybrid remains stable, consistently achieving sub-second runtimes across the full range of values and delivering at least a thousandfold reduction in runtime. This robustness highlights the scalability of the Hybrid algorithm in handling the computational challenges that arise directly from variations in .
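One plausible explanation for the growth of the FPT Only runtime with the path-loss exponent can be sketched under an illustrative assumption: that the kernel has the form ${}_2F_1(1, 1-\delta; 2-\delta; -y)$ with $\delta = 2/\alpha$. After the Pfaff transformation the series runs in $x = y/(1+y)$, and the standard asymptotic $(a)_n \sim n!\,n^{a-1}/\Gamma(a)$ gives

$$
{}_2F_1(1, 1-\delta; 2-\delta; -y) = (1+y)^{\delta-1} \sum_{n=0}^{\infty} c_n\, x^{n},
\qquad
c_n = \frac{(1-\delta)_n^{2}}{(2-\delta)_n\, n!} \sim \frac{\Gamma(2-\delta)}{\Gamma(1-\delta)^{2}}\, n^{-1-\delta}.
$$

A larger $\alpha$ means a smaller $\delta$, so the algebraic factor $n^{-1-\delta}$ decays more slowly. Moreover, when $y$ is large, $x \to 1$, and the geometric factor alone requires roughly $N \approx \ln(1/\varepsilon)/\ln(1+1/y) \approx y\ln(1/\varepsilon)$ terms to reach a tolerance $\varepsilon$. A reciprocal-argument series with ratio $1/y$ does not depend on $\alpha$ and improves as $y$ grows, which is consistent with, though not a proof of, the flat Hybrid runtimes observed here.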

Figure 5.

Running time of SINR coverage simulation versus growth for different .

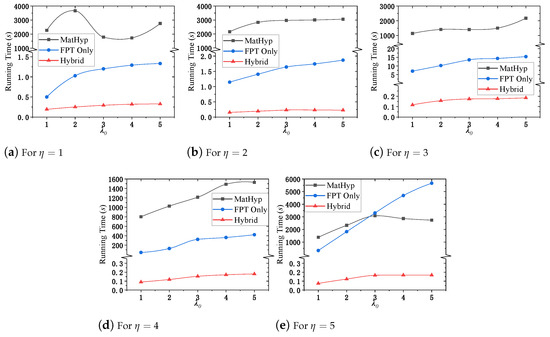

Figure 6 takes a complementary perspective, evaluating runtime as a function of AP density for fixed . Although the density does not appear directly in the expression of , a higher density indirectly increases the computational load through more frequent function evaluations and more complex integration. Both MatHyp and FPT Only exhibit significant runtime growth as AP density increases, which is especially noticeable in high- cases (e.g., Figure 6e). By contrast, the Hybrid algorithm remains largely insensitive to density, with minimal runtime increase even in dense scenarios. This result shows that the Hybrid algorithm also handles the indirect computational complexity arising from parameter interactions beyond the direct evaluation of .

Figure 6.

Running time of SINR coverage simulation versus growth for different .

Collectively, these results confirm that incorporating RTA into the Hybrid algorithm markedly improves both computational efficiency and numerical precision in SINR coverage probability simulations for non-uniform cellular networks. The runtime reduction, often several orders of magnitude relative to conventional methods, combined with improved accuracy, supports the applicability of the Hybrid algorithm to practical large-scale network simulations and real-time optimization frameworks.

6. Conclusions

This paper proposes an efficient computational framework for accurately evaluating the zero-balanced Gauss hypergeometric function , which serves as a foundational mathematical kernel in various wireless network performance metrics, particularly in SINR coverage analyses of non-uniform cellular networks. The RTA algorithm, based on a Mellin–Barnes reciprocal-argument identity, achieves geometric convergence (convergence factor ) with analytically derived coefficients, thereby avoiding computationally intensive special-function evaluations. The Hybrid algorithm further integrates RTA with FPT through a golden-ratio-based switching criterion, combining computational efficiency with improved numerical precision. Comprehensive experiments confirm that the Hybrid algorithm achieves machine-precision accuracy (errors below ) within 1 μs per evaluation, substantially outperforming conventional implementations in speed and stability. Moreover, realistic SINR coverage evaluations show that the Hybrid algorithm reduces computational time from several hours to fractions of a second and scales well with respect to both direct and indirect complexity factors.

Future work will extend our computational methodology to multivariate hypergeometric functions, which are critically relevant to advanced MIMO interference analyses and ultra-dense network (UDN) scenarios. Building on recent studies of resource allocation and UDN-specific optimization [23,24,25,26,27], including the definitions outlined in [23], we will incorporate detailed modeling of link reliability, interference, multipath fading, shadowing, and realistic densities of base stations and user equipment. By comparing the resulting coverage probability and SINR values against theoretical predictions, we will further validate our computational methods. Implementation-level enhancements, particularly parallelization and GPU acceleration, will also be investigated to enable hardware-efficient, real-time network optimization. Together, these directions aim to bridge the gap between advanced analytical methods and practical communication system design, providing a computational foundation for next-generation ultra-dense wireless networks.

Author Contributions

Conceptualization, J.C.; Data curation, Z.Y.; Formal analysis, J.C.; Funding acquisition, J.C.; Investigation, Z.Y.; Methodology, J.C.; Project administration, J.C.; Resources, Z.Y.; Software, J.C.; Supervision, Z.Y.; Validation, J.C. and Z.Y.; Visualization, Z.Y.; Writing—original draft, J.C.; Writing—review and editing, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China grant number 62402111.

Data Availability Statement

The data presented in this study are openly available in Github at https://github.com/imcjp/GaussRTA (accessed on 17 July 2025) [31].

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| RTA | Reciprocal-Argument Transformation Algorithm |
| FPT | Fast Pfaff Transformation Algorithm |
| SINR | Signal-to-Interference-plus-Noise Ratio |
| SNR | Signal-to-Noise Ratio |

References

- Gauss, C.F. Disquisitiones generales circa seriem infinitam . Comment. Soc. Regiae Sci. Gottingensis Recent. 1813, 2, 1–46.

- Kalmykov, M.; Bytev, V.; Kniehl, B.A.; Moch, S.-O.; Ward, B.F.L.; Yost, S.A. Hypergeometric Functions and Feynman Diagrams. In Anti-Differentiation and the Calculation of Feynman Amplitudes; Springer: Berlin/Heidelberg, Germany, 2021; pp. 189–234.

- Michel, N.; Stoitsov, M.V. Fast Computation of the Gauss Hypergeometric Function with All Its Parameters Complex with Application to the Pöschl–Teller–Ginocchio Potential Wave Functions. Comput. Phys. Commun. 2008, 178, 535–551.

- Pearson, J.W.; Olver, S.; Porter, M.A. Numerical Methods for the Computation of the Confluent and Gauss Hypergeometric Functions. Numer. Algorithms 2017, 74, 821–866.

- Cai, J.; Chen, M.; Zhang, S.; Hong, C.; Lu, Y. A fast algorithm for solving a kind of gauss hypergeometric functions in wireless communication based on pfaff transformation. In Proceedings of the 2019 International Conference on Networking and Network Applications (NaNA), Daegu, Republic of Korea, 10–13 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 85–89.

- López-Benítez, M. Outage probability and average error performance of modulation schemes under η-μ and κ-μ fading channels in terms of elementary functions. In Proceedings of the 2016 10th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP), Prague, Czech Republic, 20–22 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Andrews, J.G.; Baccelli, F.; Ganti, R.K. A Tractable Approach to Coverage and Rate in Cellular Networks. IEEE Trans. Commun. 2011, 59, 3122–3134.

- Mukhtar, S.; Begh, G.R. Unified Performance Analysis of Near and Far User in Downlink NOMA System over η-μ Fading Channel. J. Commun. Softw. Syst. 2021, 17, 305–313.

- Pratt, P.; Dettmann, C.P.; Georgiou, O. Optimal non-uniform deployments in ultra-dense finite-area cellular networks. IEEE Commun. Lett. 2017, 21, 1139–1142.

- Hankin, R.K.S. Numerical Evaluation of the Gauss Hypergeometric Function with the hypergeo Package. R J. 2015, 7, 81–88.

- Pfaff, J.F. Disquisitiones Analyticae Maxime ad Calculum Integralem et Doctrinam Serierum Pertinentes; C. G. Fleckeisen: Helmstedt, Germany, 1797; Volume 1.

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables; Courier Corporation: North Chelmsford, MA, USA, 1965; Volume 55.

- Slater, L.J. Generalized Hypergeometric Functions; Cambridge University Press: Cambridge, UK, 1966.

- Schneider, B.I.; Miller, B.R.; Saunders, B.V. NIST’s Digital Library of Mathematical Functions. Phys. Today 2018, 71, 48–53.

- Cuchta, T.; Grow, D.; Wintz, N. Discrete matrix hypergeometric functions. J. Math. Anal. Appl. 2023, 518, 126716.

- Cuchta, T.; Grow, D.; Wintz, N. Divergence criteria for matrix generalized hypergeometric series. Proc. Am. Math. Soc. 2022, 150, 1235–1240.

- Cuchta, T.J. Discrete Analogues of Some Classical Special Functions; Missouri University of Science and Technology: Rolla, MO, USA, 2015.

- Dickman, K. On the frequency of numbers containing prime factors of a certain relative magnitude. Ark. Mat. Astron. Och Fys. 1930, 22, A–10.

- de Bruijn, N.G. On the number of positive integers ≤ x and free of prime factors > y, II. Indag. Math 1966, 38, 239–247.

- Hildebrand, A. On the number of positive integers ≤ x and free of prime factors > y. J. Number Theory 1986, 22, 289–307.

- Zhang, X.; Andrews, J.G. Downlink cellular network analysis with multi-slope path loss models. IEEE Trans. Commun. 2015, 63, 1881–1894.

- Jo, H.S.; Sang, Y.J.; Xia, P.; Andrews, J.G. Heterogeneous cellular networks with flexible cell association: A comprehensive downlink SINR analysis. IEEE Trans. Wirel. Commun. 2012, 11, 3484–3495.

- Moghaddam, S.S.; Ashoor, E. Kuhn-Munkres-Based Sub-optimum Resource Allocation Algorithm for Ultra-Dense Networks. In Proceedings of the 2024 11th International Symposium on Telecommunications (IST), Tehran, Iran, 9–10 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 273–278.

- Stoynov, V.; Poulkov, V.; Valkova-Jarvis, Z.; Iliev, G.; Koleva, P. Ultra-dense networks: Taxonomy and key performance indicators. Symmetry 2022, 15, 2.

- Cho, H.; Mukherjee, S.; Kim, D.; Noh, T.; Lee, J. Facing to wireless network densification in 6G: Challenges and opportunities. ICT Express 2023, 9, 517–524.

- Kwong, C.F.; Shi, C.; Liu, Q.; Yang, S.; Chieng, D.; Kar, P. Autonomous handover parameter optimisation for 5G cellular networks using deep deterministic policy gradient. Expert Syst. Appl. 2024, 246, 122871.

- Kim, S.J.; Kim, J.G. Location-based resource allocation in ultra-dense network with clustering. Sensors 2021, 21, 4022.

- Forrey, R.C. Computing the hypergeometric function. J. Comput. Phys. 1997, 137, 79–100.

- Kristensson, G. Second Order Differential Equations: Special Functions and Their Classification; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

- Knuth, D. The Art of Computer Programming, Volume 1: Fundamental Algorithms, 3rd ed.; China Machine Press: Beijing, China, 1998.

- Cai, J. GaussRTA: A Project for Gauss RTA Implementation. GitHub. Available online: https://github.com/imcjp/GaussRTA (accessed on 17 July 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).