Abstract

Water body detection in synthetic aperture radar (SAR) imagery plays a critical role in applications such as disaster response, water resource management, and environmental monitoring. However, it remains challenging due to complex background interference in SAR images. To address this issue, a bi-encoder and hybrid feature fuse network (BiEHFFNet) is proposed for accurate water body detection. First, a bi-encoder structure based on ResNet and Swin Transformer is used to jointly extract local spatial details and global contextual information, enhancing feature representation in complex scenarios. Additionally, the convolutional block attention module (CBAM) is employed to suppress irrelevant information in the output features of each ResNet stage. Second, a cross-attention-based hybrid feature fusion (CABHFF) module is designed to interactively integrate local and global features through cross-attention, followed by channel attention to achieve effective hybrid feature fusion, thus improving the model’s ability to capture water structures. Third, a multi-scale content-aware upsampling (MSCAU) module is designed by integrating atrous spatial pyramid pooling (ASPP) with the Content-Aware ReAssembly of FEatures (CARAFE), aiming to enhance multi-scale contextual learning while alleviating feature distortion caused by upsampling. Finally, a composite loss function combining Dice loss and Active Contour loss is used to provide stronger boundary supervision. Experiments conducted on the ALOS PALSAR dataset demonstrate that the proposed BiEHFFNet outperforms existing methods across multiple evaluation metrics, achieving more accurate water body detection.

MSC:

68T07

1. Introduction

Water body detection plays a crucial role in ecological monitoring, water resource management, and disaster prevention. Precise extraction of surface water information from remote sensing imagery not only facilitates early warning and assessment of disasters such as floods, but also supports environmental change monitoring. Therefore, water body detection is of great significance for ecological governance and sustainable development. Synthetic aperture radar (SAR) imagery, with its all-weather and all-day imaging capability, provides stable high-resolution images even under adverse climatic conditions such as rain, fog, and cloud cover, demonstrating unique advantages in water body detection [1,2,3]. Particularly, the distinct differences in electromagnetic backscattering characteristics between water and land make pixel-level detection based on SAR images one of the mainstream approaches [4,5]. However, SAR-image-based water body detection still faces some practical challenges. More precisely, SAR images with complex backgrounds are characterized by large variations in grayscale values and the presence of diverse surface targets, making the detection task particularly challenging. In particular, the presence of non-water features, such as wetlands and marshes, can lead to scattering patterns that resemble water bodies due to surface moisture and vegetation. This increases the likelihood of misclassification. Additionally, shadow regions cast by terrain or buildings often have a low backscatter similar to that of water bodies, resulting in further false positives. These issues are illustrated in Figure 1a and Figure 1b, respectively.

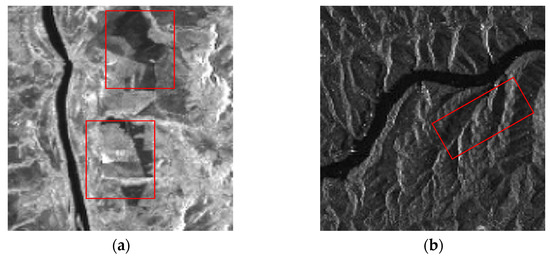

Figure 1.

Two cases of SAR images with complex backgrounds and some representative disturbing areas marked with red frames. (a) Wetlands or marshes in the background, (b) shadowed areas in the background.

As Figure 1 shows, wetlands and marshes are not actual water bodies, yet they often produce water-like scattering responses due to surface moisture and vegetation, leading to frequent false positives. Similarly, shadowed areas caused by terrain or man-made structures typically appear dark in SAR images due to low backscatter and are therefore prone to being misclassified as water bodies. In addition, topographic variations and imaging noise can cause blurry boundaries, fragmented predictions, and disconnected river structures, posing challenges to accurate water body segmentation. Moreover, traditional methods often depend strongly on local features and struggle to combine local details with global contextual information, further limiting detection accuracy. Therefore, achieving accurate water body detection under complex backgrounds remains a highly challenging task.

Water body detection in SAR images can generally be classified as unsupervised and supervised approaches. Unsupervised methods typically rely on pixel-level statistical features for classification, such as histogram-based thresholding, clustering analysis, and edge detection. Although these techniques yield good results in simple backgrounds, their effectiveness significantly declines in complex environments due to the lack of contextual understanding and deep feature modeling capabilities. To overcome the limitations of traditional unsupervised methods in challenging scenarios, researchers have turned to supervised learning approaches, which utilize labeled data to extract richer image features for classification. Pulvirenti et al. [6] proposed a flood mapping algorithm for SAR images based on fuzzy logic reasoning. Qin et al. [7] integrated polarimetric decomposition features with a Naive Bayes classifier. Gasnier et al. [8] employed external auxiliary databases and Conditional Random Fields (CRFs) to propose an automatic method for refining water boundaries. Although these supervised approaches have achieved noticeable improvements in feature representation and detection accuracy compared to traditional unsupervised methods, they still heavily rely on handcrafted features, making it difficult to fully capture the complex nonlinear relationships intrinsic to SAR imagery.

In recent years, deep learning has achieved remarkable success in the field of image segmentation and classification. Deep learning methods model spatial relationships between pixels more effectively than traditional methods, which improves detection accuracy in complex scenes. The fully convolutional network (FCN) [9] was the first to introduce convolutional neural networks (CNNs) for end-to-end pixel-wise prediction tasks. U-Net [10] markedly enhanced segmentation performance by incorporating skip connections, which align encoder and decoder features to retain spatial details more effectively. SegNet [11] recorded pooling indices during downsampling to guide accurate feature reconstruction, making it suitable for high-resolution segmentation tasks. The DeepLab [12] series leveraged atrous convolutions and gradually combined atrous spatial pyramid pooling (ASPP) [13] to enhance multi-scale contextual awareness. Due to these advances, deep learning has been widely applied to water body detection in SAR images. EDWNet [14] introduced a symmetric encoder–decoder architecture with an edge refinement module, improving water edge localization. MADF-Net [15] adopted a dense feature fusion module to integrate semantic and edge features, boosting representational capacity and detection accuracy. SCR-Net [16] used a two-branch encoder to enhance water feature extraction by incorporating spectral information from different SAR bands. FFEDN [17] utilized polarimetric and backscattering features of SAR images to better delineate water structures. SDNet [18] proposed a hierarchical sandwich-style decoder to refine object boundaries and structures progressively, achieving excellent results in small-scale water detection. FWENet [19] presented a multi-scale decoder integrating dilated convolutions and residual connections to improve generalization in complex scenes. Furthermore, attention mechanisms have been incorporated to enhance model performance. 
DAM-Net [20] introduced a differential attention mechanism and Vision Transformer to dynamically model structural changes across time-series SAR imagery, achieving high-precision detection. WaterFormer [21] combined CNN and Transformer structures, leveraging self-attention to enhance global contextual understanding while maintaining spatial resolution. FCMANet [22] fused class-aware and multi-scale attention to enable finer feature classification and integration, significantly improving the accuracy of water body detection.

Although the aforementioned studies have made notable advances in water body detection, they still fall short in effectively extracting and focusing on water-specific features. As a result, existing methods often fail to achieve satisfactory detection accuracy under complex backgrounds. To address these challenges, we propose a novel water body detection network called BiEHFFNet, which integrates a bi-encoder architecture, a hybrid feature fusion module, and a multi-scale content-aware upsampling module. The main contributions of this work are summarized as follows:

- (1) A bi-encoder architecture combining ResNet and Swin Transformer is employed to jointly extract local texture details and global contextual semantics. This collaborative design enhances the completeness of feature representation and better characterizes the shape and boundaries of water bodies under complex backgrounds. In addition, the CBAM is incorporated to suppress irrelevant information in the output features of each ResNet stage.

- (2) A cross-attention-based hybrid feature fusion (CABHFF) module is proposed to fuse features from the bi-encoder. By integrating cross-attention and channel attention for learning feature weights, this module enables interaction and effective fusion between local and global features, thereby improving the model’s capability to capture water-related structures.

- (3) A multi-scale content-aware upsampling (MSCAU) module, which integrates ASPP and the lightweight CARAFE operator, is incorporated in the decoder to enhance multi-scale feature representation and restore fine spatial details, thereby alleviating feature distortion during upsampling and improving the precision of water body detection.

- (4) A composite loss function is designed by combining Dice loss and Active Contour loss. This combination not only addresses class imbalance between water and non-water regions but also strengthens boundary constraints, further boosting the detection performance.

2. Materials and Methods

2.1. Encoder–Decoder Architecture

The encoder–decoder architecture is one of the most widely adopted frameworks in deep learning, initially introduced by Cho et al. [23] and Sutskever et al. [24]. Its fundamental principle involves compressing input data into low-dimensional latent representations through an encoder, followed by a decoder that reconstructs the output through progressive upsampling, facilitating end-to-end representation learning and output synthesis. In semantic segmentation tasks, the encoder extracts multi-scale and high-level semantic features via progressive spatial downsampling, while the decoder restores spatial resolution to produce pixel-wise predictions aligned with the input image. In water body detection, this structure effectively models the semantic contrast between water and non-water regions in challenging scenes and enhances boundary recovery and small target recognition through decoding. A variety of models have further developed this architecture: U-Net++ [25] enhances multi-scale feature fusion via nested and dense connections; UNet 3+ [26] utilizes full-scale skip connections in the encoder–decoder pathway to boost spatial information restoration in upsampling; SegFormer [27] improves boundary localization by employing a Transformer-based encoder combined with a lightweight decoder, enabling efficient multi-scale feature aggregation and precise segmentation; DeepLabv3+ [28] incorporates atrous convolution and spatial pyramid pooling to strengthen contextual modeling; and BASNet [29] combines deep supervision with a residual refinement module to simultaneously capture global semantics and fine details. Overall, the encoder–decoder framework is well suited to feature extraction and spatial detail reconstruction, and it scales well. By using skip connections, multi-scale fusion, and advanced upsampling methods, it reduces information loss and semantic confusion in deep networks. 
As such, it has become a foundational and continually evolving framework in water body detection, significantly contributing to the advancement of segmentation performance in complex scenarios.

2.2. Attention Mechanism

In recent years, owing to the remarkable success of attention mechanisms [30,31,32] across various tasks, a growing number of studies have integrated attention modules into deep learning models [33,34]. The core idea of attention is to capture feature correlations. It enhances important regions and suppresses irrelevant information, helping the model focus on key targets. The Squeeze-and-Excitation (SE) [35] module enhances significant channel-specific features by modeling inter-channel dependencies. The convolutional block attention module (CBAM) [36] decouples channel and spatial attention, significantly enhancing the network’s spatial localization and semantic focus capabilities. The dual attention module [37] simultaneously models global interactions in spatial and channel domains, improving semantic understanding and structural modeling. Boundary-aware attention mechanisms directly increase responsiveness to object edges, thereby refining localization accuracy. Cross-attention [38] facilitates feature interaction between different sources and has shown effectiveness in multi-modal fusion and multi-scale integration tasks. Coordinate attention [39] combines channel information with spatial positional encoding, enabling fine-grained modeling of spatially structured targets such as elongated objects and water bodies. Inspired by these approaches, this work implements specialized attention modules at every encoder level to enhance multi-scale feature extraction according to the characteristics and distributions of hierarchical representations. Furthermore, cross-attention and channel attention mechanisms are employed during the feature fusion stage to strengthen the interaction between the two encoder streams, thereby boosting detection accuracy under challenging conditions.

3. Methodology

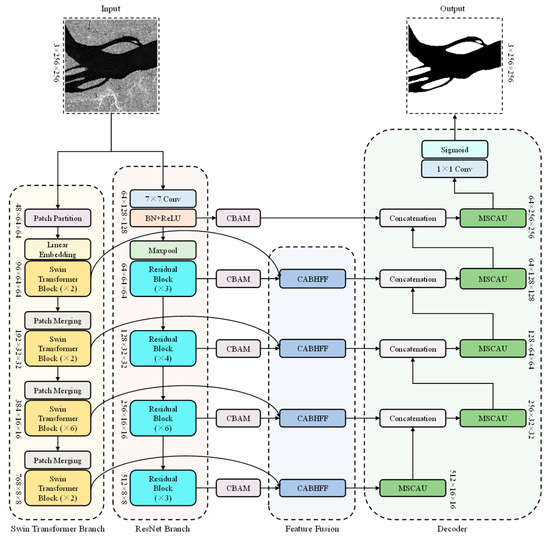

BiEHFFNet is built upon a classic encoder–decoder architecture and consists of five major components: (1) input, (2) bi-encoder, (3) feature fusion module, (4) decoder, and (5) output. The network takes a SAR image of size 256 × 256 pixels as input. The core of the architecture is a bi-encoder design with two backbone branches. The ResNet-34 branch focuses on extracting local textures and edge details through residual learning, with CBAM modules applied at each stage to suppress redundant features. The other branch employs the Swin Transformer, which leverages self-attention mechanisms to capture long-range dependencies and global contextual features. The features obtained from the two branches at corresponding stages are progressively aligned and fused using a hybrid feature fusion module. The fused features are then decoded through a sequence of MSCAU modules. Additionally, skip connections are employed to incorporate features from the shallow layers of the encoders, facilitating the recovery of spatial details. Finally, the network outputs a pixel-wise segmentation map that has the same resolution as the input image. The specific structure of BiEHFFNet is shown in Figure 2.

Figure 2.

Structure of the proposed BiEHFFNet.
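To make the stage-wise alignment of the two branches concrete, the following minimal sketch tracks feature-map shapes for a 256 × 256 input. It uses the stage widths given in Section 3.1 and assumes that both branches start at an overall stride of 4 and halve the resolution at each later stage, as in the standard ResNet-34 and Swin Transformer designs; the helper name `stage_shapes` is hypothetical and the code performs shape bookkeeping only.

```python
# Illustrative shape bookkeeping only; no learned layers are involved.
INPUT_SIZE = 256                       # input height/width stated in the text
RESNET_CHANNELS = [64, 128, 256, 512]  # ResNet-34 stage widths (Section 3.1)
SWIN_CHANNELS = [96, 192, 384, 768]    # original Swin stage widths (Section 3.1)
FIRST_STRIDE = 4                       # assumption: both branches start at stride 4


def stage_shapes(input_size, channels, first_stride=FIRST_STRIDE):
    """Return (channels, height, width) per stage; resolution halves each stage."""
    shapes = []
    stride = first_stride
    for c in channels:
        side = input_size // stride
        shapes.append((c, side, side))
        stride *= 2
    return shapes


resnet = stage_shapes(INPUT_SIZE, RESNET_CHANNELS)
swin = stage_shapes(INPUT_SIZE, SWIN_CHANNELS)

# After channel adjustment, the Swin features take the ResNet widths
# while keeping their own spatial size.
swin_aligned = [(rc, h, w) for (rc, _, _), (_, h, w) in zip(resnet, swin)]

for stage, (r, s, a) in enumerate(zip(resnet, swin, swin_aligned), start=1):
    print(f"stage {stage}: ResNet {r} | Swin {s} -> aligned {a}")
```

Under these assumptions the two branches produce maps of identical spatial size at every stage, so only the channel dimension needs adjusting before fusion.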

3.1. Encoder of BiEHFFNet

- (1) ResNet branch: This branch adopts a lightweight ResNet-34 [40] as the backbone network, leveraging its shallow residual structure to effectively model edge and texture features. Local fine-grained spatial representations are progressively extracted through its multi-level architecture, which consists of an initial convolution layer followed by four residual stages. The initial convolution layer comprises a standard convolution operation and a max pooling layer. The four subsequent stages comprise multiple residual blocks, with output channel dimensions of 64, 128, 256, and 512, respectively. To enhance feature discrimination and reduce computational redundancy in the bi-branch structure, a CBAM is inserted after each residual stage. It sequentially applies channel and spatial attention to emphasize important features and suppress irrelevant background noise. This not only improves the network’s focus on water-relevant regions under complex backgrounds, but also reduces computational cost by refining feature responses before fusion. These features provide detailed support for precise water body boundary localization.

- (2) Swin Transformer branch: This branch uses the Swin Transformer architecture [41] to enhance the global semantic representation of SAR images by capturing long-range dependencies. Its multi-level architecture, which uses shifted window mechanisms, balances computational efficiency and contextual learning effectively by aggregating both local and global information. The structure consists of four stages, each of which is composed of multiple Swin Transformer blocks. To enable efficient feature fusion with the ResNet branch, the output channels of the Swin stages (originally 96, 192, 384, and 768) are adjusted to match the corresponding dimensions of the ResNet stage outputs. This bi-branch encoder design significantly enhances the model’s ability to identify water bodies amidst complex background interference.
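The sequential channel-then-spatial attention applied after each ResNet stage can be sketched in NumPy as follows. This is a minimal illustration of the general CBAM idea rather than the network's actual implementation: the weights are random instead of learned, and the reduction ratio of 16 and the 7 × 7 spatial kernel are assumed defaults that the text does not specify.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """feat: (C, H, W). A shared two-layer MLP scores avg- and max-pooled descriptors."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)   # linear -> ReLU -> linear

    avg = feat.mean(axis=(1, 2))              # (C,) global average pooling
    mx = feat.max(axis=(1, 2))                # (C,) global max pooling
    weights = sigmoid(mlp(avg) + mlp(mx))     # (C,), each weight in (0, 1)
    return feat * weights[:, None, None]


def spatial_attention(feat, kernel):
    """kernel: (2, k, k), convolved over the stacked channel-wise avg and max maps."""
    stacked = np.stack([feat.mean(axis=0), feat.max(axis=0)])   # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(stacked, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = feat.shape[1:]
    logits = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return feat * sigmoid(logits)[None, :, :]


def cbam(feat, w1, w2, kernel):
    """Channel attention first, then spatial attention."""
    return spatial_attention(channel_attention(feat, w1, w2), kernel)


rng = np.random.default_rng(0)
C, H, W, reduction = 64, 8, 8, 16             # reduction ratio is an assumption
feat = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // reduction, C)) * 0.1
w2 = rng.standard_normal((C, C // reduction)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1  # 7 x 7 kernel is an assumption
out = cbam(feat, w1, w2, kernel)
print(out.shape)
```

Because both attention maps lie in (0, 1), the module can only attenuate responses, which matches its described role of suppressing irrelevant background features.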

3.2. Cross-Attention-Based Hybrid Feature Fusion Module

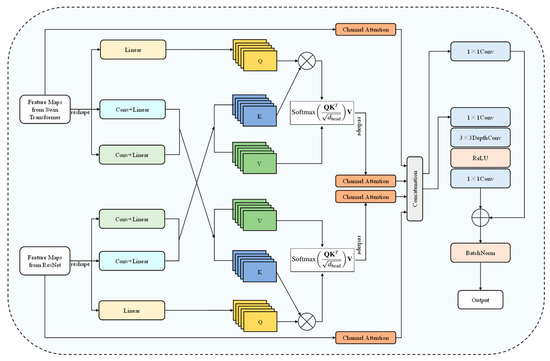

In complex background scenarios, the representational gap between local texture details and global semantic information often makes it difficult for traditional fusion methods to effectively coordinate multi-source features [42], leading to poor boundary localization and suboptimal detection accuracy. To address this issue, we propose a Cross-Attention-Based Hybrid Feature Fusion (CABHFF) module. Specifically, this module first introduces a bidirectional cross-attention mechanism to establish interactive correlations between features extracted by the ResNet and Swin Transformer branches, enabling comprehensive integration of local and global representations. Then, efficient channel attention [43] is employed to learn feature weights, which are used to perform weighted fusion of multi-source features. This enables the model to highlight important cues and reduce background interference. By progressively fusing the heterogeneous features from both encoders, CABHFF achieves complementary enhancement of spatial details and semantic context, ultimately boosting detection performance for water boundaries amid complex noise. The specific structure of CABHFF is shown in Figure 3.

Figure 3.

Structure of the proposed CABHFF.

- (1) Cross-attention-based local–global feature interaction: First, the input features from the ResNet and Swin Transformer encoders are individually processed through channel compression and linear projection to standardize their feature dimensions, making them suitable for the subsequent cross-attention computation. A bidirectional cross-attention pathway is then constructed. In one direction, the ResNet branch serves as the query, while the Swin branch provides the key and value, enabling global semantic features from Swin to guide and enhance the local representations of ResNet. Conversely, in the other direction, the Swin branch is used as the query and the ResNet branch as the key and value, allowing local features from ResNet to refine the global semantics of Swin. For each attention direction, the attention scores are calculated by Softmax and used to weight the value features via matrix multiplication to obtain the final enhanced outputs.

- (2) Channel-attention-based hybrid feature fusion: After the bidirectional cross-attention enhancement stage is complete, a residual connection is introduced to retain the original input features and improve robustness and feature variability. In the final fusion stage, four sets of features, namely the original features from both encoder branches and the two cross-attention-enhanced features, are used together to achieve comprehensive information integration. A channel attention module is applied to each feature to compute importance weights and adaptively balance their contributions. The four reweighted feature maps are then concatenated along the channel dimension, resulting in a weighted concatenation-based fusion that effectively integrates shallow spatial details and deep semantic information. The fused feature is further refined through a dual-branch residual structure to improve semantic coherence. One branch applies a standard convolution operation, while the other branch passes the features through a sequence of convolution, depthwise convolution, and ReLU activation, followed by another convolution to reduce the channel dimension. The outputs of the two branches are aggregated and standardized to produce the final fused representation. By integrating residual preservation, channel-attention-based weighting, and structured fusion refinement, the CABHFF module strengthens the interaction between the two encoder branches (ResNet and Swin) and supports complementary fusion of heterogeneous features. Consequently, it substantially improves the representation of water bodies in complex backgrounds.
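The two fusion steps above can be illustrated with a small single-head NumPy sketch. It is a simplified reading of the description, not the module itself: the projection weights are random, the scores are scaled by the square root of the feature dimension (a common convention that the text does not state), and `channel_gate` is a hypothetical stand-in for the learned channel attention.

```python
import numpy as np


def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)   # subtract max for stability
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_src, kv_src, wq, wk, wv):
    """Single-head cross-attention on (N, D) token matrices."""
    q = query_src @ wq
    k = kv_src @ wk
    v = kv_src @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (N, N), rows sum to 1
    return attn @ v, attn


def channel_gate(tokens):
    """Hypothetical stand-in for learned channel attention: sigmoid of the per-channel mean."""
    return 1.0 / (1.0 + np.exp(-tokens.mean(axis=0, keepdims=True)))


rng = np.random.default_rng(0)
n_tokens, dim = 64, 32                               # e.g. an 8 x 8 map flattened to 64 tokens
local_feat = rng.standard_normal((n_tokens, dim))    # stands in for ResNet features
global_feat = rng.standard_normal((n_tokens, dim))   # stands in for Swin features
weights = [rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(6)]

# Direction 1: ResNet tokens query Swin keys/values.
local_enh, attn_lg = cross_attention(local_feat, global_feat, *weights[:3])
# Direction 2: Swin tokens query ResNet keys/values.
global_enh, attn_gl = cross_attention(global_feat, local_feat, *weights[3:])

# Residual connections retain the original inputs.
local_enh = local_enh + local_feat
global_enh = global_enh + global_feat

# Reweight the four feature sets per channel, then concatenate along channels.
candidates = [local_feat, global_feat, local_enh, global_enh]
fused = np.concatenate([f * channel_gate(f) for f in candidates], axis=-1)
print(fused.shape)  # (64, 128)
```

The concatenated tensor has four times the channel width of a single branch, which is why the description follows it with convolutions that reduce the channel dimension again.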

3.3. Decoder of BiEHFFNet

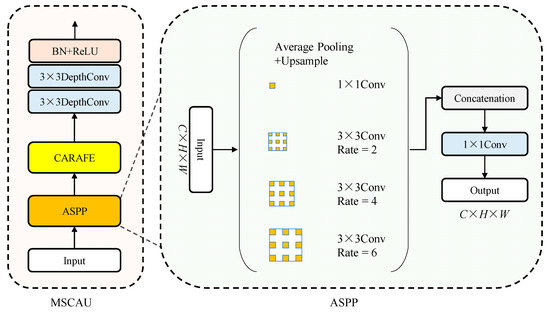

In SAR images with complex backgrounds, water body boundaries are often indistinct and easily obscured by surrounding noise, resulting in discontinuous edge structures in detection outcomes. To address this issue, an MSCAU module is designed, which integrates ASPP and the CARAFE [44] block. This module adopts a fusion-prioritized multi-scale restoration scheme to enhance the structural restoration of water bodies, following a bottom-up, layer-wise reconstruction approach. BiEHFFNet differs from conventional “upsample-then-fuse” strategies by performing feature fusion before upsampling at each decoding stage, except at the lowest level. At each stage, the current encoder output is concatenated with the previous MSCAU output along the channel dimension. The combined features are then upsampled. This design enables the progressive integration of semantic information and spatial details, thereby improving structural coherence in the reconstruction. The ASPP module employs parallel dilated convolutions with dilation rates of 1, 2, 4, and 6 to extract context across multiple scales. Smaller dilation rates are more effective at extracting fine-grained water body structures and boundary details, resulting in more accurate edge delineation. The fused features are aligned via convolution and then passed to the CARAFE upsampling module. CARAFE predicts adaptive kernels from local content and uses them to reassemble features, significantly improving the restoration of fine boundary details and alleviating the distortion commonly introduced by standard upsampling methods. The upsampled features are further processed by two convolution layers with ReLU activation before being sent to the subsequent decoder level. This fusion-first strategy improves the model’s ability to perceive water bodies and achieves better performance in complex backgrounds. The specific structure of MSCAU is shown in Figure 4.

Figure 4.

Structure of the proposed MSCAU module.
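The content-aware reassembly step of CARAFE can be sketched as below. This is a minimal NumPy illustration under stated assumptions: the per-location kernel logits are passed in directly, whereas in CARAFE they are predicted from the feature content by a small convolutional sub-network, and the function name `carafe_reassemble` is hypothetical.

```python
import numpy as np


def carafe_reassemble(feat, kernel_logits, scale=2):
    """feat: (C, H, W); kernel_logits: (scale*H, scale*W, k, k).

    Each output pixel is a softmax-weighted sum over the k x k neighbourhood
    of its source pixel. Returns an array of shape (C, scale*H, scale*W).
    """
    c, h, w = feat.shape
    k = kernel_logits.shape[-1]
    pad = k // 2

    # Softmax over each k x k kernel so its weights sum to one.
    flat = kernel_logits.reshape(scale * h, scale * w, k * k)
    flat = np.exp(flat - flat.max(axis=-1, keepdims=True))
    kernels = (flat / flat.sum(axis=-1, keepdims=True)).reshape(scale * h, scale * w, k, k)

    padded = np.pad(feat, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    out = np.zeros((c, scale * h, scale * w))
    for i in range(scale * h):
        for j in range(scale * w):
            si, sj = i // scale, j // scale                  # source location
            patch = padded[:, si:si + k, sj:sj + k]          # neighbourhood centred on (si, sj)
            out[:, i, j] = (patch * kernels[i, j]).sum(axis=(1, 2))
    return out


# Sanity check: kernels that put all weight on the centre tap reduce
# the operator to nearest-neighbour upsampling.
feat = np.arange(2 * 3 * 3, dtype=float).reshape(2, 3, 3)
logits = np.full((6, 6, 3, 3), -1e9)
logits[:, :, 1, 1] = 0.0
out = carafe_reassemble(feat, logits)
expected = feat.repeat(2, axis=1).repeat(2, axis=2)
print(np.allclose(out, expected))
```

The centre-tap case shows that fixed interpolation is a special case of the operator; content-dependent kernels let the weights vary across locations, which is the property the text credits for better boundary restoration.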

3.4. Loss Function

In water body detection tasks, small-scale water bodies are often challenging to handle. These targets are typically characterized by limited size, unclear edges, and low contrast with the background. A conventional single loss function often struggles to ensure both accurate region detection and complete boundary delineation. To address this issue, we design a composite loss function that integrates Dice loss [45] and Active Contour (AC) loss [46].

- (1) Dice loss: This loss aims to enhance foreground recognition capability and overall detection accuracy. Dice loss is well suited for scenarios where the foreground occupies a small portion of the image. It boosts recall by maximizing the overlap between the predicted result and the ground truth mask, reducing the risk of missing small water bodies. The expression of the loss function is as follows:

$$L_{Dice} = 1 - \frac{2 \sum_{i} P_i G_i}{\sum_{i} P_i + \sum_{i} G_i}$$

where $P_i$ and $G_i$ denote the predicted probability and the ground truth label of pixel $i$, respectively.

- (2) Active Contour loss: To enhance the model’s ability to capture water boundary structures, an AC loss is introduced, which incorporates region-based energy and boundary smoothness constraints. This loss function integrates the principles of region energy and boundary regularization from classical Active Contour models within the training objective, serving as a structure-aware form of supervision. It guides the network to accurately delineate object regions and locate precise boundary positions, thereby improving the structural accuracy of water body detection. The AC loss consists of a region energy term and a boundary energy term, and is formally defined as follows:

$$L_{AC} = \lambda_1 \sum_{i} P_i (G_i - c_1)^2 + \lambda_2 \sum_{i} (1 - P_i)(G_i - c_2)^2 + \mu \sum_{i} \left| \nabla P_i \right|$$

In the above formula, $P$ denotes the predicted probability map from the model, and $G$ represents the ground truth labels. $c_1$ and $c_2$ correspond to the average intensities of the foreground and background regions, respectively. $\nabla P$ denotes the gradient of the predicted probability map. The parameters $\lambda_1$, $\lambda_2$, and $\mu$ are the weighting coefficients for the respective terms, which are set to 0.8, 0.4, and 0.01 in this study.

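- (3) Overall loss function: The final loss function used during training is defined as a weighted sum of the two sub-losses:

$$L = \alpha L_{Dice} + \beta L_{AC}$$

where $\alpha$ and $\beta$ are the weights of the Dice loss and the AC loss, respectively.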

This composite loss function enables the model to jointly optimize for accurate segmentation of the overall water regions while also refining boundary details. As a result, it markedly enhances detection outcomes under complex background conditions.
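A minimal NumPy sketch of such a composite loss is given below. It assumes one common formulation: a Dice term with a small smoothing constant, and an AC term with two region energies plus a finite-difference length term. The foreground and background constants are fixed to 1 and 0 as for binary masks, and all function and parameter names are hypothetical. The coefficients 0.8, 0.4, and 0.01 are the values reported above, and the equal weights of 0.5 follow the experimental settings.

```python
import numpy as np


def dice_loss(pred, target, eps=1e-6):
    """1 - Dice overlap; eps is an assumed smoothing constant."""
    inter = np.sum(pred * target)
    return 1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(target) + eps)


def active_contour_loss(pred, target, lam1=0.8, lam2=0.4, mu=0.01,
                        c1=1.0, c2=0.0, eps=1e-8):
    """Region energies inside/outside the contour plus a contour-length term."""
    region_in = np.sum(pred * (target - c1) ** 2)
    region_out = np.sum((1.0 - pred) * (target - c2) ** 2)
    # Forward differences approximate the gradient of the probability map.
    dy = pred[1:, :-1] - pred[:-1, :-1]
    dx = pred[:-1, 1:] - pred[:-1, :-1]
    length = np.sum(np.sqrt(dx ** 2 + dy ** 2 + eps))
    return lam1 * region_in + lam2 * region_out + mu * length


def composite_loss(pred, target, alpha=0.5, beta=0.5):
    """Weighted sum of the Dice and AC terms."""
    return alpha * dice_loss(pred, target) + beta * active_contour_loss(pred, target)


# Toy example: a square "water body" in a 16 x 16 mask.
mask = np.zeros((16, 16))
mask[4:12, 4:12] = 1.0
perfect = composite_loss(mask, mask)
inverted = composite_loss(1.0 - mask, mask)
print(perfect < inverted)
```

For a perfect prediction both region energies vanish and only the small length penalty remains, so the loss is dominated by boundary smoothness once the regions are correct.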

4. Experiments

4.1. Experiment Data

The ALOS PALSAR dataset employed in this paper is derived from the Phased Array type L-band Synthetic Aperture Radar (PALSAR) onboard the ALOS satellite, which was launched by the Japan Aerospace Exploration Agency (JAXA) on 24 January 2006. Each SAR image has a size of 256 × 256 pixels, and the dataset contains 1000 samples in total. To ensure fair performance evaluation and enhance the model’s generalization ability, the dataset is partitioned into training, validation, and testing subsets following a ratio of 6:2:2.

The Sen1-SAR dataset used in this study is constructed from SAR imagery provided by the Sentinel-1 satellite, sourced from the publicly available Sen1Floods11 dataset. From this resource, 1000 SAR image samples of size 512 × 512 are selected, with corresponding water/non-water labels generated using the Otsu thresholding method. All images are preprocessed and uniformly resized to 256 × 256 before being used for training and evaluation, following the same 6:2:2 split as the ALOS PALSAR dataset.
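For readers unfamiliar with Otsu thresholding, the following NumPy sketch shows how a water/non-water threshold can be chosen by maximizing the between-class variance of the intensity histogram. The synthetic bimodal backscatter values and the convention that water lies below the threshold are assumptions made for illustration; they are not taken from the dataset.

```python
import numpy as np


def otsu_threshold(image, bins=256):
    """Return the bin centre that maximizes the between-class variance."""
    hist, edges = np.histogram(image.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    prob = hist / hist.sum()
    w0 = np.cumsum(prob)                   # weight of the lower class per split
    w1 = 1.0 - w0                          # weight of the upper class
    cum_mean = np.cumsum(prob * centers)   # cumulative first moment
    total_mean = cum_mean[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (total_mean * w0 - cum_mean) ** 2 / (w0 * w1)
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    return centers[np.argmax(between)]


# Synthetic example: dark "water" pixels and brighter "land" pixels.
rng = np.random.default_rng(0)
water = rng.normal(-15.0, 1.0, 5000)       # assumed low-backscatter mode
land = rng.normal(-5.0, 1.0, 5000)         # assumed high-backscatter mode
image = np.concatenate([water, land]).reshape(100, 100)

threshold = otsu_threshold(image)
water_mask = image < threshold             # low backscatter is labelled as water
print(round(float(water_mask.mean()), 2))
```

Because the method only separates two intensity modes, labels produced this way inherit the ambiguities described in the Introduction, such as shadows that are as dark as water.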

The above partition ratio is in line with the approach taken in multiple studies on water body detection. Specifically, the training set is used to optimize model parameters; the validation set guides hyperparameter tuning; and the testing set is withheld for independent evaluation after training, in order to assess the model’s detection performance on unseen data. For medium-sized datasets like ours, the 6:2:2 split strikes a balance by providing a sufficient number of training samples while keeping the validation and testing sets equal in size. This facilitates more stable hyperparameter tuning and a more reliable evaluation of the model’s generalization performance. Furthermore, it mitigates the risk of overfitting during tuning due to an undersized validation set.
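As a small illustration, a 6:2:2 partition of 1000 samples can be produced as follows. The fixed random seed and the helper name `split_indices` are assumptions added here so that the split is reproducible; the text does not describe how the samples were assigned.

```python
import random


def split_indices(n_samples, ratios=(6, 2, 2), seed=0):
    """Shuffle sample indices and cut them into train/val/test by the given ratios."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)   # seeded shuffle for reproducibility
    total = sum(ratios)
    n_train = n_samples * ratios[0] // total
    n_val = n_samples * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]       # remainder goes to the test set
    return train, val, test


train, val, test = split_indices(1000)
print(len(train), len(val), len(test))  # 600 200 200
```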

4.2. Evaluation Metrics

To comprehensively evaluate the performance of the proposed model in water body detection tasks, five commonly used evaluation metrics are adopted, namely Intersection over Union (IoU), mean Intersection over Union (mIoU), Overall Accuracy (OA), Recall (Re), and F1 Score. These metrics assess the model’s prediction quality and robustness from multiple perspectives. IoU, defined as the ratio of intersection over union between prediction and ground truth, reflects their spatial agreement. mIoU is the average of IoUs across all classes and is used in this task to evaluate prediction performance on both water and non-water categories simultaneously. OA is the proportion of correctly classified pixels to the total number of pixels, providing a basic measure of detection correctness. Re measures the proportion of correctly identified water pixels among all actual water pixels, reflecting the model’s detection capability. The F1 Score is the harmonic mean of precision and recall and provides a balanced evaluation of the model’s precision and completeness in detecting water bodies. The calculation formulas for these metrics are defined as follows:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

$$\mathrm{mIoU} = \frac{1}{2}\left(\frac{TP}{TP + FP + FN} + \frac{TN}{TN + FN + FP}\right)$$

$$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Re} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Pr} \cdot \mathrm{Re}}{\mathrm{Pr} + \mathrm{Re}}, \quad \mathrm{Pr} = \frac{TP}{TP + FP}$$

where $TP$, $TN$, $FP$, and $FN$ refer to the counts of true-positive, true-negative, false-positive, and false-negative pixels, respectively, and $\mathrm{Pr}$ denotes precision. In water body detection tasks, especially in the presence of small and fragmented water regions, significant class imbalance is a common challenge. The IoU metric focuses on the accuracy of water class predictions and does not evaluate the non-water class. As a result, it might not adequately capture the model’s performance under complex background conditions. To address this limitation, the mIoU is introduced. By averaging the IoU across all classes, mIoU measures both foreground and background segmentation quality over the complete label space. This is particularly important for evaluating generalization ability under diverse and complex scenes. In summary, the combination of the above metrics enables a more thorough and objective assessment of the model’s performance, covering both global accuracy and fine-grained detail quality.
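The five metrics can be computed directly from the four confusion counts, as in the short sketch below. The function name `water_metrics` and the example counts are illustrative only, and the code assumes that every denominator is non-zero.

```python
def water_metrics(tp, tn, fp, fn):
    """Compute IoU, mIoU, OA, Re, and F1 from pixel-level confusion counts."""
    iou_water = tp / (tp + fp + fn)
    iou_background = tn / (tn + fp + fn)   # IoU of the non-water class
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "IoU": iou_water,
        "mIoU": (iou_water + iou_background) / 2.0,
        "OA": (tp + tn) / (tp + tn + fp + fn),
        "Re": recall,
        "F1": 2.0 * precision * recall / (precision + recall),
    }


# Hypothetical counts for a 1000-pixel image with 90 true water pixels.
metrics = water_metrics(tp=80, tn=900, fp=10, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With these counts OA reaches 0.98 while the water IoU is only 0.80, which illustrates why overall accuracy alone can be misleading when water occupies a small share of the image.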

4.3. Experiment Setting

All experiments are conducted on a mobile workstation equipped with an NVIDIA RTX 4070 GPU (8 GB VRAM) and CUDA 12.1. The model is implemented in Python 3.9.1 with PyTorch 2.5.1+cu121, and development and training are performed in PyCharm 2024.1.4 under Windows 11. During training, the Adam [47] optimizer is employed with an initial learning rate of 0.001. A ReduceLROnPlateau scheduling strategy dynamically adjusts the learning rate according to the validation loss. To accelerate computation and reduce memory consumption, mixed-precision training is used. Training is scheduled for 100 epochs, and an early stopping mechanism is enabled to mitigate the risk of overfitting. Owing to GPU memory limits, the batch size is constrained to 2 even with mixed-precision training, balancing computational resources and training stability. In the overall loss function of the proposed model, the weights of the Dice loss and the AC loss, $\alpha$ and $\beta$, are both set to 0.5. The key hyperparameters, including the learning rate, batch size, and loss weighting factors, are determined through a combination of prior research references, empirical tuning, and automated optimization. First, baseline values are set by consulting the hyperparameter settings commonly used in recent studies on water body detection. Empirical adjustments are then made via preliminary experiments, iteratively fine-tuning the parameters based on validation metrics to achieve stable convergence and improved accuracy. Finally, automated optimization strategies are applied to further refine the selection of hyperparameters.
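The interaction between plateau-based learning rate reduction and early stopping can be illustrated with a dependency-free sketch. It only mimics the scheduling logic and is not a training loop: the reduction factor, the two patience values, and the simulated validation losses are assumptions, since the text does not report them. Only the initial learning rate of 0.001 and the 100-epoch budget are taken from the settings above.

```python
class PlateauScheduler:
    """Reduce the learning rate when the validation loss stops improving."""

    def __init__(self, lr=1e-3, factor=0.5, patience=2, min_lr=1e-6):
        self.lr = lr
        self.factor = factor          # assumed reduction factor
        self.patience = patience      # assumed number of tolerated bad epochs
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0
        return self.lr


def run_schedule(val_losses, max_epochs=100, stop_patience=5):
    """Return (epoch, val_loss, lr) tuples until early stopping triggers."""
    scheduler = PlateauScheduler()
    best, stale, history = float("inf"), 0, []
    for epoch, val_loss in enumerate(val_losses[:max_epochs], start=1):
        lr = scheduler.step(val_loss)
        history.append((epoch, val_loss, lr))
        if val_loss < best:
            best, stale = val_loss, 0
        else:
            stale += 1
            if stale >= stop_patience:   # early stopping
                break
    return history


# Simulated validation losses: four improving epochs, then a plateau.
simulated = [0.9, 0.7, 0.6, 0.55] + [0.56] * 20
history = run_schedule(simulated)
print(len(history), history[-1][2])
```

In this simulation the learning rate is halved once during the plateau and training stops after five epochs without improvement, well before the 100-epoch budget is exhausted.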

4.4. Comparison Experiments Against Other Models

The presentation of the experimental results is divided into two parts. First, we apply the proposed model to the SAR images of Figure 1 and compare its results with those of other related models to demonstrate its effectiveness in handling the disturbing factors in SAR images. The water body detection performance of the proposed BiEHFFNet is reported in Table 1 and compared against six mainstream models in order to validate its detection accuracy under complex and challenging background conditions. The comparative models include classical convolutional neural network architectures (UNet and ResUNet [48]), enhanced models with multi-scale feature extraction capabilities (UNet++ and DeepLabV3+), and two representative attention-based methods developed in recent years (SwinUNet [49] and TransUNet [50]). To ensure a fair and rigorous comparative evaluation, all baseline models are re-implemented based on their official or publicly available repositories, with initial hyperparameters set according to the recommendations in their original papers. On this basis, we conduct consistent empirical adjustments to improve their performance on our dataset. Specifically, the learning rate is first set according to the suggested value in the original paper, and then gradually adjusted using linear or exponential strategies. The final learning rate is selected by observing the trend of the training loss curve and choosing the value that leads to faster and more stable convergence. We also experiment with different optimizers such as Adam and SGD (Stochastic Gradient Descent), as well as learning rate schedulers including step decay and cosine annealing, and select the configuration that yields better performance on the validation set. In addition, regularization methods such as weight decay and dropout are applied to all models to mitigate overfitting. In summary, each model undergoes multiple rounds of experimentation, with hyperparameters carefully tuned in each round, while being trained and validated using the same data splits, so that performance differences can be attributed to model architectures rather than to inconsistencies in training conditions. Finally, the best-performing results are selected as the comparative experimental references. The corresponding water body detection results are illustrated in Figure 5 and Figure 6.

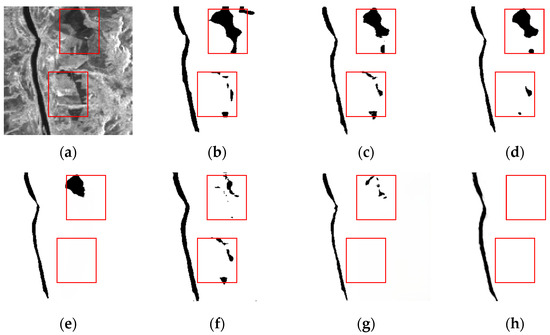

Figure 5.

Water body detection on Figure 1a by different methods, in which the disturbing areas highlighted with a red frame are likely to be misclassified as a water body. (a) Original SAR image, (b) UNet, (c) ResUNet, (d) UNet++, (e) DeepLabV3+, (f) SwinUNet, (g) TransUNet, (h) BiEHFFNet.

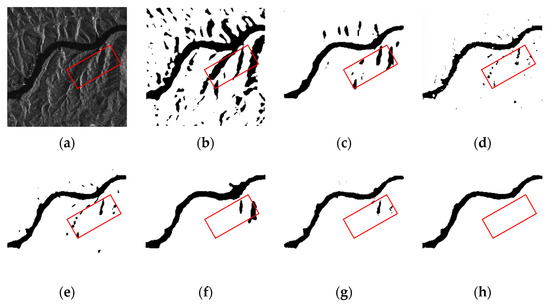

Figure 6.

Water body detection on Figure 1b by different methods, in which the disturbing areas highlighted with a red frame are likely to be misclassified as a water body. (a) Original SAR image, (b) UNet, (c) ResUNet, (d) UNet++, (e) DeepLabV3+, (f) SwinUNet, (g) TransUNet, (h) BiEHFFNet.

As shown in Figure 5 and Figure 6, BiEHFFNet effectively suppresses interference from background factors. It successfully avoids falsely detecting shadowed regions and ambiguous regions as water bodies.

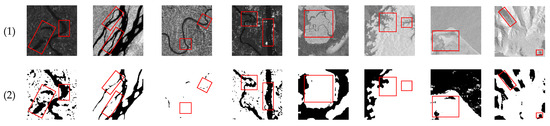

To further demonstrate the effectiveness of the proposed model, we compare its water body detection results with those of the above six deep-learning-based models on the ALOS PALSAR dataset and the Sen1-SAR dataset. The results are illustrated in Figure 7.

Figure 7.

Water body detection results on SAR images, including the original label and the outputs from seven different models. (a) UNet, (b) ResUNet, (c) UNet++, (d) DeepLabV3+, (e) SwinUNet, (f) TransUNet, (g) BiEHFFNet, (h) Ground truth.

In Figure 7, (1) represents the original SAR image; (2)–(8) show the detection results of UNet, ResUNet, UNet++, DeepLabV3+, SwinUNet, TransUNet, and BiEHFFNet, respectively; and (9) denotes the ground truth mask. Subfigures (a–d) show results from the ALOS PALSAR dataset, while subfigures (e–h) present the results from the Sen1-SAR dataset. The visualizations encompass a variety of challenging scenarios frequently encountered in water body segmentation tasks. These include complex backgrounds that may lead to false positives, slender and fragmented stream-shaped water bodies susceptible to disconnection or omission, mixed-scale scenes containing both large water regions and narrow rivers, and water bodies with multiple fine-grained branches that pose challenges to structural continuity. Additionally, the presence of terrain shadows, such as those cast by mountainous regions, can visually resemble water and further mislead segmentation models. The red-boxed regions highlight typical failure cases across these diverse and complex conditions.

In complex scenes (a), CNN-based models like UNet and ResUNet often confuse background textures with water surfaces, resulting in false positives and over-segmentation. Although multi-scale models like UNet++ and DeepLabV3+ mitigate this issue by incorporating contextual information, they still struggle to suppress small-scale noise in low-contrast regions. Transformer-based approaches, including SwinUNet and TransUNet, offer improved global modeling and handle large-scale structures better, but still exhibit inaccuracies along water boundaries, especially at transitions with complex backgrounds. In contrast, BiEHFFNet effectively minimizes distraction from background textures through cross-attention fusion and hierarchical reconstruction, yielding more accurate and consistent segmentation.

In scenes dominated by fine and narrow water bodies (b, c), segmentation becomes more challenging due to limited spatial extent and severe class imbalance. CNN-based models often produce fragmented or disconnected results, missing thin or curved streams. Multi-scale methods improve overall structure detection but still fail in intricate regions with highly irregular shapes. Transformer-based models capture global context better, yet often show incomplete edge predictions in extremely narrow areas. BiEHFFNet, by contrast, demonstrates superior capability in preserving fine-grained structural details, maintaining continuity even in highly curved or fragmented streams.

In mixed-scale scenes (d), where wide water bodies and narrow streams coexist, models must balance large-scale integrity with fine-scale continuity. CNN-based models tend to favor large, high-contrast areas, overlooking minor branches. While multi-scale and Transformer-based methods enhance feature representation across varying spatial extents, they still struggle with transitions between scales, often leading to local discontinuities. BiEHFFNet effectively integrates global and local features through its fusion-first reconstruction strategy, maintaining both large-scale integrity and fine-scale connectivity. As highlighted in the red-boxed regions, it consistently delivers more complete and coherent segmentation under complex, mixed-scale conditions.

In scenarios featuring water bodies with multiple fine-grained branches, the segmentation task becomes even more difficult due to increased structural complexity and high sensitivity to spatial discontinuity. CNN-based models often fail to capture these subtle branches, resulting in incomplete or overly smoothed predictions. Multi-scale and Transformer-based approaches partially alleviate this issue by enhancing spatial resolution and global context awareness but still suffer from broken connections and loss of detail in highly branched regions. BiEHFFNet effectively addresses this challenge through its detail-aware reconstruction modules, enabling the model to capture intricate water structures and preserve branch continuity with high precision.

Similarly, in scenes containing mountainous terrain or background shadows, misclassification becomes a serious issue. Conventional CNNs are prone to confusing shadowed or textured terrain with water surfaces, particularly in low-intensity regions. While Transformer-based models benefit from global reasoning, they may still be misled by the visual similarity between shadows and actual water bodies. BiEHFFNet, leveraging both hierarchical semantic guidance and attention-based suppression of irrelevant background cues, demonstrates superior robustness in distinguishing water from shadow artifacts, thereby reducing false positives and enhancing segmentation reliability in these visually ambiguous conditions.

Quantitative evaluation results on the two datasets are summarized in the following table.

Overall, the proposed BiEHFFNet demonstrates superior performance across multiple aspects. First, it adopts a bi-encoder structure that organically combines the local feature extraction capability of ResNet with the global semantic understanding of Swin Transformer, effectively balancing contour refinement and semantic consistency. Second, the cross-attention mechanism introduced in the feature fusion stage computes channel-wise attention weights to enable adaptive coordination between heterogeneous sources. In the MSCAU, the incorporation of ASPP and the CARAFE upsampling unit enables progressive reconstruction, significantly enhancing edge restoration and yielding smoother boundaries closely aligned with the ground truth. Furthermore, to improve adaptability under complex background interference, a composite loss function integrating Dice loss and Active Contour loss is introduced, effectively optimizing both boundary adherence and regional coherence. Compared to the six benchmark models, BiEHFFNet achieves the best results across all five evaluation metrics, namely, IoU, mIoU, OA, Re, and F1 Score, as detailed in Table 1.

Table 1.

Performance comparison of different methods on ALOS PALSAR and Sen1-SAR by five evaluation metrics.

On the ALOS PALSAR dataset, traditional CNN-based models such as UNet and ResUNet achieved relatively lower performance, with IoUs of 89.81% and 91.37%, respectively. The nested architecture in UNet++ raised the IoU to 92.69%, while DeepLabV3+ benefited from atrous spatial pyramid pooling, achieving an IoU of 93.12% and an mIoU of 81.74%. Transformer-based models such as SwinUNet and TransUNet further enhanced global context modeling, with SwinUNet achieving an mIoU of 82.38% and an F1 Score of 93.32%. Among all models, BiEHFFNet achieved the best performance, with an IoU of 96.36%, mIoU of 84.37%, OA of 96.30%, Re of 97.38%, and an F1 Score of 97.30%. These results demonstrate that BiEHFFNet can effectively integrate multi-scale contextual information and cross-branch features, providing superior segmentation accuracy. In addition, similar performance advantages are consistently observed on the Sen1-SAR dataset, further demonstrating the robustness and generalization capability of the proposed method.

4.5. Computational Complexity and Model Efficiency Analysis

The proposed architecture integrates a bi-encoder backbone, attention modules, multi-stage feature fusion, and custom upsampling operations, which inevitably increase computational complexity. To evaluate the model’s efficiency and suitability for real-time applications, several commonly used metrics were employed: FLOPs, Parameters, Time Per Epoch (TPE) and FPS (Frames Per Second). FLOPs (Floating Point Operations) measure the total number of floating-point operations required by the model to process one input sample. These reflect the computational workload of the model; lower numbers of FLOPs generally indicate higher efficiency. Parameters represent the total number of learnable weights in the model. A smaller Parameter count usually means less memory consumption and potentially faster inference. Time Per Epoch measures the average time consumed to process one epoch during training, including forward and backward passes. FPS measures the inference speed on specific hardware, indicating real-time processing capability. The expression for FPS is as follows:

$$\mathrm{FPS} = \frac{N}{T}$$

where $N$ denotes the number of input samples processed and $T$ represents the total processing time in seconds. This experiment was conducted on the ALOS PALSAR dataset.
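As a minimal sketch of how such an FPS figure might be measured, the snippet below divides the number of processed samples by the elapsed wall-clock time. The function names and the `dummy_infer` stand-in for a model's forward pass are hypothetical; the paper does not describe its timing procedure, so the warm-up step is an assumption reflecting common practice.

```python
import time


def fps(num_samples, total_seconds):
    """Frames per second: samples processed divided by elapsed seconds."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return num_samples / total_seconds


def measure_fps(infer, samples, warmup=2):
    """Time `infer` over `samples` and return the measured FPS."""
    for sample in samples[:warmup]:  # warm-up runs are excluded from timing
        infer(sample)
    start = time.perf_counter()
    for sample in samples:
        infer(sample)
    elapsed = time.perf_counter() - start
    return fps(len(samples), elapsed)


def dummy_infer(sample):
    """Hypothetical stand-in for a model's forward pass."""
    return sum(i * i for i in range(10_000))


print(f"{measure_fps(dummy_infer, list(range(20))):.1f} FPS")
```

When timing a GPU model in PyTorch, the device should be synchronized (for example with `torch.cuda.synchronize()`) before reading the clock, because CUDA kernels are launched asynchronously.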

Table 2 summarizes the FLOPs, Parameters, TPE and FPS of the proposed model in comparison with several representative architectures.

Table 2.

Computational efficiency and model complexity comparison.

As shown in the quantitative comparison, BiEHFFNet exhibits the highest overall complexity among all evaluated models across multiple dimensions. Specifically, it has the largest parameter count and the second-highest FLOPs, exceeding most baseline models. This elevated complexity primarily stems from its bi-encoder backbone and the integration of multiple attention-enhanced fusion modules, which are designed to effectively capture multi-scale contextual information and refine structural details. In terms of FPS, BiEHFFNet ranks the lowest among all compared methods, reflecting the trade-off between accuracy and inference speed due to its heavier architecture. Likewise, its time per epoch is the highest, further underscoring its computational intensity during training. Although BiEHFFNet’s complex structure leads to increased inference time and memory consumption, it is purposefully constructed to enhance detection robustness and boundary sensitivity. To address the resulting efficiency limitations, future optimizations may involve the use of lightweight modules such as depthwise separable convolutions and simplified residual connections. These strategies could substantially reduce FLOPs and parameters, improving FPS and time per epoch without compromising the model’s high-level feature representation capacity.
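The potential saving from depthwise separable convolutions can be illustrated with a simple weight count. The sketch below compares a standard convolution with a depthwise convolution followed by a 1 × 1 pointwise convolution; bias terms are ignored, and the channel sizes are illustrative assumptions rather than values from BiEHFFNet.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k=3):
    """Weights in a k x k depthwise conv plus a 1 x 1 pointwise conv (bias ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixing channels
    return depthwise + pointwise


# Illustrative layer: 256 input and 256 output channels, 3 x 3 kernel.
standard = standard_conv_params(256, 256)
separable = depthwise_separable_params(256, 256)
print(standard, separable, f"ratio = {separable / standard:.3f}")
```

For this illustrative layer the separable variant needs roughly 11.5% of the weights of the standard convolution, in line with the general ratio 1/c_out + 1/k².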

4.6. Ablation Studies

To validate the effectiveness of the key components in the proposed BiEHFFNet for water body detection under complex background conditions, a series of systematic ablation studies were conducted on the ALOS PALSAR dataset. Specifically, the following four modules were evaluated for their individual contributions: bi-encoder structure (ResNet + Swin Transformer), CBAM, CABHFF, and MSCAU. The quantitative results of the ablation experiments are presented in Table 3. For comparison, a baseline model was implemented using ResNet as the sole encoder, integrated with skip connections, and employing bilinear interpolation in the decoder for progressive upsampling.

Table 3.

Results of ablation experiments on ALOS PALSAR dataset.

Starting with the baseline ResNet encoder, we introduced a bi-encoder by adding a parallel Swin Transformer branch, termed the two-branch architecture in Table 3. Compared to the baseline model, this modification significantly improved performance, with the IoU increasing by 2.11% and the mIoU by 5.04%. This confirms the effectiveness of the Swin Transformer in capturing long-range dependencies and global semantics for water segmentation. To further enhance feature representation, we evaluated two attention-based strategies on top of the two-branch configuration. Incorporating the CBAM improved performance compared to the two-branch configuration, with mIoU increasing by 2.27% and OA by 0.91%. This highlights the role of CBAM in refining spatial and channel-wise features while suppressing background noise. Separately, adding the CABHFF module brought notable gains over the two-branch configuration, with the F1 Score increasing by 2.31% and Re increasing by 2.60%. This indicates the module’s strength in promoting bidirectional information exchange and enhancing structural coherence across heterogeneous branches. We then applied the MSCAU module to the two-branch model during decoding. This brought further improvements compared to the original two-branch configuration, with the IoU increasing by 0.39% and the mIoU by 3.15%. This demonstrates the module’s effectiveness in recovering fine details and aggregating multi-scale contextual information. Combining CBAM and CABHFF yielded greater improvements than either alone, increasing IoU by 3.03% and mIoU by 6.51% over the plain two-branch setup. These results demonstrate the complementary strengths of localized attention refinement and hybrid feature fusion in enhancing segmentation performance. Finally, BiEHFFNet, which integrates all modules, achieved the best performance, with an IoU of 96.36%, mIoU of 84.37%, Re of 97.38% and F1 Score of 97.30%. This validates the effectiveness and synergy of the proposed modules in accurately segmenting water bodies across complex and diverse remote sensing scenes.

Furthermore, BCE loss is commonly used in conventional segmentation tasks due to its simplicity. However, it often suffers from class imbalance, tending to favor background regions and resulting in poor performance on small or fragmented water bodies. To address this, Dice loss is adopted to directly optimize prediction-ground truth overlap, improving detection of small water structures. Nonetheless, Dice loss alone may not sufficiently enforce boundary smoothness or structural consistency. To complement this, we incorporate the AC loss, which introduces boundary-aware regularization by penalizing irregular or noisy edges. The combined Dice and AC losses form a composite loss function that integrates region-level accuracy with boundary-level refinement, leading to more complete and coherent segmentation. To evaluate its effectiveness, we conduct comparative experiments using BCE, Dice, and Dice + AC loss, respectively, on both the baseline model and BiEHFFNet, as presented in Table 4.
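The different behavior of BCE and Dice loss under class imbalance can be seen in a small numerical sketch. The pure-Python functions below follow the usual definitions of mean binary cross-entropy and soft Dice loss; the smoothing constant and the toy 100-pixel example are assumptions for illustration, and the Active Contour term of the composite loss is deliberately omitted.

```python
import math


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over all pixels."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(probs)


def dice_loss(probs, targets, smooth=1.0):
    """Soft Dice loss: 1 - (2 * overlap + s) / (sum of both masks + s)."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1 - (2 * intersection + smooth) / (denominator + smooth)


# Toy image: 100 pixels, only 5 of which are water.
targets = [1] * 5 + [0] * 95
all_background = [0.01] * 100           # prediction that misses every water pixel
good_prediction = [0.99] * 5 + [0.01] * 95

for name, probs in [("misses water", all_background), ("good", good_prediction)]:
    print(f"{name}: BCE = {bce_loss(probs, targets):.4f}, "
          f"Dice = {dice_loss(probs, targets):.4f}")
```

For the prediction that misses all water pixels, the BCE stays fairly low (about 0.24) because the many correct background pixels dominate the average, whereas the Dice loss is high (about 0.84), which is the imbalance effect described above.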

Table 4.

Comparison of BiEHFFNet with different loss settings on ALOS PALSAR dataset.

It can be observed that using only BCE loss results in a noticeable decline across all evaluation metrics, especially in boundary localization and the detection of small-scale or low-contrast water bodies. When switching to Dice loss, the performance improves significantly, particularly in terms of foreground recall, as Dice better handles class imbalance and emphasizes region overlap. Adding Active Contour (AC) loss to Dice further improves boundary continuity and structural completeness. These results demonstrate that Dice loss enhances the segmentation of sparse and minor water regions, while AC loss imposes geometric constraints that refine boundary smoothness. The combined effect of these components effectively mitigates common challenges in remote sensing water body segmentation, such as foreground sparsity, small target omission, and boundary ambiguity, thus boosting the model’s overall performance under complex background conditions.

To investigate the effect of different dilation rate group designs on boundary detail extraction, we conducted an ablation study comparing several configurations of atrous convolutions within the context enhancement module. Rather than using a single fixed dilation rate, we applied multi-branch parallel dilated convolutions with varying dilation rate sets to simulate multi-scale receptive fields. Specifically, we tested four dilation rate groups with different spacing patterns. Group A adopts a moderate and progressively increasing pattern with dilation rates of 1, 2, 4, and 6, which also serves as the default setting in our model. Group B employs a uniformly spaced design with wider intervals, using dilation rates of 1, 3, 6, and 9. Group C adopts an aggressively dilated configuration with rates of 1, 4, 8, and 12, aiming to capture larger-scale context. Lastly, Group D applies standard convolutions with all dilation rates set to 1, effectively serving as the baseline without any dilation. This experiment was conducted on the ALOS PALSAR dataset.

All model variants shared the same architecture except for the dilation rates used in the context enhancement block. We evaluated the models using F1 Score and OA to quantify segmentation performance. A detailed comparison of these results is provided in Table 5.

Table 5.

Comparison of segmentation performance under different dilation rate groups.

As shown in Table 5, Group A achieves the best performance, with an IoU of 96.36% and an OA of 96.30%. This specific dilation configuration proves especially effective due to its balanced progression of receptive field sizes. The smaller dilation rates, 1 and 2, focus on capturing fine local details essential for precise boundary delineation and subtle texture differences. Meanwhile, the moderately larger dilation rates, 4 and 6, incorporate broader contextual cues, enabling the model to understand spatial relationships beyond immediate neighborhoods and to suppress noise from complex backgrounds. The relatively close spacing between these rates ensures that receptive fields overlap sufficiently to avoid gaps in spatial coverage, thereby maintaining continuity in feature extraction across scales. In contrast, Group B uses wider gaps between scales, which may lead to discontinuities in feature extraction and reduced sensitivity to local variations, resulting in a slight performance drop. Group C, configured with dilation rates of 1, 4, 8, and 12, shows a performance drop compared to the other groups, likely because excessive expansion of the receptive field results in sparse sampling and gridding effects. This impairs the model’s ability to capture fine-grained structures and maintain spatial continuity. Group D, with uniform dilation rates of 1, essentially performs repeated stacking of 3 × 3 convolutions without enlarging the receptive field, leading to redundant feature representations and insufficient semantic diversity. Such redundancy may increase the risk of overfitting and lower the evaluation metrics.
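The receptive field argument above can be made concrete with the standard formula for the spatial extent of a dilated kernel, k + (k − 1)(d − 1). The sketch below applies it to the four dilation rate groups, assuming 3 × 3 kernels in every branch (the kernel size is stated only for Group D in the text); the helper names are illustrative.

```python
def effective_kernel(k, dilation):
    """Spatial extent covered by a k x k kernel with the given dilation rate."""
    return k + (k - 1) * (dilation - 1)


def sampling_gap(dilation):
    """Number of pixels skipped between adjacent kernel taps."""
    return dilation - 1


# Dilation rate groups as described in the ablation study.
GROUPS = {
    "A": (1, 2, 4, 6),
    "B": (1, 3, 6, 9),
    "C": (1, 4, 8, 12),
    "D": (1, 1, 1, 1),
}


def branch_extents(rates, k=3):
    """Effective kernel extent of each parallel branch."""
    return [effective_kernel(k, d) for d in rates]


for name, rates in GROUPS.items():
    extents = branch_extents(rates)
    largest_gap = max(sampling_gap(d) for d in rates)
    print(f"Group {name}: extents = {extents}, largest gap = {largest_gap}")
```

Under this assumption, Group A spans extents of 3 to 13 pixels with at most 5 skipped pixels between taps, whereas Group C reaches 25 pixels but skips up to 11, which is consistent with the sparse sampling and gridding effects discussed above.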

The aforementioned ablation study results demonstrate that each module contributes meaningfully to improving water body detection performance from different aspects. The bi-encoder structure incorporating the Swin encoder, along with the MSCAU, significantly enhances global modeling capacity and detail restoration. Meanwhile, the CABHFF module effectively promotes the fusion of multi-source features, thereby improving detection accuracy in complex backgrounds. Additionally, the ablation study on dilation rate configurations confirms that a balanced and progressively increasing dilation strategy is crucial for capturing multi-scale contextual information and preserving boundary details. Lastly, the composite loss function jointly addresses class imbalance, boundary preservation, and sensitivity to small targets during optimization, leading to superior quantitative performance.

5. Conclusions

This paper proposes a BiEHFFNet to improve the accuracy of water body detection in SAR images against complex backgrounds. The method makes full use of the strengths of CNN and Transformer architectures by constructing a bi-encoder structure. Specifically, the ResNet branch extracts local spatial detail features, and the Swin Transformer branch models long-range contextual dependencies through hierarchical self-attention mechanisms. This achieves an effective integration of detail preservation and global perception capabilities. To fuse heterogeneous feature information effectively, BiEHFFNet uses a hybrid feature fusion module based on cross-attention to enhance the synergistic representation of different source features, thereby significantly improving the model’s sensitivity to boundaries and small targets. During the decoding stage, the model combines ASPP with content-aware upsampling operations to effectively mitigate feature distortion caused by traditional upsampling, thereby enhancing boundary restoration continuity and structural consistency. Furthermore, a composite loss function integrating Dice loss and AC loss is constructed to address common challenges in water body detection, such as ambiguous boundaries and imbalanced target sizes. This further strengthens the model’s ability to delineate water boundaries and detect small targets. Experiments on the ALOS PALSAR and Sen1-SAR datasets demonstrate that, compared with existing mainstream methods, BiEHFFNet achieves more accurate results for water body detection, showing consistent advantages across the IoU, mIoU, OA, Re and F1 Score metrics.

Despite the promising performance of BiEHFFNet, its bi-encoder structure and multiple attention and fusion modules result in high computational complexity, limiting deployment on resource-constrained devices. Future work will investigate a range of model compression and acceleration methods, including model pruning to eliminate superfluous parameters, quantization to lower numerical precision and thereby reduce memory requirements, and knowledge distillation to transfer knowledge from a large model to a more compact one. In addition, substituting standard convolutions with more efficient operations, such as depthwise separable convolutions or group convolutions, can substantially reduce both the number of parameters and the computational cost while maintaining a high level of accuracy. Furthermore, enhancing the model’s capacity to process high-resolution SAR images will require the integration of multi-source data fusion and adaptive noise modeling techniques. These methods are intended to support the effective detection of continuous water bodies and the reduction of false positives, thereby enhancing the model’s robustness across diverse environments.

Author Contributions

Conceptualization, B.H.; methodology, B.H.; software, B.H.; validation, B.H., X.H. and F.X.; formal analysis, X.H.; investigation, F.X.; resources, B.H.; data curation, F.X.; writing—original draft preparation, B.H.; writing—review and editing, X.H. and F.X.; visualization, X.H.; supervision, F.X.; project administration, B.H.; funding acquisition, B.H. and F.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under grant 62201281, the Natural Science Foundation of Jiangsu Province under grant BK20220392, the Natural Science Research Start-up Foundation of Recruiting Talents of Nanjing University of Posts and Telecommunications under grants NY222004 and NY222120, and the State Key Laboratory of Millimeter Waves under grant K202513.

Data Availability Statement

All data included in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Amitrano, D.; Ciervo, F.; Di Martino, G.; Papa, M.N.; Iodice, A.; Koussoube, Y.; Mitidieri, F.; Riccio, D.; Ruello, G. Modeling watershed response in semiarid regions with high-resolution synthetic aperture radars. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2732–2745. [Google Scholar] [CrossRef]

- Pesaresi, M.; Benediktsson, J.A. A new approach for the morphological segmentation of high-resolution satellite imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 309–320. [Google Scholar] [CrossRef]

- Klemenjak, S.; Waske, B.; Valero, S.; Chanussot, J. Automatic detection of rivers in high-resolution SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1364–1372. [Google Scholar] [CrossRef]

- Mondal, M.S.; Sharma, N.; Garg, P.K.; Bohm, B.; Flugel, W.A.; Garg, R.D.; Singh, R.P. Water area extraction using geocoded high resolution imagery of TerraSAR-X radar satellite in cloud prone Brahmaputra River valley. J. Geomat. 2009, 3, 9–12. [Google Scholar]

- Markert, K.N.; Chishtie, F.; Anderson, E.R.; Saah, D.; Griffin, R.E. On the merging of optical and SAR satellite imagery for surface water mapping applications. Results Phys. 2018, 9, 275–277. [Google Scholar] [CrossRef]

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. An algorithm for operational flood mapping from synthetic aperture radar (SAR) data using fuzzy logic. Nat. Hazards Earth Syst. Sci. 2011, 11, 529–540. [Google Scholar] [CrossRef]

- Qin, Y.; Ban, Y.; Li, S. Water body mapping using Sentinel-1 SAR and Landsat data with modified decision tree algorithm. Remote Sens. 2019, 11, 1552. [Google Scholar]

- Gasnier, C.; Baillarin, S.; Fayolle, G. Automatic extraction and monitoring of water bodies from high-resolution satellite SAR images using external data and CRF. In Proceedings of the IGARSS, Valencia, Spain, 22–27 July 2018; pp. 2300–2303. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Zhang, T.; Ji, W.; Li, W.; Qin, C.; Wang, T.; Ren, Y.; Fang, Y.; Han, Z.; Jiao, L. EDWNet: A novel encoder-decoder architecture network for water body extraction from optical images. Remote Sens. 2024, 16, 4275. [Google Scholar] [CrossRef]

- Wang, J.; Jia, D.; Xue, J.; Wu, Z.; Song, W. Automatic water body extraction from SAR images based on MADF-Net. Remote Sens. 2024, 16, 3419. [Google Scholar] [CrossRef]

- Weng, Z.; Li, Q.; Zheng, Z.; Wang, L. SCR-Net: A dual-channel water body extraction model based on multi-spectral remote sensing imagery—A case study of Daihai Lake, China. Sensors 2025, 25, 763. [Google Scholar] [CrossRef] [PubMed]

- Yuan, D.; Wang, C.; Wu, L.; Yang, X.; Guo, Z.; Dang, X.; Zhao, J.; Li, N. Water stream extraction via feature-fused encoder-decoder network based on SAR images. Remote Sens. 2023, 15, 1559. [Google Scholar] [CrossRef]

- Ni, H.; Li, J.; Wang, C.; Zhou, Z.; Wang, X. SDNet: Sandwich decoder network for waterbody segmentation in remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025; in press. [Google Scholar]

- Wang, J.; Wang, S.; Wang, F.; Zhou, Y.; Wang, Z.; Ji, J.; Xiong, Y.; Zhao, Q. FWENet: A deep convolutional neural network for flood water body extraction based on SAR images. Int. J. Digit. Earth 2022, 15, 345–361. [Google Scholar] [CrossRef]

- Saleh, T.; Weng, X.; Holail, S.; Hao, C.; Xia, G.-S. DAM-Net: Flood detection from SAR imagery using differential attention metric-based vision transformers. ISPRS J. Photogramm. Remote Sens. 2024, 212, 440–453. [Google Scholar] [CrossRef]

- Kang, J.; Guan, H.; Ma, L.; Wang, L.; Xu, Z.; Li, J. WaterFormer: A coupled transformer and CNN network for waterbody detection in optical remotely-sensed imagery. ISPRS J. Photogramm. Remote Sens. 2023, 206, 222–241. [Google Scholar] [CrossRef]

- He, Z.-F.; Shi, B.-J.; Zhang, Y.-H.; Li, S.-M. Remote sensing image segmentation of around plateau lakes based on multi-attention fusion. Acta Electron. Sin. 2023, 51, 885–895. [Google Scholar]

- Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078. [Google Scholar]

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. U-Net++: A nested U-Net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.-W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 1055–1059.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. BASNet: Boundary-aware salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 6478–6487.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.

- Roy, A.; Saha, S. SCSE: A spatial-channel squeeze-and-excitation network for image segmentation. IEEE Trans. Image Process. 2020, 29, 3004–3014.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

- Liu, M.; Yin, H. Cross attention network for semantic segmentation. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 2364–2368.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 13713–13722.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Li, J.; Xu, Q.; He, X.; Liu, Z.; Zhang, D.; Wang, R.; Qu, R.; Qiu, G. CFFormer: Cross CNN-transformer channel attention and spatial feature fusion for improved segmentation of low quality medical images. arXiv 2025, arXiv:2501.03629.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE: Content-aware reassembly of features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 3007–3016.

- Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the IEEE International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

- Chen, X.; Williams, B.M.; Vallabhaneni, S.R.; Czanner, G.; Williams, R.; Zheng, Y. Learning Active Contour Models for Medical Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11632–11640.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 205–218.

- Chen, J.; Mei, J.; Li, X.; Lu, Y.; Yu, Q.; Wei, Q.; Luo, X.; Xie, Y.; Adeli, E.; Wang, Y.; et al. TransUNet: Rethinking the U-Net architecture design for medical image segmentation through the lens of transformers. Med. Image Anal. 2024, 91, 103280.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).