Abstract

This study presents a comparative analysis of cognitive control methods for HVAC systems, assessing reinforcement learning (RL) against traditional proportional-integral-derivative (PID) control. Through extensive simulations in various building environments, we show that while the PID controller provides stability under predictable conditions, RL-based control can improve energy efficiency and thermal comfort in dynamic environments by continually adapting to environmental changes. Our framework integrates real-time sensor data with a scalable RL architecture, allowing autonomous optimization without the need for a precise system model. Key findings show that RL largely outperforms PID during disturbances such as occupancy increases and weather fluctuations, and that the Pareto-optimal solution balances energy savings and comfort. The study provides practical insight into the implementation of adaptive HVAC control and outlines the potential of RL to transform building energy management despite its higher computational requirements.

Keywords:

reinforcement learning; proportional-integral-derivative; HVAC system; energy efficiency; thermal comfort; cognitive control

MSC:

93C40; 93E35; 68T05; 65K10

1. Introduction

Heating, ventilation, and air conditioning (HVAC) systems play an important role in maintaining indoor thermal comfort while accounting for a significant portion of global energy consumption, usually 20–40% of total energy consumption in buildings [1]. As sustainability and energy efficiency become increasingly important, optimizing HVAC systems to balance occupant comfort and energy consumption has become a key research challenge [2]. Integrating a cognitive control system maintains occupant comfort without manual intervention and addresses the limitations of traditional manually operated systems. Several methods have been proposed to maximize building energy efficiency and comfort, ranging from model-based approaches such as fuzzy control [3], linear quadratic control [4], proportional-integral-derivative (PID) control [5], and model predictive control (MPC) [6] to data-based strategies. However, model-based methods struggle to capture the complex interactions between HVAC components and dynamic environmental factors. Cognitive control systems, particularly those that use machine learning (ML), provide a transformative alternative by adjusting in real time without relying on rigid mathematical models. In contrast to MPC, which relies on forecasts and incurs high computational costs, reinforcement learning (RL) is a model-free approach that extracts insights directly from operational data during training and incurs lower computational overhead after deployment [7].

This paper presents a systematic comparative analysis of PID-based and RL-based control strategies in HVAC systems, focusing on their effectiveness in energy management and thermal comfort. Our study was designed as a foundational comparison between two fundamentally different control paradigms. On one hand, PID control represents the most widely deployed, rule-based, and low-complexity baseline in HVAC systems. On the other hand, model-free reinforcement learning (Q-learning) offers a cognitive, adaptive, and lightweight learning-based method that does not require a predictive model or extensive data preprocessing.

The study contributes to the growing body of research on cognitive HVAC control by

1. Empirically validating RL’s advantages in energy efficiency and comfort optimization under real-world disturbances.

2. Identifying trade-offs between PID’s reliability and RL’s flexibility, supported by a data-driven framework that eliminates manual intervention.

3. Proposing a scalable RL deployment strategy, where simulation-trained policies are transferred to physical systems for ongoing learning.

The remainder of this paper is organized as follows: Section 2 critically reviews prior research on HVAC control methods, covering traditional PID, model-based approaches (e.g., MPC), and emerging reinforcement learning techniques. Section 3 details the architectural design and operational workflow of the proposed framework, including sensor integration, state variables, and the multi-objective optimization problem. Section 4 formalizes the RL-based control approach, defining the state space, action space, reward function, and Q-learning implementation. Section 5 evaluates the RL agent’s training performance and compares its results against PID control in terms of energy efficiency, comfort adherence, and disturbance rejection. Section 6 (Conclusions) summarizes key findings, discusses practical implications, and outlines future research directions, including hybrid control strategies and real-world deployment.

2. Related Work

The evolution of HVAC control methods has proceeded in stages, from basic rule-based systems to sophisticated data-based approaches, each addressing fundamental limitations in energy efficiency and thermal comfort management. Traditional control strategies, especially PID controllers, have long been the industry standard due to their simple implementation and reliable performance under stable conditions [8,9]. These controllers operate with carefully tuned proportional, integral, and derivative terms, minimizing temperature deviations while maintaining system stability. A typical PID control law is

$$u_{\mathrm{PID}}(t) = K_p\, e(t) + K_d\, \frac{\mathrm{d}e(t)}{\mathrm{d}t} + K_i \int_0^t e(\tau)\,\mathrm{d}\tau$$

where $e(t)$ is the error between the setpoint and the measured value, $K_p$ is the proportional gain, $K_i$ is the integral gain, and $K_d$ is the derivative gain. By carefully choosing these gains, a system can be built with short rise time, little overshoot, and little steady-state error.
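As a rough illustration of the control law above, the following sketch discretizes the three terms with a fixed sampling interval. The class name `DiscretePID`, the gain values, and the step size `dt` are illustrative assumptions and are not taken from this study.

```python
from dataclasses import dataclass


@dataclass
class DiscretePID:
    """Textbook discrete-time PID; gains and dt are illustrative assumptions."""
    kp: float
    ki: float
    kd: float
    dt: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        # Rectangular approximation of the integral term.
        self.integral += error * self.dt
        # Backward-difference approximation of the derivative term.
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


pid = DiscretePID(kp=2.0, ki=0.5, kd=0.1, dt=1.0)
u = pid.step(setpoint=22.0, measurement=20.0)  # control signal for a 2 °C error
```

Because the gains stay fixed after tuning, the same error always produces the same response regardless of occupancy or weather, which is the limitation discussed next.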

However, because their parameters are fixed, PID controllers are insufficient for dynamic environments where occupancy patterns and weather conditions change unpredictably. This limitation is particularly evident in comparative studies, in which PID systems are often more energy-intensive during transitional periods [10] and consume between 20% and 30% more energy than advanced controllers under similar conditions. Advanced model-based strategies have emerged to address these shortcomings, with model predictive control being the most advanced approach. MPC systems use building thermal models to optimize HVAC operation over a prediction horizon and theoretically achieve superior performance through anticipatory control actions [11,12]. In practice, however, these methods face substantial challenges related to computational complexity and model accuracy requirements. The need for accurate weather forecasts and detailed building thermal characteristics often makes MPC too expensive for widespread deployment, especially in retrofit scenarios where building metadata may be incomplete or unreliable. In addition, the computational burden of solving optimization problems in real time limits scalability to large or complex building portfolios.

The advent of machine learning and reinforcement learning has opened the way to transformative HVAC control, particularly through model-free methods that learn directly from operational data [13,14]. Reinforcement learning systems, such as the Q-learning implementation detailed in this work, overcome many constraints of traditional and model-based methods by continuously adapting control policies through interaction with the environment. These systems handle nonlinear building dynamics and multivariable optimization challenges that confound conventional controllers. Recent progress in replay buffers and experience sampling has further strengthened the practicality of RL and enabled stable learning without excessive data requirements. Field implementations have demonstrated the superior adaptability of RL, and documented examples show energy savings of 40–55% compared to PID controllers while improving comfort measurements [15,16]. The fundamental advantage is RL’s ability to develop context-sensitive policies that consider not only current temperature conditions but also predictive factors such as occupancy trends and thermal inertia [17,18]. Hybrid control architectures are emerging as a promising middle ground by combining the reliability of traditional methods with the adaptability of learning-based methods [19,20]. These systems usually use PID or MPC as the base controller and use machine learning to dynamically adjust parameters or override decisions when necessary. These configurations provide a practical means for incremental modernization of existing building management systems, although they add complexity in integration and tuning. The most successful implementations show how hierarchical control structures can exploit the strengths of each approach while reducing its shortcomings.

Among Deep Reinforcement Learning (DRL) algorithms, Deep Deterministic Policy Gradient (DDPG) and Proximal Policy Optimization (PPO) have shown promise in HVAC control. DDPG, suited for continuous action spaces, allows precise management of variables like airflow and temperature but is sensitive to hyperparameters and exploration strategies [21]. PPO, known for its stability and efficiency, excels in optimizing long-term energy and comfort goals, even in complex settings [22]. Despite their strengths, challenges like model complexity and data demands persist. This study instead adopts a lightweight, interpretable Q-learning approach to explore adaptive HVAC control.

Ref. [23] provides a comprehensive overview of how reinforcement learning (RL) is applied to optimize HVAC systems in smart buildings, highlighting key algorithms, implementation challenges, and future research directions for energy-efficient and adaptive climate control. Ref. [24] explores how deep RL techniques can enhance energy efficiency by intelligently managing indoor temperature and reducing heating energy use in building environments. Ref. [25] systematically reviews AI methods for optimizing energy management in HVAC systems, focusing on their effectiveness, applications, and future potential.

This landscape of HVAC control innovation reveals persistent challenges in generalization, computational efficiency, and real-world deployment [26]. Many advanced controllers perform well in controlled simulation environments, but they face noise and unpredictability in actual buildings. While prior work has shown that RL outperforms traditional HVAC controllers in static simulations, our study extends this line of inquiry by examining RL’s robustness under real-world disturbances and detailing a deployable architecture. By incorporating environmental events such as occupancy spikes and window openings, and by visualizing policy behavior, we demonstrate RL’s superior adaptability and operational feasibility in dynamic environments, an area underexplored in earlier research.

Despite promising advances in ML and RL for HVAC optimization, key gaps remain. Many approaches rely on accurate models, large datasets, or high computational power, limiting real-time applicability. Others neglect performance under real-world disturbances or lack focus on interpretability and deployment feasibility. This study addresses these issues by (1) evaluating model-free Q-learning in dynamic HVAC settings; (2) comparing it with PID control under disturbances; and (3) proposing a scalable, low-overhead architecture for real-time use. The goal is to bridge the gap between theoretical RL progress and practical building energy management.

3. System Architecture and Problem Formulation

The effectiveness of cognitive HVAC control hinges on two pillars: (1) a robust sensor infrastructure capable of capturing real-time environmental dynamics, and (2) a formal optimization framework that balances energy efficiency with occupant comfort. This section delineates the architecture of our proposed system and mathematically formulates the control problem.

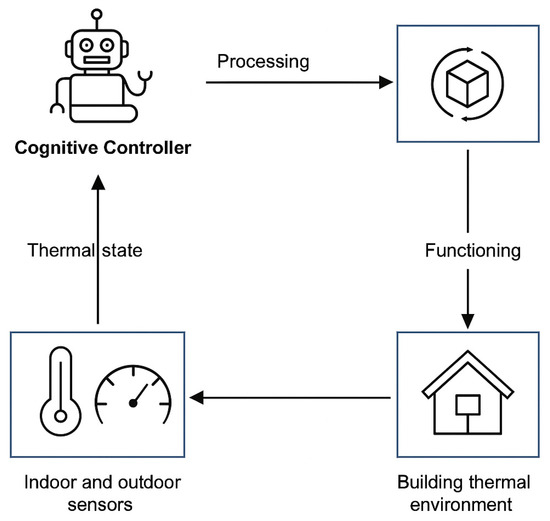

Figure 1 shows the reference architecture of the building’s thermal comfort regulation system. The system uses a distributed sensor network to continuously monitor internal and external environmental parameters, including, but not limited to, temperature gradients and relative humidity levels. In response to sensor measurements, a cognitive controller adjusts the HVAC system configuration to maintain the building temperature. The cognitive controller is essential to the system: it not only collects data from the HVAC system but also uses the sensor network to determine the building’s thermal conditions. It then uses these real-time data and adaptive algorithms to adjust the HVAC system’s settings in accordance with the thermal comfort control policy. The HVAC system operates dynamically in response to the cognitive controller’s updated setpoints, according to the following thermal control principle: when the indoor temperature $T_{in,t}$ falls below a pre-defined setpoint $T_{set}$, the system starts heating until the temperature balance is reached ($T_{in,t} = T_{set}$). Conversely, if $T_{in,t}$ exceeds $T_{set}$, the system activates the cooling mechanism to restore the normal comfort parameters.

Figure 1.

Data collection and adjustment process of HVAC system by the cognitive controller.

The energy consumption profile of the HVAC system is significantly dependent on the indoor and outdoor environment. Given the double impact of these parameters on system power requirements and thermal comfort of occupants, they are included in our control framework. At each discrete time interval t, we define the following state variables:

- $T_{in,t}$: Indoor air temperature

- $H_{in,t}$: Indoor relative humidity

- $T_{out,t}$: Outdoor air temperature

- $H_{out,t}$: Outdoor relative humidity

These environmental variables are continuously monitored by a distributed sensor network integrated into the smart building infrastructure. The control system adjusts the HVAC operating parameters dynamically by modulating the temperature and humidity setpoints, denoted $T_{set}$ and $H_{set}$. The setpoint optimization occurs at the beginning of time interval $t$, and the objective function, minimized over the control action vector $A_t$, is a weighted combination of two factors: the energy consumption $P_t$ and the comfort deviation $C_t$. The comfort deviation is defined as the absolute deviation of the indoor temperature $T_{in,t}$ from the target comfort temperature $T_{set}$, i.e.,

$$C_t = \lvert T_{in,t} - T_{set} \rvert$$

A weight parameter sets the relative importance of the two terms. This multi-objective optimization framework aims to achieve Pareto-optimal solutions between energy efficiency and thermal comfort.
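To make the trade-off concrete, the sketch below evaluates one possible weighted objective for two candidate actions. The convex-combination form `weight * P_t + (1 - weight) * C_t`, the function names, and the candidate predictions are assumptions chosen for illustration; the study's exact objective may differ.

```python
def comfort_deviation(t_in: float, t_set: float) -> float:
    """C_t = |T_in,t - T_set|, as defined in the text."""
    return abs(t_in - t_set)


def weighted_cost(energy: float, t_in: float, t_set: float, weight: float) -> float:
    """Assumed convex combination: weight * P_t + (1 - weight) * C_t."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * energy + (1.0 - weight) * comfort_deviation(t_in, t_set)


def best_action(candidates: dict, t_set: float, weight: float) -> str:
    """Pick the candidate with the lowest cost; values are (energy, predicted T_in)."""
    return min(candidates, key=lambda name: weighted_cost(*candidates[name], t_set, weight))


# Hypothetical one-step predictions: (energy in kWh, resulting indoor temperature in °C).
candidates = {"heat_on": (1.2, 21.5), "heat_off": (0.0, 19.0)}
comfort_first = best_action(candidates, t_set=22.0, weight=0.3)  # low energy weight
energy_first = best_action(candidates, t_set=22.0, weight=0.9)   # high energy weight
```

With a low energy weight the sketch prefers heating, and with a high energy weight it prefers leaving the heater off, which mirrors the Pareto trade-off described above. In practice the two terms would need normalization because they carry different units.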

The operation energy expenditure of HVAC systems includes three main thermodynamic processes: heating, heat extraction (cooling), and moisture removal (dehumidification). The integrated intelligent measurement infrastructure provides a total energy consumption measurement in standardized units at each discrete time interval t.

Let $P_t$ denote the total HVAC energy consumption during time slot $t$, where

$$P_t = \int_{\text{slot } t} p(\tau)\,\mathrm{d}\tau$$

with $p(\tau)$ representing the instantaneous power draw at time $\tau$. The smart metering system records $P_t$ at each interval boundary through discrete sampling.

For modeling purposes, we consider composite energy consumption rather than decomposing it into constituent thermal processes. This aggregated approach provides sufficient granularity for system-level energy optimization while maintaining computational tractability.
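The aggregation of sampled power into a per-slot energy figure can be sketched as follows. The trapezoidal rule, the function name `slot_energy_kwh`, and the sample values are assumptions for illustration; an actual smart meter may report accumulated energy directly.

```python
def slot_energy_kwh(power_kw: list, sample_seconds: float) -> float:
    """Approximate energy over one slot from evenly spaced power samples.

    Uses the trapezoidal rule; power is in kW and the result is in kWh.
    """
    if len(power_kw) < 2:
        raise ValueError("need at least two samples to integrate")
    hours = sample_seconds / 3600.0
    total = 0.0
    for left, right in zip(power_kw, power_kw[1:]):
        total += 0.5 * (left + right) * hours
    return total


# Four hypothetical samples taken 5 min apart span one 15 min slot.
energy = slot_energy_kwh([2.0, 2.0, 4.0, 4.0], sample_seconds=300.0)
```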

4. Reinforcement Learning

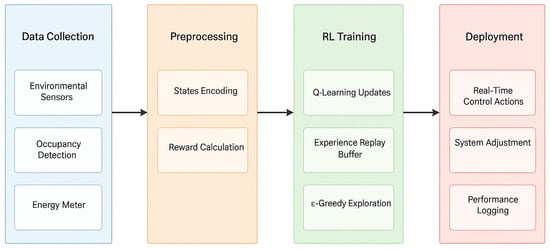

The enhanced methodology for HVAC control integrates a reinforcement learning (RL) feedback loop across four main operational stages: Data Collection, Preprocessing, RL Training, and Deployment. The process begins with the Data Collection stage, where environmental sensors measure indoor and outdoor temperature and humidity (Tin, Hin, Tout, Hout), while additional modules perform occupancy detection and energy usage tracking via power meters (P). These raw observations are passed to the Preprocessing module, where they are converted into structured state representations and encoded actions. Specifically, states are represented as a vector of environmental conditions, while actions reflect control signals such as toggling heating or cooling modes. The reward signal is then computed using a weighted formulation that penalizes energy consumption and comfort deviation.

Following preprocessing, the data enters the RL Training stage. Here, the agent undergoes iterative learning using a Q-learning update rule, where experiences are stored in a replay buffer to promote stable learning. Exploration is guided by an ε-greedy strategy that balances exploitation of known policies with exploration of new actions. The training block also features a feedback loop: the current policy is evaluated during deployment, and performance metrics are logged and fed back to improve the learning cycle. The Deployment stage includes real-time execution of control actions, dynamic adjustment of HVAC setpoints (Tset and Hset), and continuous logging of performance.

The feedback loop plays a critical role in ensuring that real-world performance influences future policy updates. Performance metrics are used to assess effectiveness, and state–reward data gathered during execution is recycled into the training phase, enhancing model robustness. This closed-loop architecture emphasizes adaptive control, allowing the RL system to continuously improve by learning from its operational outcomes in real environments.

In our model, time is discretized into slots t = 0, 1, 2, …, each lasting between a few minutes and an hour. In time slot $t$, the control agent observes the state $S_t$, performs an action $A_t$, and receives the reward $R_t$. Each agent can approach its zone in its own way by considering its observations and rewards. To address this problem, we must establish a set of states S, actions A, and rewards R, representing the perceived environment of the agent and possible activities within it.

4.1. State

Each time slot starts with an observation of the current indoor and outdoor conditions. These conditions have an important impact on the energy consumption of the HVAC system and on the comfort of occupants. The state $S_t$ is represented as

$$S_t = \left(T_{in,t},\, H_{in,t},\, T_{out,t},\, H_{out,t}\right)$$

where

- $T_{in,t}$: room temperature, measured by the temperature sensor in degrees Celsius (°C);

- $T_{out,t}$: outdoor temperature, measured by the meteorological station in degrees Celsius (°C);

- $H_{in,t}$: humidity level measured by the humidity sensor inside the room. This value affects the comfort and efficiency of the HVAC system because humidity affects the perceived temperature;

- $H_{out,t}$: humidity level outside the building. This external factor can affect how much the HVAC system needs to work to maintain the desired levels of humidity and temperature.

4.2. Action

Control measures to regulate the HVAC system include changing the air temperature and humidity thresholds. Control actions directly affect the indoor temperature, occupants’ comfort, and HVAC system energy consumption. Let $A_t$ denote the action taken in time slot $t$.

The controller agent chooses the control action by mapping the current state $S_t$ to a suitable action $A_t$.

The action space is formally defined as the set of all admissible control actions available to the HVAC system, comprising four distinct operational modes:

- Heat_on: activates the heating subsystem, initiating thermal energy transfer to the interior space;

- Heat_off: turns off heating;

- Cool_on: turns on cooling;

- Cool_off: turns off cooling.
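The four operational modes listed above could be encoded as a small enumeration. The integer codes, the helper `apply_action`, and the rule that switching one subsystem on switches the other off are assumptions made for this sketch; the text does not state how simultaneous heating and cooling is handled.

```python
from enum import IntEnum


class HvacAction(IntEnum):
    """Discrete action space; the integer codes are arbitrary."""
    HEAT_ON = 0
    HEAT_OFF = 1
    COOL_ON = 2
    COOL_OFF = 3


def apply_action(heating: bool, cooling: bool, action: HvacAction) -> tuple:
    """Return the updated (heating, cooling) flags after one action.

    Assumption: turning one subsystem on turns the other off.
    """
    if action is HvacAction.HEAT_ON:
        return True, False
    if action is HvacAction.COOL_ON:
        return False, True
    if action is HvacAction.HEAT_OFF:
        return False, cooling
    return heating, False  # COOL_OFF


flags = apply_action(heating=False, cooling=True, action=HvacAction.HEAT_ON)
```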

4.3. Reward

If the thermal comfort rating $M_t$ is lower than $D_1$, the occupants will feel too cold. Once it surpasses $D_2$, the occupants will feel too hot. The two thresholds $D_1$ and $D_2$ therefore bound the comfort zone $[D_1, D_2]$.

We take the weighted sum of the two components, the energy consumption and the comfort penalty, to obtain the reward for time slot $t$.

- Penalty thresholds D1 and D2: These were selected based on typical acceptable temperature deviation ranges in HVAC standards (e.g., ASHRAE guidelines), where deviations below D1 are considered negligible, deviations between D1 and D2 are mildly uncomfortable, and deviations beyond D2 indicate significant discomfort.

- Comfort metric Mt: We have defined Mt as a bounded comfort index derived from the absolute deviation between indoor temperature and setpoint, normalized to allow consistent scaling across different environments.

In this context, the energy weight scales the HVAC energy usage term, and $R_t$ denotes the reward for time slot $t$. The relative importance of the occupants’ thermal comfort and the energy consumption is set by this weight.

The energy consumption penalty function incorporates a linear weighting factor that uniformly scales the cost associated with each unit of energy expenditure. This formulation establishes a direct proportional relationship between energy consumption and deviations from the prescribed thermal comfort bounds, as evidenced by the governing equation.

For scenarios prioritizing occupant comfort over energy conservation, the energy weight should be assigned a smaller value. This adjustment effectively increases the relative weight of comfort violations in the optimization objective, thereby biasing the system toward maintaining stricter thermal conditions. Conversely, energy efficiency can be prioritized by tuning the energy weight to a larger value.

It is also possible to build a reward function that evaluates the trade-off between the occupants’ thermal comfort and the HVAC system’s energy consumption using two separate weights, one for thermal comfort and one for energy cost.

The relative relevance of thermal comfort and energy cost can then be represented by adjusting the values of the comfort weight and the energy-cost weight, respectively. As a result, there is more flexibility to accommodate different preferences and priorities in various building settings.
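A two-weight reward of the kind described above might look like the following sketch. The linear penalty outside the comfort zone, the function names, and all numeric values are assumptions for illustration; only the thresholds D1 and D2, the comfort metric Mt, and the idea of weighting energy against comfort come from the text.

```python
def comfort_penalty(m_t: float, d1: float, d2: float) -> float:
    """Zero inside the comfort zone [d1, d2], growing linearly outside it (assumed shape)."""
    if d1 > d2:
        raise ValueError("d1 must not exceed d2")
    if m_t < d1:
        return d1 - m_t  # too cold
    if m_t > d2:
        return m_t - d2  # too hot
    return 0.0


def reward(energy: float, m_t: float, d1: float, d2: float,
           w_energy: float, w_comfort: float) -> float:
    """Negative weighted sum of energy use and comfort penalty."""
    return -(w_energy * energy + w_comfort * comfort_penalty(m_t, d1, d2))


# Hypothetical values: 1 kWh used, comfort zone between 20 and 24.
r_inside = reward(1.0, 21.0, 20.0, 24.0, w_energy=0.5, w_comfort=1.0)
r_too_hot = reward(1.0, 26.0, 20.0, 24.0, w_energy=0.5, w_comfort=1.0)
```

Raising `w_comfort` relative to `w_energy` makes excursions outside the zone more costly, which corresponds to the comfort-prioritizing configuration discussed in this section.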

4.4. Q-Learning Method

By modeling the HVAC control problem in this way, we can design solutions that effectively address environmental uncertainty. As in many real-world learning problems, a comprehensive model of the environment, including the reward distribution and transition probabilities, is not available.

Consequently, conventional dynamic programming approaches, including value iteration and policy iteration algorithms, demonstrate limited efficacy in this problem domain due to inherent computational complexities. As an alternative framework, model-free reinforcement learning techniques, particularly Q-learning, offer a viable solution for deriving optimal control policies without requiring explicit system dynamics. The Q-learning algorithm operates by iteratively approximating the action-value function through temporal difference updates:

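$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_t + \gamma \max_{a} Q(S_{t+1}, a) - Q(S_t, A_t) \right]$$

where

- $\alpha$ is the learning rate,

- $\gamma$ is the discount factor,

- $R_t$ is the reward received after taking action $A_t$ in state $S_t$,

- $Q(S_t, A_t)$ is the current estimate of the Q-value.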

The state–action value function $Q(s, a)$ represents the expected discounted return when executing action $a$ in state $s$ and subsequently following the optimal policy.

The estimated action values can be stored in tabular form for each state–action pair $(s, a)$ or represented by a generalized function approximator. The learning agent prioritizes long-term gains over short-term gains, with the goal of maximizing its cumulative return. The agent’s myopia is controlled by the discount factor $\gamma$: future rewards are given more weight when $\gamma$ is close to 1, whereas only immediate rewards matter when $\gamma$ is close to 0. Methods based on reinforcement learning can thus weigh the consequences of many behaviors and select the one with the highest cumulative reward.

Q-learning frequently requires substantial interaction with a specific environment to learn an effective policy. The sizes of the state and action spaces are major determinants of how much experience is needed.
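The temporal difference update for a tabular agent can be sketched in a few lines of NumPy. The function name `q_update`, the table shape, and the sample transition are illustrative assumptions; the default learning rate and discount factor are the values reported in Section 5.

```python
import numpy as np


def q_update(q: np.ndarray, s: int, a: int, r: float, s_next: int,
             alpha: float = 0.1, gamma: float = 0.95) -> float:
    """Apply one tabular Q-learning update in place and return the new Q(s, a)."""
    # Bootstrapped target: immediate reward plus discounted best next-state value.
    td_target = r + gamma * np.max(q[s_next])
    q[s, a] += alpha * (td_target - q[s, a])
    return float(q[s, a])


# Hypothetical table with 5 states and 4 actions.
q = np.zeros((5, 4))
q[1] = [0.0, 2.0, 1.0, 0.0]
new_value = q_update(q, s=0, a=2, r=-1.0, s_next=1)
```

The table grows with the product of the state and action counts, which is why the sizes of both spaces drive the amount of experience required.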

4.5. Method Overview

The overarching goal of this framework is to minimize energy consumption (P) while maintaining occupant comfort (C), using RL to dynamically adjust HVAC systems in response to real-time environmental conditions.

Figure 2 depicts a Reinforcement Learning (RL)-based control system designed to optimize energy usage while maintaining comfortable environmental conditions in a building. The process begins with Data Collection, where environmental sensors measure indoor and outdoor temperature (Tin, Tout) and humidity (Hin, Hout), occupancy detection determines whether the building is occupied, and an energy meter (P) monitors power consumption. These inputs provide real-time data about the building’s state and energy use. Next, the system performs Preprocessing to structure the data for RL. The State Representation (S) is defined as a vector combining sensor readings, such as S = [Tin, Hin, Tout, Hout], and may include occupancy status. The Action Encoding (A) consists of discrete control actions, such as turning the heating or cooling system On/Off.

Figure 2.

Thermal comfort rating to penalize discomfort.

The Reward Calculation (R) balances energy efficiency and occupant comfort. For RL Training, the system employs Q-Learning (Equation (8)) to iteratively update action values based on observed rewards. An Experience Replay Buffer stores past state–action–reward transitions to improve learning stability, while ε-Greedy Exploration ensures a balance between exploring new actions and exploiting learned strategies. Once trained, the Policy Update deploys the RL model to control HVAC systems in real time. The system operates in a Feedback Loop, continuously acquiring new sensor data, processing it into states, and refining the policy through ongoing training.
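The two training components named above, ε-greedy exploration and an experience replay buffer, can be sketched with the standard library alone. The class and function names, the buffer capacity, and the uniform sampling scheme are assumptions for illustration, not a description of the study's implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state) transitions."""

    def __init__(self, capacity: int):
        self._items = deque(maxlen=capacity)  # oldest transitions are dropped first

    def add(self, s, a, r, s_next) -> None:
        self._items.append((s, a, r, s_next))

    def sample(self, batch_size: int, rng: random.Random) -> list:
        """Uniformly sample without replacement, capped at the buffer size."""
        return rng.sample(list(self._items), min(batch_size, len(self._items)))

    def __len__(self) -> int:
        return len(self._items)


def epsilon_greedy(q_row: list, epsilon: float, rng: random.Random) -> int:
    """Explore with probability epsilon, otherwise pick the highest-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=q_row.__getitem__)


rng = random.Random(0)
greedy_action = epsilon_greedy([0.1, 0.7, 0.3, 0.0], epsilon=0.0, rng=rng)
```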

5. Simulation and Results

To empirically validate the performance of RL and PID control in HVAC systems, we conducted extensive simulations under dynamic environmental conditions. The simulation platform was developed using Python 3.10 and structured around a customized environment compatible with the OpenAI Gym interface. The thermal dynamics of the building were modeled using a simplified yet realistic representation that captures the influence of indoor and outdoor temperatures, humidity levels, occupancy fluctuations, and HVAC control actions. To simulate dynamic conditions, scenarios such as window openings, sudden occupancy spikes, and weather transitions were scripted to evaluate control robustness. The simulation operated at a 15 min time resolution, aligning with standard building management system sampling intervals, and each training episode corresponded to a 24 h virtual operational cycle. Energy consumption was computed using an aggregated thermodynamic load model that estimated power draw from heating, cooling, and dehumidification processes.
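For orientation, a heavily simplified environment with a Gym-style `reset`/`step` interface is sketched below. It mirrors the classic four-value Gym step return, but the class `ToyThermalEnv`, the first-order thermal model, the three-valued action, and every coefficient are assumptions; the simulator used in this study additionally models humidity, occupancy, and scripted disturbances.

```python
class ToyThermalEnv:
    """Single-zone, first-order thermal model with a Gym-style reset/step interface."""

    STEPS_PER_EPISODE = 96  # 24 h at a 15 min resolution, as in the text

    def __init__(self, t_out=5.0, t_set=22.0, leak=0.1, hvac_gain=1.5, energy_weight=0.5):
        self.t_out, self.t_set = t_out, t_set
        self.leak, self.hvac_gain = leak, hvac_gain  # assumed coefficients
        self.energy_weight = energy_weight
        self.t_in, self.k = t_set, 0

    def reset(self):
        """Start a new episode at the setpoint and return the first observation."""
        self.t_in, self.k = self.t_set, 0
        return self.t_in

    def step(self, action):
        """action: -1 = cool, 0 = idle, +1 = heat (a simplification of the four modes)."""
        if action not in (-1, 0, 1):
            raise ValueError("action must be -1, 0, or 1")
        # Drift toward the outdoor temperature plus the HVAC contribution.
        self.t_in += self.leak * (self.t_out - self.t_in) + self.hvac_gain * action
        self.k += 1
        energy = abs(action)  # one energy unit per active step
        reward = -(self.energy_weight * energy + abs(self.t_in - self.t_set))
        done = self.k >= self.STEPS_PER_EPISODE
        return self.t_in, reward, done, {}


env = ToyThermalEnv()
obs = env.reset()
obs, reward, done, info = env.step(1)  # heat for one 15 min step
```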

The reinforcement learning (RL) controller was constructed using a tabular Q-learning algorithm, implemented in Python with the help of NumPy and custom-built training routines. The state space was defined as a discretized vector combining indoor and outdoor temperature and humidity values (Tin, Hin, Tout, Hout), with each feature binned into 10 intervals. The action space consisted of four discrete HVAC control decisions: turning heating or cooling on or off. The reward function was formulated as a weighted sum of energy consumption penalties and thermal comfort deviations, encouraging the agent to minimize power usage while maintaining temperature within the predefined comfort band. The agent was trained over 300 episodes, using an ε-greedy exploration strategy with linearly decaying ε (from 1.0 to 0.1). Learning rate and discount factor were set at 0.1 and 0.95, respectively. The training process was fully conducted offline on a standard workstation (Intel Core i7, 16 GB RAM) without the need for GPU acceleration. Once trained, the agent executed control decisions in real-time by querying the learned Q-table, making the deployment phase computationally lightweight and well-suited for edge devices or integration into existing building management systems. The experiments were designed to assess: (i) the RL agent’s learning efficacy and policy adaptability, and (ii) the comparative advantages of RL over PID in energy efficiency and disturbance rejection. Simulations leveraged real-world building data, including occupancy patterns, weather fluctuations, and thermal inertia, to ensure practical relevance. Below, we present the results in two parts: first, the training and policy analysis of the RL-based controller, followed by a head-to-head comparison with PID control.
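The discretization and exploration schedule described above can be sketched as follows. With four features binned into 10 intervals each and four actions, the Q-table holds 10^4 × 4 = 40,000 entries. The sensor ranges in `RANGES`, the equal-width binning, and the function names are assumptions; the bin count, episode count, and ε end points follow the text.

```python
# Assumed sensor ranges for (T_in, H_in, T_out, H_out); not taken from the study.
RANGES = ((10.0, 35.0), (0.0, 100.0), (-10.0, 40.0), (0.0, 100.0))


def bin_index(value: float, low: float, high: float, n_bins: int = 10) -> int:
    """Map a reading to one of n_bins equal-width intervals, clipping out-of-range values."""
    if high <= low:
        raise ValueError("high must exceed low")
    frac = (value - low) / (high - low)
    return min(n_bins - 1, max(0, int(frac * n_bins)))


def state_index(t_in: float, h_in: float, t_out: float, h_out: float,
                n_bins: int = 10) -> int:
    """Flatten the four bin indices into a single Q-table row index."""
    idx = 0
    for value, (low, high) in zip((t_in, h_in, t_out, h_out), RANGES):
        idx = idx * n_bins + bin_index(value, low, high, n_bins)
    return idx


def linear_epsilon(episode: int, n_episodes: int = 300,
                   start: float = 1.0, end: float = 0.1) -> float:
    """Linearly decay epsilon from start to end over the training run."""
    frac = min(1.0, episode / (n_episodes - 1))
    return start + frac * (end - start)


row = state_index(22.5, 45.0, 5.0, 80.0)
eps_mid = linear_epsilon(150)
```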

5.1. Machine Learning-Based Control Performance

This section evaluates the RL agent’s ability to learn optimal HVAC control strategies without prior system modeling. The agent’s performance was tested across diverse scenarios to quantify its adaptability to thermal dynamics and energy-saving potential.

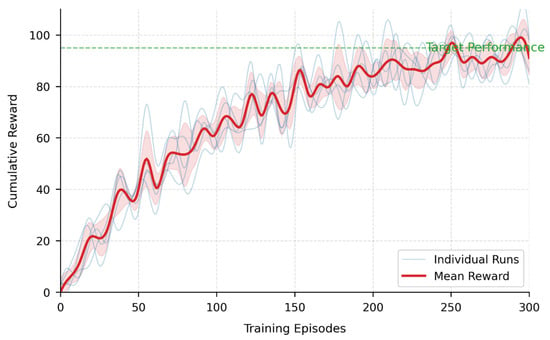

Figure 3 shows the training progress of the RL agent as the evolution of cumulative reward over 300 training episodes. The x-axis represents the episode number, and the y-axis represents the cumulative reward obtained by the agent. The blue lines represent the results of several independent training runs, reflecting variability due to the stochastic environment and initialization conditions. The bold red curve represents the average reward across runs and is accompanied by a red shaded region representing the standard deviation. The horizontal green line marked “Target Performance” sets a baseline for acceptable policy performance, indicating the minimum cumulative reward needed for the agent to be considered successfully trained. As training progresses, the average reward shows a clear upward trend with periodic fluctuations, eventually converging near the target line after about 200 episodes. This trajectory confirms that the RL agent can successfully learn from experience and achieve performance that satisfies the predefined objectives.

Figure 3.

Reinforcement learning agent training progress.
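The mean curve, standard deviation band, and convergence point of a plot like Figure 3 can be derived from per-run reward logs as sketched below. The function names and the tiny reward lists are made up for illustration and are not data from this study.

```python
from statistics import mean, pstdev


def summarize_runs(runs: list) -> tuple:
    """Per-episode mean and population standard deviation across equally long runs."""
    if len({len(run) for run in runs}) != 1:
        raise ValueError("all runs must cover the same number of episodes")
    per_episode = list(zip(*runs))
    return [mean(ep) for ep in per_episode], [pstdev(ep) for ep in per_episode]


def first_episode_reaching(means: list, target: float):
    """Index of the first episode whose mean reward reaches the target, else None."""
    for episode, value in enumerate(means):
        if value >= target:
            return episode
    return None


# Made-up cumulative rewards for two short runs; not data from the study.
runs = [[-10.0, -6.0, -2.0], [-14.0, -8.0, -2.0]]
means, stds = summarize_runs(runs)
hit = first_episode_reaching(means, target=-7.0)
```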

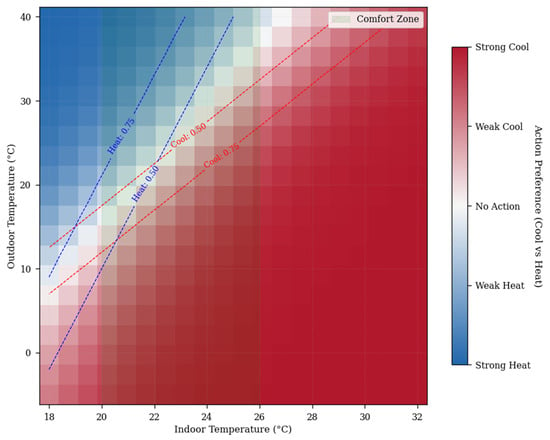

Figure 4 shows the action selection policy of the RL agent as a heat map of decision preferences over indoor and outdoor temperature conditions. The x-axis represents the indoor temperature in degrees Celsius, and the y-axis represents the corresponding outdoor temperature. The color scale encodes the agent’s action preference, from strong heating (dark blue) to strong cooling (dark red); lighter shades show moderate preferences, with white corresponding to neutral or no-action zones. The overlaid contour lines show the action probabilities for heating (blue) and cooling (red), and the thresholds marked 0.50 and 0.75 indicate the agent’s confidence in each direction of action. The shaded “comfort zone” marks the range of temperatures within which indoor conditions are to be maintained, which helps to contextualize the decision boundaries. Figure 4 shows that when indoor temperatures rise, especially when outdoor temperatures are also high, the agent’s preference shifts strongly towards cooling actions. Conversely, under colder indoor and outdoor conditions, the policy increasingly favors heating. This heat map provides a clear and interpretable representation of the control strategy learned by the agent, showing how thermal comfort is dynamically balanced through targeted heating or cooling actions depending on the environmental context.

Figure 4.

RL agent policy: action probability heatmap.

5.2. Comparative Analysis: RL vs. PID Control

We contrast the RL controller’s performance with a conventional PID baseline, focusing on responsiveness, energy efficiency, and comfort maintenance under disturbances.

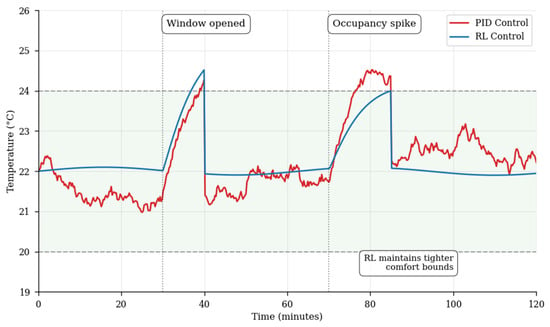

Figure 5 shows the performance of PID control and reinforcement learning control (RL) for maintaining temperature stability in a heating and air conditioning system when it is affected by two different disturbances: the opening of the window and an occupancy spike.

Figure 5.

HVAC system response to disturbances (window opening, occupancy spike).

Two disturbances occur at specific times during the simulated interval:

- Window opening: This event may cause a sudden drop in indoor temperature due to the inflow of cold air, which is likely to challenge the ability of the HVAC system to maintain the setpoint.

- Occupancy spike: The increase in room occupancy generates additional heat, leading to an increase in temperature that the system has to counteract.

Figure 5 shows how each control method reacts to these disruptions:

- The PID controller shows a more oscillatory response, with significant overshoot or undershoot before stabilization. This reflects its fixed-parameter design, which struggles to adapt quickly to sudden changes.

- The RL controller shows a smoother, more adaptive response that tracks disturbances with minimal deviation from the desired temperature. This highlights the ability of RL to learn and adapt to dynamic conditions.

Comparing the two curves, the figure highlights the superior performance of RL in handling realistic HVAC disturbances: it maintains better temperature stability and reduces energy-intensive oscillations. This ties in with the broader findings of the study on the benefits of RL in unpredictable environments.
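The fixed-gain behavior described above can be reproduced qualitatively with a small simulation. The sketch below is a hedged illustration rather than the simulation used in this study: it assumes a single-zone, first-order thermal model, and all parameters (thermal capacity, loss coefficient, gains, and disturbance sizes and timings) are hypothetical values chosen only to make the response visible.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Fixed-gain discrete PID controller (gains are illustrative assumptions)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float) -> float:
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(steps: int = 720, dt: float = 60.0, setpoint: float = 22.0):
    """Simulate one zone for `steps` intervals of `dt` seconds; return temperatures in °C."""
    # Hypothetical lumped parameters, not taken from the study.
    capacity = 2.0e6     # J/K, thermal capacity of the zone
    base_loss = 150.0    # W/K, envelope heat-loss coefficient
    outdoor = 5.0        # °C, constant outdoor temperature
    max_power = 6000.0   # W, actuator limit (heating > 0, cooling < 0)

    pid = PID(kp=800.0, ki=0.5, kd=0.0)
    # Pre-load the integral so the run starts in steady state at the setpoint.
    pid.integral = base_loss * (setpoint - outdoor) / pid.ki

    temp = setpoint
    trace = []
    for k in range(steps):
        # Disturbance 1: an open window raises the heat-loss coefficient.
        loss = base_loss + (100.0 if 100 <= k < 160 else 0.0)
        # Disturbance 2: an occupancy spike adds internal heat gain.
        gain = 1500.0 if 350 <= k < 450 else 0.0

        u = pid.step(setpoint - temp, dt)
        u = max(-max_power, min(max_power, u))  # saturate the actuator output

        # Explicit Euler step of C * dT/dt = u + gain - loss * (T - T_out).
        temp += dt * (u + gain - loss * (temp - outdoor)) / capacity
        trace.append(temp)
    return trace


trace = simulate()
```

With these assumed values the zone temperature dips below the setpoint while the window is open, overshoots after it closes, and rises above the setpoint during the occupancy spike before settling, which is the undershoot and overshoot pattern attributed to fixed gains.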

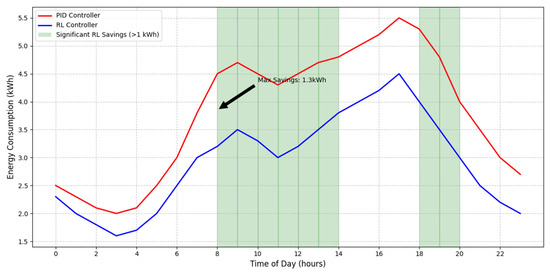

Figure 6 presents a 24 h comparison of energy consumption between PID and RL controllers in HVAC systems. The x-axis tracks time in 2 h increments, while the y-axis measures energy consumption in kWh.

Figure 6.

Comparative energy consumption: PID vs. RL controllers.

There are two distinct trends:

- The PID controller shows a relatively stable but higher daily energy consumption pattern. This consistent consumption indicates limited adaptability to changing conditions, such as occupancy changes and fluctuations in outdoor temperature.

- The RL controller shows a dynamic consumption pattern that remains consistently below that of the PID controller. In particular, the RL controller achieves peak savings of 1.3 kWh in certain periods, demonstrating its ability to optimize energy use in real time by learning from environmental data.

The most significant energy savings occur during high-demand periods (e.g., mid-day or night), when the adaptive RL controller outperforms the rigid PID control. This is consistent with the study’s conclusion that RL controllers perform well under variable conditions and reduce energy waste while maintaining performance. Figure 6 thus illustrates the higher efficiency of RL over conventional PID control, especially in scenarios that require flexibility. The visual gap between the two curves highlights the practical benefits of AI-driven HVAC management for long-term energy savings.
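The quantities discussed for Figure 6 (per-interval savings, the peak saving, and the overall percentage saving) can be computed from two sampled consumption series. The sketch below assumes each series holds the energy used per interval in kWh; the function name and the example numbers are placeholders for illustration and are not values read from Figure 6.

```python
def compare_energy(pid_kwh, rl_kwh):
    """Compare two per-interval energy series (kWh) of equal length."""
    if not pid_kwh or len(pid_kwh) != len(rl_kwh):
        raise ValueError("series must be non-empty and of equal length")

    # Positive values mean the RL controller used less energy in that interval.
    savings = [p - r for p, r in zip(pid_kwh, rl_kwh)]
    peak_index = max(range(len(savings)), key=savings.__getitem__)

    total_pid = sum(pid_kwh)
    total_rl = sum(rl_kwh)
    total_saving = total_pid - total_rl
    return {
        "savings": savings,
        "peak_index": peak_index,
        "peak_saving": savings[peak_index],
        "total_saving": total_saving,
        "percent_saving": 100.0 * total_saving / total_pid,
    }


# Placeholder data for three intervals (hypothetical, not from the study).
result = compare_energy([4.0, 5.0, 6.0], [3.5, 4.0, 5.5])
```

Reporting the peak saving together with the interval in which it occurs makes it easier to relate a figure such as the 1.3 kWh peak to a specific period of the day.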

The reinforcement learning controller demonstrated strong adaptability to dynamic conditions such as occupancy spikes and environmental changes, outperforming PID control in both comfort stability and energy efficiency. This confirms RL’s suitability for real-world HVAC control scenarios where uncertainty and variability are prevalent.

While this study evaluates conventional PID control with fixed parameters, we acknowledge that adaptive PID controllers (those using rule-based threshold adjustments or gain scheduling) can partially address dynamic fluctuations in indoor environments. However, such methods require handcrafted logic and often depend on prior knowledge of system behavior or external conditions (e.g., weather, occupancy). In contrast, the RL controller does not rely on predefined rules; it autonomously learns control policies from environmental interactions and continuously adapts to changes. This model-free adaptability may allow RL to outperform even adaptive PID controllers in complex, time-varying scenarios. As part of future work, we are extending this study to include comparisons with rule-based PID strategies to further quantify the trade-offs. Preliminary tests suggest that while adaptive PID improves responsiveness, RL maintains superior performance in terms of energy savings and comfort stability, especially during unexpected disturbances.
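Gain scheduling, as mentioned above, amounts to a hand-written lookup from an operating condition to a set of PID gains. The following minimal sketch assumes outdoor temperature as the scheduling variable; the breakpoints and gain values are hypothetical and serve only to show where the handcrafted logic and prior knowledge enter.

```python
from bisect import bisect_right

# Hypothetical schedule: outdoor-temperature breakpoints in °C ...
BREAKPOINTS = [0.0, 15.0, 28.0]
# ... and one (kp, ki, kd) tuple per band: below 0, 0 to 15, 15 to 28, 28 and above.
GAINS = [
    (900.0, 0.6, 0.0),
    (700.0, 0.4, 0.0),
    (500.0, 0.3, 0.0),
    (800.0, 0.5, 0.0),
]


def scheduled_gains(outdoor_temp: float):
    """Return the (kp, ki, kd) tuple for the band containing outdoor_temp."""
    # bisect_right counts the breakpoints <= outdoor_temp, which is the band index.
    return GAINS[bisect_right(BREAKPOINTS, outdoor_temp)]
```

Every breakpoint and gain in such a table has to be chosen and tuned by an engineer for the specific building, which is the dependence on prior knowledge that a learned policy avoids.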

5.3. Summative Comparison

The comparative analysis reveals fundamental trade-offs between PID and RL-based HVAC control strategies, as summarized in Table 1. While PID controllers offer simplicity, low computational overhead, and industry familiarity, their fixed-gain architecture limits performance in dynamic environments, resulting in higher energy consumption and wider comfort deviations (±1.2 °C). In contrast, RL controllers demonstrate superior adaptability through model-free optimization, achieving tighter temperature regulation (±0.5 °C) and significant energy savings (up to 55% relative to PID) by learning from environmental interactions. Although RL requires substantial upfront training (~300 episodes) and more complex sensor integration, its ability to generalize across building types and self-optimize over time is a significant advantage for modern energy-efficient buildings. The choice between approaches ultimately depends on operational priorities: PID remains suitable for stable, low-complexity systems, while RL excels in dynamic environments where long-term energy savings and comfort precision are paramount. This comparison underscores RL’s potential to redefine HVAC control paradigms, particularly when paired with emerging digital twin technologies for scalable deployment.
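A saving quoted relative to the PID baseline and an excess quoted relative to RL are different percentages, so the two should not be used interchangeably. The short sketch below converts between them, assuming the 55% figure denotes the RL saving relative to PID, as stated in the conclusions; the function names are illustrative.

```python
def excess_from_saving(saving: float) -> float:
    """Fractional excess of the baseline over the improved case.

    If E_rl = (1 - s) * E_pid, then (E_pid - E_rl) / E_rl = s / (1 - s).
    """
    if not 0.0 <= saving < 1.0:
        raise ValueError("saving must be in [0, 1)")
    return saving / (1.0 - saving)


def saving_from_excess(excess: float) -> float:
    """Inverse conversion: s = x / (1 + x) for an excess x >= 0."""
    if excess < 0.0:
        raise ValueError("excess must be non-negative")
    return excess / (1.0 + excess)


pid_excess = excess_from_saving(0.55)
```

Under this assumption, a 55% saving by RL corresponds to PID consuming roughly 122% more energy than RL, not 55% more.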

Table 1.

Comparative performance of PID vs. RL controllers in HVAC systems.

6. Conclusions

This study presents a comprehensive comparison between RL and PID control methods for HVAC systems, focusing on their ability to optimize energy efficiency while maintaining occupant comfort. Through simulations and empirical validation, we evaluate both approaches in diverse building scenarios, quantifying their performance in terms of energy savings, comfort adherence, and computational overhead. Our findings highlight RL’s superior adaptability in dynamic settings, achieving significant cost reductions (e.g., 55% lower heating costs compared to PID in winter trials), while PID remains a robust choice for stable conditions. In frequently fluctuating environments, RL has clear advantages in optimizing long-term efficiency and comfort, despite its higher initial computational complexity. PID, by contrast, is a practical choice for stable systems that prioritize simplicity and reliability. While our study focuses on simulation-based validation, the deployment of RL in real-world HVAC systems is increasingly feasible due to advances in sensor technology, edge computing, and open building management platforms. Practical deployment strategies include using RL as a supervisory setpoint optimizer, integrating it with existing control frameworks, and ensuring safety via rule-based overrides or constrained policy learning.
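One way to picture the rule-based override mentioned above is a thin safety layer between a supervisory RL policy and the existing controller. The sketch below is a hypothetical illustration, not a deployed design: the function name, comfort bounds, rate limit, and fallback setpoint are all assumed values.

```python
import math


def safe_setpoint(proposed: float, previous: float, *,
                  low: float = 20.0, high: float = 26.0,
                  max_step: float = 0.5, fallback: float = 22.0) -> float:
    """Filter an RL-proposed setpoint (°C) through simple safety rules."""
    # Rule 1: ignore invalid proposals (NaN or infinite) and aim for the fallback.
    target = proposed if math.isfinite(proposed) else fallback

    # Rule 2: limit how far the setpoint may move in one control interval.
    step = max(-max_step, min(max_step, target - previous))

    # Rule 3: keep the result inside the permitted comfort band.
    return max(low, min(high, previous + step))
```

For example, `safe_setpoint(30.0, 22.0)` moves the setpoint by only one rate-limited step toward the proposal, and the inner PID or equipment controller then tracks the filtered value as it would any operator-entered setpoint.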

Looking ahead, research into hybrid approaches that combine the strengths of both methods could provide even stronger solutions. Furthermore, real-world implementation across various building types would help to validate the scalability of RL-based HVAC control. This work contributes to the ongoing development of intelligent and energy-efficient building management systems and supports the transition to more sustainable and adaptive infrastructure.

Author Contributions

Conceptualization, A.G. and M.A.; methodology, Y.E.T. and A.G.; software, M.A.; validation, A.G., M.A. and Y.E.T.; formal analysis, A.G.; resources, Z.K.; writing—original draft preparation, A.G.; writing—review and editing, A.G. and N.A.; visualization, M.A.; supervision, N.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at Northern Border University, Arar, KSA for supporting this research work through the project number “NBU-FPEJ-2025-2441-01”.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mckoy, D.R.; Tesiero, R.C.; Acquaah, Y.T.; Gokaraju, B. Review of HVAC systems history and future applications. Energies 2023, 16, 6109.

- Torriani, G.; Lamberti, G.; Fantozzi, F.; Babich, F. Exploring the impact of perceived control on thermal comfort and indoor air quality perception in schools. J. Build. Eng. 2023, 63, 105419.

- Abuhussain, M.A.; Alotaibi, B.S.; Aliero, M.S.; Asif, M.; Alshenaifi, M.A.; Dodo, Y.A. Adaptive HVAC System Based on Fuzzy Controller Approach. Appl. Sci. 2023, 13, 11354.

- Li, H.; Xu, J.; Zhao, Q.; Wang, S. Economic model predictive control in buildings based on piecewise linear approximation of predicted mean vote index. In IEEE Transactions on Automation Science and Engineering; IEEE: New York, NY, USA, 2023.

- Cao, S.; Zhao, W.; Zhu, A. Research on intervention PID control of VAV terminal based on LabVIEW. Case Stud. Therm. Eng. 2023, 45, 103002.

- Khosravi, M.; Huber, B.; Decoussemaeker, A.; Heer, P.; Smith, R.S. Model Predictive Control in buildings with thermal and visual comfort constraints. Energy Build. 2024, 306, 113831.

- Singh, D.; Arshad, M.; Tyagi, B.; Kalia, G. Predictive Maintenance Strategies for HVAC Systems: Leveraging MPC, Dynamic Energy Performance Analysis, and ML Classification Models. IRE J. 2023, 7, 98–108.

- Jung, W.; Jazizadeh, F. Comparative assessment of HVAC control strategies using personal thermal comfort and sensitivity models. Build. Environ. 2019, 158, 104–119.

- Isa, N.M.; Hariri, A.; Hussein, M. The Comparison of P, PI and PID Strategy Performance as Temperature Controller in Active Iris Damper for Centralized Air Conditioning System. J. Adv. Res. Fluid Mech. Therm. Sci. 2023, 102, 143–154.

- Rojas, J.D.; Arrieta, O.; Vilanova, R. Industrial PID Controller Tuning; Springer International Publishing: Cham, Switzerland, 2021.

- Selamat, H.; Haniff, M.F.; Sharif, Z.M.; Attaran, S.M.; Sakri, F.M.; Razak, M.A.H.B.A. Review on HVAC System Optimization Towards Energy Saving Building Operation. Int. Energy J. 2020, 20, 345–358.

- Kohlhepp, P.; Gröll, L.; Hagenmeyer, V. Characterization of aggregated building heating, ventilation, and air conditioning load as a flexibility service using gray-box modeling. Energy Technol. 2021, 9, 2100251.

- Kou, X.; Du, Y.; Li, F.; Pulgar-Painemal, H.; Zandi, H.; Dong, J.; Olama, M.M. Model-based and data-driven HVAC control strategies for residential demand response. IEEE Open Access J. Power Energy 2021, 8, 186–197.

- Gao, C.; Wang, D. Comparative study of model-based and model-free reinforcement learning control performance in HVAC systems. J. Build. Eng. 2023, 74, 106852.

- Kotevska, O.; Kurte, K.; Munk, J.; Johnston, T.; Mckee, E.; Perumalla, K.; Zandi, H. Rl-Hems: Reinforcement Learning based Home Energy Management System for HVAC Energy Optimization; Oak Ridge National Lab. (ORNL): Oak Ridge, TN, USA, 2020.

- Lu, R.; Li, X.; Chen, R.; Lei, A.; Ma, X. An Alternative Reinforcement Learning (ARL) control strategy for data center air-cooled HVAC systems. Energy 2024, 308, 132977.

- Biswas, D. Reinforcement learning based HVAC optimization in factories. In Proceedings of the Eleventh ACM International Conference on Future Energy Systems, Melbourne, Australia, 22–26 June 2020; pp. 428–433.

- Esrafilian-Najafabadi, M.; Haghighat, F. Occupancy-based HVAC control systems in buildings: A state-of-the-art review. Build. Environ. 2021, 197, 107810.

- Raman, N.S.; Devraj, A.M.; Barooah, P.; Meyn, S.P. Reinforcement learning for control of building HVAC systems. In Proceedings of the 2020 American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020; pp. 2326–2332.

- Green, C.; Garimella, S. Residential microgrid optimization using grey-box and black-box modeling methods. Energy Build. 2021, 235, 110705.

- Li, W.; Wu, H.; Zhao, Y.; Jiang, C.; Zhang, J. Study on indoor temperature optimal control of air-conditioning based on Twin Delayed Deep Deterministic policy gradient algorithm. Energy Build. 2024, 317, 114420.

- Ghane, S.; Jacobs, S.; Elmaz, F.; Huybrechts, T.; Verhaert, I.; Mercelis, S. Federated proximal policy optimization with action masking: Application in collective heating systems. Energy AI 2025, 20, 100506.

- Al Sayed, K.; Boodi, A.; Broujeny, R.S.; Beddiar, K. Reinforcement learning for HVAC control in intelligent buildings: A technical and conceptual review. J. Build. Eng. 2024, 95, 110085.

- Brandi, S.; Piscitelli, M.S.; Martellacci, M.; Capozzoli, A. Deep reinforcement learning to optimise indoor temperature control and heating energy consumption in buildings. Energy Build. 2020, 224, 110225.

- Aghili, S.A.; Haji Mohammad Rezaei, A.; Tafazzoli, M.; Khanzadi, M.; Rahbar, M. Artificial Intelligence Approaches to Energy Management in HVAC Systems: A Systematic Review. Buildings 2025, 15, 1008.

- Ascione, F.; Bianco, N.; Iovane, T.; Mauro, G.M.; Napolitano, D.F.; Ruggiano, A.; Viscido, L. A real industrial building: Modeling, calibration and Pareto optimization of energy retrofit. J. Build. Eng. 2020, 29, 101186.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).