1. Introduction

The journey toward autonomous cleaning began with the shift from manual cleaning to semi-automated machines, which introduced basic mechanical assistance. As operational demands increased, especially in larger or more complex areas, fully autonomous systems emerged. For outdoor applications, early efforts involved retrofitting manual sweepers with drive-by-wire systems, onboard computing, and environmental sensors to enable autonomous navigation. Despite these improvements, many existing systems remain tailored to specific environments and lack the flexibility to operate across mixed-use urban spaces such as pavements, parks, and campuses [1,2].

Outdoor cleaning robots are increasingly deployed in environments with highly variable surface conditions, posing significant challenges to maintaining consistent and safe operation. In these unstructured settings, where surface material, friction, and slope may shift frequently, robots must continuously adapt their behavior to prevent instability or loss of performance [3,4]. Recognizing different surface types, such as wood, concrete, or smooth tile, is not merely helpful but essential to performing cleaning tasks safely and efficiently [3,5]. This need has spurred advancements in terrain-aware navigation, where robots sense and interpret environmental features in real time, transforming raw sensor data into actionable insights [6].

Perception is a cornerstone of autonomous cleaning, especially outdoors where lighting and terrain may shift unpredictably. In recent developments, artificial intelligence and advanced perception techniques have further enhanced the autonomy of cleaning robots. Depth cameras that fuse RGB and spatial data allow robots to identify high-traffic areas, focus their cleaning efforts accordingly, and minimize energy spent in low-activity zones [7,8]. Additionally, several studies have shown that robots can identify floor types and adjust cleaning behavior dynamically using image-based dirt detection and unsupervised learning techniques [9]. While RGB-D and stereo vision systems offer valuable depth and visual information, their dependence on ambient lighting often limits their effectiveness in dim or inconsistent conditions [10,11]. These limitations have motivated the adoption of Light Detection and Ranging (LiDAR), which emits its own near-infrared light in the non-visible spectrum and produces dense 3D point clouds that remain accurate regardless of environmental lighting. For this reason, LiDAR can provide robust, long-range sensing independent of illumination constraints [12].

To enable terrain awareness, modern systems commonly employ photometric or range-based images for surface classification [13]. Depth cameras and LiDAR sensors, in combination with deep learning techniques, have been widely adopted to support real-time recognition of terrain types. Among these, convolutional neural networks, particularly those using ResNet architectures, have demonstrated reliable segmentation performance when processing fused LiDAR and visual data [14,15]. These systems allow robots to recognize unstructured or risky surfaces but typically do not react to these conditions beyond passive identification. In contrast, our work integrates this perception into an adaptive control loop, enabling the robot to respond in real time by slowing down, adjusting brush speed, or modifying cleaning height to match surface requirements [14]. ResNet’s residual connections further help preserve performance in deeper networks, ensuring reliable terrain classification [15].

Slope estimation plays an equally vital role in ensuring safety, particularly on inclined or multilevel terrain. Inertial Measurement Units (IMUs) are frequently used to capture angular data that help estimate slope and adjust motion control strategies [16]. When combined with other sensing modalities such as stereo cameras, ultrasonic sensors, or LiDAR, these systems can distinguish between flat, stepped, and inclined surfaces. This enables the robot to take proactive steps, like reducing speed or rerouting, to maintain stability in complex environments [17,18].

While detecting terrain type and slope is essential, it is not sufficient on its own [19]. The robot must also interpret this data and adapt its behavior accordingly to ensure safe and efficient operation. Fuzzy logic offers an effective framework for these problems, allowing systems to handle uncertainty and imprecision in sensor data through rule-based reasoning [20]. Widely used in robotics, fuzzy control frameworks are often applied for real-time behavioral adjustments in response to environmental conditions [21]. Prior studies have used fuzzy logic primarily to influence navigation direction or turning angle in high-risk scenarios, such as obstacle avoidance [4,22]. However, these applications have not leveraged fuzzy inference to adjust internal cleaning parameters such as brush speed, motor torque, or robot speed. In contrast, our approach extends the role of fuzzy control by integrating terrain and slope perception directly into the cleaning decision-making process, allowing dynamic modulation of cleaning intensity and motion behavior based on surface conditions.

Beyond improving safety, fuzzy control has also been linked to energy-efficient behavior by balancing locomotion stability and power consumption, especially under changing or uncertain terrain conditions [23]. These systems often operate alongside higher-level path planning and obstacle avoidance algorithms, allowing for intelligent, responsive navigation. When inputs such as pavement width and surface condition are considered, the fuzzy inference engine can recommend appropriate speeds and adjust motor responses accordingly [9,19,24]. In cleaning-specific use cases, fuzzy logic has also been applied to regulate operational parameters such as brush speed and fan power, based on dirt type and quantity, typically in human-operated machines rather than autonomous robots [25].

By integrating terrain classification, slope detection, and fuzzy control, it becomes possible to build genuinely terrain-aware cleaning robots. While prior studies have demonstrated individual components, such as terrain classification [5,26], slope detection [27], or fuzzy logic for the control of cleaning machines [25], these capabilities were typically explored in isolation and not combined into a unified, responsive system. To the best of our knowledge, no previous work has implemented a complete pipeline that integrates these modules for real-time, adaptive outdoor cleaning. This paper introduces a safety-aware pavement-cleaning robot that adjusts its driving speed, brush height, and brush speed in real time based on the detected floor type and slope, powered by a ResNet-based terrain classifier, IMU-based slope estimation, and a fuzzy logic control engine. LiDAR serves as the primary sensor, ensuring consistent perception performance in outdoor environments regardless of lighting variability.

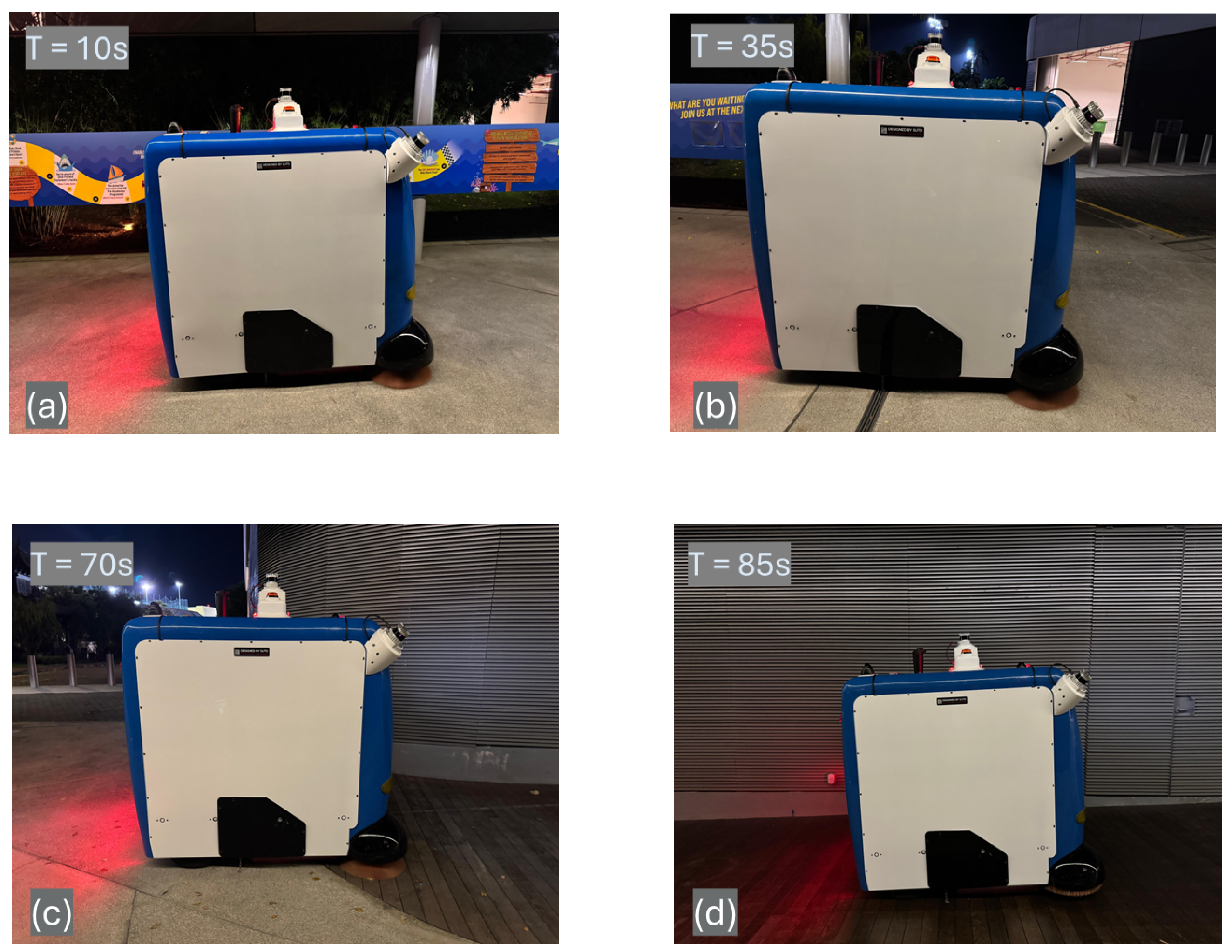

This work addresses the challenge of real-time terrain-adaptive cleaning for outdoor mobile robots operating on diverse and unpredictable surfaces. Unlike traditional cleaning systems that rely on fixed schedules or static configurations, Panthera 2.0 incorporates a fuzzy logic-based framework that dynamically adjusts key cleaning parameters, including brush height, brush speed, and robot velocity, based on live terrain classification and slope estimation. By relying solely on LiDAR signal data and IMU feedback, the system avoids the limitations of vision-based methods in low-light conditions. The proposed approach is validated through extensive experiments and offers a practical, energy-aware solution for safe and effective autonomous cleaning in complex outdoor environments.

The rest of this paper is organized as follows. Section 2 presents the robot platform used for the implementation. The proposed terrain classification and adaptive cleaning are presented in Section 3. The results for validating the proposed approach are given in Section 4. Section 5 concludes this paper.

2. Robot Platform, Panthera

The Panthera 2.0 is an outdoor pavement-cleaning robot equipped with cleaning modules and a sensing system that enables terrain-adaptive cleaning. Designed specifically for outdoor public environments such as park connectors and urban walkways, Panthera is capable of operating efficiently across different urban settings. The robot integrates a smart lighting system comprising beacons, mode indicator lights, and a user-friendly dashboard to facilitate operator interaction. The Panthera robot is shown in Figure 1.

Depending on the cleaning scenario, the robot supports two main navigation strategies. For long, linear routes (e.g., park connectors), it follows a pre-planned point-to-point trajectory using a path-tracking controller such as Pure Pursuit. In contrast, when operating in wider, open areas like plazas or pedestrian squares, a grid-based coverage path planner is employed to ensure complete surface coverage. These navigation approaches are selected based on task context and terrain layout, allowing Panthera to flexibly adapt its cleaning behavior. While the robot features multiple functional subsystems, this paper focuses exclusively on the cleaning modules and terrain detection system, which are discussed in the following sections.

2.1. Sensing System

Terrain detection is primarily handled by a 128-channel Ouster LiDAR mounted at the front of the robot. To support autonomous navigation, a second 128-channel Ouster LiDAR is mounted on top and used for either mapping or localization, depending on the operational phase. This setup ensures accurate positioning in outdoor environments during cleaning operations. Additionally, a 32-channel LiDAR is positioned at the rear of the robot to assist with obstacle detection and avoidance. This sensor enhances safety by identifying static and dynamic objects in the robot’s blind spots, such as pedestrians, animals, or bicycles. The arrangement of these LiDAR sensors is shown in Figure 1. In addition, ten ultrasonic sensors are distributed around the chassis to enable close-range obstacle detection, further enhancing autonomous navigation capabilities. To monitor the robot’s orientation during slope traversal, a VN-100 IMU from VectorNAV (Dallas, USA) is used. Although the IMU outputs orientation as a quaternion (x, y, z, w), only the pitch angle is extracted from it, as pitch is the quantity needed to evaluate cleaning performance during uphill movement.
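The pitch extraction mentioned above can be sketched as follows. This is a minimal illustration, assuming a unit quaternion in (x, y, z, w) order and the common Z-Y-X (yaw-pitch-roll) Euler convention; the function name `pitch_from_quaternion` is hypothetical, and the sign of the result depends on how the sensor frame is mounted on the robot.

```python
import math


def pitch_from_quaternion(x, y, z, w):
    """Return pitch in degrees from a unit quaternion (x, y, z, w).

    Assumption: Z-Y-X (yaw-pitch-roll) Euler convention. The sign of the
    result depends on the sensor's mounting frame.
    """
    # sin(pitch) for the Z-Y-X convention; clamp to guard against rounding drift
    sin_pitch = 2.0 * (w * y - z * x)
    sin_pitch = max(-1.0, min(1.0, sin_pitch))
    return math.degrees(math.asin(sin_pitch))


# Example: a pure rotation of 7 degrees about the y-axis
half_angle = math.radians(7.0) / 2.0
pitch = pitch_from_quaternion(0.0, math.sin(half_angle), 0.0, math.cos(half_angle))
print(round(pitch, 3))  # 7.0
```

Clamping the sine term keeps `asin` defined when numerical noise pushes the value slightly outside [-1, 1], which can happen with quaternions that are only approximately normalized.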

2.2. Cleaning Modules

The cleaning modules consist of a brush system, a vacuum system, and a standard bin for the storage of waste.

2.2.1. Brush System

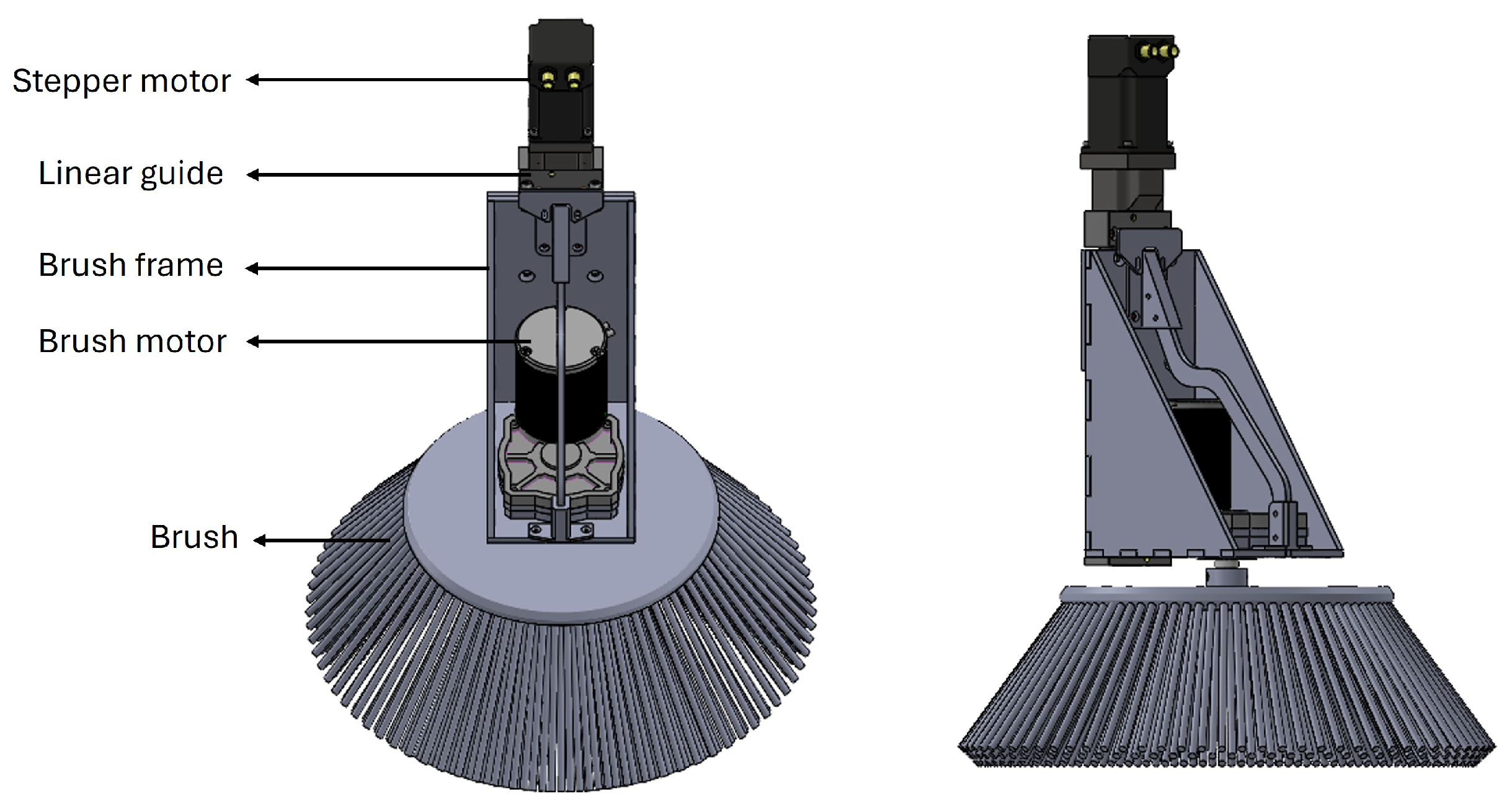

The brush system includes two types of brushes for sweeping: side brushes and cylindrical brushes. The Panthera 2.0 robot is equipped with two side brushes located on the front of the robot. The main function of the side brushes is to sweep the trash and dirt on the ground, directing them toward the central section of the robot. Side brushes also enable the robot to clean edges and reach tight corners, increasing the cleaning effectiveness of the robot. The side brush mechanism is shown in Figure 2. The mechanism has several key components that are essential for the side brushes to carry out their main function of sweeping. These components are the stepper motor, linear guide, brush frame, brush motor, and brush. A stepper motor and linear guide assembly is mounted to each brush frame. This assembly enables the vertical motion of the brush frame and the attached brush, allowing the brush to move up when not in use and move down to come into contact with the ground when performing cleaning operations. Each side brush is powered by a brush motor, which provides the rotational motion required for sweeping.

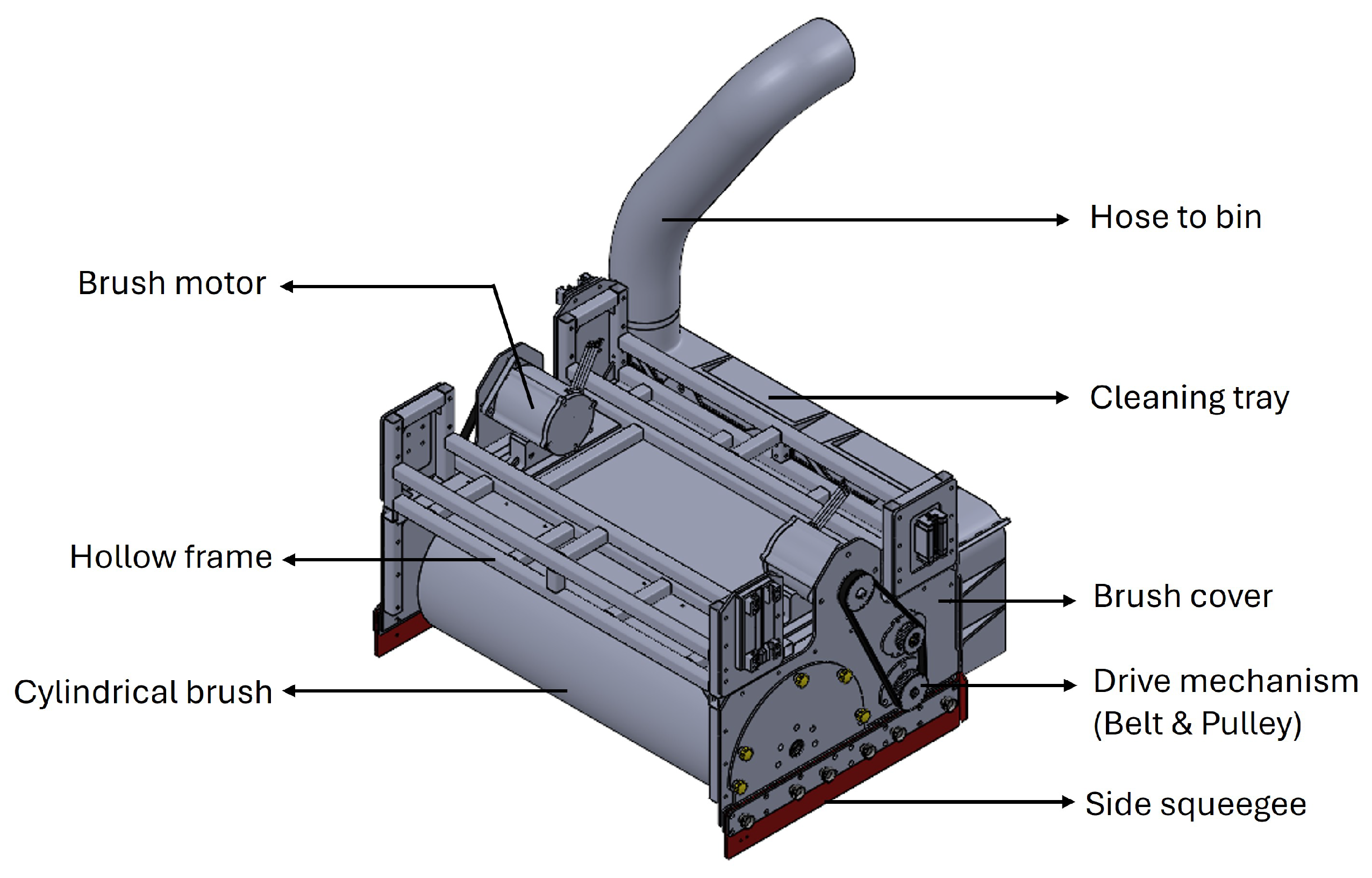

The Panthera 2.0 robot is also fitted with a cylindrical brush assembly as shown in Figure 3, which is located centrally under the robot. The main function of the cylindrical brush assembly is to collect all debris directed and swept in by the side brushes. The debris is subsequently picked up and transferred to the cleaning tray to be kept temporarily. The cylindrical brush assembly consists of several components such as brush motors, cylindrical brushes, a cleaning tray, and the drive mechanism. The assembly has a hollow frame and brush covers acting as a support structure to mount the components. Two counter-rotating cylindrical brushes operating at rotational speeds of between 700 and 1200 RPM help to gather debris from the ground and transfer it to the cleaning tray for temporary storage. Powering these two cylindrical brushes are brush motors that provide rotational motion through the drive mechanisms, comprising belts and pulleys. The combination of side brushes and cylindrical brushes enables the Panthera 2.0 robot to sweep and remove debris from the ground effectively.

2.2.2. Vacuum System and Bin

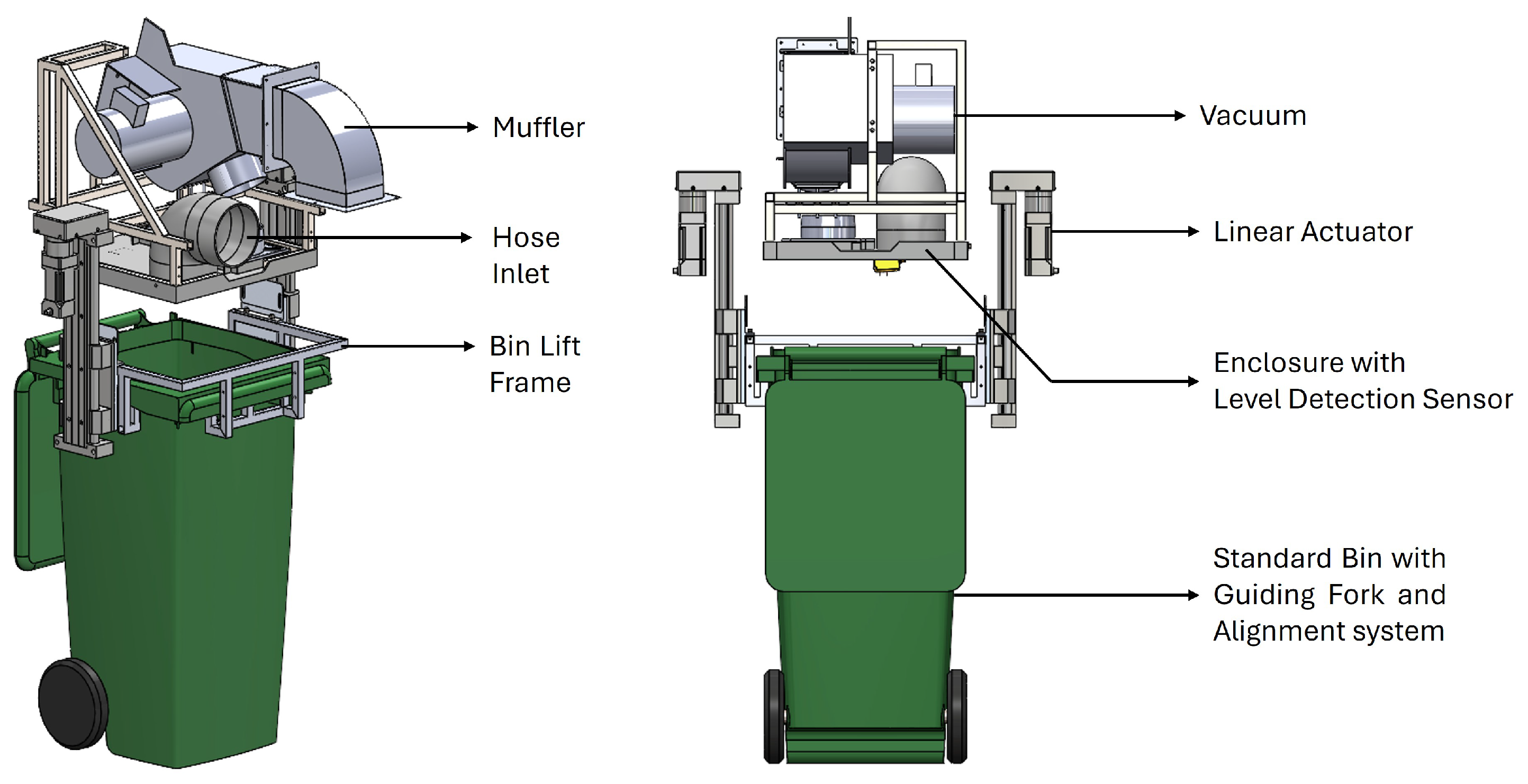

The primary purpose of the vacuum system is to draw debris from the cleaning tray and transport it into a standard size bin for storage. The vacuum is mounted to the bin by a bin mechanism assembly as shown in Figure 4. The vacuum system has two modes: vacuum mode and blower mode. In vacuum mode, the vacuum provides suction to draw debris from the cleaning tray into the bin via the hose. In blower mode, the vacuum expels air to help dislodge any debris stuck in the hose. A muffler fitted to the vacuum reduces motor exhaust noise and improves sound absorption, with minimal disruption to vacuum suction.

The bin found on the Panthera 2.0 robot is a 120 L standard bin, having dimensions of 0.9 m (H) × 0.4 m (W) × 0.4 m (L), and weighing approximately 2.2 kg. It is supported by a bin lift frame that moves up and down together with the bin. The bin is raised up to a height of 140 mm from the ground when the robot is moving around and performing cleaning operations. The bin is lowered to ground level when it is full and needs to be manually emptied by a human operator. This vertical motion is made possible by the bin mechanism assembly. The assembly is made up of three key components: linear actuators, an enclosure with level detection sensor, and a bin alignment system. Two linear actuators on each side of the bin deliver the force required to lift and lower the bin in a steady manner. Bin guiding forks and the alignment system consisting of level measurement sensors ensure that the bin is aligned perpendicularly to the ground while it is moving up or down. An enclosure provides a tight fitting cover when the bin is raised up fully, preventing any debris from dispersing out when the robot is cleaning. The trash level detection sensor mounted on the enclosure measures the level of rubbish currently in the bin and updates the user interface to notify robot users of the trash levels.

2.3. Motor Control

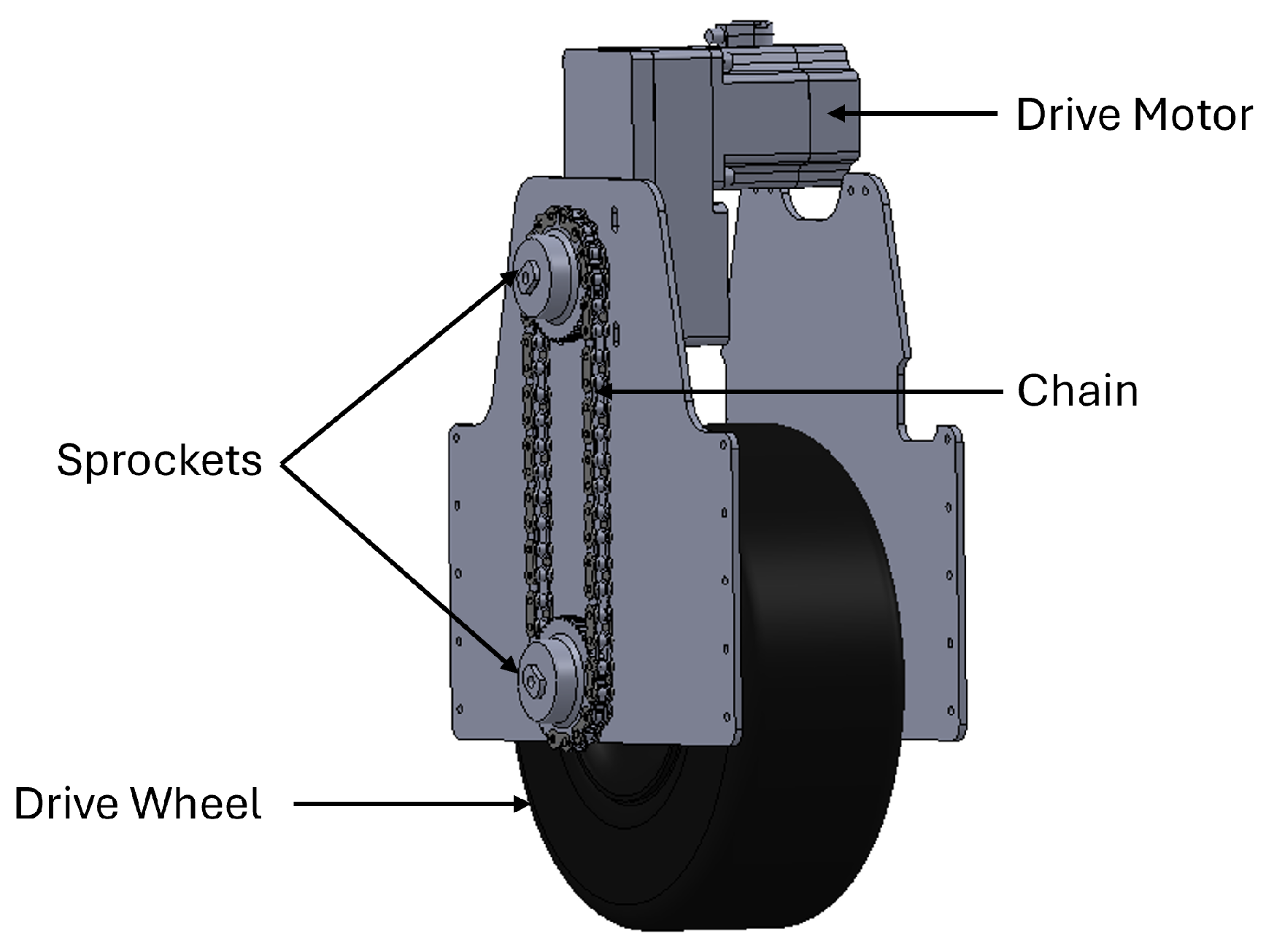

Panthera 2.0 relies on a network of motors and controllers to coordinate both locomotion and cleaning operations. For movement, the robot is equipped with two Oriental BLV640NM100F drive motors, each linked to a drive wheel via a chain-and-sprocket mechanism, as shown in Figure 5. These motors are regulated by an Oriental BLVD40NM motor controller, enabling precise adjustment of driving speed and direction. Both items are manufactured by Oriental Motor based in Tokyo, Japan.

For cleaning, each side brush assembly includes a dedicated motor for rotation and a stepper motor for vertical adjustment. Brush rotation is controlled via a Roboteq SBLG2360T controller, while height adjustments are handled by an IGUS D1 controller actuating a lifting motor. These components allow for real-time adaptation of brush height and brush speed to suit varying terrain conditions (see Figure 2).

All motor controllers interface with a central Industrial PC (IPC), which serves as the control hub for locomotion and cleaning. Communication occurs over the Modbus protocol, allowing the IPC to transmit real-time commands derived from the fuzzy inference engine. This architecture enables the robot to adjust its mechanical behavior in direct response to changes in floor type and slope detected by the LiDAR and IMU modules.

This unified control structure ensures that brush speed, brush height, and drive velocity are updated dynamically, supporting safe and efficient cleaning performance across diverse terrain conditions.

3. Terrain Classification and Adaptive Cleaning

3.1. Terrain Classification

For this paper, a ResNet-18 convolutional neural network architecture was utilized through the Roboflow platform to perform floor-type classification using LiDAR signal images. The dataset consisted of grayscale images generated from the signal intensity of a 128-channel Ouster LiDAR sensor, mounted on the front-right corner of the Panthera platform, and can be accessed in the

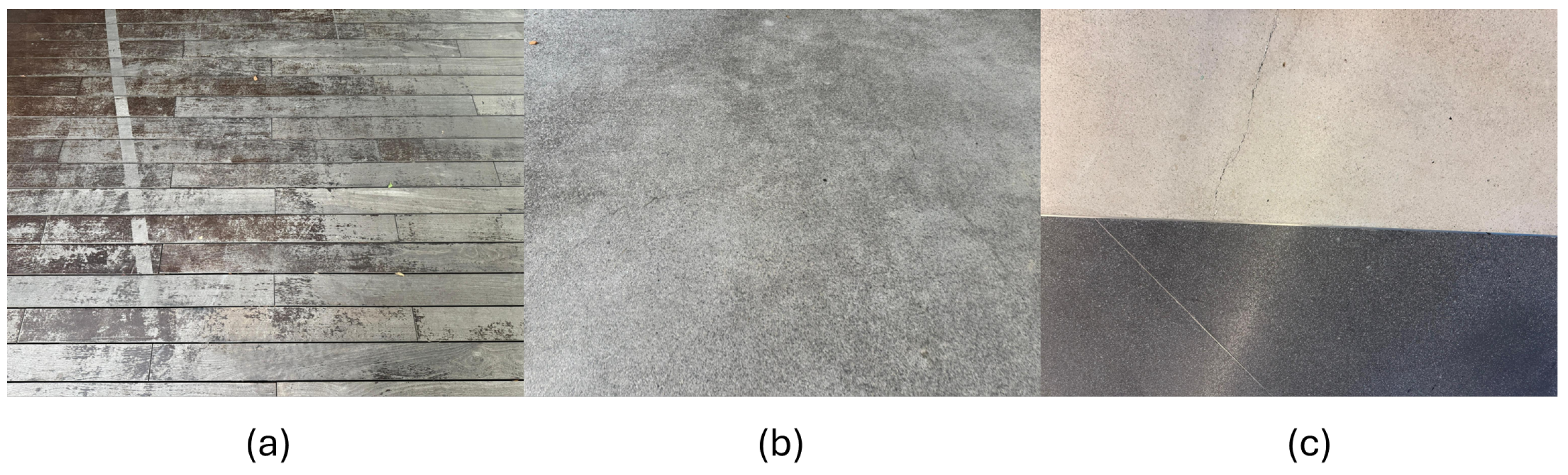

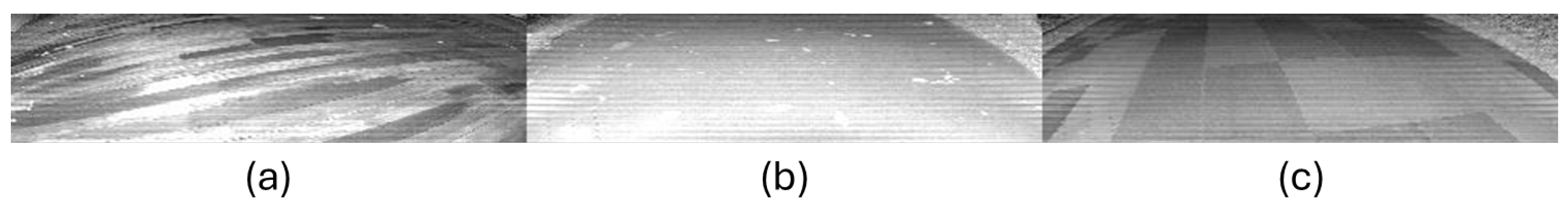

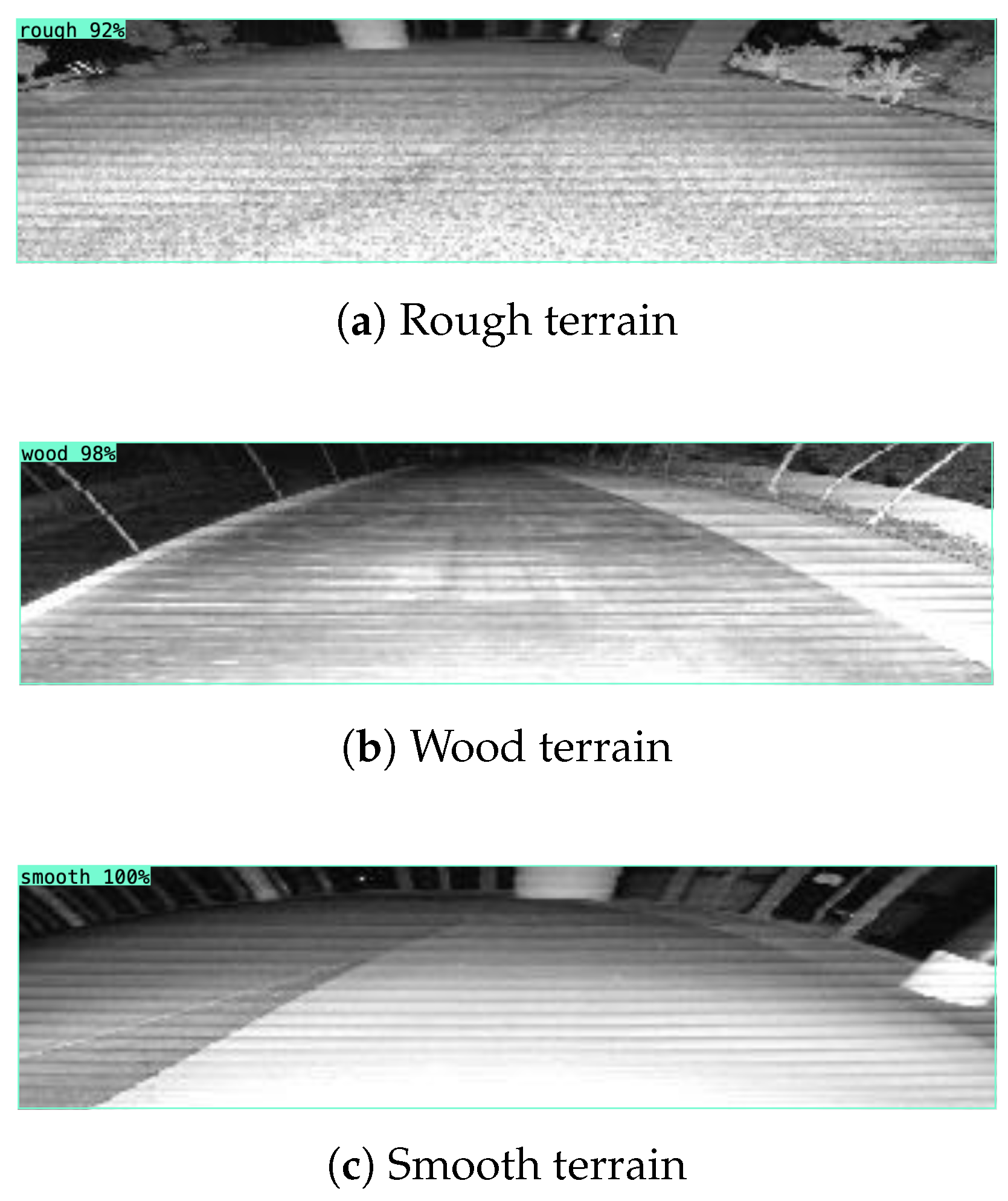

Supplementary Materials. The sensor, which is used for obstacle detection as well, is as such angled downward to capture consistent floor scans during motion. The classification task involved three floor categories: wood, characterized by distinct planks along with textured grain and straight, narrow gaps; smooth, comprising glossy and flat surfaces; and rough, consisting of gravel, asphalt, and concrete textures as shown in

Figure 6 and

Figure 7.

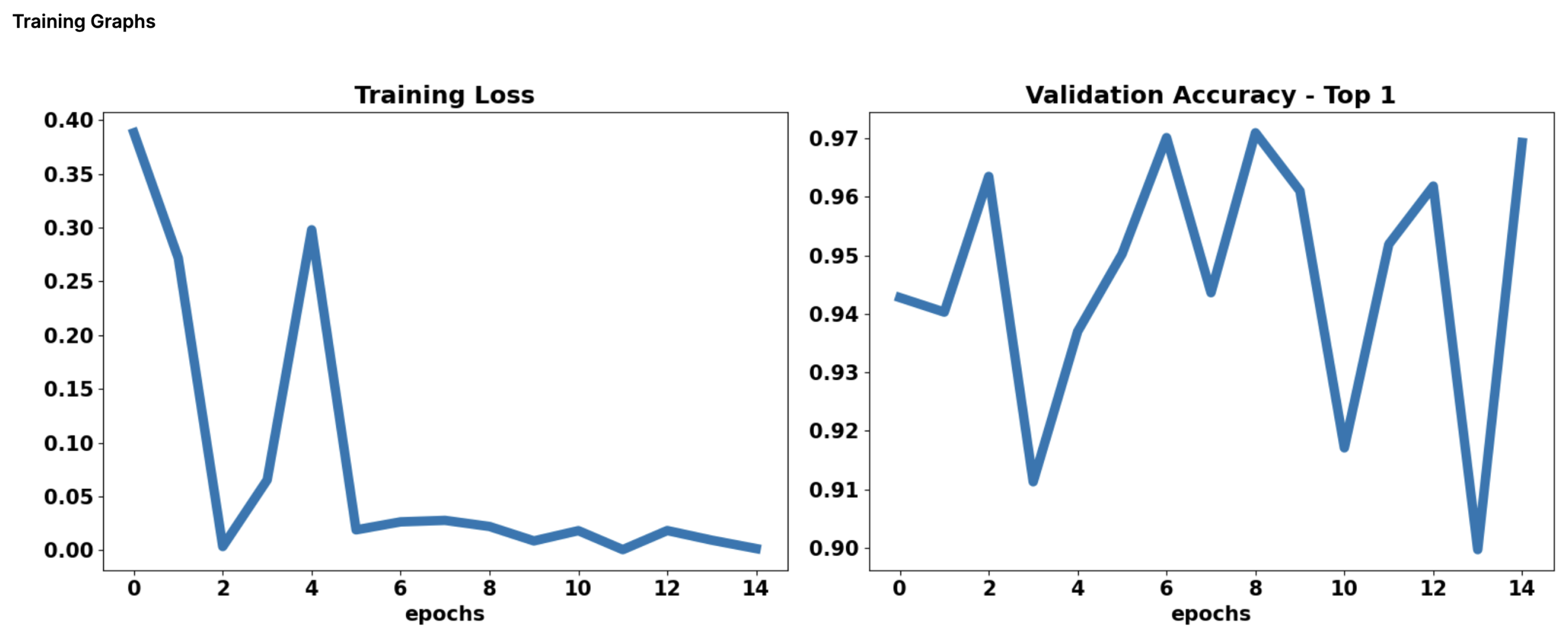

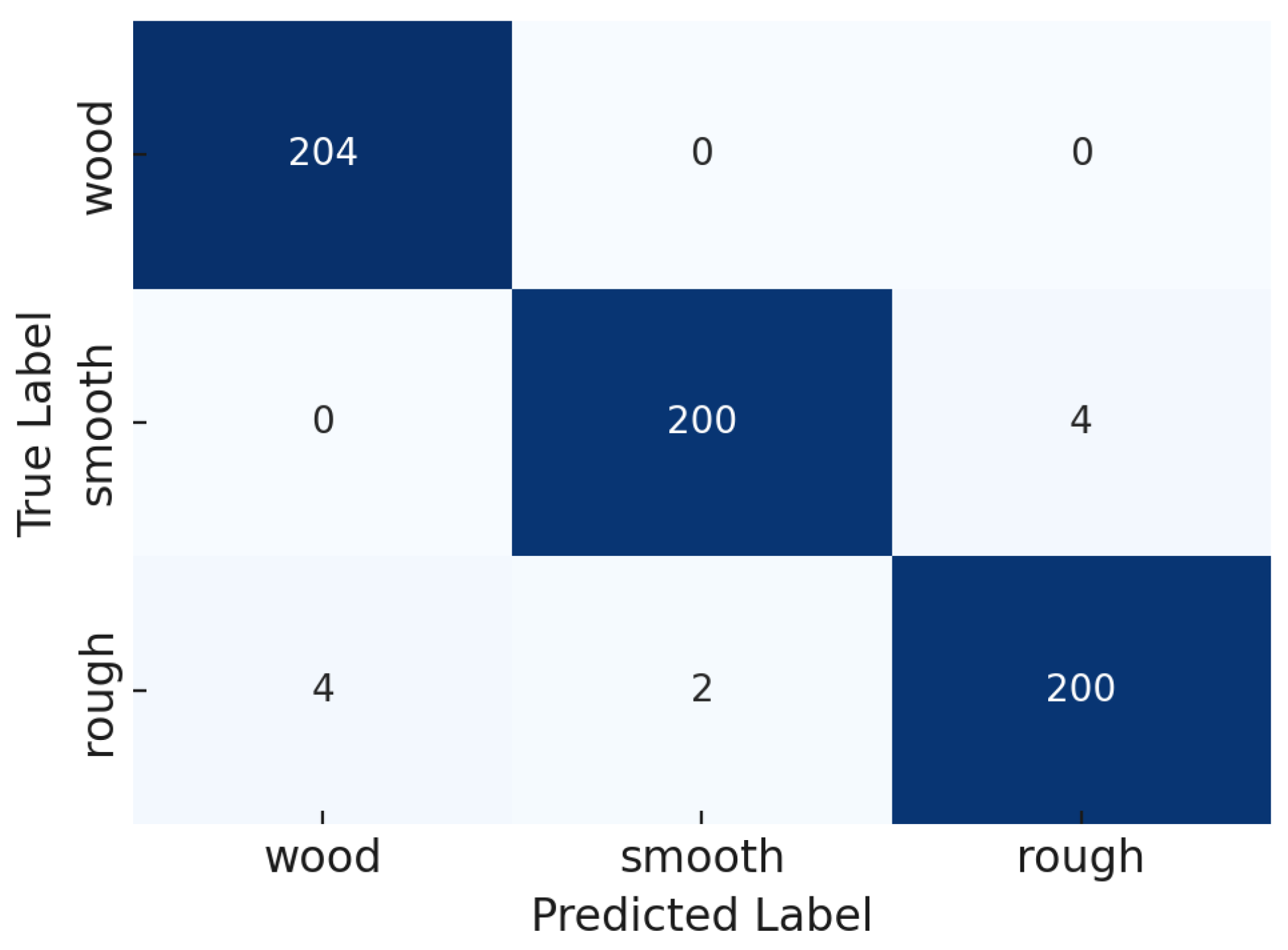

To focus exclusively on the floor and remove extraneous regions such as walls or distant objects, each raw image was cropped from a resolution of 1028 × 128 to 320 × 80 pixels. The resulting dataset was then split into training (70%), validation (20%), and testing (10%) subsets. To improve generalization and expose the model to realistic variation in the images, a series of augmentations was applied: horizontal and vertical flips, 90-degree rotations, and minor rotations of ±15 degrees. These augmentations help the model learn rotation-invariant features and make it more resilient to changes in robot orientation and floor alignment during deployment. Crucially, no photometric augmentations such as blurring or brightness shifts were applied, as LiDAR signal images are inherently invariant to lighting conditions and do not show the photometric distortions found in conventional RGB images. After all augmentations were applied, the final dataset consisted of 14,727 images. The model was initialized with pre-trained ImageNet weights to enable effective transfer learning by adapting features learned from large-scale natural image datasets to the domain of LiDAR-based floor classification.
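The cropping and splitting steps can be illustrated with a short sketch. The image sizes and the 70/20/10 ratios come from the text above; the position of the crop window is not stated in the paper, so the bottom-centre placement used in the example is purely an assumption, as are the helper names `crop_box` and `split_dataset`.

```python
import random

RAW_W, RAW_H = 1028, 128    # raw image size quoted in the text
CROP_W, CROP_H = 320, 80    # cropped size quoted in the text


def crop_box(left, top):
    """Return (left, top, right, bottom) of a 320 x 80 window inside the raw frame."""
    right, bottom = left + CROP_W, top + CROP_H
    if left < 0 or top < 0 or right > RAW_W or bottom > RAW_H:
        raise ValueError("crop window falls outside the raw image")
    return (left, top, right, bottom)


def split_dataset(items, seed=0):
    """Shuffle and split items into 70% training, 20% validation, 10% testing."""
    items = list(items)
    random.Random(seed).shuffle(items)      # fixed seed keeps the split reproducible
    n_train = len(items) * 70 // 100        # integer arithmetic avoids float rounding
    n_val = len(items) * 20 // 100
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


# Assumed window placement: horizontally centred, aligned with the bottom rows
box = crop_box(left=(RAW_W - CROP_W) // 2, top=RAW_H - CROP_H)
train, val, test = split_dataset(range(1000))
print(box, len(train), len(val), len(test))  # (354, 48, 674, 128) 700 200 100
```

Splitting before augmentation, rather than after, is the usual way to keep augmented copies of one scan from appearing in both the training and testing subsets; the paper does not state which order was used.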

3.2. Adaptive Cleaning

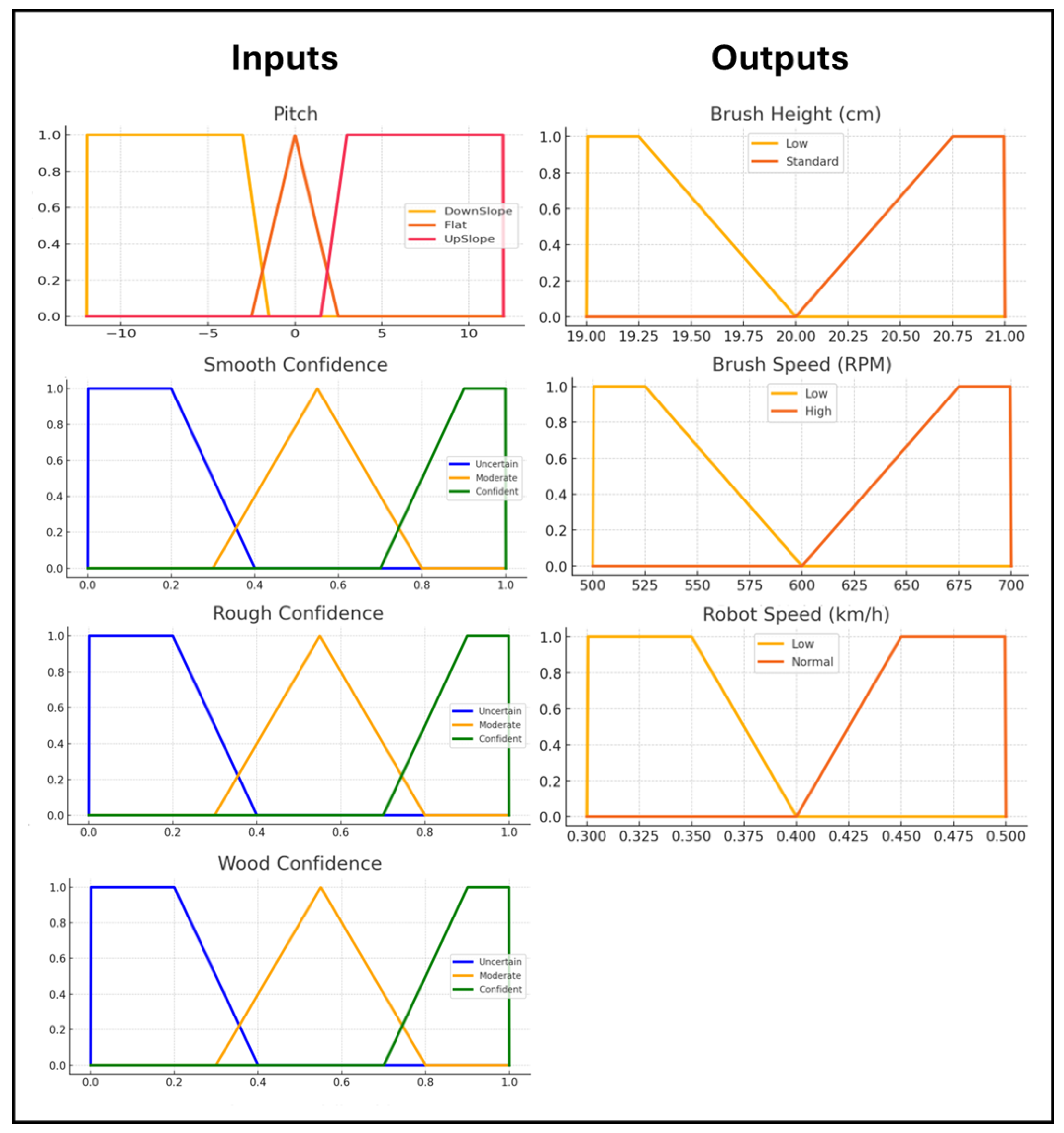

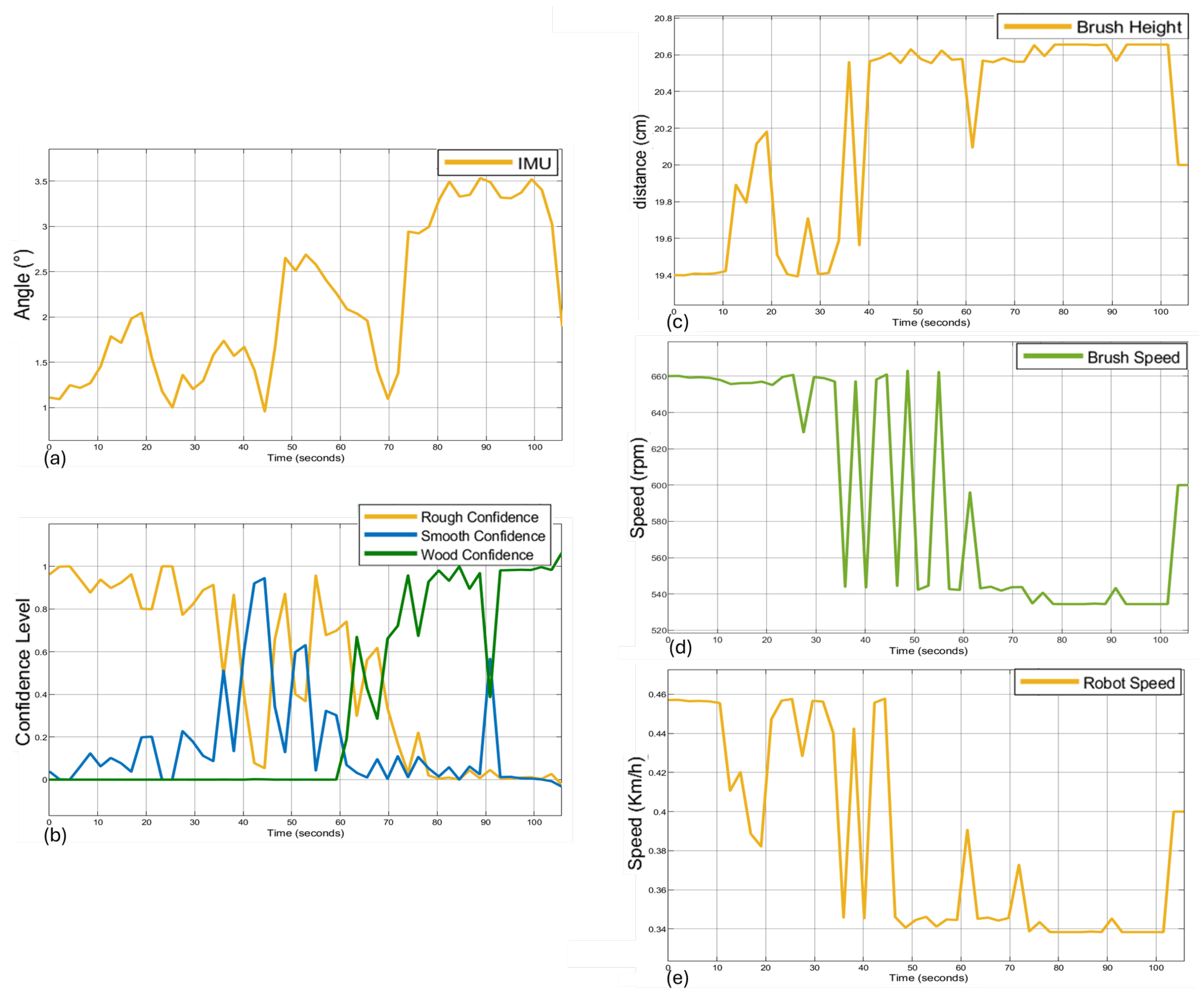

To enable adaptive cleaning behavior based on the terrain classification output, a fuzzy logic system was developed to convert surface and slope characteristics into real-time cleaning commands. The fuzzy logic engine was designed to take four inputs: the confidence scores for wood, smooth, and rough floors obtained from the ResNet classifier (normalized between 0 and 1 and mapped to fuzzy sets of Uncertain, Moderate, and Confident), and the pitch angle from the IMU, which was categorized into DownSlope, Flat, and UpSlope using predefined trapezoidal and triangular membership functions. The fuzzy outputs control three key cleaning parameters: brush height, brush speed, and robot speed. Each output was mapped into fuzzy categories, such as Low or Standard brush height, Low or High brush speed, and Low or Normal robot speed, with membership values calibrated through experimental data. The membership functions used are shown in

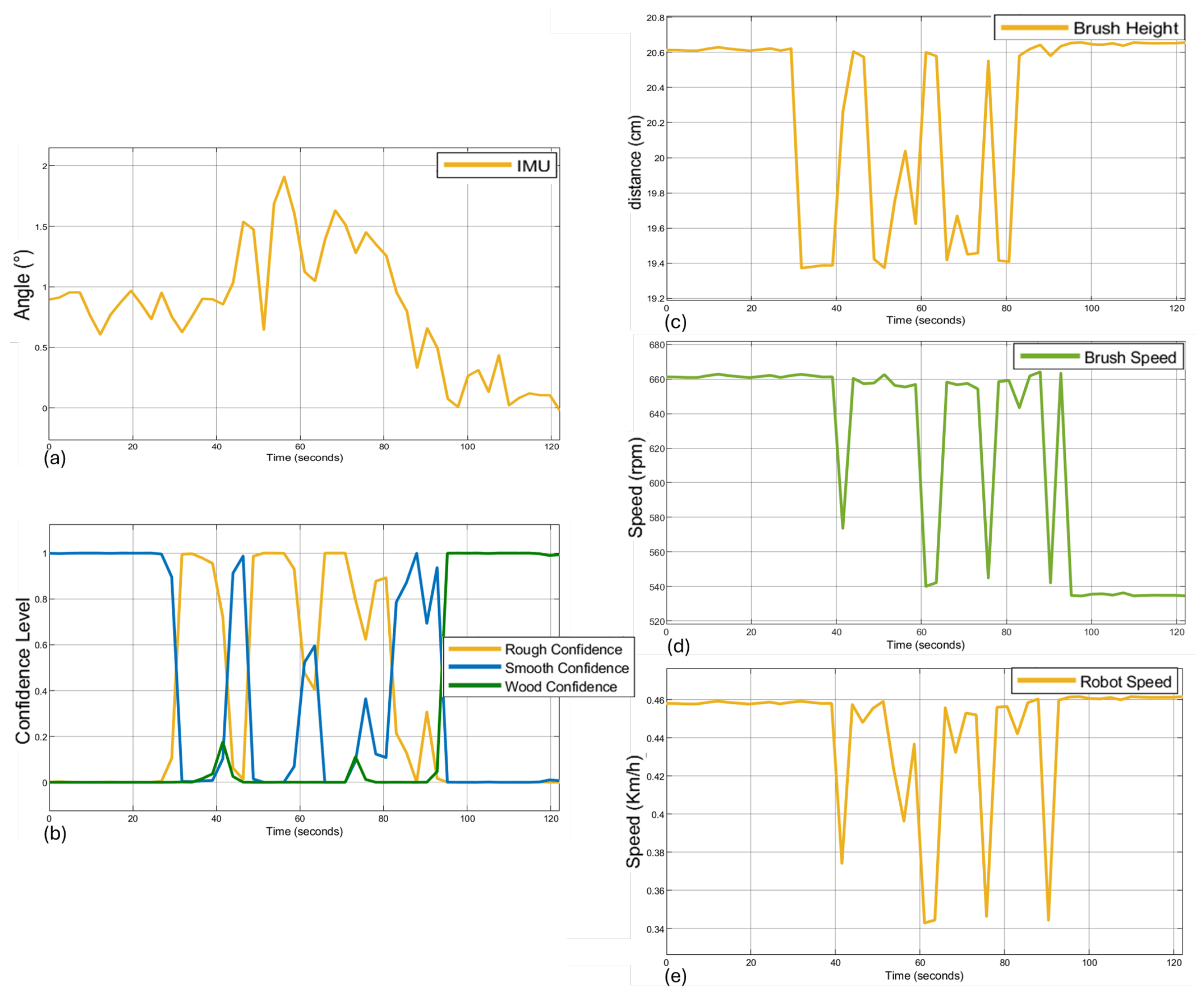

Figure 8.
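As an illustration of how trapezoidal and triangular membership functions turn a pitch reading into fuzzy degrees, consider the sketch below. The three labels come from the text; the breakpoints are hypothetical placeholders spread over a ±12° range and are not the calibrated values plotted in the figure.

```python
def trapmf(x, a, b, c, d):
    """Trapezoidal membership with feet at a and d and a plateau from b to c (a <= b <= c <= d)."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)    # rising edge
    return (d - x) / (d - c)        # falling edge


def trimf(x, a, b, c):
    """Triangular membership: a trapezoid whose plateau collapses to the peak b."""
    return trapmf(x, a, b, b, c)


def fuzzify_pitch(pitch_deg):
    """Map a pitch angle in degrees to membership degrees for the three slope labels."""
    p = max(-12.0, min(12.0, pitch_deg))    # clamp to the assumed +/-12 degree universe
    return {
        "DownSlope": trapmf(p, -12.0, -12.0, -6.0, 0.0),   # hypothetical breakpoints
        "Flat": trimf(p, -6.0, 0.0, 6.0),                  # hypothetical breakpoints
        "UpSlope": trapmf(p, 0.0, 6.0, 12.0, 12.0),        # hypothetical breakpoints
    }


print(fuzzify_pitch(3.0))  # {'DownSlope': 0.0, 'Flat': 0.5, 'UpSlope': 0.5}
```

A 3° reading therefore counts as partly Flat and partly UpSlope, which lets the rule base blend the corresponding actions instead of switching abruptly at a single threshold.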

To define the fuzzy rules, a total of 48 cleaning experiments were conducted, 16 for each terrain type: rough, smooth, and wood. For every surface type, eight tests were performed on flat ground and eight on inclined terrain, covering all combinations of three cleaning parameters: brush height (low/standard), brush speed (low/high), and robot speed (low/normal), encoded as binary triplets (e.g., 0, 0, 0 for all low settings). During each test, the average current drawn by the left and right brush motors was recorded, along with maximum and minimum values, to evaluate cleaning effort and surface resistance. A summary of selected results is shown in Table 1, highlighting conditions that motivated the definition of safe and energy-efficient fuzzy rules.

The fuzzy membership functions for pitch were defined with conservative thresholds of ±12°, based on empirical slope measurements observed in some outdoor deployment areas. During preliminary testing, slopes up to 7° were measured, which informed the need to support inclines beyond standard flat or mildly sloped environments. The ±12° range provides additional margin to account for unexpected terrain variations, ensuring the system remains responsive and robust under diverse real-world conditions. Similarly, the membership functions for the three cleaning parameters (brush speed, robot speed, and brush height) were defined based on trends observed during the same 48 cleaning experiments. The parameter cutoffs were chosen to reflect configurations that balanced cleaning performance with energy efficiency. For example, brush speeds below 600 RPM consistently led to lower power consumption on smooth and wooden surfaces, while higher speeds (above 675 RPM) were reserved for rough terrain or uncertain classifications. Robot speed membership ranges were selected to avoid instability at high speeds on slopes or delicate surfaces. Likewise, brush height boundaries were informed by current draw measurements: a low brush height (19–20 cm from home) provided effective contact on flat terrain, whereas slightly elevated positions (20.75–21 cm) reduced drag and current spikes on inclines. These experimentally derived boundaries form the basis for the fuzzy sets used in the rule base.
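The experiment count follows directly from the design: three terrains, two slope conditions, and every binary combination of the three cleaning parameters. The sketch below enumerates that grid; the ordering of the triplet (brush height, brush speed, robot speed) is assumed from the order in which the parameters are listed in the text.

```python
from itertools import product

TERRAINS = ("rough", "smooth", "wood")
SLOPES = ("flat", "inclined")

# Each triplet is (brush_height, brush_speed, robot_speed).
# 0 = low; 1 = standard / high / normal respectively (assumed ordering).
SETTINGS = list(product((0, 1), repeat=3))

experiments = [
    {"terrain": terrain, "slope": slope, "settings": settings}
    for terrain, slope, settings in product(TERRAINS, SLOPES, SETTINGS)
]

print(len(SETTINGS))      # 8
print(len(experiments))   # 48
print(experiments[0])     # {'terrain': 'rough', 'slope': 'flat', 'settings': (0, 0, 0)}
```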

Results from the rough terrain experiments indicated that higher brush heights consistently reduced motor current, especially on inclined surfaces. Low brush height combined with high robot speed significantly increased power draw. On smooth surfaces, parameter sensitivity was lower, with the robot tolerating a broader range of speeds and heights without excessive current. Wood surfaces required special caution due to potential surface damage under aggressive configurations. As a result, fuzzy rules for wood surfaces prioritize conservative configurations, especially when classification confidence is high. These findings align with expected physical interactions between terrain and cleaning hardware. Increased surface roughness and incline naturally elevate mechanical resistance, raising motor current. Conversely, smooth or flat surfaces present minimal resistance, supporting faster motion and more aggressive cleaning configurations.

A Mamdani-type fuzzy inference system with centroid defuzzification was implemented, resulting in a total of 81 rules governing the output actions. These rules were derived empirically from trends observed across the 48 cleaning experiments and encode terrain-dependent control strategies. For instance, when terrain is classified as rough with high confidence and the slope is upward, the robot slows down and raises the brush to reduce drag. Conversely, when smooth terrain is detected on flat ground, the system increases speed and lowers the brush for optimal coverage. In cases of uncertainty or conflicting terrain confidence, the system defaults to a safe fallback rule with low brush speed, low robot speed, and standard brush height to avoid damage. A representative subset of these rules is listed in Table 2.

3.3. Mathematical Formulation of the Fuzzy Inference System

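Let $\theta$ denote the pitch angle obtained from the IMU, and let $c_r$, $c_s$, and $c_w$ represent the confidence levels for rough, smooth, and wood terrain classifications, respectively, as output by the LiDAR-based ResNet model. Each input is associated with fuzzy sets defined via trapezoidal or triangular membership functions, denoted $\mu_j(\cdot)$, where $j$ indicates the fuzzy label (e.g., Flat, UpSlope, Confident).

The fuzzy rule base comprises 81 rules of the form:

$$R_k:\ \text{IF } \theta \text{ is } A_k^{\theta} \text{ AND } c_r \text{ is } A_k^{r} \text{ AND } c_s \text{ is } A_k^{s} \text{ AND } c_w \text{ is } A_k^{w} \text{ THEN } \mathbf{y} \text{ is } B_k, \qquad k = 1, \dots, 81,$$

where $A_k^{\theta}$, $A_k^{r}$, $A_k^{s}$, and $A_k^{w}$ are fuzzy sets corresponding to the input conditions in rule $k$, and $B_k$ defines the output actions: brush speed, robot speed, and brush height.

A Mamdani-type fuzzy inference system is used, where individual rule outputs are aggregated, and final crisp values are obtained via centroid defuzzification. For each output variable $y_i$, the defuzzified result is calculated as:

$$y_i^{*} = \frac{\int y \, \mu_{\mathrm{agg},i}(y) \, dy}{\int \mu_{\mathrm{agg},i}(y) \, dy},$$

where $\mu_{\mathrm{agg},i}(y)$ is the aggregated membership function for the $i$-th output parameter.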

This formulation enables real-time terrain-aware adjustment of cleaning parameters based on both surface classification confidence and slope, improving operational robustness, energy efficiency, and cleaning adaptability.
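A compact numerical sketch of Mamdani inference with centroid defuzzification for a single output is given below. It assumes the usual min operator for AND, clipping of each consequent at its rule's firing strength, and max aggregation; the paper does not state which operators were used. The robot-speed sets, their units (m/s), and the input degrees in the example are hypothetical placeholders.

```python
def tri(y, a, b, c):
    """Triangular membership with feet at a and c and peak at b (a < b < c)."""
    if y <= a or y >= c:
        return 0.0
    return (y - a) / (b - a) if y <= b else (c - y) / (c - b)


# Hypothetical output sets for robot speed in m/s (placeholder breakpoints)
ROBOT_SPEED_SETS = {
    "Low": lambda y: tri(y, 0.0, 0.2, 0.4),
    "Normal": lambda y: tri(y, 0.2, 0.4, 0.6),
}


def mamdani_centroid(rules, output_sets, y_min, y_max, steps=1000):
    """Return the centroid of the aggregated output membership function.

    rules: list of (antecedent_degrees, output_label) pairs, where
    antecedent_degrees holds the membership degree of each input condition.
    """
    numerator = denominator = 0.0
    for i in range(steps + 1):
        y = y_min + (y_max - y_min) * i / steps
        # Firing strength = min over antecedents; clip the consequent; aggregate with max
        mu = max(
            (min(min(degrees), output_sets[label](y)) for degrees, label in rules),
            default=0.0,
        )
        numerator += y * mu
        denominator += mu
    if denominator == 0.0:
        raise ValueError("no rule fired for the given inputs")
    return numerator / denominator


# Two illustrative rules with made-up input degrees:
#   rough is Confident (0.8) AND pitch is UpSlope (0.5) -> robot speed Low
#   smooth is Confident (0.2) AND pitch is Flat (0.5)   -> robot speed Normal
rules = [((0.8, 0.5), "Low"), ((0.2, 0.5), "Normal")]
speed = mamdani_centroid(rules, ROBOT_SPEED_SETS, 0.0, 0.6)
print(round(speed, 3))
```

Because the first rule fires more strongly, the crisp speed lands closer to the peak of the Low set than to that of the Normal set. The sum over evenly spaced samples approximates the two integrals in the centroid formula; the sample spacing cancels in the ratio.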

All fuzzy rules and membership functions were implemented onboard the robot’s control architecture, enabling real-time terrain-aware decision-making. This integration allows the robot to dynamically adjust its cleaning parameters in response to terrain classification and slope inputs, supporting energy efficiency, operational safety, and improved cleaning effectiveness in diverse environments. While the current fuzzy system uses a fixed rule base of 81 empirically derived rules, its scalability is limited due to the rapid growth of rules with each added input category. For instance, adding more terrain types would significantly expand the rule set. Future work could explore ways to simplify the rule base or automate its adaptation, making the system easier to maintain and extend to new environments.
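The scalability concern can be made concrete with a small calculation. Assuming the rule base is a full grid over its inputs (four inputs with three fuzzy sets each, which matches the 81 rules reported), the rule count is the product of the number of sets per input; the helper name `rule_count` is hypothetical.

```python
from math import prod


def rule_count(sets_per_input):
    """Number of rules in a full-grid rule base: one rule per combination of input labels."""
    return prod(sets_per_input)


print(rule_count([3, 3, 3, 3]))      # 81: three terrain confidences plus pitch
print(rule_count([3, 3, 3, 3, 3]))   # 243: one additional terrain confidence input
```

Under this assumption, each additional terrain class triples the number of rules that must be written and validated by hand.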

3.4. Overall Architecture

Figure 9 illustrates the overall control architecture of the terrain-adaptive fuzzy logic system, showing the flow from LiDAR and IMU sensors through the classification model to the fuzzy inference system and adaptive cleaning commands.

5. Conclusions

This work proposed a terrain-aware fuzzy logic control system for adaptive cleaning in autonomous outdoor robots. The system combines LiDAR-based terrain classification, IMU-based slope detection, and a fuzzy inference engine to dynamically adjust key cleaning parameters, such as brush speed, robot speed, and brush height, in real time.

A ResNet-18 model was trained on LiDAR signal intensity images to classify terrain into three categories: rough, smooth, and wood. Classification confidence scores were mapped to fuzzy sets, along with slope angle input from the IMU, to drive a Mamdani-type fuzzy controller composed of 81 rules. These rules were empirically derived from 48 cleaning experiments designed to evaluate the relationship between terrain type, cleaning parameters, and brush motor current.

The system was validated through two real-world experiments involving flat and sloped terrain transitions. Results demonstrated that the robot successfully adjusted its behavior according to terrain conditions, raising brush height and reducing speed on delicate or sloped surfaces, while increasing brush speed and lowering brush height on rough surfaces to improve contact. These findings support the system’s ability to enhance safety, cleaning effectiveness, and energy efficiency in unstructured outdoor environments. Notably, all experiments were performed under night-time conditions, confirming the system’s robustness in low-light environments.

Future work will focus on integrating multi-sensor fusion to improve terrain classification robustness under variable environmental conditions, and on extending the control logic to handle wet or slippery surfaces, where traction and cleaning dynamics present additional challenges.