Abstract

Large language models (LLMs), which train hundreds of millions of parameters or more on large text corpora to understand and generate natural language, have attracted considerable attention across many fields because of their strong performance. As this performance has become apparent, LLMs are increasingly being applied to knowledge graph embedding (KGE)-related tasks to improve results. Traditional KGE representation learning methods map entities and relations into a low-dimensional vector space so that the triples in the knowledge graph satisfy a specific scoring function in that space. Because LLMs offer powerful language understanding and semantic modeling capabilities, they have recently been invoked to varying degrees in different types of KGE-related scenarios, such as multi-modal KGE and open KGE, according to the characteristics of each task. Researchers are increasingly exploring how to integrate LLMs to enhance knowledge representation, improve generalization to unseen entities or relations, and support reasoning beyond static graph structures. In this paper, we survey a wide range of approaches that apply LLMs to tasks in different types of KGE scenarios. To make the approaches easier to compare, we classify the methods within each KGE scenario. We also discuss the applications in which these methods are mainly used and suggest several forward-looking directions for this new research area.

MSC:

94-11

1. Introduction

Knowledge graphs (KGs) organize and represent knowledge as a graph structure, describing complex real-world associations through entities, attributes, and relations. With the development of society and the advancement of science and technology, KGs have been used more and more widely in applications that contain rich semantic information across different fields. Compared to traditional data analysis methods, KGs can model heterogeneous relations more intuitively and enable interpretable reasoning across various application domains [1]. At present, KGs are widely used in fields such as social networks [2,3], bioinformatics networks [4,5], and traffic flow prediction [6,7], where they have been shown to perform well. Traditional approaches to these tasks typically rely on learning knowledge graph embedding (KGE) representations as a foundational step. KGs organize factual knowledge as structured semantic networks, while KGEs provide differentiable vector-space representations that preserve the topological and relational properties of KGs for downstream analytic tasks. To illustrate the relationship between KGs and KGEs, suppose that a KG contains the entities “Elon Musk” and “Tesla Inc.”, as well as the relation “founded”. The task of KGE is to map these entities and relations into a low-dimensional vector space. For example, the embedding vector for “Elon Musk” might be ‘[0.1, 0.2, 0.3]’, for “founded” it might be ‘[0.4, 0.5, 0.6]’, and for “Tesla Inc.” it might be ‘[0.7, 0.8, 0.9]’. Through this embedding, the triples in the KG can satisfy certain semantic relations in the vector space, such as ‘embedding (Elon Musk) + embedding (founded) ≈ embedding (Tesla Inc.)’. In this example, the dimensions and initial values of the entity vectors are generated from predefined rules rather than at random. Such low-dimensional embeddings preserve the structural information of the KG while supporting complex reasoning and analytical tasks, and downstream tasks are then carried out on the basis of these KGE representations. However, deep learning models in traditional approaches often suffer from performance limitations due to their training scale. Although LLMs require large amounts of text data for training, they are increasingly being applied to KGE-related tasks because of their multiple advantages. Pre-trained on massive text data, LLMs capture rich linguistic patterns and semantic knowledge, which can be transferred to KGE tasks to provide a strong foundation for learning the relations and structures within knowledge graphs.
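The “Elon Musk” example in the paragraph above can be sketched in a few lines of Python. This is a minimal illustration, assuming a translation-style scoring rule (head + relation ≈ tail) measured with an L2 distance; the function name `translation_distance` is hypothetical, and the vectors are the toy values from the text rather than learned embeddings.

```python
import math

# Toy embeddings copied from the example in the text (illustrative values only).
embeddings = {
    "Elon Musk": [0.1, 0.2, 0.3],
    "founded": [0.4, 0.5, 0.6],
    "Tesla Inc.": [0.7, 0.8, 0.9],
}


def translation_distance(head, relation, tail):
    """L2 distance between (head + relation) and tail; smaller means more plausible."""
    h, r, t = embeddings[head], embeddings[relation], embeddings[tail]
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


distance = translation_distance("Elon Musk", "founded", "Tesla Inc.")
print(f"distance = {distance:.4f}")
```

With these toy values, head + relation is roughly [0.5, 0.7, 0.9], so the distance to the tail vector is small but not zero, which is what the “≈” in the example expresses.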

A large language model (LLM) is a deep learning model trained on massive text data that can understand and generate human language and can perform various natural language processing tasks (such as translation and text generation) through in-context learning. Because of this strong performance, LLMs are increasingly being applied to KGE-related tasks. LLMs can be built on datasets that are several times larger than those of traditional models. When combined with techniques such as fine-tuning and prompting, they can outperform traditional predictive models on KGE tasks [8,9,10,11,12]. The aim of this paper is to provide a comprehensive overview and structured categorization of the approaches that apply LLMs to tasks in different KGE scenarios.

Unlike previous surveys, which offer fragmented or narrow coverage, this survey aims to provide a comprehensive overview of work on various KGE scenarios in the context of LLMs. By organizing the topic according to the different ways in which LLMs are invoked, it covers KGE application scenarios and use cases broadly and fills gaps left by previous works. One previous review [13] classifies LLMs and KGE from the perspective of enhancement methods, and another [14] classifies the different stages of KG enhancement and pre-trained language model enhancement based on LLMs. As research progresses, new application scenarios for KGE continue to emerge. However, an overview that classifies LLM usage for KGE representation learning across these application scenarios is still lacking. A number of earlier surveys address either KGE or LLMs alone. Some consider only KGE. For example, the review by Liu et al. [15] discusses in detail the current application status, technical methods, practical applications, challenges, and future prospects of KGEs in industrial control system security situational awareness and decision-making. A review by Zhu et al. [16] summarizes KG entity alignment methods based on representation learning, proposes a new framework, and compares the performance of different models on diverse datasets in detail. Others consider only applications related to LLMs. For example, a review by Huang et al. [17] explores the security and credibility of LLMs in industrial applications, analyzes vulnerabilities and limitations, examines integrated and extended verification and validation techniques, and contributes to the full-lifecycle security of LLMs and their applications. Kumar [18] comprehensively explores the applications of LLMs in language modeling, word embedding, and deep learning, analyzes their diverse applications in multiple fields and the limitations of existing methods, and proposes future research directions and potential progress. These studies primarily focus on isolated aspects of KGE or LLM applications. In contrast, our work presents a comprehensive investigation into the integration of large language models (LLMs) with knowledge graph embeddings (KGEs).

While Liu et al. [15] provided a detailed analysis of KGE applications in industrial control systems, covering technical approaches and practical challenges, their work was limited to a single domain without exploring the synergistic potential with LLMs, resulting in insufficient generalizability. Zhu et al. [16] systematically summarized representation learning-based KG entity alignment methods, yet failed to consider LLMs’ dynamic reasoning capabilities in entity alignment. Huang et al. [17] conducted an in-depth examination of security risks in LLM industrial applications and proposed lifecycle verification techniques with strong practical guidance, but they completely overlooked the role of KGE in enhancing LLM trustworthiness (e.g., knowledge verification). Kumar [18] comprehensively reviewed LLM potential in language modeling and cross-domain applications, but lacked systematic analysis of KGE-LLM integration.

Integrating LLMs into KGE tasks brings significant advantages. The pre-trained knowledge of LLMs provides a strong semantic foundation for KGE models, reducing the number of iterations required compared with training from scratch and thereby shortening training time and accelerating model convergence. Meanwhile, incorporating LLMs lowers the dependence on large-scale labeled data: KGE models can achieve good performance with less labeled data, and the generative capabilities of LLMs can enhance data diversity and improve model generalization. In terms of hyperparameter tuning, the pre-trained structure and parameters of LLMs offer a stable reference, reducing the workload and difficulty of adjustment and improving tuning efficiency. Additionally, the efficient computing power and optimized pre-trained structure of LLMs lower energy consumption during training and inference and enhance the model’s ability to handle large-scale knowledge graphs. Overall, these advantages demonstrate great application potential in knowledge graph-related tasks and bring new breakthroughs to the field of knowledge graph embedding.

Through in-depth analysis, we have identified two common limitations in current survey works, outlined as follows: (1) insufficient research on the integration of KGE and LLMs, and (2) narrow research scopes or application scenarios. In response, our study makes the following two key contributions:

- We propose a unified methodology for KGE-LLM synergy, systematically analyzing their complementary enhancement mechanisms across diverse application scenarios (e.g., dynamic knowledge graphs and multimodal reasoning), thereby overcoming the single-perspective limitations of existing research.

- We introduce an innovative classification framework for LLM-KGE integration technologies across broader research domains and application scenarios, addressing the gaps in systematicity and scalability in current studies.

Building upon the research groundwork and surveys presented in the related papers above, we conduct a more comprehensive literature review. Our primary focus is on current KGE application scenarios, which we categorize and discuss according to the degree to which LLMs are invoked. This allows us to explore the domain comprehensively and to uncover nuances and relations that have not been fully examined before. This paper is organized as follows: In Section 2, we introduce basic concepts related to this paper. In Section 3, we discuss the application of LLMs in different KGE scenarios. In Section 4, we propose possible future applications and research directions. In Section 5, we introduce well-known datasets and code resources. Finally, we conclude the paper in Section 6.

2. Preliminaries

To ensure comprehensibility for the reader, we introduce relevant basic concepts in this section. We summarize all acronyms used throughout the manuscript in Table 1.

Table 1.

Symbols and their specific meanings.

2.1. Large Language Models (LLMs)

LLMs can be defined as a type of artificial intelligence technology based on deep learning, trained on massive text corpora to generate and understand natural language. Their basic process involves extracting language patterns from large-scale data, modeling them with neural networks, and generating high-quality language outputs based on user inputs. Their applications span various fields, including text generation [19], machine translation [20], question answering [21], sentiment analysis [22], and content summarization [23]. Compared to traditional methods, LLMs offer superior contextual understanding, higher output quality, and enhanced transfer learning capabilities.

2.2. KGE-Related Tasks

In this section, we introduce several tasks related to knowledge graph embedding.

Relationship Between KG and KGE

With the development of society and the progress of science and technology, graph data have been increasingly used in various fields, such as social networks [24,25], academic networks [26], and biological networks [27]. Graph data in these domains often consist of multiple types of nodes and edges that carry rich semantic information, and downstream applications place high demands on representing this information accurately. KGs are structured representations of knowledge that model real-world entities, their attributes, and their interrelationships as nodes and edges in a semantic network, enabling formalized knowledge organization and storage. Building upon this structured foundation, KGEs map the entities and relations of a KG into a low-dimensional continuous vector space, preserving the inherent relational patterns while transforming discrete graph structures into numerical forms suitable for machine learning and statistical inference. KGEs can capture the complex structure and rich semantic information of graph data in various domains, which facilitates the management, use, and maintenance of large amounts of graph data. At present, KGEs are widely used in fields such as medicine [28,29] and news media [30], and they have become one of the core technologies of today’s scientific and technological development. Currently, several widely used benchmark datasets [31,32,33] contain millions of entities and relations, including information about locations, music, and more. These datasets are commonly used for evaluation tasks across various domains. In some specific evaluation tasks, dedicated datasets are also used, such as the COMBO dataset used to evaluate open KGs [34].

2.3. Classic Knowledge Graph (CKG)

In the remainder of this section, we discuss several classic task scenarios.

2.3.1. Link Prediction

Link prediction is a core task in KG completion, aimed at predicting missing entity relation pairs (i.e., missing edges) in a KG [35]. The goal is to predict potential new entity relation pairs based on existing entities and relations.
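As a rough illustration of link prediction with embeddings, the sketch below ranks candidate tail entities for an incomplete triple (head, relation, ?). It assumes a translation-style scorer (negative squared distance between head + relation and tail); the entity names, 2-d vectors, and the helper `rank_tails` are hypothetical and not taken from any cited method.

```python
def score(head_vec, rel_vec, tail_vec):
    """Negative squared distance between (head + relation) and tail; higher is better."""
    return -sum((h + r - t) ** 2 for h, r, t in zip(head_vec, rel_vec, tail_vec))


def rank_tails(head_vec, rel_vec, candidates):
    """Return candidate tail names sorted from most to least plausible."""
    return sorted(
        candidates,
        key=lambda name: score(head_vec, rel_vec, candidates[name]),
        reverse=True,
    )


# Hypothetical 2-d embeddings, chosen by hand for illustration.
head = [1.0, 0.0]
relation = [0.0, 1.0]
candidate_tails = {
    "entity_a": [1.0, 1.0],  # exactly head + relation
    "entity_b": [0.0, 0.0],
    "entity_c": [1.0, 0.5],
}

ranking = rank_tails(head, relation, candidate_tails)
print(ranking)
```

The top-ranked candidate is the predicted missing edge; evaluation metrics for link prediction are typically computed from the position of the true tail in such a ranking.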

2.3.2. Entity Alignment

Entity alignment is a key task in KG fusion. Its goal is to find nodes (entities) in multiple KGs that represent the same real-world entity and align them. For example, the “Barack Obama” entity in one KG should correspond to the “Obama president” entity in another KG. Entity alignment is crucial for KG integration, data fusion, and cross-domain graph inference.
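One simple way to picture embedding-based entity alignment is nearest-neighbor matching by cosine similarity. The sketch below assumes that both KGs have already been embedded into a shared vector space; the vectors, the threshold of 0.9, and the helper `align` are hypothetical choices for illustration only.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def align(kg1, kg2, threshold=0.9):
    """Map each kg1 entity to its most similar kg2 entity if similarity >= threshold."""
    matches = {}
    for name1, vec1 in kg1.items():
        best_name, best_sim = max(
            ((name2, cosine(vec1, vec2)) for name2, vec2 in kg2.items()),
            key=lambda pair: pair[1],
        )
        if best_sim >= threshold:
            matches[name1] = best_name
    return matches


# Hypothetical embeddings in an assumed shared space.
kg1 = {"Barack Obama": [0.9, 0.1, 0.0], "Paris": [0.0, 0.2, 0.9]}
kg2 = {"Obama president": [0.88, 0.12, 0.01], "Berlin": [0.1, 0.9, 0.1]}

alignment = align(kg1, kg2)
print(alignment)
```

Entities without a sufficiently similar counterpart (here “Paris”) are left unaligned rather than forced onto a poor match.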

2.3.3. KG Canonicalization

KG canonicalization is an important task in knowledge storage, sharing, retrieval, and application. Its goal is to map multiple entities in KGs to a standardized embedding representation, eliminating redundancy and ambiguity and thereby improving the quality and consistency of KGEs. For example, different embedding representations such as “New York City”, “NYC”, and “Big Apple” are mapped to a unified embedding representation. KG canonicalization typically involves two main steps. The first step involves learning accurate embedding representations. In the second step, entities are clustered based on the similarity of their learned embeddings, and a unified representation is assigned to each cluster.
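The second step (clustering by embedding similarity and assigning a unified representation) can be sketched as follows. This is a minimal example that assumes the embeddings from the first step are already available and uses a greedy threshold clustering, which is only one of many possible clustering choices; the vectors, the threshold, and the helper `canonicalize` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def canonicalize(mentions, threshold=0.95):
    """Greedy clustering: a mention joins the first cluster whose first member
    is similar enough; the first member serves as the canonical name."""
    clusters = []
    for name, vec in mentions.items():
        for cluster in clusters:
            if cosine(vec, mentions[cluster[0]]) >= threshold:
                cluster.append(name)
                break
        else:
            clusters.append([name])  # no similar cluster found: start a new one
    return {name: cluster[0] for cluster in clusters for name in cluster}


# Hypothetical learned embeddings for four surface forms.
mentions = {
    "New York City": [0.90, 0.10],
    "NYC": [0.88, 0.12],
    "Big Apple": [0.91, 0.09],
    "Los Angeles": [0.10, 0.90],
}

canonical = canonicalize(mentions)
print(canonical)
```

The result depends on the order in which mentions are visited, so a production system would more likely use a standard clustering algorithm; the greedy version is kept here only because it is short.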

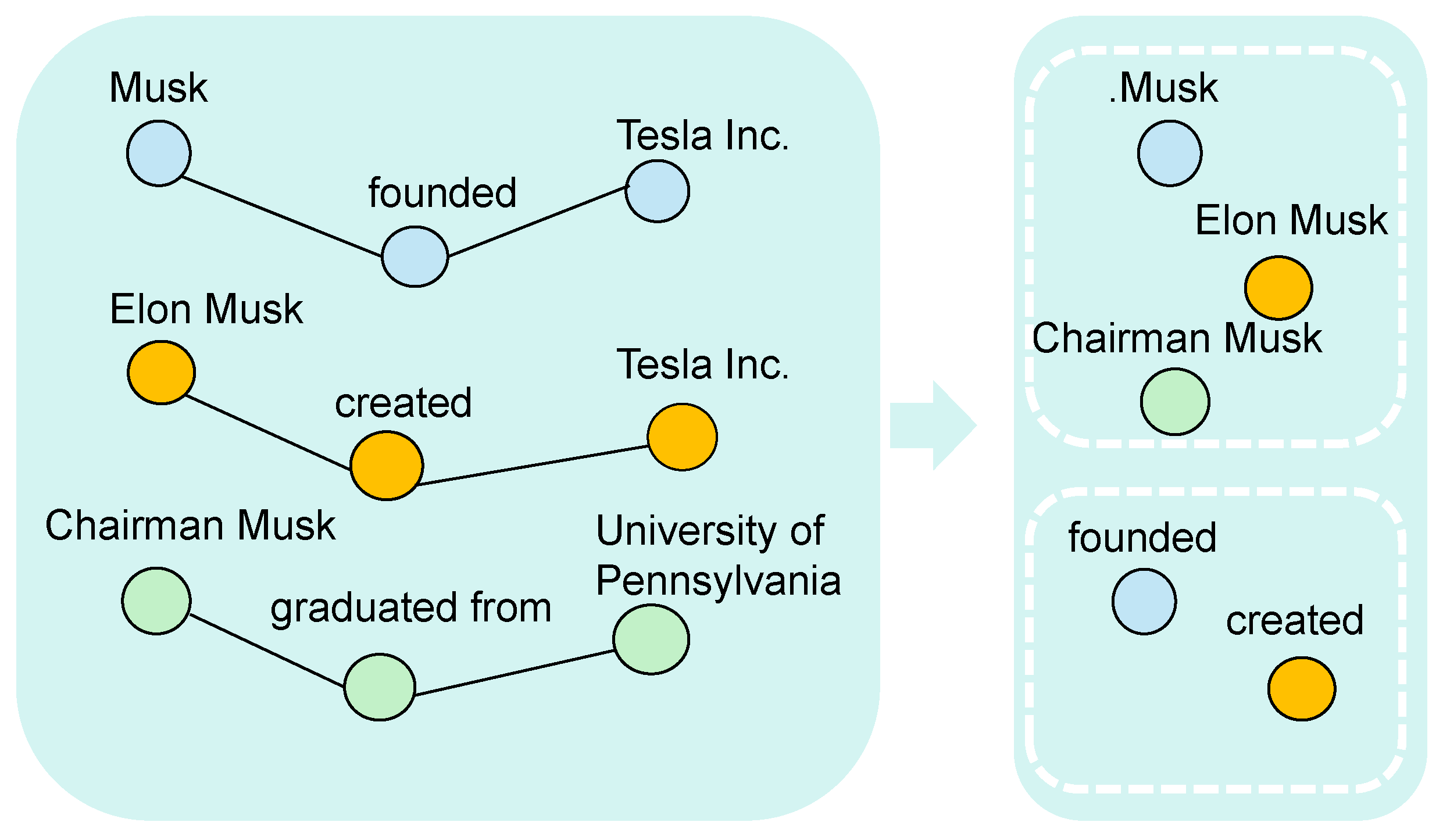

Figure 1 provides a concrete example: “Musk”, “Elon Musk”, and “Chairman Musk” all represent the same entity, while “founded” and “created” convey the same relation. In this task, phrases with the same meaning are clustered together and assigned a unified identifier to prevent redundancy and ambiguity.

Figure 1.

Process of KG canonicalization.

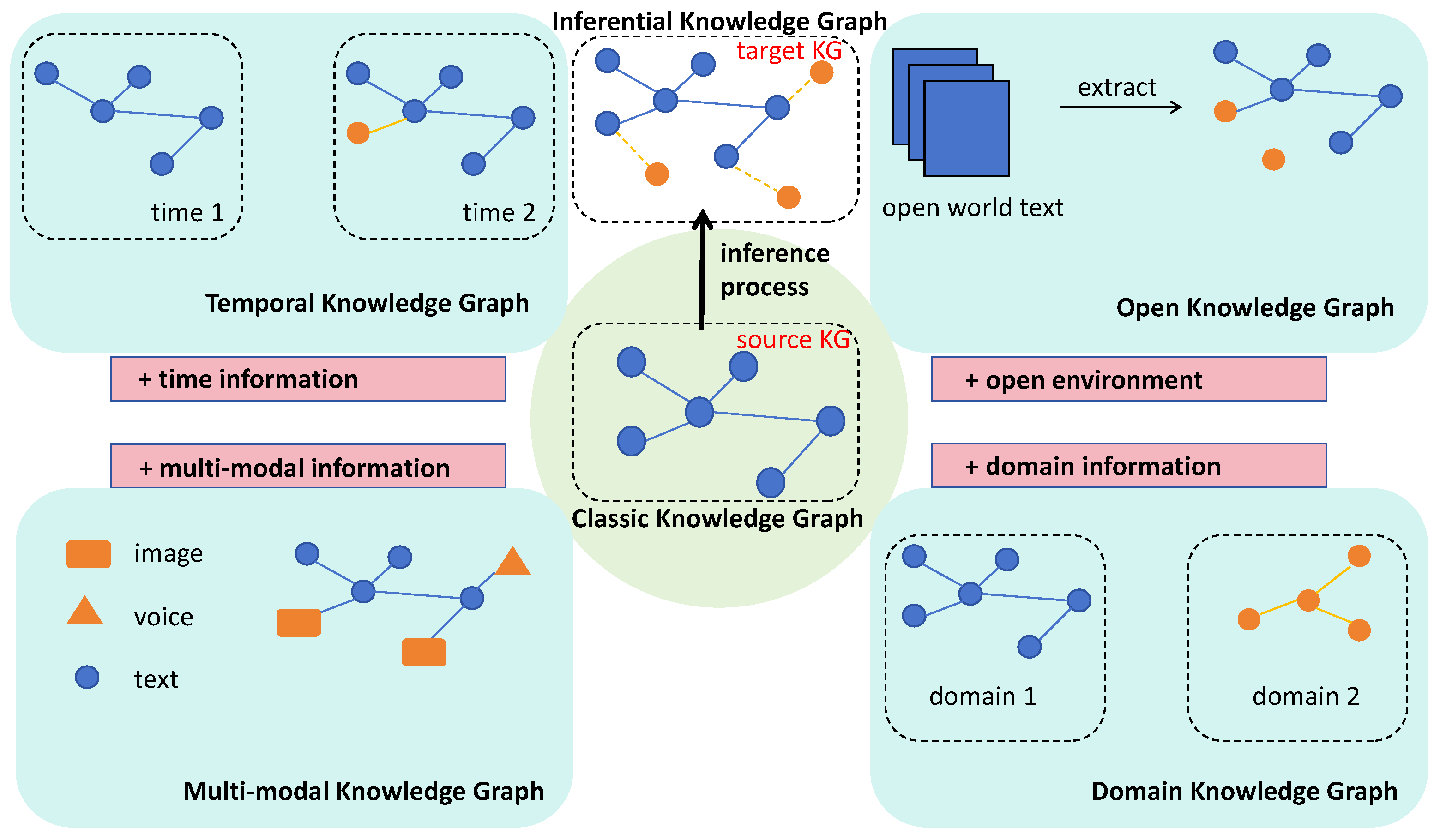

3. Techniques of KGE with LLMs

We depict the differences between the above categories of KGs and traditional KGs in Figure 2. The characteristics of KGE methods vary across KG scenarios, reflecting the structural and semantic requirements of each. Traditional KGE methods are limited in handling complex relations, long-tail entities, and dynamic knowledge updates, and they struggle to capture semantic information and contextual associations. LLMs can compensate for these shortcomings in semantic expression and contextual reasoning through their semantic understanding and generation capabilities, thereby enhancing the accuracy of knowledge representation and reasoning. With the introduction of LLMs, novel approaches that combine pre-trained language models have emerged in the KGE field, showing advantages in knowledge completion, relation prediction, and cross-domain applications. We classify the use of LLMs according to the KGE application scenario and elaborate on each scenario in the following subsections. In Table 2, we compare the six types of KGs discussed in our study, examining their key characteristics, application scenarios, strengths, and limitations.

Figure 2.

KGs in different scenarios.

Table 2.

Comparison of different types of knowledge graphs.

3.1. Classic Knowledge Graph (CKG)

A CKG is a structured, graph-based semantic knowledge base that stores graph data as triplets. CKGs have been widely used in many task categories across various fields. As research deepens and new application requirements emerge, several KG scenarios distinct from CKGs have been proposed; these are introduced in detail in the following sections. To distinguish it from knowledge graphs in those scenarios, we refer to this type as the classic knowledge graph (CKG) throughout the remainder of this paper.

Based on the extent to which large language models (LLMs) are utilized, KGE methods within the CKG scenario can be broadly categorized into four types as follows: (1) methods that use LLMs’ prompts; (2) methods for fine-tuning LLMs; (3) methods of pre-trained LLMs; and (4) methods to use LLMs as agents. This classification reflects the varying degrees of LLM integration in these approaches. A comparative summary of these four categories—highlighting their workflows, strengths, and limitations—is presented in Table 3.

Table 3.

Comparison of the 4 categories of KGE methods in the CKG scenario.

3.1.1. Methods That Use LLMs’ Prompts

In these methods, the structural information and auxiliary details of triplets are first extracted and then transformed into formatted text descriptions that serve as prompt inputs for LLMs. By leveraging the text-generation and knowledge-reasoning capabilities of LLMs, KGE-based tasks can then be completed. For instance, KICGPT [36] adopts a “knowledge prompt” in-context learning strategy, encoding structured knowledge into representations to guide LLMs. FireRobBrain [37] integrates a prompt module to assist LLMs in generating suggestions for bot responses; combining LLMs with CKG embeddings through a well-designed prompt module significantly enhances answer quality. PEG [38] utilizes prompt-based ensemble KGE, augmenting existing methods through knowledge acquisition, aggregation, and injection, refining evidence via semantic filtering, and aggregating it into a global KGE through implicit or explicit approaches. KC-GenRe [39] performs knowledge-enhanced constrained inference for CKG completion, supporting contextual prompts and controlled generation to achieve effective rankings. CP-KGC [40] adapts prompts to different datasets to enhance semantic richness, using a contextual constraint strategy to effectively identify polysemous entities. KGCD [41] employs an LLM-driven distillation framework with mixed prompts, which improves the completion of low-resource knowledge graphs.

This type of method can effectively utilize the powerful text generation and inference capabilities of LLMs, integrating structured triplet knowledge embeddings from CKGs in a more natural way, thereby providing assistance for complex tasks such as knowledge completion, and potentially uncovering hidden semantic relations between triplets. However, there may be information loss or semantic bias during the process of converting triplets into textual descriptions. Because the triplet structure of CKGs is precise, the accuracy of the original structural information may decrease after conversion to text due to factors such as linguistic ambiguity.
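The triplet-to-text conversion described in this subsection can be sketched as below. This is a hedged illustration, not the prompt format of any cited method: the template wording, the helpers `verbalize` and `build_prompt`, and the context fact are all hypothetical, and the call to an actual LLM is deliberately left out.

```python
def verbalize(triple):
    """Turn a (head, relation, tail) triple into a short sentence."""
    head, relation, tail = triple
    return f"{head} {relation.replace('_', ' ')} {tail}."


def build_prompt(context_triples, head, relation, candidates):
    """Assemble a completion prompt from known facts and a candidate list."""
    facts = "\n".join(f"- {verbalize(t)}" for t in context_triples)
    options = ", ".join(candidates)
    return (
        "Known facts:\n"
        f"{facts}\n"
        f"Question: which entity completes ({head}, {relation}, ?)\n"
        f"Candidates: {options}\n"
        "Answer with one candidate."
    )


prompt = build_prompt(
    context_triples=[("Tesla Inc.", "is_a", "company")],  # hypothetical context fact
    head="Elon Musk",
    relation="founded",
    candidates=["Tesla Inc.", "New York City"],
)
print(prompt)
```

The sketch also makes the limitation noted above concrete: once a relation such as `is_a` is rendered as free text, the exact relation identifier is no longer explicit, which is one way structural precision can be lost.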

3.1.2. Methods for Fine-Tuning LLMs

First, CKG triplet data are collected and transformed into a format compatible with model training. The preprocessed triplet text is then fed into LLMs, suitable loss functions are defined, and model parameters are fine-tuned according to specific task objectives so that the model performs the task accurately and efficiently. To improve performance while reducing the amount of instruction data, DIFT [42] employs a truncated sampling method to select useful facts for fine-tuning and injects KG embeddings into the LLM. RecIn-Dial [43] fine-tunes large-scale pre-trained LLMs to generate fluent and diverse responses, introducing knowledge-aware biases learned from entity-oriented CKGs to enhance recommendation performance. KG-LLM [44] converts structured CKG data into natural language and uses the resulting prompts to fine-tune LLMs for improved embedding-based multi-hop link prediction. RPLLM [45] leverages CKG node names to fine-tune LLMs for relation prediction, enabling the model to operate effectively in low-resource settings using only node names. KG-Adapter [46] is a parameter-efficient CKG integration method based on prompt-tuning; it introduces a novel adapter structure for decoder LLMs that encodes CKGs from node-centric and relation-centric perspectives for joint inference with LLMs. LPKG [47] augments LLMs with CKG-derived data, fine-tuning them to adapt to complex KG scenarios. Bianchini et al. [48] propose a Retrieval Augmented Generation (RAG) pipeline for complex legal linguistic tasks that integrates two KGs and leverages the iterative fine-tuning of LLMs. This approach addresses the fallacies that arise from sub-symbolic processing in conventional LLMs by fine-tuning them with integrated knowledge.

Through targeted fine-tuning, the model learns the semantic relations and knowledge embedded in CKG triplet structures, improving its performance in CKG tasks. This approach effectively utilizes CKG data to enhance the model’s expertise in knowledge graph domains. It is important to note that fine-tuning requires substantial high-quality annotated data. Collecting and organizing CKG triplet data into suitable formats is time-consuming and labor-intensive. Inadequate data quality or quantity may lead to model overfitting, resulting in poor generalization on unseen real-world data.
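The data preparation step (turning triplets into a training-ready format) might look like the sketch below. It assumes an instruction-style record with `instruction`, `input`, and `output` fields serialized as JSON Lines; this field layout is a common convention chosen here for illustration, not the format required by any of the cited methods, and the helper names are hypothetical.

```python
import json


def to_instruction_record(triple):
    """Convert one (head, relation, tail) triple into a tail-prediction example."""
    head, relation, tail = triple
    return {
        "instruction": "Predict the tail entity of the knowledge graph triple.",
        "input": f"head: {head}; relation: {relation}",
        "output": tail,
    }


def to_jsonl(triples):
    """Serialize triples as JSON Lines, one training example per line."""
    return "\n".join(
        json.dumps(to_instruction_record(t), ensure_ascii=False) for t in triples
    )


jsonl = to_jsonl([("Elon Musk", "founded", "Tesla Inc.")])
print(jsonl)
```

The fine-tuning itself (loss definition and parameter updates) is framework-specific and is intentionally omitted from this sketch.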

3.1.3. Methods of Pre-Trained LLMs

CKG triplets and relevant background information are first transformed into text, which is then used for pre-training. For specially designed pre-training tasks, such as masked language modeling (MLM) and next sentence prediction (NSP), model parameters are updated by calculating the loss between predicted results and true labels, enabling the model to learn language patterns and semantic relation embeddings within the CKG. In MLM, for example, the model predicts masked entity or relation tokens in sentences and thereby learns semantic associations between KG entities (e.g., drug-indication relationships). The NSP task helps the model understand the logical order between triples. LiNgGuGKD [49] adopts label-oriented instruction tuning for pre-trained LLM teachers, introducing a hierarchical adaptive contrastive distillation strategy to align node features between teacher and student networks in the latent space and thus transfer the understanding of semantics and complex relations. GLAME [50] utilizes public pre-trained LLMs with a KG augmentation module to discover knowledge relevant to an edit and obtain its internal embedding in LLMs. Salnikov et al. [51] explore text-to-text pre-trained LLMs, enriching them with CKG information to answer factual questions. PDKGC [52] employs pre-trained language models (PLMs) for KG completion by freezing the PLM and training two prompts (a hard task prompt and a disentangled structure prompt) so that the PLM integrates text and graph structure knowledge.

During pre-training, LLMs capture semantic relations and language patterns in CKG, enhancing their understanding and embedding representation of CKG while enriching knowledge reserves. However, converting CKG to text may introduce noise or information loss, affecting pre-training effectiveness and making acquired knowledge less accurate.

3.1.4. Methods to Use LLMs as Agents

The use of LLMs as agents enables the interactive exploration of relevant entities and relations on CKGs, along with inference based on retrieved knowledge. ToG [53] employs an LLM agent to iteratively perform beam search on the KG, identifying the most promising inference paths and returning the most likely inference results. ODA [10] integrates LLMs with KGs as an observation-driven agent, leveraging a cyclical paradigm of observation, action, and reflection to enhance reasoning. However, this approach remains underutilized and warrants further exploration in future research.

When LLMs play the role of an agent, they can take advantage of the large amount of knowledge and reasoning patterns obtained in the pre-training process, and combine these with the structured information in CKG to perform more complex knowledge reasoning.
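The idea of an agent iteratively exploring a KG with beam search can be sketched as follows. This is not the implementation of ToG or ODA: the scoring function `toy_score` is a hand-written stand-in for an LLM's relevance judgment, and the graph edges other than “founded” are hypothetical placeholders.

```python
def beam_search(graph, start, score_fn, width=2, depth=2):
    """Expand paths hop by hop, keeping the `width` best-scored paths each step."""
    beams = [[start]]
    for _ in range(depth):
        expanded = []
        for path in beams:
            for relation, neighbor in graph.get(path[-1], []):
                expanded.append(path + [relation, neighbor])
        if not expanded:
            break  # no path can be extended further
        beams = sorted(expanded, key=score_fn, reverse=True)[:width]
    return beams


# Tiny adjacency-list KG: entity -> list of (relation, neighbor).
graph = {
    "Elon Musk": [("founded", "Tesla Inc."), ("born_in", "place_x")],
    "Tesla Inc.": [("produces", "product_y")],
}


def toy_score(path):
    """Stand-in for an LLM relevance judgment: counts preferred relations on the path."""
    preferred = {"founded", "produces"}
    return sum(1 for step in path if step in preferred)


paths = beam_search(graph, "Elon Musk", toy_score, width=1, depth=2)
print(paths)
```

In an agent setting, `score_fn` would be replaced by a call that asks the LLM to rate candidate paths with respect to the question, and the surviving paths would be passed back to the LLM to produce the final answer.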

3.2. Temporal Knowledge Graph (TKG)

A TKG is a dynamic KG that contains facts that change over time. At present, LLM-based KGE methods for TKGs are mainly prompt-based, with some use of fine-tuning and other methods. In TKGs, the processing of temporal information is crucial for prompt construction. For example, to query the efficacy changes of a drug within a specific time period, a prompt like this can be constructed: “Considering the time interval from [start time] to [end time], analyze the efficacy data of [drug name] in each quarter, including changes in indicators such as cure rate and remission rate. Please provide a dynamic evolution analysis of the drug’s efficacy based on the relevant entities and relationships in the knowledge graph within this time range.” By clarifying the time interval and the temporal granularity, the model is guided to focus on knowledge from a specific period, effectively using the temporal information in the TKG for reasoning and analysis.
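A time-scoped prompt of this kind can be assembled programmatically, as in the sketch below. It assumes that temporal facts are stored as (head, relation, tail, date) quadruples; the entity names, dates, and the helpers `facts_in_interval` and `build_temporal_prompt` are hypothetical placeholders rather than real data or an established API.

```python
from datetime import date


def facts_in_interval(quadruples, start, end):
    """Keep only facts whose timestamp lies within [start, end]."""
    return [q for q in quadruples if start <= q[3] <= end]


def build_temporal_prompt(quadruples, start, end, question):
    """List the in-range facts in chronological order, then append the question."""
    selected = sorted(facts_in_interval(quadruples, start, end), key=lambda q: q[3])
    lines = "\n".join(f"- [{ts.isoformat()}] {h} {r} {t}" for h, r, t, ts in selected)
    return (
        f"Time interval: {start.isoformat()} to {end.isoformat()}\n"
        f"Facts:\n{lines}\n"
        f"Question: {question}"
    )


# Placeholder quadruples; values are not real measurements.
quads = [
    ("drug_x", "has_cure_rate", "rate_q2", date(2023, 5, 1)),
    ("drug_x", "has_cure_rate", "rate_q1", date(2023, 2, 1)),
    ("drug_x", "has_cure_rate", "rate_old", date(2021, 5, 1)),
]

prompt = build_temporal_prompt(
    quads,
    start=date(2023, 1, 1),
    end=date(2023, 6, 30),
    question="How did the cure rate of drug_x change per quarter?",
)
print(prompt)
```

Filtering before prompting keeps out-of-range facts away from the model, and chronological ordering makes the temporal sequence explicit in the text.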

In prompt-based LLM methods, the data in the TKG, especially the temporal information, are generally processed first so that LLMs can understand them. The prompts are then constructed according to the temporal characteristics and other useful features of the task. For TKG question answering, GenTKGQA [54] guides LLMs to answer temporal questions through two phases: subgraph retrieval and answer generation. It designs a virtual knowledge index that helps open-source LLMs deeply understand the temporal order and structural dependencies among the retrieved facts through instruction tuning. For temporal relation prediction, zrLLM [55] inputs textual descriptions of KG relations into LLMs to generate relation embeddings and then introduces them into embedding-based methods. These LLM-empowered representations capture the semantic information in the relation descriptions, so relations with similar semantics lie close together in the embedding space, enabling the model to recognize zero-shot relations even without any observed context. CoH [56] enhances the prediction ability of LLMs by providing higher-order history chains step by step and fusing the LLM output with graph neural network (GNN) results to obtain more accurate predictions. Unlike the methods above, GenTKG [57] also uses fine-tuning: it applies a few-shot, parameter-efficient instruction tuning strategy to align LLMs with the task and uses a retrieval-augmented generation framework to address the challenge through a two-stage approach.

Prompt-based methods do not require large amounts of training data or a complex fine-tuning process in TKG tasks. They can directly use the abilities of pre-trained LLMs and, through well-designed prompts, guide the model to generate results that meet the requirements, which reduces the cost and time of the task. However, prompt design and selection are demanding: if the prompts are not accurate, clear, or comprehensive, the model may generate inaccurate or irrelevant results. Different prompts also need to be designed for different tasks, which limits generality. In contrast, fine-tuning-based methods optimize the pre-trained model for specific TKG tasks and datasets, so that the model adapts better to specific fields and tasks and achieves higher performance and accuracy; this direction is worthy of further exploration. Pre-trained language models (PLMs) have also been used for temporal knowledge graph reasoning (TKGR). TPNet [58] introduces a PLM to jointly encode temporal paths with a time-aware encoder, addressing the inability of traditional TKGR methods to handle continuously emerging unseen entities and their neglect of historical dependencies. DSEP [59] employs a PLM to learn the evolutionary characteristics of historically related facts, addressing the neglect of the inherent semantic information in historical facts by existing TKGR methods.

3.3. Multi-Modal Knowledge Graph (MMKG)

A multi-modal knowledge graph is a kind of KG that combines multiple data modalities (e.g., text, image, video, etc.) to construct and represent facts. MMKG enhances the diversity and accuracy of knowledge representation by enriching modal data. For MMKG, integrating data from different modalities is key. Take a medical knowledge graph containing text and images as an example. To identify potential risk factors for a specific disease, a prompt can be designed as follows: “The text describes patient information including symptoms and medical history, with key details such as long-term smoking history and family genetic history listed. Meanwhile, the provided medical images show critical features like the shape, size, and location of lung shadows. Please combine the textual and image information, infer the patient’s potential disease risk factors based on entity relationships in the knowledge graph, and provide relevant justifications”. In this way, by separately presenting the core content of multi-modal data and emphasizing reasoning by integrating both, the model can fully leverage diverse data in MMKG to enhance the accuracy and comprehensiveness of task execution.

Currently, prompt-based LLM methods are primarily applied in the domain of MMKGs. Features from different modalities are extracted and encoded into prompts, which are then fed into LLMs to perform multi-modal fusion and reasoning, enabling the completion of downstream tasks. In the KG completion task, MPIKGC [60] prompts the LLMs to generate auxiliary text to improve the performance of the model. MML [61] first constructs MMKGs to extract regional embedding representations that capture spatial heterogeneity features, and it then employs LLMs to generate diverse multi-modal features pertaining to the ecological civilization model. KPD [62] designs a prompt template with three contextual examples to understand all aspects of a web page for phishing detection. To construct a unified KG, Docs2KG [63] extends nodes with multi-hop queries to retrieve relevant information, thereby enriching the prompts used to respond to queries.

The prompt-based LLM method can enhance the understanding and fusion of multi-modal information, handle complex multi-modal tasks better, and improve application effect and value. However, the results may be inaccurate and unreliable due to the limitations of LLMs themselves, such as hallucinations and insufficient attention to modal information such as images. Approaches based on pre-trained LLMs or LLM agents can be explored as a next step.

3.4. Inductive Knowledge Graph (IKG)

An inductive knowledge graph is a type of KG that focuses on transferring knowledge patterns from a source graph to a target graph, enabling the prediction of new entities and relations in unseen scenarios. An IKG includes a source KG and a target KG with specific conditions on relations and entities. The goal of embedding representation learning for inductive KGs is to capture structural patterns from the source KG and transfer them to the target KG. At present, LLMs are almost always applied to IKG-related tasks in the form of prompts. Prompts are designed according to the structure and task of the IKG, and the relevant text and prompts are input into the LLMs, which then infer over and analyze the KG and generate the results. Xing et al. [64] construct a fusion inductive framework that, combined with predefined prompts, effectively alleviates the hallucination problem of LLMs and provides powerful and intelligent decision support for practical applications. LLAMA3 [65] uses the current generation of LLMs for link prediction, realizing new-entity link prediction in the IKG through prompt generation, answer processing, entity candidate search, and final link prediction. Different from the previous two attempts at the inductive link prediction task, LLM-KERec [66] innovates in the IKG of an industrial recommendation system and designs prompts covering multiple aspects. By introducing an entity extractor, it extracts unified conceptual terms from item and user information. To provide cost-effective and reliable prior knowledge, entity pairs are generated based on the popularity of the entity and specific strategies. ProLINK [67] employs prompt-based LLMs to generate graph-structural prompts for enhancing pre-trained GNNs, addressing low-resource scenarios in KG inductive reasoning where both textual and structural information are scarce.

The prompt-based approach can enhance the inductive ability of IKGs, using the language understanding and generation capabilities of LLMs to better handle complex textual information and reasoning tasks. At the same time, hallucinations may occur, resulting in inaccurate or unreliable reasoning results and affecting the quality and application effect of IKGs. More attempts with fine-tuning-based or pre-training-based approaches are recommended in the future.

3.5. Open Knowledge Graph (OKG)

An OKG is a kind of KG derived from open datasets, user contributions, or internet information, emphasizing the openness and scalability of knowledge. OKGs lower the threshold of knowledge access through openness and sharing, while facing problems of data quality and reliability. With the advent of the big data era, massive data sources continue to be generated. OKGs have gained attention in more and more scenarios, such as responding to cyber threat attacks [68], mental health observation [69], and understanding geographic relations [70]. Based on the degree to which these methods use LLMs, we can roughly divide them into two categories: (1) methods that use LLMs’ prompts; (2) methods for fine-tuning LLMs.

3.5.1. Methods That Use LLMs’ Prompts

Specific prompts are designed to transform data in OKGs into inputs meeting the requirements. This enables LLMs to generate textual embedding representations, which are then encoded or converted into vector-form embeddings to support subsequent tasks like knowledge reasoning. Arsenyan et al. [71] propose guided prompt design for LLM utilization, constructing OKGs from electronic medical record notes. The KG entities include diseases, influencing factors, treatments, and manifestations co-occurring with patients during illness. Xu et al. [72] leverage LLMs and medical expert refinement to build a heart failure KG, applying prompt engineering across three phases: schema design, information extraction, and knowledge completion. Task-specific prompt templates and two-way chat methods achieve optimal performance. The approach by AlMahri et al. [73] automates supply chain information extraction from diverse public sources, constructing KGs to capture complex interdependencies between supply chain entities. Zero-shot prompts are used for named entity recognition and relation extraction, eliminating the need for extensive domain-specific training. Datta et al. [74] introduced a zero-shot prompt-based method using OpenAI’s GPT-3.5 model to extract hyperrelational knowledge from text, where each prompt contains a natural language query designed to elicit specific responses from pre-trained language models. Paper2lkg [75] employs prompt-based generative LLMs to automate NLP tasks in local KG construction, addressing the challenge of transforming academic papers into structured KG representations for enriching existing academic KGs.
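The zero-shot extraction pattern described above can be illustrated with a minimal sketch. It is not the implementation of any cited method: the prompt wording, the `head | relation | tail` output convention, and the function names `build_prompt` and `parse_triples` are all assumptions made for illustration, and the LLM call itself is deliberately left out.

```python
from typing import List, Tuple

# Hypothetical zero-shot prompt template; the wording is an assumption,
# not taken from any of the cited papers.
PROMPT_TEMPLATE = (
    "Extract all (head, relation, tail) triples from the text below.\n"
    "Return one triple per line in the form: head | relation | tail\n"
    "Text: {text}\n"
)


def build_prompt(text: str) -> str:
    """Fill the template with the source text to be mined."""
    return PROMPT_TEMPLATE.format(text=text.strip())


def parse_triples(response: str) -> List[Tuple[str, str, str]]:
    """Keep only response lines that split into three non-empty fields."""
    triples = []
    for line in response.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) == 3 and all(parts):
            triples.append((parts[0], parts[1], parts[2]))
    return triples


if __name__ == "__main__":
    prompt = build_prompt("Supplier A supplies Manufacturer B in Germany.")
    # A made-up model response, used only to exercise the parser.
    fake_response = (
        "Supplier A | supplies | Manufacturer B\n"
        "Manufacturer B | located in | Germany"
    )
    print(prompt)
    print(parse_triples(fake_response))
```

Discarding malformed lines in the parser is one simple guard against free-form or irrelevant model output; a real pipeline would likely add entity normalization and validation against a schema.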

Prompts can guide LLMs to focus on specific knowledge content and reduce redundant expressions. By accurately designing prompts, LLMs can directly cut into key information and avoid the output of irrelevant knowledge, so as to effectively filter out redundant information that may exist in OKG. However, due to the ambiguity of the expression of OKG itself, prompts may exacerbate the misunderstanding. If the prompts do not accurately fit the ambiguous knowledge statement, LLMs may generate results based on incorrect understanding, amplifying the adverse effects of the ambiguous parts of OKG.

3.5.2. Methods for Fine-Tuning LLMs

The knowledge in OKG is extracted and converted into a format suitable for LLM input, such as text sequences, followed by fine-tuning for training. This fine-tuning process enables LLMs to learn the embedding representations of entities, relations, and other knowledge in OKG, which are then applied to downstream tasks like knowledge completion and question answering. UrbanKGent [70] first designs specific instructions to help LLMs understand complex semantic relations in urban data, and it then invokes geocomputing tools and iterative optimization algorithms to enhance the LLMs’ comprehension and reasoning about geospatial relations. Finally, the LLMs are further trained via fine-tuning to better accomplish various OKG tasks. LLM-TIKG [76] leverages ChatGPT’s few-shot learning capabilities for data annotation and augmentation, creating a dataset to fine-tune a smaller language model. The fine-tuned model is used to classify collected reports by subject and extract entities and relations.
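As a rough illustration of converting OKG knowledge into text sequences for fine-tuning, the sketch below verbalizes triples as instruction-style records in JSON Lines form. The record fields (`instruction`, `output`) and the question wording are assumptions chosen for the example; the cited systems may use different formats.

```python
import json
from typing import Iterable, Tuple

Triple = Tuple[str, str, str]


def triple_to_record(head: str, relation: str, tail: str) -> dict:
    """Turn one triple into a tail-prediction training example (assumed format)."""
    return {
        "instruction": f"Complete the knowledge graph fact: ({head}, {relation}, ?)",
        "output": tail,
    }


def to_jsonl(triples: Iterable[Triple]) -> str:
    """Serialize all triples as one JSON object per line."""
    return "\n".join(
        json.dumps(triple_to_record(*t), ensure_ascii=False) for t in triples
    )


if __name__ == "__main__":
    # Toy triples, invented for the example.
    toy_triples = [
        ("Central Park", "located in", "New York"),
        ("New York", "part of", "United States"),
    ]
    print(to_jsonl(toy_triples))
```

Each line can then be fed to whichever fine-tuning toolkit is in use; masking the tail entity is only one of several possible training objectives.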

Through fine-tuning, LLMs can learn the semantic and structural information in OKGs and thus express knowledge more accurately when generating text, reducing the redundancy caused by inaccurate understanding of knowledge. However, if the data used for fine-tuning contain bias or noise, the LLMs may overfit to this inaccurate information and consequently produce more ambiguity or misunderstanding when processing the OKG.

3.6. Domain Knowledge Graph (DKG)

A DKG is a KG built for a specific domain; it records domain-specific knowledge and solves domain problems in a more targeted manner. At present, DKGs have been studied for application in domains such as medicine [77,78,79] and electric power [80], and they are broadly significant for the development of specific fields. Based on the degree to which these methods use LLMs, we can roughly divide them into three categories: (1) methods that use LLMs’ prompts; (2) methods for fine-tuning LLMs; and (3) methods for pre-training LLMs.

3.6.1. Methods That Use LLMs’ Prompts

Prompts play a crucial role in guiding LLMs to focus on domain-specific knowledge, often requiring the design of tailored prompt templates based on the characteristics of specific domains. This approach represents the most commonly used method in DKG research. In the medical domain’s DKG, KG-Rank [77] is employed to enhance medical question answering. As the first LLM framework for medical question answering, it integrates knowledge graph embedding (KGE) with ranking technology to generate and refine answers, applying medical prompts to identify relevant medical entities for given questions. Yang et al. [81] developed Scorpius, a conditional text generation model that generates malicious paper abstracts for promoted drugs and target diseases using prompts. By poisoning knowledge graphs, it elevates false relevance between entities, demonstrating the risk of LLMs manipulating scientific knowledge discovery. In the power engineering domain, Wang et al. [80] utilize LLMs to generate a domain-specific question–answer dataset. Their approach not only incorporates KG triplet relations into question prompts to improve answer quality but also uses triplet-extended question sets as knowledge augmentation data for LLM fine-tuning. However, these methods are limited to single-domain scenarios. In contrast to single-domain DKG applications, KGPA [82] addresses cross-domain KGE scenarios by using KGs to generate original and adversarial prompts, evaluating LLM robustness through these prompts. Neha Mohan Kumar et al. [83] proposed a hybrid malware classification system that employs prompt chaining with a cybersecurity knowledge graph. This system refines initial classifications from fine-tuned LLMs, addressing the challenge of improving detection accuracy and interpretability in malware classification.

Prompts can guide LLMs to focus on the knowledge of a specific field, for example, accurately obtaining medical content when building a medical KG. By designing different prompts, the approach can also adapt to a variety of KGE tasks. However, the domain adaptability of such methods is often limited: in some highly specialized fields, the LLMs may not understand the prompts well or may fail to provide valid answers because their training data are insufficient.

3.6.2. Methods for Fine-Tuning LLMs

In scenarios where domain-specific data are continuously generated, fine-tuned models can better adapt to knowledge evolution, facilitate dynamic updates of DKGs, and maintain knowledge integrity and timeliness. For the KG alignment task, GLaM [84] introduces an LLM fine-tuning framework that converts KGs into alternative text representations of labeled question–answer pairs, addressing LLMs’ limited reasoning ability over domain-specific KGs and minimizing hallucination. Leveraging LLM generative capabilities to create datasets, it proposes an effective retrieval-enhanced generation approach. Li et al. [85] develop a fine-tuning model for KG fault diagnosis and system maintenance, with key contributions including KG construction and fine-tuning dataset development for LLM deployment in industrial fields. Additionally, in auxiliary fault diagnosis, Liu et al. [86] embed aviation assembly KGs into LLMs. The model uses graph-structured big data in DKGs to prefix LLMs, enabling online reconfiguration via DKG-based prefix tuning and avoiding substantial computational overhead.

Through fine-tuning, the large model can better adapt to KG tasks in a specific domain and can use annotated domain data to further learn and optimize, improving accuracy, recall, and other performance indicators on tasks in that field. However, there is a risk of overfitting: if the domain data used in fine-tuning are insufficient or the model is over-trained, it may overfit the limited data, resulting in poor performance on unseen domain data or in real-world application scenarios, reduced generalization ability, and an inability to process emerging situations or data effectively.

3.6.3. Methods for Pre-Training LLMs

The integration of DKG’s structured knowledge in the pre-training process enables LLMs to directly learn KG-contained knowledge, enhancing their understanding and mastery of domain-specific knowledge while providing a robust knowledge base for DKG tasks. In the automotive electrical systems domain, Wawrzik et al. [87] utilize a Wikipedia-derived electronic dataset for pre-training, optimizing it with multiple prompting methods and features to enable timely access to structured information. In food testing, FoodGPT [88] introduces an incremental pre-training step to inject knowledge embeddings into LLMs, proposing a method to handle structured knowledge and scanned documents. To address machine hallucination, a DKG is constructed as an external knowledge base to support LLM retrieval. FuseLinker [89] employs pre-trained LLMs to derive text embeddings for biomedical knowledge graphs, tackling the challenge of fully leveraging graph textual information to enhance link prediction performance.

The pre-trained LLM method can improve the efficiency and quality of knowledge extraction in DKG tasks. With strong language understanding and text generation capabilities, it can directly extract knowledge such as entities and relations from domain-specific texts, achieving specialized extraction in specific domains. However, hallucinations and errors may occur, generating knowledge that does not match the facts or does not exist and thereby introducing false information into DKGs.

3.7. Summary of Method Evaluation

3.7.1. Effectiveness

The application of Large Language Models (LLMs) across various types of Knowledge Graphs (KGs) has shown varying degrees of effectiveness. In Classic Knowledge Graphs (CKGs), methods like KICGPT [36] and FireRobBrain [37] have demonstrated significant improvements in knowledge completion tasks by leveraging structured knowledge prompts. However, these methods are susceptible to information loss and semantic bias during the conversion of triplets into textual prompts. In Temporal Knowledge Graphs (TKGs), approaches such as GenTKGQA [54] and zrLLM [55] have effectively handled temporal reasoning tasks through well-designed prompts and embeddings, though their performance heavily relies on the quality and clarity of the prompts. For Multi-modal Knowledge Graphs (MMKGs), MPIKGC [60] and MML [61] have shown promise in integrating multi-modal information into LLMs, but their effectiveness is limited by the LLMs’ ability to accurately interpret multi-modal features. In Inductive Knowledge Graphs (IKGs), methods like the fusion inductive framework [64] and LLAMA3 [65] have effectively guided LLMs in inductive reasoning tasks, but their success is contingent on high-quality, domain-specific training data. Open Knowledge Graphs (OKGs) have benefited from methods like UrbanKGent [70] and LLM-TIKG [76], which have enhanced reasoning abilities through fine-tuning, though the risk of generating hallucinations remains a concern. Lastly, Domain Knowledge Graphs (DKGs) have seen improvements in tasks such as medical question answering with KG-Rank [77] and biomarker discovery with KG-LLM [78], but their effectiveness is limited by the quality of domain-specific data and the risk of overfitting.

3.7.2. Scalability

The scalability of LLMs in different KG scenarios is influenced by several factors. In CKGs, fine-tuning methods like DIFT [42] and RecIn-Dial [43] are computationally intensive and require large amounts of high-quality annotated data, posing challenges for scalability. TKGs have seen more scalable approaches with prompt-based methods like GenTKGQA [54] and zrLLM [55], which do not require extensive fine-tuning but may struggle with complex temporal patterns. MMKGs face scalability challenges due to the complexity of multi-modal data and the need for high-quality, multi-modal training data. IKGs have benefited from prompt-based methods like the fusion inductive framework [64] and LLAMA3 [65], which are relatively scalable but require significant domain expertise for prompt design. OKGs have seen scalable solutions with fine-tuning methods like UrbanKGent [70] and LLM-TIKG [76], though the risk of overfitting remains a concern. DKGs have shown promise with methods like KG-Rank [77] and KG-LLM [78], but their scalability is limited by the need for domain-specific training data and the computational cost of fine-tuning large models.

3.7.3. Practical Impact

The practical impact of LLMs in KG tasks is evident across various domains. In CKGs, methods like KICGPT [36] and FireRobBrain [37] have enhanced knowledge completion and reasoning, though they may generate inaccurate results due to semantic bias. TKGs have benefited from methods like GenTKGQA [54] and zrLLM [55], which have improved temporal reasoning but may produce unreliable results if prompts are poorly designed. MMKGs have seen practical applications with MPIKGC [60] and MML [61], which have enhanced multi-modal knowledge representation but may struggle with certain modalities like images. IKGs have benefited from methods like the fusion inductive framework [64] and LLAMA3 [65], which have improved inductive reasoning but may generate hallucinations. OKGs have seen practical applications with UrbanKGent [70] and LLM-TIKG [76], which have enhanced reasoning abilities but may produce unreliable results due to data quality issues. DKGs have benefited from methods like KG-Rank [77] and KG-LLM [78], which have improved domain-specific knowledge management but may generate inaccurate results due to overfitting.

3.8. Analysis of LLMs in Tasks

3.8.1. Specific Improvements

The integration of LLMs into various types of Knowledge Graphs (KGs) has brought several specific improvements. In CKGs, LLMs have enhanced the accuracy of knowledge completion and reasoning tasks through their powerful text-generation capabilities. In TKGs, LLMs have improved temporal reasoning by capturing the semantic information in textual descriptions of temporal relations. MMKGs have benefited from LLMs’ ability to integrate multi-modal information, leading to more sophisticated knowledge representation. IKGs have seen improvements in inductive reasoning and knowledge transfer through LLMs’ ability to generalize from source to target graphs. OKGs have benefited from LLMs’ ability to handle open-domain knowledge and generate accurate and relevant information. DKGs have seen enhancements in domain-specific knowledge management through LLMs’ ability to generate human-like text and perform complex reasoning.

3.8.2. Defects

Despite these improvements, LLMs exhibit notable defects that pose challenges in different KG scenarios. A common issue is the “hallucination” phenomenon, where LLMs generate plausible but factually incorrect information. This is particularly problematic in critical applications such as medical knowledge graphs and cybersecurity, where accuracy is paramount. The black box nature of LLMs further complicates matters, as it makes their reasoning process difficult to interpret. This lack of transparency can undermine trust and accountability, especially in knowledge-intensive tasks that require high precision and reliability. Additionally, LLMs’ reliance on large amounts of high-quality training data and the risk of overfitting pose scalability and generalization challenges, especially in dynamic and evolving knowledge domains like OKGs and DKGs.

4. Prospective Directions

At present, in various KGE application scenarios, LLMs have already provided effective solutions to specific problems through different degrees of invocation. There are still many prospective research directions for the application of LLMs in various KGE scenarios in the future, which we will introduce in this section for researchers’ reference. We sincerely hope that the proposed potential directions can pique the research interests of beginners in this field. In the process of exploration, we have also found some examples in specific processes, which we will incorporate in the discussion of this section to facilitate comprehensibility for the readers.

4.1. Cross-Domain CKG Fusion

An investigation reveals that current applications of LLMs in the CKG domain primarily focus on single-domain KGs or generalized KGs, with limited exploration of cross-domain KG content fusion. For instance, studies have leveraged KGs and ranking techniques to enhance medical question answering in the medical domain [77] or empowered LLMs as political experts using KGs [38]. These works remain confined to single domains (e.g., medicine or politics) and overlook the synergistic potential with LLMs, leading to insufficient generalizability. Future research could utilize LLM comprehension and reasoning capabilities to align KG concepts across two domains by leveraging contextual and background knowledge—for example, identifying different nodes representing the same entity in two KGs.

Here we present a specific challenge for readers to contemplate: While LLMs are predominantly applied in single-domain KGs, cross-domain KG fusion confronts the challenge of concept alignment—such as entity ambiguity between medical and chemical domains. Cross-domain datasets like YAGO [31] and UMLS [90] can be leveraged to design a prompt-based bidirectional alignment mechanism. Specifically, LLMs can generate domain mapping rules (e.g., “Aspirin is an antipyretic drug in medicine and an organic compound in chemistry”), which are combined with the RotatE [91] model to optimize the cross-domain embedding space. The Hits@10 metric can be used to compare cross-domain link prediction accuracy, with alignment effects validated on the cross-domain subset of FB15k-237 [92].
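To make the role of RotatE in this proposal more concrete, the following sketch scores a triple by rotating the head embedding in the complex plane and measuring its distance to the tail. It is a simplified illustration: the toy embeddings are invented, the margin and training loop are omitted, and the distance is taken as the sum of element-wise complex moduli, which is one common implementation choice and an assumption here.

```python
import numpy as np


def rotate_score(head: np.ndarray, phase: np.ndarray, tail: np.ndarray) -> float:
    """RotatE-style plausibility score: higher (closer to 0) is more plausible.

    head, tail: complex-valued entity embeddings.
    phase: real-valued relation phases; the relation acts as exp(i * phase),
    a unit-modulus rotation applied element-wise to the head.
    """
    rotation = np.exp(1j * phase)
    return float(-np.sum(np.abs(head * rotation - tail)))


if __name__ == "__main__":
    # Toy 2-dimensional complex embeddings (hypothetical values).
    head = np.array([1 + 0j, 0 + 1j])
    phase = np.array([np.pi / 2, np.pi / 2])  # rotate each component by 90 degrees
    true_tail = np.array([0 + 1j, -1 + 0j])   # exactly the rotated head
    wrong_tail = np.array([1 + 0j, 1 + 0j])

    print(rotate_score(head, phase, true_tail))
    print(rotate_score(head, phase, wrong_tail))
```

In a cross-domain setting, LLM-generated mapping rules would only influence which triples or alignment constraints are fed into such a scoring function; how to combine the two is left open by the text above.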

4.2. Few-Shot Learning for TKG

Currently, applications of LLMs to TKGs mainly focus on link prediction or question answering tasks with sufficient samples, and very few articles apply LLMs to few-shot TKG scenarios. Existing work includes a novel few-shot solution using KG-driven incremental broad learning [93] and the use of ChatGPT’s few-shot learning capabilities for data annotation and augmentation [76]. However, this work has not addressed the processing of temporal information. The few existing methods use LLMs to generate rich relational descriptions for augmentation, but they neglect the temporal attributes of TKGs. We suggest that in the future, LLMs can be fine-tuned on a small amount of TKG data, with explicit representations of temporal data added so that the model captures temporal relations more accurately. With prompt learning, LLMs can quickly adapt to few-shot scenarios and provide reasonable modeling of temporal data.

Traditional TKG models suffer from insufficient generalization in scenarios with scarce samples (such as emerging infectious disease research), and LLMs struggle to capture the dynamic patterns of time series. A few-shot learning framework can be constructed based on the sparse time slices of the ICEWS 05-15 dataset [94], where LLMs generate natural language descriptions of temporal relations (e.g., “The efficacy improvement of Drug A in Q1 2020 is associated with viral mutations”), combined with meta-learning algorithms to fine-tune model parameters. It is recommended to measure the improvement of the MRR metric on the subset of the GDELT dataset [95].

4.3. Application of MMKG Tasks for More Specific Domains

Research on LLMs for domain-specific MMKGs is still seriously insufficient and has only been attempted in a few specific domains. For example, KPD [62] leverages LLMs and multimodal KGs to detect phishing web pages with or without logos. However, targeted designs for other domain-specific KGs involving multi-modal problems are lacking. LLMs can be useful for cross-modal information fusion, obtaining information from multiple perspectives and providing richer, multidimensional semantic understanding. We propose that in future research, LLMs be used to reason over and fuse more domain-specific MMKG tasks based on the relations between text and other modalities (e.g., image and audio). For example, in the medical field, LLMs are able to combine the analysis results of CT scan images with the text of the patient’s medical history to provide more accurate disease diagnosis and personalized treatment plans.

In medical MMKGs, there exists a semantic gap in cross-modal alignment between text and medical images (e.g., mismatch between “pulmonary CT shadow” and medical terminology in text descriptions). It is proposed to adopt text-image pair data, use LLMs to generate semantic annotations for images (e.g., converting CT images into text descriptions like “ground-glass opacity in the right upper lobe of the lung”), and construct a cross-modal contrastive loss function in conjunction with the CLIP model to optimize the consistency of entity embeddings. The evaluation can be conducted on the MIMIC-CXR dataset by assessing the accuracy of MMKG completion with Precision@5.
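A cross-modal contrastive loss of the kind suggested above can be sketched as a symmetric InfoNCE objective over paired text and image embeddings. This is a minimal NumPy illustration under stated assumptions: the embeddings are toy values rather than CLIP outputs, the temperature of 0.07 is an assumed default, and no gradient computation is shown.

```python
import numpy as np


def _log_softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax with the usual max-shift for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def clip_style_loss(text_emb: np.ndarray, image_emb: np.ndarray,
                    temperature: float = 0.07) -> float:
    """Symmetric contrastive loss; row i of each matrix is assumed to be a matched pair."""
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    v = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    logits = t @ v.T / temperature          # cosine similarities, scaled
    idx = np.arange(len(t))
    loss_text_to_image = -_log_softmax(logits)[idx, idx].mean()
    loss_image_to_text = -_log_softmax(logits.T)[idx, idx].mean()
    return float(0.5 * (loss_text_to_image + loss_image_to_text))


if __name__ == "__main__":
    # Toy embeddings: identical matrices mean every pair is perfectly aligned.
    text = np.eye(3)
    aligned_images = np.eye(3)
    shuffled_images = np.roll(np.eye(3), 1, axis=0)  # every pair is mismatched

    print(clip_style_loss(text, aligned_images))
    print(clip_style_loss(text, shuffled_images))
```

The loss is low when each LLM-generated description is closest to its own image embedding and high when pairs are mismatched, which is the consistency property the proposal aims to optimize.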

4.4. Generic Framework Design for IKG

Since IKGs are a relatively new concept, the current applications of LLMs in IKG scenarios are still extremely underdeveloped, lacking a generic framework design for tasks such as link prediction. The survey revealed only a limited number of IKG-based studies, such as leveraging current-generation LLMs to achieve new-entity link prediction in KGs through prompt generation, answer processing, entity candidate search, and final link prediction [65]. IKG scenarios involve emergent new knowledge, and LLMs can play an important role in updating that knowledge through their powerful language comprehension and reasoning capabilities. We propose using LLMs to integrate new knowledge from multiple sources, deal with redundant, conflicting, or missing information, and ensure the automatic correction and generation of new knowledge. For example, when confronted with inconsistent descriptions in different texts, the model can determine the most accurate knowledge based on context, timestamps, and other information.

Existing IKG approaches lack generalization ability for dynamically added new entities, and LLMs are prone to hallucinations when predicting unknown relations. We propose designing an incremental learning scenario based on the WN18RR [96] dataset, leveraging the in-context learning capability of LLMs to generate entity descriptions (e.g., “The relationship of the new entity ‘AI drug’ should be associated with ‘targeted therapy’”), and combining graph neural networks for inductive embedding updates. In the above scenario, the effectiveness can be validated by comparing the changes in AUC-ROC curves of link prediction under the condition of dynamically adding 20% new entities.
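The AUC-ROC comparison mentioned above can be computed directly from link prediction scores. The sketch below uses the pairwise-ranking definition of AUC (the probability that a true link scores higher than a false one, with ties counted as one half); the score lists are made-up values for illustration.

```python
from typing import Sequence


def auc_roc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical scores before and after adding new entities.
    before = auc_roc([0.9, 0.8], [0.1, 0.2])
    after = auc_roc([0.9, 0.3], [0.5, 0.1])
    print(before, after)  # 1.0 0.75
```

The quadratic loop is fine for small evaluation sets; for large candidate sets a sort-based computation or an established library routine would be preferable.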

4.5. OKG Canonicalization

The redundancy and ambiguity of OKGs can severely limit related tasks. Current LLMs are more often applied to downstream OKG tasks, while their role in canonicalizing OKG expressions is neglected. We observe that LLMs have been applied in OKG tasks such as constructing OKGs from electronic medical records [71], data annotation and augmentation [76], and KG construction [72], but we have not found research that applies them to OKG canonicalization or leverages their rich pre-trained background to assist in the canonicalization process. We propose using LLMs to obtain richer background knowledge and to enhance the soft clustering of synonymous noun or verb phrase representations, which can help to fully utilize the extensive pre-training of LLMs.

In OKGs, there are a large number of redundant entities (e.g., “New York” and “Big Apple”), and LLMs have difficulty automatically identifying semantic equivalence. We propose using the COMBO dataset [97], generating semantic similarity scores for entity aliases through LLMs (such as calculating the semantic distance between “aspirin” and “acetylsalicylic acid”), and performing normalization in combination with K-means clustering. Subsequently, the entity alignment accuracy before and after normalization can be measured on the open subset of DBpedia [98].
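The clustering step of this proposal can be sketched as follows. The two-dimensional alias embeddings are invented stand-ins for LLM-derived representations, and the K-means routine is a small NumPy version of Lloyd's algorithm with a farthest-point initialization chosen here for determinism; none of this is prescribed by the cited datasets or methods.

```python
import numpy as np


def kmeans(points: np.ndarray, k: int, iters: int = 20) -> np.ndarray:
    """Plain Lloyd's algorithm; returns a cluster label per point."""
    # Farthest-point initialization: start from point 0, then repeatedly
    # pick the point farthest from all centroids chosen so far.
    centroids = [points[0]]
    for _ in range(1, k):
        dists = np.min(
            [np.linalg.norm(points - c, axis=1) for c in centroids], axis=0
        )
        centroids.append(points[int(np.argmax(dists))])
    centroids = np.array(centroids, dtype=float)

    labels = np.zeros(len(points), dtype=int)
    for _ in range(iters):
        # Assign each point to its nearest centroid.
        diffs = points[:, None, :] - centroids[None, :, :]
        labels = np.argmin(np.linalg.norm(diffs, axis=2), axis=1)
        # Move each centroid to the mean of its members.
        for j in range(k):
            members = points[labels == j]
            if len(members):
                centroids[j] = members.mean(axis=0)
    return labels


if __name__ == "__main__":
    aliases = ["New York", "Big Apple", "aspirin", "acetylsalicylic acid"]
    # Hypothetical embeddings; in practice these would come from an LLM encoder.
    embeddings = np.array([[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9]])
    for alias, label in zip(aliases, kmeans(embeddings, k=2)):
        print(label, alias)
```

Aliases that land in the same cluster would then be mapped to one canonical entity; choosing the number of clusters is itself an open design question for OKGs, where the number of distinct entities is not known in advance.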

4.6. Unified Representation Learning Framework for Multiple Types and Domains

After investigation, it is found that LLMs in different KG scenarios are often designed based on individual KG task scenarios or task scenarios in individual task domains, but there is a lack of a unified representation learning framework based on LLMs for multiple types and domains. It is recommended that the powerful language comprehension and highly scalable nature of LLMs be fully utilized in future research to establish a universal framework suitable for various task types, improve task generalization, and facilitate the development and integration of various types of KG downstream tasks.

The embedding spaces of KGs in different domains (such as biomedicine and social networks) are difficult to unify, and knowledge forgetting occurs in cross-domain migration of LLMs. We propose integrating the FB15k-237 [92] general dataset and the LMKG medical dataset [29] and designing an adapter-based LLM fine-tuning framework that sets up exclusive parameter layers for each domain and dynamically activates domain knowledge through prompt words (e.g., “Switch to biomedicine mode: explain drug-disease relationships”). In cross-domain link prediction tasks, the maximum mean discrepancy (MMD) between the embeddings produced by the domain adapters is used to evaluate the consistency of embedding spaces.
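As an illustration of the MMD measurement, the sketch below computes a (biased) squared MMD estimate between two sets of embeddings using an RBF kernel. The kernel choice, the bandwidth parameter `gamma`, and the random toy embeddings are assumptions for the example; the text above does not fix any of them.

```python
import numpy as np


def rbf_kernel(a: np.ndarray, b: np.ndarray, gamma: float = 1.0) -> np.ndarray:
    """Pairwise RBF kernel matrix exp(-gamma * ||a_i - b_j||^2)."""
    sq_dists = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-gamma * sq_dists)


def mmd_squared(x: np.ndarray, y: np.ndarray, gamma: float = 1.0) -> float:
    """Biased estimate of squared MMD between samples x and y."""
    return float(
        rbf_kernel(x, x, gamma).mean()
        + rbf_kernel(y, y, gamma).mean()
        - 2.0 * rbf_kernel(x, y, gamma).mean()
    )


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    general = rng.normal(size=(50, 4))             # toy "general domain" embeddings
    same_domain = rng.normal(size=(50, 4))         # second sample, same distribution
    shifted = rng.normal(size=(50, 4)) + 3.0       # toy "medical domain", shifted

    print(mmd_squared(general, same_domain))
    print(mmd_squared(general, shifted))
```

A smaller value indicates that the two domains occupy more similar regions of the embedding space, which is the consistency criterion proposed above.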

4.7. Standard Framework and Criteria for Evaluating the Fusion Effect of LLM and KGE

The evaluation of the fusion effect between LLMs and KGE should be constructed based on the following three dimensions: knowledge representation ability, reasoning generalizability, and scenario adaptability. In terms of knowledge representation, the semantic consistency of entity embeddings (e.g., calculating the cosine similarity of cross-modal entity vectors) and the accuracy of relationship prediction (using traditional KGE metrics such as Hits@k and MRR) can be measured to verify whether the natural language prompts generated by LLMs effectively enhance the semantic expression capability of KGs. For reasoning generalizability, cross-domain migration tasks (such as migrating from general knowledge graphs to biomedical KGs) should be designed, and the AUC-ROC curve of link prediction before and after migration should be compared to evaluate the model’s knowledge generalization ability in different scenarios.
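For reference, the ranking metrics named above can be computed from the rank of each correct entity among the scored candidates. The sketch below is a minimal version; the rank list is invented, and the optimistic tie handling in `rank_of_true` (only strictly higher scores push the rank down) is an assumption, since evaluation protocols differ on this point.

```python
from typing import Sequence


def rank_of_true(scores: Sequence[float], true_index: int) -> int:
    """1-based rank of the true candidate; only strictly higher scores outrank it."""
    return 1 + sum(1 for s in scores if s > scores[true_index])


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Fraction of test triples whose true entity is ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1/rank over all test triples."""
    return sum(1.0 / r for r in ranks) / len(ranks)


if __name__ == "__main__":
    # Hypothetical ranks of the correct entity for four test triples.
    ranks = [1, 3, 12, 2]
    print(hits_at_k(ranks, 10))           # 0.75
    print(mean_reciprocal_rank(ranks))    # approximately 0.479
    print(rank_of_true([0.2, 0.9, 0.5], true_index=2))  # 2
```

Comparing these values for a KGE model with and without LLM-generated prompts gives a direct measure of whether the prompts improve relation prediction.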

Scenario adaptability depends on the KG type. For temporal KGs, the focus should be on the model’s ability to capture dynamic temporal features, which can be verified by measuring the change in prediction error after timestamps are added dynamically on temporal datasets such as ICEWS. For multimodal KGs, cross-modal alignment metrics (such as the text-image matching score of the CLIP model) need to be introduced to evaluate whether LLMs effectively bridge the semantic gap between modalities such as text and images. In addition, a robustness evaluation mechanism should be established that injects adversarial perturbations to test how well the model withstands polluted knowledge, ensuring that the fusion method is reliable in practical applications.
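One simple way to set up such a robustness test is sketched below. It uses random tail replacement as a stand-in for adversarial perturbation, which is an assumption of this example rather than a method from the surveyed work; the function names, the noise rate, and the toy triples are likewise hypothetical.

```python
import random


def inject_noise(triples, entities, rate, seed=0):
    """Replace the tail of a random fraction of triples with a different entity."""
    rng = random.Random(seed)
    n_corrupt = round(rate * len(triples))
    corrupt_idx = set(rng.sample(range(len(triples)), n_corrupt))
    noisy = []
    for i, (head, relation, tail) in enumerate(triples):
        if i in corrupt_idx:
            # Pick any entity except the original tail, so the fact really changes.
            tail = rng.choice([e for e in entities if e != tail])
        noisy.append((head, relation, tail))
    return noisy


def relative_degradation(clean_score, noisy_score):
    """Fraction of the clean metric (e.g., MRR) lost when training on polluted data."""
    return (clean_score - noisy_score) / clean_score


# Toy KG: ten triples over five entities (purely illustrative).
entities = [f"e{i}" for i in range(5)]
triples = [(f"e{i % 5}", "related_to", f"e{(i + 1) % 5}") for i in range(10)]

noisy_triples = inject_noise(triples, entities, rate=0.3)
n_changed = sum(1 for a, b in zip(triples, noisy_triples) if a != b)
print("corrupted triples:", n_changed)
```

The model would then be trained or prompted once with `triples` and once with `noisy_triples`, and `relative_degradation` applied to the resulting metric values to quantify the loss.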

4.8. Summary of the Advantages and Disadvantages of Existing Research Work

In recent years, significant progress has been made in integrating LLMs into KGE, with several clear advantages. First, LLMs significantly improve the performance of KGE tasks through their powerful semantic understanding and generation capabilities. For example, in CKG, LLMs can transform structured knowledge into natural language descriptions through prompt engineering, thereby enhancing the accuracy of knowledge reasoning. In TKG, LLMs can process dynamic temporal information, supporting event prediction and drug efficacy analysis. In MMKG, LLMs fuse the text and image modalities, further enriching knowledge representation. The introduction of LLMs also addresses the limitations of traditional KGE methods in handling long-tail entities, dynamic knowledge updates, and cross-domain inference, improving the model’s generalization ability.

However, existing research still has shortcomings. On the one hand, the hallucination problem of LLMs (i.e., generating seemingly reasonable but actually erroneous knowledge) may have serious consequences in critical areas such as healthcare and cybersecurity. On the other hand, the black-box nature of LLMs makes their inference process difficult to explain, which affects the credibility of the model. In addition, existing methods are often designed for a single task or domain and lack a unified framework that supports KGE tasks of multiple types and domains. For example, cross-domain knowledge graph fusion and OKG canonicalization are still at an exploratory stage. Data quality and annotation cost are also key factors that constrain the deep integration of LLMs and KGE, especially in few-shot and low-resource scenarios. Future research needs to further improve the robustness and interpretability of the models and explore more efficient methods for cross-domain and dynamic knowledge integration.

5. Datasets and Code Resources

In this section, we collect classic datasets applicable to various KGE scenarios as a resource for readers. We also organize the code resources released with some of the articles cited in this paper, to support a deeper understanding and practical application of the methods.

Download links for the following datasets are listed in Table 4.

Table 4.

Research resources related to knowledge graphs based on large language models.

5.1. Dataset

In this section, we introduce the related datasets; Table 5 lists the relevant metrics for comparing them.

Table 5.

Comparison of relevant metrics for different datasets.

5.1.1. ICEWS 05-15

ICEWS (Integrated Crisis Early Warning System) is an event database with data sourced from global news media, government reports, and sociological research. It contains over 5 million social and political events from 2005 to 2015. ICEWS 05-15 includes about 50 k entities (such as individuals, organizations, and countries) and approximately 4 million event triplets. It records political, social, and economic events on a global scale and is suitable for temporal KGs and event prediction tasks.
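For event prediction on temporal data of this kind, facts are typically handled as timestamped quadruples and split chronologically so that the test period lies after the training period. The sketch below illustrates this with invented events; the quadruple layout `(head, relation, tail, date)` and the cutoff date are assumptions of this example and do not describe the official ICEWS file format.

```python
from datetime import date


def chronological_split(quadruples, cutoff):
    """Split (head, relation, tail, date) facts into before-cutoff and from-cutoff sets."""
    train = [q for q in quadruples if q[3] < cutoff]
    test = [q for q in quadruples if q[3] >= cutoff]
    return train, test


# Invented events, for illustration only.
events = [
    ("Country A", "make_statement", "Country B", date(2013, 5, 1)),
    ("Country B", "host_visit", "Country A", date(2013, 11, 20)),
    ("Organization X", "criticize", "Country A", date(2014, 2, 3)),
    ("Country A", "sign_agreement", "Country B", date(2014, 9, 15)),
]

train_events, test_events = chronological_split(events, cutoff=date(2014, 1, 1))
print(len(train_events), "training events,", len(test_events), "test events")
```

Splitting by time rather than at random avoids leaking future events into training, which would make event prediction look easier than it is.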

5.1.2. GDELT

GDELT (Global Database of Events, Language, and Tone) is a global event database supported by Google, with data sourced from global news media reports. It contains over 3 billion event records. GDELT includes about 100 k entities (such as countries, cities, and organizations) and approximately 3 billion event triplets. It records events, language, and emotional information on a global scale and is suitable for social science research such as conflict analysis and political stability research.

5.1.3. YAGO

YAGO is a KG extracted from resources such as Wikipedia, WordNet, and GeoNames. It includes about 1.2 million entities (such as names, locations, and events) and approximately 350 k triplets. It provides rich semantic information and is suitable for tasks such as entity linking, relation extraction, and KG construction.

5.1.4. UMLS

UMLS is a comprehensive medical terminology system developed by the U.S. National Library of Medicine. It includes about 300 k medical terms and concepts (such as diseases, drugs, and treatment methods) and approximately 1 million triplets. It is used for biomedical text processing, information retrieval, and KG construction.

5.1.5. MetaQA

MetaQA is a multi-hop question answering dataset built from a movie database. It includes 1-hop, 2-hop, and 3-hop question-answer pairs. It contains approximately 4.3 k entities (such as movie characters and movie titles) and about 300 k triplets. MetaQA is suitable for machine reading comprehension, question answering system development, and multi-hop reasoning tasks.
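To illustrate what a k-hop question means over a KG, the sketch below follows a path of relations hop by hop from a topic entity. The movie triples and relation names are hypothetical examples in the spirit of a movie KG, not an excerpt from the MetaQA files.

```python
from collections import defaultdict


def build_index(triples):
    """Map each (head, relation) pair to the list of tails it reaches."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[(head, relation)].append(tail)
    return index


def follow_path(index, start, relations):
    """Follow a relation path from a start entity; return the set of answer entities."""
    frontier = {start}
    for relation in relations:
        # Each hop expands every entity in the frontier along the current relation.
        frontier = {t for e in frontier for t in index.get((e, relation), [])}
    return frontier


# Hypothetical movie triples for illustration.
movie_triples = [
    ("Inception", "directed_by", "Christopher Nolan"),
    ("Interstellar", "directed_by", "Christopher Nolan"),
    ("Christopher Nolan", "directed", "Inception"),
    ("Christopher Nolan", "directed", "Interstellar"),
    ("Interstellar", "starred_actors", "Matthew McConaughey"),
]
index = build_index(movie_triples)

one_hop = follow_path(index, "Inception", ["directed_by"])
two_hop = follow_path(index, "Inception", ["directed_by", "directed"])
three_hop = follow_path(index, "Inception", ["directed_by", "directed", "starred_actors"])
print(one_hop, two_hop, three_hop)
```

Here the 2-hop query corresponds to a question such as "which movies were directed by the director of Inception?", and the 3-hop query additionally asks who starred in those movies.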

5.1.6. ReVerb45K

ReVerb45K is a set of relation facts automatically extracted from web page text. It contains 4.5 k relation instances, about 10 k entities, and about 45 k triplets. ReVerb45K is used for relation extraction and KG construction tasks.

5.1.7. FB15k-237

FB15k-237 is a subset of the Freebase KG from which inverse relations have been removed to reduce leakage between the training and testing sets. It contains 14,541 entities, 237 relations, and approximately 310 k triplets. FB15k-237 is widely used for KG completion and link prediction tasks.

5.1.8. WN18RR

WN18RR is a subset of the WordNet KG that has been revised to increase task difficulty. It contains 40,943 entities, 11 types of relations, and approximately 93 k triplets. WN18RR is mainly used for research on KG completion and link prediction.
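Statistics such as those reported above can be recomputed directly from a triple file. The following minimal sketch assumes one tab-separated `head relation tail` triple per line, a layout commonly used when benchmarks such as FB15k-237 and WN18RR are distributed; the sample lines are invented, and an in-memory buffer stands in for a real file.

```python
import io


def kg_statistics(lines, sep="\t"):
    """Count distinct entities, distinct relations, and triples in a triple file."""
    entities, relations, n_triples = set(), set(), 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        head, relation, tail = line.split(sep)
        entities.update((head, tail))
        relations.add(relation)
        n_triples += 1
    return {"entities": len(entities), "relations": len(relations), "triples": n_triples}


# Invented sample; with a real dataset, pass an opened file such as open(path, encoding="utf-8").
sample_file = io.StringIO(
    "dog\thypernym\tcanine\n"
    "canine\thypernym\tcarnivore\n"
    "dog\thas_part\ttail\n"
    "\n"
)
stats = kg_statistics(sample_file)
print(stats)
```

Running the same function over the training, validation, and test files separately makes it easy to cross-check a downloaded copy against the figures in a dataset table.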

5.2. Code Resources

Table 6 lists important code resources and open-source projects related to the research work discussed in this article. These resources cover multiple research directions that combine large-scale pre-trained language models with KGs. With this open-source code, readers can study the implementation details of the related work, reproduce experimental results, or build further extensions.

Table 6.

Code resources related to knowledge graphs based on large language models.

6. Conclusions

LLMs have been widely used in KGE tasks across multiple scenarios [93,99,100,101,102]. This promising field is attracting increasing attention from researchers, and a large number of advanced methods have been proposed. In this paper, we propose a new taxonomy for investigating the current applications of LLMs in the field of KGE. Specifically, we categorize methods according to the KG scenarios in which they are applied and further distinguish them by the degree to which LLMs are used in each scenario. We not only discuss the use of LLMs in various KGE tasks but also explore the relevant applications and the research directions that may be developed in the future. Through this survey, we offer a comprehensive and systematic overview of the application of LLMs within the KGE domain. We sincerely hope that this overview can serve as a valuable guide for future research.

Funding

This research is sponsored by the Key R&D Program of Shandong Province, China, (No. 2023CXGC010801) and the National Key R&D Program of China (2019YFA0709401).

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Vidal, M.; Chudasama, Y.; Huang, H.; Purohit, D.; Torrente, M. Poisoning scientific knowledge using large language models. J. Web Semant. 2025, 84.

- Gao, F.; He, Q.; Wang, X.; Qiu, L.; Huang, M. An Efficient Rumor Suppression Approach with Knowledge Graph Convolutional Network in Social Network. IEEE Trans. Comput. Soc. Syst. 2024, 11, 6254–6267.

- Yang, Y.; Peng, C.; Cao, E.-Z.; Zou, W. Building Resilience in Supply Chains: A Knowledge Graph-Based Risk Management Framework. IEEE Trans. Comput. Soc. Syst. 2024, 11, 3873–3881.

- Jiang, C.; Tang, M.; Jin, S.; Huang, W.; Liu, X. KGNMDA: A Knowledge Graph Neural Network Method for Predicting Microbe-Disease Associations. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 20, 1147–1155.

- Zhao, L.; Qi, X.; Chen, Y.; Qiao, Y.; Bu, D.; Wu, Y.; Luo, Y.; Wang, S.; Zhang, R.; Zhao, Y. Biological Knowledge Graph-Guided Investigation of Immune Therapy Response in Cancer with Graph Neural Network. Brief. Bioinform. 2023, 24, bbad023.

- Huang, C.; Chen, D.; Fan, T.; Wu, B.; Yan, X. Incorporating Environmental Knowledge Embedding and Spatial-Temporal Graph Attention Networks for Inland Vessel Traffic Flow Prediction. Eng. Appl. Artif. Intell. 2024, 133, 108301.

- Mlodzian, L.; Sun, Z.; Berkemeyer, H.; Monka, S.; Wang, Z.; Dietze, S.; Halilaj, L.; Luettin, J. nuScenes Knowledge Graph - A Comprehensive Semantic Representation of Traffic Scenes for Trajectory Prediction. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Paris, France, 2–6 October 2023; pp. 42–52.

- Agrawal, G.; Pal, K.; Deng, Y.; Liu, H.; Chen, Y.-C. CyberQ: Generating Questions and Answers for Cybersecurity Education Using Knowledge Graph-Augmented LLMs. In Proceedings of the AAAI Conference on Artificial Intelligence 2024, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 23164–23172.

- Panda, P.; Agarwal, A.; Devaguptapu, C.; Kaul, M.; Ap, P. HOLMES: Hyper-Relational Knowledge Graphs for Multi-hop Question Answering Using LLMs. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 13263–13282.

- Sun, L.; Tao, Z.; Li, Y.; Arakawa, H. ODA: Observation-Driven Agent for Integrating LLMs and Knowledge Graphs. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Kerrville, TX, USA, 2024; pp. 7417–7431.

- Venkatakrishnan, R.; Tanyildizi, E.; Canbaz, M.A. Semantic interlinking of Immigration Data using LLMs for Knowledge Graph Construction. In Proceedings of the ACM Web Conference 2024 (WWW ’24), Singapore, 13–17 May 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 605–608.

- Sun, L.; Zhang, P.; Gao, F.; An, Y.; Li, Z.; Zhao, Y. SF-GPT: A training-free method to enhance capabilities for knowledge graph construction in LLMs. Neurocomputing 2025, 613, 128726.

- Pan, S.; Luo, L.; Wang, Y.; Chen, C.; Wang, J.; Wu, X. Unifying Large Language Models and Knowledge Graphs: A Roadmap. IEEE Trans. Knowl. Data Eng. 2024, 36, 3580–3599.

- Yang, L.; Chen, H.; Li, Z.; Ding, X.; Wu, X. Give us the Facts: Enhancing Large Language Models with Knowledge Graphs for Fact-Aware Language Modeling. IEEE Trans. Knowl. Data Eng. 2024, 36, 3091–3110.

- Liu, L.; Xu, P.; Fan, K.; Wang, M. Research on application of knowledge graph in industrial control system security situation awareness and decision-making: A survey. Neurocomputing 2025, 613, 128721.

- Zhu, B.; Wang, R.; Wang, J.; Zhao, F.; Wang, K. A Survey: Knowledge Graph Entity Alignment Research Based on Graph Embedding. Artif. Intell. Rev. 2024, 57, 229.

- Huang, X.; Ruan, W.; Huang, W.; Jin, G.; Dong, Y.; Wu, C.; Bensalem, S.; Mu, R.; Qi, Y.; Zhao, X.; et al. A survey of safety and trustworthiness of large language models through the lens of verification and validation. Artif. Intell. Rev. 2024, 57, 175.

- Kumar, P. Large language models (LLMs): Survey, technical frameworks, and future challenges. Artif. Intell. Rev. 2024, 57, 260.

- Zhao, S.; Sun, X. Enabling controllable table-to-text generation via prompting large language models with guided planning. Knowl.-Based Syst. 2024, 304, 112571.

- Cui, M.; Du, J.; Zhu, S.; Xiong, D. Efficiently Exploring Large Language Models for Document-Level Machine Translation with In-context Learning. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Kerrville, TX, USA, 2024; pp. 10885–10897.

- Liu, C.; Wang, C.; Peng, Y.; Li, Z. ZVQAF: Zero-shot visual question answering with feedback from large language models. Neurocomputing 2024, 580, 127505.

- Hellwig, N.C.; Fehle, J.; Wolff, C. Exploring large language models for the generation of synthetic training samples for aspect-based sentiment analysis in low resource settings. Expert Syst. Appl. 2025, 261, 125514.

- Song, H.; Su, H.; Shalyminov, I.; Cai, J.; Mansour, S. FineSurE: Fine-grained Summarization Evaluation using LLMs. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Kerrville, TX, USA, 2024; pp. 906–922.

- Zhou, Z.; Elejalde, E. Unveiling the silent majority: Stance detection and characterization of passive users on social media using collaborative filtering and graph convolutional networks. EPJ Data Sci. 2024, 13, 28.