Abstract

In this work, we investigate a new design strategy for a deep neural network (DNN)-based limited-feedback relay system that uses conventional filters to acquire training data in order to jointly solve the quantization and feedback problems. The design maximizes the effective channel gain to reduce the symbol error rate (SER). By harnessing binary feedback information from the implemented DNNs together with efficient beamforming vectors, we present a novel approach to the resulting problem. Comparisons with a Grassmannian codebook-based system show that the proposed system outperforms this benchmark in terms of SER.

Keywords:

multiple-input multiple-output (MIMO); deep learning (DL); deep neural network (DNN); limited feedback; relay; symbol error rate (SER); codebook

MSC: 94A05

1. Introduction

Limited-feedback systems have been widely studied with the aim of improving the performance of closed-loop communication under frequency-division duplexing (FDD) [1,2]. Generic models adopt two stages of communication: (i) channel estimation (CE) via pilot signals and (ii) quantization of the received pilot signal and feedback of the codebook index of the estimated channel to the transmitter for subsequent transmissions [3,4].

Recognizing the vital role that codebooks play in closed-loop systems, studies on limited-feedback systems have focused on improving codebook design to optimize the channel estimation, quantization, and index feedback chain [5]. The Grassmannian, Lloyd, and discrete Fourier transform (DFT)-based codebook designs are among the results of such efforts [1,6]. The most prominent design criterion in the literature is the chordal distance.
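To make the chordal distance criterion concrete, the following minimal NumPy sketch (an illustration, not code from the cited works) computes the chordal distance between two unit-norm codewords, $d_c(\mathbf{w}_i,\mathbf{w}_j)=\sqrt{1-|\mathbf{w}_i^H\mathbf{w}_j|^2}$, and the minimum pairwise distance of a codebook, which Grassmannian designs seek to maximize. The helper names are hypothetical, and the random codebook is only a stand-in; it is not Grassmannian-optimized.

```python
import itertools

import numpy as np


def chordal_distance(w_i: np.ndarray, w_j: np.ndarray) -> float:
    """Chordal distance between the lines spanned by two unit-norm vectors."""
    inner = np.abs(np.vdot(w_i, w_j)) ** 2  # |w_i^H w_j|^2 (np.vdot conjugates w_i)
    return float(np.sqrt(max(0.0, 1.0 - inner)))  # guard against tiny negative round-off


def min_chordal_distance(codebook: np.ndarray) -> float:
    """Smallest pairwise chordal distance among the codebook columns."""
    n_codewords = codebook.shape[1]
    return min(
        chordal_distance(codebook[:, i], codebook[:, j])
        for i, j in itertools.combinations(range(n_codewords), 2)
    )


def random_unit_codebook(n_antennas: int, n_codewords: int, seed: int = 0) -> np.ndarray:
    """Hypothetical codebook of random unit-norm columns (not Grassmannian-optimized)."""
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((n_antennas, n_codewords)) + 1j * rng.standard_normal((n_antennas, n_codewords))
    return w / np.linalg.norm(w, axis=0, keepdims=True)


codebook = random_unit_codebook(n_antennas=4, n_codewords=64)
print(f"minimum chordal distance: {min_chordal_distance(codebook):.3f}")
```

Because the distance ignores a common phase rotation, two codewords that differ only by a phase factor are treated as identical. A Grassmannian design would search for the codebook that maximizes this minimum distance; the random codebook above only shows how the criterion is evaluated.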

In [7], the authors proposed a new Grassmannian-based approach to two-tier massive multiple-input multiple-output (MIMO) system design to facilitate limited-feedback communication. Central to their codebooks is the problem of estimating the channel state information (CSI). However, solving this problem jointly with codebook quantization is non-convex owing to the highly combinatorial nature of the joint problem [8,9].

To extend the transmission range, MIMO systems employ relays [10,11]. The MIMO relay system in [12] relies on Wiener filter-based techniques as an alternative to traditional singular value decomposition (SVD)-based designs; the approach is built on the minimum mean-squared error (MMSE) criterion. In [13], an improved adaptive codebook-based channel estimation method was investigated that exploits multi-carrier parameters in the CE and feedback processes.

However, the systems studied in [12,13] share the same limitation: channel quantization and channel estimation are solved independently, which is suboptimal. Another problem is that MIMO relay systems use only a single feedback link, either destination-to-relay or destination-to-source. Neither the inter-relay links (in multi-relay cases) nor the transmitter-to-relay links have their feedback channels considered; hence, neither channel estimation nor quantization is performed on these neglected links. Recent works [8,14] have attempted a joint solution for MIMO feedback systems using DNNs and deep learning (DL), respectively, but they consider only point-to-point MIMO systems. To the best of our knowledge, no other work has considered joint CE and quantization for limited-feedback MIMO relay systems using DNNs [15].

Motivated by the above, this paper considers a MIMO relay system with limited feedback using DNNs. Specifically, we focus on jointly solving the CE and quantization problems in the dual-hop limited-feedback chain of a MIMO relay system. Consequently, the proposed system is expected to perform better in dynamic real-world scenarios in which the channel changes constantly and the assumption of perfect CSI becomes unrealistic. The main contributions of this paper are as follows: (i) a dual-hop DNN-driven limited-feedback MIMO relaying system that offers significant gains over existing conventional and codebook-based limited-feedback MIMO relay systems, and (ii) a joint DNN-based solution for the CE and quantization phases in an amplify-and-forward (AF) MIMO relaying system.

The rest of this paper is organized as follows: Section 2 presents the system model and problem formulation; the implementation of the DNN-based system is provided in Section 3; Section 4 presents the simulation results and analysis; finally, our conclusions are provided in Section 5.

Notation: denotes a matrix with dimensions N by M; indicates the set of integers comprising 1 and ; and represent the trace and conjugate transpose of matrix , respectively; estimations of matrix and vector are presented as and , respectively; and are the determinant and the norm of , respectively; denotes an N × N identity matrix; denotes a circularly symmetric complex Gaussian random variable n with zero mean and variance ; is the expectation operation over random variable X; and correspond to the real and imaginary components, respectively; and represent the vectorization and diagonalization operations, respectively.

2. System Model and Problem Formulation

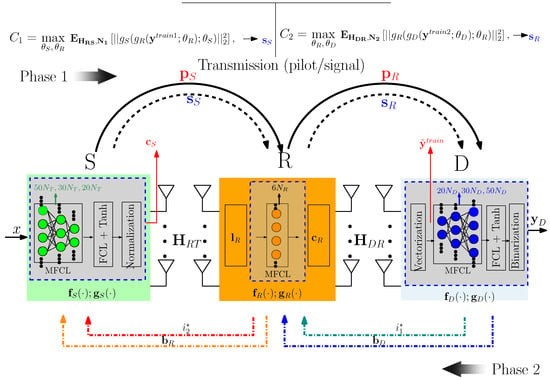

We consider a scenario in which a source node communicates with its destination node through a relay node using a dual-hop limited-feedback chain, as shown in Figure 1. The main DNN layers located at each node and their roles are summarized in Table 1. The source, relay, and destination nodes are equipped with antennas, denoted , and , respectively. In addition, represents the number of streams. We assume that there is no direct path between the source and destination due to high path loss and shadowing. We define the channels from the source to the relay as and from the relay to the destination as .

Figure 1.

DNN-based relay-aided limited-feedback design and signal flow.

Table 1.

Main DNN layers present at nodes and their roles.

Phase 1 in Figure 1 consists of two stages: stage 1 covers all pilot instances and stage 2 covers all data transmissions; every feedback process falls under phase 2. First, the pilot signal is sent from the transmitter to the relay to estimate the channel . The optimal precoding matrix index (PMI) [16,17] of the estimated channel is then fed back from the relay to the transmitter; see Figure 1. The index is used to select the transmit precoder from a predefined codebook for transmitting the precoded symbol to the relay. Here, B denotes the total number of feedback bits, which also determines the size of . Note that in the DNN, the vectors and are considered independent but equivalent characterizations of the indices and from the relay and destination, respectively. In the transmitter DNN, the unit-norm vector is constructed from to maximize the effective channel gain in (3). The signal at the relay is given as follows [12,18]:

where denotes the relay receive filter, while and indicate the source’s symbol transmit energy and the noise vector at the relay, respectively. We assume the amplify-and-forward protocol for all transmissions.

Similarly, R sends the pilot signal to D to estimate . The channel estimate obtained from the destination filtering in (2) is used to select the corresponding index , likewise from codebook , for feedback to R. The received signal at the destination is expressed as

where and denote the noise at the destination and the relay precoder, respectively, while represents the symbol transmit energy. The elements of are modeled as .
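The dual-hop signal flow in (1) and (2) can be traced with a short numerical sketch. It assumes a single stream, unit-energy symbols, unquantized beamformers (the dominant right singular vector of each channel, i.e., the unit-norm vector that maximizes the effective channel gain), MRC filters at the relay and destination, and a relay that scales its combined signal to unit average power before forwarding. These modeling choices and all function names are illustrative assumptions and need not match the exact expressions in (1) and (2).

```python
import numpy as np

rng = np.random.default_rng(1)


def crandn(*shape):
    """Samples with i.i.d. CN(0, 1) entries."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)


def dominant_beamformer(h):
    """Unit-norm beamformer maximizing ||h f||^2: the dominant right singular vector of h."""
    _, _, vh = np.linalg.svd(h)
    return vh[0].conj()


def af_dual_hop(s, h1, h2, es, er, sigma2):
    """Send unit-energy symbols s over source -> relay -> destination with an AF relay.

    Returns the destination's symbol estimates after MRC and end-to-end gain compensation.
    """
    f_s = dominant_beamformer(h1)                      # source transmit beamformer
    g1 = h1 @ f_s                                      # effective first-hop channel vector
    y_r = np.sqrt(es) * np.outer(g1, s) + np.sqrt(sigma2) * crandn(h1.shape[0], s.size)
    z_r = (g1 / np.linalg.norm(g1)).conj() @ y_r       # relay MRC receive filter
    amp = 1.0 / np.sqrt(es * np.linalg.norm(g1) ** 2 + sigma2)  # AF gain: unit average power
    f_r = dominant_beamformer(h2)                      # relay transmit beamformer
    g2 = h2 @ f_r                                      # effective second-hop channel vector
    y_d = np.sqrt(er) * np.outer(g2, amp * z_r) + np.sqrt(sigma2) * crandn(h2.shape[0], s.size)
    z_d = (g2 / np.linalg.norm(g2)).conj() @ y_d       # destination MRC receive filter
    end_to_end = np.sqrt(er) * np.linalg.norm(g2) * amp * np.sqrt(es) * np.linalg.norm(g1)
    return z_d / end_to_end


h1, h2 = crandn(4, 4), crandn(4, 4)                    # source-relay and relay-destination channels
s = np.exp(1j * np.pi / 4 * np.array([1, 3, 5, 7]))    # four QPSK symbols
s_hat = af_dual_hop(s, h1, h2, es=1.0, er=1.0, sigma2=0.01)
```

With zero noise, the estimates reproduce the transmitted symbols exactly, which is a quick check that the scaling through both hops is consistent. In the conventional scheme, the two beamformers would instead be codewords selected through the fed-back PMIs.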

Conventionally, the optimization of the CE and feedback processes is highly combinatorial and non-convex [8,19]; as such, the two are solved separately, leading to suboptimal solutions. Thus, in Section 2.2, we introduce a limited-feedback design in which DNNs replace both the CE and quantized feedback stages (see the DNN layers incorporated at each node in the dashed boxes of Figure 1). Using a DNN, we unite the two problems into one and solve them jointly. The proposed system therefore relies on a two-phase, DNN-based training/machine learning (ML) chain to jointly optimize the limited-feedback system.

2.1. Conventional Approach

The optimal PMI indices and obtained in the piloting stage are used to select the precoders and , respectively, from the codebook of interest for stage 2 of phase 1. The optimal PMI is chosen by maximizing the effective channel gain as follows:

where , is the i-th vector selected from the codebook , and denotes the channel estimate. The obtained is then sent through the feedback channel to the preceding node for codebook selection and precoding. We rewrite (3) as follows:

Note that and here represent the selection of precoders from the indicated codebook based on the corresponding index. As a benchmark for our system, we adopt a system that uses a Grassmannian codebook [20]. For all transmissions, we assume maximum ratio transmission (MRT), and all receivers (i.e., at both the relay and the destination) use maximum ratio combining (MRC) filters.
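The codebook search in (3) can be illustrated as an exhaustive search over the codebook columns. The sketch below assumes that (3) takes the common form $i^\star=\arg\max_i \|\hat{\mathbf{H}}\mathbf{w}_i\|^2$ over unit-norm codewords; a random unit-norm codebook stands in for the Grassmannian codebook, B = 6 bits (64 codewords) follows Section 4, and `select_pmi` is a hypothetical name.

```python
import numpy as np


def select_pmi(h_hat: np.ndarray, codebook: np.ndarray) -> int:
    """Index of the codeword (column) maximizing the effective gain ||H_hat w_i||^2."""
    gains = np.linalg.norm(h_hat @ codebook, axis=0) ** 2  # one gain per codeword
    return int(np.argmax(gains))


rng = np.random.default_rng(2)
n_rx, n_tx, n_bits = 4, 4, 6
codebook = rng.standard_normal((n_tx, 2 ** n_bits)) + 1j * rng.standard_normal((n_tx, 2 ** n_bits))
codebook /= np.linalg.norm(codebook, axis=0, keepdims=True)  # unit-norm codewords (stand-in)
h_hat = (rng.standard_normal((n_rx, n_tx)) + 1j * rng.standard_normal((n_rx, n_tx))) / np.sqrt(2)

pmi = select_pmi(h_hat, codebook)
feedback_bits = format(pmi, f"0{n_bits}b")  # the B-bit word sent back to the preceding node
```

Because the search is exhaustive, its cost grows linearly with the codebook size 2^B, and the selected gain can never exceed the squared largest singular value of the channel estimate.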

2.2. DNN Approach

Unlike the conventional method, the DNN method handles CE and limited feedback jointly. Again, we consider two main phases. During phase 1 (modeled by (5)), two training sets and are formed for the DNN layers at R and D, respectively, by transmitting and aggregating pilot signals.

In phase 2, training occurs at R and D using the training sets and . The training at R and D yields the bipolar vectors and , respectively; see Figure 1. These two vectors are equivalent representations of the indices and used in the conventional scheme. To maximize the effective channel gain, they are used to construct the corresponding unit-norm beamforming vectors at the source and relay, respectively. Thus, the DNN performs CE and quantization jointly. Three different DNN layer sets are implemented at the transmitter, relay, and destination, with respective mappings , and [8]. These three mappings correspond positionally to the conventional codebook selection functions, denoted as , and . Next, we describe phases 1 and 2 in detail.
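Because the bipolar vectors fed back by the DNNs stand in for the B-bit indices of the conventional scheme, it may help to see that the two carry the same information: each of the 2^B indices maps one-to-one to a vector in {−1, 1}^B. The sketch below uses one such mapping (most significant bit first, bit 1 to +1, bit 0 to −1). The bit ordering and function names are illustrative assumptions; in the DNN system, the bipolar vector is produced and consumed directly rather than converted to an integer.

```python
import numpy as np


def index_to_bipolar(index: int, n_bits: int) -> np.ndarray:
    """Map an integer in [0, 2**n_bits) to a vector in {-1, +1}^n_bits (MSB first)."""
    if not 0 <= index < 2 ** n_bits:
        raise ValueError("index out of range for the given number of bits")
    bits = (index >> np.arange(n_bits - 1, -1, -1)) & 1  # 0/1 bits, most significant first
    return 2 * bits - 1                                   # 0 -> -1, 1 -> +1


def bipolar_to_index(q: np.ndarray) -> int:
    """Inverse mapping from a bipolar vector back to its integer index."""
    bits = (np.asarray(q) > 0).astype(int)
    return int(bits @ (1 << np.arange(bits.size - 1, -1, -1)))


q = index_to_bipolar(37, 6)  # 37 = 0b100101
```

Since the mapping is a bijection, B bipolar entries can address exactly the 2^B codewords of a B-bit codebook, which is why the feedback payload per hop is the same B bits in both schemes.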

2.2.1. Pilot Signal Modeling and Aggregation

We propose a DL structure for the limited-feedback system illustrated in Figure 1. The signal received at the destination is obtained by redefining (2) as , where

, where L represents the total number of pilot instances and where and denote the noise vectors at the relay and destination, respectively, for pilot transmission l. As such, the matrix indicates all the signal vectors used in pilot transmission with ; the pilot signal is generated using the normalized discrete Fourier transform codebook outlined in Table I of [21]. These L instances of the pilot train transmissions are aggregated into a matrix as to form a dataset. The resulting dataset is vectorized using the function. Afterwards, the real and imaginary parts of the complex vector dataset are partitioned by using the function [8] to form . Thus, we have

as the new training set. Next, the data are preprocessed and readied for phase 2 of training and feedback through the DNN.
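The preprocessing described above (DFT-based pilots, aggregation of the L pilot instances, vectorization, and real/imaginary separation) can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions: the pilots are the columns of a normalized DFT matrix (the specific codebook in Table I of [21] is not reproduced), a single-hop model Y = HX + N is used for brevity instead of the full relay model, and `dft_pilots`, `vec`, and `split_real_imag` are hypothetical helper names.

```python
import numpy as np


def dft_pilots(n_tx: int, n_pilots: int) -> np.ndarray:
    """n_tx x n_pilots pilot matrix built from columns of the normalized DFT matrix."""
    k = np.arange(n_tx)[:, None]
    l = np.arange(n_pilots)[None, :]
    return np.exp(-2j * np.pi * k * l / n_tx) / np.sqrt(n_tx)  # unit-norm columns


def vec(matrix: np.ndarray) -> np.ndarray:
    """Column-stacking vectorization, vec(A)."""
    return matrix.reshape(-1, order="F")


def split_real_imag(z: np.ndarray) -> np.ndarray:
    """Stack real parts on top of imaginary parts: [Re(z); Im(z)]."""
    return np.concatenate([z.real, z.imag])


rng = np.random.default_rng(3)
n_rx, n_tx, n_pilots, sigma2 = 4, 4, 4, 0.1
x = dft_pilots(n_tx, n_pilots)                      # one pilot column per transmission instance
h = (rng.standard_normal((n_rx, n_tx)) + 1j * rng.standard_normal((n_rx, n_tx))) / np.sqrt(2)
noise = np.sqrt(sigma2 / 2) * (rng.standard_normal((n_rx, n_pilots))
                               + 1j * rng.standard_normal((n_rx, n_pilots)))
y = h @ x + noise                                   # aggregated received pilots (n_rx x L)
features = split_real_imag(vec(y))                  # real-valued DNN input of length 2 * n_rx * L
print(features.shape)  # (32,)
```

Splitting into real and imaginary parts lets a real-valued network consume complex observations without losing information; stacking many such feature vectors (one per channel realization) yields the training set.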

2.2.2. DNN Training and Learning

The preprocessed dataset from (6) is fed into the DNN. The DNNs employed at S, R, and D have mappings , respectively; see Figure 1. The destination DNN comprises three main layer sets, each consisting of a dense layer, a batch normalizer, and an activation function (i.e., a rectified linear unit (ReLU)). The dense layers of the three sets have dimensions , and , respectively. The output of the first set’s dense layer is fed into its batch normalizer, whose output is in turn fed into its ReLU activation to produce the first set’s output. That output is then fed as input into the second set of modules, whose dense layer dimension is set as . The second set’s output is then fed into the final set with dimension . Similarly, the relay DNN, given by , consists of a single set of layers with dimensions . Finally, the DNN at node S completes the DNN-modeled limited-feedback chain. It uses a set of modules similar to that of the receiver, but with the dimensions in reverse order, that is, for set one, for set two, and finally .
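The data flow through the destination DNN described above, three sets of dense layer, batch normalization, and ReLU applied in sequence, can be traced with a short NumPy forward pass. The layer widths are hypothetical placeholders rather than the paper’s dimensions, batch normalization is shown in its inference form (stored running mean and variance), and the weights are random, so the sketch illustrates structure only; the paper’s own models are built in TensorFlow (Section 3).

```python
import numpy as np


def dense(x, w, b):
    """Fully connected layer applied to a batch of row vectors: x @ W + b."""
    return x @ w + b


def batch_norm_inference(x, mean, var, gamma, beta, eps=1e-3):
    """Batch normalization using stored running statistics."""
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def relu(x):
    return np.maximum(x, 0.0)


def init_block(n_in, n_out, rng):
    """Random parameters for one dense + batch-norm set (untrained, for illustration)."""
    return {
        "w": rng.standard_normal((n_in, n_out)) * np.sqrt(2.0 / n_in),  # He-style scaling
        "b": np.zeros(n_out),
        "mean": np.zeros(n_out), "var": np.ones(n_out),
        "gamma": np.ones(n_out), "beta": np.zeros(n_out),
    }


def forward(x, blocks):
    """Apply each dense -> batch norm -> ReLU set in sequence."""
    for p in blocks:
        x = relu(batch_norm_inference(dense(x, p["w"], p["b"]),
                                      p["mean"], p["var"], p["gamma"], p["beta"]))
    return x


rng = np.random.default_rng(4)
dims = [32, 256, 128, 6]  # hypothetical widths: 32 real inputs, two hidden sizes, B = 6 outputs
blocks = [init_block(n_in, n_out, rng) for n_in, n_out in zip(dims[:-1], dims[1:])]
batch = rng.standard_normal((10, dims[0]))  # 10 preprocessed samples
out = forward(batch, blocks)
```

Reversing `dims` would give the mirrored layout described for the source-node DNN. In the full system, the last set’s output is passed on to the binarization stage rather than used directly.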

The mappings in the proposed limited-feedback MIMO relay system are as follows. At D, the optimal PMI that maximizes the effective channel gain over and (given by (3)) is realized as the bipolar vector of length B. The function implicitly represents the extraction of the integer and the subsequent codebook selection at the receiver side. Correspondingly, represents the DNN replacement for those two actions. The functions and , along with their respective DNN counterparts and , follow the same logic. Equivalently, at R, the beamforming vector obtained from the feedback information is incorporated into the expression to produce the resulting bipolar vector at the relay node. Finally, the end of the limited-feedback chain, realized at the transmitter side, is characterized by the expression . Note that , and represent the parameters to be optimized in (14) and (15). In addition, the elements of both and are either −1 or 1 [8]. The complete feedback chain mapping that yields the fully optimized feedback information is then given by the following expression:

Functionally, represents the final complex beamforming vector, which serves as the final DNN output at our transmitter. For a given input, the output of the fully connected middle layers is passed through a ReLU layer for activation. Because of the vanishing gradient problem [22,23], in which the gradient updates of gradient descent (GD)-based DNN optimizers such as Adam become ineffective and convergence is poor, we employ two additional activation stages as post-activation mitigators [8]. These are collectively termed stochastic binarization.
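At the transmitter, the last DNN stage must deliver a complex unit-norm beamforming vector, whereas the DNN itself operates on real numbers. One common way to bridge this, assumed here rather than taken from the paper, is to let the final layer output 2N real values, read them as stacked real and imaginary parts, and normalize the result. The sketch below does this and evaluates the resulting effective channel gain, which can never exceed the squared largest singular value of the channel; the function names and sizes are hypothetical.

```python
import numpy as np


def to_unit_norm_beamformer(real_output: np.ndarray) -> np.ndarray:
    """Turn a length-2N real vector [Re; Im] into a complex unit-norm beamformer of length N."""
    n = real_output.size // 2
    v = real_output[:n] + 1j * real_output[n:]
    return v / np.linalg.norm(v)


def effective_gain(h: np.ndarray, v: np.ndarray) -> float:
    """Effective channel gain ||H v||^2 for a unit-norm beamformer v."""
    return float(np.linalg.norm(h @ v) ** 2)


rng = np.random.default_rng(5)
h = (rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))) / np.sqrt(2)
v = to_unit_norm_beamformer(rng.standard_normal(8))      # stand-in for the transmitter DNN output
gain = effective_gain(h, v)
upper_bound = np.linalg.svd(h, compute_uv=False)[0] ** 2  # gain of the ideal beamformer
```

Training the transmitter DNN to maximize `gain` pushes `v` toward the ideal beamformer, while the final normalization enforces the unit-norm constraint by construction instead of through a penalty term.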

2.2.3. Transformation of Optimization Problem Resulting from Chaining

Based on (4), and assuming that the relay perfectly decodes the symbol, we aim to maximize the received energy at the destination:

where and . Note that below represents only the beamforming functionality of the relay DNN. The equivalent compact end-to-end form for our DNN learning model is provided as follows:

subject to

This represents a more detailed version of (7) characterizing the chain of steps in the DNN process.

2.2.4. Stochastic Binarization

In the stochastic binarization phase, two sequential activation functions are used to mitigate the vanishing gradient problem of the DNN. The activation function in (11) constrains the output to the range . Subsequently, a stochastic activation is applied through the binarization function , which is defined on a noise distribution in (12):

where

Owing to the stochastic binarization, the combinatorial optimization problem resulting from the chained function operations in (7), which would otherwise have been expressed as

can now instead be presented as

and

Equations (14) and (15) modify (10) to account for the training parameters of our models and constitute the two-stage training problem; see Figure 1. Training is organized into stochastic gradient descent (SGD) mini-batches whose samples are drawn from the preprocessed training set. The training set for our simulations is generated from a collection of channel and noise data. SGD follows the same parameter update rule as Equation (11) in [8], and training is performed offline. After training, the learned parameters and can be stored in the memory units of the destination, relay, and transmitter, respectively. These are then used for real-time limited feedback and can be viewed as a substitute for the PMI-based codebooks used in conventional schemes.
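The two sequential activations in (11) and (12) can be sketched as follows. We assume the form commonly used in DNN-based limited-feedback designs such as [8]: a tanh activation maps each pre-activation to (−1, 1), and the stochastic binarizer outputs +1 with probability (1 + x)/2 and −1 otherwise, so that its expected value equals its input x. Because of this unbiasedness, the backward pass can treat the binarizer as the identity (a straight-through gradient), which keeps the chained mappings trainable with SGD. The exact noise distribution in (12) and the gradient rule are assumptions here, and the function names are hypothetical.

```python
import numpy as np


def stochastic_binarize(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """b(x) in {-1, +1}: +1 with probability (1 + x)/2, else -1 (x assumed in [-1, 1]).

    Since E[b(x)] = x, a training framework can treat db/dx as 1 in the backward pass.
    """
    u = rng.uniform(size=x.shape)
    return np.where(u < (1.0 + x) / 2.0, 1.0, -1.0)


def binarization_stage(pre_activation: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Two sequential activations: tanh to (-1, 1), then stochastic binarization."""
    return stochastic_binarize(np.tanh(pre_activation), rng)


rng = np.random.default_rng(6)
z = np.array([-2.0, -0.1, 0.0, 0.4, 3.0])  # hypothetical pre-activations for B = 5
q = binarization_stage(z, rng)              # bipolar feedback vector in {-1, +1}^5

# Empirical check of unbiasedness: averaging many draws approaches tanh(z).
draws = binarization_stage(np.tile(z, (20000, 1)), rng)
print(np.round(draws.mean(axis=0), 2), np.round(np.tanh(z), 2))
```

Once training is complete, a deterministic sign(·) may be used in place of the random draw; this is a common option and is mentioned here only as such.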

3. Configuration and Training of DNN Model

This section outlines the mathematical structure of the DNN layers that are present at the nodes and constitute the limited-feedback chain. The dimensions of the hidden layers that make up the fully connected DNNs modeling the set of layers at the source, relay, and destination are denoted as , , and , respectively, for , , and . Here, , , , denote the final layer counts at each respective node. The outputs of the corresponding hidden layers are given as , , and . Each node’s output is then added to a bias vector and passed through an activation layer, denoted . We denote the pre-activation weight matrices of each node as , where depending on the node at which learning takes place. Hence, for every DNN-equipped node (each modeled as layers), the learning process carried out across all layers is expressed by

The above equation represents consecutive computations, which feed an initial parameter set of with the goal of yielding a final set that maximizes the overall performance of the DNN as captured by (7). The DNN model is implemented with the TensorFlow framework in Python 3.12.2, using its built-in functions for forward and backward propagation to iteratively obtain the parameter values that minimize the overall cost function. To obtain the data for our DNN model, we first run simulations of the conventional model to generate the datasets and . Afterward, the essential components of the conventional scheme (namely, the codebooks and their selection schemes) are replaced by their DNN equivalents to form our DNN model. Training and testing are carried out using the and datasets, respectively. Finally, the performance of our system is compared with that of conventional schemes.

Complexity Analysis

A comparison of the proposed DNN system and traditional systems under various complexity considerations is presented in Table 2. Note that represents the big-O notation, while M and N denote the number of codewords and the number of antennas, respectively. In terms of beamforming, matrix inversion dominates the computational cost of traditional systems, whereas the main computational demand of our system stems from its number of parameters. For example, for an complex MIMO channel, which results in real-valued inputs after flattening, we consider the hidden layer and an output one-hot vector of about for codewords based on feedback bits. With this, the parameter count for the destination node is given by (17), which can be calculated using (16).
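One standard way to obtain such a count, which we assume is what (16) expresses, is to sum the weights and biases of every fully connected layer: a dense layer with n_in inputs and n_out outputs has n_in·n_out + n_out parameters, and each batch-normalization layer adds 2·n_out trainable scale and shift parameters. The sketch below applies this rule to hypothetical widths (a 4×4 channel flattened to 32 real inputs, one hidden layer of 128 units, and a 64-way one-hot output for B = 6 bits); these are illustrative values, not those behind (17).

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def mlp_params(widths: list[int], batch_norm: bool = False) -> int:
    """Total trainable parameters of a dense network with the given layer widths."""
    total = 0
    for n_in, n_out in zip(widths[:-1], widths[1:]):
        total += dense_params(n_in, n_out)
        if batch_norm:
            total += 2 * n_out  # trainable scale (gamma) and shift (beta) per unit
    return total


n_antennas, n_bits, hidden = 4, 6, 128                # hypothetical example values
widths = [2 * n_antennas ** 2, hidden, 2 ** n_bits]   # 32 real inputs -> 128 -> 64 one-hot outputs
print(mlp_params(widths))                             # 32*128 + 128 + 128*64 + 64 = 12480
```

The count grows with the product of adjacent layer widths, which is why the DNN’s cost in Table 2 is driven by parameter count rather than by matrix inversion.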

Table 2.

Computational complexity analysis of traditional systems vs. the DNN-based system.

The parameter requirements of the other nodes can be computed in a similar fashion. Building on this complexity analysis, Table 2 also compares the memory and training complexities of our system with those of the traditional system. In addition, we discuss the feedback overhead of the two classes of systems.

4. Simulation Results

In this section, we analyze the performance of the proposed DNN limited-feedback relay system against a Grassmannian-based codebook system. In our simulations, the DNNs at the transmitter, relay, and destination each have three hidden layers. SGD training is performed for iterations with a batch size of . We use a codebook of size 64, i.e., B = 6 feedback bits. For simplicity of analysis, we consider a single data stream. QPSK is used as the modulation scheme.
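For reference, the SER metric used in this section can be estimated by Monte Carlo simulation. The sketch below is a simplified single-hop illustration, not the paper’s simulator: QPSK symbols are sent over an i.i.d. Rayleigh 4×4 channel with a unit-norm transmit beamformer and MRC, comparing the ideal (unquantized) beamformer with the best codeword of a random 64-entry (B = 6) codebook that stands in for a quantized benchmark. The random codebook, channel size, trial count, SNR definition (symbol energy over noise variance), and function names are all assumptions.

```python
import numpy as np

QPSK = np.exp(1j * np.pi / 4 * np.array([1, 3, 5, 7]))  # unit-energy QPSK points


def crandn(rng, *shape):
    """i.i.d. CN(0, 1) samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)


def qpsk_ser(snr_db, codebook=None, n_ant=4, n_trials=20000, seed=0):
    """Monte Carlo QPSK SER for single-stream beamforming + MRC over i.i.d. Rayleigh fading.

    codebook=None uses the ideal beamformer; otherwise the best codeword (column) is
    selected for each channel realization.
    """
    rng = np.random.default_rng(seed)
    sigma2 = 10 ** (-snr_db / 10)
    h = crandn(rng, n_trials, n_ant, n_ant)
    if codebook is None:
        gain = np.linalg.svd(h, compute_uv=False)[:, 0] ** 2          # largest singular value^2
    else:
        gain = np.max(np.linalg.norm(h @ codebook, axis=1) ** 2, axis=1)
    idx = rng.integers(0, 4, size=n_trials)                             # transmitted symbol indices
    r = np.sqrt(gain) * QPSK[idx] + np.sqrt(sigma2) * crandn(rng, n_trials)  # MRC output
    detected = np.argmin(np.abs(r[:, None] - QPSK[None, :]), axis=1)   # minimum-distance decision
    return float(np.mean(detected != idx))


rng = np.random.default_rng(7)
cb = crandn(rng, 4, 64)
cb /= np.linalg.norm(cb, axis=0, keepdims=True)  # random 6-bit stand-in codebook
for snr in (0, 5, 10):
    print(snr, qpsk_ser(snr), qpsk_ser(snr, codebook=cb))
```

Reusing the same seed for both beamformers makes the comparison paired: each trial sees the same channel, symbol, and noise, so the quantized curve can only lie on or above the ideal one.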

4.1. Grassmannian-Based Benchmark

The benchmark scheme uses the Grassmannian codebook (GB) together with linear MMSE (LMMSE) channel estimation, both of which are assumed to be available at each node. GB is a common codebook choice owing to its performance; theoretically, it outperforms Lloyd-based codebooks. However, GB is difficult to construct analytically in practice, so most designs resort to brute-force searches [24].

Our training dataset consists of training examples comprising tuples of , from which our is generated. The received signal matrix serves as input to the DNNs. The elements of are modeled as zero-mean complex Gaussian random variables with covariance . We use (18) to model the correlation between antennas, where the elements of channel matrix are indexed as , with u denoting the row and v the column. We evaluate the performance of the proposed DNN limited-feedback system while varying the complex correlation coefficient t [8]:
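The role of the correlation coefficient t can be illustrated by generating correlated channels. The sketch below assumes a Kronecker model with exponential correlation, $[\mathbf{R}]_{u,u'}=t^{|u-u'|}$ on both the receive and transmit sides and $\mathbf{H}=\mathbf{L}_r\mathbf{G}\mathbf{L}_t^T$, where $\mathbf{L}_r$ and $\mathbf{L}_t$ are Cholesky factors and $\mathbf{G}$ has i.i.d. $\mathcal{CN}(0,1)$ entries. This is a widely used model but is an assumption here, and the exact form of (18) may differ. A real-valued t is used, matching the values 0.3, 0.6, and 0.9 of Figure 3; the function names are hypothetical.

```python
import numpy as np


def exp_correlation(n: int, t: float) -> np.ndarray:
    """Exponential correlation matrix with entries t**|i - j| (real 0 <= t < 1 assumed)."""
    idx = np.arange(n)
    return t ** np.abs(idx[:, None] - idx[None, :])


def correlated_channel(n_rx, n_tx, t, rng, n_samples=1):
    """Kronecker-model channels H = L_r G L_t^T, with G having i.i.d. CN(0, 1) entries."""
    l_r = np.linalg.cholesky(exp_correlation(n_rx, t))
    l_t = np.linalg.cholesky(exp_correlation(n_tx, t))
    g = (rng.standard_normal((n_samples, n_rx, n_tx))
         + 1j * rng.standard_normal((n_samples, n_rx, n_tx))) / np.sqrt(2)
    return l_r @ g @ l_t.T


rng = np.random.default_rng(8)
for t in (0.3, 0.6, 0.9):
    h = correlated_channel(4, 4, t, rng, n_samples=50000)
    emp = np.mean(h[:, 0, 0] * np.conj(h[:, 1, 0])).real  # adjacent receive antennas
    print(t, round(float(emp), 2))                        # empirical value close to t
```

Under this model, the correlation between adjacent antennas equals t and decays geometrically with antenna separation, so larger t concentrates channel energy in fewer spatial directions.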

4.2. Analysis

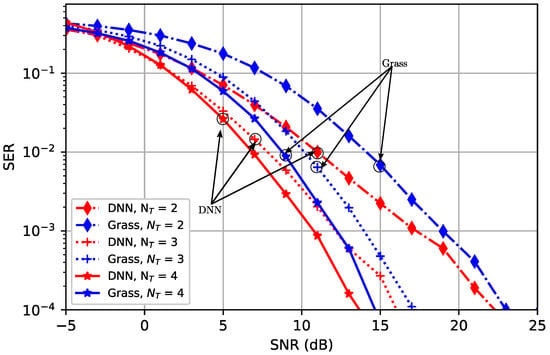

We analyze the performance of the proposed DNN-based system in terms of the symbol error rate (SER). The SER decreases as the number of antennas increases, and our setup outperforms the GB scheme. In Figure 2, we observe a performance gap of 1 to 3 dB between the DNN and GB schemes for antenna configurations of at comparable SER values. This is expected, since the benchmark scheme performs its optimizations separately, and hence suboptimally. Additionally, GB loses more information because of its quantization approach: with a finite number of feedback bits, the codebook offers only a limited set of precoders from which to characterize the quantized channel. The DNN, on the other hand, aggregates a training set when performing its optimization, yielding the two beamformers used at the source and relay. It therefore has access to more information than the competing scheme. Furthermore, SGD training allows the DNN to refine its parameters over this richer information space. It is therefore reasonable that the DNN clearly outperforms the GB setup. Notably, at lower SNR values of 0 dB to 3 dB, the DNN approach with outperforms the GB setup with by about 1 dB on average. This suggests that a DNN-based approach with fewer antennas could reduce implementation costs in practical low-power applications.
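A statement such as "a gap of 1 to 3 dB" refers to the horizontal distance between two SER curves at a common target SER. The sketch below shows one way to measure it: find the SNR at which each curve crosses the target by interpolating on a logarithmic SER axis, then subtract. To keep the expected answer known, both curves are synthetic, generated from the standard AWGN expression for QPSK, $P_s = 2Q(\sqrt{\gamma}) - Q(\sqrt{\gamma})^2$ with $\gamma = E_s/N_0$, with one curve shifted by 2 dB; they are not the curves of Figure 2, and the helper names are hypothetical.

```python
import math

import numpy as np


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2))


def qpsk_ser_awgn(snr_db: float) -> float:
    """Exact QPSK SER in AWGN with gamma = Es/N0 given in dB."""
    p = q_function(math.sqrt(10 ** (snr_db / 10)))
    return 2 * p - p * p


def snr_at_target(snr_db: np.ndarray, ser: np.ndarray, target: float) -> float:
    """SNR (dB) at which a decreasing SER curve crosses `target`, interpolating in log10(SER)."""
    # np.interp needs increasing x-values, so both arrays are reversed.
    return float(np.interp(np.log10(target), np.log10(ser[::-1]), snr_db[::-1]))


snr = np.arange(0.0, 16.0, 0.5)
ser_a = np.array([qpsk_ser_awgn(s) for s in snr])        # "scheme A"
ser_b = np.array([qpsk_ser_awgn(s - 2.0) for s in snr])  # "scheme B": needs 2 dB more SNR
gap_db = snr_at_target(snr, ser_b, 1e-3) - snr_at_target(snr, ser_a, 1e-3)
print(round(gap_db, 2))  # 2.0, the offset built into the two curves
```

Interpolating in log10(SER) rather than in SER itself matters because SER curves span several orders of magnitude; linear interpolation on the raw values would bias the crossing point.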

Figure 2.

SER vs. SNR for different numbers of antennas = .

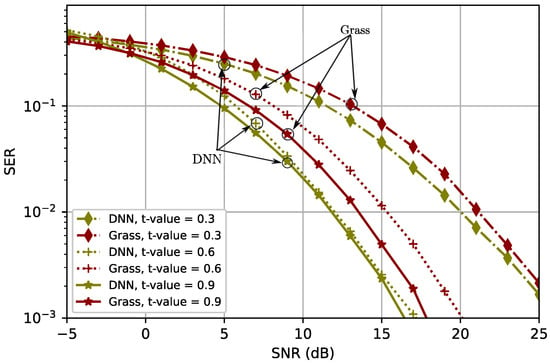

The DNN approach is known to perform better as the antenna correlation increases [8]. Accordingly, in Figure 3, increasing the correlation, indicated by t-values () of 0.3, 0.6, and 0.9, yields progressively better performance, which again confirms our expectations. Because the benchmark scheme handles its quantization and limited-feedback processes separately, it does not realize the gains achievable by jointly optimizing the problem under consideration. Of particular importance is the sensitivity of the proposed model to variations in the correlation coefficient: whereas a reduction in correlation degrades the performance of the Grassmannian approach, the DNN approach responds even more sensitively to such changes (e.g., from 0.6 to 0.3). Nevertheless, it outperforms the benchmark regardless of the correlation level. In addition, the performance benefits become less pronounced as the antennas become more highly correlated, at which point further performance gains can be obtained only by increasing the SNR.

Figure 3.

SER vs. SNR for different t-values = .

4.3. Deployment, Practical Considerations, and Limitations

In terms of practical deployment, we envision our system operating in a distributed form, in which the components of the DNN are placed on graphics processing units (GPUs) or tensor processing units (TPUs) at each node, each instantiating the portion of the DNN assigned to it. The limited feedback would then serve to send the most recently updated weights of the preceding node to the next node in the feedback chain. Training could be carried out either offline or online, depending on the constraints of the deployed system. For offline training, designers should consider limitations such as the memory required for training and testing; the parameters obtained after training would then be used in the deployed system. For online training, power limitations must be considered if the system runs off the power grid or on a power-limited system such as a simultaneous wireless information and power transfer (SWIPT) system, as in [10].

Regardless of these constraints and limitations, our system is more adaptable than traditional codebook-based systems: it can learn from previously unseen instances of CSI as well as node-centric received signals, whether offline or online. The main difference is that online learning runs in real time and incurs a higher computational cost, whereas offline learning has a lower computational cost but is likely to be less robust than its online counterpart and requires periodic retraining to update its weights. The computational complexities involved are summarized in Table 2.

For traditional systems, the feedback overhead comes from the bits sent to index the beamformer in the codebook, i.e., and . For the DNN-based system, the corresponding overhead comes from the neural-quantized vectors, i.e., and . Although the latter overhead may be comparatively higher, it is offset by the computational benefit of not having to look up the corresponding beamformer in a codebook at each node, as well as by the practical benefit of generating beamformed signals that better match the real-time channel state information (CSI) of the system.

5. Conclusions

This paper has investigated a limited-feedback MIMO relay system using DNNs, which are implemented at each node in the feedback chain and map the analytic functions that make up our highly non-convex problem to DNN functions. The performance gap between the DNN scheme and its benchmark increases noticeably with SNR for each of the performance metrics considered. This indicates that, with lower-powered resources, the DNN system can provide performance similar to that of predesigned codebooks, while considerably outperforming the benchmark at higher SNRs. Our numerical results show that the proposed DNN system outperforms the GB scheme in terms of SER. Thus, the DNN approach is a viable substitute for codebook-based approaches.

For future work, our results open up several interesting opportunities for relay-centric DNN-based MIMO systems. Extending this work to multiple relays in a cooperative or multi-hop setup is one promising direction. Similarly, evaluating our system under different modulation schemes could offer further insights. Another route is to incorporate reinforcement learning at the different nodes to make the learning process more adaptable to environmental changes. Finally, whereas this work has investigated one-way channel estimation and quantization, a future extension could consider two-way channel estimation based on other machine learning techniques, such as conditional generative adversarial networks (cGANs), as discussed in [25].

Author Contributions

Conceptualization, K.B.O.-A. and K.-J.L.; methodology, K.B.O.-A.; validation, K.B.O.-A.; formal analysis, K.B.O.-A. and K.-J.L.; investigation, S.S.; resources, S.S.; writing—original draft preparation, K.B.O.-A.; writing—review and editing, B.D.A.-B. and P.A.; visualization, K.B.O.-A.; supervision, K.-J.L.; project administration, K.B.O.-A. and K.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by a Korea Research Institute for Defense Technology Planning and Advancement (KRIT) grant funded by the Korea government (DAPA: Defense Acquisition Program Administration) (KRIT-CT-22-047, Space-Layer Intelligent Communication Network Laboratory, 2022); in part by the National Research Foundation of Korea (NRF) through the Ministry of Science and Information and Communication Technology (MSIT), Korea Government (2022R1A2C2007089); in part by an Institute of Information & Communications Technology Planning & Evaluation (IITP)-ITRC (Information Technology Research Center) grant funded by the Korea Government (MSIT) (IITP-2025-RS-2024-00437886, 50) and the ICAN (ICT Challenge and Advanced Network of HRD) support program (IITP-2025-RS-2022-00156212); and in part by the research fund of Hanbat National University in 2022.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AF | Amplify-and-Forward |
| CE | Channel Estimation |
| CSI | Channel State Information |
| DF | Decode-and-Forward |
| DFT | Discrete Fourier Transform |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| FCL | Fully Connected Layers |
| FDD | Frequency-Division Duplexing |
| GB | Grassmannian Codebook |
| GD | Gradient Descent |
| GPU | Graphics Processing Unit |
| MFCL | Multiple Fully Connected Layers |
| MIMO | Multiple-Input Multiple-Output |
| ML | Machine Learning |
| MMSE | Minimum Mean-Squared Error |
| PMI | Precoding Matrix Index |
| ReLU | Rectified Linear Unit |
| SER | Symbol Error Rate |
| SGD | Stochastic Gradient Descent |
| SVD | Singular Value Decomposition |
| TPU | Tensor Processing Unit |

References

- Guo, J.; Wen, C.K.; Chen, M.; Jin, S. Environment Knowledge-Aided Massive MIMO Feedback Codebook Enhancement Using Artificial Intelligence. IEEE Trans. Commun. 2022, 70, 4527–4542.

- Liang, P.; Fan, J.; Shen, W.; Qin, Z.; Li, G.Y. Deep Learning and Compressive Sensing-Based CSI Feedback in FDD Massive MIMO Systems. IEEE Trans. Veh. Technol. 2020, 69, 9217–9222.

- Zhu, Z.; Yang, R.; Zhang, J.; Xu, S.; Li, C.; Huang, Y.; Yang, L. Sparse Bayesian Learning-Based Adaptive Codebook for Near-Field Channel Estimation. In Proceedings of the ICC 2024—IEEE International Conference on Communications, Denver, CO, USA, 9–13 June 2024; pp. 2366–2371.

- Yoon, S.G.; Lee, S.J. Improved Hierarchical Codebook-Based Channel Estimation for mmWave Massive MIMO Systems. IEEE Wirel. Commun. Lett. 2022, 11, 2095–2099.

- Fu, X.; Le Ruyet, D.; Visoz, R.; Ramireddy, V.; Grossmann, M.; Landmann, M.; Quiroga, W. A Tutorial on Downlink Precoder Selection Strategies for 3GPP MIMO Codebooks. IEEE Access 2023, 11, 138897–138922.

- Mabrouki, S.; Dayoub, I.; Li, Q.; Berbineau, M. Codebook Designs for Millimeter-Wave Communication Systems in Both Low- and High-Mobility: Achievements and Challenges. IEEE Access 2022, 10, 25786–25810.

- Schwarz, S.; Rupp, M.; Wesemann, S. Grassmannian Product Codebooks for Limited Feedback Massive MIMO with Two-Tier Precoding. IEEE J. Sel. Top. Signal Process. 2019, 13, 1119–1135.

- Jang, J.; Lee, H.; Hwang, S.; Ren, H.; Lee, I. Deep learning-based limited feedback designs for MIMO systems. IEEE Wirel. Commun. Lett. 2019, 9, 558–561.

- Ahmed, Y.N.; Fahmy, Y. On the complexity reduction of codebook search in FDD massive MIMO using hierarchical search. In Proceedings of the 2018 International Conference on Innovative Trends in Computer Engineering (ITCE), Aswan, Egypt, 19–21 February 2018; pp. 175–179.

- Ofori-Amanfo, K.B.; Asiedu, D.K.P.; Ahiadormey, R.K.; Lee, K.J. Multi-Hop MIMO Relaying Based on Simultaneous Wireless Information and Power Transfer. IEEE Access 2021, 9, 144857–144870.

- Lee, K.J.; Sung, H.; Park, E.; Lee, I. Joint Optimization for One and Two-Way MIMO AF Multiple-Relay Systems. IEEE Trans. Wirel. Commun. 2010, 9, 3671–3681.

- Song, C.; Lee, K.J.; Lee, I. Performance Analysis of MMSE-Based Amplify and Forward Spatial Multiplexing MIMO Relaying Systems. IEEE Trans. Commun. 2011, 59, 3452–3462.

- Zhang, Y.; El-Hajjar, M.; Yang, L.L. Adaptive Codebook-Based Channel Estimation in OFDM-Aided Hybrid Beamforming mmWave Systems. IEEE Open J. Commun. Soc. 2022, 3, 1553–1562.

- Wen, C.K.; Shih, W.T.; Jin, S. Deep learning for massive MIMO CSI feedback. IEEE Wirel. Commun. Lett. 2018, 7, 748–751.

- Brilhante, D.d.S.; Manjarres, J.C.; Moreira, R.; de Oliveira Veiga, L.; de Rezende, J.F.; Müller, F.; Klautau, A.; Leonel Mendes, L.; de Figueiredo, F.A.P. A literature survey on AI-aided beamforming and beam management for 5G and 6G systems. Sensors 2023, 23, 4359.

- Nada, A.E.R.; Mehana, A.M.H. A Comparative Study of PMI/RI Selection Schemes for LTE/LTEA Systems. IEEE Trans. Veh. Technol. 2018, 67, 1444–1453.

- Zhang, M.; Shafi, M.; Smith, P.J.; Dmochowski, P.A. Precoding Performance with Codebook Feedback in a MIMO-OFDM System. In Proceedings of the 2011 IEEE International Conference on Communications (ICC), Kyoto, Japan, 5–9 June 2011; pp. 1–6.

- Asiedu, D.K.P.; Lee, H.; Lee, K.J. Simultaneous Wireless Information and Power Transfer for Decode-and-Forward Multihop Relay Systems in Energy-Constrained IoT Networks. IEEE Internet Things J. 2019, 6, 9413–9426.

- Laue, H.E.A.; du Plessis, W.P. A Coherence-Based Algorithm for Optimizing Rank-1 Grassmannian Codebooks. IEEE Signal Process. Lett. 2017, 24, 823–827.

- Schwarz, S.; Tsiftsis, T. Codebook Training for Trellis-Based Hierarchical Grassmannian Classification. IEEE Wirel. Commun. Lett. 2022, 11, 636–640.

- Li, S.; Jia, H.; Kang, J. Robust Codebook Design Based on Unitary Rotation of Grassmannian Codebook. In Proceedings of the 2010 IEEE 72nd Vehicular Technology Conference—Fall, Ottawa, ON, Canada, 6–9 September 2010.

- Lim, S.; Shin, M.; Paik, J. Point Cloud Generation Using Deep Adversarial Local Features for Augmented and Mixed Reality Contents. IEEE Trans. Consum. Electron. 2022, 68, 69–76.

- Melgar, A.; de la Fuente, A.; Carro-Calvo, L.; Barquero-Pérez, Ó.; Morgado, E. Deep Neural Network: An Alternative to Traditional Channel Estimators in Massive MIMO Systems. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 657–671.

- Villardi, G.P.; Ishizu, K.; Kojima, F. Reducing the Codeword Search Complexity of FDD Moderately Large MIMO Beamforming Systems. IEEE Trans. Commun. 2019, 67, 273–287.

- Baek, S.; Moon, J.; Park, J.; Song, C.; Lee, I. Real-Time Machine Learning Methods for Two-Way End-to-End Wireless Communication Systems. IEEE Internet Things J. 2022, 9, 22983–22992.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).