Abstract

This paper investigates ultrahigh-dimensional feature screening for additive models with multivariate responses. We propose a nonparametric screening procedure based on the random vector correlation between each predictor and the multivariate response, and we establish its sure screening and ranking consistency properties under regularity conditions. We also develop an iterative sure independence screening algorithm for convenient and efficient implementation. Extensive finite-sample simulations and a real data example demonstrate the superiority of the proposed procedure over 58–100% of existing candidates. On average, the proposed method outperforms 79% of existing methods across all scenarios considered.

Keywords: sure independence screening; ultrahigh dimensional; additive model; multivariate response; random vector correlation

MSC: 62H20

1. Introduction

Many scientific fields work with ultrahigh-dimensional data, where the data dimensionality far exceeds the sample size. Examples include microarray analysis, tumor classification, and biomedical imaging. Analyzing such data presents challenges in terms of computational expediency, statistical accuracy, and algorithmic stability, as pointed out in Fan and Lv [1], Fan et al. [2], Li et al. [3] and Liu et al. [4].

Several methods have been proposed to tackle the challenges associated with handling ultrahigh-dimensional data. Fan and Lv [1] introduced the concept of sure screening, namely the property that all the important variables survive a variable screening procedure with probability tending to 1, and they proposed the sure independence screening (SIS) method based on the Pearson correlation for Gaussian predictors and responses in linear models. They also developed an iterative sure independence screening (ISIS) method to improve its effectiveness. Fan and Song [5] extended SIS and ISIS to generalized linear models by ranking the maximum marginal likelihood estimates. Zhu et al. [6] proposed a sure-independent ranking and screening (SIRS) procedure for selecting active predictors in multi-index models. Fan et al. [7] extended correlation learning to marginal nonparametric learning and introduced a nonparametric independence screening (NIS) procedure for sparse additive models, utilizing B-spline expansion for approximating the nonparametric projections. They also developed an iterative nonparametric independence screening (INIS) procedure to improve the performance of NIS in terms of false positive rates. Yuan and Billor [8] proposed a functional version of SIS (functional SIS) by applying the SIS method to functional regression models with a scalar response and functional predictors. Cui et al. [9] introduced a modified SIS (EVSIS) to select important predictors in ultrahigh-dimensional linear models with measurement errors.

The methods mentioned above are model-based. On the other hand, there are model-free feature screening procedures that rely on measures of independence. For example, Li et al. [10] developed a sure independence screening procedure based on distance correlation (DC-SIS). Shao and Zhang [11] employed martingale difference correlation to construct a screening procedure (MDC-SIS). Cui et al. [12] proposed a screening method (MV-SIS) using a mean–variance index for ultrahigh-dimensional discriminant analysis. Chen and Lu [13] proposed a quantile-composited feature screening (QCS) procedure for ultrahigh-dimensional grouped data by transforming the continuous predictor to a Bernoulli variable. Liu et al. [14] introduced a screening method based on a generalized Jaccard coefficient (GJAC-SIS) for ultrahigh-dimensional survival data. Sang and Dang [15] utilized the Gini distance correlation between a group of covariates and the response to establish a grouped screening method for discriminant analysis in ultrahigh-dimensional data. Wu and Cui [16] developed feature screening procedures on the basis of the Hellinger distance (HD-SIS and AHD-SIS), which are applicable to both discrete and continuous response variables. Zhong et al. [17] offered a groupwise feature screening procedure via the semi-distance correlation for ultrahigh-dimensional classification problems. Tian et al. [18] proposed a conditional mean independence screening procedure via variations in the conditional mean.

Of the screening methods mentioned earlier, only DC-SIS can handle multivariate responses. However, there is a growing need for ultrahigh-dimensional feature selection with multivariate responses in applications such as pathway analysis. If we overlook the correlation among the components of the multivariate response and apply the screening procedure to each component separately, we may miss some important predictors. To address this, Li et al. [3] developed a projection screening procedure to select variables for linear models with multivariate responses and ultrahigh-dimensional covariates. Using the generalized correlation between each predictor and the multivariate response, Liu et al. [4] proposed a screening method for ultrahigh-dimensional additive models (GCPS and IGCPS). Li et al. [19] generalized the martingale difference correlation (GMDC) to measure the conditional mean independence and conditional quantile independence, and they utilized it as a marginal utility to develop high-dimensional variable screening (GMDC-SIS and quantile GMDC-SIS) procedures.

When a priori information about the model structure is lacking, a more flexible class of nonparametric models, such as the additive model, can be used. Some studies on additive models with multivariate responses are as follows. Liu et al. [20] proposed a novel approach for multivariate additive models by treating the coefficients in the spline expansions as a third-order tensor and combined dimension reduction with penalized variable selection to deal with ultrahigh dimensionality. They applied the proposed method to a breast cancer study and selected several important genes that have significant associations between the measured genome copy numbers and the level of gene transcripts. Desai et al. [21] introduced a new method for the simultaneous selection and estimation of multivariate sparse additive models with correlated errors. The method was utilized to investigate the protein–mRNA expression levels, and it helped to explore and establish nonlinear mRNA–protein associations.

Therefore, in this study, we focus on an additive model for sparse ultrahigh-dimensional data with multivariate responses. A nonparametric screening procedure is proposed to measure the relationship between the multivariate response and each predictor simultaneously in the additive model. In particular, we obtain a normalized B-spline basis for each predictor and then compute the random vector correlation between the multivariate response and this basis. The predictors are then ranked based on the random vector correlation. The proposed method has sure screening and ranking consistency properties under some regularity conditions. Furthermore, because a marginal method may select unimportant predictors that are highly correlated with important ones, an iterative sure independence screening method is also developed to improve the finite-sample performance.

The remainder of this paper is organized as follows. In Section 2, we propose a sure independence screening procedure based on the random vector correlation (RVC-SIS) for sparse additive models with multivariate responses and study its theoretical properties. Section 3 presents an iterative sure independence screening (RVC-ISIS) procedure to enhance the performance of RVC-SIS. In Section 4, we conduct numerical studies to demonstrate the new procedure’s finite-sample performance in comparison to some existing methods. Section 5 provides an application example. Finally, we discuss the performance and limitations of the proposed method in Section 6 and conclude in Section 7. The technical proofs are presented in Appendix A.

2. Sure Independence Screening Using Random Vector Correlation

Suppose is from the following additive model:

where is a predictor vector, denotes the unknown vector functions, is the random error with the zero mean, and is a q-vector intercept term. Note that is a nonparametric smoothing functions; we use a cubic B-spline parameterization method to approximate them. Specifically, let be a normalized B-spline basis with , where is the number of the basis function, and is the sup norm. Then, can be well approximated by

with and , which motivates us to utilize the random vector correlation (RVC hereafter) defined by Escoufier [22] as a marginal utility screening index:

where is the population covariance matrix between and , is the square covariance matrix of and and the squared covariance matrix of , and denotes the trace operator. Intuitively, if and are independent, then . Otherwise, would be positive. This desired property allows us to use to propose a feature screening method for additive models with multivariate responses.
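For orientation, the additive model and the RV coefficient of Escoufier [22] can be written in the following form. This is only a sketch in assumed notation: the symbols $\mathbf{Y}$, $X_j$, $\mathbf{m}_j$, $\boldsymbol{\alpha}$, $\boldsymbol{\varepsilon}$, $\mathbf{B}_j$ (the vector of basis functions evaluated at $X_j$) and $\omega_j$ are illustrative choices and may differ from the symbols used elsewhere in this paper.

```latex
\mathbf{Y} = \boldsymbol{\alpha} + \sum_{j=1}^{p} \mathbf{m}_j(X_j) + \boldsymbol{\varepsilon},
\qquad
\omega_j = \mathrm{RV}(\mathbf{B}_j, \mathbf{Y})
= \frac{\operatorname{tr}\left(\Sigma_{\mathbf{B}_j\mathbf{Y}}\,\Sigma_{\mathbf{Y}\mathbf{B}_j}\right)}
       {\sqrt{\operatorname{tr}\left(\Sigma_{\mathbf{B}_j\mathbf{B}_j}^{2}\right)\,
              \operatorname{tr}\left(\Sigma_{\mathbf{Y}\mathbf{Y}}^{2}\right)}}
```

The numerator equals the squared Frobenius norm of the cross-covariance matrix, so the index vanishes exactly when every basis function is uncorrelated with every response component, and the Cauchy–Schwarz inequality keeps it within $[0, 1]$.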

Suppose that and , are the samples from and , respectively. Then, the sample version of is . An unbiased estimator of is given by

where and are

with and . The sample RVC between and is defined by

Thus, a sure independence screening procedure based on the random vector correlation (RVC-SIS) can be carried out according to .
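The marginal utility described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in this paper: it uses plug-in sample covariances rather than the unbiased U-statistic estimators, it assumes clamped knots on the predictor's range, and the names `bspline_basis`, `rv_coefficient`, and `marginal_utility` are hypothetical.

```python
import math

def bspline_basis(x, knots, degree=3):
    """All B-spline basis values at x (Cox-de Boor), for knots[0] <= x < knots[-1]."""
    # Degree 0: indicator of the half-open knot span containing x.
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0
             for i in range(len(knots) - 1)]
    for k in range(1, degree + 1):
        raised = []
        for i in range(len(knots) - k - 1):
            left_width = knots[i + k] - knots[i]
            right_width = knots[i + k + 1] - knots[i + 1]
            value = 0.0
            if left_width > 0:   # skip 0/0 terms created by repeated knots
                value += (x - knots[i]) / left_width * basis[i]
            if right_width > 0:
                value += (knots[i + k + 1] - x) / right_width * basis[i + 1]
            raised.append(value)
        basis = raised
    return basis

def center_columns(rows):
    """Subtract the column means from an n-by-m list of rows."""
    n, m = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(m)]
    return [[r[j] - means[j] for j in range(m)] for r in rows]

def cross_cov_frobenius_sq(a, b):
    """Squared Frobenius norm of the sample cross-covariance of centred a and b.

    This equals tr(S_ab S_ba); with a == b it equals tr(S_aa^2).
    """
    n = len(a)
    total = 0.0
    for j in range(len(a[0])):
        for k in range(len(b[0])):
            s = sum(a[i][j] * b[i][k] for i in range(n)) / (n - 1)
            total += s * s
    return total

def rv_coefficient(x_rows, y_rows):
    """Plug-in RV coefficient between two samples stored as lists of rows."""
    xc, yc = center_columns(x_rows), center_columns(y_rows)
    num = cross_cov_frobenius_sq(xc, yc)
    den = math.sqrt(cross_cov_frobenius_sq(xc, xc) * cross_cov_frobenius_sq(yc, yc))
    return num / den if den > 0 else 0.0

def marginal_utility(x_values, y_rows, knots):
    """RV coefficient between the spline basis of one predictor and the response."""
    basis_rows = [bspline_basis(v, knots) for v in x_values]
    return rv_coefficient(basis_rows, y_rows)

# Toy data (assumed for illustration): one predictor, q = 2 response components.
knots = [0.0] * 4 + [0.25, 0.5, 0.75] + [1.0] * 4
x = [i / 40 for i in range(40)]
y = [[math.sin(2 * math.pi * v), v * v] for v in x]
print(marginal_utility(x, y, knots))
```

Because the RV coefficient needs only traces of covariance products, no matrix inversion is involved, so the fact that the B-spline basis sums to one (and hence has a singular covariance matrix) causes no difficulty in this sketch.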

To study the sure screening property of the RVC-SIS procedure, we define the subset of the truly important predictors as

The following conditions are imposed to facilitate the proof of the properties:

- (C1) The distribution of is absolutely continuous, and its density is bounded away from zero and infinity on , where a and b are finite real numbers.

- (C2) The nonparametric components , , belong to , where the rth derivative, , exists and satisfies the Lipschitz condition with exponent : for some positive constant K. Here, r is a non-negative integer and such that .

- (C3) is bounded: .

- (C4) The random error vector satisfies for all .

- (C5) for some and .

- (C6) There exist and such that and . Here, , , and are given in Fact 1 and Fact 3 of [7].

Based on Condition (C1), we can infer that the marginal density of is bounded on the interval , away from zero and infinity. The Lipschitz Condition (C2) requires the function to be sufficiently smooth to determine the convergence rate for its spline estimates. Condition (C4) controls the tail distribution of the random error vector to ensure the sure screening property. Condition (C5) requires that the signal of the important predictors is not too weak. Condition (C6) is imposed to show the good approximation of relative to . Together with Conditions (C4) and (C6), Condition (C3) ensures uniform control in the probability inequality. These conditions are given in light of Li et al. [3], Liu et al. [4], Fan et al. [7] and the references therein.

Note that it will be shown that

under the conditions aforementioned. We thus select a set of predictors

from a theoretical perspective. In addition, there exists an efficient way to choose a subset of variables at a practical application level. To be specific, we sort in descending order and pick out the first and largest to form , where denotes the integer part of a.
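The practical selection rule above, sorting the utilities and keeping the largest ones, can be sketched as follows. The function names are hypothetical, and the default model size, the integer part of n / log(n), is a rule of thumb that is common in the SIS literature and is used here as an assumption rather than as the size prescribed in this paper.

```python
import math

def default_model_size(n):
    """Assumed rule of thumb: the integer part of n / log(n)."""
    return int(n / math.log(n))

def select_top(utilities, d):
    """Indices of the d largest utilities; ties are broken by the smaller index."""
    order = sorted(range(len(utilities)), key=lambda j: (-utilities[j], j))
    return order[:d]

# Toy utilities for five predictors (illustrative values only).
utilities = [0.10, 0.90, 0.50, 0.90, 0.05]
print(select_top(utilities, 2))
print(default_model_size(200))
```

Keeping the model size as an explicit argument makes it easy to compare the hard-threshold rule with a data-driven threshold.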

Theorem 1

(Sure Screening Property). Under Conditions (C1)–(C4), for and , there exist some positive constants and such that

Along with Conditions (C5)–(C6), we have that

where is the cardinality of .

Theorem 1 states that the proposed procedure is capable of handling the NP-dimensionality . To balance the two terms on the right side of (1), we have . As a result, (1) can be expressed as

This implies that we can handle the NP-dimensionality , indicating that the admissible dimensionality depends on the minimum true signal strength and the number of basis functions.

The proposed procedure enjoys the ranking consistency property.

Theorem 2

(Ranking Consistency Property). If Conditions (C1)–(C6) hold, then we have

Compared to the sure screening property, Theorem 2 demonstrates a stronger theoretical result. It indicates, with overwhelming probability, that the truly active predictors tend to have larger magnitudes of than the inactive ones. In other words, the active predictors can be ranked at the top by our screening method.

3. Iterative Sure Independence Screening Using Random Vector Correlation

Three main challenges identified by Fan and Lv [1] may also arise with RVC-SIS. Firstly, RVC-SIS may prioritize unimportant predictors that are highly correlated with important predictors over other important predictors that are relatively weakly related to the response. Secondly, RVC-SIS may fail to select an important predictor that is marginally uncorrelated but jointly correlated with the response, leading to its exclusion from the estimated model. Finally, all the important predictors may not be screened out by RVC-SIS due to the collinearity among predictors. To address these three challenges, we use the joint information of the covariates more comprehensively in variable selection rather than solely relying on marginal information, to develop the RVC-ISIS method that is summarized in Algorithm 1.

Algorithm 1.

RVC-ISIS algorithm.

RVC-ISIS focuses on Step 3, which operates in two ways: Firstly, when an active variable is correlated with other active variables but marginally independent of the response, Step 3 helps make it relevant to the response, and then, it becomes marginally detectable. Secondly, when many irrelevant variables are highly correlated with the active variables, Step 3 utilizes the correlated active variables selected in Step 1 to make the rest of the active variables easier to detect, rather than detecting the irrelevant variables.
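The mechanism described above can be illustrated with a generic residual-based iterative screening loop in the style of the DC-ISIS of Zhong and Zhu [23]. This is a sketch under assumptions and is not a transcription of Algorithm 1: the predictors are assumed to be centred, the step sizes are user-supplied, the utility is passed in as a function, and the demo uses the absolute Pearson correlation with a scalar response as a stand-in utility. All function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def orthonormal_basis(vectors, tol=1e-10):
    """Gram-Schmidt basis for the span of the given columns."""
    basis = []
    for v in vectors:
        w = residual(v, basis)
        norm = dot(w, w) ** 0.5
        if norm > tol:  # drop columns already in the span
            basis.append([wi / norm for wi in w])
    return basis

def residual(v, basis):
    """Part of v that is orthogonal to every vector in an orthonormal basis."""
    w = list(v)
    for q in basis:
        c = dot(w, q)
        w = [wi - c * qi for wi, qi in zip(w, q)]
    return w

def iterative_screen(columns, response, utility, step_sizes):
    """Select predictors in passes, residualising on those already selected."""
    selected = []
    for size in step_sizes:
        basis = orthonormal_basis([columns[j] for j in selected])
        remaining = [j for j in range(len(columns)) if j not in selected]
        scores = {j: utility(residual(columns[j], basis), response)
                  for j in remaining}
        best = sorted(remaining, key=lambda j: (-scores[j], j))[:size]
        selected.extend(best)
    return selected

def abs_corr(col, y):
    """Stand-in utility: absolute sample correlation with a scalar response."""
    n = len(col)
    mx, my = sum(col) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(col, y))
    sxx = sum((a - mx) ** 2 for a in col)
    syy = sum((b - my) ** 2 for b in y)
    return abs(sxy) / (sxx * syy) ** 0.5 if sxx > 0 and syy > 0 else 0.0

# Toy design: predictor 1 is inactive but correlated with active predictor 0,
# while active predictor 2 has a weaker marginal signal.
a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, 1, -1, -1, 1, 1, -1, -1]
c = [1, -1, 1, -1, 1, -1, 1, -1]
columns = [a, [ai + 0.5 * ci for ai, ci in zip(a, c)], b]
y = [3 * ai + bi for ai, bi in zip(a, b)]
print(iterative_screen(columns, y, abs_corr, [1, 1]))
```

In this toy design, a purely marginal ranking places the correlated inactive predictor above the weaker active one. After the first selected predictor is projected out, the inactive predictor's residual carries no signal, which is the effect the paragraph above attributes to Step 3.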

4. Numerical Studies

In this section, we evaluate the finite-sample performance of our proposed screening procedure through a series of simulation studies and a real data example. We compare its performance with Naive-SIS1, Naive-ISIS1, Naive-SIS2, Naive-ISIS2, PS, and IPS in Li et al. [3]; GCPS and IGCPS in [4]; DC-SIS in Li et al. [10]; and DC-ISIS in Zhong and Zhu [23]. Our simulation studies are conducted using R version 4.1.2. We set the total number of predictors as and the sample size as . For our proposed method, we take as the number of internal knots. We adopt the criteria from Liu et al. [24] to assess the performance of the proposed method:

- (i) : The average rank of in terms of the sorted list via the screening procedure based on 1000 replications. The higher the ranking of , the greater the probability of being selected.

- (ii) M: The minimum model size to include all active predictors. In other words, M represents the largest rank of the true predictors: , where is the true model. We report the , and quantiles of M from 1000 repetitions.

- (iii) : The proportion of important predictor being selected for a given model size in the 1000 replications.

- (iv) : The proportion of all active predictors being selected into the submodel with size over 1000 simulations.
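The four criteria can be computed from the utility vectors of the replications as in the following sketch. The function names and the toy inputs are hypothetical; rank 1 denotes the largest utility, and the choice of which quantiles of M to report is left to the caller.

```python
def ranks_from_utilities(utilities):
    """Rank of each predictor, where rank 1 is the largest utility."""
    order = sorted(range(len(utilities)), key=lambda j: (-utilities[j], j))
    ranks = [0] * len(utilities)
    for position, j in enumerate(order, start=1):
        ranks[j] = position
    return ranks

def summarize(replications, active, d):
    """Summaries over replications for active indices and model size d.

    Returns the average rank of each active predictor, the proportion of
    replications in which each is selected, the proportion in which all are
    selected, and the sorted minimum model sizes M.
    """
    n_rep = len(replications)
    all_ranks = [ranks_from_utilities(u) for u in replications]
    avg_rank = {j: sum(r[j] for r in all_ranks) / n_rep for j in active}
    p_each = {j: sum(r[j] <= d for r in all_ranks) / n_rep for j in active}
    p_all = sum(all(r[j] <= d for j in active) for r in all_ranks) / n_rep
    min_sizes = sorted(max(r[j] for j in active) for r in all_ranks)
    return avg_rank, p_each, p_all, min_sizes

# Two toy replications with four predictors; predictors 0 and 2 are active.
replications = [[0.9, 0.1, 0.8, 0.2], [0.2, 0.9, 0.8, 0.1]]
print(summarize(replications, active=[0, 2], d=2))
```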

Example 1.

Following Li et al. [3], we consider a linear model with multivariate responses as follows.

The dimension of the response is . The truly active predictors set is . is drawn from a multivariate normal distribution , where Σ is a covariance matrix with elements . The nonzero coefficient is given by where is a binary random variable with and . The random error term follows the standard normal distribution .

Example 2.

We apply the screening procedures to the additive model when the components of the multivariate response are strongly correlated:

with

and

The predictor is generated by

where and U are i.i.d. U(0,1). The truly active predictors set is . The random error term is from the standard normal distribution .

Example 3.

The following model is taken from Fan and Lv [1] and Li et al. [3].

follows a multivariate normal distribution . The covariance matrix Σ satisfies and the off-diagonal elements , except for . The random error term follows a standard normal distribution . The set of truly active predictors is .

Example 4.

We adopt the simulation model in [1,3] as follows.

The covariance matrix Σ is the same as in Example 3, except that . is uncorrelated with any . The random error term is from the standard normal distribution .

In Example 1, the model is linear, and the response is multivariate. All methods perform well under this scenario, as shown in Tables 1–3.

Table 1.

The average rank of the true predictor for Examples 1–4.

Table 2.

The selecting rate of the true predictors and for Examples 1–4.

Table 3.

The quantiles of for Examples 1–4.

In Example 2, the model is nonlinear. Each component of the multivariate response has the same nonlinear function of the active predictors. Only the corresponding coefficients differ. It is easy to check, in this example, that the components of the response are strongly correlated. Note that the smaller the rank of a predictor, the easier it is to be selected. Thus, we can conclude from Table 1 that our proposed methods, RVC-SIS and RVC-ISIS, outperform the remaining ones in terms of the rank of the important predictors. Naive-SIS1, Naive-SIS2, and PS perform badly in selecting the important predictors –. Naive-ISIS1 and Naive-ISIS2 still fail to detect –, while IPS still performs badly in choosing due to the large rank. The rank of active variables – in GCPS is too high to be chosen, while the rank of in IGCPS is low enough to be selected. Although DC-SIS behaves badly in selecting in this context, DC-ISIS dramatically improves its performance. From Table 2, we can see that DC-ISIS, RVC-SIS, and RVC-ISIS can pick up – with an overwhelming probability. Naive-SIS1, Naive-SIS2, and PS select these predictors with little chance. Naive-ISIS1 and Naive-ISIS2 still fail to detect –, while IPS cannot identify . GCPS selects with overwhelming probability, while IGCPS chooses – with a large probability. Table 3 demonstrates that only DC-ISIS, RVC-SIS, and RVC-ISIS include all important predictors – with a low M, indicating that the four predictors have a higher priority of being selected by these screening methods and thus are ranked at the top.

In Example 3, it is evident that predictor is marginally uncorrelated with at the population level, but it is important to . Table 1 shows that Naive-SIS1, Naive-SIS2, PS, DC-SIS, GCPS, and RVC-SIS consistently identify with a larger rank, while the proposed iterative screening procedures of these methods screen out with a smaller rank. This indicates that the iterative screening procedure can effectively tackle the second challenge that is mentioned at the beginning of Section 3. Table 2 demonstrates that Naive-SIS1, Naive-SIS2, PS, DC-SIS, GCPS, and RVC-SIS select with little probability, while their respective iterative screening procedures can pick it up with an overwhelming probability. It can be seen from Table 3 that the model size of Naive-SIS1, Naive-SIS2, PS, DC-SIS, GCPS, and RVC-SIS is consistently large due to the incapability of identifying .

In Example 4, it is worth noting that important predictor is again uncorrelated with the response , and has a very small correlation with response . In fact, variable has the same proportion of contributions to the response as noise does. Similar conclusions can be drawn from these tables due to the existence of . Furthermore, these screening methods have a lower priority of selecting than – due to the small contribution of to the response.

5. Application

To demonstrate the application of our proposed method, we used yeast cell-cycle gene expression data from Spellman et al. [25] and chromatin immunoprecipitation on chip (ChIP-chip) data from Lee et al. [26]. The yeast cell-cycle gene expression data consist of 542 genes from a factor-based experiment in which 18 mRNA levels are measured every 7 min for 119 min.

The chromatin immunoprecipitation on chip (ChIP-chip) data contain the binding information for 106 transcription factors (TFs), which are related to cell regulation. These two datasets are combined as the yeast cell-cycle dataset available in the R package spls. For further details about this dataset, please refer to Chun and Keles [27].

First, the data are randomly split into a training set of 300 and a test set of 242. We apply all screening methods on the training set to select the active predictors. To obtain the threshold in the screening procedures, we introduce auxiliary variables proposed by Zhu et al. [6]. That is, we independently generate auxiliary variables such that is independent of both and . Consider the -dimensional vector as the predictors and as the response. Then, we calculate for . Note that is truly inactive via artificial construction, and we can define a threshold

to select the important predictors. We repeated the random split 100 times to reduce the impact of any single split on the results and comparisons.
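The auxiliary-variable threshold can be sketched as follows. The sketch assumes that the auxiliary variables are independent standard normal draws and that the threshold is the largest utility among them, in the spirit of the soft-threshold rule of Zhu et al. [6]; the exact threshold used in this paper may differ, and the function names are hypothetical.

```python
import random

def augment_with_auxiliary(x_rows, d, seed=0):
    """Append d independent N(0, 1) auxiliary columns to each row of predictors."""
    rng = random.Random(seed)
    return [list(row) + [rng.gauss(0.0, 1.0) for _ in range(d)] for row in x_rows]

def select_by_auxiliary_threshold(utilities, p):
    """Keep real predictors whose utility exceeds every auxiliary utility.

    utilities has length p + d: the first p entries belong to the real
    predictors and the remaining d entries to the auxiliary variables.
    """
    threshold = max(utilities[p:])
    selected = [j for j in range(p) if utilities[j] > threshold]
    return threshold, selected

# Toy utilities: four real predictors followed by two auxiliary variables.
utilities = [0.80, 0.05, 0.40, 0.10, 0.20, 0.15]
print(select_by_auxiliary_threshold(utilities, p=4))
```

Because the auxiliary variables are inactive by construction, any real predictor that scores below all of them is indistinguishable from noise under this rule.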

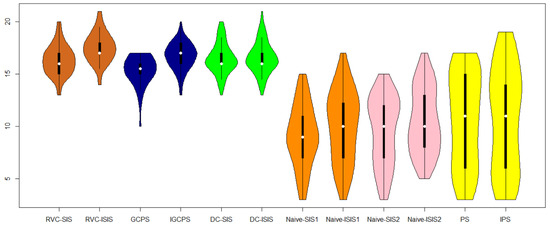

Note that there are 21 known and experimentally verified TFs related to the cell-cycle process, as stated in Wang et al. [28]. We then compare the number of confirmed TFs selected by each of the screening procedures mentioned earlier. The results are reported in Figure 1. From this figure, it can be concluded that RVC-ISIS performs best among all screening procedures, and RVC-SIS performs best among the non-iterative screening procedures.

Figure 1.

The number of confirmed TFs being selected by different screening methods, obtained from 100 random data partitions.

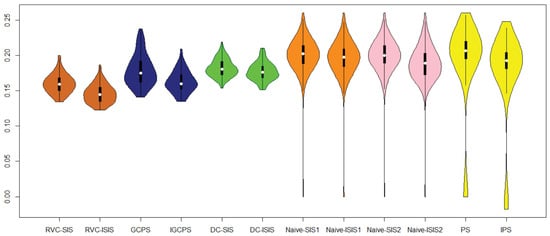

Furthermore, we used the test set to assess the performance of the screening procedures in terms of the mean squared error of the predictions, which can be found in Figure 2 and Table 4. It can be seen that the iterative screening procedures uniformly outperform their non-iterative counterparts.

Figure 2.

The mean squared errors of prediction based on 100 random partitions of the data.

Table 4.

Averaged mean squared errors (AMSEs) of the prediction over 100 random data partitions and the corresponding standard deviations of the screening procedures.

6. Discussion

Through extensive simulations and a real data example, we see that the proposed procedure outperforms 58–100% of the existing candidates, such as Naive-SIS1, Naive-ISIS1, Naive-SIS2, Naive-ISIS2, PS and IPS by Li et al. [3], GCPS and IGCPS by Liu et al. [4], DC-SIS by Li et al. [10], and DC-ISIS by Zhong and Zhu [23]. On average, the proposed method outperforms 79% of existing methods across both linear and nonlinear scenarios. In particular, the proposed method has an enormous advantage in nonlinear scenarios where the components of the multivariate response are strongly correlated, as in Example 2.

There are also some limitations in the proposed screening method. First, as Muthyala et al. [29] pointed out, although sure independence screening methods have proven particularly effective in the natural sciences and clinical studies, their widespread adoption has been limited by performance inefficiencies and the challenges posed by R-based or FORTRAN-based implementations, especially in modern computing environments with massive data. Faster and more efficient implementations using newer tools, such as PyTorch-based implementations, are necessary for the proposed RVC-SIS and RVC-ISIS algorithms. This is critical for projects such as chronic thromboembolic pulmonary hypertension studies [30], which involve ultrahigh-dimensional multimodal medical data, including radiomics features, genomics data, and laboratory omics data.

Second, this paper only considers sure independence screening for additive models with continuous multivariate responses. However, in clinical practice, such as the pulmonary thromboembolism study, the majority of clinical outcomes are of the binary or survival type. The proposed method and algorithm cannot be directly applied to scenarios with multivariate binary or multilevel categorical outcomes, or to scenarios with survival outcomes. However, it is relatively easy to adapt the proposed RVC-SIS and RVC-ISIS to other types of outcomes using routine methods from the related statistical fields.

Last but not least, we did not investigate the performance of the proposed method for skewed and heavy-tailed data [31]. Exploring interactions among predictors and detecting them is also beyond the scope of this paper. These could be interesting topics for future research.

7. Conclusions

This paper investigated a nonparametric screening method for sparse, ultrahigh-dimensional additive models with multivariate responses. We proved that RVC-SIS exhibits sure screening and ranking consistency properties under certain regularity conditions. In addition, we showed, through extensive simulation studies, that the proposed RVC-ISIS procedure effectively detects the truly important predictors even in the presence of complex correlations among predictors. The proposed method has great potential for applications in developing nonlinear models with both ultrahigh-dimensional predictors and multivariate responses, especially in the chronic thromboembolic pulmonary hypertension study, where there exist multi-organ linkage responses, such as right-sided heart failure in the heart, chronic thromboembolic pulmonary hypertension in the lungs, and cerebral thrombosis in the brain.

Author Contributions

Methodology, Y.C. and B.L.; Software, Y.C.; Validation, B.L.; Formal analysis, Y.C. and B.L.; Investigation, B.L.; Resources, B.L.; Writing—original draft, Y.C. and B.L.; Writing—review & editing, B.L.; Funding acquisition, B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Noncommunicable Chronic Diseases-National Science and Technology Major Project (No. 2023ZD0506600) and the Capital’s Funds for Health Improvement and Research (grant number: 2024-1G-4251).

Data Availability Statement

The original data presented in the study are publicly available in R package ‘spls’ (https://cran.r-project.org/web/packages/spls/index.html, accessed on 5 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Proof of Theorem 1.

We first demonstrate the consistency of the numerator of . It is easy to show that is a U-statistic of order four: that is,

where

with .

Rewrite the U-statistic as follows:

where M will be specified later. Accordingly, can be decomposed into two parts:

Evidently, and are unbiased estimators of and , respectively.

First, we demonstrate the consistency of . Note that is a U-statistic of order four. We can therefore conclude, based on Theorem 5.6.1.A of Serfling [32], that for any given . In light of the symmetry of U-statistics, it is straightforward to obtain

Next, we consider the consistency of . Notice that . Using the Cauchy–Schwarz inequality, we have . Together with Markov’s inequality, it is not difficult to show that for any . By choosing for , provided that n is sufficiently large and Conditions (C3)–(C4) hold. Meanwhile, we can confirm that the events satisfy . Otherwise, if holds for all , then we can conclude that . Therefore , which contradicts event . Under Conditions (C3)–(C4), for any , there exists a positive constant C such that . Consequently, , which implies that

Similarly, we can determine the convergence rate of the denominator. Furthermore, recalling Fact 3 in Fan et al. [7], we can show that . Hence, we obtain the convergence rate of , which is similar to the form of (A3). To be precise, . Let , where any and satisfies ; we have

To deal with the second part, we first show that . By invoking Condition (C5), for any , there exists such that . Under Conditions (C1), (C2), and (C6), we can conclude from Lemma 1 and Fact 3 in Fan et al. [7] that , which implies that provided that . Let us introduce the event . It is worth noting that . Therefore,

where the last inequality follows from (A3). □

Proof of Theorem 2.

Recall the definition of ; it is clear that for and for . Under Conditions (C1), (C2), (C5), and (C6), we can show that . Then, it is straightforward to verify

Using the result of Theorem 1 and Fatou’s Lemma, we can derive

The proof is now completed. □

References

- Fan, J.; Lv, J. Sure independence screening for ultrahigh-dimensional feature space. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2008, 70, 849–911.

- Fan, J.; Samworth, R.; Wu, Y. Ultrahigh-dimensional feature selection: Beyond the linear model. J. Mach. Learn. Res. 2009, 10, 2013–2038.

- Li, X.; Cheng, G.; Wang, L.; Lai, P.; Song, F. Ultrahigh-dimensional feature screening via projection. Comput. Stat. Data Anal. 2017, 114, 88–104.

- Liu, S.; Li, X.; Zhang, J. Ultrahigh-dimensional feature screening for additive model with multivariate response. J. Stat. Comput. Simul. 2020, 90, 775–799.

- Fan, J.; Song, R. Sure independence screening in generalized linear models with NP-dimensionality. Ann. Stat. 2010, 38, 3567–3604.

- Zhu, L.P.; Li, L.; Li, R.; Zhu, L.X. Model-free feature screening for ultrahigh-dimensional data. J. Am. Stat. Assoc. 2011, 106, 1464–1475.

- Fan, J.; Feng, Y.; Song, R. Nonparametric independence screening in sparse ultra-high-dimensional additive models. J. Am. Stat. Assoc. 2011, 106, 544–557.

- Yuan, Y.; Billor, N. Sure independent screening for functional regression model. Commun. Stat.-Simul. Comput. 2024, 1–20.

- Cui, H.; Zou, F.; Ling, L. Feature screening and error variance estimation for ultrahigh-dimensional linear model with measurement errors. Commun. Math. Stat. 2025, 13, 139–171.

- Li, R.; Zhong, W.; Zhu, L. Feature screening via distance correlation learning. J. Am. Stat. Assoc. 2012, 107, 1129–1139.

- Shao, X.; Zhang, J. Martingale difference correlation and its use in high-dimensional variable screening. J. Am. Stat. Assoc. 2014, 109, 1302–1318.

- Cui, H.; Li, R.; Zhong, W. Model-free feature screening for ultrahigh-dimensional discriminant analysis. J. Am. Stat. Assoc. 2015, 110, 630–641.

- Chen, S.; Lu, J. Quantile-Composited feature screening for ultrahigh-dimensional data. Mathematics 2023, 11, 2398.

- Liu, R.; Deng, G.; He, H. Generalized Jaccard feature screening for ultra-high dimensional survival data. AIMS Math. 2024, 9, 27607–27626.

- Sang, Y.; Dang, X. Grouped feature screening for ultrahigh-dimensional classification via Gini distance correlation. J. Multivar. Anal. 2024, 204, 105360.

- Wu, J.; Cui, H. Model-free feature screening based on Hellinger distance for ultrahigh-dimensional data. Stat. Pap. 2024, 65, 5903–5930.

- Zhong, W.; Li, Z.; Guo, W.; Cui, H. Semi-distance correlation and its applications. J. Am. Stat. Assoc. 2024, 119, 2919–2933.

- Tian, Z.; Lai, T.; Zhang, Z. Variation of conditional mean and its application in ultrahigh-dimensional feature screening. Commun. Stat.-Theory Methods 2025, 54, 352–382.

- Li, L.; Ke, C.; Yin, X.; Yu, Z. Generalized martingale difference divergence: Detecting conditional mean independence with applications in variable screening. Comput. Stat. Data Anal. 2023, 180, 107618.

- Liu, L.; Lian, H.; Huang, J. More efficient estimation of multivariate additive models based on tensor decomposition and penalization. J. Mach. Learn. Res. 2024, 25, 1–27.

- Desai, N.; Baladandayuthapani, V.; Shinohara, R.; Morris, J. Covariance Assisted Multivariate Penalized Additive Regression (CoMPAdRe). J. Comput. Graph. Stat. 2024, 1–10.

- Escoufier, Y. Le traitement des variables vectorielles. Biometrics 1973, 29, 751–760.

- Zhong, W.; Zhu, L. An iterative approach to distance correlation-based sure independence screening. J. Stat. Comput. Simul. 2015, 85, 2331–2345.

- Liu, J.; Li, R.; Wu, R. Feature selection for varying coefficient models with ultrahigh-dimensional covariates. J. Am. Stat. Assoc. 2014, 109, 266–274.

- Spellman, P.T.; Sherlock, G.; Zhang, M.Q.; Iyer, V.R.; Anders, K.; Eisen, M.B.; Brown, P.O.; Botstein, D.; Futcher, B. Comprehensive identification of cell cycle–regulated genes of the yeast Saccharomyces cerevisiae by microarray hybridization. Mol. Biol. Cell 1998, 9, 3273–3297.

- Lee, T.I.; Rinaldi, N.J.; Robert, F.; Odom, D.T.; Bar-Joseph, Z.; Gerber, G.K.; Hannett, N.M.; Harbison, C.T.; Thompson, C.M.; Young, R.A.; et al. Transcriptional regulatory networks in Saccharomyces cerevisiae. Science 2002, 298, 799–804.

- Chun, H.; Keles, S. Sparse partial least squares regression for simultaneous dimension reduction and variable selection. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2010, 72, 3–25.

- Wang, L.; Chen, G.; Li, H. Group SCAD regression analysis for microarray time course gene expression data. Bioinformatics 2007, 23, 1486–1494.

- Muthyala, M.; Sorourifar, F.; Paulson, J.A. TorchSISSO: A PyTorch-based implementation of the sure independence screening and sparsifying operator for efficient and interpretable model discovery. Digit. Chem. Eng. 2024, 13, 100198.

- Xie, W.; Yu, Y.; Huang, Q.; Yan, X.; Yang, Y.; Xiong, C.; Liu, Z.; Wan, J.; Gong, S.; Wang, L.; et al. Epidemiology and management patterns of chronic thromboembolic pulmonary hypertension in China: A systematic literature review and meta-analysis. Chin. Med. J. 2025, 138, 1000–1002.

- Song, Y.; Zhou, W.; Zhou, W.X. Large-scale inference of multivariate regression for heavy-tailed and asymmetric data. Stat. Sin. 2023, 33, 1831–1852.

- Serfling, R.J. Approximation Theorems of Mathematical Statistics; John Wiley & Sons: Hoboken, NJ, USA, 1980.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).