Review of GrabCut in Image Processing

Abstract

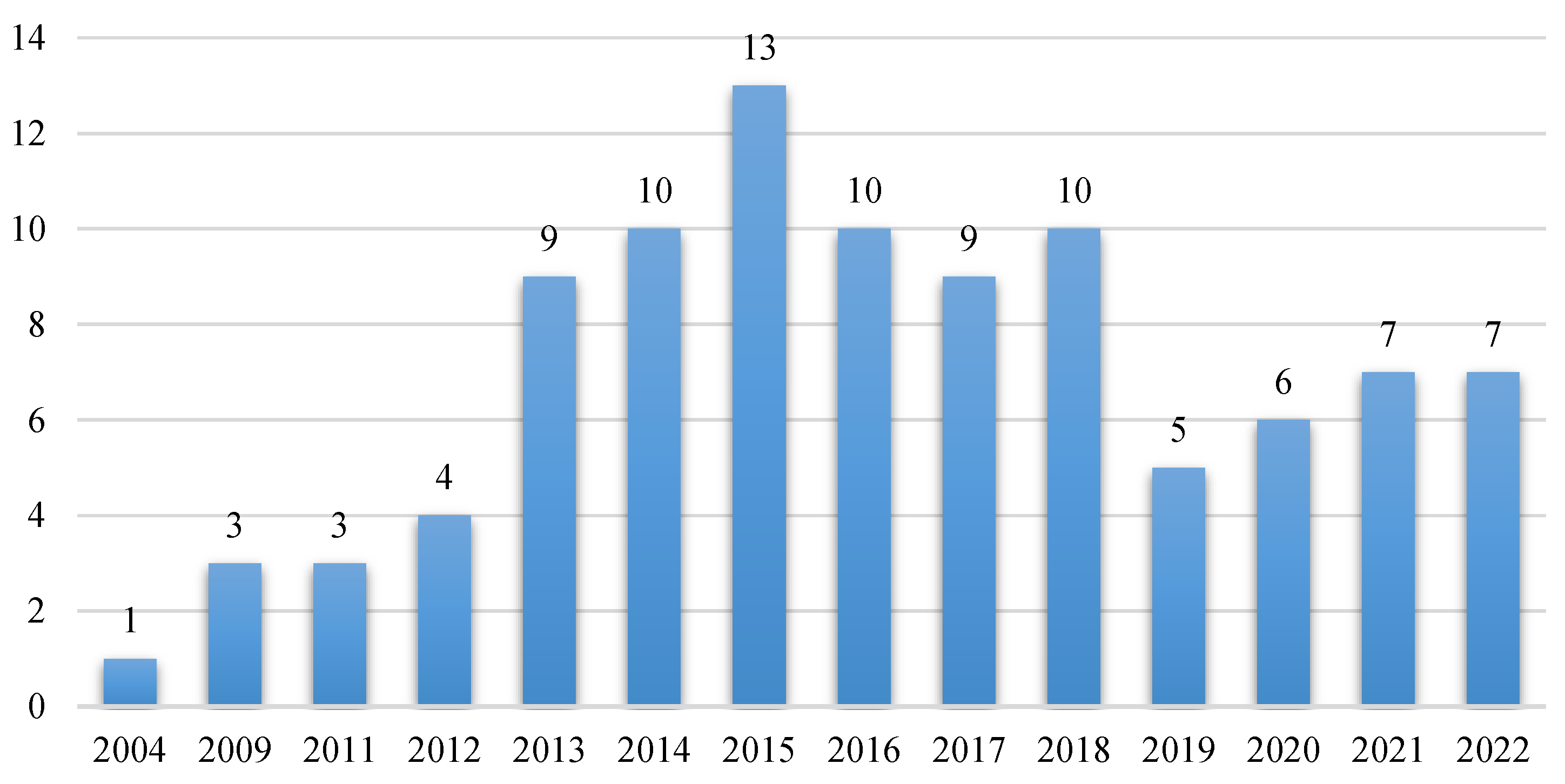

1. Introduction

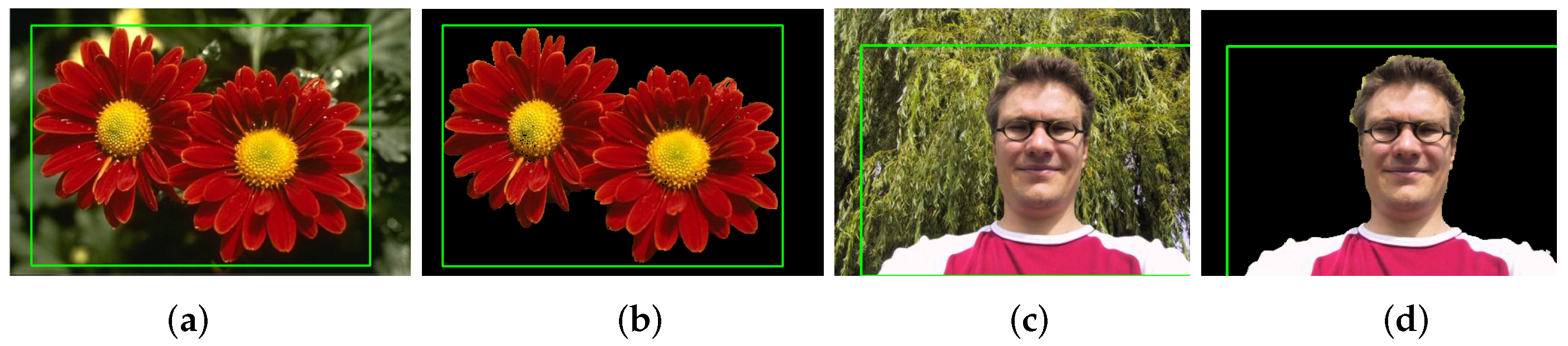

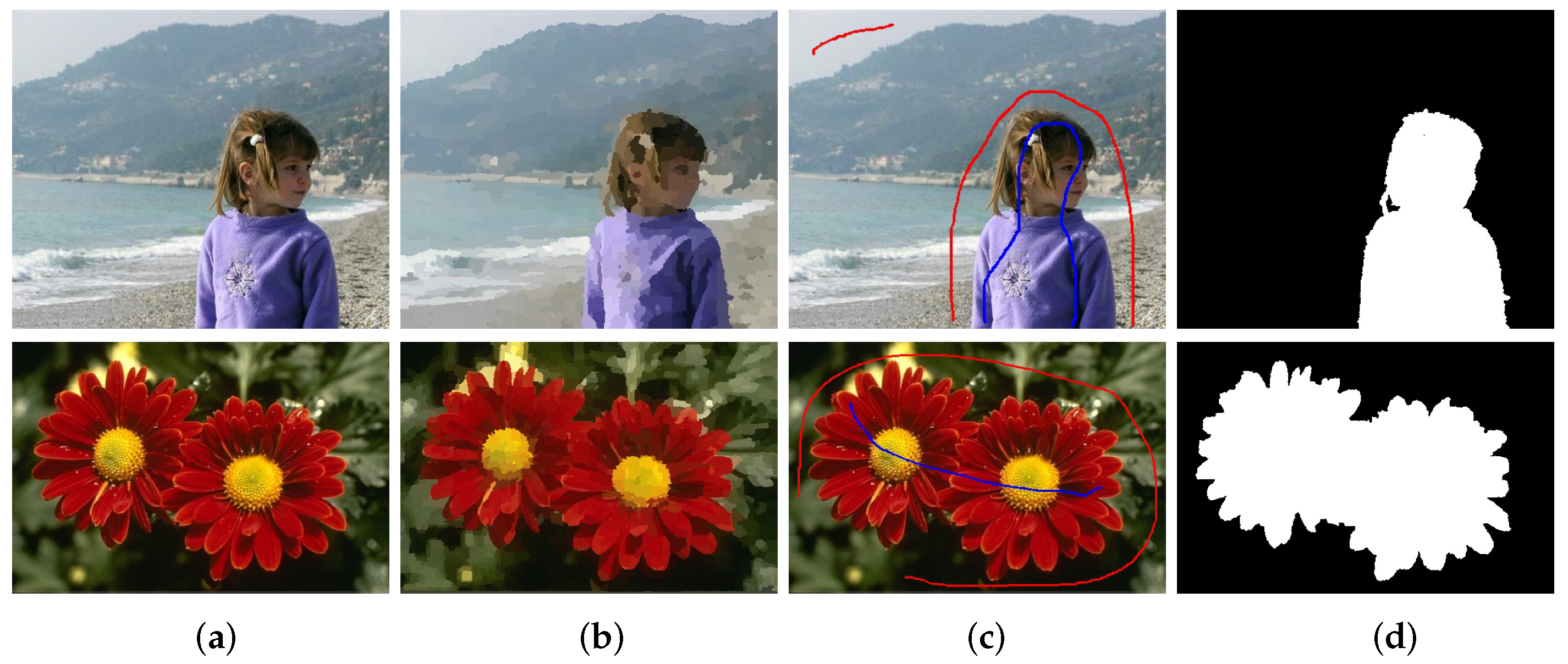

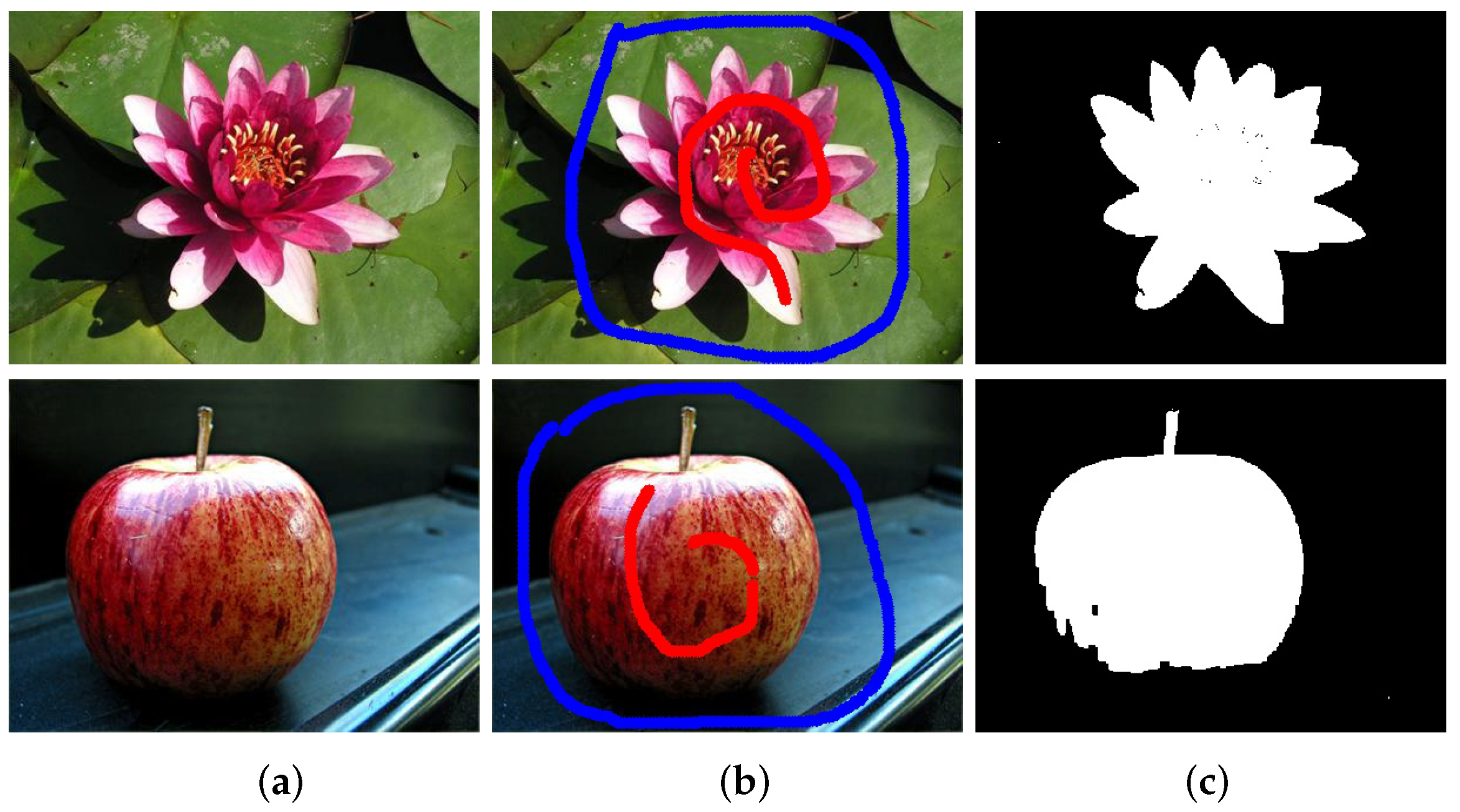

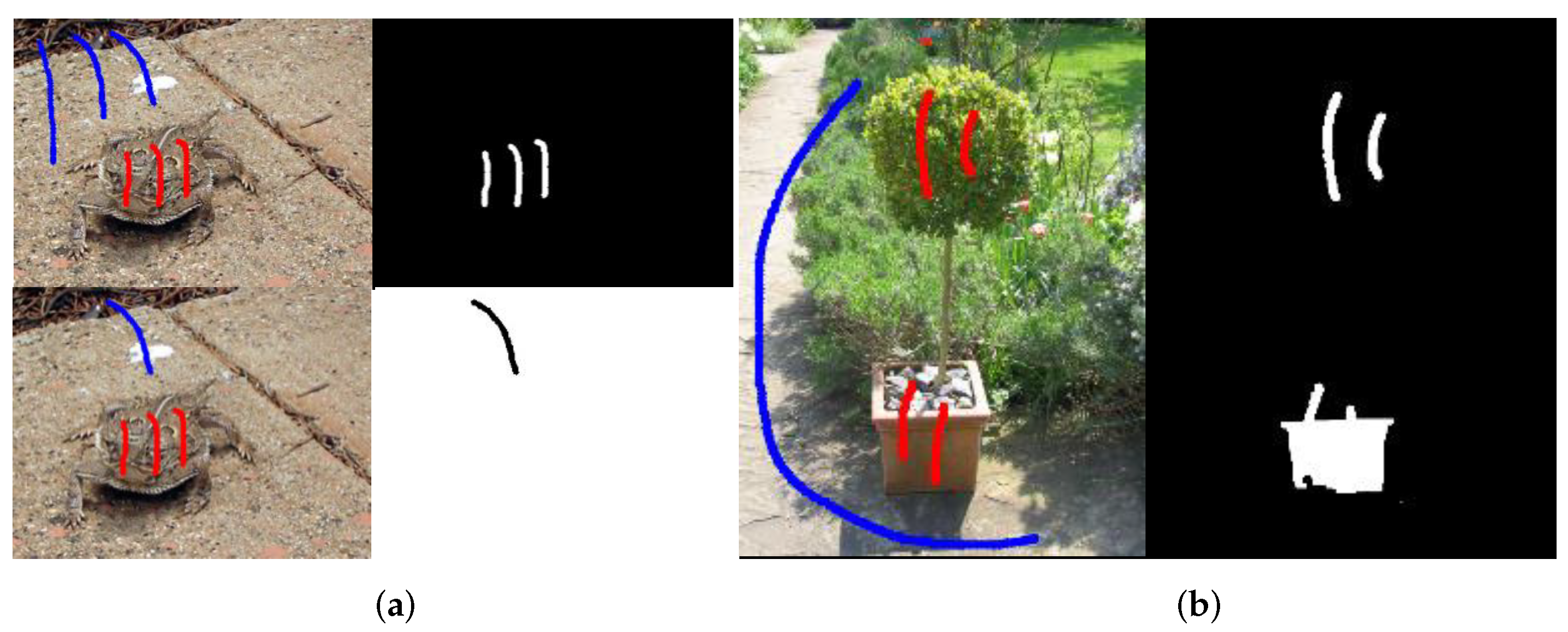

2. GrabCut Model

3. Improved GrabCut

3.1. GrabCut Based on Superpixel

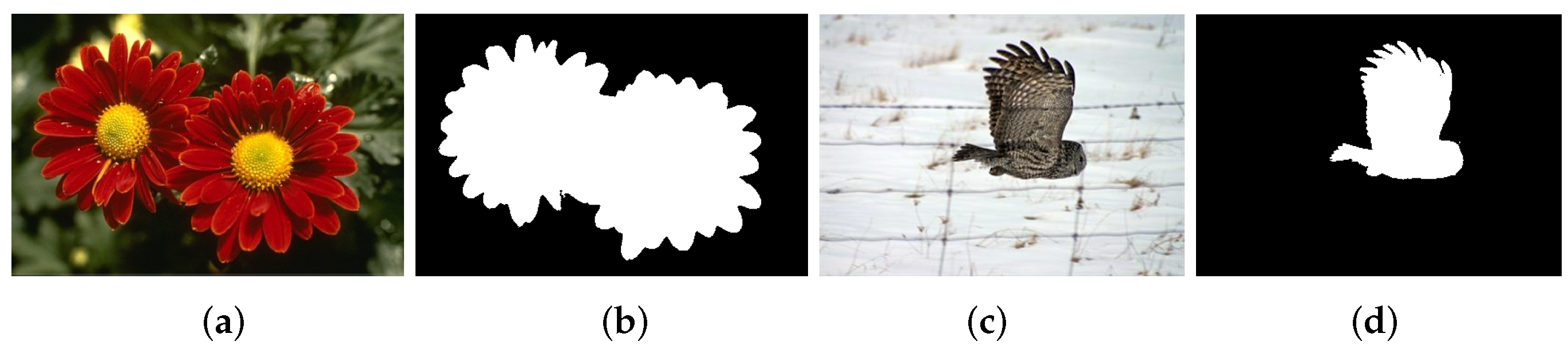

3.2. GrabCut Based on Salient Object Segmentation

3.3. GrabCut Based on Modified Energy Function

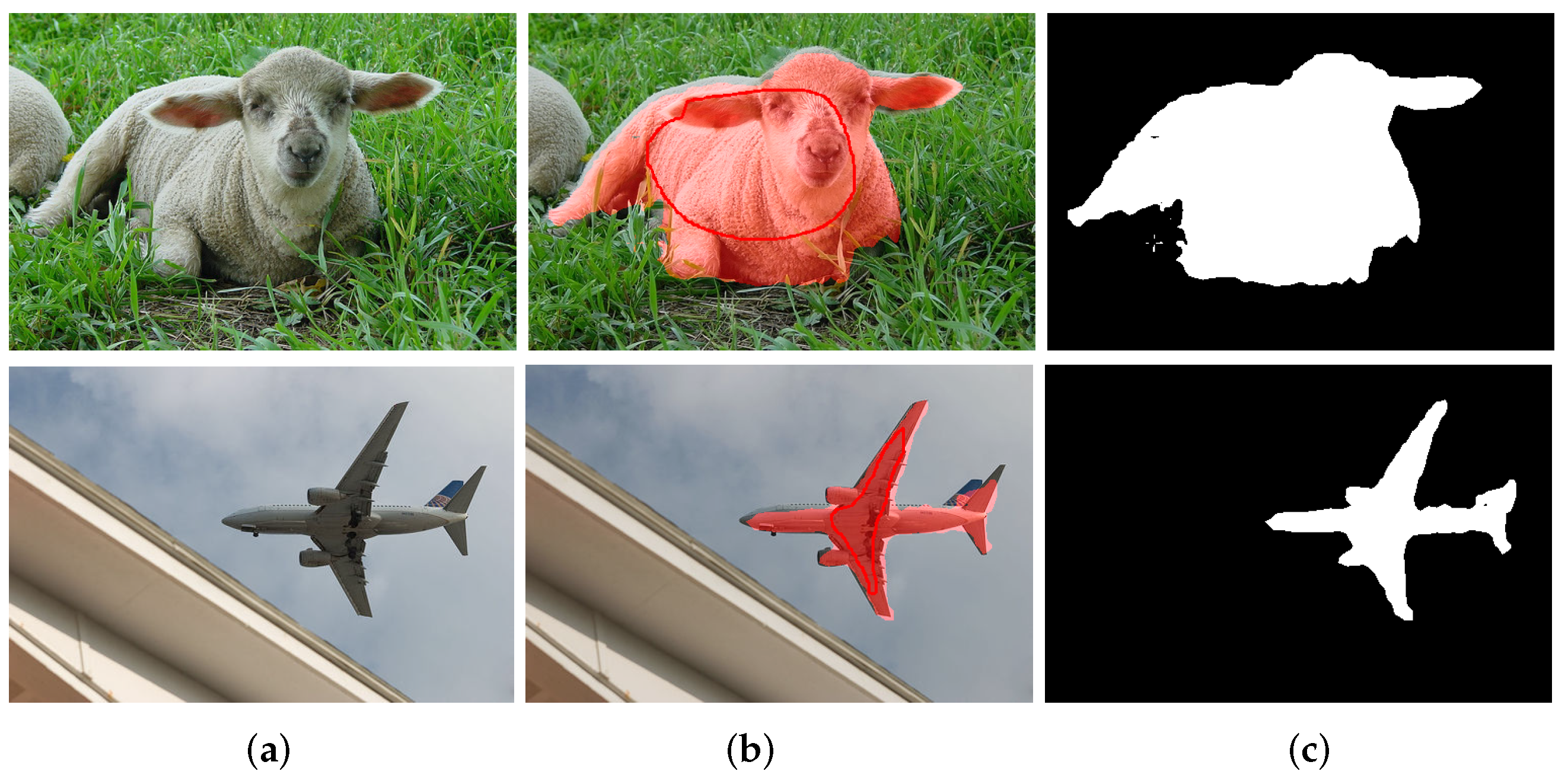

3.4. Non-Interactive GrabCut

3.5. Others

4. GrabCut Applications

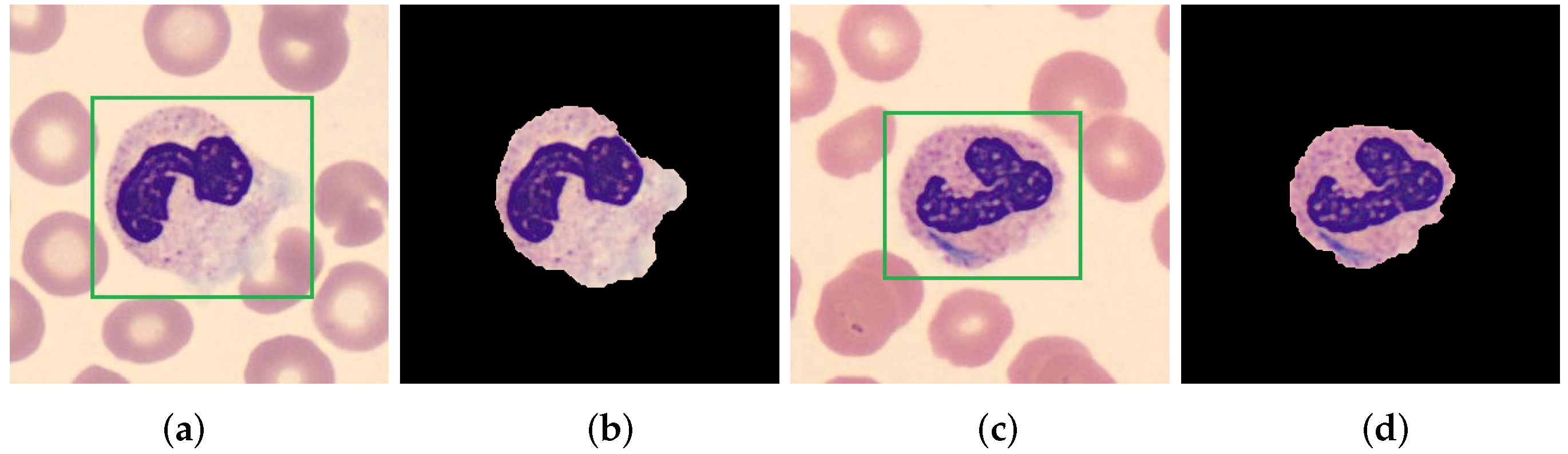

4.1. Medical Images

4.2. Non-Medical Images

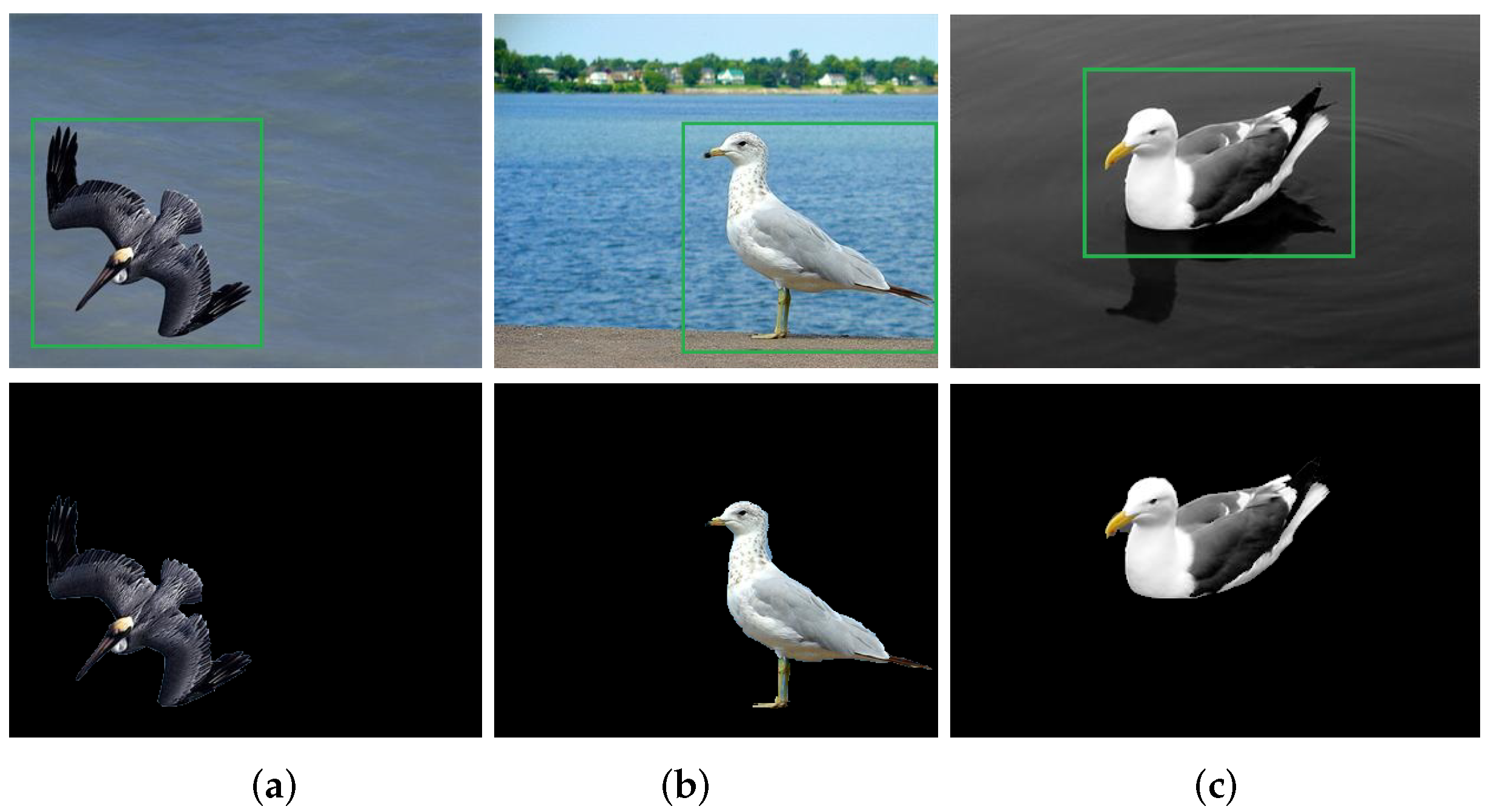

4.2.1. Applications in Object Detection and Recognition

4.2.2. Applications in Video Processing

4.2.3. Applications in Agriculture and Animal Husbandry

4.2.4. Applications in Human Body Images

4.2.5. Other Applications

5. Discussion

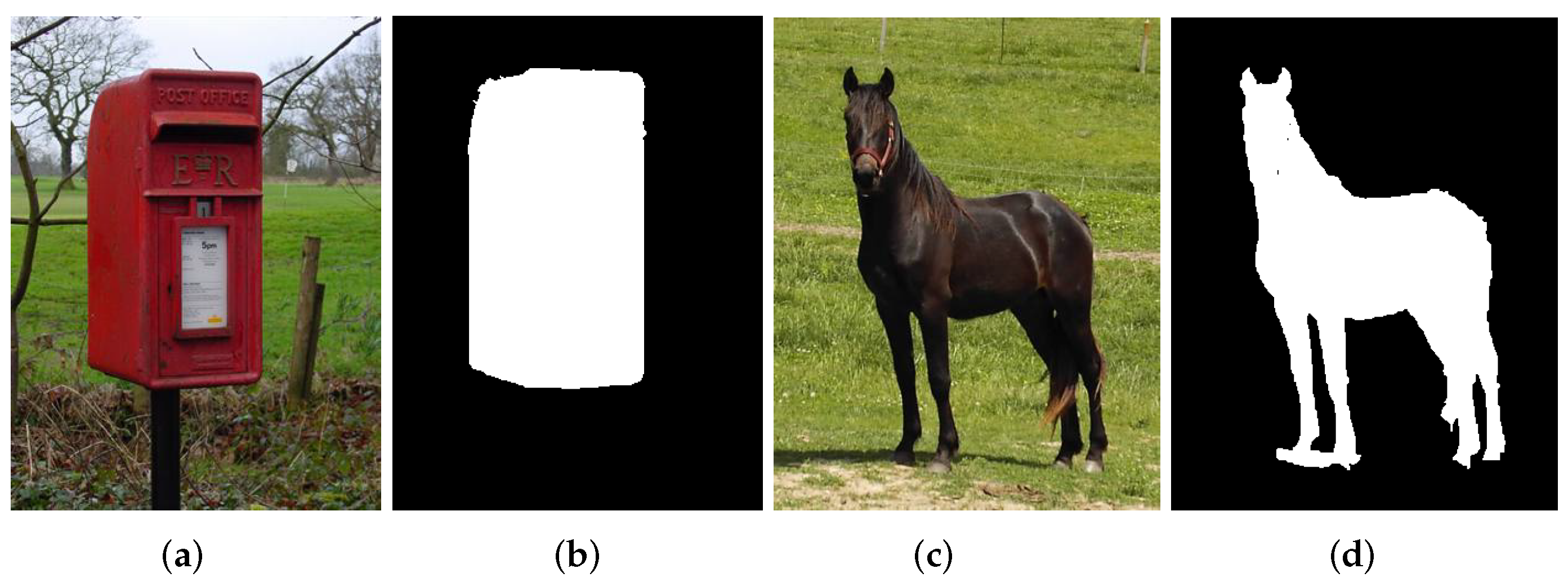

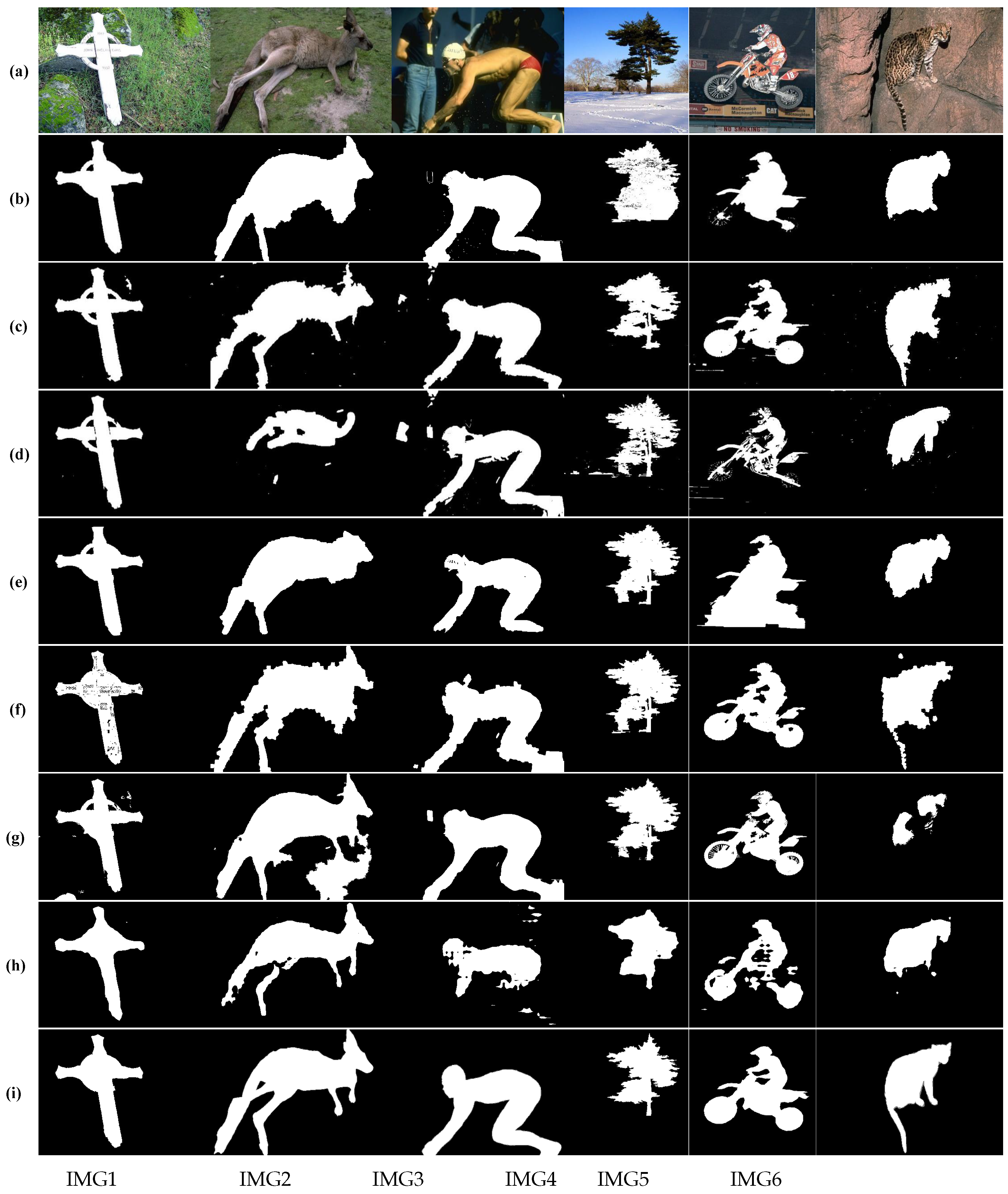

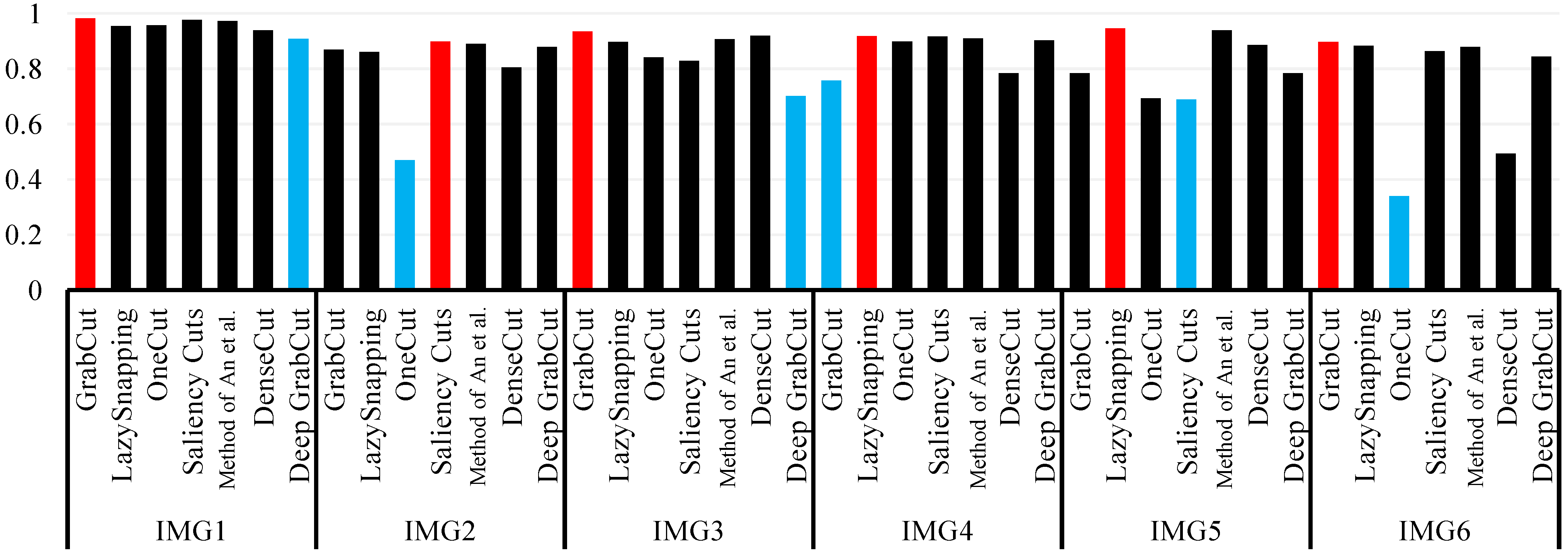

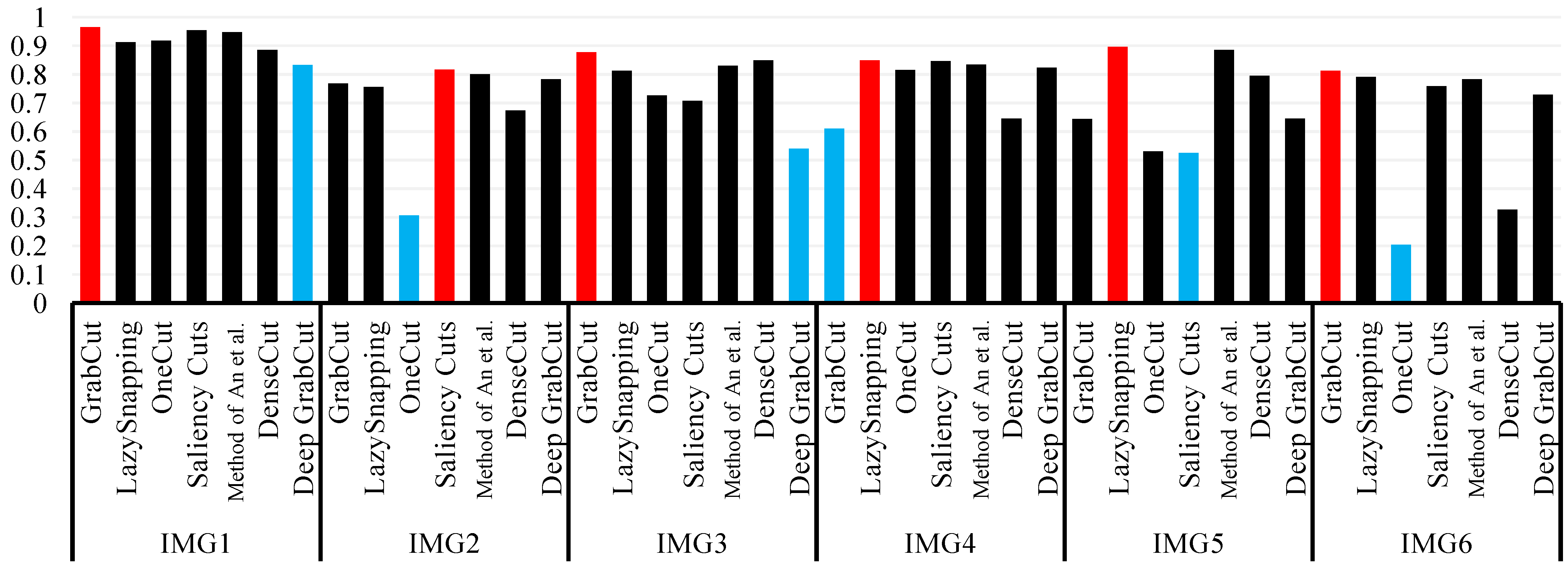

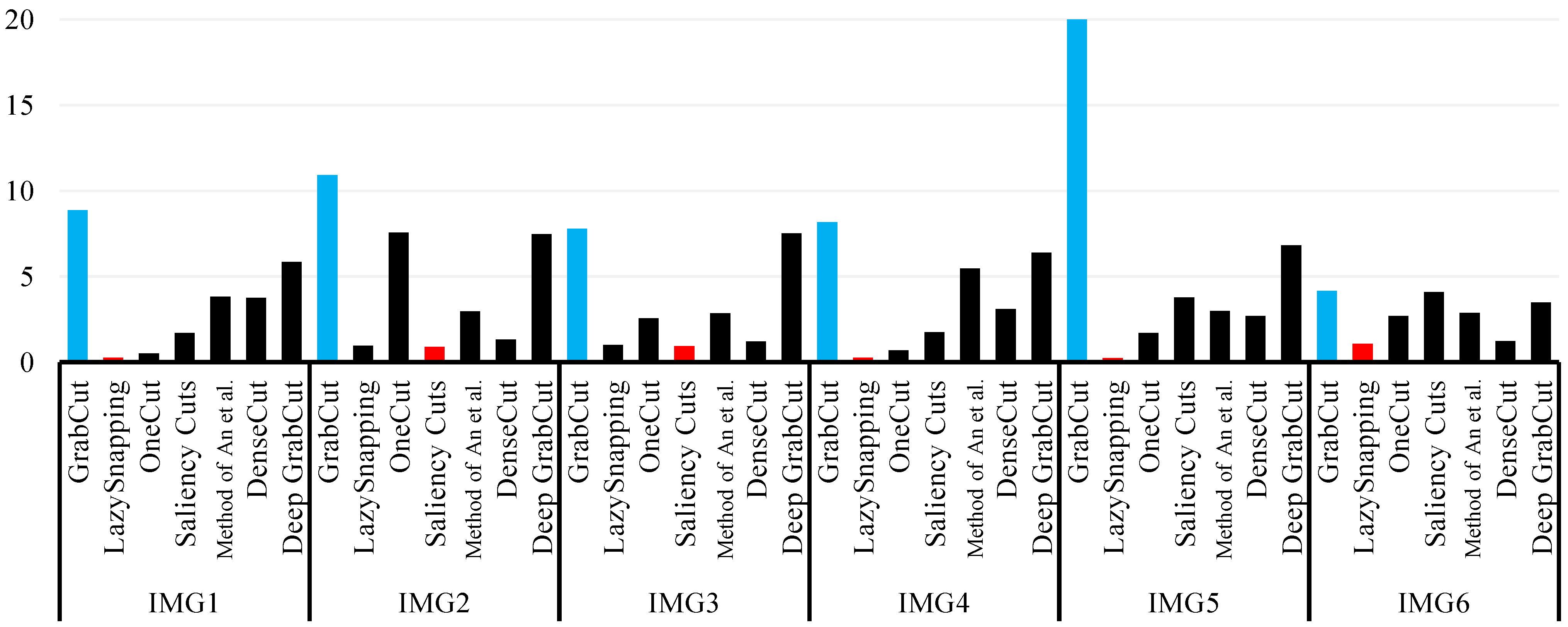

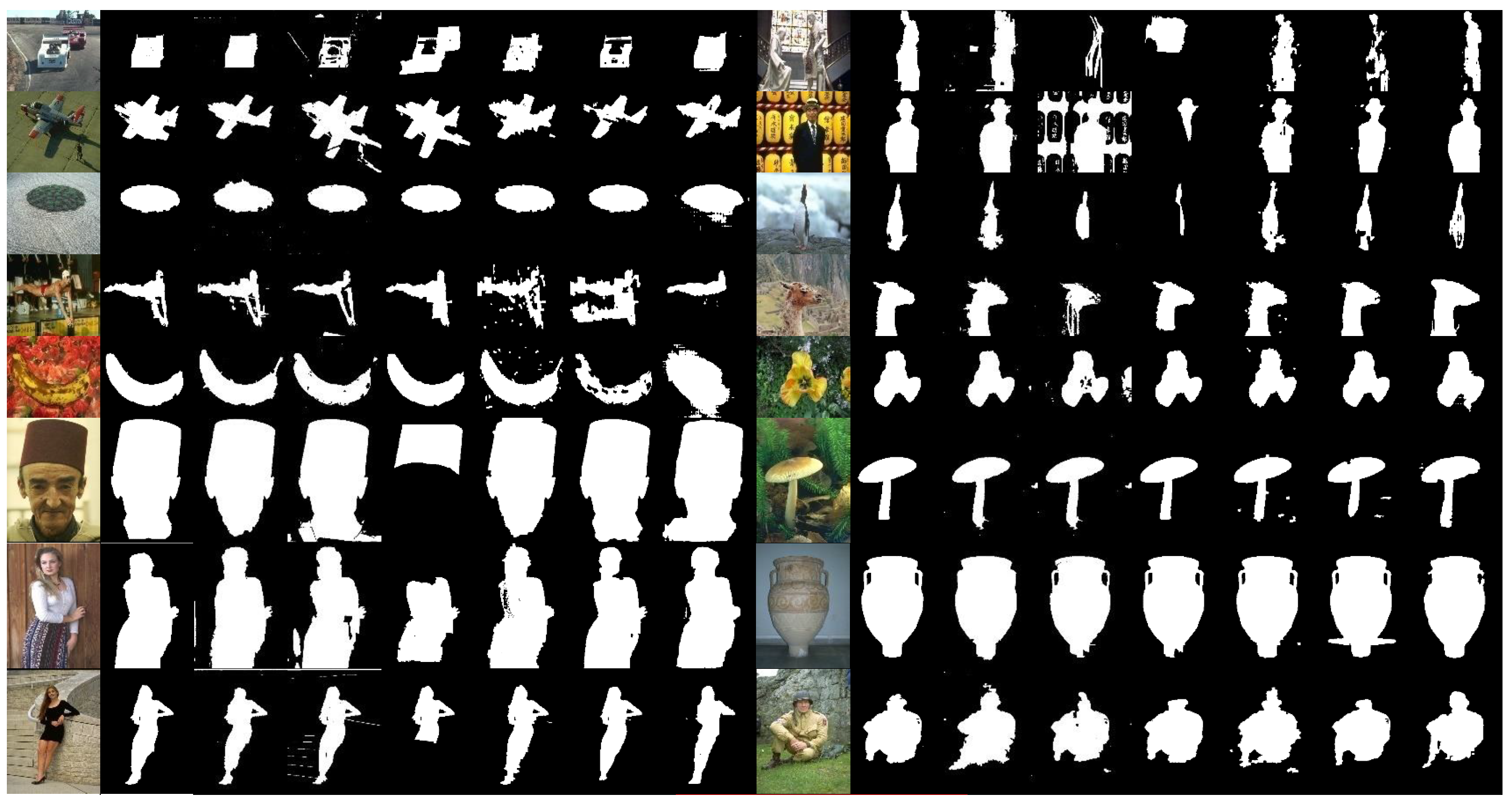

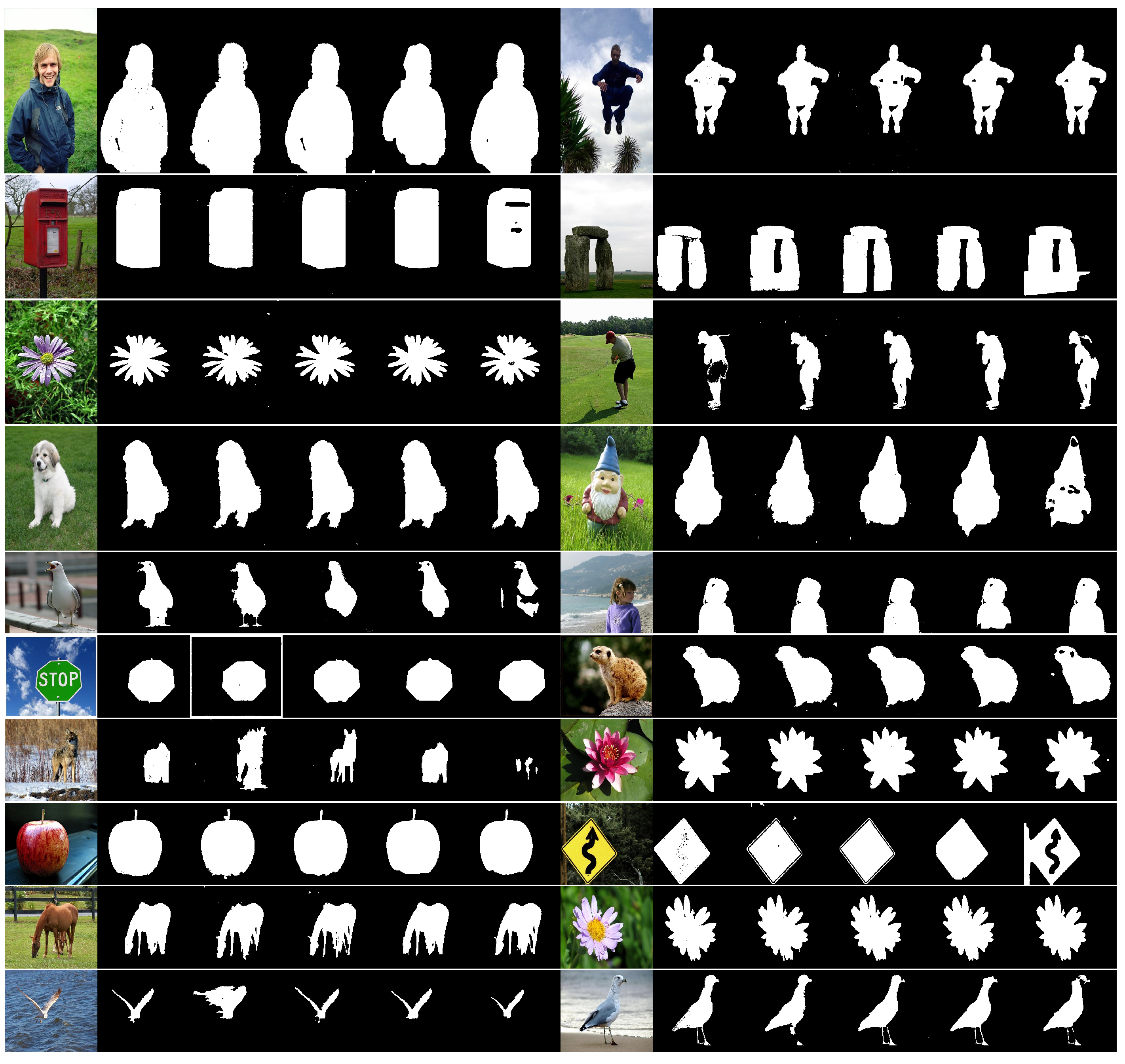

5.1. Experimental Results

5.2. Influence of Deep Learning

6. Future Work and Challenges

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, Z.; Ma, B.; Zhu, Y. Review of level set in image segmentation. Arch. Comput. Methods Eng. 2021, 28, 2429–2446.

- Rother, C.; Kolmogorov, V.; Blake, A. “GrabCut”—Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 2004, 23, 309–314.

- Blake, A.; Rother, C.; Brown, M.; Perez, P.; Torr, P. Interactive image segmentation using an adaptive GMMRF model. In Computer Vision-ECCV 2004: 8th European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3021, pp. 428–441.

- Rother, C.; Minka, T.; Blake, A.; Kolmogorov, V. Cosegmentation of Image Pairs by Histogram Matching—Incorporating a Global Constraint into MRFs. In Proceedings of the Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006.

- Geman, S.; Geman, D. Stochastic Relaxation, Gibbs Distributions, and the Bayesian Restoration of Images. IEEE Trans. Pattern Anal. Mach. Intell. 1984, 6, 721–741.

- Selim, S.Z.; Ismail, M.A. K-Means-Type Algorithms—A Generalized Convergence Theorem And Characterization Of Local Optimality. IEEE Trans. Pattern Anal. Mach. Intell. 1984, 6, 81–87.

- Vincent, L.; Soille, P. Watersheds in digital spaces: An efficient algorithm based on immersion simulations. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 583–598.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Susstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2281.

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Li, Y.; Sun, J.; Tang, C.K.; Shum, H.Y. Lazy snapping. ACM Trans. Graph. 2004, 23, 303–308.

- An, N.; Pun, C. Iterated Graph Cut Integrating Texture Characterization for Interactive Image Segmentation. In Proceedings of the 2013 10th International Conference Computer Graphics, Imaging and Visualization, Los Alamitos, CA, USA, 6–8 August 2013; pp. 79–83.

- Ren, D.Y.; Jia, Z.H.; Yang, J.; Kasabov, N.K. A Practical GrabCut Color Image Segmentation Based on Bayes Classification and Simple Linear Iterative Clustering. IEEE Access 2017, 5, 18480–18487.

- Li, X.L.; Liu, K.; Dong, Y.S. Superpixel-Based Foreground Extraction With Fast Adaptive Trimaps. IEEE Trans. Cybern. 2018, 48, 2609–2619.

- e Silva, R.H.L.; Machado, A.M.C. Automatic measurement of pressure ulcers using Support Vector Machines and GrabCut. Comput. Methods Programs Biomed. 2021, 200, 105867.

- Wu, S.Q.; Nakao, M.; Matsuda, T. SuperCut: Superpixel Based Foreground Extraction With Loose Bounding Boxes in One Cutting. IEEE Signal Process. Lett. 2017, 24, 1803–1807.

- Van den Bergh, M.; Boix, X.; Roig, G.; Van Gool, L. SEEDS: Superpixels Extracted Via Energy-Driven Sampling. Int. J. Comput. Vis. 2015, 111, 298–314.

- Long, J.W.; Feng, X.; Zhu, X.F.; Zhang, J.X.; Gou, G.L. Efficient Superpixel-Guided Interactive Image Segmentation Based on Graph Theory. Symmetry 2018, 10, 169.

- Zhou, X.N.; Wang, Y.N.; Zhu, Q.; Xiao, C.Y.; Lu, X. SSG: Superpixel segmentation and GrabCut-based salient object segmentation. Vis. Comput. 2019, 35, 385–398.

- Borji, A.; Cheng, M.M.; Hou, Q.; Jiang, H.; Li, J. Salient object detection: A survey. Comput. Vis. Media 2019, 5, 117–150.

- Fu, Y.; Cheng, J.; Li, Z.L.; Lu, H.Q. Saliency Cuts: An Automatic Approach to Object Segmentation. In Proceedings of the 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; Volumes 1–6, pp. 696–699.

- Kim, K.S.; Yoon, Y.J.; Kang, M.C.; Sun, J.Y.; Ko, S.J. An Improved GrabCut Using a Saliency Map. In Proceedings of the 2014 IEEE 3rd Global Conference on Consumer Electronics (GCCE), Tokyo, Japan, 7–10 October 2014; pp. 317–318.

- Li, S.Z.; Ju, R.; Ren, T.W.; Wu, G.S. Saliency Cuts Based on Adaptive Triple Thresholding. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4609–4613.

- Cheng, M.M.; Mitra, N.J.; Huang, X.L.; Torr, P.H.S.; Hu, S.M. Global Contrast Based Salient Region Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

- Gupta, N.; Jalal, A.S. A robust model for salient text detection in natural scene images using MSER feature detector and Grabcut. Multimed. Tools Appl. 2019, 78, 10821–10835.

- Peng, Y.; Zhang, Z.; He, G.; Wei, M. An Improved GrabCut Method Based on a Visual Attention Model for Rare-Earth Ore Mining Area Recognition with High-Resolution Remote Sensing Images. Remote Sens. 2019, 11, 987.

- Niu, Y.Z.; Su, C.R.; Guo, W.Z. Salient Object Segmentation Based on Superpixel and Background Connectivity Prior. IEEE Access 2018, 6, 56170–56183.

- Wang, Y.; Ren, T.; Zhong, S.H.; Liu, Y.; Wu, G. Adaptive saliency cuts. Multimed. Tools Appl. 2018, 77, 22213–22230.

- Vicente, S.; Kolmogorov, V.; Rother, C. Joint optimization of segmentation and appearance models. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 755–762.

- Komodakis, N.; Paragios, N.; Tziritas, G. MRF Energy Minimization and Beyond via Dual Decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 531–552.

- Tang, M.; Gorelick, L.; Veksler, O.; Boykov, Y. GrabCut in One Cut. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 1769–1776.

- Gao, Z.S.; Shi, P.; Karimi, H.R.; Pei, Z. A mutual GrabCut method to solve co-segmentation. EURASIP J. Image Video Process. 2013, 2013, 1–11.

- Zhou, H.L.; Zheng, J.M.; Wei, L. Texture aware image segmentation using graph cuts and active contours. Pattern Recognit. 2013, 46, 1719–1733.

- Cheng, M.M.; Prisacariu, V.A.; Zheng, S.; Torr, P.H.S.; Rother, C. DenseCut: Densely Connected CRFs for Realtime GrabCut. Comput. Graph. Forum 2015, 34, 193–201.

- Guan, Q.; Hua, M.; Hu, H.G. A Modified Grabcut Approach for Image Segmentation Based on Local Prior Distribution. In Proceedings of the 2017 International Conference on Wavelet Analysis And Pattern Recognition (ICWAPR), Ningbo, China, 9–12 July 2017; pp. 122–126.

- Hua, S.Y.; Shi, P. GrabCut Color Image Segmentation Based on Region of Interest. In Proceedings of the 2014 7th International Congress on Image And Signal Processing (CISP 2014), Dalian, China, 14–16 October 2014; pp. 392–396.

- Yong, Z.; Jiazheng, Y.; Hongzhe, L.; Qing, L. GrabCut image segmentation algorithm based on structure tensor. J. China Univ. Posts Telecommun. 2017, 24, 38–47.

- Yu, H.K.; Zhou, Y.J.; Qian, H.; Xian, M.; Wang, S. Loosecut: Interactive Image Segmentation with Loosely Bounded Boxes. In Proceedings of the 2017 24th IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3335–3339.

- He, K.; Wang, D.; Tong, M.; Zhang, X. Interactive Image Segmentation on Multiscale Appearances. IEEE Access 2018, 6, 67732–67741.

- He, K.; Wang, D.; Tong, M.; Zhu, Z. An Improved GrabCut on Multiscale Features. Pattern Recognit. 2020, 103, 107292.

- Fu, R.G.; Li, B.; Gao, Y.H.; Wang, P. Fully automatic figure-ground segmentation algorithm based on deep convolutional neural network and GrabCut. IET Image Process. 2016, 10, 937–942.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Ünver, H.M.; Ayan, E. Skin Lesion Segmentation in Dermoscopic Images with Combination of YOLO and GrabCut Algorithm. Diagnostics 2019, 9, 72.

- Zhang, N.C. Improved GrabCut Algorithm Based on Probabilistic Neural Network. Adv. Laser Optoelectron. 2021, 58, 0210024.

- Kim, G.; Sim, J.Y. Depth Guided Selection of Adaptive Region of Interest for Grabcut-Based Image Segmentation. In Proceedings of the 2016 Asia-Pacific Signal And Information Processing Association Annual Summit And Conference (APSIPA), Jeju, Republic of Korea, 13–16 December 2016.

- Sanguesa, A.A.; Jorgensen, N.K.; Larsen, C.A.; Nasrollahi, K.; Moeslund, T.B. Initiating GrabCut by Color Difference for Automatic Foreground Extraction of Passport Imagery. In Proceedings of the 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA), Oulu, Finland, 12–15 December 2016.

- Deng, L.L. Pre-detection Technology of Clothing Image Segmentation Based on GrabCut Algorithm. Wirel. Pers. Commun. 2018, 102, 599–610.

- Orchard, M.T.; Bouman, C.A. Color Quantization Of Images. IEEE Trans. Signal Process. 1991, 39, 2677–2690.

- Khattab, D.; Ebied, H.M.; Hussein, A.S.; Tolba, M.F. Color Image Segmentation Based on Different Color Space Models Using Automatic GrabCut. Sci. World J. 2014, 2014, 126025.

- Kohonen, T.; Oja, E.; Simula, O.; Visa, A.; Kangas, J. Engineering applications of the self-organizing map. Proc. IEEE 1996, 84, 1358–1384.

- Khattab, D.; Ebied, H.M.; Hussein, A.S.; Tolba, M.F. Automatic GrabCut for Bi-label Image Segmentation Using SOFM. Intell. Syst. Vol 2 Tools, Archit. Syst. Appl. 2015, 323, 579–592.

- Wang, P.H. Pattern-Recognition with Fuzzy Objective Function Algorithms-Bezdek, Jc. SIAM Rev. 1983, 25, 442.

- Khattab, D.; Ebeid, H.M.; Tolba, M.F.; Hussein, A.S. Clustering-based Image Segmentation using Automatic GrabCut. In Proceedings of the International Conference on Informatics and Systems (INFOS 2016), Cairo, Egypt, 9–11 May 2016; pp. 95–100.

- Ye, H.J.; Liu, C.Q.; Niu, P.Y. Cucumber appearance quality detection under complex background based on image processing. Int. J. Agric. Biol. Eng. 2018, 11, 193–199.

- Sun, S.; Jiang, M.; He, D.; Long, Y.; Song, H. Recognition of green apples in an orchard environment by combining the GrabCut model and Ncut algorithm. Biosyst. Eng. 2019, 187, 201–213.

- Deshpande, A.; Dahikar, P.; Agrawal, P. An Experiment with Random Walks and GrabCut in One Cut Interactive Image Segmentation Techniques on MRI Images. In Proceedings of the International Conference on Computational Vision and Bio Inspired Computing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 993–1008.

- Jiang, F.; Pang, Y.; Lee, T.N.; Liu, C. Automatic object segmentation based on grabcut. In Proceedings of the Science and Information Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 350–360.

- Sallem, N.K.; Devy, M. Extended GrabCut for 3D and RGB-D Point Clouds. Adv. Concepts Intell. Vis. Syst. Acivs 2013, 8192, 354–365.

- Dietterich, T.G.; Lathrop, R.H.; LozanoPerez, T. Solving the multiple instance problem with axis-parallel rectangles. Artif. Intell. 1997, 89, 31–71.

- Wu, J.J.; Zhao, Y.B.; Zhu, J.Y.; Luo, S.W.; Tu, Z.W. MILCut: A Sweeping Line Multiple Instance Learning Paradigm for Interactive Image Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 256–263.

- Lee, G.; Lee, S.; Kim, G.; Park, J.; Park, Y. A Modified GrabCut Using a Clustering Technique to Reduce Image Noise. Symmetry 2016, 8, 64.

- Niu, S.X.; Chen, G.S. The Improvement of the Processes of a Class of Graph-Cut-Based Image Segmentation Algorithms. IEICE Trans. Inf. Syst. 2016, E99-D, 3053–3059.

- Lu, Y.W.; Jiang, J.G.; Qi, M.B.; Zhan, S.; Yang, J. Segmentation method for medical image based on improved GrabCut. Int. J. Imaging Syst. Technol. 2017, 27, 383–390.

- Rajchl, M.; Lee, M.C.H.; Oktay, O.; Kamnitsas, K.; Passerat-Palmbach, J.; Bai, W.; Damodaram, M.; Rutherford, M.A.; Hajnal, J.V.; Kainz, B.; et al. DeepCut: Object Segmentation From Bounding Box Annotations Using Convolutional Neural Networks. IEEE Trans. Med. Imaging 2017, 36, 674–683.

- Lee, J.; Kim, D.W.; Won, C.S.; Jung, S.W. Graph Cut-Based Human Body Segmentation in Color Images Using Skeleton Information from the Depth Sensor. Sensors 2019, 19, 393.

- Xu, N.; Price, B.L.; Cohen, S.; Yang, J.; Huang, T.S. Deep GrabCut for Object Selection. arXiv 2017, arXiv:1707.00243.

- Kalshetti, P.; Bundele, M.; Rahangdale, P.; Jangra, D.; Chattopadhyay, C.; Harit, G.; Elhence, A. An interactive medical image segmentation framework using iterative refinement. Comput. Biol. Med. 2017, 83, 22–33.

- Baracho, S.F.; Pinheiro, D.J.L.L.; de Godoy, C.M.G.; Coelho, R.C. A segmentation method for myocardial ischemia/infarction applicable in heart photos. Comput. Biol. Med. 2017, 87, 285–301.

- Liu, Y.H.; Cao, F.L.; Zhao, J.W.; Chu, J.J. Segmentation of White Blood Cells Image Using Adaptive Location and Iteration. IEEE J. Biomed. Health Inf. 2017, 21, 1644–1655.

- Wu, S.B.; Yu, S.D.; Zhuang, L.; Wei, X.H.; Sak, M.; Duric, N.; Hu, J.N.; Xie, Y.Q. Automatic Segmentation of Ultrasound Tomography Image. Biomed Res. Int. 2017, 2017, 2059036.

- Yu, S.D.; Wu, S.B.; Zhuang, L.; Wei, X.H.; Sak, M.; Neb, D.; Hu, J.N.; Xie, Y.Q. Efficient Segmentation of a Breast in B-Mode Ultrasound Tomography Using Three-Dimensional GrabCut (GC3D). Sensors 2017, 17, 1827.

- Jaisakthi, S.M.; Mirunalini, P.; Aravindan, C. Automated skin lesion segmentation of dermoscopic images using GrabCut and k-means algorithms. IET Comput. Vis. 2018, 12, 1088–1095.

- Mao, J.F.; Wang, K.H.; Hu, Y.H.; Sheng, W.G.; Feng, Q.X. GrabCut algorithm for dental X-ray images based on full threshold segmentation. IET Image Process. 2018, 12, 2330–2335.

- Xiao, C.F.; Li, W.F.; Deng, H.; Chen, X.; Yang, Y.; Xie, Q.W.; Han, H. Effective automated pipeline for 3D reconstruction of synapses based on deep learning. BMC Bioinform. 2018, 19, 263.

- Frants, V.; Agaian, S. Dermoscopic image segmentation based on modified GrabCut with octree color quantization. In Proceedings of the Mobile Multimedia/Image Processing, Security, and Applications 2020; SPIE: Bellingham, WA, USA, 2020; Volume 11399, pp. 119–130.

- Saeed, S.; Abdullah, A.; Jhanjhi, N.; Naqvi, M.; Masud, M.; AlZain, M.A. Hybrid GrabCut Hidden Markov Model for Segmentation. Comput. Mater. Contin. 2022, 72, 851–869.

- Yoruk, U.; Hargreaves, B.A.; Vasanawala, S.S. Automatic renal segmentation for MR urography using 3D-GrabCut and random forests. Magn. Reson. Med. 2018, 79, 1696–1707.

- Wei, Z.; Liang, D.; Zhang, D.; Zhang, L.; Geng, Q.; Wei, M.; Zhou, H. Learning calibrated-guidance for object detection in aerial images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2721–2733.

- Zhu, H.; Li, P.; Xie, H.; Yan, X.; Liang, D.; Chen, D.; Wei, M.; Qin, J. I Can Find You! Boundary-Guided Separated Attention Network for Camouflaged Object Detection; AAAI: Washington, DC, USA, 2022.

- Kang, B.; Liang, D.; Mei, J.; Tan, X.; Zhou, Q.; Zhang, D. Robust RGB-T Tracking via Graph Attention-Based Bilinear Pooling. IEEE Trans. Neural Netw. Learn. Syst. 2022.

- Lin, C.Y.; Muchtar, K.; Yeh, C.H. Robust Techniques for Abandoned and Removed Object Detection Based on Markov Random Field. J. Vis. Commun. Image Represent. 2016, 39, 181–195.

- Zhang, H.; Song, A.G. A map-based normalized cross correlation algorithm using dynamic template for vision-guided telerobot. Adv. Mech. Eng. 2017, 9, 1687814017728839.

- Xu, S.X.; Zhu, Q.Y. Seabird image identification in natural scenes using Grabcut and combined features. Ecol. Inf. 2016, 33, 24–31.

- Hao, Y.P.; Wei, J.; Jiang, X.L.; Yang, L.; Li, L.C.; Wang, J.K.; Li, H.; Li, R.H. Icing Condition Assessment of In-Service Glass Insulators Based on Graphical Shed Spacing and Graphical Shed Overhang. Energies 2018, 11, 318.

- Li, S.; Liu, Y.; Zhao, Q.; Feng, Z. Learning residue-aware correlation filters and refining scale estimates with the grabcut for real-time UAV tracking. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 1238–1248.

- Salau, A.O.; Yesufu, T.K.; Ogundare, B.S. Vehicle plate number localization using a modified GrabCut algorithm. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 399–407.

- Shi, H.; Zhao, D. License Plate Localization in Complex Environments Based on Improved GrabCut Algorithm. IEEE Access 2022, 10, 88495–88503.

- Brkic, K.; Hrkac, T.; Kalafatic, Z. Protecting the privacy of humans in video sequences using a computer vision-based de-identification pipeline. Expert Syst. Appl. 2017, 87, 41–55.

- Dong, L.; Feng, N.; Mao, M.; He, L.; Wang, J. E-GrabCut: An economic method of iterative video object extraction. Front. Comput. Sci. 2017, 11, 649–660.

- Kang, F.; Wang, C.; Li, J.; Zong, Z. A Multiobjective Piglet Image Segmentation Method Based on an Improved Noninteractive GrabCut Algorithm. Adv. Multimed. 2018, 2018, 1083876.

- Qi, F.; Wang, Y.; Tang, Z. Lightweight Plant Disease Classification Combining GrabCut Algorithm, New Coordinate Attention, and Channel Pruning. Neural Process. Lett. 2022, 54, 5317–5331.

- Zhang, S.; Huang, W.; Wang, Z. Combing modified Grabcut, K-means clustering and sparse representation classification for weed recognition in wheat field. Neurocomputing 2021, 452, 665–674.

- Tan, K.; Zhang, Y.; Tong, X. Cloud Extraction from Chinese High Resolution Satellite Imagery by Probabilistic Latent Semantic Analysis and Object-Based Machine Learning. Remote Sens. 2016, 8, 963.

- Yamasaki, Y.; Migita, M.; Koutaki, G.; Toda, M.; Kishigami, T. ISHIGAKI Region Extraction Using Grabcut Algorithm for Support of Kumamoto Castle Reconstruction. In Proceedings of the International Workshop on Frontiers of Computer Vision, Daegu, Republic of Korea, 22–23 February 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 106–116.

- Zhang, K.; Chen, H.; Xiao, W.; Sheng, Y.; Su, D.; Wang, P. Building Extraction from High-Resolution Remote Sensing Images Based on GrabCut with Automatic Selection of Foreground and Background Samples. Photogramm. Eng. Remote Sens. 2020, 86, 235–245.

- Liu, T.; Yuan, Z.J.; Sun, J.A.; Wang, J.D.; Zheng, N.N.; Tang, X.O.; Shum, H.Y. Learning to Detect a Salient Object. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 353–367.

- Wang, Z.; Wang, E.; Zhu, Y. Image segmentation evaluation: A survey of methods. Artif. Intell. Rev. 2020, 53, 5637–5674.

- Taha, A.A.; Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med. Imaging 2015, 15, 29.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

| Image | Method | Recall | Precision | F-measure | JAC | Time (s) |
|---|---|---|---|---|---|---|
| IMG1 | GrabCut | 0.9752 | 0.9889 | 0.9820 | 0.9647 | 8.8695 |
| IMG1 | LazySnapping | 0.9494 | 0.9597 | 0.9545 | 0.9130 | 0.2570 |
| IMG1 | OneCut | 0.9201 | 0.9969 | 0.9569 | 0.9175 | 0.5093 |
| IMG1 | Saliency Cuts | 0.9641 | 0.9892 | 0.9765 | 0.9541 | 1.7063 |
| IMG1 | Method of [11] | 0.9244 | 0.9876 | 0.9550 | 0.9139 | 3.8284 |
| IMG1 | DenseCut | 0.9445 | 0.9342 | 0.9393 | 0.8856 | 3.7621 |
| IMG1 | Deep GrabCut | 0.8752 | 0.9447 | 0.9085 | 0.8324 | 5.8396 |
| IMG2 | GrabCut | 0.9911 | 0.7739 | 0.8691 | 0.7685 | 10.9130 |
| IMG2 | LazySnapping | 0.8737 | 0.8481 | 0.8607 | 0.7554 | 0.9667 |
| IMG2 | OneCut | 0.7026 | 0.3530 | 0.4700 | 0.3072 | 7.5650 |
| IMG2 | Saliency Cuts | 0.9075 | 0.8901 | 0.8987 | 0.8160 | 0.9005 |
| IMG2 | Method of [11] | 0.9749 | 0.8177 | 0.8894 | 0.8008 | 2.9662 |
| IMG2 | DenseCut | 0.9806 | 0.6820 | 0.8045 | 0.6729 | 1.3253 |
| IMG2 | Deep GrabCut | 0.8018 | 0.9713 | 0.8785 | 0.7833 | 7.4800 |
| IMG3 | GrabCut | 0.9748 | 0.8983 | 0.9350 | 0.8779 | 7.7970 |
| IMG3 | LazySnapping | 0.8763 | 0.9186 | 0.8969 | 0.8131 | 1.0038 |
| IMG3 | OneCut | 0.8213 | 0.8624 | 0.8413 | 0.7261 | 2.5656 |
| IMG3 | Saliency Cuts | 0.7304 | 0.9561 | 0.8281 | 0.7067 | 0.9423 |
| IMG3 | Method of [11] | 0.9422 | 0.8743 | 0.9070 | 0.8298 | 2.8496 |
| IMG3 | DenseCut | 0.9373 | 0.9006 | 0.9186 | 0.8495 | 1.2150 |
| IMG3 | Deep GrabCut | 0.5559 | 0.9507 | 0.7016 | 0.5403 | 7.5170 |
| IMG4 | GrabCut | 0.9355 | 0.6369 | 0.7577 | 0.6099 | 8.1819 |
| IMG4 | LazySnapping | 0.9439 | 0.8941 | 0.9183 | 0.8490 | 0.2536 |
| IMG4 | OneCut | 0.9379 | 0.8617 | 0.8982 | 0.8152 | 0.6877 |
| IMG4 | Saliency Cuts | 0.9904 | 0.8529 | 0.9166 | 0.8460 | 1.7416 |
| IMG4 | Method of [11] | 0.9802 | 0.8487 | 0.9098 | 0.8345 | 5.4663 |
| IMG4 | DenseCut | 0.9980 | 0.6455 | 0.7839 | 0.6447 | 3.0919 |
| IMG4 | Deep GrabCut | 0.9147 | 0.8915 | 0.9029 | 0.8231 | 6.3885 |
| IMG5 | GrabCut | 0.6700 | 0.9425 | 0.7833 | 0.6437 | 20.2160 |
| IMG5 | LazySnapping | 0.9183 | 0.9740 | 0.9453 | 0.8964 | 0.2394 |
| IMG5 | OneCut | 0.5358 | 0.9824 | 0.6934 | 0.5307 | 1.6940 |
| IMG5 | Saliency Cuts | 0.8033 | 0.6021 | 0.6883 | 0.5247 | 3.7740 |
| IMG5 | Method of [11] | 0.9077 | 0.9723 | 0.9389 | 0.8848 | 2.9830 |
| IMG5 | DenseCut | 0.7989 | 0.9937 | 0.8857 | 0.7949 | 2.6913 |
| IMG5 | Deep GrabCut | 0.7058 | 0.8824 | 0.7843 | 0.6451 | 6.8088 |
| IMG6 | GrabCut | 0.8858 | 0.9068 | 0.8962 | 0.8119 | 4.1540 |
| IMG6 | LazySnapping | 0.9332 | 0.8383 | 0.8832 | 0.7908 | 1.0684 |
| IMG6 | OneCut | 0.6809 | 0.2262 | 0.3397 | 0.2046 | 2.6872 |
| IMG6 | Saliency Cuts | 0.7904 | 0.9497 | 0.8628 | 0.7587 | 4.0841 |
| IMG6 | Method of [11] | 0.9406 | 0.8240 | 0.8785 | 0.7833 | 2.8732 |
| IMG6 | DenseCut | 0.3270 | 1.0000 | 0.4929 | 0.3270 | 1.2402 |
| IMG6 | Deep GrabCut | 0.7619 | 0.9445 | 0.8434 | 0.7292 | 3.4945 |
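
The two value columns that follow Precision in the table above are numerically consistent with the F-measure (the harmonic mean of recall and precision) and the Jaccard index (JAC). The sketch below is only an illustration of that relationship, not the authors' evaluation code; the function names `f_measure` and `jaccard` are hypothetical, and the inputs are the four-decimal values printed in the IMG1 / GrabCut row.

```python
def f_measure(recall: float, precision: float) -> float:
    """Harmonic mean of recall and precision (equivalent to the Dice score)."""
    if recall + precision == 0:
        return 0.0
    return 2.0 * recall * precision / (recall + precision)


def jaccard(recall: float, precision: float) -> float:
    """Jaccard index (intersection over union) written in terms of recall and precision.

    From TP / (TP + FP + FN), it follows that 1/J = 1/P + 1/R - 1.
    """
    denom = recall + precision - recall * precision
    if denom == 0:
        return 0.0
    return recall * precision / denom


# IMG1 / GrabCut row of the table: Recall = 0.9752, Precision = 0.9889
f1 = f_measure(0.9752, 0.9889)
jac = jaccard(0.9752, 0.9889)
print(f"F-measure = {f1:.4f}, JAC = {jac:.4f}")
```

Because the inputs are already rounded to four decimals, the recomputed values can differ from the tabulated ones in the last digit.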

| Method | Recall | Precision | F-measure | JAC | Time (s) |
|---|---|---|---|---|---|
| GrabCut | 0.9668 | 0.9213 | 0.9407 | 0.8927 | 11.0076 |
| LazySnapping | 0.9681 | 0.9104 | 0.9357 | 0.8842 | 1.3669 |
| OneCut | 0.8585 | 0.7926 | 0.7899 | 0.6974 | 6.1393 |
| Saliency Cuts | 0.8371 | 0.8892 | 0.8255 | 0.7458 | 0.6803 |
| Method of [11] | 0.9614 | 0.8878 | 0.9212 | 0.8597 | 3.5718 |
| DenseCut | 0.8427 | 0.9418 | 0.8561 | 0.7927 | 1.3851 |
| Deep GrabCut | 0.8854 | 0.8774 | 0.8701 | 0.7849 | 10.3698 |

| Method | Recall | Precision | F-measure | JAC | Time (s) |
|---|---|---|---|---|---|
| GrabCut | 0.9429 | 0.9251 | 0.9301 | 0.8772 | 7.2807 |
| LazySnapping | 0.9548 | 0.8680 | 0.9008 | 0.8348 | 0.6805 |
| OneCut | 0.8609 | 0.8531 | 0.8363 | 0.7539 | 1.6462 |
| Saliency Cuts | 0.8704 | 0.8764 | 0.8614 | 0.7933 | 0.7436 |
| Method of [11] | 0.9463 | 0.8905 | 0.9141 | 0.8507 | 2.5903 |
| DenseCut | 0.8323 | 0.9419 | 0.8676 | 0.7951 | 0.9125 |
| Deep GrabCut | 0.8702 | 0.8765 | 0.8641 | 0.7833 | 5.9086 |

| Characteristic | Deep Learning | GrabCut |
|---|---|---|
| Accuracy | Higher | Lower |
| Problems during learning | May require large amounts of labeled data and substantial computing resources to train the model | For complex images, the foreground and background must be specified manually |
| Training effort | Relatively time-consuming; requires large amounts of labeled data | Requires foreground and background labels, but little labeled data overall |
| Applicable scenario | Complex image-processing tasks | Foreground extraction in still images or videos |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Lv, Y.; Wu, R.; Zhang, Y. Review of GrabCut in Image Processing. Mathematics 2023, 11, 1965. https://doi.org/10.3390/math11081965
