1. Introduction

Elliptic interface problems with discontinuous coefficients and singular sources arise in many applications, such as incompressible two-phase flow [1,2], computational electromagnetics [3], heat conduction between materials of different heat capacity and conductivity [4,5,6], and thermal convection in a Forchheimer–Brinkman model [7,8]. The solution of elliptic interface problems with discontinuous coefficients has low global regularity and usually belongs to

if the jump conditions are homogeneous. When the jump conditions are nonhomogeneous, the solutions to the elliptic interface problems are often discontinuous [9]. Constructing an efficient numerical method for such problems is difficult. In the past decades, various numerical methods have been proposed for these problems. According to the geometric relationship between the computational grid and the material interface, these methods can generally be divided into two categories: (1) Interface-fitted methods [10,11,12,13,14]. In this kind of method the computational mesh fits the interface, which means that an element of the underlying mesh may intersect the interface only along its edges. This helps the numerical scheme reach optimal convergence. However, material interactions in real-world applications can be geometrically complex and very heterogeneous. Geometric singularities, such as sharp edges, cusps, and tips, may be encountered in some extreme circumstances with nonsmooth surfaces or interfaces with Lipschitz continuity. Creating high-quality meshes for such complicated geometries may be challenging and time-consuming. This challenge drives the development of numerical algorithms that employ structured meshes and allow the interface to cut through elements. (2) Interface-unfitted methods [15,16,17,18,19,20]. By allowing the interface to cut computational elements, the immersed interface approach reduces the effort of creating meshes for complicated domains and interface geometries. Special interface techniques are required to handle the interface jump conditions, which are essential for the well-posedness of the interface problem. The convergence order in the

norm oscillates when an interface-immersed numerical approach is used, and the pointwise error on the interface elements is often large.

To secure accuracy near the interface, the jump conditions on the interface have to be incorporated into the numerical discretization, and special treatment is needed on the elements through which the interface passes. Although both classes of methods have had some success in tackling interface problems, implementing such numerical schemes is not easy, owing to the inhomogeneous jump conditions on the interface. High-dimensional interface problems, nonlinear interface problems, and other general interface problems continue to pose significant hurdles for traditional numerical methods.

Deep neural networks (DNNs) have recently received considerable interest in scientific machine learning (SciML) and have been used to build new ways of solving partial differential equations (PDEs), e.g., the deep Ritz method (DRM) [21], the deep Galerkin method [22], and physics-informed neural networks (PINNs) [23]. Owing to their universal approximation capabilities, DNNs provide nonlinear approximation through the composition of hidden layers, so the approximation is not limited to linear spaces. In SciML, the PINN has become one of the most prominent deep learning approaches. Because the differential operators in the governing PDEs are evaluated by automatic differentiation, PINNs provide a mesh-free approach. Such techniques have also been applied to different types of PDEs, including integro-differential equations [24], fractional PDEs [25], and stochastic PDEs [26,27]. Furthermore, PINNs have been used effectively to tackle a variety of problems in other domains, such as optics [28,29], fluid mechanics [30], and systems biology [31].

Several efforts have been made in recent years to employ neural networks for interface problems, since neural network approaches are meshless and can benefit from deep learning techniques such as automatic differentiation and GPU acceleration. In particular, the use of multiple neural networks based on the domain decomposition method (DDM) has attracted increasing attention, as such approaches are more accurate and flexible in dealing with the interface and have shown remarkable success in various interface problems [32,33,34,35,36,37,38].

In [34], a deep-learning-based domain decomposition approach (DeepDDM) was introduced, which uses deep neural networks to discretize the subproblems produced by domain decomposition methods (DDMs) in order to solve PDEs with complicated interfaces in the computational domain. Wang [35] proposed a mesh-free method based on the DRM to solve interface problems with high-contrast discontinuous coefficients. He et al. [37] proposed a mesh-free method using piecewise DNNs for elliptic interface problems with discontinuous solutions and derivatives across the interface. To ensure that the solution is smooth in each subdomain, they approximate the solution using two neural networks that correspond to the two distinct subdomains. In [33], a conservative physics-informed neural network (cPINN) on discrete subdomains was proposed for nonlinear conservation laws. The computational domain is subdivided into discrete subdomains, with a different PINN applied in each subdomain. The conservation property of the cPINN is then attained by enforcing flux continuity in the strong form along the subdomain interfaces. A generalized space–time domain decomposition framework, named eXtended PINN (xPINN), was proposed in [32] to solve nonlinear PDEs on arbitrary complex-geometry domains. Wu et al. [38] performed a convergence analysis of neural networks combined with domain decomposition and gradient-enhanced strategies for solving second-order elliptic interface problems. It was demonstrated that, as the number of samples increases, the sequence of neural networks generated by minimizing a Lipschitz regularized loss function converges to the unique solution to the interface problem in

.

In this paper, we propose a soft-constraint physics-informed neural network to solve nonhomogeneous elliptic interface problems with discontinuous solutions and derivatives across the interface. Since the interface divides the domain into two disjoint parts, the solution may change dramatically across the interface. Instead of representing the approximate solution on the whole domain with a single DNN, we employ two DNNs, one for each of the two subdomains separated by the interface. The solution is approximated by PINNs, which incorporate the PDEs and the jump conditions on the interface into the loss function of the neural network. To improve computational efficiency on more challenging problems, an adaptive activation function and an adaptive sampling strategy are employed as the network is optimized during training. Lastly, we present several numerical experiments, in both 2D and 3D, to demonstrate the flexibility, efficacy, and accuracy of the proposed technique for interface problems.

The remainder of the paper is structured as follows. Section 2 describes the second-order elliptic interface problem and its three types of boundary conditions. The topology of neural networks with adaptive activation functions is discussed in Section 3. We present physics-informed neural networks with soft constraints in Section 4. Section 5 presents numerical results that demonstrate the efficacy of the proposed technique. Lastly, in Section 6, we draw some conclusions.

3. Mathematical Setup for Dual Neural Networks Structure

A feed-forward neural network of

L layers and

neurons in the

kth layer

, and

) is denoted by

.

and

are the weight matrix and bias vector in the

kth layer

, respectively. The input vector is denoted by

and the output vector at the

kth layer is denoted by

and

. The activation function is denoted by

and is applied layer-wise together with the scalable parameters

, where

n is the scaling factor. The extra parameters

modify the slope of the activation function in each hidden layer, which speeds up training. Through the slope recovery term, the activation slopes also enter the loss function; see [39,40] for more details. Such locally adaptive activation functions improve the network's capacity to learn, particularly during the initial training phase. This paper uses a scaling factor

for all hidden layers and an initialization

.

The

-hidden layer feed-forward neural network is defined by

and

, where, on the first layer, the activation function is identity.

is the collection of all weights, biases, and slopes and takes

as the parameter space.
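The layer-wise adaptive activation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in the paper: the function names, the Glorot-style initialization, the choice n = 1 with initial slopes 1/n, and the linear output layer are all assumptions made for the sketch.

```python
import math
import random


def sigmoid(t):
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-t))


def init_network(layer_sizes, n=1.0, seed=0):
    """Create weights, biases, and one trainable slope per hidden layer.

    layer_sizes = [d_in, N_1, ..., N_{L-1}, d_out]. Slopes start at 1/n so
    that n * a = 1 initially (an assumption of this sketch).
    """
    rng = random.Random(seed)
    weights, biases = [], []
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        bound = math.sqrt(6.0 / (fan_in + fan_out))  # Glorot-style range
        weights.append([[rng.uniform(-bound, bound) for _ in range(fan_in)]
                        for _ in range(fan_out)])
        biases.append([0.0] * fan_out)
    slopes = [1.0 / n] * (len(layer_sizes) - 2)
    return {"W": weights, "b": biases, "a": slopes, "n": n}


def affine(W, b, x):
    """Return W x + b for a list-of-rows matrix W."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def forward(params, x, activation=sigmoid):
    """Evaluate the network at one input point x (a list of floats)."""
    z = list(x)
    for k in range(len(params["a"])):
        pre = affine(params["W"][k], params["b"][k], z)
        scale = params["n"] * params["a"][k]  # layer-wise adaptive slope
        z = [activation(scale * p) for p in pre]
    return affine(params["W"][-1], params["b"][-1], z)  # linear output layer


params = init_network([2, 20, 20, 20, 1])
u = forward(params, [0.3, -0.1])
```

During training the slopes in `params["a"]` would be updated together with the weights and biases, so each hidden layer can steepen or flatten its activation as needed.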

However, unlike conventional approaches that use only a single DNN to approximate the solution over the entire domain, we use two DNN structures independently to estimate

and

on

and

, respectively. For

, a dual neural network is used to approximate

, as follows,

where

highlights the dependence of the neural network output

on

. Then, the global approximation of

can be defined as follows,

where

, if

.

emphasizes the dependence of the neural network output

on

.
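The piecewise construction described in this section can be written compactly as follows. The notation is chosen here for illustration only: $u_{\theta_1}$ and $u_{\theta_2}$ stand for the two sub-networks, $\Omega_1$ and $\Omega_2$ for the two subdomains, and $\theta$ for the combined parameter set.

```latex
u_\theta(x) =
\begin{cases}
u_{\theta_1}(x), & x \in \Omega_1,\\
u_{\theta_2}(x), & x \in \Omega_2,
\end{cases}
\qquad \theta = \{\theta_1, \theta_2\}.
```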

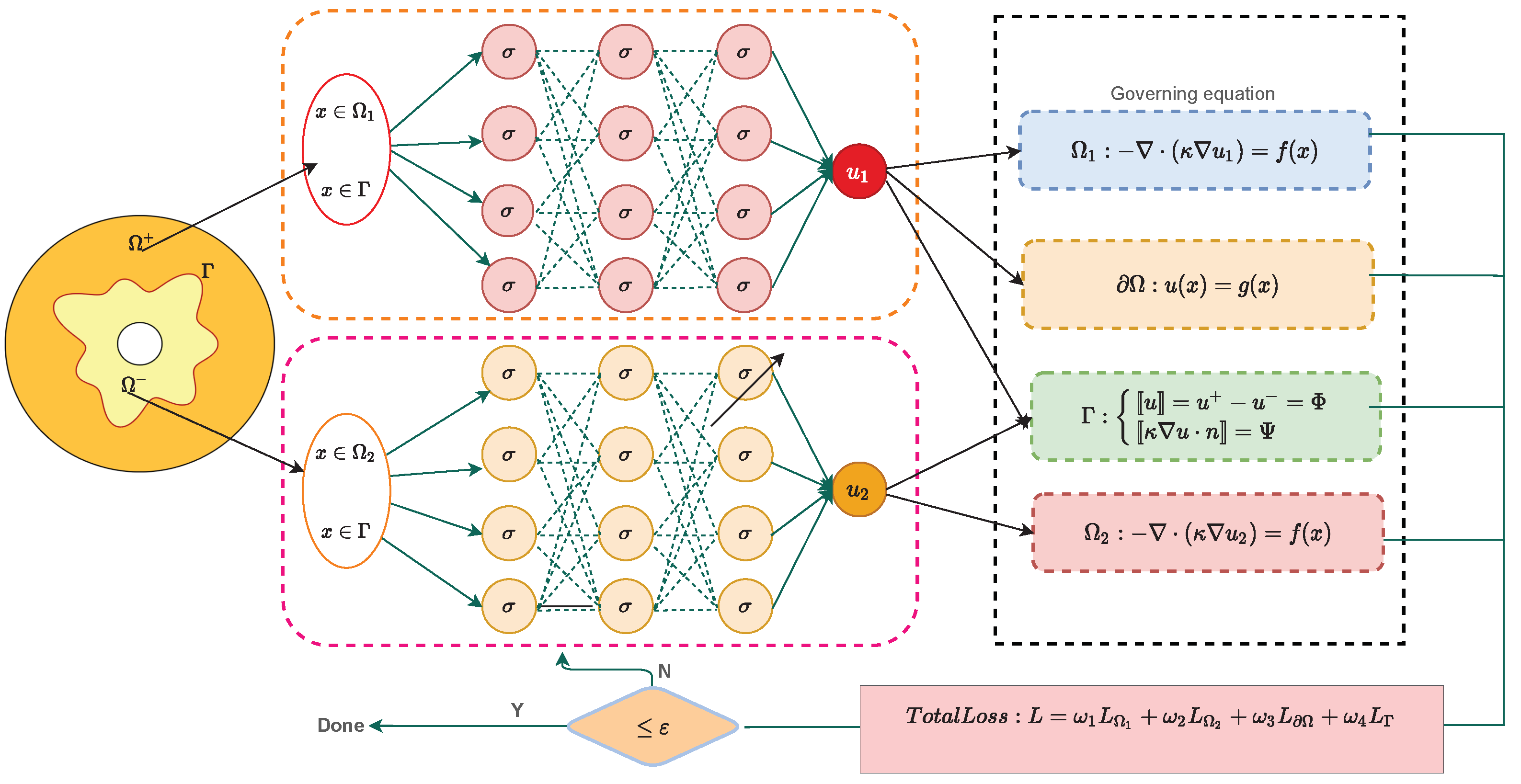

4. Physics-Informed Neural Networks with Soft Constraints

A key feature of a PINN is that it turns a PDE problem into an optimization problem by combining all available information, including the governing equations, empirical data, and initial/boundary conditions, into a loss function. The advantage of this approach is that it yields a meshless algorithm, because the differential operators in the governing PDE are evaluated by automatic differentiation. To ease training, the network should satisfy the PDE as closely as possible, which accelerates convergence and improves the accuracy of the solution. The dual neural network structure of the approximation

is shown in

Figure 1. As can be seen, the two networks approximate the solution in different subdomains. Each sampling point is classified according to its location, which determines the sub-network it is assigned to. This method can effectively approximate solutions that are singular or discontinuous along the material interface.
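The classification of sampling points can be sketched as follows. The star-shaped level-set function, its parameters `r0`, `r1`, and `omega`, and the assignment of the inner region to Sub-Net1 are hypothetical choices made only for this illustration; any function that is negative on one subdomain and positive on the other would serve.

```python
import math
import random


def level_set(x, y, r0=0.5, r1=0.15, omega=5):
    """Hypothetical star-shaped interface: phi < 0 inside, phi > 0 outside."""
    theta = math.atan2(y, x)
    return math.hypot(x, y) - (r0 + r1 * math.sin(omega * theta))


def split_points(points, phi=level_set):
    """Assign each sample point to the sub-network of its subdomain."""
    inside = [p for p in points if phi(*p) < 0.0]    # handled by Sub-Net1
    outside = [p for p in points if phi(*p) >= 0.0]  # handled by Sub-Net2
    return inside, outside


def dual_predict(point, net_inside, net_outside, phi=level_set):
    """Evaluate the global approximation by routing the point to one network."""
    return net_inside(point) if phi(*point) < 0.0 else net_outside(point)


rng = random.Random(0)
samples = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(1000)]
pts_in, pts_out = split_points(samples)
```

Because the routing depends only on the sign of the level-set function, the same helper works unchanged in three dimensions once `level_set` takes three coordinates.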

For a forward problem, we define the loss function as

where

and

are the losses corresponding to the residuals of governing equations in subdomains

and

, respectively.

is the loss due to the boundary condition,

is the loss due to the interface conditions, and

is the loss corresponding to label data (if any).

where

and

are the collocation points randomly distributed in the domain

and

,

are the boundary condition points,

are the interface condition points, and

are sample data (if any).

,

,

,

,

denote the total number of collocation points in two different regions, interface points, boundary points and labeled data, respectively. The weights

,

,

,

and

balance the residuals of the two governing equations, the interface conditions, the boundary conditions, and the labeled data, respectively. The weights in PINNs are crucial for trainability and can be set manually or tuned automatically [41,42,43].
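The structure of such a weighted loss can be illustrated with a small sketch. It assumes that each component is a mean of squared pointwise residuals, which is the usual choice for PINNs but is an assumption here; the component names, the toy residual values, and the weights are all hypothetical.

```python
def mean_square(values):
    """Mean of squared residuals; an empty set contributes nothing."""
    return sum(v * v for v in values) / len(values) if values else 0.0


def total_loss(residuals, weights):
    """Weighted sum of mean-squared residuals over all loss components.

    residuals: dict mapping a component name to a list of pointwise residuals.
    weights:   dict mapping the same names to non-negative balance weights.
    """
    return sum(weights[name] * mean_square(res) for name, res in residuals.items())


residuals = {
    "pde_1": [0.1, -0.2],       # PDE residuals at collocation points in subdomain 1
    "pde_2": [0.05, 0.0, 0.1],  # PDE residuals at collocation points in subdomain 2
    "interface": [0.3],         # jump-condition residuals on the interface
    "boundary": [0.0, 0.1],     # boundary-condition residuals
    "data": [],                 # no labeled data in this toy case
}
weights = {"pde_1": 1.0, "pde_2": 1.0, "interface": 10.0, "boundary": 10.0, "data": 1.0}
loss = total_loss(residuals, weights)
```

In this toy case the interface term dominates the total, which shows how larger interface and boundary weights steer the optimizer toward satisfying those conditions first.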

The basic idea is to train a neural network to approximate the solution of the PDE by minimizing the physics-informed loss function, which combines the residuals of the PDEs with the interface and boundary conditions, as follows:

where

is the minimizer and

is the related DNN approximation.

5. Numerical Experiments

Several numerical tests for elliptic interface problems in two and three dimensions were carried out using the present soft-constraint PINNs. The intricate interface geometries in these tests pose a significant challenge to the robustness of traditional numerical schemes. In addition, the high computational cost of typical numerical approaches for three-dimensional problems is a significant obstacle when dealing with complex interfaces. This section therefore highlights the benefits and potential utility of deep learning approaches for such difficult cases. The setup used in the examples below aims to demonstrate the robustness and efficacy of the proposed algorithm, even with a small network architecture. The sigmoid is used as the activation function, and training is terminated when the stopping criterion

is satisfied (the

tolerance was set to at least

). As in standard machine learning practice, cross-validation was used; that is, we measured the accuracy of the solution by the test error rather than the training error. The

and

norm errors are calculated by randomly selecting test points

located in

as

where

was the function obtained by the present PINN.
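A test-error computation of this kind can be sketched as below. The sketch assumes a maximum-norm error and a relative L2 error evaluated over a finite set of test points; these definitions, the function name, and the toy data are assumptions for illustration.

```python
import math


def error_norms(u_pred, u_exact):
    """Return (max-norm error, relative L2 error) over paired test values."""
    diffs = [p - e for p, e in zip(u_pred, u_exact)]
    linf = max(abs(d) for d in diffs)
    rel_l2 = math.sqrt(sum(d * d for d in diffs) / sum(e * e for e in u_exact))
    return linf, rel_l2


# Toy check: u(x) = x^2 against a "prediction" with a constant offset of 0.01.
xs = [0.1 * i for i in range(1, 11)]
exact = [x * x for x in xs]
pred = [e + 0.01 for e in exact]
linf, rel_l2 = error_norms(pred, exact)
```

For a dual-network solution, `u_pred` would be assembled by evaluating each test point with the sub-network of the subdomain that contains it.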

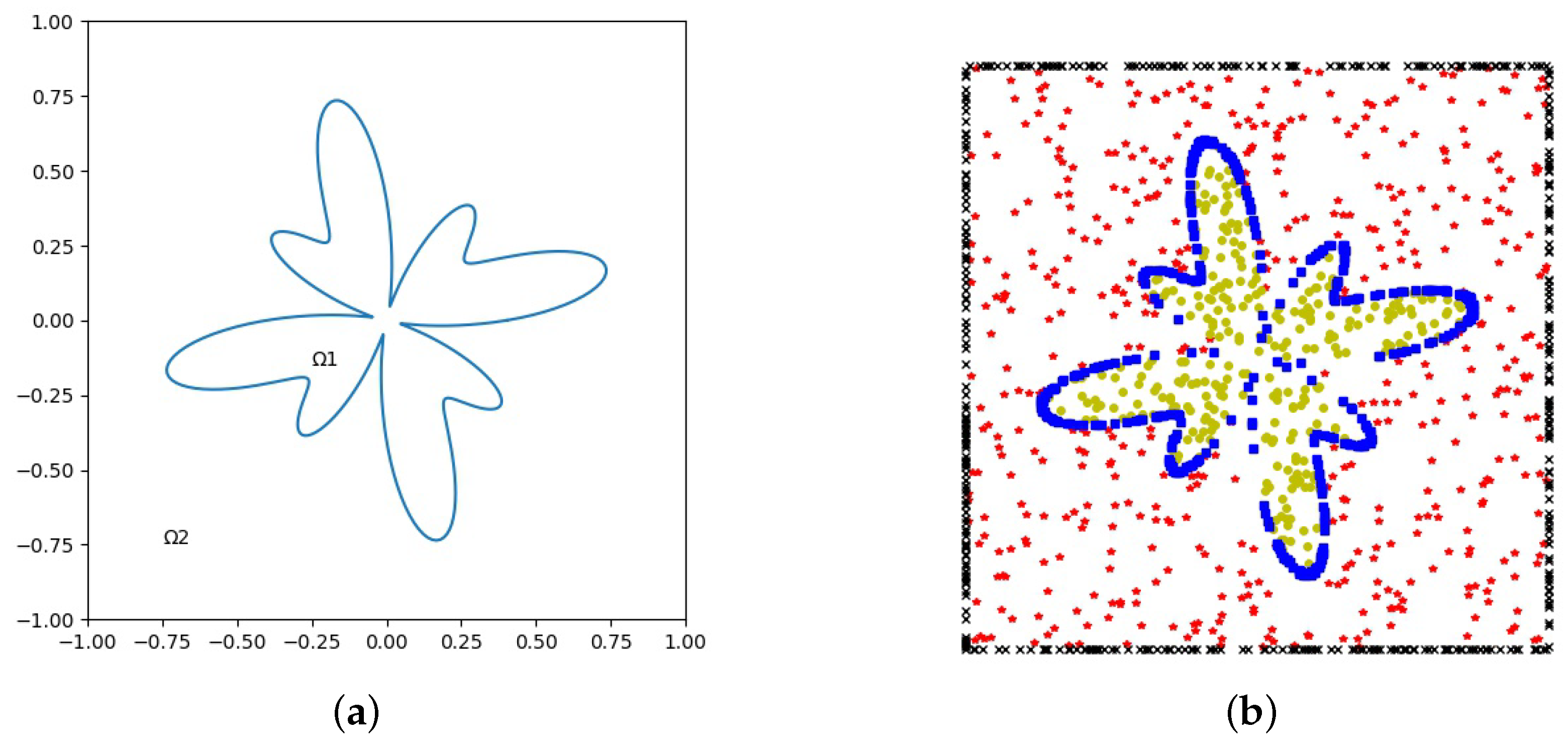

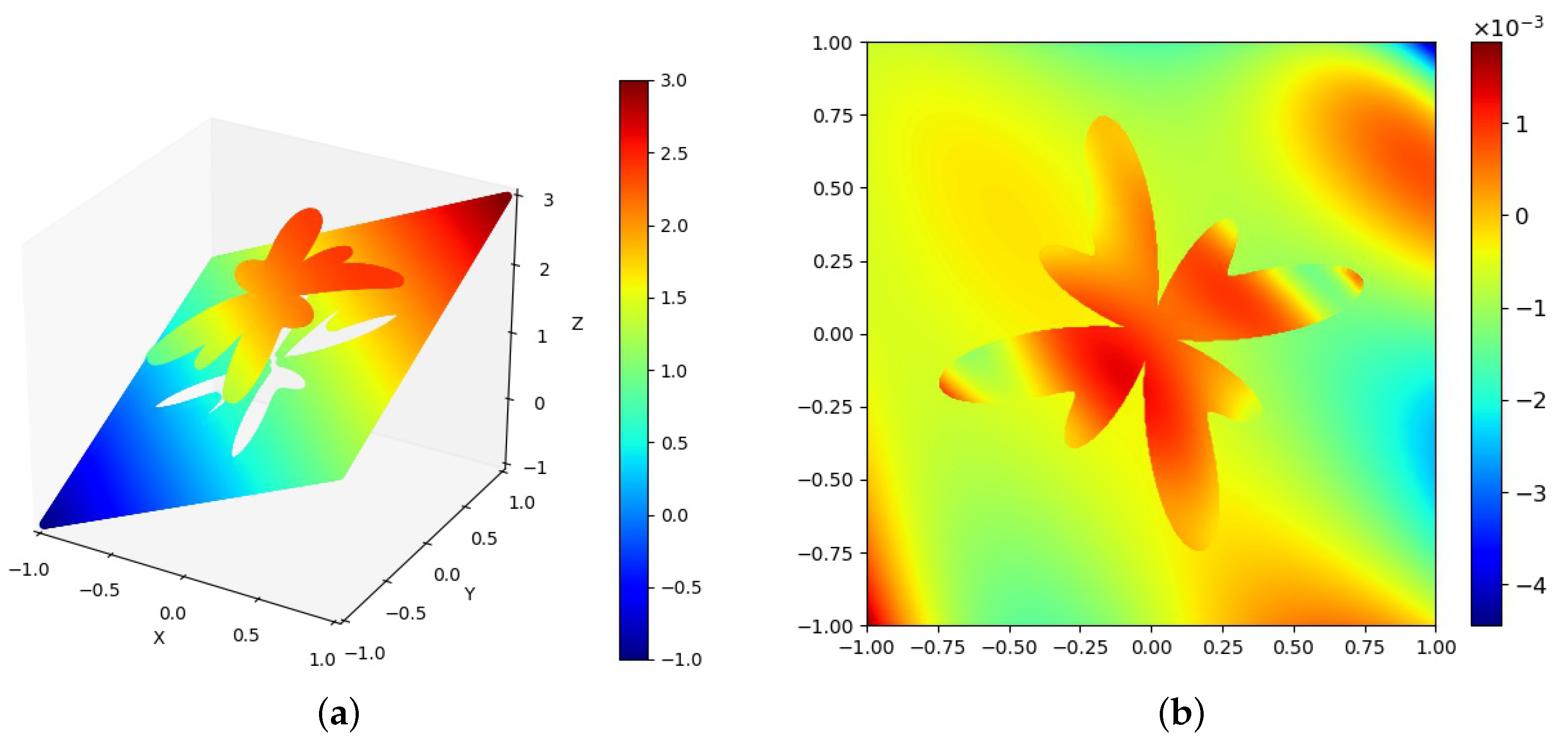

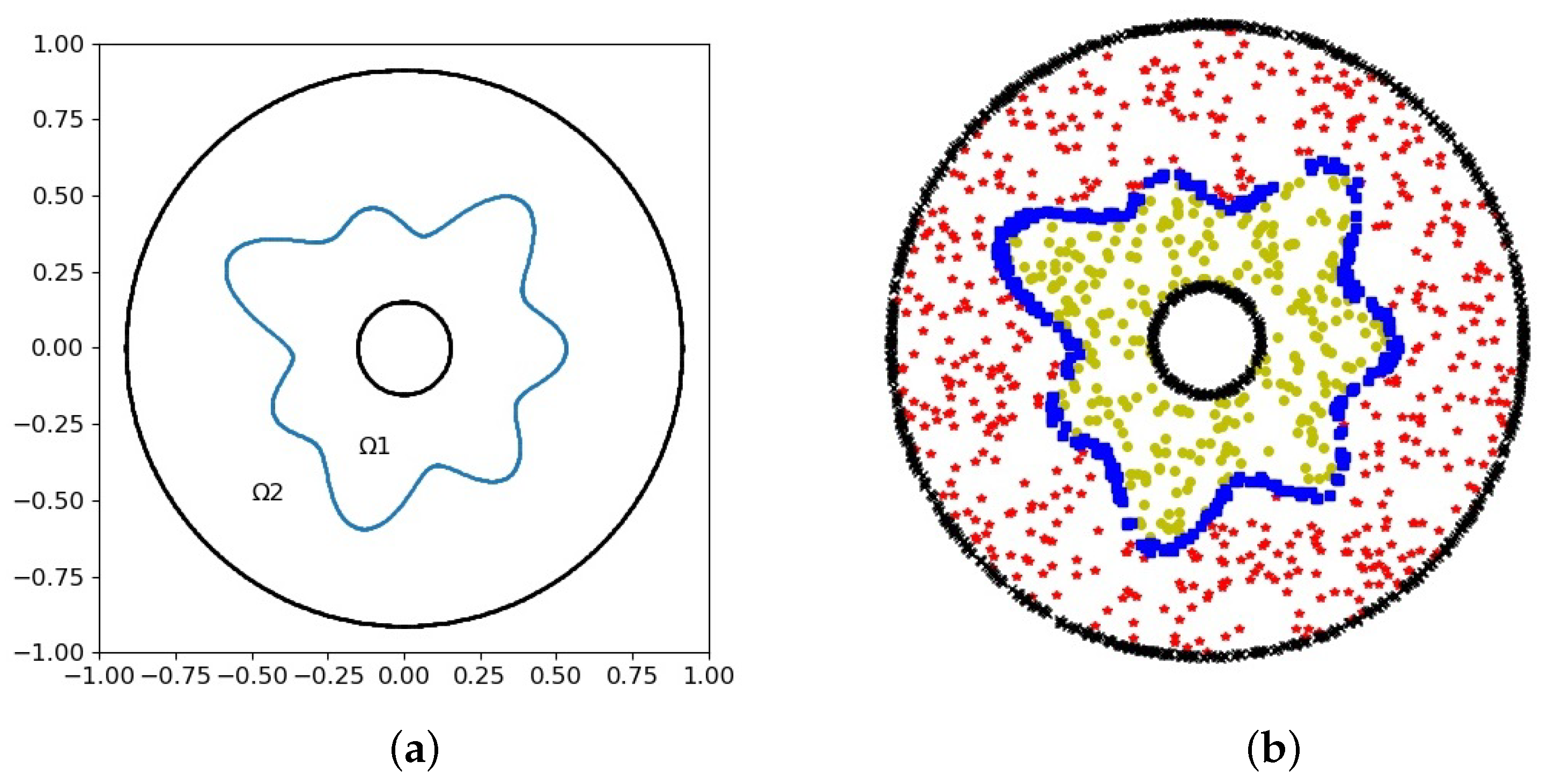

Example 1. This example uses a complicated interface given in parametric form where , , and . The coefficients and the solutions are given as follows. A dual neural network with 3 layers and 20 neurons in each layer was used. The geometry of interface

and subdomains is illustrated in

Figure 2a. Uniformly sampled points with

on the domain

,

on the domain

and

on the interface

, and

on the boundary

, were used to calculate the numerical solution, see

Figure 2b. The balance weights in the loss function were chosen as

,

,

,

. The PINN solution is shown in

Figure 3a. The error between PINN and exact solutions is shown in

Figure 3b. It can be seen that the presented PINN was able to approximate the solution of the interface problem, and the relative error between the numerical and the analytical solution was between

and

.

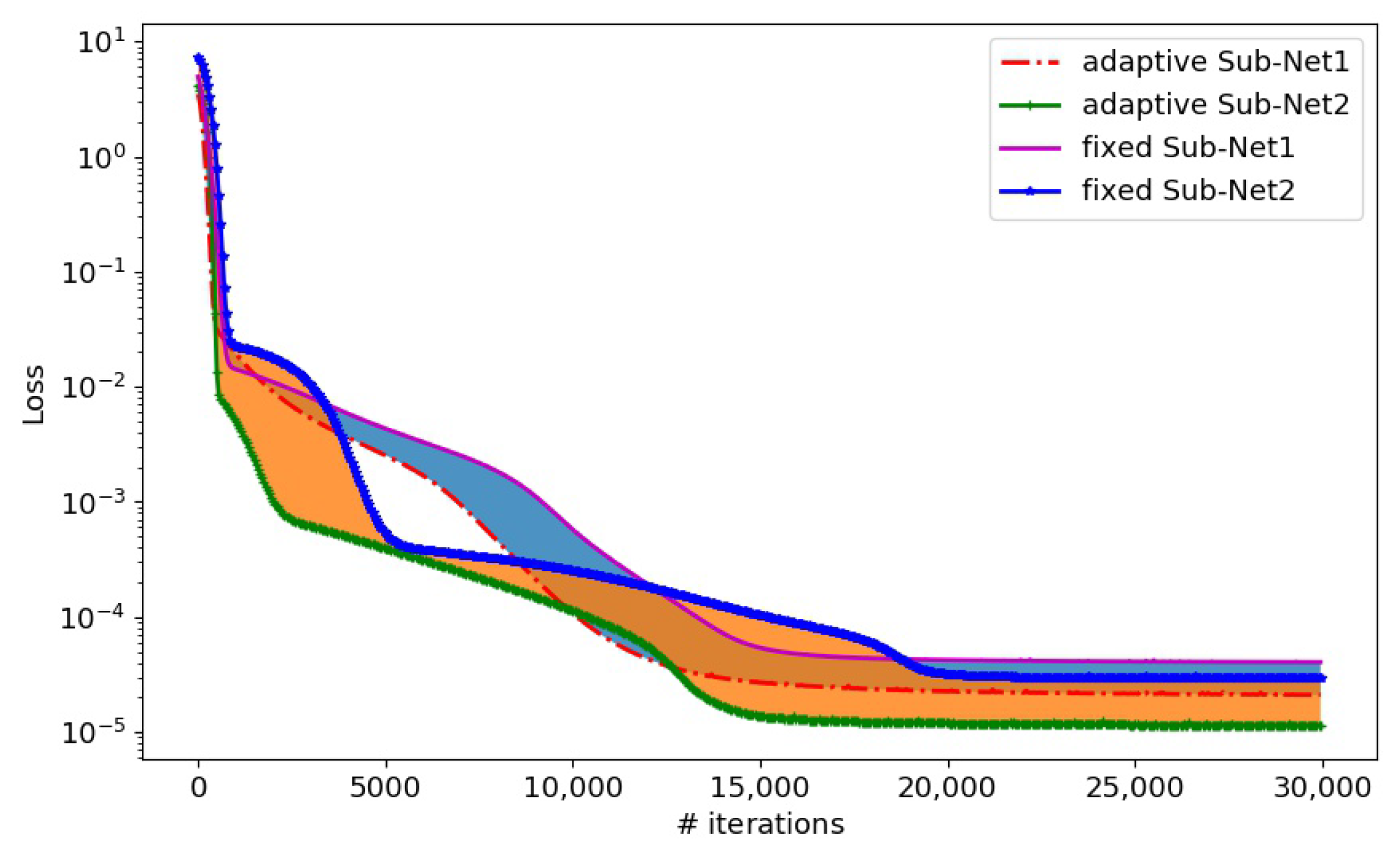

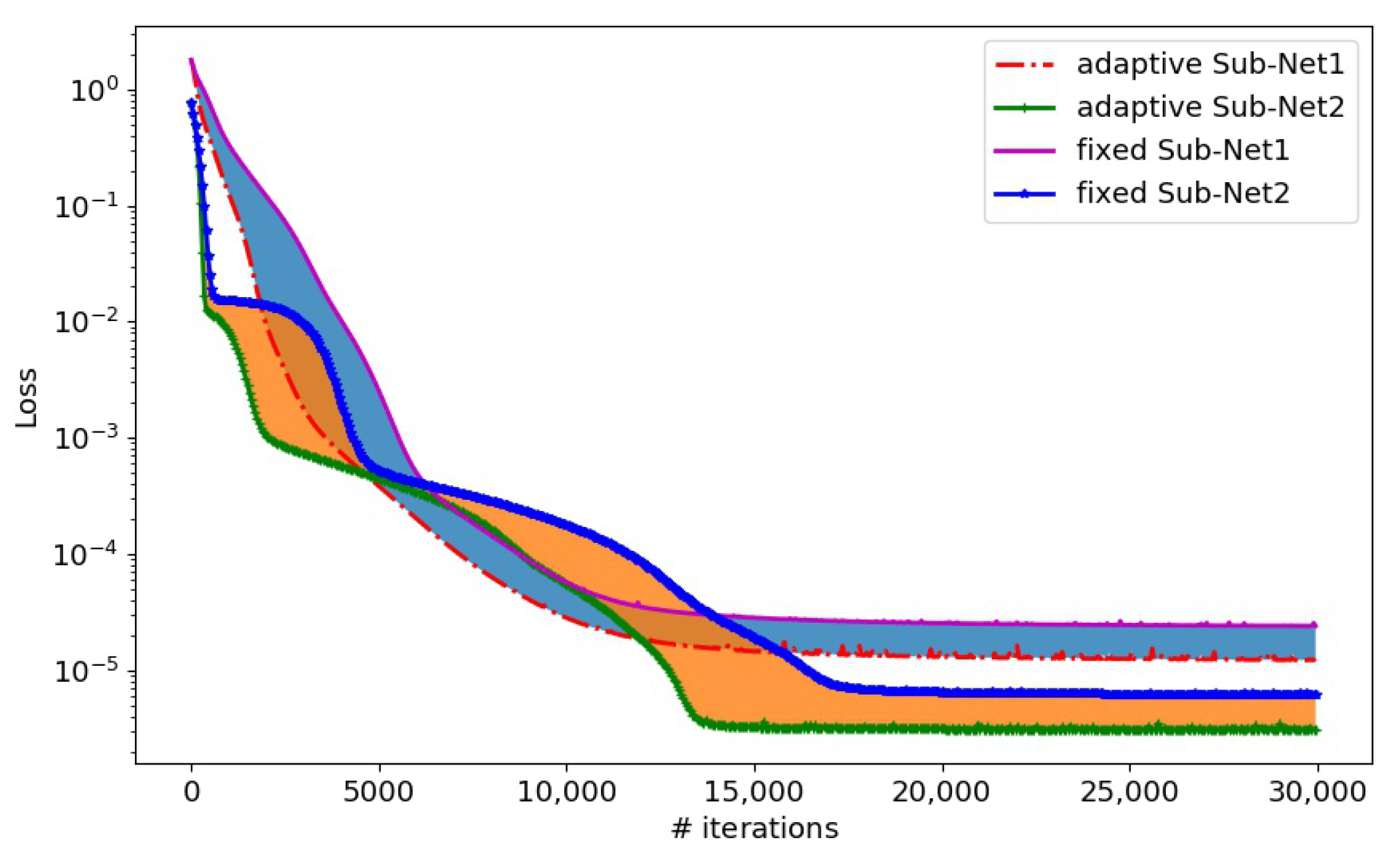

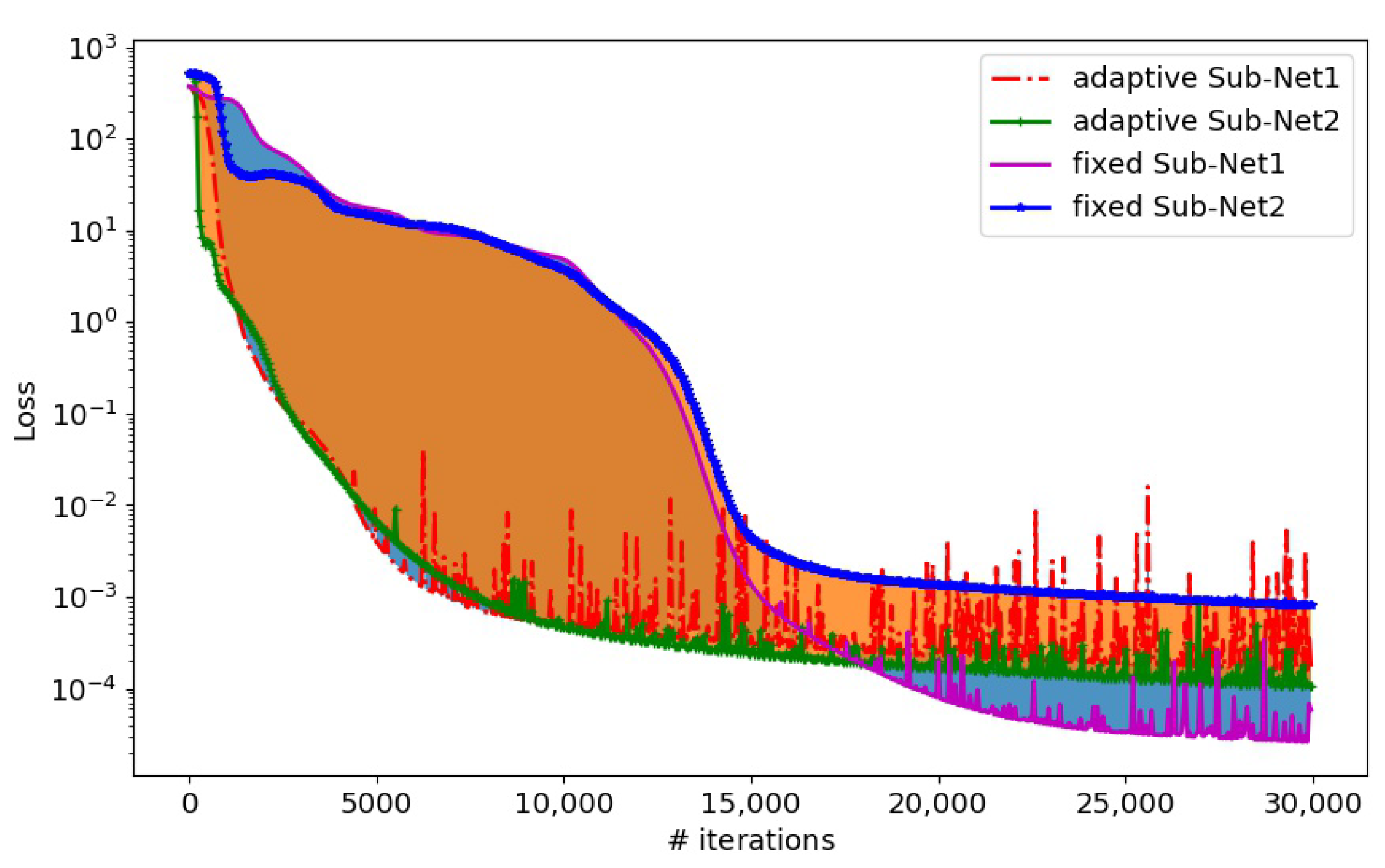

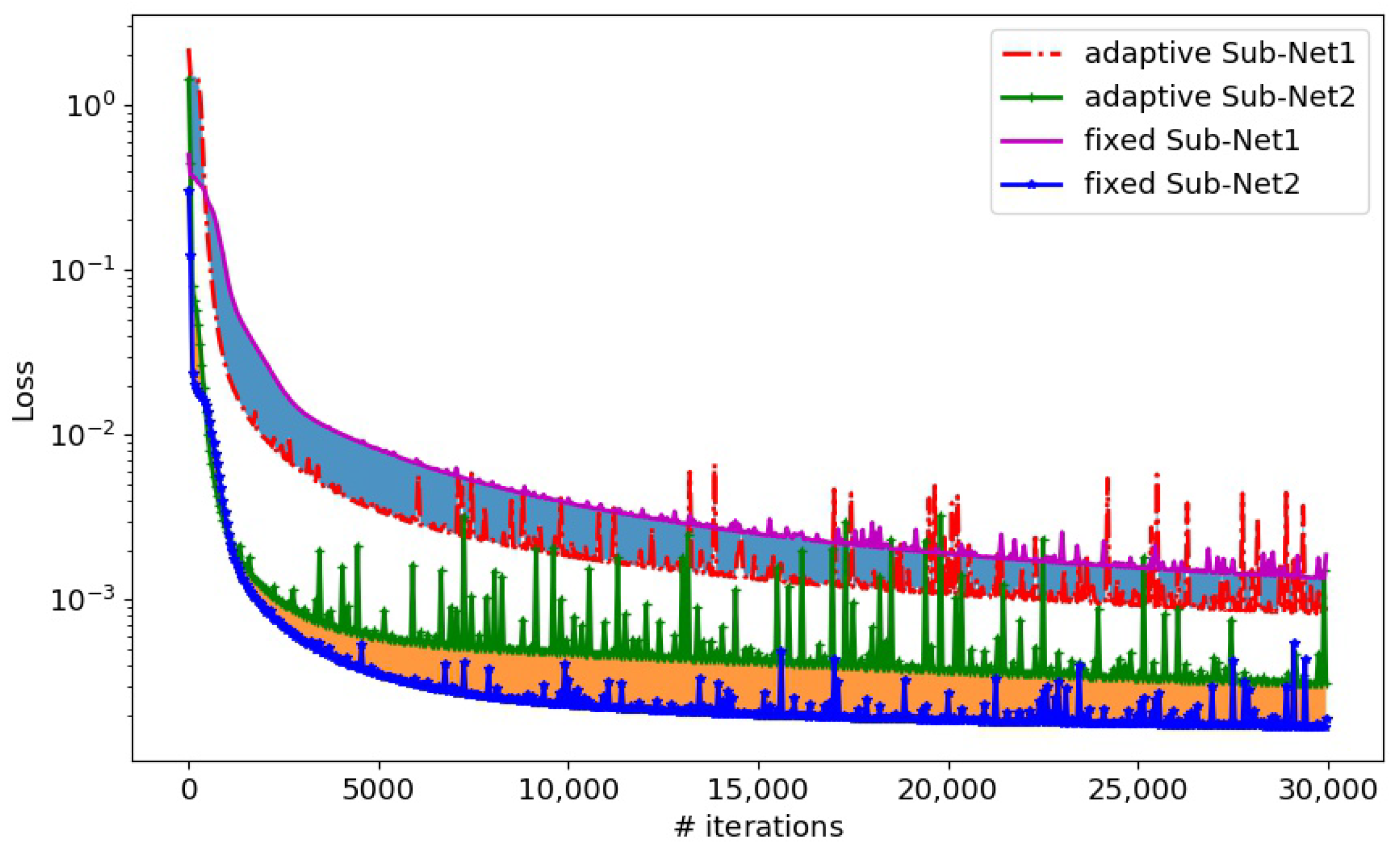

Figure 4 presents the evolution process of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively. It is shown that the adaptive activation function was beneficial in improving the convergence speed of the loss function in the process of deep learning.

Table 1 shows the relative

errors for different numbers of training points under the adaptive and fixed activation functions.

,

,

and

are the loss errors at

,

, interface

and boundary

, respectively. It can be seen that the error decreased as the number of training points on the boundary and interface increased, and that the adaptive activation function gave a smaller error than the fixed activation function.

Table 2 presents the relative

errors for the same training points with different numbers of neurons. The table shows that the accuracy increased with the number of neurons, but so did the computation time. Therefore, an appropriate network depth and width should be selected to balance accuracy and efficiency.
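The growth in cost with network width can be made concrete by counting trainable parameters. The sketch below assumes fully connected layers, reads "3 layers" as three hidden layers, and adds one adaptive slope per hidden layer; these readings and the function name are assumptions for illustration.

```python
def count_parameters(d_in, hidden_width, n_hidden, d_out=1, adaptive_slopes=True):
    """Count weights, biases, and optional per-layer slopes of a dense network."""
    sizes = [d_in] + [hidden_width] * n_hidden + [d_out]
    n_weights = sum(a * b for a, b in zip(sizes[:-1], sizes[1:]))
    n_biases = sum(sizes[1:])
    n_slopes = n_hidden if adaptive_slopes else 0
    return n_weights + n_biases + n_slopes


# Total for a dual network (two identical sub-networks) at several widths.
for width in (10, 20, 40):
    print(width, 2 * count_parameters(d_in=2, hidden_width=width, n_hidden=3))
```

The count grows roughly quadratically with the width because of the hidden-to-hidden weight matrices, which is consistent with the increase in computation time noted above.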

Example 2. This example used the same parametric interface equation as in Example 1. The problem setting, the analytical solution, and the balance weights in the loss function were the same as in the previous example. Here, , , , and . The geometry of interface Γ and subdomains is illustrated in Figure 5a. The numerical solution was calculated on the uniformly sampled points with , , , and , see Figure 5b. The PINN solution is shown in Figure 6a. The relative error in norm is plotted in Figure 6. It can be seen that the present method effectively approximated the solution of the interface problem, and the range of error was between to . This example shows that, even with uniform sampling points, the present dual neural network technique could produce sufficiently accurate approximations for two-dimensional elliptic problems with complex interface geometry. Figure 7 displays the evolution of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively. The adaptive activation function improved the convergence speed of the loss function during training. Table 3 compares the relative

errors for different numbers of training points with adaptive and fixed activation functions, respectively. The error decreased as the number of training points on the boundary and interface increased, and the adaptive activation function gave a smaller error than the fixed activation function.

Table 4 shows the relative

errors of the same training points with a different number of neurons. It is shown that the calculation accuracy increased with increase of the number of neurons, but the calculation time cost increased accordingly.

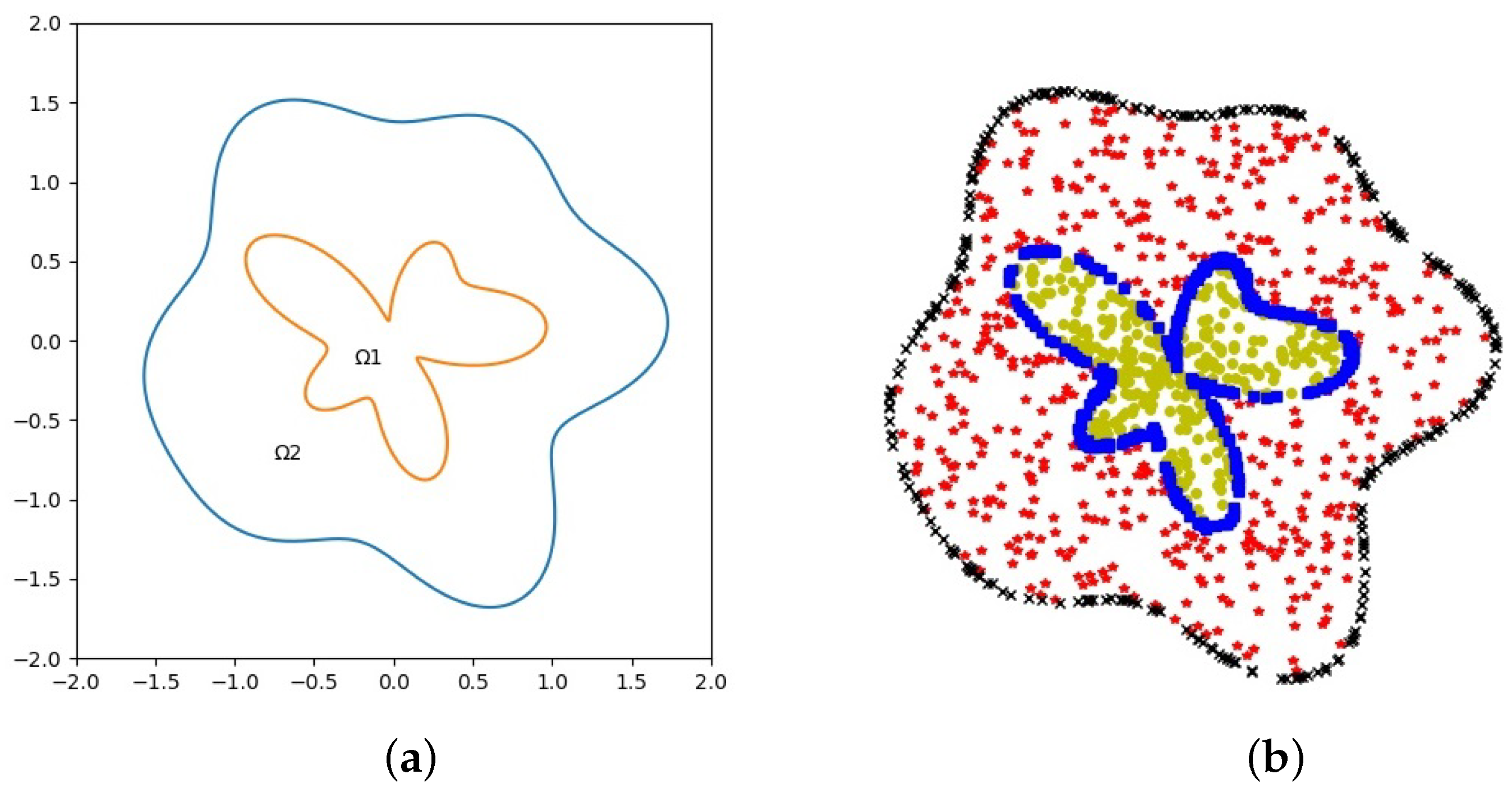

Example 3. Consider the following level set function to describe an annular region with inner and outer radii and with an immersed star interface, with parameters: We tested the problem on dual neural networks. Each neural network had 3 layers and each layer had 20 neurons. The geometry of interface

and subdomains is illustrated in

Figure 8a. The numerical solution was calculated on the uniform sampled points with

,

,

, and

,

, see

Figure 8b. The PINN solution is shown in

Figure 9a. The error between PINN and the exact solutions is shown in

Figure 9b. It can be seen that the presented method effectively approximated the solution of the interface problem, and the range of error was from

to

.

Figure 10 shows the evolution process of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively.

Table 5 compares the relative

errors with a different number of training points under adaptive and fixed activation functions. We can see that the error decreased with increase in the number of training points on the boundary and interface, and the error of the adaptive activation function method was better than that of the fixed activation function.

and

were the loss errors on the internal and the outer boundary, respectively.

Table 6 illustrates the relative

errors of the same training points with a different number of neurons. It is shown that the calculation accuracy increased with increase in the number of neurons.

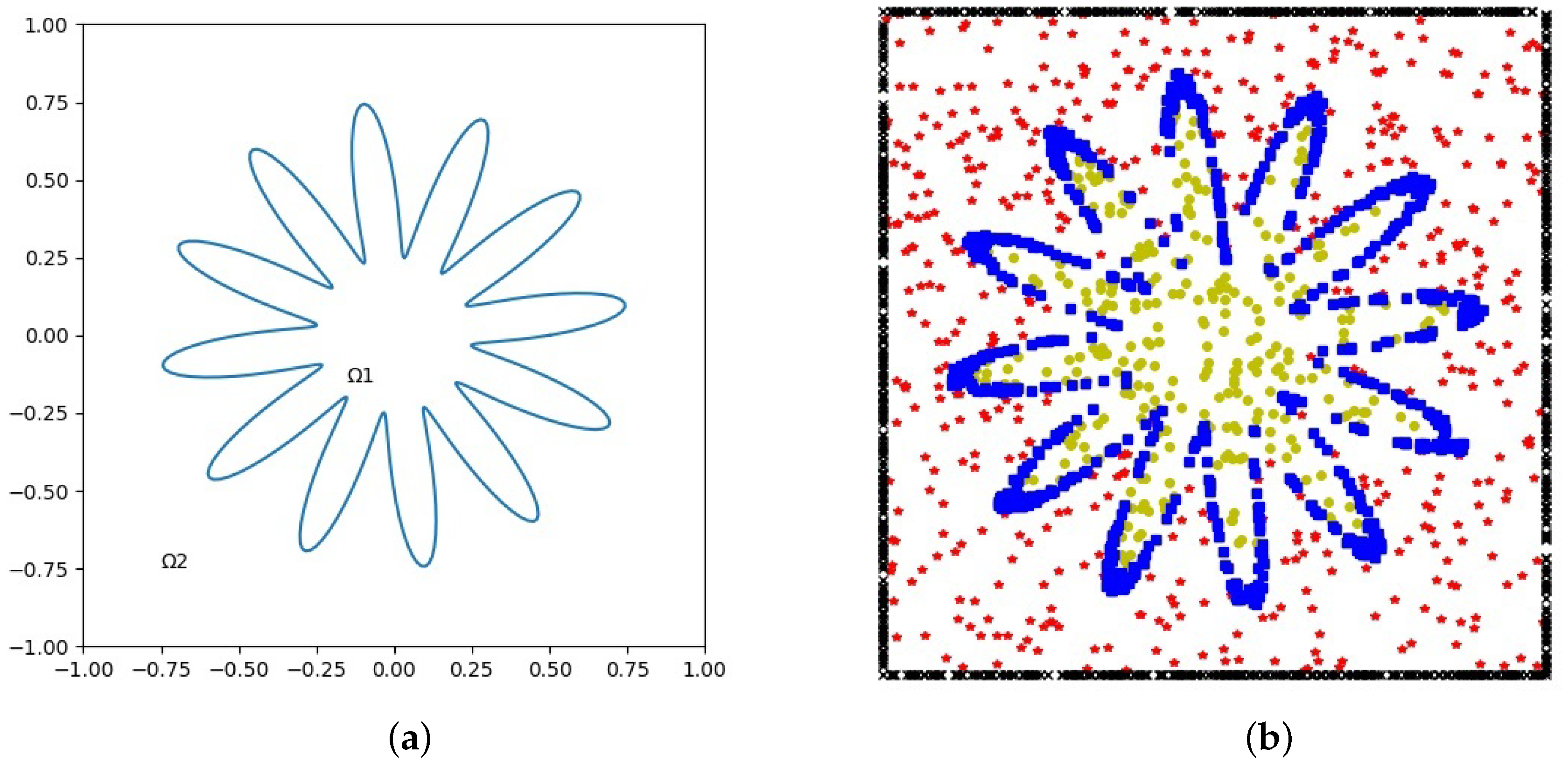

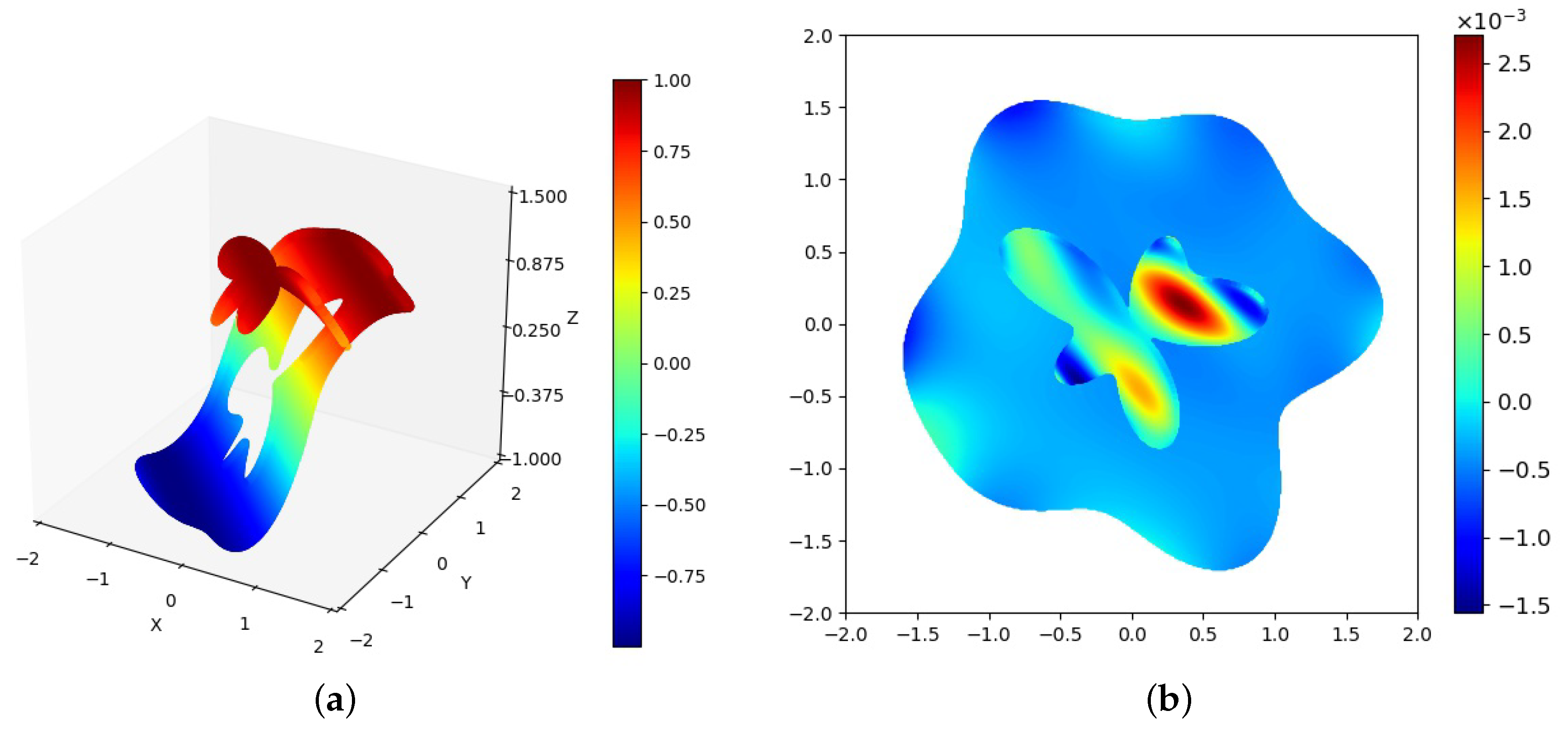

Example 4. A two-dimensional elliptic problem with irregular interface geometry.

We tested an interface problem with variable coefficients, and the computational domain is shown in

Figure 11. The boundary is given in polar coordinates

, where

, and the boundary points are obtained as

and

. The computational domain is further divided into two highly irregular, non-convex subdomains, where the interface is given by

and the corresponding interface points are obtained as

and

. The coefficient

is defined as

The necessary forcing term f, boundary and interface conditions are derived from the exact solution.

The geometry of interface

and subdomains is illustrated in

Figure 11a. The numerical solution was calculated on the uniform sampled points with

,

,

, and

, see

Figure 11b. The balance weights in this example were chosen as

,

,

,

,

. The PINN solution is shown in

Figure 12a. The error between PINN and exact solutions was between

and

, as shown in

Figure 12b.

Figure 13 shows the evolution process of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively.

Table 7 compares the relative

errors with different numbers of training points under adaptive activation function and fixed activation function. We can see that the error decreased with increase in the number of training points on the boundary and interface, and the error of the adaptive activation function method was better than that of the fixed activation function.

Table 8 illustrates the relative

errors of the same training points with a different number of neurons. It is shown that the calculation accuracy decreased with increase in the number of neurons, but the calculation time cost increased accordingly.

Example 5. Constant gradient jump over a flat interface.

We also tested a more complicated three-dimensional cube interface problem. Along the

z-axis, there were two zones that made up the cube. The conductivity values of the bottom and top sections were 1 and 8 W/m·K, respectively, and the material interface was planar. The prescribed temperatures were 5 and 10 °C along the top and bottom surfaces, respectively, while the remaining surfaces were insulated.

Figure 14 depicts the problem’s size and boundary conditions.
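The role of the flux jump condition at a planar interface can be illustrated with a simplified one-dimensional calculation. The sketch below drops the distributed heat source of this example and assumes two layers of equal thickness 0.5; both simplifications, and the function name, are assumptions made only for illustration. The conductivities and surface temperatures are those quoted above.

```python
def interface_temperature(k_bottom, k_top, t_bottom, t_top, h_bottom, h_top):
    """Steady 1D conduction through two layers with no heat source.

    Flux continuity at the interface, k_bottom * dT/dz = k_top * dT/dz,
    is equivalent to two thermal resistances in series.
    """
    resistance = h_bottom / k_bottom + h_top / k_top
    flux = (t_bottom - t_top) / resistance  # heat flux, positive upward
    t_interface = t_bottom - flux * h_bottom / k_bottom
    return flux, t_interface


# Conductivities and surface temperatures from the text; equal layer
# thicknesses of 0.5 are an assumption made only for this illustration.
flux, t_mid = interface_temperature(1.0, 8.0, 10.0, 5.0, 0.5, 0.5)
```

The temperature is continuous at the interface while its gradient jumps by the conductivity ratio of 8, which is the kind of kink that a single smooth network struggles to represent and that motivates one sub-network per material.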

With the origin of the Cartesian coordinates system located at the lower front corner of the cubic domain, a distributed heat source

was applied, yielding the following exact temperature field in the domain:

We adopted a dual neural network structure, which included two neural networks. Each network had 3 layers, and each layer had 20 neurons. The numerical solution was calculated on the uniform sampled points with

,

,

, and

,

.

Figure 15a shows the PINN solution. The balance weights in this problem were chosen as

,

,

,

,

. The error between PINN and exact solutions is shown in

Figure 15b and the error range was between

and

. This method was shown to accurately approximate the solution of three-dimensional interface problems.

Figure 16 shows the evolution of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively. This example demonstrates the effectiveness of the presented algorithm for complex three-dimensional problems with uniform sampling points.

Table 9 compares the relative

errors with different numbers of training points under the adaptive activation function and the fixed activation function. We can see that the error decreased with increase in the number of training points on the boundary and interface, and the error of the adaptive activation function method was better than that of the fixed activation function.

Table 10 illustrates the relative

errors of the same training points with a different number of neurons. It was shown that the calculation accuracy decreased with increase in the number of neurons, but the calculation time cost increased accordingly.

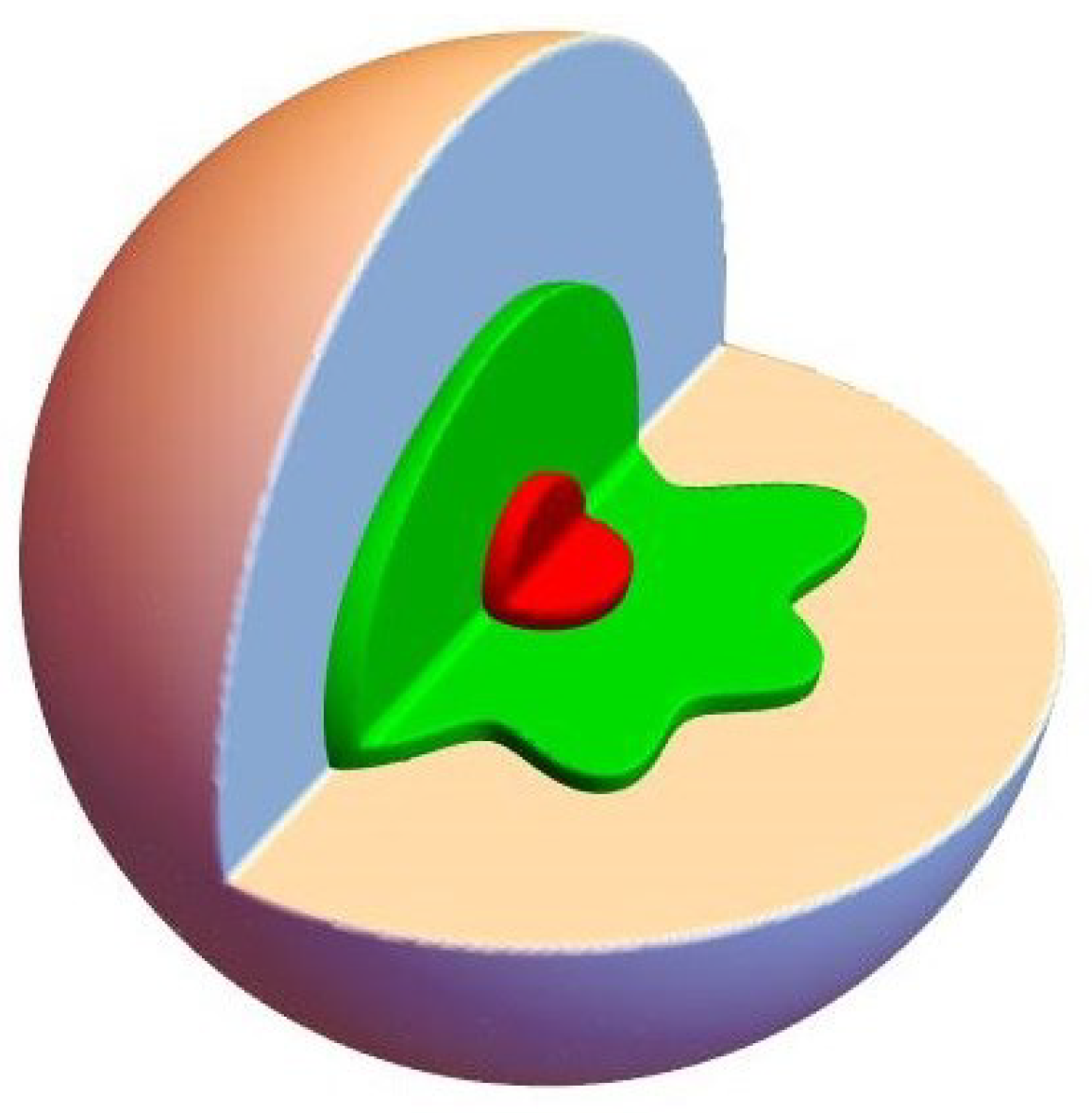

Example 6. Three-dimensional sphere with a complicated inner region.

Consider a three-dimensional spherical shell with internal and exterior radii of

and

, respectively, in which there is an immersed-type complex star interface and the interface geometry is given by the level set function shown below.

The exact solution is taken as follows, with the same parameters (10) as for the two-dimensional case.

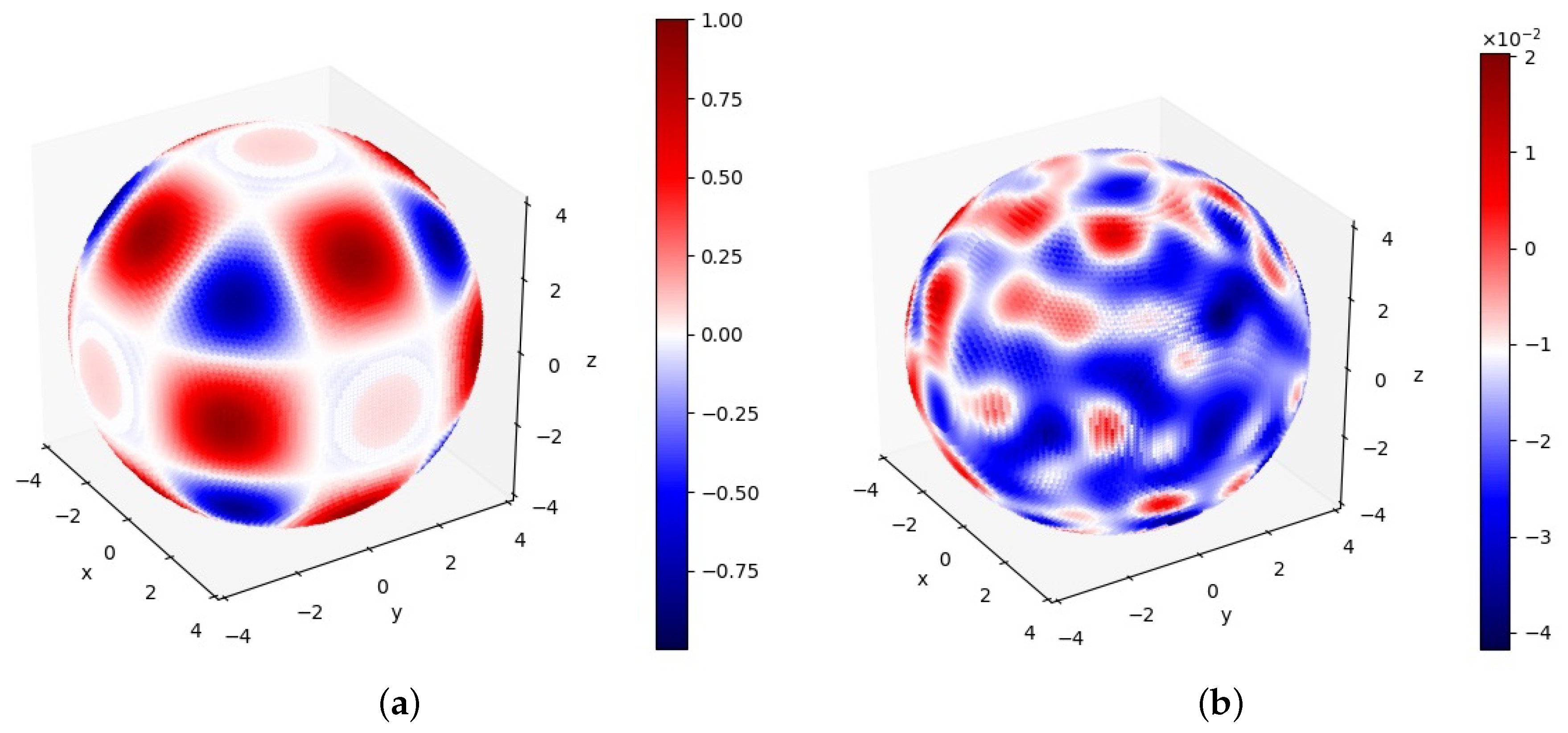

The problem geometry is illustrated in

Figure 17.

The numerical solution is calculated on the uniform sampled points with , , , and , . The balance weights in the loss function were chosen as , , , , .

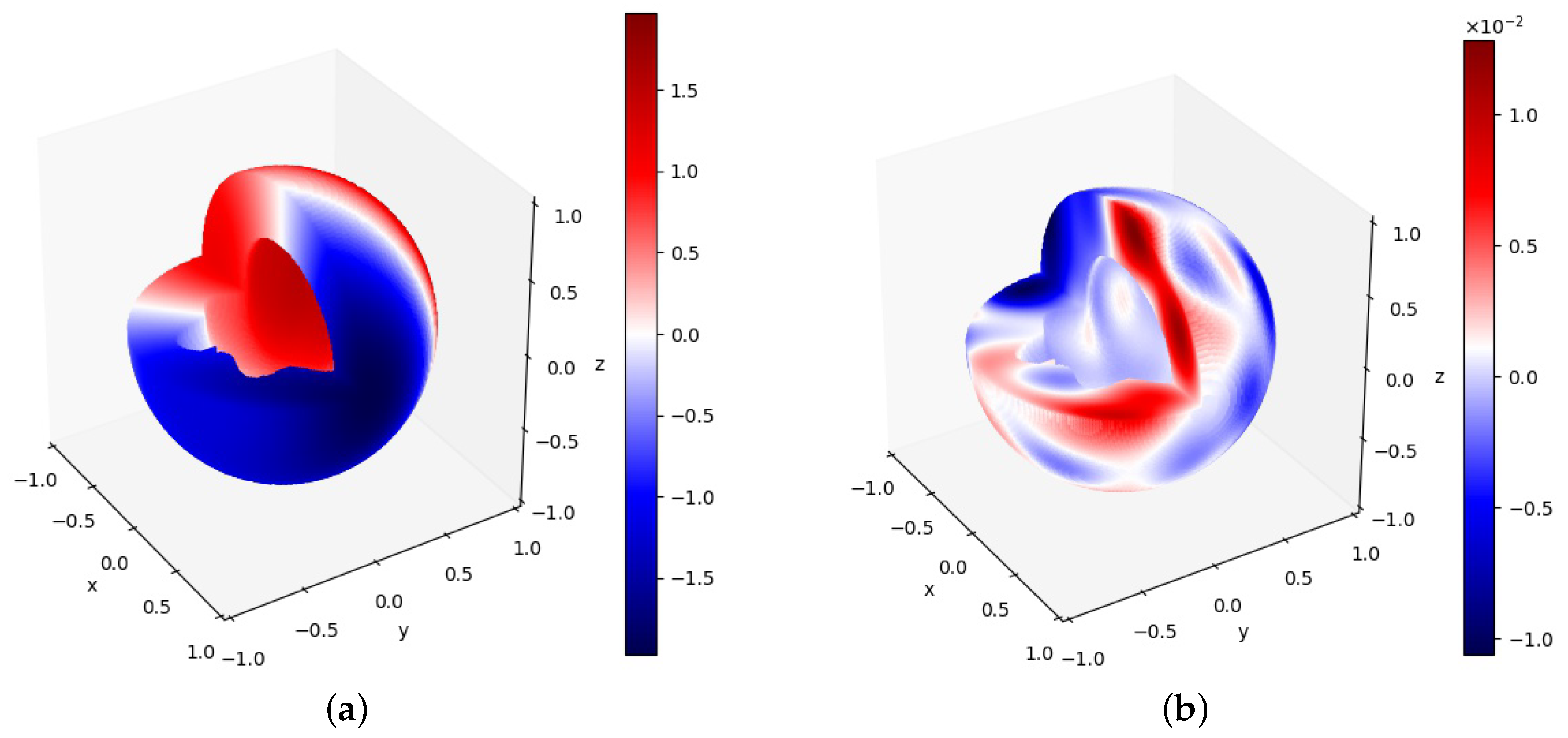

Figure 18a depicts the PINN solution on the domain inside the interface. The error between PINN and the exact solutions in the internal domain is shown in

Figure 18b. The relative error range of the internal domain was between

and

.

Figure 19a depicts the PINN solution on the domain outside the interface. The error between PINN and the exact solutions in the external domain is shown in

Figure 19b. It can be seen that the relative error range of the external domain was between

and

.

Figure 20 shows the evolution process of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively.

Table 11 compares the relative

errors with different numbers of training points under the adaptive activation function and the fixed activation function. We can see that the error decreased with increase in the number of training points on the boundary and interface, and the error of the adaptive activation function method was better than that of the fixed activation function.

Table 12 illustrates the relative

errors of the same training points with a different number of neurons. It is shown that the calculation accuracy decreased with increase in the number of neurons, but the calculation time cost increased accordingly.

Example 7. Consider a sphere with an inner doughnut. The level set function of the inner doughnut interface is given as: The elliptic coefficients and exact solution are given as before: In this problem, a dual neural network structure with two neural networks was adopted. Each network had 4 layers, and each layer had 20 neurons. The numerical solution was calculated on the uniformly sampled points with

,

,

, and

. The balance weights were chosen as

,

,

,

,

.

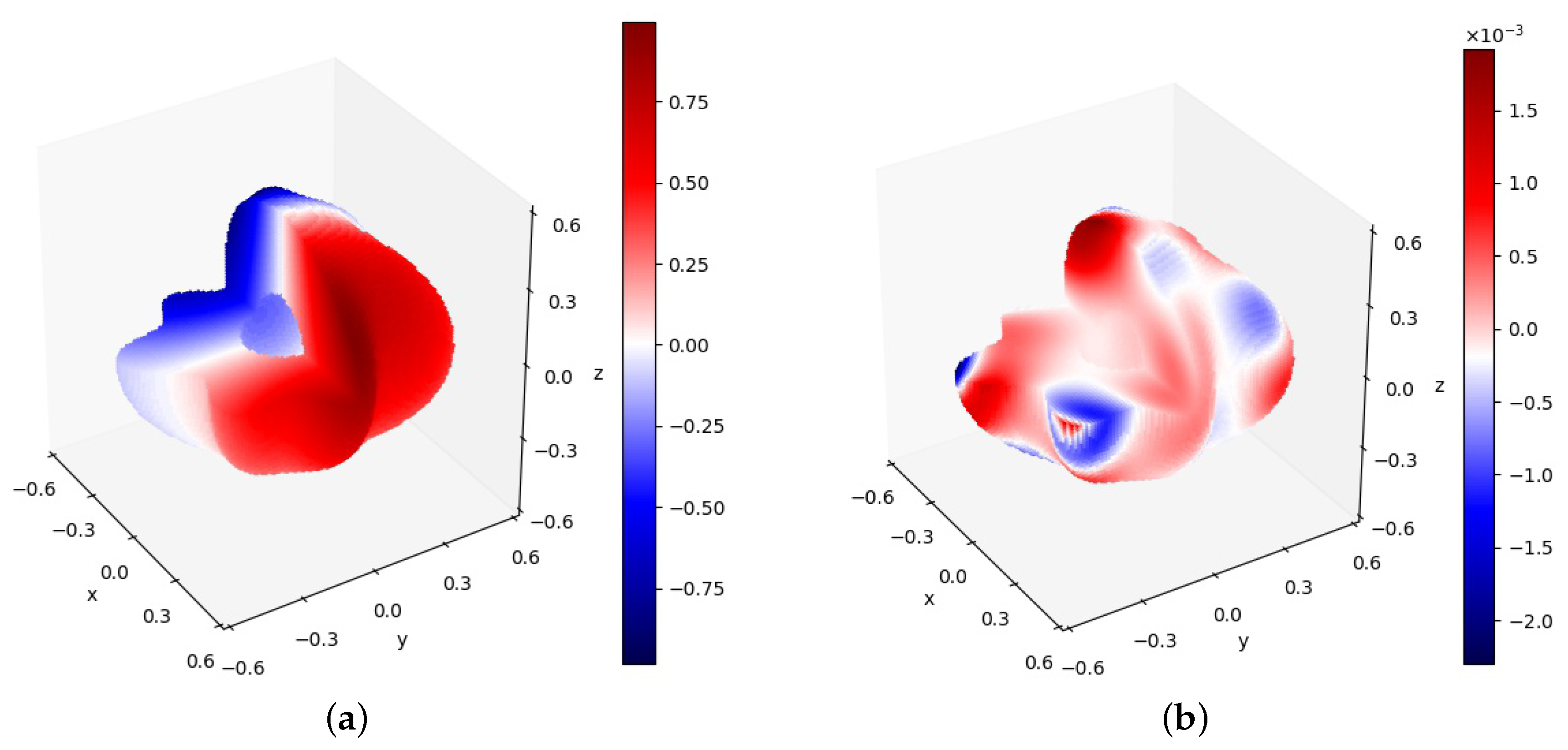

Figure 21a shows the PINN solution within the interface. The error between PINN and the exact solutions on the internal domain is shown in

Figure 21b. It is shown that the relative error range of the internal domain was between

and

.

Figure 22 plots the PINN solution outside the interface. The error between PINN and the exact solutions on the external domain is shown in

Figure 22b. It can be seen that the relative error range of the external domain was between

and

.

Figure 23 shows the evolution process of the loss function with adaptive and fixed activation functions for Sub-Net1 and Sub-Net2, respectively.

Table 13 compares the relative

errors with different numbers of training points under the adaptive activation function and the fixed activation function. We can see that the error decreased with increase in the number of training points on the boundary and interface, and the error of the adaptive activation function method was better than that of the fixed activation function.

Table 14 illustrates the relative

errors of the same training points with a different number of neurons. It is shown that the calculation accuracy decreased with increase in the number of neurons, while the calculation time cost increased accordingly.