Tensor Train-Based Higher-Order Dynamic Mode Decomposition for Dynamical Systems

Abstract

1. Introduction

2. Methods

2.1. Higher-Order Dynamic Mode Decomposition

2.2. Proposed Algorithm

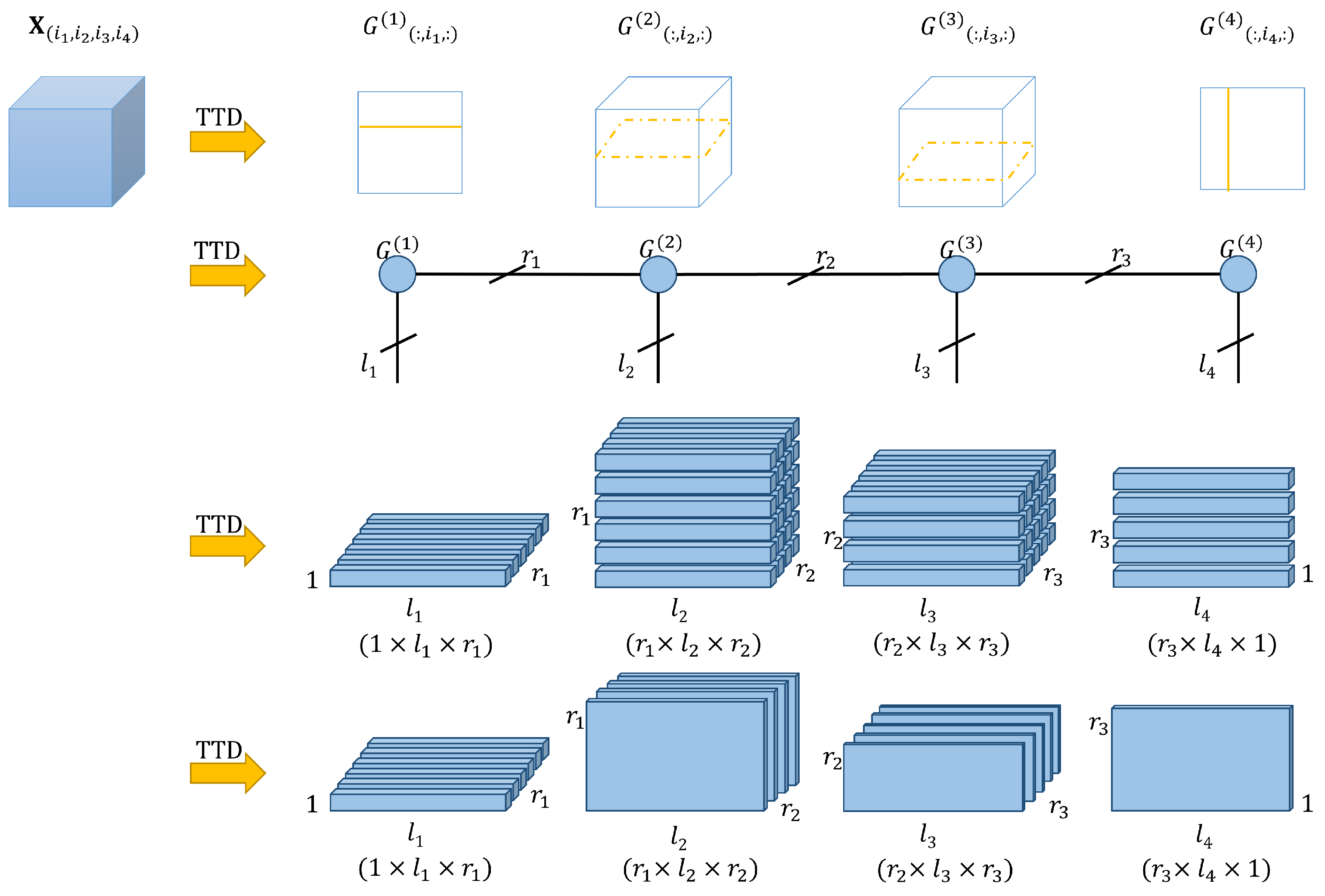

2.2.1. TT-Format

2.2.2. Tensor-Train-Based HODMD

3. Results and Discussion

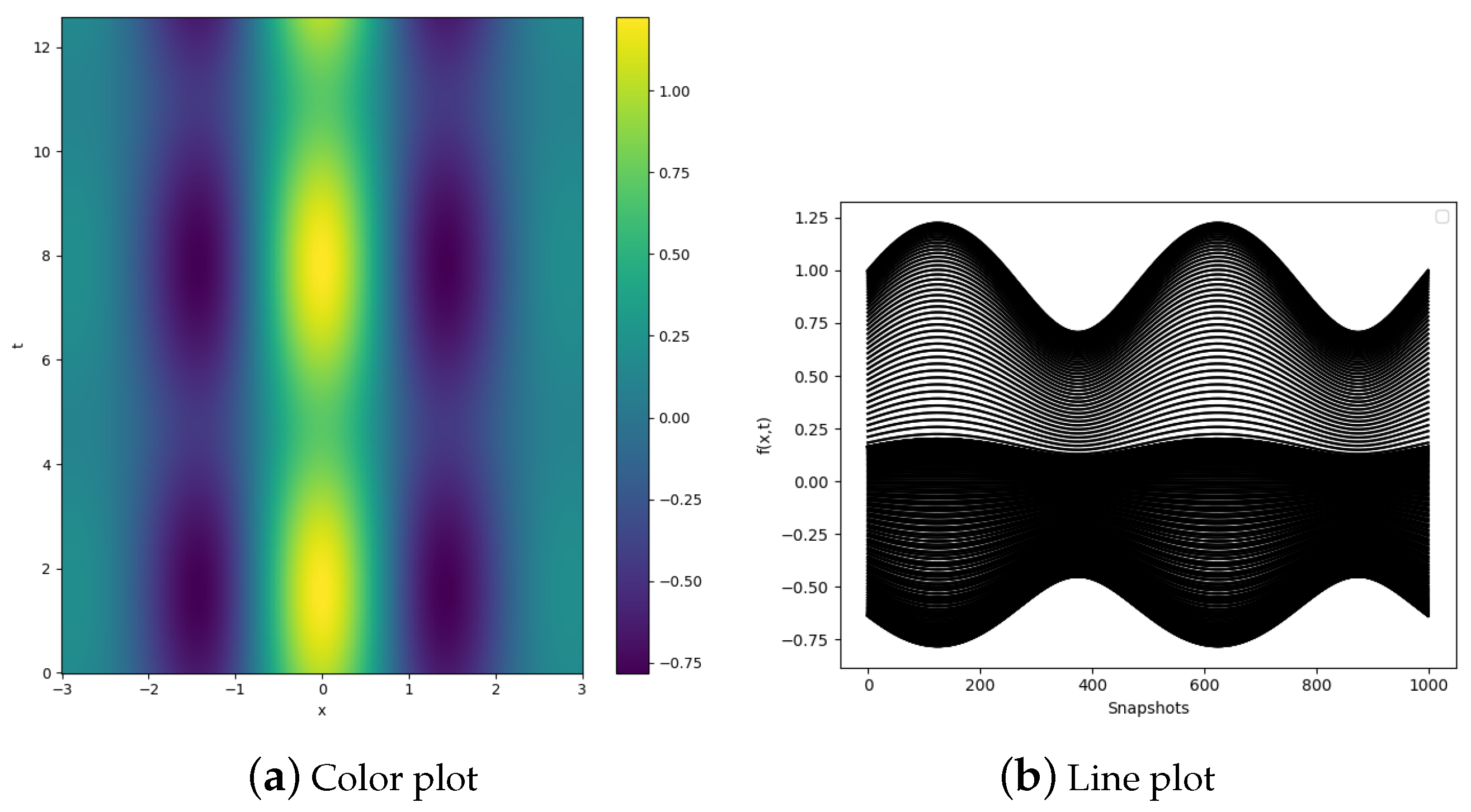

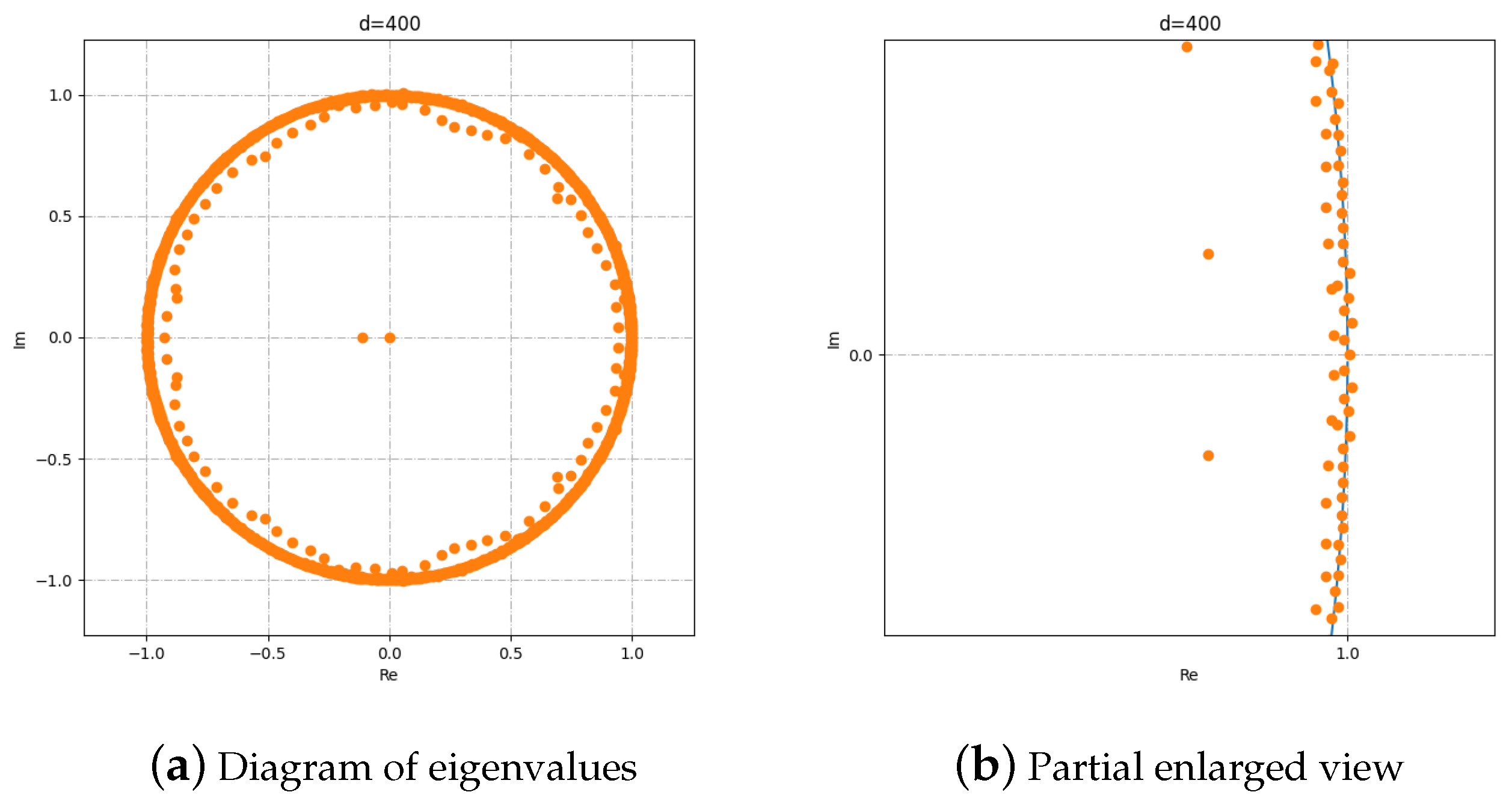

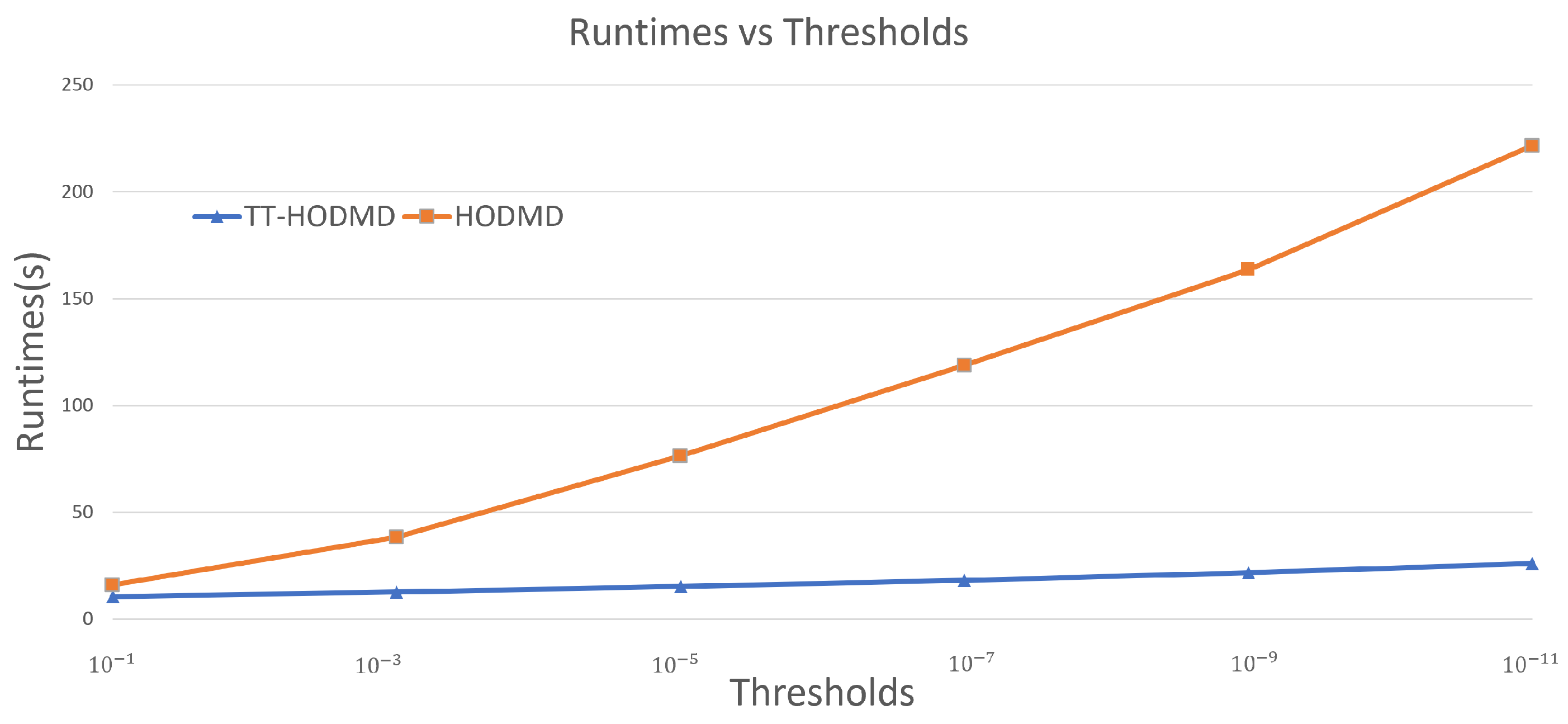

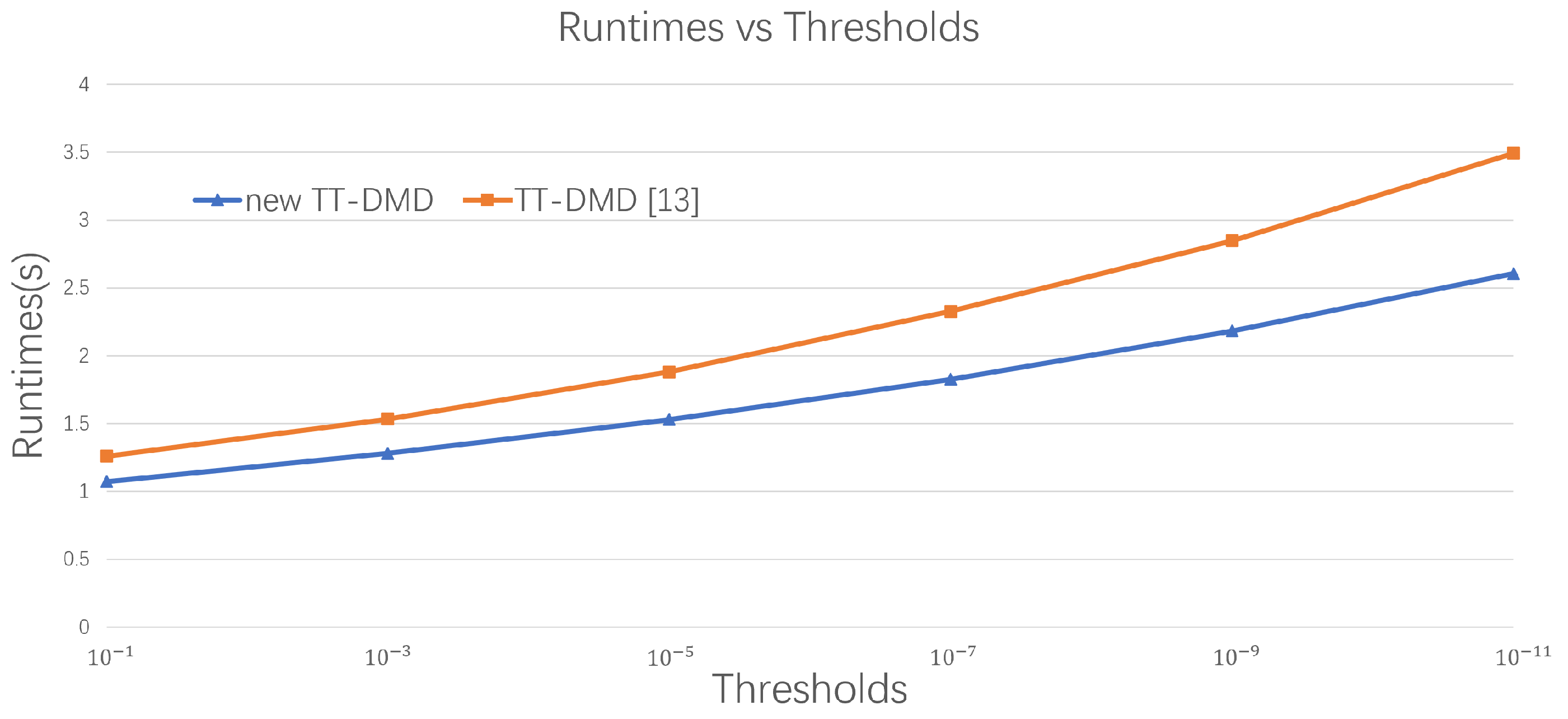

3.1. Validation of TT-HODMD

3.2. Realization of STLF in Power System Based on TT-HODMD

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DMD | Dynamic mode decomposition |
| HODMD | Higher-order dynamic mode decomposition |
| TT-format | Tensor-train format |
| TTD | Tensor-train decomposition |
| SVD | Singular value decomposition |
| TT-HODMD | Tensor train-based higher-order dynamic mode decomposition |
| STLF | Short-term load forecasting |
| TT-DMD | Tensor train-based dynamic mode decomposition |

| Aspect | Tensor-Based Data | Time-Series-Based Data |
|---|---|---|
| Data structure | Effectively preserves the structure of high-dimensional data | Preserves the structure of one-dimensional data well; high-dimensional data can only be handled by unfolding |
| Data meaning | Each dimension of high-dimensional data has its own meaning, which makes unfolding undesirable | The meaning is relatively simple and lies mainly in the prior temporal ordering of the data |
| Data processing | Can process high-dimensional data directly | High-dimensional data must be unfolded before processing, which significantly reduces efficiency |
| Data efficiency | Provides a compact representation | Unfolding can produce matrices of very high dimension |
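The "compact representation" entry in the table can be made concrete with the standard tensor-train format, in which each entry of a d-way tensor is a product of matrix slices of three-way cores, A(i_1, ..., i_d) = G_1[:, i_1, :] G_2[:, i_2, :] ... G_d[:, i_d, :], with boundary ranks r_0 = r_d = 1. The sketch below is an illustration under stated assumptions, not the authors' TT-HODMD implementation: cores are stored as plain nested Python lists of shape (r_{k-1}, n_k, r_k), and the helper names `tt_entry`, `tt_storage`, `full_storage` and `unfolding_shape` are hypothetical. It compares the number of values stored for a dense tensor with that of a TT representation and shows the matrix shape that unfolding produces.

```python
from math import prod
from typing import List, Sequence, Tuple

# Hypothetical representation (an assumption, not from the article): a TT core
# G_k is a nested list of shape (r_{k-1}, n_k, r_k), with r_0 = r_d = 1.
Core = List[List[List[float]]]


def tt_entry(cores: Sequence[Core], index: Sequence[int]) -> float:
    """Evaluate A(i_1, ..., i_d) as the product of slices G_k[:, i_k, :]."""
    # G_1[0, i_1, :] is a 1 x r_1 row vector.
    row = list(cores[0][0][index[0]])
    for core, i in zip(cores[1:], index[1:]):
        r_prev, r_next = len(core), len(core[0][0])
        # Row vector (1 x r_{k-1}) times matrix slice G_k[:, i_k, :] (r_{k-1} x r_k).
        row = [sum(row[a] * core[a][i][b] for a in range(r_prev))
               for b in range(r_next)]
    return row[0]  # r_d = 1, so a single scalar remains


def full_storage(shape: Sequence[int]) -> int:
    """Number of values stored for the dense tensor: n_1 * n_2 * ... * n_d."""
    return prod(shape)


def tt_storage(shape: Sequence[int], ranks: Sequence[int]) -> int:
    """Number of values in the TT cores: sum over k of r_{k-1} * n_k * r_k."""
    return sum(ranks[k] * shape[k] * ranks[k + 1] for k in range(len(shape)))


def unfolding_shape(shape: Sequence[int], k: int) -> Tuple[int, int]:
    """Matrix shape of the k-th unfolding: (n_1...n_k) x (n_{k+1}...n_d)."""
    return prod(shape[:k]), prod(shape[k:])


if __name__ == "__main__":
    shape, ranks = (10,) * 6, [1, 3, 3, 3, 3, 3, 1]
    print(full_storage(shape), tt_storage(shape, ranks))  # prints: 1000000 420
    print(unfolding_shape(shape, 1))  # prints: (10, 100000)

    # Rank-2 matrix A(i, j) = u_i v_j + w_i z_j written as two TT cores.
    u, w, v, z = [1, 2], [3, 4], [5, 6], [7, 8]
    g1 = [[[u[i], w[i]] for i in range(2)]]                        # shape (1, 2, 2)
    g2 = [[[v[j]] for j in range(2)], [[z[j]] for j in range(2)]]  # shape (2, 2, 1)
    print(tt_entry([g1, g2], (1, 0)))  # prints: 38 (= 2*5 + 4*7)
```

With uniform mode size n and TT rank r, storing the cores costs on the order of d n r^2 values instead of n^d, which is the sense in which the TT format is compact. Computing good cores from data (for example, by sequential truncated SVDs) is a separate step that this sketch does not show.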

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite This Article

Li, K.; Utyuzhnikov, S. Tensor Train-Based Higher-Order Dynamic Mode Decomposition for Dynamical Systems. Mathematics 2023, 11, 1809. https://doi.org/10.3390/math11081809

Li K, Utyuzhnikov S. Tensor Train-Based Higher-Order Dynamic Mode Decomposition for Dynamical Systems. Mathematics. 2023; 11(8):1809. https://doi.org/10.3390/math11081809

Chicago/Turabian Style: Li, Keren, and Sergey Utyuzhnikov. 2023. "Tensor Train-Based Higher-Order Dynamic Mode Decomposition for Dynamical Systems" Mathematics 11, no. 8: 1809. https://doi.org/10.3390/math11081809

APA Style: Li, K., & Utyuzhnikov, S. (2023). Tensor Train-Based Higher-Order Dynamic Mode Decomposition for Dynamical Systems. Mathematics, 11(8), 1809. https://doi.org/10.3390/math11081809