Abstract

For a vertex and edge weighted (VEW) graph with a vertex weight function let where, and denotes the distance, the minimum sum of edge weights across all the paths connecting . Assume is a VEW tree, and fails. If we reconnect the two components of with new edge such that, is minimum, then is called a best switch (BS) of w.r.t. . We define three notions: convexity, discrete derivative, and discrete integral for the VEW graphs. As an application of the notions, we solve some BS problems for positively VEW trees. For example, assume is an -vertex VEW tree. Then, for the inputs and , we return , , and with the worst average time of and the best time of where is a BS of w.r.t. and the weight of is .

Keywords:

discrete derivative; discrete integral; best switch; convex graph; weighted total distance; weighted tree MSC:

05C12; 05C22; 68Q25; 05C05; 68W05

1. Introduction and Notations

Unlike Euclidean space, which is equipped with coordinate systems, we cannot stay at a network node and visualize where we are and how to achieve our goal. On the other hand, analyzing a network using local information can lower the cost of tasks [1,2,3,4,5,6]. Preprocessing may help to establish a network coordinate for local tasks and reach the destination step by step.

This paper defines discrete derivative, discrete integral, and convexity notions for vertex and edge-weighted graphs, which will help with local tasks. To do that, we choose the common definition of distance for edge-weighted graphs in the literature, which can be generalized or modified to satisfy metric properties. Applying the notions above, we design and solve some tree-related problems.

Unless otherwise stated, we assume a graph is weighted (vertices and edges) and connected without loops or multiple edges. Then, by saying that a graph is -weighted, the vertex weight function is and the edge weight function is . The weight of a path in is . And denotes the distance between that is, the weight of the minimum weight paths across all the paths connecting and . Note that the distance of a vertex with itself is zero, and the distance between two vertices is infinite if they are not connected with a path. By saying a graph is positively weighted, we mean both vertex and edge weights are positive. We define the following numerical invariant for a -weighted graph ,

And for , and , we define

And for the given subgraphs and of , let:

Definition 1.

For a tree and , } has two components. We name the components and with and . As an extension for the later notation to subtrees, if , and denotes the components of and so on. Also, set and .

By Definition 1 and . We remind the reader that denotes a vertex ′s neighbors.

Using the above notation for a tree let, and . For more detail and are the number of vertices in and , respectively. And and are the total weight of vertices in and , respectively.

For ease and based on the definition of , we define two more distance-based numerical graph invariants for a -weighted graph as follows:

Indeed, , and .

Using the notations, the coordinate of is shown and defined with

Assume is a -weighted tree and . If fails (removed from the edge set), then we have that has two components. If we reconnect the two components of with an edge, , we have . For ease, we will denote by . Then is called a switch of and the tree is called a switch. Moreover, if and is minimum, is called a best switch (BS) of w.r.t. . Note that is a tree, , and .

Now suppose is an -vertex and positively weighted tree with coordinate . We are interested in solving the following problem. Get , , and and return , , and , where is a BS of w.r.t. and . (by we mean , , and ). We solve the problem with the worst average time of and the best average time of . Clearly, using the output of the problem, if we solve the problem with some complexities, we can keep getting and and solve the problem on with the same complexities on , . Changing edge weights within the positive range are also supported in -time per change to update the coordinates at any meaningful stage. The mentioned problem is the primary example that will solve in the application section as an application of our tools in the following section. The problem can be seen as a generalization of [7,8,9] that has found some applications [6,10,11,12]. For some relevant optimization problems over spanning trees, see [13,14,15,16,17,18,19]. This paper uses extensive notation for more precise interaction, which may take some time to get used to. But our solutions are natural, utilizing some calculus intuition behind the notations.

We might be able to partially compare our solution for the above problem with top tree methods [7,8,9] (see the final section). Generalizing the top tree method solves the problem above for some specified edges and fixed with the same average complexity as our solution. However, by changing in the top tree method, one needs to repeat the preprocessing, which is expensive. We also compare our method to the swap edge problem of spanning trees [20,21], which requires a conversion. See the last section for more details.

As the main topics of this paper, we have the following sections. We will define some concepts and achieve some results. Applying the results, we find an efficient solution for BS of positively weighted trees mentioned above. Our method is general, and we have applied it to other problems [22].

2. Discrete Derivative and Integral

Discrete derivative, discrete integral, and convexity are three intuitive concepts for weighted graphs that we define in this section. We have some results and general examples specifically for trees.

Definition 2.

Suppose is a -weighted graph. The discrete derivative of a vertex toward is defined and denoted as follows:

We say is consistent if results in , .

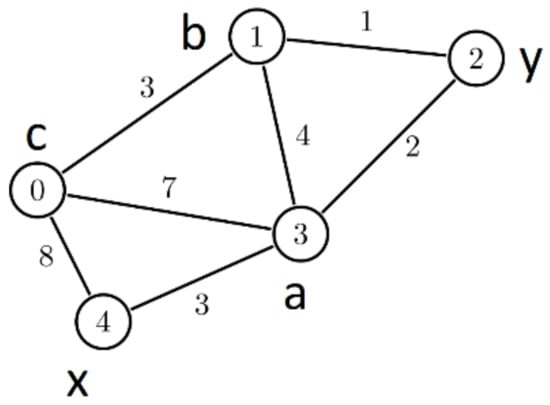

Using Figure 1 as graph , where . And .

Figure 1.

A vertex and edge-weighted graph on 5 vertices.

Lemma 1.

Assume and are two vertex weight functions for a graph. If , then , where . Moreover, if an are consistent, then also is.

A path, , is called -decreasing if it is a path and

An increasing path is defined in the same sense. Clearly, if is a -decreasing path and edge weights are positive, then , .

Definition 3.

Suppose is a -weighted graph. Then, is called -Root or -Roof of if is minimum or maximum, respectively. Let is the set of -Roots (-Roofs) of . Then we say is -convex (concave) if and only if for every there is a decreasing (increasing) path from to some .

Whenever there is no confusion, we may use Root instead of, -Roots, etc. Using the above image, the vertex with weight 0 is a Root, and the vertex with weight 4 is a Roof in the depicted graph.

To employ discrete derivatives as a tool, we first create some induced weight functions using the current weight of the graph for the vertices of an -weighted graph, . The first one is as follows:

Let be a -weighted tree (the vertex-weight function is, ). If and , then , otherwise:

Because

Another vertex-weight function for is induced using the primary weight functions as follows:

To help with the computation of s for a -weighted tree , let and be an ′s neighbor. Thus,

Using the above equations, . Therefore, if , then , otherwise:

Using Equations (3) and (4), and are consistent for any tree .

Let and . Using Equations (2) and (5), , and , one can compute and easily. If for every then for every . The following algorithm extracts for a tree , starts with the edges adjacent to a leaf and then deletes the leaves at each step until the edge set is empty. It is linear regarding the number of vertices and uses the fact that for every (Algorithm 1):

| Algorithm 1 Computing or |

| Input: Adjacency list of an -vertex, nontrivial -weighted tree and |

| Output: |

| Initiate with |

| ( |

| #Will be a leaf |

| #Remove from adj list |

Corollary 1.

For an -vertex tree , the sets, and, can be computed in .

Corollary 2.

Let be a -weighted tree with vertices and for some, . One can compute and sort for all with the time complexity of , that is on average .

The following result gives us an important fact about the weighted trees.

Lemma 2.

Suppose , , is a path in a -weighted tree . If and , then is, -increasing. If , , and , then is, -increasing. If , (for , , and , then is, -increasing.

Proof.

Assume is a -weighted tree and is a path in . Without loss of generality, we can prove the lemma for . We know that and for any . Thus, for some ,

and

Therefore,

We use Equations (2) and (5) for computing and . Then by the assumption . Thus by Equation (8) . Since , . That is, which completes the proof for case. Similarly, assume . If , by Equation (8) . If, in addition , then . Thus, by Definition 2, . That completes the proof for case. The proof for the case is like case. This completes the proof. □

Using Lemma 2, we attained the following result regarding the convexity of a tree.

Theorem 1.

Any positively edge-weighted tree is -convex, and any positively weighted tree is (-convex, for .

Proof.

Let us have a positively edge-weighted tree with at least three vertices. Assume a vertex, , is not a -Root and is the shortest possible path (in terms of the number of edges) between and a -Root, . Assume toward a contradiction that is not decreasing. Thus, suppose is the first edge from toward , such that . Since the edge weights are positive, . Then, by Lemma 2, the path is increasing. That is, and . If , also is a -Root and is shorter than while having the same specification, which is a contradiction with being the shortest. And if, , contradicts with, being a -Root. Therefore, is decreasing. Since was arbitrary, the tree is -convex. For the proof of case, use the same strategy as for , along with the positivity of the vertices’ weight. □

Corollary 3.

Assume that , . Then, a positively weighted tree has at most two -Roots. If and are two -Roots of a tree, then they are adjacent, and . Moreover, if is a tree’s -Root and is a non-Root neighbor of , then , and . Usefully, if is a decreasing path between and a -Root, , then if and only if , . In addition, is the longest -decreasing path starting from . Finally, a leaf is not a -Root of a positively weighted tree with more than 2 vertices.

Definition 4.

Suppose is a -weighted graph. Regarding and , we define and denote the discrete integral of along a path, from to as follows:

If and is an arbitrary path between and we denote by

By Definitions 2 and 4, we have:

Theorem 2.

Suppose is a connected -weighted graph and . If is consistent, then for any path from to ,

Using Figure 1 as graph with the given weights and the above result or Definition 2.12, one can see that,

3. Some Applications of Discrete Integral and Derivative and Convexity

In this section, we formally define three optimization problems on trees. As an application of the results of the previous section, we will have efficient solutions to the problems with some possible comparisons with existing solutions at the end. The compared problems are not clearly defined in the same way we do. We try to convert them for some partial comparison.

The routing cost of for a given set of sources and the total distance of are defined as follows, respectively:

The Wiener index, , of a simple graph , is equal to its total distance [23,24]. By setting , and ; , defined in the introduction, can produce any of , , , , or for a graph, . So, generalizes all the mentioned graph invariants.

Definition 5.

Let be a weighted tree and , where and .

- We say is a switch of , if is a tree. Then is called a switch.

- We say is a BS of w.r.t. if is minimum, i.e.,

- The coordinate of is shown and defined with .

Based on the BS definition, we define three problems. The second and third problems are created merely for comparison. Assume is a weighted tree with and we have coordinated, .

| Problem1: Input: 1. , 2. , 3. , 4. . Output: 1. , where is a BS of w.r.t. with . 2. , 3. , 4. . |

| Problem2: Input: 1. with 2. , 3. . Output: 1. , 2. , 3. , 4. , 5. , where and is a BS of w.r.t. with . (Overall best switch). |

| Problem3: Input: 1. with , 2. , 3. . Output: 1. , where is a BS of w.r.t. with . (All best switches). |

Definition 6.

For a numerical graph invariant, , and a switch let

Lemma 3.

Let be a -weighted tree, , and for some . Using, and , we can calculate on the path from toward any vertex of . A similar argument holds for a path from toward any vertex of .

Proof.

Let be a -weighted tree and with and , . Assume is a path in , with . Using Equations (1), (2), (5), and we could extract, and , and so on . However, we need to get through . To do so, one can check for

Thus, using Equations (1), (2) and (5), along with (9) and (10):

That is, using and , we can compute as follows:

Symmetrically, the argument holds for the and case. □

It is straightforward but involves some long computation to find out the following.

Lemma 4.

Suppose is a -weighted tree with and . If and , then

where,

and

Proof.

Assume is a tree. If we remove and connect, and with where and , then

Using the fact that and the above formula,

where and

Since and are connected, and and are consistent; by Lemma 1 and Theorem 2, we have:

The above lemma gives a clue between a BS of w.r.t and the -Roots and -Roots of and . Often, finding a BS is equivalent to finding some Roots.

Lemma 5.

Suppose is a positively weighted tree with at least three vertices and . If and are a -Root of and a -Root of , respectively, then a BS of w.r.t is with and . More precisely, let the following be vertex weight functions for the relevant vertices:

Then a choice of as a BS of w.r.t is as follows:

- A

- If -Root of , -Root of ,

- where , or

- where .

If , 1 gives a choice for ; otherwise, . - B

- If -Root of , -Root of , then -Root of , -Root of .

Moreover, and except for the edges on and . More precisely, if is the path between and ,

And, if is a path between and ,

Proof.

Assume we have the lemma assumptions. By Equation (11),

where is independent of the choice of s and . And the values of and are independent of each other. By definition, if and minimize Equation (12) and then is a BS of . One sees that by minimizing Equation (12) inclusively, we do not exclude as a choice for , since:

Accordingly, minimizes Equation (12) if and only if . That is, -Root of , -Root of .

If case A happens, which is -Root of , -Root of and also -Root of , -Root of , then we might have . To exclude in the minimization of Equation (12), we avoid the case that and appear concurrently by letting is equal to either or . By Theorem 1 and the assumptions and are convex. That is,

As a result, either

Per Lemma 4, in case 1—, and in case 2—. Thus, if , then 1 gives the choice of . If , then comes from 2. Otherwise, either 1 or 2 is the choice.

Now assume -Root of , -Root of . If -Root of , -Root of , then . Moreover, minimizes in Equation (12). So is the right choice. This completes the proof of B.

To form we remove and reconnect the components of using and , . Thus, and in general. Precisely, or happens if or . By Definition 1 and . And, by Lemma 3 Equations (9) and (10), if is the path between and ,

And symmetrically, for with and ,

□

Lemma 6.

For a -weighted tree ,

and,

Proof.

We prove the first equation using the double-counting principle. Assume is a copy of with the edge weights set to 0 at the start. Let and is the path between and . For every add the number to the corresponding edge of in . Then . Thus, suppose we repeat the above process for every pair . If so, then

And on the other hand, one can see that for . Thus, That proves the formula of . The proof for the case is quite similar. □

Corollary 4.

Assume is a -weighted -vertex tree. We can compute and in -time. Moreover, if by changing the weight of to we get a tree , then

Remark 1.

Here, we give a simple intuition behind an algorithm for problem 1 when the tree is positively weighted. Using Lemma 5, finding a BS for , we need to have the -Roots and -Roots of and respectively. By definition and . By Corollary 3, the longest decreasing path from ends up with a -Root of say , and similarly, the longest decreasing path from ends up at a -Root of say . Moreover, by Corollary 3, those paths are the only decreasing paths in their respective components. So, one can easily take some derivatives to reach from to or from to since the paths are decreasing and unique. Then by Lemma 5, a BS of is one of the following cases as detailed in the lemma.

- ,

- an edge between and a vertex of ,

- an edge between and a vertex of .

Lemma 5 details how to choose among the above limited cases using derivatives. As we find the unique and decreasing path between

and

and also

and

, one can update

as in Lemma 5. Having those paths also eases calculating

,

, and

from Lemma 4. The following proposition details the mentioned intuition with proof of time complexity.

Proposition 1.

Let be an -vertex positively weighted tree with a coordinate, . If we get and , then we can compute , , , and , with the worst average time of and the best average time of , where is a BS of w.r.t and . Updating, after edge-weight change to a positive number is supported in -time per change.

Proof.

Assume we have an -vertex positively weighted tree , and , . Let and be some induced vertex weight functions for an as follows,

Then with the results so far, we have the following:

1. Computing a or in -time. Using and , Lemma 3, and Definition 2, computing costs -time for any where and we know the direction. A similar argument holds for computing on .

2. Checking whether a vertex is a Root on average of -time. By Corollary 3, if for only one , then is not a -Root. The following can verify whether a vertex is or -Root or not. (Let or )

| # Takes a vertex and a and says it is a -Root or not |

The average degree in a tree is . Thus, run time is on average.

3. Finding a neighbor with a minimum or in -time on average. If , then by Theorem 2 and Definition 4, . By 1- computing takes -time. Moreover, on average. Thus, finding with takes -time on average. Computing min neighbor follows the same rules. One implements the mentioned idea as follows.

| # Takes a vertex, , finds with min and returns and ( or ) |

4. Finding, or in on average, where and are and -Root. By Theorem 1, is -convex. Assume that is the decreasing path between and a -Root, . By Corollary 3, is the longest decreasing path beginning with , and if and only if , , . So, starting with we can find when we have and compute as follows:

| # Takes a vertex, , and returns and where is a ( or ) |

| . |

For finding from , we can exclude and jump to as the process does. That reduces the average complexity. Moreover, when we are on the vertex , , one takes at most | derivatives to find , since we know, is the only vertex with negative derivatives toward it. So, returns, by taking some derivatives, at most, as many as the total degree of the vertices on where is a -Root. By [25], the path length in a tree is on average, where it can be as well. In addition, the average degree in a tree is , and taking each derivative requires -time. Moreover, is a -Root (has min ) and . Thus, the worst average run time of is and the best average time is . Similarly, has the same run time to return where is a -Root.

5. Computing and in -time and -time, respectively. Per Definition 4, Theorem 2, and 1, if we have a path, , we can compute the integral of in -time as follows:

| # Takes a path and and returns ( or ) |

6. Updating to and to in + time. Per Lemma 5, and updates to and to , where is a process as follows.

| # Takes a path and updates and accordingly |

Using the devised procedures in 1- to 6-, we can implement Lemma 4 and 5, which finds us , , , and , for the input and , where is a BS of w.r.t and . The full detail is presented with the following algorithm: BS algorithm (Algorithm 2). Each line’s average time complexity bound is shown in the results.

| Preprocessing----------------------------------------- |

| Compute Corollary 1 & Lemma 6 |

| -------------------------------------------------------------------------------------------------------------- |

| Algorithm 2 BS Algorithm |

| Input: Adj list of a -weighted tree , , , |

| Output: , , , where is a BS of w.r.t. and |

| ---------Finding , and where is a BS of (Lemma 5)----- |

| { |

| { |

| { |

| } |

| { |

| -------------Computing -------( Lemma 4 )------------------------------------- |

| -----------------updating --------( Lemma 5 )----------------------------- |

| ------------------------ |

| -------------------------------------------------------------------------------------------------------------- |

Using 1 to 6 above, the average time complexity of every line of Algorithm 2. is bounded by, . By [25], the average path length of is which accounts for the worst average time complexity of the algorithm. The best average complexity can be because and the degrees on and can be . Finally, assume is a tree, and we have the preprocessing, . If we change the weight of one edge, then and remain unchanged, and by Corollary 4, we can update and in -time. This completes the proof. □

The subsequent two propositions can be proved based on Proposition 1. They are indeed a solution to Problems 2 and 3, respectively.

Proposition 2.

Let be an

-vertex positively weighted tree with

. If we get

, then we can find an

such that

and compute

, in an average time of

and the best time of

, where

with

is a BS of

w.r.t.

,

.

Proposition 3.

Let be an

-vertex positively weighted tree with

. If we get

, then we can find a BS,

with

w.r.t.

for all

and compute

,

, in an average time of

and the best time of

.

For simplicity, we avoided adding some facts for better performance of the BS algorithm. For example, by Corollary 3, a leaf is not a root of a tree with more than 2 vertices. In the BS algorithm, we either require a root or a neighbor of a root. Thus, if or are not trivial (single vertex), then the version of without the leaves can be used. That improves the actual performance of the BS algorithm.

Proposition 4.

For a simple tree , there are exactly

distinct switches. More precisely,

and if

and ,

Proof.

Let be a simple tree. Using Definition 5, is a switch of , if and only if . Otherwise, is disconnected, indicating that it is not a switch. Therefore, there are exactly distinct switches regarding . Thus, the total number of distinct switches is as follows:

And In addition, is a tree if and only if is an edge between and . Thus, This completes the proof. □

For of a simple tree with vertices by definition, . Also, by [26,27,28], .

4. Some Comparisons and Discussion

In this section, we compare our method with some existing methods. For brevity, we mean average time complexity when we talk about complexity. The compared methods are not solving the same problems that we did, but it is possible to convert them with some effort.

The top tree method can find a positively weighted tree’s median(s). It is almost equivalent to finding the Root of a tree in our method, disregarding and . Thus, for an -vertex tree, and we somehow can convert the top tree methods [7,8,9] to find a BS w.r.t. in -time for most cases. However, if connects a Root of and a Root of , then the top tree method does not work. They also do not compute of the switches regularly, which does not let us solve problem 2 even for their specific case of . Generalizing the top tree method, we may be able to solve problem 1 for a fixed and compute , which is overcomplicated intuitively. But, if we renew the initial values of to some , then we have to repeat their -time preprocessing, which is costly. As we have seen, our method in the BS algorithm solves problem 1 in for any , computes s, and does not require a fresh preprocessing for the change of . Our method updates the preprocessing, in -time after an edge-weight change, whereas using top trees, an edge weight update takes up to -time.

For another comparison, we borrow the solution of the best swap edge of multiple-source routing tree algorithms [15,19,20,21,29,30] for problem 3. Suppose is a spanning tree of a positively edge-weighted graph with edges and vertices. Then, in [15,19,20,21,29,30], researchers choose the BS from , whereas we choose from . Therefore, by choosing to be , the mentioned algorithms can solve problem 3. Also, they choose the BS w.r.t. as the routing cost rather than its generalization, . Let be a set of sources and . Setting for all and for all , results in

That is, by specifying vertices weights as mentioned and letting for , and , algorithms of [20,21] will solve problem 3. The mentioned algorithms are expensive specifically for a single failed edge, and after updating the edge weights or inserting a BS, their preprocessing is not valid. They also do not compute ′s. More importantly, regarding the mentioned conversion, they solve problem 3 in more than -time and up to depending on the number of sources, where and sources are specified. In the terminology of [20,21,22], we do not limit the number of sources and, , where we attain an average time of and the best time of for Problem 3.

As a general fact, note that choosing a brute-force strategy and distance matrix for the positively edge-weighted graph, computing takes . Moreover, there are between to switches regarding an edge. Therefore, using the bute-force strategy, we will need between to time to compute a switch with the minimum . While our method executes that in with the same flexibility. This difference is significant. Note that the existing discussed methods are comparable to ours with some constraints, but they have much less flexibility.

Our tools are general and can be applied to similar optimization problems. For instance, see [15], where we use our method for the best swap edge of spanning trees. Another interesting problem can be solving the BS problems regarding by considering the weight for the vertices of a tree, . See Appendix A, where we have some computations related to the derivative and integral of, .

Finding the minimum -sources routing cost spanning tree is an -hard problem for any and is a polynomial problem for [29,30,31].

Author Contributions

Methodology, M.H.K.; Resources, A.-H.E. All authors have read and agreed to the published version of the manuscript.

Funding

This material is based upon work supported by the NSF Program on Fairness in AI in collaboration with Amazon under grant IIS-1939368. Any opinion, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation or Amazon.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Let be a tree and . Then, using the definition ():

Also, one can use the same idea of Equation (11) and see that,

We had that, is consistent, thus by Theorem 2,

Therefore,

References

- Alexopoulos, C.; Jacobson, J.A. State space partition algorithms for stochastic systems with applications to minimum spanning trees. Networks 2000, 35, 118–138. [Google Scholar] [CrossRef]

- Aziz, F.; Gul, H.; Uddin, I.; Gkoutos, G.V. Path-based extensions of local link prediction methods for complex networks. Sci. Rep. 2020, 10, 19848. [Google Scholar] [CrossRef] [PubMed]

- Dong, Z.; Chen, Y.; Tricco, T.S.; Li, C.; Hu, T. Hunting for vital nodes in complex networks using local information. Sci. Rep. 2021, 11, 9190. [Google Scholar] [CrossRef] [PubMed]

- Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive representation learning on large graphs. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, Red Hook, NY, USA, 4–9 December 2017; pp. 1025–1035. [Google Scholar]

- Zhou, T. Progresses and challenges in link prediction. iScience 2021, 24, 103217. [Google Scholar] [CrossRef] [PubMed]

- Kanevsky, A.; Tamassia, R.; Battista, G.D.; Chen, J. On-line maintenance of the four-connected components of a graph. In Proceedings of the 32nd FOCS, San Juan, Puerto Rico, 1–4 October 1991; pp. 793–801. [Google Scholar]

- Alstrup, S.; Holm, J.; de Lichtenberg, K.; Thorup, M. Maintaining information in fully dynamic trees with top trees. ACM Trans. Algorithm 2005, 1, 243–264. [Google Scholar] [CrossRef]

- Alstrup, S.; Holm, J.; Thorup, M. Maintaining center and median in dynamic trees. In Proceedings of the 8th Scandinavian Workshop on Algorithm Theory, Berlin, Germany, 3–5 July 2000; pp. 46–56. [Google Scholar]

- Wang, H.-L. Maintaining centdians in a fully dynamic forest with top trees. Discrete App. Math. 2015, 181, 310–315. [Google Scholar] [CrossRef]

- Gabow, H.N.; Kaplan, H.; Tarjan, R.E. Unique maximum matching algorithms. J. Algorithms 2001, 40, 159–183. [Google Scholar] [CrossRef]

- Holm, J.; de Lichtenberg, K.; Thorup, M. Poly-logarithmic deterministic fully-dynamic algorithms for connectivity, minimum spanning tree, 2-edge and biconnectivity. J. ACM 2001, 48, 723–760. [Google Scholar] [CrossRef]

- Nardelli, E.; Proietti, G.; Widmayer, P. Finding all the best swaps of a minimum diameter spanning tree under transient edge failures. J. Graph Algorithms Appl. 2001, 5, 39–57. [Google Scholar] [CrossRef]

- Baste, J.; Gözüpek, D.; Paul, C.; Sau, I.; Shalom, M.; Thilikos, D.M. Parameterized complexity of finding a spanning tree with minimum reload cost diameter. Networks 2020, 75, 259–277. [Google Scholar] [CrossRef]

- Biloò, D.; Colella, F.; Gualà, L.; Leucci, S.; Proietti, G. An Improved Algorithm for Computing All the Best Swap Edges of a Tree Spanner. Algorithmica 2020, 82, 279–299. [Google Scholar] [CrossRef]

- Fischetti, M.; Lancia, G.; Serafini, P. Exact algorithms for minimum routing cost trees. Networks 2002, 39, 161–173. [Google Scholar] [CrossRef]

- Gfeller, B.; Santoro, N.; Widmayer, P. A Distributed Algorithm for Finding All Best Swap Edges of a Minimum-Diameter Spanning Tree. IEEE Trans. Dependable Secur. Comput. 2011, 8, 1–12. [Google Scholar] [CrossRef]

- Kobayashi, M.; Okamoto, Y. Submodularity of minimum-cost spanning tree games. Networks 2014, 63, 231–238. [Google Scholar] [CrossRef]

- Seth, P. Sensitivity analysis of minimum spanning trees in sub-inverse-Ackermann time. In Proceedings of the 16th International Symposium on Algorithms and Computation, Sanya, China, 19–21 December 2005; pp. 964–973. [Google Scholar]

- Wu, B.Y.; Lancia, G.; Bafna, V.; Chao, K.-M.; Ravi, R.; Tang, C.Y. A polynomial time approximation scheme for minimum routing cost spanning trees. SIAM J. Comput. 1999, 29, 761–777. [Google Scholar] [CrossRef]

- Bilò, D.; Gualà, L.; Proietti, G. Finding Best Swap Edges Minimizing the Routing Cost of a Spanning Tree. Algorithmica 2014, 68, 337–357. [Google Scholar] [CrossRef]

- Wu, B.Y.; Hsiao, C.-Y.; Chao, K.-M. The swap edges of a multiple-sources routing tree. Algorithmica 2008, 50, 299–311. [Google Scholar] [CrossRef]

- Khalifeh, M.H.; Esfahanian, A.-H. A Faster Algorithm for Finding Best Swap Edges of multiple-source Routing Tree. Networks 2023. submitted. [Google Scholar]

- Meyerson, A.; Tagiku, B. Minimizing Average Shortest Path Distances via Shortcut Edge Addition. In Approximation, Randomization, and Combinatorial Optimization, Algorithms and Techniques: 12th International Workshop, APPROX 2009, and 13th International Workshop, RANDOM 2009, Berkeley, CA, USA, August 21–23, 2009, Proceedings; Springer: Berlin/Heidelberg, Germany, 2009; pp. 272–285. [Google Scholar]

- Wiener, H. Structural determination of the paraffin boiling points. J. Am. Chem. Soc. 1947, 69, 17–20. [Google Scholar] [CrossRef]

- Shen, Z. The average diameter of general tree structures. Comput. Math. Appl. 1998, 36, 111–130. [Google Scholar] [CrossRef]

- Dunklemann, P.; Entringer, R.C. Average distance, minimum degree and spanning trees. J. Graph Theory 2000, 33, 1–13. [Google Scholar] [CrossRef]

- Roger, C.E.; Douglas, E.J.; Snyder, D.A. Distance in graphs. Czech. Math. J. 1976, 26, 283–296. [Google Scholar]

- McKay, B.D. The expected eigenvalue distribution of a large regular graph. Linear Algebra Its Appl. 1981, 40, 203–216. [Google Scholar] [CrossRef]

- Bilò, D.; Gualà, L.; Proietti, G. A faster computation of all the best swap edges of a shortest paths tree. Algorithmica 2015, 73, 547–570. [Google Scholar] [CrossRef]

- Wu, B.Y. A polynomial time approximation scheme for the two-source minimum routing cost spanning trees. J. Algorithms 2002, 44, 359–378. [Google Scholar] [CrossRef]

- Wu, B.Y. Approximation algorithms for the optimal p-source communication spanning tree. Discrete App. Math. 2004, 143, 31–42. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).