Multi-Method Diagnosis of Histopathological Images for Early Detection of Breast Cancer Based on Hybrid and Deep Learning

Abstract

1. Introduction

- Two overlapping filters were applied: the first to remove artifacts and the second to enhance the edges of the regions of interest;

- A hybrid method was applied in which a CNN extracts features and a machine learning classifier (SVM) classifies these deep features with high accuracy;

- Fused feature vectors were produced by combining CNN features with handcrafted features;

- Automatic diagnostic systems were developed to assist pathologists in making their decisions.
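The first contribution pairs an artifact-removing filter with an edge-enhancing one, but this section does not name the filters. The sketch below assumes a common pairing, a 3×3 average (mean) filter followed by Laplacian sharpening (subtracting the Laplacian of the smoothed image), applied to one grayscale or colour channel with 8-bit intensities. The helper names are illustrative, not the authors' code.

```python
import numpy as np

def average_filter(img, size=3):
    """Mean filter: each pixel becomes the mean of its size x size window.
    Borders are handled by replicating edge pixels."""
    pad = size // 2
    padded = np.pad(img.astype(float), pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (size * size)

def laplacian(img):
    """4-neighbour discrete Laplacian (centre weight -4), replicated borders."""
    p = np.pad(img.astype(float), 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
            - 4.0 * p[1:-1, 1:-1])

def enhance(img):
    """Assumed two-filter enhancement: smooth away small artifacts, then
    sharpen edges with g = f_s - lap(f_s); clip to the 8-bit range."""
    smoothed = average_filter(img)
    return np.clip(smoothed - laplacian(smoothed), 0, 255)
```

For an RGB image, `enhance` would be applied to each channel separately. Smoothing first keeps the Laplacian from amplifying isolated noise pixels, which it would otherwise treat as edges.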

2. Related Work

3. Materials and Methods

3.1. Dataset Description

3.2. Enhancing Histopathological Images

3.3. Hybrid of CNN and SVM

3.3.1. Feature Extraction

3.3.2. SVM Classifier

3.4. ANN Based on the Fusion Features

4. Results

4.1. Splitting Dataset

4.2. Evaluation Metrics

4.3. Data Augmentation Method

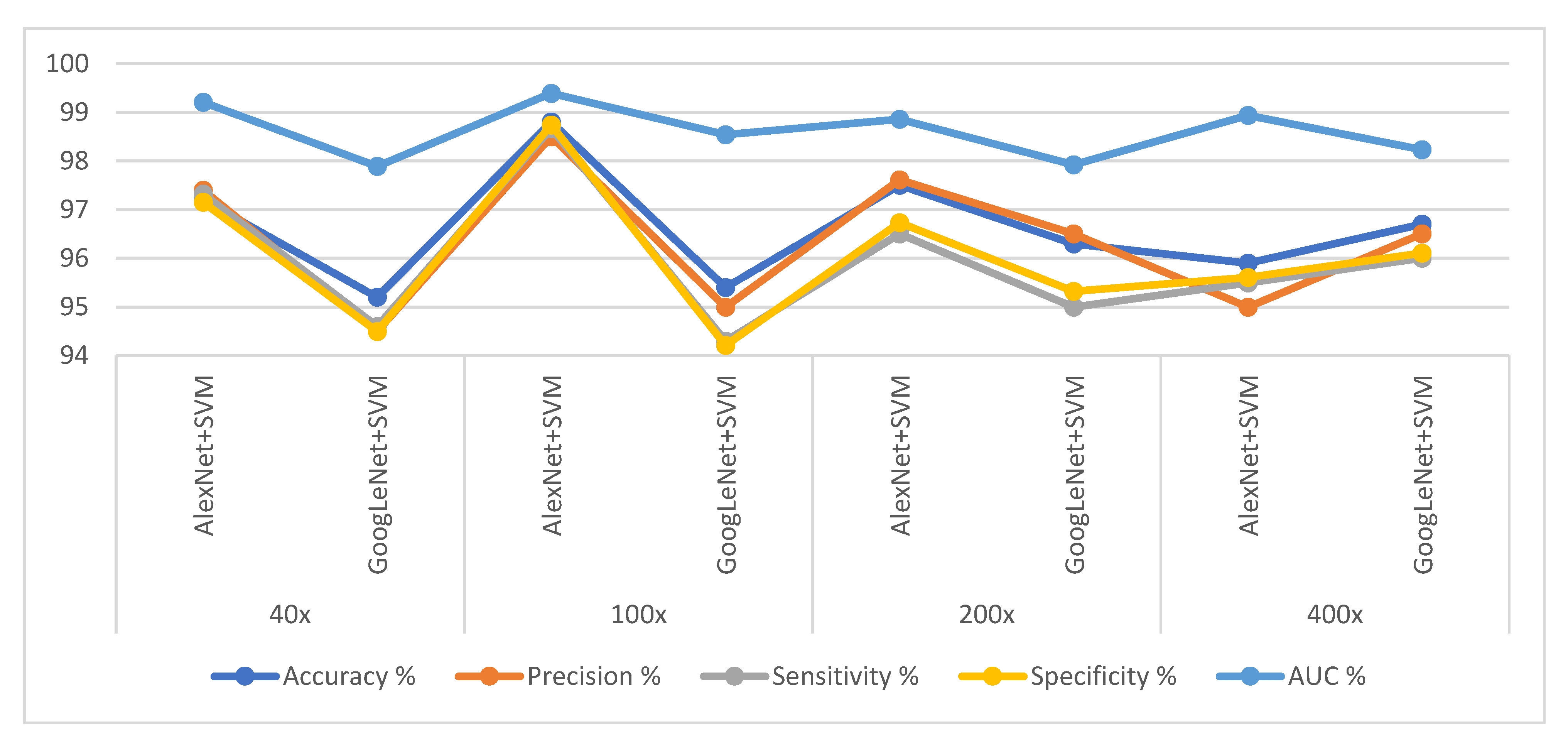

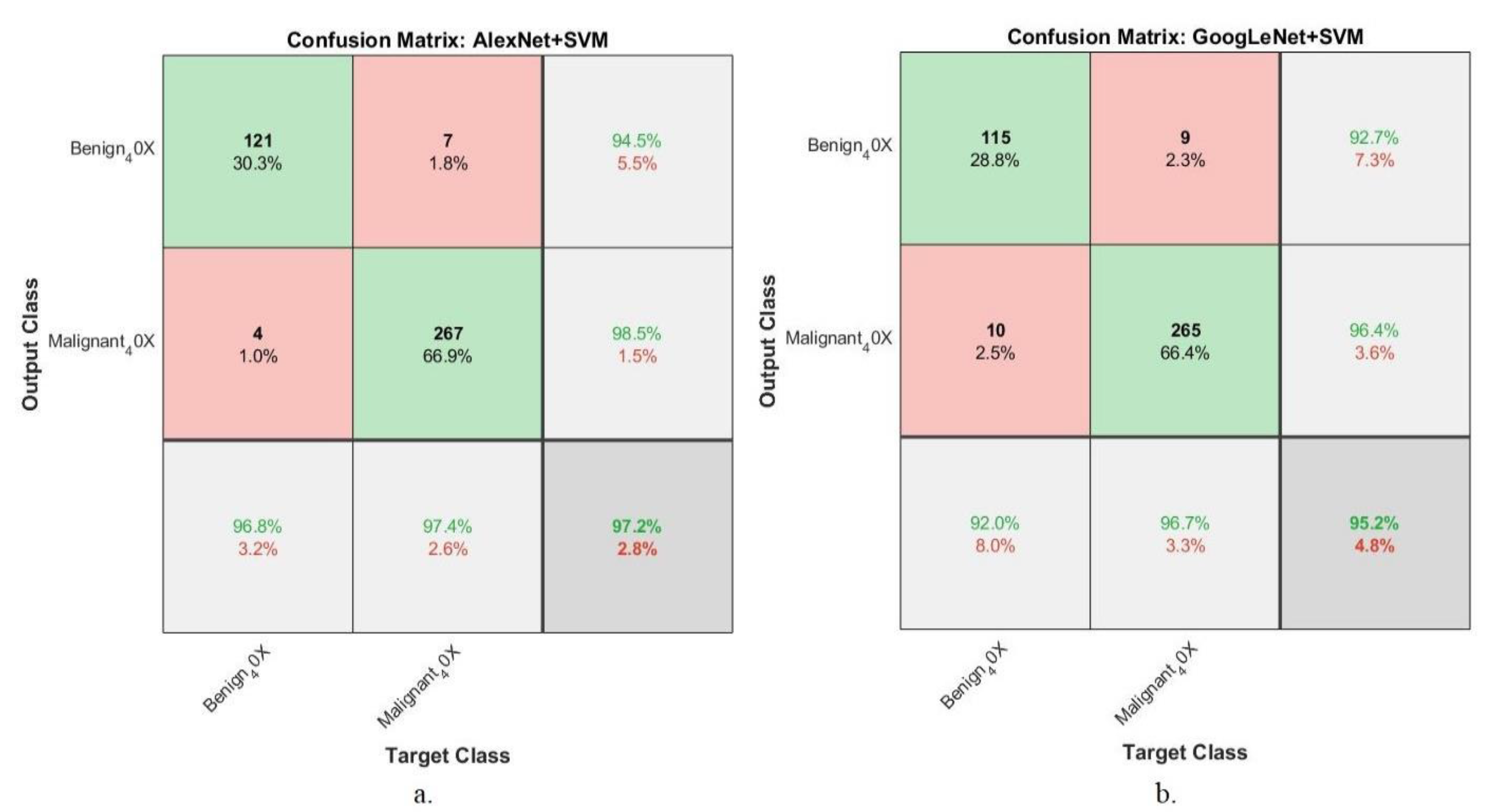

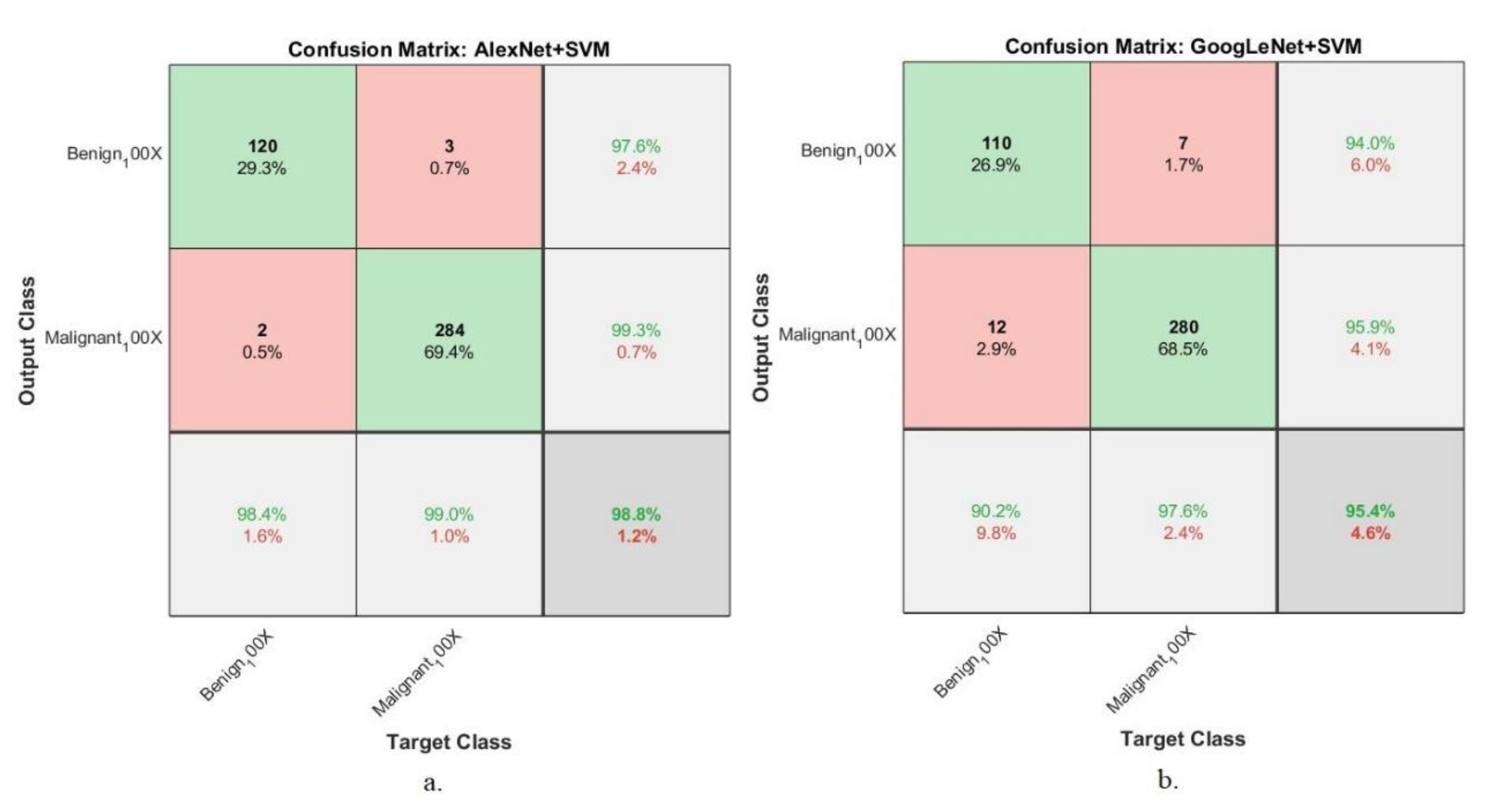

4.4. Results of Hybrid of CNN and SVM

4.5. Results of the ANN Based on the Fusion Features (CNN with Handcrafted)

4.5.1. Best Validation Performance

4.5.2. Error Histogram

4.5.3. Gradient

4.5.4. Receiver Operating Characteristic (ROC)

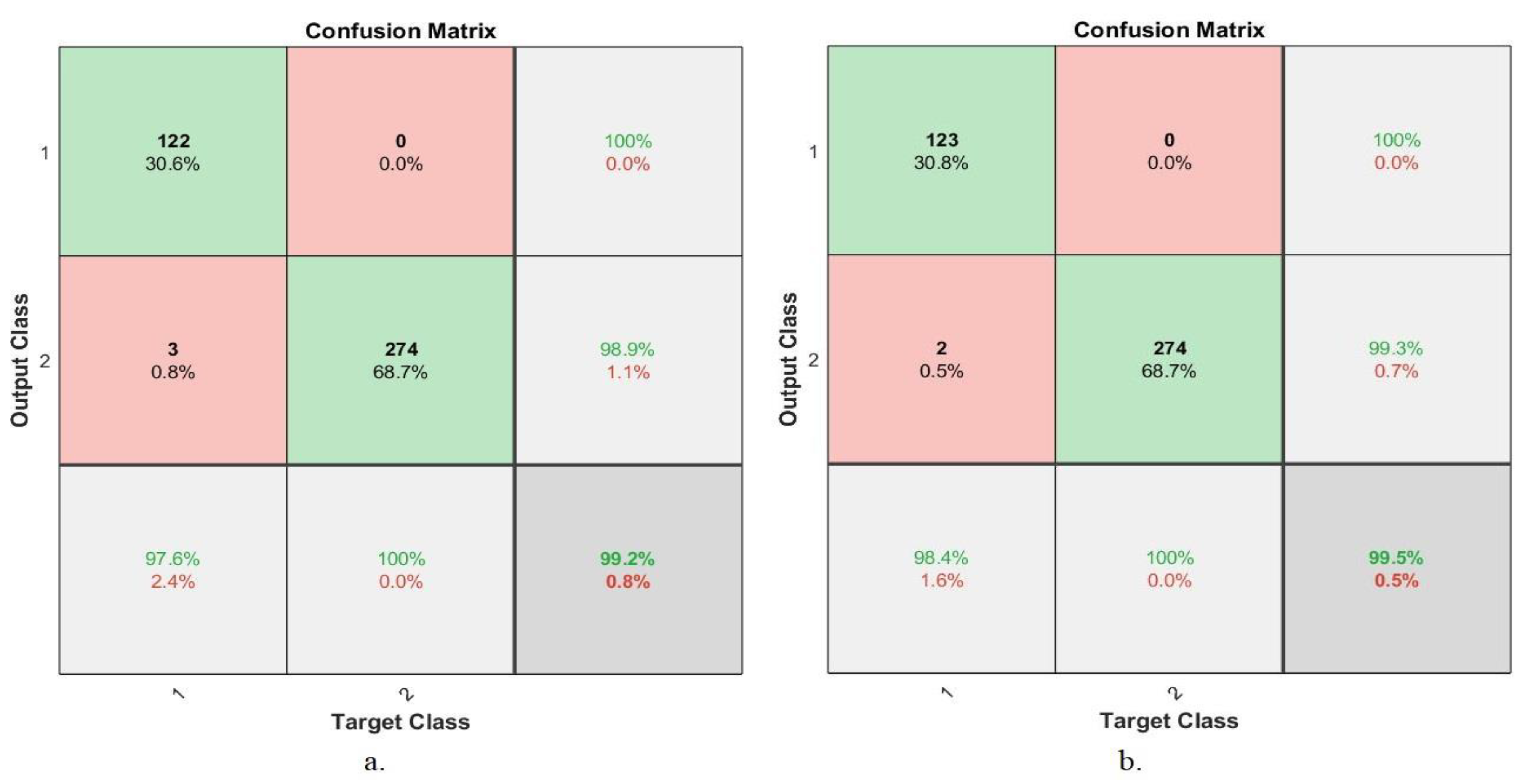

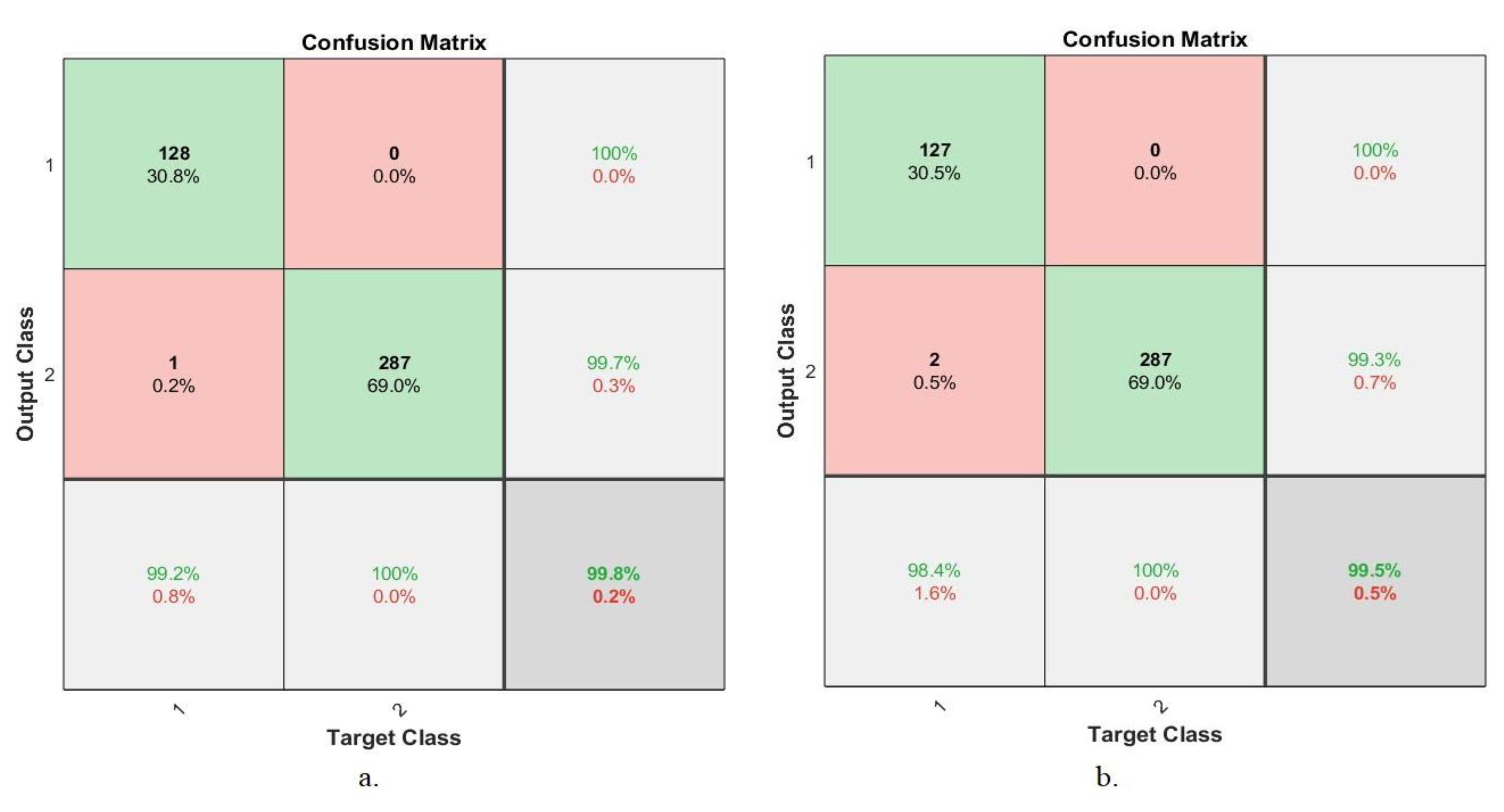

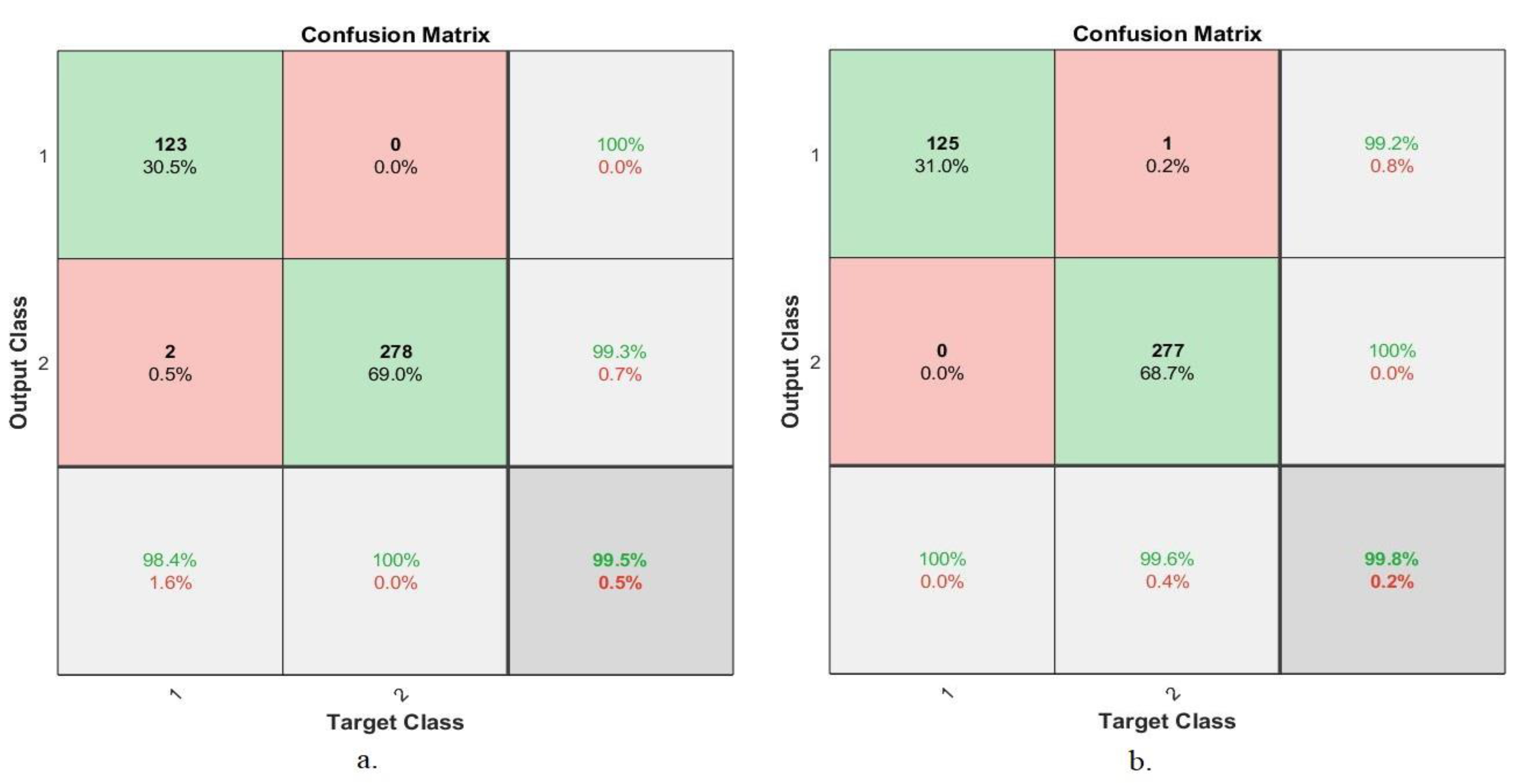

4.5.5. Confusion Matrix

5. Discussion and Comparison of the Systems

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Class | 40× | 100× | 200× | 400× | Total |
|---|---|---|---|---|---|
| Benign | 625 | 644 | 623 | 588 | 2480 |
| Malignant | 1370 | 1437 | 1390 | 1232 | 5429 |
| Total | 1995 | 2081 | 2013 | 1820 | 7909 |

| Magnification | Class | Training (80% of 80%) | Validation (20% of 80%) | Testing (20%) |
|---|---|---|---|---|
| 40× | Benign | 400 | 100 | 125 |
| 40× | Malignant | 877 | 219 | 274 |
| 100× | Benign | 412 | 103 | 129 |
| 100× | Malignant | 920 | 230 | 287 |
| 200× | Benign | 398 | 100 | 125 |
| 200× | Malignant | 890 | 222 | 278 |
| 400× | Benign | 376 | 94 | 118 |
| 400× | Malignant | 846 | 212 | 265 |
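The split counts follow from two rounding steps: 20% of each class is held out for testing, and the remaining images are divided 80:20 into training and validation. The short script below reproduces that arithmetic from the class totals in the dataset table. The round-half-up rule is an assumption about how fractional counts were resolved, and the script says nothing about which images went into which subset.

```python
def split_counts(n):
    """Return (train, validation, test) counts for a class of n images:
    hold out 20% for testing, then split the rest 80:20.
    Integer arithmetic rounds half up (assumed rounding rule)."""
    test = (n * 20 + 50) // 100
    rest = n - test
    train = (rest * 80 + 50) // 100
    return train, rest - train, test

# Class totals (benign, malignant) per magnification, from the dataset table.
TOTALS = {"40x": (625, 1370), "100x": (644, 1437),
          "200x": (623, 1390), "400x": (588, 1232)}

for mag, (benign, malignant) in TOTALS.items():
    print(mag, "benign", split_counts(benign), "malignant", split_counts(malignant))
```

This matches every row of the split table except 400× malignant. `split_counts(1232)` gives 789/197/246, whereas the listed 846/212/265 is what `split_counts(1323)` gives, so that row appears to be based on a total of 1323 rather than the 1232 in the dataset table.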

| Training phase | 40× Benign | 40× Malignant | 100× Benign | 100× Malignant | 200× Benign | 200× Malignant | 400× Benign | 400× Malignant |
|---|---|---|---|---|---|---|---|---|
| Before augmentation | 400 | 877 | 412 | 920 | 398 | 890 | 376 | 846 |
| After augmentation | 2400 | 2631 | 2472 | 2760 | 2388 | 2670 | 2256 | 2538 |
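At every magnification the benign training set grows exactly sixfold and the malignant set exactly threefold, which narrows the roughly 2.2:1 malignant-to-benign imbalance of the raw data (for example, 877:400 at 40× becomes 2631:2400). The sketch below checks those factors from the table. The augmentation operations themselves are not listed in this section; `dihedral_variants` is a hypothetical helper using rotations and mirror flips, which leave the class label of a histology patch unchanged.

```python
import numpy as np

# Training-set sizes (benign, malignant), copied from the augmentation table.
BEFORE = {"40x": (400, 877), "100x": (412, 920), "200x": (398, 890), "400x": (376, 846)}
AFTER = {"40x": (2400, 2631), "100x": (2472, 2760), "200x": (2388, 2670), "400x": (2256, 2538)}

def growth_factors(before, after):
    """Return {magnification: (benign_factor, malignant_factor)}."""
    return {m: (after[m][0] / before[m][0], after[m][1] / before[m][1])
            for m in before}

def dihedral_variants(img, k):
    """Hypothetical augmentation: k label-preserving copies of img (the
    original first), drawn from 0/90/180/270-degree rotations and their
    mirror images. At most 8 distinct variants exist."""
    variants = []
    for flip in (False, True):
        base = np.fliplr(img) if flip else img
        for r in range(4):
            variants.append(np.rot90(base, r))
    if k > len(variants):
        raise ValueError("at most 8 dihedral variants are available")
    return variants[:k]

print(growth_factors(BEFORE, AFTER))
```

Oversampling the minority (benign) class more aggressively balances the classes seen by the classifier during training without touching the validation or test images.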

| Magnification | System | Accuracy % | Precision % | Sensitivity % | Specificity % | AUC % |
|---|---|---|---|---|---|---|
| 40× | AlexNet + SVM | 97.2 | 97.4 | 97.32 | 97.15 | 99.21 |
| 40× | GoogLeNet + SVM | 95.2 | 94.5 | 94.6 | 94.5 | 97.89 |
| 100× | AlexNet + SVM | 98.8 | 98.5 | 98.67 | 98.74 | 99.39 |
| 100× | GoogLeNet + SVM | 95.4 | 95 | 94.3 | 94.21 | 98.54 |
| 200× | AlexNet + SVM | 97.5 | 97.61 | 96.5 | 96.73 | 98.86 |
| 200× | GoogLeNet + SVM | 96.3 | 96.5 | 95 | 95.32 | 97.92 |
| 400× | AlexNet + SVM | 95.9 | 95 | 95.5 | 95.61 | 98.94 |
| 400× | GoogLeNet + SVM | 96.7 | 96.5 | 96 | 96.11 | 98.23 |
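The metrics in these tables have standard definitions in terms of a 2×2 confusion matrix, and AUC can be computed as the probability that a randomly chosen positive image scores higher than a randomly chosen negative one. The sketch below treats malignant as the positive class (an assumption). This section does not say how per-class values were aggregated into the reported figures, so the sketch is not expected to reproduce them exactly; the counts and scores in the usage line are hypothetical.

```python
def binary_metrics(tp, fn, fp, tn):
    """Accuracy, precision, sensitivity and specificity as fractions in [0, 1].
    Positive class assumed to be malignant."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "sensitivity": tp / (tp + fn),   # recall, true-positive rate
        "specificity": tn / (tn + fp),   # true-negative rate
    }

def auc_rank(pos_scores, neg_scores):
    """Area under the ROC curve via pairwise ranking (Mann-Whitney form):
    fraction of positive/negative pairs ranked correctly, ties count 1/2."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos_scores) * len(neg_scores))

# Hypothetical example: 100 malignant and 100 benign test images.
print(binary_metrics(tp=90, fn=10, fp=5, tn=95))
print(auc_rank([0.9, 0.8, 0.4], [0.3, 0.5, 0.1]))
```

The pairwise loop is quadratic and is meant only to make the definition explicit; for large test sets a sort-based rank computation gives the same value.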

| Magnification | Fusion Features | Best Validation Performance | Error Histogram (range) | Gradient | ROC (AUC %) |
|---|---|---|---|---|---|
| 40× | AlexNet with handcrafted | 0.0039891 at epoch 41 | −0.9446 to 0.9446 | 0.00059127 at epoch 47 | 99.69 |
| 40× | GoogLeNet with handcrafted | 0.017289 at epoch 36 | −0.9373 to 0.9373 | 0.0025839 at epoch 42 | 99.62 |
| 100× | AlexNet with handcrafted | 0.041676 at epoch 27 | −0.9483 to 0.9483 | 0.030079 at epoch 33 | 98.84 |
| 100× | GoogLeNet with handcrafted | 0.040614 at epoch 33 | −0.9493 to 0.9493 | 0.0039246 at epoch 39 | 99.28 |
| 200× | AlexNet with handcrafted | 0.028025 at epoch 32 | −0.9181 to 0.9181 | 0.034159 at epoch 38 | 99.11 |
| 200× | GoogLeNet with handcrafted | 0.017914 at epoch 31 | −0.9496 to 0.9496 | 0.0027874 at epoch 37 | 99.03 |
| 400× | AlexNet with handcrafted | 0.003744 at epoch 21 | −0.42 to 0.42 | 0.00054491 at epoch 27 | 100 |
| 400× | GoogLeNet with handcrafted | 0.00703 at epoch 26 | −0.8325 to 0.8325 | 0.00029889 at epoch 32 | 99.75 |
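In every row of the training table, the gradient is reported exactly six epochs after the best validation performance (41 and 47, 36 and 42, and so on down to 26 and 32). That pattern is consistent with validation-based early stopping: training continues until the validation error has failed to improve for six consecutive epochs, which is the default `max_fail` setting of MATLAB's neural network training functions. The table does not state the stopping rule, so the sketch below illustrates it under that assumption: given a per-epoch validation-error trace, it returns the best epoch and the epoch at which training stops.

```python
def early_stopping_epochs(val_losses, max_fail=6):
    """Return (best_epoch, stop_epoch) for validation-based early stopping.

    val_losses[e] is the validation error after epoch e (epoch 0 = before
    training). Training stops once the error has failed to improve on the
    best value for max_fail consecutive epochs; the weights from best_epoch
    are the ones kept.
    """
    best_epoch, best = 0, val_losses[0]
    fails = 0
    for epoch in range(1, len(val_losses)):
        if val_losses[epoch] < best:
            best, best_epoch, fails = val_losses[epoch], epoch, 0
        else:
            fails += 1
            if fails >= max_fail:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Synthetic trace: improves until epoch 41, then stalls.
trace = [1.0 / (e + 1) for e in range(42)] + [0.5] * 20
print(early_stopping_epochs(trace))  # (41, 47)
```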

| Metric | 40× AlexNet with handcrafted | 40× GoogLeNet with handcrafted | 100× AlexNet with handcrafted | 100× GoogLeNet with handcrafted | 200× AlexNet with handcrafted | 200× GoogLeNet with handcrafted | 400× AlexNet with handcrafted | 400× GoogLeNet with handcrafted |
|---|---|---|---|---|---|---|---|---|
| Accuracy % | 99.2 | 99.5 | 99.8 | 99.5 | 99.5 | 99.8 | 100 | 99.7 |
| Precision % | 99.5 | 99.72 | 100 | 99.64 | 99.58 | 99.81 | 100 | 99.78 |
| Sensitivity % | 99.42 | 99.21 | 99.82 | 99.11 | 99.75 | 100 | 100 | 100 |
| Specificity % | 99.23 | 99.43 | 99.67 | 99.23 | 99.34 | 100 | 100 | 100 |
| AUC % | 99.69 | 99.62 | 98.84 | 99.28 | 99.11 | 99.03 | 100 | 99.75 |
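These results come from an ANN fed with fused vectors, in which each image's CNN features are concatenated with its handcrafted features. This section does not give the handcrafted descriptors, the feature dimensions, or any dimensionality-reduction step, so the sketch below assumes both feature sets are already computed per image and shows only the fusion step. Standardizing each block separately before concatenation is an assumed design choice, and the array sizes are hypothetical.

```python
import numpy as np

def zscore(block, eps=1e-8):
    """Standardize each feature (column) to zero mean and unit variance."""
    return (block - block.mean(axis=0)) / (block.std(axis=0) + eps)

def fuse(deep, handcrafted):
    """Serial fusion: concatenate per-image deep and handcrafted vectors.

    deep:        (n_images, d_deep) array, e.g. CNN activations
    handcrafted: (n_images, d_hand) array, e.g. texture or colour descriptors
    Each block is standardized separately (assumption) so neither dominates
    the fused vector purely because of its numeric scale.
    """
    if deep.shape[0] != handcrafted.shape[0]:
        raise ValueError("both feature sets must describe the same images")
    return np.hstack([zscore(deep), zscore(handcrafted)])

# Hypothetical sizes: 10 images, 5 deep and 3 handcrafted features each.
rng = np.random.default_rng(0)
fused = fuse(rng.normal(size=(10, 5)) * 100, rng.normal(size=(10, 3)))
print(fused.shape)  # (10, 8)
```

In a real pipeline the means and standard deviations would be estimated on the training images only and reused for validation and test images, so that no test statistics leak into training.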

| Technique | Magnification | Proposed System | Benign accuracy % | Malignant accuracy % | Overall accuracy % |
|---|---|---|---|---|---|
| Hybrid CNN with SVM | 40× | AlexNet + SVM | 96.8 | 97.4 | 97.2 |
| Hybrid CNN with SVM | 40× | GoogLeNet + SVM | 92 | 96.7 | 95.2 |
| Hybrid CNN with SVM | 100× | AlexNet + SVM | 98.4 | 99 | 98.8 |
| Hybrid CNN with SVM | 100× | GoogLeNet + SVM | 90.2 | 97.6 | 95.4 |
| Hybrid CNN with SVM | 200× | AlexNet + SVM | 93.6 | 99.3 | 97.5 |
| Hybrid CNN with SVM | 200× | GoogLeNet + SVM | 91.2 | 96.6 | 96.3 |
| Hybrid CNN with SVM | 400× | AlexNet + SVM | 94.1 | 96.7 | 95.9 |
| Hybrid CNN with SVM | 400× | GoogLeNet + SVM | 94.1 | 98 | 96.7 |
| ANN based on fusion features | 40× | AlexNet with handcrafted | 97.6 | 100 | 99.2 |
| ANN based on fusion features | 40× | GoogLeNet with handcrafted | 98.4 | 100 | 99.5 |
| ANN based on fusion features | 100× | AlexNet with handcrafted | 99.2 | 100 | 99.8 |
| ANN based on fusion features | 100× | GoogLeNet with handcrafted | 98.4 | 100 | 99.5 |
| ANN based on fusion features | 200× | AlexNet with handcrafted | 98.4 | 100 | 99.5 |
| ANN based on fusion features | 200× | GoogLeNet with handcrafted | 100 | 99.6 | 99.8 |
| ANN based on fusion features | 400× | AlexNet with handcrafted | 100 | 100 | 100 |
| ANN based on fusion features | 400× | GoogLeNet with handcrafted | 100 | 99.6 | 99.7 |
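Reading the benign and malignant columns of the comparison table as per-class accuracies, the overall accuracy should equal the mean of the two weighted by the number of test images in each class. The sketch below recovers the number of correctly classified images per class by rounding (an assumption, since the percentages are themselves rounded) and recomputes the overall figure using the test counts from the split table.

```python
# Test-set sizes (benign, malignant) per magnification, from the split table.
TEST_COUNTS = {"40x": (125, 274), "100x": (129, 287),
               "200x": (125, 278), "400x": (118, 265)}

def overall_accuracy(benign_pct, malignant_pct, n_benign, n_malignant):
    """Overall accuracy (%) implied by per-class accuracies and class sizes.
    Correct counts per class are recovered by rounding to whole images."""
    correct = (round(benign_pct / 100 * n_benign)
               + round(malignant_pct / 100 * n_malignant))
    return 100 * correct / (n_benign + n_malignant)

nb, nm = TEST_COUNTS["40x"]
print(round(overall_accuracy(96.8, 97.4, nb, nm), 1))  # 40x AlexNet + SVM
print(round(overall_accuracy(97.6, 100, nb, nm), 1))   # 40x AlexNet with handcrafted (ANN)
```

The fusion-feature rows reconcile this way, as do several SVM rows (for example 40× and 200× AlexNet + SVM). A few SVM rows do not reconcile exactly under this reading; for 100× GoogLeNet + SVM, neither 116 nor 117 correct images out of 129 rounds to 90.2%.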

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al-Jabbar, M.; Alshahrani, M.; Senan, E.M.; Ahmed, I.A. Multi-Method Diagnosis of Histopathological Images for Early Detection of Breast Cancer Based on Hybrid and Deep Learning. Mathematics 2023, 11, 1429. https://doi.org/10.3390/math11061429
