Abstract

Spatial-temporal sequence prediction is one of the hottest topics in deep learning because of its wide range of potential applications in video-like data processing, notably weather forecasting. Since most spatial-temporal observations evolve under physical laws, we adopt an attentional gating scheme to leverage the dynamic patterns captured by tailored convolution structures and propose a novel neural network, PastNet, to achieve accurate predictions. By highlighting the useful parts of the whole feature map, the gating units help increase the efficiency of the architecture. Extensive experiments on synthetic and real-world datasets show that PastNet performs this task better than baseline methods.

MSC:

68T07

1. Introduction

Weather forecasting has long concerned scientists because it supports human welfare in many ways, including agriculture, transportation, social and economic decisions, and catastrophe prevention [1,2]. Operational numerical weather prediction (NWP) systems, which simulate weather processes governed by nonlinear atmospheric physical equations (including the Navier–Stokes and mass continuity equations) on a discrete numerical grid, have dominated this task since their introduction decades ago [3]. Although they have been improved continuously, NWP systems usually consume heavy computing resources and need hours of calculation to reach a steady-state simulation [4], which makes them weak in some essential scenarios. On top of that, climate change is increasing the frequency of extreme weather, with higher losses of life and property [1,5]. Thus, more actionable solutions that effectively capture the underlying drifting patterns are urgently needed, as are accurate weather predictions for crisis management and resource planning. Meanwhile, artificial intelligence (AI) methods, most prominently deep neural networks (DNNs), have shown their ability to learn from nonlinear systems through an array of successful applications, such as language understanding, visual object recognition, drug discovery, and genomics [6]. Inspired by these exciting accomplishments, researchers have exploited DNNs to enhance weather forecasting in different respects. Rasp et al. [7,8,9] adopted DNNs as a post-processing method for NWP to correct its systematic bias. Adewoyin and Rodrigues [10,11] proposed deep architectures to downscale NWP products to higher horizontal resolutions. Liu et al. explored AI-based identification of severe weather and climate events [12,13,14]. These works show that machine learning algorithms can be used in concert with NWP models to boost their performance. However, their dependency on NWP output leads to poor timeliness in emergent situations.

On the other hand, spatial-temporal data processing has become a research hot spot in recent years, owing to the ubiquity of video data generated by smartphones, autonomous vehicles, and surveillance systems, and deep learning approaches have found considerable success in this field [15,16,17]. Building on those works, spatial-temporal sequence-to-sequence predictive networks were introduced as a purely data-driven method to describe weather conditions in advance [18,19,20]. In these works, meteorological radar echoes or two-dimensional weather features were processed as images with deep learning approaches, in which convolutional layers discovered the spatial correlation between different places and recurrent structures then captured their developing trends over time. However, unlike videos, the evolution of meteorological data is strongly restricted by physical laws, which typical CNNs ignore. To overcome this disadvantage, variations of recurrent neural networks have been introduced to represent partial differential equations (PDEs) in order to leverage physical knowledge, and they outperform their counterparts [21,22]. Guen et al. [22] mapped frame series into a latent space, where they separated the information into two parts, namely physical features, which can be described by PDEs, and a residual part, which carries the constant details and is modeled with CNNs. Despite its exciting representational performance, this arbitrary divide-and-combine architecture could not make the most of the dynamical information, since the residual outputs were generated with an independent convolutional recurrent network. In this set-up, the recognition of dynamical regions in the residual part is suppressed by the major areas of each frame, which remain static between time steps.

To address this problem, this paper adopts an attention scheme which can automatically learn to focus on target regions without additional supervision [23]. It sets an attention gate between the physical and residual paths in order to leverage the dynamical information and better capture interesting details. It also proposes a novel physical, attention-gated spatial-temporal predictive network (PastNet). Benefiting from physical attention as an indication of evolution from frame to frame, PastNet can successfully highlight salient features useful for generative tasks. In extensive experiments, it performs better than others and has the potential to generate relatively more accurate information in scenarios involving physical movement, especially weather forecasting. The rest of the sections are organized as follows. Section 2 and Section 3 provide preliminary information about spatial-temporal predictive methodologies for weather prediction and demonstrate the design detail of the proposed models. Experiments with both synthetic and real-world datasets are discussed in Section 4. Section 5 concludes the whole paper and illustrates the future work.

2. Preliminaries

2.1. Problem Statement

Spatiotemporal sequence-to-sequence predictive problems, including data-driven weather forecasting, take spatial data sequences as input to generate the unobserved sequences. The known data from periodic observations of a dynamical system over a spatial region are represented by an $M \times N$ grid, which consists of M rows and N columns. For each observation, C measurements are taken at every point in the grid. One observation can be represented by a tensor $x \in \mathbf{R}^{C \times M \times N}$, where $\mathbf{R}$ denotes the value space of the observations. The sequence with a length T used for prediction is denoted by $x_{t-T+1}, \ldots, x_{t}$. The prediction objective is the future system status with a length K, denoted by $x_{t+1}, \ldots, x_{t+K}$. Thus, the problem can be defined as shown in Equation (1):

$$\hat{x}_{t+1}, \ldots, \hat{x}_{t+K} = \underset{x_{t+1}, \ldots, x_{t+K}}{\arg\max}\; p\left(x_{t+1}, \ldots, x_{t+K} \mid x_{t-T+1}, \ldots, x_{t}\right) \qquad (1)$$

To be specific, taking air temperature as an example, the observation over a square region at every timestamp is a two-dimensional matrix (C = 1 and M = N).
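To make the tensor shapes in this problem statement concrete, the following minimal NumPy sketch builds an input sequence of T frames and produces K future frames with a trivial persistence rule (repeating the last observed frame). The function name `persistence_forecast` and the sizes used are illustrative assumptions; the persistence rule is only a stand-in predictor and is not the model proposed in this paper.

```python
import numpy as np


def persistence_forecast(history: np.ndarray, horizon: int) -> np.ndarray:
    """Repeat the last observed frame `horizon` times.

    history has shape (T, C, M, N); the result has shape (K, C, M, N).
    This is a trivial stand-in predictor, not PastNet.
    """
    last_frame = history[-1]  # shape (C, M, N)
    return np.repeat(last_frame[None, ...], horizon, axis=0)


# Assumed sizes for illustration: T = 10 observed frames, K = 10 future frames,
# C = 1 variable (e.g., air temperature) on an M x N = 80 x 80 grid.
T, K, C, M, N = 10, 10, 1, 80, 80
rng = np.random.default_rng(0)
history = rng.normal(size=(T, C, M, N))
forecast = persistence_forecast(history, K)
print(forecast.shape)  # (10, 1, 80, 80)
```

Any sequence-to-sequence predictor for this task has the same input and output shapes; only the mapping between them differs.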

2.2. Physical Dynamics and PhyDNet

To solve the above problem, we adopted the framework in [22]. Guen et al. assumed that there exists a conceptual latent space , in which the system status can be decomposed as a physical dynamic factor and residual factor. Formally, let encoder , decoder , and so that

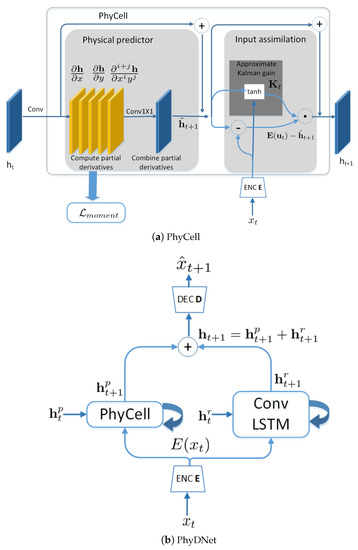

where and represent the physical component and residual component of h, respectively. The evolution of is governed by partial differential equations (PDEs), but that of is not. For the physical part, PhyCell was proven to be capable of learning the general forms of PDEs to build physically constrained neural networks [22]. As shown in Figure 1a, PhyCell generates the physical status of the next time step.

Figure 1.

PhyCell for estimation of PDEs and PhyDNet for spatiotemporal prediction.

For the residual part, ConvLSTM was used to generate the residual status in the space $\mathcal{H}$:

$$h^{p}_{t+1} = \mathcal{M}_{p}\left(E(x_{t})\right), \qquad h^{r}_{t+1} = \mathcal{M}_{r}\left(E(x_{t})\right)$$

where $E(x_{t})$ is the encoded observation at time t, and $\mathcal{M}_{p}$ and $\mathcal{M}_{r}$ denote PhyCell and ConvLSTM, respectively. Then, PhyDNet, as demonstrated in Figure 1b, directly sums them up and decodes them to compose a final prediction of the next time step in the space R. PhyDNet adopts the strong assumption that $h^{p}$ and $h^{r}$ are totally independent of each other, which can hardly be satisfied in the real world. Intuitively, it is quite possible that the residual features change along with the physical state transition. At the same time, within the whole feature map, only a minor proportion of pixels contributes to the evolution of the sequence, and these pixels are suppressed by the remaining uninformative ones without any emphasis operation.

2.3. Attention Gates

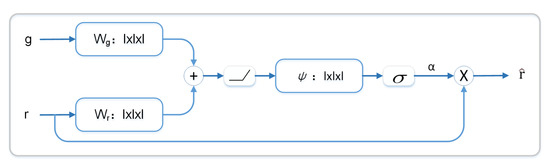

Attention gates have been proven to be competent at identifying objectively interesting parts of features from irrelevant ones within a global scope in order to assist with the identification of salient information carried by sparse feature maps [23]. As shown in Figure 2, we used the physical dynamic feature as a gating vector for each pixel to determine the focus regions in the corresponding residual map.

Figure 2.

Attention gate.

The physical gating vector already contains dynamic information about the whole picture, which may reveal in detail where the evolution will take place. After this gate, the residual parts with higher physical attention coefficients are emphasized, while irrelevant regions are suppressed. Similar to [23], in this work, we adopt additive attention, which achieves higher prediction accuracy than multiplicative attention [24] and can be formulated as in Equations (6) and (7):

$$q_{att} = \psi^{T}\,\sigma_{1}\left(W_{r}^{T} r + W_{g}^{T} g + b_{g}\right) + b_{\psi} \qquad (6)$$

$$\alpha = \sigma_{2}\left(q_{att}\right) \qquad (7)$$

where r and g denote the residual part and the physical gating vector calculated by PhyCell, respectively; $W_{r}$, $W_{g}$, and $\psi$ are linear transformations with bias terms $b_{g}$ and $b_{\psi}$; and $\sigma_{1}$ and $\sigma_{2}$ indicate the ReLU and sigmoid activation functions, respectively. Thus, the residual part can be updated with the attention coefficients $\alpha$ as in Equation (8):

$$\hat{r} = \alpha \cdot r \qquad (8)$$
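The gating described above can be sketched in a few lines of NumPy. This is a minimal illustration in the style of the additive attention gate of [23], not the authors' implementation: the linear maps are written as 1 × 1 convolutions (channel-wise matrix products at each pixel), and the names `attention_gate`, `W_r`, `W_g`, `psi`, the bias terms, and all channel sizes are assumptions chosen for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(r, g, W_r, W_g, b, psi, b_psi):
    """Additive attention gate with 1x1 convolutions over channels.

    r:   residual feature map, shape (C_r, H, W)
    g:   physical gating feature map, shape (C_g, H, W)
    W_r: (C_int, C_r), W_g: (C_int, C_g), b: (C_int,)
    psi: (C_int,), b_psi: scalar
    Returns the gated residual (C_r, H, W) and the coefficients alpha (H, W).
    """
    # A 1x1 convolution is a linear map over channels applied at every pixel.
    proj = np.einsum("ic,chw->ihw", W_r, r) + np.einsum("ic,chw->ihw", W_g, g)
    hidden = np.maximum(proj + b[:, None, None], 0.0)  # ReLU
    q_att = np.einsum("i,ihw->hw", psi, hidden) + b_psi
    alpha = sigmoid(q_att)  # one coefficient per pixel
    return alpha[None, :, :] * r, alpha  # rescale every residual channel


# Assumed sizes: 3 residual channels, 5 gating channels, 4 intermediate channels.
rng = np.random.default_rng(0)
r = rng.normal(size=(3, 8, 8))
g = rng.normal(size=(5, 8, 8))
W_r = rng.normal(size=(4, 3))
W_g = rng.normal(size=(4, 5))
b = np.zeros(4)
psi = rng.normal(size=4)
gated, alpha = attention_gate(r, g, W_r, W_g, b, psi, 0.0)
print(gated.shape, alpha.shape)  # (3, 8, 8) (8, 8)
```

Because the sigmoid output lies between 0 and 1, the gate can only attenuate residual activations; regions with coefficients close to 1 are kept nearly unchanged, while regions with coefficients close to 0 are suppressed.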

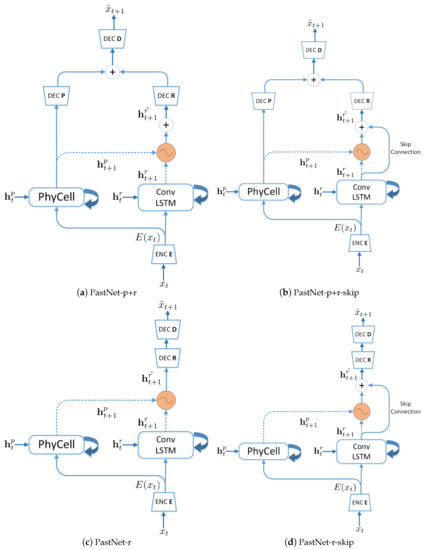

With different treatments of the dynamic feature and skip connection, as detailed in Table 1, we implemented four versions of PastNet, namely PastNet-p+r, PastNet-p+r-skip, PastNet-r, and PastNet-r-skip.

Table 1.

Set-up of four versions of PastNet. PastNet-p+r decodes the physical dynamic feature and adds it to the decoded residual feature to compose the prediction. PastNet-p+r-skip adds a skip connection from the ConvLSTM output to the output of the attention gate. PastNet-r drops the physical dynamic feature after the attention gate and only keeps the gated residual feature to feed into the final decoder. PastNet-r-skip adds the same skip connection to PastNet-r.

Their architectures are demonstrated in Figure 3. First, the data sample goes through an encoder, where the observation is mapped into a latent space to be disentangled into physical factors and residual features. After that, the encoded features go to PhyCell and ConvLSTM separately for further information extraction. In all four structures shown in Figure 3, the encoded feature map is taken by PhyCell for movement pattern understanding, with an output of the next-time-step physical status in the hidden space, denoted by $h^{p}_{t+1}$.

Figure 3.

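Four versions of PastNet. PastNet-p+r adds the physical and gated residual parts to compose the next-step estimate. PastNet-p+r-skip adds a skip connection from the ConvLSTM output to the output of the attention gate. PastNet-r drops the physical dynamic feature after the attention gate and only keeps the gated residual feature to feed into the final decoder. PastNet-r-skip adds the same skip connection to PastNet-r.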

Meanwhile, a convolutional LSTM block takes in the encoded features recurrently for sequence generation, which is a common solution for spatiotemporal prediction tasks but suffers from the problem mentioned above. To tackle this, the physical information then serves as a gating vector to trigger the attention gate and adjust the focus distribution of the network, improving the accuracy of the residual estimation of the next frame, denoted by $\hat{h}^{r}_{t+1}$. In PastNet-p+r-skip and PastNet-r-skip, a highway is set between the ConvLSTM block and the output of the attention gate, while in PastNet-r and PastNet-r-skip, the physical dynamic feature is no longer used after the gate, and only the residual part is decoded for the next step. Lastly, the generated hidden feature of the next time step is mapped back by a decoder block to the final output of the network, $\hat{x}_{t+1}$, which represents the value of x at time t + 1. For instance, the process of PastNet-p+r can be formally described by Equation (9):

Both the PhyCell and ConvLSTM blocks are recurrent structures which contain hidden state vectors to memorize useful signals that pass through. They deal with sequential data samples successively so that the underlying temporal pattern can be captured and leveraged to estimate the future development of the observed variables.
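The difference between the four variants can be summarized by how the physical and residual features are merged after the attention gate. The sketch below is a simplified reading of the descriptions above, not the authors' code: it assumes that the merge happens by summation in the latent space before a single decoder, and that the skip connection adds the ungated ConvLSTM output to the gated one. The function `combine_latents` and the `VARIANTS` table are hypothetical names introduced for illustration.

```python
import numpy as np

# (keep_physical, use_skip) flags per variant, following the textual description.
VARIANTS = {
    "PastNet-p+r": (True, False),
    "PastNet-p+r-skip": (True, True),
    "PastNet-r": (False, False),
    "PastNet-r-skip": (False, True),
}


def combine_latents(h_p, h_r, alpha, variant):
    """Merge physical and residual latents before decoding.

    h_p:   physical latent from the PhyCell path, shape (C, H, W)
    h_r:   residual latent from the ConvLSTM path, shape (C, H, W)
    alpha: attention coefficients from the gate, shape (H, W)
    """
    keep_physical, use_skip = VARIANTS[variant]
    gated = alpha[None, :, :] * h_r      # attention-gated residual
    if use_skip:
        gated = gated + h_r              # assumed form of the skip connection
    return h_p + gated if keep_physical else gated


# Toy inputs: constant feature maps make the arithmetic easy to follow.
h_p = np.ones((2, 3, 3))
h_r = 2.0 * np.ones((2, 3, 3))
alpha = 0.5 * np.ones((3, 3))
merged = {name: combine_latents(h_p, h_r, alpha, name) for name in VARIANTS}
for name, value in merged.items():
    print(name, value[0, 0, 0])
```

With these toy inputs, the gated residual equals 1 everywhere, so the four variants yield 2, 4, 1, and 3, respectively, which makes the effect of keeping the physical feature and of the skip connection easy to see.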

3. PastNet Model for Spatiotemporal Prediction

Inspired by the works mentioned above, we propose a physical attention-gated spatiotemporal predictive network (PastNet), which leverages the physical dynamic feature captured by PhyCell as a gating vector to trigger the attention scheme with the purpose of assisting the convolutional recurrent neural network to concentrate more on changing areas and better predict the future states of the spatial data series. After that, the physical feature may be either dropped or decoded by a specific decoder, as in the PhyDNet architecture. On top of that, as the skip connection is a popular structure in DNNs for avoiding gradient vanishing, we also consider using it in PastNet.

4. Experiments

To evaluate the performance of our architecture, we first compared it with PhyDNet on the synthetic Moving MNIST dataset to test the effectiveness of the attention gate. To verify the capability of our model on the weather forecasting problem, we extracted the air temperature subset of a certain region from ERA5-Land, which is commonly used for meteorological method studies (the dataset can be downloaded at https://cds.climate.copernicus.eu/cdsapp#!/dataset/reanalysis-era5-land?tab=overview, accessed on 9 March 2023). All the experiments were conducted on a computer with 64 Intel Xeon 5218 CPUs and 512 GB of RAM. The DNNs were implemented with PyTorch 1.8.0 and executed on an Nvidia A100 GPU with 40 GB of memory.

4.1. Datasets

Moving MNIST is a standard synthetic dataset derived from MNIST. Similar to previous works, we generated handwritten digits moving and bouncing in a box with randomly initialized velocity amplitudes [25,26]. For each data instance of 20 images, the first 10 images were taken as input observations, and the subsequent 10 were the prediction targets. The training, validation, and testing sets contained 10,000, 2000, and 3000 instances, respectively.
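The generation procedure can be illustrated with a short NumPy sketch. It assumes the commonly used Moving MNIST geometry of a 64 × 64 canvas and 28 × 28 digits, which is not stated in this section, and it uses a patch of ones as a stand-in for a real MNIST digit. The helper names `bouncing_trajectory` and `render` and the `max_speed` parameter are hypothetical.

```python
import numpy as np


def bouncing_trajectory(num_frames, canvas, digit, rng, max_speed=3.0):
    """Top-left positions of one digit patch bouncing inside a square canvas."""
    limit = canvas - digit  # largest valid top-left coordinate
    pos = rng.uniform(0, limit, size=2)
    vel = rng.uniform(-max_speed, max_speed, size=2)  # random initial velocity
    path = np.empty((num_frames, 2))
    for t in range(num_frames):
        path[t] = pos
        pos = pos + vel
        for axis in range(2):
            if pos[axis] < 0:  # reflect at the lower wall
                pos[axis] = -pos[axis]
                vel[axis] = -vel[axis]
            elif pos[axis] > limit:  # reflect at the upper wall
                pos[axis] = 2 * limit - pos[axis]
                vel[axis] = -vel[axis]
    return path


def render(path, patch, canvas):
    """Paste the patch at each (row, col) position to build the frame sequence."""
    size = patch.shape[0]
    frames = np.zeros((len(path), canvas, canvas), dtype=patch.dtype)
    for t, (row, col) in enumerate(np.floor(path).astype(int)):
        window = frames[t, row:row + size, col:col + size]
        frames[t, row:row + size, col:col + size] = np.maximum(window, patch)
    return frames


rng = np.random.default_rng(0)
patch = np.ones((28, 28), dtype=np.float32)  # stand-in for an MNIST digit
path = bouncing_trajectory(20, canvas=64, digit=28, rng=rng)
frames = render(path, patch, canvas=64)
inputs, targets = frames[:10], frames[10:]  # first 10 observed, last 10 predicted
print(inputs.shape, targets.shape)  # (10, 64, 64) (10, 64, 64)
```

For instances with several digits, the same procedure would be repeated per digit and the rendered frames merged with an element-wise maximum.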

ERA5-Land was derived from ERA5, which is the fifth-generation European Centre for Medium-Range Weather Forecasts (ECMWF) atmospheric reanalysis of the global climate and weather for the past decades. Compared with ERA5, ERA5-Land contains variables around the land surface at an enhanced resolution of $0.1^{\circ} \times 0.1^{\circ}$. ERA5-Land was produced by replaying the land component of the ECMWF ERA5 climate reanalysis, which combines model data with observations from across the world into a globally complete and consistent dataset using the laws of physics. In this work, we selected hourly air temperatures at two meters above the land surface from January 2019 to December 2020 as the training set and data from January to August 2021 as the testing set. All data frames covered the region around Chongqing City, between 26° N–34° N and 104° E–112° E.

4.2. Evaluation Metrics

We adopted the mean square error (MSE) and mean absolute error (MAE) as metrics to evaluate the accuracy of the spatiotemporal sequence forecasting models. Because structural information is vital for Moving MNIST, the structural similarity (SSIM) was also compared between the models on this dataset.
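For reference, the two error metrics can be computed as in the following sketch. It averages over all elements of the prediction; whether the reported values are averaged per pixel or summed per frame is not specified in this section, so the reduction used here is an assumption.

```python
import numpy as np


def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean square error over all elements."""
    return float(np.mean((pred - target) ** 2))


def mae(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute error over all elements."""
    return float(np.mean(np.abs(pred - target)))


# Tiny worked example: the element-wise errors are 0, -2, and 3.
pred = np.array([1.0, 2.0, 3.0])
target = np.array([1.0, 4.0, 0.0])
print(mse(pred, target))  # (0 + 4 + 9) / 3
print(mae(pred, target))  # (0 + 2 + 3) / 3
```

MSE penalizes large deviations more heavily than MAE, which is why the two metrics are usually reported together.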

4.3. Results and Analysis

Similar to Guen et al., we set the PhyCell kernel size to $7 \times 7$ and used the moment matrix to impose physical constraints on the 49 filters. The residual path was composed of a ConvLSTM with 3 layers containing 128, 128, and 64 hidden filters. The network was optimized with Adam, and the initial learning rate was decayed at a rate of 0.9.

The comparisons in Table 2 reveal that all variants of PastNet outperformed PhyDNet, with lower training times. Among them, PastNet-p+r-skip performed best in all metrics, with an MSE lower by 2.6 and an MAE lower by 6 than PhyDNet, while using about 4000 more parameters. By contrast, PastNet-r and PastNet-r-skip had fewer parameters than PhyDNet, since they dropped the physical features after the attention gate and the corresponding decoder was no longer needed.

Table 2.

Comparison of different versions of PastNet and baseline methods on Moving MNIST. All networks were trained on the dataset for 2000 rounds with a batch size of 64. All PastNet models outperformed ConvLSTM and PhyDNet, with a comparable number of parameters. PastNet-p+r-skip gained the best MSE, MAE, and SSIM.

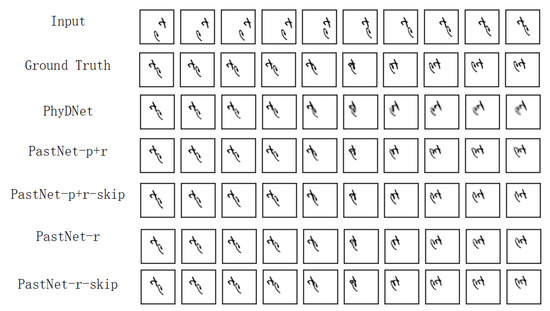

Prediction examples of PastNet and PhyDNet on Moving MNIST with two digits are depicted in Figure 4. It can be seen that the images generated by PastNet are noticeably clearer than those generated by PhyDNet.

Figure 4.

Output examples of models evaluated on Moving MNIST.

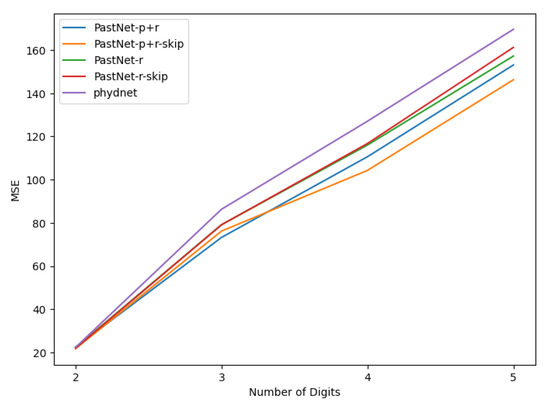

To further evaluate the performance of our models on an out-of-domain dataset, we tested them on Moving MNIST with numbers of digits from 3 to 5. Not surprisingly, as depicted in Figure 5, the MSEs of all tested models grew with the number of digits within an image, and the MSE from every version of PastNet was always smaller than that for PhyDNet.

Figure 5.

MSEs of trained models on Moving MNIST with different numbers of digits.

For the air temperature prediction task, we ran all models on a subset of the ERA5-Land dataset covering the area of Chongqing. In data preprocessing, we used a sliding window scheme to transform the data series into samples with the shape of $20 \times 1 \times 80 \times 80$, containing 20 temporally successive image-like feature maps with 1 channel and 80 pixels for both the width and height. The first 10 frames were used for the input, and the remaining 10 were for the ground truth. The set-ups for the tested models and computing environment were the same as in the experiments on Moving MNIST. The evaluation results are showcased in Table 3. It is clear that PastNet outperformed PhyDNet in this task.
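The sliding window preprocessing can be sketched as follows. The window length of 20 and the 10/10 split follow the description above; the stride of 1 and the synthetic 48-hour series are assumptions made for illustration, and `sliding_windows` is a hypothetical helper name.

```python
import numpy as np


def sliding_windows(series: np.ndarray, window: int = 20, stride: int = 1) -> np.ndarray:
    """Cut a (num_hours, C, H, W) series into (num_samples, window, C, H, W) samples."""
    starts = range(0, series.shape[0] - window + 1, stride)
    return np.stack([series[s:s + window] for s in starts])


# Synthetic stand-in for hourly temperature maps: frame i is filled with the value i.
hours = 48
series = np.zeros((hours, 1, 80, 80), dtype=np.float32)
series += np.arange(hours, dtype=np.float32)[:, None, None, None]

samples = sliding_windows(series)                   # 48 - 20 + 1 = 29 samples
inputs, targets = samples[:, :10], samples[:, 10:]  # first 10 in, last 10 out
print(samples.shape)  # (29, 20, 1, 80, 80)
print(inputs.shape, targets.shape)
```

With a stride of 1, consecutive samples overlap in 19 of their 20 frames; a larger stride would reduce this overlap at the cost of fewer samples.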

Table 3.

MSEs and MAEs for air temperature prediction task.

5. Conclusions

In this paper, we proposed and evaluated a deep architecture, PastNet, which pays attention to physically constrained dynamics to assist spatial-temporal prediction tasks governed by physical laws. Extensive experiments on both the synthetic Moving MNIST dataset and a real-world air temperature dataset showed that PastNet can make more accurate predictions than its counterparts. In future work, other methods can be tried to improve the proposed models, such as replacing the summation before the decoder units with nonlinear transformation layers for feature merging, as well as using a transformer instead of a recurrent network to improve parallelizability.

Author Contributions

Conceptualization, X.Z. and Q.S.; methodology, X.Z. and X.L.; model building and code writing, X.Z.; writing—original draft preparation, X.Z.; writing—review and editing, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded partially by the Chinese Academy of Sciences (No. KFJ-STS-QYZD-2021-01-001), Department of Science and Technology of Inner Mongolia Autonomous Region (No. 2022ZY0131), as well as the Inner Mongolia Meteorological Observatory (No. QXT21111102).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Racah, E.; Beckham, C.; Maharaj, T.; Kahou, S.E.; Prabhat, M.; Pal, C. ExtremeWeather: A large-scale climate dataset for semi-supervised detection, localization, and understanding of extreme weather events. Adv. Neural Inf. Process. Syst. 2017, 30, 3403–3414.

2. McGovern, A.; Elmore, K.L.; Gagne, D.J.; Haupt, S.E.; Karstens, C.D.; Lagerquist, R.; Smith, T.; Williams, J.K. Using artificial intelligence to improve real-time decision-making for high-impact weather. Bull. Am. Meteorol. Soc. 2017, 98, 2073–2090.

3. Bauer, P.; Thorpe, A.; Brunet, G. The quiet revolution of numerical weather prediction. Nature 2015, 525, 47–55.

4. Ravuri, S.; Lenc, K.; Willson, M.; Kangin, D.; Lam, R.; Mirowski, P.; Fitzsimons, M.; Athanassiadou, M.; Kashem, S.; Madge, S.; et al. Skilful precipitation nowcasting using deep generative models of radar. Nature 2021, 597, 672–677.

5. Fang, W.; Sha, Y.; Sheng, V.S. Survey on the Application of Artificial Intelligence in ENSO Forecasting. Mathematics 2022, 10, 3793.

6. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

7. Rasp, S.; Lerch, S. Neural networks for postprocessing ensemble weather forecasts. Mon. Weather Rev. 2018, 146, 3885–3900.

8. Price, I.; Rasp, S. Increasing the Accuracy and Resolution of Precipitation Forecasts Using Deep Generative Models. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 28–30 March 2022; pp. 10555–10571.

9. Zhao, X.; Sun, Q.; Tang, W.; Yu, S.; Wang, B. A comprehensive wind speed forecast correction strategy with an artificial intelligence algorithm. Front. Environ. Sci. 2022, 10, 1–12.

10. Adewoyin, R.A.; Dueben, P.; Watson, P.; He, Y.; Dutta, R. TRU-NET: A deep learning approach to high resolution prediction of rainfall. Mach. Learn. 2021, 110, 2035–2062.

11. Rodrigues, E.R.; Oliveira, I.; Cunha, R.; Netto, M. DeepDownscale: A deep learning strategy for high-resolution weather forecast. In Proceedings of the 2018 IEEE 14th International Conference on e-Science (e-Science), Amsterdam, The Netherlands, 29 October–1 November 2018; pp. 415–422.

12. Liu, Y.; Racah, E.; Correa, J.; Khosrowshahi, A.; Lavers, D.; Kunkel, K.; Wehner, M.; Collins, W. Application of deep convolutional neural networks for detecting extreme weather in climate datasets. arXiv 2016, arXiv:1605.01156.

13. Gagne, D.J.; Haupt, S.E.; Nychka, D.W.; Thompson, G. Interpretable deep learning for spatial analysis of severe hailstorms. Mon. Weather Rev. 2019, 147, 2827–2845.

14. Essa, Y.; Hunt, H.G.; Gijben, M.; Ajoodha, R. Deep Learning Prediction of Thunderstorm Severity Using Remote Sensing Weather Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4004–4013.

15. Oprea, S.; Martinez-Gonzalez, P.; Garcia-Garcia, A.; Castro-Vargas, J.A.; Orts-Escolano, S.; Garcia-Rodriguez, J.; Argyros, A. A review on deep learning techniques for video prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2806–2826.

16. Wang, S.; Cao, J.; Yu, P.S. Deep Learning for Spatio-Temporal Data Mining: A Survey. IEEE Trans. Knowl. Data Eng. 2022, 34, 3681–3700.

17. Luo, A.; Shangguan, B.; Yang, C.; Gao, F.; Fang, Z.; Yu, D. Spatial-Temporal Diffusion Convolutional Network: A Novel Framework for Taxi Demand Forecasting. ISPRS Int. J. Geo-Inf. 2022, 11, 193.

18. Shi, X.; Yeung, D.Y. Machine learning for spatiotemporal sequence forecasting: A survey. arXiv 2018, arXiv:1808.06865.

19. Wang, Y.; Wu, H.; Zhang, J.; Gao, Z.; Wang, J.; Yu, P.; Long, M. PredRNN: A Recurrent Neural Network for Spatiotemporal Predictive Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2208–2225.

20. Castro, R.; Souto, Y.M.; Ogasawara, E.; Porto, F.; Bezerra, E. STConvS2S: Spatiotemporal Convolutional Sequence to Sequence Network for weather forecasting. Neurocomputing 2021, 426, 285–298.

21. Long, Z.; Lu, Y.; Ma, X.; Dong, B. PDE-Net: Learning PDEs from data. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholm, Sweden, 10–15 July 2018; Volume 7, pp. 5067–5078.

22. Guen, V.L.; Thome, N. Disentangling physical dynamics from unknown factors for unsupervised video prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11474–11484.

23. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

24. Luong, M.T.; Pham, H.; Manning, C.D. Effective approaches to attention-based neural machine translation. In Proceedings of EMNLP 2015: Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

25. Srivastava, N.; Mansimov, E.; Salakhutdinov, R. Unsupervised learning of video representations using LSTMs. In Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6–11 July 2015; Volume 1, pp. 843–852.

26. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).