Solar Energy Production Forecasting Based on a Hybrid CNN-LSTM-Transformer Model

Abstract

1. Introduction

- Quadratic time computation: the main operation of the Transformer self-attention block, the canonical dot product [22], is computationally expensive and memory-intensive, because its cost grows quadratically with the input length.

- Very large memory for large inputs: long inputs require stacking more encoder/decoder layers, and the required memory is multiplied by the number of stacked layers. This limits the use of Transformers for processing long inputs, such as long time series.

- Low processing speed: the encoder/decoder structure generates its outputs sequentially, one step at a time, which increases the processing time.

- The proposed clustering technique increased the forecasting accuracy. Solar irradiation in the collected data varies widely between seasons, so clustering the data into four main clusters based on seasons enhanced the learning capacity of the proposed model.

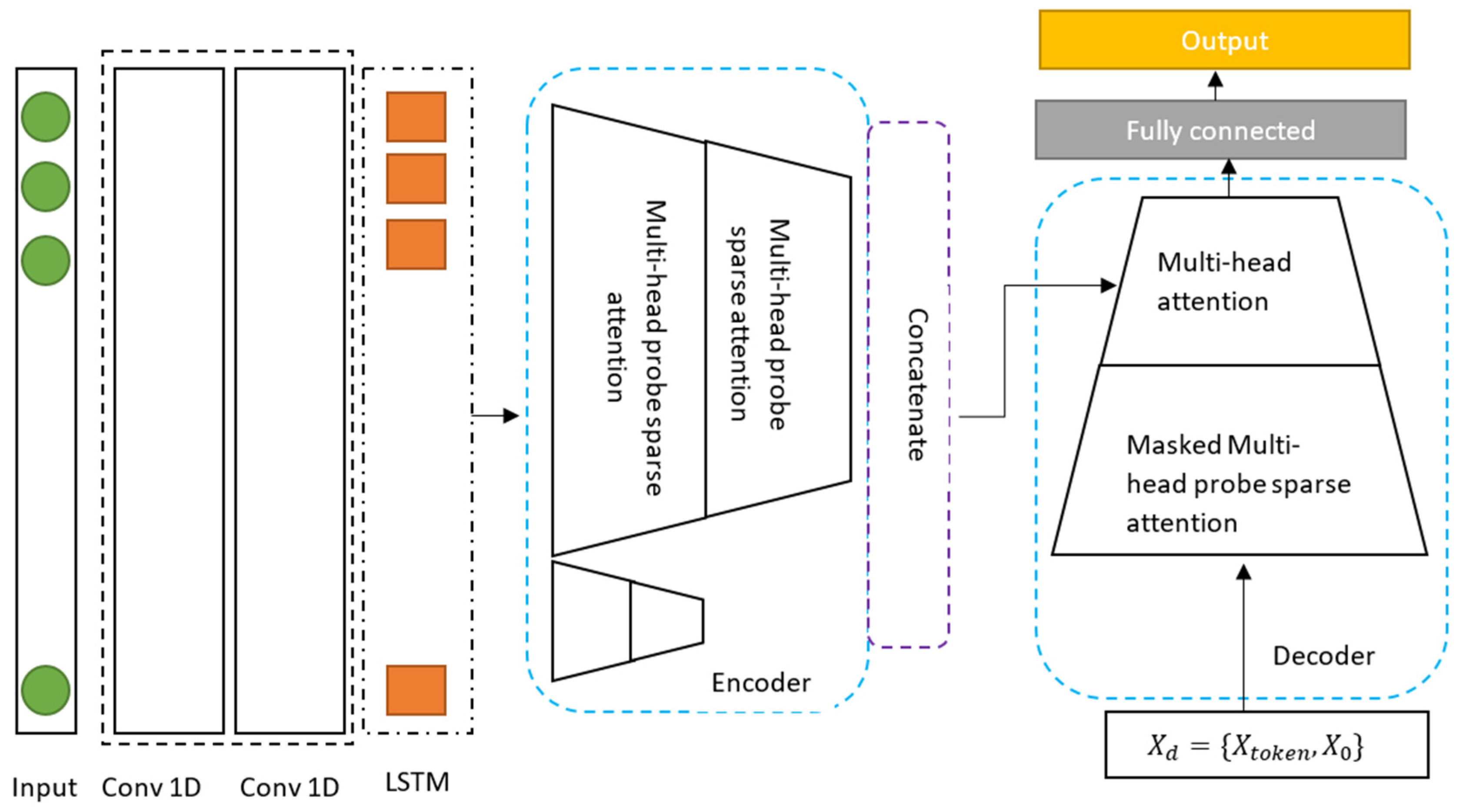

- The CNN-LSTM combination yielded a more powerful forecasting model. CNN and LSTM are two powerful deep learning models for time-series forecasting, and combining them proved very effective for forecasting solar energy production.

- Adopting a Transformer improved performance. The proposed Transformer was important for enhancing the CNN-LSTM combination: it makes the LSTM attend to the relevant features in the historical time series and thus generate more accurate predictions.

- The proposed probe sparse operation replaces the canonical dot product in the self-attention block, reducing computation time and memory storage.

- The proposed distillation technique privileges the dominant attention scores in the stacked encoder, reducing computational complexity and allowing longer sequences to be processed.

- The proposed generative decoder produces long output sequences in a single forward pass, which prevents cumulative errors from spreading during inference.
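The probe sparse operation is not specified in this excerpt. The sketch below follows the query-sparsity measurement used by the ProbSparse attention of the Informer model, which is an assumption rather than the authors' confirmed method: each query is scored by the gap between its maximum and mean scaled dot-product score, only the top u = RND(c · ln L) "active" queries receive full attention, and the remaining "lazy" queries output the mean of V. The factor `c` and the function names are hypothetical. For clarity the sketch scores every query against every key, so it is not itself faster; Informer additionally samples keys so that this measurement stays sub-quadratic.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def probe_sparse_attention(Q, K, V, c=5):
    """Sparse attention: only the top-u 'active' queries attend over all keys.

    Q, K: (L, d); V: (L, d_v); c is a hypothetical sampling factor.
    Returns the (L, d_v) output and the indices of the active queries.
    """
    L, d = Q.shape
    scores = Q @ K.T / np.sqrt(d)                      # (L, L) scaled dot products
    # Query sparsity measurement: max score minus mean score of each query
    sparsity = scores.max(axis=1) - scores.mean(axis=1)
    u = min(L, int(round(c * np.log(L))))             # u = RND(c * ln L)
    active = np.argsort(sparsity)[-u:]                 # the u most "peaked" queries
    # Lazy queries receive the mean of V, i.e. uniform attention weights
    out = np.tile(V.mean(axis=0), (L, 1))
    out[active] = softmax(scores[active], axis=1) @ V
    return out, active


rng = np.random.default_rng(0)
L, d = 96, 16
Q, K, V = (rng.standard_normal((L, d)) for _ in range(3))
out, active = probe_sparse_attention(Q, K, V)
print(out.shape, len(active))  # (96, 16) 23
```

With L = 96 and c = 5, only 23 of the 96 queries get a full softmax over the keys, which is where the savings in time and memory would come from once the measurement step is also made sparse.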

2. Related Works

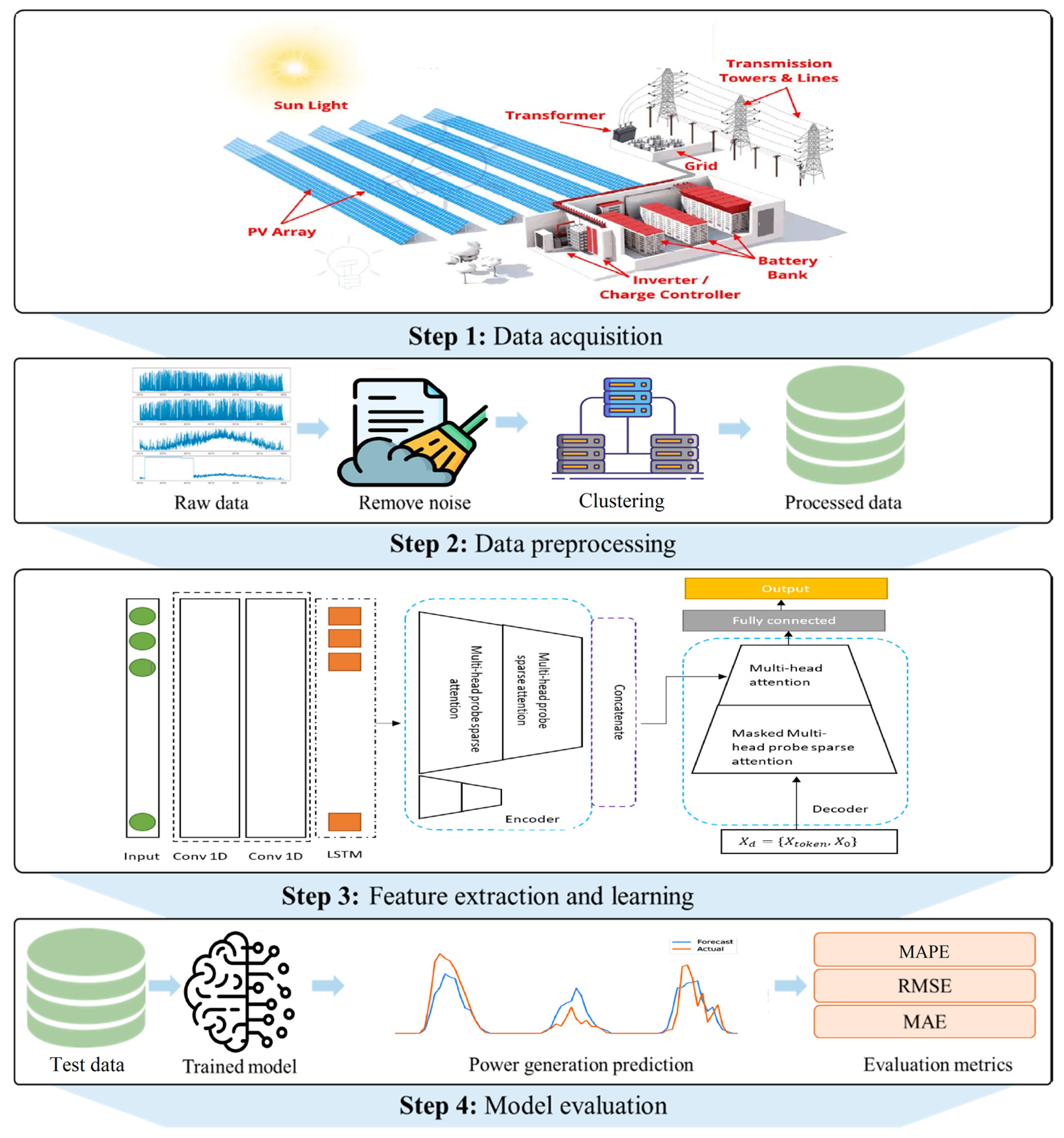

3. Proposed Approach

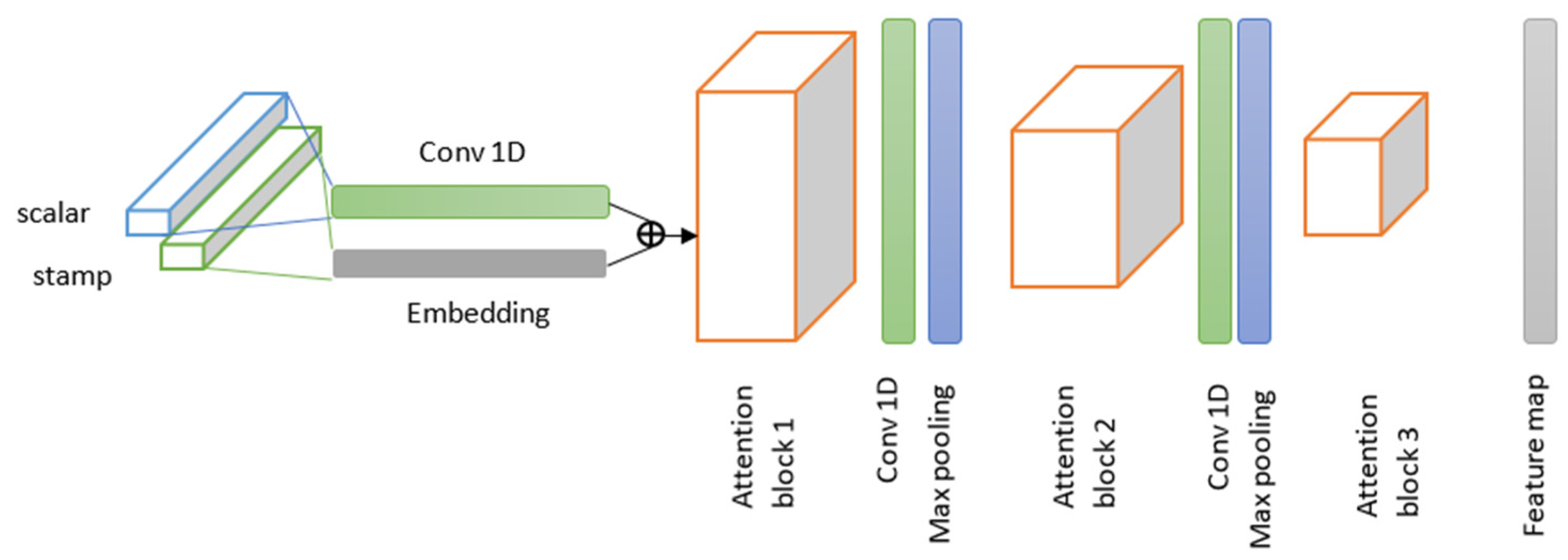

3.1. Proposed Forecasting Model

3.2. Self-Organizing Map Algorithm

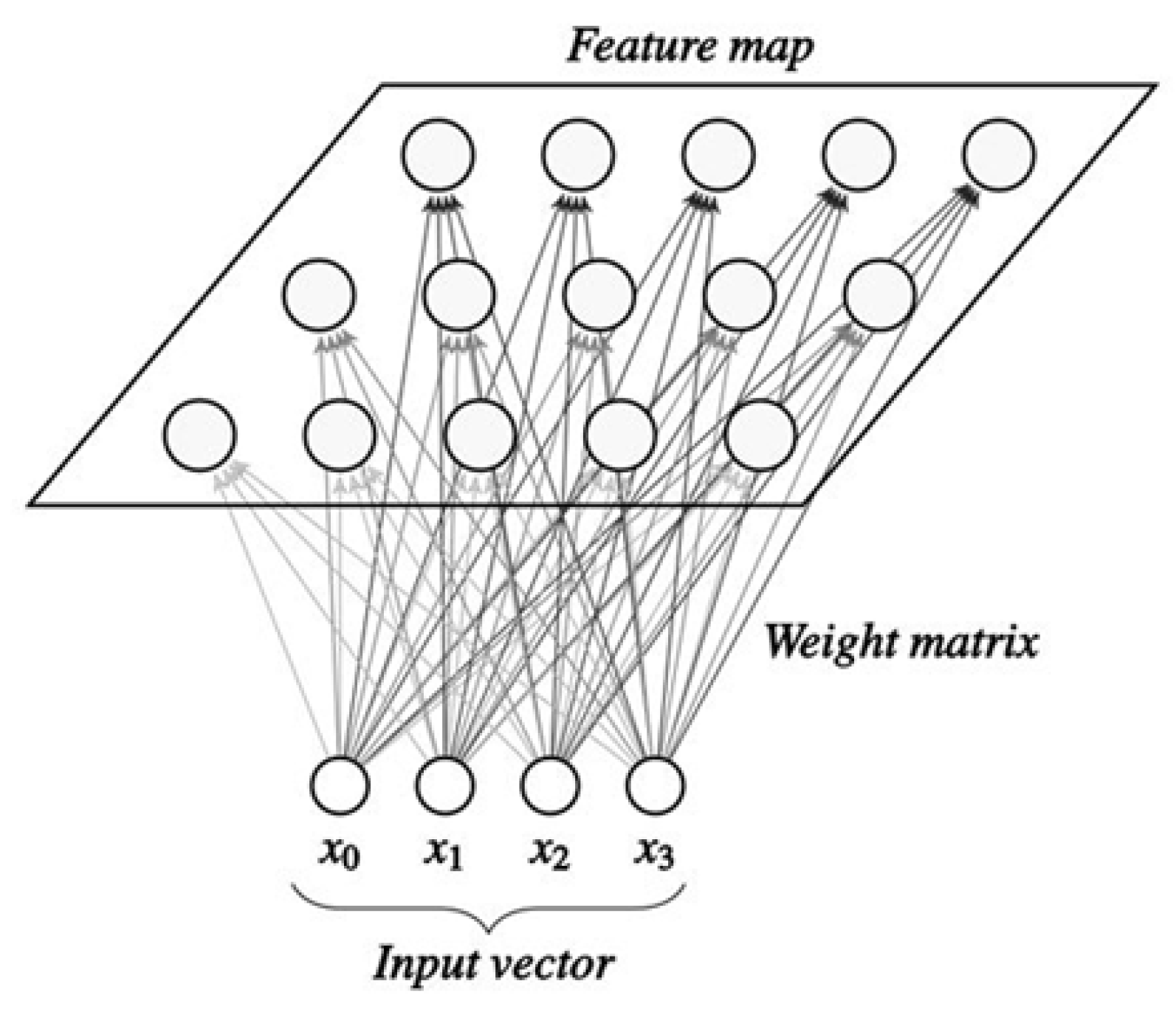

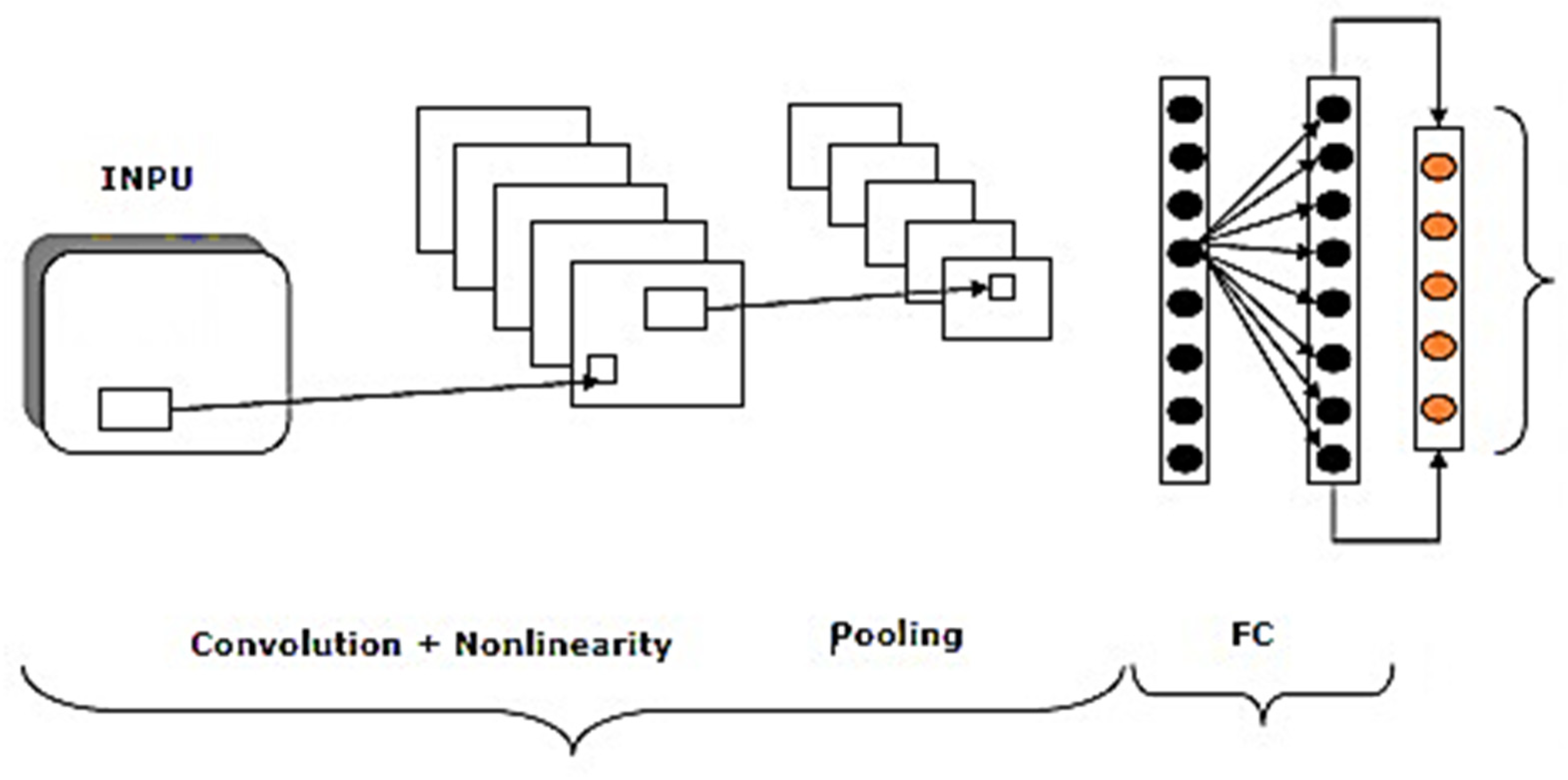
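As an illustration of how a self-organizing map (SOM) can group samples into the four season-based clusters mentioned in the contributions, the sketch below trains a one-dimensional Kohonen map with four nodes. It assumes each sample is a small feature vector (here synthetic 2-D points); the learning-rate and neighbourhood schedules are illustrative choices, not the paper's settings.

```python
import numpy as np


def train_som(X, n_nodes=4, epochs=50, lr0=0.5, sigma0=1.0, seed=0):
    """Train a 1-D self-organizing map and return its node weight vectors."""
    rng = np.random.default_rng(seed)
    # Initialise node weights from randomly chosen samples
    W = X[rng.choice(len(X), n_nodes, replace=False)].astype(float)
    positions = np.arange(n_nodes)                      # node coordinates on a 1-D grid
    total_steps = epochs * len(X)
    step = 0
    for _ in range(epochs):
        for x in X[rng.permutation(len(X))]:
            frac = step / total_steps
            lr = lr0 * (1 - frac)                        # linearly decaying learning rate
            sigma = max(sigma0 * (1 - frac), 1e-3)       # shrinking neighbourhood radius
            bmu = np.argmin(((W - x) ** 2).sum(axis=1))  # best-matching unit
            # Gaussian neighbourhood on the grid, centred on the BMU
            h = np.exp(-((positions - bmu) ** 2) / (2 * sigma ** 2))
            W += lr * h[:, None] * (x - W)
            step += 1
    return W


def assign_clusters(X, W):
    # Each sample joins the cluster of its best-matching unit
    dist = ((X[:, None, :] - W[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)


# Synthetic stand-in for seasonal feature vectors: four separated groups
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0], [5.0, 5.0]])
X = np.vstack([ctr + 0.3 * rng.standard_normal((50, 2)) for ctr in centers])
W = train_som(X)
labels = assign_clusters(X, W)
```

Each cluster label can then select which subset of the training data a forecasting model is fitted on.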

3.3. Convolutional Neural Network

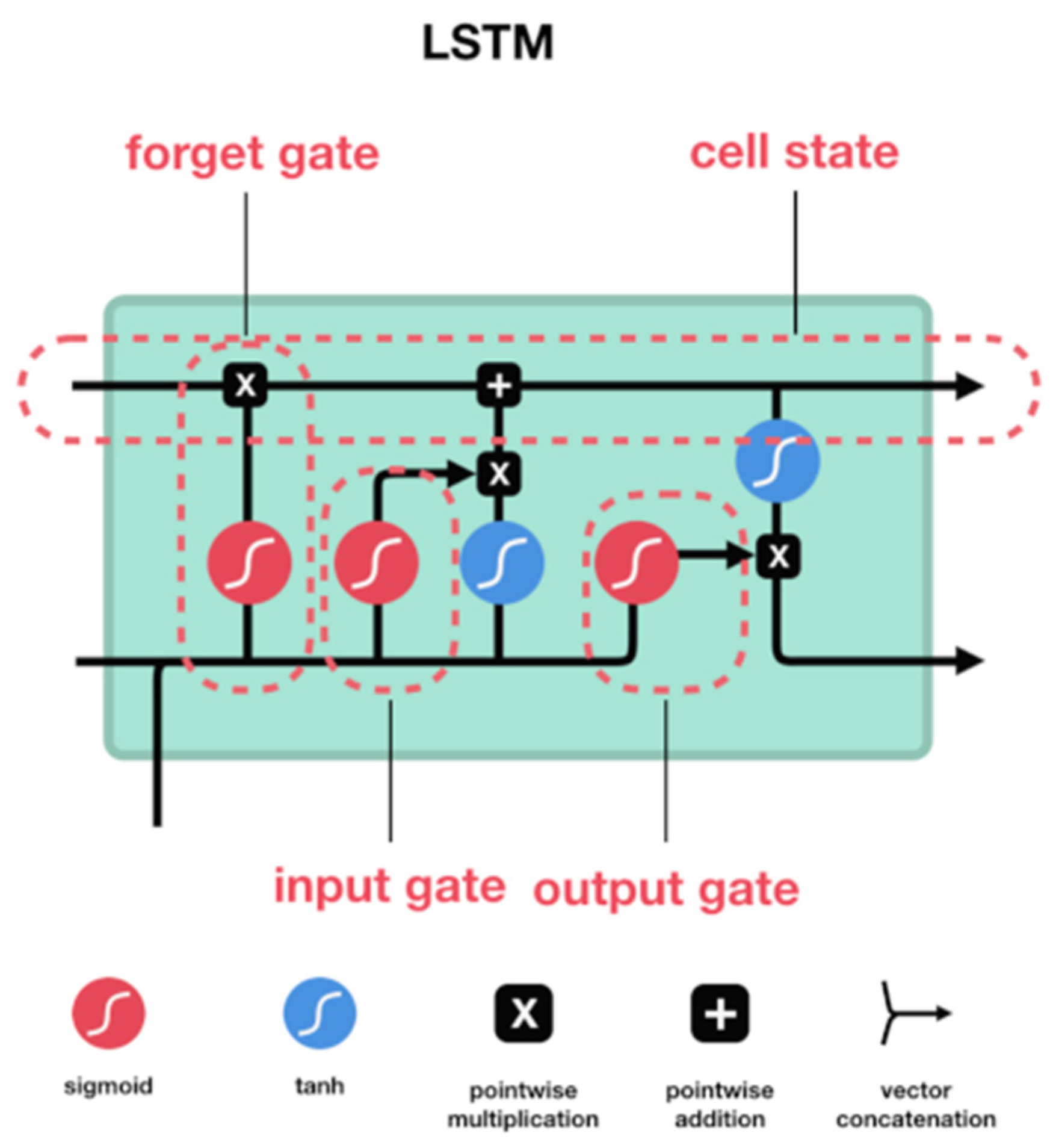
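To show what a convolutional layer does to a time series, the following sketch applies a 1-D convolution with "valid" padding, a bias and a ReLU, producing one feature map per filter. The use of 1-D convolutions over the input series and the two hand-picked kernels are assumptions for illustration; in the trained model the kernels are learned.

```python
import numpy as np


def conv1d_relu(x, kernels, bias):
    """Valid 1-D convolution (cross-correlation, as in deep learning libraries) + ReLU.

    x: (T,) input series; kernels: (F, k) filters; bias: (F,).
    Returns feature maps of shape (F, T - k + 1).
    """
    F, k = kernels.shape
    T = x.shape[0]
    # Stack the sliding windows of length k: shape (T - k + 1, k)
    windows = np.stack([x[t:t + k] for t in range(T - k + 1)])
    out = kernels @ windows.T + bias[:, None]
    return np.maximum(out, 0.0)


# Illustrative production-like series and two hand-picked filters
x = np.array([0.0, 1.0, 3.0, 4.0, 3.0, 1.0, 0.0])
kernels = np.array([[1 / 3, 1 / 3, 1 / 3],   # moving average (smoothing)
                    [-1.0, 0.0, 1.0]])       # finite difference (rising edge)
feats = conv1d_relu(x, kernels, np.zeros(2))
```

The first map is a smoothed version of the series, while the second responds only where the series rises, since the ReLU removes the falling part.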

3.4. LSTM Unit
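The standard LSTM cell update (input, forget and output gates plus a candidate cell state) can be sketched as a single NumPy time step. The stacked weight layout in the order input, forget, candidate, output (the order PyTorch uses for `weight_ih_l0`) and the small random initialisation are assumptions for illustration, not the paper's configuration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: (n_in,); h_prev, c_prev: (n_h,); W: (4*n_h, n_in); U: (4*n_h, n_h); b: (4*n_h,).
    Gate rows are stacked in the (assumed) order input, forget, candidate, output.
    """
    n_h = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:n_h])            # input gate
    f = sigmoid(z[n_h:2 * n_h])      # forget gate
    g = np.tanh(z[2 * n_h:3 * n_h])  # candidate cell state
    o = sigmoid(z[3 * n_h:])         # output gate
    c = f * c_prev + i * g           # new cell state
    h = o * np.tanh(c)               # new hidden state
    return h, c


def lstm_forward(xs, W, U, b, n_h):
    # Run the cell over a sequence and return the final hidden and cell states
    h, c = np.zeros(n_h), np.zeros(n_h)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h, c


rng = np.random.default_rng(0)
n_in, n_h, T = 3, 8, 24
W = 0.1 * rng.standard_normal((4 * n_h, n_in))
U = 0.1 * rng.standard_normal((4 * n_h, n_h))
b = np.zeros(4 * n_h)
xs = rng.standard_normal((T, n_in))   # e.g. 24 steps of CNN features
h, c = lstm_forward(xs, W, U, b, n_h)
```

The forget gate decides how much of the previous cell state survives each step, which is what lets the cell carry information across long stretches of the input sequence.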

3.5. Transformer

- Quadratic time computation: the main operation of the Transformer self-attention block, the canonical dot product, is computationally expensive and memory-intensive, because its cost grows quadratically with the input length.

- Very large memory for large inputs: long inputs require stacking more encoder/decoder layers, and the required memory is multiplied by the number of stacked layers. This limits the use of Transformers for processing long inputs, such as long time series.

- Low processing speed: the encoder/decoder structure generates its outputs sequentially, one step at a time, which increases the processing time.
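For reference, the canonical scaled dot-product attention of Vaswani et al. [22] computes softmax(QKᵀ/√d)V. The sketch below makes the first limitation concrete: the score matrix has L × L entries, so doubling the input length roughly quadruples the scores computed and stored per head and per layer. The sequence lengths mirror the input sizes used in the ablation tables; the feature dimension is an arbitrary choice.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Canonical attention: softmax(Q K^T / sqrt(d)) V, with Q, K: (L, d) and V: (L, d_v)."""
    d = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d)   # (L, L): grows quadratically with L
    weights = softmax(scores, axis=-1)
    return weights @ V, scores


rng = np.random.default_rng(0)
d = 16
for L in (336, 720, 1440):
    Q, K, V = (rng.standard_normal((L, d)) for _ in range(3))
    out, scores = scaled_dot_product_attention(Q, K, V)
    # float64 scores: 8 bytes per entry, for a single head in a single layer
    print(L, scores.size, f"{scores.nbytes / 2**20:.1f} MiB")
```

Stacking J such layers with several heads multiplies this footprint by J times the number of heads, which is the memory growth described in the second limitation.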

4. Experiment and Results

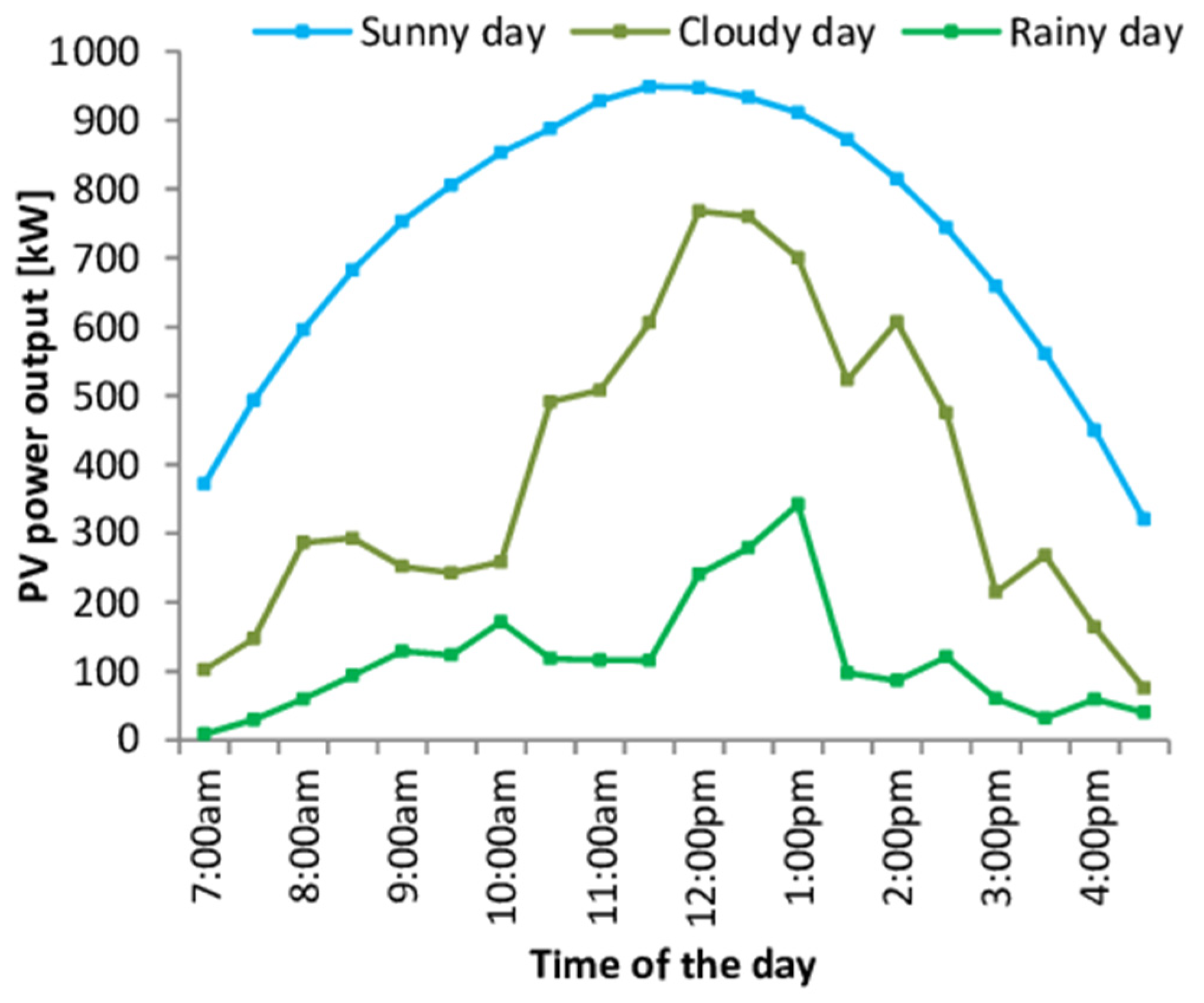

4.1. Dataset

4.2. Experimental Details

4.3. Evaluation Metrics

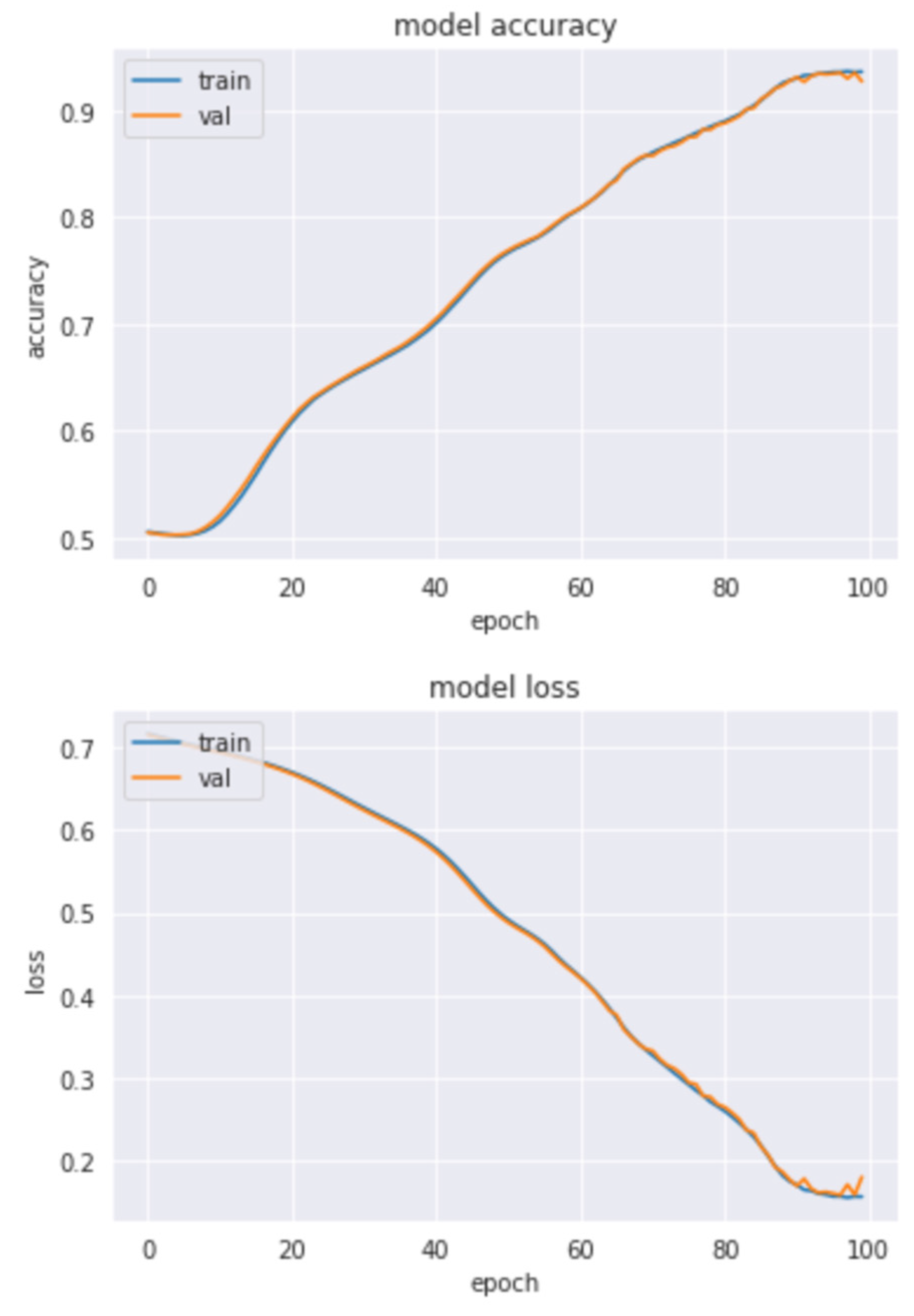

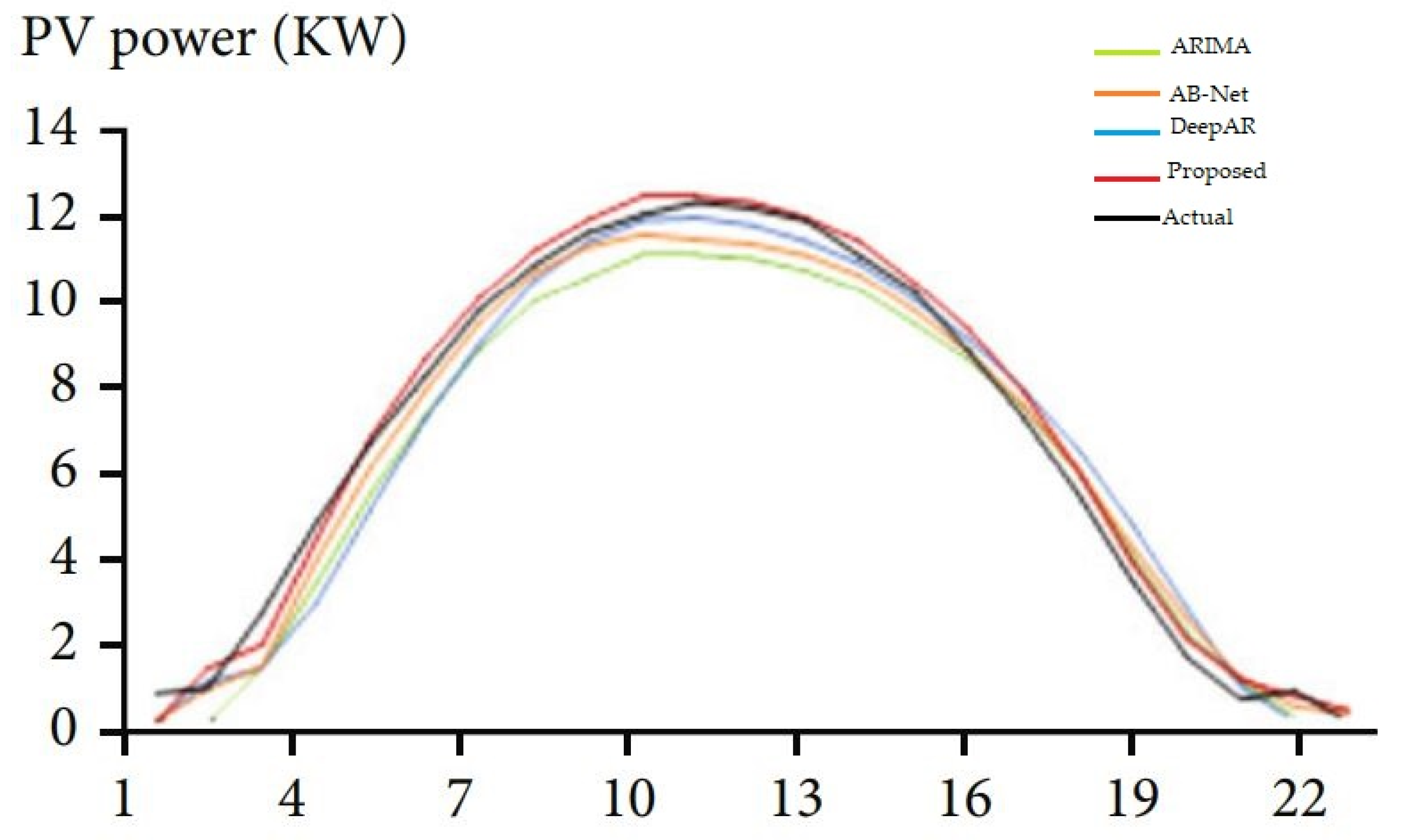
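The evaluation uses MAPE, MAE and RMSE (see the Nomenclature). Their standard definitions can be sketched as follows. Returning MAPE as a fraction rather than a percentage is an assumption, and samples with zero observed output (for example night-time hours) are skipped because MAPE is undefined for them; the sample values are illustrative.

```python
import numpy as np


def mae(y, y_hat):
    # Mean Absolute Error
    return np.mean(np.abs(y - y_hat))


def rmse(y, y_hat):
    # Root Mean Square Error
    return np.sqrt(np.mean((y - y_hat) ** 2))


def mape(y, y_hat):
    # Mean Absolute Percentage Error as a fraction; zero targets are skipped
    mask = y != 0
    return np.mean(np.abs((y[mask] - y_hat[mask]) / y[mask]))


y = np.array([0.0, 2.0, 4.0, 5.0])      # observed output (illustrative)
y_hat = np.array([0.1, 2.2, 3.6, 5.0])  # forecast (illustrative)
print(mae(y, y_hat), rmse(y, y_hat), mape(y, y_hat))
```

For any fixed set of errors, RMSE is never smaller than MAE, and it weights large errors more heavily.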

4.4. Results and Discussion

4.5. Ablation Study

4.6. Computational Efficiency

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Meaning |
|---|---|
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| AI | Artificial Intelligence |
| DKASC | Desert Knowledge Australia Solar Centre |
| RND | Rounding function |
| Q | Query |
| K | Key |
| V | Value |
| ARIMA | Autoregressive Integrated Moving Average |
| RMSE | Root Mean Square Error |
| MAPE | Mean Absolute Percentage Error |
| MAE | Mean Absolute Error |

References

- Renewable Power Generation Costs in 2019. Available online: https://www.irena.org/publications/2020/Jun/Renewable-Power-Costs-in-2019 (accessed on 15 December 2022).

- Hajji, Y.; Bouteraa, M.; Elcafsi, A.; Bournot, P. Green hydrogen leaking accidentally from a motor vehicle in confined space: A study on the effectiveness of a ventilation system. Int. J. Energy Res. 2021, 45, 18935–18943.

- IRENA (International Renewable Energy Agency). Future of Solar Photovoltaic: Deployment, Investment, Technology, Grid Integration and Socio-Economic Aspects; IRENA: Abu Dhabi, United Arab Emirates, 2019; pp. 1–73.

- Hajji, Y.; Bouteraa, M.; Bournot, P.; Bououdina, M. Assessment of an accidental hydrogen leak from a vehicle tank in a confined space. Int. J. Hydrogen Energy 2022, 47, 28710–28720.

- Variable Renewable Energy Forecasting: Integration into Electricity Grids and Markets: A Best Practice Guide. Available online: https://cleanenergysolutions.org/resources/variable-renewable-energy-forecasting-integration-electricity-grids-markets-best-practice (accessed on 15 December 2022).

- Ayachi, R.; Said, Y.; Atri, M. A Convolutional Neural Network to Perform Object Detection and Identification in Visual Large-Scale Data. Big Data 2021, 9, 41–52.

- Afif, M.; Ayachi, R.; Said, Y.; Atri, M. Deep Learning Based Application for Indoor Scene Recognition. Neural Process. Lett. 2020, 51, 2827–2837.

- Ayachi, R.; Afif, M.; Said, Y.; Ben Abdelaali, A. Real-Time Implementation of Traffic Signs Detection and Identification Application on Graphics Processing Units. Int. J. Pattern Recognit. Artif. Intell. 2021, 35, 2150024.

- Wang, K.; Qi, X.; Liu, H. Photovoltaic power forecasting based LSTM-Convolutional Network. Energy 2019, 189, 116225.

- Wang, H.; Lei, Z.; Zhang, X.; Zhou, B.; Peng, J. A review of deep learning for renewable energy forecasting. Energy Convers. Manag. 2019, 198, 111799.

- Ali, M.; Prasad, R. Significant wave height forecasting via an extreme learning machine model integrated with improved complete ensemble empirical mode decomposition. Renew. Sustain. Energy Rev. 2019, 104, 281–295.

- Deo, R.C.; Wen, X.; Qi, F. A wavelet-coupled support vector machine model for forecasting global incident solar radiation using limited meteorological dataset. Appl. Energy 2016, 168, 568–593.

- Sharifian, A.; Ghadi, M.J.; Ghavidel, S.; Li, L.; Zhang, J. A new method based on Type-2 fuzzy neural network for accurate wind power forecasting under uncertain data. Renew. Energy 2018, 120, 220–230.

- Sharif, A.; Noureen, S.; Roy, V.; Subburaj, V.; Bayne, S.; Macfie, J. Forecasting of total daily solar energy generation using ARIMA: A case study. In Proceedings of the 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 114–119.

- Feng, Q.; Chen, L.; Chen, C.L.P.; Guo, L. Deep learning. High-Dimensional Fuzzy Clustering. IEEE Trans. Fuzzy Syst. 2020, 28, 1420–1433.

- Afif, M.; Ayachi, R.; Said, Y.; Atri, M. An evaluation of EfficientDet for object detection used for indoor robots assistance navigation. J. Real Time Image Process. 2022, 19, 651–661.

- Ayachi, R.; Afif, M.; Said, Y.; Ben Abdelali, A. Drivers Fatigue Detection Using EfficientDet In Advanced Driver Assistance Systems. In Proceedings of the 18th International Multi-Conference on Systems, Signals & Devices, Monastir, Tunisia, 22–25 March 2021; pp. 738–742.

- Ayoobi, N.; Sharifrazi, D.; Alizadehsani, R.; Shoeibi, A.; Gorriz, J.M.; Moosaei, H.; Khosravi, A.; Nahavandi, S.; Chofreh, A.G.; Goni, F.A.; et al. Time series forecasting of new cases and new deaths rate for COVID-19 using deep learning methods. Results Phys. 2021, 27, 104495.

- El Alani, O.; Abraim, M.; Ghennioui, H.; Ghennioui, A.; Ikenbi, I.; Dahr, F.-E. Short term solar irradiance forecasting using sky images based on a hybrid CNN–MLP model. Energy Rep. 2021, 7, 888–900.

- Harrou, F.; Kadri, F.; Sun, Y. Forecasting of Photovoltaic Solar Power Production Using LSTM Approach. In Advanced Statistical Modeling, Forecasting, and Fault Detection in Renewable Energy Systems; Intechopen: London, UK, 2020.

- Saffari, M.; Khodayar, M.; Jalali, S.M.J.; Shafie-Khah, M.; Catalao, J.P.S. Deep Convolutional Graph Rough Variational Auto-Encoder for Short-Term Photovoltaic Power Forecasting. In Proceedings of the 2021 International Conference on Smart Energy Systems and Technologies (SEST), Vaasa, Finland, 6–8 September 2021; pp. 1–6.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Processing Syst. 2017, 30, 1–11.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, preprint. arXiv:2010.11929.

- Kohonen, T. The self-organizing map. Proc. IEEE 1990, 78, 1464–1480.

- Fingrid Solar Power Generation Search and Download Data. Available online: https://data.fingrid.fi/en/dataset/solar-power-generation-forecast-updated-every-hour/resource/8b6b8bff-0181-48e1-aa86-1af97f81ce5a (accessed on 15 September 2022).

- Khan, N.; Ullah, F.U.M.; Haq, I.U.; Khan, S.U.; Lee, M.Y.; Baik, S.W. AB-Net: A Novel Deep Learning Assisted Framework for Renewable Energy Generation Forecasting. Mathematics 2021, 9, 2456.

- Qu, Y.; Xu, J.; Sun, Y.; Liu, D. A temporal distributed hybrid deep learning model for day-ahead distributed PV power forecasting. Appl. Energy 2021, 304, 117704.

- Agga, A.; Abbou, A.; Labbadi, M.; El Houm, Y.; Ali, I.H.O. CNN-LSTM: An efficient hybrid deep learning architecture for predicting short-term photovoltaic power production. Electr. Power Syst. Res. 2022, 208, 107908.

- Rai, A.; Shrivastava, A.; Jana, K.C. A robust auto encoder-gated recurrent unit (AE-GRU) based deep learning approach for short term solar power forecasting. Optik 2022, 252, 168515.

- Sabri, M.; El Hassouni, M. A Novel Deep Learning Approach for Short Term Photovoltaic Power Forecasting Based on GRU-CNN Model. E3S Web Conf. 2022, 336, 00064.

- Zheng, J.; Zhang, H.; Dai, Y.; Wang, B.; Zheng, T.; Liao, Q.; Liang, Y.; Zhang, F.; Song, X. Time series prediction for output of multi-region solar power plants. Appl. Energy 2020, 257, 114001.

- Kohonen, T. Essentials of the self-organizing map. Neural Netw. 2013, 37, 52–65.

- Child, R.; Gray, S.; Radford, A.; Sutskever, I. Generating long sequences with sparse transformers. arXiv 2019, preprint. arXiv:1904.10509.

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Processing Syst. 2019, 32, 1–11.

- Joyce, J.M. Kullback-Leibler divergence. In International Encyclopedia of Statistical Science; Springer: Berlin/Heidelberg, Germany, 2011; pp. 720–722.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, preprint. arXiv:1511.07122.

- Salinas, D.; Flunkert, V.; Gasthaus, J.; Januschowski, T. DeepAR: Probabilistic forecasting with auto-regressive recurrent networks. Int. J. Forecast. 2020, 36, 1181–1191.

- Taylor, S.J.; Letham, B. Forecasting at scale. Am. Stat. 2018, 72, 37–45.

| Model | MAPE | MAE | RMSE |
|---|---|---|---|
| CNN-LSTM-Transformer | 0.041 | 0.393 | 0.344 |
| CNN-LSTM-Transformer * | 0.048 | 0.399 | 0.378 |
| AB-Net [26] | 0.052 | 0.436 | 0.486 |
| GRU-CNN [28] | 0.053 | 0.443 | 0.461 |
| ARIMA [14] | 0.073 | 0.764 | 0.879 |
| DeepAR [37] | 0.051 | 0.465 | 0.478 |
| Prophet [38] | 0.065 | 0.524 | 0.598 |

| Prediction Length | | 336 | 336 | 336 | 720 | 720 | 720 |
|---|---|---|---|---|---|---|---|
| Input Size | | 336 | 720 | 1440 | 720 | 1440 | 2880 |
| CNN-LSTM-Transformer | RMSE | 0.249 | 0.225 | 0.216 | 0.271 | 0.261 | 0.257 |
| | MAE | 0.393 | 0.384 | 0.376 | 0.435 | 0.431 | 0.422 |
| CNN-LSTM-Transformer * | RMSE | 0.251 | 0.234 | X | 0.285 | X | X |
| | MAE | 0.399 | 0.398 | X | 0.442 | X | X |

| Prediction Length | | 336 | 336 | 336 | 480 | 480 | 480 |
|---|---|---|---|---|---|---|---|
| Encoder Input | | 336 | 480 | 720 | 336 | 480 | 960 |
| CNN-LSTM-Transformer * (with distilling) | RMSE | 0.249 | 0.208 | 0.225 | 0.197 | 0.243 | 0.192 |
| | MAE | 0.393 | 0.385 | 0.384 | 0.388 | 0.392 | 0.377 |
| CNN-LSTM-Transformer * (without distilling) | RMSE | 0.229 | 0.215 | X | 0.224 | X | X |
| | MAE | 0.391 | 0.377 | X | 0.381 | X | X |
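The distilling operation is not detailed in this excerpt. Following the self-attention distilling step of the Informer model (an assumption about the authors' implementation), each step applies a 1-D convolution, an ELU activation and max pooling with stride 2, which halves the sequence length passed to the next encoder layer. This is consistent with the ablation above, where the longer encoder inputs (720 for the 336-step horizon; 480 and 960 for the 480-step horizon) are reported only with distilling. The kernel sizes, zero padding and random weights are illustrative, and batch normalisation is omitted.

```python
import numpy as np


def elu(x, alpha=1.0):
    # ELU activation; np.minimum avoids overflow in exp for large positive inputs
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0)) - 1))


def distil(X, W, b):
    """One distilling step: Conv1d (kernel 3, 'same' zero padding) -> ELU -> MaxPool(3, stride 2, pad 1).

    X: (L, d) encoder output; W: (3, d, d) conv weights; b: (d,). Returns (ceil(L/2), d).
    """
    L, d = X.shape
    Xp = np.pad(X, ((1, 1), (0, 0)))                         # zero-pad one step on each side
    conv = sum(Xp[k:k + L] @ W[k] for k in range(3)) + b      # (L, d)
    act = elu(conv)
    Ap = np.pad(act, ((1, 1), (0, 0)), constant_values=-np.inf)  # -inf padding for max pooling
    out_len = (L - 1) // 2 + 1
    return np.stack([Ap[2 * t:2 * t + 3].max(axis=0) for t in range(out_len)])


rng = np.random.default_rng(0)
d = 8
W = 0.1 * rng.standard_normal((3, d, d))
b = np.zeros(d)
X = rng.standard_normal((720, d))
for layer in range(3):
    X = distil(X, W, b)
    print(layer, X.shape)
```

Because every step halves the length, the total sequence length processed across all stacked encoder layers stays below twice the input length, instead of growing linearly with the number of layers.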

| Prediction Length | | 336 | 336 | 336 | 480 | 480 | 480 |
|---|---|---|---|---|---|---|---|
| Prediction Offset | | +0 | +12 | +24 | +0 | +48 | +96 |
| CNN-LSTM-Transformer * (with generative decoder) | RMSE | 0.207 | 0.209 | 0.211 | 0.198 | 0.203 | 0.208 |
| | MAE | 0.385 | 0.387 | 0.393 | 0.390 | 0.392 | 0.401 |
| CNN-LSTM-Transformer * (without generative decoder) | RMSE | 0.209 | X | X | 0.392 | X | X |
| | MAE | 0.393 | X | X | 0.484 | X | X |
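The generative decoder is described as producing the whole forecast in one forward pass; in the ablation above, the variant without it is reported only at offset +0. In the Informer-style formulation assumed here, the decoder input concatenates a "start token" (the most recent known values) with zero placeholders for the forecast horizon, so all horizon steps are predicted at once. The sketch contrasts this with step-by-step decoding, where every prediction is fed back as input. The toy models `biased_step` and `toy_decoder` are hypothetical and only illustrate how feedback compounds a small per-step bias; they say nothing about the accuracy of the actual model.

```python
import numpy as np


def build_decoder_input(history, label_len, pred_len):
    """Start token (last `label_len` known steps) followed by `pred_len` zero placeholders."""
    start_token = history[-label_len:]
    placeholders = np.zeros((pred_len,) + history.shape[1:])
    return np.concatenate([start_token, placeholders], axis=0)


def generative_forecast(decoder, history, label_len, pred_len):
    # A single forward pass; the last pred_len outputs are the forecast
    out = decoder(build_decoder_input(history, label_len, pred_len))
    return out[-pred_len:]


def autoregressive_forecast(one_step, history, pred_len):
    # Dynamic decoding for comparison: pred_len passes, each output fed back as input,
    # so an error made at one step is carried into every later step
    seq = list(history)
    for _ in range(pred_len):
        seq.append(one_step(np.array(seq)))
    return np.array(seq[len(history):])


def biased_step(seq):
    # Hypothetical one-step model: repeats the last value with a 0.01 bias
    return seq[-1] + 0.01


def toy_decoder(z):
    # Hypothetical one-pass decoder with the same 0.01 bias on every output
    return np.full_like(z, 1.0 + 0.01)


history = np.ones((10, 1))   # flat series, so the true future is 1.0
ar = autoregressive_forecast(biased_step, history, pred_len=24)
gen = generative_forecast(toy_decoder, history, label_len=4, pred_len=24)
print(ar[-1, 0], gen[-1, 0])
```

After 24 steps the fed-back bias has grown to 0.24 in the step-by-step forecast, while the one-pass forecast keeps the per-step error it started with; step-by-step decoding also needs 24 forward passes instead of one.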

| Prediction Length | | 336 | 336 | 336 |
|---|---|---|---|---|
| Input Size | | 48 | 96 | 168 |
| CNN-LSTM-Transformer | Training time (hours) | 3.2 | 5.1 | 6.3 |
| | Inference time (hours) | 0.1 | 0.13 | 0.17 |
| | Memory (GB) | 2.1 | 2.3 | 2.5 |
| CNN-LSTM-Transformer * | Training time (hours) | 7.4 | 8.1 | 9.3 |
| | Inference time (hours) | 0.3 | 0.38 | 0.42 |
| | Memory (GB) | 3.5 | 3.7 | 3.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Al-Ali, E.M.; Hajji, Y.; Said, Y.; Hleili, M.; Alanzi, A.M.; Laatar, A.H.; Atri, M. Solar Energy Production Forecasting Based on a Hybrid CNN-LSTM-Transformer Model. Mathematics 2023, 11, 676. https://doi.org/10.3390/math11030676
