Abstract

Textual documents serve as representations of discussions on a variety of subjects. These discussions can vary in length and may encompass a range of events or factual information. Present trends in constructing knowledge bases primarily emphasize fact-based common sense reasoning, often overlooking the temporal dimension of events. Given the widespread presence of time-related information, addressing this temporal aspect could potentially enhance the quality of common-sense reasoning within existing knowledge graphs. In this comprehensive survey, we aim to identify and evaluate the key tasks involved in constructing temporal knowledge graphs centered around events. These tasks can be categorized into three main components: (a) event extraction, (b) the extraction of temporal relationships and attributes, and (c) the creation of event-based knowledge graphs and timelines. Our systematic review focuses on the examination of available datasets and language technologies for addressing these tasks. An in-depth comparison of various approaches reveals that the most promising results are achieved by employing state-of-the-art models leveraging large pre-trained language models. Despite the existence of multiple datasets, a noticeable gap exists in the availability of annotated data that could facilitate the development of comprehensive end-to-end models. Drawing insights from our findings, we engage in a discussion and propose four future directions for research in this domain. These directions encompass (a) the integration of pre-existing knowledge, (b) the development of end-to-end systems for constructing event-centric knowledge graphs, (c) the enhancement of knowledge graphs with event-centric information, and (d) the prediction of absolute temporal attributes.

MSC:

68T35

1. Introduction

Understanding the discourse described in documents is a critical task for many AI systems that need to comprehend text. This largely comes down to grasping the sequence of events that occur in a document and the people or things involved in those events. One particularly important aspect of events is their timing, which allows us to arrange the events in the order in which they happened. The most common way to represent this information is by using knowledge graphs. Most research on creating knowledge graphs focuses on information about entities. This means that the knowledge graphs store facts about entities and how they are connected. Some well-known knowledge graphs that contain this kind of information are DBpedia [1], Wikidata [2], and YAGO [3]. These knowledge graphs often also include some information about events, especially historical events. For instance, Wang et al. added information about the timing of historical events to the YAGO knowledge base [4]. Such knowledge graphs are predominantly centered around entities and their attributes. However, in many real-world use cases, like clinical dead-end prediction or automatic timeline reconstruction, it can be more important to understand the discourse in text. For understanding such discourse, a knowledge graph centered around events is better suited. In this type of graph, we represent events as nodes and the connections between events as edges. These connections mostly show the order in which events happened or how one event caused another. An interesting knowledge graph that contains common-sense information about events and entities is ATOMIC 2020 [5]. This graph contains common-sense information about how events are related. These relations cover more detailed information than just showing which event caused another event. The authors of the ATOMIC knowledge base also created a model that can predict these common-sense connections between events and entities that are not in the knowledge base.
Besides events and their connections, knowledge graphs also often include other things that describe the time-related aspects of events.

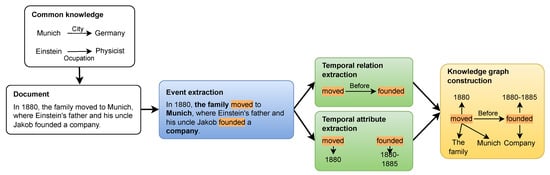

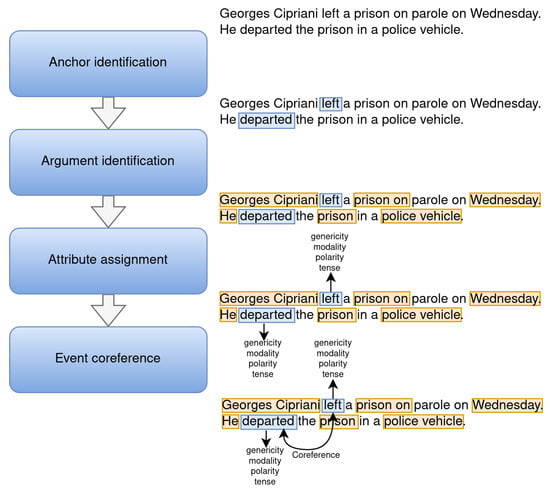

Building knowledge graphs focused on events typically involves a series of smaller tasks. A general overview of how a graph is constructed is shown in Figure 1. There are three main steps: (1) figuring out what the events are and gathering information about them, (2) finding out how events are related in terms of time and cause and effect, and (3) creating a knowledge graph from all this information. Some methods combine the steps of figuring out events and their relationships into one step to make a more straightforward process. This is most common in the medical field because events there usually do not need additional details. Some models also use extra knowledge when figuring out the timing of events. This extra information can make the models work better in some cases. Instead of using a knowledge graph, some previous studies represent events in timelines, which are simpler but can still give a good idea of how the events occurred in a document.

Figure 1.

General pipeline for event-centric temporal knowledge graph construction.
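To make the three steps more concrete, they can be chained as plain functions. The following Python sketch is a toy illustration under strong assumptions, not a reproduction of any of the surveyed systems: the trigger lexicon `TRIGGERS` and the functions `extract_events`, `extract_relations`, and `build_graph` are hypothetical placeholders, and the relation step naively assumes that textual order equals temporal order.

```python
import re
from dataclasses import dataclass, field

# Hypothetical trigger lexicon standing in for a learned event extractor.
TRIGGERS = {"admitted", "diagnosed", "treated", "discharged"}


@dataclass
class Event:
    eid: str
    trigger: str
    position: int  # token index of the trigger word
    attributes: dict = field(default_factory=dict)


def extract_events(text):
    """Step 1: detect event mentions (here: naive lexicon lookup)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    events = []
    for pos, tok in enumerate(tokens):
        if tok in TRIGGERS:
            events.append(Event(eid=f"e{len(events) + 1}", trigger=tok, position=pos))
    return events


def extract_relations(events):
    """Step 2: assign temporal relations (here: textual order => BEFORE)."""
    ordered = sorted(events, key=lambda e: e.position)
    return [(a.eid, "BEFORE", b.eid) for a, b in zip(ordered, ordered[1:])]


def build_graph(events, relations):
    """Step 3: assemble events and relations into an adjacency list."""
    graph = {e.eid: [] for e in events}
    for head, rel, tail in relations:
        graph[head].append((rel, tail))
    return graph


text = "The patient was admitted, diagnosed with pneumonia, treated and discharged."
events = extract_events(text)
graph = build_graph(events, extract_relations(events))
print(graph)
# {'e1': [('BEFORE', 'e2')], 'e2': [('BEFORE', 'e3')], 'e3': [('BEFORE', 'e4')], 'e4': []}
```

Textual order often differs from temporal order (for example, in flashbacks or in clinical histories), which is why real systems replace the heuristic in step 2 with learned relation classifiers.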

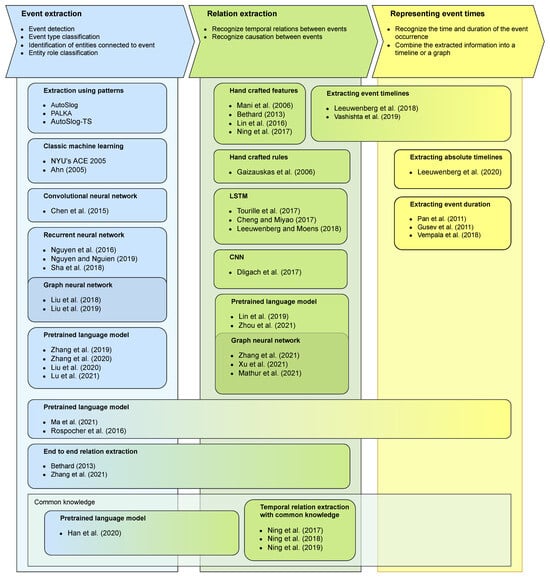

In this survey, we provide an overview of the primary methods employed to address the tasks essential for creating knowledge graphs centered around events using unstructured documents. Figure 2 illustrates the systems discussed in our paper, positioning them based on their key characteristics. We assess these systems according to whether they engage in event extraction, temporal relation extraction, event duration identification, or event timeline generation. Notably, only two of the systems we present encompass the complete process, spanning from event extraction to knowledge base construction. We cluster the systems into smaller groups, based on the machine learning techniques employed (see Figure 2).

We introduce systems tailored to extract events and their associated attributes, temporal relationships, and additional temporal characteristics, ultimately culminating in the development of event-centric knowledge graphs or timelines. A comprehensive comparative analysis of all the systems featured herein is presented in Table 1. Our overarching objective is to guide future research endeavors toward the automated construction of temporal event-centric knowledge graphs. We achieve this by delineating existing work in this domain and discussing the remaining challenges and opportunities that need to be addressed to enhance the efficacy of such systems.

Table 1.

Comparison of features from all of the described projects. EE: event extraction, EA: event attribute extraction, TR: temporal relation extraction, ED: event duration recognition, TC: timeline creation, add: additional attributes, SY: syntactic dependencies, CL: cross-lingual model, CK: common knowledge.


| Paper | EE | EA | TR | ED | TC | Add. | Domain |
|---|---|---|---|---|---|---|---|
| Riloff (1993) [6] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Riloff (1995) [7] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Kim and Moldovan (1995) [8] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Grishman et al. (2005) [9] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Ahn (2006) [10] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Yang and Mitchell (2016) [11] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Chen et al. (2015) [12] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Nguyen et al. (2016) [13] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Sha et al. (2018) [14] | ✓ | ✓ | ✗ | ✗ | ✗ | SY | News |
| Liu et al. (2018) [15] | ✓ | ✓ | ✗ | ✗ | ✗ | SY | News |
| Liu et al. (2019) [16] | ✓ | ✓ | ✗ | ✗ | ✗ | SY, CL | News |
| Zhang et al. (2019) [17] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Zhang et al. (2020) [18] | ✗ | ✓ | ✗ | ✗ | ✗ | | News |
| Ji (2009) [19] | ✓ | ✓ | ✗ | ✗ | ✗ | CL | News |
| Zhu et al. (2014) [20] | ✓ | ✓ | ✗ | ✗ | ✗ | CL | News |
| Chen et al. (2009) [21] | ✓ | ✓ | ✗ | ✗ | ✗ | CL | News |
| Liu et al. (2020) [22] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Lu et al. (2021) [23] | ✓ | ✓ | ✗ | ✗ | ✗ | | News |
| Gaizauskas et al. (2006) [24] | ✗ | ✗ | ✓ | ✗ | ✗ | | News |
| Mani et al. (2006) [25] | ✗ | ✗ | ✓ | ✗ | ✗ | | News |
| Bethard (2013) [26] | ✓ | ✗ | ✓ | ✗ | ✗ | | News |
| Lin et al. (2016) [27] | ✗ | ✗ | ✓ | ✗ | ✗ | | Medical |
| Ning et al. (2017) [28] | ✗ | ✗ | ✓ | ✗ | ✗ | CK | News |
| Tourille et al. (2017) [29] | ✗ | ✗ | ✓ | ✗ | ✗ | | Medical |
| Dligach et al. (2017) [30] | ✗ | ✗ | ✓ | ✗ | ✗ | | Medical |
| Lin et al. (2019) [31] | ✗ | ✗ | ✓ | ✗ | ✗ | | Medical |
| Cheng and Miyao (2017) [32] | ✗ | ✗ | ✓ | ✗ | ✗ | SY | News |
| Leeuwenberg and Moens (2018) [33] | ✗ | ✗ | ✓ | ✓ | ✓ | | News |
| Zhou et al. (2021) [34] | ✗ | ✗ | ✓ | ✗ | ✗ | CL | Medical |
| Zhang et al. (2021) [35] | ✓ | ✗ | ✓ | ✗ | ✗ | SY | News |
| Xu et al. (2021) [36] | ✗ | ✗ | ✓ | ✗ | ✗ | SY | News |
| Mathur et al. (2021) [37] | ✗ | ✗ | ✓ | ✗ | ✗ | SY | News |
| Ning et al. (2018) [38] | ✗ | ✗ | ✓ | ✗ | ✗ | CK | News |
| Ning et al. (2019) [39] | ✗ | ✗ | ✓ | ✗ | ✗ | CK | News |
| Han et al. (2020) [40] | ✓ | ✗ | ✓ | ✗ | ✗ | CK | News, Medical |
| Leeuwenberg and Moens (2020) [41] | ✗ | ✗ | ✗ | ✓ | ✓ | | Medical |
| Vashishtha et al. (2019) [42] | ✗ | ✗ | ✓ | ✓ | ✓ | | News |
| Pan et al. (2011) [43] | ✗ | ✗ | ✗ | ✓ | ✗ | | News |
| Gusev et al. (2011) [44] | ✗ | ✗ | ✗ | ✓ | ✗ | | News |
| Vempala et al. (2018) [45] | ✗ | ✗ | ✗ | ✓ | ✗ | | News |
| Rospocher et al. (2016) [46] | ✓ | ✓ | ✓ | ✗ | ✓ | | News |
| Ma et al. (2021) [47] | ✓ | ✓ | ✓ | ✓ | ✓ | | News |
| Jindal and Roth (2013) [48] | ✓ | ✗ | ✗ | ✗ | ✗ | | Medical |

Figure 2.

The systems performing main tasks required for event-centric knowledge graph construction [6,7,8,9,10,12,13,14,15,16,17,18,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,49].

The main contributions of this work are summarized as follows:

- (a) Literature review for all tasks related to temporal event knowledge graph construction. We identify the tasks required for the automatic construction of event-centric temporal knowledge graphs and review research dealing with each of them. We compare different approaches and identify the most successful model designs.

- (b) Identification of emerging advancements. We identify the directions in which systems for each of the tasks are likely to evolve in the future. We also give our view on which approaches seem the most promising for future systems.

- (c) Identification and proposal of open research problems. We summarize each of the areas and highlight promising future research directions. We also outline the main criteria that future work should meet.

We identified several surveys that address general relation extraction [50,51,52,53,54,55]. In contrast, there are fewer surveys dedicated to the topics of event extraction [56] and temporal relation extraction [57,58]. Additionally, we found surveys that explore knowledge graphs [59,60].

To the best of our knowledge, our survey paper represents the first comprehensive examination of the entire process involved in constructing temporal event-centric knowledge graphs. While previous surveys have delved into individual aspects of this process, none have provided a comprehensive overview of all the challenges that must be addressed to facilitate the automated creation of such knowledge graphs.

The remainder of this paper is structured as follows: Section 2 outlines the motivation for this work. In Section 3, we describe the methodology employed in conducting our survey. Section 4 details the existing standards for representing events, their relationships, and associated properties, and presents datasets containing temporal information about events in documents. Section 5 offers an overview and comparison of existing models for event extraction. In Section 6, we present models designed for the extraction of temporal relations. Subsequently, in Section 7, we introduce existing systems capable of constructing event timelines and knowledge graphs. Finally, Sections 8 and 9 discuss prospects for future research in this domain and provide concluding remarks on the paper’s findings.

Formal Definition of an Event-Centric Knowledge Graph

An event-centric temporal knowledge graph is a structured representation of knowledge that models and organizes information about events, their attributes, and their temporal relationships within a domain. It is composed of a directed graph consisting of nodes and edges, where nodes represent events and their associated attributes, and edges represent temporal relationships between events. The knowledge graph is designed to capture the temporal nature of events, allowing for the representation of chronological sequences, durations, and temporal overlaps [61].

We define an event-centric temporal knowledge graph with the following components:

- Nodes: The knowledge graph comprises a set of nodes $V = \{v_1, \dots, v_n\}$ representing individual events. Each node $v_i \in V$ corresponds to a specific event.

- Attributes: Each node $v_i$ contains a set of attributes associated with the event. We denote this set of attributes as $A_i$.

- Edges: The knowledge graph contains a set of directed edges between nodes $v_i$ and $v_j$, where each edge represents a temporal relation. We denote the set of edges as $E \subseteq V \times R \times V$, where each edge $(v_i, r, v_j) \in E$ is a triplet of two nodes and a relation $r$ from the set of valid relations $R$, which differs between domains and use cases.

Event-centric temporal knowledge graphs have a number of properties that need to hold for a valid graph:

- Temporal consistency: A temporal knowledge graph ensures consistency between temporal relations so that events with temporal relations form valid timelines.

- Granularity: An event-centric knowledge graph needs to accommodate different levels of event granularities. An event might be a specific instance that occurred at a specific point in time or a general concept that can occur in multiple situations.
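The definition and the temporal consistency property can be illustrated with a small data structure. The sketch below is our own illustrative assumption rather than a prescribed formalism: it restricts R to the hypothetical set {BEFORE, AFTER, SIMULTANEOUS}, stores each edge as a (head, relation, tail) triplet, and checks consistency by merging simultaneous events, rewriting AFTER edges as BEFORE edges, and testing the resulting precedence graph for cycles (a cycle such as "a before b before a" cannot form a valid timeline). The granularity property is not modelled.

```python
from dataclasses import dataclass, field

VALID_RELATIONS = {"BEFORE", "AFTER", "SIMULTANEOUS"}  # assumed relation set R


@dataclass
class TemporalKG:
    attributes: dict = field(default_factory=dict)  # event node -> attribute dict
    edges: set = field(default_factory=set)         # (head, relation, tail) triplets

    def add_event(self, node, **attrs):
        self.attributes[node] = attrs

    def add_relation(self, head, rel, tail):
        if rel not in VALID_RELATIONS:
            raise ValueError(f"unknown relation: {rel}")
        if head not in self.attributes or tail not in self.attributes:
            raise KeyError("both events must be added before relating them")
        self.edges.add((head, rel, tail))

    def is_consistent(self):
        # Union-find: events linked by SIMULTANEOUS collapse into one time point.
        parent = {n: n for n in self.attributes}

        def find(n):
            while parent[n] != n:
                parent[n] = parent[parent[n]]
                n = parent[n]
            return n

        for h, r, t in self.edges:
            if r == "SIMULTANEOUS":
                parent[find(h)] = find(t)

        # Normalise every BEFORE/AFTER edge to an "earlier -> later" edge.
        succ = {find(n): set() for n in self.attributes}
        for h, r, t in self.edges:
            if r == "BEFORE":
                a, b = find(h), find(t)
            elif r == "AFTER":
                a, b = find(t), find(h)
            else:
                continue
            if a == b:  # "x before y" although x and y are simultaneous
                return False
            succ[a].add(b)

        # Kahn's algorithm: if some node is never freed, the graph has a cycle.
        indeg = {n: 0 for n in succ}
        for n in succ:
            for m in succ[n]:
                indeg[m] += 1
        queue = [n for n, d in indeg.items() if d == 0]
        visited = 0
        while queue:
            n = queue.pop()
            visited += 1
            for m in succ[n]:
                indeg[m] -= 1
                if indeg[m] == 0:
                    queue.append(m)
        return visited == len(succ)


kg = TemporalKG()
for name in ("admission", "surgery", "discharge"):
    kg.add_event(name, type="OCCURRENCE")
kg.add_relation("admission", "BEFORE", "surgery")
kg.add_relation("discharge", "AFTER", "surgery")
print(kg.is_consistent())  # True
kg.add_relation("discharge", "BEFORE", "admission")
print(kg.is_consistent())  # False
```

Reducing the check to cycle detection is a design choice that only works for point-like relations; richer interval relations would require a more general constraint propagation step.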

2. Motivation

In the rapidly evolving landscape of information, the ability to extract temporal sequences from unstructured documents holds immense potential for unlocking valuable insights. This survey explores innovative approaches that empower the recognition of temporal patterns, laying the groundwork for constructing event timelines and unraveling the dynamics of how events unfold. Extracting temporal information allows us to create a structured representation of the chronological order in which events occur. This chronological perspective is invaluable for understanding the evolution of various phenomena and gaining insights into the underlying mechanisms.

The extraction of temporal sequences facilitates the identification of patterns and trends within corpora. By discerning the temporal relationships between events, we can unveil hidden correlations, enabling us to make informed predictions and strategic decisions. This capability is useful in diverse domains, such as customer behavior analysis, where the identification of patterns can be harnessed for applications like recommender systems [62].

Temporal knowledge graphs are also a powerful tool with interdisciplinary applications. Beyond the realms of computer science, they find utility in social sciences, history, disaster management, and beyond. These knowledge graphs serve as dynamic repositories of temporal information, fostering a deeper understanding of complex relationships and interactions across diverse domains. An example of such use is trend analysis as demonstrated by Bonifazi et al. [63]. Their exploration of time patterns in TikTok trends not only sheds light on emerging trends but also helps discern potentially harmful trends. This analytical approach is instrumental in staying ahead of the curve in dynamic environments. Recent developments in sentiment scope analysis, as highlighted by Bonifazi et al. [64], showcase another facet of the importance of temporal sequence extraction. Understanding the temporal scope of a user’s sentiment on a topic in a social platform enables a nuanced understanding of evolving opinions and attitudes over time.

The extraction of temporal sequences from documents transcends disciplinary boundaries, offering a versatile toolkit for understanding, predicting, and influencing various phenomena. From constructing event timelines to uncovering hidden patterns and trends, the applications are diverse and impactful, making this field a focal point of research and development across multiple domains.

3. Methodology

The objective of this review is to present the primary methodologies for automating the construction of event-centric temporal knowledge graphs. We identified three main steps required for constructing an event-centric knowledge graph: event extraction, temporal relation and attribute extraction, and knowledge graph or timeline construction. These tasks were determined through an analysis of existing models that are capable of automatically generating event-centric temporal graphs or timelines.

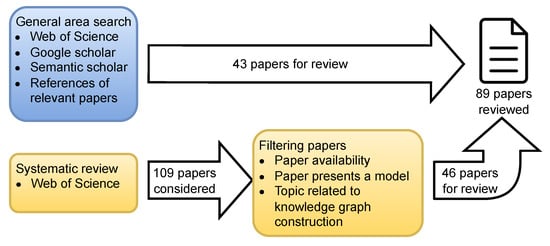

Our selection of papers for inclusion in this survey adhered to a systematic methodology, as illustrated in Figure 3. Initially, we queried the scientific search engines Google Scholar, Semantic Scholar, and Web of Science using general queries pertaining to the creation of event-centric knowledge graphs. Subsequently, we scrutinized the top search results to gain a foundational understanding of the field. Additionally, we considered papers that were referenced in the papers we identified as pertinent to our survey.

Figure 3.

Flow diagram of our systematic review process.

Upon acquiring a comprehensive understanding of the tasks associated with extracting temporal information about events and the primary strategies for addressing these tasks, we systematically reviewed papers related to the identified tasks. To accomplish this, we used the Web of Science database, executing a distinct query for each task. For event extraction, we searched for papers featuring the terms “event” and “extraction” in their titles. Given that many returned papers were not directly relevant, we refined the query by requiring that the paper also include the phrase “natural language processing”. This modification ensured that the majority of retrieved papers were relevant to our survey. For temporal relation extraction, we sought papers with titles containing the words “temporal”, “relation”, and “extraction”. For the extraction of event-centric knowledge graphs, we identified papers with titles containing the term “event”, either “knowledge graph” or “timeline”, and either “extraction” or “construction”.
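The per-task criteria above can be written as simple boolean filters, where each query is a conjunction of groups and each group is a disjunction of alternative terms. The sketch below is hypothetical: it applies the criteria to a local list of invented titles and does not call the Web of Science service, the names `QUERIES` and `matches` are our own, and, for simplicity, it checks the phrase “natural language processing” in the title even though the refined query may have matched it in other fields.

```python
# Each query is an AND over groups; each group is an OR over alternative terms.
QUERIES = {
    "event extraction": [("event",), ("extraction",), ("natural language processing",)],
    "temporal relation extraction": [("temporal",), ("relation",), ("extraction",)],
    "event-centric knowledge graph": [
        ("event",),
        ("knowledge graph", "timeline"),
        ("extraction", "construction"),
    ],
}


def matches(title, query):
    """Return True if every AND-group has at least one term in the title."""
    lowered = title.lower()
    return all(any(term in lowered for term in group) for group in query)


titles = [  # invented example titles
    "Event Extraction with Natural Language Processing for News",
    "Neural Temporal Relation Extraction from Clinical Notes",
    "Timeline Construction from Event Mentions",
    "A Survey of Knowledge Graphs",
]
for task, query in QUERIES.items():
    print(task, [t for t in titles if matches(t, query)])
```

Plain substring matching is deliberately crude (for example, “event” also matches “prevent”); a real bibliographic query engine applies its own tokenisation and stemming rules.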

Our selection criteria for including papers in this survey were based on specific considerations. Firstly, a paper had to present a functional model or pipeline. Secondly, the task addressed in the paper needed to be directly related to the construction of event-centric temporal knowledge graphs. Furthermore, we excluded papers that had already been included in our preliminary review. During the systematic phase of the review, we assessed a total of 109 papers, ultimately incorporating 46 papers into our survey.

In addition to discussing information extraction models, we also introduce datasets containing temporal information about events. These datasets can prove invaluable for the development of future models. We identified these relevant datasets by analyzing the results provided by Google Scholar and examining the datasets employed in papers outlining pertinent models.

4. Schemas and Datasets

For the tasks of event, temporal relation, and temporal attribute extraction, we need datasets that can be used to train machine learning models. Creating such datasets requires annotation schemas that define the information we would like to capture. In this section, we describe the schemas used to represent events and their information, as well as the datasets annotated with them.

4.1. Schemas for Capturing Events

In the realm of event extraction, two prominent event schemas have emerged to structure and annotate textual information: the TimeML model [65] and the ACE (Automatic Content Extraction) model [66]. Each schema serves a distinct purpose in capturing information about events, with its own characteristics:

TimeML Model:

- Aim: The TimeML model seeks to identify and capture all mentions of events within the text and establish relations between these events.

- Coverage: It aims for comprehensive event recognition, encompassing a broad spectrum of event types.

- Annotations: TimeML employs XML tags to annotate text, marking event mentions and their attributes, temporal expressions, their meanings, and relationships between them.

- Additional Information: Documents in TimeML format often record the document creation time as a special time expression.

ACE (Automatic Content Extraction) Model:

- Aim: The primary objective of the ACE event model is to provide more detailed information about each event, focusing on a predefined set of event types.

- Coverage: ACE defines a specific set of 34 event types, and the model is designed to capture events that fall within these predetermined categories.

- Annotations: ACE specifies a structured format for annotating text with details about events, including their attributes.

- Attributes: Each event type in the ACE model comes with a predefined set of attributes, which vary depending on the specific event type.
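Because ACE ties each event type to a fixed set of attributes (argument roles), an annotated event can be validated against the schema. The sketch below is illustrative only: `ACE_ROLES` is a small, simplified subset of ACE 2005 event types and roles (ACE additionally distinguishes fine-grained time roles such as Time-Within, collapsed here into a single `Time`), and the `ACEEvent` class and its `validate` method are hypothetical.

```python
from dataclasses import dataclass, field

# Simplified, illustrative subset of ACE 2005 event types and argument roles.
ACE_ROLES = {
    "Conflict:Attack": {"Attacker", "Target", "Instrument", "Time", "Place"},
    "Life:Die": {"Agent", "Victim", "Instrument", "Time", "Place"},
    "Movement:Transport": {"Agent", "Artifact", "Vehicle", "Price",
                           "Origin", "Destination", "Time"},
}


@dataclass
class ACEEvent:
    event_type: str
    trigger: str
    arguments: dict = field(default_factory=dict)  # role -> text span

    def validate(self):
        """Raise ValueError if the type or any role is outside the schema."""
        if self.event_type not in ACE_ROLES:
            raise ValueError(f"unsupported event type: {self.event_type}")
        unknown = set(self.arguments) - ACE_ROLES[self.event_type]
        if unknown:
            raise ValueError(
                f"roles not allowed for {self.event_type}: {sorted(unknown)}")


event = ACEEvent("Conflict:Attack", "bombed",
                 {"Attacker": "rebels", "Target": "the bridge", "Time": "Monday"})
event.validate()  # passes: every role belongs to Conflict:Attack
```

This contrasts with TimeML, where an event mention carries general attributes (such as its class) rather than a type-specific set of argument roles.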

These schemas play a vital role in the annotation of text documents to extract information about events and time expressions. The TimeML specification, in particular, has served as a foundation for the annotation format in numerous time-centric corpora, such as TimeBank [67], AQUAINT [68], i2b2 [69], and WikiWars [70]. By adopting these standardized schemas and formats, researchers can effectively annotate text data to facilitate event-centric knowledge graph construction and temporal information extraction.
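To illustrate what a TimeML annotation looks like, the snippet below embeds a small hand-written fragment in the style of TimeML 1.2 (the sentence, identifiers, and dates are invented) with `EVENT`, `TIMEX3`, `MAKEINSTANCE`, and `TLINK` tags, including a document creation time marked with `functionInDocument="CREATION_TIME"`, and reads it with Python's standard `xml.etree.ElementTree` module. Real corpora such as TimeBank contain additional elements and attributes, so this is a simplified sketch rather than a complete TimeML reader.

```python
import xml.etree.ElementTree as ET

TIMEML = """<TimeML>
<DCT><TIMEX3 tid="t0" type="DATE" value="1998-02-06" functionInDocument="CREATION_TIME">02/06/1998</TIMEX3></DCT>
<TEXT>The company <EVENT eid="e1" class="OCCURRENCE">announced</EVENT> on
<TIMEX3 tid="t1" type="DATE" value="1998-02-05">Thursday</TIMEX3> that it had
<EVENT eid="e2" class="OCCURRENCE">acquired</EVENT> the rival firm.</TEXT>
<MAKEINSTANCE eiid="ei1" eventID="e1" tense="PAST" aspect="NONE" polarity="POS" pos="VERB"/>
<MAKEINSTANCE eiid="ei2" eventID="e2" tense="PAST" aspect="PERFECTIVE" polarity="POS" pos="VERB"/>
<TLINK lid="l1" relType="IS_INCLUDED" eventInstanceID="ei1" relatedToTime="t1"/>
<TLINK lid="l2" relType="BEFORE" eventInstanceID="ei2" relatedToEventInstance="ei1"/>
</TimeML>"""

root = ET.fromstring(TIMEML)
events = {e.get("eid"): e.text for e in root.iter("EVENT")}            # eid -> word
times = {t.get("tid"): t.get("value") for t in root.iter("TIMEX3")}    # tid -> value
instances = {m.get("eiid"): m.get("eventID") for m in root.iter("MAKEINSTANCE")}
dct = next(t.get("value") for t in root.iter("TIMEX3")
           if t.get("functionInDocument") == "CREATION_TIME")


def resolve(link, instance_attr, time_attr):
    """Map a TLINK endpoint to its event word or its normalised time value."""
    instance_id = link.get(instance_attr)
    if instance_id is not None:
        return events[instances[instance_id]]
    return times[link.get(time_attr)]


links = [
    (resolve(link, "eventInstanceID", "timeID"), link.get("relType"),
     resolve(link, "relatedToEventInstance", "relatedToTime"))
    for link in root.iter("TLINK")
]
print(dct)    # 1998-02-06
print(links)  # [('announced', 'IS_INCLUDED', '1998-02-05'), ('acquired', 'BEFORE', 'announced')]
```

Separating event mentions (`EVENT`) from event instances (`MAKEINSTANCE`) lets TimeML attach tense and aspect to each instance and lets temporal links refer to instances rather than raw text spans.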

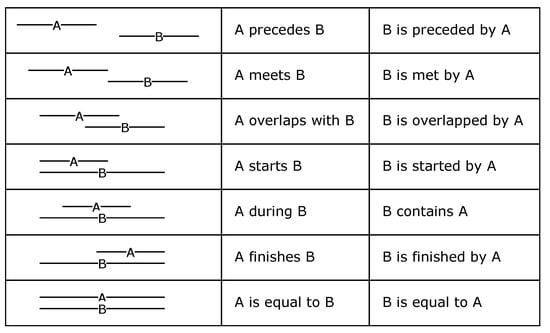

4.2. Schemas for Capturing Temporal Relations

Temporal relations are a fundamental aspect of representing the temporal dynamics of events in a document. They provide crucial information about the sequence in which events occurred. Among the various temporal relations, the most commonly used are before, after, and during. In 1989, Allen and Hayes [71] defined a comprehensive set of 13 possible relations between two time intervals, as illustrated in Figure 4. However, in practice, many temporal relation extraction datasets annotate only the subset of Allen’s relations that is most pertinent to the specific task or domain under consideration. These annotated datasets play a pivotal role in training and evaluating machine learning models for temporal relation extraction and event-centric knowledge graph construction.

Figure 4.

Temporal relations between events as defined by Allen and Hayes [71].
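When the endpoints of two intervals are known, the Allen relation between them follows directly from comparing those endpoints. The function below is a straightforward sketch assuming intervals given as `(start, end)` pairs with `start < end` on a common numeric time axis; the function name `allen_relation` is our own, and the relation names follow Allen's terminology.

```python
def allen_relation(a, b):
    """Return the Allen relation that holds from interval a to interval b."""
    (a1, a2), (b1, b2) = a, b
    if a1 >= a2 or b1 >= b2:
        raise ValueError("intervals must satisfy start < end")
    if a2 < b1:
        return "before"
    if b2 < a1:
        return "after"
    if a2 == b1:
        return "meets"
    if b2 == a1:
        return "met by"
    if a1 == b1 and a2 == b2:
        return "equals"
    if a1 == b1:
        return "starts" if a2 < b2 else "started by"
    if a2 == b2:
        return "finishes" if a1 > b1 else "finished by"
    if b1 < a1 and a2 < b2:
        return "during"
    if a1 < b1 and b2 < a2:
        return "contains"
    # Remaining case: the intervals partially overlap.
    return "overlaps" if a1 < b1 else "overlapped by"


print(allen_relation((1, 3), (4, 6)))  # before
print(allen_relation((2, 4), (1, 6)))  # during
print(allen_relation((1, 4), (3, 6)))  # overlaps
```

Coarser label sets used by datasets can then be obtained by mapping several Allen relations onto one label.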

The TimeML schema [65,72,73] serves as the most prevalent schema for representing temporal relations between events. It offers a structured framework for encoding basic temporal relations such as “Before”, “After”, and “During”.

In addition to these fundamental temporal relations, there has been research dedicated to extracting more precise relations that encompass estimates of the time intervals, event durations, and related temporal attributes [33,42]. These precise relations are particularly valuable for constructing event timelines and demand a distinct annotation schema. Unlike the standardized TimeML schema, projects focusing on these precise relations often define their own schemas tailored to their specific requirements and objectives. As a result, there is no unified schema for representing these intricate temporal relationships.

4.3. Capturing Temporal Properties of Events

In the context of describing the time of an event, it is imperative to define the specific time properties of interest. For that purpose, we analyzed the properties that are defined in the OWL time ontology (https://www.w3.org/TR/owl-time/ (accessed on 12 October 2023)), as it is commonly used to record time in knowledge graphs. The OWL time ontology encapsulates several key time components, including (1) time of the event (date, year, month, day, day of the week, time zone, etc.), (2) duration (years, months, weeks, days, etc.), (3) interval (combination of a time and duration), and (4) temporal relation (before, after, meets, met by, overlaps, overlapped by, starts, started by, during, contains, finishes, finished by, and equals). We found that, in addition to the temporal properties defined in the time ontology, it would also be useful to track the repetition of an event. For example, an event of taking medication might repeat every 24 h for a set amount of time. Leeuwenberg and Moens [41] provided annotations for event times and durations. The prediction of event duration has also been explored in multiple other systems (presented in Section 7.3). Temporal attributes other than the time and duration of an event remain unexplored.
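The time, duration, and interval components, together with the proposed repetition attribute, can be captured in a single record. The following is a hypothetical sketch: the `EventTime` class and its field names are our own and are not OWL-Time terms, an interval is derived as the event time plus its duration, and repetition is modelled as a fixed period with a number of occurrences, as in the medication example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class EventTime:
    begin: datetime                     # time of the event
    duration: timedelta                 # duration of a single occurrence
    period: Optional[timedelta] = None  # repetition interval, if the event repeats
    repetitions: int = 1                # number of occurrences

    @property
    def interval(self):
        """Interval of the first occurrence: (begin, begin + duration)."""
        return self.begin, self.begin + self.duration

    def occurrences(self):
        """List (begin, end) pairs for every repetition of the event."""
        if self.repetitions > 1 and self.period is None:
            raise ValueError("a repeating event needs a period")
        step = self.period or timedelta(0)
        return [(self.begin + i * step, self.begin + i * step + self.duration)
                for i in range(self.repetitions)]


# Taking medication every 24 h on three consecutive days (illustrative values).
dose = EventTime(begin=datetime(2023, 10, 12, 8, 0),
                 duration=timedelta(minutes=5),
                 period=timedelta(hours=24), repetitions=3)
for start, end in dose.occurrences():
    print(start.isoformat(), end.isoformat())
```

Storing the period and count instead of every occurrence keeps the record compact, while the individual intervals can still be expanded when they need to be related to other events.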

4.4. General Datasets for Temporal Description and Relation Extraction

One of the earliest temporal-centric corpora introduced to the field is the TimeBank 1.1 corpus [74]. This corpus comprises 186 documents from the domain of news articles. Annotations in this corpus adhere to the TimeML standard, version 1.1 [65], and were established as a proof of concept for the TimeML specification [67]. However, the TimeBank 1.1 corpus contains certain inconsistencies and errors, which led to its replacement by version 1.2, known as the TimeBank 1.2 corpus [75]. The TimeBank 1.2 corpus adheres to the updated TimeML specification [72] and contains 183 news articles totaling approximately 61,000 tokens. Its texts are annotated with time expressions, events, and temporal relations between the events. In addition to conforming to the TimeML standard, the corpus includes supplementary information, such as sentence separation. In parallel to the TimeBank corpus, the TimeML specification was also leveraged to construct the AQUAINT corpus [68]. The AQUAINT corpus consists of 73 English news reports and bears structural similarities to the TimeBank corpus.

Notably, these datasets, which facilitate the extraction of temporal information from textual documents, have been featured in the SemEval shared tasks. The inaugural shared task, TempEval, took place during the 2007 SemEval challenge [76] and utilized a dataset derived from the TimeBank 1.2 corpus; however, it included only a subset of the original dataset’s relations and annotations. A follow-up task, TempEval-2, was conducted in 2010 [77], employing a dataset based on the TimeBank 1.2 corpus but featuring distinct annotated relations. In 2013, SemEval introduced TempEval-3 [78], aimed at enabling models that leverage large neural networks. For this purpose, the organizers provided an extensive dataset conforming to the TimeML annotation specification. They augmented the TimeBank 1.2 dataset by incorporating missing relations and annotations, and this augmented dataset served as both the gold and the evaluation component. Additionally, a substantial amount of text from the English Gigaword corpus [79] was automatically annotated to generate a sizable “silver” dataset. The silver dataset, while potentially containing errors, primarily serves as training data for large neural models. Notably, this corpus introduces over 600 thousand new silver tokens, 20 thousand new gold tokens, and 20 thousand new evaluation tokens.

One limitation of the TimeBank corpus is the relatively sparse occurrence of temporal relations among event pairs. To address this, Cassidy et al. [80] developed the TimeBank-Dense dataset, in which temporal relations were manually re-annotated between a substantially larger number of event pairs, effectively mitigating the sparsity issue. While the dataset comprises only 36 documents, the overall number of annotated relations is four times greater than in the TimeBank corpus. Nevertheless, the TimeBank-Dense dataset still suffers from low inter-annotator agreement, as identified by Ning et al. [38]. In response, Ning et al. [38] introduced the MATRES dataset, in which temporal relations between events are based solely on the starting points of the events. MATRES also places events on multiple axes and compares only events that lie on the same axis, which makes the temporal annotations more consistent.
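Comparing only start points reduces each annotation decision to comparing two values. The sketch below assumes that each event's start point is either a comparable value (here, a `date`) or `None` when the text does not determine it; the labels BEFORE, AFTER, EQUAL, and VAGUE correspond to the MATRES label set, but the helper `start_point_relation` is hypothetical and the assignment of events to axes is not modelled.

```python
from datetime import date
from typing import Optional


def start_point_relation(start_a: Optional[date], start_b: Optional[date]) -> str:
    """Relation between events a and b based solely on their start points."""
    if start_a is None or start_b is None:
        return "VAGUE"  # the start point cannot be determined from the text
    if start_a < start_b:
        return "BEFORE"
    if start_a > start_b:
        return "AFTER"
    return "EQUAL"


# A long event and a shorter one that starts later: only start points matter,
# so the relation is BEFORE even if the first event is still ongoing.
print(start_point_relation(date(2020, 1, 1), date(2020, 3, 1)))  # BEFORE
print(start_point_relation(date(2020, 3, 1), None))              # VAGUE
```

Ignoring end points avoids the ambiguous cases that arise when annotators must judge when an event finished, which is often not stated in the text.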

In 2017, Zhong et al. [81] introduced the Tweets dataset, annotating time expressions in concise text documents sourced from the Twitter social network. This dataset encompasses 942 documents and 18 thousand tokens, covering a range of general topics rather than focusing on a specific domain. Notably, Twitter documents differ from other similar datasets in their brevity and inclusion of precise time and date of posting.

We present a comparison of the available datasets in Table 2. The most commonly used datasets for temporal information extraction from news articles are the dataset provided for the TempEval-3 challenge, TimeBank-Dense, and the MATRES dataset. The TempEval-3 dataset is useful for its large number of documents, which enables the use of neural network models. Its main drawback is the sparsity and low quality of its annotated relations. These problems are addressed in the TimeBank-Dense and MATRES datasets: while they contain significantly fewer documents, their annotated relations are much more complete. We believe that the MATRES dataset is better suited than TimeBank-Dense for most applications due to its higher inter-annotator agreement.

Table 2.

The datasets on general domains for extracting temporal expressions and temporal event relations.

4.5. Domain Specific Datasets

The task of temporal information extraction finds applicability across diverse domains, prompting the development of domain-specific datasets aimed at capturing unique temporal information within those domains.

One such domain-specific dataset is WikiWars, introduced in 2010 [70]. This corpus comprises Wikipedia articles centered around famous wars. Within these documents, time expressions are meticulously annotated in the TIMEX2 format (a subset of the TimeML annotation schema). The WikiWars dataset encompasses 22 documents, containing nearly 120 thousand tokens and featuring 2671 annotated temporal expressions. Another domain-specific temporal dataset emerges from the i2b2 2012 challenge [69], catering to the medical domain. This dataset supplies 310 discharge summaries replete with annotated time expressions, events, and the temporal relations existing between these events. Notably, the temporal relations in this dataset exhibit greater granularity, with eight distinct relation types: “before”, “after”, “simultaneous”, “overlap”, “begun by”, “ended by”, “during”, and “before with overlap”.

Expanding further into the medical extraction of temporal information, the THYME dataset emerged for the 2015 Medical TempEval task [82]. This dataset, drawn from clinical notes on patients with colon cancer from the Mayo Clinic, comprises approximately 600 documents, each painstakingly annotated. Annotations encompass marked events, time expressions, and temporal relations between events. The same task was subsequently revisited in SemEval 2016 [83] using the THYME dataset. In this iteration, the training and testing segments of the SemEval 2015 dataset were employed as the training set, with new test data introduced.

SemEval 2017 saw the reappearance of the Clinical TempEval task [84], this time with a focus on evaluating the transfer learning capabilities of temporal information extraction systems. Models were trained on clinical notes related to colon cancer patients from the 2016 Clinical TempEval task and subsequently tested on clinical notes pertaining to patients with brain cancer. The dataset designed for this task encompasses 591 documents on colon cancer patients and 595 documents on brain cancer patients. Annotations within these documents mirror those employed in previous Clinical TempEval tasks, ensuring consistency across evaluations and facilitating the exploration of transfer learning in the context of temporal information extraction.

Table 3 compares these domain-specific datasets. The medical domain is the most frequently explored domain for temporal relation extraction, and within it two datasets stand out: the i2b2 2012 dataset and the THYME dataset. THYME is considerably larger, which makes it well suited for training machine learning models with many parameters; it is therefore considered a robust dataset for training temporal relation extraction models. The i2b2 2012 dataset, in turn, was further annotated with additional temporal attributes by Leeuwenberg and Moens [41]. This extends its utility beyond temporal relation extraction to the extraction of absolute temporal attributes, such as event times and durations. Importantly, all the presented datasets follow TimeML-based annotation conventions, which makes it possible to combine multiple datasets to train a single model. This is particularly useful for training larger neural network models, which can benefit from the diversity of data sources.

Table 3.

The datasets on specific domains for extracting temporal expressions and temporal event relations.

4.6. Datasets for More Precise Temporal Relation Extraction

Several researchers have worked on establishing more fine-grained temporal relations between events, which enables the construction of complete timelines within documents. These relations are not restricted to a small set of categories (e.g., “before” and “after”) but are instead represented as continuous values that denote the precise chronological order of events. Multiple datasets have been developed to support training models for this purpose. A comparison of datasets for precise temporal relations is provided in Table 4.

Table 4.

The datasets for more advanced tasks in temporal information extraction.

The Event StoryLine Corpus [85] is one such dataset, designed to support the creation of storylines from news articles. A distinctive challenge addressed by this dataset is the detection of event coreference, which makes it possible to avoid duplicate events when the same event is mentioned multiple times in the text.

The Fine-grained Temporal Relations dataset [42] aims to construct event timelines by going beyond the coarse temporal relations of previous datasets. In addition to coarse temporal relations, it annotates event durations and overlaps, which makes it possible to place each event on a relative timeline. Notably, the corpus uses its own annotation schema rather than the TimeML standard. It comprises 91 thousand event pairs positioned on a relative timeline. Building event timelines is also the motivation behind the dataset developed by Leeuwenberg and Moens [41]. This dataset uses documents from the 2012 i2b2 challenge, namely medical discharge summaries with labeled events, and annotates absolute start times, end times, and event durations. Because precise absolute times and durations are often not stated in documents, the dataset provides lower and upper bounds for each time or duration.
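To make bounded absolute times concrete, the following Python sketch represents an event whose start and end are only known to lie between a lower and an upper bound, in the spirit of the annotations of Leeuwenberg and Moens. The class `BoundedEvent`, its field names, the helper functions, and the example dates are all hypothetical and do not reflect the dataset's actual file format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical representation; field names are illustrative, not the dataset's format.
@dataclass
class BoundedEvent:
    name: str
    start_lower: datetime  # earliest possible start
    start_upper: datetime  # latest possible start
    end_lower: datetime    # earliest possible end
    end_upper: datetime    # latest possible end


def certainly_before(a: BoundedEvent, b: BoundedEvent) -> bool:
    """True if even the latest possible end of `a` is no later than the earliest possible start of `b`."""
    return a.end_upper <= b.start_lower


def duration_bounds(e: BoundedEvent) -> tuple[timedelta, timedelta]:
    """Shortest and longest durations that are compatible with the bounds."""
    shortest = max(e.end_lower - e.start_upper, timedelta(0))
    longest = e.end_upper - e.start_lower
    return shortest, longest


# Invented example values.
stay = BoundedEvent("hospital stay",
                    datetime(2012, 3, 1), datetime(2012, 3, 1),
                    datetime(2012, 3, 5), datetime(2012, 3, 7))
follow_up = BoundedEvent("follow-up visit",
                         datetime(2012, 3, 10), datetime(2012, 3, 20),
                         datetime(2012, 3, 10), datetime(2012, 3, 20))
```

Even when exact times are unknown, such bounds can support safe conclusions: here the hospital stay must end before the follow-up visit starts, and it lasted between four and six days.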

Many existing datasets primarily cover relations between events mentioned in the same or neighboring sentences. However, temporal relations must also be identified between events that appear in different parts of a document. The TDDiscourse dataset [86] was created to support systems that extract such distant relations. It uses the same documents as the TimeBank-Dense dataset and contains relations between events separated by more than one sentence in the original document: 6 thousand manually annotated relations and an additional 38 thousand automatically extracted relations. Another dataset for extracting relations between distant events is the Cross-document Event Corpus [87], which includes news documents from the ACE2005 dataset together with additional news documents. In total, it comprises 125 documents and 26 thousand event pairs.

The datasets presented in Table 4 serve two main goals. The first three enable the extraction of event timelines rather than individual temporal relations, while the last two support relation and event extraction across an entire document or even across multiple documents. For event timelines, we consider the dataset of Leeuwenberg and Moens the most interesting, since it contains absolute event times, whereas the other two datasets only contain relative positions of events on a timeline. Absolute times enable a number of applications that are not possible with relative timelines alone.

4.7. Temporal Extraction for Non-English Languages

Most of the available datasets for temporal extraction contain English texts. Other languages, however, may have properties that cause models trained on English to perform poorly. Several additional datasets have been released to enable training models for a specific non-English language or for multiple languages.

In 2005, the ACE 2005 Multilingual Training Corpus was released [66]. The corpus focuses on event extraction tasks but also contains temporal attributes. It contains documents in three languages: English, Arabic, and Chinese. The documents come from multiple sources, including newswire, broadcast news, broadcast conversation, weblogs, discussion forums, and telephone conversations. The dataset contains almost 1800 documents.

Multiple versions of the TempEval corpus have also been created in different languages. Currently available languages are Arabic [73], French [88], Portuguese [89], Korean [90], Spanish [91], Romanian [92] and Italian [93]. All of the mentioned datasets are compared in Table 5.

Table 5.

The temporal datasets in non-English languages.

4.8. Other Similar Corpora

The 2015 SemEval challenge featured the QA TempEval task [94], which focuses on answering temporal questions of the form “Is <entityA><RELATION><entityB>?” The primary objective of this task was to assess how useful the recognized relations are for answering human questions, rather than only evaluating them against the official answers. The training data were drawn from the annotated TempEval-3 dataset and comprised 79 yes/no questions. The test dataset was compiled from various sources, including news articles, Wikipedia, and informational blog posts, totaling 28 documents and 294 questions. Some questions in this dataset concern entities mentioned more than one sentence apart in the document.

A similar task was proposed by Chen et al. [95], who created a dataset for answering time-sensitive questions. The task is to answer questions based on a provided text, and the questions are designed to require temporal understanding. For example, a question might ask about George Washington’s position in 1777. The texts are sourced from Wikipedia articles.

5. Event Extraction

The construction of event knowledge graphs hinges on a fundamental task known as event extraction, which involves identifying events mentioned within a text document. In addition to recognizing events, many systems performing event extraction also aim to identify the event types and their associated attributes [96,97,98]. These attributes may include details about the entities participating in an event, the event’s location, timing, and more. In this section, we present and compare existing models for extracting events from text documents.

Event extraction from text has been investigated by various researchers, resulting in the development of several different methods. The scope of event extraction tasks may range from solely identifying event mentions in the document to a more comprehensive recognition of event types and their attributes.

Research papers on event extraction share a common terminology. The Oxford English Dictionary defines an event as something that happens or takes place, especially something significant or noteworthy [99]. An event mention is the span of words in a document that describes an event; each mention is typically associated with an event trigger, the word that most clearly expresses the occurrence of the event (e.g., “attack”). Researchers often define a finite set of event types into which the events of interest are categorized, and each event type is associated with a set of expected attributes that describe the event.

While much of the work on event extraction has focused on news articles, the application of event extraction is equally important in the context of medical documents. Events in the medical domain differ significantly from those in the news domain. Medical events are often described using longer phrases, such as “a pain in the lower right arm”, whereas typical news events are described by single words or short phrases like “attack”. Furthermore, the event types relevant to the medical domain, such as “test”, “problem”, “treatment”, “clinical departments”, “evidential information”, and “clinically relevant occurrence” [69,100], differ substantially from those in news articles, which may include “attack”, “transport”, “injure”, “meet”, and more [101]. Consequently, most systems designed for event extraction from news articles may not perform well on medical documents due to these significant differences in language and event types.

5.1. Event Extraction Using Pattern Matching

In the early stages of automated event extraction, event mentions were identified with manually crafted rules. One of the pioneering systems from this era was AutoSlog, published in 1993 [6]. AutoSlog supported the development of event-matching patterns by proposing candidate patterns derived from a given corpus and a set of pre-defined linguistic structures representing syntactic patterns. For example, the structure “<subject> active-verb” matches a noun acting as the subject of an active verb. The candidate patterns were then presented to users to speed up the manual crafting of extraction rules. The authors applied the system to build a model for extracting terrorist events from the MUC-4 corpus. They subsequently introduced an enhanced version, AutoSlog-TS [7], which eliminated the need for an annotated corpus. Instead, it employed the CIRCUS syntactic analyzer to automatically determine the syntactic roles of words within a corpus.

Similarly, Kim and Moldovan proposed a system called PALKA (Parallel Automatic Linguistic Knowledge Acquisition) [8], which also automatically identified event patterns from an annotated corpus. PALKA’s primary objective was domain independence. Unlike AutoSlog, PALKA could generate patterns that recognize multiple attributes of the same event. For instance, PALKA could produce a pattern like “(perpetrator) attack (target) with (instrument)”, in which the attack event has three attributes: perpetrator, target, and instrument. Several other systems have also used manually defined rules and patterns for event extraction and argument identification [97,102,103,104,105,106,107].
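A multi-attribute pattern of the PALKA kind can be illustrated with a regular expression that fills several named slots at once. This is only a sketch under simplifying assumptions: the pattern below, the function name `extract_attack_events`, and the example sentence are hypothetical, and matching surface strings is a stand-in for the syntactic analysis that the original systems relied on.

```python
import re

# Hypothetical surface-level version of "(perpetrator) attack (target) with (instrument)".
# Real pattern-based systems matched against syntactic analyses, not raw strings.
ATTACK_PATTERN = re.compile(
    r"(?P<perpetrator>[A-Z][\w ]*?) attack(?:ed|s)? "
    r"(?P<target>[\w ]+?) with (?P<instrument>[\w ]+)"
)


def extract_attack_events(text):
    """Return one dict per match, mapping each attribute slot to its text span."""
    return [match.groupdict() for match in ATTACK_PATTERN.finditer(text)]


events = extract_attack_events("The rebels attacked the convoy with mortars.")
```

Each match yields one attack event with all three attributes filled, which is the main difference from single-slot patterns such as “<subject> active-verb”.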

5.2. Event Extraction Using Traditional Machine Learning

The release of the Automatic Content Extraction (ACE) dataset [66] marked a turning point in the research landscape, spurring interest in automated event extraction within the research community. Much of the subsequent research focused on machine learning models that detect events, categorize them by type, and identify their attributes. The ACE dataset contains documents in three languages (English, Chinese, and Arabic), each annotated with events and their corresponding attributes.

For event trigger recognition, most machine learning approaches follow a pipeline of several stages [10]. They first classify individual words as event triggers or not, and then classify the remaining words to detect the arguments of the recognized events. Next, they assign attributes such as tense or polarity to the recognized events, and finally they detect event coreference. When classifying event triggers, the trigger class is commonly subdivided into the various event types [9,108]. The process is illustrated in Figure 5.

Figure 5.

The process of event extraction described by Ahn [10].

The most common techniques for detecting event triggers and their attributes are SVM classifiers and Maximum Entropy models, which were used by several systems between 2010 and 2016 [48,109,110,111,112]. Yang and Mitchell (2016) [11] presented another event extraction system based on classical machine learning. Their approach employs conditional random fields over manually selected features. Importantly, it does not consider each event in isolation but models relations between multiple events within the document, which improves event-type predictions. For instance, an event of type “attack” is likely to occur near events of type “injure” and “die”, and this kind of contextual information aids event type recognition. Similar improvements were proposed by a number of other researchers [113,114,115,116,117].

5.3. Event Extraction Using Neural Networks

The field of event extraction has witnessed significant advancements thanks to developments in neural networks. One of the earlier neural network-based approaches was introduced by Chen et al. [12]. They proposed a system that leverages a convolutional neural network (CNN) on precomputed word embeddings to classify trigger candidates and candidate event arguments into their respective roles. The neural network takes a sentence with a marked candidate trigger or candidate argument and assigns it a role.

A notable shift in event extraction research came with the introduction of recurrent neural networks (RNNs) [98,118,119,120,121,122]. One of the earliest RNN-based approaches was proposed by Nguyen et al. [13], who employed a bidirectional Long Short-Term Memory (LSTM) RNN to extract events. At each token, the network predicts the event type triggered by that token and the argument role the token plays with respect to other candidate event triggers. In 2019, Nguyen and Nguyen [49] updated this approach by replacing the LSTM layer with bidirectional gated recurrent units (GRUs). Sha et al. [14] extended the bidirectional LSTM approach with additional connections between words in a sentence based on their syntactic relations.

Liu et al. [15] also used syntactic dependencies to improve event recognition. Their approach computes token embeddings, applies a bidirectional LSTM, and feeds the resulting vectors into a graph neural network whose edges are derived from the syntactic dependencies between words. The graph neural network produces a vector representation for each word in the sentence. In the final stage, the vectors representing event trigger candidates are classified to determine the event type. To recognize event arguments, the vectors representing the event and argument candidates are combined using mean pooling and classified to determine the argument type. This approach improved on the results of Sha et al. Graph neural networks for event extraction have also been employed by Guo et al. [123] and Wu et al. [124], and we identified several approaches in the medical domain that use similar architectures [96,125,126].

5.4. Use of Pretrained Neural Language Models

Pretrained language models (PLMs) have brought substantial advances to event extraction; the most popular PLM for this task is Bidirectional Encoder Representations from Transformers (BERT) and its variants [127,128,129,130,131]. Zhang et al. [132] used a BERT model to extract implicit event arguments. Their model identifies event arguments, including those not explicitly linked to the event mention, by considering the BERT embeddings of the event trigger and of the candidate argument head. A biaffine module computes the probability that a candidate argument fills a particular role for the chosen event, and the highest-scoring candidate is selected for each argument type. After the argument head is determined, the network performs head-to-span expansion, using a multi-layer perceptron over the token embeddings and the argument head to identify the tokens that belong to the argument. The algorithm was evaluated on the RAMS dataset, which includes implicit arguments, so it cannot be compared directly with algorithms designed for the ACE dataset.
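The biaffine step can be sketched in plain Python. The sketch assumes the common biaffine form, a bilinear term between the trigger and candidate vectors plus a linear term over their concatenation and a bias, followed by a softmax over candidates for a single role; the exact parameterization in Zhang et al. may differ. The two-dimensional vectors stand in for BERT embeddings, the parameters `U`, `w`, and `b` would be learned per role in a real model, and head-to-span expansion is not shown.

```python
import math


def biaffine_score(h_t, h_a, U, w, b):
    """Biaffine score s = h_t^T U h_a + w . [h_t; h_a] + b for one argument role."""
    bilinear = sum(h_t[i] * U[i][j] * h_a[j]
                   for i in range(len(h_t)) for j in range(len(h_a)))
    linear = sum(wk * xk for wk, xk in zip(w, h_t + h_a))  # w . concatenation
    return bilinear + linear + b


def select_argument(h_t, candidates, U, w, b):
    """Softmax over candidate argument heads for one role; return best index and probabilities."""
    scores = [biaffine_score(h_t, h_a, U, w, b) for h_a in candidates]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=lambda k: probs[k])
    return best, probs


# Toy vectors standing in for BERT embeddings; parameters are hypothetical.
trigger = [1.0, 0.0]
candidates = [[0.0, 1.0], [1.0, 0.0]]
U = [[1.0, 0.0], [0.0, 1.0]]
w = [0.0] * 4
best, probs = select_argument(trigger, candidates, U, w, 0.0)
```

With an identity matrix for `U` and a zero linear term, the score reduces to a dot product, so the candidate aligned with the trigger vector wins.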

Another approach using a pretrained BERT network was proposed by Zhang et al. [17]. It relies on a transition system over token embeddings that combine BERT embeddings with other features, such as part-of-speech tags, character-level LSTM representations, and GloVe word embeddings. The transition system executes a sequence of actions that determines the position of the event and its arguments. This method achieved state-of-the-art results on the ACE dataset.

In the medical domain, several systems use BERT models for event extraction [40,129,130,131]. For example, Han et al. [40] perform event extraction on medical documents by using a BERT model to classify each token into one of the event classes and then grouping the tokens into events based on the classification results.
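Grouping token-level predictions into events is often done with a BIO labeling scheme, where `B-X` starts an event of type X and `I-X` continues it. The sketch below assumes such a scheme; the actual label set of Han et al. may differ, and the function name, tags, and example sentence are hypothetical. An `I-X` tag that cannot continue an open span of the same type is leniently treated as the start of a new span.

```python
def group_bio(tokens, tags):
    """Group BIO-tagged tokens into (event_type, text) spans."""
    events, span_type, span = [], None, []

    def flush():
        # Emit the currently open span, if any.
        if span:
            events.append((span_type, " ".join(span)))

    for token, tag in zip(tokens, tags):
        if tag.startswith("I-") and tag[2:] == span_type and span:
            span.append(token)  # continue the open span
        else:
            flush()  # close any open span
            if tag == "O":
                span_type, span = None, []
            else:  # B-X, or an I-X that cannot continue the open span
                span_type, span = tag[2:], [token]
    flush()
    return events


tokens = "Patient reports pain in the lower right arm after CT scan".split()
tags = ["O", "O", "B-PROBLEM", "I-PROBLEM", "I-PROBLEM", "I-PROBLEM",
        "I-PROBLEM", "I-PROBLEM", "O", "B-TEST", "I-TEST"]
```

Here `group_bio(tokens, tags)` yields a multi-word PROBLEM event and a TEST event, which mirrors the longer phrases typical of medical events.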

With the advent of large language models (LLMs), researchers have also explored using such models for tasks similar to event extraction [133,134,135]. These approaches typically prompt the model to annotate the events in a text. Wei et al. [134] improved their predictions with chat-based prompts, which engage the model through a series of questions, much like a conversation. However, all of the observed approaches perform significantly worse than those based on BERT [40,129,130,131].

5.5. Cross-Lingual Event Extraction

The development of event extraction models is often hindered by the scarcity of labeled data, particularly for smaller or less-resourced languages. To address this challenge, researchers have proposed cross-lingual event extraction methods, which use information learned from training data in one language to extract events in another language. Several such systems have been implemented [19,20,21]. However, a common limitation of these approaches is their reliance on a large number of parallel documents between the languages, which can be difficult to obtain. Liu et al. [16] introduced a system that reduces the need for parallel documents through context-dependent lexical mapping, which translates embeddings from one language into another. To handle differing word orders between languages, they employed a graph convolutional network that operates on the syntactic connections between words rather than on their order within a sentence. The approach was evaluated on both the ACE 2005 dataset and the TAC KBP 2017 dataset. In both cases, the model outperformed models trained exclusively on the target language, demonstrating its effectiveness for cross-lingual event extraction.

5.6. Transfer Learning

Researchers have explored innovative approaches to leverage advancements in other areas of natural language processing for event extraction tasks. One such approach was introduced by Liu et al. [22], which frames event extraction as a reading comprehension task. In this approach, the document serves as the source of knowledge for the model, and a series of questions are posed to the model about the event. Event triggers are identified using a specialized query prompt, while event attributes are extracted using prompts designed to elicit specific information, such as “What instrument did the protester use to stab an officer?” This reading comprehension-based approach offers a unique perspective on event extraction.

Another notable approach for event extraction was presented by Lu et al. [23]. They designed a transformer model, built on the T5 pretrained language model, that transforms text into a structured representation. The model takes a sentence as input and outputs a structured representation containing the event, its type, and its arguments. Because events and their attributes are produced in a structured format, the output can be used directly by downstream applications. Zhang et al. [136] explored combining text and images for event extraction. Their model connects textual and visual information within a single model, so that visual cues from images can improve the understanding of the events described in the text.
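A text-to-structure model of this kind emits a linearized string that must be parsed back into events. The sketch below assumes a bracketed format of the shape `((Type trigger (Role span) ...) ...)`; the exact output grammar of Lu et al. may differ, and the function names and example string are hypothetical. The event type is assumed to be a single token, and malformed input is not handled.

```python
def to_tree(text):
    """Convert a bracketed string into nested Python lists of stripped strings."""
    stack, buf = [[]], ""
    for ch in text:
        if ch in "()":
            if buf.strip():
                stack[-1].append(buf.strip())  # flush pending leaf text
            buf = ""
            if ch == "(":
                stack.append([])  # open a new nested node
            else:
                node = stack.pop()  # close the current node
                stack[-1].append(node)
        else:
            buf += ch
    return stack[0]


def parse_events(linearized):
    """Parse '((Type trigger (Role span) ...) ...)' into event dictionaries."""
    events = []
    for event in to_tree(linearized)[0]:
        head, *roles = event
        event_type, trigger = head.split(" ", 1)
        arguments = [tuple(role[0].split(" ", 1)) for role in roles]
        events.append({"type": event_type, "trigger": trigger, "arguments": arguments})
    return events


result = parse_events("((Attack stabbed (Attacker protester) (Instrument knife)))")
```

Arguments are kept as a list of (role, span) pairs rather than a dictionary, so an event with two arguments of the same role is not silently collapsed.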

5.7. Discussion

In Table 6, we compare the event extraction systems based on their F1 scores on the four tasks supported by the ACE 2005 corpus. The event identification task requires the model to identify the event triggers in a document, thereby recognizing the events that are described. In the event classification task, the model has to identify the type of each detected event. Since some models solve the first two tasks with a single classification, we report only a single score in that case. The argument identification task requires the model to recognize the arguments of the identified events, and the argument role classification task requires it to classify the role each argument plays in the event. The presented scores are those reported in the individual papers and are therefore not directly comparable; however, they still give an idea of each system’s performance. The use of graph neural networks to introduce the syntactic dependencies of a sentence, proposed by Liu et al. [15], provides a large improvement, and we believe that future event extraction systems will use graph neural networks in a similar way. Another idea that is likely to appear in future systems is the use of pretrained language models: Zhang et al. [17,132] achieved good results with them, so we consider this another promising direction. Pretrained language models can also be combined with graph neural networks, so that a system benefits from both.
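The difference between the identification and classification scores can be made concrete with a small computation. This is a simplified sketch, assuming that a trigger is represented by a (sentence, token position, event type) triple and that scores are micro-averaged; evaluation scripts for ACE 2005 differ in details such as span matching, and the annotations below are invented.

```python
def precision_recall_f1(gold, predicted):
    """Micro-averaged precision, recall and F1 over sets of items."""
    tp = len(gold & predicted)
    p = tp / len(predicted) if predicted else 0.0
    r = tp / len(gold) if gold else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


# Invented annotations: (sentence_id, trigger_token_index, event_type).
gold = {(0, 3, "Attack"), (0, 7, "Die")}
pred = {(0, 3, "Attack"), (0, 7, "Injure"), (1, 2, "Meet")}

# Identification: a trigger counts as correct if its position matches, regardless of type.
identification = precision_recall_f1({g[:2] for g in gold}, {q[:2] for q in pred})
# Classification: position and event type must both match.
classification = precision_recall_f1(gold, pred)
```

The trigger at position 7 is found but mistyped, so it counts for identification (F1 of 0.8) but not for classification (F1 of 0.4).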

Table 6.

Comparison of the described event extraction systems. We report the F1 scores for the tasks defined in the ACE 2005 challenge where available. The results are the best results reported by each of the papers.

6. Temporal Relation Extraction

The task of temporal relation extraction was first popularized by the creation of the TimeBank corpus [74]. The first successful approaches for recognizing temporal relations were based on hand-defined rules [24,137].

6.1. Temporal Relation Extraction Using Traditional Machine Learning

Temporal relation extraction, a task that involves identifying relationships between events or between events and temporal expressions, saw early efforts rooted in traditional machine learning techniques. In these approaches, machine learning models are coupled with hand-crafted features to achieve temporal relation recognition.

Mani et al. [25] tackled the recognition of temporal relation types by experimenting with various machine learning models, including Maximum Entropy (ME), Naive Bayes, and Support Vector Machine (SVM) classifiers. Their features encompass event class, aspect, modality, tense, negation, event string, and signal. Building on this foundation, Bethard [26] extended the approach to the medical domain using the TempEval 2013 dataset. Their system not only identified temporal relations but also detected events and time expressions within documents, relying on a feature set similar to that of Mani et al. [25], albeit without the signal feature.

Lin et al. [27] applied SVM models to medical documents from the THYME and 2012 i2b2 datasets. They developed separate classifiers for recognizing relations between two events and between an event and a time expression. Features for these classifiers included part-of-speech tags, event attributes, dependency paths, and more. Ning et al. [28] further improved temporal relation prediction by introducing logical rules that consider multiple relations jointly. For example, if event B occurred after event A and event C occurred after event B, then event C must have occurred after event A. These rules were applied by computing probabilities for the individual relations and then selecting the most likely combination of relations that satisfies them. The classification itself used a perceptron model with an expanded feature set covering lexical, syntactic, semantic, linguistic, and time interval features.
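Selecting the most likely combination of relations that respects transitivity can be illustrated on three events. The sketch assumes a simplified two-label relation set and swaps in brute-force enumeration for the global inference step; practical systems typically use methods such as integer linear programming, since enumeration only scales to a handful of events. The function names and the local probabilities are hypothetical.

```python
from itertools import product

RELATIONS = ("BEFORE", "AFTER")


def consistent(r_ab, r_bc, r_ac):
    """Transitivity over BEFORE/AFTER: A<B and B<C imply A<C (likewise for AFTER)."""
    if r_ab == r_bc:
        return r_ac == r_ab
    return True  # mixed directions leave the A-C relation unconstrained


def best_consistent_assignment(probs):
    """Score every assignment for the pairs AB, BC, AC and keep the best consistent one."""
    best, best_score = None, float("-inf")
    for r_ab, r_bc, r_ac in product(RELATIONS, repeat=3):
        if not consistent(r_ab, r_bc, r_ac):
            continue
        score = probs["AB"][r_ab] * probs["BC"][r_bc] * probs["AC"][r_ac]
        if score > best_score:
            best, best_score = (r_ab, r_bc, r_ac), score
    return best, best_score


# Invented local classifier outputs; taken independently they would pick
# BEFORE, BEFORE, AFTER, which violates transitivity.
probs = {
    "AB": {"BEFORE": 0.9, "AFTER": 0.1},
    "BC": {"BEFORE": 0.8, "AFTER": 0.2},
    "AC": {"BEFORE": 0.4, "AFTER": 0.6},
}
assignment, score = best_consistent_assignment(probs)
```

The joint decision overrides the weakest local prediction: the A–C pair is flipped to BEFORE because the two confident predictions imply it.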

Table 7 provides a comparative overview of these traditional machine learning-based approaches to temporal relation extraction. Although the models were evaluated on different datasets, the commonality among them was the utilization of Support Vector Machines and Maximum Entropy models, reflecting the popularity of these traditional machine learning techniques in the domain of temporal relation extraction.

Table 7.

Systems using hand-crafted features for temporal relation extraction. Values report accuracy for event–event (EE) relations and event–time (ET) relations.

6.2. Temporal Relation Extraction Using Neural Networks

In recent times, the field of temporal relation extraction has witnessed a shift towards the adoption of deep neural network architectures. These neural network-based models have proven effective in capturing complex temporal relationships in text. Table 8 presents a comparison of temporal relation extraction models employing neural networks.

Table 8.

Comparison of temporal relation extraction models on several datasets. The reported F-scores are the ones achieved by the best configuration. Some models only predict the “contains” relation in a dataset and are marked with the contains only column. Some results are split into scores for event–event (EE) relations and event–time (ET) relations.

Early neural network architectures in this context commonly incorporated Long Short-Term Memory (LSTM) layers on top of word embeddings to generate event embeddings, which were then used for relation classification [29,32,33].

Tourille et al. [29] introduced a deep neural network with an LSTM layer. They computed word embeddings by combining manually engineered features, word2vec embeddings, and character-level LSTM embeddings. These embeddings were then fed into a bidirectional LSTM layer. The resulting vectors from this layer were concatenated and used for classification into relation classes. This approach was tested on medical documents from the THYME corpus. Cheng and Miyao [32] adopted a similar approach but with a focus on inter-sentence temporal relation recognition. Instead of applying the LSTM over neighboring tokens, they applied it over tokens located on a syntactic dependency path connecting one event to another. This allowed them to establish connections between events described in different sentences, thereby enhancing inter-sentence temporal relation recognition.

Dligach et al. [30] studied the use of neural networks for temporal relation extraction in more depth. They explored various network architectures and found that neural networks outperformed hand-engineered features. Interestingly, they also found that convolutional neural network (CNN) architectures performed better than LSTM networks. Their experiments were conducted on the THYME dataset and focused primarily on the “contains” relation, which corresponds to Allen’s “during” relation in Figure 4 with the two arguments reversed.
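The correspondence between “contains” and Allen’s “during” can be checked with a small function that assigns one of Allen’s thirteen interval relations to two intervals. This is an illustrative sketch, assuming intervals given as numeric (start, end) pairs with start strictly before end; the function name and example values are hypothetical.

```python
def allen_relation(a, b):
    """Return Allen's interval relation of interval a with respect to interval b.

    Intervals are (start, end) pairs with start < end.
    """
    (a1, a2), (b1, b2) = a, b
    if not (a1 < a2 and b1 < b2):
        raise ValueError("intervals must satisfy start < end")
    if a2 < b1:
        return "before"
    if b2 < a1:
        return "after"
    if a2 == b1:
        return "meets"
    if b2 == a1:
        return "met-by"
    if (a1, a2) == (b1, b2):
        return "equals"
    if a1 == b1:
        return "starts" if a2 < b2 else "started-by"
    if a2 == b2:
        return "finishes" if a1 > b1 else "finished-by"
    if b1 < a1 and a2 < b2:
        return "during"
    if a1 < b1 and b2 < a2:
        return "contains"
    # Remaining case: the intervals partially overlap.
    return "overlaps" if a1 < b1 else "overlapped-by"


hospital_stay = (0, 10)  # invented day indices
surgery = (3, 4)
```

Swapping the arguments turns “contains” into “during”: `allen_relation(hospital_stay, surgery)` gives “contains”, while `allen_relation(surgery, hospital_stay)` gives “during”.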

In addition to LSTM and CNN architectures, researchers have also explored the application of other neural network architectures, such as graph neural networks [140] and attention-based neural networks [141]. These architectures offer diverse approaches to capturing temporal relations in text, contributing to the advancement of the field.

6.3. Use of Pretrained Neural Language Models

The recent breakthroughs in natural language processing have been driven by the widespread use of large pretrained language models. These models, trained on vast amounts of text, leverage their learned knowledge to excel in various language-related tasks, including temporal relation extraction [28,31,34,142].

Lin et al. [31] introduced an early approach to temporal relation extraction using pretrained language models. Their method marks events with special tokens and processes the sequence with a BERT network, and the final classification relies on the embedding of the “[CLS]” token. This model primarily recognized “CONTAINS” and “CONTAINED_BY” relations. The authors experimented with BERT models pretrained on different types of text, and models pretrained on more relevant data yielded better results. Zhou et al. [34] built on this approach by introducing probabilistic soft logic, which incorporates logical rules that must hold between temporal relations. Similar to the work by Ning et al. [28], this significantly improved results over the baseline BERT model. Their evaluation covered the 2012 i2b2 medical dataset and the TimeBank-Dense dataset.
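Marking events with special tokens can be sketched as a simple preprocessing step. The marker strings `<e1>`, `</e1>`, `<e2>`, and `</e2>`, the function name `mark_pair`, and the example sentence are hypothetical; in practice the markers would be added to the tokenizer vocabulary, the sequence would be tokenized into word pieces, and the final “[CLS]” vector would be passed to a relation classifier.

```python
def mark_pair(tokens, first, second):
    """Wrap the tokens at indices `first` and `second` in hypothetical event markers
    and add BERT-style [CLS] and [SEP] tokens."""
    out = ["[CLS]"]
    for i, tok in enumerate(tokens):
        if i == first:
            out += ["<e1>", tok, "</e1>"]
        elif i == second:
            out += ["<e2>", tok, "</e2>"]
        else:
            out.append(tok)
    out.append("[SEP]")
    return out


marked = mark_pair("She was admitted after the fall".split(), 2, 5)
```

The markers let a single sentence encoding represent a specific event pair, so the same sentence can be processed once per candidate pair.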

To go beyond simply classifying temporal relations from BERT embeddings, some researchers have complemented these embeddings with graph neural networks (GNNs), which provide additional information about sentence structure and inter-sentence relations. Zhang et al. [35] used BERT to compute token embeddings and then applied two graph neural networks: one over the events and related tokens, and another over the shortest syntactic path between the events. When tested on the TimeBank-Dense and MATRES datasets, this approach yielded a 5% improvement over using BERT alone. Xu et al. [36] proposed another GNN-based approach to improve relation extraction performance. It incorporates attention mechanisms and outperformed the LSTM-based architecture when evaluated on the TimeBank-Dense dataset. Mathur et al. [37] extended the use of graph neural networks with BERT embeddings to document-level temporal relation extraction. Their approach combines multiple graph neural networks with gated convolutional layers and outperformed baselines on multiple datasets, including MATRES, TimeBank-Dense, and a subset of the TDDiscourse corpus.

6.4. Use of External Knowledge

When humans comprehend natural language, they rely on their shared knowledge to make educated guesses about implicit details not explicitly mentioned in the text. For instance, if a text describes someone as feeling hungry and having lunch, we intuitively understand that the hunger likely preceded the meal and that the meal itself probably took a relatively short amount of time. These inferences are drawn from our common background knowledge and contextual cues.

In contrast, computer models often lack access to this common knowledge and can only extract information explicitly stated in the text. To address this limitation, researchers have proposed methods to integrate general knowledge into computer models [38,39,40,143].

One approach to incorporating general knowledge into models involves compiling statistics about target variables from a large corpus of text. For instance, by observing temporal relations between events in a corpus, we can predict the likelihood of each relation occurring between the observed events. This statistical knowledge aids the model in relation extraction. Ning et al. [38] developed such a statistical resource, containing probabilities for temporal relations between events in the news domain. This resource was constructed using news articles from The New York Times over a 20-year period. Their findings demonstrate that integrating this resource enhances the performance of existing temporal relation extraction systems. In subsequent work, Ning et al. [39] introduced a novel state-of-the-art system for temporal relation extraction that leverages this statistical resource. Their model employs an architecture that applies a Long Short-Term Memory (LSTM) network over contextualized token embeddings. Additional knowledge is incorporated through a common sense encoder, which is concatenated with the event token embeddings. This inclusion of common sense knowledge led to a 3% improvement in results. Han et al. [40] proposed a similar approach for temporal relation classification that also integrates common sense knowledge. Their model begins by computing BERT token embeddings, which are then passed through an LSTM layer. The resulting representation is used to extract events and predict probabilities for their temporal relations. Events are then associated with their types, and probabilities of temporal relations between them are estimated based on corpus statistics. The relation probabilities predicted by the model are combined with those computed from corpus statistics using maximum a posteriori estimation. This approach employs probabilistic constraints instead of rigid constraints.
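The following Python sketch illustrates, in simplified form, how a model's relation distribution can be combined with corpus statistics: the corpus probability acts as a prior, the two are multiplied and renormalized, and the argmax plays the role of a MAP decision. This is a swapped-in simplification, not the exact formulation of Ning et al. or Han et al.; the `weight` parameter, the function name, and all numbers are hypothetical and chosen to mirror the hunger-before-lunch example above.

```python
from typing import Dict


def combine_with_prior(
    model_probs: Dict[str, float],
    prior: Dict[str, float],
    weight: float = 1.0,
) -> Dict[str, float]:
    """Multiply model probabilities by a corpus prior and renormalize.

    `weight` (a hypothetical knob) controls how strongly corpus statistics
    influence the result; weight=0 reduces to the renormalized model output.
    """
    scores = {rel: p * prior.get(rel, 0.0) ** weight for rel, p in model_probs.items()}
    total = sum(scores.values())
    if total == 0.0:
        # The prior rules out every relation the model considers; keep the model.
        return dict(model_probs)
    return {rel: s / total for rel, s in scores.items()}


# Invented numbers: the model slightly prefers AFTER for ("hungry", "lunch"),
# but corpus statistics say hunger usually precedes a meal.
model = {"BEFORE": 0.40, "AFTER": 0.45, "VAGUE": 0.15}
corpus_prior = {"BEFORE": 0.80, "AFTER": 0.10, "VAGUE": 0.10}
posterior = combine_with_prior(model, corpus_prior)
best = max(posterior, key=posterior.get)  # "BEFORE"
```

Because the prior enters as a soft factor rather than a hard rule, a sufficiently confident model prediction can still override the corpus statistics, which matches the idea of probabilistic rather than rigid constraints.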

Some authors also use constraints grounded in domain knowledge for temporal relation extraction. Zhou et al. [34] and Ning et al. [28] applied transitivity between temporal relations to improve their predictions: for example, if event A happens before event B and B happens before C, then A must happen before C. Such constraints introduce domain-specific knowledge into the model even without statistics from a large corpus.
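A minimal Python sketch of the transitivity idea, restricted to a single BEFORE relation: it computes the transitive closure of predicted BEFORE pairs and flags a set of predictions as contradictory when the closure implies that an event precedes itself. The actual systems encode transitivity over many relation types as constraints during inference (integer linear programming in Ning et al., probabilistic soft logic in Zhou et al.), so this post-hoc check and its function names are illustrative assumptions only.

```python
from itertools import product
from typing import Set, Tuple

Pair = Tuple[str, str]  # (earlier event, later event)


def before_closure(pairs: Set[Pair]) -> Set[Pair]:
    """Transitive closure: (a, b) and (b, c) imply (a, c)."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        # Iterate over a snapshot so the set can grow safely inside the loop.
        for (a, b), (c, d) in product(list(closure), repeat=2):
            if b == c and (a, d) not in closure:
                closure.add((a, d))
                changed = True
    return closure


def is_consistent(pairs: Set[Pair]) -> bool:
    """BEFORE predictions are contradictory if they imply x BEFORE x."""
    return all(a != b for a, b in before_closure(pairs))


predicted = {("hungry", "lunch"), ("lunch", "nap")}
implied = before_closure(predicted)  # adds ("hungry", "nap")
```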

6.5. Large Language Models

With the rise of large language models like ChatGPT and GPT-4 [144], which have demonstrated their effectiveness across various tasks, researchers have explored the possibility of using such general models for classification tasks like temporal relation extraction.

The primary advantage of these approaches is that they enable zero-shot classification [138,139,145]: the models can extract temporal relations without any task-specific training data containing examples of temporal relations. Researchers have investigated adapting such models through fine-tuning or prompt design to make extraction more reliable. However, the prediction accuracy of these general models tends to be considerably lower than that of purpose-built models [138,139].

To improve the performance of these models, researchers have designed prompts that explain the question setting and the relevant causal relations [139]. Yuan et al. [138] went a step further by introducing "chain of thought" prompts, in which the model is asked a series of questions about the timing of the events. Despite these improvements, the reported performance remains significantly lower than that of some specialized architectures for temporal relation extraction.
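The following Python sketch contrasts a single zero-shot prompt with a chain of timing questions and shows how a free-form answer might be mapped back to a relation label. The prompt wording, the MATRES-style label set, and all function names are hypothetical and are not the prompts used by Yuan et al. or the other cited works; sending the strings to a model such as ChatGPT is omitted because client libraries differ.

```python
from typing import List

# MATRES-style label set; other datasets use different labels.
LABELS = ("BEFORE", "AFTER", "EQUAL", "VAGUE")


def zero_shot_prompt(context: str, e1: str, e2: str) -> str:
    """A single question asking directly for a relation label."""
    return (
        f"Context: {context}\n"
        f"Question: What is the temporal relation between the event '{e1}' "
        f"and the event '{e2}'? Answer with one of: {', '.join(LABELS)}.\n"
        "Answer:"
    )


def chain_prompts(context: str, e1: str, e2: str) -> List[str]:
    """Timing questions asked in sequence, ending with the label question."""
    return [
        f"Context: {context}\nQuestion: When does the event '{e1}' happen?",
        f"Context: {context}\nQuestion: When does the event '{e2}' happen?",
        f"Context: {context}\nQuestion: Does '{e1}' start before '{e2}' starts?",
        zero_shot_prompt(context, e1, e2),
    ]


def parse_label(answer: str) -> str:
    """Map free-form output to the first label it mentions, else VAGUE."""
    upper = answer.upper()
    hits = [(upper.find(label), label) for label in LABELS if label in upper]
    return min(hits)[1] if hits else "VAGUE"
```

In a chain-of-thought setup, the model's answers to the earlier questions would typically be appended to the conversation before the final label question is asked.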

6.6. Discussion

Temporal relations are the most commonly extracted temporal features of events in the papers that we analyzed. In order to compare the systems for temporal relation extraction, we present them in two separate tables. Table 7 compares systems using traditional machine learning models, and Table 8 compares systems using neural network architectures. Since the models using traditional machine learning use different datasets, we cannot make meaningful comparisons of their performance. Recent research suggests that the use of language models like BERT and graph neural networks provides the best results [35,36,37].

We also believe that common knowledge resources could substantially benefit temporal relation extraction. Ning et al. [39] and Han et al. [40] showed significant improvements when using statistical resources to complement extracted relations. We believe that the use of common sense knowledge in temporal relation extraction still holds much unexplored potential. Research in other areas of natural language processing has shown that integrating common sense knowledge is an effective way of improving model performance, especially when little training data is available. Liu et al. [146] demonstrated the improvements that additional domain knowledge brings on a variety of natural language tasks.

7. Timeline and Knowledge Graph Construction

Once information about events and their temporal attributes is extracted from text, the next step is to represent this information in a machine-readable format. A common representation for knowledge extracted from plain text documents is a knowledge graph [1]. Most existing knowledge graphs are centered around entities. In such graphs, nodes represent entities, and edges represent relations between them. However, we can also use knowledge graphs to represent event information, leading to event-centric knowledge graphs.
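To illustrate the difference from an entity-centric graph, the following minimal Python sketch stores events as nodes (with an optional start date and participating entities as attributes) and restricts edges to event-to-event relations, then flattens everything into (subject, predicate, object) triples. The class names, attribute keys, and relation labels such as BEFORE are hypothetical and do not reproduce the schema of EventKG or any other resource.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]


@dataclass
class Event:
    event_id: str
    trigger: str                      # the word or phrase that evokes the event
    start: Optional[str] = None       # ISO 8601 date, if one could be extracted
    participants: List[str] = field(default_factory=list)


@dataclass
class EventGraph:
    events: Dict[str, Event] = field(default_factory=dict)
    edges: List[Triple] = field(default_factory=list)  # (head event, relation, tail event)

    def add_event(self, event: Event) -> None:
        self.events[event.event_id] = event

    def relate(self, head: str, relation: str, tail: str) -> None:
        # Edges connect events, not entities, so both endpoints must be known events.
        if head not in self.events or tail not in self.events:
            raise KeyError(f"unknown event in ({head}, {relation}, {tail})")
        self.edges.append((head, relation, tail))

    def triples(self) -> List[Triple]:
        """Flatten the graph into (subject, predicate, object) triples."""
        out: List[Triple] = []
        for ev in self.events.values():
            out.append((ev.event_id, "trigger", ev.trigger))
            if ev.start is not None:
                out.append((ev.event_id, "start", ev.start))
            out.extend((ev.event_id, "participant", p) for p in ev.participants)
        out.extend(self.edges)
        return out


g = EventGraph()
g.add_event(Event("e1", "admitted", "2020-03-01", ["patient"]))
g.add_event(Event("e2", "surgery", participants=["patient", "surgeon"]))
g.relate("e1", "BEFORE", "e2")
```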

Event-centric knowledge graphs, like EventKG constructed by Gottschalk [147], focus on events as the central entities. EventKG was created by extracting events from various sources, including Wikidata [2], DBpedia [1], YAGO [3], Wikipedia Event Lists, and the Wikipedia Current Events Portal. It comprises 322,669 events with 88,473,111 relations, including information about the time and location of events when available.

In this survey, we explore the automatic generation of event-centric knowledge graphs from unstructured documents. Automatically constructing such graphs can have various applications, including event information analysis and using the extracted knowledge graphs as sources of external knowledge as discussed in Section 6.4. To automatically create event-centric knowledge graphs, we need to integrate the methods for information extraction described earlier with techniques for disambiguation and constructing knowledge graphs.

7.1. Constructing Temporal Knowledge Graphs

Adding temporal information to a knowledge graph to create a temporal knowledge graph has gained significant attention in recent years [148]. One example of automatically building an event-centric temporal knowledge graph was presented by Rospocher et al. [46]. They developed a comprehensive pipeline of existing natural language processing tools capable of extracting information from plain text news articles to construct a knowledge graph containing events and their associated attributes. Another system for constructing event-centric knowledge graphs was introduced by Song [149]. This system crawls news articles from the web to extract credit events and represent them as nodes and their causal relations as edges in a knowledge graph. The system detects events and their causal relations based on syntactic dependencies between words. These methods rely on traditional techniques for extracting events and temporal relations and could potentially be enhanced with the integration of deep learning methods.

Similar approaches have been employed to construct knowledge graphs such as the Global Database of Events, Language, and Tone (GDELT) [150] and the Integrated Crisis Early Warning System (ICEWS) [151]. These knowledge graphs contain temporal information about events sourced from news articles, government reports, and other publicly available documents. The methods used in these cases rely on statistical natural language processing techniques, such as Latent Dirichlet Allocation (LDA). EventKG [147] is another example of a temporal knowledge graph. EventKG is constructed from existing structured data sources, including DBpedia, Wikidata, YAGO, and Wikipedia. Unlike the previously mentioned systems, EventKG does not work directly with natural language text but starts with structured data. The construction of EventKG involves several steps, including identifying events, extracting relations, integrating events and entities, and fusing the temporal and spatial information associated with the events.

7.2. Estimating Event Timelines

Representing temporal information about events as timelines can make it more intuitive for humans to understand. Timelines capture the sequence of events and their durations, which makes them easy to comprehend. However, while timelines are beneficial for human interpretation, they cannot record as much additional extracted information as knowledge graphs can. Timelines can be categorized as relative or absolute. Relative timelines capture the sequence of events without specifying their actual time points, relying on temporal relations between events. In contrast, absolute timelines record the actual times of events, which makes it possible to combine multiple timelines. Absolute timelines are more informative but require more extensive temporal information.

Leeuwenberg and Moens [33] proposed an architecture that constructs relative event timelines directly. Instead of predicting temporal relations, they predict relative times, from which a timeline can be built directly. Their model predicts relative start and end times for events, where greater values indicate later times. The architecture employs two Bidirectional Long Short-Term Memory (Bi-LSTM) layers, one for predicting event start times and another for predicting event durations. These predictions are combined with token embeddings of event and time expressions. The model is evaluated on the THYME medical corpus by checking whether the predicted time points agree with the annotated temporal relations.

Similarly, Vashishtha et al. [42] constructed a dataset containing the relative timeline positions of events in a document. Their model takes pairs of events within a sentence and positions them relative to each other on a timeline. These relative timelines are then combined into a single document timeline. The model also predicts a duration class (e.g., seconds, minutes, hours, or days) for each event in the timeline.
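The evaluation idea behind predicting relative time points can be sketched in Python as follows: each event's end is derived as start plus duration, a coarse relation is read off the two intervals, and the fraction of annotated relations reproduced by the timeline is reported. The relation rules, the tie handling, the function names, and the hand-written start and duration values (standing in for the outputs of the two Bi-LSTM layers) are assumptions for illustration, not the exact procedure of Leeuwenberg and Moens.

```python
from typing import Dict, Tuple

Interval = Tuple[float, float]  # (start, end) on a relative scale; larger = later


def relation_from_times(a: Interval, b: Interval) -> str:
    """Derive a coarse relation label for event a with respect to event b."""
    a_start, a_end = a
    b_start, b_end = b
    if a_end < b_start:
        return "BEFORE"
    if b_end < a_start:
        return "AFTER"
    if a_start <= b_start and b_end <= a_end:
        return "CONTAINS"  # identical intervals also land here
    if b_start <= a_start and a_end <= b_end:
        return "CONTAINED_BY"
    return "OVERLAP"


def build_timeline(starts: Dict[str, float], durations: Dict[str, float]) -> Dict[str, Interval]:
    """End = start + duration; negative durations are clipped to zero."""
    return {e: (s, s + max(durations[e], 0.0)) for e, s in starts.items()}


def agreement(timeline: Dict[str, Interval], gold: Dict[Tuple[str, str], str]) -> float:
    """Fraction of annotated relations that the predicted time points reproduce."""
    hits = sum(
        relation_from_times(timeline[a], timeline[b]) == label
        for (a, b), label in gold.items()
    )
    return hits / len(gold)


# Hand-written stand-ins for the predicted start times and durations.
starts = {"admission": 0.0, "surgery": 1.0, "stay": 0.0}
durations = {"admission": 0.5, "surgery": 1.0, "stay": 5.0}
timeline = build_timeline(starts, durations)
gold = {
    ("admission", "surgery"): "BEFORE",
    ("stay", "surgery"): "CONTAINS",
    ("surgery", "admission"): "BEFORE",  # contradicted by the prediction
}
score = agreement(timeline, gold)  # 2 of 3 relations agree
```

Predicting durations rather than end points directly guarantees that every event ends no earlier than it starts, since durations are clipped at zero.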
In 2020, Leeuwenberg and Moens [41] extended their work to extract absolute event times and durations. They annotated the i2b2 medical corpus with event start times, end times, and durations. The model for predicting absolute event times processes ELMo word embeddings with an LSTM or a convolutional layer, and a multi-layer perceptron generates the predictions. For predicting event durations, the model considers only the event and its surrounding context. The predicted values represent the absolute time of the event and its duration.

7.3. Estimating Event Duration

Predicting the duration of events is a crucial aspect of temporal information extraction. Approaches to this task range from binary classification to fine-grained classification and continuous-value prediction. Pan et al. [43] annotated events in news articles from the TimeBank corpus with their durations. They designed a binary classification task to predict whether events are short (less than a day) or long (more than a day), using hand-crafted features, including event properties, bag-of-words vectors, the subject and object of the events, and WordNet hypernyms. A Support Vector Machine (SVM) achieved the best results on this binary task. Gusev et al. [44] extended Pan et al.’s work by adding annotations to the dataset and predicting multiple duration classes, such as seconds, minutes, and hours. They employed a similar set of features, including event attributes, named entity classes, typed dependencies, and whether an event is a reporting verb. They evaluated several machine learning models, including Naive Bayes, Logistic Regression, Maximum Entropy, and SVM, with Maximum Entropy achieving the best performance. Gusev et al. also proposed an unsupervised, pattern-based model for extracting event durations. Vempala et al. [45] introduced a neural network-based approach for event duration estimation. Their model runs three Long Short-Term Memory (LSTM) networks over the word embeddings of a sentence: one over the entire sentence, one over the words before the event, and one over the words after the event. The resulting vectors, combined with the event’s embedding, are classified with a softmax layer. Although they improved on previous binary classification models, their approach still predicted event duration as a binary output.

Several models designed for event timeline construction also incorporate event duration prediction, which is crucial for the timeline sequencing task [33,41,42]. Vashishtha et al. [42] performed fine-grained classification of event durations, classifying events into categories such as seconds, minutes, hours, days, weeks, months, years, decades, centuries, and forever.
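To show how a duration expressed in seconds relates to the two labeling schemes discussed above, the following Python sketch maps it to the fine-grained classes listed by Vashishtha et al. and to the binary short/long split of Pan et al. The bucketing rule (largest unit whose lower bound the duration reaches), the 30-day month and 365-day year approximations, and the function names are assumptions for illustration, not the annotation guidelines of either dataset.

```python
import math
from typing import List, Tuple

DAY = 86_400.0  # seconds

# Lower bound of each class, in seconds; months and years are approximated.
_CLASSES: List[Tuple[str, float]] = [
    ("seconds", 0.0),
    ("minutes", 60.0),
    ("hours", 3_600.0),
    ("days", DAY),
    ("weeks", 7 * DAY),
    ("months", 30 * DAY),
    ("years", 365 * DAY),
    ("decades", 10 * 365 * DAY),
    ("centuries", 100 * 365 * DAY),
]


def duration_class(seconds: float) -> str:
    """Return the largest unit whose lower bound the duration reaches."""
    if math.isinf(seconds):
        return "forever"
    label = _CLASSES[0][0]
    for name, lower in _CLASSES:
        if seconds >= lower:
            label = name
    return label


def is_long(seconds: float) -> bool:
    """Binary split at one day: short is less than a day, long otherwise."""
    return seconds >= DAY
```

For example, a 90-minute surgery (5,400 seconds) falls into the "hours" class and counts as short under the binary scheme.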

7.4. Discussion