Abstract

Breast cancer (BC) detection and classification are critical tasks in medical diagnostics. Precise and early detection of BC can greatly improve patients' lives. This study proposes a novel approach to BC detection that combines deep learning models with sophisticated image processing techniques to address the shortcomings of existing methods. The BC dataset was pre-processed using histogram equalization and adaptive filtering. Data augmentation was performed using cycle-consistent GANs (CycleGANs). Handcrafted features such as Haralick features, Gabor filters, contour-based features, and morphological features were extracted, along with features from the VGG16 deep learning architecture. We then employed a hybrid optimization model that combines the Sparrow Search Algorithm (SSA) and the Red Deer Algorithm (RDA), called Hybrid Red Deer with Sparrow optimization (HRDSO), to select the most informative subset of features. For detecting BC, we proposed a new DenseXtNet architecture that combines DenseNet with a ResNeXt optimized using HRDSO. The proposed model was evaluated using various performance metrics and compared with existing methods, achieving an accuracy of 97.58% in BC detection. MATLAB was used for implementation and evaluation.

Keywords:

BC detection; image processing techniques; histogram equalization; adaptive filtering; Haralick features; Gabor filters; contour-based features

MSC:

68U10; 68T99

1. Introduction

Cancer is thought to arise from the continuous growth and spread of abnormal cells in the human body. Because breast tissue cells can develop so quickly, women may be diagnosed with breast cancer (BC). Depending on the region, size, and location of a mass, Breast Imaging Reporting and Data System (BI-RADS) values describe whether it is likely to be benign or malignant [1,2,3,4]. Breast tumors can be either benign or malignant. A benign tumor is one whose abnormal cells are contained and have not spread to the surrounding tissue, whereas a malignant tumor is cancerous and can spread to form secondary tumors. In contrast to a malignant tumor, which can spread diseased cells throughout the body via the circulatory system and can result in death, a benign tumor is more easily treated and is less lethal. Malignant tumors are the deadliest type, and the majority of women diagnosed with them do not survive. Malignant breast tumors are the more common of the two types.

Breast images distinguish between cases with and without tumors [5]. Breast tumors can appear in diverse areas of the breast tissue, and the images reflect this variety. Because tumors can manifest in any area of the breast, locating them is difficult in medical imaging. The three essential components of the breast are the connective tissue, ducts, and lobules. Most BCs begin in the ducts or lobules. Early BC identification is therefore essential for raising patient survival rates. Researchers are creating ever more accurate cancer identification algorithms in response to the high morbidity and significant cost burden of disease-related healthcare. The two most common methods for identifying BC are mammography and biopsy [6,7]. In mammography, radiologists examine X-ray images of the breast to search for early signs of cancer. According to studies, mammography has helped reduce BC mortality. A biopsy is another accurate diagnostic technique for detecting BC [8,9]. The key challenges with BC images are the automatic localization and detection of cancer cells, because of the variability in their size, shape, and location [10].

Bilateral craniocaudal (CC) [11] and mediolateral oblique (MLO) [12] views of each breast are obtained during mammography [13], which is comparable to a chest X-ray except that the breast is compressed between two plates. However, given the complexity of mammograms and the large number of exams each radiologist reads, mistakes can still happen [14]. Although many researchers have worked hard to build networks that reliably identify breast cancer [15], much more study is still needed on the false positive rate (FPR), false negative rate (FNR), and Matthews correlation coefficient (MCC). MCC gives a high score only to predictions that perform well in all four cells of the confusion matrix. Because MCC is a more trustworthy performance measure than binary classification accuracy, it should be improved by lowering the FPR and FNR. The study's main contributions are as follows:

- To select the features from the extracted features effectively, the hybrid Red Deer with Sparrow optimization (HRDSO), which is the combination of Sparrow Search Algorithm (SSA) and Red Deer Algorithm (RDA), was introduced. This can be used to obtain a balance between both the exploration and exploitation phase of the optimization.

- To accurately detect the BC from the input image, the DenseNet model was connected in between the convolution and global averaging layers of the ResNet; this model is named DenseXtNet.

The rest of the paper is organized as follows: Section 2 presents a detailed literature review, and Section 3 gives a detailed description of the proposed model for BC detection and classification using transfer learning (TL) approaches. Section 4 contains a comparison of the experimental findings with actual data. Section 5 presents the conclusion.

2. Literature Review

This section discusses the most current papers on BC that used mammography scans.

Hekal et al. [16] introduced a CAD system that identifies tumor-like regions (TLRs) via automated Otsu thresholding, processed them through deep CNNs for feature extraction, and employed a support vector machine for accurate classification of benign and malignant mammogram structures. Ragab et al. [17] explored deep CNNs for breast cancer lesion classification, conducting experiments involving pre-trained CNNs, SVM classification using deep features, feature fusion, and PCA. Their results indicated the efficacy of feature fusion. Khan et al. [18] addressed breast cancer classification in cytology images through transfer learning, leveraging pre-trained CNNs to extract features for precise classification. Lbachir et al. [19] focused on a comprehensive CAD system for mass identification, employing preprocessing, segmentation, and classification stages to aid radiologists in diagnosing breast abnormalities. Patil and Biradar [20] aimed to enhance mammogram breast detection with an optimized hybrid classifier, combining image preprocessing, tumor segmentation, feature extraction, and classification using CNN and RNN, achieving improved diagnostic accuracy. These studies collectively underscore the significant potential of deep learning in improving breast cancer detection and classification methodologies.

Suh et al. [21] employed artificial intelligence to automate breast cancer detection in digital mammograms across varying densities. Using two convolutional neural networks, DenseNet-169 and EfficientNet-B5, they achieved strong performance, with AUCs of 0.952 and 0.954, respectively. Superior sensitivity and specificity of DenseNet-169 were evident, highlighting its potential for diverse-density mammograms. Sasaki et al. [22] evaluated breast cancer detection using human readers and the AI-based Transpara system. Human readers outperformed Transpara, which exhibited sensitivity and specificity variations. However, AI advancements are anticipated to bridge this performance gap. Maqsood et al. [23] introduced an efficient deep learning system for breast cancer recognition in mammogram screening. This approach incorporates contrast enhancement, a transferable texture CNN (TTCNN), and convolutional sparse image decomposition for fusion. Altameem et al. [24] leveraged deep learning for breast cancer detection in mammograms. Their innovative fuzzy ensemble technique, particularly the Inception V4 ensemble model, attained remarkable 99.32% accuracy, suggesting significant potential in enhancing early diagnosis and patient outcomes.

Abunasser et al. [25] proposed using the Xception model to detect and categorize breast cancer, highlighting the usefulness of deep learning techniques for this task. Dafni Rose et al. [26] presented a computer-aided diagnosis technique for breast cancer that uses the MobileNet model and optimal region growing segmentation for increased accuracy. Mohiyuddin et al. [27] proposed an approach for breast tumor detection and classification in mammography images that highlights the potential of a modified YOLOv5. Ibrokhimov and Kang [28] designed a two-stage deep learning technique for breast cancer detection using high-resolution mammography images, which raised the possibility of increased diagnostic precision. Ragab et al. [29] introduced an ensemble deep learning-enabled clinical decision support system for breast cancer diagnosis and classification using ultrasound images, a promising method for improving the accuracy of breast cancer detection.

The literature review highlights several research gaps in BC detection and classification that the proposed model addresses. Published studies have mostly focused on applying deep learning models to identify breast cancer, leaving room for improvement in the incorporation of advanced image processing methods. The proposed model narrows this gap by including histogram equalization, adaptive filtering, and data augmentation through CycleGANs. These pre-processing steps improve the quality of the breast cancer dataset and thereby strengthen the model's robustness. The literature also shows a lack of precise feature extraction and selection methods in earlier work that relied on deep learning models. The proposed approach addresses this with a hybrid optimization model, HRDSO, which combines the Red Deer and Sparrow Search algorithms for feature selection. By determining the most informative subset of features, this hybrid technique aims to improve model performance while lowering computational overhead. Whereas past studies have tended to rely on well-established deep learning architectures, our study introduces the DenseXtNet architecture, which combines an optimized ResNeXt with DenseNet and is tuned with the HRDSO model. Finally, in line with the literature's call for rigorous performance evaluation, we compare the proposed model with existing approaches using a variety of performance metrics; it reaches an accuracy of 97.58% in breast cancer detection. By combining image processing methods, careful feature selection, a new architecture, and extensive performance evaluation in MATLAB, this research has the potential to improve breast cancer diagnosis. The proposed method is discussed in detail in the subsequent sections.

3. Proposed Methodology

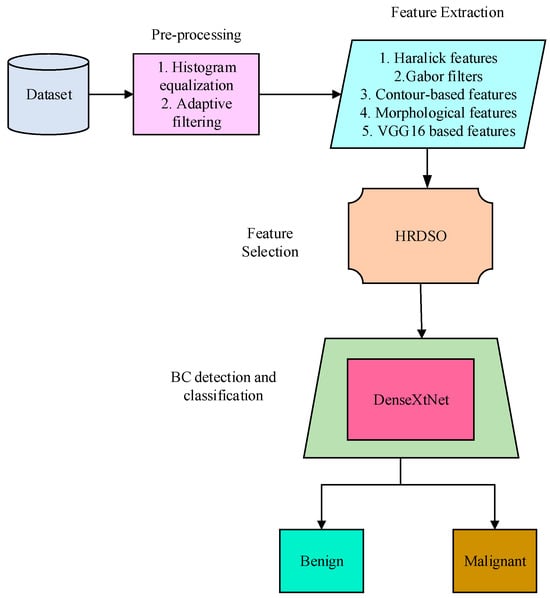

Mammography is an imaging technique whose images (mammograms) are used to diagnose BC. The block diagram of the proposed BC detection model is presented in Figure 1. The proposed method takes the acquired mammogram as input. Mammograms are affected by salt-and-pepper noise at the moment of acquisition, and this noise is reduced in the pre-processing stage. The required features are extracted from the pre-processed image and used to evaluate the presence of BC. The hybrid optimization model selects the most relevant features, and the final detection and classification of BC is performed by the newly introduced DenseXtNet.

Figure 1.

Block diagram of the proposed BC detection model.

3.1. Pre-Processing

3.1.1. Histogram Equalization

Pre-processing methods, such as histogram equalization and adaptive filtering, are used after the acquisition of the BC dataset. Low contrast photographs can have their contrast reduced by a procedure called histogram equalization. When we state that there is little change in brightness between the image’s highest and lowest values, we are referring to the image contrast. The contrast of the input image is increased as much as feasible during histogram equalization. Consider an input picture as a matrix of integer pixel intensities spaced in the range . represents the potential intensity quantity and can be either 256 (for unit 8 class) or 1, depending on the type of class. describes the image’s normalized histogram for the potential intensities:

where is the total number of pixels with intensity and is the total number of pixels, which is . The histogram equalization is shown below based on the previous definition:

where indicates to round the number to the next nearest integer. This method is based on the expression and , which are continuous random variables, and that and ’s intensities are in the range . A presentation of is

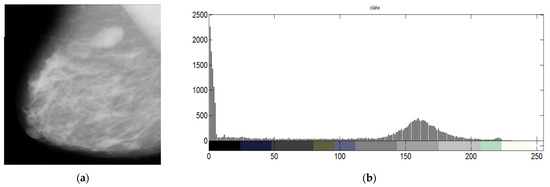

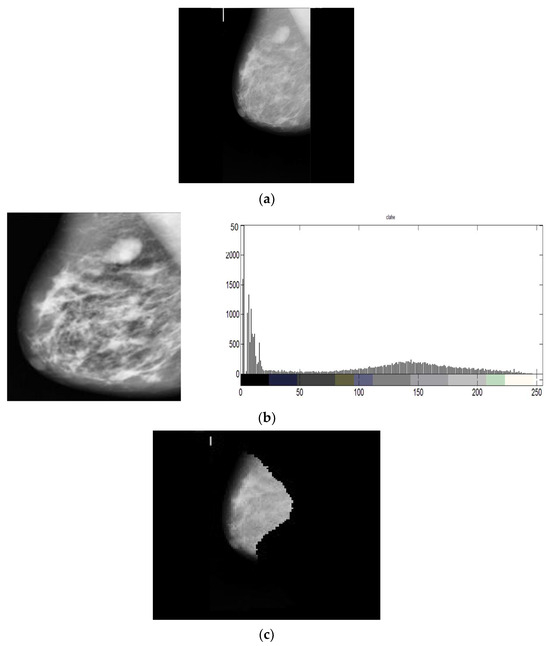

where the cumulative distributive function of times () is denoted by the letter , and denotes the histogram of . is seen as being invertible and differentiable. An example of the mammographic images and its corresponding histogram is shown in Figure 2. The different colors in the histogram represent different ranges of pixel intensities. The x-axis of the histogram shows the pixel intensity, and the y-axis shows the number of pixels with that intensity.

Figure 2.

(a) mammographic image and (b) its histogram.
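The discrete form of histogram equalization described in Section 3.1.1 can be illustrated with a minimal pure-Python sketch. It is not the authors' MATLAB implementation; the function name `equalize_histogram`, the list-of-rows image representation, and the tiny sample image are assumptions made for illustration, and the mapping rounds down.

```python
def equalize_histogram(image, levels=256):
    """Equalize a grayscale image given as rows of ints in [0, levels - 1]."""
    total = sum(len(row) for row in image)

    # Histogram: number of pixels at each intensity.
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1

    # Cumulative histogram scaled to [0, levels - 1], rounded down.
    # Integer arithmetic keeps the floor exact.
    transform = []
    cumulative = 0
    for count in hist:
        cumulative += count
        transform.append((levels - 1) * cumulative // total)

    return [[transform[value] for value in row] for row in image]


# Hypothetical low-contrast patch: all intensities lie between 100 and 103.
low_contrast = [[100, 100, 101],
                [101, 102, 102],
                [103, 103, 103]]
equalized = equalize_histogram(low_contrast)
```

In this toy patch the four neighboring gray levels are spread over most of the 8-bit range, which is the contrast stretch the section describes.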

3.1.2. Adaptive Filtering

Adaptive filters can be applied to image processing tasks to enhance image quality, reduce noise, and improve image restoration. Our method combines adaptive filtering with histogram equalization, a technique also known as adaptive histogram equalization. The original image is divided into numerous non-overlapping sub-images, and the histogram of each sub-image is clipped, which limits the amount of enhancement applied to each individual pixel. The main goal is to emphasize the small details already present in the image and make them stand out against the background. Equalizing the local histograms of the sub-images balances the foreground and background features, which is needed to produce high-contrast output images. As a result, overall image quality improves substantially, and fine details become more noticeable and easier to distinguish. This matters in applications such as medical imaging, where image sharpness and clarity are important for precise diagnosis and analysis, so adaptive filtering with histogram equalization is an important tool in our methodology for image enhancement and analysis.
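The tile-wise procedure described above can be sketched as follows. This is a simplified illustration, not the authors' implementation: full contrast-limited adaptive histogram equalization usually also interpolates between neighboring tiles, which this sketch omits. The function names, the `clip_limit` parameter, and the sample image are assumptions.

```python
def clipped_equalize_tile(tile, levels=256, clip_limit=4):
    """Equalize one tile after clipping its histogram at clip_limit per bin."""
    hist = [0] * levels
    for row in tile:
        for value in row:
            hist[value] += 1

    # Clip each bin and spread the clipped excess evenly over all bins,
    # which limits how strongly any single intensity is amplified.
    excess = sum(max(0, h - clip_limit) for h in hist)
    hist = [min(h, clip_limit) + excess / levels for h in hist]

    total = sum(hist)
    mapping, cumulative = [], 0.0
    for h in hist:
        cumulative += h
        mapping.append(min(levels - 1, int((levels - 1) * cumulative / total)))
    return [[mapping[value] for value in row] for row in tile]


def tilewise_equalize(image, tile_size, levels=256, clip_limit=4):
    """Apply clipped equalization independently to non-overlapping tiles."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            tile = [row[left:left + tile_size]
                    for row in image[top:top + tile_size]]
            result = clipped_equalize_tile(tile, levels, clip_limit)
            for dy, row in enumerate(result):
                out[top + dy][left:left + len(row)] = row
    return out


# Hypothetical 4 x 4 image processed as four 2 x 2 tiles.
sample = [[10, 10, 200, 201],
          [11, 12, 202, 203],
          [50, 60, 90, 91],
          [55, 65, 92, 93]]
enhanced = tilewise_equalize(sample, tile_size=2, clip_limit=1)
```

Because each tile is processed independently, visible tile borders can appear in real images; interpolating the per-tile mappings is the usual remedy.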

3.2. Data Augmentation via Cycle-Consistent GANs (CycleGANs)

Cycle-consistent GANs (CycleGANs) are used to apply sophisticated data augmentation to the pre-processed images. The CycleGAN approach trains two GANs simultaneously, each consisting of a generator and a discriminator. The inputs to the two GANs are two unpaired images.

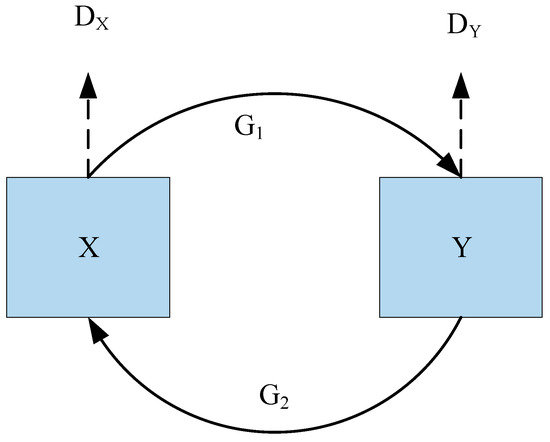

Given two image collections $X$ and $Y$, CycleGAN learns how the two sets map to one another, $G: X \rightarrow Y$ and $F: Y \rightarrow X$. As shown in Figure 3, CycleGAN is made up of two generators, $G$ and $F$, as well as two discriminators, $D_X$ and $D_Y$. $D_X$ and $D_Y$ attempt to discriminate between generated samples and real samples, whereas $G$ attempts to produce images $G(x)$ that are indistinguishable from images in $Y$.

Figure 3.

Architecture of CycleGAN.
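For reference, the objective commonly used to train a CycleGAN can be written as below. This is the standard formulation from the general CycleGAN literature rather than an equation recovered from this paper, so the notation is an assumption: $G: X \rightarrow Y$ and $F: Y \rightarrow X$ are the two generators, $D_X$ and $D_Y$ the two discriminators, and $\lambda$ a weight on the cycle-consistency term.

```latex
\begin{aligned}
\mathcal{L}_{GAN}(G, D_Y, X, Y) &= \mathbb{E}_{y \sim p_{data}(y)}\big[\log D_Y(y)\big]
  + \mathbb{E}_{x \sim p_{data}(x)}\big[\log\big(1 - D_Y(G(x))\big)\big] \\
\mathcal{L}_{cyc}(G, F) &= \mathbb{E}_{x \sim p_{data}(x)}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y \sim p_{data}(y)}\big[\lVert G(F(y)) - y \rVert_1\big] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{GAN}(G, D_Y, X, Y)
  + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{cyc}(G, F)
\end{aligned}
```

The cycle-consistency term is what allows training on unpaired images: an image translated to the other domain and back should return close to where it started.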

Both the generators and the discriminators are built from neural networks. The generator contains three convolutional layers for extracting features from the input images, nine residual blocks that convert feature vectors from the source domain to the target domain, two deconvolution layers, three convolution layers for matching the spatial distribution of the target domain, and one convolution layer for recovering low-level features from the feature vectors. The discriminator uses five convolutional layers, the last of which provides a 1-dimensional output that indicates whether the input image is real or fake.

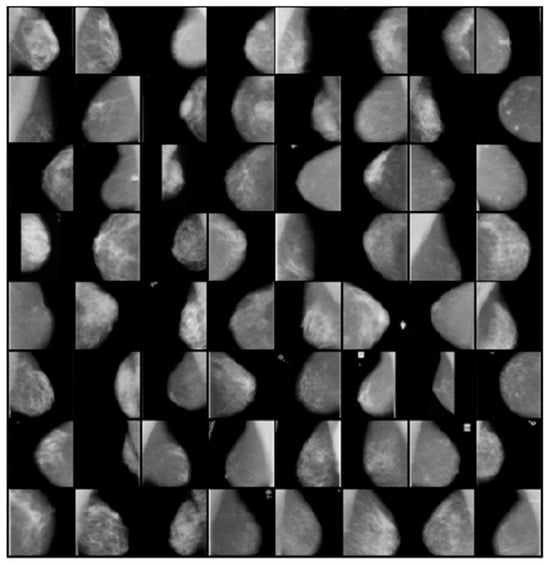

Using CycleGANs, we have created a collection of enhanced photos that are shown in Figure 4. These enhanced photos are a key component of our strategy for this investigation. We can efficiently enrich and diversify the dataset thanks to CycleGANs, which enhances model performance.

Figure 4.

Samples of Augmented Images Using CycleGANs for Diverse Breast Cancer Detection Scenarios.

3.3. Feature Extraction

During the feature extraction phase, a combination of handcrafted and deep learning-based features is extracted. Deep features from the VGG16 architecture are computed alongside conventional features, including Haralick features, Gabor filters, contour-based features, and morphological features.

3.3.1. Haralick Features

Haralick features describe the relationship between the intensities of neighboring pixels very effectively. Haralick proposed fourteen textural measures derived from a co-occurrence matrix, which captures how the intensity at a given pixel relates to the intensities of its neighbors. For an image with $N$ intensity levels, the Gray-Level Co-occurrence Matrix (GLCM) is an $N \times N$ matrix. A GLCM is built for each image, and the statistical features are computed from the normalized GLCM, $C_n$. These features quantify important aspects of the spatial and local information in the image. Although all of them may be relevant in various texture studies, five stood out as particularly promising after several experimental combinations; they are presented in Equations (7)–(11) in this study.

Homogeneity: Specifies how close the distribution of GLCM elements is to the GLCM diagonal.

Entropy: A measure of how disordered or unpredictable an image is.

Energy: Derived from the Angular Second Moment, which measures the local uniformity of the gray levels.

Correlation: A property of the co-occurrence matrix that shows the linear dependence of gray-level values,

where $\mu_x$, $\mu_y$ and $\sigma_x$, $\sigma_y$ denote the means and standard deviations, respectively, of the co-occurrence matrix $C_n$ summed along the horizontal and vertical directions.

Contrast: A measure of the gray-level intensity variation between neighboring pixels, sometimes referred to as standard deviation.
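The five features named above can be computed from a normalized GLCM as in the following sketch. The formulas used are the common textbook definitions and may differ in detail from the authors' Equations (7)–(11); for example, homogeneity is taken here with a $1 + (i - j)^2$ denominator. The function name, the single horizontal offset, and the sample images are assumptions.

```python
import math


def glcm_features(image, levels):
    """Five texture features from a symmetric, horizontal-neighbor GLCM."""
    # Count co-occurrences of gray levels for horizontally adjacent pixels.
    counts = [[0] * levels for _ in range(levels)]
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[left][right] += 1
            counts[right][left] += 1  # make the matrix symmetric
    total = sum(map(sum, counts))
    cells = [(i, j, counts[i][j] / total)  # normalized GLCM entries
             for i in range(levels) for j in range(levels)]

    mu_x = sum(i * p for i, _, p in cells)
    mu_y = sum(j * p for _, j, p in cells)
    sd_x = math.sqrt(sum((i - mu_x) ** 2 * p for i, _, p in cells))
    sd_y = math.sqrt(sum((j - mu_y) ** 2 * p for _, j, p in cells))

    features = {
        "homogeneity": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "entropy": -sum(p * math.log2(p) for _, _, p in cells if p > 0),
        "energy": sum(p * p for _, _, p in cells),
        "contrast": sum((i - j) ** 2 * p for i, j, p in cells),
    }
    # Correlation is undefined for a constant image (zero variance);
    # this sketch reports 0.0 in that case.
    if sd_x > 0 and sd_y > 0:
        covariance = sum((i - mu_x) * (j - mu_y) * p for i, j, p in cells)
        features["correlation"] = covariance / (sd_x * sd_y)
    else:
        features["correlation"] = 0.0
    return features


flat = glcm_features([[1, 1, 1], [1, 1, 1]], levels=4)
stripes = glcm_features([[0, 3, 0, 3], [0, 3, 0, 3]], levels=4)
```

A uniform patch gives maximal energy and zero contrast, while the alternating stripes give high contrast and a strongly negative correlation, which matches the verbal descriptions of the features.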

3.3.2. Gabor Filters

Gabor filters can be used to examine and represent the spatial frequency content of an image. They are frequently employed for edge detection, texture analysis, feature extraction, and image segmentation, among other tasks. Gabor filters are particularly good at capturing localized frequency information in tasks that require simultaneous analysis of frequency and orientation content. The Gabor filter kernel function is shown in Equation (12),

$$g(x, y; \lambda, \theta, \psi, \sigma, \gamma) = \exp\left(-\frac{x'^{2} + \gamma^{2} y'^{2}}{2\sigma^{2}}\right) \exp\left(i\left(2\pi \frac{x'}{\lambda} + \psi\right)\right) \tag{12}$$

with $x' = x\cos\theta + y\sin\theta$, $y' = -x\sin\theta + y\cos\theta$,

where $(x, y)$ is the pixel location, $\lambda$ is the wavelength of the sinusoidal component, $\theta$ is the orientation of the normal to the parallel stripes of the Gabor function, $\sigma$ is the standard deviation of the Gaussian envelope with respect to the $x$ and $y$ axes, $\gamma$ is the spatial aspect ratio, which specifies the ellipticity of the support of the Gabor function, and $\psi$ is the phase offset of the sinusoidal function. A bank of Gabor filters with two frequencies and four orientations was employed during the experiment to extract ROI characteristics.
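A filter bank with two frequencies and four orientations, as described above, might be built as in this sketch. It samples the real (cosine) part of the standard Gabor kernel; the kernel size, wavelengths, `sigma`, and `gamma` values are hypothetical choices, since the paper does not state them.

```python
import math


def gabor_kernel(size, wavelength, theta, sigma, gamma=0.5, psi=0.0):
    """Real part of a Gabor kernel on a size x size grid (size must be odd)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates by the filter orientation theta.
            x_rot = x * math.cos(theta) + y * math.sin(theta)
            y_rot = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(
                -(x_rot ** 2 + (gamma * y_rot) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * x_rot / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# Two wavelengths (i.e., two frequencies) and four orientations: 8 filters.
wavelengths = [4.0, 8.0]
orientations = [k * math.pi / 4 for k in range(4)]
bank = [gabor_kernel(9, lam, theta, sigma=3.0)
        for lam in wavelengths for theta in orientations]
```

Each kernel would then be convolved with the region of interest, and statistics of the responses (for example, mean and variance) used as features.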

3.3.3. Contour

An effective technique for object recognition and shape analysis is contour. In order to estimate a polygon with fewer vertices so that the distance between them is less than or equal to the given precision, we used the Douglas–Peucker method to extract an accurate contour of the foreground object (binary picture). By applying Sklansky’s approach, which has an complexity, we extracted the convex hull of the polygon (a 2D point set). Since the black smoke often occurs near the vehicle’s rear, we examined the shape and convex hull of the foreground area there.

The contour of the foreground area is represented by (2D point set). The convex hull of the contour is represented by . Let the shape behind the car be represented by ( ). The convex hull behind the vehicle is represented by ( ). Let point (, ) and r stands for the minimum enclosing circle of contour ’s respective centre and radius. Let (, ) and (, ) represent the start and end points, respectively, of the convex hull . The moving object’s bounding box is represented by the green rectangular box. Convex hull is represented by the blue curve from point to point , and contour is represented by the purple curve from point to point . The calculation of and , is done using Equation (13):

where is a correction factor. In this study, we have considered .
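The Douglas–Peucker simplification mentioned above can be sketched in a few lines. This is the textbook recursive form for an open polyline, not the authors' code; a closed contour would typically be split into two open chains first. Function names and sample points are assumptions.

```python
import math


def point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    length = math.hypot(bx - ax, by - ay)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs((bx - ax) * (ay - py) - (ax - px) * (by - ay)) / length


def douglas_peucker(points, epsilon):
    """Simplify a polyline so no dropped point is farther than epsilon."""
    if len(points) < 3:
        return list(points)
    # Find the interior point farthest from the chord joining the endpoints.
    distances = [point_line_distance(p, points[0], points[-1])
                 for p in points[1:-1]]
    index = max(range(len(distances)), key=distances.__getitem__) + 1
    if distances[index - 1] <= epsilon:
        return [points[0], points[-1]]
    # Otherwise keep that point and simplify both halves recursively.
    left = douglas_peucker(points[:index + 1], epsilon)
    right = douglas_peucker(points[index:], epsilon)
    return left[:-1] + right


nearly_straight = [(0, 0), (1, 0.05), (2, 0), (3, 0.05), (4, 0)]
peak = [(0, 0), (2, 2), (4, 0)]
simplified_line = douglas_peucker(nearly_straight, epsilon=0.1)
simplified_peak = douglas_peucker(peak, epsilon=0.5)
```

A larger `epsilon` yields a polygon with fewer vertices, which is the precision trade-off the paragraph refers to.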

3.3.4. Morphological Features

According to skilled medical professionals, the shapes and borders of malignant breast tumors are frequently irregular. Morphological characteristics are therefore crucial for discriminating between benign and malignant tumors. In this work, we extracted mass roundness, normalized radius entropy, normalized radius variance, area ratio, and roughness as morphological features.

3.3.5. VGG 16

The VGG16 deep neural network, which is used to extract bottleneck features, was pre-trained on the ImageNet dataset. The VGG16 architecture consists of five blocks of convolutional layers followed by three fully connected layers. Each convolutional layer uses 3 × 3 kernels with a stride of 1 and padding of 1, so its activation maps keep the same spatial dimensions as those of the preceding layer. The bottleneck features are extracted using the weights of the transferred convolutional layers. Each convolution is followed by a ReLU activation, and each block ends with a max pooling layer that reduces the spatial dimensions. The max pooling layers use 2 × 2 kernels with a stride of 2 and no padding, so each spatial dimension of the preceding activation map is cut in half. Two fully connected layers with 4096 ReLU units each are followed by a final 1000-unit fully connected softmax layer. The drawbacks of the VGG16 model include a high evaluation cost and large memory and parameter requirements: VGG16 has about 138 million parameters, roughly 123 million of which are in the fully connected layers.
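The parameter counts quoted above can be checked with a short calculation. The sketch assumes the standard VGG16 configuration (13 convolutional layers with 64, 128, 256, 512, and 512 channels per block, a 224 × 224 × 3 input, and biases on every layer); the helper names are illustrative.

```python
def conv_params(in_channels, out_channels, kernel=3):
    """Weights plus biases of one convolutional layer."""
    return kernel * kernel * in_channels * out_channels + out_channels


def fc_params(n_inputs, n_outputs):
    """Weights plus biases of one fully connected layer."""
    return n_inputs * n_outputs + n_outputs


# (output channels, number of conv layers) for the five VGG16 blocks.
blocks = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]

conv_total, in_channels = 0, 3
for out_channels, n_layers in blocks:
    for _ in range(n_layers):
        conv_total += conv_params(in_channels, out_channels)
        in_channels = out_channels

# Five 2 x 2 poolings reduce a 224 x 224 input to 7 x 7 x 512 features.
fc_total = (fc_params(7 * 7 * 512, 4096)
            + fc_params(4096, 4096)
            + fc_params(4096, 1000))
total = conv_total + fc_total
```

Under these assumptions the convolutional layers hold about 14.7 million parameters and the fully connected layers about 123.6 million, which is why extracting bottleneck features from the convolutional part alone is comparatively cheap in terms of stored weights.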

3.4. Feature Selection Using HRDSO Algorithm

A new hybrid optimization model, which combines the Sparrow Search Algorithm (SSA) and the Red Deer Algorithm (RDA), is employed to identify the most informative subset of features for BC classification. SSA is inspired by the foraging behavior of sparrows and emphasizes exploration, while RDA is based on the herding behavior of red deer and focuses on exploitation. By combining these two algorithms, the hybrid approach can strike a balance between exploration and exploitation, enabling an efficient search across the solution space. The hybrid optimization, called HRDSO, consists of the following steps:

Step 1: Initialization.

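The locations of the search agents can be represented by the matrix in Equation (14):

$$X = \begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,d} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,d} \\ \vdots & \vdots & \ddots & \vdots \\ x_{n,1} & x_{n,2} & \cdots & x_{n,d} \end{bmatrix} \tag{14}$$

where $n$ represents the number of search agents, and $d$ represents the number of dimensions of the variables that need to be optimized. A Tent chaotic sequence is used to initialize the population of search agents.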

Step 2: Population updating using Tent chaotic sequence.

In deterministic nonlinear systems, chaos is a special phenomenon that can arise without the addition of any random variables. Because of their inherent unpredictability, chaotic variables can continuously traverse the whole search space while still following deterministic laws. Chaos is therefore frequently employed in optimization search problems to avoid becoming stuck in local optima. The Logistic map is a typical chaotic model, but its traversal of the space is non-uniform, which diminishes the optimization benefits. Compared to the Logistic map, the Tent map traverses the space more uniformly and searches more quickly. The Tent chaotic map is expressed in Equation (15) as follows:

$$x_{i+1} = \begin{cases} 2x_i, & 0 \le x_i \le \tfrac{1}{2} \\ 2(1 - x_i), & \tfrac{1}{2} < x_i \le 1 \end{cases} \tag{15}$$

Equation (16) is the Tent chaotic map following the Bernoulli shift transformation:

$$x_{i+1} = (2x_i) \bmod 1 \tag{16}$$

Analysis shows that the Tent chaotic sequence contains short periods and unstable periodic points. To keep the sequence from falling into them, a random variable is added to the original Tent map expression, which gives the improved Equation (17):

$$x_{i+1} = \begin{cases} 2x_i + \mathrm{rand}(0,1) \times \dfrac{1}{N_A}, & 0 \le x_i \le \tfrac{1}{2} \\ 2(1 - x_i) + \mathrm{rand}(0,1) \times \dfrac{1}{N_A}, & \tfrac{1}{2} < x_i \le 1 \end{cases} \tag{17}$$

Following the Bernoulli shift transformation, Equation (18) is:

$$x_{i+1} = (2x_i) \bmod 1 + \mathrm{rand}(0,1) \times \frac{1}{N_A} \tag{18}$$

In this case, $N_A$ is the number of particles in the chaotic sequence, and $\mathrm{rand}(0,1)$ is a random number drawn from the range [0, 1]. Based on the properties of the Tent chaotic map, the following procedure is used to create a chaotic sequence in the feasible region with the improved chaotic map expression:

- Randomly generate the initial value $x_0$ in the range (0, 1), with the index $i$ equal to 0.

- Iterate Equation (18) to produce the $x$ sequence, with $i$ increasing by 1 each time.

- When the allotted number of iterations has been completed, stop and save the generated $x$ sequence.
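The three-step procedure above can be sketched as follows. The update rule used is the commonly published perturbed Tent map in Bernoulli-shift form, $x_{i+1} = (2x_i) \bmod 1 + \mathrm{rand}(0,1)/N_A$; the final wrap back into [0, 1), the function names, and the mapping of the sequence onto a search range are assumptions added for illustration.

```python
import random


def tent_sequence(length, particle_count, seed=None):
    """Chaotic sequence from the perturbed Tent map (Bernoulli-shift form)."""
    rng = random.Random(seed)
    x = rng.uniform(0.0, 1.0)  # step 1: random initial value x_0
    sequence = []
    for _ in range(length):    # step 2: iterate the map
        # (2 * x) mod 1 plus a small random perturbation; the outer
        # "% 1.0" is an assumption that keeps the value inside [0, 1).
        x = ((2.0 * x) % 1.0 + rng.random() / particle_count) % 1.0
        sequence.append(x)
    return sequence            # step 3: save the generated sequence


def init_population(n_agents, n_dims, lower, upper, seed=0):
    """Map one chaotic sequence per agent onto the range [lower, upper]."""
    population = []
    for agent in range(n_agents):
        chaos = tent_sequence(n_dims, particle_count=n_agents,
                              seed=seed + agent)
        population.append([lower + c * (upper - lower) for c in chaos])
    return population


population = init_population(n_agents=5, n_dims=8, lower=-1.0, upper=1.0)
```

Without the random perturbation, the plain map `(2 * x) % 1.0` collapses to zero after a few dozen iterations in floating-point arithmetic, which is one practical reason to perturb it.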

Step 3: Fitness computation.

In HRDSO, the objective function represents the measure of quality or fitness of a solution. Fitness calculation involves evaluating the objective function for each potential solution or candidate in the search space. The objective function provides a quantifiable measure of how well a particular solution satisfies the optimization criteria or goals. It helps distinguish between better and worse solutions, enabling the search algorithm to iteratively improve the solutions over time. The fitness computation is performed using the Equation (19)

where is the error value. For computing the fitness function, the best and worst fitness values are calculated.

Step 4: Position updating.

Male RD is roaring throughout this phase in an effort to boost their elegance. As it occurs in nature, the roaring process may thus succeed or fail. Male RDs are, notably, the best solutions using this methodology. It excels at locating the male RD’s neighboring solutions within the solution space and substituting them with superior objective functions from previous iterations. Every male RD is actually allowed to switch positions. The discoverer (male), who is in charge of finding food, is supposed to be the first five best solutions, while the participants (female), who are the next five best solutions, are thought to be the guards. Males’ status has been updated using Equation (20):

where is the updated position of the male. All female search agents update ( their places in accordance with the formula in Equation (21), with the exception of the discoverer.

where represents the current worst position, is the next best solution for the discover in current state, is the matrix with dimension .

Step 5: Fight between male discoverers.

Each commander fights stags chosen at random. The commander and the stag approach each other in the solution space, and two new solutions are obtained. To enhance effectiveness, the commander is then replaced with the best of four candidates, namely the commander, the stag, and the two newly acquired solutions. The fighting process is described mathematically in Equations (22) and (23):

$$New_1 = \frac{Com + Stag}{2} + b_1 \times \big( (UB - LB) \times b_2 + LB \big) \tag{22}$$

$$New_2 = \frac{Com + Stag}{2} - b_1 \times \big( (UB - LB) \times b_2 + LB \big) \tag{23}$$

where $New_1$ and $New_2$ represent the two new solutions generated by the fighting process, and $Com$ and $Stag$ denote the commander and the stag, respectively. $UB$ and $LB$ are the upper and lower bounds of the search space, respectively, and restrict the feasibility of new solutions. Because the fighting process is random, $b_1$ and $b_2$ are generated from a uniform distribution between zero and one. Of the four candidates, $Com$, $Stag$, $New_1$, and $New_2$, only the best in terms of the objective function is chosen.
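The fighting step can be sketched as below, using the form in which it is usually given for the Red Deer Algorithm: two new solutions are placed symmetrically around the midpoint of the commander and the stag, offset by `b1 * ((UB - LB) * b2 + LB)`. Treating the problem as minimization, clipping the new solutions to the bounds, and the function and parameter names are assumptions of this illustration.

```python
import random


def fight(commander, stag, lower, upper, fitness, rng=random):
    """Return the fittest of commander, stag, and two new solutions."""
    b1, b2 = rng.random(), rng.random()  # uniform random numbers in [0, 1)
    offset = [b1 * ((ub - lb) * b2 + lb) for lb, ub in zip(lower, upper)]
    midpoint = [(c + s) / 2 for c, s in zip(commander, stag)]

    def clip(vector):
        # Assumption: infeasible coordinates are pulled back to the bounds.
        return [min(max(v, lb), ub)
                for v, lb, ub in zip(vector, lower, upper)]

    new1 = clip([m + d for m, d in zip(midpoint, offset)])
    new2 = clip([m - d for m, d in zip(midpoint, offset)])
    # Minimization: the candidate with the smallest fitness value wins.
    return min([commander, stag, new1, new2], key=fitness)


def sphere(vector):
    """Toy objective used only for this example."""
    return sum(v * v for v in vector)


commander, stag = [1.0, 1.0], [0.5, -0.5]
winner = fight(commander, stag, lower=[-2.0, -2.0], upper=[2.0, 2.0],
               fitness=sphere, rng=random.Random(1))
```

Because the commander and the stag are themselves among the four candidates, the winner is never worse than either of them.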

Step 6: Guards updating.

When a threat is identified, the search agents engage in anti-predation behavior, and their locations are updated according to Equation (24):

$$X_{i,j}^{t+1} = \begin{cases} X_{best}^{t} + \beta \left| X_{i,j}^{t} - X_{best}^{t} \right|, & f_i > f_g \\ X_{i,j}^{t} + K \left( \dfrac{\left| X_{i,j}^{t} - X_{worst}^{t} \right|}{(f_i - f_w) + \varepsilon} \right), & f_i = f_g \end{cases} \tag{24}$$

In the equation, $X_{best}^{t}$ and $X_{worst}^{t}$ are the current global best and worst positions. The step-length control parameter $\beta$ is a random number drawn from a normal distribution with a mean of 0 and a variance of 1. $K$ is a random value that also serves as a step-length control parameter and represents the direction in which the search agent moves. To keep the denominator from being zero, $\varepsilon$ is set to a small constant. $f_i$ is the fitness value of the current search agent, and $f_g$ and $f_w$ are the current global best and worst fitness values. When $f_i > f_g$, the search agent is at the periphery of the population and is vulnerable to attack by predators; when $f_i = f_g$, the search agents in the middle of the population are aware of the threat presented by the predators and must work together to reduce their risk of being eaten.

Step 7: Mating.

Crossover, mutation, and gender clustering all occur during mating in LA. Male and female produce cubs through this crossover and mutation process, which serves as an extraction of the male and female’s constituent parts. The literature has created a number of research projects on crossover, mutation, and the need for evolutionary algorithms. When the lions were changed as losers in territorial takeover or survival struggles, they started to age and became sterile throughout the processing phases of LA. Males and females have either reached the local or global optimum, from which the better answer cannot be discovered, if they become saturated in line with their fitness.

Evaluation of fertility has aided in the transition away from the regional optimal solution. When the updated female is represented as , the mating process evolves in line with the enhancement. However, until the number of women in generation gc reaches gmax c, where gmax c is set at 10, the updating process continues. When there is no to replace the female, is thought to be fertile to produce superior cubs throughout the update procedure.

where, and are vector elements of and , respectively. The random number that was generated within the range [0, 1] is shown as and , the female update position is shown as , and the random integer that was generated within the range is shown as and .

Step 8: Save the so far acquired best solution.

Step 9: Return the best solution.

The pseudocode for the feature selection process is given in Algorithm 1.

| Algorithm 1 Pseudocode for HRDSO algorithm |
| --- |
| Step 1: Initialization |
| Initialize the search agent matrix X using Equation (14) with random values |
| Step 2: Population updating using Tent chaotic sequence |
| Initialize the Tent chaotic map sequence x in the range (0, 1) |
| for i = 1 to N do |
| for j = 1 to d do |
| if x <= 0.5 then |
| update x using the lower branch of the Tent map |
| else |
| update x using the upper branch of the Tent map |
| end if |
| end for |
| end for |
| Step 3: Fitness computation |
| Evaluate the fitness function for each search agent using Equation (19) |
| Step 4: Position updating |
| for i = 1 to 5 do |
| Update the location of male search agent i using Equation (20) |
| end for |
| for i = 6 to N do |
| Update the position of female search agent i (excluding the discoverer) using the applicable case of Equation (21) |
| end for |
| Step 5: Fight between male discoverers |
| for i = 1 to 5 do |
| Generate two new solutions using Equations (22) and (23) |
| Evaluate the fitness of the new solutions and choose the best one |
| Replace the male search agent with the best solution |
| end for |
| Step 6: Guards updating |
| Update the positions of the female (guard) search agents using Equation (24) |
| Step 7: Mating |
| Update the locations of female search agents (potential mothers) using Equations (25)–(27) |
| Step 8: Save the best solution found so far |
| Step 9: Return the best solution |
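Step 2 of Algorithm 1 can be sketched in Python as follows. The sketch assumes the classical Tent map with slope 2 and the 0.5 threshold that appears in the pseudocode; the function names, the number of map iterations, and the search bounds are hypothetical choices made only for illustration.

```python
import numpy as np

def tent_map(x):
    """Classical Tent map on [0, 1]: 2x on the lower half, 2(1 - x) on the upper half."""
    return np.where(x <= 0.5, 2.0 * x, 2.0 * (1.0 - x))

def tent_init(n_agents, dim, lower, upper, n_iter=10, seed=0):
    """Build an N x d population by iterating the Tent map from random seeds."""
    rng = np.random.default_rng(seed)
    x = rng.random((n_agents, dim))      # chaotic seeds in [0, 1)
    for _ in range(n_iter):              # a few iterations; assumed, not from the paper
        x = tent_map(x)
    return lower + x * (upper - lower)   # scale the chaotic values to the search bounds

X = tent_init(n_agents=30, dim=8, lower=-1.0, upper=1.0)
```

Only a few iterations are applied per seed because, in floating-point arithmetic, long orbits of the slope-2 Tent map eventually collapse to zero.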

3.5. BC Detection Phase

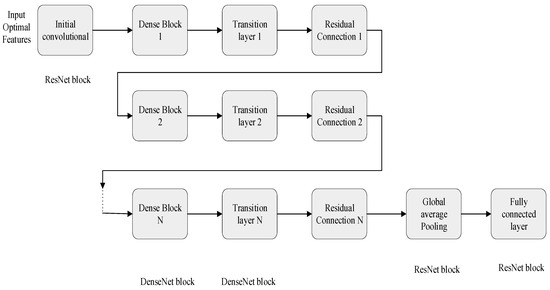

The DenseXtNet is created by tightly coupling the residual blocks of ResNet with the fundamental units of DenseNet. The DenseNet, which consists of dense and transition layers, couples the convolutional and global average pooling layers of the ResNet. These building blocks are stacked into a deep network for front-end BC detection. Unlike model ensemble techniques, which combine the outputs of several systems, the proposed DenseXtNet blocks perform feature fusion within each fundamental unit, so the network can gather the information needed to learn more discriminative embeddings. Figure 5 displays the block diagram of the DenseXtNet model.

Figure 5.

Hybrid architecture of DenseXtNet model.

The DenseNet is connected between the initial convolutional layer and the transition layer of the ResNet. DenseNet is a CNN architecture for object recognition in images that achieves high performance with fewer parameters. Its structure is similar to that of ResNet, with one key difference: DenseNet connects the output of each layer to the input of all subsequent layers using concatenation, whereas ResNet uses addition to combine the output of one layer with the input of another layer. By densely linking all layers, DenseNet addresses the vanishing gradient problem. A conventional CNN applies a non-linear transformation $H_l(\cdot)$ to compute the output of the $l$-th layer, $x_l$, from the output of the previous layer, $x_{l-1}$:

$$x_l = H_l(x_{l-1})$$

DenseNet concatenates the output feature maps of a layer with its inputs instead of adding them together. As a result, information can move across layers more effectively. The $l$-th layer in a DenseNet takes the feature maps of all earlier layers as input. This transformation for the dense layer is expressed mathematically as:

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}])$$

In the DenseNet architecture, $[x_0, x_1, \ldots, x_{l-1}]$ is the single tensor created by concatenating the output maps of the earlier layers. $H_l(\cdot)$ is composed of batch normalization, activation (ReLU), pooling, and convolution (CONV), and it is applied to the concatenated tensor to produce the output of the current layer. The growth rate $k$ determines how the number of channels grows from layer to layer. Specifically, the number of input channels of the $l$-th layer, $k_l$, is obtained by adding $k(l-1)$ to $k_0$, where $k_0$ is the number of channels in the first layer:

$$k_l = k_0 + k(l-1)$$
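The channel bookkeeping of dense connectivity can be checked with a small NumPy sketch. It is a toy under stated assumptions: the composite function `H` is reduced to a ReLU followed by a random 1x1 convolution (batch normalization and pooling are omitted), and the sizes `k0 = 16`, `k = 12`, and four layers are hypothetical.

```python
import numpy as np

def H(x, k, rng):
    """Toy composite function: ReLU followed by a random 1x1 convolution to k channels."""
    c_in = x.shape[0]
    w = rng.standard_normal((k, c_in)) / np.sqrt(c_in)
    x = np.maximum(x, 0.0)                    # ReLU
    return np.einsum("oc,chw->ohw", w, x)     # 1x1 convolution over the channel axis

def dense_block(x0, num_layers, k, seed=0):
    """Each layer receives the concatenation of all earlier feature maps."""
    rng = np.random.default_rng(seed)
    features = [x0]
    for _ in range(num_layers):
        concat = np.concatenate(features, axis=0)   # [x_0, x_1, ..., x_{l-1}]
        features.append(H(concat, k, rng))          # layer l adds k new channels
    return np.concatenate(features, axis=0)

x0 = np.ones((16, 8, 8))                      # k0 = 16 channels on an 8x8 feature map
out = dense_block(x0, num_layers=4, k=12)
print(out.shape)                              # channels: 16 + 4 * 12 = 64
```

Because every layer contributes exactly `k` new channels, the block output has `k0 + k * num_layers` channels, which is why a transition layer is normally used afterwards to reduce the channel count.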

The ResNet’s global average pooling layer receives the combined output of the transition layer through the residual connection. The pooled features are then passed to the fully connected layer of the ResNet, which performs the BC prediction. The performance of the proposed model is assessed on the MATLAB platform.

4. Result and Discussion

In this section, the proposed DenseXtNet model is assessed in terms of accuracy, specificity, precision, sensitivity, recall, F-Measure, NPV, FPR, FNR, and MCC. These are commonly used performance metrics for evaluating machine learning models, and they are typically calculated from the model’s predictions on a separate testing dataset that it has not seen during training. The MIAS mammography dataset is used for implementation [30]. The data are divided into 70% for training, 20% for testing, and the remaining 10% for validation. The split was performed at random, a standard practice for keeping each subset representative and the evaluation results trustworthy. The implementation is performed on the MATLAB platform. The proposed model is compared with existing techniques, namely ResNeXt, DenseNet, ResNet, VGG Net, and AlexNet.
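A random 70/20/10 split of this kind can be sketched in Python as follows. The function name `split_indices`, the seed, and the sample count of 100 are hypothetical; the paper’s own split was performed in MATLAB and its exact procedure is not given.

```python
import numpy as np

def split_indices(n_samples, train=0.7, test=0.2, seed=0):
    """Randomly partition sample indices into training, testing, and validation sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)           # shuffle once, then cut into three parts
    n_train = int(round(train * n_samples))
    n_test = int(round(test * n_samples))
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]

train_idx, test_idx, val_idx = split_indices(100)
print(len(train_idx), len(test_idx), len(val_idx))
```

Shuffling once and slicing guarantees that the three subsets are disjoint and together cover every sample.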

4.1. Dataset Description

The MIAS mammography dataset [30] provides a valuable resource for detecting breast cancer through mammography scans. It includes images and annotations, offering crucial information for research and medical applications. The dataset contains diverse cases of abnormalities, such as calcifications, well-defined masses, spiculated masses, architectural distortions, asymmetries, and more. Each entry is accompanied by information about tissue type, class of abnormality, severity, and location details. This dataset is well-documented and maintained by the Mammographic Image Analysis Society. It serves as a foundation for developing automated lesion detection and malignancy prediction tools, ultimately aiding physicians in accurate breast cancer diagnosis.

The mammography images used in this work come from an open-access dataset available on the Kaggle website, so their use raises no moral or ethical concerns. Relying on openly accessible data keeps the work within the ethical parameters of medical imaging research and allows the scientific community to advance knowledge without jeopardizing the confidentiality or integrity of critical medical data.

4.2. Performance Metrics

The performance metrics and their calculation formulas are given in this section. True positives (TP), false positives (FP), true negatives (TN), and false negatives (FN) are the basis for evaluating classification models in medical diagnostics, such as the identification of breast cancer. True positives are cases in which the model correctly diagnoses patients with the illness, ensuring that cancer patients receive an appropriate diagnosis. False positives occur when the model classifies people who do not have the condition as having it, potentially causing extra stress and medical treatments for patients who are cancer-free. True negatives are cases in which the model correctly identifies people without the illness, so that those who are cancer-free receive accurate diagnoses. False negatives are cases in which the model fails to recognize patients who actually have the condition, delaying required treatment. These four quantities are essential for assessing classification models, particularly in medicine, where misclassification can have serious repercussions.

- Sensitivity

In our breast cancer detection model, sensitivity—also known as the true positive rate—is of the utmost importance. It measures the percentage of real positive cases that our model accurately detected. Sensitivity is a crucial parameter since it directly affects the model’s capacity to identify breast cancer, especially in circumstances where it is present [31].

- Specificity

Specificity is an important indicator since it measures how well the model can recognize negative cases. In breast cancer detection, it is essential for reducing false alarms, that is, the misclassification of non-cancerous cases as cancerous. A high specificity shows that the model correctly identifies true negatives [32].

- Accuracy

Accuracy evaluates the overall correctness of our breast cancer detection algorithm by relating the true positives and true negatives to all instances [33]. It offers an overall assessment of the model’s capacity to make correct predictions.

- Precision

Precision, also known as positive predictive value, is the proportion of correctly predicted positive cases among all positive predictions [34]. It indicates how trustworthy a positive (cancer) prediction of the model is.

- Recall

Recall, also known as the true positive rate, is the percentage of real positive cases that the model correctly recognized [34]; it is computed in the same way as sensitivity. In the context of breast cancer, recall reflects the model’s ability to avoid missing actual cancer cases.

- F-Score

The F-score is the harmonic mean of precision and recall [35]. It is a crucial metric for balancing the two, and it offers a single summary of the model’s effectiveness in detecting breast cancer.

- Negative Predictive Value (NPV)

The NPV is defined as the ratio of TN to the sum of TN and FN, that is, the proportion of negative predictions that are truly negative. It indicates how reliably the model recognizes non-cancerous instances and is especially useful in skewed class scenarios [36]. Because it quantifies the likelihood that a negative prediction is correct, it is particularly pertinent in medical diagnostics: a high NPV indicates that the absence of a condition can be trusted when a negative prediction is made.

- Matthews correlation coefficient (MCC)

The Matthews correlation coefficient (MCC) is a widely recognized metric in binary classification and is especially valuable when the class distribution is uneven [36]. It takes true positives, true negatives, false positives, and false negatives into account and condenses them into a single value that summarizes the overall performance of our breast cancer detection algorithm. An MCC of +1 signifies perfect classification, 0 represents random classification, and −1 implies complete disagreement between predictions and actual outcomes. The MCC, a measure of association between two binary variables, is given by the equation below.

$$\mathrm{MCC}=\frac{TP\times TN-FP\times FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)}}$$

- False Positive Rate (FPR)

The FPR is computed by dividing the number of negative cases that were incorrectly classified as positive (FP) by the total number of actual negative cases (FP + TN). It describes the model’s rate of false alarms. Minimizing the FPR ensures that non-cancerous instances are not mistakenly labeled as cancerous [36].

- False Negative Rate (FNR)

The FNR, often known as the “miss rate”, is the probability that an actual positive case goes undetected by the test. It determines how likely the model is to fail to detect a true cancer case, so lowering the FNR is essential to avoid missing actual cancer patients [36].

The consequences of a misclassification depend on the performance metric under consideration. When cancerous cases are misclassified as non-cancerous (false negatives), sensitivity, which assesses the model’s capacity to correctly identify positive cases, decreases. Since early diagnosis of breast cancer has a substantial impact on treatment outcomes, a lower sensitivity score raises concerns about missed cancer cases. When non-cancerous cases are misclassified as cancerous (false positives), specificity, a measure of the model’s ability to detect negative cases, is weakened; the resulting false alarms could put patients through extra stress and necessitate more diagnostic testing. Both kinds of error reduce accuracy, which counts true positives and true negatives among all cases, and a decline in accuracy compromises the model’s overall dependability. False positives also lower precision, which reflects the correctness of positive predictions: as precision declines, a sizable fraction of positive predictions may be wrong, possibly leading to unnecessary medical procedures or treatments.

False Positive Rate (FPR) and False Negative Rate (FNR) are pivotal in binary classification, particularly in domains like medical diagnosis. FPR gauges the ratio of wrongly classified actual negatives as positives, while FNR signifies the proportion of actual positives wrongly classified as negatives. In medical contexts, where misclassifications carry distinct consequences, a high FNR could lead to missed detections, while a high FPR might trigger unnecessary interventions.

These indicators, encompassing MCC, FPR, FNR, and NPV, enrich our understanding of a machine learning model’s performance, especially in intricate scenarios involving class imbalances and the varying implications of misclassifications. They offer more nuanced insights compared to simple accuracy metrics, contributing to a holistic assessment of model behavior.
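The definitions in this section can be collected into one short Python function. The formulas are the standard ones for a binary confusion matrix. The counts in the usage line are hypothetical and are not reported in the paper; they were chosen only because they yield values close to those reported for the proposed model on the testing set.

```python
import math

def classification_metrics(tp, fp, tn, fn):
    """Compute the metrics of this section from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # also called recall or true positive rate
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": sensitivity,
        "f_measure": 2 * precision * sensitivity / (precision + sensitivity),
        "npv": tn / (tn + fn),
        "fpr": fp / (fp + tn),
        "fnr": fn / (fn + tp),
        "mcc": (tp * tn - fp * fn) / mcc_denominator,
    }

# Hypothetical counts (an assumption for illustration, not data from the paper).
metrics = classification_metrics(tp=63, fp=8, tn=502, fn=6)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Note that FPR equals 1 − specificity and FNR equals 1 − sensitivity, so only sensitivity, specificity, precision, and NPV carry independent information about the four counts.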

4.3. Overall Comparison of the Proposed Model

The performance metrics of the proposed model are compared with those of the existing techniques. The evaluation shows that the proposed model outperforms the other techniques, which is attributed to the hybrid model used in the feature selection stage. The comparison for the training dataset (70%) is shown in Table 1.

Table 1.

Comparison for the training dataset (70%).

The table presents a comparative analysis of different machine learning techniques on a training dataset, showcasing their performance across various evaluation metrics. These metrics provide a comprehensive view of each technique’s strengths and weaknesses in binary classification tasks. For instance, the proposed DenseXtNet stands out with the highest sensitivity (ability to correctly identify positive cases), specificity (ability to correctly identify negative cases), accuracy (overall correctness), precision (correctly predicting positive cases), recall (detecting positive instances), and F-Measure (a balance of precision and recall). Conversely, AlexNet has relatively lower values across these metrics, indicating room for improvement in correctly classifying positive and negative cases. The metrics serve as valuable benchmarks for selecting the most suitable model for specific classification tasks and understanding its performance characteristics. Similarly, the validation dataset (10%) is compared in Table 2.

Table 2.

Comparison of the validation dataset (10%).

Table 2 presents a comparative evaluation of different machine learning techniques using the 10% validation dataset for binary classification. The metrics assessed include sensitivity (ability to correctly identify positive cases), specificity (ability to correctly identify negative cases), accuracy (overall correctness), precision (proportion of true positive predictions among positive predictions), recall (detection of positive instances), F-Measure (a balance of precision and recall), NPV (proportion of negative predictions that are correct), FPR (rate of false positives), FNR (rate of false negatives), and MCC (a comprehensive performance metric). The proposed DenseXtNet demonstrates strong performance across several metrics, particularly in sensitivity and F-Measure, which is attributed to the hybrid model used in the feature selection stage. AlexNet, on the other hand, exhibits lower performance in several aspects, highlighting areas where improvements may be needed. The comparison for the testing dataset is shown in Table 3.

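Table 3.

Overall comparison of the performance metrics for testing dataset (20%).

| Technique | Sensitivity | Specificity | Accuracy | Precision | Recall | F-Measure | NPV | FPR | FNR | MCC |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Proposed DenseXtNet | 0.9130 | 0.9843 | 0.9758 | 0.8873 | 0.9130 | 0.9000 | 0.9882 | 0.0157 | 0.0870 | 0.8864 |
| ResNeXt | 0.8841 | 0.9814 | 0.9698 | 0.8652 | 0.8841 | 0.8746 | 0.9843 | 0.0186 | 0.1159 | 0.8574 |
| DenseNet | 0.8261 | 0.9608 | 0.9447 | 0.7403 | 0.8261 | 0.7808 | 0.9761 | 0.0392 | 0.1739 | 0.7508 |
| ResNet | 0.7174 | 0.9520 | 0.9240 | 0.6689 | 0.7174 | 0.6923 | 0.9614 | 0.0480 | 0.2826 | 0.6495 |
| VGG Net | 0.5435 | 0.9510 | 0.9024 | 0.6000 | 0.5435 | 0.5703 | 0.9390 | 0.0490 | 0.4565 | 0.5163 |
| AlexNet | 0.5652 | 0.9618 | 0.9145 | 0.6667 | 0.5652 | 0.6118 | 0.9424 | 0.0382 | 0.4348 | 0.5665 |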

The proposed DenseXtNet technique correctly identified 91.30% of the actual positive cases (sensitivity) and 98.43% of the actual negative cases (specificity). The overall correctness of its predictions was 97.58% (accuracy), and 88.73% of its positive predictions were correct (precision). Recall, which coincides with sensitivity, was 91.30%, and the harmonic mean of precision and recall was 90.00% (F-measure). Additionally, 98.82% of its negative predictions were correct (NPV). The FPR was 1.57%, and the FNR was 8.70%.

The proposed DenseXtNet technique shows the highest sensitivity (0.9130), indicating that it correctly identifies most positive cases. Lower sensitivity, as seen with VGG Net (0.5435), suggests a higher likelihood of missing true cancer cases. Specificity evaluates the model’s capacity to classify negative cases correctly, and a high value corresponds to a low rate of false positives (non-cancerous cases wrongly classified as cancerous). The proposed DenseXtNet achieves the highest specificity (0.9843), reflecting its ability to minimize false alarms, whereas the lower specificity of VGG Net (0.9510) indicates a relatively higher rate of false positives. The proposed DenseXtNet also achieves the highest accuracy (0.9758), indicating a high proportion of correct predictions overall, while the lower accuracy of VGG Net (0.9024) implies a higher proportion of incorrect predictions.

The proposed DenseXtNet shows the highest precision (0.8873), meaning that most of its positive predictions are correct; the lower precision of VGG Net (0.6000) implies that a larger share of its positive predictions are false positives. The proposed DenseXtNet also demonstrates strong recall (0.9130), indicating its effectiveness in identifying true cancer cases, whereas the lower recall of VGG Net (0.5435) suggests a higher likelihood of missing them. The high F-Measure of the proposed DenseXtNet (0.9000) implies a balanced trade-off between precision and recall, whereas the lower F-Measure of VGG Net (0.5703) reflects its lower precision and recall.

The proposed DenseXtNet achieves a high NPV (0.9882), indicating that few of its negative predictions are false negatives (cancerous cases wrongly classified as non-cancerous). The lower NPV of VGG Net (0.9390) implies a relatively higher share of false negatives among its negative predictions. The low FPR of the proposed DenseXtNet (0.0157) implies fewer false alarms, whereas the higher FPR of VGG Net (0.0490) suggests more. The low FNR of the proposed DenseXtNet (0.0870) indicates a lower probability of missing true positive cases, whereas the higher FNR of VGG Net (0.4565) implies a higher likelihood of missing them. MCC considers true and false positives and negatives together, providing a comprehensive measure of overall model performance. The higher MCC of the proposed DenseXtNet (0.8864) signifies superior overall performance, while the lower MCC of VGG Net (0.5163) indicates lower overall performance.

Table 4 compares the accuracy of the proposed model with that of two base methods, serving as a benchmark for its performance. AlexNet (BC-BM-MC-MM) [16] reaches an accuracy of 0.93 and CAD SVM [19] an accuracy of 0.9044, while the proposed DenseXtNet reaches 0.9758. Accuracy represents the overall correctness of each method on the task being evaluated, so a higher score indicates better performance. In this comparison, DenseXtNet achieves the highest accuracy among the listed approaches, suggesting that it may be particularly effective for the task and dataset under consideration.

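Table 4.

Base paper comparison.

| Method | Accuracy |
| --- | --- |
| AlexNet (BC-BM-MC-MM) [16] | 0.93 |
| CAD SVM [19] | 0.9044 |
| Proposed DenseXtNet | 0.9758 |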

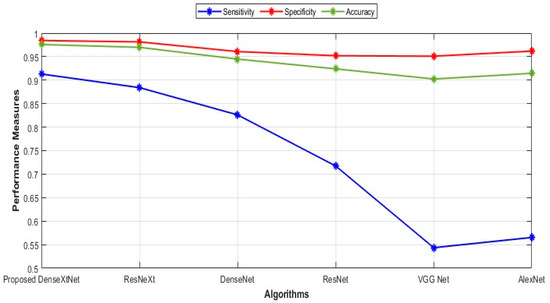

Figure 6 shows the graphical representation of the sensitivity, specificity, and accuracy.

Figure 6.

Comparison of sensitivity, specificity, and accuracy.

Sensitivity values for the proposed DenseXtNet, ResNeXt, DenseNet, ResNet, VGG Net, and AlexNet are 0.9130, 0.8841, 0.8261, 0.7174, 0.5435, and 0.5652, respectively. The specificity values are 0.9843, 0.9814, 0.9608, 0.9520, 0.9510, and 0.9618 for the proposed DenseXtNet, ResNeXt, DenseNet, ResNet, VGG Net, and AlexNet, respectively. Similarly, the accuracy values are 0.9758, 0.9698, 0.9447, 0.9240, 0.9024, and 0.9145. Figure 7 depicts the precision, F-Measure, and recall in graphical form.

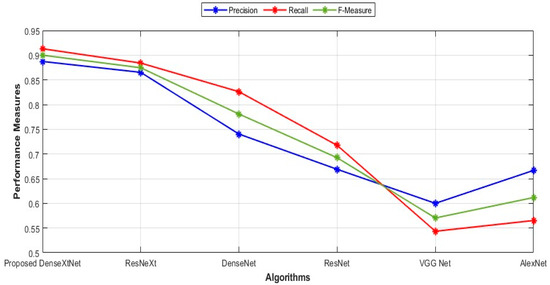

Figure 7.

Comparison of precision, recall, and F-Measure.

The precision, recall, and F-Measure values of the compared models are as follows. The proposed DenseXtNet has a precision of 0.8873, recall of 0.9130, and F-Measure of 0.9000. ResNeXt follows with a precision of 0.8652, recall of 0.8841, and F-Measure of 0.8746. Next is DenseNet with a precision of 0.7403, recall of 0.8261, and F-Measure of 0.7808. ResNet has a precision of 0.6689, recall of 0.7174, and F-Measure of 0.6923. VGG Net’s values are 0.6000 for precision, 0.5435 for recall, and 0.5703 for F-Measure. Finally, AlexNet’s precision, recall, and F-Measure are 0.6667, 0.5652, and 0.6118, respectively. The NPV and MCC are represented graphically in Figure 8.
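As a quick consistency check, the F-Measure of each model can be recomputed from its precision and recall with the harmonic-mean formula. The Python sketch below uses only the values quoted in the text; the dictionary layout and the function name `f_measure` are illustrative.

```python
# (precision, recall, reported F-Measure) as quoted in the text
reported = {
    "DenseXtNet": (0.8873, 0.9130, 0.9000),
    "ResNeXt": (0.8652, 0.8841, 0.8746),
    "DenseNet": (0.7403, 0.8261, 0.7808),
    "ResNet": (0.6689, 0.7174, 0.6923),
    "VGG Net": (0.6000, 0.5435, 0.5703),
    "AlexNet": (0.6667, 0.5652, 0.6118),
}

def f_measure(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

for name, (p, r, f) in reported.items():
    print(f"{name}: computed {f_measure(p, r):.4f}, reported {f:.4f}")
```

The recomputed values agree with the reported ones to within rounding of the four-decimal inputs.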

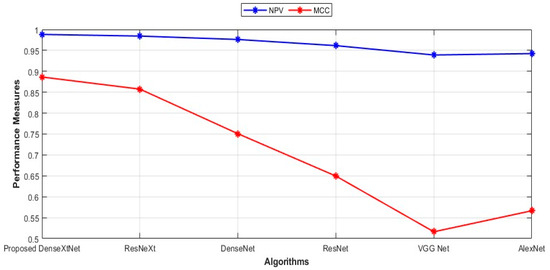

Figure 8.

Comparison of NPV and MCC.

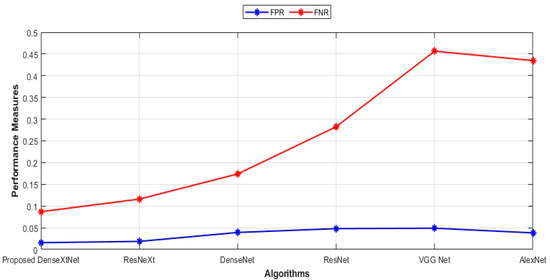

The NPV values for the proposed DenseXtNet, ResNeXt, DenseNet, ResNet, VGG Net, and AlexNet are 0.9882, 0.9843, 0.9761, 0.9614, 0.9390, and 0.9424, respectively, and the MCC values are given as 0.8864, 0.8574, 0.7508, 0.6495, 0.5163, and 0.5665. Figure 9 displays FPR and FNR in a graphical representation.

Figure 9.

Comparison of FPR and FNR.

The FPR values for the proposed DenseXtNet, ResNeXt, DenseNet, ResNet, VGG Net, and AlexNet are 0.0157, 0.0186, 0.0392, 0.0480, 0.0490, and 0.0382, respectively, and the corresponding FNR values are 0.0870, 0.1159, 0.1739, 0.2826, 0.4565, and 0.4348. The proposed model produces the lowest FPR and FNR among the compared techniques, which indicates that it has the lowest error rates.

Gravity of fault: In breast cancer detection, missing potential malignancies such as calcifications or masses (false negatives) can lead to delayed diagnosis and more advanced disease stages, so high sensitivity is required. False positives lead to unnecessary interventions but are generally less critical. The balanced performance metrics of the proposed DenseXtNet model show its potential to mitigate both kinds of fault.

Figure 10 shows the main sequence of image processing steps. The sequence starts with the “Original Input Image”, an unaltered version of the medical image being examined, which is typically a mammogram. The clarity of this initial image is crucial, since any traits or anomalies within it must be captured precisely to ensure a trustworthy diagnosis. The “Pre-Processed Image” is obtained with histogram equalization and adaptive filtering, which improve the quality and contrast of the image and make minor features that could indicate breast abnormalities more visible. The pre-processed image forms the basis for further analysis and is crucial to the precision of the final diagnosis. The “Segmented Image” is the final output of this sequence. At this point, the image has been partitioned to identify regions of interest, especially those that may contain indications of breast cancer. Segmentation separates these regions so that they are easier to examine in subsequent steps, and the segmented image serves as the foundation for the later feature extraction and classification methods.

Figure 10.

(a) Original Input Image (b) Pre-Processed Image (c) Segmented Image.
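The contrast-enhancement part of the pre-processing step can be illustrated with a minimal NumPy sketch of global histogram equalization for an 8-bit grayscale image. It is not the paper’s MATLAB pipeline and does not include the adaptive filtering; the function name and the synthetic low-contrast image are assumptions made for the example.

```python
import numpy as np

def equalize_histogram(img):
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)   # intensity histogram
    cdf = hist.cumsum()                              # cumulative distribution
    cdf_min = cdf[cdf > 0][0]                        # CDF value at the darkest used level
    denom = max(int(cdf[-1] - cdf_min), 1)           # guard against constant images
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[img]                                  # remap every pixel through the lookup table

rng = np.random.default_rng(0)
low_contrast = rng.integers(100, 140, size=(64, 64), dtype=np.uint8)  # synthetic test image
equalized = equalize_histogram(low_contrast)
print(low_contrast.min(), low_contrast.max(), equalized.min(), equalized.max())
```

The lookup table stretches the occupied intensity range to the full 0 to 255 scale, which is what makes faint structures easier to see.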

5. Conclusions

BC detection and classification play a vital role in medical diagnostics, enabling early detection and improved patient outcomes. This paper presented an approach that combines image processing techniques and deep learning models to improve the accuracy and efficiency of BC detection. The BC dataset was pre-processed with histogram equalization and adaptive filtering, which improved image quality and contrast. Data augmentation using cycle-consistent GANs (CycleGANs) increased the diversity of the dataset. Handcrafted features, including Haralick features, Gabor filters, contour-based features, and morphological features, were extracted together with features from the deep learning architecture VGG16, capturing both low-level and high-level information from the images. To select the most informative subset of features, a hybrid optimization model named HRDSO, which combines the SSA and RDA, was proposed. For BC detection, a novel DenseXtNet architecture was introduced, combining DenseNet and optimized ResNeXt. This architecture facilitates better gradient flow and feature reuse, improving the model’s capacity to learn discriminative features, and its hyperparameters were optimized using HRDSO. The proposed model achieved an accuracy of 97.58% in BC detection. This result highlights its potential to assist medical professionals in making timely and accurate diagnoses, ultimately improving patient outcomes in the fight against BC.

Although the proposed method for breast cancer detection appears promising, it is important to understand its limitations, since they may affect the model’s applicability and efficacy. In addition to deep learning-based features from VGG16, the process extracts a variety of handcrafted features, namely Haralick features, Gabor filters, contour-based features, and morphological features. Despite the potential benefits of this diversity, it may result in a heavy computational cost, and extracting too many features might lead to overfitting. The HRDSO used for feature selection combines two optimization algorithms, the SSA and the RDA. The complexity of merging these algorithms may make the model difficult to tailor to particular use cases, and careful parameter tuning is required to maintain its efficacy. Finally, although the model performed well on the dataset used in this study, the study does not report validation on external datasets or in actual clinical settings. The model must be shown to be practical and generalizable before its results can be used in clinical practice.

To improve the breast cancer detection model’s diagnostic precision, it would be beneficial to investigate the possibility of combining additional medical imaging modalities, such as ultrasound or MRI. Investigating the viability of the DenseXtNet model’s real-time implementation in clinical settings and its integration into current healthcare systems could also considerably improve patient care and early breast cancer diagnosis.

Funding

This research was funded by the Deanship of Scientific Research at Majmaah University under Project Number R-2023-834.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The author declares no conflict of interest.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).