Abstract

In recent years, integrated production and distribution scheduling (IPDS) has become an important subject in supply chain management. However, IPDS considering distributed manufacturing environments is rarely researched. Moreover, reinforcement learning is seldom combined with metaheuristics to deal with IPDS problems. In this work, an integrated distributed flow shop and distribution scheduling problem is studied, and a mathematical model is provided. Owing to the problem’s NP-hard nature, a multi-objective Q-learning-based brain storm optimization is designed to minimize makespan and total weighted earliness and tardiness. In the presented approach, a double-string representation method is utilized, and a dynamic clustering method is developed in the clustering phase. In the generating phase, a global search strategy, a local search strategy, and a simulated annealing strategy are introduced. A Q-learning process is performed to dynamically choose the generation strategy. It consists of four actions defined as the combinations of these strategies, four states described by convergence and uniformity metrics, a reward function, and an improved ε-greedy method. In the selecting phase, a newly defined selection method is adopted. To assess the effectiveness of the proposed approach, a comparison pool consisting of four prevalent metaheuristics and a CPLEX optimizer is applied to conduct numerical experiments and statistical tests. The results suggest that the designed approach outperforms its competitors in acquiring promising solutions when handling the considered problem.

Keywords:

integrated production and distribution scheduling; distributed flow shop; brain storm optimization; Q-learning MSC:

90B50

1. Introduction

Facing the competitive market environments, an increasing number of enterprises are adjusting their production and distribution activities in an integrated manner in order to quickly deliver orders to meet customer expectations. Production and distribution are two core components which have a crucial impact in driving the business performance of the supply chain [1]. Traditionally, these two components are individually organized and managed, resulting in poor efficiency from an economic and customer satisfaction point of view [2]. Furthermore, researchers have revealed that the integrated scheduling of production and distribution can reduce total operation costs by 3% to 20% [3]. Hence, integrated production and distribution scheduling (IPDS) is necessary to achieve overall optimization. In the real world, applications involving IPDS appear in many fields, e.g., newspapers, medicine, and furniture manufacturing [4]. In the IPDS process, orders are initially manufactured on machines and, subsequently, timely transported by vehicles to customers in diverse locations. In this situation, managers must make production and distribution decisions concurrently, leading to strong competitiveness and high service quality. Thus, IPDS has attracted much attention from scholars and practitioners [5].

With the development of the globalized economy and the intensive cooperation among enterprises, an emerging distributed manufacturing mode containing multiple factories is replacing the traditional centralized manufacturing mode with a single factory [6,7]. The distributed flow shop is highly utilized as a distributed manufacturing system [8], with its versatile functionality being beneficial in enhancing productivity and lowering costs across many industries, e.g., cell manufacturing, petrochemical, and automotive engines [9]. Motivated by this, distributed flow shop scheduling problems considering various constraints, including blocking, lot streaming, no wait, no idle, and limited buffers, have been addressed in the last decade [10,11,12,13,14]. Moreover, as these problems are recognized as being NP-hard, a variety of metaheuristics have been put forward as a viable means of solving them [13]. Despite the extensive efforts that have been made on distributed flow shop scheduling in terms of problem investigation and method development, few researchers have addressed its integration with distribution planning. However, their integration scheduling has far-reaching applications in reality, e.g., house customization, catering, healthcare, and e-commerce businesses. Inspired by this prospect, this work presents an integrated distributed flow shop and distribution scheduling problem.

Over the past few years, metaheuristics have become commonly used methods for tackling IPDS problems, and their search efficiency has been rigorously confirmed [5]. Nevertheless, these techniques still exhibit certain limitations, such as a lack of self-learning ability and less use of historical information. Hence, to identify more effective solutions, many investigations have integrated advanced tools, e.g., reinforcement learning (RL), into the framework of metaheuristics [15]. The combination of metaheuristics and RL allows for an adaptive parameter adjustment and a dynamic search operator selection, resulting in a significant improvement in optimization performance [16]. With its powerful capability to address optimization problems in diverse application areas (e.g., control, manufacturing, and routing), the combination of metaheuristics and RL has been increasingly recognized among researchers as a suitable approach [17,18,19]. However, it has not been investigated in the context of IPDS problems by prior studies. Accordingly, this work combines a brain storm optimization (BSO) [20] with a RL algorithm, namely, Q-learning, to construct a solution approach. Against the previous literature, threefold contributions are summarized below:

- A multi-objective integrated distributed flow shop and distribution scheduling problem is addressed. To clearly describe it, this work formulates a mathematical model with makespan and total weighted earliness and tardiness minimization;

- A multi-objective Q-learning-based brain storm optimization (MQBSO) is designed to handle the addressed problem. In MQBSO, a double-string representation approach is used to denote a solution, and a random method is employed to initialize the population. In the clustering phase, a dynamic clustering method is adopted to create clusters. In the generating phase, a Q-learning process is performed to guide MQBSO in choosing the generation strategy, where four actions, four states, a reward function, and an improved ε-greedy method are included. In the selecting phase, a new selection method is applied to obtain a better population;

- To examine the performance of MQBSO, experiments are implemented on a group of instances in comparison with four metaheuristics and a CPLEX solver. The experimental results reveal that MQBSO exhibits excellent performance.

We organize the rest of this work as follows: in Section 2, the relative literature is reviewed; in Section 3, the problem under study is presented and formulated; in Section 4, BSO and Q-learning are described; in Section 5, the introduced method is given in detail; numerical experiments and statistical tests are provided in Section 6; and finally, Section 7 ends this work with some conclusions and points out research directions for the future.

2. Literature Review

In this section, three parts are included. First, we reviewed the relevant literature on IPDS problems with respect to production scheduling models, distribution models, objective functions, and solution methods. Second, we reviewed the studies involving the combination of metaheuristics with Q-learning in scheduling problems. Finally, the related studies about the BSO were reviewed.

2.1. Relevant Literature on IPDS

The research of IPDS problems can be traced back to the 1970s and was introduced by Potts [21]. This research addressed a scheduling problem with a single machine, job release time, and job ship time but not the loading capacity or vehicle routing. In recent years, a significant amount of work on IPDS under different environments has been reported [5,22]. Liu and Liu [4] explored an IPDS regarding perishable items, where multiple orders, multiple vehicles, multiple customers, and one machine were involved. They employed CPLEX and an improved large neighborhood search mechanism to minimize total weighted delivery time. Roberto and Marcelo [23] focused on an IPDS to reach total system makespan minimization. They adopted a set of equivalent parallel machines to process jobs and employed a single vehicle to perform multiple route tasks. To address this problem, they developed an iterated greedy algorithm. Jia et al. [24] studied an IPDS aiming at minimizing total weighted tardiness. In this problem, non-identical parallel batch machines were used to process jobs, and multiple vehicles were adopted to execute distribution tasks. They presented an ant colony optimization as a solution approach. In light of the advancements made in the economy and technology, manufacturing systems have become more complex. Yagmur and Kesen [25] put forward an integrated flow shop and distribution scheduling aimed at minimizing the sum of total traveling time and total tardiness. In this problem, jobs were processed on machines in a flow shop and delivered to customers in batches. They proposed a memetic algorithm to deal with it. Mohammadi et al. [26] investigated an integrated flexible job shop and distribution scheduling to minimize the sum of scheduling costs and the weighted sum of earliness and tardiness. To tackle it, a hybrid particle swarm optimization was given.

Unlike the above IPDS problems that dealt with one factory and a vehicle routing problem during production and distribution stages, respectively, research concerning distributed production environments and multi-depot vehicle routing problems have been reported. Gharaei and Jolai [27] addressed an IPDS with minimizing total tardiness and total delivery cost. This problem involved the fabrication of jobs across several non-identical factories, followed by transportation to customers via vehicles. They used a multi-objective evolutionary algorithm combined with a bee method to solve it. Fu et al. [28] introduced an integrated distributed flow shop and a vehicle routing problem with the aim of minimizing the makespan. To figure it out, they designed an enhanced black widow optimization. Additionally, they extended this IPDS problem with time window constraints [29]. To confront the problem, they presented an enhanced brain storm optimization. Qin et al. [30] put forward an IPDS in a distributed hybrid flow shop environment to reach a minimal sum of earliness, tardiness, and delivery cost. Given the NP-hard nature of the problem, they developed an adaptive human-learning-based genetic algorithm.

By analyzing the IPDS problems in the literature, we observed that they have the following characteristics:

- Most studies considered a single factory at the production stage, while the distributed manufacturing system was not fully considered;

- Most research concentrated on a single-objective optimization which usually involved time and cost criteria, while not enough consideration was given to multi-objective optimization;

- Metaheuristics have become the mainstream method to cope with IPDS problems, and their outstanding performance has been verified.

2.2. Q-Learning Applied to Scheduling

Recently, RL has provided an effective approach to handling optimization problems, and has attracted increasing concern from researchers and engineers [31]. Q-learning is a typical RL method, which guides agents to decide the optimum behavior through the trial-and-error method [32]. Motivated by this, many researchers have combined it with metaheuristics to tackle production scheduling problems in recent years. Li et al. [16] applied a Q-learning algorithm to an artificial bee colony algorithm for solving a flow shop scheduling. Zhao et al. [32] put forward a hyper-heuristic combining with a Q-Learning process to deal with a distributed blocking flow shop scheduling. Li et al. [15] developed a new shuffled frog-leaping algorithm with a RL algorithm named Q-learning to work out a distributed assembly hybrid flow shop scheduling. Li et al. [33] introduced a multi-objective evolutionary algorithm based on decomposition with Q-learning for a fuzzy, flexible job shop scheduling. Wang et al. [34] devised a dual Q-learning method to handle an assembly job shop scheduling. Cheng et al. [18] designed a multi-objective Q-learning-based hyper-heuristic method to figure out a mixed shop scheduling. In the aforementioned literature, the combination of metaheuristics with Q-Learning has been studied in various production scheduling problems, e.g., flow shops, job shops, and mix shops. Nevertheless, research on IPDS in a distributed flow shop environment remains scarce. To this end, this work presents a combination of BSO and Q-Learning to address an integrated distributed flow shop and distribution scheduling.

2.3. Relevant Literature on BSO

The BSO algorithm is a promising metaheuristic method, introduced by Shi in 2011 [20]. It has many advantages, such as easy implementation, high stability, and a fine search ability. In past years, BSO and its various variants have been widely developed to settle all kinds of complex and intractable optimization problems. Xu et al. [35] introduced an improved BSO to handle a real-parameter numerical optimization problem. Cheng et al. [36] proposed a modified BSO for solving a knowledge spillover problem. Hao et al. [37] designed a hybrid BSO to tackle a distributed hybrid flow shop scheduling problem. Zhao et al. [38] developed a Q-learning-based BSO to cope with an energy-efficient distributed assembly no-wait flow shop scheduling problem. Ma et al. [39] presented a BSO method with multi-objective search mechanisms to handle a home health care scheduling and routing problem. Ke [40] devised an enhanced BSO with new convergent and divergent operations to deal with a cumulative capacitated vehicle routing problem. Many existing studies have verified its powerful exploration and exploitation abilities. However, to the best of our knowledge, it has rarely been applied to IPDS problems. Thus, this work considers it as a basic optimizer.

3. Proposed Problem and Model

3.1. Problem Description

This work delves into a multi-objective IPDS with minimizing makespan and total weighted earliness and tardiness, consisting of two stages, namely the production stage and the distribution stage. The former comprises multiple identical factories, and each one is a flow shop. A specific set of jobs must be allocated across the flow shops, and each job must follow a fixed route on the machines at their respective flow shop. After processing, the jobs need to be shipped to customers located in different geographical areas via vehicles within time windows during the distribution stage. Consequently, to handle this problem, four decisions are made simultaneously, i.e., factory assignment of jobs, job processing sequence, vehicle allocation of jobs, and the job delivery sequence.

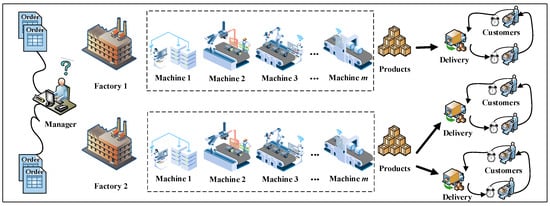

Note that a job is an order from a unique customer. Thus, the terms “job”, “order”, and “customer” are interchangeable as they are directly related to each other on a one-to-one basis. To clarify the problem being investigated, a visual example is shown in Figure 1 along with a mathematical model. A comprehensive listing of the related parameters and variables involved in constructing this model is provided in Table 1.

Figure 1.

A visual example of the devised problem.

Table 1.

The parameters and variables.

3.2. Mathematical Model

For a solution to be considered viable, it must adhere to the following restrictions:

- At time zero, all machines must be available for use;

- Once a machine processes a job, it cannot process another one;

- At no time can a single job be worked on by multiple machines;

- Interrupting a job that has already started on a machine is not allowed;

- Each customer is visited only once;

- Vehicles must obey capacity limits.

Using the definitions provided above, we constructed the mathematical model below.

s.t.

The objective functions represented by Equations (1) and (2) are to minimize makespan and total weighted earliness and tardiness, respectively. The constraints laid out in Equations (3) and (4) dictate that there is precisely one preceding job and one succeeding job for each job on the machine. The constraint outlined in Equation (5) states that each job cannot be allocated to multiple factories simultaneously. Equation (6) signifies that each job owns at most one predecessor and one successor on a machine concurrently. Equation (7) mandates that there exists a strict ordering between two consecutive jobs. Equation (8) denotes that each job is worked on by only one machine at any given time. According to Equation (9), each machine must be dedicated to processing a single job at any given time. Equation (10) limits the number of vehicles. Equations (11) and (12) guarantee that no customer is visited multiple times. Equation (13) shows the constraint of maintaining continuity in vehicle routes. Equation (14) ensures that a vehicle’s maximum capacity limit is strictly observed. Equation (15) stipulates that no vehicle can be employed more than once. Equation (16) demonstrates the start time for vehicle delivery. Equations (17) and (18) provide information regarding when the vehicles arrive at customers, whereas Equation (19) determines when the vehicles depart from customers. Equations (20) and (21) define the makespan and total weighted earliness and tardiness, respectively. Equations (22)–(26) show the variable’s domain.

4. BSO and Q-Learning

4.1. BSO

Vitalized by the human inventive idea creation process, i.e., brainstorming process, Shi initially proposed a promising swarm-based metaheuristic: BSO [20]. The original BSO is straightforward in its design and can be easily implemented. Over the past years, BSO and its derivatives have achieved impressive results in tackling a range of complex problems, including real-parameter numerical optimization, distributed flow shops, and knowledge spillover problems [35,36,37,38,39,40]. Comprehensive experiments have verified that BSO possesses powerful performance to provide an outstanding compromise between exploration and exploitation abilities.

BSO begins with a population composed of multiple candidate individuals and each one means a solution to the optimization problem. Then, it performs three phases called the clustering phase, generating phase, and selecting phase to search solutions. The clustering phase involves using a clustering method to partition the population into several distinct clusters. For each cluster, the best individual in it is denoted as the center individual and the remaining are regarded as the normal ones. In the generating phase, new individuals are produced by employing one or two individuals from clusters. Each newly created individual is paired with an individual from the existing population. In the selecting phase, a selection method is adopted to store the better one from the paired individuals and save it for the next population. Finally, the three phases are iterated until a termination criterion is reached and the optimal solution is returned.

4.2. Q-Learning

Q-learning, as a typical model-free algorithm in RL, was originally introduced by Watkins and Dayan in 1992 [41]. Through a trial-and-error process, the method guides agents towards selecting the most beneficial behavior, and it comprises an environment, an agent, an action set, a state set, and a reward function. The main procedure of Q-learning is shown below. An agent selects an action in terms of its state at time in the environment. Then, it will obtain an immediate reward and its state will be transferred to a new state . Meanwhile, the Q-table is updated according to Equation (27), where stands for the Q-value of conducting an action at state , represents the learning rate, refers to the discount rate, and means the biggest Q-value in the Q-table at state .

The action selection is carried out in line with the Q-table. The values in an initial Q-table are equal to 0, in which the quantity of rows and columns matches the quantity of states and actions, respectively. The -greedy approach is commonly applied, and its details are described below. If a random value is less than , then choose an action randomly. Otherwise, the action with a maximum Q-value is selected.

5. Presented MQBSO Algorithm

This section introduces the framework of MQBSO as follows: Step 1, initialize the population and parameters; Step 2, implement a dynamic clustering method to construct multiple clusters; Step 3, employ a Q-learning process to determine a search strategy for generating new individuals; Step 4, apply a selection approach to create the next population; and Step 5, update the population state and Q-table.

5.1. Solution Representation and Initial Population

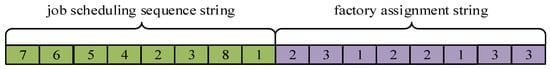

A double-string representation method is employed to represent solutions, that is, individuals. The solution is denoted as a job scheduling sequence string and a factory assignment string , where indicates a job index, , , means a factory index assigned to the job located at the -th position in the job scheduling sequence string, . To better clarify this method, Figure 2 provides a visual example using a scenario where eight jobs are assigned to three factories. In it, jobs 5 and 3 are handled in factory 1, jobs 7, 4, and 2 are assigned to factory 2, and the rest belong to factory 3.

Figure 2.

Illustration of the solution representation method.

Note that the aforementioned approach only focuses on assigning jobs to factories without obtaining other decisions, namely, the sequence in which jobs are processed on machines, allocation of vehicles for jobs, and delivery sequence of jobs. To convert the solution into an actionable plan, we present the following rules:

- Jobs that have been allocated to a factory are processed in a sequence that matches their assigned order on the machines;

- After the production of jobs, they are assigned in a sequential manner to a vehicle. The earlier the processing is completed, the sooner the vehicle is assigned. Meanwhile, vehicles are required not to exceed their maximum load capacity.

- Jobs are transported in the exact sequence in which they are loaded into the vehicle.

According to the above method and rules, we can successfully transform a solution into practicable decisions.

Ensuring both the stability and diversity of MQBSO, this work adopts a random method to initialize feasible solutions for constructing the initial population.

5.2. Clustering

Along with the evolution of BSO, the population should be gathered into various numbers of clusters during the iteration. Thus, this work develops a dynamic clustering approach driven by a nondominated sorting method [42]. This work sorts all individuals in the population and assigns them sorted values based on their dominated relationships. The smaller the sorted value, the better the individual. Hence, this work sets the best individuals with the smallest sorted value of 1 as the center individuals, while the rest as normal individuals. For each center individual, this work constructs a cluster. To conduct such work, the normal ones are randomly assigned to these clusters. Notice that when only one individual has the sorted value of 1, this work selects the individuals with the sorted value of 2 as center individuals.

5.3. Generating

Generating is the core process of BSO, which controls the global search and local search abilities of an algorithm through applying one individual or two individuals in clusters. In this process, three vital parameters are used, i.e., , , . Let decide whether a new individual is constructed by one individual or two individuals. determines whether a new individual is created by utilizing one center individual or one normal individual. decides whether a new individual is produced via employing two center individuals or two normal ones. Based on them, this work introduces a global search strategy and a local search strategy. The details about them are provided as follows:

- A global search strategy is designed when utilizing two randomly chosen center or normal individuals to construct new individuals, where the sequence-based [43] and the two-point crossover [44] methods are adopted. The former is applied to update the job scheduling sequence string and the latter is utilized to update the factory assignment string. The main contents of these two methods are described below.

- Sequence-based crossover method: First, randomly have two job position indexes , . Then, jobs between them in the individual are copied to the same positions of a new individual . Finally, the missing jobs in are added as their appearance in the individual . Hence, the job scheduling sequence string of is obtained. Two-point crossover method: First, two factory position indexes , are generated at random. Then, extract factory assignments beyond and in and place them into the same positions of . Finally, the rest factory assignments in are filled with the elements at the same positions of . Thus, the factory assignment string of is acquired. By employing these two methods, a new individual is successfully produced. To clearly exhibit the generation process, Figure 3 depicts an illustrative example.

Figure 3. Illustration of a new individual generation process.

Figure 3. Illustration of a new individual generation process. - A local search strategy is developed when using one randomly selected center or normal individual to construct new individuals, where five kinds of neighborhood structures, named NS1, NS2, NS3, NS4, and NS5 are introduced. The main idea of NS1 is as follows: randomly produce two job positions for a chosen individual, then swap these two jobs and exchange their respective factory assignments.

Different from NS1, the problem-dependent information is considered for the final four neighborhood structures. Given that one of the primary objectives of the problem investigated is to optimize the makespan criterion, it is essential to focus on improving the performance of the key factory that has a maximum completion time. To reach this, it is vital to adjust both the factory assignment and job processing sequence associated with this factory. According to the key factory theory [45] and the characteristics of the presented problem, this work designs four problem-dependent neighborhood structures. The details about them are given as follows: NS2 randomly swaps two jobs in the key factory; NS3 employs an insert operator, where two jobs in the key factory are randomly chosen, and one of which is inserted into the front of the other, along with corresponding changes are made to their factory assignments; NS4 is similar to NS2 except that the factory assignment of the two jobs is different, and one must be the key factory; and NS5 changes the factory assignment of a job in the key factory.

It is worth mentioning that for each chosen center or normal individual, only one of five neighborhood structures is selected at random to produce a new individual. This work stores the better one between the chosen and new individuals in accordance with their dominated relationship. To intuitively understand the five neighborhood structures, Figure 4 shows five examples of these structures, and the key factory is denoted as “*”.

Figure 4.

Examples of five types of neighborhood structures.

Furthermore, to avoid premature convergence and enhance the local search ability, we adopt a simulated annealing strategy [46]. The steps of this strategy are outlined below.

Step 1: Set an initial temperature , a final temperature , and update the current temperature .

Step 2: Select a center or normal individual at random and set an intermediary individual .

Step 3: Randomly perform one of the five neighborhood structures on to generate a new individual .

Step 4: If dominates , ; otherwise, .

Step 5: Adjust the temperature according to the formula: , and repeat Steps 3–5 until .

Step 6: Generate the new individual and save it.

In the generating phase, Q-learning is implemented to assist MQBSO in choosing a generation strategy, the introduction of this process is described in Section 5.5.

5.4. Selecting

In the original BSO, the selecting phase is performed to store better individuals to enter the next population. It usually saves the better ones between new individuals and their connected individuals in the population. This method may discard some better individuals. In order to strengthen MQBSO’s capability to explore promising directions, this work employs an effective selection method [44] by utilizing the nondominated sorting and crowding distance approaches. The basic idea of this method is shown as follows: First, merge the existing population with new individuals; second, sort all individuals and calculate their crowding distance values; and finally, extract the best individuals as the next population based on their sorted and crowding distance values.

5.5. Q-Learning Process

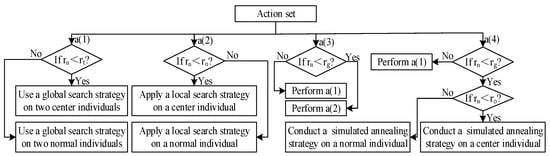

In this work, Q-learning is composed of four actions, four states, a reward function, and an action selection method. Four actions are defined as the combinations of a global search strategy, a local search strategy, and a simulated annealing strategy. Four states are described by the convergence and uniformity metrics. A reward function is designed based on the states and an improved -greedy method is newly developed.

5.5.1. Action Set

The action set consists of four actions, and each one is a combination of a global search strategy, a local search strategy, and a simulated annealing strategy. Action contains a global search strategy. Action includes a local search strategy. Action is composed of a global search strategy and a local search strategy. Action is made up of a global search strategy and a simulated annealing strategy. According to the above designs, Figure 5 gives the framework of an action set in MQBSO, where denotes a random number between .

Figure 5.

Framework of an action set in MQBSO.

This work uses an improved -greedy method [32] to conduct the selection of an action, where is calculated by Equation (28). In Equation (28), denotes the current number of fitness function evaluations, and represents a stopping condition equaling to fitness function evaluations. Randomly generate a float number , if is bigger than , one of four actions is chosen at random; otherwise, the agent selects the action with the highest Q-value as its preferred option. This method ensures that the selection probability of the two cases is about half in the beginning, along with the agent maintaining a certain ability to explore during the early stage. As the algorithm evolves, the action with the highest Q-value is favored. Thus, MQBSO can effectively intensify the exploration ability and reduce the possibility of dropping into local optima.

5.5.2. State Set

To clearly depict the state, a convergence index (C-metric) [47] and a uniformity index (D-metric) [33] are used, which are computed based on the formulas defined below.

As per Equation (29), and refer to the sets of non-dominated solutions acquired in the -th and -th iterations. quantifies the percentage of solutions in set which are dominated by solutions within set , and stands for the size of . Generally, a bigger suggests that the performance of set is better.

As per Equation (30), is a non-dominated solution set and indicates its size. refers to the shortest Euclidean distance from the -th solution and any other solution in , and means the average distance as measured using the formula presented in Equation (31). Usually, a higher D-metric value implies a better performance of uniformity.

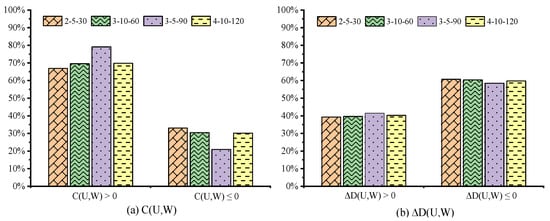

The occurrence percentage of , , , and in the entire search process of MQBSO on four instances are displayed in Figure 6. We see that each of the four cases appear. As a result, it is rational to consider four combinations of and as four states, i.e., : and , : and , : and , and : and .

Figure 6.

The occurrence percentage on each case of and on four instances.

5.5.3. Reward

In this work, four states are labeled as , , , and . The bigger the label value of the state, the worse the state. means the best state while is the worst one. Thus, rewards are set based on the label value of states. Four reward values are 5, 3, 3, and 1 for these four states, respectively.

After a detailed discussion, Algorithm 1 provides the main procedure of MQBSO.

| Algorithm 1: MQBSO |

| Input: Population size , three generation parameters , , , learning rate , discount rate , and a stopping condition . |

| Output: A non-dominated solution set. |

| Initialize the population and parameters (c.f. Section 5.1) |

| While the stopping condition is not reached do |

| Implement a dynamic clustering method in the clustering phase (c.f. Section 5.2). |

| Employ a Q-learning process in the generating phase (c.f. Section 5.3 and Section 5.5). |

| Apply a selection method in the selecting phase (c.f. Section 5.4). |

| Update the population state and Q-table. |

| End while |

6. Experimental Evaluations

In this section, comparison experiments and statistical tests are conducted to study MQBSO’s performance. MQBSO and comparison algorithms are programmed in C++ and performed on a personal computer equipped with HUAWEI Core i5-8265U CPU and 8 GB RAM in Qingdao, China. Four comparison methods are well-known metaheuristics: multi-objective whale swarm algorithm (MOWSA) [43], multi-objective brain storm optimization (MOBSO) [44], nondominated sorting genetic algorithm II (NSGA-II) [42], and multi-objective evolutionary algorithm based on decomposition (MOEA/D) [48]. In fairness, this work sets fitness function evaluations as a termination criterion, and all methods independently solve each instance 20 times.

6.1. Test Instances

Since the previous work does not address the presented problem, we cannot find available benchmarks. Therefore, we produce a set of instances based on the problems’ features. The benchmarks regarding flow shop scheduling and vehicle routing problems are employed to construct instances, i.e., the VFR benchmark [49] for the former, and the Gehring & Homberger benchmark [50] for the latter.

In the VFR benchmark, “VFRa_b_c” means the -th instance of a test problem having jobs and machines. Four instances are adopted, including VFR30_5_1, VFR30_10_1, VFR60_5_1, and VFR60_10_1, respectively. The instances VFR90_5_1, VFR90_10_1, VFR120_5_1, and VFR120_10_1 do not exist in the VFR benchmark. Thus, this work creates them by applying the information of the first 90, 120 jobs and the first 5, 10 machines regarding the VFR100_20_1 and VFR200_20_1.

In the Gehring & Homberger benchmark, an instance named C1_2_1 is applied, where coordinates, time windows, and service times are provided. Through choosing the first 30, 60, 90, and 120 customers from it, four instances, namely, C1_2_1, C1_2_1, C1_2_1, and C1_2_1 are obtained. The chosen instances contain eight combinations of : , where and indicate the number of jobs and machines, respectively. This work sets the number of factories . Hence, a total of 24 test instances are successfully generated.

For convenience, Table 2 provides abbreviations for the 24 test instances. In it, the first instance called “2-5-30” consists of 2 factories, 5 machines, and 30 jobs, in which the production and distribution data are from VFR30_5_1 and C1_2_1, respectively. The remaining can be deduced by analogy. Furthermore, this work sets the load of jobs from an interval [10,40], the maximum load capacity of vehicles is 100, 125, 150, and 200 when the quantity of jobs is 30, 60, 90, and 120, respectively, and the unit earliness and tardiness weights of jobs are generated at random from .

Table 2.

Abbreviation information of 24 test instances.

6.2. Parameters Setting

An orthogonal experiment method [51] is employed in this research to implement the parameter experiment on a moderate-size instance named “3-5-60” with 3 factories, 5 machines and 60 jobs. This method is viewed as a modern technique for analyzing and optimizing parameters across various research fields. Generally, practical engineering design and optimization tasks require the consideration of three or more influential factors, leading to the application of full factorial design analysis. MQBSO considers four parameters, i.e., , , , and . Each parameter has four levels: , , , , and an array containing 16 combinations is created. To promote fairness in the comparison of different algorithms, this work sets the same number of fitness function evaluations as a stopping condition, i.e., , where and are the quantity of jobs and machines, respectively. Each combination is executed 20 times individually and the results are analyzed by applying the IGD-metric. The average IGD (AIGD) results are calculated and provided in Table 3. Through analyzing the obtained results, a hopeful parameter combination is produced, i.e., , , , .

Table 3.

Orthogonal array and AIGD results of MQBSO.

Similarly, the parameters of four peers are achieved via using the same method, and their parameters are displayed in Table 4. In addition, the learning rate and discount rate are set by drawing on existing research [32], which are 0.5 and 0.8, respectively.

Table 4.

Parameter settings of four compared algorithms.

6.3. Performance Metrics

Convergence and uniformity are two significant measures for examining the efficiency of multi-objective algorithms. Hence, this work utilizes three common performance metrics to carry out algorithmic measurements, i.e., C-metric [47], Inverted Generational Distance-metric (IGD-metric) [47], and Hypervolume-metric (HV-metric) [52]. The C-metric is defined in Equation (29), and the details of the IGD- and HV-metrics are offered below.

The IGD-metric is applied to reflect both convergence and uniformity of a method and its calculation formula is shown as:

where and mean an obtained solution set by an algorithm and the Pareto optimal front, respectively. In reality, the Pareto optimal front is difficult to achieve for the examined problem. Hence, this work generates by merging the obtained solution sets of all employed algorithms and discarding the dominated solutions. represents the shortest Euclid distance from a solution in and any other solution in , and is the quantity of solutions in . In this work, all objective values are normalized within , and an algorithm with lower IGD-metric values is ideal.

The HV-metric is also used to account for the convergence and uniformity of an algorithm. It estimates the volume of the area covered by the obtained nondominated solutions of an algorithm and a known reference point . It is calculated as:

where the Lebesgue measure is implemented to determine the volume of a set. denotes the quantity of solutions in the solution set , and is the super volume of the area coved by the -th solution in and . As in the IGD-metric, the obtained objective values of all algorithms are transformed into the range of , and is . Generally, a larger HV-metric value shows a better performance.

6.4. Experimental Results and Analysis

This section compares MQBSO with its four rivals on the C-metric, IGD-metric, and HV-metric. The four peers are, respectively, MOWSA, MOBSO, NSGA-II, and MOEA/D, and the results are presented in Table 5, Table 6 and Table 7.

Table 5.

Results of MQBSO and its peers concerning C-metric.

Table 6.

Results of MQBSO and its peers about IGD-metric.

Table 7.

Results of MQBSO and its peers regarding HV-metric.

Table 5 exhibits the comparison results concerning C-metric, where the symbols “QB”, “WS”, “BS”, “GA”, and “EA” indicate the nondominated solution sets obtained by MQBSO, MOWSA, MOBSO, NSGA-II, and MOEA/D, respectively. A higher value of suggests a superior performance of MQBSO, . As shown in Table 5, the values of and are bigger than those of and in 22 instances, along with the values of and which are larger than those of and in 21 instances. Thus, MQBSO is significantly better than its peer methods. Furthermore, we find that MQBSO obtains better average results than MOWSA, MOBSO, NSGA-II, and MOEA/D. In light of the comparative analysis results, it is evident that MQBSO is a valid method.

Table 6 reports the comparison results with respect to the IGD-metric. Usually, a lower IGD-metric value means a better performance of an algorithm. As displayed in Table 6, MQBSO produces the best results in 22 out of 24 test instances compared with MOWSA, MOBSO, NSGA-II, and MOEA/D. Furthermore, the average values of all instances are shown at the bottom of Table 6. The average values of MQBSO, MOWSA, MOBSO, NSGA-II, and MOEA/D are 0.1077, 0.3497, 0.3714, 0.4178, and 0.4655, respectively. Clearly, MQBSO achieves a minimum average result. Thereby, we verify that MQBSO can obtain more promising solutions than its competitors in settling the studied problem.

Table 7 provides the comparison results regarding the HV-metric, where an algorithm with higher HV-metric values exhibits better than those with lower values. The results demonstrated in Table 7 ensure that the solutions obtained by MQBSO can cover a large volume on most test instances, meaning that MQBSO is superior. MQBSO is better than MOWSA and MOBSO in 22 instances, and it performs better than NSGA-II and MOEA/D in 20 out of 24 test instances. Thereby, we verify that MQBSO is competent at generating better solutions. In addition, by analyzing the average values acquired by MQBSO, MOWSA, MOBSO, NSGA-II, and MOEA/D, we draw a similar conclusion, the average values are, respectively, 0.8440, 0.5340, 0.4696, 0.4269, and 0.3785. Based on the ongoing study, we declare that MQBSO is an outstanding solver.

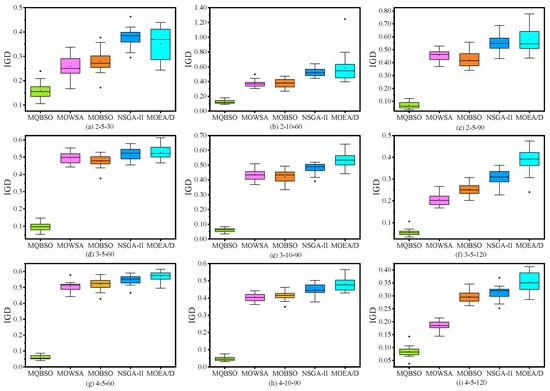

To clearly illustrate the experimental findings of MQBSO and other four rivals, boxplots are drawn to visualize the performance of all algorithms across nine instances with varying sizes. Figure 7 and Figure 8, respectively, display the boxplots of MQBSO and its competitors with respect to IGD- and HV-metric. According to Figure 7, we find that MQBSO achieves a significantly lower median value than its rivals across all selected instances concerning IGD-metric. Similarly, as can be observed from Figure 8, MQBSO owns a greater median value than its rivals in all the chosen instances in terms of the HV-metric. Therefore, the collected experimental results and subsequent analysis state that MQBSO has an obvious advantage over its rivals in handling the problem under study.

Figure 7.

Boxplots for the results of nine test instances regarding the IGD-metric.

Figure 8.

Boxplots for the results of nine test instances regarding the HV-metric.

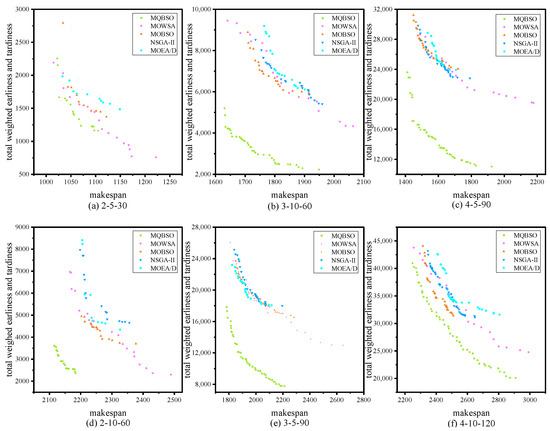

For a further comparison, Figure 9 shows the distribution graphs of the nondominated solutions achieved by MQBSO and its peers in settling six instances with different sizes. From Figure 9, it is apparent that the nondominated solutions obtained by MQBSO are consistently much better than its rivals on the chosen test instances. Specifically, we see that MQBSO produces uniformly distributed and better approximated solutions. Hence, MQBSO can be taken as an outstanding optimizer.

Figure 9.

Nondominated solutions obtained by MQBSO and its peers.

Moreover, this work applies statistical tests to verify whether the performance difference between MQBSO and its peers is significantly different. The Friedman and Wilcoxon signed rank tests [53,54,55] are carried out on each instance of the IGD- and HV-metrics at level of significance. The average rank values of MQBSO, MOWSA, MOBSO, NSGA-II, and MOEA/D with respect to the IGD-metric are 1.3333, 2.5417, 2.7083, 3.7917, and 4.6250, respectively, and those of the five algorithms regarding the HV-metric are 1.5000, 2.1667, 3.1667, 3.7500, and 4.4167, respectively. Obviously, MQBSO obtains a minimum average rank value on the two metrics. Furthermore, the test statistic indices regarding the IGD- and HV-metrics are calculated, i.e., 39.8483 and 8.0714. These values surpass the critical value of 2.53. Accordingly, the performance difference among all algorithms is statistically significant. The statistical results of the Wilcoxon signed rank test are displayed in Table 8, with respect to the IGD- and HV-metrics. The acquired values are greater than the values, and the obtained -values are lower than 0.05. As a consequence, we state that MQBSO significantly outperforms its peers.

Table 8.

Results of the Wilcoxon signed rank test.

6.5. Effectiveness of the Q-Learning Process in MQBSO

To demonstrate the effect of the Q-learning process in MQBSO, a variant without using it (named MBSO) is constructed. In MBSO, the generation strategy is selected at random to produce new individuals. Table 9 displays the comparison results of MQBSO and MBSO regarding the C-, IGD-, and HV-metrics. MQBSO acquires better results than MBSO on 21 instances of the C- and HV-metrics and performs better than MBSO in 20 instances with respect to the IGD-metric. In addition, MQBSO has an advantage over MBSO in terms of the average value for three metrics. Thus, the Q-learning process is effective in MQBSO when solving the investigated problem.

Table 9.

Results of MQBSO and MBSO in the C-, IGD-, and HV-metrics.

6.6. Comparison of Five Algorithms in CPU Time

To examine the computation efficiency of MQBSO, this work records the CPU time of MQBSO and its competitors in handling all the employed test instances. The instances are organized into groups based on their job quantity, and the average CPU time is computed for each group, as provided in Table 10. The average CPU time of MOWSA and MOBSO is longer than that of MQBSO in all groups. Thus, MQBSO has better abilities to tackle the considered problem by utilizing less time. The average CPU time of NSGA-II and MOEA/D exhibits a slight advantage over MQBSO in all groups. However, the difference between MQBSO and NSGA-II, along with MQBSO and MOEA/D is not very large. Thus, MQBSO is still regarded as an outstanding solver.

Table 10.

Average CPU time of all the used algorithms in different groups.

6.7. Comparison of MQBSO and CPLEX

To verify MQBSO’s performance for seeking optimal solutions, CPLEX is used for comparisons. The experiments are conducted as follows: this work develops three instances based on the first 8 jobs, the first 10 jobs, and all jobs of a test problem with 12 jobs, respectively. Table 11 and Table 12 provide the information of the used test problem.

Table 11.

Information for the test problem.

Table 12.

The drive time for the numerical example.

Considering that CPLEX cannot cope with a multi-objective optimization problem, we employ the weighted sum method to develop a variant of the problem under study. For each instance, three weight vectors are used, i.e., (1.0, 0.0), (0.5, 0.5), and (0.0, 1.0), to construct three sub-problems. There are two factories, and each factory contains two machines and two vehicles. The maximum load capacity of vehicles is 60, 80, and 100 when the number of jobs is 8, 10, and 12, respectively. The service time of customers is 90. This work sets the maximum running time of CPLEX as 3600 s. In scenarios where CPLEX fails to identify the global optimal solution in the allowed time, it outputs an approximate optimal value (AOV) instead. Table 13 shows the comparison results.

Table 13.

Comparison results of MQBSO and CPLEX.

From Table 13, we can see that CPLEX achieves an optimal solution on two sub-problems and obtains the same value as MQBSO on one sub-problem when solving the instance with 8 jobs. On the contrary, MQBSO shows better than CPLEX on two sub-problems for solving the instances with 10 and 12 jobs, and it acquires the same value as CPLEX on one sub-problem. Moreover, the running time of MQBSO is significantly shorter than CPLEX. Thus, we can infer that MQBSO exhibits clear advantages over CPLEX in handling the proposed problem if the problem scale is larger.

Through analyzing the achieved experimental and statistical results in the previous sections, we verify that MQBSO has a powerful ability to gain better results in handling the problem under consideration. In MQBSO, a dynamic clustering method is designed in the clustering phase. A global search strategy, a local search strategy, and a simulated annealing strategy are used in the generating phase. A Q-learning process is conducted to dynamically choose the generation strategy. It includes four actions defined as the combinations of these strategies, four states described by convergence and uniformity metrics, a reward function, and an improved -greedy method. A selection method is employed in the selecting phase. By employing these methods iteratively, MQBSO has a superior trade-off between its abilities to explore and exploit the solution space, thus rendering it an outstanding solver for the problem being examined.

7. Conclusions

This work, for the first time, addresses a multi-objective integrated distributed flow shop and distribution scheduling to minimize makespan and total weighted earliness and tardiness. A mathematical model is given to define it and an MQBSO is developed to cope with it. In MQBSO, a double-string representation method is applied, and a dynamic clustering method is designed. Q-learning is applied to choose the generation strategy, where four actions, four states, a reward function, and an improved -greedy approach are designed. To assess the performance of MQBSO, numerical experiments are implemented on test instances by comparing with MOWSA, MOBSO, NSGA-II, MOEA/D, and CPLEX. The results suggest that MQBSO is superior.

The future directions are pointed out, which are encouraged to expand our study in several aspects, e.g., inventory, multiple time windows, stochastic models, flexible distributed scheduling, and energy-related objectives [56,57]. In addition, we will focus on RL to design effective solution methods.

Author Contributions

Conceptualization, J.X. and S.Z.; methodology, S.Z.; software, S.Z. and Y.Q.; validation, S.Z. and Y.Q.; formal analysis, S.Z. and Y.Q.; investigation, S.Z.; resources, J.X.; data curation, Y.Q.; writing—original draft preparation, S.Z. and Y.Q.; writing—review and editing, S.Z., J.X. and Y.Q.; visualization, S.Z.; supervision, J.X.; project administration, J.X.; funding acquisition, J.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part, by the National Natural Science Foundation of China: grant number 72271048.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rafiei, H.; Safaei, F.; Rabbani, M. Integrated production-distribution planning problem in a competition-based four-echelon supply chain. Comput. Ind. Eng. 2018, 119, 85–99. [Google Scholar] [CrossRef]

- Ganji, M.; Kazemipoor, H.; Molana, S.M.H.; Sajadi, S.M. A green multi-objective integrated scheduling of production and distribution with heterogeneous fleet vehicle routing and time windows. J. Clean. Prod. 2020, 259, 120824. [Google Scholar] [CrossRef]

- Chandra, P.; Fisher, M.L. Coordination of production and distribution planning. Eur. J. Oper. Res. 1994, 72, 503–517. [Google Scholar] [CrossRef]

- Liu, L.; Liu, S. Integrated production and distribution problem of perishable products with a minimum total order weighted delivery time. Mathematics 2020, 8, 146. [Google Scholar] [CrossRef]

- Moons, S.; Ramaekers, K.; Caris, A.; Arda, Y. Integrating production scheduling and vehicle routing decisions at the operational decision level: A review and discussion. Comput. Ind. Eng. 2017, 104, 224–245. [Google Scholar] [CrossRef]

- Wang, J.J.; Wang, L. A bi-population cooperative memetic algorithm for distributed hybrid flow-shop scheduling. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 5, 947–961. [Google Scholar] [CrossRef]

- Shao, W.S.; Shao, Z.S.; Pi, D.C. Modeling and multi-neighborhood iterated greedy algorithm for distributed hybrid flow shop scheduling problem. Knowl.-Based Syst. 2020, 194, 105527. [Google Scholar] [CrossRef]

- Wang, J.J.; Wang, L. A knowledge-based cooperative algorithm for energy-efficient scheduling of distributed flow-shop. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 1805–1819. [Google Scholar] [CrossRef]

- Lu, C.; Gao, L.; Gong, W.Y.; Hu, C.Y.; Yan, X.S.; Li, X.Y. Sustainable scheduling of distributed permutation flow-shop with non-identical factory using a knowledge-based multi-objective memetic optimization algorithm. Swarm Evol. Comput. 2021, 60, 100803. [Google Scholar] [CrossRef]

- Shao, W.S.; Pi, D.C.; Shao, Z.S. Optimization of makespan for the distributed no-wait flow shop scheduling problem with iterated greedy algorithms. Knowl.-Based Syst. 2017, 137, 163–181. [Google Scholar] [CrossRef]

- Gong, D.W.; Han, Y.Y.; Sun, J.Y. A novel hybrid multi-objective artificial bee colony algorithm for blocking lot-streaming flow shop scheduling problems. Knowl.-Based Syst. 2018, 148, 115–130. [Google Scholar] [CrossRef]

- Zheng, J.; Wang, L.; Wang, J.J. A cooperative coevolution algorithm for multi-objective fuzzy distributed hybrid flow shop. Knowl.-Based Syst. 2020, 194, 105536. [Google Scholar] [CrossRef]

- Fu, Y.P.; Hou, Y.S.; Wang, Z.F.; Wu, X.W.; Gao, K.Z.; Wang, L. A review of distributed scheduling problems in intelligent manufacturing systems. Tsinghua Sci. Technol. 2021, 26, 625–645. [Google Scholar] [CrossRef]

- Zhao, F.Q.; Shao, D.Q.; Wang, L.; Xu, T.P.; Zhu, N.N.; Jonrinal, D. An effective water wave optimization algorithm with problem-specific knowledge for the distributed assembly blocking flow-shop scheduling problem. Knowl.-Based Syst. 2022, 243, 108471. [Google Scholar] [CrossRef]

- Li, H.X.; Gao, K.Z.; Duan, P.Y.; Li, J.Q.; Zhang, L. A novel shuffled frog-leaping algorithm with reinforcement learning for distributed assembly hybrid flow shop scheduling. Int. J. Prod. Res. 2022, 61, 1233–1251. [Google Scholar] [CrossRef]

- Li, H.X.; Gao, K.Z.; Duan, P.Y.; Li, J.Q.; Zhang, L. An improved artificial bee colony algorithm with Q-learning for solving permutation flow-shop scheduling problems. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 2684–2693. [Google Scholar] [CrossRef]

- Luo, B.; Liu, D.; Huang, T.; Wang, D. Model-free optimal tracking control via critic-only Q-learning. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 2734–2744. [Google Scholar] [CrossRef] [PubMed]

- Cheng, L.X.; Tang, L.X.; Zhang, L.P.; Zhang, Z.K. Multi-objective Q-learning-based hyper-heuristic with Bi-criteria selection for energy-aware mixed shop scheduling. Swarm Evol. Comput. 2022, 69, 100985. [Google Scholar] [CrossRef]

- Bdeir, A.; Boeder, S.; Dernedde, T.; Tkachuk, K.; Falkner, J.K.; Schmidt-Thieme, L. RP-DQN: An application of Q-learning to vehicle routing problems. Adv. Artif. Intell. 2021, 12873, 3–16. [Google Scholar] [CrossRef]

- Shi, Y.H. Brain storm optimization algorithm. In International Conference in Swarm Intelligence; Springer: Berlin/Heidelberg, Germany, 2011; pp. 303–309. [Google Scholar]

- Potts, C.N. Analysis of a heuristic for one machine sequencing with release dates and delivery times. Oper. Res. 1980, 28, 1436–1441. [Google Scholar] [CrossRef]

- Chen, Z.L. Integrated production and outbound distribution scheduling: Review and extensions. Oper. Res. 2010, 58, 120–148. [Google Scholar] [CrossRef]

- Roberto, F.T.N.; Marcelo, S.N. An iterated greedy approach to integrate production by multiple parallel machines and distribution by a single capacitated vehicle. Swarm Evol. Comput. 2019, 44, 612–621. [Google Scholar] [CrossRef]

- Jia, Z.H.; Cui, Y.F.; Li, K. An ant colony-based algorithm for integrated scheduling on batch machines with non-identical capacities. Appl. Intell. 2022, 52, 1752–1769. [Google Scholar] [CrossRef]

- Yagmur, E.; Kesen, S.E. A memetic algorithm for joint production and distribution scheduling with due dates. Comput. Ind. Eng. 2020, 142, 106342. [Google Scholar] [CrossRef]

- Mohammadi, S.; Al-e-Hashem, S.M.; Rekik, Y. An integrated production scheduling and delivery route planning with multi-purpose machines: A case study from a furniture manufacturing company. Int. J. Prod. Econ. 2020, 219, 347–359. [Google Scholar] [CrossRef]

- Gharaei, A.; Jolai, F. A multi-agent approach to the integrated production scheduling and distribution problem in multi-factory supply chain. Appl. Soft Comput. 2018, 65, 577–589. [Google Scholar] [CrossRef]

- Fu, Y.P.; Hou, Y.S.; Chen, Z.H.; Pu, X.J.; Gao, K.Z.; Sadollah, A. Modelling and scheduling integration of distributed production and distribution problems via black widow optimization. Swarm Evol. Comput. 2021, 68, 101015. [Google Scholar] [CrossRef]

- Hou, Y.S.; Fu, Y.P.; Gao, K.Z.; Zhang, H.; Sadollah, A. Modelling and optimization of integrated distributed flow shop scheduling and distribution problems with time windows. Expert Syst. Appl. 2021, 187. [Google Scholar] [CrossRef]

- Qin, H.; Li, T.; Teng, Y.; Wang, K. Integrated production and distribution scheduling in distributed hybrid flow shops. Memetic Comput. 2021, 13, 185–202. [Google Scholar] [CrossRef]

- Wang, L.; Pan, Z.X.; Wang, J.J. A review of reinforcement learning based intelligent optimization for manufacturing scheduling. Complex Syst. Model. Simul. 2021, 1, 257–270. [Google Scholar] [CrossRef]

- Zhao, F.; Di, S.; Wang, L. A hyperheuristic with Q-learning for the multiobjective energy-efficient distributed blocking flow shop scheduling problem. IEEE Trans. Cybern. 2022, 53, 3337–3350. [Google Scholar] [CrossRef] [PubMed]

- Li, R.; Gong, W.Y.; Lu, C. A reinforcement learning based RMOEA/D for bi-objective fuzzy flexible job shop scheduling. Expert Syst. Appl. 2022, 203, 117380. [Google Scholar] [CrossRef]

- Wang, H.X.; Sarker, B.R.; Li, J.; Li, J. Adaptive scheduling for assembly job shop with uncertain assembly times based on dual Q-learning. Int. J. Prod. Res. 2020, 59, 5867–5883. [Google Scholar] [CrossRef]

- Xu, P.; Luo, W.; Lin, X. BSO20: Efficient brain storm optimization for real-parameter numerical optimization. Complex Intell. Syst. 2021, 7, 2415–2436. [Google Scholar] [CrossRef]

- Cheng, S.; Zhang, M.; Ma, L.; Lu, H.; Wang, R.; Shi, Y.H. Brain storm optimization algorithm for solving knowledge spillover problems. Neural Comput. Appl. 2021, 35, 12247–12260. [Google Scholar] [CrossRef]

- Hao, J.H.; Li, J.Q.; Du, Y.; Song, M.X.; Duan, P.; Zhang, Y.Y. Solving distributed hybrid flowshop scheduling problems by a hybrid brain storm optimization algorithm. IEEE Access 2019, 7, 66879–66894. [Google Scholar] [CrossRef]

- Zhao, F.Q.; Hu, X.T.; Wang, L.; Xu, T.P.; Zhu, N.N.; Jonrinaldi. A reinforcement learning-driven brain storm optimisation algorithm for multi-objective energy-efficient distributed assembly no-wait flow shop scheduling problem. Int. J. Prod. Res. 2023, 61, 2854–2872. [Google Scholar] [CrossRef]

- Ma, X.M.; Fu, Y.P.; Gao, K.Z.; Zhu, L.H.; Sadollah, A. A multi-objective scheduling and routing problem for home health care services via brain storm optimization. Complex Syst. Model. Simul. 2023, 3, 32–46. [Google Scholar] [CrossRef]

- Ke, L. A brain storm optimization approach for the cumulative capacitated vehicle routing problem. Memetic Comput. 2018, 10, 411–421. [Google Scholar] [CrossRef]

- Watkins, C.J.C.H.; Dayan, P. Technical note: Q-learning. Mach. Learn. 1992, 8, 279–292. [Google Scholar] [CrossRef]

- Deb, K.; Pratap, A.; Agarwal, S. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197. [Google Scholar] [CrossRef]

- Wang, G.C.; Gao, L.; Li, X.Y.; Li, P.G.; Tasgetiren, M.F. Energy-efficient distributed permutation flow shop scheduling problem using a multi-objective whale swarm algorithm. Swarm Evol. Comput. 2020, 57, 100716. [Google Scholar] [CrossRef]

- Fu, Y.P.; Tian, G.D.; Fard, A.M.H.; Ahmadi, A.; Zhang, C.Y. Stochastic multi-objective modelling and optimization of an energy-conscious distributed permutation flow shop scheduling problem with the total tardiness constraint. J. Clean. Prod. 2019, 226, 515–525. [Google Scholar] [CrossRef]

- Lu, C.; Gao, L.; Yi, J.; Li, X. Energy-efficient scheduling of distributed flow shop with heterogeneous factories: A real-world case from automobile industry in China. IEEE Trans. Ind. Inform. 2020, 17, 6687–6696. [Google Scholar] [CrossRef]

- Kuidi, M. A memetic algorithm with novel semi-constructive evolution operators for permutation flowshop scheduling problem. Appl. Soft Comput. 2020, 94, 106458. [Google Scholar] [CrossRef]

- Zitzler, E.; Deb, K.; Thiele, L. Comparison of multiobjective evolutionary algorithms: Empirical results. Evol. Comput. 2000, 8, 173–195. [Google Scholar] [CrossRef]

- Zhang, Q.F.; Li, H. A multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731. [Google Scholar] [CrossRef]

- Vallada, E.; Ruiz, R.; Framinan, J.M. New hard benchmark for flowshop scheduling problems minimising makespan. Eur. J. Oper. Res. 2015, 240, 666–677. [Google Scholar] [CrossRef]

- Gehring, H.; Homberger, J. A parallel hybrid evolutionary metaheuristic for the vehicle routing problem with time windows. In Proceedings of EUROGEN99; Springer: Berlin/Heidelberg, Germany, 1999; Volume 2, pp. 57–64. [Google Scholar]

- Karna, S.K.; Sahai, R. An overview on Taguchi method. Int. J. End. Math. Sci. 2012, 1, 1–7. [Google Scholar]

- Zitzler, E.; Thiele, L. Multiobjective evolutionary algorithms: A comparative case study and the strength Pareto approach. IEEE Trans. Evol. Comput. 1999, 3, 257–271. [Google Scholar] [CrossRef]

- Friedman, M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 1937, 32, 675–701. [Google Scholar] [CrossRef]

- Wilcoxon, F.; Katti, S.K.; Wilcox, R.A. Critical values and probability levels for the Wilcoxon rank sum test and the Wilcoxon signed rank test. Sel. Tables Math. Stat. 1970, 1, 171–259. [Google Scholar]

- Hou, Y.S.; Wang, H.F.; Fu, Y.P.; Gao, K.Z.; Zhang, H. Multi-objective brain storm optimization for integrated scheduling of distributed flow shop and distribution with maximal processing quality and minimal total weighted earliness and tardiness. Comput. Ind. Eng. 2023, 179, 109217. [Google Scholar] [CrossRef]

- Li, F.; Chen, Z.L.; Tang, L. Integrated production, inventory and delivery problems: Complexity and algorithms. INFORMS J. Comput. 2017, 29, 232–250. [Google Scholar] [CrossRef]

- Wang, J.; Song, G.; Liang, Z.; Demeulemeester, E.; Hu, X.; Liu, J. Unrelated parallel machine scheduling with multiple time windows: An application to earth observation satellite scheduling. Comput. Oper. Res. 2023, 149, 106010. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).