Abstract

In this paper, we study the asymptotic properties of some conditional functional parameters, namely the distribution function, the density, and the hazard function, for an explanatory variable taking values in an infinite-dimensional Hilbert space and a real response variable, in a quasi-associated dependence framework. We consider the nonparametric estimation of the conditional distribution function by the kernel method under quasi-associated dependence, and we establish, under general hypotheses, the almost complete convergence, with rate, of the estimator built in the quasi-associated case. The conditional hazard function is then estimated by combining the results for the conditional distribution function and the conditional density. We establish the asymptotic normality of the suitably normalized kernel estimator of the conditional hazard function, and we give the asymptotic variance explicitly. Simulation studies were conducted to investigate the behavior of this asymptotic property on finite samples. All statistical analyses were performed using R software.

Keywords:

distribution function; asymptotic property; conditional risk function; weak dependence; functional data

MSC:

62G05; 62F12; 62G20; 62M09

1. Introduction

Mathematical and statistical analysis techniques have gained considerable relevance in a variety of scientific fields in recent years, including engineering, economics, clinical medicine, and healthcare. In particular, it has been demonstrated how these approaches may assist in such vital areas as comprehension, prediction, correlation, diagnosis, therapy, and data processing.

It is crucial to note that the study of conditional models, which is included in nonparametric functional data analysis, is one of the most significant approaches of statistical analysis. An examination of this kind is carried out with the primary purpose of investigating and modeling the connection that exists between a scalar response variable and a functional regressor. In addition, two essential asymptotic features are the consistency and the asymptotic normality of particular statistical parameter estimators.

Functional data are the subject of this research. As defined in Ref. [1], functional data analysis (FDA) is the branch of statistics that analyzes infinite-dimensional variables, including curves, sets, and images. The “Big Data” revolution has spurred its rapid expansion over the past 20 years.

This may be seen by examining the history of the topic (for an example, see [2]). In [3], density and mode estimation for data in a normed vector space and the problem of high dimensionality in functional data are discussed, and potential remedies are offered. Nonparametric models for regression estimation were investigated in [4].

The treatment of functional data today typically relies on modern theory. For example, ref. [5] established consistency rates, uniform over a subset of the space of the explanatory variable, for a variety of conditional functionals, such as the regression function, the conditional cumulative distribution function, and the conditional density.

Uniform-in-bandwidth (UIB) consistency was extended to the ergodic setting, and the rates of consistency for various functional nonparametric models were investigated in [6]. These models included the regression function, the conditional hazard function, the conditional distribution, and the conditional density.

In recent years, there has been a surge of interest in the statistical analysis of functional data. Such data arise in econometrics, medicine, environmental science, and many other fields. In the functional setting, ref. [4] made the first attempt to estimate the conditional density function and its derivatives, and the authors obtained almost complete convergence in the i.i.d. case. Since this work was published, much more research has been done on estimating the conditional density and its derivatives, especially for computing the conditional mode. Indeed, ref. [7] demonstrated that a kernel estimator of the conditional mode converges almost surely to the true value for α-mixing data.

In [8,9], the conditional mode was estimated by the point at which the derivative of the kernel density estimator vanishes. The results obtained with this approach were comparable, but the emphasis was placed on the asymptotic normality of the estimator, which was established in the i.i.d. and mixing cases, respectively. Ref. [10] determined precisely the dominant terms of the quadratic error of the kernel density estimator.

We refer the reader to [11] for further information on the choice of the smoothing parameter when estimating the conditional density with a functional explanatory variable.

Quasi-association describes random variables that exhibit a weak form of dependence on one another; examples of research dealing with positively and negatively dependent random variables are [12,13,14]. In Ref. [15], the authors were the first to introduce the concept of quasi-association for the analysis of real-valued stochastic processes. It is a notable special case of weak dependence. It was used by [16] for real-valued random fields, and it provides a unified approach to studying families of positively dependent and negatively dependent random variables.

To our knowledge, only a very small number of published papers address nonparametric estimation for quasi-associated random variables. The study in [17] focuses on limit theorems for quasi-associated Hilbertian random variables. The work in [18] explores asymptotic results for an M-estimator of the regression function under weak dependence, and in Ref. [19], the authors studied the estimation of the conditional hazard function in the quasi-associated framework under a functional single-index structure. Ref. [20] established the asymptotic normality of this latter estimator.

For relative regression, ref. [21] explored nonparametric estimation for associated random variables; related results for quasi-associated data were obtained separately in [22,23]. The authors in [24] establish the strong uniform consistency of estimators of partial derivatives of multivariate density functions under weak dependence over compact subsets of $\mathbb{R}^d$, and they determine the corresponding rates of convergence as well as the asymptotic normality of these estimators.

In [25], the authors examine the k-nearest neighbors (k-NN) method in a single-index regression model. They focus on the case where the explanatory variable takes values in a functional space, under a weak dependence condition. The main result of this study is the asymptotic distribution of the k-NN single-index estimator.

The study in [26] examines the k-NN approach within the single-index regression model when the predictor is functional and the response is scalar. Its main result is the almost complete rate of convergence under the assumption of weak dependence.

The primary outcome of the study referenced in [27] is the establishment of the asymptotic properties, specifically the almost complete convergence rates and the asymptotic normality, of nonparametric estimation techniques for the regression function in the context of the single functional index model (SFIM). These features are derived under the assumption of quasi-association dependence.

It is important to keep in mind that our results are connected to the model’s functional space in some manner, just as they are related to every previous asymptotic statistics functional nonparametric finding.

In this research, we establish, under general hypotheses, the almost complete convergence, with rate, of the estimator built in the quasi-associated case, and we use the results on the conditional distribution function together with those on the conditional density in [28] to estimate the conditional hazard function. We establish the asymptotic normality of the suitably normalized kernel estimator of the conditional hazard function, and we give the asymptotic variance explicitly.

The remainder of this paper is organized as follows. Section 2 introduces our model. The main results are presented in Section 3. Confidence bands are discussed in Section 4. In Section 5, the behavior of our asymptotic normality results on finite samples is evaluated. Section 6 concludes. The proofs of the intermediate results are provided in Appendix A.

2. Model and Estimator

To begin, we give a precise definition of quasi-association for random variables taking values in a separable Hilbert space.

Consider a separable Hilbert space furnished with an orthonormal basis. The sequence of elements is represented by .

Let be a sequence of random variables with values in . This sequence is said to be quasi-associated relative to the basis if, for any positive integer d, the d-dimensional sequence of its first d coordinates in that basis is quasi-associated.
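For reference, the finite-dimensional notion underlying this definition can be written out. The display below is a sketch of the standard quasi-association inequality in the sense of [16]; the notation ($I$, $J$, $f$, $g$, $X_i^k$) is chosen here for illustration and is not taken from the present paper:

```latex
% Assumed notation: (X_i) takes values in R^d with coordinates X_i^k;
% I and J are finite disjoint index sets; f and g are bounded Lipschitz functions.
\left| \operatorname{Cov}\bigl( f(X_i,\ i \in I),\ g(X_j,\ j \in J) \bigr) \right|
\le \operatorname{Lip}(f)\, \operatorname{Lip}(g)
\sum_{i \in I} \sum_{j \in J} \sum_{k=1}^{d} \sum_{l=1}^{d}
\left| \operatorname{Cov}\bigl( X_i^k, X_j^l \bigr) \right|,
\qquad
\operatorname{Lip}(f) = \sup_{x \neq y} \frac{|f(x) - f(y)|}{\|x - y\|_1}.
```

In the Hilbert-space setting, $X_i^k$ would be read as the coordinate of $X_i$ along the $k$-th element of the orthonormal basis.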

In this analysis, we consider n quasi-associated random variables, denoted by . These random variables have the same distribution as the random variable , which takes values in a separable real Hilbert space denoted by . This Hilbert space is equipped with an inner product , which generates the norm. The semi-metric d considered is given by: .

For a fixed r in the space, its fixed neighborhood and a compact subset of all have the notation . For each , there is a S such that . Using a sample of n dependent observations from , the conditional distribution function is estimated. We present the estimator of , a kernel type estimator, defined as:

Here, K denotes the kernel, H is a given distribution function, and the sequence of positive real numbers (resp. ) converges to zero as n tends to infinity. We define the estimator of the conditional density , given by:

where denotes the derivative of H.

Finally, we obtain the conditional hazard function estimator . This estimator is defined as follows:
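The three kernel estimators described in this section can be illustrated with a short sketch. The paper's analyses were carried out in R; Python is used here only for illustration. The function names, the discretized L2 distance, the quadratic kernel on [0, 1], and the choice of H as the integrated Epanechnikov density are all assumptions made for this example, not the authors' implementation. The hazard estimate is taken as the ratio of the density estimate to one minus the distribution-function estimate.

```python
import math

def quadratic_kernel(u):
    """Assumed kernel K: quadratic, supported on [0, 1]."""
    return 1.5 * (1.0 - u * u) if 0.0 <= u <= 1.0 else 0.0

def H_cdf(t):
    """Assumed H: distribution function of the Epanechnikov density on [-1, 1]."""
    if t <= -1.0:
        return 0.0
    if t >= 1.0:
        return 1.0
    return 0.5 + 0.75 * (t - t ** 3 / 3.0)

def H_pdf(t):
    """Derivative of the assumed H."""
    return 0.75 * (1.0 - t * t) if abs(t) < 1.0 else 0.0

def l2_distance(x1, x2):
    """Assumed semi-metric: L2 distance between curves sampled on a common grid."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x1, x2)) / len(x1))

def conditional_estimates(curves, responses, w, y, h_K, h_H, dist=l2_distance):
    """Kernel estimates of the conditional cdf, density, and hazard at (w, y).

    curves    : list of discretized curves (the functional covariates)
    responses : list of real responses
    w, y      : fixed curve and real point at which to estimate
    h_K, h_H  : bandwidths for the functional and the real component
    """
    weights = [quadratic_kernel(dist(w, x) / h_K) for x in curves]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no curve falls in the ball of radius h_K around w")
    cdf = sum(k * H_cdf((y - z) / h_H) for k, z in zip(weights, responses)) / total
    pdf = sum(k * H_pdf((y - z) / h_H) for k, z in zip(weights, responses)) / (h_H * total)
    hazard = pdf / (1.0 - cdf) if cdf < 1.0 else float("inf")
    return cdf, pdf, hazard
```

The two bandwidths are kept as separate arguments because the functional component and the real component are smoothed independently; in practice they would be selected from the data, for example by cross-validation.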

3. The Consistency and Asymptotic Normality of the Kernel Estimators

3.1. Assumptions and Necessary Background Knowledge

When no confusion is possible, we denote any strictly positive generic constants by l and/or . Throughout, w denotes a fixed point in , and stands for a fixed neighborhood of w. We assume that the random pair is a stationary process.

Let denote the covariance coefficient, defined by:

In

The component of , as represented by , where is the definition of . Let us denote the ball as . This represents the ball with a center of w and a radius when .

To establish the almost complete convergence of the estimator of , we rely on the following assumptions.

- (P1)

- and the function is differentiable at 0. There exists a function such that:

- (P2)

- The conditional distribution function satisfies the Hölder condition, that is: Here, is a fixed compact subset of .

- (P3)

- The kernel H is a differentiable function, and its derivative, denoted by , is a positive, bounded, and Lipschitz continuous function:

- (P4)

- K is a bounded, continuous, Lipschitz function such that: Here, denotes the indicator function.

- (P5)

- The sequence of random pairs , where , is quasi-associated, with covariance coefficient satisfying the following conditions.

- (P6)

- For every pair , the joint distribution functions satisfy:

- (P7)

- The bandwidths , satisfy: (i) (ii) (iii) for

3.2. Brief Comment on the Conditions

Assumption (P1) describes the concentration property of the explanatory variable in small balls. The function plays an important role in any asymptotic study, especially for the variance term. Condition (P2) controls the regularity of the functional space of our model and is needed to evaluate the bias component of the convergence rates. Assumptions (P3) and (P4) are technical conditions on the cumulative function H and on the kernels and K; they allow the bias term to be removed from the asymptotic normality result. Assumption (P5) is a standard restriction on quasi-associated data. Assumption (P6), on the joint distribution of the pair (), enables us to establish the asymptotic normality of our model under quasi-association. Assumption (P7) is required to remove the bias term from the final asymptotic normality result; it is a classical assumption in functional estimation in spaces of finite or infinite dimension.

3.3. Almost Complete Convergence of

Theorem 1.

Based on assumptions (P1)–(P7), we have:

Proof of Theorem 1.

The subsequent decomposition, together with the lemmas that are listed below, provide the foundation for the proof:

where

and

with

Lemma 1.

Based on assumptions (P1)–(P4) and (P6):

Corollary 1.

Based on assumptions (P1)–(P4) and (P6), we have:

Lemma 2.

Based on assumptions (P1)–(P6), we have:

Lemma 3.

Based on assumptions (P1)–(P7), we have:

□

Theorem 1 follows from these lemmas, whose proofs appear in Appendix A.

3.4. Almost Complete Convergence of

Theorem 2.

Based on assumptions (P1)–(P7), we have:

Proof of Theorem 2.

The proof is based on the following decomposition.

and therefore asymptotic results for the estimator can be readily deduced from and .

Given the preceding decomposition, it suffices to establish the following results:

Lemma 4

(See proof of Theorem 1). Based on assumptions (P1)–(P7), we obtain:

Lemma 5

(See Bouaker et al. (2022) [28]). Based on assumptions (P1)–(P7), we obtain:

Corollary 2.

Based on assumptions (P1)–(P7), we obtain:

□

3.5. Asymptotic Normality of the Conditional Hazard Function Estimate

Theorem 3.

Based on the assumptions, we obtain, for any :

where

and

In

The symbol denotes convergence in distribution.

Proof of Theorem 3.

The proof is based on the following decomposition.

where

Lemma 6.

Based on assumptions (P1)–(P7), we have:

where

Lemma 7.

(See Daoudi, H. and Mechab, B. (2019) [22]). Under assumptions (P1)–(P5), we have:

Lemma 8.

Based on assumptions (P1)–(P7), we have:

Corollary 3.

Based on assumptions (P1)–(P7), we have:

□

4. Confidence Bands

An important aspect of statistical analysis is the construction of confidence bands for estimates. These bands provide a range of values within which the true value lies with a prescribed level of confidence, and they help quantify the uncertainty associated with our estimates. The objective of this section is to construct confidence intervals for the true value of for a given curve of the form . In nonparametric estimation, the asymptotic variance depends on a number of unknown functions. In our case, we have:

The quantities , and are unknown in advance and must be estimated in practice. It is possible to derive confidence bands even when is functionally given. An estimate of may be obtained using , , , and as estimates of , , , and , respectively.

The constants and are estimated empirically in the following manner:

where

The expression for the asymptotic confidence band at a significance level of for is provided as follows.

where denotes the quantile of the standard normal distribution.
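As a minimal sketch of how such an asymptotic interval could be computed in practice, the function below forms a symmetric normal interval around a point estimate. The names `asymptotic_interval`, `sigma_hat`, and `norming` are hypothetical; `norming` stands for whatever normalizing quantity appears in the asymptotic normality result, which is assumed here to enter through its square root.

```python
import math
from statistics import NormalDist

def asymptotic_interval(estimate, sigma_hat, norming, level=0.05):
    """Symmetric asymptotic confidence interval at significance level `level`.

    estimate  : point estimate (e.g., the estimated conditional hazard)
    sigma_hat : plug-in estimate of the asymptotic standard deviation
    norming   : assumed normalizing quantity; the half-width scales as 1/sqrt(norming)
    """
    if sigma_hat < 0.0 or norming <= 0.0:
        raise ValueError("sigma_hat must be non-negative and norming positive")
    if not 0.0 < level < 1.0:
        raise ValueError("level must lie strictly between 0 and 1")
    # Quantile of order 1 - level/2 of the standard normal distribution.
    z = NormalDist().inv_cdf(1.0 - level / 2.0)
    half_width = z * sigma_hat / math.sqrt(norming)
    return estimate - half_width, estimate + half_width
```

A call such as `asymptotic_interval(h_hat, sigma_hat, norming)` returns the lower and upper bounds at the default 5% level; a different level can be passed through the `level` argument.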

5. A Simulation Study

In this section, we examine how our asymptotic normality results behave on finite samples. Our primary purpose is to show how easily the conditional hazard function estimator can be implemented and to study the effect of dependence on this asymptotic property.

For this purpose, we generate functional observations from the following functional nonparametric model:

where

A linear process with quasi-associated variables is well known to satisfy condition (). Accordingly, we construct the following quasi-associated functional regressor:

where

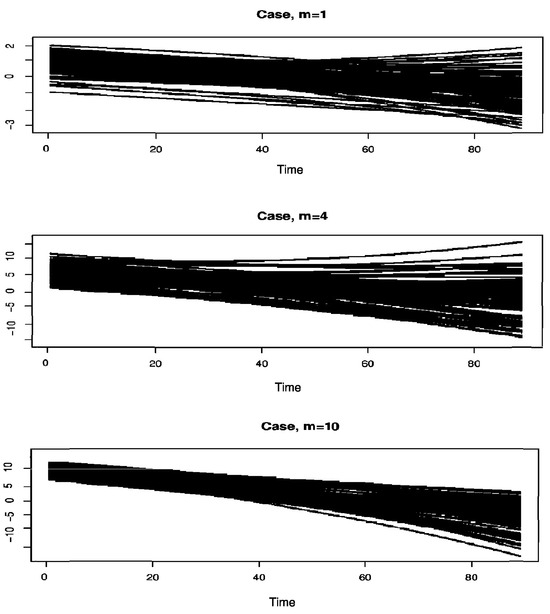

Figure 1 shows the ’s curves discretized in the same 100-point grid in [0, 1] for m = 1, 4, and 10 values. Furthermore, the regression operator computes the scalar variable :

Figure 1.

A sample of 200 curves.

From the distribution of the error (), we can deduce the theoretical conditional distribution function of Z given . This function is obtained explicitly by shifting the distribution of () by . Therefore, the theoretical conditional hazard function is easily determined.

To illustrate the asymptotic normality of this function, we fix one curve, , and , from the generated data, then draw m independent n-samples of the same data and compute the quantity:

where is the standard deviation estimate from the previous section.

The resulting sample is then tested for normality. With the bandwidths selected by cross-validation, we used a quadratic kernel, given by:

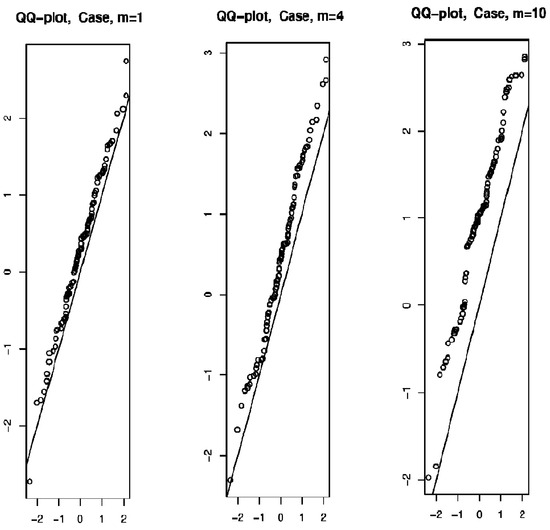

Figure 2 depicts a graph of the collected sample versus a typical normal distribution at varied m = 1, 4, and 10 values.

Figure 2.

The QQ-plot of the obtained sample.

These results indicate that our estimator behaves well and reliably in practical applications. Furthermore, the correlation of the data has a significant impact on the rate at which the asymptotic normality is attained. Specifically, this rate decreases as m increases.

Table 1 summarizes the p-value from the Kolmogorov–Smirnov test for each value of m, which confirms an inverse relationship between the correlation of the data and the convergence rate of the asymptotic normality.

Table 1. The p-values of the Kolmogorov–Smirnov test.
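The normality check summarized in Table 1 can be sketched with a one-sample Kolmogorov–Smirnov test against the standard normal distribution. The function name `ks_normal_test` is hypothetical, and the p-value uses the asymptotic Kolmogorov series, which is only an approximation for moderate sample sizes; this is an illustration, not the R routine used for the reported results.

```python
import math
from statistics import NormalDist

def ks_normal_test(sample):
    """One-sample Kolmogorov-Smirnov test of `sample` against N(0, 1).

    Returns the statistic D_n and an asymptotic p-value.
    """
    n = len(sample)
    if n == 0:
        raise ValueError("sample must be non-empty")
    xs = sorted(sample)
    cdf = NormalDist().cdf
    d = 0.0
    for i, x in enumerate(xs):
        f = cdf(x)
        # Compare the theoretical cdf with the empirical cdf just after and just before x.
        d = max(d, (i + 1) / n - f, f - i / n)
    lam = math.sqrt(n) * d
    if lam < 0.2:
        # The alternating series converges slowly here; the p-value is essentially 1.
        return d, 1.0
    # Asymptotic Kolmogorov tail: 2 * sum_{k>=1} (-1)^(k-1) * exp(-2 k^2 lam^2).
    p = 2.0 * sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
                  for k in range(1, 101))
    return d, min(max(p, 0.0), 1.0)
```

Applied to the normalized quantities collected for each value of m, a large p-value is consistent with approximate normality, whereas a small one signals a departure from it.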

6. Conclusions and Perspectives

The current study examines the asymptotic properties of certain conditional functional parameters, specifically the distribution function, the density, and the hazard function. These parameters are analyzed for an explanatory variable taking values in an infinite-dimensional Hilbert space and a real response variable in a quasi-associated dependence framework. The asymptotic properties of the estimators, including almost complete convergence and asymptotic normality, are derived under standard conditions that cover the key components of the problem, such as the functional nature of the data and the nonparametric nature of the model. The computational aspect highlights the significance of this estimator in practical applications due to its efficiency in updating findings with each new piece of information. Furthermore, the current contribution also opens interesting avenues for further exploration. It would be of interest to extend the asymptotic normality of the proposed estimators to incomplete data, such as missing, censored, or truncated data. Another direction for future research is the exploration of more intricate dependence structures, such as ergodic or spatial dependence.

Author Contributions

Conceptualization, Z.C.E. and H.D.; methodology, Z.C.E. and H.D.; software, H.D.; validation, Z.C.E. and H.D.; formal analysis, Z.C.E., F.A. and H.D.; investigation, Z.C.E., F.A. and H.D.; resources, Z.C.E., F.A. and H.D.; data curation, H.D.; writing—original draft preparation, H.D.; writing—review and editing, Z.C.E., F.A. and H.D.; visualization, H.D.; supervision, Z.C.E. and F.A.; project administration, Z.C.E.; funding acquisition, Z.C.E. and F.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research project was funded by (1) Princess Nourah bint Abdulrahman University Researchers Supporting Project Number (PNURSP2023R358), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia, and (2) The Deanship of Scientific Research at King Khalid University through Small group Research Project under grant number R.G.P. 1/366/44.

Data Availability Statement

Not applicable.

Acknowledgments

The authors express their gratitude to the Editors, the Associate Editor, and the anonymous reviewers for their insightful comments and suggestions, which significantly enhanced the overall quality of a previous iteration of this manuscript. They thank and extend their appreciation to the Deanship of Scientific Research at Princess Nourah bint Abdulrahman University and King Khalid University for funding this work.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Corollary A1

(See [17]). Let be a quasi-associated sequence of random variables with values in . Let and , for some finite disjoint subsets . Then

where is the set of bounded Lipschitz functions , and is the set of bounded functions.

Corollary A2

(See [29]). Let be the real random variables such that and for all and some ; let .

Assume, furthermore, that there exist and such that, for all u-uplets , with

The following inequality is fulfilled:

Then,

for some

and

Proof of Lemma 1.

We put:

where

, : and

Moreover, we can write:

and

To apply the lemma of [29], we need to evaluate the variance term as well as the covariance term .

Both of these terms are denoted by the following for all with .

For the covariance term, we consider the following cases when . Using the fact that

and

we have:

If , under , quasi-association yields:

On the other hand, if we take into account , we have:

In addition, by taking a of (A7) and a of (A8), we derive an upper bound for the three terms as follows, for :

Second, for the variance term, we have, for all :

For the first term , we have:

Then,

As a result, considering (P2) and (P3) and integrating over the real component y gives us the following:

As for all :

Then,

It follows that:

Regarding the covariance term in (A10), the following decomposition will be utilized.

where is a sequence of positive integers tending to infinity as n tends to infinity. Under assumptions (P1)–(P3) and (P6), we obtain, if :

Since H and K are both bounded and Lipschitz, we obtain:

Then, by (A15) and (A16), we get

By choosing:

We get:

Finally, combining the previous three results (A10), (A14), and (A17), we get:

Therefore, the criteria of the lemma are satisfied by , where .

Thus,

by (P7). Last but not least, with an appropriate selection of , the Borel–Cantelli lemma makes it possible to conclude the proof of Lemma 1. □

Proof of Corollary 1.

We have:

Therefore,

For , we apply the result of Lemma 1 and show that:

□

Proof of Lemma 2.

We can write:

Using the stationarity of the data, conditioning on the explanatory variable, and the usual change of variable , we derive the following:

and we deduce,

Therefore, by (P2) we get

This inequality holds true everywhere in S and, after substituting in (A22) and simplifying the expression , we get that:

Finally, Lemma 2 follows from Hypothesis (P4) and Corollary 1. □

Proof of Lemma 3.

Lemma 3 follows directly from the proof of Lemma 1 by substituting for .

□

Proof of Lemma 6.

We denote

where

and

Therefore,

the outcome is

We employ Doob’s classical blocking technique [30]. Indeed, we select two sequences of natural numbers that tend to infinity,

and we split into

where

with

Observe that, for (where [.] stands for the integer part), we have and , which implies as . Our asymptotic result is now based on:

and

□
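The blocking scheme used in this proof can be pictured with a small sketch. It assumes the usual big-block/small-block construction, in which the indices are split into alternating blocks of lengths p and q followed by a remainder; the function name and the variables `p`, `q`, and `k` are illustrative and stand for the block-length sequences and the number of block pairs mentioned above.

```python
def bernstein_blocks(n, p, q):
    """Split indices 0..n-1 into k alternating big (length p) and small (length q)
    blocks, with k = floor(n / (p + q)), plus a remainder block."""
    if p <= 0 or q <= 0 or p + q > n:
        raise ValueError("need p, q > 0 and p + q <= n")
    k = n // (p + q)
    big, small = [], []
    for j in range(k):
        start = j * (p + q)
        big.append(range(start, start + p))           # j-th big block
        small.append(range(start + p, start + p + q))  # j-th small block
    remainder = range(k * (p + q), n)                  # leftover indices
    return big, small, remainder
```

In the asymptotic argument, the sum over the big blocks carries the limiting normal distribution, while the sums over the small blocks and the remainder are shown to be negligible.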

Proof of Equation (A26).

Stationarity gives us:

and

The fact that gives us

In another way,

We will now write down this last covariance.

where is a positive integer sequence that tends to infinity as .

For the term , we use (P1), (P3), and (P7) to show that, for

For (II), we use the fact that H and K are bounded and Lipschitz to show:

Combining these inequalities, we get

By choosing we get

Thus, we obtain,

Then

From (A28)–(A31), we obtain

Using stationarity, we now turn to the right-hand side of (A27).

For all and , then

By this and (A31), we can write

Now, with regard to , we obtain:

Then,

Via (A26), the proof of (A27) is complete.

□

Proof of Equation (A29).

Based on:

and

□

Proof of Equation (A34).

Successively, we have

Once again, we apply the lemma that was developed by [29] to write:

through the last result of each expression on the right-hand side of (A37):

For every , we have then

Hence, (A36) is transformed.

□

Proof of Equation (A35).

By the same reasoning used in (A28), we have:

Through a straightforward computation, we establish that:

which imply that

Hence

For (A35), we conclude by Tchebychev’s inequality to get

□

Proof of Lemma 8.

Remember that we demonstrated previously:

Therefore, by (P5), we obtain

As , then all that remains is to prove that

This is a direct result of:

and

The three proofs are all quite similar and very close to the proof of (A30). As a result, for the sake of brevity, we provide only the first proof. Indeed,

For the first term,

Then,

It follows that:

Let us now consider the asymptotic behavior of the sum in the second term of (A38). This requires the following decomposition:

where is a positive integer sequence that goes to infinity as .

From Assumptions , and we have, for

Because K is bounded and Lipschitzian, we obtain:

Then, by (A40) and (A41), we get

By choosing

we get

By (A38), (A39), and (A42) we conclude:

□

References

- Aneiros, G.; Cao, R.; Fraiman, R.; Genest, C.; Vieu, P. Recent advances in functional data analysis and high-dimensional statistics. J. Multivar. Anal. 2019, 170, 3–9.

- Araujo, A.; Giné, E. The Central Limit Theorem for Real and Banach Valued Random Variables; Wiley Series in Probability and Mathematical Statistics; John Wiley and Sons: New York, NY, USA; Chichester, UK; Brisbane, Australia, 1980; p. xiv+233.

- Gasser, T.; Hall, P.; Presnell, B. Nonparametric estimation of the mode of a distribution of random curves. J. R. Stat. Soc. Ser. B 1998, 60, 681–691.

- Ferraty, F.; Vieu, P. Nonparametric Functional Data Analysis: Theory and Practice; Springer Series in Statistics; Springer: New York, NY, USA, 2006.

- Ferraty, F.; Laksaci, A.; Tadj, A.; Vieu, P. Rate of uniform consistency for nonparametric estimates with functional variables. J. Stat. Plan. Inference 2010, 140, 335–352.

- Kara-Zaitri, L.; Laksaci, A.; Rachdi, M.; Vieu, P. Uniform in bandwidth consistency for various kernel estimators involving functional data. J. Nonparametr. Stat. 2017, 29, 85–107.

- Ferraty, F.; Peuch, A.; Vieu, P. Modèle à indice fonctionnel simple. Comptes Rendus Math. 2003, 336, 1025–1028.

- Ezzahrioui, M.; Ould-Saïd, E. Asymptotic Results of a Nonparametric Conditional Quantile Estimator for Functional Time Series. Commun. Stat. Theory Methods 2008, 37, 2735–2759.

- Ezzahrioui, M.; Ould-Saïd, E. On the asymptotic properties of a nonparametric estimator of the conditional mode for functional dependent data. J. Nonparametr. Stat. 2008, 20, 3–18.

- Laksaci, A. Convergence en moyenne quadratique de l’estimateur à noyau de la densité conditionnelle avec variable explicative fonctionnelle. Ann. L’Institut Stat. L’Université Paris 2007, 51, 69–80.

- Laksaci, A.; Maref, F. Estimation non paramétrique de quantiles conditionnels pour des variables fonctionnelles spatialement dépendantes. Comptes Rendus Math. 2009, 347, 1075–1080.

- Matula, P. A note on the almost sure convergence of sums of negatively dependent random variables. Stat. Probab. Lett. 1992, 15, 209–213.

- Newman, C.M. Asymptotic independence and limit theorems for positively and negatively dependent random variables. In Inequalities in Statistics and Probability. IMS Lect. Notes Monogr. Ser. 1984, 5, 127–140.

- Roussas, G.G. Positive and negative dependence with some statistical applications. In Asymptotics, Nonparametrics and Time Series; Ghosh, S., Ed.; Marcel Dekker, Inc.: New York, NY, USA, 1999; pp. 757–788.

- Doukhan, P.; Louhichi, S. A new weak dependence condition and applications to moment inequalities. Stoch. Process. Their Appl. 1999, 84, 313–342.

- Bulinski, A.; Suquet, C. Normal approximation for quasi-associated random fields. Stat. Probab. Lett. 2001, 54, 215–226.

- Douge, L. Théorèmes limites pour des variables quasi-associées hilbertiennes. Ann. L’Institut Stat. L’Université Paris 2010, 54, 51–60.

- Attaoui, S.; Laksaci, A.; Ould-Saïd, E. Asymptotic Results for an M-estimator of the Regression Function for Quasi-Associated Processes. In Functional Statistics and Applications. Contributions to Statistics; Springer International Publishing: Cham, Switzerland, 2015; pp. 3–28.

- Tabti, H.; Ait Saïdi, A. Estimation and simulation of conditional hazard function in the quasi-associated framework when the observations are linked via a functional single-index structure. Commun. Stat. Theory Methods 2017, 47, 816–838.

- Hamza, D.; Mechab, B.; Chikr Elmezouar, Z. Asymptotic normality of a conditional hazard function estimate in the single index for quasi-associated data. Commun. Stat. Theory Methods 2020, 49, 513–530.

- Laksaci, A.; Mechab, W. Nonparametric relative regression for associated random variables. Metron 2016, 74, 75–97.

- Daoudi, H.; Mechab, B. Asymptotic Normality of the Kernel Estimate of Conditional Distribution Function for the quasi-associated data. Pak. J. Stat. Oper. Res. 2019, 15, 999–1015.

- Daoudi, H.; Mechab, B.; Benaissa, S.; Rabhi, A. Asymptotic normality of the nonparametric conditional density function estimate with functional variables for the quasi-associated data. Int. J. Stat. Econ. 2019, 20, 94–106.

- Allaoui, S.; Bouzebda, S.; Chesneau, C.; Liu, J. Uniform almost sure convergence and asymptotic distribution of the wavelet-based estimators of partial derivatives of multivariate density function under weak dependence. J. Nonparametr. Stat. 2021, 33, 170–196.

- Mohammedi, M.; Bouzebda, S.; Laksaci, A.; Bouanani, O. Asymptotic normality of the k-NN single index regression estimator for functional weak dependence data. Commun. Stat. Theory Methods 2022, 33, 1–26.

- Bouzebda, S.; Laksaci, A.; Mohammedi, M. The k-nearest neighbors method in single index regression model for functional quasi-associated time series data. Rev. Mat. Complut. 2023, 36, 361–391.

- Bouzebda, S.; Laksaci, A.; Mohammedi, M. Single Index Regression Model for Functional Quasi-Associated Times Series Data. REVSTAT Stat. J. 2023, 20, 605–631.

- Bouaker, I.; Belguerna, A.; Daoudi, H. The consistency of the kernel estimation of the Function conditional density for associated Data in high-dimensional statistics. J. Sci. Arts 2022, 22, 247–256.

- Kallabis, R.S.; Neumann, M.H. An exponential inequality under weak dependence. Bernoulli 2006, 12, 333–350.

- Doob, J.L. Stochastic Processes; John Wiley and Sons: New York, NY, USA, 1953.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).