Enhancing Robustness of Viewpoint Changes in 3D Skeleton-Based Human Action Recognition

Abstract

1. Introduction

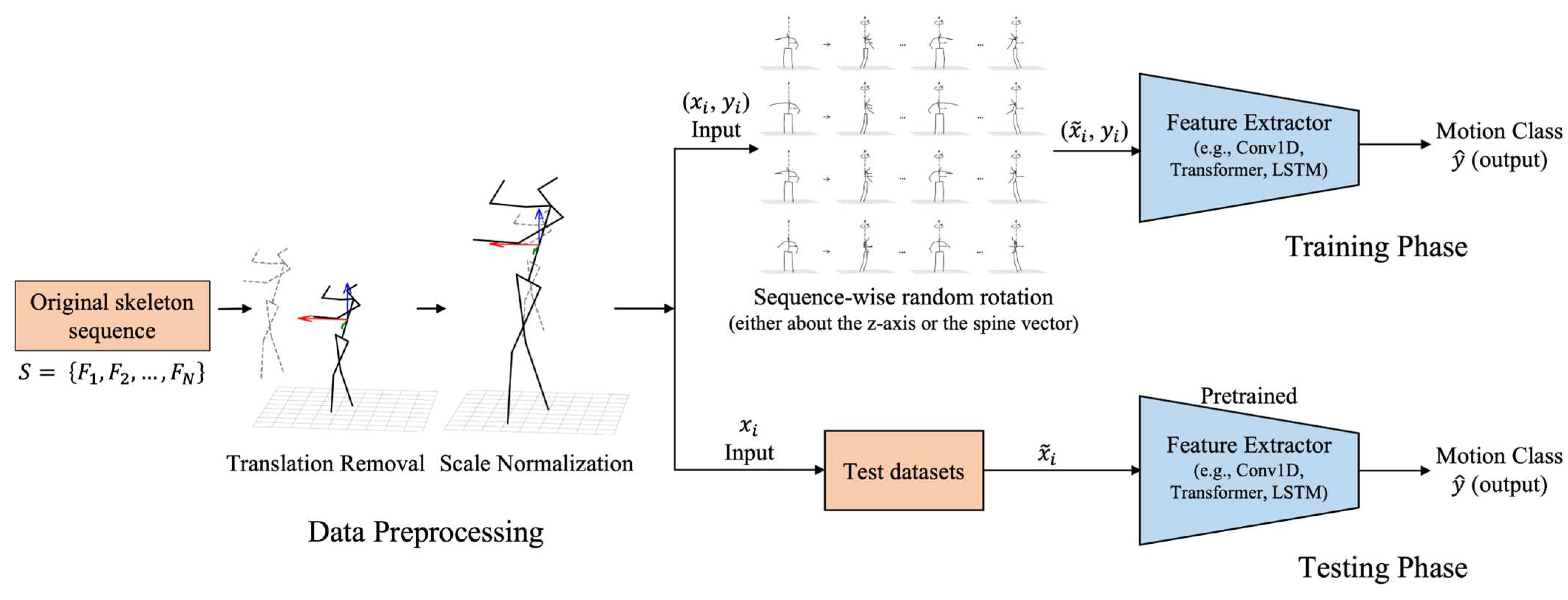

- We propose a novel augmentation method that improves the robustness and generalization of 3D skeleton-based action recognition models by mitigating the effects of variations in viewing direction, primarily within the horizontal plane, for tasks involving natural human motions;

- We validate the robustness and generalizability of the proposed approach on four public datasets and one custom dataset, demonstrating its broad applicability;

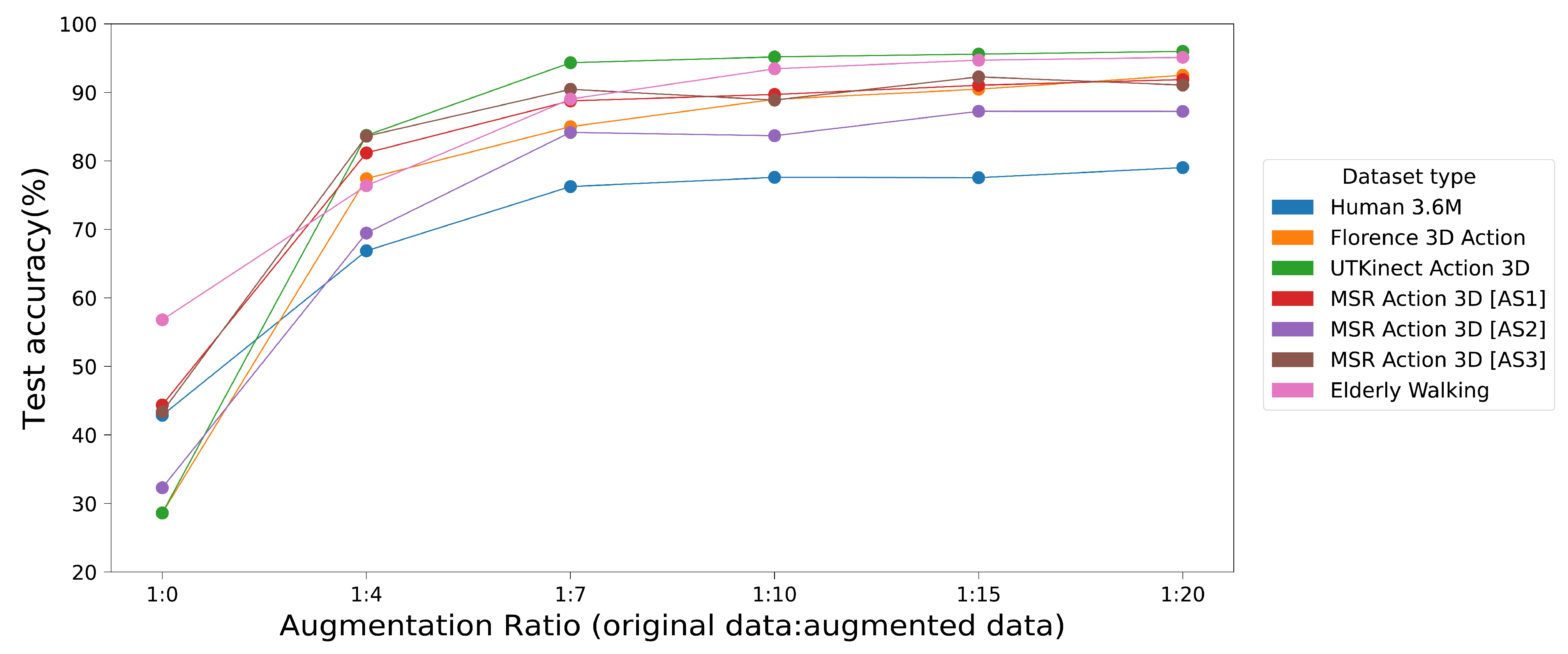

- Through additional experiments, we determine the optimal ratio of original to augmented training data, providing a practical guide for applying the proposed method in future applications.
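As a rough illustration of the idea behind these contributions, the sketch below rotates skeleton sequences by random angles within the horizontal plane and produces a configurable number of augmented copies per original sequence. It is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the z-axis is the vertical axis, that each sequence is a NumPy array of shape (frames, joints, 3), and that angles are drawn uniformly from the full circle. The names `rotate_about_z`, `augment_with_random_yaw`, and `copies_per_sequence` are hypothetical.

```python
import numpy as np


def rotate_about_z(seq, angle_rad):
    """Rotate a skeleton sequence of shape (frames, joints, 3) about the z-axis.

    Assumption: z is the vertical axis, so this changes only the horizontal
    viewing direction and leaves joint heights untouched.
    """
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    rot = np.array([[c, -s, 0.0],
                    [s, c, 0.0],
                    [0.0, 0.0, 1.0]])
    # Joints are stored as row vectors, so p -> rot @ p becomes seq @ rot.T.
    return seq @ rot.T


def augment_with_random_yaw(sequences, copies_per_sequence, rng):
    """Return randomly rotated copies of each sequence (hypothetical helper).

    copies_per_sequence controls the ratio of augmented to original samples.
    """
    augmented = []
    for seq in sequences:
        for _ in range(copies_per_sequence):
            # Assumption: angles are sampled uniformly over the full circle.
            angle = rng.uniform(0.0, 2.0 * np.pi)
            augmented.append(rotate_about_z(seq, angle))
    return augmented
```

For example, calling `augment_with_random_yaw(train_sequences, 3, np.random.default_rng(0))` on a hypothetical list `train_sequences` would yield three rotated copies per original sequence; concatenating them with the originals gives a 1:3 ratio of original to augmented data. Because the transform is a pure rotation, bone lengths and joint heights are preserved.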

2. Related Works

2.1. Data Augmentation for Skeleton-Based Action Recognition

2.2. Robustness against Viewpoint Variations

3. Methods

3.1. Data Preprocessing

3.1.1. Translation Removal

3.1.2. Scale Normalization

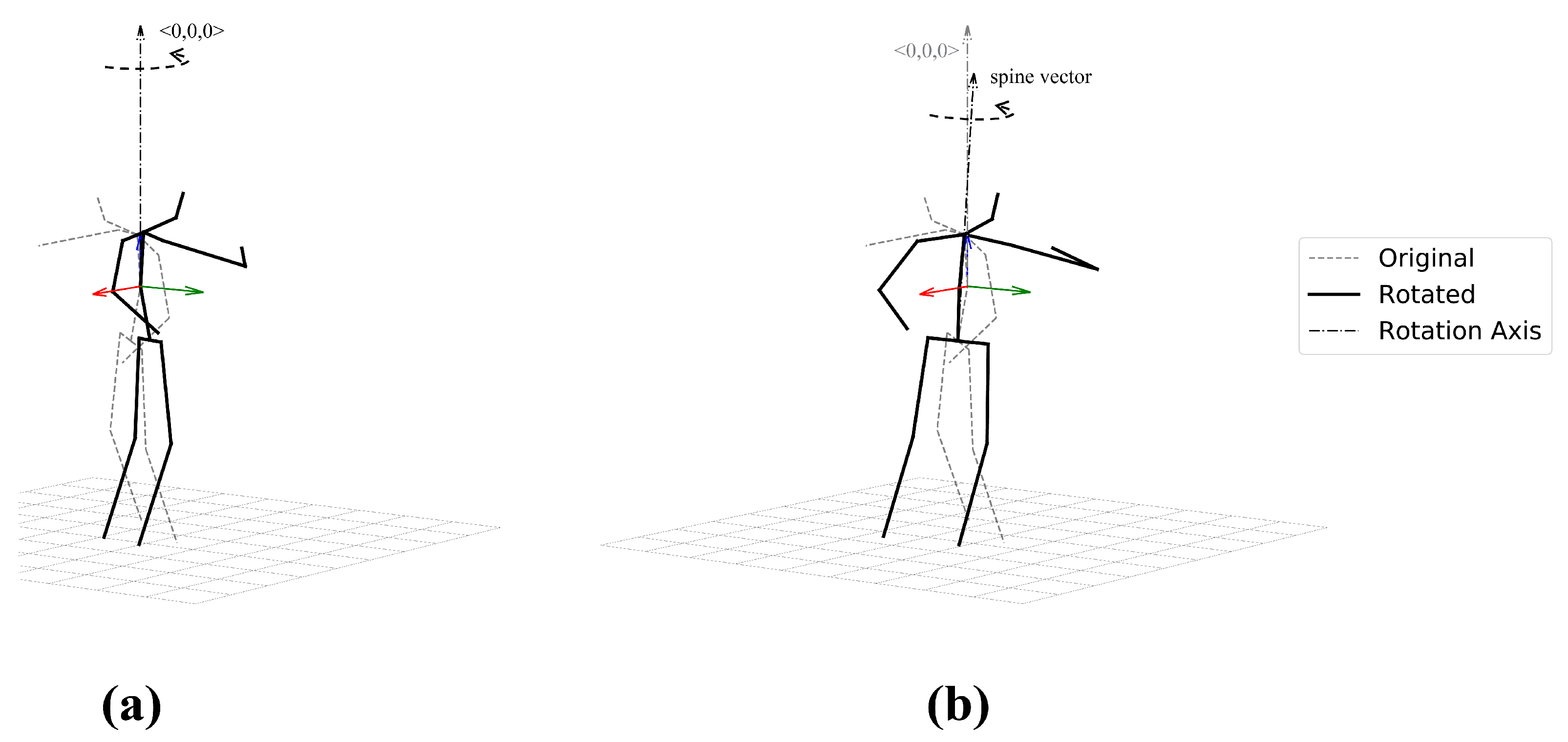

3.2. Data Augmentation

3.3. Datasets

4. Experiment

4.1. Experimental Setup

4.1.1. Data Segmentation

4.1.2. Data Augmentation

4.1.3. Network Architecture and Training Process

4.2. Test Sets

5. Results and Discussions

5.1. Classification Results

5.1.1. Original Dataset (T0) vs. View Normalization (T1)

5.1.2. View Normalization (T1) vs. Proposed Augmentations (T2 and T3)

5.1.3. Rotation about z-Axis (T2) vs. Spine Vector (T3)

5.1.4. Overall Assessment

5.1.5. Model

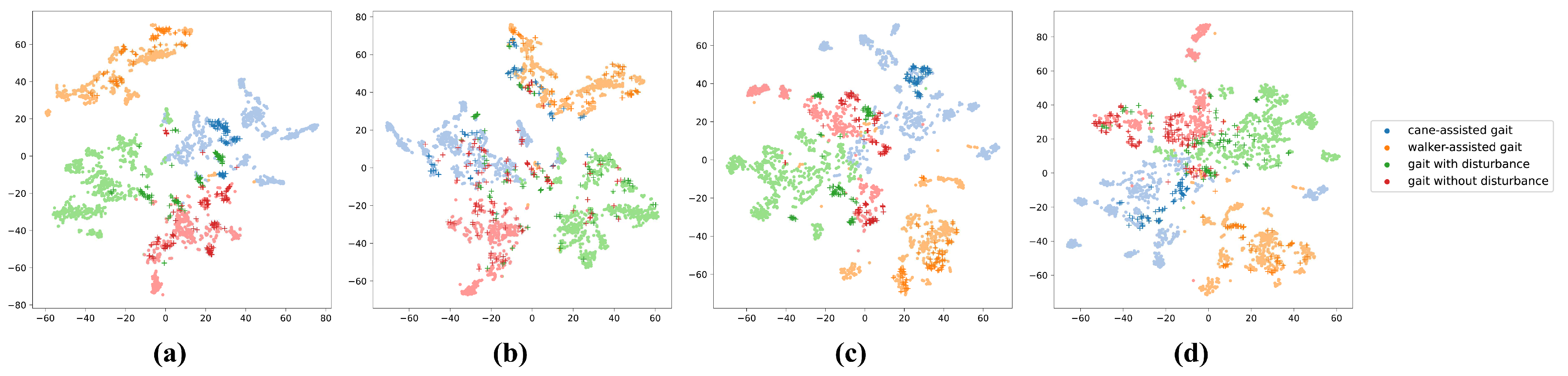

5.2. Validation of Unseen Data

5.3. Optimal Ratio of Original to Augmented Training Data

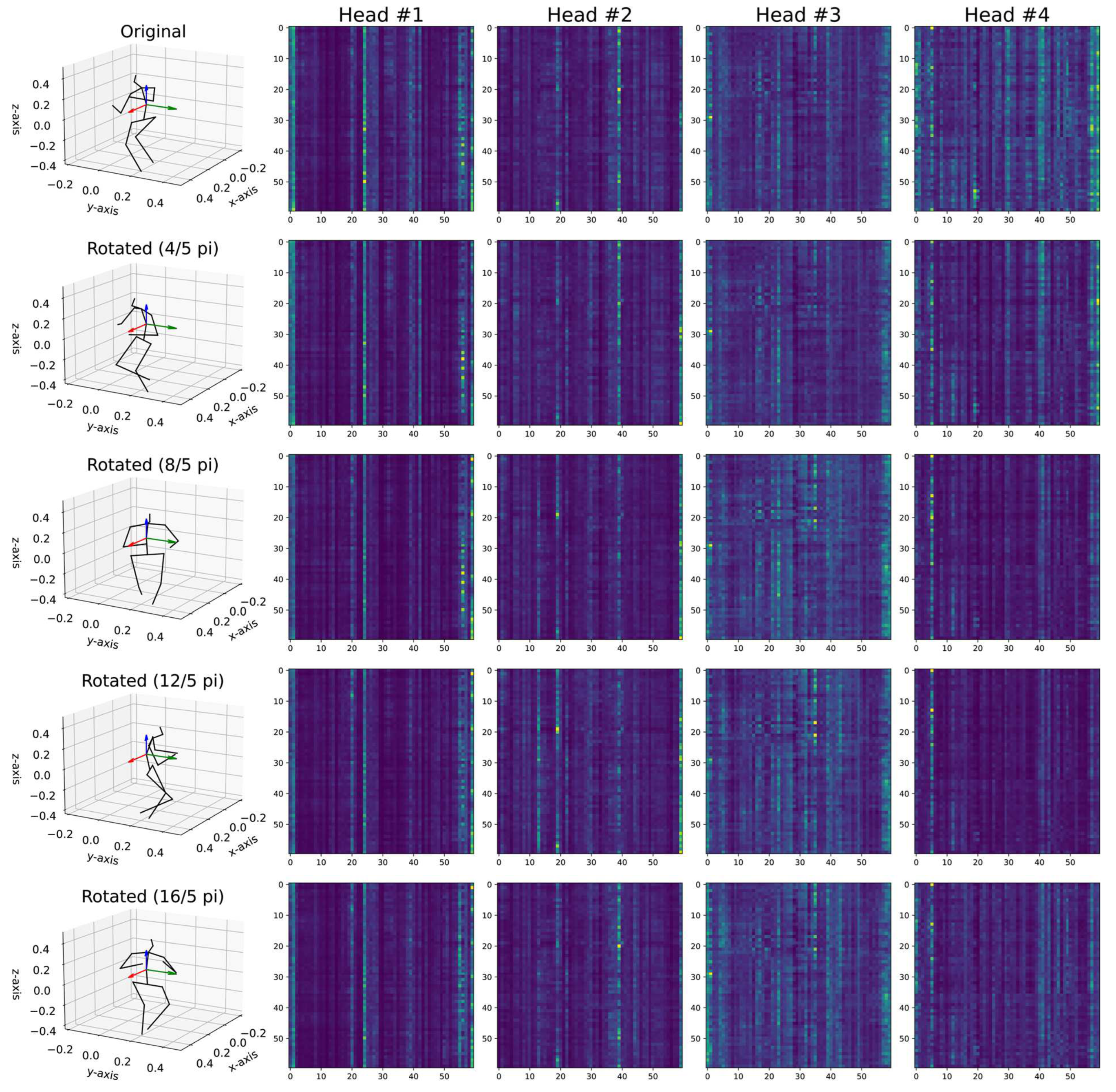

5.4. Visualization of Attention Maps

5.5. Limitations and Future Work

5.6. Applications

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Action Class | # of Total Trials | Max # of Frames | Min # of Frames | Average # of Frames (Std.) | Average Length (s) |
|---|---|---|---|---|---|
| Directions | 13 | 4973 | 1381 | 2449 (884) | 48.99 |
| Discussion | 14 | 6090 | 1931 | 3983 (1574) | 79.65 |
| Eating | 14 | 3763 | 2010 | 2656 (467) | 53.12 |
| Greeting | 14 | 3199 | 1149 | 1841 (551) | 36.81 |
| Phoning | 14 | 4354 | 2085 | 3070 (665) | 61.40 |
| Photo | 14 | 3325 | 1036 | 1882 (599) | 37.63 |
| Posing | 14 | 2320 | 992 | 1728 (526) | 34.55 |
| Purchases | 14 | 3124 | 1026 | 1471 (577) | 29.42 |
| Sitting | 14 | 4533 | 1817 | 2799 (832) | 55.98 |
| SittingDown | 14 | 6343 | 1501 | 2903 (1494) | 58.06 |
| Smoking | 14 | 4870 | 2410 | 3372 (774) | 67.43 |
| Waiting | 14 | 4856 | 1440 | 2735 (1193) | 54.70 |
| WalkDog | 14 | 2732 | 1187 | 1924 (422) | 38.45 |
| Walking | 14 | 3737 | 1612 | 2893 (782) | 57.86 |
| WalkTogether | 14 | 3016 | 1231 | 2027 (617) | 40.53 |
| Average length in s (std.) | | | | | 45.30 (11.07) |

| Dataset | Model | Test Accuracy (%): Original | Test Accuracy (%): SW RR (z-Axis) |
|---|---|---|---|
| H36M | Conv1D | 64.12 | 14.07 |
| H36M | LSTM | 54.26 | 14.07 |
| H36M | Transformer | 60.09 | 13.57 |
| UTK | Conv1D | 83.34 | 29.08 |
| UTK | LSTM | 63.82 | 31.21 |
| UTK | Transformer | 86.92 | 28.61 |
| FLO | Conv1D | 86.93 | 34.88 |
| FLO | LSTM | 78.28 | 37.12 |
| FLO | Transformer | 90.60 | 42.88 |
| MSRA | Conv1D | 95.40 | 36.49 |
| MSRA | LSTM | 69.69 | 15.70 |
| MSRA | Transformer | 95.85 | 40.04 |
| EW | Conv1D | 94.87 | 57.28 |
| EW | LSTM | 93.85 | 54.87 |
| EW | Transformer | 94.72 | 56.81 |

| Dataset | Model | Training Condition | Test Accuracy (%): Original | Test Accuracy (%): SW VN | Test Accuracy (%): SW RR (z-Axis) |
|---|---|---|---|---|---|
| H36M | Conv1D | T1 | 16.75 | 66.52 | 15.49 |
| H36M | Conv1D | T2 | 90.94 | 93.01 | 89.80 |
| H36M | Conv1D | T3 | 82.74 | 74.46 | 64.94 |
| H36M | LSTM | T1 | 14.68 | 57.92 | 14.43 |
| H36M | LSTM | T2 | 45.81 | 46.89 | 41.05 |
| H36M | LSTM | T3 | 36.07 | 30.66 | 29.94 |
| H36M | Transformer | T1 | 15.61 | 61.71 | 14.92 |
| H36M | Transformer | T2 | 96.65 | 96.82 | 96.00 |
| H36M | Transformer | T3 | 89.49 | 81.02 | 70.75 |
| UTK | Conv1D | T1 | 11.00 | 81.34 | 15.50 |
| UTK | Conv1D | T2 | 95.00 | 94.50 | 95.50 |
| UTK | Conv1D | T3 | 94.00 | 67.32 | 80.82 |
| UTK | LSTM | T1 | 12.05 | 55.26 | 13.11 |
| UTK | LSTM | T2 | 88.97 | 91.97 | 89.95 |
| UTK | LSTM | T3 | 90.00 | 61.84 | 64.34 |
| UTK | Transformer | T1 | 16.00 | 87.45 | 21.05 |
| UTK | Transformer | T2 | 95.50 | 91.00 | 92.50 |
| UTK | Transformer | T3 | 91.37 | 65.79 | 77.32 |
| FLO | Conv1D | T1 | 83.23 | 89.31 | 39.55 |
| FLO | Conv1D | T2 | 85.13 | 85.61 | 86.08 |
| FLO | Conv1D | T3 | 89.31 | 86.54 | 74.87 |
| FLO | LSTM | T1 | 67.90 | 71.17 | 36.36 |
| FLO | LSTM | T2 | 86.00 | 86.00 | 82.73 |
| FLO | LSTM | T3 | 85.41 | 83.59 | 67.84 |
| FLO | Transformer | T1 | 86.49 | 89.29 | 41.93 |
| FLO | Transformer | T2 | 83.77 | 82.79 | 79.03 |
| FLO | Transformer | T3 | 82.27 | 78.98 | 66.95 |
| MSRA | Conv1D | T1 | 16.72 | 69.16 | 32.05 |
| MSRA | Conv1D | T2 | 94.93 | 74.16 | 82.82 |
| MSRA | Conv1D | T3 | 95.98 | 29.12 | 56.19 |
| MSRA | LSTM | T1 | 12.98 | 48.26 | 24.41 |
| MSRA | LSTM | T2 | 88.70 | 85.10 | 88.27 |
| MSRA | LSTM | T3 | 92.25 | 28.21 | 54.62 |
| MSRA | Transformer | T1 | 14.26 | 69.46 | 31.01 |
| MSRA | Transformer | T2 | 90.25 | 88.44 | 89.20 |
| MSRA | Transformer | T3 | 92.17 | 28.81 | 55.59 |
| EW | Conv1D | T1 | 33.06 | 95.11 | 46.04 |
| EW | Conv1D | T2 | 95.11 | 95.39 | 94.87 |
| EW | Conv1D | T3 | 95.35 | 63.93 | 73.49 |
| EW | LSTM | T1 | 33.38 | 92.27 | 45.13 |
| EW | LSTM | T2 | 94.87 | 95.23 | 94.64 |
| EW | LSTM | T3 | 96.10 | 65.45 | 72.03 |
| EW | Transformer | T1 | 33.57 | 94.04 | 48.25 |
| EW | Transformer | T2 | 95.58 | 95.58 | 95.11 |
| EW | Transformer | T3 | 95.13 | 64.34 | 73.57 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, J.; Kim, C.; Kim, S.-C. Enhancing Robustness of Viewpoint Changes in 3D Skeleton-Based Human Action Recognition. Mathematics 2023, 11, 3280. https://doi.org/10.3390/math11153280