Abstract

Digital images have become an important carrier of information in the information age. However, as digital imaging technology has developed, digital images have become vulnerable to illegal access and tampering, which poses a serious threat to personal privacy, social order, and national security. Image forensic techniques have therefore become an important research topic in multimedia information security. In recent years, deep learning has been widely applied to image forensics, and its performance has significantly exceeded that of conventional forensic algorithms. This survey compares recent state-of-the-art image forensic techniques based on deep learning. Image forensic techniques are divided into passive and active forensics. For passive forensics, forgery detection techniques are reviewed, and the basic framework, evaluation metrics, and commonly used datasets for forgery detection are presented. The performance, advantages, and disadvantages of existing methods are compared and analyzed according to the type of detection. For active forensics, robust image watermarking techniques are reviewed, and the evaluation metrics and basic framework of robust watermarking are presented. The technical characteristics and performance of existing methods are analyzed according to the type of attack on the image. Finally, future research directions and conclusions are presented to provide useful suggestions for researchers in image forensics and related fields.

MSC:

94A08; 68U10

1. Introduction

Digital images are important information carriers, and with the rapid development of digital imaging technology they have gradually become part of all aspects of life. However, data stored or transmitted in digital form is vulnerable to external attacks, and digital images are particularly susceptible to unauthorized access and illegal tampering. As a result, the credibility and security of digital images are under serious threat. If illegally accessed or tampered images appear in news media, academic research, or judicial forensics, all of which require images to be original, social stability and political security will be seriously threatened. To address these problems, digital image forensics has become an active research topic and is the main means of identifying whether images have been illegally acquired or tampered with. Digital image forensic technology determines the authenticity, integrity, and originality of image content by analyzing the statistical characteristics of images, which is of great significance for securing cyberspace and maintaining social order.

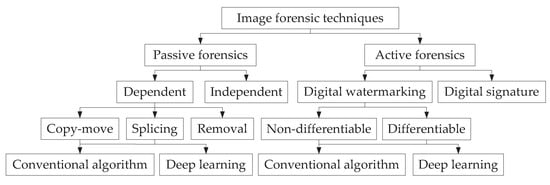

Digital image forensics technology is mainly used to verify the authenticity of digital images and to protect image copyright. According to the detection method, it can be divided into active forensic techniques and passive forensic techniques, as shown in Figure 1.

Figure 1.

Classification of image forensic techniques.

The active forensic techniques are used to embed prior information, and then extract the embedded information, such as image watermarking and image signatures, and compare it with the original information to identify whether the image was illegally obtained. Image watermarking is a technique that embeds hidden information into digital images with the aim of protecting the copyright and integrity of the images. Watermarks are usually invisible or difficult to perceive and are used to identify the owner or provide authorization information for the image. They can be used to trace and prevent unauthorized copying, modification, or tampering of the image. Image watermarking techniques typically utilize image processing algorithms and encryption techniques to ensure the robustness and security of the watermark. On the other hand, image signature is a technique used to verify the integrity and authenticity of an image. Image signatures are typically based on digital signatures using encryption and hash functions to ensure that the image has not been tampered with during transmission or storage. With the rapid development of the digital information era, there has been an alarming rise in copyright infringement facilitated by image tampering techniques. Among the aforementioned active forensic techniques, image watermarking, especially robust image watermarking that can recover watermark information intact against intentional or unintentional attacks, is more effective compared to image signatures in authenticating and protecting copyright information when images are subjected to malicious tampering. Therefore, our focus in active forensics is on image watermarking, which aligns with our research interests as well.

Passive forensic techniques, which do not require prior information about the image, determine whether the image has been illegally tampered with by analyzing its structure and statistical characteristics. Even if an image has been forged so well that the human eye cannot recognize the forgery, the statistical characteristics of the image will change, causing various inconsistencies in the image, and these inconsistencies are used to detect tampered images and locate the tampered regions. Deep learning has been widely used in many fields, such as speech processing, computer vision, natural language processing, and medical applications [1]. It has also been widely applied in image forensics, where it has promoted the development of the field. In this survey, we focus on passive image forgery detection based on deep learning and robust image watermarking based on deep learning.

There have been many reviews of passive forgery detection techniques over the last few years. Kuar et al. [2] gave a detailed overview of the image tampering process and the open problems in tampering detection, and organized the conventional tampering detection algorithms from different aspects, but did not review deep learning techniques. Zhang et al. [3] organized and compared the conventional copy-move tampering detection algorithms, detailing the advantages and disadvantages of each, but likewise did not review deep learning techniques. Zanardelli et al. [4] reviewed deep learning-based tampering detection algorithms and compared copy-move, splicing, and deepfake techniques. However, generic tampering detection algorithms were not covered in detail. Nabi et al. [5] reviewed image and video tampering detection algorithms, giving a detailed comparison of tampering detection datasets and algorithms, but did not summarize the evaluation metrics of tampering detection algorithms. Surbhi et al. [6] focused on universal, type-independent techniques for image tampering detection. Generic methods based on resampling, inconsistency detection, and compression were compared and analyzed in terms of three aspects: the methodology used, the dataset and classifier used, and performance. A generic framework for image tampering detection was given, including dataset selection, data preparation, feature selection and extraction, classifier selection, and performance evaluation. The top journals and public tampering datasets in the field were organized. Finally, a reinforcement learning-based model was proposed as an outlook on future work. However, an in-depth analysis and summary of deep learning-based image tampering detection was not presented. This survey provides an overview of deep learning techniques, reviews the latest generic tamper detection algorithms, and summarizes the evaluation metrics commonly used for tampering detection.

For active forensics, Rakhmawati et al. [7] analyzed a fragile watermarking model for tampering detection. Kumar et al. [8] summarized the existing work on watermarking from the perspective of blind and non-blind watermarking, robust and fragile watermarking, but it did not focus on the methods to improve the robustness. Menendez et al. [9] summarized the reversible watermarking model and analyzed its robustness. Agarwal et al. [10] reviewed the robustness and imperceptibility of the watermarking model from the spatial domain and transform domain perspectives. Amrit and Singh [11] analyzed the watermarking models based on deep watermarking in recent years, but they did not discuss the methods to improve the robustness of the models for different attacks. Wan et al. [12] analyzed robustness enhancement methods for geometric attacks in deep rendering images, motion images, and screen content images. Evsutin and Dzhanashia [13] analyzed the characteristics of removal, geometric and statistical attacks and summarized the corresponding attack robustness enhancement methods, but there was less analysis of watermarking models based on deep learning. Compared to the existing active forensic reviews, we start from one of the fundamentals of model robustness enhancement (i.e., generating attack-specific adversarial samples). According to their compatibility with deep learning-based end-to-end watermarking models, the attacks are initially classified into differentiable attacks and non-differentiable attacks. According to their impact on the watermarking model, the network structure and training methods to improve the robustness of different attacks are further subdivided.

The rest of this survey is organized as follows: Section 2 gives the basic framework for tampering detection and robust watermarking based on deep learning, evaluation metrics, attack types, and tampering datasets. Section 3 presents the state-of-the-art techniques of image forgery detection based on deep learning. Section 4 describes the state-of-the-art techniques of robust image watermarks based on deep learning. Section 5 gives the conclusion and future work.

2. Image Forensic Techniques

2.1. Passive Forensics

Passive image forgery detection techniques can be classified as conventional manual feature-based or deep learning-based. Conventional algorithms for copy-move forgery detection (CMFD) are based on features such as the discrete cosine transform (DCT) [14], discrete wavelet transform (DWT) [15], polar complex exponential transform (PCET) [16], scale-invariant feature transform (SIFT) [17], and speeded up robust features (SURF) [18]. Conventional algorithms for splicing detection include inconsistency detection by color filter array (CFA) interpolation [19] and inconsistency detection by noise features [20]. However, these conventional detection methods suffer from low generalization, poor robustness, and low detection accuracy. Deep learning-based detection algorithms, which learn features automatically, alleviate these problems. In this survey, we mainly review forgery detection algorithms based on deep learning.

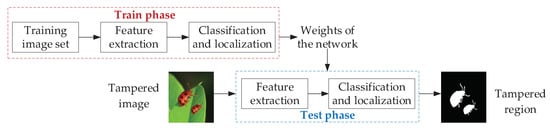

2.1.1. Basic Framework of Image Forgery Detection

Deep learning has achieved clear advantages in areas such as image classification and segmentation, and it is increasingly being applied to image forgery detection. A basic framework of image forgery detection based on deep learning is shown in Figure 2. First, the tamper detection network is built, and feature extraction, feature classification, and localization are performed by the network model. The parameters of the network are learned by training on a large amount of data, and the weights that give the best performance are saved. At test time, the image to be detected is input to the network, and tampering detection is performed using the saved model.

Figure 2.

A basic framework of image forgery detection based on deep learning.

2.1.2. Performance Evaluation Metrics

The forgery detection task can be regarded as a binary classification of pixels, i.e., whether each pixel is tampered with or not. The evaluation metrics of a forgery detection algorithm are therefore built from the counts of true positives, false positives, true negatives, and false negatives. The commonly used evaluation metrics for forgery detection are precision $p$, recall $r$, and the $F_1$ score [3], which are expressed as Equations (1)–(3), respectively:

$$p = \frac{TP}{TP + FP} \tag{1}$$

$$r = \frac{TP}{TP + FN} \tag{2}$$

$$F_1 = \frac{2pr}{p + r} \tag{3}$$

where $TP$ denotes the number of tampered pixels detected as tampered; $FP$ denotes the number of authentic pixels detected as tampered; $FN$ denotes the number of tampered pixels detected as authentic. The values of $p$, $r$, and $F_1$ are in the range [0, 1]. The larger $p$, $r$, and $F_1$ are, the more accurate the detection results.
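As a minimal sketch of how these pixel-level metrics can be computed, the following Python function counts true positives, false positives, and false negatives over a pair of binary masks (1 = tampered, 0 = authentic). The function name `pixel_prf` and the convention of returning 0 when a denominator is zero are assumptions made for illustration.

```python
def pixel_prf(pred_mask, true_mask):
    """Pixel-level precision, recall and F1 for binary masks (1 = tampered)."""
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p == 1 and t == 1:
                tp += 1  # tampered pixel detected as tampered
            elif p == 1 and t == 0:
                fp += 1  # authentic pixel detected as tampered
            elif p == 0 and t == 1:
                fn += 1  # tampered pixel detected as authentic
    # Assumed convention: a metric with an empty denominator is reported as 0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


truth = [[0, 1, 1],
         [0, 1, 0]]
pred = [[0, 1, 0],
        [1, 1, 0]]
print(pixel_prf(pred, truth))
```

In this toy example there are two true positives, one false positive, and one false negative, so precision, recall, and the F1 score all equal 2/3.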

Another important metric is the area under the curve (AUC), defined as the area under the receiver operating characteristic (ROC) curve, which reflects the classification performance of a binary classifier across all decision thresholds. Like the F1 score, AUC summarizes two competing quantities in a single number, in this case the true positive rate and the false positive rate. Its value generally lies between 0.5 and 1. The closer the AUC is to 1, the better the algorithm performs. An AUC of 0.5 corresponds to random guessing, in which case the detector has no practical value.
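The AUC can also be read as the probability that a randomly chosen tampered pixel receives a higher score than a randomly chosen authentic pixel. The sketch below uses this pairwise interpretation, which is equivalent to the area under the ROC curve; it is quadratic in the number of samples and intended only as an illustration. The function name `roc_auc` is an assumption.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))
```

A detector that ranks every tampered pixel above every authentic pixel yields an AUC of 1, while a detector that assigns the same score to all pixels yields 0.5.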

2.1.3. Datasets for Image Forgery Detection

Diverse datasets for forgery detection are described in this section. These datasets contain original images, tampered images, binary labels, and partially post-processed images. Different datasets are used depending on the problem being solved. In order to validate the results of tamper detection algorithms, different public tampered image datasets are used to test the performance of these algorithms. Table 1 describes the 14 public datasets for image forgery detection, presenting the type of forgery, number of forged images and authentic images, image format, and image resolution.

Table 1.

Datasets for image forgery detection.

2.2. Active Forensics

Digital watermarking is one of the most effective active forensics methods for piracy tracking and copyright protection. It can be classified into conventional methods based on manually designed features and deep learning-based methods. Early digital watermarks were mostly embedded in the spatial domain, for example in the least significant bits (LSB) [32], but such watermarks lacked robustness and were easily detected by sample pair analysis [33]. To improve robustness, a series of transform domain-based methods were proposed, such as DWT [34], DCT [35], contourlet transform [36], and Hadamard transform [37]. In recent years, with the continuous progress of deep learning, it has been widely used in image watermarking and has achieved remarkable results. In this survey, we focus on the analysis of deep learning-based robust watermarking.

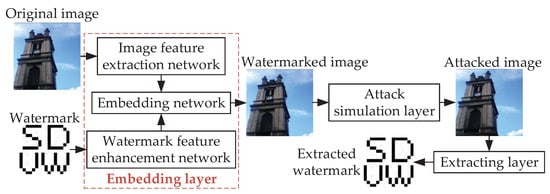

2.2.1. Basic Framework of Robust Image Watermarking Algorithm

The basic framework of deep learning-based end-to-end robust watermarking is shown in Figure 3. It consists of three components: an embedding layer (including an image feature extraction network and a watermark feature enhancement network), an attack simulation layer, and an extraction layer. Each training iteration includes two stages: forward propagation and gradient backpropagation. During forward propagation, the original image and the watermark pass through the image feature extraction network and the watermark feature enhancement network, respectively, to extract high-order image features and high-order watermark features. The outputs are then fed into the watermark embedding network to obtain the watermarked image. The attack simulation layer includes various types of attacks, such as noise and filtering, geometric attacks, and JPEG compression. When the watermarked image passes through the attack simulation layer, attacked versions of it (adversarial samples) are generated. The extraction layer extracts the watermark from the adversarial samples or from the watermarked images to obtain the watermark authentication information. This completes the forward pass. For backpropagation, a PSNR loss and an SSIM loss between the original image and the watermarked image are usually set to improve the similarity between the two, where SSIM measures the similarity of two images in terms of luminance, contrast, and structure; these losses are expressed as Equations (4) and (5), respectively. At the same time, the MSE loss between the extracted watermark and the original watermark, expressed as Equation (6), is set to improve the accuracy of watermark recovery.

where $P$ denotes the width of the original image; $Q$ denotes the height of the original image; $\mu_o$ and $\mu_w$ denote the mean gray values of the original image and the watermarked image, respectively; $\sigma_o^2$ and $\sigma_w^2$ denote the variances of the gray values of the original image and the watermarked image, respectively; $I_o$ and $I_w$ denote the original image and the watermarked image; $\sigma_{ow}$ denotes the covariance of the original image and the watermarked image; $C_1$ and $C_2$ are constants in the range [$10^{-4}$, $9 \times 10^{-4}$]. The model then backpropagates the gradients of these losses from its output end, layer by layer, and an optimizer (usually Adam or SGD) updates the model parameters so as to optimize the two objectives of the model: improving the imperceptibility of the watermarked image and improving the accuracy of watermark extraction after the watermarked image has been attacked.
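One common way to write such losses is sketched below. It is given as an assumption and not as the exact form of Equations (4)–(6): PSNR is negated and SSIM is subtracted from one, so that minimizing the loss increases the similarity between $I_o$ and $I_w$. The symbols $\mathrm{MAX}$ (maximum gray level), $w_i$ and $w_i'$ (the $i$th bit of the original and extracted watermark), $L$ (watermark length), and the weights $\lambda_1$, $\lambda_2$, $\lambda_3$ are introduced here for illustration; the weighting used by a particular model may differ.

```latex
\begin{align}
\mathcal{L}_{\mathrm{PSNR}} &= -10\log_{10}
  \frac{P \cdot Q \cdot \mathrm{MAX}^2}
       {\sum_{i=1}^{P}\sum_{j=1}^{Q}\bigl(I_o(i,j)-I_w(i,j)\bigr)^2} \\
\mathcal{L}_{\mathrm{SSIM}} &= 1-
  \frac{(2\mu_o\mu_w+C_1)(2\sigma_{ow}+C_2)}
       {(\mu_o^2+\mu_w^2+C_1)(\sigma_o^2+\sigma_w^2+C_2)} \\
\mathcal{L}_{\mathrm{MSE}} &= \frac{1}{L}\sum_{i=1}^{L}\bigl(w_i-w_i'\bigr)^2 \\
\mathcal{L}_{\mathrm{total}} &= \lambda_1\mathcal{L}_{\mathrm{PSNR}}
  +\lambda_2\mathcal{L}_{\mathrm{SSIM}}+\lambda_3\mathcal{L}_{\mathrm{MSE}}
\end{align}
```

The first two terms push the encoder toward imperceptibility, while the third pushes the decoder toward accurate recovery, which is why the weights express the trade-off between the two objectives.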

Figure 3.

A basic framework of end-to-end robust watermarking based on deep learning.

2.2.2. Performance Evaluation Metrics

For a digital image watermarking algorithm, the three most important evaluation indicators are robustness, imperceptibility, and capacity.

Robustness: Robustness measures the ability of a watermarking model to recover the original watermark after an image has undergone intentional or unintentional image processing during transmission over an electronic or non-electronic channel. Bit error rate (BER) and normalized cross-correlation (NCC) are usually used as the objective evaluation metrics, which are expressed as Equations (7) and (8), respectively:

$$\mathrm{BER} = \frac{1}{L}\sum_{i=1}^{L}\left|w_i - w_i'\right| \tag{7}$$

$$\mathrm{NCC} = \frac{\sum_{i=1}^{L}(w_i - \bar{w})(w_i' - \bar{w}')}{\sqrt{\sum_{i=1}^{L}(w_i - \bar{w})^2}\,\sqrt{\sum_{i=1}^{L}(w_i' - \bar{w}')^2}} \tag{8}$$

where $w_i$ and $\bar{w}$ represent the $i$th bit of the original watermark and the mean value of the original watermark, respectively; $w_i'$ and $\bar{w}'$ represent the $i$th bit of the extracted watermark and the mean value of the extracted watermark; $L$ represents the length of the watermark.
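As a minimal sketch, BER and the mean-removed NCC can be computed directly from two bit sequences of equal length. The function names and the choice to return 0 when one watermark is constant (zero variance) are assumptions made for illustration.

```python
import math


def bit_error_rate(original, extracted):
    """Fraction of watermark bits that differ after extraction."""
    if len(original) != len(extracted):
        raise ValueError("watermarks must have the same length")
    return sum(a != b for a, b in zip(original, extracted)) / len(original)


def ncc(original, extracted):
    """Mean-removed normalized cross-correlation between two watermarks."""
    n = len(original)
    mean_o = sum(original) / n
    mean_e = sum(extracted) / n
    num = sum((a - mean_o) * (b - mean_e) for a, b in zip(original, extracted))
    den = math.sqrt(sum((a - mean_o) ** 2 for a in original)
                    * sum((b - mean_e) ** 2 for b in extracted))
    # Assumed convention: a constant watermark has undefined NCC, reported as 0.
    return num / den if den else 0.0


w = [1, 0, 1, 1, 0, 0, 1, 0]
w_hat = [1, 0, 1, 0, 0, 0, 1, 0]  # one bit flipped by an attack
print(bit_error_rate(w, w_hat), ncc(w, w_hat))
```

A lower BER and an NCC closer to 1 both indicate that the extracted watermark is closer to the original.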

Imperceptibility: Imperceptibility measures the perceptual impact of the embedded watermark on the whole image after embedding (i.e., the watermarked image should be indistinguishable from the original image). Peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) are usually used as the objective evaluation metrics, which are expressed as Equations (9) and (10), respectively:

$$\mathrm{PSNR} = 10\log_{10}\frac{W \cdot H \cdot \mathrm{MAX}^2}{\sum_{i=1}^{W}\sum_{j=1}^{H}\left(I_o(i,j) - I_w(i,j)\right)^2} \tag{9}$$

$$\mathrm{SSIM} = \frac{(2\mu_o\mu_w + C_1)(2\sigma_{ow} + C_2)}{(\mu_o^2 + \mu_w^2 + C_1)(\sigma_o^2 + \sigma_w^2 + C_2)} \tag{10}$$

where $W$ denotes the width of the original image; $H$ denotes the height of the original image; $\mathrm{MAX}$ denotes the maximum gray level of the original image; $\mu_o$ and $\mu_w$ denote the mean gray values of the original image and the watermarked image, respectively; $\sigma_o^2$ and $\sigma_w^2$ denote the variances of the gray values of the original image and the watermarked image, respectively; $I_o$ and $I_w$ denote the original image and the watermarked image; $\sigma_{ow}$ denotes the covariance of the original image and the watermarked image; $C_1$ and $C_2$ are constants in the range [$10^{-4}$, $9 \times 10^{-4}$].
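The two imperceptibility metrics can be sketched in a few lines of Python. Two simplifications are assumed here: images are lists of rows with intensities scaled to [0, 1] (so `max_val` is 1), and SSIM is computed once over the whole image, whereas practical implementations usually average it over local sliding windows. The default constants `c1` and `c2` are likewise assumed values for this intensity range.

```python
import math


def psnr(original, watermarked, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    h, w = len(original), len(original[0])
    sq_err = sum((original[i][j] - watermarked[i][j]) ** 2
                 for i in range(h) for j in range(w))
    if sq_err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(w * h * max_val ** 2 / sq_err)


def global_ssim(original, watermarked, c1=1e-4, c2=9e-4):
    """SSIM over a single global window (a simplification of windowed SSIM)."""
    x = [v for row in original for v in row]
    y = [v for row in watermarked for v in row]
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((v - mu_x) ** 2 for v in x) / n
    var_y = sum((v - mu_y) ** 2 for v in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    return (((2 * mu_x * mu_y + c1) * (2 * cov + c2))
            / ((mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)))
```

A higher PSNR and an SSIM closer to 1 both indicate that the watermarked image is closer to the original.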

Capacity: Capacity is the maximum number of watermark bits that the model can embed while maintaining the required imperceptibility and robustness. It is mutually constrained with imperceptibility and robustness: increasing the embedding capacity decreases imperceptibility and robustness, and vice versa. The number of embedded watermark bits per pixel (bpp) is usually used to measure the capacity of the model, which is expressed as Equation (11):

$$\mathrm{bpp} = \frac{N_w}{N_o} \tag{11}$$

where $N_w$ denotes the number of watermark bits, and $N_o$ denotes the total number of original image pixels.

2.2.3. Attacks of Robust Watermarking

In the end-to-end watermarking framework, the attack simulation layer plays a decisive role in improving the robustness of the framework. However, updating parameters by backpropagation requires every node in the computation graph to be differentiable. For non-differentiable attacks, gradients cannot pass through the attack, so the model cannot update its parameters during backpropagation. Attacks are therefore subdivided here according to whether they are differentiable.

Differentiable Attacks:

Noise and Filtering Attacks: Noise and filtering attacks are intentional or unintentional distortions applied to the watermarked image during transmission over an electronic channel, such as Gaussian noise, salt-and-pepper noise, Gaussian filtering, and median filtering. Robustness against them can usually be improved by generating adversarial samples in the attack simulation layer (ASL).

Geometric Attacks: Applying geometric attacks to an image can break the synchronization between the watermark decoder and the watermarked image. Geometric attacks can be subdivided into rotation, scaling, translation, cropping, etc.
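The following sketch illustrates, in plain Python, what a few of the noise and geometric attacks above do to a watermarked image represented as a list of rows with intensities in [0, 1]. It is only meant to show how attacked samples can be generated; it is not a differentiable implementation, which a trainable attack simulation layer would require. All function names and parameter values are assumptions.

```python
import random


def gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clip the result to [0, 1]."""
    return [[min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in row]
            for row in image]


def salt_and_pepper(image, prob, rng):
    """Replace each pixel by 0 (pepper) or 1 (salt) with total probability prob."""
    out = []
    for row in image:
        new_row = []
        for v in row:
            u = rng.random()
            if u < prob / 2:
                new_row.append(0.0)
            elif u < prob:
                new_row.append(1.0)
            else:
                new_row.append(v)
        out.append(new_row)
    return out


def crop(image, top, left, height, width):
    """Keep only a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def translate(image, dy, dx, fill=0.0):
    """Shift the image by (dy, dx) pixels, filling uncovered pixels with fill."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            si, sj = i - dy, j - dx
            if 0 <= si < h and 0 <= sj < w:
                out[i][j] = image[si][sj]
    return out


rng = random.Random(0)  # fixed seed, only to make the sketch reproducible
watermarked = [[0.5] * 4 for _ in range(4)]
attacked = [
    gaussian_noise(watermarked, 0.05, rng),
    salt_and_pepper(watermarked, 0.1, rng),
    crop(watermarked, 1, 1, 2, 2),
    translate(watermarked, 1, 0),
]
```

Noise attacks leave pixel positions unchanged and perturb values, whereas cropping and translation leave values unchanged and move or discard positions, which is what breaks synchronization with the decoder.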

Non-differentiable Attacks:

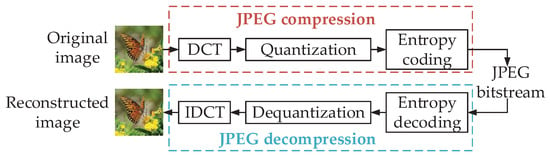

Joint Photographic Experts Group (JPEG) Attacks: The JPEG standard has two basic compression methods: the DCT-based method and the lossless predictive method. In this survey, we focus on DCT-based JPEG compression, which consists of DCT, inverse DCT, quantization, inverse quantization, entropy coding, and entropy decoding, together with color conversion and sampling, as shown in Figure 4. Quantization and dequantization are non-differentiable processes because of the rounding involved, so JPEG compression cannot be directly included as a simulated attack in an end-to-end trained model.

Figure 4.

A flowchart of JPEG compression.
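The effect of quantization on gradients can be seen numerically. In the sketch below, a single DCT coefficient is quantized and dequantized with an assumed step size, and the derivative of this operation is estimated by central finite differences. Because rounding is piecewise constant, the estimate is zero away from the rounding thresholds, so no useful training signal would pass back through this step. The helper names and the step size are hypothetical.

```python
def jpeg_quantize(coeff, step):
    """Quantize and then dequantize one DCT coefficient with a given step size."""
    return round(coeff / step) * step


def finite_difference(f, x, eps=1e-4):
    """Central finite-difference estimate of the derivative of f at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


step = 16.0  # assumed quantization step for this coefficient
coeff = 37.0
reconstructed = jpeg_quantize(coeff, step)
grad = finite_difference(lambda c: jpeg_quantize(c, step), coeff)
print(reconstructed, grad)
```

Here the coefficient 37 is reconstructed as 32, and small changes in the input leave the output unchanged.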

Screen-shooting Attacks: When a watermarked image displayed on a screen is captured by a camera, it inevitably undergoes a series of digital-to-analog (D/A) and analog-to-digital (A/D) conversions, both of which seriously affect the extraction of the watermark.

Agnostic Attacks: Agnostic attacks are attacks about which the model has no prior information. For neural network models, it is difficult for the encoder and decoder to adapt to agnostic attacks without training samples generated from prior knowledge of the attack.

3. Image Forgery Detection Based on Deep Learning

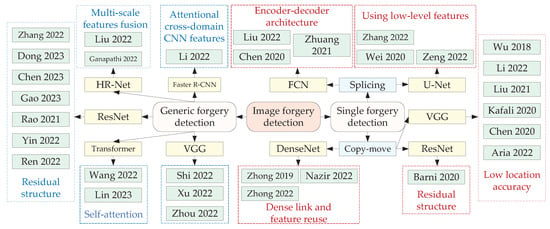

Passive image forgery detection methods can be divided into single-type forgery detection and generic forgery detection, depending on the types of forgery they detect. Single-type forgery detection methods can only detect specific types of tampered images and include image copy-move forgery detection and image splicing forgery detection. Generic forgery detection methods can be applied to different types of tampered images, including copy-move, splicing, and removal. Diverse passive forgery detection methods are described in this section. A table comparing the performance of passive forgery detection methods from different aspects is given at the end of this section. An overview of image forgery detection based on deep learning is shown in Figure 5.

Figure 5.

An overview of image forgery detection based on deep learning.

3.1. Image Copy-Move Forgery Detection

CMFD methods detect tampered images by extracting features associated with tampering. Features can be captured either globally for the entire image or locally for individual regions. The choice of feature extraction method greatly affects the performance of CMFD methods. CMFD methods are divided into two categories: conventional manual feature-based methods and deep learning-based methods.

The conventional manual feature methods can be divided into block-based and keypoint-based CMFD methods. Block-based CMFD methods can locate tampered regions accurately, but they have high computational complexity and have difficulty resisting large-scale rotation and scaling. Keypoint-based CMFD methods were proposed to solve these problems. They use keypoint extraction techniques to extract keypoints from the image and find similar features using feature-matching algorithms. Keypoint-based CMFD methods can accurately locate the tampered regions of common tampered images, but they suffer from two main problems: smooth regions yield few keypoints, so tampering in those regions may go undetected, and the algorithms generalize poorly. Deep learning-based CMFD methods were proposed to solve the problems of conventional manual feature methods.

With the rapid development of deep learning techniques, deep learning-based methods have been applied to tampering detection, and deep learning-based CMFD methods have shown large performance improvements. A well-trained model can learn the latent features of images and use the differences between authentic and tampered images to identify the tampered ones. Compared with conventional methods, deep learning-based CMFD can provide more accurate and comprehensive feature descriptors.

To detect whether an image was tampered with, Rao and Ni [38] proposed a deep convolutional neural network (DCNN)-based model that used the 30 basic high-pass filters of the spatial rich model (SRM) to initialize the weights of the first layer, which helped suppress the effects of image semantics and accelerated network convergence. The image features were extracted by the convolutional layers and classified using a support vector machine (SVM) to decide whether the image was tampered with or not. Kumar and Gupta [39] proposed a convolutional neural network (CNN)-based model that automatically extracts image features for tampering classification and is robust to image compression, scaling, rotation, and blurring, but locating the tampered regions would additionally require analysis at the pixel level. The methods in [38,39] only detect whether an image has been tampered with and cannot localize the tampered regions, which limits their application.
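To illustrate why high-pass filters suppress image semantics, the sketch below applies one 5 × 5 high-pass kernel, the "KV" kernel commonly listed among the SRM filters, to a grayscale image given as a list of rows. This is an illustration with a single filter under stated assumptions, not the filter bank or network of [38]; the function name and the valid-mode boundary handling are choices made here.

```python
# 5x5 "KV" high-pass kernel (to be divided by 12); its entries sum to zero.
KV = [[-1, 2, -2, 2, -1],
      [2, -6, 8, -6, 2],
      [-2, 8, -12, 8, -2],
      [2, -6, 8, -6, 2],
      [-1, 2, -2, 2, -1]]


def high_pass_residual(image, kernel=KV, scale=12.0):
    """Valid-mode 2D filtering that returns the noise residual map."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    out = []
    for i in range(h - k + 1):
        row = []
        for j in range(w - k + 1):
            acc = sum(kernel[a][b] * image[i + a][j + b]
                      for a in range(k) for b in range(k))
            row.append(acc / scale)
        out.append(row)
    return out


flat = [[100] * 6 for _ in range(6)]                       # constant region
ramp = [[i + 2 * j for j in range(6)] for i in range(6)]   # smooth gradient
print(high_pass_residual(flat), high_pass_residual(ramp))
```

Both the constant region and the smooth gradient produce an all-zero residual, so only deviations from smooth content, such as noise or tampering traces, survive the filter.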

To widen the applicability of the algorithms and localize the copied and moved regions, Li et al. [40] proposed a method combining image segmentation and DCNN, using Super-BPD [41] to segment the image and obtain its edge information. VGG16 and atrous spatial pyramid pooling (ASPP) [42] networks extracted multi-scale image features to improve accuracy, and a feature matching module was introduced to localize the tampered regions. However, the segmentation module leads to high computational complexity. Liu et al. [43] designed a two-stage detection framework. The first stage introduced atrous convolution with autocorrelation matching based on spatial attention to improve similarity detection. In the second stage, the SuperGlue method was used to eliminate false-alarm regions and repair incomplete regions, thus improving detection accuracy. Zhong and Pun [44] created an end-to-end deep neural network that followed the Inception architecture and fused multi-scale convolutions to extract multi-scale features. Kafali et al. [45] proposed a nonlinear inception module based on a second-order Volterra kernel that considers both the linear and nonlinear interactions among pixels. Nazir et al. [46] created an improved mask region-based convolutional network, which used the DenseNet-41 model to extract deep features and classified them using Mask-RCNN [47] to locate tampered regions. Zhong et al. [48] proposed a coarse-fine spatial channel boundary attention network and designed an attention module for boundary refinement to obtain finer forgery details and improve detection performance. The methods in [38,39,40,43,44,45] improved detection performance and robustness but did not distinguish between source and target regions.

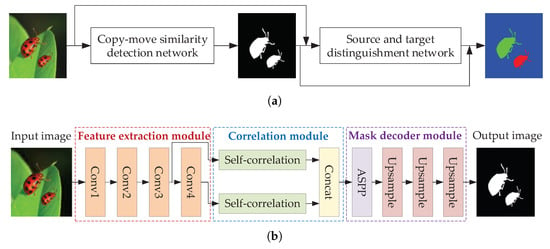

To correctly distinguish between source and target regions, some methods have been proposed. Wu et al. [28] proposed the first parallel network for distinguishing between source and target. The manipulation detection branch located potential manipulation regions by visual artifacts, and the similarity detection branch located source and target regions by visual similarity. Chen et al. [49] used a serial structure and added the atrous convolutional attention module in the similarity detection phase to improve the detection accuracy. The network structure is shown in Figure 6. Aria et al. [50] proposed a quality-independent detection method, which used a generative adversarial network to enhance image quality and a two-branch convolutional network for tampering detection. The network could detect multiple tampered regions simultaneously and distinguish the source and target of tampering. It was resistant to various post-processing attacks and had good detection results in low-quality tampered images. Barni et al. [51] proposed a multi-branch CNN network, which exploited the irreversibility caused by interpolation traces and the inconsistency of target region boundaries to distinguish source and target regions.

Figure 6.

A serial network for CMFD: (a) the architecture of the proposed scheme and (b) the architecture of copy-move similarity detection network. Adapted from [49].
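The idea behind a similarity detection branch can be sketched without a neural network: each image location is described by a feature vector, all pairs of locations are compared, and pairs with very high similarity are candidate source/target regions. In the sketch below, hand-written vectors stand in for learned CNN features, and cosine similarity with a fixed threshold stands in for the learned matching; both are assumptions for illustration, not the architecture of [28] or [49].

```python
import math


def cosine_similarity_matrix(features):
    """Pairwise cosine similarity between per-location feature vectors."""
    norms = [math.sqrt(sum(v * v for v in f)) or 1.0 for f in features]
    n = len(features)
    return [[sum(a * b for a, b in zip(features[i], features[j]))
             / (norms[i] * norms[j]) for j in range(n)] for i in range(n)]


def best_matches(sim, threshold=0.99):
    """For each location, the most similar other location if above threshold."""
    matches = []
    for i, row in enumerate(sim):
        j = max((k for k in range(len(row)) if k != i), key=lambda k: row[k])
        if row[j] >= threshold:
            matches.append((i, j))
    return matches


# Locations 0 and 2 share the same descriptor, as after a copy-move operation.
features = [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
print(best_matches(cosine_similarity_matrix(features)))
```

Similarity alone is symmetric, which is why it finds both regions but cannot tell which one is the source; the methods above add a manipulation detection branch or other cues for that purpose.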

3.2. Image Splicing Forgery Detection

Image splicing forgery is also one of the most popular ways to manipulate the content of an image. The manipulation operation of splicing is copying an area from one image and pasting it to another image. The tampered images are a serious threat to the security of image information. It is crucial to develop suitable methods to detect image splicing forgery. Image splicing detection techniques are divided into manual feature-based methods and deep learning-based methods.

The current manual feature-based methods can be divided into three categories: textural feature-based techniques, noise-based techniques, and other techniques. Textural feature-based techniques detect image splicing from the difference between the local frequency distribution of the tampered image and that of the authentic image. Commonly used textural feature descriptors include the local binary pattern (LBP) [52], gray level co-occurrence matrix (GLCM) [53], and local directional pattern (LDP) [54]. Since a spliced image is composed from two different images, the noise distribution of the tampered image is changed. Noise-based techniques therefore detect tampering by estimating the noise of the tampered image. Spliced images can also be detected by fusing multiple features. Manual feature-based methods use different descriptors to obtain specific features, so their detection performance varies across datasets and their generalization is poor. Deep learning-based methods can automatically learn a large number of features, which improves the accuracy and generalization ability of image splicing detection.
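As an example of a textural descriptor, the basic 8-neighbor LBP compares each pixel with its eight neighbors and packs the comparison results into one byte; the histogram of these codes over a region then serves as a texture feature. The sketch below assumes a clockwise bit order starting at the top-left neighbor and the "neighbor greater than or equal to center" convention; other orderings are equally valid as long as they are used consistently.

```python
def lbp_code(image, i, j):
    """8-neighbor LBP code of pixel (i, j); a bit is 1 if neighbor >= center."""
    center = image[i][j]
    # Assumed order: clockwise, starting from the top-left neighbor.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (di, dj) in enumerate(offsets):
        if image[i + di][j + dj] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for i in range(1, len(image) - 1):
        for j in range(1, len(image[0]) - 1):
            hist[lbp_code(image, i, j)] += 1
    return hist


patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
print(lbp_code(patch, 1, 1))
```

Because a spliced region usually comes from an image with different texture statistics, its local LBP histogram tends to differ from that of the surrounding authentic content.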

Deep learning-based methods can learn and optimize feature representations for forgery forensics directly, which has inspired researchers to develop different techniques to detect image splicing. In recent years, the U-Net structure has been widely used in splicing forgery detection. Wei et al. [55] proposed a tamper detection and localization network based on U-Net with multi-scale control. The network used a rotated residual structure to enhance its feature learning ability. Zeng et al. [56] proposed a multitask model for locating splicing tampering in an image, which fused an attention mechanism, a densely connected network, ASPP, and U-Net. The model can capture more multi-scale features while expanding the receptive field, improving the detection accuracy of image splicing tampering. Zhang et al. [57] created a multi-task squeeze and excitation network for splicing localization. The network consisted of a label mask stream and an edge-guided stream, both of which used the U-Net architecture. Squeeze and excitation attention modules (SEAMs) were incorporated into the multi-task network to recalibrate the fused features and enhance the feature representation.

Many researchers have also applied fully convolutional networks (FCNs), which are commonly used in semantic segmentation, to image splicing forgery detection. Chen et al. [58] proposed a residual-based fully convolutional network for image splicing localization. Residual blocks were added to the FCN to make the network easier to optimize. Zhuang et al. [59] created a dense fully convolutional network for image tampering localization. This structure comprised dense connections and dilated convolutions to capture subtle tampering traces and obtain finer feature maps for prediction. Liu et al. [60] proposed an FCN with a noise feature. The network extracted noise maps in the pre-processing stage to expose subtle changes in the tampered images, which enhanced its generalization ability, and added a region proposal network to improve robustness. As a result, the technique could accurately locate the tampered regions of images.

In recent years, attention mechanisms have developed rapidly and have shown great advantages in natural language processing. Many researchers have started to incorporate attention mechanisms into tampering detection. Ren et al. [61] proposed a multi-scale attention context-aware network and designed a multi-scale multi-level attention module, which not only effectively resolved the inconsistency of features at different scales but also automatically adjusted the feature coefficients to obtain a finer feature representation. To address the poor accuracy at splicing boundaries, Sun et al. [62] proposed an edge-enhanced transformer network. A two-branch edge-aware transformer was used to generate forgery features and edge features to improve the accuracy of tampering localization.

3.3. Image Generic Forgery Detection

To detect multiple types of tampering, more generalizable algorithms have been developed. Zhang et al. [63] proposed a two-branch noise and boundary network that used an improved constrained convolution to extract the noise map, which effectively solved the problem of training instability. An edge prediction module was added to extract the tampered edge information and improve localization accuracy. However, the detection performance was poor when the tampered image contained little tampering information. Dong et al. [64] designed a multi-view, multi-scale supervised image forgery detection model. The model combined the boundary and noise features of the tampered regions to learn semantic-independent features, which improved detection accuracy and generalization. However, the detection performance was poor when the tampered region belonged to the background. Chen et al. [65] proposed a network based on signal noise separation to improve robustness. The signal noise separation module separated the tampered regions from complex background regions containing post-processing noise, reducing the negative impact of post-processing operations on the image. Liu et al. [66] proposed a network for learning and enhancing multiple tamper traces, which fused global noise, local noise, and detailed artifact features for forgery detection and thereby achieved high generalization and detection accuracy. However, when the tampering artifacts are weak, the lack of effective tampering traces leaves tampered regions undetected. Wang et al. [67] proposed a multimodal transformer framework consisting of three main modules (high-frequency feature extraction, an object encoder, and an image decoder) to address the difficulty of capturing subtle tampering that is invisible in the RGB domain. The frequency features of the image were extracted first, and the tampered regions were then identified by combining RGB features and frequency features. The effectiveness of the method was shown on different datasets.
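The constrained convolution mentioned for [63] builds on a simple idea that can be sketched directly. The example below shows the basic constraint commonly used for noise residual extraction (centre weight fixed to -1, remaining weights normalized to sum to 1), not the improved variant of [63]; the function names are hypothetical. Under this constraint the filter outputs the difference between a pixel predicted from its neighbours and the pixel itself, so image content is suppressed and only the residual remains.

```python
def project_constrained(kernel):
    """Project a square kernel so that centre = -1 and the rest sum to 1.

    Assumes the off-centre weights do not sum to zero.
    """
    size = len(kernel)
    mid = size // 2
    off_centre_sum = sum(kernel[i][j]
                         for i in range(size) for j in range(size)
                         if (i, j) != (mid, mid))
    projected = [[kernel[i][j] / off_centre_sum for j in range(size)]
                 for i in range(size)]
    projected[mid][mid] = -1.0
    return projected


def residual_at(img, kernel, r, c):
    """Apply the kernel centred on pixel (r, c) and return the response."""
    size = len(kernel)
    mid = size // 2
    return sum(kernel[i][j] * img[r + i - mid][c + j - mid]
               for i in range(size) for j in range(size))
```

In a trainable network this projection would be re-applied after each weight update; on a perfectly flat region the response is zero.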

To achieve a more refined prediction of tampering masks, progressive mask-decoding approaches are used. Liu et al. [68] proposed a progressive spatio-channel correlation network. The network used two paths: the top-down path acquired local and global features of the image, and the bottom-up path predicted the tampered mask. The spatio-channel correlation module was introduced to capture the spatial and channel correlation of features and extract features with global clues, enabling the network to cope with various attacks and improving its robustness. To solve the problem of irrelevant semantic information, Shi et al. [69] proposed a progressively-refined neural network. Tampered regions were localized progressively in a coarse-to-fine workflow, and a rotated residual structure was used to suppress the image content during generation, finally yielding the refined mask.

To solve the existing problems of low detection accuracy and poor boundary localization, Gao et al. [70] proposed an end-to-end two-stream boundary-aware network for generic image forgery detection and localization. The network introduced an adaptive frequency selection module to adaptively select appropriate frequencies to mine inconsistent statistical information and eliminate the interference of redundant information. Meanwhile, a boundary artifact localization module was used to improve the boundary localization effect. To address the problem of poor generalization ability to invisible manipulation, Ganapathi et al. [71] proposed a channel attention-based image forgery detection framework. The network introduced a channel attention module to detect and localize forged regions using inter-channel interactions to focus more on tampered regions and achieve accurate localization. To identify forged regions by capturing the connection between foreground and background features, Xu et al. [72] proposed a mutually complementary forgery detection network, which consisted of two encoders for extracting foreground and background features, respectively. A mutual attention module was used to extract complementary information from the features, which consisted of self-feature attention and cross-feature attention. The network significantly improved the localization of forged regions using the complementary information between foreground and background.

To improve the generalization ability of the network model, Rao et al. [73] proposed a multi-semantic conditional random field model to distinguish the tampered boundary from the original boundary for the localization of forged regions. Attention blocks were used to guide the network to capture more intrinsic features of the boundary transition artifacts. Attention maps with multiple semantics were used to make full use of local and global information, thus improving the generalization ability of the algorithm. Li et al. [74] proposed an end-to-end attentional cross-domain network. The network consisted of three streams that extracted three types of features: visual perception, resampling, and local inconsistency. The fusion of multiple features effectively improved the generalization ability and localization accuracy of the algorithm. Yin et al. [75] proposed a multi-task network based on contrastive learning for detecting and localizing multiple manipulations. Contrastive learning was used to measure the consistency of the statistical properties of different regions, which enhanced the feature representation and improved detection and localization performance.

To solve the problems of low accuracy and insufficient training data, Zhou et al. [76] designed a coarse-to-fine tampering detection network based on a self-adversarial training strategy, which dynamically extended the training data to achieve higher accuracy. Meanwhile, to address the shortage of datasets, Ren et al. [77] designed a novel dataset, called the multi-realistic scene manipulation dataset, which consisted of three kinds of tampering (copy-move, splicing, and removal) and covered 32 different real-life tampering scenarios. A general and efficient search and recognition network was also proposed to reduce the computational complexity of forgery detection.

The state-of-the-art deep learning-based passive forgery detection algorithms and their performance comparison are described in Table 2. Table 2 describes the methods from four aspects: type of detection, backbone, robustness performance, and dataset.

Table 2.

A comparison of deep learning-based passive forgery detection methods.

4. Robust Image Watermarking Based on Deep Learning

Depending on whether gradients can be back-propagated through the attack, robust watermarking can be classified into two categories: watermarking robust to differentiable attacks and watermarking robust to non-differentiable attacks. For differentiable attacks, the adversarial simulation layer (ASL) can be introduced into the model to generate attack counterexamples directly, while non-differentiable attacks require other means to improve robustness, such as differentiable approximation, two-stage training, and network structure improvement.

4.1. Robust Image Watermark against Differentiable Attack

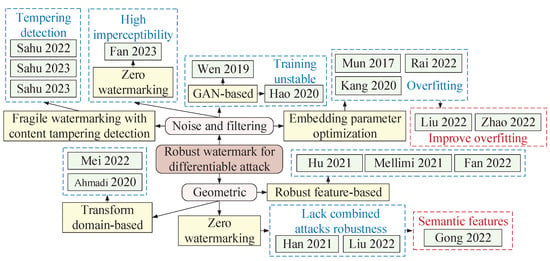

As shown in Figure 7, differentiable attacks can be further categorized into noise and filtering attacks, as well as geometric attacks.

Figure 7.

An overview of robustness enhancement methods for differentiable attacks.

4.1.1. Robust Image Watermark against Noise and Filtering Attack

Noise and filtering attacks apply noise or filtering directly to the pixels in the spatial domain or to the coefficients in the transform domain, which disturbs the amplitude coefficient synchronization of the codec. There are three methods for recovering amplitude synchronization: zero-watermarking, generative adversarial network (GAN) [82]-based methods, and embedding parameter optimization.

Gaussian attacks add Gaussian noise that obscures the details of an image and degrades the embedded watermark. The attack affects the position and intensity of the embedded watermark, which may result in incorrect extraction or a decrease in the quality of the extracted watermark information. To counter Gaussian attacks, traditional methods typically employ anti-noise and filtering techniques to enhance the robustness of the watermark. For example, adaptive filters [83] and noise estimation [84] can be used to reduce the impact of Gaussian noise. In deep learning approaches, methods such as adversarial training, zero-watermarking, and embedding parameter optimization are used to enhance robustness against Gaussian attacks. For example, Wen and Aydore [85] introduced adversarial training into the watermarking algorithm, where the distortion type and the distortion strength were adaptively selected, thus minimizing the decoding error.
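The effect of a Gaussian noise attack on decoding can be sketched with a toy additive watermark. Everything here is an assumption made for illustration: the watermark is one bit per pixel added as ±`strength`, extraction is non-blind (the cover is available), and pixel values are not clipped. None of this corresponds to a specific method in the survey; the point is only how the bit error rate (BER) grows with the noise standard deviation.

```python
import random


def embed(cover, bits, strength):
    """Add +strength for a 1 bit and -strength for a 0 bit."""
    return [p + (strength if b else -strength) for p, b in zip(cover, bits)]


def gaussian_attack(pixels, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [p + rng.gauss(0.0, sigma) for p in pixels]


def extract(received, cover):
    """Non-blind extraction: the sign of the difference gives the bit."""
    return [1 if r - c > 0 else 0 for r, c in zip(received, cover)]


def bit_error_rate(sent, decoded):
    return sum(s != d for s, d in zip(sent, decoded)) / len(sent)


def run_trial(sigma, n=2000, strength=2.0, seed=0):
    """Embed n random bits, attack with Gaussian noise, return the BER."""
    rng = random.Random(seed)
    cover = [rng.uniform(0, 255) for _ in range(n)]
    bits = [rng.randint(0, 1) for _ in range(n)]
    marked = embed(cover, bits, strength)
    attacked = gaussian_attack(marked, sigma, rng)
    return bit_error_rate(bits, extract(attacked, cover))
```

Calling `run_trial` with increasing `sigma` shows the BER rising from zero toward 0.5, which is the behaviour that adversarial training and the other techniques above try to counteract.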

Zero-watermarking: The conventional zero-watermarking algorithm consists of three steps: robust feature extraction from the original image, zero-watermark generation, and zero-watermark verification. To extract noise-invariant features, Fan et al. [86] first used a pre-trained Inception V3 [87] network to extract the image feature tensor and then extracted its low-frequency sub-bands with the DCT to generate a robust feature tensor. They combined the binary sequence generated by chaotic mapping with the watermark information through an exclusive-or (XOR) operation to obtain a noise-invariant zero-watermark.
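The three zero-watermarking steps can be sketched as follows, assuming the combination step is a bitwise XOR. The robust feature extractor (a pre-trained network followed by the DCT in [86]) is replaced here by a given list of feature values, the chaotic sequence comes from a logistic map with an assumed parameter `mu=3.99`, and all function names are hypothetical.

```python
def logistic_bits(x0, n, mu=3.99):
    """Generate n key bits by thresholding a logistic-map orbit."""
    x = x0
    bits = []
    for _ in range(n):
        x = mu * x * (1.0 - x)
        bits.append(1 if x >= 0.5 else 0)
    return bits


def binarize(features):
    """Turn real-valued features into bits by comparing with their mean."""
    mean = sum(features) / len(features)
    return [1 if f >= mean else 0 for f in features]


def make_zero_watermark(features, watermark, key):
    """Generation: XOR feature bits, key bits, and watermark bits.

    The image itself is never modified; only the result is stored.
    """
    f = binarize(features)
    k = logistic_bits(key, len(watermark))
    return [a ^ b ^ c for a, b, c in zip(f, k, watermark)]


def recover_watermark(features, zero_watermark, key):
    """Verification: XOR again with the (possibly attacked) feature bits."""
    f = binarize(features)
    k = logistic_bits(key, len(zero_watermark))
    return [a ^ b ^ c for a, b, c in zip(f, k, zero_watermark)]
```

As long as an attack does not flip the binarized features, `recover_watermark` returns the original watermark bits, which is why the choice of noise-invariant features matters.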

GAN-based: In a watermarking model, a GAN can generate high-quality images more efficiently through the competition between the discriminator (i.e., the watermarked image discriminator) and the generator (i.e., the encoder), which continuously update each other’s parameters under adversarial loss supervision. The decoding accuracy is improved indirectly by supervising the decoder through the total loss function. Wen and Aydore [85] introduced adversarial training in which the distortion type and strength were adaptively selected to minimize the decoding error. Hao et al. [88] added a high-pass filter before the discriminator so that the watermark tended to be embedded in the mid-frequency region of the image and, since the human visual system focuses more on the central region of the image, gave a higher weight to the central-region pixels in the loss function. However, the method could not resist geometric attacks effectively. Zhang et al. [89] proposed an embedding-guided end-to-end framework for robust watermarking, which used a prior knowledge extractor to obtain the chrominance and edge saliency of cover images to guide the watermark embedding. However, it could not be applied to practical scenarios such as printing, camera photography, and geometric attacks. Li et al. [90] designed a single-frame exposure optical image watermarking framework using a conditional generative adversarial network (CGAN) [91]. Yu [92] introduced an attention mechanism to generate attention masks that guide the encoder to generate better target images without disturbing the spotlight, and improved the reliability of the model by combining the GAN with a circular discriminant model and an inconsistency loss. However, refs. [85,88,89,90,92] did not effectively address the training instability of GANs, so none of them could further improve the balance between robustness and imperceptibility.

Embedding Parameter Optimization: The position and strength of the embedding directly determine the algorithm performance. Mun et al. [93] performed an iterative simulation of the attack on the watermarking system, but the method can only obtain one bit of watermark information from each sub-block. Kang et al. [94] first applied the DCT to the host image to extract the LH and HL sub-bands, to which the human eye is insensitive. Particle swarm optimization (PSO) was then used to find the best DCT coefficients and the best embedding strength to improve the imperceptibility and robustness of the watermarking algorithm. However, because PSO [95] overfitted during training, the model achieved good robustness only on the experimental dataset. Rai and Goyal [96] combined fuzzy, backpropagation neural network, and shark optimization algorithms. However, both [94] and [96] suffered from overfitting. To mitigate overfitting, Liu et al. [97] introduced a two-dimensional image information entropy loss to enhance the ability of the model to generate different watermarks. This ensured that the model could always assign enough information to a single host image for different watermark inputs and that the extractor could extract the watermark information completely, thereby enhancing the dynamic randomness of the watermark embedding. Zhao et al. [98] adopted an end-to-end robust image watermarking framework following the embed-attack-extract paradigm and incorporated a channel spatial attention mechanism in the embedding layer of the network. As the parameters were updated through forward and backward propagation during training, the embedding layer learned to focus on more effective channel and spatial information, which also steered the optimization toward increasing the accuracy of the extracted watermark. To sum up, optimizing the watermark embedding parameters helps to enhance the model’s resistance to noise and filtering attacks.

The deep learning-based image watermarking algorithms against noise and filtering attacks and their performance comparison are described in Table 3. Table 3 describes the methods from five aspects: watermark size (container size), category, method (effect), robustness, and dataset; the robustness entries are parameterized by the variances of Gaussian blur, Gaussian filtering, and Gaussian noise, respectively.

Table 3.

The comparison of deep learning-based image watermarking against noise and filtering.

Fragile Watermark with Content Tampering Detection: The methods above use robust watermarking for active forensics under noise and filtering attacks. The methods below use fragile watermarking to provide copyright protection under noise and filtering attacks together with proof of content tampering.

Sahu [104] proposed a fragile watermarking scheme based on the logistic map to effectively detect and locate the tampered regions of watermarked images. It exploited the sensitivity of the logistic map to generate watermark bits, performed a logical XOR operation between the first intermediate significant bit (ISB) and the watermark bit, and embedded the result in the rightmost least significant bits (LSBs). The watermarked images obtained by this method have high quality and good imperceptibility, with an average peak signal-to-noise ratio (PSNR) of 51.14 dB. The scheme can effectively detect and locate the tampered area of an image and can resist both intentional and unintentional attacks to a certain extent. However, it cannot recover the tampered region.
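The embedding rule described above can be sketched on a flat list of 8-bit pixels. This is a simplified illustration rather than the scheme of [104]: it assumes the "first ISB" is bit 1 (the bit next to the LSB), writes a single LSB per pixel, and uses a logistic map with an assumed parameter `mu=3.99` and a hypothetical scalar key. A PSNR helper is included because changing only the LSB bounds the per-pixel error by 1.

```python
import math


def logistic_bits(x0, n, mu=3.99):
    """Generate n watermark bits by thresholding a logistic-map orbit."""
    x = x0
    bits = []
    for _ in range(n):
        x = mu * x * (1.0 - x)
        bits.append(1 if x >= 0.5 else 0)
    return bits


def embed_fragile(pixels, key):
    """Write (ISB XOR watermark bit) into the LSB of every pixel."""
    marked = []
    for p, w in zip(pixels, logistic_bits(key, len(pixels))):
        isb = (p >> 1) & 1  # assumed position of the first ISB
        marked.append((p & ~1) | (isb ^ w))
    return marked


def locate_tampering(pixels, key):
    """Return indices whose LSB no longer matches ISB XOR watermark bit."""
    wbits = logistic_bits(key, len(pixels))
    return [i for i, (p, w) in enumerate(zip(pixels, wbits))
            if (p & 1) != (((p >> 1) & 1) ^ w)]


def psnr(original, marked):
    """Peak signal-to-noise ratio in dB for 8-bit pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(original, marked)) / len(original)
    return math.inf if mse == 0 else 10 * math.log10(255 ** 2 / mse)
```

This toy check only covers the two lowest bits of each pixel, so a modification confined to the higher bits would go unnoticed; practical schemes add block-level or hash-based checks to close that gap.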

Sahu et al. [105] studied content tampering in multimedia watermarking and introduced a feature association model based on a multi-task learning framework that can detect multiple modifications based on location and time. Multi-task learning frameworks leverage the interrelationships between multiple related problems or tasks and can effectively learn from shared representations to provide better models. The work also used convolutional neural networks (CNNs) for climate factor prediction tasks; these can be trained and evaluated on large-scale datasets to learn different convolutional models using regression models and distance-based loss functions. The results show that the deep learning-based method can achieve high precision and accuracy. The image data were collected from reliable sources and their authenticity was verified manually, including images collected from AMOS (archive of many outdoor scenes) and Internet webcams with time stamps, camera ids, and location annotations. This ensures that the collected information is genuine.

There are still difficulties in detecting tampered image metadata. The literature points out that the open-source ExifTool is used to access and modify image metadata across digital workflows, but metadata handled in this way is easy to tamper with, so additional evidence is needed to prove the authenticity of image content, such as the analysis of other climate factor images. To sum up, the advantages of this work are a reliable data acquisition process, a multi-task learning framework, and deep learning-based prediction accuracy. However, it is difficult to detect tampered image metadata, and the dataset is not evenly distributed. The work can also serve as a baseline for future research on a CNN-based content retrieval and tampering strategy.

Sahu et al. [106] proposed an efficient dual image-based reversible fragile watermarking scheme (DI-RFWS), which can accurately detect and locate the tampered region in an image. The scheme used a pixel adjustment strategy to embed two secret bits in each host image (HI) pixel to obtain a double-watermarked image (WI). According to the watermark information, the non-boundary pixels of the image are modified by at most ±1. Thanks to its reversibility, the proposed scheme can be adapted to a wide range of contemporary applications.

4.1.2. Robust Image Watermark against Geometric Attacks

Geometric attacks mainly include rotation, cropping, and translation, which change the spatial position relationship of pixels and affect the spatial synchronization of the codec. According to how spatial synchronization is recovered, the methods can be divided into transform domain-based, zero-watermarking, and robust feature-based methods.

For cropping attacks, when the cropping involves the watermark embedding region, the watermark extraction algorithm may not match the watermark features correctly, which reduces the quality of the extracted watermark information. Multi-location embedding and multi-watermark embedding are among the main methods for improving robustness against cropping attacks. Hsu and Tu [107] used the sinusoidal function and its wavelength to design the embedding rule, which gives each watermark bit multiple copies spread across different blocks. Therefore, even under cropping attacks, the surviving copies of the watermark can still be used for copyright authentication.
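The benefit of spreading several copies of each bit across blocks can be sketched with a one-dimensional toy model. It does not reproduce the sinusoidal embedding rule of [107]; blocks are abstracted to single bit values, a crop is modeled by marking a contiguous run of blocks as lost (`None`), and all names are hypothetical.

```python
def embed_redundant(bits, copies):
    """Repeat the whole bit string so copies of one bit lie far apart."""
    return list(bits) * copies


def crop(blocks, start, length):
    """Model cropping by marking a contiguous run of blocks as lost."""
    return [None if start <= i < start + length else b
            for i, b in enumerate(blocks)]


def extract_majority(blocks, n_bits):
    """Recover each bit by majority vote over its surviving copies.

    A bit with no surviving copy (or a tied vote) is decoded as 0.
    """
    decoded = []
    for i in range(n_bits):
        votes = [b for b in blocks[i::n_bits] if b is not None]
        decoded.append(1 if 2 * sum(votes) > len(votes) else 0)
    return decoded
```

Because the copies of a given bit are separated by `n_bits` positions, a crop shorter than the watermark length removes at most one copy of each bit, and the remaining copies still decode correctly.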

Transform Domain-based: Transform domain-based watermarking algorithms embed the watermark into transform domain coefficients, which effectively prevents geometric attacks from corrupting the synchronization of the codec. Ahmadi et al. [108] implemented watermark embedding and extraction in a transform domain (such as DCT, DWT, or Haar) and introduced circular convolution in the convolution layer used for feature extraction to diffuse the watermark information in the transform domain, which effectively improved robustness against cropping. Mei et al. [109] embedded a watermark in the DCT domain and introduced an attention mechanism to calculate the confidence of each image block. In addition, joint source-channel coding was introduced so that the algorithm maintained good robustness and imperceptibility under the gray image watermarking with the background.

Zero-watermarking: The idea of zero-watermarking is to obtain geometrically invariant features from the robust features of an image and to generate the zero-watermark by combining them with the watermark through a sequence operation such as exclusive-or (XOR). Han et al. [110] used a pre-trained VGG19 [111] network to extract original image features and selected DFT-transformed low-frequency sub-bands to construct a medical image feature matrix. Liu et al. [112] used the neural style transfer (NST) [113] technique combined with a pre-trained VGG19 network to extract the style features of the original image, fused the original image style with the original watermark content to obtain a style fusion image, and applied Arnold scrambling [114] to obtain the zero-watermark. Gong et al. [115] used the low-frequency DCT features of the original image as labels. Skip connections and loss functions were applied to enhance and extract high-level semantic features. However, none of the methods in [110,112,115] was effectively robust to multiple types of attacks.
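The Arnold transform used above to scramble the fused image is a pixel permutation of a square image. The sketch below uses the common form (x, y) → (x + y mod N, x + 2y mod N); the exact variant and number of iterations in [112] are not stated here, so both are assumptions, and the function names are hypothetical.

```python
def arnold_scramble(img, iterations=1):
    """Permute the pixels of a square image with the Arnold cat map."""
    n = len(img)
    for _ in range(iterations):
        out = [[None] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                # Pixel (x, y) moves to ((x + y) mod n, (x + 2y) mod n).
                out[(x + y) % n][(x + 2 * y) % n] = img[x][y]
        img = out
    return img


def arnold_unscramble(img, iterations=1):
    """Invert arnold_scramble by reading pixels back from their targets."""
    n = len(img)
    for _ in range(iterations):
        out = [[None] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                out[x][y] = img[(x + y) % n][(x + 2 * y) % n]
        img = out
    return img
```

The map is periodic, so the iteration count acts as a weak key: for a 4 × 4 image, for instance, three iterations return the original arrangement.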

Robust Feature-based: Unlike zero-watermarking, this approach searches for robust feature coefficients or tensors in the image and embeds the watermark in them. Hu et al. [116] embedded the watermark in low-order Zernike moments with rotation and interpolation invariance. Mellimi et al. [117] proposed a robust image watermarking scheme based on the lifting wavelet transform (LWT) and a deep neural network (DNN). The DNN was trained to identify the changes caused by attacks in different frequency bands and select the best sub-bands for embedding. However, it was not robust to speckle noise. Fan et al. [118] combined the multiscale features in a GAN and used pyramid filters and multiscale maximum pooling to fully learn the watermark feature distribution and improve the geometric robustness of watermarking.

The state-of-the-art deep learning-based image watermarking algorithms against geometric attacks and their performance comparison are described in Table 4. Table 4 describes the methods from five aspects: watermark size (container size), category, method (effect), robustness, and dataset; the robustness entries are parameterized by the scaling factor of the scaling attack, the cropping ratio of the cropping attack, and the clockwise rotation of the rotation attack.

Table 4.

A comparison of deep learning-based image watermarking against geometric attacks.

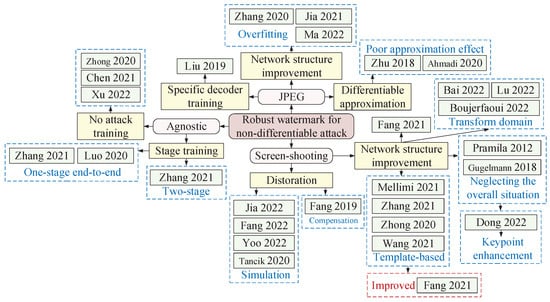

4.2. Robust Image Watermark against Non-Differentiable Attack

As shown in Figure 8, non-differentiable attacks can be further categorized into JPEG attacks, screen-shooting attacks, and agnostic attacks.

Figure 8.

An overview of robustness enhancement methods for non-differentiable attacks.

4.2.1. Robust Image Watermark against JPEG Attack

In recent years, end-to-end watermarking algorithms based on deep learning have emerged. Thanks to the powerful feature extraction capabilities of CNNs, watermarks can be covertly embedded in pixel regions to which the human eye is less sensitive (such as diagonal lines, regions with complex texture, and bright regions that vary slowly), yielding watermarked images that are very similar to the originals. In end-to-end training, robustness is improved by introducing an attack simulation layer that applies differentiable attacks to the watermarked image to generate attacked counterexamples; the decoder parameters are then updated through decoding losses between the original and decoded watermarks, such as the mean square error (MSE) or binary cross entropy (BCE). However, real JPEG compression is non-differentiable, so it cannot be placed in the end-to-end network and used directly for back-propagation updates of the model parameters. To address this problem, recent studies can be divided into three directions according to how the JPEG counterexamples are generated: differentiable approximation, specific decoder training, and network structure improvement.

Differentiable Approximation: Zhu et al. [121] first proposed the JPEG-MASK approximation, which sets the high-frequency DCT coefficients to 0, retaining only the 5 × 5 low-frequency coefficients of the Y channel and the 3 × 3 low-frequency coefficients of the U and V channels, and thus simulates JPEG to some extent. Based on [121], Ahmadi et al. [108] added a residual network with adjustable weight factors to the watermark embedding network to allow the balance between imperceptibility and robustness to be adjusted. Meanwhile, unlike conventional convolution, circular convolution was introduced to achieve watermark information diffusion and redundancy. SSIM was used as the loss function of the encoder network to make the watermarked image more closely resemble the original image in terms of contrast, brightness, and structure. Although differentiable approximation methods effectively solve the back-propagation update problem for the training parameters, both [108] and [121] approximated JPEG poorly, so the decoder could not in turn be updated efficiently to become robust against real JPEG.
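The JPEG-MASK operation described above can be sketched on a single 8 × 8 block. The sketch assumes the block already belongs to one channel of a YUV image (colour conversion is omitted), uses an orthonormal DCT-II written out by hand, and takes the number of retained coefficients per axis as the parameter `keep` (5 for Y, 3 for U and V). The function names are hypothetical, and this is not the code of [121].

```python
import math

N = 8
# Orthonormal DCT-II basis: row u holds the u-th cosine basis vector.
D = [[(math.sqrt(1.0 / N) if u == 0 else math.sqrt(2.0 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(N)) for j in range(N)]
            for i in range(N)]


def transpose(a):
    return [list(col) for col in zip(*a)]


def dct2(block):
    """2-D DCT of an 8 x 8 block: D * block * D^T."""
    return matmul(matmul(D, block), transpose(D))


def idct2(coeffs):
    """Inverse 2-D DCT: D^T * coeffs * D."""
    return matmul(matmul(transpose(D), coeffs), D)


def jpeg_mask(block, keep):
    """Zero every DCT coefficient outside the keep x keep low-frequency corner."""
    coeffs = dct2(block)
    masked = [[coeffs[u][v] if u < keep and v < keep else 0.0
               for v in range(N)] for u in range(N)]
    return idct2(masked)
```

Every step is a fixed linear map, so gradients pass through it during training. This is what makes the mask usable in an end-to-end network, even though it ignores the quantization step of real JPEG.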

Specific Decoder Training: To avoid introducing non-differentiable components into the overall training process of the model, Liu et al. [122] proposed a two-stage separable training watermarking algorithm consisting of noise-free end-to-end adversarial training (FEAT) and decoder-only training (ADOT) with an attack simulation layer. In FEAT, the encoder and decoder were jointly trained to obtain a redundant encoder, and in ADOT, the encoder parameters were fixed and spectral regularization was used to effectively mitigate the training instability of GANs. At the same time, the corresponding attacks were applied to the watermarked images to obtain attack samples, which were then used as the training set for the dedicated decoder. This circumvented the non-differentiability of JPEG, but the staged training was prone to local optima.

Network Structure Improvement: Chen et al. [123] proposed a JPEG simulation network, JSNet, which could simulate JPEG lossy compression with any quality factor. The three JPEG processes of sampling, DCT, and quantization were simulated by a maximum pooling layer, a convolution layer, and a 3D noise mask, respectively. However, experiments showed that the model was still not robust to JPEG compression after the introduction of JSNet (i.e., the BER was greater than 30% on both the ImageNet [124] and Boss Base [102] datasets). This was related to the random initialization of the parameters of the maximum pooling layer, convolutional layer, and 3D noise layer during the simulation, which resulted in a poor simulation of JPEG compression.

Because real JPEG compression is non-differentiable, it cannot be placed in the end-to-end network and used directly for back-propagation updates of the model parameters. To address this problem, Jia et al. [125] used a mini-batch of real and simulated (MBRS) JPEG compression to improve robustness against JPEG compression attacks. In the attack layer, one of the real JPEG, simulated JPEG, and identity (noise-free) layers was selected randomly as the noise layer for each mini-batch. Note that the attack selected in the first iteration is a simulated one, so that later updates can build on the differentiable gradient accumulated from that iteration. This is possible thanks to the Adam momentum optimizer and its use of historical gradients, whose update is expressed as Equations (12)–(16):

$$m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \tag{12}$$

$$v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \tag{13}$$

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t} \tag{14}$$

$$\hat{v}_t = \frac{v_t}{1 - \beta_2^t} \tag{15}$$

$$\theta_t = \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} \tag{16}$$

where $m_t$ and $v_t$ denote the first and second moment estimates at time step $t$, i.e., the exponential moving averages of the gradient and the squared gradient; $\beta_1$ and $\beta_2$ denote the averaging coefficients, usually set to values close to 1; $g_t$ denotes the gradient at time step $t$; $\theta_t$ denotes the model parameter at time step $t$; $\eta$ denotes the learning rate; $\epsilon$ denotes a small constant added for numerical stability to prevent the denominator from being zero; and $\hat{m}_t$ and $\hat{v}_t$ denote the bias-corrected first and second moment estimates, respectively.
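The role of the historical gradient in the Adam update referenced above can be sketched for a single scalar parameter. This is the standard Adam step with its usual default hyperparameters, not the MBRS training code; the zero gradient in the second call is an assumed stand-in for a mini-batch in which the non-differentiable real JPEG layer passes no gradient back to the encoder.

```python
import math


def adam_step(theta, g, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Perform one Adam update for a scalar parameter at time step t >= 1."""
    m = beta1 * m + (1.0 - beta1) * g        # first moment estimate
    v = beta2 * v + (1.0 - beta2) * g * g    # second moment estimate
    m_hat = m / (1.0 - beta1 ** t)           # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)           # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Step 1: a simulated (differentiable) attack yields a non-zero gradient.
theta1, m1, v1 = adam_step(0.0, 1.0, 0.0, 0.0, t=1)
# Step 2: no gradient arrives, yet the stored moments still move theta.
theta2, m2, v2 = adam_step(theta1, 0.0, m1, v1, t=2)
```

After the second call `theta2` is further from zero than `theta1`, even though the gradient supplied at that step was zero, because the first moment `m` still carries the earlier gradient.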

Thanks to the Adam momentum optimizer and its use of historical gradients, even when the attack layer switched to the non-differentiable real JPEG compression attack, the internal parameters of the codec network could still be updated through the accumulation of historical differentiable gradients. This sidestepped the non-differentiability of real JPEG compression and effectively achieved a better simulation of JPEG compression. However, the model ignored the feature tensor of the image in the spatial and channel directions during image feature extraction, which led to poor robustness to high-intensity salt and pepper noise. Zhang et al. [126] proposed a pseudo-differentiable JPEG method, in which the JPEG pseudo-noise was the difference between the compressed image and the original image. Since backpropagation did not pass through the pseudo-noise path, the non-differentiability problem did not arise. However, robustness to conventional noise was poor due to the lack of a noise prior during backpropagation. Ma et al. [127] proposed a robust watermarking framework combining reversible and irreversible mechanisms. In the reversible part, a diffusion extraction module (DEM) (consisting of a series of fully connected layers and a Haar transform) and a fusion separation module (FSM) were designed to implement watermark embedding and extraction in a reversible manner. In the irreversible part, an irreversible attention model, composed of a series of convolution layers, a fully connected layer, and a squeeze and excitation block, together with a dedicated noise selection module (NSM), was introduced to improve robustness to JPEG compression.

The state-of-the-art deep learning-based image watermarking algorithms against JPEG attacks and their performance comparison are described in Table 5. Table 5 describes the methods from six aspects: watermark size (container size), method, structure, robustness, imperceptibility, and dataset; robustness is reported with respect to the quality factor of the JPEG compression.

Table 5.

A comparison of deep learning-based image watermarking against JPEG attack.

4.2.2. Robust Image Watermark against Screen-Shooting Attack

Screen-shooting attacks mainly cause image transmission distortion, brightness distortion, and Moiré distortion [128]. To improve robustness against screen-shooting attacks, a number of studies have recently been proposed, which can be subdivided into template-based, distortion compensation-based, decoding based on the attention mechanism, keypoint enhancement, transform domain, and distortion simulation methods.

Template-based: Template-based watermarking algorithms have a high watermarking capacity. The templates characterizing the watermark information are embedded in the image in a form similar to additive noise. To ensure robustness, templates usually carry special data distribution features, but conventional template-based watermarking algorithms [117,129,130,131] were designed manually with low complexity and thus could not cope with sophisticated attacks. Fang et al. [132] designed a template-based watermarking algorithm by exploiting the powerful feature learning capability of DNNs. In the watermark embedding phase, the embedding template was designed based on the insensitivity of the human eye to specific chromatic components, the proximity principle, and the tilt effect. In the watermark extraction phase, a two-stage DNN was designed, containing an auxiliary enhancement sub-network for enhancing the embedded watermark features and a classification sub-network for extracting the internal information of the watermark.

Distortion Compensation-based: Fang et al. [133] embedded the watermark by swapping DCT coefficients and used a distortion compensation extraction algorithm to make the watermark robust to the photographing process. Specifically, a line spacing region and a symmetric embedding block were used to reduce the distortion generated by the text.
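To make the idea of embedding by swapping DCT coefficients concrete, the following is a generic sketch of the classic technique, not the specific scheme of Fang et al. [133]. One bit is encoded in the ordering of two mid-frequency coefficients of an 8 × 8 block. The coefficient positions `POS_A` and `POS_B`, the strength `MARGIN`, and the function names are all hypothetical choices made for illustration.

```python
import numpy as np

def dct_matrix(n=8):
    """Orthonormal DCT-II basis as an n x n matrix."""
    k = np.arange(n).reshape(-1, 1)
    i = np.arange(n).reshape(1, -1)
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)
    return m

D = dct_matrix(8)
POS_A, POS_B = (3, 4), (4, 3)   # hypothetical mid-frequency coefficient pair
MARGIN = 10.0                   # hypothetical minimum gap (embedding strength)

def embed_bit(block, bit):
    """Encode one bit in the ordering of two DCT coefficients of an 8x8 block."""
    c = D @ block @ D.T                     # forward 2D DCT
    lo, hi = sorted((c[POS_A], c[POS_B]))
    if hi - lo < MARGIN:                    # widen the gap for robustness
        mid = (hi + lo) / 2.0
        lo, hi = mid - MARGIN / 2.0, mid + MARGIN / 2.0
    # bit 1: coefficient A is the larger one; bit 0: coefficient B is.
    c[POS_A], c[POS_B] = (hi, lo) if bit else (lo, hi)
    return D.T @ c @ D                      # inverse 2D DCT

def extract_bit(block):
    """Blind extraction: only the coefficient ordering is inspected."""
    c = D @ block @ D.T
    return int(c[POS_A] > c[POS_B])
```

Extraction needs neither the original image nor the original coefficient values, and a larger `MARGIN` trades imperceptibility for robustness to noise in the captured image.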

Decoding Based on Attention Mechanism: Fang et al. [134] designed a coding scheme that jointly targets transparency, efficiency, robustness, and adaptability to mitigate the conflicts among these four properties. A color decomposition method was used to improve the visual quality of watermarked images, and a super-resolution scheme was used to ensure the embedding efficiency. Bose-Chaudhuri-Hocquenghem (BCH) coding [135] and an attention decoding network (ADN) were used to further ensure robustness and adaptability.

Keypoint Enhancement: Feature-enhanced keypoints are used to locate the watermark embedding region, but existing keypoint enhancement methods [136,137] treat keypoint enhancement and watermark embedding as two separate steps and thus miss the gains available from optimizing the algorithm as a whole. Dong et al. [138] used a convex optimization framework to unify the two steps, which effectively improved the accuracy of watermark extraction and the blind synchronization of embedding regions.

Transform Domain: Bai et al. [139] introduced a separable noise layer over the DCT domain in the embedding and extraction layers to simulate screen-shooting attacks. SSIM-based loss functions were introduced to improve imperceptibility. Spatial transformer networks were used to correct the pixel values of geometrically attacked images before watermark extraction. Considering that conventional CNN-based algorithms introduce noise in the convolution operation, Lu et al. [140] replaced the down-sampling and up-sampling operations in the CNN with DWT and IDWT so that the network could learn a more stable feature representation from noisy samples, and introduced a residual regularization loss containing the image texture to improve the image quality and watermark capacity. Exploiting the behavior of the Fourier magnitude spectrum under translation and rotation (it is unchanged by translation and rotates with the image), Boujerfaoui et al. [141] improved the Fourier transform-based watermarking method by applying a frame-based transmission correction to the captured image in the distortion correction process.
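The substitution of DWT and IDWT for down-sampling and up-sampling can be sketched with a single-level 2D Haar transform. This is an illustrative assumption: the wavelet used by Lu et al. [140] is not stated here, and the function names are hypothetical. The sketch shows the property that motivates the substitution, namely that the transform halves the spatial resolution without discarding information, so the inverse restores the input exactly.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D Haar DWT of an array with even height and width.

    Returns four half-resolution sub-bands. Sub-band naming (LH vs. HL)
    varies between libraries; the names here are one common convention.
    """
    a, b = x[0::2, 0::2], x[0::2, 1::2]   # top-left, top-right of each 2x2 cell
    c, d = x[1::2, 0::2], x[1::2, 1::2]   # bottom-left, bottom-right
    ll = (a + b + c + d) / 2.0            # low-pass in both directions
    lh = (a - b + c - d) / 2.0            # horizontal detail
    hl = (a + b - c - d) / 2.0            # vertical detail
    hh = (a - b - c + d) / 2.0            # diagonal detail
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: doubles the resolution with exact reconstruction."""
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=float)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

rng = np.random.default_rng(0)
image = rng.random((16, 16))
subbands = haar_dwt2(image)
restored = haar_idwt2(*subbands)
```

In contrast to strided convolution or max pooling, the four sub-bands together retain every input value, which is one plausible reason such layers yield more stable features on noisy samples.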

Distortion Simulation: Jia et al. [142] introduced a 3D rendering distortion network to improve the robustness of the model to camera photography and introduced a human visual system-based loss function to supervise the training of the encoder. This loss mainly contained the just noticeable difference (JND) loss and the learned perceptual image patch similarity (LPIPS) loss between the original and watermarked images to improve the quality of the watermarked images. Fang et al. [143] modeled the three distortion components with the greatest impact (transmission distortion, luminance distortion, and Moiré distortion) and made these operations differentiable so that the network could be trained end-to-end. The residual noise was simply simulated with Gaussian noise. For imperceptibility, a mask-based edge loss was proposed to limit the embedding region, which improved the watermarked image quality. To address the difficulty that conventional 3D watermarking algorithms have in extracting watermarks from 2D renderings, Yoo et al. [144] proposed an end-to-end framework containing an encoder, a distortion simulator (i.e., a differentiable rendering layer that simulated the appearance of a 3D watermarked target from different camera angles), and a decoder that decoded from the 2D renderings. Tancik et al. [145] proposed the StegaStamp steganography model to implement the encoding and decoding of hyperlinks. The encoder used a U-Net-like [146] structure to transform a 400 × 400 tensor with 4 channels (including the input RGB image and watermark information) into a tensor of residual image features. However, the algorithm had a small embedding capacity.
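A distortion simulation layer of the kind described above can be sketched as a chain of simple image operations. The sketch below is a loose illustration and not the model of Fang et al. [143]: the transmission (perspective) distortion is omitted, the luminance and Moiré models are simplified stand-ins, and every parameter name and default value is hypothetical.

```python
import numpy as np

def simulate_screen_shooting(img, rng, gain=0.9, bias=0.05,
                             moire_amp=0.03, moire_period=6.0,
                             noise_std=0.02):
    """Apply simplified screen-shooting distortions to a grayscale image.

    img is a float array of shape (H, W) with values in [0, 1].
    All distortion models and parameters here are illustrative assumptions.
    """
    h, w = img.shape
    yy, xx = np.mgrid[0:h, 0:w]

    # Luminance distortion: global gain and bias plus a mild horizontal
    # brightness falloff across the screen.
    falloff = 1.0 - 0.1 * (xx / max(w - 1, 1))
    out = (gain * img + bias) * falloff

    # Moire-like distortion: low-amplitude diagonal sinusoidal stripes.
    out = out + moire_amp * np.sin(2.0 * np.pi * (xx + yy) / moire_period)

    # Residual distortions are lumped into additive Gaussian noise.
    out = out + rng.normal(0.0, noise_std, size=img.shape)

    return np.clip(out, 0.0, 1.0)

rng = np.random.default_rng(0)
watermarked = rng.random((32, 32))
captured = simulate_screen_shooting(watermarked, rng)
```

Apart from the final clipping, each step is an elementwise arithmetic operation, so the same chain could be expressed in an automatic differentiation framework and placed between the encoder and decoder during training.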

The state-of-the-art deep learning-based image watermarking against screen-shooting attack algorithms and performance comparison are described in Table 6 and Table 7. Table 6 describes the methods from four aspects: watermark size (container size), category, robustness with BER metrics, and dataset. Table 7 describes the methods from four aspects: watermark size (container size), category, robustness with other metrics, and dataset.

Table 6.

A comparison of deep learning-based image watermarking against screen-shooting attack with BER metrics.

Table 7.

A comparison of deep learning-based image watermarking against screen-shooting attack with other experiment conditions.
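For reference, the BER used in Table 6 is the fraction of watermark bits that differ between the embedded and the extracted message. A minimal sketch is given below; the function name `bit_error_rate` is a hypothetical helper, not taken from any of the surveyed methods.

```python
import numpy as np

def bit_error_rate(embedded, extracted):
    """Fraction of positions where the extracted bits differ from the embedded bits."""
    embedded = np.asarray(embedded).astype(bool).ravel()
    extracted = np.asarray(extracted).astype(bool).ravel()
    if embedded.shape != extracted.shape:
        raise ValueError("bit sequences must have the same length")
    return float(np.mean(embedded != extracted))

# Example: two of four bits are wrong.
example_ber = bit_error_rate([1, 0, 1, 1], [1, 1, 1, 0])
```

A lower BER indicates higher robustness, and a BER near 0.5 means the extracted bits are no better than random guessing.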

4.2.3. Robust Image Watermark against Agnostic Attack

Agnostic attacks refer to attacks about which the model has no prior information (i.e., the model cannot generate corresponding adversarial examples precisely to guide the decoder to improve its robustness). To address this problem, a number of studies have recently been proposed, which can be subdivided into two-stage separable training, no-attack training, and one-stage end-to-end training.

Two-stage Separable Training: Zhang et al. [129] proposed a two-stage separable watermark training model. The first stage jointly trained the codec and an attack classification discriminator, which used a multivariate cross-entropy loss for convergence. This stage yielded encoder parameters that generated stable image quality and a discriminator that could accurately classify the type of attack applied to the image. In the second stage, the encoder was fixed, a multiple classification discriminator and an attack layer were set up, and the obtained watermarked and attacked images were used as priors to train a specific decoder. However, this approach still did not solve the problem of convergence to local optima in two-stage separable training.

No Attack Training: Zhong et al. [130] introduced a multiscale fusion convolution module to avoid the loss of image detail features as the network deepens, which would otherwise leave the encoder unable to find an effective hidden embedding point. An invariance layer was set in the encoder and decoder to reproject the most important information and to disable the neural connections in the independent regions of the watermark. Chen et al. [149] proposed a watermark classification network for implementing copyright authentication of attacked watermarks. In the training phase, the training set was generated by calculating the NC value of each watermarked image and labeling it as forged or genuine according to a set threshold. The training set was fed into the model and supervised by the BCE loss function to obtain model parameters that could accurately classify the authenticity of the watermark. Under high-intensity attacks, the model could still distinguish the real watermark effectively. However, the classification accuracy was affected by the NC threshold setting of the model itself. Xu et al. [150] proposed a blockchain-based zero-watermarking approach, which effectively alleviated the pressure of authenticating zero-watermarks through third parties.
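The NC-based labeling step described for Chen et al. [149] can be sketched as follows. This is an illustration under stated assumptions: NC is computed with one common definition (the inner product of the two watermarks divided by the product of their norms; other variants exist), and the threshold of 0.75 and the function names are hypothetical, not values reported by the authors.

```python
import numpy as np

def normalized_correlation(original, extracted):
    """NC between two watermarks, using the cosine-similarity form (one common definition)."""
    w = np.asarray(original, dtype=float).ravel()
    w_ext = np.asarray(extracted, dtype=float).ravel()
    denom = np.linalg.norm(w) * np.linalg.norm(w_ext)
    if denom == 0.0:
        return 0.0                      # an all-zero watermark carries no evidence
    return float(np.dot(w, w_ext) / denom)

def label_by_nc(original, extracted, threshold=0.75):
    """Assign a training label from the NC value; the threshold is a hypothetical choice."""
    nc = normalized_correlation(original, extracted)
    return "genuine" if nc >= threshold else "forged"

reference = np.array([1, 0, 1, 1, 0, 1, 0, 1])
slightly_damaged = np.array([1, 0, 1, 1, 0, 1, 0, 0])   # one bit lost
unrelated = 1 - reference                                # complementary pattern

labels = (label_by_nc(reference, slightly_damaged),
          label_by_nc(reference, unrelated))
```

Because the labels are derived from a fixed threshold, any classifier trained on them inherits the sensitivity to the threshold choice noted above.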

One-stage End-to-end Training: An encoder trained on a fixed attack layer is prone to overfitting, which makes it unsuitable for realistic watermarking algorithms that need to resist many different types of attacks. Luo et al. [151] proposed the use of CNN-based adversarial training together with channel coding, which adds redundant information to the encoded watermark to improve the robustness of the algorithm. Zhang et al. [152] proposed the reverse ASL end-to-end model (i.e., the ASL layer was applied in forward propagation, but the gradient did not pass through the ASL layer in backpropagation). Reverse ASL can effectively mitigate model overfitting and improve the robustness against agnostic attacks. Zheng et al. [153] proposed a new Byzantine-robust algorithm, WMDefence, which detected Byzantine malicious clients by measuring the degree of degradation of the embedded model watermark.
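The role of channel coding in adding redundancy to the watermark can be illustrated with the simplest possible code. The sketch below uses a repetition code with majority-vote decoding purely as a stand-in; the channel code actually used by Luo et al. [151] is not described here, and the function names are hypothetical.

```python
def encode_repetition(bits, r=3):
    """Repeat every message bit r times before embedding."""
    return [b for b in bits for _ in range(r)]

def decode_repetition(coded, r=3):
    """Recover each message bit by majority vote over its r copies."""
    if len(coded) % r != 0:
        raise ValueError("coded length must be a multiple of r")
    return [int(2 * sum(coded[i:i + r]) > r) for i in range(0, len(coded), r)]

message = [1, 0, 1, 1]
coded = encode_repetition(message)          # 12 bits are embedded instead of 4

# Simulate an attack that flips one bit in every group of three.
attacked = list(coded)
for i in range(0, len(attacked), 3):
    attacked[i] ^= 1

recovered = decode_repetition(attacked)
```

With r = 3 the decoder tolerates one flipped bit per group at the cost of a threefold reduction in payload, which is the capacity and robustness trade-off that any channel code introduces.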

The state-of-the-art deep learning-based image watermarking algorithms against agnostic attacks and their performance comparison are described in Table 8. Table 8 describes the methods from five aspects: watermark size (container size), category, structure, robustness, and dataset, where robustness to salt-and-pepper noise is reported in terms of the proportion of salt and pepper in the noise.

Table 8.

A comparison of deep learning-based image watermarking against agnostic attack.

5. Future Research Directions and Conclusions

5.1. Future Research Directions

On the basis of the existing problems in the current research status, this subsection gives future research directions for image forensics.

For passive forensics, although tampering detection techniques have developed to some extent, they still suffer from low generalization ability and poor robustness. Because the performance of deep learning-based tampering localization models depends heavily on the training dataset, it usually degrades significantly on test samples from different datasets. This requires a deeper analysis of the intrinsic relationship among images from different sources and improved network architectures that learn more effective features. The performance of network models also degrades when the images are subjected to certain post-processing attacks, such as scaling, rotation, and JPEG compression. This requires data augmentation during training to improve the robustness of the model and to push the algorithms into practical applications. The insufficient number of tampered samples and the low quality of current tampering detection datasets seriously hinder the development of deep learning-based tampering detection techniques, so it is also very important to construct datasets that meet actual forensic requirements. Deep learning techniques continue to evolve, bringing many opportunities and challenges to passive image forensics. Tampering detection techniques should be updated continuously, using more effective network models and learning strategies to improve the accuracy and robustness of algorithms.

For active forensics, future research on robust image watermarking will mainly rely on deep learning-based algorithms. Among differentiable attacks, for noise and filtering attacks, choosing a more stable training framework and training method is the primary way to resolve the current training instability and the tradeoff between imperceptibility and robustness, for example, by using diffusion models [154,155,156] and training the codec in separate stages. For geometric attacks, designing structures and transformations that restore synchronization between the watermark information and the decoder is an effective way to address the loss of synchronization caused by such attacks. For example, an end-to-end deep learning model combined with the scale-invariant feature transform (SIFT) algorithm can effectively improve the robustness against rotation attacks. Among non-differentiable attacks, for JPEG compression attacks, designing a more effective and realistic differentiable simulation of JPEG compression is the primary way to address two problems: real JPEG compression is non-differentiable and cannot be used directly for model training, and training with existing differentiable simulations performs poorly. For example, a differentiable simulated JPEG layer can be combined with an attention mechanism to improve the simulation quality. For screen-shooting attacks, designing an effective structure for simulating distortion degradation is the primary way to address the poor robustness caused by the difficulty of predicting screen-shooting distortions. For example, the repeated noising and denoising processes of diffusion models [154,155,156] can be used to simulate screen-shooting distortion and improve the robustness against screen-shooting attacks. For unknown attacks, designing the attack layer so that the watermarked image yields a wider variety of adversarial samples after passing through it is an effective way to improve robustness. For example, in model training, the sample types can first be enriched in an unsupervised manner, and the watermark recovery accuracy can then be improved under supervision.

5.2. Conclusions

In this review, we synthesize existing research on deep learning-based image forensics from the perspectives of passive forensics (i.e., tampering detection) and active forensics (i.e., digital watermarking).