Abstract

When realized on computational devices with finite quantities of memory, feedforward artificial neural networks and the functions they compute cease being abstract mathematical objects and turn into executable programs generating concrete computations. To differentiate between feedforward artificial neural networks and their functions as abstract mathematical objects and the realizations of these networks and functions on finite memory devices, we introduce the categories of general and actual computabilities and show that there exist correspondences, i.e., bijections, between functions computable by trained feedforward artificial neural networks on finite memory automata and classes of primitive recursive functions.

Keywords:

computability theory; theory of recursive functions; artificial neural networks; number theory

MSC:

03D32

1. Introduction

An offspring of McCulloch and Pitts’ research on foundations of cybernetics [1], artificial neural networks (ANNs) entered mainstream machine learning after the discovery of backpropagation by Rumelhart, Hinton, and Williams [2]. ANNs proved to be universal approximators of different classes of functions when no limits are imposed on the number of artificial neurons in any layer (arbitrary width) or on the number of hidden layers (arbitrary depth) and even with bounded widths and depths (e.g., [3,4,5]). ANNs cease being abstract mathematical objects when implemented in specific programming languages on computational devices with finite quantities of internal and external memory, to which we interchangeably refer in our article as finite memory devices (FMDs) and finite memory automata (FMA). To differentiate between functions computable by ANNs in principle and functions computable by ANNs realized on FMA, we introduce the categories of general and actual computabilities and show that there exist correspondences, i.e., bijections, between functions computable by trained feedforward ANNs (FANNs) on FMA and classes of primitive recursive functions.

Our article is organized as follows. In Section 2, we expound the terms, definitions, and notational conventions for functions and predicates espoused in this article and define the term finite memory automaton. In Section 3, we explicate the categories of general and actual computabilities and elucidate their similarities and differences. In Section 4, we formalize FANNs in terms of recursively defined functions. In Section 5, we present primitive recursive techniques to pack finite sets and Cartesian powers thereof into Gödel numbers. In Section 6, we use the set packing techniques of Section 5 to show that functions computable by trained FANNs implemented on FMA can be archived into natural numbers. In Section 7, we show how such archives can be used to define primitive recursive functions corresponding to functions computable by FANNs. In Section 8, we discuss theoretical and practical reasons for separating computability into the general and actual categories and pursue some implications of the theorems proved in Section 7. In Section 9, we summarize our conclusions. For the reader’s convenience, Appendix A gives supplementary definitions, results, and examples that are referenced in the main text when relevant.

2. Preliminaries

2.1. Functions and Predicates

If f is a function, and denote the domain and the co-domain of f, respectively. Statements such as abbreviate the logical conjunction . A function f is partial on a set S if is a proper subset of S, i.e., . Thus, if and , then f is partial on S, because . If S and R are sets, then is logically equivalent to the logical conjunction , i.e., S is a subset of R, and vice versa. If f is partial on S and , the following statements are equivalent: (1) ; (2) f is defined on z; (3) is defined; and (4) . The following statements are also equivalent: (1) ; (2) f is undefined on z; (3) is undefined; and (4) . If f is partial on S and , then f is total on S. Thus, is total on . When is a bijection, i.e., f is injective (one-to-one) and surjective (onto), f is a correspondence between S and R.

If S is a set, then is the cardinality of S, i.e., the number of elements in S. S is finite if and only if (iff) . For , is the n-th Cartesian power of S, i.e., Thus, if , . The symbol is a sequence of numbers, i.e., a vector, from a set S, i.e., ; is the empty sequence. If , its individual elements are , , or, equivalently, , , . If and , = = = . If is a bijection, the inverse of f is . When the arguments of f are evident, f or abbreviate , , or

A total function is a predicate if, for any , or , where 1 arbitrarily designates the logical truth and 0 designates a logical falsehood. The symbols ¬, ∧, ∨, →, respectively, refer to logical not, logical and, logical or, and logical implication. We abbreviate to and to . If P and Q are predicates, then is logically equivalent to , i.e., . For clarity, sub-predicates of compound predicates may be included in matching pairs of . Thus, if a compound predicate P consists of predicates , , , and , it can be defined as . The symbols ∃ and ∀ refer to the logical existential (there exists) and universal (for all) quantifiers, respectively. Thus, the statement is logically equivalent to the statement that holds for at least one in , while the statement is logically equivalent to the statement that holds for all in .

2.2. Finite Memory Automata

A finite memory device is a physical or abstract automaton with a finite quantity of internal and external memory and an automated capability of executing programs, i.e., finite sequences of instructions written in a formalism, e.g., a programming language for , and stored in the finite memory of . Since bijections exist between expressions over any finite alphabet, i.e., a finite set of symbols or signs, and subsets of [6], we call the memory of numerical memory. The numerical memory consists of registers, each of which is a sequence of numerical unit cells, e.g., digital array cells, mechanical switches, and finite state machine tape cells. The quantity of numerical memory is the product of the number of registers and the number of unit cells in each register, i.e., this quantity is a natural number.

A cell holds exactly one elementary sign from a finite alphabet, e.g., { “.”, “0”, “1”, “2”, “3”, “4”, “5”, “6”, “7”, “8”, “9” }, or is empty. The sign of the empty cell is unique and is not an elementary sign. A number sign is a sequence of elementary signs in consecutive cells of a register with no empty cells to the left of the first elementary sign and possibly some empty cells to the right of the rightmost elementary sign. Thus, if “|” is the empty sign on , the alphabet is { “.”, “-”, “0”, “1”, “2”, “3”, “4”, “5”, “6”, “7”, “8”, “9” }, and each register on has seven cells, then “3.1||||”, “3.14|||”, “3.141||”, “3.1415|”, and “3.14159” are number signs conventionally interpreted as the real numbers 3.1, 3.14, 3.141, 3.1415, and 3.14159, respectively. An arbitrary number sign interpretation is fixed a priori for a given alphabet and and does not change from sign to sign. Thus, if the alphabet is { “,”, “0”, “f0”, “ff0”, “fff0”, “ffff0”, “fffff0”, “ffffff0”, “fffffff0”, “ffffffff0”, “fffffffff0” } and the interpretation is such that “,” is interpreted as the decimal point, “0” as 0, “f0” as 1, “ff0” as 2, “fff0” as 3, etc., “*” is the empty sign, and each sign is read left to right, then, if each register on has twenty three cells, the sign “f0,ffff0f0ffff0ff0f0***” is interpreted as 1.41421.
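The second interpretation above can be checked mechanically. The following is a minimal sketch, assuming that the register is handed over as a plain character string and that n copies of “f” followed by “0” denote the digit n; the function name `decode_sign` is hypothetical and is not part of the article.

```python
def decode_sign(register: str, empty: str = "*") -> str:
    """Translate a register's contents into a conventional decimal numeral.

    Assumed interpretation (fixed a priori): "," is the decimal point and
    n copies of "f" followed by "0" stand for the digit n.
    """
    # Empty cells may only follow the rightmost elementary sign.
    body = register.rstrip(empty)
    if empty in body:
        raise ValueError("empty cell inside a number sign")

    digits = []
    run = 0  # number of consecutive "f" characters read so far
    for ch in body:
        if ch == "f":
            run += 1
        elif ch == "0":
            if run > 9:
                raise ValueError("no elementary sign has more than nine f's")
            digits.append(str(run))  # "0" closes one elementary sign
            run = 0
        elif ch == ",":
            if run:
                raise ValueError("decimal point inside an elementary sign")
            digits.append(".")
        else:
            raise ValueError(f"character {ch!r} is not in the alphabet")
    if run:
        raise ValueError("unterminated elementary sign")
    return "".join(digits)


sign = "f0,ffff0f0ffff0ff0f0***"
print(len(sign), decode_sign(sign))  # 23 cells, read left to right
```

The decoder never changes its reading from sign to sign, which mirrors the requirement that the interpretation be fixed in advance for a given alphabet.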

A real number x is signifiable on iff a register on can hold its sign. Put another way, a number is signifiable on if, in a programming language L for , the number’s sign can be assigned to a variable, i.e., stored in a designated register. When x is signifiable on , we say that x is simply signifiable. A set or a sequence of numbers is signifiable if each number in the set or sequence is signifiable.

is the smallest positive signifiable real number on iff for any signifiable x, there is no signifiable y such that . The finite set of real numbers in the closed interval between 0 and 1 signifiable on is

We note, in passing, a notational convention in Equation (1) to which we adhere in our article: if is an FMA, then the Latin letter j in subscripts or superscripts of symbols is used to emphasize that they are defined with respect to . Thus, if and are two FMDs with different quantities of numerical memory, .

Lemma 1.

If is a maximal element of and , then .

Proof.

If , then , because 1 is the only number in greater than z. If , then and . □

A corollary of Lemma 1 is that if are signifiable, , then

is the finite set of signifiable numbers in the closed interval from a to b such that there exists no signifiable number between any two consecutive members of when the latter is sorted in non-descending order.

Lemma 2.

If are signifiable and , there exists a bijection : ↦ = , , where . If is signifiable, it is the smallest signifiable number .

Proof.

Let

Let . If , then , for or . If , then , for . Let . If , then or . If , then . Let be signifiable. If , it is vacuously the smallest signifiable number . If , then, since , the assertion that or leads to a contradiction. □

A corollary of Lemma 2 is that is

Lemmas 1 and 2 draw on the empirically verifiable fact manifested by division underflow errors in modern programming languages: given an FMD and two signifiable real numbers a and b, with , the set of signifiable real numbers in the closed interval between a and b is a proper finite subset of the set of real numbers . Thus, bijections are possible between and finite subsets of . While these bijections may differ from FMA to FMA in that they depend on the exact quantity of memory on a given FMA, they differ only in terms of the cardinalities of their domains and co-domains: the larger the quantity of memory, the greater the cardinality. A constructive interpretation of Lemmas 1 and 2 is that if we take two signifiable real numbers a and b such that , we can effectively enumerate the elements of by iteratively adding increasing integer multiples of to a until we reach b, i.e., , or go slightly above it, i.e., , for .
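The constructive reading of Lemmas 1 and 2 can be sketched in a few lines. The sketch below rests on stated assumptions: exact rationals (`fractions.Fraction`) stand in for number signs, the smallest positive signifiable number is passed in as `eps`, and the index map that sends the i-th enumerated point to i + 1 is only one plausible choice of bijection. All names are hypothetical.

```python
from fractions import Fraction


def enumerate_signifiable(a: Fraction, b: Fraction, eps: Fraction) -> list:
    """Return a, a + eps, a + 2*eps, ..., stopping at the first value >= b."""
    if eps <= 0 or a >= b:
        raise ValueError("need eps > 0 and a < b")
    points = []
    i = 0
    while True:
        z = a + i * eps
        points.append(z)
        if z >= b:  # either z == b or z is slightly above b
            return points
        i += 1


def index_map(points: list) -> dict:
    """Assumed bijection: the i-th point (counting from 0) maps to i + 1."""
    return {z: i + 1 for i, z in enumerate(points)}


pts = enumerate_signifiable(Fraction(0), Fraction(1), Fraction(1, 4))
print(pts)             # five points from 0 to 1 in steps of 1/4
print(index_map(pts))  # each point paired with a positive integer
```

When `eps` does not divide b − a, the last enumerated point lies slightly above b, which corresponds to the second stopping condition in the text.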

To map the elements of to , we define the bijection and its inverse as

If we abbreviate , , , , , and , to , , , , R, Z, and I, respectively, and let , we have the following example.

Example 1.

For , we have another example.

Example 2.

3. Computability: General vs. Actual

Computability theory lacks a uniform, commonly accepted formalism for computable, partially computable, and primitive recursive functions. The treatment of such functions in our article is based, in part, on the formalism by Davis, Sigal, and Weyuker (Chapters 2 and 3 in [7]), which has, in turn, much in common with Kleene’s formalism (Chapter 9 in [8]). Alternative treatments include [9], where primitive recursive functions are formalized as loop programs consisting of assignment and iteration statements similar to DO statements in FORTRAN, and [10], where λ-calculus is used. These symbolically different treatments have one feature in common: computable, partially computable, and primitive recursive functions operate on natural numbers, and the underlying automata, explicit or implicit, on which these functions can, in principle, be executed if implemented as programs in some formalism, have access to infinite numerical memory. To distinguish computability in principle from computability on finite memory automata, we introduce the categories of general and actual computabilities.

3.1. General Computability

As our formalism in this section, we use the programming language developed in Chapter 2 in [7] and subsequently used in that book to define partially computable, computable, and primitive recursive functions and to prove various properties thereof. An program is a finite sequence of instructions. The unique variable Y is designated as the output variable where the output of on a given input is stored. designate input variables, and refer to internal variables, i.e., variables in that are not input variables. No bounds are imposed on the magnitude of natural numbers assigned to variables. has conditional dispatch instructions; line labels; elementary arithmetic operations on and comparisons of natural numbers; and macros, i.e., statements expandable into primitive instructions.

A computation of on some input , , is a finite sequence of snapshots , where each snapshot , , specifies the number of the instruction in to be executed and the value of each variable in . The snapshot is the initial snapshot, where the values of all input variables are set to their initial values, the program instruction counter is set to 1, i.e., the number of the first instruction in , and the values of all the other variables in are set to 0. The snapshot in is a terminal snapshot, where the instruction counter is set to the number of the instructions in plus 1. Not all snapshot sequences are computations. If is a computation of on , i.e., , , …, , then there is a function that, given the text of and a snapshot in the computation, generates the next snapshot of the computation. This function can verify if constitutes the computation of on . The existence of such functions implies that each instruction in is interpreted unambiguously. If some program in takes m inputs and the values of the input variables are , , …, , then

denotes the value of Y in the terminal snapshot if there exists a computation of on and is undefined otherwise.
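The notions of snapshot, computation, and terminal snapshot can be made concrete with a small interpreter. This is a sketch under assumptions: it supports only the three primitive instruction types of the language in Chapter 2 of [7] (increment, decrement, conditional branch), and the tuple encoding of instructions, the function names, and the `max_steps` guard are hypothetical conveniences.

```python
from collections import defaultdict


def run(program, inputs, max_steps=10_000):
    """Return the computation as a list of snapshots (instruction number, values).

    Variables absent from a snapshot's dictionary have the value 0.
    """
    n = len(program)
    labels = {}
    for k, (label, _op, _var, _target) in enumerate(program, start=1):
        if label is not None:
            labels.setdefault(label, k)  # the first occurrence of a label wins

    state = defaultdict(int)             # every variable starts at 0
    for k, value in enumerate(inputs, start=1):
        state[f"X{k}"] = value

    i = 1                                # initial snapshot: counter set to 1
    snapshots = [(i, dict(state))]
    while i != n + 1:                    # terminal snapshot: counter is n + 1
        if len(snapshots) > max_steps:
            raise RuntimeError("no terminal snapshot within max_steps")
        _label, op, var, target = program[i - 1]
        if op == "inc":
            state[var] += 1
            i += 1
        elif op == "dec":
            state[var] = max(0, state[var] - 1)  # values never drop below 0
            i += 1
        elif op == "jnz":
            # A branch to a label that labels no instruction ends the run.
            i = labels.get(target, n + 1) if state[var] != 0 else i + 1
        else:
            raise ValueError(f"unknown instruction {op!r}")
        snapshots.append((i, dict(state)))
    return snapshots


def psi(program, *inputs):
    """Value of Y in the terminal snapshot."""
    return run(program, inputs)[-1][1].get("Y", 0)


# A program that copies X1 into Y (X1 is consumed in the process).
COPY = [
    ("A", "jnz", "X1", "B"),
    (None, "inc", "Z1", None),
    (None, "jnz", "Z1", "E"),
    ("B", "dec", "X1", None),
    (None, "inc", "Y", None),
    (None, "inc", "Z1", None),
    (None, "jnz", "Z1", "A"),
]
print(psi(COPY, 2))
```

Because each snapshot is produced from its predecessor by one deterministic step, the same stepping code could be reused to check whether a given snapshot sequence is a computation.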

Definition 1.

A function , , is partially computable if f is partial and there is an program such that Equation (7) holds.

Equation (7) is interpreted so that iff and iff .

Definition 2.

A function , , is computable if it is total, i.e., , and partially computable.

Let and , , . Then, is obtained by composition from if

Let , , and , , be total. If h is obtained from by the recurrences in (9) or from f and g by the recurrences in (10), then h is obtained from or from f and g by primitive recursion or simply by recursion. The recurrences in (10) are isomorphic to Gödel’s recurrences (Section 2, Equation (2) in [6]) where he introduces the concept of recursively defined number-theoretic function. The three functions in (11) are the initial functions.
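Composition and primitive recursion can be illustrated as higher-order functions. The sketch below assumes the textbook conventions of [7]: the initial functions are successor, null, and projection, and the recursion scheme is h(x1, …, xn, 0) = f(x1, …, xn) and h(x1, …, xn, t + 1) = g(t, h(x1, …, xn, t), x1, …, xn). The Python names are hypothetical.

```python
def successor(x):
    return x + 1


def null(x):
    return 0


def projection(i):
    """u_i: return the i-th argument (1-based)."""
    return lambda *xs: xs[i - 1]


def compose(f, *gs):
    """h(xs) = f(g1(xs), ..., gk(xs))."""
    return lambda *xs: f(*(g(*xs) for g in gs))


def primitive_recursion(f, g):
    """h(xs, 0) = f(xs); h(xs, t + 1) = g(t, h(xs, t), xs)."""
    def h(*args):
        *xs, t = args
        value = f(*xs)
        for k in range(t):  # unfold the recurrence t times
            value = g(k, value, *xs)
        return value
    return h


# add(x, 0) = x;  add(x, t + 1) = successor(add(x, t))
add = primitive_recursion(projection(1), compose(successor, projection(2)))

# mul(x, 0) = 0;  mul(x, t + 1) = add(mul(x, t), x)
mul = primitive_recursion(null, compose(add, projection(2), projection(3)))

print(add(3, 4), mul(3, 4))
```

Here `add` and `mul` are each built from initial functions by finitely many applications of composition and recursion, which is the kind of derivation sequence that the definition of a primitive recursive function refers to.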

Definition 3.

An implication of Definition 3 is that if f is a primitive recursive function, then there is a sequence of functions , , where every function in the sequence is an initial function or is obtained from the previous functions in the sequence by composition or recursion.

A class of total functions is primitive recursively closed (PRC) if the initial functions are in it and any function obtained from the functions in by composition or recursion is also in . It has been shown (Chapter 3 in [7]) that (1) the class of computable functions is PRC; (2) the class of primitive recursive functions is PRC; and (3) a function is primitive recursive iff it belongs to every PRC class. A corollary of (3) is that every primitive recursive function is computable.

If includes all functions of a certain type, we refer to it as the class of those functions, e.g., the class of partially computable functions, the class of computable functions, the class of primitive recursive functions, etc. When we say that is a class of functions of a certain type, we mean that , where is the class of functions of that type.

3.2. Actual Computability

In general, the FMA defined in Section 2.2 is different from the finite state automata of classical computability theory, because the latter, e.g., a Turing machine (TM), do not impose any limitations on memory. A TM becomes an FMA iff the number of cells on its tape where it reads and writes symbols is finite. Analogously, a finite state automaton (FSA) of classical computability is an FMA iff there is a limit, expressed as a natural number, on the length of the input tape from which the FSA reads sign sequences over a given alphabet.

As is the case with general computability, we let be an L program, i.e., a finite sequence of unambiguous instructions in a programming language L for an FMD . Thus, if is a physical computer with an operating system, e.g., Linux, a programming language for can be Lisp, C, Perl, Python, etc. If is an abstract FMA, e.g., a TM with a finite number of cells on its tape, then is programmed with the standard quadruple formalism (Chapter 6 in [7]). If is a mechanical device, then we assume that there is a formalism that consists of instructions such as “set switch i to position p”, “turn handle full circle clockwise t times”, etc. A state of while executing on some input includes the number of the instruction in to execute next and, depending on , may include the contents of each register, the signs on the finite input tape, or the state of each mechanical switch. As we did with general computability, we call such a state a snapshot of for and define a computation of on to be a finite sequence of snapshots , , where each subsequent snapshot is computed from the previous snapshot, the initial snapshot has the values of all the variables in appropriately specified and the instruction counter of set to 1, and the terminal snapshot has the instruction counter set to the number of the instructions in plus 1. We let

denote the number sign corresponding to the output of executed on . It is irrelevant to our discussion where this number sign is stored (e.g., in a register, a section of a finite tape, or the sequence of the positions of the mechanical switches examined left to right or right to left, etc.) so long as it is understood that the output, whenever there is a computation, is unambiguously interpreted as a real number according to an interpretation fixed a priori.

Definition 4.

A partial function , , is actually partially computable on if Equation (12) holds.

Equation (12) of actual computability is interpreted so that iff , i.e., iff , for any and signifiable on , and iff . However, unlike Equation (7) of general computability, which is defined only on natural numbers and every natural number is signifiable by implication, in actual computability, we have to make provisions for non-signifiable real numbers. Toward that end, we introduce the following inequality, which holds when a non-signifiable number is encountered during a computation of .

Inequality (13) can be illustrated with two examples. Let have two cells per register, let be , and let be a program that implements f, i.e., adds two number signs of and and puts the number sign of in a designated output register. Let number signs be interpreted in standard decimal notation. Furthermore, if some number x is not signifiable on , only the first two elementary signs of the number sign of x are placed into a register, i.e., number signs are truncated to fit into registers, as is common in many programming languages. Then, after “100” is truncated to “10”,

and

because 213 is not signifiable on and is truncated to “21.” In both cases, , as a mathematical object, is total, and there is a computation of on and , but during both computations, non-signifiable numbers, i.e., 100 and 213, are encountered.
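The effect of truncation on a two-cell machine can be reproduced with a short simulation. This is a sketch under assumptions: numbers are non-negative integers in standard decimal notation, truncation keeps the leftmost signs that fit into a register, and the inputs shown are illustrative choices rather than the ones used in the examples above. The function names are hypothetical.

```python
def truncate(x: int, cells: int = 2) -> int:
    """Keep only the first `cells` elementary signs of the decimal sign of x."""
    if x < 0:
        raise ValueError("this sketch handles non-negative integers only")
    return int(str(x)[:cells])


def actual_add(x: int, y: int, cells: int = 2) -> int:
    """Add on a machine whose registers hold at most `cells` signs.

    Inputs and the result are each truncated to fit into a register.
    """
    return truncate(truncate(x, cells) + truncate(y, cells), cells)


for x, y in [(12, 34), (99, 1), (100, 5)]:
    print(x, y, "mathematical:", x + y, "on the device:", actual_add(x, y))
```

For (12, 34) both results agree because no non-signifiable number arises. For (99, 1) the sum 100 does not fit and is cut to 10, and for (100, 5) the input 100 is already cut to 10 before the addition takes place.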

Definition 5.

A function , , is actually computable on if it is total, i.e., , and actually partially computable.

A program that implements an actually computable is guaranteed to have a computation for any signifiable . However, Inequality (13) may still hold if a non-signifiable number is produced during a computation. Functions can be defined for a specific so that they deal only with signifiable numbers, e.g., whose domains and codomains are, respectively, finite signifiable proper subsets of and . The next definition characterizes these functions.

Definition 6.

A function , , is absolutely actually computable on if it is actually computable and Inequality (13) holds for no computation of , where is signifiable on .

An implication of Definitions 4–6 is that if satisfies Definition 4, it is partially computable according to Definition 1, and if it satisfies Definitions 5 or 6, it is computable according to Definition 2, because, if no memory limitations are placed on registers, every natural number is signifiable.

We call an FMD sufficiently significant if three conditions are satisfied. First, a programming language L for exists with the same control structures as the programming language described in Section 3.1 such that L (1) is capable of signifying a finite subset of and (2) capable of specifying the following operations on numbers: addition, subtraction, multiplication, division, assignment, i.e., setting the value of a register to a number sign, comparison, i.e., , , , , , on any signifiable a and b, and the truncation of the signs of non-signifiable numbers to fit them into registers. Second, the finite memory of suffices to hold L programs of length , where the length of the program is the number of instructions in it. Third, the finite memory of suffices, in addition to holding a program of at most N instructions, to hold number signs in registers.

Lemma 3.

Let an FMA be sufficiently significant with , signifiable, , and let , , be the smallest signifiable number greater than or equal to b. Let : ↦ be the bijection in (5). Let , , be a program for that iterates from a to in positive unit integer increments of until k or z that satisfies the conditions in (3) is encountered, and the length of . Then, is absolutely actually computable.

Proof.

Since , and are signifiable, so are and . The finite memory of suffices to hold , and needs access to five signifiable numbers to iterate over : a, b, i, , . Since , the signs of these numbers are placed in registers , , , , and . After is placed in register , sets to 0. If , goes into a while loop with the condition of , i.e., . Inside the loop, when , is incremented by 1 and placed into the output register , and exits. Otherwise, the loop continues with incremented by 1. If , goes into a while loop with the condition of , i.e., , and keeps incrementing by 1 inside the loop. After the loop terminates, is incremented by 1 and placed into the output register , and exits. □

A corollary of Lemma 3 is that is absolutely actually computable.

4. A Recursive Formalization of Feedforward Artificial Neural Networks

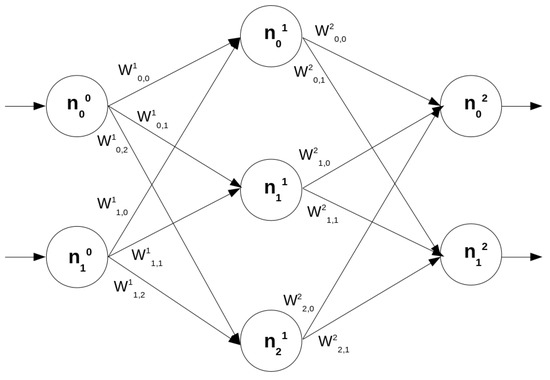

A trained feedforward artificial neural network (FANN) implemented in a programming language L on a sufficiently significant FMA is a finite set of artificial neurons, each of which is connected to a finite number of the neurons in the same set through the synapses, i.e., directed weighted edges (see Figure 1). The neurons are organized into layers , with being the input layer; being the output layer; and , , being the hidden layers. We let denote the number of layers in and refer to the i-th neuron in layer in . We abbreviate to , because always refers to a unique neuron in . The function specifies the number of neurons in layer of and is abbreviated .

Figure 1.

A 3-layer fully connected feedforward artificial neural network (FANN); layer 0 includes the neurons and ; layer 1 includes the neurons , , and ; layer 2 includes the neurons and ; the two arrows coming into and signify that layer 0 is the input layer; the two arrows going out of and signify that layer 2 is the output layer; , , is the weight of the synapse from to , e.g., is the weight of the synapse from to and is the weight of the synapse from to .

We assume that is trained, i.e., the synapse weights are fixed automatically or manually, and fully connected, i.e., there is a synapse from every neuron in layer to every neuron in layer . Each synapse has a weight, i.e., a signifiable real number, associated with it. We let , , denote the weight of the synapse from to (see Figure 1) and refer to a vector of all synaptic weights between and . We define . Thus, for the FANN in Figure 1, and . We assume, without loss of generality, that all numbers in are in defined in (1), because, if that is not the case, they can be so scaled, nor is there any loss of generality associated with the assumption of full connectivity, because partial connectivity can be defined by setting the weights of the appropriate synapses to 0.

If is abbreviated to , each in , , computes an activation function

where is the vector of the activations, i.e., real signifiable numbers, of the neurons in layer . For ,

where and , . Thus, if , as in Figure 1, then, given the input , , , . Since is implemented on a sufficiently significant , all activation functions are absolutely actually computable. It is irrelevant to our discussion whether the activation functions are the same, e.g., sigmoid, for all or some neurons, or each neuron has its own activation function.

The term feedforward means that the activations of the neurons are computed layer by layer from the input layer to the output layer, because the activation functions of the neurons in the next layer require only the weights of the synapses connecting the next layer with the previous one and the activation values, i.e., the outputs of the activation functions of the neurons in the previous layer. To define the activation vectors of individual layers, let

where and is an input vector. For each , we define the absolutely actually computable function that computes as
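The layer-by-layer evaluation described in this section can be sketched recursively. The sketch makes several assumptions that are not taken from the article: the input layer passes the input vector through unchanged, every other neuron applies one shared activation function to the weighted sum of the previous layer's activations, ordinary floating-point numbers stand in for signifiable numbers, and the weights shown for a 2-3-2 network are made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_activations(weights, x, layer, activation=sigmoid):
    """Activation vector of `layer`, computed recursively from layer 0.

    weights[l][i][j] is the weight of the synapse from neuron i in layer l
    to neuron j in layer l + 1.
    """
    if layer == 0:
        return list(x)  # assumption: the input layer is the identity
    prev = layer_activations(weights, x, layer - 1, activation)
    w = weights[layer - 1]
    return [
        activation(sum(prev[i] * w[i][j] for i in range(len(prev))))
        for j in range(len(w[0]))
    ]


# Hypothetical weights in [0, 1] for a fully connected 2-3-2 network.
WEIGHTS = [
    [[0.5, 0.0, 1.0],
     [0.0, 0.5, 1.0]],   # layer 0 -> layer 1
    [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]],        # layer 1 -> layer 2
]

print(layer_activations(WEIGHTS, [1.0, 2.0], 2))
```

A weight of 0 in `WEIGHTS` plays the role of a missing synapse, which is how partial connectivity is expressed within the fully connected formalization.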

5. Finite Sets as Gödel Numbers

Our primitive recursive techniques to pack finite sets and Cartesian powers thereof into Gödel numbers in this section rely, in part, on our previous work on primitive recursive characteristics of chess [11], which, in turn, was based on several functions shown to be primitive recursive in [7]. For the reader’s convenience, Appendix A.1 gives the functions shown to be primitive recursive in [7] together with the necessary auxiliary definitions and theorems. Appendix A.2 gives the functions or variants thereof shown to be primitive recursive in [11]. When we use the functions from [7,11] in this section, we refer to their definitions in these two sections of Appendix A as necessary.

Let G be a Gödel number (G-number) as defined in (A8). The primitive recursive predicate in (18) uses the bounded existential quantification of a primitive recursive predicate defined in (A2) and the primitive recursive functions and , respectively, defined in (A9) and (A10).

The logical structure of is , where , , and are

The predicate holds for G-numbers with at least one element and whose elements themselves have the same length, i.e., the same number of elements, greater than 0. Thus, , , and , but and .
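The kind of check this predicate performs can be sketched directly on numbers. The sketch assumes the Gödel numbering of [7], in which a sequence (a1, …, an) is encoded as the product of the i-th prime raised to the power ai, together with the usual accessor and length functions; the article's own definitions in Appendix A may differ in detail, and all Python names are hypothetical.

```python
def primes():
    """Yield 2, 3, 5, ... by trial division (adequate for short sequences)."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1


def godel(seq):
    """Encode (a1, ..., an) as 2**a1 * 3**a2 * ... * p_n**an."""
    g = 1
    for p, a in zip(primes(), seq):
        g *= p ** a
    return g


def decode(g):
    """Exponents of the successive primes in g, up to the last prime dividing g."""
    if g < 1:
        raise ValueError("expected a positive integer")
    seq = []
    for p in primes():
        if g == 1:
            return seq
        e = 0
        while g % p == 0:
            g //= p
            e += 1
        seq.append(e)


def length(g):
    """Number of elements of the G-number g (0 for g equal to 0 or 1)."""
    return len(decode(g)) if g >= 1 else 0


def uniform(g):
    """True iff g has at least one element and all of its elements,
    read as G-numbers themselves, share one length greater than 0."""
    elements = decode(g) if g >= 1 else []
    lengths = {length(e) for e in elements}
    return bool(elements) and len(lengths) == 1 and 0 not in lengths


print(godel([1, 2, 3]), decode(2250))
print(uniform(godel([godel([1, 2]), godel([3, 4])])))  # both elements have length 2
print(uniform(godel([godel([1]), godel([1, 2])])))     # lengths 1 and 2 differ
```

Under this encoding a trailing 0 is invisible, e.g., (1, 0) and (1) both encode to 2, which is one reason to work with positive elements when sets are packed into G-numbers.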

Let G be a G-number, the predicate be as defined in (A13), the function be as defined in (11), and the function be as defined in (A15), and let

Then, the primitive recursive function

turns a G-number into another G-number whose elements are the elements of the original G-number G, each of which is placed into a G-number whose length is 1. Thus, . In general, if , , , i.e., , for , then .

Then, the primitive recursive function

adds g to each element of G. Thus, and .

Let and be two G-numbers, and let

Then, the primitive recursive function

adds each element of to each element of . Thus,

Let G be a G-number, and let

Then, the primitive recursive function

computes, for , a Gödel number whose components are Gödel numbers representing all sequences of elements of G. Thus,

Let , , and . An induction on t shows that, for , is a G-number representation of in the sense that iff .
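The representation of a Cartesian power as a single G-number can be illustrated on a small case. The sketch assumes the prime-power Gödel numbering of [7], positive elements, and the lexicographic order produced by `itertools.product`; the order used by the article's function is not recoverable here, so it is an assumption, as are all names.

```python
from itertools import product


def primes():
    """Yield 2, 3, 5, ... by trial division."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1


def godel(seq):
    """Encode (a1, ..., an) as 2**a1 * 3**a2 * ... * p_n**an."""
    g = 1
    for p, a in zip(primes(), seq):
        g *= p ** a
    return g


def decode(g):
    """Exponents of the successive primes in g (g must be positive)."""
    if g < 1:
        raise ValueError("expected a positive integer")
    seq = []
    for p in primes():
        if g == 1:
            return seq
        e = 0
        while g % p == 0:
            g //= p
            e += 1
        seq.append(e)


def cartesian_power(g, t):
    """G-number whose elements are the G-numbers of all length-t sequences
    over the elements of g."""
    if t < 1:
        raise ValueError("t must be at least 1")
    elements = decode(g)
    return godel([godel(seq) for seq in product(elements, repeat=t)])


def represents(power, seq):
    """Membership test: seq is in the Cartesian power iff its G-number
    is an element of `power`."""
    return godel(seq) in decode(power)


G = godel([1, 2])             # the set {1, 2} packed as a G-number
P = cartesian_power(G, 2)     # packs (1,1), (1,2), (2,1), (2,2)
print(decode(P))
print(represents(P, (2, 1)), represents(P, (3, 1)))
```

The packed number grows very quickly with the size of the set and with t, which is unproblematic for general computability but is exactly the kind of quantity that may fail to be signifiable on a finite memory device.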

If is an FMA, we let

where is defined in (2) and is defined in (A17). If we recall from Lemma 2 and (5) that : ↦ = , where is the smallest signifiable real number on , we observe that is a G-number representation of . Thus, if we return to Example 2 and use the accessor function in (A9), then for , we have

In general, for ,

Then, is a G-number representation of , i.e., the t-th Cartesian power of . Since both and ∸ are primitive recursive functions, and are primitive recursively computable.

Example 3.

Let , and . Then,

We note that iff .

Let , , , and let and be defined as

If is signifiable, iff , for any . If is not signifiable, and are actually computable; if is signifiable, the functions are absolutely actually computable.

Example 4.

To continue with Example 3, if and , then, if we abbreviate , to , , we have

6. Numbers and : Packing FANNs into Natural Numbers

Let us assume that is absolutely actually computable on a sufficiently significant FMA and abbreviate to , to , and in (25) to . Let be as defined in (A5) and be as defined in (A10). Then, for each input neuron in an FANN , let

We recall that is the number of layers in . Then, for a hidden or output neuron , , let

For an FANN on and , let

An implication of the definitions of in (A5) and the G-number in (A8) is that is unique for , because the only way for another FANN on to have is for to have the same number of layers, the same number of neurons in each layer, the same activation function in each neuron, and the same synapse weights between the same neurons, i.e., . Appendix A.3 gives several examples of how the numbers are computed for in Figure 1.

Lemma 4.

Let be absolutely actually computable on a sufficiently significant FMA and let be an FANN implemented on . Let , , , and in (25) be signifiable on . Then, and .

7. FANNs and Primitive Recursive Functions

For , , , let

where and are defined in (A6) and (A19), respectively. An example of computing is given at the end of Appendix A.3.

Lemma 5.

If and , for , let

If and , for , let

Theorem 1.

Proof.

Let us abbreviate to f, to , to , to , to , and to . Since is signifiable, and are absolutely actually computable. Let

Let us abbreviate to , and let . Then and . We observe that

Since is an absolutely actually computable bijection,

By (26), iff . Thus, iff .

Let . Then,

By Lemma 5,

Since is an absolutely actually computable bijection,

whence, since iff , iff .

Let us assume iff for . Then,

and

Then,

whence, by induction, since iff , iff . □

Let, for and ,

and, for , let

Then, is the absolutely actually computable function computed by and, by Theorem 1, is primitive recursive. We are now in a position to prove the final theorem of this article.

Theorem 2.

Let

be the set of FANNs implemented on a sufficiently significant FMA , and let

be the set of corresponding absolutely actually computable functions of the FANNs in , as defined in (33). There exists a bijection between and a class of primitive recursive functions.

8. Discussion

The definition of the finite memory device or automaton (FMD or FMA) in Section 2.2 has four main implications. First, a physical or abstract automaton is an FMD when its memory amount is quantifiable as a natural number. Second, characters and strings are not necessary, because bijections exist between any finite alphabet of symbols and natural numbers and, through Gödel numbering, between any strings over a finite alphabet and natural numbers, hence the term numerical memory used in the article. Third, an FSA of classical computability becomes an FMA when the quantity of its internal and external memory is finite, i.e., there is an upper bound in the form of a natural number on the quantity of the machine’s memory. It is irrelevant for the scope of this investigation whether the input tape of an FSA, the input and output tapes of such FSA modifications as the Mealy and Moore machines (Chapter 2 in [12]) or the finite state transducers (Chapter 3 in [13]), and the input tape and the stack of a pushdown automaton (PDA) (Chapter 5 in [12]) are considered internal or external memory. Fourth, a universal Turing machine (UTM) (Chapter 6 in [7]) is an FMA when the number of its tape cells is bounded by a natural number, which a fortiori makes any physical computer an FMA. Thus, only one type of universal computer is needed to define all FMA it can simulate.

Consider a universal computer capable of executing the universal L program constructed to prove the Universality Theorem (Theorem 3.1, Chapter 3 in [7]). The computer , equivalent to a UTM, takes an arbitrary L program P, an input to that program in the form of a natural number stored in its input register , which can be a Gödel number encoding an array of numbers, executes P on by encoding the memory of P as another Gödel number and returns the output of P as a natural number, which can also be a Gödel number encoding a sequence of natural numbers, saved in its output register Y. Since characters and character sequences can be bijectively mapped to natural numbers, can simulate any FSA or a modification thereof, e.g., a Mealy machine, a Moore machine, a finite state transducer, or a PDA. Technically speaking, there is no need to distinguish between the Mealy and Moore machines, because they are equivalent (Theorems 2.6, 2.7, Chapter 2 in [12]). When a limit is placed on the numerical memory of by way of the number of registers it can use and the size of the numbers signifiable in them, the input and output registers included, immediately becomes an FMD and so a fortiori any device that is capable of simulating.

The separation of computability into the two overlapping categories, general and actual, is necessary for theoretical and practical reasons. A theoretical reason, generally accepted in classical computability theory, is that it is of no advantage to put any memory limitations on automata or on the a priori counts of unit time steps that automata may take to execute programs that implement functions in order to show that those functions are computable. Were it not the case, we would not be able to investigate what is computable in principle. Rogers [10] succinctly expresses this point of view:

"[w]e thus require that a computation terminate after some finite number of steps; we do not insist on an a priori ability to estimate this number."

An implication of the above assumption is that an automaton, explicit or implicit, on which the said computation is executed has access to, literally, astronomical quantities of numerical memory. For a thought experiment, consider an automaton programmable in of Chapter 2 of [7] that we used in Section 3.1, and let a program , , compute the G-number of the sequence , i.e., the function computed by is , as defined in (A8). Then, is a primitive recursive function and, hence, computable in the general sense of Definition 2. Thus, is signifiable for any on the automaton. In particular, if n is the Eddington number, i.e., , estimating the number of hydrogen atoms in the observable universe [14], there is a computation and, by implication, a variable in to which the G-number of can be assigned.

The foregoing paragraph brings us to a practical reason for separating computability into the general and actual categories: it is of little use for an applied scientist who wants to implement a number-theoretic function f in a programming language L for an FMA to know that f is generally computable and the L program can, therefore, compute, in principle, some characteristic of arbitrarily large natural numbers, e.g., the Eddington number. If no natural number greater than some is signifiable on , the scientist must make provisions in the program for the non-signifiable numbers in order to achieve feasible results with absolutely actually computable functions.
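The kind of provision meant here can be illustrated with a minimal Python sketch. It is not taken from the article: the names `NotSignifiable` and `gnum_bounded`, and the use of a single upper bound to stand for the largest signifiable number, are assumptions made for the illustration. Python integers are unbounded, so the bound is only simulated; the check is arranged so that no intermediate product ever exceeds it.

```python
from itertools import count


class NotSignifiable(ArithmeticError):
    """Raised when a result would exceed the largest signifiable number."""


def primes():
    """Yield 2, 3, 5, ... by trial division (adequate for short sequences)."""
    found = []
    for n in count(2):
        if all(n % p for p in found):
            found.append(n)
            yield n


def gnum_bounded(seq, bound):
    """G-number of seq, refusing to produce any value greater than bound.

    Hypothetical helper: the product of p_i ** a_i is built one factor at a
    time, and each multiplication is checked before it is performed.
    """
    g = 1
    for p, a in zip(primes(), seq):
        for _ in range(a):
            if g > bound // p:  # g * p would exceed the bound
                raise NotSignifiable(f"result exceeds {bound}")
            g *= p
    return g
```

With a bound of `2**64 - 1`, the call `gnum_bounded([1, 2, 3], 2**64 - 1)` succeeds, whereas a sequence with large elements raises `NotSignifiable` instead of silently producing a number the device could not store.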

Theorem 1 shows that the computation of a trained FANN on a finite memory device can be packed into a unique natural number. Once packed, the natural number can be used as an archive, after a fashion, to look up natural numbers that correspond, in the bijective sense of the term, to the real vectors computed by the function of an FANN implemented on the device. The correspondence is such that for any signifiable , the output of , i.e., , corresponds to the natural number computed by the primitive recursive function , i.e., , and the input corresponds to the natural number . Thus, iff . Furthermore, the function is computable in the general sense and is absolutely actually computable on any FMA where the natural number is signifiable.
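The archive idea can be made concrete with a toy Python sketch. It is an illustration under stated assumptions, not the construction used in the proof of Theorem 1: the function `toy_net`, the four input codes, and the helper names `pack` and `lookup` are hypothetical, the step that maps real vectors to natural numbers is elided (inputs and outputs are already natural-number codes), and the pairing function is assumed to be the one of [7], i.e., 2^x(2y + 1) - 1. Input codes start at 1 so that no stored pair equals 0.

```python
from itertools import count


def primes():
    """Yield 2, 3, 5, ... by trial division."""
    found = []
    for n in count(2):
        if all(n % p for p in found):
            found.append(n)
            yield n


def pair(x, y):
    """Assumed pairing function: 2^x * (2y + 1) - 1."""
    return 2 ** x * (2 * y + 1) - 1


def unpair(z):
    """Inverse of pair: return (x, y) with pair(x, y) == z."""
    n, x = z + 1, 0
    while n % 2 == 0:
        n //= 2
        x += 1
    return x, (n - 1) // 2


def pack(table):
    """Pack (input_code, output_code) rows into one G-number of pairs."""
    g = 1
    for p, (x, y) in zip(primes(), table):
        g *= p ** pair(x, y)
    return g


def lookup(omega, x_code):
    """Return the output code stored for x_code, or None if it is absent."""
    if omega < 1:
        raise ValueError("omega must be a positive natural number")
    for p in primes():
        if omega == 1:  # every stored entry has been inspected
            return None
        e = 0
        while omega % p == 0:
            omega //= p
            e += 1
        lx, ry = unpair(e)
        if lx == x_code:
            return ry


def toy_net(x):
    """Hypothetical one-unit 'network' on integer codes: ReLU(2x - 3)."""
    return max(0, 2 * x - 3)


# The whole finite input-output behavior is packed into a single number.
omega = pack([(x, toy_net(x)) for x in range(1, 5)])
```

After packing, `lookup(omega, 3)` recovers `toy_net(3)` without evaluating the network, and an input code that was never stored yields `None`. The number `omega` is large even for this four-row table, which is the practical content of the signifiability requirement.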

A correspondence established in Theorem 2 should be construed so that the uniqueness of does not imply the uniqueness of because the same function can be computed by different FANNs. What it implies is that, for any two different FANNs and , (e.g., different numbers of layers or different numbers of nodes in a layer or different activation functions or different weights), implemented on the same FMA , . However, it may be the case that for any signifiable , and consequently, .

9. Conclusions

To differentiate between feedforward artificial neural networks and their functions as abstract mathematical objects and the realizations of these networks and functions on finite memory devices, we introduced the categories of general and actual computability. We showed that correspondences are possible between trained feedforward artificial neural networks on finite memory devices and classes of primitive recursive functions. We argued that there are theoretical and practical reasons why computability should be separated into these categories. The categories are overlapping in the sense that some functions belong in both categories.

Funding

This research received no external funding.

Data Availability Statement

No additional data are provided for this article.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this article:

| Abbreviation | Meaning |
| --- | --- |
| ANN | Artificial Neural Network |
| FANN | Feedforward Artificial Neural Network |
| FMA | Finite Memory Automaton or Automata |
| FMD | Finite Memory Device |
| G-number | Gödel Number |
| TM | Turing Machine |
| UTM | Universal Turing Machine |
| FSA | Finite State Automaton or Automata |
| PDA | Pushdown Automaton or Automata |

Appendix A

Appendix A.1. Primitive Recursive Functions and Predicates

In this section, we define several functions shown to be primitive recursive in [7]. All lowercase variables in this section, e.g., x, y, z, t, n, and m, with and without subscripts, refer to natural numbers, and the term number is synonymous with the term natural number.

The expression

is called the bounded existential quantification of the predicate P and holds iff for at least one t such that . The expression

is called a bounded universal quantification of P and holds iff for every t such that . If is a predicate and z is a number, then

is called the bounded minimalization of P and defines the smallest number t for which P holds or 0 if there is no such number. It is shown in [7] that (1) the predicates , , , , , , and , i.e., x divides y, are primitive recursive; (2) a finite logical combination of primitive recursive predicates is primitive recursive; and (3) if a predicate is primitive recursive, then so are its negation, its bounded minimalization, and its bounded universal and existential quantifications.
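The three bounded operators can be sketched in Python as follows. The sketch assumes that the bound is inclusive, i.e., t ranges over 0, ..., z, as in [7]; the names `bounded_exists`, `bounded_forall`, `bounded_min`, and `divides` are chosen for this illustration only.

```python
from typing import Callable

Pred = Callable[[int], bool]


def bounded_exists(p: Pred, z: int) -> bool:
    """True iff p(t) holds for at least one t with 0 <= t <= z."""
    return any(p(t) for t in range(z + 1))


def bounded_forall(p: Pred, z: int) -> bool:
    """True iff p(t) holds for every t with 0 <= t <= z."""
    return all(p(t) for t in range(z + 1))


def bounded_min(p: Pred, z: int) -> int:
    """Smallest t <= z for which p(t) holds, or 0 if there is none."""
    for t in range(z + 1):
        if p(t):
            return t
    return 0


def divides(x: int, y: int) -> bool:
    """x | y written as a bounded existential: some t <= y with x * t == y."""
    return bounded_exists(lambda t: x * t == y, y)
```

Because every loop runs over a range fixed in advance by z, each operator terminates after at most z + 1 evaluations of the predicate, which is the informal reason these constructions preserve primitive recursiveness.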

Let

The pairing function of natural numbers x and y, , is

where

For any number z, there are unique x and y such that . For example, if , then

The functions and

return the left and right components of any number z so that . Thus, if , then , .
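A minimal Python sketch of the pairing function and its two projections follows. It assumes the definition given in [7], namely that the pair of x and y is 2^x(2y + 1) - 1; the names `pair`, `left`, and `right` are chosen for this illustration.

```python
def pair(x: int, y: int) -> int:
    """Assumed pairing function of [7]: 2^x * (2y + 1) - 1."""
    return 2 ** x * (2 * y + 1) - 1


def left(z: int) -> int:
    """Left component: the exponent of 2 in z + 1."""
    n, x = z + 1, 0
    while n % 2 == 0:
        n //= 2
        x += 1
    return x


def right(z: int) -> int:
    """Right component: y such that the odd part of z + 1 equals 2y + 1."""
    n = z + 1
    while n % 2 == 0:
        n //= 2
    return (n - 1) // 2
```

Uniqueness of the decomposition follows from the fact that z + 1 factors in exactly one way into a power of 2 and an odd number, so `pair(left(z), right(z)) == z` for every natural number z.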

The symbol refers to the n-th prime, i.e., , , , etc., and , by definition. The primes are computed by the following primitive recursive function.

Thus, , , , , , , etc. If is a sequence of numbers, the function

computes the Gödel number (G-number) of this sequence. The G-number of the empty number sequence is 1. Thus, the G-number of is .
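The encoding can be sketched in Python under the assumption, taken from [7], that the G-number of a sequence is the product of the i-th primes raised to the i-th elements. The names `primes` and `gnum` are chosen for this illustration.

```python
from itertools import count


def primes():
    """Yield 2, 3, 5, ... by trial division (adequate for short sequences)."""
    found = []
    for n in count(2):
        if all(n % p for p in found):
            found.append(n)
            yield n


def gnum(seq):
    """G-number of seq: the product of p_i ** a_i for i = 1, ..., len(seq)."""
    g = 1
    for p, a in zip(primes(), seq):
        g *= p ** a
    return g
```

One consequence of this encoding is that trailing zeros are not represented: `gnum([1, 2, 0])` and `gnum([1, 2])` are the same number, because a zero exponent contributes a factor of 1.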

If , the accessor function

returns the i-th element of x. Thus, if , then , , , and for or .

The length of a Gödel number x is the position of the last non-zero prime power in x. Specifically, if , its length is computed by the function defined as

Thus, . iff , when , and . , where .
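The accessor and the length function can be sketched in Python as follows. The sketch computes the same values by direct factorization instead of by the bounded minimalizations used in the formal definitions, and it assumes the conventions of [7]: the 0-th prime is 0, the accessor returns 0 when the index or the number is 0, and the length of 0 and of 1 is 0. The names `nth_prime`, `elem`, and `length` are chosen for this illustration.

```python
from math import isqrt


def nth_prime(n: int) -> int:
    """p_n with p_1 = 2, p_2 = 3, ...; p_0 = 0 by convention."""
    if n == 0:
        return 0
    found, cand = 0, 1
    while found < n:
        cand += 1
        if all(cand % d for d in range(2, isqrt(cand) + 1)):
            found += 1
    return cand


def elem(x: int, i: int) -> int:
    """i-th element of the G-number x: the exponent of p_i in x."""
    if x == 0 or i == 0:
        return 0
    p, t = nth_prime(i), 0
    while x % p == 0:
        x //= p
        t += 1
    return t


def length(x: int) -> int:
    """Index of the last prime with a non-zero exponent in x."""
    if x <= 1:
        return 0
    i, last = 1, 0
    while x > 1:
        p = nth_prime(i)
        if x % p == 0:
            last = i
            while x % p == 0:
                x //= p
        i += 1
    return last
```

For instance, 200 = 2^3 · 3^0 · 5^2 is the G-number of the sequence 3, 0, 2, so its elements at indices 1, 2, and 3 are 3, 0, and 2, and its length is 3.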

The function returns the integer part of the quotient . Thus, , , , and for any number x.
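The integer quotient can be written as a bounded minimalization. The Python sketch below assumes the textbook form from [7], in which the quotient of x by y is the smallest t not exceeding x such that (t + 1)y > x; the name `quot` is chosen for this illustration.

```python
def quot(x: int, y: int) -> int:
    """Integer part of x / y as the least t <= x with (t + 1) * y > x.

    When y == 0, no such t exists, so the bounded search falls through
    and the result is 0 by the convention of bounded minimalization.
    """
    for t in range(x + 1):
        if (t + 1) * y > x:
            return t
    return 0
```

The division by zero case needs no special branch: the predicate never holds when y is 0, and the default value of bounded minimalization supplies the result.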

Appendix A.2. Gödel Number Operators

The functions in this section or variants thereof were shown to be primitive recursive in [11]. The function

sets the i-th element of the G-number b to v. Thus, if , , and , then

The function in (A12), where is one of the three initial functions defined in (11) and is defined in (A9), returns the count of occurrences of x in y. Thus, if , then . A convention in (A12) and other equations in this section is that the names of auxiliary functions end in “x”.

If y is a G-number, then the predicate

holds if x is an element of y. Thus, , but . The function

appends x to the right of the rightmost element of y. Thus,

Let

Then, the function

places all numbers in y, in order, to the left of the first number in x, while the function

places all numbers of y, in order, to the right of the rightmost number in x. We refer to the function in (A15) as left concatenation and to the function in (A16) as right concatenation. Thus, ; ; ; .
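The append and concatenation operators can be sketched in Python by decoding G-numbers into lists, manipulating the lists, and encoding the result. This is not how the primitive recursive definitions are written; the sketch only computes the same values under the assumption that G-numbers are products of prime powers as in [7]. The names `append_right`, `left_concat`, and `right_concat`, and their argument order, are hypothetical.

```python
from itertools import count


def primes():
    """Yield 2, 3, 5, ... by trial division."""
    found = []
    for n in count(2):
        if all(n % p for p in found):
            found.append(n)
            yield n


def encode(seq):
    """G-number of seq."""
    g = 1
    for p, a in zip(primes(), seq):
        g *= p ** a
    return g


def decode(g):
    """Exponents of g up to the last non-zero one (g >= 1 is assumed)."""
    seq, gen = [], primes()
    while g > 1:
        p, a = next(gen), 0
        while g % p == 0:
            g //= p
            a += 1
        seq.append(a)
    return seq


def append_right(x, y):
    """Append the number x after the rightmost element of the G-number y."""
    return encode(decode(y) + [x])


def left_concat(x, y):
    """Place the elements of y, in order, to the left of those of x."""
    return encode(decode(y) + decode(x))


def right_concat(x, y):
    """Place the elements of y, in order, to the right of those of x."""
    return encode(decode(x) + decode(y))
```

Because trailing zeros are not representable in a G-number, appending 0 leaves the number unchanged in this sketch, e.g., `append_right(0, 18) == 18`.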

Let

Then, for and , the function

generates a G-number whose numbers start at l and go to u in positive integer increments of k. Thus, ; ; ; ; . The abbreviation stands for generator of Gödel numbers.
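A minimal Python sketch of the generator follows. It assumes that the upper limit u is included when the increments reach it exactly, that l does not exceed u, and that k is positive; these conditions and the name `gen_gn` are assumptions made for the illustration, since the formal side conditions are those stated with the definition above.

```python
from itertools import count


def primes():
    """Yield 2, 3, 5, ... by trial division."""
    found = []
    for n in count(2):
        if all(n % p for p in found):
            found.append(n)
            yield n


def encode(seq):
    """G-number of seq: the product of p_i ** a_i."""
    g = 1
    for p, a in zip(primes(), seq):
        g *= p ** a
    return g


def gen_gn(l: int, u: int, k: int) -> int:
    """G-number of the sequence l, l + k, l + 2k, ... not exceeding u."""
    if k <= 0 or l > u:
        raise ValueError("expected k > 0 and l <= u")
    return encode(range(l, u + 1, k))
```

For example, `gen_gn(2, 6, 2)` is the G-number of the sequence 2, 4, 6, i.e., 2^2 · 3^4 · 5^6.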

The function

returns the smallest index t of such that . Thus, if

then , , . The function

returns the pair from y at the index t returned by . Thus, if

then

Appendix A.3. Examples of Ω Numbers

Let us abbreviate in (25) to and consider the FANN in Figure 1. Let us assume that, as in Example 3, , and , and

In other words, is a G-number such that iff . , whose definition we omit for space reasons, is a G-number whose length is 125 such that iff , e.g., iff . We can compute for the FANN in Figure 1 as follows.

We can compute individual elements of . For example, since ,

Since ,

where . We know that because . Thus, , for . Let us therefore assume, for the sake of this example, that . Then,

where .

References

1. McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 5, 115–133.

2. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

3. Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

4. Gripenberg, G. Approximation by neural networks with a bounded number of nodes at each level. J. Approx. Theory 2003, 122, 260–266.

5. Guliyev, N.; Ismailov, V. On the approximation by single hidden layer feedforward neural networks with fixed weights. Neural Netw. 2018, 98, 296–304.

6. Gödel, K. On formally undecidable propositions of Principia Mathematica and related systems I. In Kurt Gödel Collected Works Volume I Publications 1929–1936; Feferman, S., Dawson, J.W., Kleene, S.C., Moore, G.H., Solovay, R.M., van Heijenoort, J., Eds.; Oxford University Press: Oxford, UK, 1986.

7. Davis, M.; Sigal, R.; Weyuker, E. Computability, Complexity, and Languages: Fundamentals of Theoretical Computer Science, 2nd ed.; Harcourt, Brace & Company: Boston, MA, USA, 1994.

8. Kleene, S.C. Introduction to Metamathematics; D. Van Nostrand: New York, NY, USA, 1952.

9. Meyer, A.R.; Ritchie, D.M. The complexity of loop programs. In Proceedings of the ACM National Meeting, Washington, DC, USA, 14–16 November 1967; pp. 465–469.

10. Rogers, H., Jr. Theory of Recursive Functions and Effective Computability; The MIT Press: Cambridge, MA, USA, 1988.

11. Kulyukin, V. On primitive recursive characteristics of chess. Mathematics 2022, 10, 1016.

12. Hopcroft, J.E.; Ullman, J.D. Introduction to Automata Theory, Languages, and Computation; Narosa Publishing House: New Delhi, India, 2002.

13. Jurafsky, D.; Martin, J.H. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 2000.

14. Eddington, A.S. The constants of nature. In The World of Mathematics; Newman, J.R., Ed.; Simon and Schuster: New York, NY, USA, 1956; Volume 2, pp. 1074–1093.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).