Omni-Domain Feature Extraction Method for Gait Recognition

Abstract

1. Introduction

2. Related Work

2.1. Model-Based

2.1.1. Pose Estimation

2.1.2. Feature Extraction

2.2. Appearance-Based

2.2.1. Spatial Feature Extraction

2.2.2. Temporal Representation

2.2.3. Spatio-Temporal Feature Fusion

3. Materials and Methods

3.1. Overview

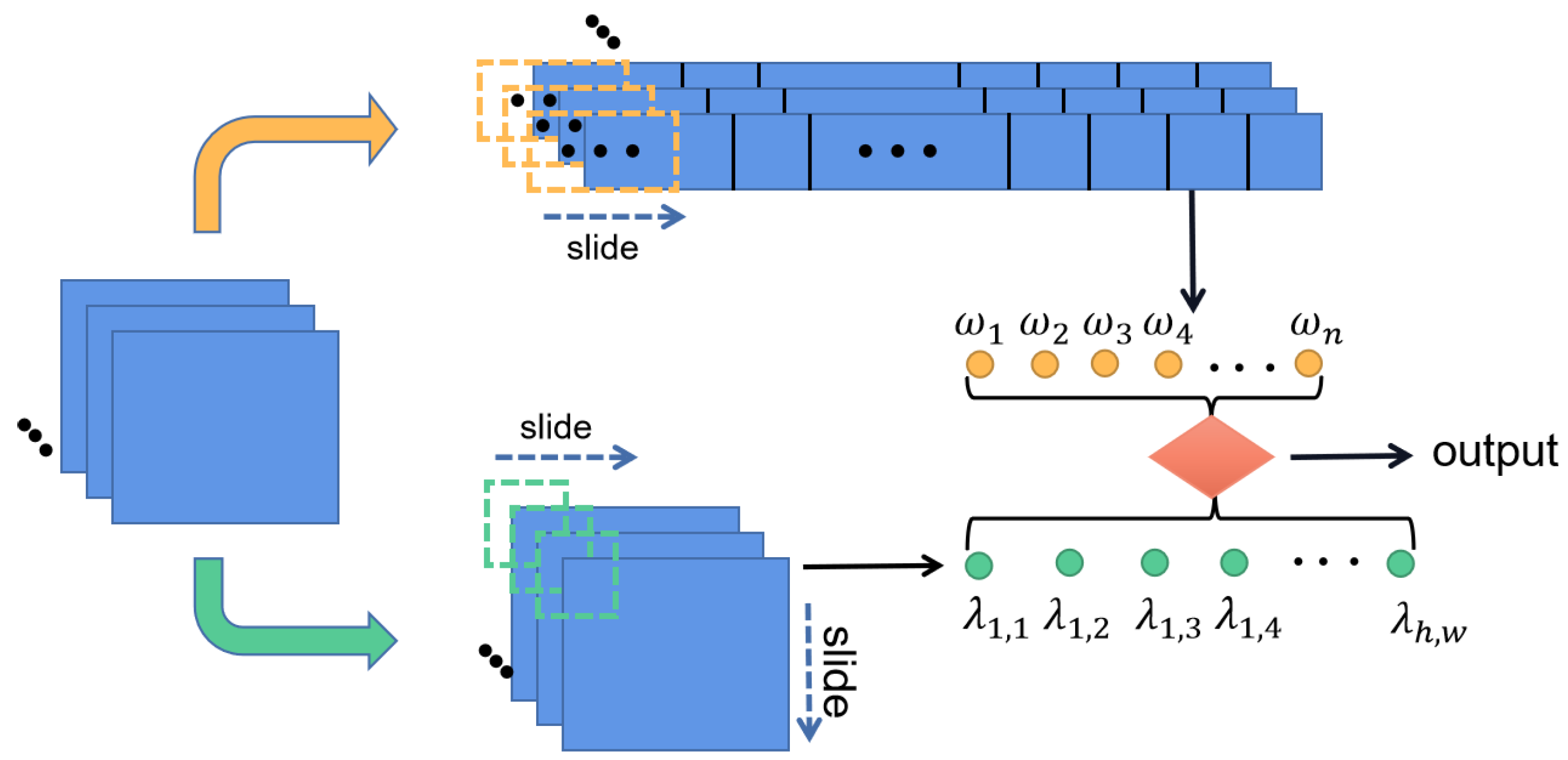

3.2. Temporal-Sensitive Feature Extractor

3.2.1. Discussion

3.2.2. Operation

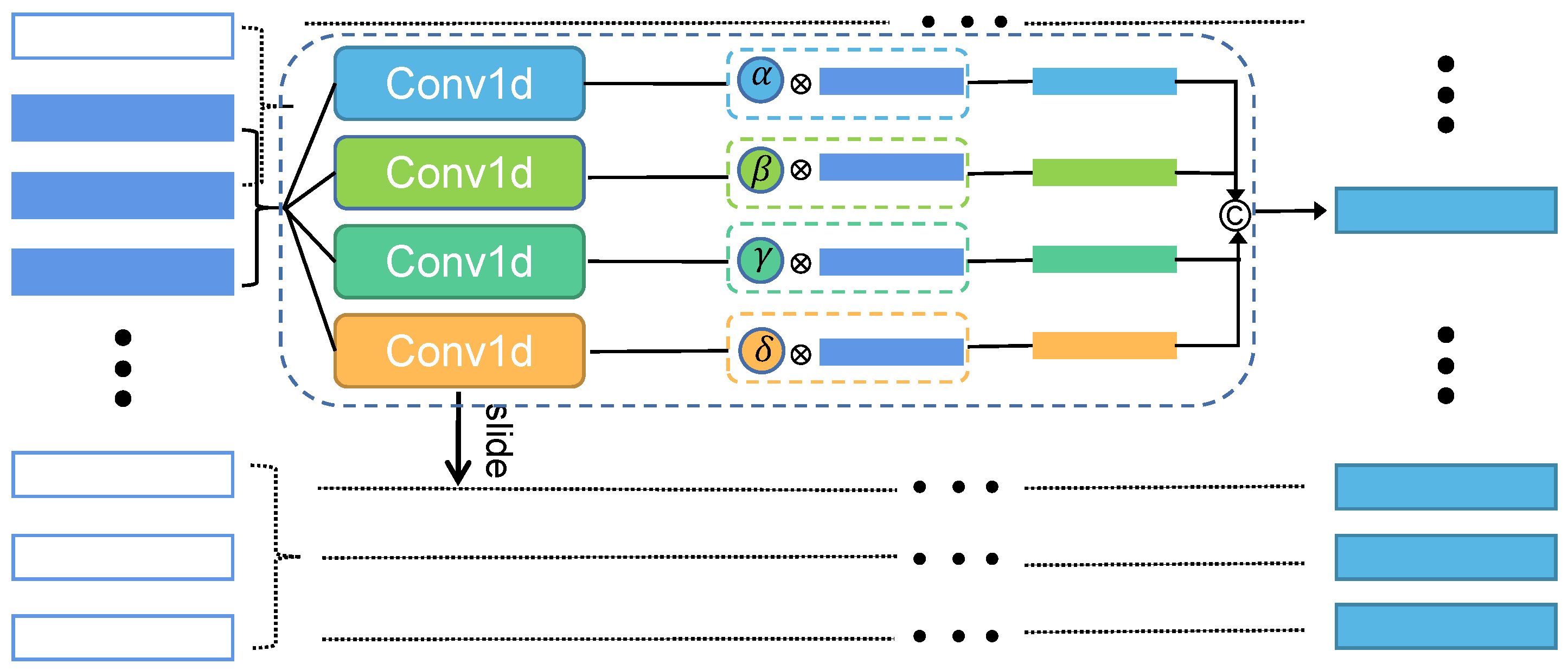

3.3. Dynamic Motion Capture

3.3.1. Discussion

3.3.2. Operation

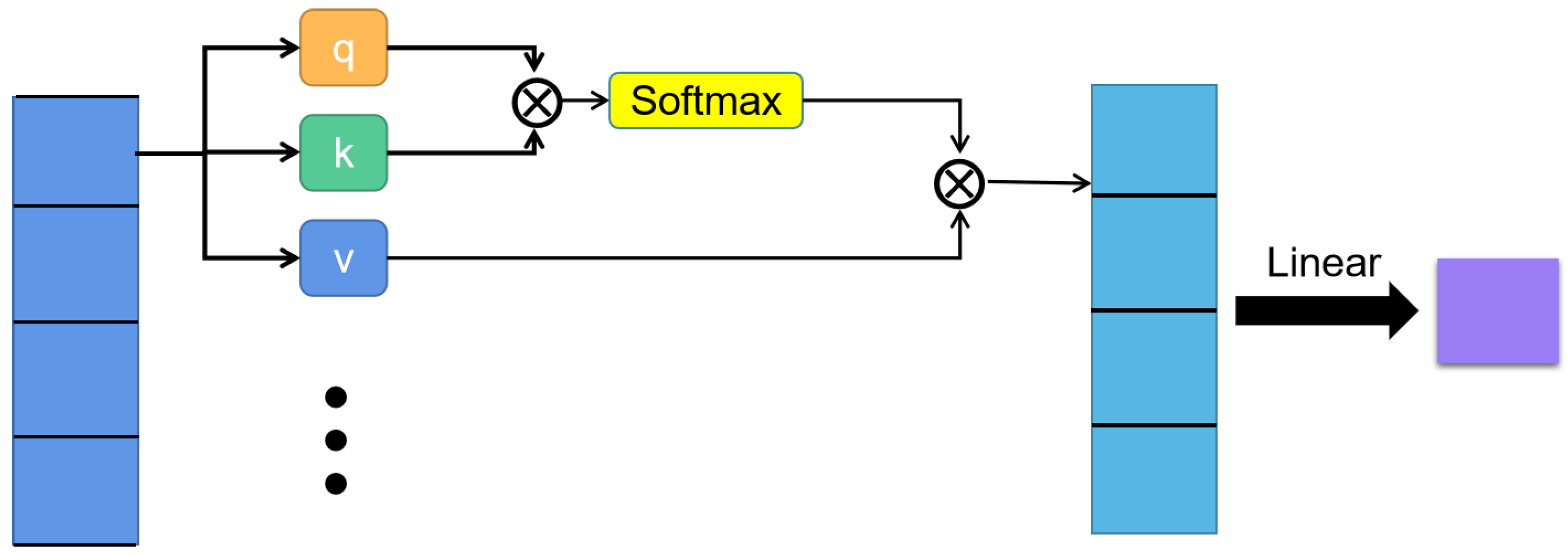

3.4. Omni-Domain Feature Balance Module

3.4.1. Discussion

3.4.2. Operation

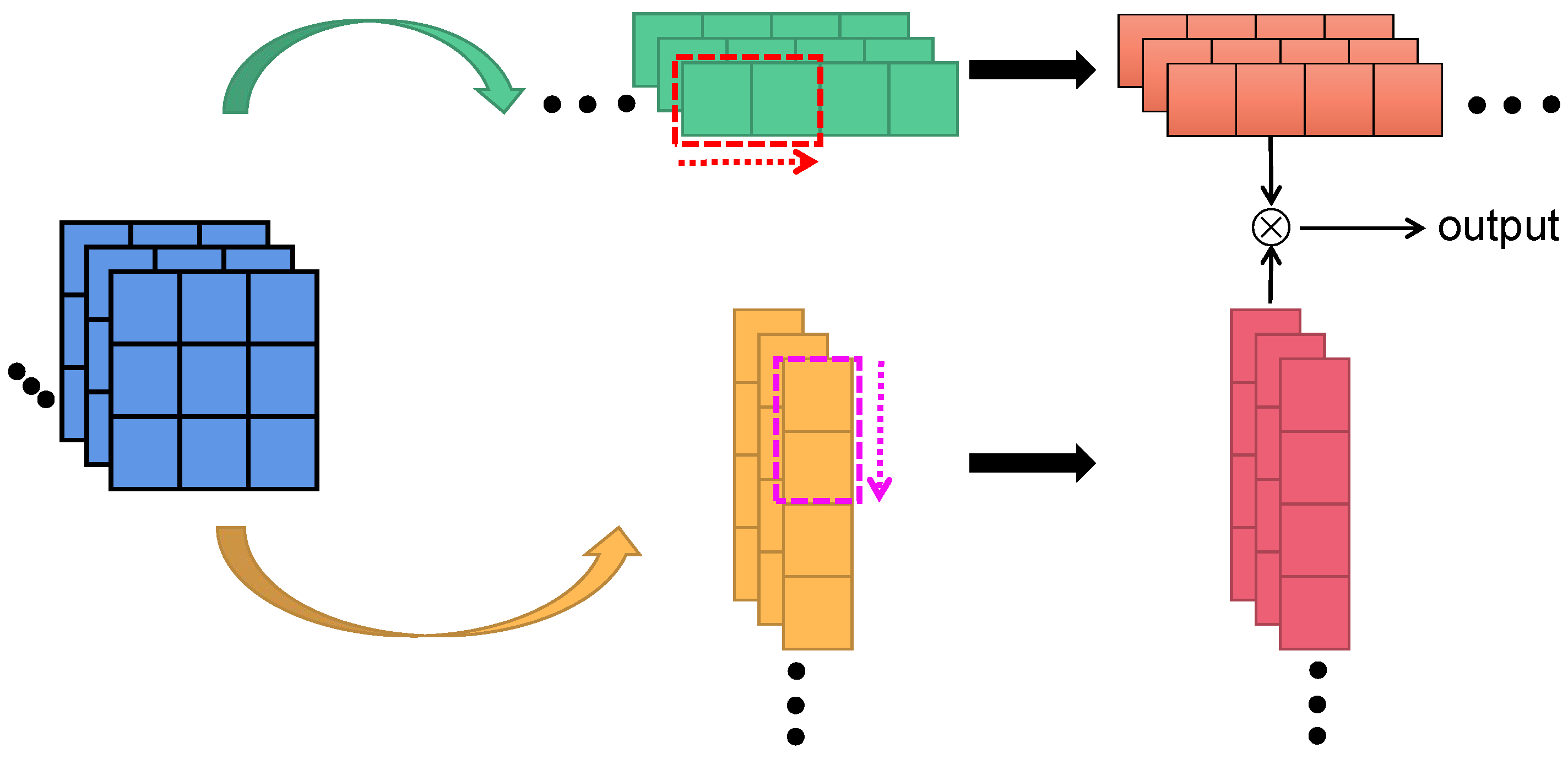

3.5. Interval Module Design

3.5.1. Learning Ability to Preserve Spatial Features

3.5.2. Increasing the Learning Ability for Temporal Information

4. Results

4.1. Datasets

4.2. Implementation Details

4.2.1. Dataset Partition Criteria

4.2.2. Parameter Settings

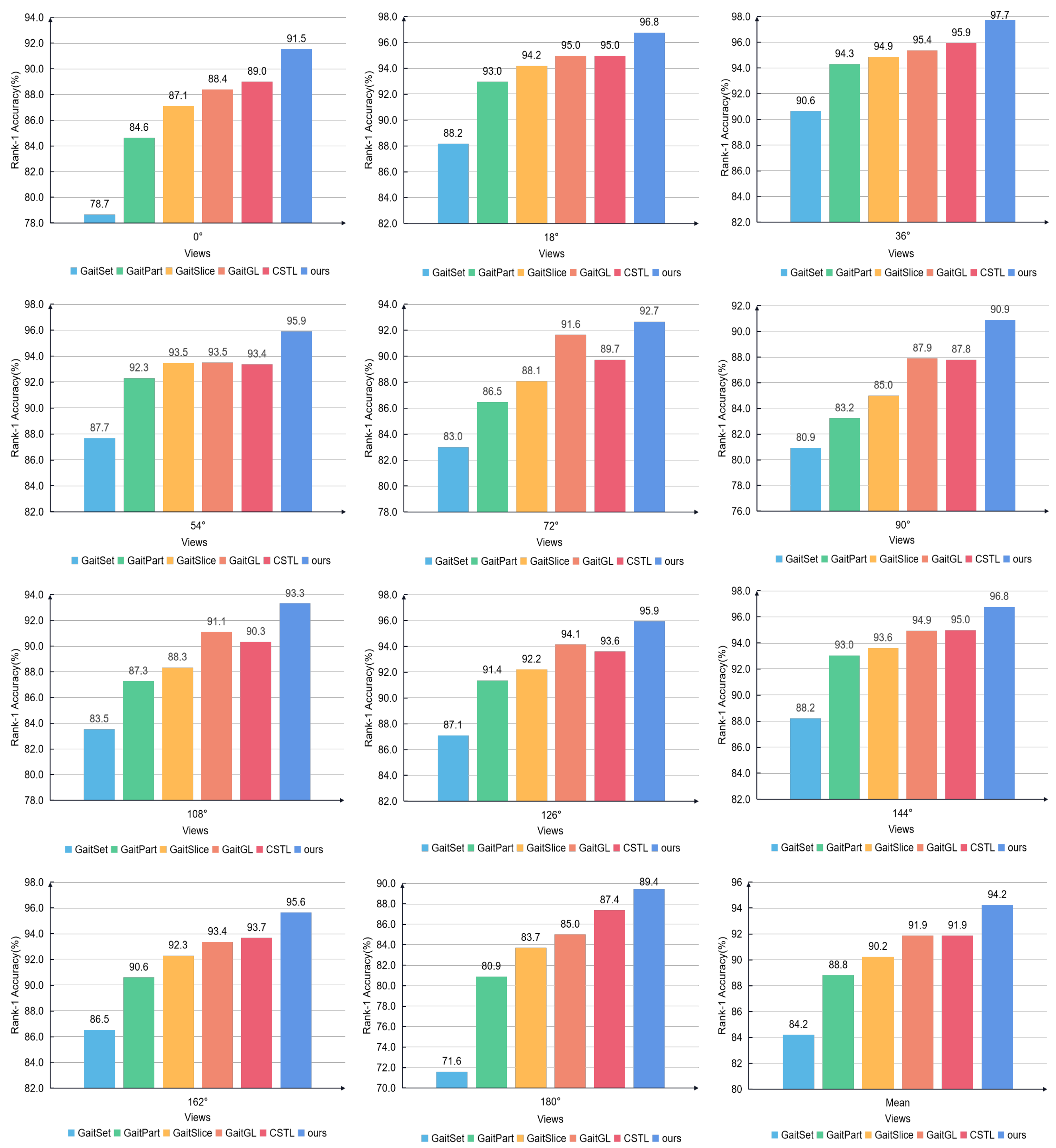

4.3. Comparison with State-of-the-Art Methods

4.3.1. CASIA-B

4.3.2. OU-MVLP

4.4. Ablation Study

4.4.1. Effectiveness of Each Module

4.4.2. Impact of the Dilation Operation in the Temporal Dimension

4.4.3. Impact of the Interval Frame Module

4.4.4. Impact of the Interval Frame Sampling Distance

4.4.5. Impact of Different Concatenation Strategies

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sarkar, S.; Liu, Z.; Subramanian, R. Gait recognition. In Encyclopedia of Cryptography, Security and Privacy; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–7.

2. Nixon, M. Model-based gait recognition. In Encyclopedia of Biometrics; Springer: Berlin/Heidelberg, Germany, 2009.

3. Liao, R.; Cao, C.; Garcia, E.B.; Yu, S.; Huang, Y. Pose-based temporal–spatial network (PTSN) for gait recognition with carrying and clothing variations. In Proceedings of the Biometric Recognition: 12th Chinese Conference, CCBR 2017, Shenzhen, China, 28–29 October 2017; Springer: Cham, Switzerland, 2017; pp. 474–483.

4. Liao, R.; Yu, S.; An, W.; Huang, Y. A model-based gait recognition method with body pose and human prior knowledge. Pattern Recognit. 2020, 98, 107069.

5. Li, X.; Makihara, Y.; Xu, C.; Yagi, Y.; Yu, S.; Ren, M. End-to-end model-based gait recognition. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

6. Teepe, T.; Khan, A.; Gilg, J.; Herzog, F.; Hörmann, S.; Rigoll, G. GaitGraph: Graph convolutional network for skeleton-based gait recognition. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2314–2318.

7. Liu, X.; You, Z.; He, Y.; Bi, S.; Wang, J. Symmetry-Driven hyper feature GCN for skeleton-based gait recognition. Pattern Recognit. 2022, 125, 108520.

8. Yin, Z.; Jiang, Y.; Zheng, J.; Yu, H. STJA-GCN: A Multi-Branch Spatial–Temporal Joint Attention Graph Convolutional Network for Abnormal Gait Recognition. Appl. Sci. 2023, 13, 4205.

9. Fu, Y.; Meng, S.; Hou, S.; Hu, X.; Huang, Y. GPGait: Generalized Pose-based Gait Recognition. arXiv 2023, arXiv:2303.05234.

10. Liao, R.; Li, Z.; Bhattacharyya, S.S.; York, G. PoseMapGait: A model-based gait recognition method with pose estimation maps and graph convolutional networks. Neurocomputing 2022, 501, 514–528.

11. Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

12. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5693–5703.

13. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V; Springer: Cham, Switzerland, 2014; pp. 740–755.

14. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

15. Fang, H.S.; Li, J.; Tang, H.; Xu, C.; Zhu, H.; Xiu, Y.; Li, Y.L.; Lu, C. AlphaPose: Whole-body regional multi-person pose estimation and tracking in real-time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7157–7173.

16. Song, Y.F.; Zhang, Z.; Shan, C.; Wang, L. Stronger, faster and more explainable: A graph convolutional baseline for skeleton-based action recognition. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1625–1633.

17. Chao, H.; He, Y.; Zhang, J.; Feng, J. GaitSet: Regarding gait as a set for cross-view gait recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8126–8133.

18. Lin, B.; Zhang, S.; Yu, X. Gait recognition via effective global-local feature representation and local temporal aggregation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14648–14656.

19. Li, H.; Qiu, Y.; Zhao, H.; Zhan, J.; Chen, R.; Wei, T.; Huang, Z. GaitSlice: A gait recognition model based on spatio-temporal slice features. Pattern Recognit. 2022, 124, 108453.

20. Hou, S.; Cao, C.; Liu, X.; Huang, Y. Gait lateral network: Learning discriminative and compact representations for gait recognition. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part IX; Springer: Cham, Switzerland, 2020; pp. 382–398.

21. Qin, H.; Chen, Z.; Guo, Q.; Wu, Q.J.; Lu, M. RPNet: Gait recognition with relationships between each body-parts. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 2990–3000.

22. Huang, X.; Zhu, D.; Wang, H.; Wang, X.; Yang, B.; He, B.; Liu, W.; Feng, B. Context-sensitive temporal feature learning for gait recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12909–12918.

23. Fan, C.; Peng, Y.; Cao, C.; Liu, X.; Hou, S.; Chi, J.; Huang, Y.; Li, Q.; He, Z. GaitPart: Temporal part-based model for gait recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 14225–14233.

24. Huang, Z.; Xue, D.; Shen, X.; Tian, X.; Li, H.; Huang, J.; Hua, X.S. 3D local convolutional neural networks for gait recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14920–14929.

25. Mogan, J.N.; Lee, C.P.; Lim, K.M.; Ali, M.; Alqahtani, A. Gait-CNN-ViT: Multi-Model Gait Recognition with Convolutional Neural Networks and Vision Transformer. Sensors 2023, 23, 3809.

26. Yang, Y.; Yun, L.; Li, R.; Cheng, F.; Wang, K. Multi-View Gait Recognition Based on a Siamese Vision Transformer. Appl. Sci. 2023, 13, 2273.

27. Chen, J.; Wang, Z.; Zheng, C.; Zeng, K.; Zou, Q.; Cui, L. GaitAMR: Cross-view gait recognition via aggregated multi-feature representation. Inf. Sci. 2023, 636, 118920.

28. Sun, G.; Zhang, X.; Jia, X.; Ren, J.; Zhang, A.; Yao, Y.; Zhao, H. Deep fusion of localized spectral features and multi-scale spatial features for effective classification of hyperspectral images. Int. J. Appl. Earth Obs. Geoinf. 2020, 91, 102157.

29. Lin, J.; Gan, C.; Han, S. TSM: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 7083–7093.

30. Yu, S.; Tan, D.; Tan, T. A Framework for Evaluating the Effect of View Angle, Clothing and Carrying Condition on Gait Recognition. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 4, pp. 441–444.

31. Takemura, N.; Makihara, Y.; Muramatsu, D.; Echigo, T.; Yagi, Y. Multi-view large population gait dataset and its performance evaluation for cross-view gait recognition. IPSJ Trans. Comput. Vis. Appl. 2018, 10, 1–14.

32. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

33. Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

34. Yu, S.; Chen, H.; Wang, Q.; Shen, L.; Huang, Y. Invariant feature extraction for gait recognition using only one uniform model. Neurocomputing 2017, 239, 81–93.

35. Zhao, H.; Fang, Z.; Ren, J.; MacLellan, C.; Xia, Y.; Li, S.; Sun, M.; Ren, K. SC2Net: A Novel Segmentation-Based Classification Network for Detection of COVID-19 in Chest X-Ray Images. IEEE J. Biomed. Health Inform. 2022, 26, 4032–4043.

36. Ma, P.; Ren, J.; Sun, G.; Zhao, H.; Jia, X.; Yan, Y.; Zabalza, J. Multiscale Superpixelwise Prophet Model for Noise-Robust Feature Extraction in Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

37. Yan, Y.; Ren, J.; Zhao, H.; Windmill, J.F.; Ijomah, W.; De Wit, J.; Von Freeden, J. Non-destructive testing of composite fiber materials with hyperspectral imaging—Evaluative studies in the EU H2020 FibreEUse project. IEEE Trans. Instrum. Meas. 2022, 71, 1–13.

38. Xie, G.; Ren, J.; Marshall, S.; Zhao, H.; Li, R.; Chen, R. Self-attention enhanced deep residual network for spatial image steganalysis. Digit. Signal Process. 2023, 139, 104063.

39. Ren, J.; Sun, H.; Zhao, H.; Gao, H.; Maclellan, C.; Zhao, S.; Luo, X. Effective extraction of ventricles and myocardium objects from cardiac magnetic resonance images with a multi-task learning U-Net. Pattern Recognit. Lett. 2022, 155, 165–170.

40. Fan, D.P.; Zhou, T.; Ji, G.P.; Zhou, Y.; Chen, G.; Fu, H.; Shen, J.; Shao, L. Inf-Net: Automatic COVID-19 lung infection segmentation from CT images. IEEE Trans. Med. Imaging 2020, 39, 2626–2637.

41. Liu, D.; Cui, Y.; Yan, L.; Mousas, C.; Yang, B.; Chen, Y. DenserNet: Weakly supervised visual localization using multi-scale feature aggregation. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 6101–6109.

42. Li, Y.; Ren, J.; Yan, Y.; Liu, Q.; Ma, P.; Petrovski, A.; Sun, H. CBANet: An End-to-end Cross Band 2-D Attention Network for Hyperspectral Change Detection in Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2023.

43. Sun, G.; Fu, H.; Ren, J.; Zhang, A.; Zabalza, J.; Jia, X.; Zhao, H. SpaSSA: Superpixelwise Adaptive SSA for Unsupervised Spatial–Spectral Feature Extraction in Hyperspectral Image. IEEE Trans. Cybern. 2022, 52, 6158–6169.

44. Sun, H.; Ren, J.; Zhao, H.; Yuen, P.; Tschannerl, J. Novel Gumbel-Softmax Trick Enabled Concrete Autoencoder With Entropy Constraints for Unsupervised Hyperspectral Band Selection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

45. Fu, H.; Sun, G.; Zhang, A.; Shao, B.; Ren, J.; Jia, X. Unsupervised 3D tensor subspace decomposition network for hyperspectral and multispectral image spatial-temporal-spectral fusion. IEEE Trans. Geosci. Remote Sens. 2023, in press.

46. Das, S.; Meher, S.; Sahoo, U.K. A Unified Local–Global Feature Extraction Network for Human Gait Recognition Using Smartphone Sensors. Sensors 2022, 22, 3968.

47. Yan, Y.; Ren, J.; Tschannerl, J.; Zhao, H.; Harrison, B.; Jack, F. Nondestructive phenolic compounds measurement and origin discrimination of peated barley malt using near-infrared hyperspectral imagery and machine learning. IEEE Trans. Instrum. Meas. 2021, 70, 1–15.

48. Chen, R.; Huang, H.; Yu, Y.; Ren, J.; Wang, P.; Zhao, H.; Lu, X. Rapid Detection of Multi-QR Codes Based on Multistage Stepwise Discrimination and A Compressed MobileNet. IEEE Internet Things J. 2023.

49. Sergiyenko, O.Y.; Tyrsa, V.V. 3D optical machine vision sensors with intelligent data management for robotic swarm navigation improvement. IEEE Sens. J. 2020, 21, 11262–11274.

Rank-1 accuracy (%) on CASIA-B under the LT (74) setting, with NM #1–4 as the gallery.

| Probe | Method | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NM (#5–6) | GaitSet [17] | 90.8 | 97.9 | 99.4 | 96.9 | 93.6 | 91.7 | 95.0 | 97.8 | 98.9 | 96.8 | 85.8 | 95.0 |
| NM (#5–6) | GaitPart [23] | 94.1 | 98.6 | 99.3 | 98.5 | 94.0 | 92.3 | 95.9 | 98.4 | 99.2 | 97.8 | 90.4 | 96.2 |
| NM (#5–6) | GaitSlice [19] | 95.5 | 99.2 | 99.6 | 99.0 | 94.4 | 92.5 | 95.0 | 98.1 | 99.7 | 98.3 | 92.9 | 96.7 |
| NM (#5–6) | GaitGL [18] | 96.0 | 98.3 | 99.0 | 97.9 | 96.9 | 95.4 | 97.0 | 98.9 | 99.3 | 98.8 | 94.0 | 97.5 |
| NM (#5–6) | CSTL [22] | 97.2 | 99.0 | 99.2 | 98.1 | 96.2 | 95.5 | 97.7 | 98.7 | 99.2 | 98.9 | 96.5 | 97.8 |
| NM (#5–6) | Ours | 96.9 | 99.3 | 99.3 | 98.8 | 97.8 | 96.2 | 97.9 | 99.2 | 99.6 | 99.4 | 96.4 | 98.3 |
| BG (#1–2) | GaitSet [17] | 83.8 | 91.2 | 91.8 | 88.8 | 83.3 | 81.0 | 84.1 | 90.0 | 92.2 | 94.4 | 79.0 | 87.2 |
| BG (#1–2) | GaitPart [23] | 89.1 | 94.8 | 96.7 | 95.1 | 88.3 | 84.9 | 89.0 | 93.5 | 96.1 | 93.8 | 85.8 | 91.5 |
| BG (#1–2) | GaitSlice [19] | 90.2 | 96.4 | 96.1 | 94.9 | 89.3 | 85.0 | 90.9 | 94.5 | 96.3 | 95.0 | 88.1 | 92.4 |
| BG (#1–2) | GaitGL [18] | 92.6 | 96.6 | 96.8 | 95.5 | 93.5 | 89.3 | 92.2 | 96.5 | 98.2 | 96.9 | 91.5 | 94.5 |
| BG (#1–2) | CSTL [22] | 91.7 | 96.5 | 97.0 | 95.4 | 90.9 | 88.0 | 91.5 | 95.8 | 97.0 | 95.5 | 90.3 | 93.6 |
| BG (#1–2) | Ours | 94.0 | 97.6 | 98.4 | 97.2 | 93.3 | 92.0 | 94.0 | 97.1 | 98.2 | 97.1 | 92.9 | 95.6 |
| CL (#1–2) | GaitSet [17] | 61.4 | 75.4 | 80.7 | 77.3 | 72.1 | 70.1 | 71.5 | 73.5 | 73.5 | 68.4 | 50.0 | 70.4 |
| CL (#1–2) | GaitPart [23] | 70.7 | 85.5 | 86.9 | 83.3 | 77.1 | 72.5 | 76.9 | 82.2 | 83.8 | 80.2 | 66.5 | 78.7 |
| CL (#1–2) | GaitSlice [19] | 75.6 | 87.0 | 88.9 | 86.5 | 80.5 | 77.5 | 79.1 | 84.0 | 84.8 | 83.6 | 70.1 | 81.6 |
| CL (#1–2) | GaitGL [18] | 76.6 | 90.0 | 90.3 | 87.1 | 84.5 | 79.0 | 84.1 | 87.0 | 87.3 | 84.4 | 69.5 | 83.6 |
| CL (#1–2) | CSTL [22] | 78.1 | 89.4 | 91.6 | 86.6 | 82.1 | 79.9 | 81.8 | 86.3 | 88.7 | 86.6 | 75.3 | 84.2 |
| CL (#1–2) | Ours | 83.7 | 93.4 | 95.5 | 91.7 | 86.9 | 84.5 | 88.1 | 91.5 | 92.5 | 90.4 | 79.0 | 88.8 |
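The comparison tables report rank-1 accuracy. As a rough illustration only, the following sketch computes rank-1 accuracy by assigning each probe embedding the identity of its nearest gallery embedding under Euclidean distance. The distance choice, the function names `euclidean` and `rank1_accuracy`, and the toy data are assumptions for illustration; this is not the authors' evaluation code, and protocol details such as excluding identical-view gallery samples are left out.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank1_accuracy(
    probe_feats: Sequence[Sequence[float]],
    probe_ids: Sequence[str],
    gallery_feats: Sequence[Sequence[float]],
    gallery_ids: Sequence[str],
) -> float:
    """Percentage of probes whose nearest gallery sample has the same ID (assumed protocol)."""
    if not probe_feats or not gallery_feats:
        raise ValueError("probe and gallery sets must be non-empty")
    hits = 0
    for feat, pid in zip(probe_feats, probe_ids):
        # Index of the closest gallery embedding; min() keeps the first one on ties.
        nearest = min(
            range(len(gallery_feats)),
            key=lambda j: euclidean(feat, gallery_feats[j]),
        )
        hits += int(gallery_ids[nearest] == pid)
    return 100.0 * hits / len(probe_feats)


# Hypothetical toy data: three gallery subjects, two probe sequences.
gallery = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
gallery_ids = ["001", "002", "003"]
probes = [[0.1, -0.1], [4.0, 4.5]]
probe_ids = ["001", "002"]
print(rank1_accuracy(probes, probe_ids, gallery, gallery_ids))  # 50.0
```

The first probe is matched to subject 001 (a hit) and the second to subject 003 instead of 002 (a miss), giving 50.0%. Searching with `min` over indices keeps the sketch dependency-free; for galleries the size of CASIA-B or OU-MVLP, a vectorized distance matrix would be the more practical choice.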

Rank-1 accuracy (%) on OU-MVLP, with all 14 views in the gallery.

| Probe view | GaitSet [17] | GaitPart [23] | GaitSlice [19] | GaitGL [18] | CSTL [22] | Ours |
|---|---|---|---|---|---|---|
| 0° | 79.5 | 82.6 | 84.1 | 84.9 | 87.1 | 87.3 |
| 15° | 87.9 | 88.9 | 89.0 | 90.2 | 91.0 | 91.2 |
| 30° | 89.9 | 90.8 | 91.2 | 91.1 | 91.5 | 91.5 |
| 45° | 90.2 | 91.0 | 91.6 | 91.5 | 91.8 | 92.0 |
| 60° | 88.1 | 89.7 | 90.6 | 91.1 | 90.6 | 91.1 |
| 75° | 88.7 | 89.9 | 89.9 | 90.8 | 90.8 | 91.0 |
| 90° | 87.8 | 89.5 | 89.8 | 90.3 | 90.6 | 90.9 |
| 180° | 81.7 | 85.2 | 85.7 | 88.5 | 89.4 | 89.5 |
| 195° | 86.7 | 88.1 | 89.3 | 88.6 | 90.2 | 90.5 |
| 210° | 89.0 | 90.0 | 90.6 | 90.3 | 90.5 | 90.7 |
| 225° | 89.3 | 90.1 | 90.7 | 90.4 | 90.7 | 91.0 |
| 240° | 87.2 | 89.0 | 89.8 | 89.6 | 89.8 | 90.1 |
| 255° | 87.8 | 89.1 | 89.6 | 89.5 | 90.0 | 90.1 |
| 270° | 86.2 | 88.2 | 88.5 | 88.8 | 89.4 | 89.5 |
| Mean | 87.1 | 88.7 | 89.3 | 89.7 | 90.2 | 90.5 |

Rank-1 accuracy (%).

| Model | NM | BG | CL | Mean |
|---|---|---|---|---|
| GaitSet [17] | 95.0 | 87.2 | 70.4 | 84.2 |
| GaitPart [23] | 96.2 | 91.5 | 78.7 | 88.8 |
| GaitSlice [19] | 96.7 | 92.4 | 81.6 | 90.2 |
| GaitGL [18] | 97.4 | 94.5 | 83.6 | 91.8 |
| CSTL [22] | 97.8 | 93.6 | 84.2 | 91.9 |
| Ours (Baseline) | 97.2 | 92.6 | 82.4 | 90.7 |
| Ours (Baseline + TSFE) | 97.3 | 93.5 | 83.2 | 91.3 |
| Ours (Baseline + DMC) | 97.5 | 94.2 | 84.3 | 92.0 |
| Ours (Baseline + ODB) | 98.0 | 93.9 | 83.9 | 91.9 |
| Ours (Baseline + TSFE + DMC + ODB) | 98.3 | 95.6 | 88.8 | 94.2 |

Rank-1 accuracy (%).

| Where | How | NM | BG | CL | Mean |
|---|---|---|---|---|---|
| Before | In-Place | 97.0 | 93.2 | 83.8 | 92.3 |
| Before | Residual | 97.3 | 94.0 | 84.0 | 92.8 |
| After | In-Place | 97.5 | 93.3 | 84.1 | 92.7 |
| After | Residual | 98.3 | 95.6 | 88.8 | 94.2 |

Rank-1 accuracy (%).

| Settings | NM | BG | CL | Mean |
|---|---|---|---|---|
| no_dilation | 97.6 | 94.9 | 88.1 | 93.5 |
| dilation_in_first_layer | 97.3 | 94.9 | 89.9 | 94.0 |
| dilation_in_first_two_layers | 97.6 | 95.5 | 90.6 | 94.6 |
| dilation_in_first_three_layers | 98.1 | 95.5 | 89.3 | 94.3 |
| dilation_in_first_four_layers | 98.3 | 95.6 | 88.8 | 94.2 |

Rank-1 accuracy (%).

| Interval frame gap | NM | BG | CL | Mean |
|---|---|---|---|---|
| 0 | 98.1 | 95.6 | 85.8 | 93.2 |
| 1 | 98.3 | 95.6 | 88.8 | 94.2 |
| 2 | 98.0 | 95.7 | 86.6 | 93.4 |
| 3 | 97.5 | 95.0 | 85.0 | 92.5 |

Rank-1 accuracy (%).

| Addition | Concatenation (AdaptiveAvgPool) | Concatenation (AdaptiveMaxPool) | Concatenation (1D-Convolution) | NM | BG | CL | Mean |
|---|---|---|---|---|---|---|---|
| ✓ | ✕ | ✕ | ✕ | 98.0 | 95.3 | 89.0 | 94.1 |
| ✕ | ✓ | ✕ | ✕ | 98.3 | 95.6 | 88.8 | 94.2 |
| ✕ | ✕ | ✓ | ✕ | 97.8 | 95.2 | 88.5 | 93.8 |
| ✕ | ✕ | ✕ | ✓ | 97.2 | 94.5 | 85.2 | 92.3 |
| ✕ | ✓ | ✓ | ✕ | 98.1 | 95.3 | 88.5 | 94.0 |
| ✕ | ✓ | ✕ | ✓ | 97.0 | 93.2 | 84.1 | 91.4 |
| ✕ | ✕ | ✓ | ✓ | 97.1 | 93.8 | 85.0 | 92.0 |
| ✕ | ✓ | ✓ | ✓ | 97.3 | 94.2 | 84.2 | 91.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wan, J.; Zhao, H.; Li, R.; Chen, R.; Wei, T. Omni-Domain Feature Extraction Method for Gait Recognition. Mathematics 2023, 11, 2612. https://doi.org/10.3390/math11122612

Wan J, Zhao H, Li R, Chen R, Wei T. Omni-Domain Feature Extraction Method for Gait Recognition. Mathematics. 2023; 11(12):2612. https://doi.org/10.3390/math11122612

Chicago/Turabian Style: Wan, Jiwei, Huimin Zhao, Rui Li, Rongjun Chen, and Tuanjie Wei. 2023. "Omni-Domain Feature Extraction Method for Gait Recognition" Mathematics 11, no. 12: 2612. https://doi.org/10.3390/math11122612

APA Style: Wan, J., Zhao, H., Li, R., Chen, R., & Wei, T. (2023). Omni-Domain Feature Extraction Method for Gait Recognition. Mathematics, 11(12), 2612. https://doi.org/10.3390/math11122612