A Medical Endoscope Image Enhancement Method Based on Improved Weighted Guided Filtering

Abstract

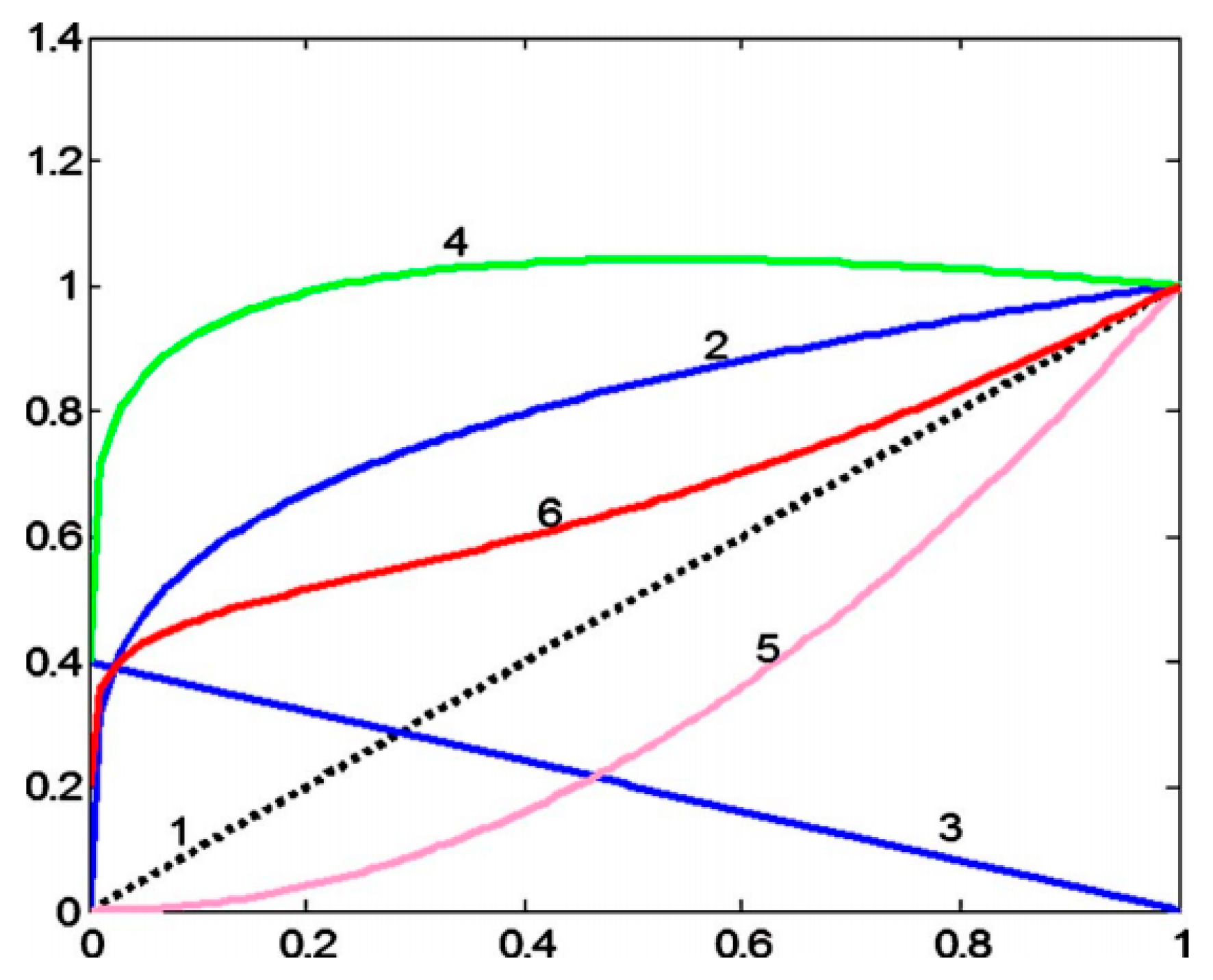

1. Introduction

2. Methods

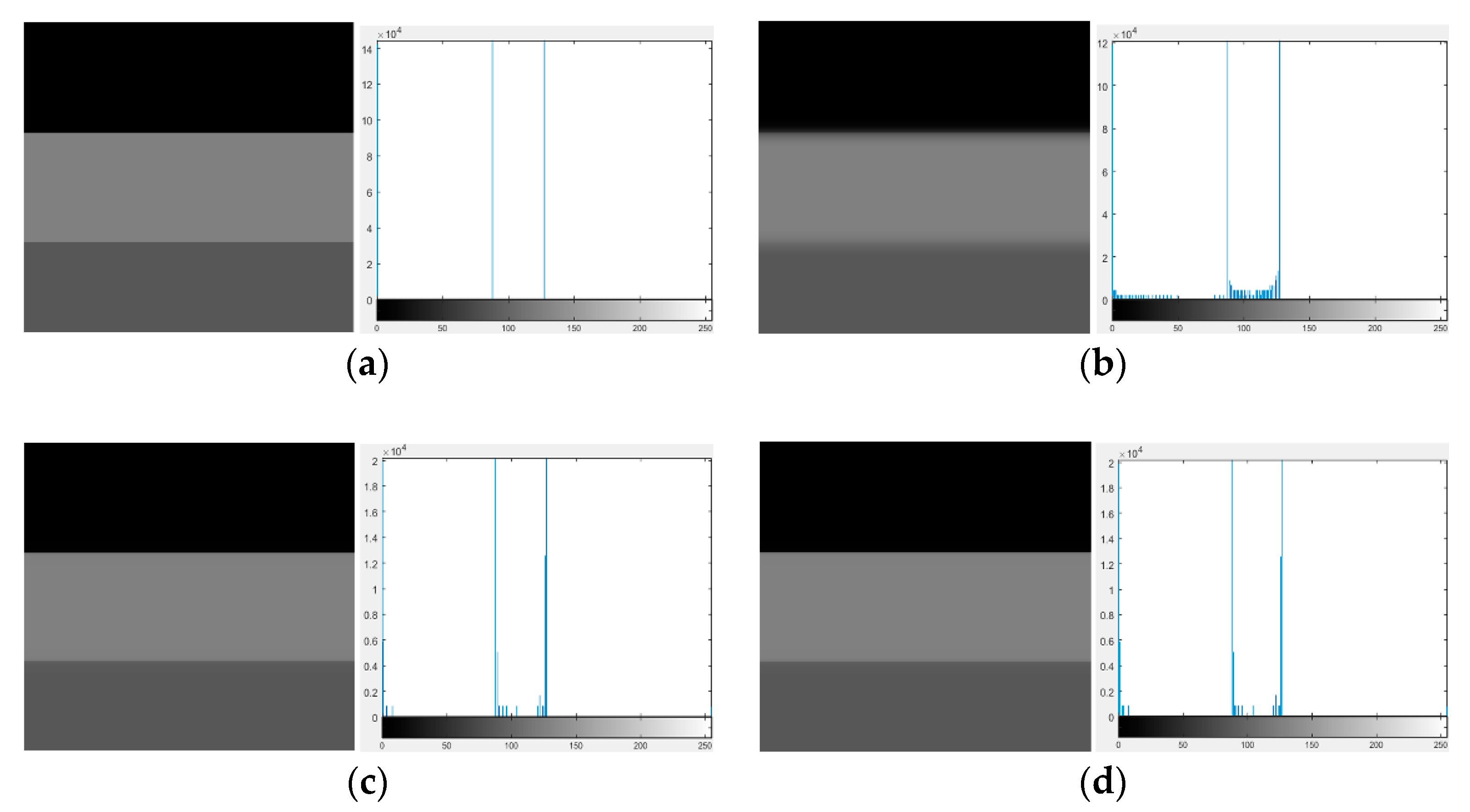

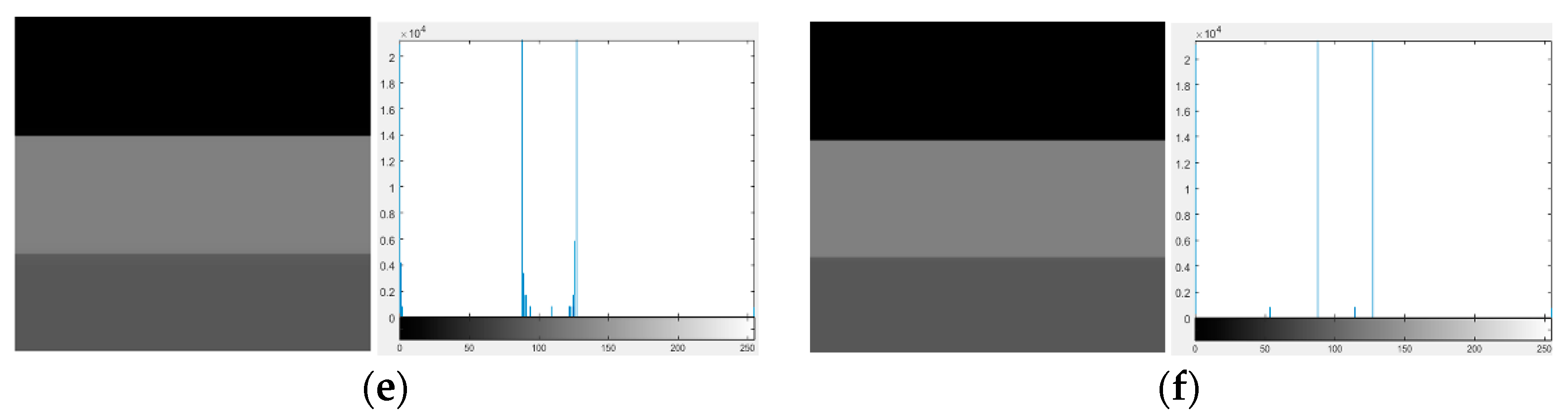

2.1. Guided Filtering

2.2. Weighted Guided Filtering

2.3. Detail Enhancement of Endoscopic Images

2.4. Endoscopic Image Contrast Enhancement

- (1) Input the next training image, where the image size is 316 × 258. If the image index exceeds the number of training images, end the training and skip to S8; otherwise, execute S2.

- (2) Select the vessel and background regions of the endoscopic image.

- (3) Enter the next group of parameters. If the group index exceeds the number of parameter groups, skip to S7; otherwise, go to S4.

- (4) Perform a stretch on each of the three channels with the current group of parameters.

- (5) Both the original training image and the processed image are in RGB space; convert both to CIE space.

- (6) Calculate the distance between the blood vessel and the background in the original image and the same distance in the processed image; save the ratio of the two distances in an array and go back to S3.

- (7) According to the maximization objective of Formula (12), obtain the set of optimal parameters for the current image and save it; finally, go back to S2.

- (8) Take the average of the saved per-image optimal parameters to obtain the final set of optimal parameters; finally, end the training.
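The training loop in S1 to S8 can be sketched as a small grid search. This is only an illustration under stated assumptions, not the authors' implementation: the stretch formula and the CIE conversion are not reproduced here, so `stretch` is a hypothetical linear stretch with two parameters `p` and `q`, the color distance is taken directly in RGB rather than in CIE space, and every function name is made up for this example.

```python
from itertools import product
from math import dist
from statistics import mean


def stretch(pixel, p, q):
    """Hypothetical linear stretch of each channel, clamped to [0, 255]."""
    return tuple(min(255.0, max(0.0, p * v + q)) for v in pixel)


def mean_color(pixels):
    """Average colour of a region given as a list of (R, G, B) tuples."""
    return tuple(mean(channel) for channel in zip(*pixels))


def contrast(vessel, background):
    """Euclidean distance between the mean vessel and background colours.

    Assumption: the paper measures this in CIE space; RGB is used here.
    """
    return dist(mean_color(vessel), mean_color(background))


def best_parameters(vessel, background, grid):
    """S3 to S7: keep the parameter group with the largest contrast ratio."""
    base = contrast(vessel, background)
    if base == 0:
        raise ValueError("vessel and background regions have the same colour")

    def ratio(params):
        p, q = params
        stretched_vessel = [stretch(px, p, q) for px in vessel]
        stretched_background = [stretch(px, p, q) for px in background]
        return contrast(stretched_vessel, stretched_background) / base

    return max(grid, key=ratio)


def train(images, grid):
    """S1 to S8: average the per-image optima over all training images."""
    optima = [best_parameters(v, b, grid) for v, b in images]
    return tuple(mean(values) for values in zip(*optima))


if __name__ == "__main__":
    # Toy data: each image is a (vessel pixels, background pixels) pair.
    demo_images = [
        ([(120, 40, 40), (130, 50, 45)], [(200, 120, 110), (210, 130, 120)]),
    ]
    demo_grid = list(product((0.8, 1.0, 1.2), (-10.0, 0.0, 10.0)))
    print(train(demo_images, demo_grid))
```

Averaging the per-image optima, as in S8, keeps a single outlier image from dictating the final parameters; a real implementation would replace `stretch` and `contrast` with the paper's own formulas.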

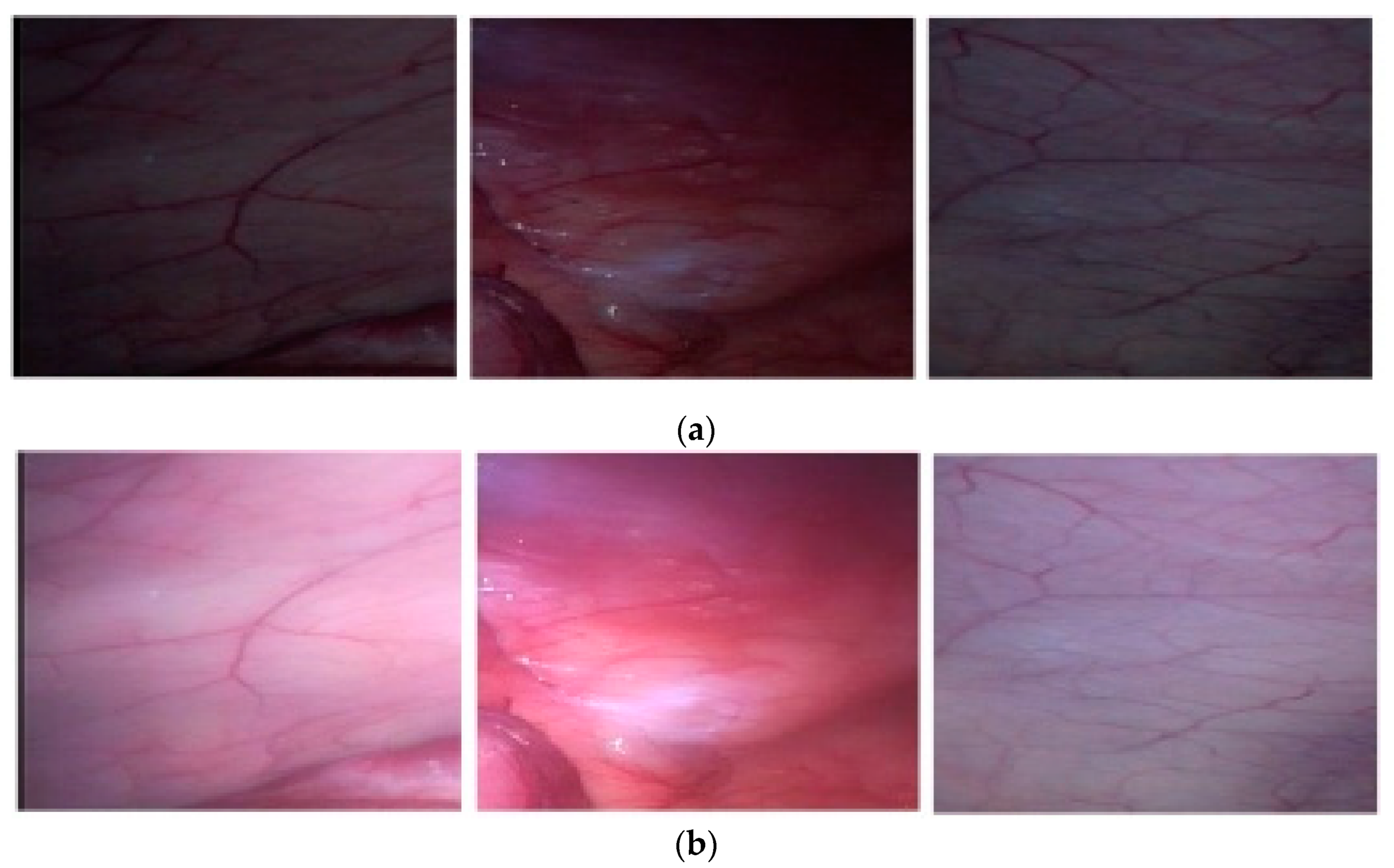

2.5. Brightness Enhancement of Endoscopic Images

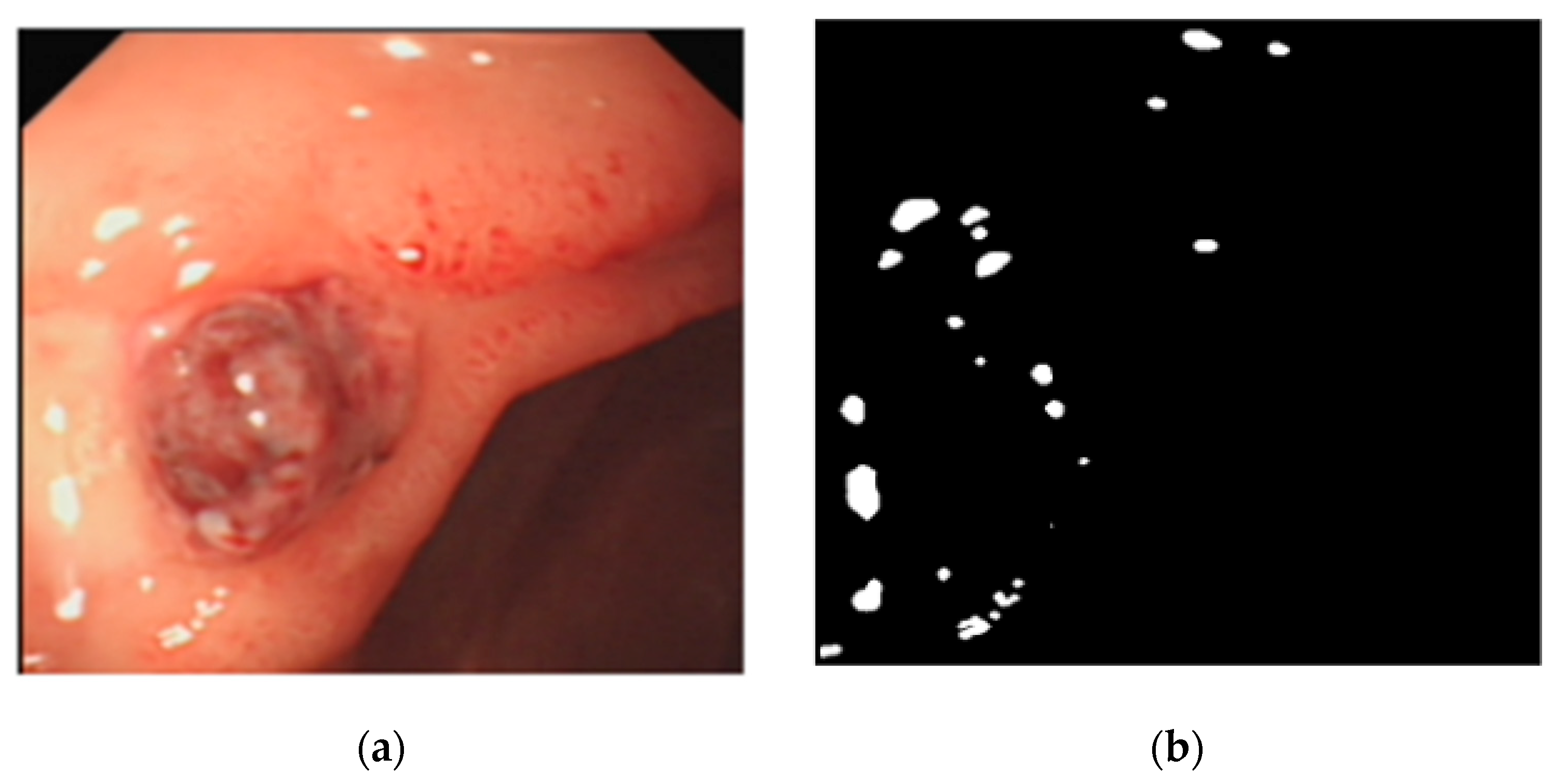

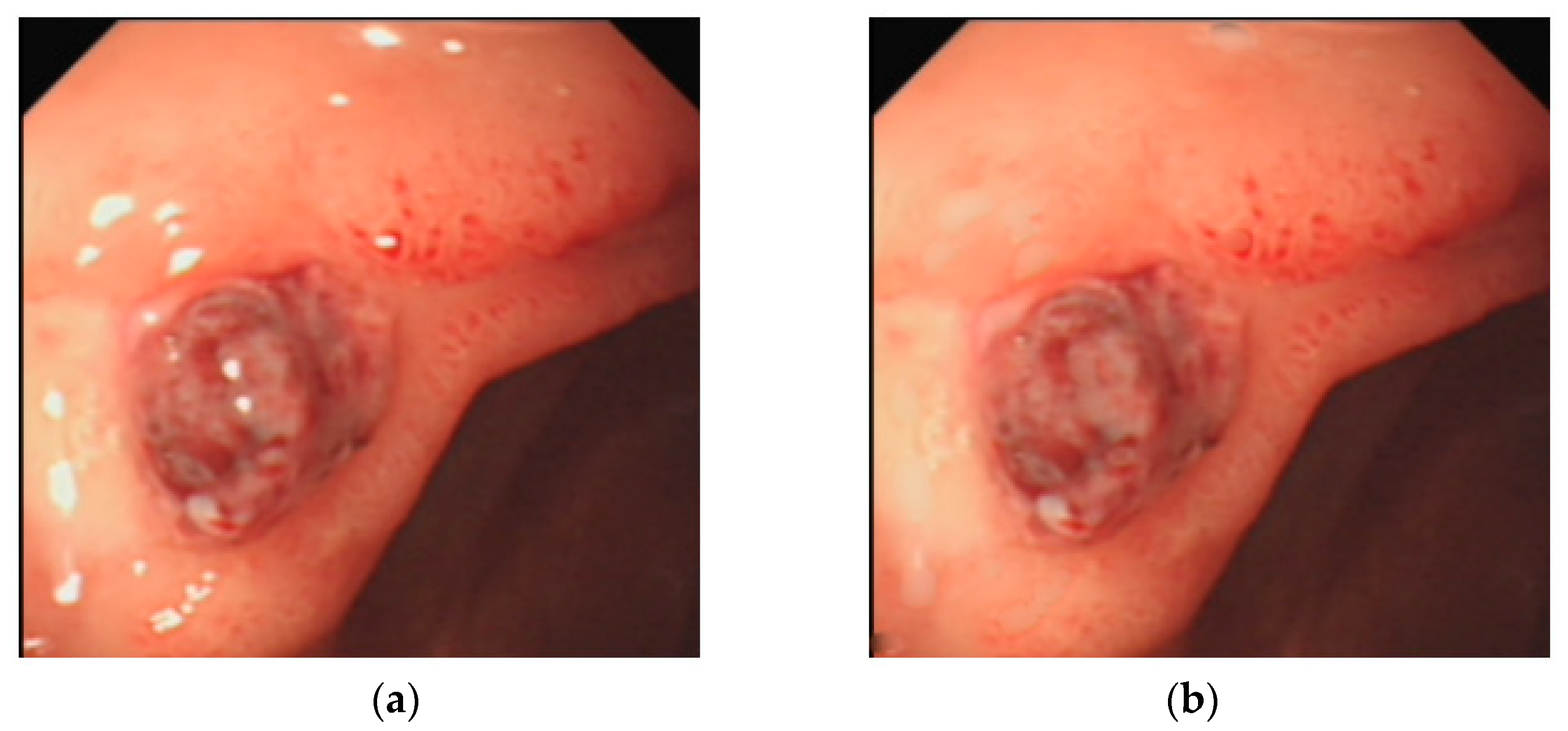

2.6. Removal of Highlights from Endoscopic Images

2.7. Specific Steps of the Endoscope IE Algorithm

- (1) Split the original endoscope image into its R, G, and B channels.

- (2) Obtain the base layer image of each of the three channels using the quadratic improved WGF algorithm.

- (3) Subtract the corresponding base layer image from each of the R, G, and B channels to obtain the detail layer images of the three channels.

- (4) Multiply the detail layer images of the three channels by the coefficient α to obtain the enhanced detail layer images.

- (5) Add the enhanced detail layer images to the corresponding base layer images of the three channels. Finally, merge the three channels to obtain the enhanced endoscope image.
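The five steps above follow the usual base/detail decomposition, which can be sketched as follows. This is a minimal illustration under assumptions: a plain box mean filter stands in for the improved WGF (it is not the paper's filter), the clamp to [0, 255] is added here for safety, and the function names and the default `alpha` are hypothetical.

```python
def box_mean(channel, radius=1):
    """Stand-in smoother (plain box filter), NOT the paper's improved WGF."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                channel[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = sum(window) / len(window)
    return out


def enhance_channel(channel, alpha, smooth=box_mean):
    """Steps (2) to (5) for one channel: base + alpha * (channel - base)."""
    base = smooth(channel)
    return [
        [min(255.0, max(0.0, b + alpha * (c - b))) for c, b in zip(crow, brow)]
        for crow, brow in zip(channel, base)
    ]


def enhance_image(image, alpha=2.0):
    """image: rows of (R, G, B) tuples; returns rows of enhanced tuples."""
    # Step (1): split into R, G, and B channels.
    channels = [[[px[k] for px in row] for row in image] for k in range(3)]
    r, g, b = (enhance_channel(ch, alpha) for ch in channels)
    # Final part of step (5): merge the three channels again.
    return [list(zip(rr, gr, br)) for rr, gr, br in zip(r, g, b)]


if __name__ == "__main__":
    # A 3 x 3 grey image with one brighter centre pixel.
    demo = [[(50, 50, 50)] * 3 for _ in range(3)]
    demo[1][1] = (100, 100, 100)
    print(enhance_image(demo)[1][1])
```

With `alpha` greater than 1 the detail layer is amplified, so local deviations from the smoothed base grow while flat regions are left unchanged; passing a different `smooth` callable is where an edge-preserving filter would plug in.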

2.8. Evaluation Method

- (1) Compute the local variance of each pixel in the enhanced image over its 5 × 5 neighborhood and build a histogram of these values. The given threshold Tv is 5. Pixels whose local variance exceeds this threshold belong to the detailed areas; all other pixels belong to the background areas.

- (2) The DV-BV value is estimated as DV/BV. A larger value indicates a greater degree of image detail enhancement.
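The DV-BV computation can be sketched as below. This is a hedged illustration only: it assumes that pixels whose local variance exceeds Tv count as detail, that DV and BV are the mean local variances of the detail and background areas, and that windows are simply truncated at the image border. The function names are hypothetical and the input is a single-channel image given as a list of rows.

```python
from statistics import mean, pvariance


def local_variance(gray, radius=2):
    """Population variance of each pixel's (2*radius+1)^2 neighbourhood."""
    h, w = len(gray), len(gray[0])
    return [
        [
            pvariance([
                gray[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ])
            for x in range(w)
        ]
        for y in range(h)
    ]


def dv_bv(gray, tv=5.0):
    """Ratio of mean detail variance (DV) to mean background variance (BV).

    Returns None when the ratio is undefined (no detail pixels, no
    background pixels, or a background variance of zero).
    """
    variances = [v for row in local_variance(gray) for v in row]
    detail = [v for v in variances if v > tv]
    background = [v for v in variances if v <= tv]
    if not detail or not background or mean(background) == 0:
        return None
    return mean(detail) / mean(background)


if __name__ == "__main__":
    # Left half: faint checkerboard (background); right half: strong stripes.
    demo = [
        [(100 + (x + y) % 2) if x < 6 else (100 if x % 2 else 0)
         for x in range(12)]
        for y in range(12)
    ]
    print(dv_bv(demo))
```

Comparing `dv_bv` before and after enhancement gives the kind of original-versus-proposed comparison reported in the evaluation tables.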

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image Number | GIF | WGIF | GDGIF | EGIF | Proposed |
|---|---|---|---|---|---|
| (1) | 20.9569 | 24.5021 | 24.7910 | 26.6537 | 29.4090 |
| (2) | 19.7178 | 23.6563 | 24.0289 | 25.1088 | 28.4882 |
| (3) | 20.0447 | 23.3038 | 23.3767 | 24.6815 | 28.0150 |
| (4) | 29.5328 | 31.2070 | 31.8931 | 33.8716 | 37.5925 |
| (5) | 21.3806 | 24.7216 | 25.1418 | 29.3361 | 29.9575 |
| (6) | 33.3530 | 36.4773 | 37.1277 | 32.8452 | 42.1757 |
| Average | 24.1643 | 27.3114 | 27.7265 | 28.7495 | 32.6063 |

| Image Number | GIF | WGIF | GDGIF | EGIF | Proposed |
|---|---|---|---|---|---|
| (1) | 0.7531 | 0.8373 | 0.8444 | 0.9156 | 0.9212 |
| (2) | 0.7341 | 0.8368 | 0.8458 | 0.8919 | 0.9214 |
| (3) | 0.7241 | 0.8105 | 0.8124 | 0.8808 | 0.9071 |
| (4) | 0.8778 | 0.9026 | 0.9138 | 0.9417 | 0.9681 |
| (5) | 0.7024 | 0.7771 | 0.7904 | 0.9225 | 0.9304 |
| (6) | 0.9478 | 0.9644 | 0.9686 | 0.9450 | 0.9868 |
| Average | 0.7899 | 0.8548 | 0.8626 | 0.9163 | 0.9391 |

| Image Number | Original Value | Proposed |
|---|---|---|
| (1) | 17.1937 | 26.9770 |
| (2) | 22.8585 | 45.6375 |
| (3) | 32.8440 | 68.8868 |
| (4) | 7.8341 | 10.0505 |
| (5) | 12.5660 | 24.4680 |
| (6) | 9.5032 | 17.3654 |
| Average | 17.1333 | 32.2309 |

| Score | Enhanced Image Features |
|---|---|
| 1 | Some areas of the image are severely distorted |
| 2 | Mild image distortion |
| 3 | Image distortion is hard to spot |
| 4 | The visual effect of the image is good |
| 5 | The image is very clear |

| Algorithm | Edge Sharpening | Clarity | Invariance | Acceptability |
|---|---|---|---|---|
| GIF | 3.2 ± 0.16 | 3.5 ± 0.21 | 0.2 ± 0.25 | 1.4 ± 0.24 |
| WGIF | 3.8 ± 0.24 | 3.6 ± 0.21 | 0.5 ± 0.16 | 2.7 ± 0.41 |
| GDGIF | 4.2 ± 0.16 | 3.8 ± 0.16 | 0.5 ± 0.25 | 3.8 ± 0.21 |
| EGIF | 4.0 ± 0.40 | 4.0 ± 0.29 | 0.6 ± 0.24 | 3.9 ± 0.29 |
| Proposed | 4.1 ± 0.29 | 4.1 ± 0.24 | 0.7 ± 0.24 | 4.2 ± 0.16 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, G.; Lin, J.; Cao, E.; Pang, Y.; Sun, W. A Medical Endoscope Image Enhancement Method Based on Improved Weighted Guided Filtering. Mathematics 2022, 10, 1423. https://doi.org/10.3390/math10091423