Abstract

The analysis of time series in 4D commutative hypercomplex algebras is introduced. Firstly, generalized Segre’s quaternion (GSQ) random variables and signals are studied. Then, two concepts of properness are suggested, and statistical tests to check whether a GSQ random vector is proper are proposed. Further, a method is given to determine the specific hypercomplex algebra in which the properness properties are most likely to hold, if at all. Next, both the linear estimation and prediction problems are studied in the GSQ domain. Finally, ARMA modeling and forecasting for proper GSQ time series are tackled. Experimental results show the superiority of the proposed approach over its counterpart in the Hamilton quaternion domain.

Keywords:

ARMA models; Eα0-properness; generalized Segre’s quaternions; Hα0-properness; prediction; proper signal processing

MSC:

37M10

1. Introduction

Time series analysis is a well-established statistical methodology for the study of stochastic processes that has found widespread applications in signal processing, communications engineering, economics, astronomy, meteorology, seismology, and oceanography, among other fields [1]. The most extensively used class of stationary process models is that of the so-called autoregressive moving average (ARMA) processes. The relevance of ARMA models stems from their ability to approximate any stationary process arbitrarily well [2,3]. In addition, ARMA models can be cast in a state-space framework and are of great significance in prediction problems, since they facilitate the derivation of several efficient prediction algorithms [3].

The traditional settings for developing time series analysis have been the real and complex numbers, although other number systems have shown a high potential to outperform them in some applications. The most paradigmatic example is the system of Hamilton quaternions (HQs), which has proven to be of great value for the description of 3-dimensional (3D) movements in fields such as graphic design and automated control [4,5]. In fact, HQs have become the most popular and most widely researched system of hypercomplex numbers [6]. In spite of its relevance, this system has seldom been used in time series analysis [7].

HQs belong to a more general family of algebras, named hypercomplex systems, which extend the real and complex numbers to higher dimensions. Among them, 4D hypercomplex systems are of special relevance since four coordinates are usually considered for describing the physical world [6]. Further, depending on how the product is defined, they can be classified into two types: noncommutative and commutative systems. HQs are of the first type and, moreover, form a normed division algebra; this property makes them well suited to deal with signal processing benchmark problems, such as the signal estimation problem [8]. Although commutative hypercomplex numbers, which include the generalized Segre’s quaternions (GSQs), are less well known, interest in them is increasing within the signal processing community owing to their ability to extend the methodologies developed in the real and complex fields to the 4D case [9,10,11,12,13,14,15,16,17,18,19,20,21]. These commutative hypercomplex algebras possess zero divisors, which implies that the Euclidean norm is not multiplicative [13]. This drawback, however, has essentially no impact on signal and image processing applications [10].

Interestingly, a recent line of research has discovered that the HQ algebra is not always the best option for processing 4D hypercomplex signals [22,23,24,25]. Specifically, some investigations have revealed that properness, a structural property of hypercomplex signals, determines the performance of the processing used [24,25]. In all of these papers, the analyses have been performed by comparing HQs with tessarines, and several scenarios where the latter outperform the former have been shown. It should be noted that tessarines are a particular case of GSQs obtained by fixing a specific product. In general, a hypercomplex algebra is characterized by its product, which is usually specified by a multiplication table (Appendix A in [6]). From an intuitive point of view, it is to be expected that commutative hypercomplex algebras more general than tessarines can attain better performance. On that basis, special attention is paid to such systems in this paper.

As indicated above, properness is a crucial property of hypercomplex signals. This property has two important effects: it determines which type of processing should be used, and it yields a significant reduction in computational complexity compared with real-valued processing. Properness has been investigated in detail in the HQ domain [26,27,28,29,30] and for tessarines [24,25]. To our knowledge, however, properness has not yet been studied for GSQs.

Motivated by the above discussion, the main objective of this paper is to present time series analysis in the GSQ domain while analyzing the properness properties in this setting. Specifically, the concept of properness is introduced for GSQ signals and its properties are studied. Remarkably, two tests to check experimentally whether real data are proper in the GSQ domain are proposed, and an estimate of the parameter characterizing the product in the hypercomplex algebra is provided. Such an estimate gives the best value of the parameter under which the properness properties could be fulfilled by the signal of interest. It should be highlighted that, unlike in existing approaches, a specific hypercomplex algebra is not fixed in advance in the proposed strategy; instead, a broad family of commutative hypercomplex algebras is considered. The suggested estimate thus allows us to select the particular algebra in which the properness properties are most likely to hold, if at all. A simulation example illustrates the effectiveness of this approach.

The linear estimation problem is also tackled. Since GSQs do not form a normed algebra, the classical projection theorem cannot be applied to obtain the optimum linear estimate. Instead, we exploit their metric space structure to prove the existence and uniqueness of the projection, and an extension of the innovations algorithm for proper GSQ signals is devised. As could be expected, the proposed algorithms attain a notable reduction in the computational burden. Finally, ARMA modeling for proper GSQ time series is introduced. To this end, we first investigate an extension of the Yule–Walker equations and then address the prediction problem. The particular structure of the ARMA models allows us to improve the innovations algorithm, giving rise to a more efficient prediction method. The steady-state properties of the suggested predictor are also studied. To conclude, the superiority in terms of performance of our proposal in comparison with its counterpart in the HQ domain is numerically analyzed.

The rest of this paper is organized as follows. Section 2 presents both the GSQ random variables and signals. Then, Section 3 introduces the concepts of properness in the GSQ domain and provides the statistical inference methods for testing properness as well as for estimating the associated parameter that characterizes the product in the hypercomplex algebra. This section ends with a simulation example. The linear estimation problem for GSQ random variables and the prediction problem for GSQ random signals are analyzed in Section 4. Section 5 focuses on the analysis of time series in the GSQ setting and deals with the problems of modeling and forecasting time series data. A final example is included to show the efficiency of the proposed strategy. To improve the readability of the manuscript, all proofs have been deferred to Appendices B to F.

Notation

We designate matrices with boldface uppercase letters, column vectors with lowercase letters, and scalar quantities with lightface lowercase letters. Moreover, the symbols , , , and represent the set of integer, real, complex, and GSQ numbers, respectively. In this regard, (respectively, ) indicates that is a real (respectively, a GSQ) matrix of dimension . Similarly (respectively, ) means that is a p-dimensional real (respectively, a GSQ) vector. In particular, represents the zero matrix of dimension , and is the identity matrix of dimension p. In addition, denotes the element of the matrix and stands for a diagonal (or block diagonal) matrix with entries (or block entries) specified in the operator argument.

Furthermore, superscript “” represents the transpose. “⊗” stands for the Kronecker product. represents the expectation operator. denotes the real part of a GSQ, and is either the complex modulus if or the absolute value if . Finally, denotes the Kronecker delta function.

Unless otherwise stated, all random variables are assumed to have a zero-mean throughout this paper.

A list of symbols that are used in the paper is given in Table 1.

Table 1.

List of symbols.

2. Generalized Segre’s Quaternion Random Variables

This section establishes the basic concepts and properties of interest in the GSQ domain. For that, we first introduce the GSQ random variables and then the GSQ random signals are considered.

A random variable can be defined as where are random variables and the imaginary units satisfy the following multiplication rules:

where is a nonzero real number.

As is well-known, the form in which the product is defined is an essential feature of hypercomplex numbers. The product defined in (1) is commutative, associative, and distributive over addition; however, these algebras have divisors of zero. In contrast to GSQs, the product in the HQs is defined in such a way that the algebra becomes noncommutative and does not have divisors of zero [6]. Further, two special algebras in GSQs are of interest: for , is called the hyperbolic quaternions system and for , is the elliptic quaternions system [6]. In particular, if then, is named the tessarine numbers.
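The algebraic properties just listed can be checked numerically. The following is a minimal sketch, not the authors' code. Because the multiplication rules (1) are not legible in this copy, it assumes the table commonly given for generalized Segre's quaternions in [6], namely i² = k² = α, j² = 1, ij = k, jk = i, ik = αj; the function names `gsq_mul` and `sq_norm` are hypothetical.

```python
def gsq_mul(x, y, alpha):
    """Product of two GSQs given as 4-tuples (a, b, c, d) ~ a + b*i + c*j + d*k.

    ASSUMED multiplication table (not recoverable from this copy of the text):
    i^2 = k^2 = alpha, j^2 = 1, ij = ji = k, jk = kj = i, ik = ki = alpha*j.
    """
    a1, b1, c1, d1 = x
    a2, b2, c2, d2 = y
    return (
        a1 * a2 + alpha * b1 * b2 + c1 * c2 + alpha * d1 * d2,  # real part
        a1 * b2 + b1 * a2 + c1 * d2 + d1 * c2,                  # i component
        a1 * c2 + c1 * a2 + alpha * (b1 * d2 + d1 * b2),        # j component
        a1 * d2 + d1 * a2 + b1 * c2 + c1 * b2,                  # k component
    )


def sq_norm(x):
    """Squared Euclidean norm of the real vector of a GSQ."""
    return sum(c * c for c in x)


alpha = 2  # any nonzero real value; alpha = -1 would give the tessarines
x, y, z = (1, 2, 3, 4), (5, 6, 7, 8), (-1, 0, 2, 1)

commutative = gsq_mul(x, y, alpha) == gsq_mul(y, x, alpha)
associative = (gsq_mul(gsq_mul(x, y, alpha), z, alpha)
               == gsq_mul(x, gsq_mul(y, z, alpha), alpha))

# 1 + j and 1 - j are nonzero, yet their product vanishes because j^2 = 1.
u, v = (1, 0, 1, 0), (1, 0, -1, 0)
zero_product = gsq_mul(u, v, alpha)

print(commutative, associative, zero_product)
print(sq_norm(u) * sq_norm(v), sq_norm(zero_product))
```

Under the assumed table, the pair 1 + j and 1 − j is a zero divisor pair for every α, which also shows why the Euclidean norm cannot be multiplicative: the product of the two squared norms is 4, while the squared norm of the product is 0.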

The real vector of is defined by . Moreover, we define the following auxiliary GSQ random variables:

The triplet are the so-called principal conjugations and obey the following properties.

Property 1.

Let be random variables, then

Consider the vector random variables and . We define the following functions:

where , , and ; however, for reasons that will become clear, they are denoted by , , and . Likewise, we denote , .

Now, we extend some of the above concepts to the case of random signals. Consider a random signal vector , , where , , with , , , and real random signals. The real vector associated with is denoted by

where , , , and .

In a similar way to (2), we define the following functions for the random signal vectors and :

It is easy to check, for , that

We define the augmented vector of as

with , for . The following relationship between the augmented vector and the real vector given in (3) can be established

where with

Moreover, from Property 1, the next result can be stated.

Property 2.

for , and . The functions , , and , , are given in Table A1 (see Appendix A) in terms of the correlation functions (denoted by ) of the components of the real vector (3).

3. Properness Conditions

This section is devoted to analyzing the concepts of properness for GSQ random signals. After introducing the notion of properness, a characterization of this property based on the correlations of the real vectors is presented.

Definition 1.

A random signal vector is said to be -proper if, and only if, there exists a value such that the functions , , vanish .

In a similar manner, two random signal vectors and are cross -proper if, and only if, there exists a value such that the functions , , vanish . Finally, and are jointly -proper if, and only if, are -proper and cross -proper.

A similar definition of -properness can be given by replacing by , by , and by .

Remark 1.

The -properness condition introduced in [24] is a particular case of -properness for .

From Table A1, we have the following characterization result.

Proposition 1.

Let be a random signal vector. Then

- is -proper if, and only if, the correlation of its real vector verifies with .

- is -proper if, and only if, the correlation of its real vector verifies with .

From an experimental standpoint, it becomes necessary to develop a method for checking whether a random vector is proper and to devise an estimate of the associated . Specifically, based on the information supplied by a random sample, we aim to test if is -proper (or -proper) and we wish to estimate the suitable value of under which such properness properties could be satisfied by , if possible. To this end, we consider the following hypothesis test:

The following result derives two generalized likelihood ratio tests (GLRTs), based on Wilks’ statistics, for problem (8). These statistics are denoted by . The value assigned to the letter “” as subscript, superscript or in any other role indicates whether -properness () or -properness () should be assumed. This convention is applied henceforth. Notice that these tests generalize the one given in ([24], Theorem 1) to the GSQ domain. The main difference between them lies in the choice of the appropriate to achieve the properness conditions.

Theorem 1.

Given n independent and identically distributed random samples of a random vector such that follows a Gaussian distribution, the GLRT statistics for (8) are given by

where denotes the determinant under the product associated to ,

is the sample autocorrelation matrix and

The parameter can be estimated as

with .

Further, assuming that is true, the distributions of the statistics and tend to a chi-squared distribution with degrees of freedom equal to and , respectively, as the sample size tends to infinity.

Example

The following two cases are studied:

- Case 1: and in a hyperbolic quaternions algebra with . From Proposition 1, we have that the vector is -proper under these conditions. Moreover, from Theorem 1, the GLRT statistic converges to a .

- Case 2: in an elliptic quaternions algebra with . It follows that is -proper. Further, converges to a .

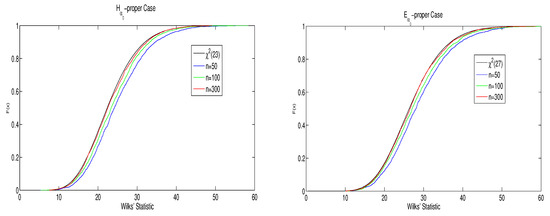

On the one hand, the behavior of the cumulative distribution functions (CDFs) of , , is analyzed in both cases in terms of the sample size. To this end, 2000 values of the statistics , , have been generated through Monte Carlo simulations for . The corresponding empirical CDFs are displayed in Figure 1. At a glance, this figure shows the rapid convergence of the empirical CDFs to the target chi-squared distributions.

Figure 1.

Wilks’ statistic for case 1 (left) and case 2 (right).
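The Monte Carlo check described above can be illustrated with a much simpler stand-in. The sketch below is not the GSQ test of Theorem 1, whose statistic is not legible in this copy. It assumes the textbook real-valued GLRT of whether two zero-mean Gaussian variables are uncorrelated, for which Wilks' statistic is −n ln(1 − r²) and the limiting law is chi-squared with one degree of freedom. All function names are hypothetical, and the sample sizes are illustrative choices.

```python
import math
import random


def wilks_uncorrelated(xs, ys):
    """GLRT (Wilks) statistic -n*ln(1 - r^2) for H0: x and y are uncorrelated.

    Assumes zero-mean real Gaussian data, so sample moments are not centered.
    """
    n = len(xs)
    sxx = sum(x * x for x in xs)
    syy = sum(y * y for y in ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    r2 = sxy * sxy / (sxx * syy)
    return -n * math.log(1.0 - r2)


def simulate(n, runs, rng):
    """Draw `runs` values of the statistic under H0 for sample size n."""
    stats = []
    for _ in range(runs):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        ys = [rng.gauss(0.0, 1.0) for _ in range(n)]
        stats.append(wilks_uncorrelated(xs, ys))
    return stats


def chi2_1_cdf(x):
    """CDF of a chi-squared variable with 1 degree of freedom."""
    return math.erf(math.sqrt(x / 2.0))


def max_cdf_gap(stats):
    """Largest gap between the empirical CDF and the chi-squared(1) CDF."""
    s = sorted(stats)
    m = len(s)
    return max(
        max(abs((i + 1) / m - chi2_1_cdf(v)), abs(i / m - chi2_1_cdf(v)))
        for i, v in enumerate(s)
    )


rng = random.Random(0)  # fixed seed so the experiment is repeatable
gaps = {n: max_cdf_gap(simulate(n, 2000, rng)) for n in (10, 50, 200)}
for n, gap in gaps.items():
    print(n, round(gap, 3))
```

The printed gap plays the role of the visual comparison in Figure 1: a small value for moderate n suggests that the asymptotic chi-squared law is already a reasonable reference for setting the test threshold.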

On the other hand, we assess the accuracy of the estimates of as a function of the sample size n. The maximization in (9) can be carried out with the optimization toolbox in MATLAB (the Nelder–Mead search algorithm is used). Tables 2 and 3 report the 95% confidence intervals for obtained from 2000 simulated runs. As expected, the confidence intervals become narrower as the sample size grows.

Table 2.

The 95% confidence intervals for in case 1.

Table 3.

The 95% confidence intervals for in case 2.

4. Linear Estimation and Prediction

The objective of this section is to solve the linear estimation problem for proper GSQ random variables. Since it is not possible to define a norm in the set of GSQs, we cannot apply the projection theorem to obtain the optimum linear estimator. We therefore first define a suitable metric and then prove the existence and uniqueness of the projection.

Given the random variables , we define the product

where is the scalar version of given in (2).

For any random variables and deterministic coefficients , , it follows that

- .

- .

Thus, we can define a distance as

where .

Consider the set of random vectors , with , , and denote by the linear span of , i.e., the set of all finite linear combinations of elements of . The issue of existence and uniqueness of the projection of a given random variable onto the set is now briefly investigated. This projection is denoted by .

In a metric space, neither the existence nor the uniqueness of is assured; however, following reasoning similar to that in [24], the existence and uniqueness of can be proved. Further, is the projection of y onto the set if, and only if, , . As a consequence, if is a basis of , then

where the deterministic coefficients are obtained from the system

Now, we are prepared to introduce the notion of linear estimator in the GSQ domain.

Definition 2.

The projection of y onto the set , , is called the estimator of y with respect to and, from now on, it is denoted by . If the information set is formed by augmented vectors defined in (5), i.e., if we consider with , , then the projection of y onto the set is called the SWL estimator of y with respect to and it is denoted by .

These concepts are easily extended to the vectorial case. For example, the estimator of the random vector with respect to is , where is the projection of the jth component of onto the set .

Theorem 2.

Consider a random variable and the set of random vectors , with , . Then,

- The SWL estimator of y with respect to is obtained as where the deterministic vectors are computed through the equation with . Moreover, this estimator and its associated error are independent of the value of α, i.e., and , , where .

- The estimator of y with respect to is calculated as where the deterministic vectors are computed through the equation with

- .

- If are jointly -proper (respectively, -proper) and y is cross -proper (respectively, -proper) with each element of then, .

Remark 2.

Theorem 2 shows that the SWL estimator is the same whichever hypercomplex algebra is chosen. Likewise, the computational burden of the SWL estimator can be notably reduced whenever the hyperbolic or elliptic properness conditions are fulfilled (compare (13) with (14)). Indeed, while the computational complexity of SWL estimators is of order , it is of order under hyperbolic or elliptic properness. More importantly, this reduction in computational complexity cannot be achieved in the real numbers domain.

Now, we are concerned with the prediction problem for random signals. Consider a random signal vector . Our aim is to obtain the one-stage predictor of from the information contained in the set , denoted by . Analogously, the SWL one-stage predictor is denoted by .

Note that a result similar to Theorem 2 can be stated for random signals, but we do not include it here for the sake of brevity. It should be remarked, however, that a computational problem still remains unsolved. Although the estimation problem is indeed simplified due to the reduction in dimension implied by the properness conditions, we still have to cope with the inverse in (14) as the number of observations t grows. The following result overcomes this problem for proper signals.

Theorem 3

(The innovations algorithm). Consider a -proper (respectively, -proper) random signal vector . If is nonsingular for then, and can be recursively obtained in the following way:

with and where

for , with and

with the initial condition . Moreover, the error associated to the component is given by
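For orientation, the recursion in Theorem 3 extends the classical innovations algorithm for real-valued scalar time series described in [3]. The sketch below implements only that classical scalar version; it is not the GSQ algorithm of (16) to (19), whose matrix expressions are not legible in this copy. The function names and the MA(1) test case with coefficient 0.5 and unit noise variance are illustrative assumptions.

```python
def innovations(kappa, n_max):
    """Classical scalar innovations algorithm (real-valued case).

    kappa(i, j) returns E[X_i X_j] with 1-based time indices.
    Returns (theta, v): theta[n][j] is the coefficient theta_{n,j}, j = 1..n,
    and v[n] is the mean squared error of the one-step predictor of X_{n+1}.
    """
    v = [kappa(1, 1)]
    theta = [[0.0]]  # theta[0] is an unused placeholder
    for n in range(1, n_max + 1):
        row = [0.0] * (n + 1)  # row[j] holds theta_{n,j}
        for k in range(n):
            s = sum(theta[k][k - j] * row[n - j] * v[j] for j in range(k))
            row[n - k] = (kappa(n + 1, k + 1) - s) / v[k]
        v_n = kappa(n + 1, n + 1) - sum(row[n - j] ** 2 * v[j] for j in range(n))
        theta.append(row)
        v.append(v_n)
    return theta, v


def one_step_predictions(x, theta):
    """Predictors xhat[n] of x[n] built from the innovations x[m] - xhat[m], m < n."""
    xhat = [0.0]
    for n in range(1, len(x)):
        xhat.append(sum(theta[n][j] * (x[n - j] - xhat[n - j])
                        for j in range(1, n + 1)))
    return xhat


def ma1_kappa(theta1, sigma2=1.0):
    """Autocovariance of the MA(1) process X_t = W_t + theta1 * W_{t-1}."""
    def kappa(i, j):
        h = abs(i - j)
        if h == 0:
            return sigma2 * (1.0 + theta1 ** 2)
        if h == 1:
            return sigma2 * theta1
        return 0.0
    return kappa


theta, v = innovations(ma1_kappa(0.5), 20)
print(v[0], v[1], v[20])           # prediction error variances
print(theta[20][1], theta[20][2])  # lag-1 and lag-2 coefficients
```

In this scalar example the error variance decreases toward the noise variance and only the lag-1 coefficient is nonzero. The key point is that no covariance matrix of growing size is ever inverted, which is the computational issue the innovations approach is meant to avoid.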

5. Proper ARMA Models

This section constitutes the core of the paper. Starting from the definition of proper ARMA models in the GSQ domain, we solve the one-stage prediction problem.

5.1. ARMA Modeling

Next, we adapt the proper ARMA signal concept to the GSQ domain and extend the Yule–Walker equations to this kind of signal. To do so, we first extend the concept of stationarity to the GSQ domain in the proper signal scenario.

Definition 3.

A -proper random signal vector is said to be stationary if , , i.e., only depends on the lag h for a given . In this case, we denote . The concept of a -proper stationary signal is defined analogously by replacing by .

Definition 4.

A random signal vector is said to be a -proper ARMA signal of orders p and q (ARMA()) if it is -proper, stationary, and can be modeled by the following system:

where , are deterministic matrices and is a -proper white noise verifying

with a deterministic matrix.

The concept of a -proper ARMA() signal is defined analogously by replacing -proper by -proper, and by in (21).

The following result extends the Yule–Walker equations to the case of proper ARMA signals.

Proposition 2.

Let be a -proper (or -proper) ARMA() signal. Consider the matrix-valued polynomial with , . If , such that then, , , obeys the equation

where the matrices are determined by

with , and , .
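As a point of reference, the real-valued scalar counterpart of this result is the classical pair formed by the ψ-weight recursion and the Yule–Walker-type equations in [3]. The sketch below is restricted to that scalar case and is not the block-matrix recursion of Proposition 2, which is not legible in this copy. It assumes the sign convention x_t − Σ φ_k x_{t−k} = w_t + Σ θ_k w_{t−k}, a causal model, and unit noise variance; the function names and the ARMA(1,1) coefficients 0.5 and 0.4 are hypothetical.

```python
def psi_weights(phi, theta, n_terms):
    """Coefficients psi_j of the causal expansion x_t = sum_j psi_j * w_{t-j}.

    Recursion: psi_0 = 1, psi_j = theta_j + sum_{k=1}^{min(j,p)} phi_k * psi_{j-k},
    with theta_j = 0 for j > q. phi and theta are lists of length p and q.
    """
    p, q = len(phi), len(theta)
    psi = [1.0]
    for j in range(1, n_terms):
        th = theta[j - 1] if j <= q else 0.0
        psi.append(th + sum(phi[k - 1] * psi[j - k]
                            for k in range(1, min(j, p) + 1)))
    return psi


def autocov(psi, h, sigma2=1.0):
    """Autocovariance gamma(h) from a truncated psi expansion."""
    return sigma2 * sum(psi[j] * psi[j + h] for j in range(len(psi) - h))


def yule_walker_residual(phi, theta, psi, h, sigma2=1.0):
    """Left minus right side of
    gamma(h) - sum_k phi_k gamma(h-k) = sigma2 * sum_{j=h}^{q} theta_j psi_{j-h},
    with theta_0 = 1; the right side is zero for h > q."""
    lhs = autocov(psi, h, sigma2) - sum(
        phi[k - 1] * autocov(psi, abs(h - k), sigma2)
        for k in range(1, len(phi) + 1))
    th = [1.0] + list(theta)
    rhs = sigma2 * sum(th[j] * psi[j - h] for j in range(h, len(th)))
    return lhs - rhs


phi, theta = [0.5], [0.4]   # illustrative ARMA(1,1) coefficients
psi = psi_weights(phi, theta, 200)
print(psi[:4])
print([yule_walker_residual(phi, theta, psi, h) for h in range(4)])
```

The residuals are zero up to truncation and rounding error, which gives a quick numerical check that a fitted set of coefficients and a set of autocovariances are mutually consistent.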

5.2. One-Stage Prediction

We aim to improve the recursive algorithm for given in Theorem 3 by making use of the particular structure of proper ARMA() models.

Theorem 4.

Let be a -proper (or -proper) ARMA() signal. If , such that then, and this predictor can be calculated through the following recursive procedure:

with , and the matrices are calculated by replacing in (17) and (18) by the following function:

with for , Ω given in (21), and obeys (22). Moreover, the error associated to (23) coincides with (19).

Remark 3.

The advantage of working with this algorithm is that for and thus, unlike the innovations algorithm given in Theorem 3, only , for , have to be computed in each recursion.

Corollary 1.

For an ARMA() signal it follows that

and the one-stage predictor is computed through

where

and is computed solving the following system of matrix equations:

To conclude, a steady-state performance analysis for the one-stage predictor (23) and its associated error is carried out.
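To give a feel for the kind of recursion and steady-state behavior involved, the sketch below implements the classical one-step predictor for a real-valued scalar causal ARMA(1,1) process as given in [3]. It is not the GSQ recursion (25) and (26), whose matrix expressions are not legible in this copy. It assumes the model x_t − φ x_{t−1} = w_t + θ w_{t−1} with |φ| < 1; the function name and the values φ = 0.6 and θ = 0.3 are hypothetical.

```python
def arma11_predictor(x, phi, theta, sigma2=1.0):
    """One-step predictors for a real scalar causal ARMA(1,1) process.

    Model (assumed convention): x_t - phi*x_{t-1} = w_t + theta*w_{t-1}.
    Returns (xhat, mse): xhat[t] predicts x[t] from x[0..t-1] and mse[t] is
    the corresponding mean squared prediction error.
    """
    # r[t] is the prediction error variance divided by sigma2.
    r = [(1.0 + 2.0 * theta * phi + theta ** 2) / (1.0 - phi ** 2)]
    xhat = [0.0]  # with no data, the best linear predictor is the zero mean
    for t in range(1, len(x)):
        gain = theta / r[t - 1]
        xhat.append(phi * x[t - 1] + gain * (x[t - 1] - xhat[t - 1]))
        r.append(1.0 + theta ** 2 - theta ** 2 / r[t - 1])
    return xhat, [sigma2 * ri for ri in r]


phi, theta = 0.6, 0.3
x = [1.0] + [0.0] * 29          # arbitrary data; the errors do not depend on it
xhat, mse = arma11_predictor(x, phi, theta)
print(mse[0], mse[1], mse[-1])
```

Only one coefficient has to be updated per step, and when |θ| < 1 the error variance decreases monotonically toward the noise variance, which is the scalar analogue of the steady-state regime discussed here.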

5.3. Example

By way of illustration, we consider a proper ARMA(1,1) model. With this model, we first carry out a comparative performance analysis between the one-stage predictor (25), and its counterpart in the HQ domain, . Our aim is to show the superiority, in terms of performance, of the proposed approach with respect to the usual HQ methodology under different initial conditions. Finally, a steady-state performance analysis is carried out.

Let the ARMA(1,1) system be

with , and a white noise whose real vector (3) verifies where is the matrix given in (10).

Two scenarios are contemplated: in the first one, we consider that and in , and three different hyperbolic quaternion algebras are studied (). In the second one, we set the value of the parameters and to be both 0 and three elliptic quaternion algebras are analyzed (). These scenarios present an interesting characteristic: from Proposition 1, is either -proper (in scenario 1) or -proper (in scenario 2); however, the degree of improperness of in the HQ system increases as grows.

Note that the conditions of Proposition 2 and Corollary 2 are fulfilled and hence, can be obtained through the recursions (25) and (26) and the steady-state results in (27) hold.

The errors associated to and depend on the value and thus, they are denoted by and , respectively. To assess the performance of both approaches, the following measure is computed:

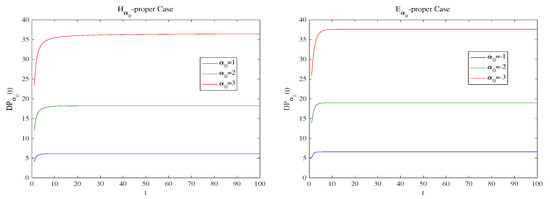

Figure 2 depicts the measure in (29), for , in the above two scenarios. As can be seen, the one-stage predictor outperforms the HQ one-stage predictor in both scenarios. Furthermore, the measure becomes greater as grows, i.e., as the degree of HQ improperness grows; being slightly greater for the -proper case.

Figure 2.

in scenarios 1 (left) and 2 (right).

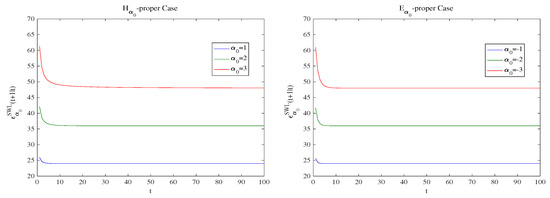

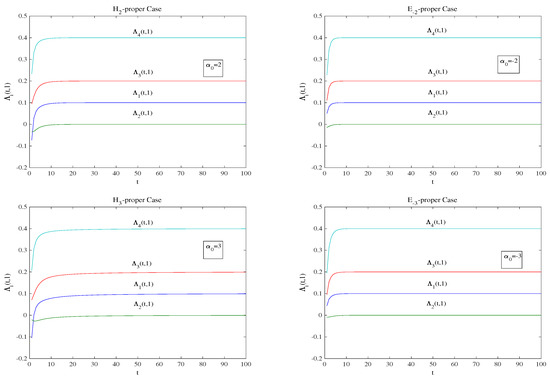

Now, we analyze the steady-state performance of both the estimate (25) and its error. The errors (28) computed for different values of appear in Table 4. Further, Figure 3 shows how converge toward their respective steady-state errors as t grows. These plots indicate that the convergence is rapid.

Table 4.

Steady-state errors of the one-stage predictor for the different values of .

Figure 3.

for .

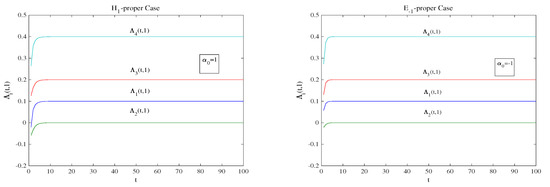

Finally, Figure 4 displays the convergence performance of the components of given in (26). As could be expected from (27), we also obtain here a rapid convergence toward the target values and, in general, this convergence is slightly slower as grows as well as in the -proper case.

Figure 4.

Values of the components , , for .

6. Discussion

Recent investigations have shown that HQs are not always the best choice for processing 4D hypercomplex signals. These results suggest distinct strategies depending on whether the properness conditions are satisfied. Their main feature is a significant computational saving relative to real-valued processing. Unlike earlier research, the approach described in this paper has the additional advantage of flexibility in the choice of the hypercomplex algebra. In our opinion, this research topic opens a broad range of possibilities for studying new applications in signal processing. This paper proposes time series analysis as one possible field of application of proper GSQ signal processing. Other potential areas include dynamic linear models, filtering and smoothing, and signal detection.

Author Contributions

Conceptualization, J.C.R.-M.; Formal analysis, J.N.-M.; Methodology, J.N.-M.; Investigation, J.N.-M., R.M.F.-A. and J.C.R.-M.; Visualization, J.N.-M., R.M.F.-A. and J.C.R.-M.; Writing—original draft preparation, J.N.-M.; Writing—review and editing, J.N.-M., R.M.F.-A. and J.C.R.-M.; Funding acquisition, J.N.-M. and R.M.F.-A.; Project administration, J.N.-M. and R.M.F.-A.; Software, J.N.-M.; Supervision, J.N.-M., R.M.F.-A. and J.C.R.-M.; Validation, J.N.-M., R.M.F.-A. and J.C.R.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported in part by I+D+i project with reference number 1256911, under ‘Programa Operativo FEDER Andalucía 2014–2020’, Junta de Andalucía, and Project EI-FQM2-2021 of ‘Plan de Apoyo a la Investigación 2021–2022’ of the University of Jaén.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

Table A1.

Expressions for and , , in terms of the correlation functions of the components of (3).

Appendix B

Proof of Theorem 1.

The proof is similar to that of Theorem 1 in [24]. □

Appendix C

Appendix D

Proof of Theorem 3.

We carry out the proof for the -proper case. The -proper case is analogous. Firstly, since is -proper and applying Theorem 2 we have that . Now, consider the following product with respect to

and define the distance

where . Following a similar procedure to the one used with (11), the existence and uniqueness of the projection of onto the set associated to the metric (A2) is guaranteed. Further, this projection coincides with , which is obtained from (11).

Define the innovations as . Thus, the set spans and, from (4), it follows that

Then, (16) holds. Now, denoting , we obtain

Taking into account that is nonsingular and hence, is also nonsingular, we obtain (17).

Appendix E

Proof of Proposition 2.

From (20), we have

where B denotes the backward shift operator, and , . Thus, post-multiplying (A4) by , taking expectations and taking into account the -properness (respectively, -properness), (22) holds. The recursive expression for the matrices can be derived as follows. Taking into account that such that , we have that (Theorem 11.3.1 in [3]).

By comparing coefficients of in the power series after multiplying through by and from the block diagonality of and , the result follows. □

Appendix F

Proof of Theorem 4.

The proof is given for the -proper case and the -proper case is similarly obtained. Since is -proper and from Theorem 2 we have that .

Now, consider the transformed signal

It is trivial that with and . Then, defining and denoting by the one-stage predictor of with respect to under (A2), we have

Thus, the problem of computing is equivalent to calculating . It is not difficult to prove that given in (24). Since if and , then, from Theorem 3, we obtain for and , and hence,

with and the matrices calculated from (17) and (18) for .

(23) follows. □

References

1. Pollock, D.S.G. A Handbook of Time-Series Analysis, Signal Processing and Dynamics; Academic Press: San Diego, CA, USA, 1999.

2. Manolakis, D.; Bosowski, N.; Ingle, V.K. Count time-series analysis: A signal processing perspective. IEEE Signal Process. Mag. 2019, 36, 64–81.

3. Brockwell, P.J.; Davis, R.A. Time Series: Theory and Methods, 2nd ed.; Springer: New York, NY, USA, 1991.

4. Kuipers, J.B. Quaternions and Rotation Sequences: A Primer with Applications to Orbits, Aerospace and Virtual Reality; Princeton University Press: Princeton, NJ, USA, 2002.

5. Hanson, A.J. Visualizing Quaternions; The Morgan Kaufmann Series in Interactive 3D Technology; Elsevier: Amsterdam, The Netherlands, 2005.

6. Catoni, F.; Boccaletti, D.; Cannata, R.; Catoni, V.; Nichelatti, E.; Zampetti, P. The Mathematics of Minkowski Space-Time: With an Introduction to Commutative Hypercomplex Numbers; Birkhäuser Verlag: Basel, Switzerland, 2008.

7. Ginzberg, P.; Walden, A.T. Quaternion VAR modelling and estimation. IEEE Trans. Signal Process. 2013, 61, 154–158.

8. Tobar, F.; Mandic, D.P. Quaternion reproducing kernel Hilbert spaces: Existence and uniqueness conditions. IEEE Trans. Inform. Theory 2014, 60, 5736–5749.

9. Schütte, H.D.; Wenzel, J. Hypercomplex numbers in digital signal processing. In Proceedings of the International Symposium on Circuits and Systems, New Orleans, LA, USA, 1–3 May 1990; pp. 1557–1560. Available online: https://ieeexplore.ieee.org/document/112431 (accessed on 15 February 2022).

10. Pei, S.H.; Chang, J.H.; Ding, J.J. Commutative reduced biquaternions and their Fourier transform for signal and image processing applications. IEEE Trans. Signal Process. 2004, 52, 2012–2031.

11. Labunets, V. Clifford algebras as unified language for image processing and pattern recognition. In Computational Noncommutative Algebra and Applications; NATO Science Series II: Mathematics, Physics and Chemistry; Byrnes, J., Ed.; Springer: Dordrecht, The Netherlands, 2004; Volume 136, pp. 197–225.

12. Alfsmann, D. On families of 2N-dimensional hypercomplex algebras suitable for digital signal processing. In Proceedings of the 14th European Signal Processing Conference (EUSIPCO 2006), Florence, Italy, 4–8 September 2006; pp. 1–4.

13. Alfsmann, D.; Göckler, H.G.; Sangwine, S.J.; Ell, T.A. Hypercomplex algebras in digital signal processing: Benefits and drawbacks. In Proceedings of the 15th European Signal Processing Conference, Poznan, Poland, 3–7 September 2007; pp. 1322–1326.

14. Pei, S.H.; Chang, J.H.; Ding, J.J.; Chen, M.Y. Eigenvalues and singular value decompositions of reduced biquaternion matrices. IEEE Trans. Circuits Syst. I 2008, 525, 2673–2685.

15. Guo, L.; Zhu, M.; Ge, X. Reduced biquaternion canonical transform, convolution and correlation. Signal Process. 2011, 91, 2147–2153.

16. Hahn, S.L.; Snopek, K.M. Complex and Hypercomplex Analytic Signals: Theory and Applications; Artech House: Norwood, MA, USA, 2016.

17. Kamal, A.T.; El-Melegy, M.T. Color image processing using reduced biquaternions with applications to face recognition in a PCA framework. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 3039–3046.

18. Esposito, A.; Faundez-Zanuy, M.; Morabito, F.C.; Pasero, E. Neural Advances in Processing Nonlinear Dynamic Signals; Springer: Cham, Switzerland, 2018.

19. Kobayashi, M. Twin-multistate commutative quaternion Hopfield neural networks. Neurocomputing 2018, 320, 150–156.

20. Zanetti de Castro, F.; Eduardo Valle, M. A broad class of discrete-time hypercomplex-valued Hopfield neural networks. Neural Netw. 2020, 122, 54–67.

21. Takahashi, K. Comparison of high-dimensional neural networks using hypercomplex numbers in a robot manipulator control. Artif. Life Robot. 2021, 26, 367–377.

22. Ortolani, F.; Scarpiniti, M.; Comminiello, D.; Uncini, A. On the influence of microphone array geometry on the behavior of hypercomplex adaptive filters. In Proceedings of the 5th IEEE Microwaves, Radar and Remote Sensing Symposium (MRRS), Kiev, Ukraine, 29–31 August 2017; pp. 37–42.

23. Ortolani, F.; Scarpiniti, M.; Comminiello, D.; Uncini, A. On 4-dimensional hypercomplex algebras in adaptive signal processing. In Neural Advances in Processing Nonlinear Dynamic Signals; Springer: Berlin, Germany, 2017; pp. 131–140.

24. Navarro-Moreno, J.; Fernández-Alcalá, R.M.; López-Jiménez, J.D.; Ruiz-Molina, J.C. Tessarine signal processing under the T-properness condition. J. Frankl. Inst. 2020, 357, 10100–10126.

25. Navarro-Moreno, J.; Ruiz-Molina, J.C. Wide-sense Markov signals on the tessarine domain. A study under properness conditions. Signal Process. 2021, 183C, 108022.

26. Took, C.C.; Mandic, D.P. Augmented second-order statistics of quaternion random signals. Signal Process. 2011, 91, 214–224.

27. Navarro-Moreno, J.; Fernández-Alcalá, R.M.; Ruiz-Molina, J.C. Semi-widely simulation and estimation of continuous-time Cη-proper quaternion random signals. IEEE Trans. Signal Process. 2015, 63, 4999–5012.

28. Navarro-Moreno, J.; Ruiz-Molina, J.C. Semi-widely linear estimation of Cη-proper quaternion random signal vectors under Gaussian and stationary conditions. Signal Process. 2016, 119, 56–66.

29. Nitta, T.; Kobayashi, M.; Mandic, D.P. Hypercomplex widely linear estimation through the lens of underpinning geometry. IEEE Trans. Signal Process. 2019, 67, 3985–3994.

30. Grassucci, E.; Comminiello, D.; Uncini, A. An information-theoretic perspective on proper quaternion variational autoencoders. Entropy 2021, 23, 856.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).