1. Introduction

Nature-Inspired Optimization Algorithms (NIOAs) are one of the dominant techniques used due to their simplicity and flexibility in solving large-scale optimization problems [

1]. The search process of the nature-inspired optimization algorithms was developed based on the behavior or processes encountered from nature. Notably, the NIOAs have emphasised the significant collection of algorithms, like the evolutionary algorithm (EA), swarm intelligence (SI), physical algorithm, and bio-inspired algorithm. These algorithms have illustrated their efficiency in solving wide variants of real-world problems [

2]. A variety of nature-inspired optimization algorithms, such as the Genetic Algorithm (GA) [

3], Differential Evolution (DE) [

4], Ant Colony Optimization (ACO) [

5] Artificial Bee Colony (ABC) [

6], Particle Swarm Optimization (PSO) [

7], Firefly Algorithm (FA) [

8], Cuckoo Search (CO) [

9], Bat Algorithm [

10], Monkey Algorithm (MA) [

11], Spider Monkey Algorithm (SMO) [

12], Reptile Search Algorithm (RSA) [

13], Membrane Computing (MC) [

2], and whale optimization [

14] use simple local searches for convoluted learning procedures to tackle complex real-world problems.

The complexity of real-world problems increases with the current scenario. Hence, the nature-inspired optimization algorithm has to find the best feasible solution with respect to the decision and objective space of the optimization problem. The increase in a decision variable may directly increase the size of the problem space. Then the complexity of the search space is also exponentially increased due to an increase in decision variables. Similarly, the search space will also increase due to an increase in the number of objectives [

1]. Thus, the performance of the optimization algorithms will depend on two major components, as follows [

15]: (i) the exploration used to generate the candidate solution to explore the search space globally; and (ii) the exploitation used to focus on exploiting the search space in the local region to find the optimal solution in the particular region. Thus, it is of essential importance for the optimization algorithm to acquire an equilibrium between the exploitation and exploration for solving different kinds of the optimization problem [

16].

2. Literature Survey

Many optimization algorithms in the literature have been modified from the initial version to a hybrid model in order to tackle the balance between exploration and exploitation [

17,

18]. For example, we consider the most famous nature-inspired optimizations, such as GA, PSO, DE, ABC, and the most recent algorithms, such as the grey wolf-optimizer, reptile search algorithm, Spider Monkey Algorithm, and whale optimization algorithm. The main operators of the genetic algorithm are selection, crossover, and mutation [

19]. Many authors [

20] in the literature have proposed novel crossover operators in order to adjust the exploration capability of the genetic algorithm. Then, the selection process [

21] and mutation operators [

22,

23] have been modified by authors in order to maintain a diverse and converged population. The author in the Ref. [

24] proposed a special chromosome design based on a mixed-graph model to address the complex scheduling problem, and this technique enhances the heuristic behaviour of a genetic algorithm. Further, the genetic algorithm is combined with other techniques in order to improve its performance, such as a self-organization map [

25,

26], adaptive techniques [

27], and other optimization algorithms [

28]. These operators help to attain a balance over convergence and randomness to find the optimal results [

29]. However, the convergence depends on the mutation operator because of its dual nature, that is, it either slows down the convergence or attains the global optima. Thus, fine-tuning the operators concerning the optimization problem is quite difficult [

30].

The next feasible approach for solving the optimization problem is differential evolution. It also uses vector-based operators in the search process. However, the mutation operator shows its dominance over the search process by contributing to the weighted divergence between two individuals. The selection procedures of the DE have a crucial impact on the search process and also influences diversity among the parents and offspring [

31]. However, the author in the Ref. [

32] developed a new technique based on self-adaptive differential evolution with weighted strategies to address these large-scale problems. In the Particle Swarm Optimizer (PSO) [

7], the selection process of a global best seems to play a vital role in finding the global optimal solution—that is, it can accelerate the convergence, or it can lead to premature convergence. ACO is based on the pheromone trails, such as trail-leaving and trail-after practices of ants, in which every ant sees synthetic pheromone fixations on the earth, and acts by probabilistic selecting bearings focused around the accessible pheromone fixation. However, the search process of the ACO is well-suited for exploration, whereas the exploitation has considerably lower importance [

33].

The Artificial Immune System (AIS) [

34] is mimicked by the biological behavior of the immune system. The AIS uses the unsupervised learning technique obtained from the immune cell over the infected cells. Hence, the algorithm suits cluster-oriented optimization problems well, and strong exploitation and learning processes are intensified at the poor level [

35]. The bacterial foraging algorithm (BFA) was developed by Passino [

36], which works on the basic principle of the natural selection of bacteria. It is also a non-gradient optimization algorithm that mimicks the foraging behavior of the bacteria from the landscape towards the available nutrients [

37]. However, the algorithm has different kinds of operators, where it depicts less convergence over complex optimization problems [

38]. The Krill Herd (KH) is a bio-inspired optimization algorithm which imitates the behavior of krill [

39]. The basic underlying concept of a krill herd is to reduce the distance between the food and each individual krill in the population. However, the author of the Ref. [

40] stated that the KH algorithm does not maintain a steady process between exploration and exploitation.

The dominant author in the field of NIA, Yang [

41], proposed the Firefly Algorithm (FA). The lighting behavior of fireflies during the food acquirement process is the primary ideology of the firefly algorithm [

42]. Thus, the search process of the firefly algorithm is well-suited for solving multi-model optimization using a multi-population [

8]. However, the cuckoo search well-utilises the random walk process that explores the search in an efficient manner, which may lead to slow convergence [

43]. Further, the bat algorithm finds difficulty in the optimal adjustment of the frequency for an echo process, which helps to attain the optimal solution in the search process [

9].

The various types of algorithms evolved in the literature based on the behavior of monkeys, such as the monkey algorithm (MA), monkey king evolution (MKO), and spider monkey algorithm (SMO).The initial solution was brought into existence via a random process, and it completes the local search process using the limb process and also performs a somersault process for the global search operation. Further, an improved version of the monkey algorithm was proposed in the Ref. [

11], which includes the evolutionary speed factor to dynamically change the climb process and aggregation degree to dynamically assist somersault distance. The Spider Monkey Algorithm (SMO) was developed based on the swarm behavior of monkeys, and performs well with regard to the local search problem. The SMO also incorporates a fitness-based position update to enhance the convergence rate [

44]. The Ageist Spider Monkey Algorithm (ASMO) includes features of agility and swiftness based on their age groups, which work based on the age differences present in the spider monkey population [

12]. However, the algorithm performance decreases during the global search operation.

An empirical study from the literature [

45] clearly shows the importance of a diverse population to attain the best solutions worldwide. Hence, a recent advancement in the field of an optimization algorithm incorporated multi-population methods where the population was subdivided into many sub-populations to avert the local optima and maintain diversity among the population [

46]. Thus, multi-population methods guide the search process either in exploiting or exploring the search space. The significance of a multi-population has been incorporated in several dominant optimization algorithms [

8,

47].

Though multi-population-based algorithms have attained viable advantages in exploring and exploiting the search space, it has some consequences in designing the algorithm, such as the number of sub-populations, and communication and strategy among the sub-population [

48]. Firstly, the number of sub-populations helps to spread the individuals over the search space. In fact, the small number of sub-populations leads to local optima, whereas the increase in the number of sub-populations leads to a wastage of computation resources and also extends the convergence [

49]. The next important issue is the communication handling procedure between sub-populations, such as communication rates and policy [

50]. The communication rate determines the number of individuals in a sub-population who have to interact with other sub-populations, and a communication policy is subjected to the replacement of individuals with other sub-populations.

The above discussion clearly illustrates the importance of balanced exploration and exploitation in the search process. Most of the optimization algorithms have problems in tuning the search operators and with diversity among the individuals. On the other hand, the multi-population-based algorithm amply shows its efficiency in maintaining a diverse population. However, it also requires some attention towards communication strategies between the sub-population. Hence, this motivated our research to develop a novel optimization algorithm with well-balanced exploration and exploitation. In particular, the Java macaque is also the vital primitive among the monkeys family and is widely available in South-Asian countries. Thus, the Java macaques have suitable behavior for balancing the exploration and exploitation search process.

The novel contribution of the proposed Java macaque algorithm is described as follows:

- (1)

We introduced the novel optimization algorithm based on the behavior of Java macaque monkeys for balancing the search operation. The balance is achieved by modeling the behavioral nature of Java macaque monkeys, such as multi-group behavior, multiple search agents, a social hierarchy-based selection strategy, mating, male replacement, and learning process.

- (2)

The multi-group population with multiple search agents as male and female monkeys helps to explore the different search spaces and also maintain diversity.

- (3)

This algorithm utilises the dominance hierarchy-based mating process to explore the complex search spaces.

- (4)

In order to address the communication issues in a multi-group population, the Java monkeys have unique behavior, called male replacement.

- (5)

The exploitation phase of the proposed algorithm is achieved by the learning process.

- (6)

This algorithm utilises a multi-leader approach using male and female search agents, which consists of an alpha male and alpha female in each group, and also remains as the best solution globally. Thus, the multi-leader approach assists in a smooth transition from the exploration phase to exploitation phase [

50]. Further, the social hierarchy-based selecting strategy helps in maintaining both an improved converged and diverse solution in each group, as well as in the population.

Further in this paper,

Section 3 describes the brief behavior analysis of the Java macaque monkey.

Section 4 formulates the algorithmic compounds using the Java macaque behavior model. Then,

Section 3.1 explores the stability of Java macaque behavior, and

Section 4 introduces the generic version of the proposed Java macaque algorithm for the optimization problem. Further,

Section 5 provides a brief experimentation of the continuous optimization problem, and

Section 6 illustrates the detailed experimentation of discrete optimization. Finally, the paper is concluded in

Section 8.

3. Behavior Analysis of the Java Macaque Monkey

The Java macaque monkey is an essential native breed that lives with a social structure, and it has almost 95% of gene similarity when compared with human beings [

51]. The basic search agent of the java monkey is divided into male and female, where males are identified with a moustache and females with a beard. The average number of male and female individuals is 5.7 and 9.9 in each group, respectively. Usually, the Java macaque lives (with a group community of 20–40 monkeys) in an environment where macaques try to dominate over others in their region of living. Age, size and fighting skills are the factors used to determine social hierarchy among macaques [

52]. The structure of social hierarchy has been followed among the java monkey groups where the lower-ranking individuals are dominated by the higher-ranking individuals when accessing food and resources [

51,

53]. The higher-ranking adult male and female macaques of a group are called the “Alpha Male” and “Alpha Female”. Further, studies have shown that the alpha male has dominant access to females, which probably sires the offspring.

The number of female individuals is higher in comparison with the male individual for every group. The next essential stage of the java monkey culture is mating. The mating operator depends on the hierarchical structure of the macaques, that is, the selection of male and female macaques is based on the social rank [

54]. The male macaque attracts the female by creating a special noise and gesture for the reproduction. The higher-ranking male individuals are typically attracted to the female individual due to their social power and fitness. The male individual reaches sexual maturity approximately at the age of 6 years, while the female matures at the age of 4 years [

55]. The newborn offspring or juvenile’s social status depends on the parent’s social rank and matrilineal hierarchy, which traces their descent through the female line. In detail, the offspring of the dominant macaque have a higher level of security when compared with offspring of lower-ranking individuals [

56].

The male offspring are forced to leave their natal group after reaching sexual maturity and become stray males, whereas the female can populate in the same group based on their matrilineal status [

53]. Hence, stray males have to join another social group, otherwise they are subjected to risk in the form of predators, disease, and injury. Male replacement is a process in which stray males can reside in another group in two ways—they either have to dominate the existing alpha male or sexually attract a female, and that way the macaque can convince another member to let the stray male into the group. Hence, the behavior of the Java macaque shows excellent skill in solving real-world problems in order to protect their group from challenging circumstances. Thus, the stray male can continuously learn from the dominant behavior of other macaques. Thus, the improvement in the ability of the Java macaque enhances its social ranking and provides higher access to food and protection. Further, the monkey can improve its ranking via a learning process and can also become the alpha monkey, which leads to the attainment of higher power within the group.

The behavior of Java macaque monkeys illustrates the characteristics of an optimization algorithm, such as selection, mating, maintaining elitism, and male replacement, finding the best position via learning from nature. The Java macaque exhibits population-based behavior with multiple groups in it and also adapts a dominant hierarchy. Then, each group can be divided into male and female search agents, which can be further divided into an adult, sub-adult, juvenile, and infant based on age. Next, an important characteristic of a java monkey is mating. In particular, the selection procedure for mating predominantly depends on the fitness hierarchy of the individuals. Further, the dominant individuals in a male and female population are maintained in each group of the population, known as the alpha male and female. The ageing factor is also considered to be an important deciding factor. The male monkey which attains sexual maturity at the age of 4 is forced to leave its natal group, and becomes a stray male. Thus, the stray male has to find another suitable group based on fitness ranking, and this process is known as male replacement. In addition to the above behavior, the monkey efficiently utilises the learning behavior from dominant individuals in order to attain the dominant ranking. The behavior of java monkeys does well to attain global optimal solutions in real-world problems. According to the observations from the above discussion, the behavior of java monkeys exhibits the following features: (1) the group behavior of individuals with different search agents helps in exploring a complex search space; (2) exploration and exploitation is performed using selection, mating, male replacement, and learning behavior; and (3) dominant monkeys are maintained within the group using an alpha male and female.

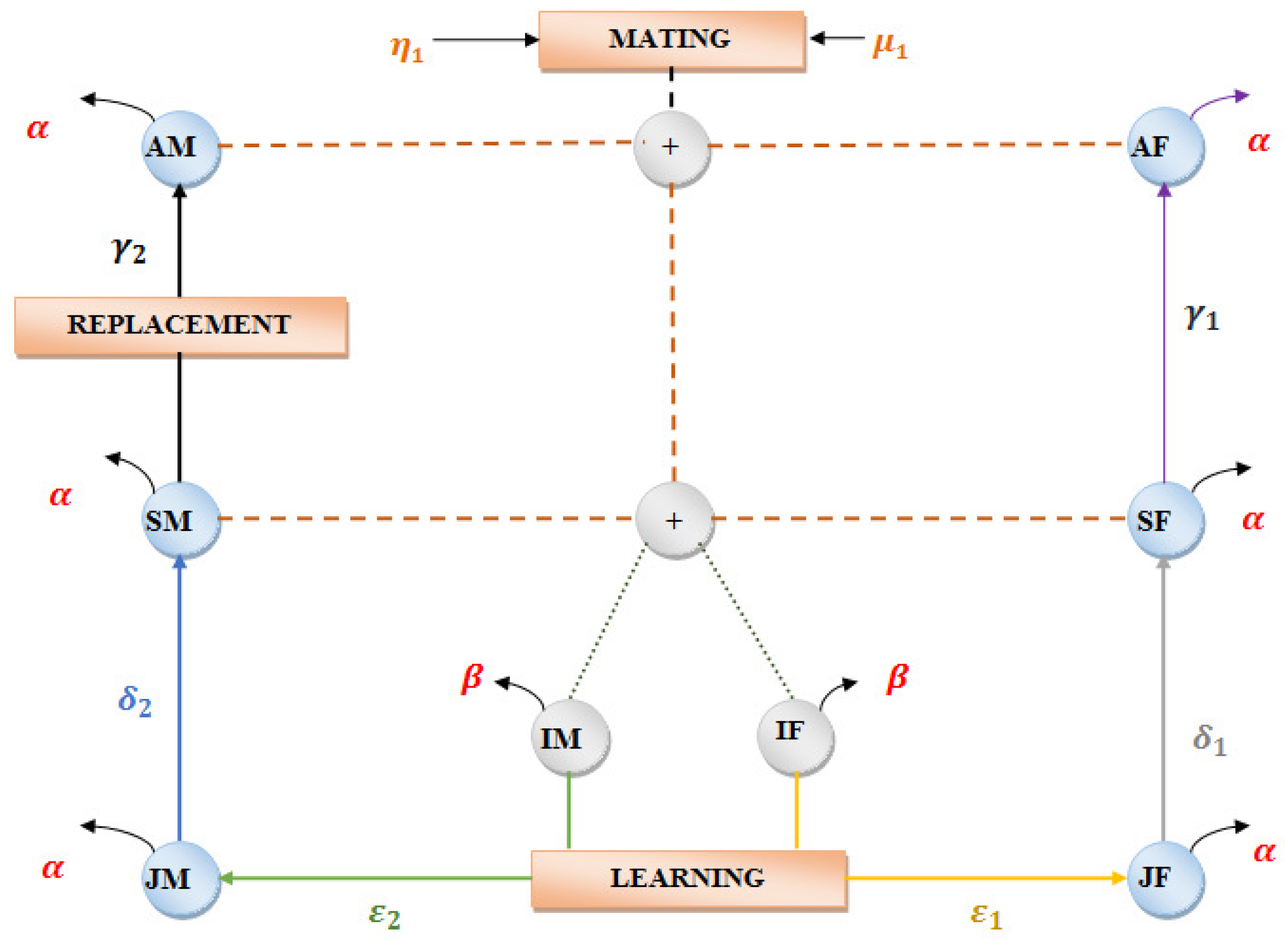

3.1. State Space Model for Java Macaque

Over the centuries, Java macaque monkeys have survived in this world because of their well-balanced behavior. This amply shows that the behavior of Java macaque monkeys has stability within the population. Then, the state space model for the Java macaque monkey in

Figure 1 was designed using the demographic data shown in

Table 1, obtained from the Ref. [

57]. The demographic composition of the monkey population over the period of 1978 to 1982 was used to develop the state space model of the java monkey.

The generalized equation for female individuals in the state space models are represented as:

where

are the death rate of the Infant (Offspring) and Non-Infant. Input variables

are

, and the output variables

are

with respect to the state variable

. Additionally, AF, SF, JF and IF indicates the adult, sub-adult, juvenile and infant females.

Similarly, the generalized equation for male individuals in the population are presented as:

where

are the death rate of the Infant (Offspring) and Non-Infant. Input variables

are

and the output variable

are

with respect to state variable

. Additionally, AM, SM, JM and IM indicate the adult, sub-adult, or stray, juvenile, and infant males.

By using the state space model, we generated the transition matrix, which helps in proving the stability of the population using the - diagonally dominant method, which has been clearly explained in the supplementary documents.

6. Discrete Optimization Problem

The problem space of a discrete optimization problem is represented as the set of all feasible solutions that satisfy the constraint and the fitness function, which maps each element to the problem space. Thus, the discrete or combinatorial optimization problem searches for the optimal solution from the set of feasible solutions.

The generic form of the discrete optimization problem is represented as follows [

63]:

where

is the objective function with discrete problem space, which maps each individual in the search space

to the problem space

and the set of feasible solutions is

.

The individuals were generated with the mapping (

) between the dimensional vector of the search space

to the dimensional vector in the problem space

. In the discrete optimization problem, the total number of elements

is represented as the dimensional vector of the problem space, and it must be mapped as the element of search spaces of individual.

where

is an individual, represented as a tuple

of a dimensional vector

in the search space

and the corresponding dimensional vector

in the problem space

.

presents the group

i with

of male individuals and

of female individuals in the population. The initialization process starts with a minimum number of individuals in the group with respect to the problem size.

The learning process is a mechanism which adapts the learning model for enhancing individual fitness. However, it increases individual fitness by exploiting the search space with respect to the problem space. The individual

in the

should improve its fitness value

via learning and increase the probability of attaining a global optimum. Then, the learning for discrete optimization is defined as:

where

represents the set of individuals,

is the feasible search space of solutions,

is the fitness function, and

is referred to as the learning rate of the individual between

.

Then,

is the learning process

.

where the two values are randomly generated for i and j. Thus, the

x is in linear decreasing order, generated between max to 3

.

where

is the best individual obtained from the different learning process and replaced

in the POP.

6.1. Experimentation and Result Analysis of Travelling Salesman Problem

The mathematical model for the travelling salesman problem is formulated as:

where

is the distance between two cities

and

and

indicate the tour between the last city

and first city

.

The standard experimental setup for the proposed Java macaque algorithm is as follows: (i) the initial population was randomly generated with 60 individuals in each group (m = 60); (ii) the number of groups (n = 5); (iii) executed up to 1000 iterations. The performance of the proposed JMA is correlated with an Imperialist Competitive Algorithm with a particle swarm optimization (ICA-PSO) [

64], Fast Opposite Gradient Search with Ant Colony Optimization (FOGS-ACO) (Saenphon et al., 2014 [

65]), and effective hybrid genetic algorithm (ECOGA) (Li and Zhang, 2007 [

66]). Each algorithm was run on each instance 25 times, and hence, the best among the 25 runs was considered for analysis and validation purposes.

6.2. Parameter for Performance Assessment

This section briefly explains the list of parameters which evaluates the performance of the proposed Java macaque algorithm with the existing algorithm. It also helps to explore the efficiency of the proposed algorithm in various aspects. The various types of investigation parameters are the convergence rate, error rate, convergence diversity, and average convergence from the population. Thus, the parameter for the performance assessment is summarized as follows [

3,

67]:

Best Convergence Rate: In the experimentation, the best convergence rate measures the quality of the best individual obtained from the population in terms of percentage with regard to the optimal value. It can be measured as follows:

where

) is the fitness of the best individual in the population and

indicates the optimal fitness value of the instances.

Average Convergence Rate: The average percentage of the fitness value of the individual in the population with regard to the optimal fitness value is known as the average convergence rate. This can be calculated as:

where

indicates the average fitness of all the individuals in the population.

Worst Convergence Rate: This parameter measures the percentage fitness of the worst individual in the population with regard to optimal fitness. It can be represented as:

where

indicates the worst fitness value of the individual in the population.

Error rate: The error rate measures the percentage difference between the fitness value of the best individual and the optimal value of the instances. It can be given as:

Convergence diversity: Measures the difference between the convergence rate of the dominant individual and worst individuals found in the population. It can be represented as

7. Result Analysis and Discussion

The computational results of the experimentation results are illustrated in this section. The

Table 9 consists of the small-scale TSP instance,

Table 10 has the results of the medium-scale TSP instance, and finally,

Table 11 shows the results of large-scale instances. Thus, the experimentation were directed to evaluate the performance of the proposed JMA with other existing FOGS–ACO, ECO–GA and ICA–PSO. Consider, the sample instance eil51 for the result evaluation. The best convergence rate for ICA–PSO is 94.35%, FOGS–ACO is 92.88%, and ECO–GA is 97%, but the convergence rate of JMA is 100% for the instance eil76. Then the average convergence rate for ICA–PSO, FOGS–ACO, ECO–GA, and JMA are 65.64%, 80.39%, 70.94%, and 85.5%, respectively. On the other hand, this pattern of dominance is maintained in terms of error rate for all instances in

Table 9. Further, the

Table 11 depicts that the convergence rate of the proposed algorithm is above 99% for all the large-scale instances except rat575, whereas the existing ICA–PSO, FOGS–ACO, and ECO–GA are achieved above 90%, 93%, and 96% for the same. It can be observed from the Tables that the existing algorithm values for the average convergence rate is lower than the proposed JMA in most of the instances.The examination of the proposed algorithm dominated the existing ones in all the performance assessment parameters, except that the convergence diversity of ECO–GA is better than the proposed algorithm, but the proposed algorithm maintained well-balanced diversity in order to achieve optimal results.

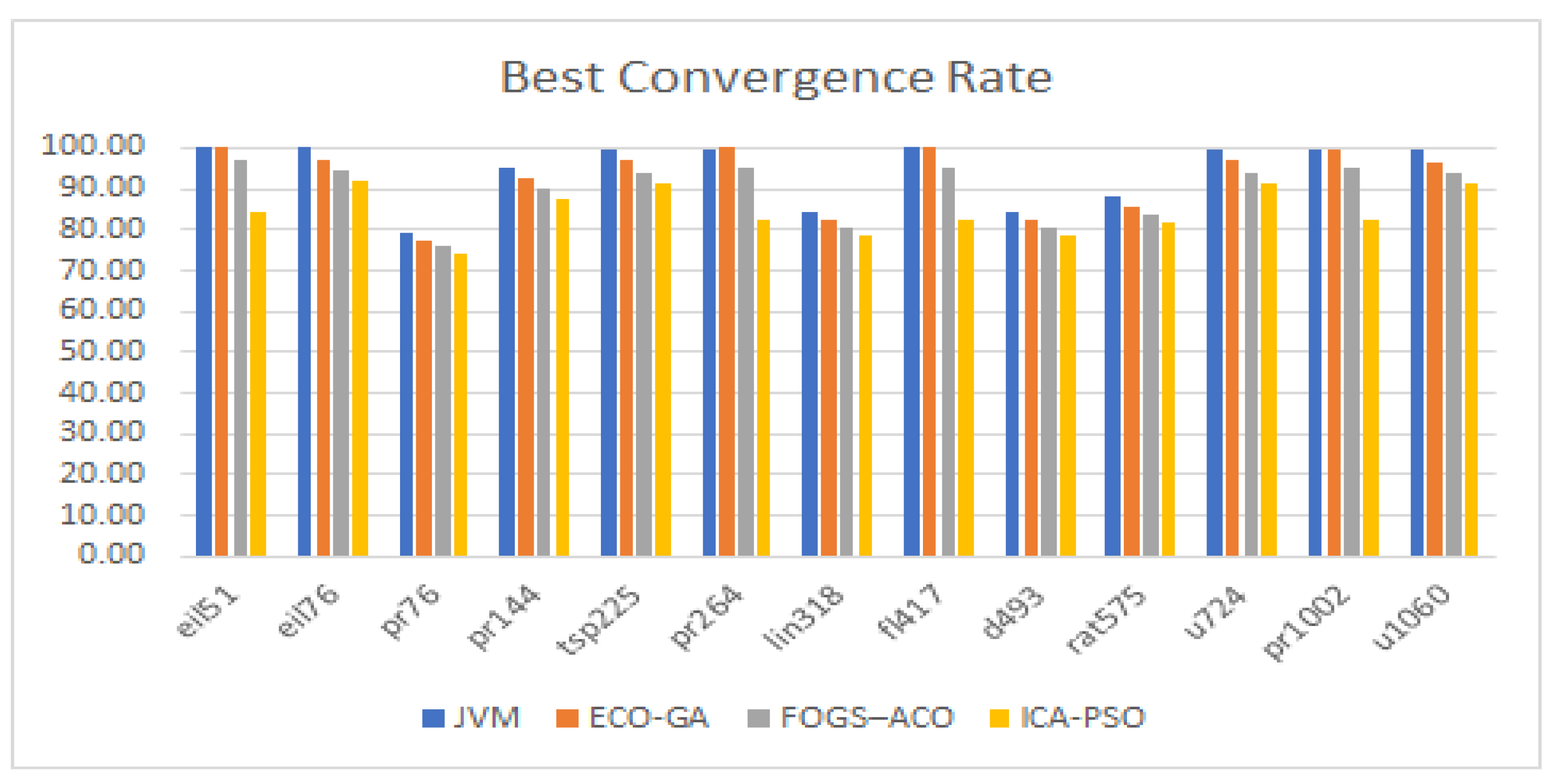

7.1. Analyzes Based on Best Convergence Rate

The best convergence rate reflects the effectiveness of the population-generated by optimization algorithm.

Figure 5 depicts a comparison of the proposed algorithm to the existing algorithm in terms of convergence rate. The JMA holds lesser value as 74.23% for pr76 in small-scale instances. For medium-scale instances, the JMA holds 100% for the instance fl417. Then, the ECO–GA has the highest value of 99.97%, and JMA achieves convergence of 99.96% for the instance pr1002. As a result, on a medium scale, the instance lin318 has the lowest value of a 72.67% convergence rate in the ICA–PSO and the highest of 81.52 % for the JMA and 75.52 % for the FOGS–ACO, while the ECO–GA comes in second with a value of 78.44 %. In terms of convergence rate, the proposed Java macaque algorithm outperforms the existing algorithm.

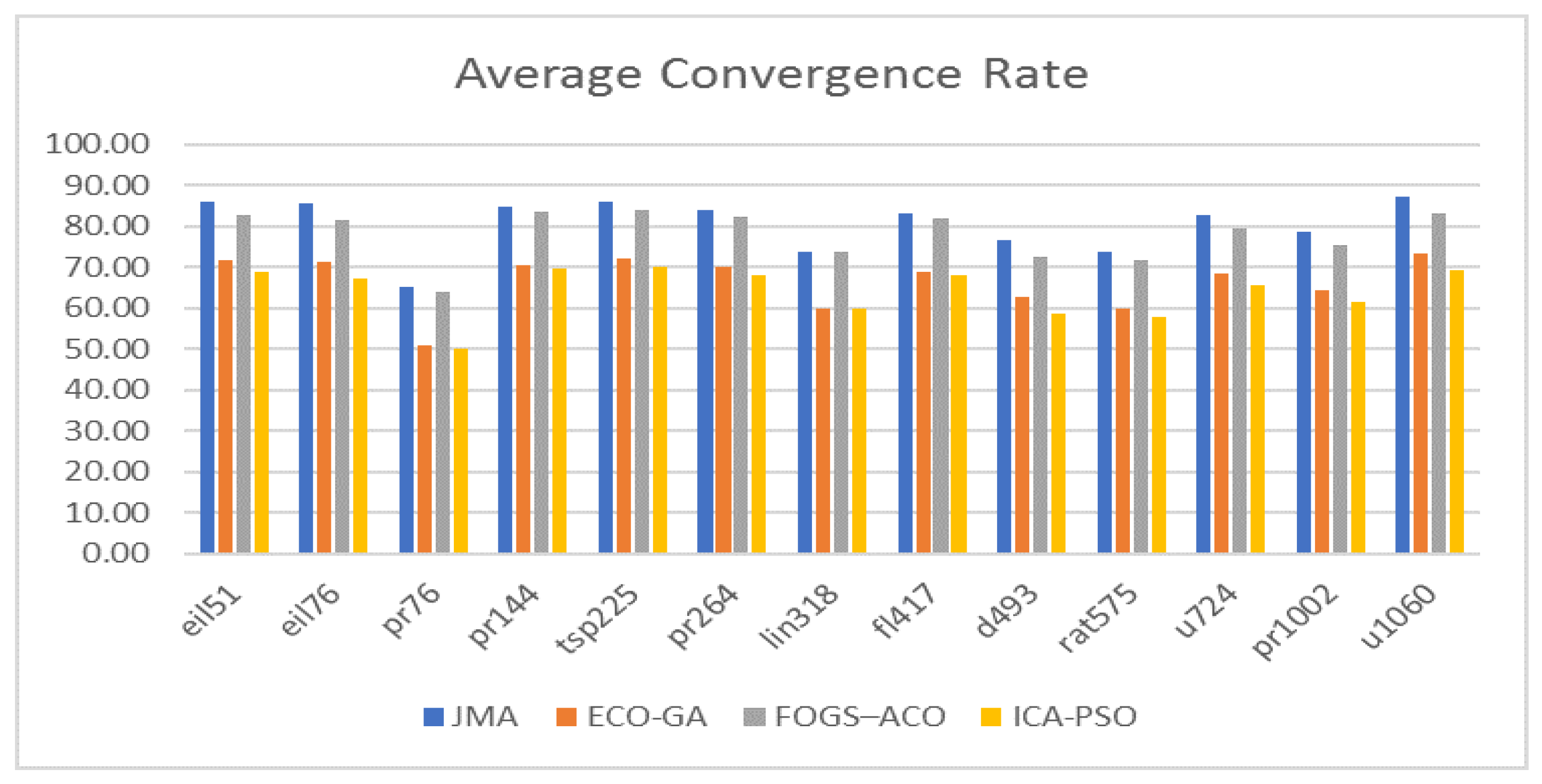

7.2. Analyzes Based on Average Convergence Rate

Figure 6 depicts the results of an average convergence rate comparison between the proposed algorithm and existing algorithms. As an outcome, the investigation in terms of average convergence for JMA revealed that it outperformed the other algorithm. The average convergence rate of JMA has to attain a value above 65%, but the FOGS–ACO, ECO–GA, and ICA–PSO obtained values above 64%, 51%, and 50%. The JMA achieved a maximum value of 87.36% for u1060, while the FOGS–ACO achieved 88.74% for pr144, and ECO–GA reached an average convergence of 73.34% for u1060, and lastly, the ICA–PSO attained a maximum of 70.02%, for instance, tsp225, respectively. While comparing the performance of JMA and FOGS–ACO for the average convergence rate, both algorithms perform quite well, but JMA dominates in many cases.

7.3. Analyzes Based on the Worst Convergence Rate

Figure 7 shows the performance of the experimentation with regard to the worst convergence rate. The proposed Java macaque algorithm dominated the existing algorithm by all means. For example, by acknowledging the occurrence of instance pr144, the ICA–PSO, FOGS–ACO, ECO–GA, and JMA obtained the worst convergence rates of 53.92%, 69.47%, 57.21%, and 72.62%, respectively. Then, by considering the pr1002 instance from a large-scale dataset, the proposed JMA and FOGS–ACO held an equal value of 55.14%, which was followed by an ECO–GA value of 41.13%, and the lowest value of 40.43% was attained by ICA–PSO. Further, in terms of medium–scale instances, the proposed Java macaque algorithm also shows its superiority over the existing algorithm, such as FOGS–ACO, ECO–GA, and ICA–PSO.

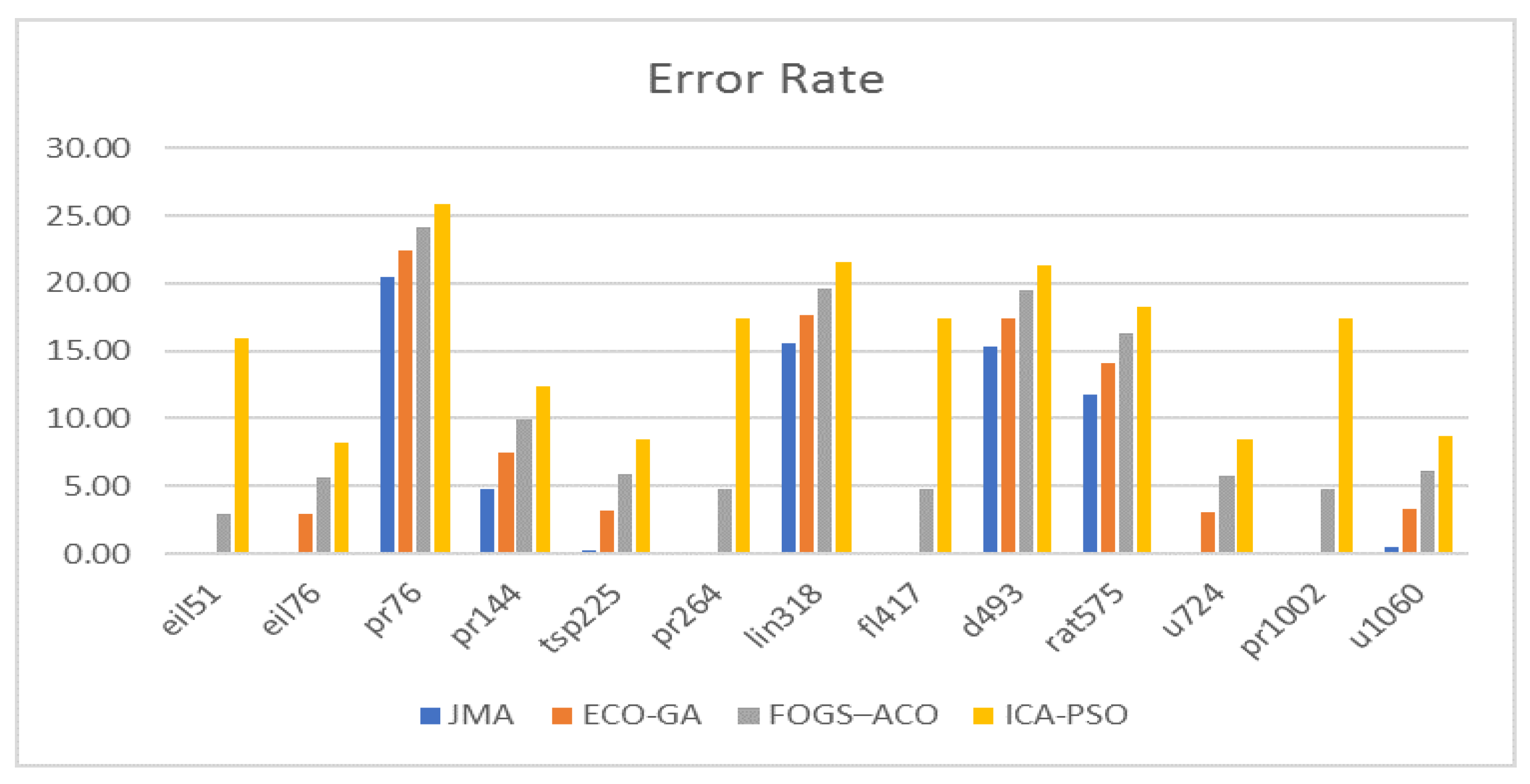

7.4. Analyzes Based on Error Rate

Figure 8 depicts the algorithm’s performance in terms of error rate. The best error rate shows how far the best individual’s convergence rate deviates from the optimal fitness value, whereas the worst error rate shows the difference between the worst individual’s convergence rate and the optimal solution.

Consequently, the maximum error rate of 25.83 percent for particle swarm optimization, 24.14 percent for ant colony optimization, 22.37 percent for genetic algorithm, Java macaque algorithm is 20.52 percent, and in that order. Meanwhile, the least error rate value for JMA was 0% for instances such as eil51, eil76, tsp225, pr264, fl417, u724, pr1002 and u1060, whereas the genetic algorithm had 0% for instances eil51, fl417 and the particle swarm optimization had an approximate value of 8% for instances eil76, tsp225 and u724, and FOGS–ACO has less than 3% for instance eil51, respectively. JMA obtained better performance compared with the existing algorithm.

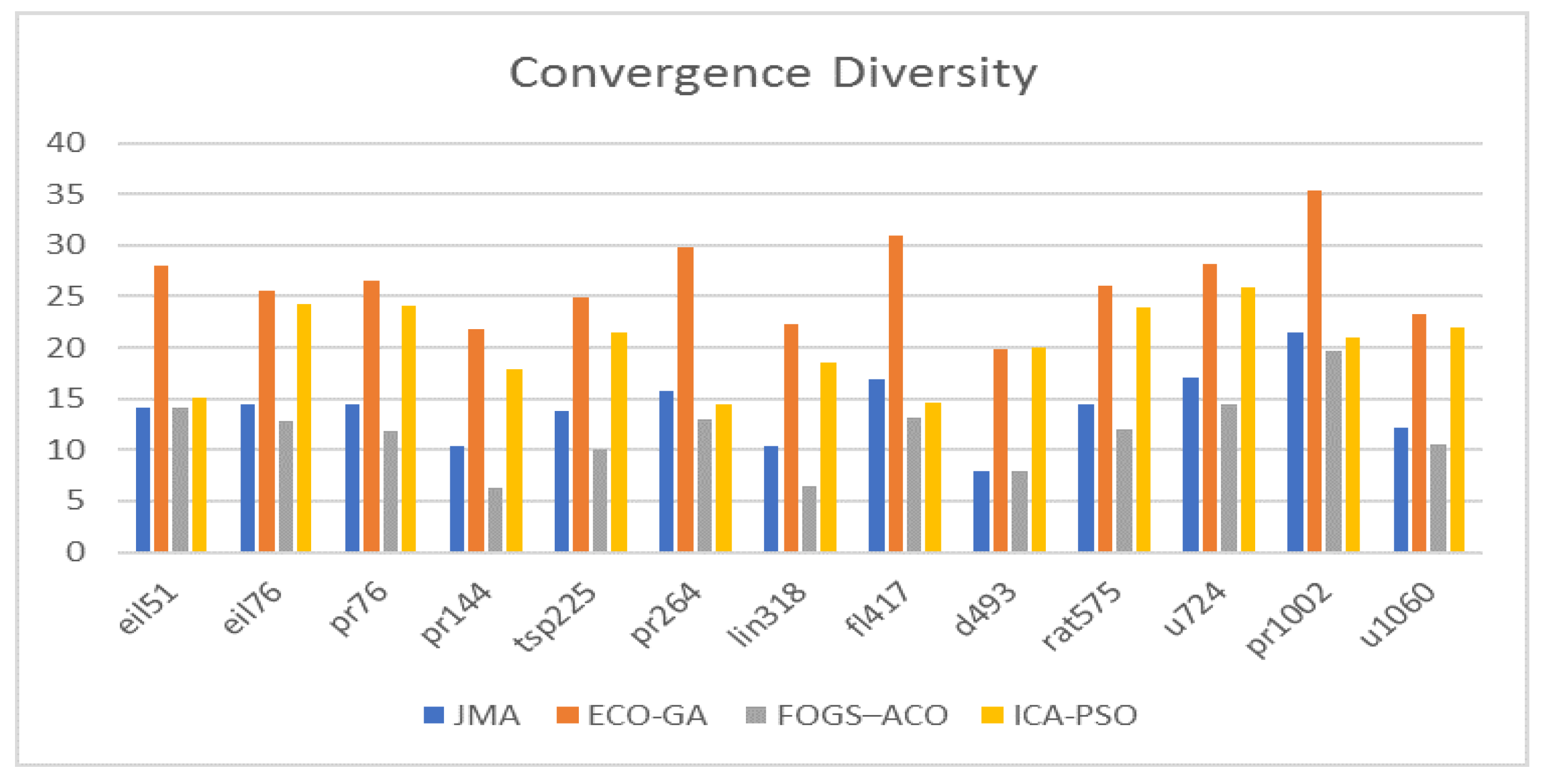

7.5. Analyzes Based on Convergence Diversity

One of the important assessment criteria that provides a concrete evidence of the optimal solution is convergence diversity. According to

Figure 9, the performance of the existing ECO–GA surpassed the other algorithms in terms of diversity. For the sample instance u1060, the exisiting FOGS–ACO has a convergence diversity of 10.58%, the JMA has a convergence diversity for the instance as 12.19%, then the ICA–PSO has a value of 22%, whereas the ECO–GA achieved a highest value of 23.31%. The proposed JMA dominated the existing FOGS–ACO and was dominated by the other existing techniques, such as ECO–GA and ICA–PSO. Thus, the proposed JMA maintained the satisfactory level of convergence diversity among the population, and also achieved the best performance in terms of convergence with the existing algorithm.