1. Introduction

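Consider cross-classified data: $(X_1,Y_1),(X_2,Y_2),\dots,(X_n,Y_n)$, where $X_a\in[I]=\{1,2,\dots,I\}$ and $Y_a\in[J]$ (for $1\le a\le n$). Such data are often presented as an $I\times J$ contingency table $(t_{ij})$, where $t_{ij}$ is the number of times $(X_a,Y_a)=(i,j)$ happens. Suppose that $\{(X_a,Y_a)\}_{a=1}^\infty$ are exchangeable and extendible. Then, de Finetti's theorem says: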

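Theorem 1. For exchangeable $\{(X_a,Y_a)\}_{a=1}^\infty$ taking values in $[I]\times[J]$,
$$P(X_1=x_1,Y_1=y_1,\dots,X_n=x_n,Y_n=y_n)=\int_{\Delta_{IJ}}\prod_{i,j}p_{ij}^{t_{ij}}\,\mu(dp),$$
where $\Delta_{IJ}=\{(p_{ij}):\ p_{ij}\ge0,\ \sum_{i,j}p_{ij}=1\}$ and $t_{ij}$ is the number of $a\le n$ with $(x_a,y_a)=(i,j)$. The representing measure μ is unique. A popular model for cross classified data is
$$p_{ij}=\theta_i\,\eta_j.$$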

Here is a Bayesian, parameter free, description.

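Theorem 2. For exchangeable $\{(X_a,Y_a)\}_{a=1}^\infty$ taking values in $[I]\times[J]$, a necessary and sufficient condition for the mixing measure μ in Theorem 1 to be supported on $\{p_{ij}=\theta_i\eta_j\}$ (with $\theta\in\Delta_I$, $\eta\in\Delta_J$), so that, with row sums $t_{i\cdot}=\sum_j t_{ij}$ and column sums $t_{\cdot j}=\sum_i t_{ij}$,
$$P(X_1=x_1,Y_1=y_1,\dots,X_n=x_n,Y_n=y_n)=\int_{\Delta_I\times\Delta_J}\prod_{i}\theta_i^{t_{i\cdot}}\prod_{j}\eta_j^{t_{\cdot j}}\,\mu(d\theta,d\eta),$$
is that the condition
$$P(X_1=x_1,Y_1=y_1,X_2=x_2,Y_2=y_2,E)=P(X_1=x_1,Y_1=y_2,X_2=x_2,Y_2=y_1,E)\tag{1}$$
holds for any $x_1,x_2\in[I]$, $y_1,y_2\in[J]$ and any $E\in\sigma\big((X_k,Y_k),\ 3\le k\le n\big)$, $n\ge3$.

Proof. Condition (1) implies, for all $n$ and $3\le m\le n$,
$$P\big(X_1=x_1,Y_1=y_1,X_2=x_2,Y_2=y_2\mid (X_k,Y_k),\,m\le k\le n\big)=P\big(X_1=x_1,Y_1=y_2,X_2=x_2,Y_2=y_1\mid (X_k,Y_k),\,m\le k\le n\big)\tag{2}$$
(suppressing "$P$ a.s." throughout). Let $n\to\infty$ and then $m\to\infty$. Let $\mathcal T$ be the tail field of $\{(X_k,Y_k)\}_{k=1}^\infty$. Then, Doob's increasing and decreasing martingale theorems show
$$P(X_1=x_1,Y_1=y_1,X_2=x_2,Y_2=y_2\mid\mathcal T)=P(X_1=x_1,Y_1=y_2,X_2=x_2,Y_2=y_1\mid\mathcal T).$$
However, a standard form of de Finetti's theorem says that, given $\mathcal T$, the $(X_k,Y_k)$ are i.i.d. with $P(X_1=i,Y_1=j\mid\mathcal T)=p_{ij}$. Thus
$$p_{x_1y_1}\,p_{x_2y_2}=p_{x_1y_2}\,p_{x_2y_1}.\tag{3}$$
Finally, observe that (3) implies (writing $p_{i\cdot}=\sum_j p_{ij}$, $p_{\cdot j}=\sum_i p_{ij}$, and summing (3) over $x_2$ and $y_2$)
$$p_{ij}=p_{i\cdot}\,p_{\cdot j}.$$
□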
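A small numerical check of Theorem 2 may help fix ideas. The sketch below is an illustration, not part of the argument above: it replaces μ by a finite mixture (an assumption made only so that probabilities can be computed exactly) and checks condition (1), with $E$ a cylinder set, by brute force. A mixture of product tables $\theta\eta^{\mathsf T}$ passes; a single table with row/column dependence fails, in line with (3). The helper names are hypothetical.

```python
import itertools

import numpy as np


def mixture_prob(xs, ys, weights, tables):
    """P(X_a = xs[a], Y_a = ys[a], a = 1..n) when, given the tail field, the
    pairs are i.i.d. from the table p = tables[c], chosen with prob weights[c].
    The finite mixture is an illustrative stand-in for the measure mu."""
    return sum(w * np.prod([p[x, y] for x, y in zip(xs, ys)])
               for w, p in zip(weights, tables))


def satisfies_condition_1(weights, tables, n=3):
    """Brute-force check of condition (1) with E a cylinder set on
    coordinates 3..n: swapping Y_1 and Y_2 must leave every probability unchanged."""
    I, J = tables[0].shape
    for xs in itertools.product(range(I), repeat=n):
        for ys in itertools.product(range(J), repeat=n):
            swapped = (ys[1], ys[0]) + ys[2:]
            if not np.isclose(mixture_prob(xs, ys, weights, tables),
                              mixture_prob(xs, swapped, weights, tables)):
                return False
    return True


rng = np.random.default_rng(0)
# Two product tables p = theta eta^T (I = 2, J = 3), mixed with weights 0.3 / 0.7.
product_tables = [np.outer(rng.dirichlet(np.ones(2)), rng.dirichlet(np.ones(3)))
                  for _ in range(2)]
# A 2 x 2 table with dependence between rows and columns.
dependent_table = np.array([[0.4, 0.1], [0.1, 0.4]])

print(satisfies_condition_1([0.3, 0.7], product_tables))  # True
print(satisfies_condition_1([1.0], [dependent_table]))    # False
```

Every product table makes the swap harmless, because $\prod_a\theta_{x_a}\eta_{y_a}$ does not depend on the order of the $y_a$; the dependent table already fails at $n=3$.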

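We remark on the following points.

1. If $E=\{X_3=x_3,Y_3=y_3,\dots,X_n=x_n,Y_n=y_n\}$, condition (1) is equivalent to
$$P(X_1=x_1,Y_1=y_1,\dots,X_n=x_n,Y_n=y_n)=P(X_1=x_1,Y_1=y_{\sigma(1)},\dots,X_n=x_n,Y_n=y_{\sigma(n)})$$
for all $n$ and $\sigma\in S_n$ ($S_n$ is the symmetric group over $[n]$). Since the $(X_a,Y_a)$ are exchangeable, this is equivalent to saying the law is invariant under $S_n\times S_n$, acting separately on the $X$'s and on the $Y$'s.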

2. The mixing measure $\mu(d\theta,d\eta)$ allows general dependence between the row parameters $\theta$ and column parameters $\eta$. Classical Bayesian analysis of contingency tables often chooses $\mu(d\theta,d\eta)=\mu_1(d\theta)\,\mu_2(d\eta)$ so that $\theta$ and $\eta$ are independent. A parameter free version is that under $P$, the row sums $(t_{1\cdot},\dots,t_{I\cdot})$ and column sums $(t_{\cdot1},\dots,t_{\cdot J})$ are independent. It is natural to weaken this to "close to independent" along the lines of [1] or [2]. See also [3].

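3. Theorems 1 and 2 have been stated for discrete state spaces. By a standard discretization argument, they hold for quite general spaces. For example:

Theorem 3. Let $\{(X_a,Y_a)\}_{a=1}^\infty$ be exchangeable with $X_a\in\mathcal X$, $Y_a\in\mathcal Y$, where $\mathcal X$ and $\mathcal Y$ are complete separable metric spaces. Suppose
$$P(X_1\in A_1,Y_1\in B_1,X_2\in A_2,Y_2\in B_2,E)=P(X_1\in A_1,Y_1\in B_2,X_2\in A_2,Y_2\in B_1,E)$$
for all measurable $A_1,A_2\subseteq\mathcal X$, $B_1,B_2\subseteq\mathcal Y$, all $E\in\sigma\big((X_k,Y_k),\ 3\le k\le n\big)$ and all $n$. Then,
$$P(X_1\in A_1,Y_1\in B_1,\dots,X_n\in A_n,Y_n\in B_n)=\int\prod_{a=1}^n\theta(A_a)\,\eta(B_a)\,\mu(d\theta,d\eta),$$
with $\theta$ and $\eta$ ranging over the probabilities on the Borel sets of $\mathcal X$ and $\mathcal Y$, respectively. The mixing measure μ is unique.

4. Theorem 2 is closely related to de Finetti's work in [1,4].

5. De Finetti's law of large numbers holds as well: in Theorem 3, the empirical measures $\frac1n\sum_{a=1}^n\delta_{(X_a,Y_a)}$ converge weakly, almost surely, to a random product measure $\theta\times\eta$, and μ is the law of $(\theta,\eta)$.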

One object of this paper is to develop similar parameter free de Finetti theorems for widely used log-linear models for discrete data.

Section 2 begins by relating this to an ongoing conversation with Eugenio Regazzini.

Section 3 provides needed background on discrete exponential families and algebraic statistics.

Sections 4 and 5 apply those tools to give de Finetti style partial exchangeability theorems for some widely used hierarchical and graphical models for contingency tables.

Section 6 shows how these exponential family tools can be used for other Bayesian tasks: building “de Finetti priors” for “almost exchangeability” and running the “exchange” algorithm for doubly intractable Bayesian computation. Some philosophy and open problems are in the final section.

2. Some History

I was lucky enough to be able to speak at Eugenio Regazzini's 60th birthday celebration, in Milan, in 2006. My talk began this way:

« Hello, my name is Persi and I have a problem. »

For those of you not aware of the many "12-step programs" (Alcoholics Anonymous, Gamblers Anonymous, …), they all begin this way, with the participants admitting to having a problem. In my case the problem was this:

- (a) After 50 years of thinking about it, I think that the subjectivist approach to probability, induction and statistics is the only thing that works;
- (b) At the same time, I have done a lot of work inventing and analyzing various schemes for generating random samples for things like contingency tables with given row and column sums; graphs with given degree sequences; …; Markov chain Monte Carlo. These are used for things like permutation tests and Fisher's exact test.

There is a lot of nice mathematics and hard work in (b), but such tests violate the likelihood principle and lead to poor scientific practice. Hence my problem (I still have it): (a) and (b) are incompatible.

There has been some progress. I now see how some of the tools developed for (b) can be usefully employed for natural tasks suggested by (a). Not so many people care about such inferential questions in these ’big data’ days. However, there are also lots of small datasets where the inferential details matter. There are still useful questions for people like Eugenio (and me).

3. Background on Exponential Families and Algebraic Statistics

The following development is closely based on [5], which should be consulted for examples, proofs and more details.

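Let $\mathcal X$ be a finite set. Consider the exponential family:
$$p_\theta(x)=Z(\theta)^{-1}e^{\theta\cdot T(x)},\qquad x\in\mathcal X.\tag{4}$$
Here, $Z(\theta)$ is a normalizing constant, $T:\mathcal X\to\mathbb N^d$ and $\theta\in\mathbb R^d$. If $X_1,\dots,X_n$ are independent and identically distributed from (4), the statistic $t=T(X_1)+\dots+T(X_n)$ is sufficient for $\theta$. Let
$$\mathcal X_t=\{(x_1,\dots,x_n)\in\mathcal X^n:\ T(x_1)+\dots+T(x_n)=t\}.$$
Under (4), the distribution of $(X_1,\dots,X_n)$ given $t$ is uniform on $\mathcal X_t$. It is usual to record a sample by its counts $f(x)=\#\{a:\ x_a=x\}$ and to write
$$\mathcal Y_t=\Big\{f:\mathcal X\to\mathbb N:\ \sum_{x\in\mathcal X}f(x)\,T(x)=t\Big\}.$$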

Example 1. For contingency tables, $\mathcal X=[I]\times[J]$. The usual model for independence has $T(i,j)$ a vector of length $I+J$ with two non zero entries equal to 1. The 1's in $T(i,j)$ are in the $i$th place and in position $j$ of the last $J$ places. The sufficient statistic $t$ contains the row and column sums of the contingency table associated to the first $n$ observations. The set $\mathcal Y_t$ is the set of all $I\times J$ tables with these row and column sums.

A Markov chain on $\mathcal Y_t$ can be based on the following moves: pick $i\ne i'$ and $j\ne j'$, and change the entries in the current $f$ by adding $\pm1$ (sign chosen at random) in the pattern
$$\begin{array}{c|cc} & j & j'\\\hline i & + & -\\ i' & - & +\end{array}$$
This does not change the row sums and it does not change the column sums. If told to go negative, just pick new $i,i',j,j'$. This gives a connected, aperiodic Markov chain on $\mathcal Y_t$ with a uniform stationary distribution. See [6]. Returning to the general case, an analog of moves is given by the following:

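Definition 1 (Markov basis).

A Markov basis is a set of functions $f_1,f_2,\dots,f_L$ from $\mathcal X$ to $\mathbb Z$ such that
$$\sum_{x\in\mathcal X}f_i(x)\,T(x)=0,\qquad 1\le i\le L,\tag{5}$$
and that for any $t$ and $f,f'\in\mathcal Y_t$ there are $(\epsilon_1,f_{i_1}),\dots,(\epsilon_A,f_{i_A})$ with $\epsilon_a=\pm1$, such that
$$f'=f+\sum_{a=1}^{A}\epsilon_a f_{i_a}\quad\text{and}\quad f+\sum_{a=1}^{b}\epsilon_a f_{i_a}\ge0\ \text{ for } 1\le b\le A.\tag{6}$$
This allows the construction of a Markov chain on $\mathcal Y_t$: from $f$, pick $i\in\{1,\dots,L\}$ and $\epsilon=\pm1$ at random and consider $f+\epsilon f_i$. If this is nonnegative, move there. If not, stay at $f$. Assumptions (5) and (6) ensure that this Markov chain is symmetric and ergodic with a uniform stationary distribution. Below, I will use a Markov basis to formulate a de Finetti theorem to characterize mixtures of the model (4).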
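The walk of Definition 1 is short to code. The following Python sketch is illustrative only: it encodes a function $f:\mathcal X\to\mathbb N$ as a dictionary from cells to counts, takes $T$ to be the independence statistic of Example 1 and the moves to be the $\pm1$ swaps of Example 1, checks (5) for each move, and then runs the chain with the "stay at $f$" rule of Definition 1. All function names are hypothetical.

```python
import itertools

import numpy as np


def T_indep(cell, I, J):
    """Statistic of Example 1: length I + J, a 1 in place i and in place I + j."""
    i, j = cell
    v = np.zeros(I + J, dtype=int)
    v[i] = 1
    v[I + j] = 1
    return v


def stat(f, T, d):
    """sum_x f(x) T(x) for f given as a dict cell -> integer."""
    return sum((c * T(x) for x, c in f.items()), np.zeros(d, dtype=int))


def swap_moves(I, J):
    """The +/-1 moves of Example 1, one for each i < i', j < j'."""
    return [{(i, j): 1, (i2, j2): 1, (i, j2): -1, (i2, j): -1}
            for i, i2 in itertools.combinations(range(I), 2)
            for j, j2 in itertools.combinations(range(J), 2)]


def basis_step(f, moves, rng):
    """One step of the walk: pick a move and a sign at random, move if all
    counts stay nonnegative, otherwise stay at f."""
    move = moves[rng.integers(len(moves))]
    eps = rng.choice([-1, 1])
    g = dict(f)
    for x, c in move.items():
        g[x] = g.get(x, 0) + eps * c
    return g if min(g.values()) >= 0 else f


I, J = 2, 3


def T(cell):
    return T_indep(cell, I, J)


moves = swap_moves(I, J)
# Condition (5): every move has zero net effect on the statistic.
assert all(not stat(m, T, I + J).any() for m in moves)

rng = np.random.default_rng(1)
f = {(0, 0): 3, (0, 1): 1, (0, 2): 0, (1, 0): 2, (1, 1): 2, (1, 2): 4}
t0 = stat(f, T, I + J)  # row sums followed by column sums
for _ in range(1000):
    f = basis_step(f, moves, rng)
print(np.array_equal(stat(f, T, I + J), t0))  # True
```

Because each move satisfies (5), every step stays in the same fiber $\mathcal Y_t$; the nonnegativity check is what keeps the walk inside the set of tables.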

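One of the main contributions of [5] is a method of effectively constructing Markov bases using polynomial algebra. For each $x\in\mathcal X$, introduce an indeterminate, also called $x$. Consider the ring of polynomials $k[\mathcal X]$ in these indeterminates, where $k$ is a field, e.g., the complex numbers. A function $g:\mathcal X\to\mathbb N$ is represented as a monomial $\mathcal X^g=\prod_{x\in\mathcal X}x^{g(x)}$. The function $T$ gives a homomorphism
$$\varphi_T:k[\mathcal X]\to k[t_1,\dots,t_d],\qquad \varphi_T(x)=t_1^{T_1(x)}\,t_2^{T_2(x)}\cdots t_d^{T_d(x)},$$
extended linearly and multiplicatively ($\varphi_T(x+y)=\varphi_T(x)+\varphi_T(y)$ and $\varphi_T(x^2)=\varphi_T(x)^2$ and so on). The basic object of interest is the kernel of $\varphi_T$:
$$I_T=\{h\in k[\mathcal X]:\ \varphi_T(h)=0\}.$$
This is an ideal in $k[\mathcal X]$. A key result of [5] is that a generating set for $I_T$ is equivalent to a Markov basis. To state this, observe that any $f:\mathcal X\to\mathbb Z$ can be written $f=f^+-f^-$ with $f^+(x)=\max(f(x),0)$ and $f^-(x)=\max(-f(x),0)$. Observe that $\sum_x f(x)T(x)=0$ iff $\mathcal X^{f^+}-\mathcal X^{f^-}\in I_T$. The key result is

Theorem 4. A collection of functions $f_1,\dots,f_L$ is a Markov basis if and only if the set
$$\{\mathcal X^{f_i^+}-\mathcal X^{f_i^-}:\ 1\le i\le L\}$$
generates the ideal $I_T$. Now, the Hilbert Basis Theorem shows that ideals in $k[\mathcal X]$ have finite generating sets, and modern computer algebra packages give an effective way of finding them.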
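To make the correspondence in Theorem 4 concrete, the sketch below (an illustration with hypothetical helper names, not code from [5]) stores a monomial $\mathcal X^g$ by its exponent function $g$. Writing $\varphi_T$ for the homomorphism sending the indeterminate $x$ to $t^{T(x)}$, the image of $\mathcal X^g$ is the $t$-monomial with exponent vector $\sum_x g(x)T(x)$, so the binomial $\mathcal X^{f^+}-\mathcal X^{f^-}$ is sent to 0 exactly when the two exponent vectors agree, i.e. when (5) holds. Actual generating sets would be computed with a computer algebra system.

```python
import numpy as np


def T_indep(cell, I=2, J=2):
    """Statistic of Example 1 for a 2 x 2 table: indicator of row i and of column j."""
    i, j = cell
    v = np.zeros(I + J, dtype=int)
    v[i] = 1
    v[I + j] = 1
    return v


def split(f):
    """Write f = f_plus - f_minus with f_plus = max(f, 0), f_minus = max(-f, 0)."""
    f_plus = {x: c for x, c in f.items() if c > 0}
    f_minus = {x: -c for x, c in f.items() if c < 0}
    return f_plus, f_minus


def phi_T_exponent(g, T, d):
    """Exponent vector of phi_T applied to the monomial prod_x x^{g(x)}."""
    return sum((c * T(x) for x, c in g.items()), np.zeros(d, dtype=int))


def binomial_in_kernel(f, T, d):
    """True iff X^{f_plus} - X^{f_minus} is sent to 0 by phi_T."""
    f_plus, f_minus = split(f)
    return np.array_equal(phi_T_exponent(f_plus, T, d),
                          phi_T_exponent(f_minus, T, d))


swap = {(0, 0): 1, (1, 1): 1, (0, 1): -1, (1, 0): -1}  # x00 x11 - x01 x10
not_a_move = {(0, 0): 1, (0, 1): -1}                    # x00 - x01
print(binomial_in_kernel(swap, T_indep, 4))        # True
print(binomial_in_kernel(not_a_move, T_indep, 4))  # False
```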

I do not want (or need) to develop this further. See [5] or the book by Sullivant [7] or Aoki et al. [8]. There is even a Journal of Algebraic Statistics.

I hope that the above gives a flavor for what I mean by “working in (b) is hard honest work”. Most of the applications are for standard frequentist tasks. In the following sections, I will give Bayesian applications.

4. Log Linear Model for Contingency Tables

Log linear models for multiway contingency tables are a healthy part of modern statistics. The index set is $\mathcal X=\prod_{\gamma\in\Gamma}\mathcal I_\gamma$ with $\Gamma$ indexing categories and $\mathcal I_\gamma$ the levels of $\gamma$. Let $p(x)$ be the probability of falling into cell $x\in\mathcal X$. A log linear model can be specified by writing:
$$\log p(x)=\sum_{a\subseteq\Gamma}\lambda_a(x).$$
The sum ranges over subsets $a$ of $\Gamma$ and $\lambda_a(x)$ means a function that only depends on $x$ through the coordinates in $a$. Thus, $\lambda_\emptyset$ is a constant and $\lambda_\Gamma$ is allowed to depend on all coordinates. Specifying $\lambda_a\equiv0$ for some class of sets $a$ determines a model. Background and extensive references are in [9]. If the $a$ with $\lambda_a\ne0$ permitted form a simplicial complex $\mathcal C$ (so $\emptyset\in\mathcal C$, and $a\in\mathcal C$, $b\subseteq a$ imply $b\in\mathcal C$) the model is called hierarchical. If $\mathcal C$ consists of the cliques in a graph, the model is called graphical. If the graph is chordal (every cycle of length $\ge4$ contains a chord) the graphical model is called decomposable.
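Whether a graphical model is decomposable can be checked mechanically. The sketch below uses maximum cardinality search, a standard chordality test due to Tarjan and Yannakakis that is swapped in here for illustration (the text does not prescribe an algorithm): visit the vertices one at a time, always choosing an unvisited vertex with the most visited neighbours; the graph is chordal iff, for every vertex, its previously visited neighbours form a clique. The function name and the example graphs are hypothetical.

```python
from itertools import combinations


def is_chordal(adj):
    """Chordality test by maximum cardinality search.
    adj maps each vertex to the set of its neighbours (undirected graph)."""
    weight = {v: 0 for v in adj}   # number of already-visited neighbours
    order = []
    while weight:
        v = max(weight, key=weight.get)
        del weight[v]
        order.append(v)
        for u in adj[v]:
            if u in weight:
                weight[u] += 1
    position = {v: k for k, v in enumerate(order)}
    for v in order:
        earlier = [u for u in adj[v] if position[u] < position[v]]
        if any(b not in adj[a] for a, b in combinations(earlier, 2)):
            return False
    return True


four_cycle = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {0, 2}}
with_chord = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1, 3}, 3: {0, 2}}
path = {0: {1}, 1: {0, 2}, 2: {1}}
print(is_chordal(four_cycle), is_chordal(with_chord), is_chordal(path))
# False True True
```

Every graph on three vertices is chordal, since it has no cycle of length 4 or more, so every graphical model for a three way table is decomposable.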

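Example 2 (3 way contingency tables).

The graphical models for three way tables are:

[Figure: graphical models for three way tables]

The simplest hierarchical model that is not graphical is the no three way interaction model.

This can be specified by saying 'the odds ratio of any pair of variables does not depend on the third'. Thus,
$$\frac{p_{ijk}\,p_{i'j'k}}{p_{ij'k}\,p_{i'jk}}=\frac{p_{ijk'}\,p_{i'j'k'}}{p_{ij'k'}\,p_{i'jk'}}\quad\text{for all } i,i',j,j',k,k'.\tag{7}$$
As one motivation, recall that for two variables, the independence model is specified by
$$p_{ij}=\theta_i\,\eta_j.$$
For three variables, suppose there are parameters $\theta_{ij},\eta_{jk},\psi_{ik}$ satisfying:
$$p_{ijk}=\theta_{ij}\,\eta_{jk}\,\psi_{ik}.\tag{8}$$
It is easy to see that (8) entails (7), hence 'no three way interaction'. Cross multiplying (7) entails
$$p_{ijk}\,p_{i'j'k}\,p_{ij'k'}\,p_{i'jk'}=p_{ij'k}\,p_{i'jk}\,p_{ijk'}\,p_{i'j'k'}.\tag{9}$$
This is the form we will work with for the de Finetti theorems below.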
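A quick numerical sanity check, not a proof: the sketch draws arbitrary positive parameters $\theta_{ij},\eta_{jk},\psi_{ik}$ (dimensions and seed are arbitrary choices), forms $p$ by (8), and confirms that the $(i,j)$ odds ratio is the same in every $k$ plane, as in (7), and that the cross-multiplied relation holds. A table with a genuine three way interaction is included for contrast. The helper names are hypothetical.

```python
import numpy as np


def odds_ratio(p, i, i2, j, j2, k):
    """Odds ratio of the first two variables in the k-th plane."""
    return p[i, j, k] * p[i2, j2, k] / (p[i, j2, k] * p[i2, j, k])


def cross_multiplied_holds(p, i, i2, j, j2, k, k2):
    lhs = p[i, j, k] * p[i2, j2, k] * p[i, j2, k2] * p[i2, j, k2]
    rhs = p[i, j2, k] * p[i2, j, k] * p[i, j, k2] * p[i2, j2, k2]
    return bool(np.isclose(lhs, rhs))


rng = np.random.default_rng(2)
I, J, K = 3, 3, 2
theta = rng.random((I, J)) + 0.1   # theta_{ij}
eta = rng.random((J, K)) + 0.1     # eta_{jk}
psi = rng.random((I, K)) + 0.1     # psi_{ik}
p = np.einsum('ij,jk,ik->ijk', theta, eta, psi)  # p_ijk = theta_ij eta_jk psi_ik
p /= p.sum()

print(np.isclose(odds_ratio(p, 0, 2, 1, 2, 0), odds_ratio(p, 0, 2, 1, 2, 1)))  # True
print(cross_multiplied_holds(p, 0, 1, 0, 2, 0, 1))                             # True

# A 2 x 2 x 2 table with a three way interaction: weight 2 on cells with i+j+k even.
q = np.fromfunction(lambda i, j, k: 1.0 + ((i + j + k) % 2 == 0), (2, 2, 2))
q /= q.sum()
print(odds_ratio(q, 0, 1, 0, 1, 0), odds_ratio(q, 0, 1, 0, 1, 1))  # approximately 4 and 0.25
```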

For background, history and examples (and some nice theorems) see ([10], Section 8.2) and [11,12]. Simpson's 'paradox' [13] is based on understanding the no three way interaction model. Further discussion is in Section 5 below.

5. From Markov Bases to de Finetti Theorems

Suppose $\mathcal X$ is a finite set, $T:\mathcal X\to\mathbb N^d$ is a statistic and $f_1,\dots,f_L$ is a Markov basis as in Section 3. The following development shows how to translate this into de Finetti theorems for the contingency table examples of Section 4. The first argument abstracts the argument used for Theorem 2 above.

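Lemma 1 (Key Lemma).

Let $\mathcal X$ be a finite set and $X_1,X_2,\dots$ an exchangeable sequence of $\mathcal X$-valued random variables. Suppose
$$P(X_1=x_1,\dots,X_a=x_a,X_{a+1}=z_{a+1},\dots,X_n=z_n)=P(X_1=y_1,\dots,X_a=y_a,X_{a+1}=z_{a+1},\dots,X_n=z_n)\tag{10}$$
for all $n>a$ and all $z_{a+1},\dots,z_n\in\mathcal X$. In (10), $a$, $x_1,\dots,x_a$ and $y_1,\dots,y_a$ are fixed and $z_{a+1},\dots,z_n$ are arbitrary. Then, if $\mathcal T$ is the tail field of $\{X_i\}_{i=1}^\infty$ and $p(x)=P(X_1=x\mid\mathcal T)$,
$$\prod_{i=1}^a p(x_i)=\prod_{i=1}^a p(y_i).\tag{11}$$
Proof. From (10) and exchangeability, for $a<m\le n$,
$$P(X_1=x_1,\dots,X_a=x_a,X_m=z_m,\dots,X_n=z_n)=P(X_1=y_1,\dots,X_a=y_a,X_m=z_m,\dots,X_n=z_n),$$
so
$$P(X_1=x_1,\dots,X_a=x_a\mid X_m,\dots,X_n)=P(X_1=y_1,\dots,X_a=y_a\mid X_m,\dots,X_n).$$
Let $n\to\infty$ and then $m\to\infty$; use Doob's upward and then downward martingale convergence theorems to see:
$$P(X_1=x_1,\dots,X_a=x_a\mid\mathcal T)=P(X_1=y_1,\dots,X_a=y_a\mid\mathcal T).$$
Now, de Finetti's theorem implies (11). □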

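Remark 1. The Key Lemma shows that the $p(x)$ satisfy certain relations. Using choices of $x_1,\dots,x_a$ and $y_1,\dots,y_a$ derived from a Markov basis will show that the $p(x)$ satisfy the required independence properties. Suppose that $f=f^+-f^-$ is a move in a Markov basis and that $\sum_x f^+(x)=\sum_x f^-(x)=a$. Enumerate the points $x$ with $f^+(x)>0$, each repeated $f^+(x)$ times, as $x_1,\dots,x_a$, and the points with $f^-(x)>0$, each repeated $f^-(x)$ times, as $y_1,\dots,y_a$. Then (11) reads $\prod_x p(x)^{f^+(x)}=\prod_x p(x)^{f^-(x)}$. Assumption (10) and conclusion (11) will give our theorems.

Example 3 (Independence in a two way table).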

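Let $\mathcal X=[I]\times[J]$. A minimal basis for the independence model is given by the moves $f_{ii'jj'}$, $1\le i<i'\le I$, $1\le j<j'\le J$, with $+1$ at $(i,j)$ and $(i',j')$ and $-1$ at $(i,j')$ and $(i',j)$. The condition of the Key Lemma becomes:
$$P\big(X_1=(i,j),X_2=(i',j'),X_3=z_3,\dots,X_n=z_n\big)=P\big(X_1=(i,j'),X_2=(i',j),X_3=z_3,\dots,X_n=z_n\big).$$
Passing to the limit gives
$$p(i,j)\,p(i',j')=p(i,j')\,p(i',j)$$
and so
$$p(i,j)=p(i,\cdot)\,p(\cdot,j).$$
This is precisely Theorem 2 of the Introduction. □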
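The bookkeeping in Remark 1, turning a move $f$ into the two sequences $x_1,\dots,x_a$ and $y_1,\dots,y_a$ that enter (10), can be sketched as follows. The helper names are hypothetical; the final check evaluates both sides of (11) for a product table $p(i,j)=\theta_i\eta_j$, where they agree, as Example 3 predicts.

```python
import numpy as np


def move_to_sequences(move):
    """List x_1..x_a (cell x repeated f_plus(x) times) and y_1..y_a
    (cell x repeated f_minus(x) times) for a move f given as dict cell -> int."""
    xs = [x for x, c in sorted(move.items()) if c > 0 for _ in range(c)]
    ys = [x for x, c in sorted(move.items()) if c < 0 for _ in range(-c)]
    if len(xs) != len(ys):
        raise ValueError("f_plus and f_minus must have the same total mass a")
    return xs, ys


def both_sides_of_11(p, move):
    """prod_i p(x_i) and prod_i p(y_i) for the sequences built from the move."""
    xs, ys = move_to_sequences(move)
    return np.prod([p[x] for x in xs]), np.prod([p[y] for y in ys])


swap = {(0, 0): 1, (1, 1): 1, (0, 1): -1, (1, 0): -1}   # a move f_{ii'jj'} of Example 3
print(move_to_sequences(swap))  # ([(0, 0), (1, 1)], [(0, 1), (1, 0)])

p = np.outer([0.2, 0.8], [0.5, 0.3, 0.2])   # p(i, j) = theta_i eta_j
lhs, rhs = both_sides_of_11(p, swap)
print(np.isclose(lhs, rhs))  # True
```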

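Example 4 (Complete independence in a three way table).

The sufficient statistics are the one-way margins $t_{i\cdot\cdot}$, $t_{\cdot j\cdot}$, $t_{\cdot\cdot k}$. From [5], there are two kinds of moves in a minimal basis. Up to symmetries, these are:

| Class I | Class II |
|---|---|
| $(i,j,k),(i',j',k)\ \to\ (i,j',k),(i',j,k)$ | $(i,j,k),(i',j',k')\ \to\ (i,j',k),(i',j,k')$ |

Passing to the limit, this entails:
$$p(i,j,k)\,p(i',j',k')=p(i,j',k)\,p(i',j,k')\qquad(\text{including } k=k').$$
These may be said as 'the product of any two $p$'s remains unchanged if the middle coordinates are exchanged'. By symmetry, this remains true if the first (or the last) coordinates are exchanged. As above, this entails
$$p(i,j,k)=p(i,\cdot,\cdot)\,p(\cdot,j,\cdot)\,p(\cdot,\cdot,k).$$
These observations can be rephrased into a statement that looks more similar to the classical de Finetti theorem; using symmetry:

Theorem 5. Let $\{(X_a,Y_a,Z_a)\}_{a=1}^\infty$ be exchangeable, taking values in $[I]\times[J]\times[K]$. Then
$$P(X_1=x_1,Y_1=y_1,Z_1=z_1,\dots,X_n=x_n,Y_n=y_n,Z_n=z_n)=P(X_1=x_1,Y_1=y_{\sigma(1)},Z_1=z_{\tau(1)},\dots,X_n=x_n,Y_n=y_{\sigma(n)},Z_n=z_{\tau(n)})$$
for all $n$, and $\sigma,\tau\in S_n$ is necessary and sufficient for there to exist a unique μ on $\Delta_I\times\Delta_J\times\Delta_K$ with
$$P(X_1=x_1,Y_1=y_1,Z_1=z_1,\dots,X_n=x_n,Y_n=y_n,Z_n=z_n)=\int\prod_i\theta_i^{t_{i\cdot\cdot}}\prod_j\eta_j^{t_{\cdot j\cdot}}\prod_k\psi_k^{t_{\cdot\cdot k}}\,\mu(d\theta,d\eta,d\psi).$$
□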
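For a product table $p(i,j,k)=\theta_i\eta_j\psi_k$, both classes of relations in Example 4 hold, and the one-way margins recover $p$. The check below is illustrative only; the dimensions, seed and particular indices are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(3)
theta, eta, psi = (rng.dirichlet(np.ones(n)) for n in (2, 3, 4))
p = np.einsum('i,j,k->ijk', theta, eta, psi)   # p(i,j,k) = theta_i eta_j psi_k

# Class I: the two cells share the last coordinate; exchange the middle coordinates.
class_I = np.isclose(p[0, 1, 2] * p[1, 2, 2], p[0, 2, 2] * p[1, 1, 2])
# Class II: the two cells differ in every coordinate; exchange the middle coordinates.
class_II = np.isclose(p[0, 1, 2] * p[1, 0, 3], p[0, 0, 2] * p[1, 1, 3])

# The one-way margins p(i,.,.), p(.,j,.), p(.,.,k) recover the table.
margins = (p.sum(axis=(1, 2)), p.sum(axis=(0, 2)), p.sum(axis=(0, 1)))
recovered = np.allclose(p, np.einsum('i,j,k->ijk', *margins))
print(class_I, class_II, recovered)  # True True True
```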

Example 5 (One variable independent of the other two).

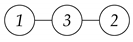

Suppose, without loss, that the graph is![Mathematics 10 00442 i002]()

Identify the pairs with with . The problem reduces to Example 4. A minimal basis consists of (again, up to relabeling) We may conclude

Theorem 6. Let be exchangeable, taking values in . Thenfor all n, and is necessary and sufficient for there to exist a unique μ on with □

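Example 6 (Conditional independence).

Suppose variables $i$ and $j$ are conditionally independent given $k$.

[Figure: the graph of this model, the path $i - k - j$]

Rewrite the parameter condition of Section 4 as $p_{ijk}=\psi_{ik}\,\eta_{jk}$ (that is, (8) with $\theta_{ij}\equiv1$). The sufficient statistics are $t_{i\cdot k}$ and $t_{\cdot jk}$. From [5], a minimal generating set is
$$\{x_{ijk}\,x_{i'j'k}-x_{ij'k}\,x_{i'jk}:\ i<i',\ j<j',\ 1\le k\le K\}.$$
From this, the Key Lemma shows (for all $i,i',j,j',k$)
$$p(i,j,k)\,p(i',j',k)=p(i,j',k)\,p(i',j,k).$$
Again, we phrase condition (10) in terms of symmetry.

Theorem 7. Let $\{(X_a,Y_a,Z_a)\}_{a=1}^\infty$ be exchangeable, taking values in $[I]\times[J]\times[K]$. Then,
$$P(X_1=x_1,Y_1=y_1,Z_1=z_1,\dots,X_n=x_n,Y_n=y_n,Z_n=z_n)=P(X_1=x_1,Y_1=y_{\sigma(1)},Z_1=z_1,\dots,X_n=x_n,Y_n=y_{\sigma(n)},Z_n=z_n)\tag{12}$$
for all $n$, and $\sigma\in S_n$ with $z_{\sigma(a)}=z_a$, $1\le a\le n$, is necessary and sufficient for there to exist a unique probability μ on $\{p\in\Delta_{IJK}:\ p_{ijk}\,p_{\cdot\cdot k}=p_{i\cdot k}\,p_{\cdot jk}\}$ with
$$P(X_1=x_1,Y_1=y_1,Z_1=z_1,\dots,X_n=x_n,Y_n=y_n,Z_n=z_n)=\int\prod_{i,j,k}p_{ijk}^{t_{ijk}}\,\mu(dp).\tag{13}$$

Both (12) and (13) have a simple interpretation. For (12), the $(X_a,Y_a,Z_a)$ are exchangeable 3-vectors. For any $k$ and specified sequence of values $(x_a,y_a,z_a)$, $1\le a\le n$, the chance of observing these values is unchanged under permuting the $y_a$ with $z_a=k$, by permutations $\sigma_k$. Here the $\sigma_k$ are allowed to depend on $k$.

On the right of (13), the mixing measure may be understood as follows. There is a probability ω on $\Delta_K$. Pick $r\sim\omega$. Given this $r$, pick $(\theta^k,\eta^k)$ on the $k$th copy of $\Delta_I\times\Delta_J$, $1\le k\le K$, and set $p_{ijk}=r_k\,\theta^k_i\,\eta^k_j$. These choices are allowed to depend on $r$.

All of this simply says that, conditional on the tail field $\mathcal T$,
$$P(X_1=i,Y_1=j\mid Z_1=k,\mathcal T)=P(X_1=i\mid Z_1=k,\mathcal T)\,P(Y_1=j\mid Z_1=k,\mathcal T).$$
The first two coordinates are conditionally independent given the third.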
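The parametrization $p_{ijk}=r_k\theta^k_i\eta^k_j$ described for the mixing measure can be checked numerically. This illustrative sketch (arbitrary dimensions and seed) builds such a table and confirms the slice-wise $2\times2$ relations from the generating set, together with the equivalent statement $p_{ijk}\,p_{\cdot\cdot k}=p_{i\cdot k}\,p_{\cdot jk}$.

```python
import numpy as np

rng = np.random.default_rng(4)
I, J, K = 2, 3, 2
r = rng.dirichlet(np.ones(K))               # distribution of the third coordinate
theta = rng.dirichlet(np.ones(I), size=K)   # theta[k]: a point of the k-th copy of Delta_I
eta = rng.dirichlet(np.ones(J), size=K)     # eta[k]: a point of the k-th copy of Delta_J
p = np.einsum('k,ki,kj->ijk', r, theta, eta)  # p_ijk = r_k theta^k_i eta^k_j

# Relations from the generating set: 2 x 2 cross products within each plane k.
slice_ok = all(np.isclose(p[0, 0, k] * p[1, 2, k], p[0, 2, k] * p[1, 0, k])
               for k in range(K))

# Equivalent form: p_ijk p_..k = p_i.k p_.jk.
p_ik, p_jk, p_k = p.sum(axis=1), p.sum(axis=0), p.sum(axis=(0, 1))
ci_ok = np.allclose(p * p_k, p_ik[:, None, :] * p_jk[None, :, :])
print(slice_ok, ci_ok)  # True True
```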

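Example 7 (No three way interaction).

The model is described in Section 4. The sufficient statistics are the line sums $t_{ij\cdot}$, $t_{i\cdot k}$ and $t_{\cdot jk}$. Minimal Markov bases have proved intractable. See [5] or [8]. For any fixed $I,J,K$, the computer can produce a Markov basis but these can have a huge number of terms. See [7,8] and their references for a surprisingly rich development. There is a pleasant surprise. Markov bases are required to connect the associated Markov chain. There is a natural subset, the first moves anyone considers, and these are enough for a satisfactory de Finetti theorem (!).

Described informally, for an $I\times J\times K$ array, pick a pair of parallel planes, say the $k$ and $k'$ planes in the three dimensional array, and consider moves depicted as
$$\underbrace{\begin{array}{c|cc} & j & j'\\\hline i & + & -\\ i' & - & +\end{array}}_{\text{plane }k}\qquad\underbrace{\begin{array}{c|cc} & j & j'\\\hline i & - & +\\ i' & + & -\end{array}}_{\text{plane }k'}$$
These moves preserve all line sums (the sufficient statistics). They are not sufficient to connect any two datasets with the same sufficient statistics. Using the prescription in the Key Lemma, suppose:
$$P\big(X_1=(i,j,k),X_2=(i',j',k),X_3=(i,j',k'),X_4=(i',j,k'),X_5=z_5,\dots,X_n=z_n\big)=P\big(X_1=(i,j',k),X_2=(i',j,k),X_3=(i,j,k'),X_4=(i',j',k'),X_5=z_5,\dots,X_n=z_n\big)\tag{14}$$
for all $n\ge4$ and all $z_5,\dots,z_n$. Passing to the limit gives
$$p_{ijk}\,p_{i'j'k}\,p_{ij'k'}\,p_{i'jk'}=p_{ij'k}\,p_{i'jk}\,p_{ijk'}\,p_{i'j'k'}.\tag{15}$$
This is exactly the no three way interaction condition. Or, equivalently: the odds ratios are constant on the $k$ and $k'$ planes (of course, they depend on $i,i',j,j'$). These considerations imply:

Theorem 8. Let $\{X_a\}_{a=1}^\infty$ be exchangeable, taking values in $[I]\times[J]\times[K]$. Then, condition (14) (for all $i,i',j,j',k,k'$) is necessary and sufficient for the existence of a unique probability μ on $\Delta_{IJK}$, supported on the no three way interaction variety (15), satisfying
$$P(X_1=x_1,\dots,X_n=x_n)=\int\prod_{i,j,k}p_{ijk}^{t_{ijk}}\,\mu(dp).$$
We remark on the following points.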

It follows from theorems in [12] and [11] that, if all $p_{ijk}>0$, condition (15) is equivalent to the unique representation
$$p_{ijk}=\theta_{ij}\,\eta_{jk}\,\psi_{ik},\tag{16}$$
where $\theta,\eta,\psi$ have positive entries and satisfy normalizing constraints that make the representation unique. The integral representation in the theorem can be stated in this parametrization. The condition that all $p_{ijk}>0$ can also be expressed as a condition on observables.

Condition (14) does not have an obvious symmetry interpretation.

Conditions (14) and (15) are stated via varying the third variable when $i,i',j,j'$ are fixed. Because of (16), if they hold in this form, they hold for any two variables fixed as the third varies.

It is possible to go on, but, as John Darroch put it, ’the extensions to higher order interactions… are not likely to be of practical interest’. The most natural development, the generalization to decomposable models, is being developed by Paula Gablenz.

There are many extensions of the Key Lemma above. These allow a similar development for more general log linear models and exponential families.

6. Discussion and Conclusions

The tools of algebraic statistics have been harnessed above to develop partial exchangeability for standard contingency table models. I have used them for two further Bayesian tasks: approximate exchangeability and the problem of ’doubly intractable priors’. As both are developed in other papers, I will be brief.

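Approximate exchangeability. Consider $n$ men and $m$ women along with a binary outcome. If the men are judged exchangeable (for fixed outcomes for the women) and vice versa, and if both sequences are extendable, de Finetti [1] shows that there is a unique prior $\mu(dp_1,dp_2)$ on the unit square $[0,1]^2$ such that, for any outcomes $e_1,\dots,e_n$ and $e'_1,\dots,e'_m$ in $\{0,1\}$ with $s=e_1+\dots+e_n$ and $s'=e'_1+\dots+e'_m$,
$$P(e_1,\dots,e_n,e'_1,\dots,e'_m)=\int_{[0,1]^2}p_1^{s}(1-p_1)^{n-s}\,p_2^{s'}(1-p_2)^{m-s'}\,\mu(dp_1,dp_2).$$
If, for the outcome of interest, the $n+m$ outcomes were almost fully exchangeable (so the men/women difference is judged practically irrelevant), the prior μ would be concentrated near the diagonal of $[0,1]^2$. De Finetti suggested implementing this by considering priors of the form
$$Z^{-1}e^{-A(p_1-p_2)^2}\,dp_1\,dp_2$$
for $A$ large.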
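To see what such a prior looks like, one can sample from it. The sketch below uses a plain random-walk Metropolis chain on the unit square; this sampler, its step size, starting point and names are illustrative assumptions and not the method of [3]. As $A$ grows, the draws hug the diagonal $p_1=p_2$.

```python
import numpy as np


def sample_definetti_prior(A, n_steps, step=0.1, seed=0):
    """Random-walk Metropolis for the density proportional to
    exp(-A (p1 - p2)^2) on [0, 1]^2 (illustrative sampler)."""
    rng = np.random.default_rng(seed)

    def log_target(q):
        return -A * (q[0] - q[1]) ** 2

    x = np.array([0.5, 0.5])
    out = np.empty((n_steps, 2))
    for t in range(n_steps):
        prop = x + step * rng.standard_normal(2)
        # Outside the square the density is 0, so such proposals are rejected.
        inside = np.all((prop >= 0.0) & (prop <= 1.0))
        if inside and np.log(rng.random()) < log_target(prop) - log_target(x):
            x = prop
        out[t] = x
    return out


for A in (1.0, 1000.0):
    draws = sample_definetti_prior(A, 20000)
    print(A, np.abs(draws[:, 0] - draws[:, 1]).mean())
```

Under the exact prior the mean of $|p_1-p_2|$ is roughly 0.3 for $A=1$ and roughly 0.02 for $A=1000$; the printed values are Monte Carlo estimates, so they only approximate these.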

In joint work with Sergio Bacallado and Susan Holmes [3], multivariate versions of such priors are developed. These are required to concentrate near sub-manifolds of cubes or products of simplices; think about ‘approximate no three way interaction’. We used the tools of algebraic statistics to suggest appropriate many variable polynomials which vanish on the submanifold of interest. Many ad hoc choices were involved. Sampling from such priors or posteriors is a fresh research area. See [2,14,15].

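Doubly intractable priors. Consider an exponential family as in Section 3:
$$p_\theta(x)=Z(\theta)^{-1}e^{\theta\cdot T(x)}.$$
Here $\mathcal X$ is a finite set, $T:\mathcal X\to\mathbb N^d$ and $\theta\in\mathbb R^d$. In many real examples, the normalizing constant $Z(\theta)$ will be unknown and unknowable. For a Bayesian treatment, let $\pi(d\theta)$ be a prior distribution on $\mathbb R^d$, for example, the conjugate prior.

If $X_1,\dots,X_n$ is an i.i.d. sample from $p_\theta$, then $T=T(X_1)+\dots+T(X_n)$ is a sufficient statistic and the posterior has the form
$$Z^{-1}\,Z(\theta)^{-n}\,e^{\theta\cdot T}\,\pi(d\theta),$$
with $Z$ another normalizing constant. The problem is that $Z(\theta)^{-n}$ depends on $\theta$ and is unknown!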

The exchange algorithm and many variants offer a useful solution. See [16,17].

In practical implementations, there is an intermediary step requiring a sample from the law of $T=T(X_1)+\dots+T(X_n)$ when the $X_a$ are i.i.d. from $p_\theta$, that is, from the measure induced by $T$ under $p_\theta$. This is a discrete sampling task and Markov basis techniques have proved useful. See [16].
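The exchange algorithm of [16,17] can be sketched in a few lines for a toy version of the family above. Assumptions, all for illustration only: $\mathcal X=\{0,1,2,3\}$, $T(x)=x$ (so $d=1$), a $N(0,4)$ prior on $\theta$, and a Gaussian random-walk proposal $\theta'$. The auxiliary step draws the sufficient statistic $T'$ of $n$ fresh observations from $p_{\theta'}$; because this $\mathcal X$ is tiny it is done exactly (computing $Z(\theta')$ only to simulate), whereas in realistic problems this is the discrete sampling task described above. The acceptance ratio $\frac{\pi(\theta')}{\pi(\theta)}\exp\{(\theta'-\theta)(T-T')\}$ never involves $Z$, because $Z(\theta)^n$ and $Z(\theta')^n$ cancel.

```python
import numpy as np

X = np.arange(4)            # toy sample space {0, 1, 2, 3}
T_of_x = X.astype(float)    # toy statistic T(x) = x


def sample_T(theta, n, rng):
    """Sufficient statistic of n i.i.d. draws from p_theta (exact, toy case).
    Z(theta) is used only to simulate; the sampler below never evaluates it."""
    w = np.exp(theta * T_of_x)
    counts = rng.multinomial(n, w / w.sum())
    return counts @ T_of_x


def log_prior(theta, prior_var=4.0):
    return -theta ** 2 / (2 * prior_var)


def exchange_sampler(T_obs, n, n_iter, step=0.1, seed=0):
    rng = np.random.default_rng(seed)
    theta, draws = 0.0, np.empty(n_iter)
    for it in range(n_iter):
        prop = theta + step * rng.standard_normal()   # symmetric proposal
        T_aux = sample_T(prop, n, rng)                # auxiliary data from p_prop
        # Z(theta)^n and Z(prop)^n cancel in the exchange acceptance ratio.
        log_a = log_prior(prop) - log_prior(theta) + (prop - theta) * (T_obs - T_aux)
        if np.log(rng.random()) < log_a:
            theta = prop
        draws[it] = theta
    return draws


rng = np.random.default_rng(42)
n = 200
T_obs = sample_T(0.5, n, rng)          # simulated data with theta = 0.5
draws = exchange_sampler(T_obs, n, 6000)[1000:]
print(draws.mean())
```

Because this $\mathcal X$ is finite, the exact posterior can also be computed on a grid for comparison; the two posterior means should agree to Monte Carlo accuracy.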

A philosophical comment. The task undertaken above, finding believable Bayesian interpretations for widely used log linear models, goes somewhat against the grain of standard statistical practice. I do not think anyone takes a reasonably complex, high dimensional hierarchical model seriously. They are mostly used as a part of exploratory data analysis; this is not to deny their usefulness. Making any sense of a high dimensional dataset is a difficult task. Practitioners search through huge collections of models in an automated way. Usually, any reflection suggests the underlying data is nothing like a sample from a well specified population. Nonetheless, models are compared using product likelihood criteria. It is a far, far cry from being based on anyone's reasoned opinion.

I have written elsewhere about finding Bayesian justification for important statistical tasks such as graphical methods or exploratory data analysis [18]. These seem like tasks similar to ’how do you form a prior’, and different from the focus of even the most liberal Bayesian thinking.

The sufficiency approach. There is a different approach to extending de Finetti's theorem. This uses ‘sufficiency’. Consider exchangeable $X_1,X_2,\dots$ taking values in $\mathcal X$. For each $n$, suppose $T_n:\mathcal X^n\to\mathcal Y_n$ is a function. The $T_n$ have to fit together according to simple rules satisfied in all of the examples above. Call $\{X_i\}$ partially exchangeable with respect to $\{T_n\}$ if $P(X_1=x_1,\dots,X_n=x_n\mid T_n=t)$ is uniform. Then, Diaconis and Freedman [19] show that a version of de Finetti's theorem holds. The law of $\{X_i\}$ is a mixture of i.i.d. laws indexed by extremal laws. In dozens of examples, these extremal laws can be identified with standard exponential families. This last step remains to be carried out in the generality of Section 3 above. What is required is a version of the Koopman–Pitman–Darmois theorem for discrete random variables. This is developed in [19] in special cases. Passing to interpretation, this version of partial exchangeability has the following form:
$$P(X_1=x_1,\dots,X_n=x_n)=P(X_1=y_1,\dots,X_n=y_n)\quad\text{whenever } T_n(x_1,\dots,x_n)=T_n(y_1,\dots,y_n).$$

This is neat mathematics (and allows a very general theoretical development). However, it does not seem as easy to think about in natural examples. Exchangeability via symmetry is much easier. The development above is a half-way house between symmetry and sufficiency. A close relative of the sufficiency approach is the topic of ‘extremal models’ as developed by Martin-Löf and Lauritzen. See [20] and its references. Moreover, Refs. [21,22] are recent extensions aimed at contingency tables.

Classical Bayesian contingency table analysis. There is a healthy development of parametric analysis for the examples of Section 5. This is based on natural conjugate priors. It includes nice theory and R packages to actually carry out calculations in real problems. Four papers that I like are [23,24,25,26]. The many wonderful contributions by I.J. Good are still very much worth consulting. See [27] for a survey.

Section 5 provides ‘observable characterizations’ of the models. The problem of providing ‘observable characterizations’ of the associated conjugate priors (along the lines of [28]) remains open.