Abstract

Many analog neural network approaches for sparse recovery are based on using the $\ell_1$-norm as a surrogate of the $\ell_0$-norm. This paper proposes an analog neural network model, namely the Lagrange programming neural network with $\ell_p$ objective and quadratic constraint (LPNN-LPQC), with an $\ell_0$-norm sparsity measurement for solving the constrained basis pursuit denoise (CBPDN) problem. As the $\ell_0$-norm is non-differentiable, we first use a differentiable $\ell_0$-norm-like function to approximate the $\ell_0$-norm. However, this $\ell_0$-norm-like function does not have an explicit expression and, thus, we use the locally competitive algorithm (LCA) concept to handle the nonexistence of the explicit expression. With the LCA approach, the dynamics are defined by the internal state vector. In the proposed model, the thresholding elements are not conventional analog elements in analog optimization, so this paper also proposes a circuit realization for them. On the theoretical side, we prove that the equilibrium points of our proposed method satisfy the Karush-Kuhn-Tucker (KKT) conditions of the approximated CBPDN problem, and that these equilibrium points are asymptotically stable. We perform a large-scale simulation on various algorithms and analog models. Simulation results show that the proposed algorithm is better than or comparable to several state-of-the-art numerical algorithms, and that it is better than state-of-the-art analog neural models.

MSC:

94A12; 68T01; 68T07

1. Introduction

1.1. Background

The last few decades have seen increasingly rapid advances in analog neural networks for solving optimization problems. In an analog neural network, the state transitions of the neurons are governed by differential equations. After the dynamics of the network converge to an equilibrium point, the solution of the problem is obtained from the state of the neurons. As shown in many pioneering works [1,2,3,4,5,6], this approach is very attractive when real-time solutions are required.

Research on the analog neural network approach dates back to the 1980s [2]. One of the earliest analog networks is the Hopfield model [2]. Early applications of the Hopfield model include analog-to-digital conversion and the traveling salesman problem. Later, many analog models [3,4,5,6,7] for various optimization problems were proposed. Over the last decade, many new applications of analog neural models have been investigated, including image processing, sparse approximation [5,8], mobile target localization [9,10], and feature selection [6]. Recently, several analog techniques [5,8,11,12,13] were designed for solving sparse recovery problems.

In sparse recovery [8,14,15,16,17,18], the aim is to recover an unknown sparse vector from an observation vector. Many real-life signals have sparse internal representations [19]. For example, audio signals are approximately sparse in the time-frequency domain [20]. Sparse recovery techniques can be used in many signal processing applications. For instance, we can use sparse recovery techniques for image restoration [18,21,22]. Additionally, they can be used to remove the stripes in hyperspectral images [23]. In the inverse synthetic aperture radar (ISAR) application [24,25], a high-quality image of an object can be obtained from the Fourier transformed signal based on sparse recovery techniques. Another application of sparse recovery is to process electrocardiogram (ECG) signals for the classification of various heart diseases [26].

One sparse recovery problem is the following $\ell_0$-norm optimization problem:

where is the measurement matrix. When there is measurement noise in the observation vector, the problem becomes the constrained basis pursuit denoise (CBPDN) problem:

where is the standard deviation of the observation noise. Since the $\ell_0$-norm is difficult to handle, we usually use the $\ell_1$-norm to replace the $\ell_0$-norm. The problems, stated in (1) and (2), become

respectively. In the last two decades, many $\ell_1$-norm-based numerical algorithms were proposed, such as BPDN-interior [27] and Homotopy [28]. In addition, elegant implementation packages [29,30] are available, such as SPGL1 [30].

Although the aforementioned $\ell_1$-norm relaxation approaches were well studied, they have some drawbacks. For instance, in the BPDN-interior algorithm, the solution vector contains many small non-zero elements [31]. As mentioned in [32,33], the $\ell_p$-norm ($0 < p < 1$) is a better choice for replacing the $\ell_0$-norm. However, the $\ell_p$-norm is a non-convex function, which introduces complex behaviours in the problem-solving process. Therefore, we can use some approximation functions to replace the $\ell_0$-norm term, such as the minimax concave penalty (MCP) function [34,35]. In addition, there are other methods which directly handle the $\ell_0$-norm. They are the normalized iterative hard threshold (NIHT) method [36], approximate message passing (AMP) [37], $\ell_0$-norm zero attraction projection ($\ell_0$-ZAP) [38], $\ell_0$-norm alternating direction method of multipliers ($\ell_0$-ADMM) [39,40], and expectation-conditional maximization either (ECME) [41]. All the techniques mentioned in this paragraph are digital numerical algorithms.

1.2. Motivation

Apart from using numerical methods, we can consider using the analog neural approach for solving sparse recovery problems [5,8,11,12,13,21]. However, the analog models in [5,8,11,12,13,21] were developed based on $\ell_1$-norm relaxation techniques. Since the $\ell_1$-norm is only a surrogate function of the $\ell_0$-norm, directly using the $\ell_0$-norm or an $\ell_0$-like norm usually leads to better performance. There is indeed an $\ell_0$-norm-based analog model, namely the locally competitive algorithm (LCA) [31]. However, it was designed for unconstrained sparse recovery problems only.

As directly working with such sparsity measures usually results in better performance, it is interesting to develop analog models of this kind for sparse recovery problems with constraints. Another shortcoming of existing $\ell_1$-norm relaxation neural models [8,11,12,13] is that the corresponding circuit realizations, especially the circuits for the projection and thresholding operations, were not discussed.

1.3. Contribution and Organization

This paper focuses on using the analog technique to solve the CBPDN problem with the $\ell_0$-norm objective, stated in (2). Strictly speaking, the $\ell_0$-norm is not a norm and is not differentiable. These properties create difficulties for constructing the analog model for the CBPDN problem.

The paper proposes an $\ell_0$-norm-like function for representing the sparsity measurement. The proposed $\ell_0$-norm-like function is differentiable. We then apply the Lagrange programming neural network (LPNN) framework [8,42] for solving the CBPDN problem. The proposed $\ell_0$-norm-like function does not have a simple expression, but its derivative does. Hence, we borrow the internal state concept from the LCA [31] to construct an LPNN model for the CBPDN problem. We call our model “LPNN with $\ell_p$ objective and quadratic constraint (LPNN-LPQC)”.

In developing an analog neural model, one of the difficulties is to analyze the behaviour of the network dynamics, especially for a non-convex objective function without an explicit expression. The paper discusses the stability of the proposed LPNN-LPQC model. We theoretically prove that the equilibrium points of our proposed LPNN-LPQC satisfy the Karush-Kuhn-Tucker (KKT) conditions of the approximated CBPDN problem. We use the term “approximated” because we have applied an approximation for the $\ell_0$-norm. In addition, we prove that the equilibrium points of our proposed method are asymptotically stable.

Unlike some existing analog neural results, which do not discuss the circuit realization [8,11,12,13], this paper also discusses the circuit realization of the proposed model. In particular, the detailed circuit design for the thresholding element is given. We then use MATLAB Simulink to verify our design. In the verification, we find that the MATLAB Simulink results are nearly the same as the corresponding results obtained from the discretized dynamic equations.

This paper also presents a large-scale simulation. The simulation results show that the proposed LPNN-LPQC is better than or comparable to several state-of-the-art digital numerical algorithms, and that it is better than state-of-the-art analog neural networks.

The rest of this paper is organized as follows. Background on the LPNN and LCA models is given in Section 2. In Section 3, the proposed LPNN-LPQC is developed. Section 4 discusses the circuit realization of the thresholding element. Section 5 discusses the stability of the LPNN-LPQC. Simulation results and comparisons with state-of-the-art numerical algorithms are provided in Section 6. Finally, conclusions are drawn in Section 7.

2. LPNN Framework and LCA

2.1. LPNN

The LPNN technique [42] can be used in many applications, such as locating a target in a radar system [9], $\ell_1$-norm-based sparse recovery [8], and ellipse fitting [43]. It was developed for solving a general non-linear constrained optimization problem:

where is the objective, and () represents m equality constraints. In the LPNN approach, we first set up a Lagrangian function, given by

where is the Lagrange multiplier vector. An LPNN has two classes of neurons: variable neurons for holding the decision variables and Lagrange neurons for holding the Lagrange multipliers. Its neural dynamics are

The dynamics involve a characteristic time constant which, without loss of generality, we set to 1. With (6), when some mild conditions [42] hold, the network settles down at a stable state. The main restriction of using the LPNN framework is that the objective function and the constraint functions should be differentiable.
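As an illustration of the LPNN idea, the following is a minimal sketch, assuming the standard LPNN form in which the variable neurons follow the negative gradient of the Lagrangian with respect to the decision variables and the Lagrange neurons follow the positive gradient with respect to the multipliers, with a unit time constant. The toy problem, the function names, and the forward-Euler step size are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def lpnn_solve(grad_f, h, jac_h, x0, lam0, dt=0.01, steps=5000):
    """Forward-Euler integration of the (assumed) LPNN dynamics
        dx/dt   = -dL/dx   = -(grad f(x) + J_h(x)^T lam)
        dlam/dt = +dL/dlam =  h(x)
    for the Lagrangian L(x, lam) = f(x) + lam^T h(x)."""
    x = np.asarray(x0, dtype=float).copy()
    lam = np.asarray(lam0, dtype=float).copy()
    for _ in range(steps):
        dx = -(grad_f(x) + jac_h(x).T @ lam)  # variable neurons
        dlam = h(x)                            # Lagrange neurons
        x += dt * dx
        lam += dt * dlam
    return x, lam

# Hypothetical toy problem: minimise x1^2 + x2^2 subject to x1 + x2 = 1.
grad_f = lambda x: 2.0 * x
h = lambda x: np.array([x[0] + x[1] - 1.0])
jac_h = lambda x: np.array([[1.0, 1.0]])

x_eq, lam_eq = lpnn_solve(grad_f, h, jac_h, x0=[0.0, 0.0], lam0=[0.0])
print(x_eq, lam_eq)
```

For this toy problem the equilibrium is the constrained minimiser (0.5, 0.5) with multiplier −1, which can be checked by hand from the stationarity and feasibility conditions.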

2.2. LCA

The LCA [31,44] aims at solving the following unconstrained optimization problem:

where the penalty term promotes the sparsity of the resultant solution. The penalty function does not have an exact expression. Instead, it is defined by introducing an internal state vector. To define it, a thresholding function on the internal state is first introduced:

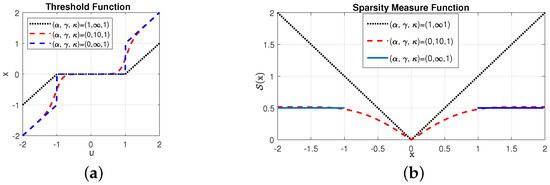

where $\lambda$ is the threshold, which is related to the magnitude of the non-zero elements, $\gamma$ controls the threshold transition rate (the slope around the threshold), and $\alpha$ indicates the adjustment fraction applied after the internal neuron crosses the threshold. Figure 1a illustrates the shape of the thresholding function under various settings. With $\alpha = 1$ and $\gamma \to \infty$, the threshold function is the well-known soft threshold function. Given a threshold function, the penalty function is then defined by

where is the gradient or sub-gradient of .
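The thresholding function can be sketched numerically as follows. This sketch assumes the sigmoidal form commonly used in the LCA literature, $T(u) = (u - \alpha\lambda\,\mathrm{sign}(u)) / (1 + e^{-\gamma(|u| - \lambda)})$; the exact expression used in this paper may differ, and the names `lam`, `alpha`, and `gamma` are chosen for the example.

```python
import numpy as np

def threshold(u, lam=1.0, alpha=0.0, gamma=100.0):
    """Assumed LCA-style smooth thresholding function.
    lam:   threshold level
    alpha: fraction of the additive adjustment above threshold
           (0 gives a hard-threshold shape, 1 a soft-threshold shape)
    gamma: transition rate around the threshold
    """
    u = np.asarray(u, dtype=float)
    z = gamma * (np.abs(u) - lam)
    # Logistic sigmoid written with tanh to avoid overflow for large |z|.
    gate = 0.5 * (1.0 + np.tanh(0.5 * z))
    return (u - alpha * lam * np.sign(u)) * gate

print(threshold([0.5, 2.0, -2.0], lam=1.0, alpha=0.0, gamma=1000.0))
print(threshold([0.5, 2.0, -2.0], lam=1.0, alpha=1.0, gamma=1000.0))
```

With a large `gamma`, inputs well below the threshold map to nearly zero, while inputs well above it are passed through unchanged (`alpha=0`) or shrunk by `lam` (`alpha=1`).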

Figure 1.

Threshold function and sparsity measure function. (a) The shape of under various settings. (b) Sparsity measure function. For , and , the value of x cannot be in the range of based on the property of the ideal thresholding function .

In the vector form, (9) is written as

where .

From (9), the sparsity measure function is defined through its derivative. Hence, in general, it has no explicit expression. To visualize it, we use numerical integration. Figure 1b shows the sparsity measure function under various parameter settings. As shown in the figure, in some cases, such as for a large value of $\gamma$, the sparsity measure function is close to the $\ell_p$-norm ($0 \le p \le 1$). Hence, it is called the $\ell_p$-norm-like sparsity function.
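The numerical integration mentioned above can be sketched as follows. The sketch assumes the LCA-style relation $\lambda\,\partial S/\partial x = u - x$ with $x = T(u)$ and the sigmoidal thresholding function from the LCA literature; both are assumptions for illustration, as are the grid size and parameter values.

```python
import numpy as np

def threshold(u, lam, alpha, gamma):
    """Assumed LCA-style smooth thresholding function."""
    u = np.asarray(u, dtype=float)
    gate = 0.5 * (1.0 + np.tanh(0.5 * gamma * (np.abs(u) - lam)))
    return (u - alpha * lam * np.sign(u)) * gate

def penalty_curve(lam=1.0, alpha=0.0, gamma=200.0, u_max=3.0, num=300001):
    """Integrate dS/dx = (u - x) / lam along x = T(u) for u >= 0."""
    u = np.linspace(0.0, u_max, num)
    x = threshold(u, lam, alpha, gamma)
    integrand = (u - x) / lam
    # Trapezoidal rule with respect to x (x is increasing in u for alpha = 0).
    increments = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(x)
    S = np.concatenate(([0.0], np.cumsum(increments)))
    return x, S

x, S = penalty_curve()
print(S[-1])  # plateau value of the penalty for large |x|
```

For `alpha=0` and a large `gamma`, the computed curve is nearly flat once |x| exceeds the threshold, which is the $\ell_0$-like behaviour; under the assumed scaling the plateau is close to `lam / 2`.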

With the internal state concept and (9), LCA defines the dynamics on the internal state vector (rather than on the output vector) as
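A minimal simulation of LCA-type dynamics is sketched below. It assumes the standard form from the LCA literature, $du/dt = -u + x + \Phi^{T}(b - \Phi x)$ with $x = T(u)$, integrated by forward Euler; the symbols `Phi` and `b`, the random test problem, and all parameter values are assumptions for this example rather than the paper's settings.

```python
import numpy as np

def threshold(u, lam, alpha, gamma):
    """Assumed LCA-style smooth thresholding function."""
    gate = 0.5 * (1.0 + np.tanh(0.5 * gamma * (np.abs(u) - lam)))
    return (u - alpha * lam * np.sign(u)) * gate

def lca(Phi, b, lam=0.1, alpha=0.0, gamma=200.0, dt=0.05, steps=4000):
    """Forward-Euler simulation of du/dt = -u + x + Phi^T (b - Phi x)."""
    u = np.zeros(Phi.shape[1])          # internal state vector
    for _ in range(steps):
        x = threshold(u, lam, alpha, gamma)
        u += dt * (-u + x + Phi.T @ (b - Phi @ x))
    return threshold(u, lam, alpha, gamma), u

# Hypothetical small test problem.
rng = np.random.default_rng(0)
m, n, k = 30, 50, 3
Phi = rng.standard_normal((m, n))
Phi /= np.linalg.norm(Phi, axis=0)      # unit-norm columns
x_true = np.zeros(n)
x_true[rng.choice(n, size=k, replace=False)] = 1.0
b = Phi @ x_true

x_hat, u_hat = lca(Phi, b)
print(np.linalg.norm(b - Phi @ x_hat), np.count_nonzero(np.abs(x_hat) > 1e-3))
```

The state that evolves is `u`; the sparse output `x` is read out through the thresholding function, which is the feature the LPNN-LPQC model borrows from the LCA.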

3. LPNN-LPQC Model

For the proposed model, , , and is a large positive number. The meaning of is that the magnitude of the non-zero elements in the resultant should be greater than .

For simplicity, we use notation to replace . We consider the following LPQC problem:

We first discuss some properties of . Afterwards, we derive the LPNN-LPQC model and perform the stability analysis on the proposed model.

3.1. $\ell_0$-Norm-like Sparsity Measure Function

Recall that from Figure 1b, for large , is similar to the -norm. When and , the threshold function becomes

Sparsity measurement function is defined by its derivative:

Before discussing the properties of and , we would like to make some remarks. First, the explicit expression of and are not available, but the value of is easily obtained from u based on (14). Second, as shown in the rest of this paper, in the implementation of the proposed model, we need to implement rather than .

We first list a number of properties of and . From (14) and basic mathematics, we have Property 1.

Property 1.

- P1.a: is a continuous odd function.

- P1.b: is strictly monotonically increasing.

- P1.c: Inverse of exists.

- P1.d: is differentiable everywhere, i.e., for all real u.

Since is an odd function and monotonically increasing, from basic mathematics, has the following properties.

Property 2.

- P2.a: is an even function.

- P2.b: is monotonically increasing with respect to .

Additionally, has the following properties.

Property 3.

Define . Thus, we have . For small positive ϵ, we have the following properties:

- P3.a: If , then .

- P3.b: If , then .

- P3.c: If , then .

- P3.d: If , then .

Proof.

The proof is exhibited in Appendix A. □

Remark 1.

P3.a and P3.b mean that in some regions of u, is approximately equal to either u or 0. In contrast, the regions of are uncertain regions. However, the uncertain regions can be made arbitrarily small by choosing a sufficiently large γ.

With Properties 1–3, we can prove that is differentiable everywhere.

Property 4.

is a continuously differentiable function.

Proof.

The proof is exhibited in Appendix B. □

Since is differentiable, in the rest of this paper, we replace “∂” with “∇” to indicate the partial derivative of , i.e., . In addition, we discuss two features of , which make it a good sparsity measure function.

Property 5.

For a given ϵ and sufficiently large γ,

- Boundedness: for , .

- Sparsity: for , .

Proof.

The proof is exhibited in Appendix C. □

Note that when $\gamma$ tends to $\infty$, the sparsity measure function becomes the $\ell_0$-norm and the thresholding function becomes the hard threshold function.

3.2. Properties of LPQC Problem

In order to analyze the properties of the LPQC problem, stated in (13), we review the KKT necessary conditions for general constrained optimization problems.

Lemma 1.

Consider the following non-linear optimization problem:

where is the objective, defines the inequality constraint, ϕ and h are continuously differentiable. If is a local optimum, then there exists a constant , called Lagrange multiplier, such that

- Stationarity:,

- Primal feasibility:,

- Dual feasibility:,

- Complementary slackness:.
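The four conditions of Lemma 1 can be checked numerically for a candidate point. The sketch below assumes the standard KKT form for a single inequality constraint $h(x) \le 0$ with multiplier $\mu \ge 0$, i.e., stationarity $\nabla\phi(x) + \mu\nabla h(x) = 0$ and complementary slackness $\mu h(x) = 0$; the function name `kkt_residuals` and the toy problem are hypothetical.

```python
import numpy as np

def kkt_residuals(grad_phi, h, grad_h, x, mu):
    """Return the violation of each KKT condition (0 means satisfied)."""
    x = np.asarray(x, dtype=float)
    return {
        "stationarity": float(np.linalg.norm(grad_phi(x) + mu * grad_h(x))),
        "primal_feasibility": float(max(h(x), 0.0)),
        "dual_feasibility": float(max(-mu, 0.0)),
        "complementary_slackness": float(abs(mu * h(x))),
    }

# Hypothetical toy problem:
#   minimise (x1 - 2)^2 + (x2 - 2)^2  subject to  x1^2 + x2^2 - 2 <= 0.
grad_phi = lambda x: 2.0 * (x - 2.0)
h = lambda x: float(x @ x - 2.0)
grad_h = lambda x: 2.0 * x

res = kkt_residuals(grad_phi, h, grad_h, x=[1.0, 1.0], mu=1.0)
bad = kkt_residuals(grad_phi, h, grad_h, x=[0.0, 0.0], mu=0.0)
print(res)
print(bad)
```

In this toy problem the constraint is active at the optimum (1, 1) with a strictly positive multiplier, which mirrors the situation established for the LPQC problem in Theorem 1.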

Armed with Lemma 1 and some reasonable assumptions, we can simplify the KKT conditions of the LPQC problem, stated in (13). The result is summarized in Theorem 1.

Theorem 1.

Given that , and that is a local optimum of the optimization problem (13), the KKT conditions become

Note that .

Proof.

According to (19c), is either greater than or equal to 0. The proof of is by contradiction. Assume that . From (19a), we have . Furthermore, from and (14), it is easy to prove that , and that . On the other hand, from (19b), when , we have , which contradicts our earlier assumption . Hence, must be greater than 0. In other words, the KKT conditions of (13) become (18). The proof is completed. □

3.3. Dynamics of LPNN-LPQC

In the analog neural approach, we need to design the neural dynamics such that the equilibrium points of the dynamics fulfill the KKT conditions of the problem.

From the LPNN framework, we set up a Lagrangian function:

where , which is used to simplify the proof of the Lagrange multiplier being greater than zero. Based on the concepts of LPNN and LCA, we propose the dynamics of the LPNN-LPQC as

4. Circuit Realization

For analog neural models used in optimization, one important issue is whether the operations in the dynamic equations can be implemented with analog circuits. This section addresses this issue.

4.1. Thresholding Element

The dynamic equations, stated in (21), involve many conventional analog operations, such as adders [45], integrators [45], multipliers [46,47], and square circuits [48,49]. The circuit realizations of those common operations are well discussed in [45,46,47,48,49].

There is an unconventional element in the dynamic equations. It is the thresholding function:

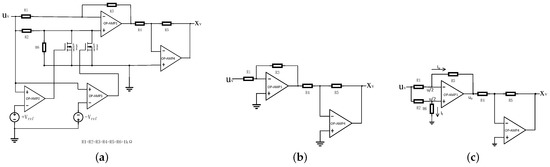

Figure 2a shows a generalized realization of the thresholding element for large . In this generalized realization, the thresholding level is , where is a positive number. The detailed function is given by

Figure 2.

(a) Circuit for the thresholding function . (b) Equivalent circuit of the thresholding function when the magnitude of input is greater than . (c) Equivalent circuit of the thresholding function when the magnitude of input is less than or equal to .

The two MOSFETs in Figure 2a control the thresholding mode.

If the magnitude of the input is greater than , then one of the two MOSFETs is on and the circuit in Figure 2a becomes an equivalent one shown in Figure 2b. Clearly, for this equivalent circuit, the output is equal to input.

On the other hand, in Figure 2a, if the magnitude of the input is less than or equal to , then the two MOSFETs are off and we obtain another equivalent circuit shown in Figure 2c. In this case, the inputs of the OP-AMP1 are clamped at . Since the two current values, and , of the upper and lower paths are equal, the output of the OP-AMP1 is zero.
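The two operating modes described above correspond to an ideal hard-threshold transfer characteristic: the output equals the input when the input magnitude exceeds the threshold level, and is zero otherwise. The sketch below compares this ideal characteristic with a smooth sigmoidal thresholding function for a large slope parameter. The smooth form, the symbol `v_t` for the threshold level, and the numerical values are assumptions for illustration and do not model the actual circuit.

```python
import numpy as np

def ideal_threshold(v_in, v_t):
    """Ideal behaviour: pass the input if |v_in| > v_t, else output zero."""
    v_in = np.asarray(v_in, dtype=float)
    return np.where(np.abs(v_in) > v_t, v_in, 0.0)

def smooth_threshold(u, lam, gamma):
    """Assumed smooth (sigmoidal) thresholding with no additive adjustment."""
    u = np.asarray(u, dtype=float)
    gate = 0.5 * (1.0 + np.tanh(0.5 * gamma * (np.abs(u) - lam)))
    return u * gate

v = np.linspace(-2.0, 2.0, 401)
v_t = 0.5
# Compare away from the narrow transition band around +/- v_t.
away = np.abs(np.abs(v) - v_t) > 0.05
diff = np.abs(ideal_threshold(v, v_t) - smooth_threshold(v, v_t, 1000.0))
gap = float(np.max(diff[away]))
print(gap)
```

Outside a narrow band around the threshold, the two characteristics are numerically indistinguishable, which is why a hard-threshold circuit is a reasonable realization of the smooth model when the slope parameter is large.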

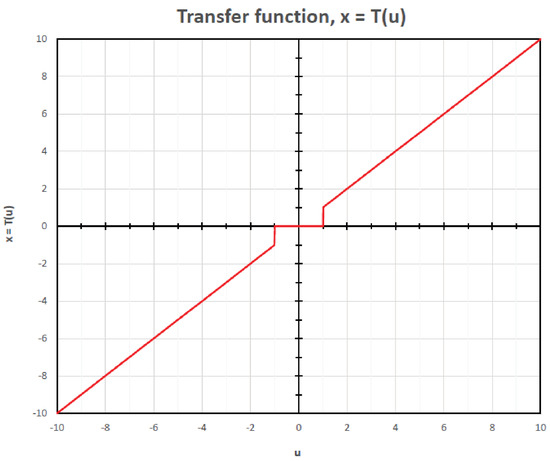

In Figure 3, we show the transfer function of our threshold function for . The transfer function is obtained from a circuit simulator. In the simulator, the open loop gain of OP-AMP1 is set to and the open loop gain of OP-AMP2 to OP-AMP4 is set to . The two MOSFETs are n-type with the following parameters: channel width = 100 μm, channel length = 200 nm, transconductance = 118 μA/V², zero-bias threshold voltage = 430 mV and channel-length modulation = 60 mV. From the figure, the transfer function closely matches our theoretical model with a large value of $\gamma$.

Figure 3.

Thresholding function obtained from the circuit simulation of Figure 2 with .

4.2. Circuit Structure

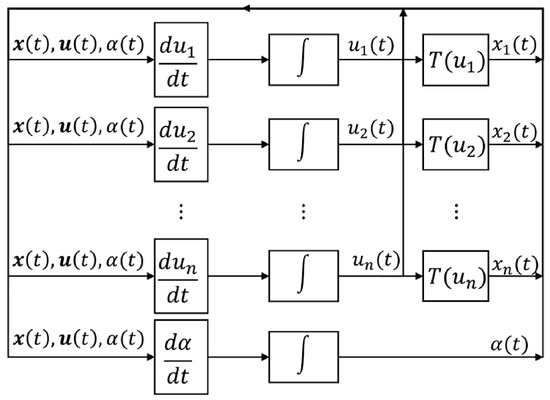

Figure 4 shows the analog realization of (21) in the block diagram level. In the realization, there are n blocks to compute ’s. Inside each block, there are adders, multipliers, and square circuits. Additionally, there is a block to compute . The time derivatives ’s and are then fed to the integrators to obtain internal variables and . In order to obtain the decision variables ’s, the internal variables are fed to n thresholding elements (Figure 2), where .

Figure 4.

Analog realization of (21) in the block diagram level.

4.3. Circuit Simulation

In this subsection, we use a small-scale problem to verify our approach on the MATLAB Simulink platform. The problem details are:

- and .

- Measurement matrix:

- Real sparse vector and noisy observation vector.

- Noise tolerance parameter .

We build the Simulink file for our model, shown in Figure 4. We implement the thresholding function based on Figure 2. Other blocks are based on Simulink’s functional blocks. To verify our Simulink result, we also consider the discrete time simulation on (21). For digitization of (21), the discrete time simulation equations are

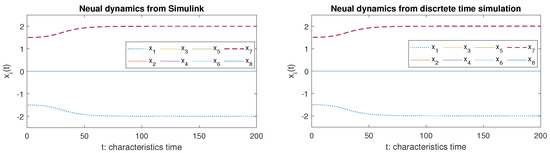

where . Figure 5 shows the dynamics from the Simulink results and discrete time simulation. From the figure, the dynamics from the two approaches are nearly the same. The final outputs are

Figure 5.

Dynamics obtained from Simulink and discrete time simulation.

Clearly, from Figure 5 and (23), both approaches produce similar results.

5. Properties of the Dynamics

The first issue that we need to address is that the equilibrium points of the LPNN-LPQC model should fulfill the KKT conditions of the LPQC problem, stated in (13). Otherwise, the LPNN-LPQC model cannot find local or global minima of the LPQC problem. Note that since the LPQC problem and the original $\ell_0$-norm CBPDN problem, stated in (2), are non-convex, few algorithms can ensure that their solutions are the global minimum.

The relationship between the equilibrium points of the LPNN-LPQC model and the KKT conditions is summarized in the following theorem.

Theorem 2.

Given that with is an equilibrium point of the LPNN-LPQC model, this point corresponds to the KKT conditions of the LPQC problem. Note that .

Proof.

From (21), when is an equilibrium point, we have

Clearly, (24a) is the same as (19a) with . Additionally, when , (24b) becomes , which is the same as (19b). The proof is completed. □

Theorem 2 tells us that the equilibrium points of the LPNN-LPQC model correspond to the KKT conditions of the LPQC problem. Another concern is the stability of the equilibrium points of the LPNN-LPQC model, which is addressed in Theorem 3.

Theorem 3.

Given a positive ϵ and a sufficiently large positive γ, if with is an equilibrium point of the LPNN-LPQC model and for all i either or , then the equilibrium point is an asymptotically stable point.

Proof.

The condition of either or is equivalent to for all i, , where . With the condition, we define two index sets, and , given by

- active set: If , then .

- inactive set: If , then .

Obviously, for a given and a sufficiently large , tends to zero and the value of can be arbitrarily small. Thus, the inactive neurons have nearly no effect on the dynamics, i.e., tends to 0 for sufficiently large (see Property 3).

For a given , we define as the vector composed of the elements of indexed by and as the vector composed of the elements of indexed by . Similarly, given a matrix , we define as the matrix composed of the columns of indexed by and as the matrix composed of the columns of indexed by .

Now, we consider the dynamics near and . We have two index sets and . As mentioned above, for inactive states for sufficiently large . The dynamics given in (21) can be rewritten as:

Furthermore, the linearization of (25) around the equilibrium point is

where “” is the Jacobian matrix at and it is given by

From the given condition, for active nodes, we have , and for inactive nodes . After deriving the sub-matrices in (27), we obtain

where ∅ denotes a zero matrix of appropriate size. Following the proof logic of Theorem 5 in [8], one can find that all eigenvalues of have positive real parts. Therefore, according to classical control theory, the corresponding equilibrium point is asymptotically stable. The proof is completed. □
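The eigenvalue argument used in the proof can be illustrated numerically. The sketch below assumes a linearized system of the form $dy/dt = -Hy$, which is asymptotically stable when every eigenvalue of $H$ has a positive real part. The matrix used here is a hypothetical toy example with a similar block structure (it comes from minimising $x_1^2 + x_2^2$ subject to $x_1 + x_2 = 1$ with Lagrange-type dynamics); it is not the matrix in (28).

```python
import numpy as np

def is_asymptotically_stable(H):
    """For dy/dt = -H y: stable iff all eigenvalues of H have Re > 0."""
    return bool(np.all(np.linalg.eigvals(H).real > 0.0))

# Toy block matrix [[2I, a], [-a^T, 0]] with a = (1, 1)^T.
a = np.array([[1.0], [1.0]])
H = np.block([[2.0 * np.eye(2), a],
              [-a.T, np.zeros((1, 1))]])

eigs = np.linalg.eigvals(H)
print(eigs)
print(is_asymptotically_stable(H))
```

For this toy matrix the eigenvalues are 2 and 1 ± i, so all real parts are positive even though the matrix is not symmetric and has a zero diagonal block, which is the typical situation for Lagrange-type dynamics.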

6. Experiment Results

6.1. Comparison Algorithms and Settings

This section compares our proposed LPNN-LPQC model with a number of digital numerical methods and analog neural methods. The numerical methods compared are SPGL1 [30,50], MCP [35], AMP [37], $\ell_0$-ADMM [39,40], $\ell_0$-ZAP [38], NIHT [36], and ECME [41]. The analog methods compared are IPNNSR [11] and PNN-$\ell_1$ [13].

The seven numerical algorithms are described as follows. SPGL1 [30,50] is a standard $\ell_1$-norm approach. MCP [35] is based on an approximation of the $\ell_0$-norm function, and its tuning parameters are selected by cross validation. NIHT [36], ECME [41], and AMP [37] are iterative algorithms, and they handle the $\ell_0$-norm term by the hard thresholding concept. $\ell_0$-ADMM [39,40] uses the frameworks of ADMM and hard thresholding. $\ell_0$-ZAP [38] utilizes the ideas of adaptive filtering and projection. The two analog comparison models, IPNNSR [11] and PNN-$\ell_1$ [13], are developed based on projection concepts.

The setting of our experiment follows that of the experiment in [51]. The measurement matrix is a random matrix. The dimension n of the sparse signal is 4096. A sparse signal contains k non-zero elements whose locations are randomly chosen with uniform distribution. Their corresponding values are random . In our experiment we set .

6.2. Parameter Settings

To conduct the experiment, we need to select some parameters for the proposed method and the comparison methods. In our proposed analog method, there are two tuning parameters, $\gamma$ and $\lambda$. To make the approximated norm close to the $\ell_0$-norm, we should use a large $\gamma$. In our experiment, we set . Parameter $\lambda$ is used to control the minimum value of the magnitudes of the decision variables . In our experiment, we set and .

The SPGL1 package is used to solve

where k is the number of non-zero elements in . In the experiment, we set the maximum number of iterations to 100,000.

The MCP algorithm is used to solve

where is a penalty function to control the sparsity of the solution, and and are parameters in . We set and use a linear search to obtain the best value of . We set the maximum number of iterations to 100,000.

The AMP, NIHT, and ECME algorithms are used to solve

where k is the number of non-zero elements in . We set the maximum number of iterations to 100,000.

The -ADMM algorithm is used to solve

where k is the number of non-zero elements in . In $\ell_0$-ADMM, there is an augmented Lagrangian parameter . It is set to . The maximum number of iterations is set to 100,000.

The -ZAP algorithm is used to solve

where is used to control the sparsity of the solution vector. We use a linear search to obtain the best value of . In addition, there are two parameters and . In the experiment, and . Additionally, the maximum number of iterations is 100,000.

The IPNNSR and PNN- analog models are used to solve

For IPNNSR, we set . For PNN-, we set .

6.3. Convergence

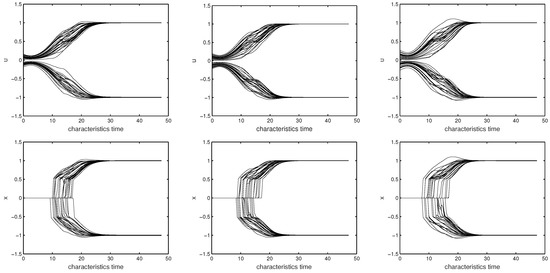

The proposed algorithm is an analog neural network. Hence, one important issue is the time to reach the equilibrium. Here, we conduct an experiment to empirically study the convergence time. Some typical dynamics are given in Figure 6. In the figure, the first row is the case of and , the second row is the case of and , and the third row is the case of and . Since there are 4096 elements in the decision variable vector , the figure would be illegible if we plotted all ’s and ’s. Therefore, we only plot the dynamics of the ’s and ’s whose original values are non-zero. From the figure, it can be seen that the dynamics of our proposed analog neural network settle down within 20–40 characteristic time units.

Figure 6.

Typical dynamics of and for the LPNN-LPQC model, where . The first column: , . The second column: , . The third column, , . In all sub-figures, we only show the dynamics of the ’s and ’s whose original values are non-zero, because there are 4096 curves in each sub-figure when we show all the dynamics for ’s and ’s.

6.4. Comparison with Other Algorithms

To further analyze the performance of our proposed method, we compare it with seven numerical algorithms and two analog models.

The observation vectors are generated by the following noisy model:

where is a zero-mean Gaussian noise with standard deviation . The experiments are repeated 100 times with different measurement matrices, initial states, and sparse signals. For each instance, we declare that it is successful if

where denotes the true signal, is the recovered signal, and tol is the tolerance value. In our experiment, we set .
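The experimental protocol can be sketched in code as follows. Several details are assumptions made only for illustration, because they are not recoverable from the text here: the measurement matrix is taken to be Gaussian with variance 1/m, the non-zero amplitudes are taken to be ±1, and success is taken to mean that the relative $\ell_2$ error is at most `tol`. The values of `m`, `k`, `sigma`, and `tol` below are hypothetical; only n = 4096 comes from the text.

```python
import numpy as np

def make_instance(n, m, k, sigma, rng):
    """Generate one noisy sparse-recovery instance (assumed model)."""
    Phi = rng.standard_normal((m, n)) / np.sqrt(m)   # assumed Gaussian matrix
    x = np.zeros(n)
    support = rng.choice(n, size=k, replace=False)   # uniform random support
    x[support] = rng.choice([-1.0, 1.0], size=k)     # assumed +/-1 amplitudes
    b = Phi @ x + sigma * rng.standard_normal(m)     # noisy observation
    return Phi, x, b

def is_success(x_true, x_hat, tol):
    """Assumed success criterion: relative l2 error not larger than tol."""
    return bool(np.linalg.norm(x_hat - x_true) <= tol * np.linalg.norm(x_true))

rng = np.random.default_rng(1)
Phi, x_true, b = make_instance(n=4096, m=600, k=75, sigma=0.01, rng=rng)
print(Phi.shape, np.count_nonzero(x_true), b.shape)
```

A success rate is then the fraction of repeated instances, each with a fresh matrix, signal, and initial state, for which `is_success` returns `True` for the recovered signal.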

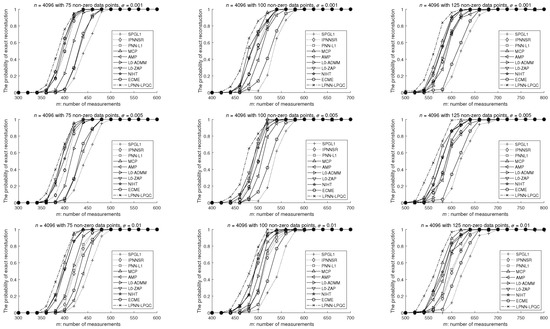

The success rate results are shown in Figure 7. For all the algorithms, the performance improves as the number of measurements increases. From the figure, all the other models are superior to the $\ell_1$-norm models, including SPGL1, IPNNSR, and PNN-$\ell_1$. In addition, compared with the seven numerical methods, our proposed method usually needs fewer measurements to obtain the same probability of exact reconstruction.

Figure 7.

Simulation results of different algorithms, where . For the first column, . For the second column, . For the third column, . The three rows are based on three different noise levels. The experiments are repeated 100 times using different settings.

Compared with the two analog models, IPNNSR and PNN-$\ell_1$, our LPNN-LPQC model is better. For example, with 125 non-zero elements and noise level equal to 0.01, IPNNSR and PNN-$\ell_1$ need around 650 measurements, while our LPNN-LPQC needs only around 575 to 600 measurements. The rationale of the improvement is that our model uses an $\ell_0$-norm approach, while IPNNSR and PNN-$\ell_1$ are based on the $\ell_1$-norm.

6.5. Comparison with Other Analog Models

As the paper proposes an analog model, namely LPNN-LPQC, for sparse recovery, this subsection gives a more detailed comparison among our proposed LPNN-LPQC, IPNNSR [11], and PNN-$\ell_1$ [13]. We discuss three aspects: probability of exact reconstruction, reconstruction error, and circuit realization. In IPNNSR [11] and PNN-$\ell_1$ [13], the circuit realizations of the thresholding operator and the projection operator were not addressed.

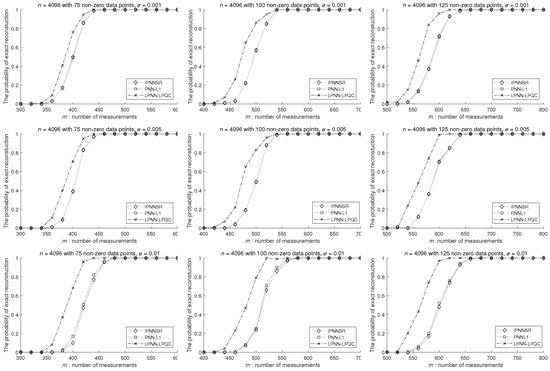

6.5.1. Successful Rate of Recall

Since Figure 7 shows the success rates of all algorithms, it may not be easy to see the differences among the three analog models. In Figure 8, we present only the results of the three analog models. From the figure, it can be seen that the performance of our LPNN-LPQC is better than that of IPNNSR and PNN-$\ell_1$. In particular, the performance improvement is significant when the number of non-zero elements is large and the noise level is high. For instance, with 125 non-zero elements and noise level = 0.01, IPNNSR and PNN-$\ell_1$ need around 650 measurements for high success rates of recall, while our LPNN-LPQC needs only around 575 to 600 measurements.

Figure 8.

Comparison with other analog models on probability of reconstruction, where . For the first column, . For the second column, . For the third column, . The three rows are based on three different noise levels. The experiments are repeated 100 times using different settings.

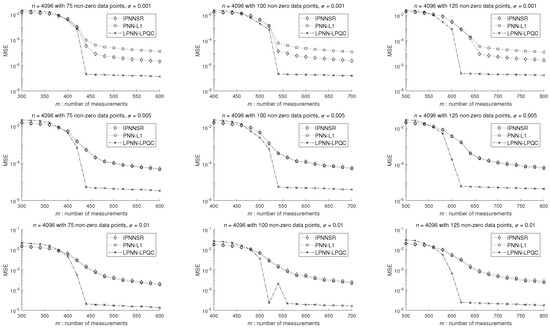

6.5.2. MSE of Recall

The success rate results concern whether the estimated vector has the correct non-zero positions. Here, in Figure 9, we present the mean squared error (MSE) versus the number of measurements used. From Figure 9, it can be seen that in terms of MSE, the performance of our LPNN-LPQC is much better than that of IPNNSR and PNN-$\ell_1$. In most cases, the MSE values of our proposed model are less than those of IPNNSR and PNN-$\ell_1$ by one or two orders of magnitude. For example, for , , and , the MSE values of IPNNSR and PNN-$\ell_1$ are around , while the MSE value of the proposed model is only around .

Figure 9.

Comparison with other analog models on MSE, where . For the first column, . For the second column, . For the third column, . The three rows are based on three different noise levels. The experiments are repeated 100 times using different settings.

7. Conclusions

This paper proposed an LPNN-LPQC method for sparse recovery in noisy environments. The proposed algorithm is an analog neural network. We showed that the equilibrium points of the model satisfy the KKT conditions of the LPQC problem, and that the equilibrium points of the model are asymptotically stable. From the simulation results, we can see that the performance of the proposed algorithm is comparable to, or even superior to, many state-of-the-art numerical methods. In addition, our proposed algorithm is superior to two analog models. We also presented the circuit realization for the thresholding element and performed a circuit simulation in MATLAB Simulink to verify our realization.

In our analysis, we assume that the realization of the analog circuit does not have any time delay or synchronization problem. In fact, time delay and mis-synchronization may affect the stability of analog circuits [52,53]. Hence, one direction for future work is to investigate the behaviour of the proposed analog model with time delay or mis-synchronization.

Author Contributions

Conceptualization, H.W., R.F., C.-S.L. and A.G.C.; methodology, C.-S.L., H.W., R.F. and H.P.C.; software, H.W., R.F., H.P.C.; writing—original draft preparation, H.W.; writing—review and editing, C.-S.L.; supervision, C.-S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62206178) and a research grant from City University of Hong Kong (Grant No. 9678295).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LPNN | Lagrange Programming Neural Network |
| LPNN-LPQC | LPNN with objective and Quadratic Constraint |
| CBPDN | Constrained Basis Pursuit Denoise |
| LCA | Locally Competitive Algorithm |
| KKT | Karush-Kuhn-Tucker |
| MCP | Minimax Concave Penalty |
| NIHT | Normalized Iterative Hard Threshold |
| AMP | Approximate Message Passing |
| | -norm Zero Attraction Projection |
| | -norm Alternating Direction Method of Multipliers |
| ECME | Expectation Conditional Maximization Either |

Appendix A. Proof of Property 3

- P3.a

Recall that , and that is an even function. Clearly, is an odd function. First, since is an even function, the proof only presents the case of .

In the case of , is monotonically increasing. Hence

In addition, it is easy to show that is monotonically increasing, and that . In conclusion, we have . P3.a is proved.

- P3.b

Since is an even function, the proof only presents the case of .

In the case of , is monotonically increasing. Hence, we have

Additionally, it is obvious that . Therefore, . P3.b is proved.

- P3.c

For simplicity, let . Thus, the derivative of can be rewritten as

and the second derivative of is

Since is an odd function and is an even function, we only present the proof for the case of . The proof consists of two parts. First, we show the monotonic property of for . Second, the upper and lower bounds of are established.

For the case of , we have . The second derivative of in (A4) can be rewritten as:

Since , we can deduce that

From (A5), (A6), , and , we deduce that, if , then and is monotonically decreasing. Thus, for , a lower bound on is . Since and , we have

On the other hand, an upper bound in , is . The value of is

From (A5) and (A6), and the fact that is an even function, we can conclude that for a sufficiently large , if , then . The proof of P3.c is completed.

- P3.d

The proof consists of two parts. First, we show the monotonic properties of for . Second, the upper and lower bounds of are established. Since is an odd function and is an even function, we only need to consider the region of .

For , , is monotonically decreasing, and . In this region, the second derivative of is

Obviously, in this region, , and

Hence, we can conclude that, for , and then is monotonically increasing. For , the upper bound of exists when . The upper bound is

In addition, a lower bound on exists at ,

To sum up, for a sufficiently large , if , then . The proof of P3.d is completed.

Appendix B. Proof of Property 4

The continuity and differentiability of can be proved according to the following lemma [54].

Lemma A1.

(Inverse function) Let ϕ be a strictly monotone continuous function on , with ϕ differentiable at and . Then exists and is continuous and strictly monotone. Moreover, is differentiable at and

From (A3), one can easily verify that , and that is a strictly monotone increasing continuous function. Based on Lemma A1, exists. Additionally, it is continuous and differentiable at every point. Hence is a continuous and differentiable function. The proof is completed.
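For reference, the standard inverse function theorem for strictly monotone functions, which is the result that Lemma A1 invokes, can be written as below. The symbols $[a,b]$, $x_0$, and $y_0$ are assumed notation and may differ from the authors' own.

```latex
% Inverse function theorem for strictly monotone functions (standard form).
% Assumed notation: interval [a,b], interior point x_0, image y_0 = \phi(x_0).
Let $\phi$ be strictly monotone and continuous on $[a,b]$,
differentiable at $x_0 \in (a,b)$ with $\phi'(x_0) \neq 0$.
Then $\phi^{-1}$ exists on $\phi([a,b])$, is continuous and strictly monotone,
and is differentiable at $y_0 = \phi(x_0)$ with
\begin{equation*}
  \left(\phi^{-1}\right)'(y_0) = \frac{1}{\phi'(x_0)} .
\end{equation*}
```

In the proof of Property 4, this is why strict monotonicity and a non-vanishing derivative of the function in (A3) are enough to conclude that its inverse is continuous and differentiable at every point.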

Appendix C. Proof of Property 5

- Boundedness: We use the Taylor series of to estimate the values of . Since is an even function, the proof only presents the case of .

First, we can use to obtain the Taylor series expansion of :

where and are the first and second order derivatives of at , respectively. Since and , we obtain

Additionally, . As , from Lemma 2,

Additionally, from (A3),

Thus, we have

As , we obtain . Similarly, it is also easy to obtain that .

Next, we determine the value of for , i.e., .

Let and . Since is an even function and is monotonically increasing (for ), for , we have

From P3, we have . Thus,

Thus, for a sufficiently small , . In addition, for sufficiently small and sufficiently large , . As , we have for .

- Sparsity: Since is an even function, we only show the proof for , i.e., the case of . Let and . For , is monotonically increasing. Hence, we have . Therefore, we have

From P3.b, we can deduce that . For a sufficiently small , . Obviously, in this region is bounded. Hence, we have . As , we can say that for . In conclusion, if . The proof is completed.

References

- Chua, L.; Lin, G.N. Nonlinear programming without computation. IEEE Trans. Circuits Syst. 1984, 31, 182–188.

- Tank, D.; Hopfield, J. Simple ‘neural’ optimization networks: An A/D converter, signal decision circuit, and a linear programming circuit. IEEE Trans. Circuits Syst. 1986, 33, 533–541.

- Xia, Y.; Leung, H.; Wang, J. A projection neural network and its application to constrained optimization problems. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 2002, 49, 447–458.

- Xia, Y.; Wang, J. A general projection neural network for solving monotone variational inequalities and related optimization problems. IEEE Trans. Neural Netw. 2004, 15, 318–328.

- Wang, H.; Lee, C.M.; Feng, R.; Leung, C.S. An analog neural network approach for the least absolute shrinkage and selection operator problem. Neural Comput. Appl. 2018, 29, 389–400.

- Wang, Y.; Li, X.; Wang, J. A neurodynamic optimization approach to supervised feature selection via fractional programming. Neural Netw. 2021, 136, 194–206.

- Bouzerdoum, A.; Pattison, T.R. Neural network for quadratic optimization with bound constraints. IEEE Trans. Neural Netw. 1993, 4, 293–304.

- Feng, R.; Leung, C.S.; Constantinides, A.G.; Zeng, W.J. Lagrange Programming Neural Network for Nondifferentiable Optimization Problems in Sparse Approximation. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2395–2407.

- Wang, H.; Feng, R.; Leung, A.C.S.; Tsang, K.F. Lagrange programming neural network approaches for robust time-of-arrival localization. Cogn. Comput. 2018, 10, 23–34.

- Shi, Z.; Wang, H.; Leung, C.S.; So, H.C. Robust MIMO radar target localization based on Lagrange programming neural network. Signal Process. 2020, 174, 107574.

- Liu, Q.; Wang, J. L1-minimization algorithms for sparse signal reconstruction based on a projection neural network. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 698–707.

- Yan, Z.; Le, X.; Wen, S.; Lu, J. A Continuous-Time Recurrent Neural Network for Sparse Signal Reconstruction Via ℓ1 Minimization. In Proceedings of the 2018 Eighth International Conference on Information Science and Technology (ICIST), Cordoba, Granada, and Seville, Spain, 30 June–6 July 2018; pp. 43–49.

- Wen, H.; Wang, H.; He, X. A Neurodynamic Algorithm for Sparse Signal Reconstruction with Finite-Time Convergence. Circuits Syst. Signal Process. 2020, 39, 6058–6072.

- Donoho, D.; Huo, X. Uncertainty principles and ideal atomic decomposition. IEEE Trans. Inf. Theory 1999, 47, 2845–2862.

- Donoho, D.L.; Elad, M. Optimally sparse representation in general (nonorthogonal) dictionaries via l1 minimization. Proc. Natl. Acad. Sci. USA 2003, 100, 2197–2202.

- Chartrand, R. Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process. Lett. 2007, 14, 707–710.

- Blumensath, T.; Davies, M.E. Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 2008, 14, 629–654.

- Jin, D.; Yang, G.; Li, Z.; Liu, H. Sparse recovery algorithm for compressed sensing using smoothed ℓ0-norm and randomized coordinate descent. Mathematics 2019, 7, 834.

- Stanković, L.; Sejdić, E.; Stanković, S.; Daković, M.; Orović, I. A tutorial on sparse signal reconstruction and its applications in signal processing. Circuits Syst. Signal Process. 2019, 38, 1206–1263.

- Stanković, I.; Ioana, C.; Daković, M. On the reconstruction of nonsparse time-frequency signals with sparsity constraint from a reduced set of samples. Signal Process. 2018, 142, 480–484.

- Dai, C.; Che, H.; Leung, M.F. A neurodynamic optimization approach for L1 minimization with application to compressed image reconstruction. Int. J. Artif. Intell. Tools 2021, 30, 2140007.

- Bioucas-Dias, J.M.; Figueiredo, M.A. A new TwIST: Two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 2007, 16, 2992–3004.

- Kong, X.; Zhao, Y.; Xue, J.; Chan, J.C.W.; Kong, S.G. Global and local tensor sparse approximation models for hyperspectral image destriping. Remote Sens. 2020, 12, 704.

- Costanzo, S.; Rocha, Á.; Migliore, M.D. Compressed sensing: Applications in radar and communications. Sci. World J. 2016, 2016, 5407415.

- Li, S.; Zhao, G.; Zhang, W.; Qiu, Q.; Sun, H. ISAR imaging by two-dimensional convex optimization-based compressive sensing. IEEE Sens. J. 2016, 16, 7088–7093.

- Craven, D.; McGinley, B.; Kilmartin, L.; Glavin, M.; Jones, E. Compressed sensing for bioelectric signals: A review. IEEE J. Biomed. Health Inform. 2014, 19, 529–540.

- Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM Rev. 2001, 43, 129–159.

- Osborne, M.R.; Presnell, B.; Turlach, B.A. A new approach to variable selection in least squares problems. IMA J. Numer. Anal. 2000, 20, 389–403.

- Candes, E.; Romberg, J. l1-Magic. 2017. Available online: https://candes.su.domains/software/l1magic/downloads/l1magic.pdf (accessed on 1 December 2022).

- van den Berg, E.; Friedlander, M.P. SPGL1: A Solver for Sparse Least Squares. 2007. Available online: https://friedlander.io/spgl1/ (accessed on 1 December 2022).

- Rozell, C.J.; Johnson, D.H.; Baraniuk, R.G.; Olshausen, B.A. Sparse coding via thresholding and local competition in neural circuits. Neural Comput. 2008, 20, 2526–2563.

- Engan, K.; Rao, B.D.; Kreutz-Delgado, K. Regularized FOCUSS for subset selection in noise. In Proceedings of the NORSIG 2000, Kolmarden, Sweden, 13–15 June 2000; pp. 247–250.

- Rao, B.D.; Kreutz-Delgado, K. An affine scaling methodology for best basis selection. IEEE Trans. Signal Process. 1999, 47, 187–200.

- Zhang, C.H. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 2010, 38, 894–942.

- Breheny, P.; Huang, J. Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. Ann. Appl. Stat. 2011, 5, 232.

- Blumensath, T.; Davies, M.E. Normalized iterative hard thresholding: Guaranteed stability and performance. IEEE J. Sel. Top. Signal Process. 2010, 4, 298–309.

- Donoho, D.L.; Maleki, A.; Montanari, A. Message-passing algorithms for compressed sensing. Proc. Natl. Acad. Sci. USA 2009, 106, 18914–18919.

- Jin, J.; Gu, Y.; Mei, S. A stochastic gradient approach on compressive sensing signal reconstruction based on adaptive filtering framework. IEEE J. Sel. Top. Signal Process. 2010, 4, 409–420.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Song, C.; Xia, S.T. Alternating direction algorithms for ℓ0 regularization in compressed sensing. arXiv 2016, arXiv:1604.04424.

- Qiu, K.; Dogandzic, A. ECME thresholding methods for sparse signal reconstruction. arXiv 2010, arXiv:1004.4880.

- Zhang, S.; Constantinides, A.G. Lagrange Programming Neural Networks. IEEE Trans. Circuits Syst. II 1992, 39, 441–452.

- Shi, Z.; Wang, H.; Leung, C.S.; So, H.C.; Liang, J.; Tsang, K.F.; Constantinides, A.G. Robust ellipse fitting based on Lagrange programming neural network and locally competitive algorithm. Neurocomputing 2020, 399, 399–413.

- Balavoine, A.; Rozell, C.; Romberg, J. Global convergence of the locally competitive algorithm. In Proceedings of the IEEE Signal Processing Education Workshop (DSP/SPE) 2011, Sedona, AZ, USA, 4–7 January 2011; pp. 431–436.

- Pandey, O. Operational Amplifier (Op-Amp). In Electronics Engineering; Springer: Berlin/Heidelberg, Germany, 2022; pp. 233–270.

- Bult, K.; Wallinga, H. A CMOS four-quadrant analog multiplier. IEEE J. Solid-State Circuits 1986, 21, 430–435.

- Chen, C.; Li, Z. A low-power CMOS analog multiplier. IEEE Trans. Circuits Syst. II Express Briefs 2006, 53, 100–104.

- Filanovsky, I.; Baltes, H. Simple CMOS analog square-rooting and squaring circuits. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 1992, 39, 312–315.

- Sakul, C. A new CMOS squaring circuit using voltage/current input. In Proceedings of the 23rd International Technical Conference on Circuits/Systems, Computers and Communications ITC-CSCC, Phuket, Thailand, 5–8 July 2008; pp. 525–528.

- van den Berg, E.; Friedlander, M.P. Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 2008, 31, 890–912.

- Ji, S.; Xue, Y.; Carin, L. Bayesian compressive sensing. IEEE Trans. Signal Process. 2008, 56, 2346–2356.

- Vadivel, R.; Hammachukiattikul, P.; Zhu, Q.; Gunasekaran, N. Event-triggered synchronization for stochastic delayed neural networks: Passivity and passification case. Asian J. Control 2022.

- Chanthorn, P.; Rajchakit, G.; Humphries, U.; Kaewmesri, P.; Sriraman, R.; Lim, C.P. A delay-dividing approach to robust stability of uncertain stochastic complex-valued Hopfield delayed neural networks. Symmetry 2020, 12, 683.

- Spruck, J. Strictly Monotone Functions and the Inverse Function Theorem. Lecture Notes in MATH–405. Available online: https://math.jhu.edu/~js/math405/405.monotone.pdf (accessed on 24 November 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).