1. Introduction

Automated authorship attribution (AA) is defined in [

1] as the task of determining authorship of an unknown text based on the textual characteristics of the text itself. Today the AA is useful in a plethora of fields: from the educational and research domain to detect plagiarism [

2] to the justice domain to analyze evidence on forensic cases [

3] and cyberbullying [

4], to the social media [

5,

6] to detect compromised accounts [

7].

Most approaches in the area of artificial intelligence treat the AA problem by using simple classifiers (e.g., linear SVM or decision tree) that have bag-of-words (character n-grams) as features or other conventional feature sets [

8,

9]. Although deep neural learning was already used for natural language processing (NLP), the adoption of such strategies for authorship identification occurred later. In recent years, pre-trained language models (such as BERT and GPT-2) have been used for finetuning and accuracy improvements [

8,

10,

11].

The challenges in solving the AA problem can be grouped into three main groups [

8]:

The lack of large-scale datasets;

The lack of methodological diversity;

The ad hoc nature of authorship.

The availability of large-scale datasets has improved in recent years as large datasets have become widespread [

12,

13]. Other issues that relate to the datasets are the language in which the texts are written, the domain, the topic, and the writing environment. Each of these aspects has its own particularities. From the language perspective, the issue is that most available datasets consist of texts written in English. There is PAN18 [

9] for English, French, Italian, Polish, and Spanish; or PAN19 [

14] for English, French, Italian, and Spanish. However, there are not very many datasets for other languages and this is crucial as there are particularities that pertain to the language [

15].

The methodological diversity has also improved in recent years, as it is detailed in [

8]. However, the ad hoc nature of authorship is a more difficult issue, as a set of features that differentiates one author from the rest may not work for another author due to the individuality aspect of different writing styles. Even for one author, the writing style can evolve or change over a period of time, or it can differ depending on the context (e.g., the domain, the topic, or the writing environment). Thus, modeling the authorial writing style has to be carefully considered and needs to be tailored to a specific set of authors [

8]. Therefore, selecting a distinguishing set of features is a challenging task.

We propose a new dataset named ROST (ROmanian Stories and other Texts) as there are few available datasets that contain texts written in Romanian [

16]. The existing datasets are small, on obscure domains, or translated from other languages. Our dataset consists of 400 texts written by 10 authors. We have elements that pertain to the intended heterogeneity of the dataset such as:

Different text types: stories, short stories, fairy tales, novels, articles, and sketches;

Different number of texts per author: ranging from 27 to 60;

Different sources: texts are collected from 4 different websites;

Different text lengths: ranging from 91 to 39,195 words per text;

Different periods: the time period in which the considered texts were written spans over 3 centuries, which introduces diachronic developments;

Different mediums: texts were written with the intention of being read from paper medium (most of the considered authors) to online (two contemporary authors). This aspect considerably changes the writing style, as shorter sentences and shorter words are used online, and they also contain more adjectives and pronouns [

17].

As our set is heterogeneous (as described above) from multiple perspectives, the authorship attribution is even more difficult. We investigate this classification problem by using five different techniques from the artificial intelligence area:

Artificial neural networks (ANN);

Multi-expression programming (MEP);

K-nearest neighbor (k-NN);

Support vector machine (SVM);

Decision trees (DT) with C5.0.

For each of these methods, we investigate different scenarios by varying the number and the type of some features to determine the context in which they obtain the best results. The aim of our investigations is twofold. On one side, the result of this investigation is to determine which method performs best while working on the same data. On another side, we try to find out the proper number and type of features that best classify the authors on this specific dataset.

The paper is organized as follows:

Section 2 describes the AA state of the art by using methods from artificial intelligence; details the entire prerequisite process to be considered before applying the specific AI algorithms (highlighting possible “stylometric features” to be considered); provides a table with some available datasets; presents a number of AA methods already proposed; describes the steps of the attribution process; presents an overview and a comparison of AA state-of-the-art methods.

Section 3 details the specific particularities (e.g., in terms of size, sources, time frames, types of writing, and writing environments) of the database we are proposing and we are going to use, and the building and scaling/pruning process of the feature set.

Section 4 introduces the five methods we are going to use in our investigation.

Section 5 presents the results and interprets them, making a comparison between the five methods and the different sets of features used; measures the results by using metrics that allow a comparison with the results of other state-of-the-art methods.

Section 6 concludes with final remarks on the work and provides future possible directions and investigations.

2. Related Work

The AA detection can be modeled as a classification problem. The starting premise is that each author has a stylistic and linguistic “fingerprint” in their work [

18]. Therefore, in the realm of AI, this means extracting a set of characteristics, which can be identified in a large-enough writing sample [

8].

2.1. Features

Stylometric features are the characteristics that define an author’s style. They can be quantified, learned [

19], and classified into five groups [

20]:

Lexical (the text is viewed as a sequence of tokens grouped into sentences, with each token corresponding to a word, number, or punctuation mark):

Token-based (e.g., word length, sentence length, etc.);

Vocabulary richness (i.e., attempts to quantify the vocabulary diversity of a text);

Word frequencies (e.g., the traditional “bag-of-words” representation [

21] in which texts become vectors of word frequencies disregarding contextual information, i.e., the word order);

Word n-grams (i.e., sequences of n contiguous words also known as word collocations);

Errors (i.e., intended to capture the idiosyncrasies of an author’s style) (requires orthographic spell checker).

Character (the text is viewed as a sequence of characters):

Character types (e.g., letters, digits, etc.) (requires character dictionary);

Character n-grams (i.e., considers all sequences of n consecutive characters in the texts; n can have a variable or fixed length);

Compression methods (i.e., the use of a compression model acquired from one text to compress another text; compression models are usually based on repetitions of character sequences).

Syntactic (text-representation which considers syntactic information):

Part-of-speech (POS) (requires POS tagger—a tool that assigns a tag of morpho-syntactic information to each word-token based on contextual information);

Chunks (i.e., phrases);

Sentence and phrase structure (i.e., a parse tree of each sentence is produced);

Rewrite rules frequencies (these rules express part of the syntactic analysis, helping to determine the syntactic class of each word as the same word can have different syntactic values based on different contexts);

Errors (e.g., sentence fragments, run-on sentences, mismatched use of tenses) (requires syntactic spell checker).

Semantic (text-representation which considers semantic information):

Application-specific (the text is viewed from an application-specific perspective to better represent the nuances of style in a given domain):

Functional (requires specialized dictionaries);

Structural (e.g., the use of greetings and farewells in messages, types of signatures, use of indentation, and paragraph length);

Content-specific (e.g., content-specific keywords);

Language-specific.

The lexical and character features are simpler because they view the text as a sequence of word-tokens or characters, not requiring any linguistic analysis, in contrast with the syntactic and semantic characteristics, which do. The application-specific characteristics are restricted to certain text domains or languages.

A simple and successful feature selection, based on lexical characteristics, is made by using the top of the

N most frequent words from a corpus containing texts of the candidate author. Determining the best value of

N was the focus of numerous studies, starting from 100 [

22], and reaching 1000 [

23], or even all words that appear at least twice in the corpus [

24]. It was observed, that depending on the value of

N, different types of words (in terms of content specificity) make up the majority. Therefore, when the size of

N falls within dozens, the most frequent words of a corpus are closed-class words (i.e., articles, prepositions, etc.), while when

N exceeds a few hundred words, open-class words (i.e., nouns, adjectives, verbs) are the majority [

20].

Even though the word n-grams approach comes as a solution to keeping the contextual information, i.e., the word order, which is lost in the word frequencies (or “bag-of-words”) approach, the classification accuracy is not always better [

25,

26].

The main advantage of character feature selection is that they pertain to any natural language and corpus. Furthermore, even the simplest in this category (i.e., character types) proved to be useful to quantify the writing style [

27].

The character n-grams have the advantages of capturing the nuances of style and being tolerant to noise (e.g., grammatical errors or making strange use of punctuation), and the disadvantage is that they capture redundant information [

20].

The syntactic feature selection requires the use of Natural Language Processing (NLP) tools to perform a syntactic analysis of texts, and they are language-dependent. Additionally, being a method that requires complex text processing, noisy datasets may be produced due to unavoidable errors made by the parser [

20].

For semantic feature selection an even more detailed text analysis is required for extracting stylometric features. Thus, the measures produced may be less accurate as more noise may be introduced while processing the text. NLP tools are used here for sentence splitting, POS tagging, text chunking, and partial parsing. However, complex tasks, such as full syntactic parsing, semantic analysis, and pragmatic analysis, are hard to be achieved for an unrestricted text [

20].

A comprehensive survey of the state of the art in stylometry is conducted in [

28].

The most common approach used in AA is to extract features that have a high discriminatory potential [

29]. There are multiple aspects that have to be considered in AA for selecting the appropriate set of features. Some of them are the language, the literary style (e.g., poetry, prose), the topic addressed by the text (e.g., sports, politics, storytelling), the length of the text (e.g., novels, tweets), the number of text samples, and the number of considered features. For instance, lexical and character features, although more simple, can considerably increase the dimensionality of the feature set [

20]. Therefore, feature selection algorithms can be applied to reduce the dimensionality of such feature sets [

30]. This also helps the classification algorithm to avoid overfitting on the training data.

Another prerequisite for the training phase is deciding whether the training texts are processed individually or cumulatively (per author). From this perspective, the following two approaches can be distinguished [

20]:

Instance-based approach (i.e., each training text is individually represented as a separate instance in the training process to create a reliable attribution model);

Profile-based approach (i.e., a cumulative representation of an author’s style, also known as the author’s profile, is extracted by concatenating all available training texts of one author into one large file to flatten differences between texts).

Efstathios Stamatatos offers in [

20] a comparison between the two aforementioned approaches.

2.2. Datasets

In

Table 1, we present a list of datasets used in AA investigations.

There is a large variation between the datasets. In terms of language, there are usually datasets with texts that are written in one language, and there are a few that have texts written in multiple languages. However, most of the available datasets contain texts written in English.

The Size column is generally the number of texts and authors that have been used in AA investigations. For example, PAN11 and PAN12 have thousands of texts and hundreds of authors. However, in the referenced paper, only a few were used. The datasets vary in the number of texts from hundreds to hundreds of thousands, and in terms of the number of authors, from tens to tens of thousands.

2.3. Strategies

According to [

46], the entire process of text classification occurs in 6 stages:

Data acquisition (from one or multiple sources);

Data analysis and labeling;

Feature construction and weighting;

Feature selection and projection;

Training of a classification model;

Solution evaluation.

The classification process initiates with data acquisition, which is used to create the dataset. There are two strategies for the analysis and labeling of the dataset [

46]: labeling groups of texts (also called

multi-instance learning) [

47], or assigning a label or labels to each text part (by using supervised methods) [

48]. To yield the appropriate data representation required by the selected learning method, first, the features are selected and weighted [

46] according to the obtained labeled dataset. Then, the number of features is reduced by selecting only the most important features and projected onto a lower dimensionality. There are two different representations of textual data:

vector space representation [

49] where the document is represented as a vector of feature weights, and

graph representation [

50] where the document is modeled as a graph (e.g., nodes represent words, whereas edges represent the relationships between the words). In the next stage, different learning approaches are used to train a classification model. Training algorithms can be grouped into different approaches [

46]:

supervised [

48] (i.e., any machine learning process),

semi-supervised [

51] (also known as self-training, co-training, learning from the labeled and unlabeled data, or transductive learning),

ensemble [

52] (i.e., training multiple classifiers and considering them as a “committee” of decision-makers),

active [

53] (i.e., the training algorithm has some role in determining the data it will use for training),

transfer [

54] (i.e., the ability of a learning mechanism to improve the performance for a current task after having learned a different but related concept or skill from a previous task; also known as

inductive transfer or

transfer of knowledge across domains), or

multi-view learning [

55] (also known as

data fusion or

data integration from multiple feature sets, multiple feature spaces, or diversified feature spaces that may have different distributions of features).

By providing probabilities or weights, the trained classifier is then able to decide a class for each input vector. Finally, the classification process is evaluated. The performance of the classifier can be measured based on different indicators [

46]: precision, recall, accuracy, F-score, specificity, area under the curve (AUC), and error rate. These all are related to the actual classification task. However, other performance-oriented indicators can also be considered, such as CPU time for training, CPU time for testing, and memory allocated to the classification model [

56].

Aside from the aforementioned challenges, there are also other sets of issues that are currently being investigated. These are:

Issues related to cross-domain, cross topic and/or cross-genre datasets;

Issues related to the specificity of the used language;

Issues regarding the style change of authors when the writing environment changes from offline to online;

The balanced or imbalanced nature of datasets.

Some examples which focus on these types of issues, alongside their solutions and/or findings, are presented next.

Participants in the Identification Task at PAN-2018 [

9], investigated two types of classifications. The corpus consists of fan-fiction texts written in English, French, Italian, Polish, and Spanish, and a set of questions and answers on several topics in English. First, they addressed the cross-domain AA, finding that heterogeneous ensembles of simple classifiers and compression models outperformed more sophisticated approaches based on deep learning. Also, the set size is inversely correlated with attribution accuracy, especially for cases when more than 10 authors are considered. Second, they investigated the detection of style changes, where single-author and multi-author texts were distinguished. Techniques ranging from machine learning ensembles to deep learning with a rich set of features have been used to detect style changes, achieving the accuracy of up to nearly 90% over the entire dataset and several reaching 100%.

The issue of cross-topic confusion is addressed in [

57] for AA. This problem arises when the training topic differs from the test topic. In such a scenario, the types of errors caused by the topic can be distinguished from the errors caused by the detection of the writing style. The findings show that classification is least likely to be affected by topic variations when parts of speech are considered as features.

The analysis conducted in [

58] aimed to determine which approach, such as topic or style, is better for AA. The findings showed that online news, which have a wide variety of topics, are better classified using content-based features, while texts with less topical variation (e.g., legal judgments and movie reviews) benefit from using style-based features.

In [

59] it is shown that syntax (e.g., sentence structure) helps AA on cross-genre texts, while additional lexical information (e.g., parts of speech such as nouns, verbs, adjectives, and adverbs) helps to classify cross-topic and single-domain texts. It is also shown that syntax-only models may not be efficient.

Language-specific issues (e.g., the complexity and structure of sentences) are addressed in [

15] in relation to the Arabic language. Ensemble methods were used to improve the effectiveness of the AA task.

The authors of [

60] propose solutions to address the many issues in AA (e.g., cross-domain, language specificity, writing environment) by introducing the concept of

stacked classifiers, which are built from words, characters, parts of speech n-grams, syntactic dependencies, word embeddings, and more. This solution proposes that these

stacked classifiers are dynamically included in the AA model according to the input.

Two different AA approaches called “writer-dependent” and “writer-independent” were addressed in [

37]. In the first approach, they used a Support Vector Machine (SVM) to build a model for each author. The second approach combined a feature-based description with the concept of dissimilarity to determine whether a text is written by a particular author or not, thereby reducing the problem to a two-class problem. The tests were performed on texts written in Portuguese. For the first approach, 77 conjunctions and 94 adverbs were used to determine the authorship and the best accuracy results on the test set composed of 200 documents from 20 different authors were 83.2%. For the second approach, the same set of documents and conjunctions was used, obtaining the best result of 75.1% accuracy. In [

38], along with conjunctions and adverbs, 50 verbs and 91 pronouns were added to improve the results obtained previously, achieving a 4% improvement in both “writer-dependent” and “writer-independent” approaches.

The challenges of variations in authors’ style when the writing environment changes from traditional to online are addressed in [

17]. These investigations consider changes in sentence length, word usage, readability, and frequency use of some parts of speech. The findings show that shorter sentences and words, as well as more adjectives and pronouns, are used online.

The authors of [

61] proposed a feature extraction solution for AA. They investigated trigrams, bags of words, and most frequent terms in both balanced and imbalanced samples and showed with 79.68% accuracy that an author’s writing style can be identified by using a single document.

2.4. Comparison

AA is a very important and currently intensively researched topic. However, the multitude of approaches makes it very difficult to have a unified view of the state-of-the-art results. In [

10], authors highlight this challenge by noting significant differences in:

Datasets

- -

In terms of size: small (CCAT50, CMCC, Guardian10), medium (IMDb62, Blogs50), and large (PAN20, Gutenberg);

- -

In terms of content: cross-topic (), cross-genre (), unique authors;

- -

In terms of imbalance (imb): i.e., standard deviation of the number of documents per author;

- -

In terms of topic confusion (as detailed in [

57]).

Performance metrics

- -

In terms of type: accuracy, F1, c@1, recall, precision, macro-accuracy, AUC, R@8, and others;

- -

In terms of computation: even for the same performance metrics there were different ways of computing them.

Methods

- -

In terms of the feature extraction method,

- *

Feature-based: n-gram, summary statistics, co-occurrence graphs;

- *

Embedding-based: char embedding, word embedding, transformers

- *

Feature and embedding-based: BERT.

The work presented in [

10] tries to address and “resolve” these differences, bringing everything to a common denominator, even when that meant recreating some results. To differentiate between different methods, authors of [

10] grouped the results in 4 classes:

Ngram: includes character n-grams, parts-of-speech and summary statistics as shown in [

57,

62,

63,

64];

PPM: uses Prediction by Partial Matching (PPM) compression model to build a character-based model for each author, with works presented in [

28,

65];

BERT: combines a BERT pre-trained language model with a dense layer for classification, as in [

66];

pALM: the per-Author Language Model (pALM), also using BERT as described in [

67].

The results of the state of the art as presented in [

10] are shown in

Table 2.

As can be seen in

Table 2, the methods in the Ngram class generally work best. However, BERT-class methods can perform better on large training sets that are not cross-topic and/or cross-genre. The authors of [

10] state that from their investigations it can be inferred that Ngram-class methods are preferred for datasets that have less than 50,000 words per author, while BERT-class methods should be preferred for datasets with over 100,000 words per author.

3. Proposed Dataset

The texts considered are Romanian stories, short stories, fairy tales, novels, articles, and sketches.

There are 400 such texts of different lengths, ranging from 91 to 39,195 words.

Table 3 presents the averages and standard deviations of the number of words, unique words, and the ratio of words to unique words for each author. There are differences up to almost 7000 words between the average word counts (e.g., between Slavici and Oltean). For unique words, the difference between averages goes up to more than 1300 unique words (e.g., between Eminescu and Oltean). Even the ratio of total words to unique words is a significant difference between the authors (e.g., between Slavici and Oltean).

Eminescu and Slavici, the two authors with the largest averages also have large standard deviations for the number of words and the number of unique words. This means that their texts range from very short to very long. Gârleanu and Oltean have the shortest texts, as their average number of words and unique words and the corresponding standard deviations are the smallest.

There is also a correlation between the three groups of values (pertaining to the words, unique words, and the ratio between the two) that is to be expected as a larger or smaller number of words would contain a similar proportion of unique words or the ratio of the two, while the standard deviations of the ratio of total words to unique words tend to be more similar. However, Slavici has a very high ratio, which means that there are texts in which he repeats the same words more often, and in other texts, he does not. There is also a difference between Slavici and Eminescu here because even if they have similar word count average and unique word count average, their ratio differs. Eminescu has a similar representation in terms of ratio and standard deviation with his lifelong friend Creangă, which can mean that both may have similar tendencies in reusing words.

Table 4 shows the averages of the number of features that are contained in the texts corresponding to each author. The pattern depicted here is similar to that in

Table 3, which is to be expected. However, standard deviations tend to be similar for all authors. These standard deviations are considerable in size, being on average as follows:

4.16 on the set of 56 features (i.e., the list of prepositions),

23.88 on the set of 415 features (i.e., the list of prepositions and adverbs),

25.38 on the set of 432 features (i.e., the list of prepositions, adverbs, and conjunctions).

This means that the frequency of feature occurrence differs even in the texts written by the same author.

The considered texts are collected from 4 websites and are written by 10 different authors, as shown in

Table 5. The diversity of sources is relevant from a twofold perspective. First, especially for old texts, it is difficult to find or determine which is the original version. Second, there may be differences between versions of the same text either because some words are no longer used or have changed their meaning, or because fragments of the text may be added or subtracted. For some authors, texts are sourced from multiple websites.

The diversity of the texts is intentional because we wanted to emulate a more likely scenario where all these characteristics might not be controlled. This is because, for future texts to be tested on the trained models, the text length, the source, and the type of writing cannot be controlled or imposed.

To highlight the differences between the time frames of the periods in which the authors lived and wrote the considered texts, as well as the environment from which the texts were intended to be read, we gathered the information presented in

Table 6. It can be seen that the considered texts were written in the time span of three centuries. This also brings an increased diversity between texts, since within such a large time span there have been significant developments in terms of language (e.g., diachronic developments), writing style relating to the desired reading medium (e.g., paper or online), topics (e.g., general concerns and concerns that relate to a particular time), and viewpoints (e.g., a particular worldview).

The diversity of the texts also pertains to the type of writing, i.e., stories, short stories, fairy tales, novels, articles, and sketches.

Table 7 shows the distribution of these types of writing among the texts belonging to the 10 authors. The difference in the type of writing has an impact on the length of the texts (for example, a

novel is considerably longer than a

short story), genre (for example,

fairy tales have more allegorical worlds that can require a specific style of writing), the topic (for example, an

article may describe more mundane topics, requiring a different type of discourse compared to the other types of writing).

Regarding the list of possible features, we selected as elements to identify the author of a text inflexible parts of speech (IPoS) (i.e., those that do not change their form in the context of communication): conjunctions, prepositions, interjections, and adverbs. Of these, we only considered those that were single-word and we removed the words that may represent other parts of speech, as some of them may have different functions depending on the context, and we did not use any syntactic or semantic processing of the text to carry out such an investigation.

We collected a list of 24 conjunctions that we checked on

dexonline.ro (i.e., site that contains explanatory dictionaries of the Romanian language) not to be any other part of speech (not even among the inflexible ones). We also considered 3 short forms, thus arriving at a list of 27 conjunctions. The process of selecting prepositions was similar to that of selecting conjunctions, resulting in a list of 85 (including some short forms).

The lists of interjections and adverbs were taken from:

To compile the lists of interjections and adverbs, we again considered only single-word ones and we eliminated words that may represent other parts of speech (e.g., proper nouns, nouns, adjectives, verbs), resulting in lists of 290 interjections and 670 adverbs.

The lists of the aforementioned IPoS also contain archaic forms in order to better identify the author. This is an important aspect that has to be taken into consideration (especially for our dataset which contains texts that were written over a time span of 3 centuries), as language is something that evolves and some words change as form and sometimes even as meaning or the way they are used.

From the lists corresponding to the considered IPoS features, we use only those that appear in the texts. Therefore, the actual lists of prepositions, adverbs, and conjunctions may be shorter. Details of the texts and the lists of inflexible parts of speech used can be found at reference [

68].

4. Compared Methods

Below we present the methods we will use in our investigations.

4.1. Artificial Neural Networks

Artificial neural networks (ANN) is a machine learning method that applies the principle function approximation through learning by example (or based on provided training information) [

69]. An ANN contains artificial neurons (or processing elements), organized in layers and connected by weighted arcs. The learning process takes place by adjusting the weights during the training process so that based on the input dataset the output outcome is obtained. Initially, these weights are chosen randomly.

The artificial neural structure is feedforward and has at least three layers: input, hidden (one or more), and output.

The experiments in this paper were performed using fast artificial neural network (FANN) [

70] library. The error is RMSE. For the test set, the number of incorrectly classified items is also calculated.

4.2. Multi-Expression Programming

Multi-expression programming (MEP) is an evolutionary algorithm for generating computer programs. It can be applied to symbolic regression, time-series, and classification problems [

71]. It is inspired by genetic programming [

72] and uses three-address code [

73] for the representation of programs.

MEP experiments use the MEPX software [

74].

4.3. K-Nearest Neighbors

K-nearest neighbors (k-NN) [

75,

76,

77] is a simple classification method based on the concept of instance-based learning [

78]. It finds the

k items, in the training set, that are closest to the test item and assigns the latter to the class that is most prevalent among these

k items found.

4.4. Support Vector Machine

A support vector machine (SVM) [

79] is also a classification principle based on machine learning with the maximization (support) of separating distance/margin (vector). As in k-NN, SVM represents the items as points in a high-dimensional space and tries to separate them using a hyperplane. The particularity of SVM lies in the way in which such a hyperplane is selected, i.e., selecting the hyperplane that has the maximum distance to any item.

LIBSVM [

80,

81] is the support vector machine library that we used in our experiments. It supports classification, regression, and distribution estimation.

4.5. Decision Trees with C5.0

Classification can be completed by representing the acquired knowledge as decision trees [

82]. A decision tree is a directed graph in which all nodes (except the root) have exactly one incoming edge. The root node has no incoming edge. All nodes that have outgoing edges are called internal (or test) nodes. All other nodes are called leaves (or decision) nodes. Such trees are built starting from the root by top–down inductive inference based on the values of the items in the training set. So, within each internal node, the instance space is divided into two or more sub-spaces based on the input attribute values. An internal node may consider a single attribute. Each leaf is assigned to a class. Instances are classified by running them through the tree starting from the root to the leaves.

See5 and C5.0 [

83] are data mining tools that produce classifiers expressed as either decision trees or rulesets, which we have used in our experiments.

5. Numerical Experiments

To prepare the dataset for the actual building of the classification model, the texts in the dataset were shuffled and divided into training (50%), validation (25%), and test (25%) sets, as detailed in

Table 8. In cases where we only needed training and test sets, we concatenated the validation set to the training set. We reiterated the process (i.e., shuffle and split 50%–25%–25%) three times and, thus, obtained three different training–validation–test shuffles from the considered dataset.

Before building a numerical representation of the dataset as vectors of the frequency of occurrence of the considered features, we made a preliminary analysis to determine which of the inflexible parts of speech are more prevalent in our texts. Therefore, we counted the number of occurrences of each of them based on the lists described in

Section 3. The findings are detailed in

Table 9.

Based on the data presented here, we decided not to consider interjections because they do not appear in all files (i.e., 44 files do not contain any interjections), and in the other files, their occurrence is much less compared to the rest of the IPoS considered. This investigation also allowed us to decide the order in which these IPoS will be considered in our tests. Thus, the order of investigation is prepositions, adverbs, and conjunctions.

Therefore, we would first consider only prepositions, then add adverbs to this list, and finally add conjunctions as well. The process of shuffling and splitting the texts into training–validation–test sets (described at the beginning of the current section, i.e.,

Section 5) was reiterated once more for each feature list considered. We, therefore, obtained different dataset representations, which we will refer further as described in

Table 10. The last 3 entries (i.e., ROST-PC-1, ROST-PC-2, and ROST-PC-3) were used in a single experiment.

Correspondingly, we created different representations of the dataset as vectors of the frequency of occurrence of the considered feature lists. All these representations (i.e., training-validation-test sets) can be found as text files at reference [

68]. These files contain feature-based numerical value representations for a different text on each line. On the last column of these files, are numbers from 0 to 9 corresponding to the author, as specified in the first columns of

Table 6,

Table 7 and

Table 8.

5.1. Results

The parameter setting for all 5 methods are presented in

Appendix A, while

Appendix B contains some prerequisite tests.

Most results are presented in a tabular format. The percentages contained in the cells under the columns named Best, Avg, or Error may be highlighted using bold text or gray background. In these cases, the percentages in bold represent the best individual results (i.e., obtained by the respective method on any ROST-*-* in the dataset, out of the 9 representations mentioned above), while the gray-colored cells contain the best overall results (i.e., compared to all methods on that specific ROST-X-n representation of the dataset).

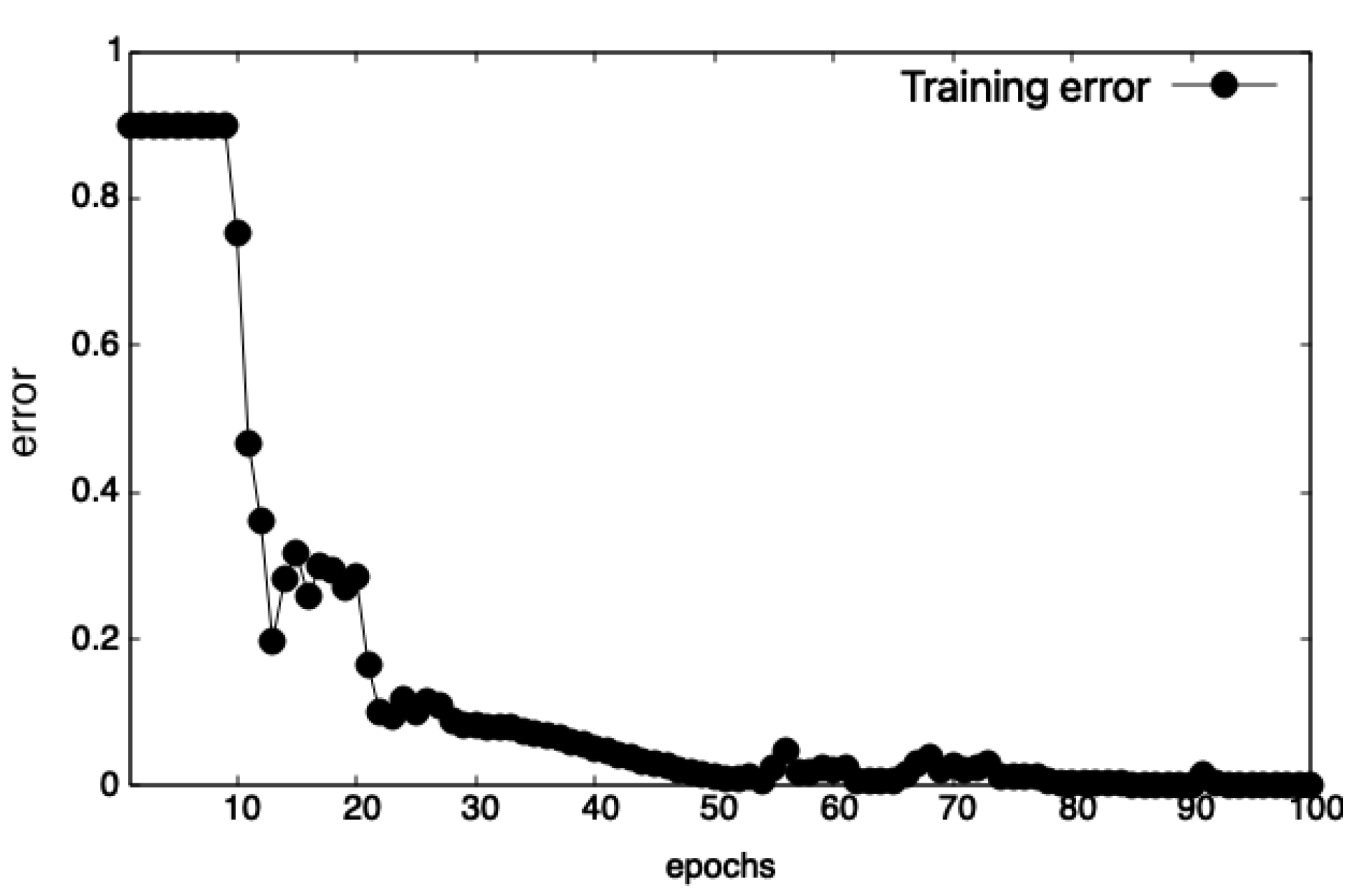

5.1.1. ANN

Results that showed that ANN is a good candidate to solve this kind of problem and prerequisite tests that determined the best ANN configuration (i.e., number of neurons on the hidden layer) for each dataset representation are detailed in

Appendix B.1. The best values obtained for test errors and the number of neurons on the hidden layer for which these “bests” occurred are given in

Table 11. These results show that the best test error rates were mainly generated by ANNs that have a number of neurons between 27 and 49. The best test error rate obtained with this method was

for ROST-PAC-3, while the best average was

for ROST-PAC-2.

5.1.2. MEP

Results that showed that MEP can handle this type of problem are described in

Appendix B.2.

We are interested in the generalization ability of the method. For this purpose, we performed full (30) runs on all datasets. The results, on the test sets, are given in

Table 12.

With this method, we obtained an overall “best” on all ROST-*-*, which is , and also an overall “average” best with a value of , both for ROST-PA-2.

One big problem is overfitting. The error on the training set is low (they are not given here, but sometimes are below 10%). However, on the validation and test sets the errors are much higher (2 or 3 times higher). This means that the model suffers from overfitting and has poor generalization ability. This is a known problem in machine learning and is usually corrected by providing more data (for instance more texts for an author).

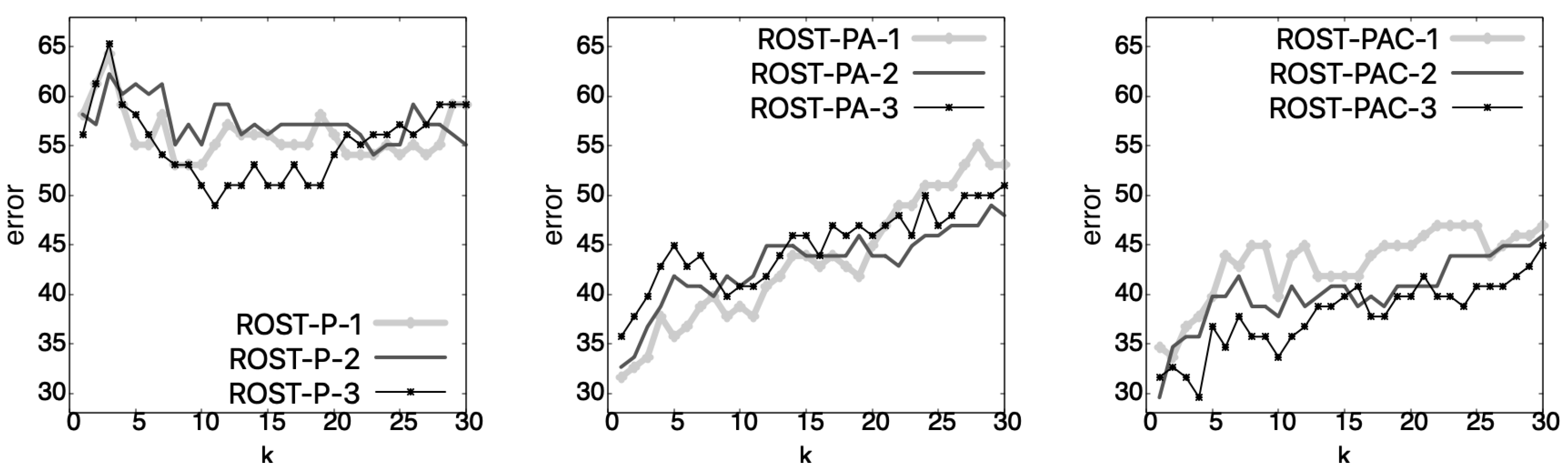

5.1.3. k-NN

Preliminary tests and their results for determining the best value of

k for each dataset representation are presented in

Appendix B.3.

The best k-NN results are given in

Table 13 with the corresponding value of

k for which these “bests” were obtained. It can be seen that for all ROST-P-*, the values of

k were higher (i.e.,

) than those for ROST-PA-* or ROST-PAC-* (i.e.,

). The best value obtained by this method was

for ROST-PAC-2 and ROST-PAC-3.

5.1.4. SVM

Prerequisite tests to determine the best kernel type and a good interval of values for the parameter

are described in

Appendix B.4, along with their results.

We ran tests for each kernel type and with

nu varying from 0.1 to 1, as we saw in

Figure A6 that for values less than 0.1, SVM is unlikely to produce the best results. The best results obtained are shown in

Table 14.

As can be seen, the best values were obtained for values of parameter nu between 0.2 and 0.6 (where sometimes 0.6 is the smallest value of the set {0.6, 0.7, ⋯, 1} for which the best test error was obtained). The best value obtained by this method was for ROST-PAC-1, using the linear kernel and nu parameter value 0.2.

5.1.5. Decision Trees with C5.0

Advanced pruning options for optimizing the decision trees with C5.0 model and their results are presented in

Appendix B.5. The best results were obtained by using

cases option, as detailed in

Table 15.

The best result obtained by this method was on ROST-PAC-2, with 14 option, on a decision tree of size 12. When no options were used, the size of the decision trees was considerably larger for ROST-P-* (i.e., ≥57) than those for ROST-PA-* and ROST-PAC-* (i.e., ≤39).

5.2. Comparison and Discussion

The findings of our investigations allow for a twofold perspective. The first perspective refers to the evaluation of the performance of the five investigated methods, as well as to the observation of the ability of the considered feature sets to better represent the dataset for successful classification. The other perspective is to place our results in the context of other state-of-the-art investigations in the field of author attribution.

5.2.1. Comparing the Internally Investigated Methods

From all the results presented above, upon consulting the tables containing the best test error rates, and especially the gray-colored cells (which contain the best results while comparing the methods amongst themselves) we can highlight the following:

ANN:

- -

Four best results for: ROST-PA-1, ROST-PA-3, ROST-PAC-2 and ROST-PAC-3 (see

Table 11);

- -

Best ANN 23.46% on ROST-PAC-3; best ANN average 36.93% on ROST-PAC-2;

- -

Worst best overall on ROST-P-1.

MEP:

- -

Two best results for ROST-PA-2 and ROST-PAC-3 (see

Table 12);

- -

Best overall 20.40% on ROST-PA-2; best overall average 27.95% on ROST-PA-2;

- -

Worst best MEP on ROST-P-1.

k-NN:

- -

- -

Best k-NN 29.59% on ROST-PAC-2 and ROST-PAC-3;

- -

Worst k-NN on ROST-P-2.

SVM:

- -

Four best results for: ROST-P-1, ROST-P-3, ROST-PAC-1 and ROST-PAC-2 (see

Table 14);

- -

Best SVM 23.44% on ROST-PAC-1;

- -

Worst SVM on ROST-P-2.

Decision trees:

- -

Two best results for: ROST-P-2 and ROST-PAC-2 (see

Table 15);

- -

Best DT 24.5% on ROST-PAC-2;

- -

Worst DT on ROST-P-2.

Other notes from the results are:

Best values for each method were obtained for ROST-PA-2 or ROST-PAC-*;

The worst of these best results were obtained for ROST-P-1 or ROST-P-2;

ANN and MEP suffer from overfitting. The training errors are significantly smaller than the test errors. This problem can only be solved by adding more data to the training set.

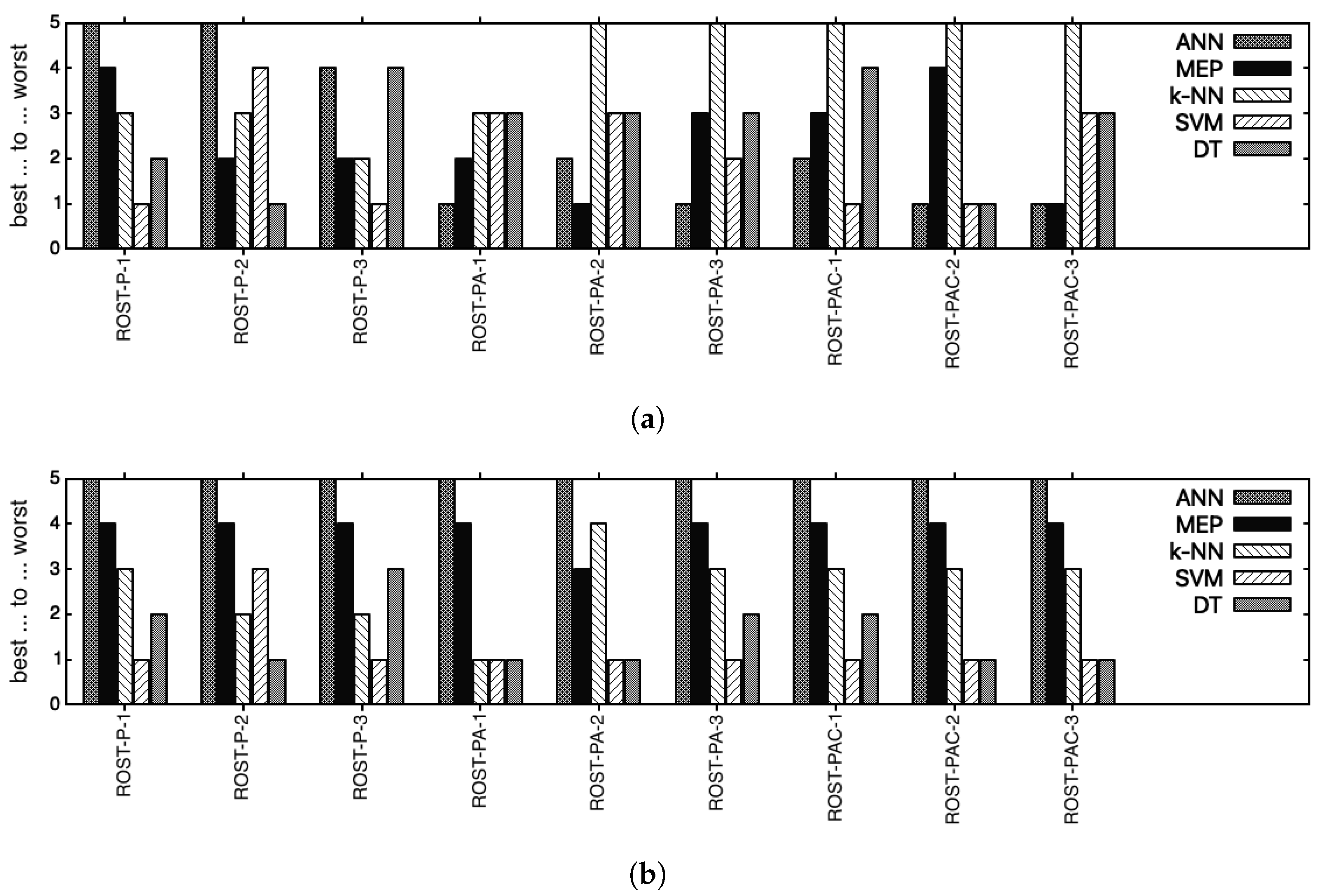

An overview of the best test results obtained by all five methods is given in

Table 16.

ANN ranks last for all ROST-P-* and ranks 1st and 2nd for ROST-PA-* and ROST-PAC-*. MEP is either ranked 1st or ranked 2nd on all ROST-*-* with three exceptions, i.e., for ROST-P-1 and ROST-PAC-2 (at 4th place) and for ROST-PAC-1 (at 3rd place). k-NN performs better (i.e., 3rd and 2nd places) on ROST-P-*, and ranks last for ROST-PA-* and ROST-PAC-*. SVM is ranked 1st for ROST-P-* and ROST-PAC-* with two exceptions: for ROST-P-2 (ranked 4th) and for ROST-PAC-3 (on 3rd place). For ROST-PA-* SVM is in 3rd and 2nd places. Decision trees (DT) with C5.0 is mainly on the 3rd and 4th places, with three exceptions: for ROST-P-1 (on 2nd place), for ROST-P-2 (on 1st place), and for ROST-PAC-2 (on 1st place).

An overview of the average test results obtained by all five methods is given in

Table 17. However, for ANN and MEP alone, we could generate different results with the same parameters, based on different starting

seed values, with which we ran 30 different runs. For the other 3 methods, we used the best results obtained with a specific set of parameters (as in

Table 16).

Comparing all 5 methods based on averages, SVM and DT take the lead as the two methods that share the 1st and 2nd places with two exceptions, i.e., for ROST-P-2 and ROST-P3 for which SVM and DT, respectively, rank 3rd. k-NN usually ranks 3rd, with four exceptions, when k-NN was ranked 2nd for ROST-P-2 and ROST-P-3, for ROST-PA-1 for which k-NN ranks 1st together with SVM and DT, and for ROST-PA-2 for which k-NN ranks 4th. MEP is generally ranked 4th with one exception, i.e., for ROST-PA-2 for which it ranks 3rd. ANN ranks last for all ROST-*-*.

We performed statistical tests to determine whether the results obtained by MEP and ANN are significantly different with a 95% confidence level. The tests were two-sample, equal variance, and two-tailed T-tests. The results are shown in

Table 18.

The p-values obtained show that the MEP and ANN test results are statistically significantly different for almost all ROST-*-* (i.e., ) with one exception, i.e., for ROST-PAC-2 for which the differences are not statistically significant (i.e., ).

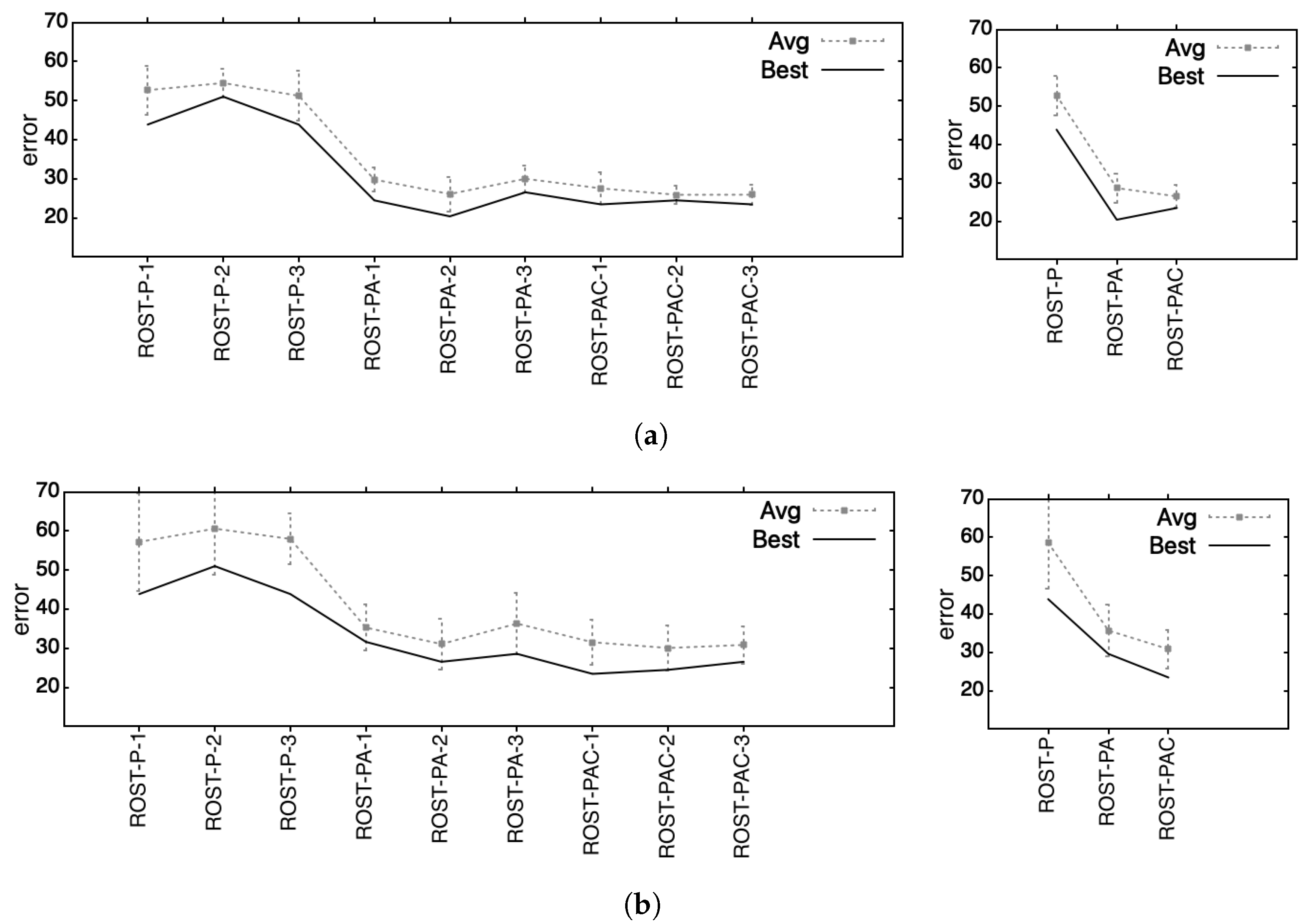

Next, we wanted to see which feature set, out of the three we used, was the best for successful author attribution. Therefore, we plotted all best and best average results obtained with all methods (as presented in

Table 16 and

Table 17) on all ROST-*-* and aggregated on the three datasets corresponding to the distinct feature lists, in

Figure 2.

Based on the results represented in

Figure 2a (i.e., which considered only the best results, as detailed in

Table 16) we can conclude that we obtained the best results on ROST-PA-* (i.e., corresponding to the 415 feature set, which contains prepositions and adverbs). However, using the average results, as shown in

Figure 2b and detailed in

Table 17 we infer that the best performance is obtained on ROST-PAC-* (i.e., corresponding to the 432-feature set, containing prepositions, adverbs, and conjunctions).

Another aspect worth mentioning based on the graphs presented in

Figure 2 is related to the standard deviation (represented as error bars) between the results obtained by all methods considered on all considered datasets. Standard deviations are the smallest in

Figure 2a, especially for ROST-PA-* and even more so for ROST-PAC-*. This means that the methods perform similarly on those datasets. For ROST-P-* and in

Figure 2b, the standard deviations are larger, which means that there are bigger differences between the methods.

5.2.2. Comparisons with Solutions Presented in Related Work

To better evaluate our results and to better understand the discriminating power of the best performing method (i.e., MEP on ROST-PA-2), we also calculate the

macro-accuracy (or

macro-average accuracy). This metric allows us to compare our results with the results obtained by other methods on other datasets, as detailed in

Table 2. For this, we considered the test for which we obtained our best result with MEP, with a test error rate of 20.40%. This means that 20 out of 98 tests were misclassified.

To perform all the necessary calculations, we used the

Accuracy evaluation tool available at [

84], build based on the paper [

85]. By inputting the vector of

targets (i.e., authors/classes that were the actual authors (i.e., correct classifications) of the test texts) and the vector of

outputs (i.e., authors/classes identified by the algorithm as the authors of the test texts), we were first given a

Confusion value of

and the

Confusion Matrix, depicted in

Table 19.

This matrix is a representation that highlights for each class/author the true positives (i.e., the number of cases in which an author was correctly identified as the author of the text), the true negatives (i.e., the number of cases where an author was correctly identified as not being the author of the text), the false positives (i.e., the number of cases in which an author was incorrectly identified as being the author of the text), the false negatives (i.e., the number of cases where an author was incorrectly identified as not being the author of the text). For binary classification, these four categories are easy to identify. However, in a multiclass classification, the true positives are contained in the main diagonal cells corresponding to each author, but the other three categories are distributed according to the actual authorship attribution made by the algorithm.

For each class/author, various metrics are calculated based on the confusion matrix. They are:

Precision—the number of correctly attributed authors divided by the number of instances when the algorithm identified the attribution as correct;

Recall (Sensitivity)—the number of correctly attributed authors divided by the number of test texts belonging to that author;

F-score—a combination of the Precision and Recall (Sensitivity).

Based on these individual values, the

Accuracy Evaluation Results are calculated. The overall results are shown in

Table 20.

Metrics marked with (Micro) are calculated by aggregating the contributions of all classes into the average metric. Thus, in a multiclass context, micro averages are preferred when there might be a class imbalance, as this method favors bigger classes. Metrics marked with (Macro) treat each class equally by averaging the individual metrics for each class.

Based on these results, we can state that the macro-accuracy obtained by MEP is 88.84%. We have 400 documents, and 10 authors in our dataset. The

content of our texts is

cross-genre (i.e., stories, short stories, fairy tales, novels, articles, and sketches) and

cross-topic (as in different texts, different topics are covered). We also calculated an average number of words per document, which is 3355, and the

imbalance (considered in [

10] to be the standard deviation of the number of documents per author), which in our case is 10.45. Our type of investigation can be considered to be part of the Ngram class (this class and other investigation-type classes are presented in

Section 2.4). Next, we recreated

Table 2 (depicted in

Section 2.4) while reordering the datasets based on their macro-accuracy results obtained by Ngram class methods in reverse order, and we have appropriately placed details of our own dataset and the macro-accuracy we achieved with MEP as shown above. This top is depicted in

Table 21.

We would like to underline the large imbalance of our dataset compared with the first two datasets, the fact that we had fewer documents, and the fact that the average number of words in our texts, although higher, has a large standard deviation, as already shown in

Table 3. Furthermore, as already presented in

Section 3, our dataset is by design very heterogeneous from multiple perspectives which are not only in terms of content and size, but also the differences that pertain to the time periods of authors, the medium they wrote for (paper or online media), and the sources of the texts. Although all these aspects do not restrict the new test texts to certain characteristics (to be easily classified by the trained model), they make the classification problem even harder.

6. Conclusions and Further Work

In this paper, we introduced a new dataset of Romanian texts by different authors. This dataset is heterogeneous from multiple perspectives, such as the length of the texts, the sources from which they were collected, the time period in which the authors lived and wrote these texts, the intended reading medium (i.e., paper or online), and the type of writing (i.e., stories, short stories, fairy tales, novels, literary articles, and sketches). By choosing these very diverse texts we wanted to make sure that the new texts do not have to be restricted by these constraints. As features, we wanted to use the inflexible parts of speech (i.e., those that do not change their form in the context of communication): conjunctions, prepositions, interjections, and adverbs. After a closer investigation of their relevance to our dataset, we decided to use only prepositions, adverbs, and conjunctions, in that specific order, thus having three different feature lists of (1) 56 prepositions; (2) 415 prepositions and adverbs; and (3) 432 prepositions, adverbs, and conjunctions. Using these features, we constructed a numerical representation of our texts as vectors containing the frequencies of occurrence of the features in the considered texts, thus obtaining 3 distinct representations of our initial dataset. We divided the texts into training–validation–test sets of 50%–25%–25% ratios, while randomly shuffling them three times in order to have three randomly selected arrangements of texts in each set of training, validation, and testing.

To build our classifiers, we used five artificial intelligence techniques, namely artificial neural networks (ANN), multi-expression programming (MEP), k-nearest neighbor (k-NN), support vector machine (SVM), and decision trees (DT) with C5.0. We used the trained classifiers for authorship attribution on the texts selected for the test set. The best result we obtained was with MEP. By using this method, we obtained an overall “best” on all shuffles and all methods, which is of a error rate.

Based on the results, we tried to determine which of the three distinct feature lists lead to the best performance. This inquiry was twofold. First, we considered the best results obtained by all methods. From this perspective, we achieved the best performance when using ROST-PA-* (i.e., the dataset with 415 features, which contains prepositions and adverbs). Second, we considered the average results over 30 different runs for ANN and MEP. These results indicate that the best performance was achieved when using ROST-PAC-* (i.e., the dataset with 432 features, which contains prepositions, adverbs, and conjunctions).

We also calculated the macro-accuracy for the best MEP result to compare it with other state-of-the-art methods on other datasets.

Given all the trained models that we obtained, the first future work is using ensemble decision. Additionally, determining whether multiple classifiers made the same error (i.e., attributing one text to the same incorrect author instead of the correct one) may mean that two authors have a similar style. This investigation can also go in the direction of detecting style similarities or grouping authors into style classes based on such similarities.

Extending our area of research is also how we would like to continue our investigations. We will not only fine-tune the current methods but also expand to the use of recurrent neural networks (RNN) and convolutional neural networks (CNN).

Regarding fine-tuning, we have already started an investigation using the top N most frequently used words in our corpus. Even though we have some preliminary results, this investigation is still a work in progress.

Using deep learning to fine-tune ANN is another direction we would like to tackle. We would also like to address overfitting and find solutions to mitigate this problem.

Linguistic analysis could help us as a complementary tool for detecting peculiarities that pertain to a specific author. For that, we will consider using long short-term memory (LSTM) architectures and pre-trained BERT models that are already available for Romanian. However, considering that a large section of our texts was written one or two centuries ago, we might need to further train BERT to be able to use it in our texts. That was one reason that we used inflexible parts of speech, as the impact of the diachronic developments of the language was greatly reduced.

We would also investigate the profile-based approach, where texts are treated cumulatively (per author) to build a profile, which is a representation of the author’s style. Up to this point we have treated the training texts individually, an approach called instance-based.

In terms of moving towards other types of neural networks, we would like to achieve the initial idea from which this entire area of research was born, namely finding a “fingerprint” of an author. We already have some incipient ideas on how these instruments may help us in our endeavor, but these new directions are still in the very early stages for us.

Improving upon the dataset is also high on our priority list. We are considering adding new texts and new authors.