1. Introduction

In many areas of clinical research, logistic regression is frequently used to build predictive models from binary data in order to estimate the likelihood of a patient’s health status, such as healthy or diseased. In breast cancer research, for example, models can be developed to predict the likelihood of developing breast cancer. Predictions from these models assist physicians and patients in making joint decisions about future treatment options. However, logistic regression frequently suffers from severe estimation problems due to separation or multicollinearity, which limits its applicability and can lead to unreliable conclusions about the estimated model. Neither problem is negligible, and the difficulties are compounded when both occur at the same time.

The existence, finiteness, and uniqueness of the maximum likelihood estimates, which have been extensively studied, determine whether valid conclusions can be drawn from a logistic regression model. Silvapulle (1981) took a step in that direction by demonstrating that a certain degree of overlap is both a necessary and sufficient condition for the existence of maximum likelihood estimates for the binomial response model [1]. Albert and Anderson established existence theorems for the maximum likelihood estimates (MLEs) in the multinomial logistic regression model by considering three possible patterns for the sample points: complete separation, quasi-complete separation, and overlap [2]. They defined separation as one or more predictors having strong effects on the response and thus (almost) perfectly predicting the outcome of interest (complete separation or quasi-complete separation) [2]. Allison found that separation happens frequently in small- to medium-sized datasets, in which case the regression coefficient of at least one covariate becomes infinite [3]. Sparse data or separated data occur when the response variable is completely separated by a single covariate or a linear combination of covariates [4]. Additionally, an infinite estimate can be regarded as exceedingly inaccurate, resulting in Wald confidence intervals of infinite width [5]. Separation can occur even if the underlying model parameter has a low absolute value, according to Heinze and Schemper [6]. They also showed how the sample size, the number of dichotomous covariates, the magnitude of the odds ratios, and the degree of balance in their distribution all affect the probability of separation [6]. Furthermore, advanced but computationally costly techniques have been developed for detecting separation [7,8]. However, according to Agresti, monitoring the variance during the iterative fitting process or supervising the convergence of the iterations is sufficient to detect separation [9].

Most papers in this field are devoted to proposing new parameter estimates that exist and have good theoretical properties when the data are known to be completely or quasi-completely separated. Penalized approaches for logistic regression models have gained popularity as a means of overcoming the separation problem. They produce less biased estimates and, in general, more accurate inferences. In this context, Firth’s penalized method is an alternative to the standard logistic regression approach [10]. This method eliminates the first-order term ($O(n^{-1})$) in the asymptotic bias expansion of the MLEs of the regression parameters by modifying the score equation with a penalty term known as the Jeffreys invariant prior. Heinze and Schemper applied Firth’s method to the separation problem in logistic regression [6]. However, one criticism leveled at the Firth-type penalty in recent studies is that it depends on the observed covariate data, which can result in artifacts such as estimates that fall outside the range between the prior median and the maximum likelihood estimate (MLE) [11,12]. Firth’s logistic regression reduces bias in the maximum likelihood coefficient estimates while introducing bias in the predicted probabilities. The more imbalanced the outcome variable is, the greater the bias [13]. As an alternative, Greenland and Mansournia proposed the log-F(1, 1) and log-F(2, 2) priors as default priors for logistic regression [11,12]. According to the authors, the proposed log-F(m, m) priors are reasonable, transparent, and computationally straightforward for logistic regression. Emmanuel modeled the prediction of the default probability using penalized regression models and found that the log-F prior methods are preferred [14]. Note that other methods that impose shrinkage on the regression coefficients can also overcome the separation issue. For example, to address the issue of point separation, Rousseeuw and Christmann presented a hidden logistic regression model [15].

Logistic regression models need to meet certain assumptions in order to produce reliable results. One assumption of binary logistic regression is that the explanatory variables should not be strongly correlated. When the number of covariates is relatively large or the covariates are highly correlated, multicollinearity is likely to arise. One way to deal with this problem in linear regression models is ridge regression, which was first introduced by Hoerl and Kennard [16,17]. The authors proved that there is a non-zero ridge parameter value which makes the mean squared error (MSE) of the slope parameter of the ridge regression smaller than the variance of the ordinary least squares (OLS) estimate of the corresponding parameter. Schaefer applied the ridge parameters proposed by Hoerl and Kennard to logistic regression and created a ridge-type estimator that has a smaller total mean squared error than the maximum likelihood estimator when the independent variables are severely collinear [18]. Furthermore, the ridge parameter, whose size is determined by the number and collinearity of the covariates, controls the amount of shrinkage [18]. Lee and Silvapulle recommended ridge tracing as a diagnostic tool in logistic regression analysis, since it offers additional insight and readily draws attention to particular characteristics of the data model. In a Monte Carlo study, they observed that a ridge-type estimator is at least as good as, and often much better than, the standard logistic regression estimator in terms of the total and predicted mean squared error criteria [19]. Le Cessie and Van Houwelingen used ridge regression to improve the parameter estimates and decrease prediction errors in logistic regression [20]. Inan and Erdogan were the first to use the Liu-type estimator in the logistic regression model [21]. Guoping Zeng and Emily Zeng were the first to investigate the relationship between multicollinearity and separation, proving analytically that multicollinearity implies quasi-complete separation as well as the absence of a finite solution for maximum likelihood estimation [22]. Senaviratna et al. examined four primary tools for detecting multicollinearity before moving on to statistical inference: the tolerance, the variance inflation factor (VIF), the condition index, and the variance proportions [23].

Separation and multicollinearity, however, are almost never discussed together in the literature, and methods for addressing both at the same time have received little attention. Shen and Gao introduced a double-penalized likelihood estimator, which solves these two problems concurrently by combining Firth’s penalized likelihood equation with a ridge parameter in the logistic regression model [24]. Here, the separation problem is handled by Firth’s penalized likelihood equation, and the multicollinearity problem is handled by the ridge parameter. Nonetheless, Firth’s penalty has several non-negligible flaws. Because Firth’s penalty also penalizes the intercept, it introduces a bias in the average predicted probability that is much greater than that produced by standard logistic regression [11]. Furthermore, as Firth noted [10], it does not minimize the MSE. The log-F-type penalty is a popular alternative to Firth-type penalization that performs better in several respects. First, the log-F-type penalty avoids penalizing the intercept, which yields an average predicted probability equal to the proportion of observed events. Second, the log-F-type penalty is independent of the data: unlike the Jeffreys prior (Firth-type penalty), it does not incorporate the correlations between explanatory variables. Third, the log-F-type penalty minimizes the MSE of the estimates while also providing more stable estimates. Thus, in this paper, we modify Shen and Gao’s approach and propose a more appealing double-penalized likelihood estimator (lFRE) for logistic regression models that combines a log-F-type penalized likelihood approach with a ridge penalty term.
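To make the idea of a double penalty concrete, the following is a minimal Python sketch of an objective that adds a log-F(m, m) penalty and a ridge penalty to the logistic log-likelihood. It is an illustration under stated assumptions, not the estimator defined in Section 3: the function names, the ridge scaling `lam * sum(b**2)`, and the choice to leave the intercept out of both penalties are assumptions made here. The log-F(m, m) term uses the prior density, which is proportional to exp(mβ/2) / (1 + exp(β))^m.

```python
import math


def log1pexp(z):
    """Numerically stable log(1 + exp(z))."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def penalized_loglik(beta, X, y, m=2.0, lam=0.0):
    """Logistic log-likelihood plus log-F(m, m) and ridge penalties.

    beta[0] is the intercept and is left unpenalized (assumption);
    beta[1:] are the slopes. X holds covariate rows without an
    intercept column, and y holds 0/1 outcomes.
    """
    loglik = 0.0
    for row, yi in zip(X, y):
        eta = beta[0] + sum(b * x for b, x in zip(beta[1:], row))
        loglik += yi * eta - log1pexp(eta)
    # log-F(m, m) log-density for each slope, up to an additive constant
    logf = sum(0.5 * m * b - m * log1pexp(b) for b in beta[1:])
    # ridge penalty on the slopes; this scaling is an assumption
    ridge = lam * sum(b * b for b in beta[1:])
    return loglik + logf - ridge


# Completely separated toy data: x < 0 always gives y = 0, x > 0 gives y = 1.
X_sep, y_sep = [[-1.0], [1.0]], [0, 1]
for slope in (1.0, 10.0, 20.0):
    plain = penalized_loglik([0.0, slope], X_sep, y_sep, m=0.0, lam=0.0)
    pen = penalized_loglik([0.0, slope], X_sep, y_sep, m=2.0, lam=0.0)
    print(slope, plain, pen)
```

On the separated toy data, the unpenalized log-likelihood keeps increasing as the slope grows, which is why no finite MLE exists, whereas the penalized objective eventually decreases and therefore has a finite maximizer.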

The rest of this paper is organized as follows. We consider logistic regression, Firth’s logistic regression [6], logistic regression with log-F(m, m) penalization [11], and Shen and Gao’s double-penalized likelihood estimator [24] as the comparison methods. These methods are reviewed in Section 2. In Section 3, we propose the estimator that combines the log-F-type penalized likelihood approach with a ridge penalty term (lFRE), and we discuss the algorithm for computing the coefficient estimates and the selection of the ridge parameter in this model. In Section 4, we present two detailed simulation studies comparing the performance of lFRE with that of previous logistic regression-based approaches for both prediction and effect estimation under rare-event and small-dataset conditions. The predictive performance of the methods under investigation is demonstrated in Section 5 by predicting the presence of breast cancer. Section 6 concludes with the discussion and conclusions.

4. Simulations

The purpose of this section is to assess the properties of the regression coefficients of the LR, Ridge, Firth, LF22, DPLE, lFRE11, and lFRE22 methods by conducting two simulation studies. The following briefly describes how data were generated and cases varied in the simulation study.

4.1. Software and Data Generation

The simulations were programmed in R 4.0.5. The R functions glm and glmnet [29] were used to implement LR and Ridge. Firth was implemented using the brglm2 package [30]. The LF11 and LF22 models were implemented using the R function plogit [12]. To generate the mixed continuous and binary covariates in a dataset, we first generated three continuous covariates from the multivariate normal distribution with zero means, unit variances, and a specified correlation matrix. Then, we generated two binary covariates from the binomial distribution, followed by a binary response variable generated from a Bernoulli distribution with a probability calculated from the true logistic model, whose coefficients (an intercept and five slopes) were fixed in advance. To ensure that the results were comparable, we used the same random number seed throughout the procedure.
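The data-generating steps described above can be sketched as follows. This is a hedged Python illustration rather than the R code used in the study. Several details are assumptions introduced for the example: the three continuous covariates share a single common correlation `rho` (an equicorrelation matrix, built with a one-factor construction), the binary covariates are Bernoulli with probability `p_binary = 0.5`, and the coefficient vector `beta` passed in the usage line is hypothetical.

```python
import math
import random


def simulate_dataset(n, rho, beta, p_binary=0.5, seed=None):
    """Simulate n rows with 3 correlated normal and 2 binary covariates.

    beta = [intercept, b1, b2, b3, b4, b5]; rho must lie in [0, 1).
    Returns (X, y), where X is a list of 5-element rows and y is a
    list of 0/1 outcomes.
    """
    rng = random.Random(seed)  # fixed seed gives reproducible datasets
    X, y = [], []
    for _ in range(n):
        # One-factor construction: unit variance, pairwise correlation rho
        shared = rng.gauss(0.0, 1.0)
        continuous = [
            math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
            for _ in range(3)
        ]
        binary = [1.0 if rng.random() < p_binary else 0.0 for _ in range(2)]
        row = continuous + binary
        eta = beta[0] + sum(b * x for b, x in zip(beta[1:], row))
        prob = 1.0 / (1.0 + math.exp(-eta))
        X.append(row)
        y.append(1 if rng.random() < prob else 0)
    return X, y


# Hypothetical coefficients, for illustration only
X, y = simulate_dataset(n=50, rho=0.9, beta=[0.0, 1.0, 1.0, 1.0, 1.0, 1.0], seed=1)
```

Reusing the same `seed` for every method mirrors the use of a common random number seed, so that all estimators are compared on identical datasets.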

4.2. Performance Evaluation of the Methods

The average parameter estimation bias and the mean squared error (MSE) were used to evaluate the performance of the penalized methods, as in the previous studies mentioned in Section 2. For each parameter, the average MSE was calculated as the mean, over the simulated datasets, of the squared difference between the estimate of the pth parameter in the sth simulated dataset and the true value of that parameter.

In addition, the average parameter estimation bias was calculated as the mean, over the simulated datasets, of the difference between the estimate and the true value.

For the Ridge, DPLE, lFRE11, and lFRE22 penalized methods with a ridge parameter, the average MSE and the average parameter estimation bias were computed in the same way, using the estimates obtained at the optimal ridge parameter. The ridge parameter was chosen by the cross-validation criterion described in Section 3.3; specifically, the tuning parameters for fitting Ridge, DPLE, lFRE11, and lFRE22 were chosen using 10-fold cross-validation. The estimates of the regression coefficients for the respective models were averaged over the simulations in which convergence was achieved.
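The two performance measures can be sketched in a few lines of Python. This is a minimal illustration, assuming that a non-converged fit is recorded as `None` and excluded from the averages; the function name and the input layout (one coefficient vector per simulated dataset) are choices made for this example.

```python
def bias_and_mse(estimates, truth):
    """Per-parameter average bias and MSE over converged replications.

    estimates: one coefficient vector per simulated dataset, or None
               when the fit did not converge (assumed convention).
    truth:     the true coefficient vector.
    """
    converged = [est for est in estimates if est is not None]
    if not converged:
        raise ValueError("no converged replications")
    count = len(converged)
    bias = [
        sum(est[p] - truth[p] for est in converged) / count
        for p in range(len(truth))
    ]
    mse = [
        sum((est[p] - truth[p]) ** 2 for est in converged) / count
        for p in range(len(truth))
    ]
    return bias, mse


# Three replications of a two-parameter model; the third did not converge
bias, mse = bias_and_mse([[1.0, 2.0], [3.0, 2.0], None], truth=[2.0, 1.0])
print(bias, mse)  # [0.0, 1.0] [1.0, 1.0]
```

The first parameter shows why both measures are reported: its estimates are unbiased on average but still have an MSE of 1 because they scatter around the true value.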

4.3. Simulation 1

In this section, we carried out a simulation study to assess the properties of the regression coefficients of these methods (LR, Ridge, Firth, LF22, DPLE, lFRE11, and lFRE22) with respect to the bias and mean squared error (MSE). For a fixed correlation coefficient, we considered alternative scenarios in which there were three covariates with potentially strong correlations. To study the effect of the sample size under severe multicollinearity, the sample sizes (n) were set to 50, 80, 130, and 200. The data were generated as described in Section 4.1. Five coefficients were estimated in this simulation study, three for the continuous covariates and two for the binary covariates, omitting the intercept term.

For each sample size scenario, 100 datasets were simulated. The results of the simulations are shown in Table 2. The maximum likelihood estimates (MLEs) did not exist for the small sample of 50 (due to separation problems caused by the small sample size, high correlation among covariates, or both). In other words, when conventional maximum likelihood binary logistic regression was fitted to data with a limited number of subjects relative to the number of predictors, finite MLEs were not available. As the sample size grew, however, the nonexistence problem eased. According to the findings in Table 2, the Ridge estimator generally showed the largest bias, followed by Firth and LF22, while DPLE, lFRE11, and lFRE22 showed very little bias. To some extent, all penalized approaches offered an improvement. Firth and LF22 reduced the mean bias of the coefficient estimates when compared with LR. Some bias values obtained by DPLE, lFRE11, and lFRE22 were higher than those obtained by LR, which was due to the ridge penalty term. These results are to be expected, given that the ridge parameter in the double-penalized estimators trades some bias for a lower MSE. Firth yielded a significantly higher MSE than LF22 in every situation, because Firth does not minimize the MSE. The results in Table 2 also show that DPLE with a Firth-type penalty had a higher MSE than lFRE11 and lFRE22 with a log-F-type penalty, which is consistent with intuition. In almost all settings, lFRE22 showed slightly larger bias on the binary covariates, but it outperformed the other approaches on the continuous covariates. In terms of the MSE, lFRE22 was the best, and lFRE11 ranked at least second. When compared with the other penalized methods, lFRE11 and lFRE22 had a significantly lower bias and MSE. The differences were larger when the sample size was small or medium (see Table 2) and gradually decreased as the sample size grew.

There appeared to be a difference between the binary and continuous covariates in the bias and MSE of the parameter estimates for all penalized estimators in our simulation. The ridge effect appeared to be stronger for the continuous covariates than for the binary covariates, as seen in Table 2. The reduction in MSE in Firth, Ridge, and LF22 compared with DPLE, lFRE11, and lFRE22 for the binary covariates suggests a compromise in terms of increased bias. In the three double-penalized estimators, the MSE for the continuous covariates was dramatically lowered. In terms of the mean bias and MSE for the binary covariates, the three double-penalized estimators (DPLE, lFRE11, and lFRE22) were indistinguishable.

The MSE results of DPLE, lFRE11, and lFRE22 for the continuous and binary covariates at different sample sizes are visualized in Figure 1. It is clear that lFRE22 had the lowest MSE for both the continuous and binary covariates. Furthermore, DPLE, lFRE11, and lFRE22 provided greater improvement. This trend became more apparent when the sample size was small. The difference between the three methods for continuous and binary covariates decreased as the sample size increased.

4.4. Simulation 2

In the second simulation, we conducted two series of simulations to examine the effects of the correlation among covariates, the sample size, and the covariate type (binary and continuous). To investigate the effects of the correlation and a small sample size on the estimation of the regression coefficients, we used a variety of correlation coefficients and small sample sizes. The data generation scheme of the first simulation was also used here. For each scenario, 100 datasets were generated, and each dataset was fitted with all of the regression approaches under consideration. Two tables present the bias and MSEs of the estimates, and two figures present the bias and MSEs for different scenarios obtained by DPLE, lFRE11, and lFRE22.

The estimated bias and MSE values for LR, Firth, Ridge, LF22, DPLE, lFRE11, and lFRE22 are reported in Table 3 and Table 4, omitting the intercept. With the exception of Ridge and LR, the methods were only slightly biased. Ridge produced a bias in the coefficients that was even greater than that of LR and could not be ignored. In one series of simulations, these estimators were tested with a sample size of n = 40. A second series tested these estimators with a sample size of n = 80. LR produced infinite estimates at a sample size of 40, as shown in Table 3, which reflects the nonexistence of finite MLEs due to separation in small samples. In contrast, all of the penalized approaches evaluated had finite bias and MSEs, allowing us to reasonably conclude that they could address the small-sample separation problem. The maximum likelihood estimation converged as the sample size grew from 40 to 80, and LR produced a valid bias and MSE. One can also conclude from Table 4 that the MSE of LR was inflated, especially when the correlation coefficient was between 0.85 and 0.95, and some MSE values exceeded 1. According to Table 3 and Table 4, all penalized methods outperformed LR, as evidenced by the smaller MSE. Furthermore, the biases of the Firth, LF11, and LF22 methods were less severe than that of the LR model. Because of the ridge penalty term in DPLE, lFRE11, and lFRE22, a slight increase in bias was accepted in exchange for a decrease in the MSE. As the correlation coefficient increased, so did the MSEs of LR, Firth, Ridge, and LF22. DPLE, lFRE11, and lFRE22, however, performed the best in terms of reducing the MSE. It is worth noting that lFRE22 performed best because it achieved the greatest reduction in MSE in all experimental settings. The findings suggest that lFRE in logistic regression warrants further investigation. When the covariates were highly correlated, adding a ridge parameter improved the estimator’s performance.

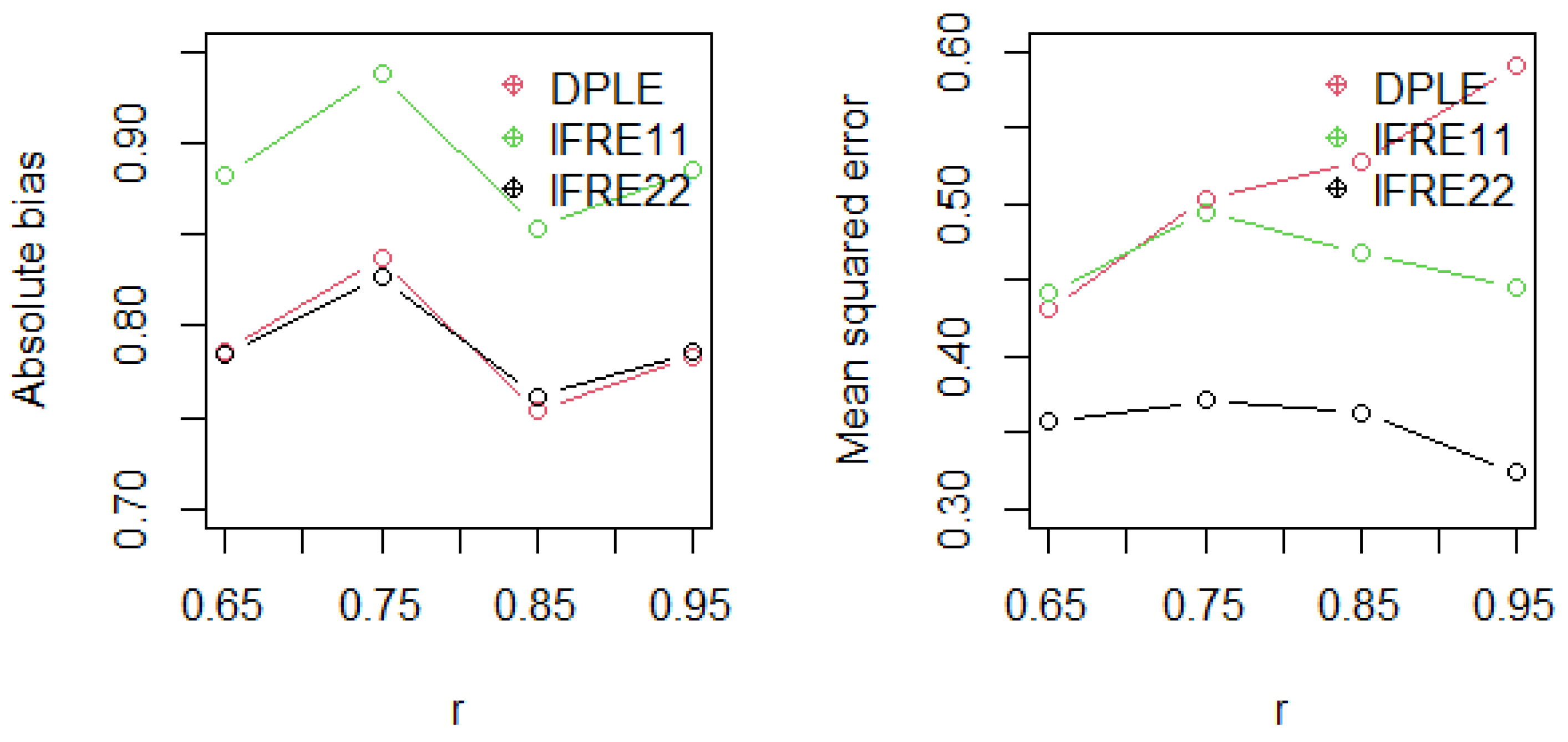

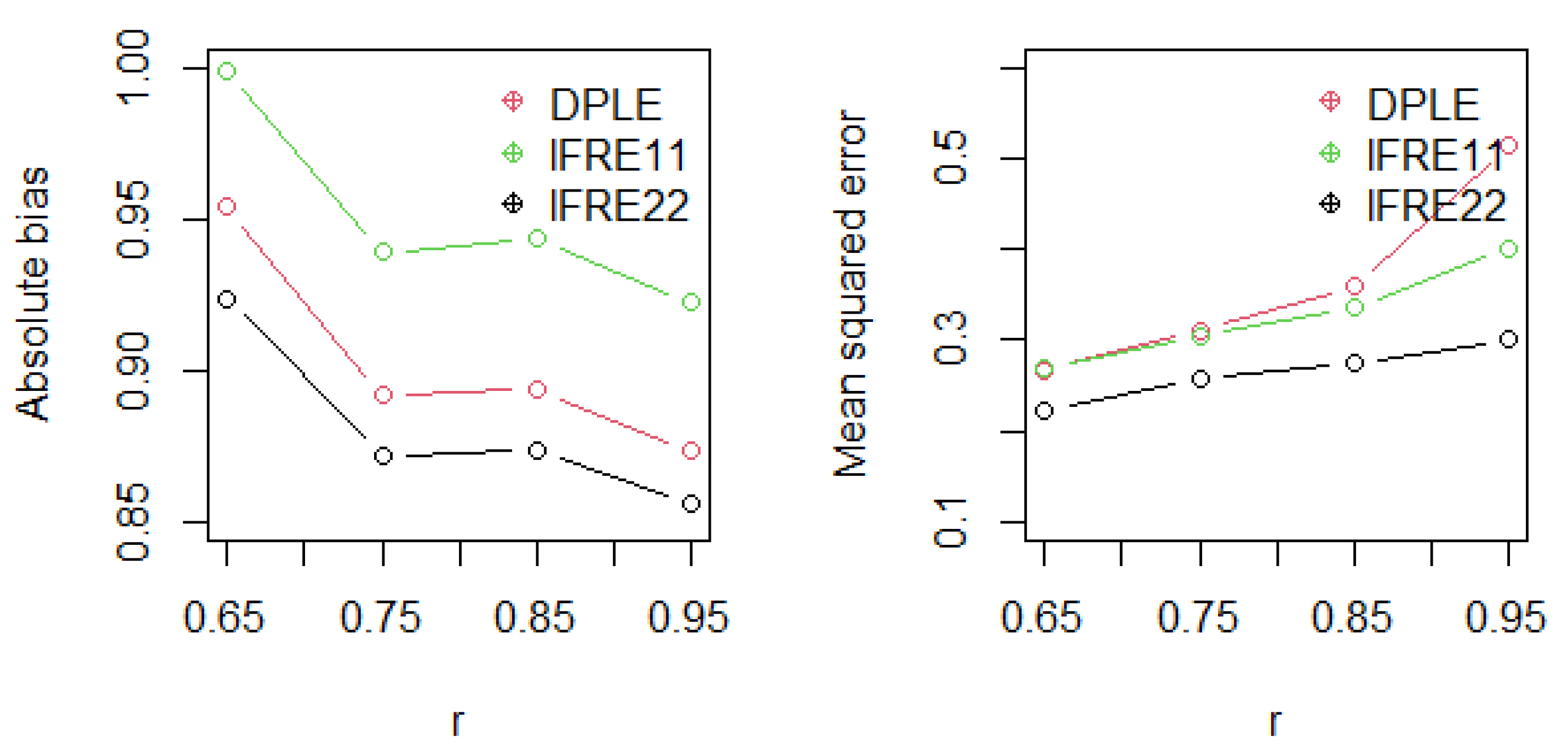

Figure 2 and Figure 3 show the biases and MSEs of the DPLE, lFRE11, and lFRE22 methods over all explanatory variables, omitting the intercept, for n = 40 and n = 80, respectively. The results in Figure 2 reveal that the three methods provided almost the same level of bias, while lFRE22 provided the lowest MSE, followed by lFRE11, and DPLE provided the highest MSE, especially when the correlation coefficient was high at the sample size of 40. In practically all situations, lFRE22 performed best in terms of bias and MSE, as shown in Figure 3. DPLE also performed poorly as the collinearity increased. These results suggest that lFRE22 is the recommended choice when the sample size is small and the multicollinearity is severe, since it not only yielded modest absolute bias but also provided the lowest MSE when compared with the other penalized approaches.

As a result, we believe that the lFRE approach is frequently the best method for small datasets with strongly correlated independent variables, as it efficiently removes bias from predicted probabilities and provides the smallest MSE while also delivering very accurate regression coefficients. When the sample size is small and the independent variables are highly correlated, lFRE is more computationally simple than competing penalized regression approaches.

5. Breast Cancer Study

The dataset used to evaluate the predictive performance of our proposed estimator and to compare it with the other estimators under study is available from the University of California Irvine machine learning repository. It was originally extracted from the study conducted by M. Pereira et al. (2018) [31], where the goal was to select effective biomarkers for predicting the presence or absence of breast cancer in 116 patients (64 patients with breast cancer and 52 healthy controls). The outcome of interest was the presence or absence of breast cancer (1 = healthy controls; 2 = patients). There were nine quantitative predictors in total, consisting of anthropometric data and parameters that can be acquired during standard blood analysis. Age, BMI, blood glucose, insulin, HOMA, leptin, adiponectin, resistin, and MCP-1 were measured or observed in each of the 116 subjects. These clinical characteristics are detailed as follows:

Age (years);

BMI (kg/m²) was calculated by dividing the weight by the square of the height;

Glucose (mg/dL), namely the serum glucose level, was measured using a commercial kit and an automated analyzer;

Insulin (µU/mL), leptin (ng/mL), adiponectin (µg/mL), resistin (ng/mL), and MCP-1 (pg/dL) levels in the serum were determined using commercial enzyme-linked immunosorbent assay kits;

To assess insulin resistance, the Homeostasis Model Assessment (HOMA) index was calculated from If and Gf, where If is the fasting insulin level (µU/mL) and Gf is the fasting glucose level (mmol/L).

There were nine predictors in total, and they were all continuous. The HOMA index and insulin were strongly correlated. The results from fitting the logistic regression models using LR, Firth, LF11, LF22, Ridge, DPLE, lFRE11, and lFRE22 are summarized in Table 5. The model’s discriminating ability was quantified using the area under the receiver operating characteristic curve (AUROC), which measures how well the model distinguishes subjects with and without the event of interest. An AUROC value around 0.5 indicates no discrimination, and a value close to 1 indicates excellent discrimination. The Brier score (BS) was used to assess the overall predictive performance; it is the mean of the squared differences between each patient’s observed outcome and the risk predicted by the model. The lower the value, the better the performance. When BS = 0, the prediction is perfect. Table 5 reports the predictive performance of the methods according to these two common measures of predictive accuracy (BS and AUROC).
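Both measures are straightforward to compute from observed outcomes and predicted risks. The following Python sketch is an illustration with made-up numbers, not the study’s results. It assumes that outcomes are coded 0/1 (the dataset’s 1/2 coding would need to be recoded first) and computes the AUROC as the proportion of event/non-event pairs in which the event receives the higher predicted risk, counting ties as one half.

```python
def brier_score(y, p):
    """Mean squared difference between 0/1 outcomes and predicted risks."""
    return sum((pi - yi) ** 2 for yi, pi in zip(y, p)) / len(y)


def auroc(y, p):
    """Probability that a random event is ranked above a random non-event."""
    events = [pi for yi, pi in zip(y, p) if yi == 1]
    non_events = [pi for yi, pi in zip(y, p) if yi == 0]
    score = 0.0
    for e in events:
        for ne in non_events:
            if e > ne:
                score += 1.0
            elif e == ne:
                score += 0.5  # ties count as half a concordant pair
    return score / (len(events) * len(non_events))


# Made-up outcomes and predicted risks, for illustration only
y_obs = [0, 0, 1, 1]
p_hat = [0.1, 0.4, 0.35, 0.8]
print(auroc(y_obs, p_hat))  # 0.75
print(brier_score(y_obs, p_hat))
```

In this toy example, three of the four event/non-event pairs are ordered correctly, giving an AUROC of 0.75, while a constant prediction for every subject would give 0.5.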

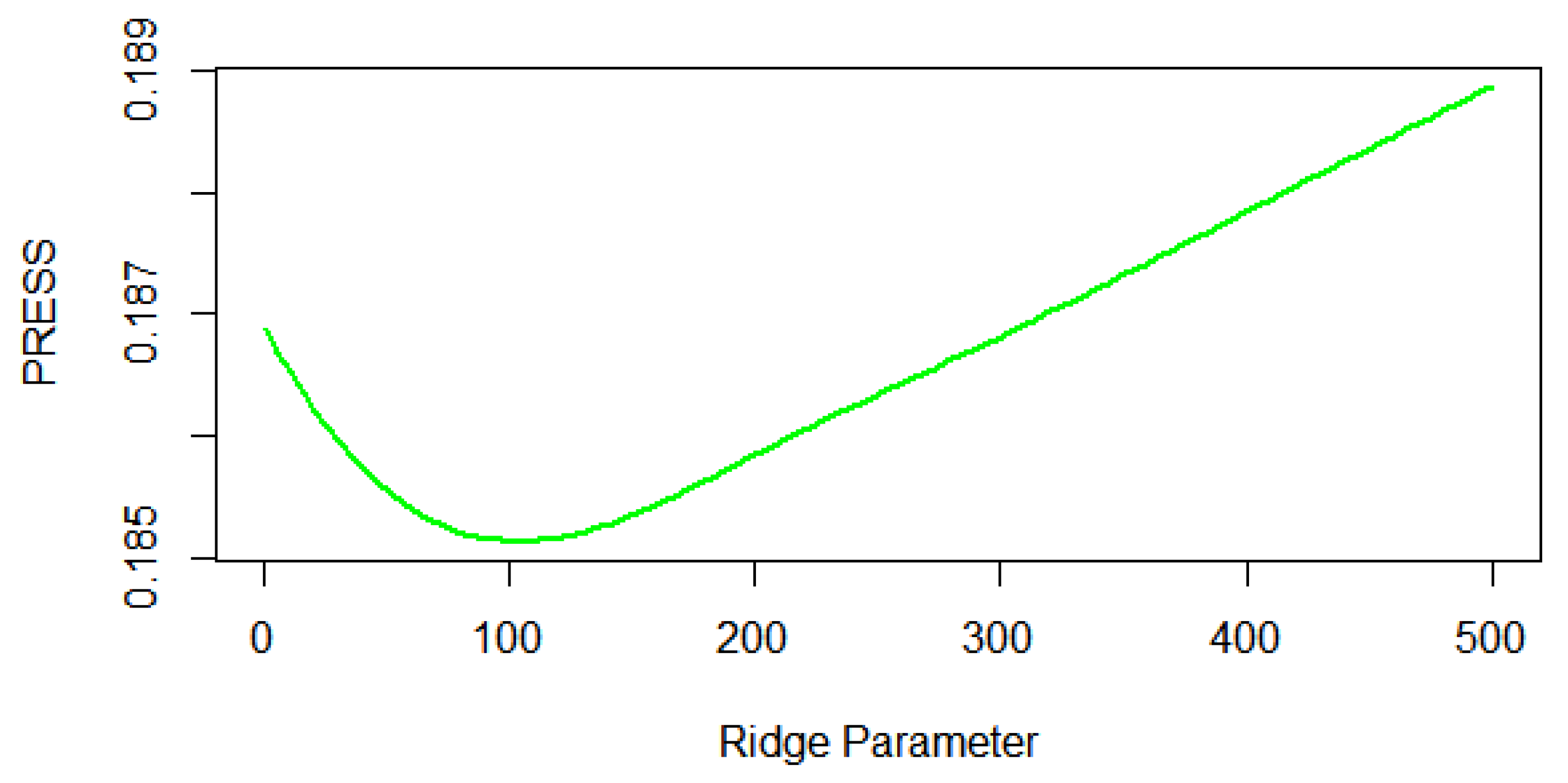

The results of ridge parameter selection using cross-validation are provided in Figure 4. The ideal ridge parameter for this dataset was determined to be 100. The assessment metrics BS and AUROC chose the same model, lFRE22, according to the results.

Table 5 indicates that all penalized estimators achieved a higher AUROC than LR. It should be noted, however, that Firth’s BS was significantly worse than that of the LR model, which is consistent with earlier findings [13]. Furthermore, the magnitudes of the three double-penalized estimators (DPLE, lFRE11, and lFRE22) were significantly smaller than those of the three single-penalized estimators (Firth, LF11, and LF22) for all items. It was also observed from the results in Table 5 that the three double-penalized estimators with ridge parameters outperformed the three single-penalized estimators. This is because, as previously stated, the HOMA index and insulin were highly correlated, and the ridge penalty counteracted the multicollinearity effect. Regarding the BS and AUROC, LF22 surpassed Firth and LF11, whereas neither Firth nor LF11 clearly outperformed the other. Similarly, lFRE11 and lFRE22 provided roughly the same degree of improvement in BS and AUROC, but DPLE gave the largest BS value and the lowest AUROC value of the three double-penalized estimators. As a result, in the setting of a small dataset with strongly correlated covariates, this analysis demonstrates the superior predictive ability of lFRE22.

Given the sample size of 116, finite MLEs were available in the conventional logistic regression model for this dataset. As a result, based on the convergent simulation results, the simulation findings for the LR model are relevant and comparable. In general, lFRE22 had the lowest BS and the highest AUROC compared with the other models discussed in this study. Such a result demonstrates that lFRE22 should be emphasized.

6. Discussion and Conclusions

In this study, separation and multicollinearity problems in logistic regression were discussed. Despite the issue being a hot topic for more than 40 years, few studies have attempted to handle both types of problems at the same time. This paper reviewed some shortcomings in Shen and Gao’s method (DPLE) and investigated a new double-penalty likelihood estimation method called lFRE, which combines a logF-type penalty with a ridge penalty to effectively solve separation and multicollinearity problems in logistic regression.

The results revealed that lFRE (lFRE11 and lFRE22) was superior to Firth, LF11, LF22, and DPLE in terms of prediction performance. Separation is commonly handled using Firth, LF11, and LF22. As long as there is no multicollinearity in logistic regression, LF22 has been shown to be the preferred method for obtaining unbiased predicted probabilities and the minimum MSE of the coefficient estimates. However, when the separation and multicollinearity problems emerge at the same time in logistic regression, these single-penalty methods are not appropriate. Considering both separation and multicollinearity, the simulation results lead us to conclude that lFRE is superior to DPLE because lFRE is effective in solving not only separation problems but also multicollinearity problems. By contrast, when multicollinearity is severe, DPLE yields a non-negligible MSE.

In conclusion, Shen and Gao’s double-penalized likelihood estimator may provide a more appropriate reference point than previous penalized estimators, given that the generated estimates remain finite even when separation occurs. However, based on our results, we suggest using lFRE instead of Shen and Gao’s method to deal with separation and multicollinearity in small or sparse data with strongly correlated variables. The reasons for this are as follows. First, the proposed lFRE provides the minimum MSE in regression coefficient estimation and a greater improvement in prediction performance than other penalized methods, such as DPLE. Second, since a logF-type penalty leaves the intercept unpenalized and accurately estimates the average predicted probability, lFRE provides unbiased predicted probabilities. In contrast, DPLE penalizes the intercept and introduces bias into the predicted probabilities. Third, the logF-type penalty used by lFRE is not data-dependent and does not incorporate the correlation between explanatory variables, which distinguishes it from the Firth-type penalty. Finally, lFRE performs reasonably well when the covariates are highly correlated, whereas DPLE results in a large MSE in this scenario. As a result, lFRE is superior to DPLE, and lFRE22 is superior to lFRE11 among the two forms of lFRE. Although both have similar prediction efficiencies, lFRE22 has a smaller MSE for the regression coefficients, especially for continuous variables.

In addition, lFRE can be applied to any generalized linear model (GLIM) for future research.