A Quantum-Based Chameleon Swarm for Feature Selection

Abstract

1. Introduction

- Develop an improved version of the Chameleon Swarm Algorithm (Cham) that uses the mathematical operators of Quantum-Based Optimization (QBO) to enhance its exploration capability.

- Employ the resulting hybrid QCham algorithm as a new feature selection (FS) method that detects and eliminates irrelevant features, improving both the accuracy and the efficiency of the classification process.

- Evaluate the efficiency of the proposed QCham on eighteen UCI datasets and compare its performance with other well-known FS approaches.

2. Related Works

3. Background

3.1. Chameleon Swarm Optimizer

Algorithm 1. Pseudo-code of the Chameleon Swarm Algorithm (Cham).

1. Set …
2. The coordinates of the rotation center of the chameleon at iteration … are given by …
3. Initialize the positions and velocities of the chameleons.
4. While … do
5. for … to … do
6. for … to … do
7. if … then
8. …
9. else
10. …
11. end if
12. end for
13. end for
14. for … to … do
15. for … to … do
16. …
17. end for
18. end for
19. Update the positions of the chameleons based on the predefined … and ….
20. Set …
21. end while
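The update rules behind Algorithm 1 (Equations (3)–(9)) did not survive extraction, so the following Python sketch only illustrates the kind of per-element branch performed in steps 5–13. It is modeled loosely on the search-phase update of the original Chameleon Swarm Algorithm as we understand it, not on this paper's equations. The function name `cham_search_step`, the coefficients `p1`, `p2`, `pp`, and the step-size constants `gamma`, `alpha`, `beta` are all assumptions chosen for illustration.

```python
import numpy as np


def cham_search_step(pos, pbest, gbest, lb, ub, t, T,
                     p1=2.0, p2=1.5, pp=0.1,
                     gamma=1.0, alpha=3.5, beta=3.0, rng=None):
    """One search-phase update for all chameleons (hypothetical sketch).

    pos, pbest: (n, d) current and personal-best positions
    gbest:      (d,) global-best position
    lb, ub:     (d,) lower and upper bounds
    All coefficient defaults are assumptions, not values from this paper.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, d = pos.shape
    # Step size that shrinks as iteration t approaches the budget T (assumed form).
    mu = gamma * np.exp(-(alpha * t / T) ** beta)
    r1, r2, r3 = rng.random((n, d)), rng.random((n, d)), rng.random((n, d))
    # Candidate move relative to the personal and global bests.
    toward_best = pos + p1 * (pbest - gbest) * r2 + p2 * (gbest - pos) * r1
    # Candidate random jump scaled by mu in a random direction.
    random_jump = pos + mu * ((ub - lb) * r3 + lb) * np.sign(rng.random((n, d)) - 0.5)
    # Per-element if/else, mirroring the branch inside the nested loops of Algorithm 1.
    use_best = rng.random((n, d)) >= pp
    new_pos = np.where(use_best, toward_best, random_jump)
    # Keep every coordinate inside the search bounds.
    return np.clip(new_pos, lb, ub)
```

Both candidate moves are computed for the whole population and a random mask picks one per element; this vectorized form is a design choice for brevity and is equivalent to evaluating the if/else inside the two loops.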

3.2. Quantum-Based Optimization (QBO)

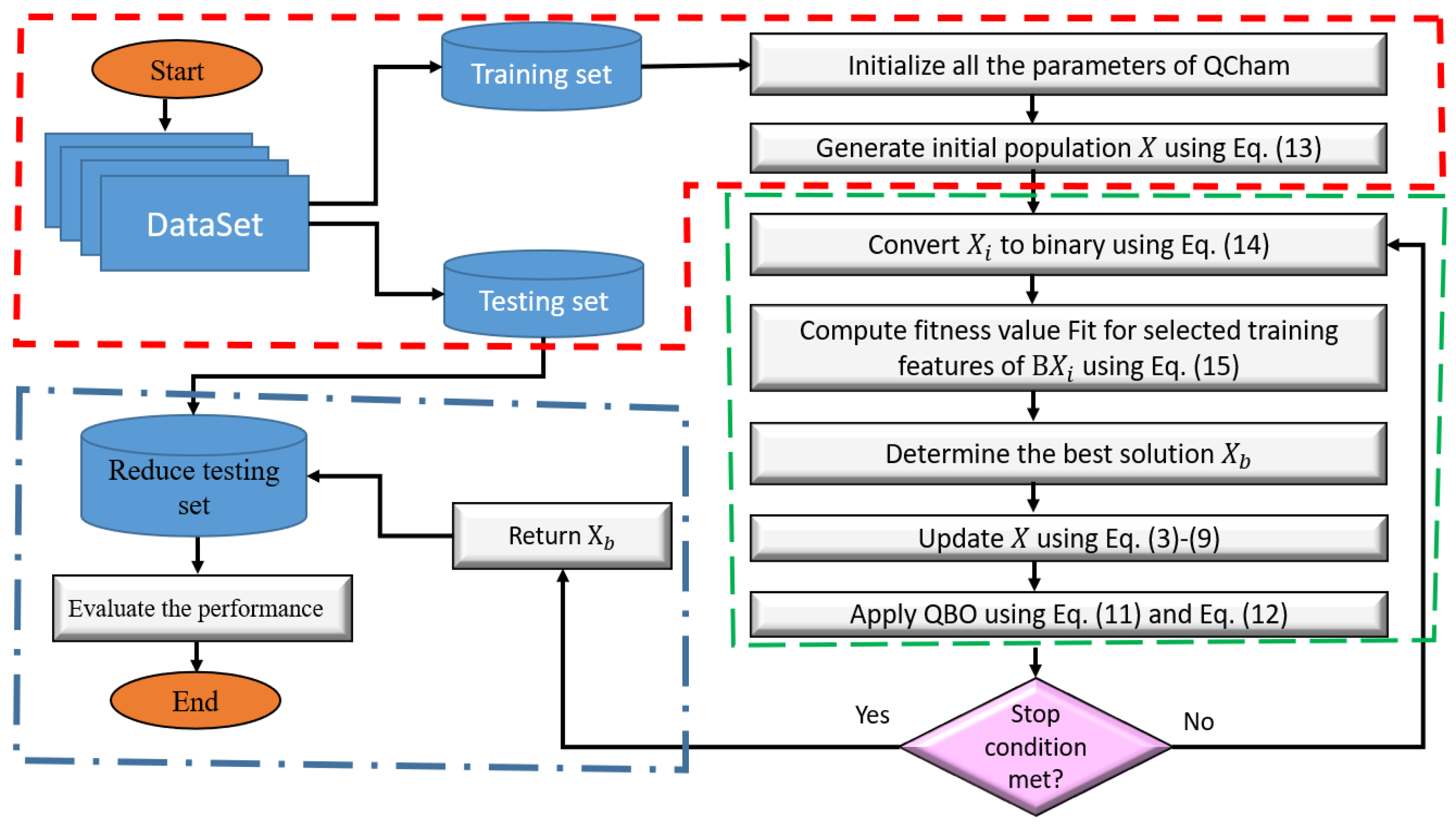
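Quantum-inspired methods such as QBO typically represent each candidate feature as a qubit with amplitudes α and β satisfying |α|² + |β|² = 1; observing the qubit yields bit 1 (feature selected) with probability |β|². The Python sketch below assumes the common angle parameterization α = cos θ, β = sin θ and an initial equal superposition θ = π/4. These choices, and the helper names `amplitudes` and `observe`, are illustrative assumptions and are not necessarily the form of Equation (14).

```python
import numpy as np


def amplitudes(theta):
    """Qubit amplitudes under the assumed parameterization alpha=cos(theta), beta=sin(theta)."""
    return np.cos(theta), np.sin(theta)


def observe(theta, rng):
    """Collapse each qubit to a bit: 1 (feature selected) with probability |beta|^2."""
    _, beta = amplitudes(theta)
    return (rng.random(theta.shape) < beta ** 2).astype(int)


# N = 4 solutions over D = 5 features, all qubits in equal superposition (theta = pi/4).
rng = np.random.default_rng(42)
theta = np.full((4, 5), np.pi / 4)
masks = observe(theta, rng)  # each row is one binary feature mask
```

Keeping the angles rather than the bits as the search state lets one solution encode a probability of selecting each feature, which is the property that quantum-inspired variants use to diversify exploration.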

4. Proposed QCham Method

4.1. First Phase

4.2. Second Phase

4.3. Third Phase

Algorithm 2. Procedure of QCham.

1. Input: number of iterations (…), the tested dataset with D features, the number of solutions (N), and other parameters.

First stage:

2. Split the dataset into training and testing sets containing 70% and 30% of the samples, respectively.
3. Apply Equation (13) to construct the population ….

Second stage:

4. …
5. While (…)
6. …
7. Use Equation (14) to obtain the quantum version of ….
8. Calculate the fitness value of … on the training samples, as in Equation (15).
9. Determine the best solution ….
10. Use Equations (3)–(9) to update ….
11. …
12. EndWhile

Third stage:

13. Remove the irrelevant features from the testing set using ….
14. Assess the efficiency of QCham using different performance measures.
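Step 8 of Algorithm 2 scores each candidate feature subset with Equation (15), which is not reproduced in this text. The Python sketch below assumes a wrapper objective that is common in the FS literature: a weighted sum of classification error and the ratio of selected features, with a plain k-NN classifier. The weight `lam = 0.99`, the classifier and `k = 5`, and the helper names `split_70_30`, `knn_error`, and `fs_fitness` are hypothetical; only the 70%/30% split comes from Algorithm 2.

```python
import numpy as np


def split_70_30(X, y, rng):
    """Random 70% / 30% split, matching the proportions in Algorithm 2."""
    idx = rng.permutation(len(y))
    cut = int(round(0.7 * len(y)))
    return X[idx[:cut]], y[idx[:cut]], X[idx[cut:]], y[idx[cut:]]


def knn_error(X_fit, y_fit, X_eval, y_eval, k=5):
    """Error rate of a plain k-NN majority vote (assumed classifier)."""
    # Squared Euclidean distance between every evaluation and fitting sample.
    d2 = ((X_eval[:, None, :] - X_fit[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    pred = np.array([np.bincount(votes).argmax() for votes in y_fit[nearest]])
    return float(np.mean(pred != y_eval))


def fs_fitness(mask, X_fit, y_fit, X_eval, y_eval, lam=0.99, k=5):
    """Weighted error plus selected-feature ratio; lower is better."""
    selected = np.flatnonzero(mask)
    if selected.size == 0:
        return 1.0  # treat an empty subset as the worst possible score
    err = knn_error(X_fit[:, selected], y_fit, X_eval[:, selected], y_eval, k)
    return lam * err + (1.0 - lam) * selected.size / mask.size
```

In a full run, `X_eval` would be a held-out part of the training set during the search (step 8), and the test split would be used only once, after the best mask is fixed (steps 13 and 14).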

5. Experimental Results

5.1. Description of Dataset and Setting of Parameter

5.2. Experimental Results and Discussion

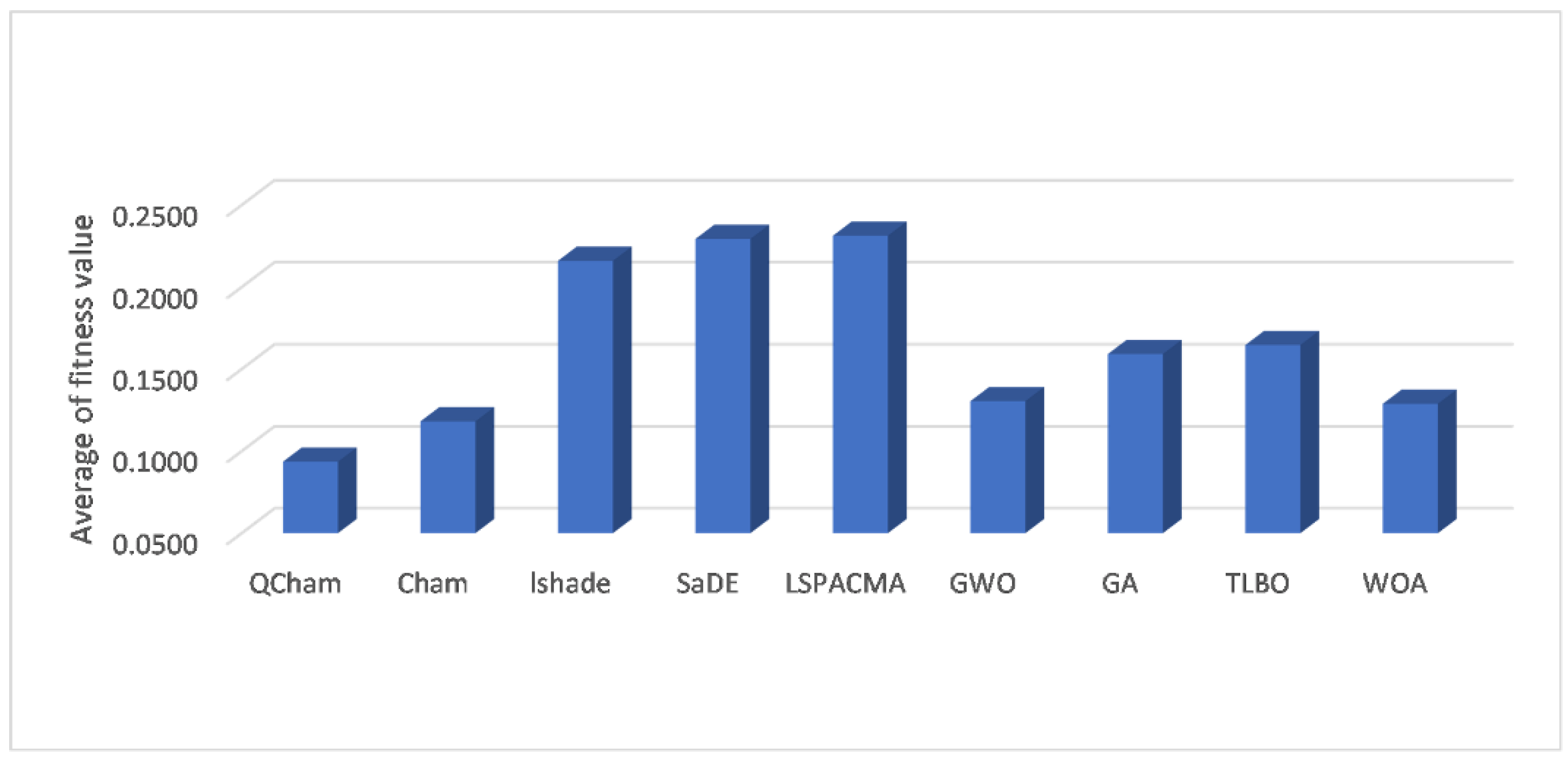

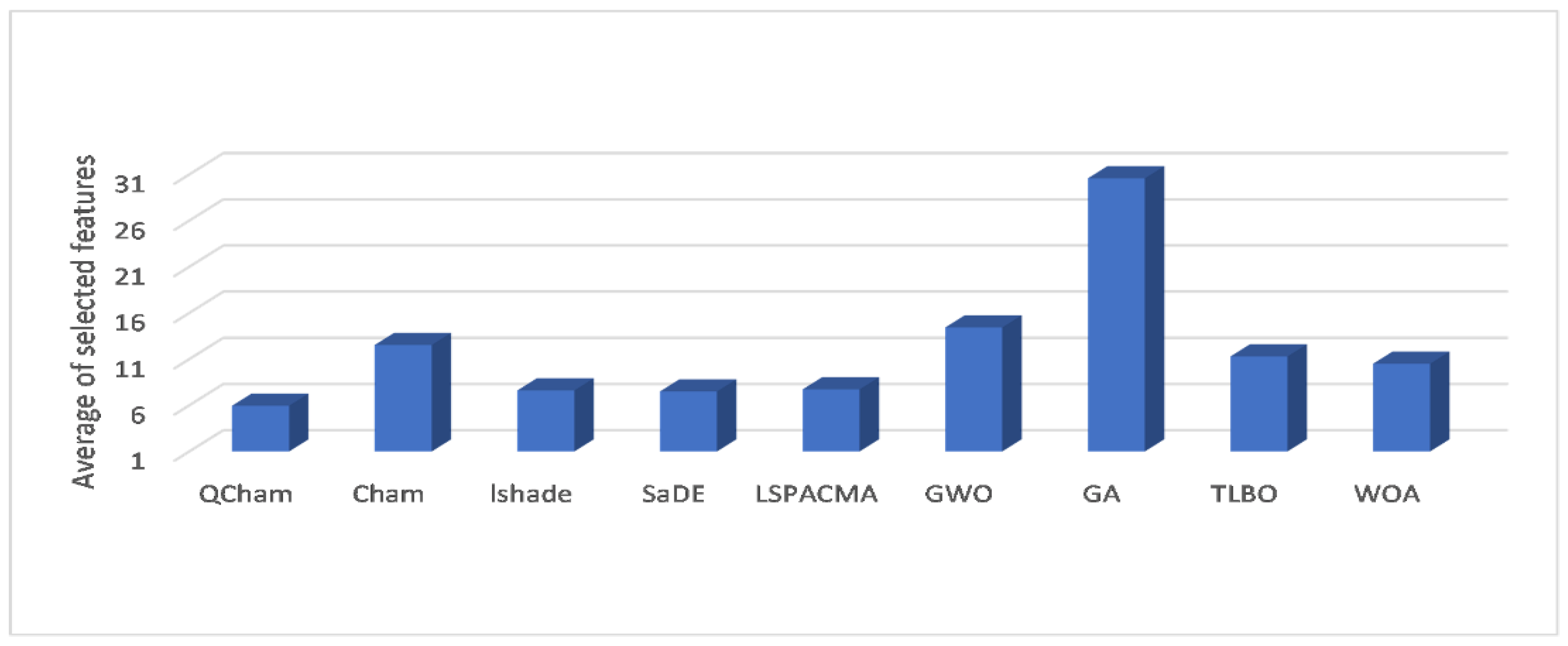

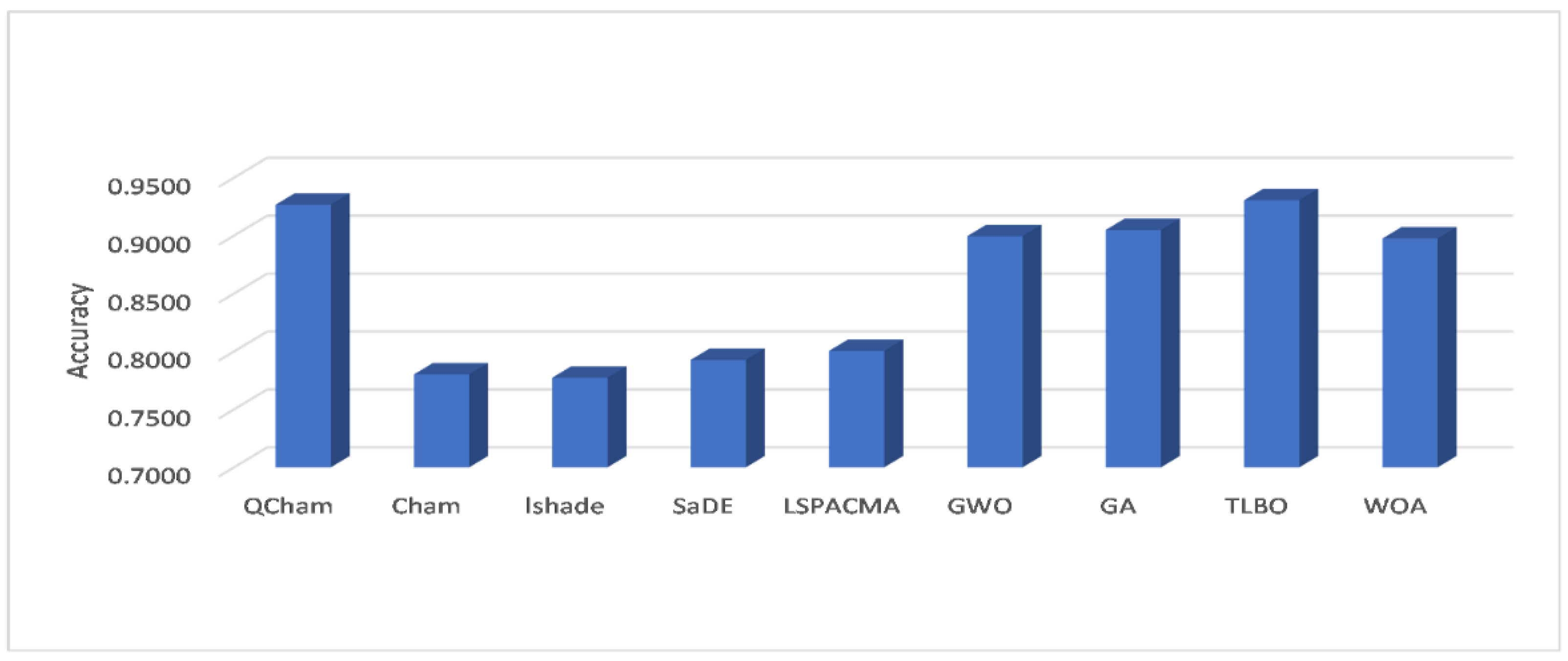

5.3. Comparison with Other FS Models

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


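| Bit of current solution | Bit of best solution | Condition (T/F) | Rotation angle Δθ |
|---|---|---|---|
| 0 | 0 | F | 0 |
| 0 | 1 | F | 0.01π |
| 1 | 0 | F | −0.01π |
| 1 | 1 | F | 0 |
| 0 | 0 | T | 0 |
| 0 | 1 | T | 0 |
| 1 | 0 | T | 0 |
| 1 | 1 | T | 0 |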
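The eight-row lookup table above drives a rotation gate: only when the condition column is false and the current bit differs from the best solution's bit is the qubit angle rotated, by ±0.01π. The Python sketch below encodes those rows directly. It assumes qubits are parameterized as α = cos θ, β = sin θ (so a positive rotation raises the probability of observing 1), and it treats the condition as a single boolean per solution; the exact meaning of that condition, and the names `DELTA_THETA` and `rotate`, are assumptions.

```python
import numpy as np

# Lookup table above: (bit of x, bit of best, condition) -> rotation angle.
# Every combination not listed here has a rotation angle of 0.
DELTA_THETA = {
    (0, 1, False): 0.01 * np.pi,
    (1, 0, False): -0.01 * np.pi,
}


def rotate(theta, x, best, condition):
    """Apply the table-driven rotation gate to one solution's qubit angles.

    theta: (d,) angles; x, best: (d,) bit vectors; condition: the T/F table column.
    """
    delta = np.array([DELTA_THETA.get((int(xj), int(bj), bool(condition)), 0.0)
                      for xj, bj in zip(x, best)])
    return theta + delta
```

Under the assumed parameterization, a bit that is 0 where the best solution has 1 is rotated toward 1, and vice versa, so repeated updates pull each qubit's observation probability toward the best solution.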

| Data Code | Dataset | No. Instances | No. Features | No. Classes | Data Code | Dataset | No. Instances | No. Features | No. Classes |
|---|---|---|---|---|---|---|---|---|---|
| S1 | Breastcancer | 699 | 9 | 2 | S2 | BreastEW | 569 | 30 | 2 |
| S3 | CongressEW | 435 | 16 | 2 | S4 | Exactly | 1000 | 13 | 2 |
| S5 | Exactly2 | 1000 | 13 | 2 | S6 | HeartEW | 270 | 13 | 2 |
| S7 | IonosphereEW | 351 | 34 | 2 | S8 | KrvskpEW | 3196 | 36 | 2 |
| S9 | Lymphography | 148 | 18 | 2 | S10 | M-of-n | 1000 | 13 | 2 |
| S11 | PenglungEW | 73 | 325 | 2 | S12 | SonarEW | 208 | 60 | 2 |
| S13 | SpectEW | 267 | 22 | 2 | S14 | tic-tac-toe | 958 | 9 | 2 |
| S15 | Vote | 300 | 16 | 2 | S16 | WaveformEW | 5000 | 40 | 3 |
| S17 | WineEW | 178 | 13 | 3 | S18 | Zoo | 101 | 16 | 6 |

| Dataset | QCham | Cham | LSHADE | SaDE | LSPACMA | GWO | GA | TLBO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 0.0555 | 0.0669 | 0.0833 | 0.0917 | 0.1067 | 0.0679 | 0.1018 | 0.0925 | 0.0738 |
| S2 | 0.0638 | 0.0680 | 0.1254 | 0.1114 | 0.1342 | 0.0809 | 0.1280 | 0.0941 | 0.0699 |
| S3 | 0.0373 | 0.0613 | 0.0655 | 0.1063 | 0.0575 | 0.1075 | 0.1018 | 0.0902 | 0.0738 |
| S4 | 0.0467 | 0.0842 | 0.2504 | 0.3853 | 0.2977 | 0.1415 | 0.1923 | 0.2588 | 0.1598 |
| S5 | 0.2357 | 0.2809 | 0.2258 | 0.2285 | 0.2223 | 0.1998 | 0.3306 | 0.3158 | 0.2170 |
| S6 | 0.1457 | 0.1617 | 0.2019 | 0.2417 | 0.2519 | 0.2038 | 0.1958 | 0.2478 | 0.2160 |
| S7 | 0.0352 | 0.0594 | 0.1160 | 0.1148 | 0.1561 | 0.0817 | 0.1206 | 0.1573 | 0.0993 |
| S8 | 0.0784 | 0.0908 | 0.3904 | 0.3658 | 0.3584 | 0.0955 | 0.1148 | 0.1132 | 0.0971 |
| S9 | 0.0938 | 0.0929 | 0.2567 | 0.2516 | 0.2167 | 0.1564 | 0.1818 | 0.1889 | 0.1287 |
| S10 | 0.0491 | 0.0666 | 0.2118 | 0.2706 | 0.3197 | 0.0998 | 0.1179 | 0.1998 | 0.1176 |
| S11 | 0.0546 | 0.1471 | 0.3200 | 0.3500 | 0.2474 | 0.0489 | 0.2022 | 0.0418 | 0.0408 |
| S12 | 0.0571 | 0.0886 | 0.2833 | 0.3333 | 0.3917 | 0.0965 | 0.0890 | 0.1471 | 0.0673 |
| S13 | 0.1568 | 0.1661 | 0.1630 | 0.2417 | 0.1370 | 0.2353 | 0.2047 | 0.2027 | 0.2336 |
| S14 | 0.2148 | 0.2462 | 0.2635 | 0.2992 | 0.3208 | 0.2548 | 0.2279 | 0.2659 | 0.2572 |
| S15 | 0.0568 | 0.0909 | 0.0567 | 0.0850 | 0.1142 | 0.0533 | 0.1052 | 0.0881 | 0.0457 |
| S16 | 0.2568 | 0.2843 | 0.3574 | 0.4094 | 0.4381 | 0.3026 | 0.3075 | 0.3137 | 0.2996 |
| S17 | 0.0267 | 0.0477 | 0.1833 | 0.1583 | 0.1597 | 0.0571 | 0.0878 | 0.0956 | 0.0699 |
| S18 | 0.0196 | 0.0234 | 0.3333 | 0.0833 | 0.2333 | 0.0660 | 0.0563 | 0.0515 | 0.0533 |

| Dataset | QCham | Cham | LSHADE | SaDE | LSPACMA | GWO | GA | TLBO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 0.0526 | 0.0590 | 0.0833 | 0.0917 | 0.0967 | 0.0573 | 0.0830 | 0.0830 | 0.0590 |
| S2 | 0.0458 | 0.0470 | 0.1254 | 0.0947 | 0.1342 | 0.0607 | 0.1107 | 0.1107 | 0.0491 |
| S3 | 0.0373 | 0.0476 | 0.0655 | 0.0897 | 0.0575 | 0.0664 | 0.0769 | 0.0769 | 0.0560 |
| S4 | 0.0462 | 0.0462 | 0.2048 | 0.3853 | 0.2977 | 0.0462 | 0.0615 | 0.0615 | 0.0462 |
| S5 | 0.2327 | 0.2558 | 0.2258 | 0.2118 | 0.2223 | 0.1967 | 0.3032 | 0.3032 | 0.2102 |
| S6 | 0.1205 | 0.1462 | 0.2019 | 0.2352 | 0.2278 | 0.1628 | 0.1692 | 0.1692 | 0.1731 |
| S7 | 0.0176 | 0.0362 | 0.1160 | 0.1099 | 0.1493 | 0.0674 | 0.1048 | 0.1048 | 0.0742 |
| S8 | 0.0645 | 0.0781 | 0.3866 | 0.3658 | 0.3254 | 0.0683 | 0.1003 | 0.1003 | 0.0660 |
| S9 | 0.0556 | 0.0556 | 0.2500 | 0.2448 | 0.2167 | 0.1065 | 0.1322 | 0.1322 | 0.0471 |
| S10 | 0.0462 | 0.0462 | 0.2118 | 0.2557 | 0.3135 | 0.0538 | 0.0615 | 0.0615 | 0.0615 |
| S11 | 0.0071 | 0.0714 | 0.3200 | 0.3333 | 0.1872 | 0.0203 | 0.1982 | 0.1982 | 0.0031 |
| S12 | 0.0300 | 0.0233 | 0.2833 | 0.3333 | 0.3333 | 0.0648 | 0.0750 | 0.0750 | 0.0481 |
| S13 | 0.1394 | 0.1121 | 0.1630 | 0.2278 | 0.1370 | 0.1939 | 0.1773 | 0.1773 | 0.2182 |
| S14 | 0.2120 | 0.2243 | 0.2635 | 0.2974 | 0.3208 | 0.2307 | 0.2120 | 0.2120 | 0.2354 |
| S15 | 0.0275 | 0.0700 | 0.0567 | 0.0683 | 0.0917 | 0.0338 | 0.0625 | 0.0625 | 0.0363 |
| S16 | 0.2354 | 0.2562 | 0.3574 | 0.4094 | 0.4290 | 0.2847 | 0.2951 | 0.2951 | 0.2730 |
| S17 | 0.0154 | 0.0308 | 0.1833 | 0.1444 | 0.1194 | 0.0385 | 0.0692 | 0.0692 | 0.0462 |
| S18 | 0.0188 | 0.0125 | 0.3333 | 0.0667 | 0.2333 | 0.0438 | 0.0438 | 0.0438 | 0.0375 |

| Dataset | QCham | Cham | LSHADE | SaDE | LSPACMA | GWO | GA | TLBO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 0.0655 | 0.0719 | 0.0833 | 0.0917 | 0.1167 | 0.0818 | 0.1228 | 0.1023 | 0.0976 |
| S2 | 0.0819 | 0.0895 | 0.1254 | 0.1281 | 0.1342 | 0.1011 | 0.1365 | 0.1065 | 0.0856 |
| S3 | 0.0373 | 0.1080 | 0.0655 | 0.1230 | 0.0575 | 0.1431 | 0.1330 | 0.1017 | 0.1037 |
| S4 | 0.0538 | 0.2524 | 0.2960 | 0.3853 | 0.2977 | 0.2372 | 0.3096 | 0.2845 | 0.2822 |
| S5 | 0.2775 | 0.3000 | 0.2258 | 0.2452 | 0.2223 | 0.2121 | 0.3559 | 0.3469 | 0.3117 |
| S6 | 0.1731 | 0.1859 | 0.2019 | 0.2481 | 0.2759 | 0.2692 | 0.2090 | 0.2795 | 0.2513 |
| S7 | 0.0489 | 0.0860 | 0.1160 | 0.1197 | 0.1629 | 0.0996 | 0.1389 | 0.1894 | 0.1279 |
| S8 | 0.0894 | 0.1130 | 0.3942 | 0.3658 | 0.3915 | 0.1215 | 0.1340 | 0.1399 | 0.1160 |
| S9 | 0.1254 | 0.1322 | 0.2633 | 0.2583 | 0.2167 | 0.2052 | 0.2278 | 0.2600 | 0.2467 |
| S10 | 0.0705 | 0.1007 | 0.2118 | 0.2855 | 0.3258 | 0.1785 | 0.2106 | 0.2582 | 0.1836 |
| S11 | 0.1348 | 0.2252 | 0.3200 | 0.3667 | 0.3077 | 0.0905 | 0.2065 | 0.0449 | 0.0852 |
| S12 | 0.0862 | 0.1157 | 0.2833 | 0.3333 | 0.4500 | 0.1243 | 0.1081 | 0.1705 | 0.0867 |
| S13 | 0.1939 | 0.2167 | 0.1630 | 0.2556 | 0.1370 | 0.2652 | 0.2227 | 0.2273 | 0.2379 |
| S14 | 0.2214 | 0.2847 | 0.2635 | 0.3010 | 0.3208 | 0.2924 | 0.2793 | 0.3040 | 0.2917 |
| S15 | 0.0738 | 0.1138 | 0.0567 | 0.1017 | 0.1367 | 0.0975 | 0.1438 | 0.1075 | 0.0950 |
| S16 | 0.2741 | 0.3094 | 0.3574 | 0.4094 | 0.4472 | 0.3215 | 0.3215 | 0.3348 | 0.3229 |
| S17 | 0.0538 | 0.0692 | 0.1833 | 0.1722 | 0.2000 | 0.0712 | 0.1192 | 0.1192 | 0.0865 |
| S18 | 0.0250 | 0.0375 | 0.3333 | 0.1000 | 0.2333 | 0.0866 | 0.0688 | 0.0866 | 0.0804 |

| Dataset | QCham | Cham | LSHADE | SaDE | LSPACMA | GWO | GA | TLBO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 4 | 4 | 3 | 5 | 6 | 3 | 5 | 3 | 2 |
| S2 | 5 | 9 | 5 | 9 | 6 | 8 | 21 | 7 | 6 |
| S3 | 3 | 3 | 4 | 4 | 11 | 5 | 11 | 5 | 4 |
| S4 | 6 | 8 | 7 | 4 | 10 | 7 | 9 | 6 | 9 |
| S5 | 3 | 6 | 5 | 5 | 4 | 4 | 9 | 4 | 4 |
| S6 | 3 | 6 | 7 | 2 | 3 | 6 | 11 | 6 | 5 |
| S7 | 3 | 9 | 4 | 5 | 4 | 9 | 28 | 9 | 8 |
| S8 | 9 | 21 | 11 | 15 | 14 | 21 | 29 | 15 | 19 |
| S9 | 3 | 11 | 3 | 4 | 3 | 8 | 14 | 9 | 4 |
| S10 | 3 | 8 | 7 | 5 | 4 | 9 | 9 | 6 | 9 |
| S11 | 25 | 59 | 35 | 20 | 25 | 107 | 267 | 58 | 41 |
| S12 | 13 | 30 | 16 | 19 | 20 | 24 | 50 | 29 | 31 |
| S13 | 6 | 9 | 4 | 7 | 5 | 7 | 17 | 8 | 4 |
| S14 | 3 | 5 | 4 | 4 | 7 | 5 | 6 | 6 | 5 |
| S15 | 2 | 8 | 3 | 2 | 2 | 4 | 11 | 5 | 2 |
| S16 | 5 | 21 | 7 | 15 | 9 | 20 | 34 | 19 | 22 |
| S17 | 4 | 6 | 7 | 6 | 5 | 5 | 9 | 5 | 6 |
| S18 | 7 | 4 | 5 | 5 | 4 | 8 | 9 | 3 | 8 |
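To relate the integer-valued table to dataset size, the following Python sketch computes, for QCham, the fraction of features discarded on each dataset as 1 − selected / D. It assumes that this table reports the number of selected features (every entry is at most the corresponding D in the dataset table, which is consistent with that reading); the names `D_AND_SELECTED` and `reduction_ratio` are hypothetical.

```python
# Total features D (dataset table) and QCham's value from the integer-valued
# results table, which is assumed to report the number of selected features.
D_AND_SELECTED = {
    "S1": (9, 4), "S2": (30, 5), "S3": (16, 3), "S4": (13, 6), "S5": (13, 3),
    "S6": (13, 3), "S7": (34, 3), "S8": (36, 9), "S9": (18, 3), "S10": (13, 3),
    "S11": (325, 25), "S12": (60, 13), "S13": (22, 6), "S14": (9, 3),
    "S15": (16, 2), "S16": (40, 5), "S17": (13, 4), "S18": (16, 7),
}


def reduction_ratio(total, selected):
    """Fraction of the original features that were discarded."""
    return 1.0 - selected / total


ratios = {code: reduction_ratio(d, s) for code, (d, s) in D_AND_SELECTED.items()}
mean_ratio = sum(ratios.values()) / len(ratios)
```

For example, on PenglungEW (S11) QCham keeps 25 of 325 features, which discards 300/325, or about 92.3%, of them.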

| Dataset | QCham | Cham | LSHADE | SaDE | LSPACMA | GWO | GA | TLBO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 0.9857 | 0.9689 | 0.9286 | 0.9643 | 0.9429 | 0.9567 | 0.9462 | 0.9729 | 0.9476 |
| S2 | 0.9688 | 0.9592 | 0.8684 | 0.9123 | 0.9035 | 0.9415 | 0.9351 | 0.9573 | 0.9433 |
| S3 | 0.9540 | 0.9552 | 0.9540 | 0.9195 | 0.9655 | 0.9157 | 0.9609 | 0.9655 | 0.9448 |
| S4 | 0.9743 | 0.9723 | 0.7375 | 0.6400 | 0.6700 | 0.8987 | 0.8667 | 1.0000 | 0.8960 |
| S5 | 0.7358 | 0.6370 | 0.7250 | 0.7450 | 0.7300 | 0.7900 | 0.7130 | 0.7507 | 0.7703 |
| S6 | 0.8944 | 0.6676 | 0.7593 | 0.7500 | 0.7593 | 0.8272 | 0.8753 | 0.8877 | 0.7988 |
| S7 | 0.9834 | 0.8148 | 0.9296 | 0.9789 | 0.9437 | 0.9380 | 0.9577 | 0.9859 | 0.9174 |
| S8 | 0.9627 | 0.6291 | 0.5852 | 0.5250 | 0.5594 | 0.9577 | 0.9616 | 0.9547 | 0.9507 |
| S9 | 0.9666 | 0.6400 | 0.8000 | 0.8073 | 0.8333 | 0.8756 | 0.8844 | 0.9337 | 0.8821 |
| S10 | 0.9935 | 0.5713 | 0.7450 | 0.7325 | 0.7100 | 0.9627 | 0.9477 | 0.9990 | 0.9497 |
| S11 | 0.8567 | 1.0000 | 0.7333 | 0.6667 | 0.8846 | 0.9822 | 0.8667 | 0.9511 | 0.9686 |
| S12 | 0.9714 | 0.7976 | 0.6905 | 0.6905 | 0.5357 | 0.9381 | 0.9937 | 0.9794 | 0.9825 |
| S13 | 0.8833 | 0.7556 | 0.8148 | 0.7500 | 0.8519 | 0.7716 | 0.8580 | 0.8531 | 0.7593 |
| S14 | 0.7938 | 0.5828 | 0.7188 | 0.7630 | 0.8750 | 0.7819 | 0.8250 | 0.8354 | 0.7809 |
| S15 | 0.9825 | 0.8283 | 0.9667 | 0.9500 | 0.9083 | 0.9700 | 0.9600 | 0.9711 | 0.9622 |
| S16 | 0.7732 | 0.5707 | 0.5370 | 0.5580 | 0.5170 | 0.7186 | 0.7533 | 0.7530 | 0.7283 |
| S17 | 1.0000 | 0.8486 | 0.8333 | 0.9167 | 0.9861 | 0.9833 | 0.9833 | 1.0000 | 0.9759 |
| S18 | 1.0000 | 0.8452 | 0.6667 | 1.0000 | 0.8333 | 0.9841 | 1.0000 | 1.0000 | 0.9968 |

| Dataset | QCham | Cham | LSHADE | SaDE | LSPACMA | GWO | GA | TLBO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 1.3266 | 2.5323 | 3.7597 | 3.9311 | 3.7896 | 4.8045 | 3.0372 | 7.1621 | 3.4947 |
| S2 | 2.3220 | 4.4723 | 3.6052 | 3.6613 | 3.6389 | 3.7984 | 2.8619 | 7.1806 | 3.4250 |
| S3 | 0.2932 | 0.4607 | 3.5721 | 3.6060 | 3.6004 | 3.6473 | 2.7438 | 6.4168 | 3.3492 |
| S4 | 0.3335 | 0.5275 | 3.9773 | 4.0450 | 4.0228 | 4.1293 | 3.2438 | 7.9622 | 3.8586 |
| S5 | 0.9228 | 0.4734 | 3.8583 | 3.9539 | 3.9313 | 4.0432 | 3.3300 | 7.2139 | 3.4962 |
| S6 | 0.7098 | 0.4767 | 3.4766 | 3.4843 | 3.4874 | 3.5319 | 2.5871 | 6.7831 | 3.2491 |
| S7 | 0.2854 | 0.4329 | 3.4925 | 3.4959 | 3.4998 | 3.6391 | 2.7085 | 6.7888 | 3.2704 |
| S8 | 0.6804 | 1.2744 | 8.4271 | 8.5472 | 8.4831 | 14.9635 | 13.6991 | 24.1174 | 12.5058 |
| S9 | 3.8243 | 4.4320 | 3.3085 | 3.3253 | 3.3642 | 3.4318 | 2.5320 | 6.5917 | 3.1419 |
| S10 | 2.3248 | 3.5335 | 4.0457 | 4.1456 | 4.0962 | 4.1284 | 3.2549 | 7.8806 | 3.9806 |
| S11 | 3.3222 | 2.4463 | 3.5204 | 3.5962 | 3.5438 | 6.6027 | 2.7109 | 6.5656 | 3.1659 |
| S12 | 3.2944 | 3.0633 | 3.4390 | 3.4875 | 3.4694 | 3.8382 | 2.6443 | 6.4529 | 3.1784 |
| S13 | 1.8448 | 1.4495 | 3.4457 | 3.5273 | 3.5137 | 3.5143 | 2.5964 | 6.7163 | 3.1486 |
| S14 | 2.4521 | 3.5254 | 4.1032 | 4.1750 | 4.1446 | 4.0571 | 3.2515 | 7.9514 | 3.8750 |
| S15 | 2.5025 | 2.4369 | 3.5085 | 3.5751 | 3.5403 | 3.5511 | 2.6326 | 6.7927 | 3.1668 |
| S16 | 1.4819 | 2.4791 | 21.0411 | 21.3093 | 21.1920 | 30.4215 | 34.6083 | 47.0547 | 28.8056 |
| S17 | 3.2765 | 2.4687 | 4.6537 | 4.6825 | 4.6444 | 3.4650 | 2.6549 | 6.4435 | 3.2474 |
| S18 | 0.2940 | 0.4447 | 0.0196 | 0.0304 | 0.9959 | 0.4348 | 0.6307 | 0.4831 | 0.2730 |

| Datasets | QCham | WOAT | bGWO2 | BBO | ECSA | WOAR | PSO | AGWO | BBA | EGWO | BGOA | SBO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 0.9857 | 0.959 | 0.975 | 0.962 | 0.972 | 0.957 | 0.967 | 0.960 | 0.937 | 0.961 | 0.969 | 0.967 |
| S2 | 0.9688 | 0.949 | 0.935 | 0.945 | 0.958 | 0.950 | 0.933 | 0.934 | 0.931 | 0.947 | 0.96 | 0.942 |
| S3 | 0.9540 | 0.914 | 0.776 | 0.936 | 0.966 | 0.910 | 0.688 | 0.935 | 0.872 | 0.943 | 0.953 | 0.950 |
| S4 | 0.9743 | 0.739 | 0.75 | 0.754 | 1 | 0.763 | 0.73 | 0.757 | 0.61 | 0.753 | 0.946 | 0.734 |
| S5 | 0.7358 | 0.699 | 0.776 | 0.692 | 0.767 | 0.690 | 0.787 | 0.695 | 0.628 | 0.698 | 0.76 | 0.709 |
| S6 | 0.8944 | 0.765 | 0.7 | 0.782 | 0.83 | 0.763 | 0.744 | 0.797 | 0.754 | 0.761 | 0.826 | 0.792 |
| S7 | 0.9834 | 0.884 | 0.963 | 0.880 | 0.931 | 0.880 | 0.921 | 0.893 | 0.877 | 0.863 | 0.883 | 0.898 |
| S9 | 0.9627 | 0.896 | 0.584 | 0.80 | 0.865 | 0.901 | 0.584 | 0.791 | 0.701 | 0.766 | 0.815 | 0.818 |
| S10 | 0.9666 | 0.778 | 0.729 | 0.880 | 1 | 0.759 | 0.737 | 0.878 | 0.722 | 0.870 | 0.979 | 0.863 |
| S11 | 0.9935 | 0.838 | 0.822 | 0.816 | 0.921 | 0.860 | 0.822 | 0.854 | 0.795 | 0.756 | 0.861 | 0.843 |
| S12 | 0.8567 | 0.736 | 0.938 | 0.871 | 0.926 | 0.712 | 0.928 | 0.882 | 0.844 | 0.861 | 0.895 | 0.894 |
| S13 | 0.9714 | 0.861 | 0.834 | 0.798 | 0.847 | 0.857 | 0.819 | 0.813 | 0.8 | 0.804 | 0.803 | 0.798 |
| S14 | 0.8833 | 0.792 | 0.727 | 0.768 | 0.842 | 0.778 | 0.735 | 0.762 | 0.665 | 0.771 | 0.951 | 0.768 |
| S15 | 0.7938 | 0.736 | 0.92 | 0.917 | 0.96 | 0.739 | 0.904 | 0.92 | 0.851 | 0.902 | 0.729 | 0.934 |
| S17 | 0.9825 | 0.935 | 0.92 | 0.966 | 0.985 | 0.932 | 0.933 | 0.957 | 0.919 | 0.966 | 0.979 | 0.968 |
| S18 | 0.7732 | 0.710 | 0.879 | 0.937 | 0.983 | 0.712 | 0.861 | 0.968 | 0.874 | 0.968 | 0.99 | 0.968 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Elaziz, M.A.; Ahmadein, M.; Ataya, S.; Alsaleh, N.; Forestiero, A.; Elsheikh, A.H. A Quantum-Based Chameleon Swarm for Feature Selection. Mathematics 2022, 10, 3606. https://doi.org/10.3390/math10193606
