Modified Remora Optimization Algorithm with Multistrategies for Global Optimization Problem

Abstract

1. Introduction

- A modified remora optimization algorithm (MROA) is proposed with three strategies: a new host-switching mechanism, joint opposite selection, and a restart strategy.

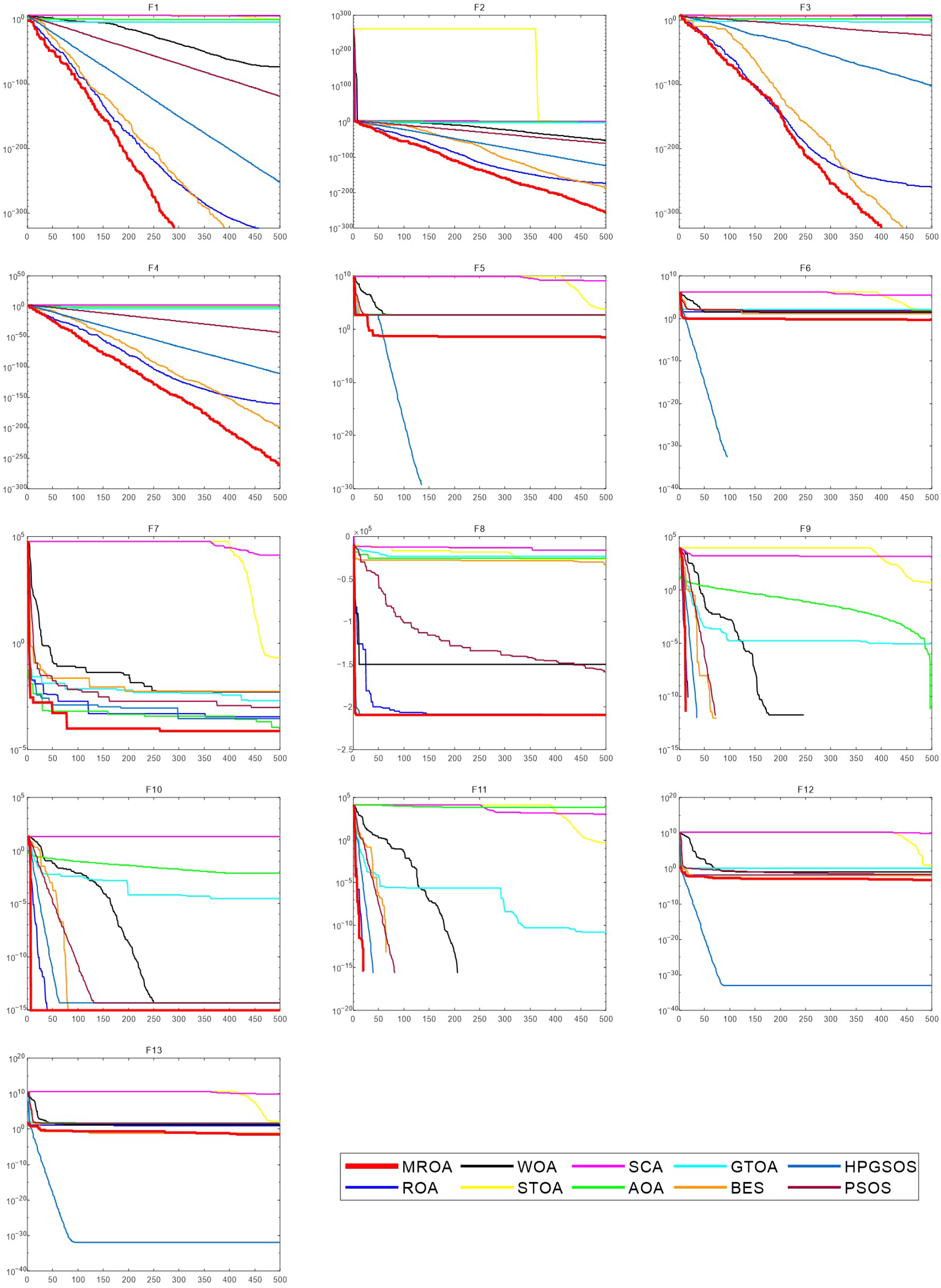

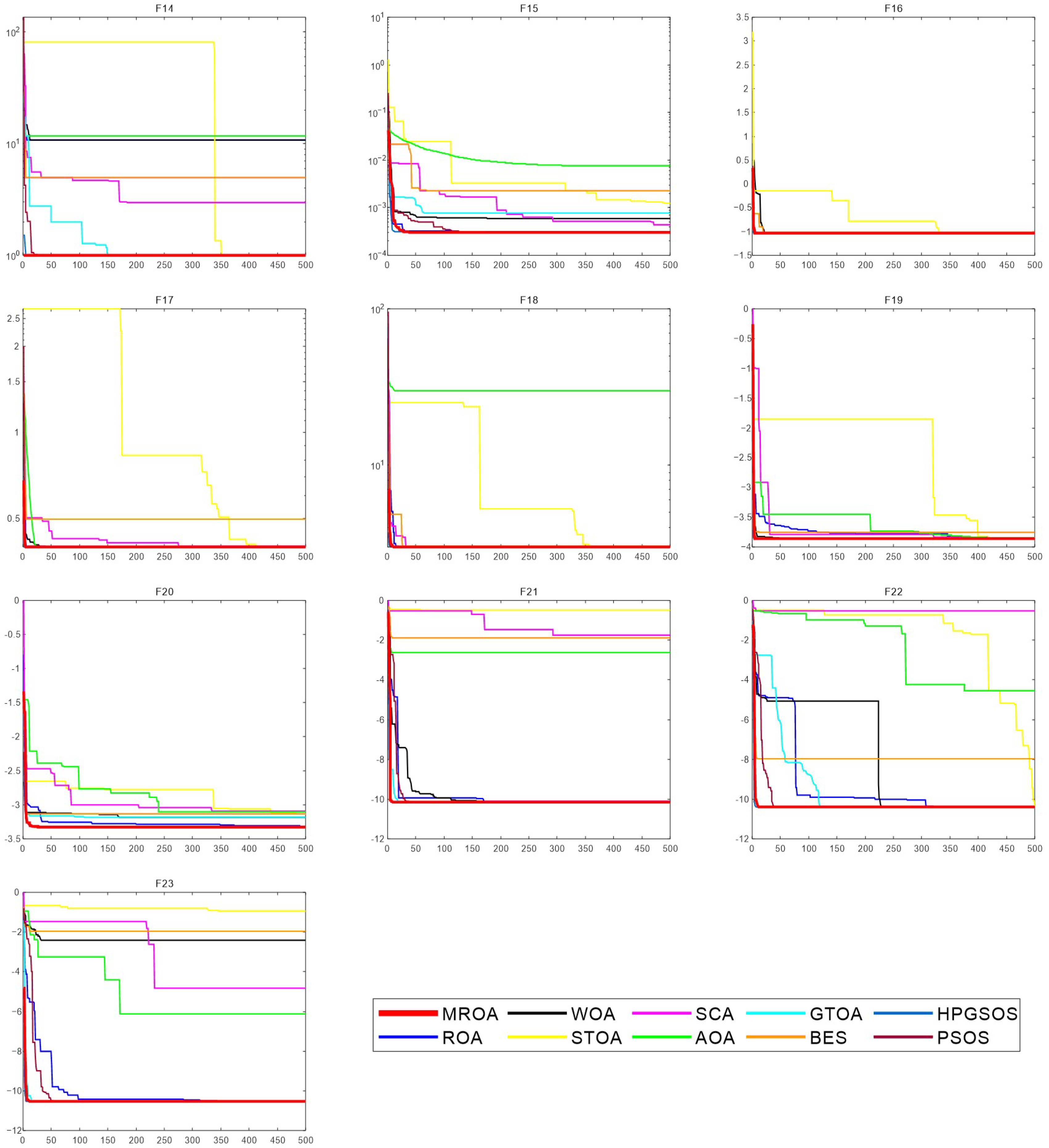

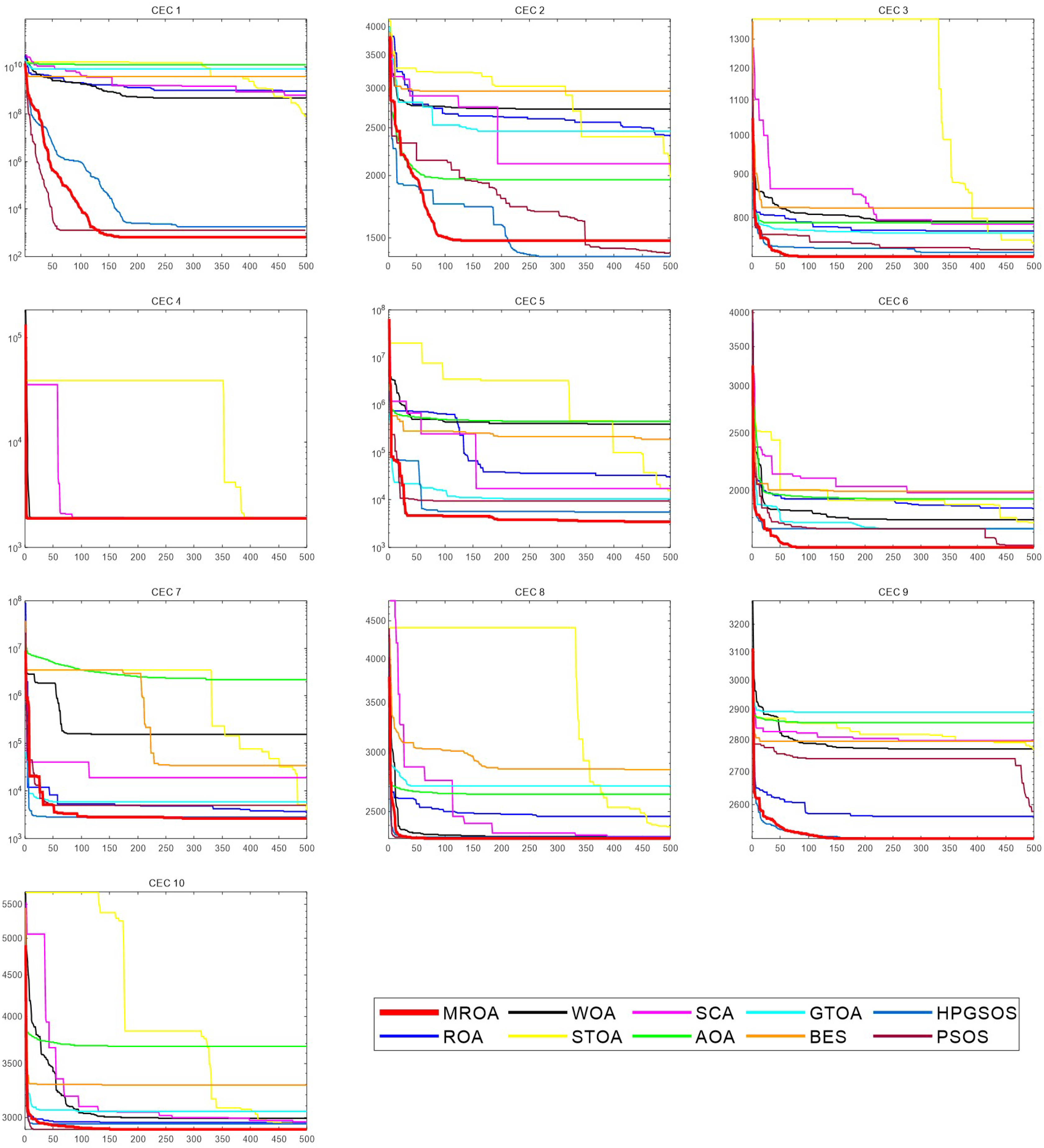

- The performance of MROA is tested on 23 standard benchmark functions and the CEC2020 test suite.

- MROA is tested in four different dimensions (dim = 30, 100, 500, and 1000).

- Five classical constrained engineering design problems are used to validate the engineering applicability of the proposed MROA.

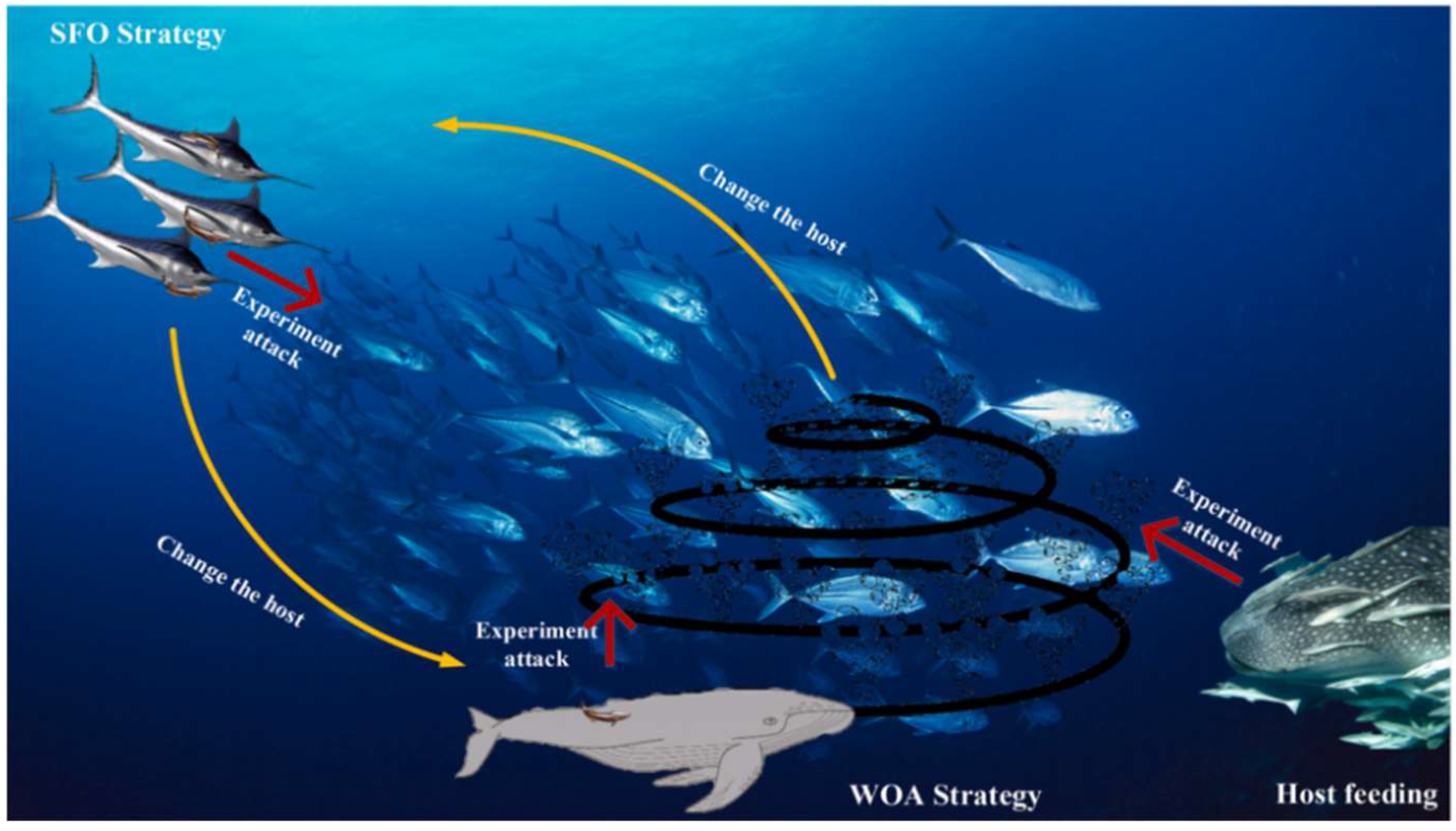

2. Remora Optimization Algorithm (ROA)

2.1. Initialization

2.2. Free Travel (Exploration)

2.2.1. SFO Strategy

2.2.2. Experience Attack

2.3. Eat Thoughtfully (Exploitation)

2.3.1. WOA Strategy

2.3.2. Host Feeding

| Algorithm 1. The pseudocode of ROA |
|---|
| 1. Initialize the population size N and the maximum number of iterations T |
| 2. Initialize the positions of the population X_i (i = 1, 2, …, N) |
| 3. While (t < T) |
| 4. Check whether any search agent goes beyond the search space and amend it |
| 5. Calculate the fitness value of each remora and find the best position X_Best |
| 6. For each remora indexed by i |
| 7. If H(i) == 0 then |
| 8. Update the position of attached sailfishes using Equation (2) |
| 9. Else if H(i) == 1 then |
| 10. Update the position of attached whales using Equations (6)–(9) |
| 11. End if |
| 12. Make a one-step prediction by Equation (3) |
| 13. If f(X_att) < f(X_i^t) then |
| 14. Switch hosts using Equation (4) |
| 15. Else |
| 16. Use Equations (10)–(13) as the host feeding mode for the remora |
| 17. End if |
| 18. End for |
| 19. End while |
| 20. Return X_Best |
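To make the control flow of Algorithm 1 concrete, the following NumPy sketch implements one reading of it. Equations (2)–(13) are not reproduced in this section, so the individual update rules are assumptions modeled on the original ROA paper [23], not a transcription of this paper's equations: X_Best − (rand·(X_Best + X_rand)/2 − X_rand) for sailfish hosts, a WOA spiral D·e^k·cos(2πk) + X_Best with a = −(1 + t/T) for whale hosts, X + (X − X_pre)·randn for the experience attack, and host feeding X + B·(X − C·X_Best) with B drawn from [−V, V], V = 2(1 − t/T), and C = 0.1 as in the parameter table. The function name `roa` and its arguments are hypothetical.

```python
import numpy as np


def roa(fitness, lb, ub, dim, n=20, t_max=200, c=0.1, seed=0):
    """Minimal sketch of the ROA main loop (Algorithm 1).

    The update rules are assumptions modeled on the original ROA paper [23];
    they are not a transcription of Equations (2)-(13).
    """
    rng = np.random.default_rng(seed)
    lb = np.full(dim, lb, dtype=float)
    ub = np.full(dim, ub, dtype=float)
    x = lb + rng.random((n, dim)) * (ub - lb)     # step 2: random initial positions
    h = np.zeros(n, dtype=int)                     # H(i): 0 = sailfish host, 1 = whale host
    best_x, best_f = x[0].copy(), np.inf

    for t in range(t_max):                         # step 3
        x = np.clip(x, lb, ub)                     # step 4: amend out-of-bound agents
        f = np.array([fitness(xi) for xi in x])    # step 5: evaluate and track X_Best
        i_best = int(np.argmin(f))
        if f[i_best] < best_f:
            best_f, best_x = float(f[i_best]), x[i_best].copy()
        x_prev = x.copy()                          # positions at the start of iteration t
        a = -(1 + t / t_max)                       # assumed WOA coefficient
        v = 2 * (1 - t / t_max)                    # assumed host-feeding amplitude

        for i in range(n):                         # step 6
            if h[i] == 0:                          # steps 7-8: assumed SFO-style move
                x_rand = x_prev[rng.integers(n)]
                new = best_x - (rng.random() * (best_x + x_rand) / 2 - x_rand)
            else:                                  # steps 9-10: assumed WOA spiral
                d = np.abs(best_x - x_prev[i])
                k = rng.random() * (a - 1) + 1
                new = d * np.exp(k) * np.cos(2 * np.pi * k) + best_x
            new = np.clip(new, lb, ub)
            # Step 12: experience attack, a one-step prediction along the last move.
            x_att = np.clip(new + (new - x_prev[i]) * rng.standard_normal(), lb, ub)
            if fitness(x_att) < fitness(new):      # step 13
                new = x_att
                h[i] = int(rng.random() >= 0.5)    # step 14: H(i) = round(rand)
            else:                                  # step 16: assumed host-feeding move
                b = 2 * v * rng.random() - v
                new = new + b * (new - c * best_x)
            x[i] = new

    return best_x, best_f
```

For example, `roa(lambda z: float(np.sum(z ** 2)), -100, 100, dim=5)` minimizes a 5-dimensional sphere function. Evaluating `fitness` twice per experience attack keeps the sketch short at the cost of extra function evaluations.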

3. The Proposed Multistrategies for ROA

3.1. Host-Switching Mechanism

3.2. Joint Opposite Selection

3.2.1. Selective Leading Opposition (SLO)

3.2.2. Dynamic Opposite (DO)
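Step 21 of Algorithm 2 applies DO to each position, and the parameter table sets the jump rate Jr = 0.25. Equation (25) is not reproduced in this section, so the sketch below assumes the dynamic-opposite form of dynamic opposite learning [29], X_DO = X + w·r1·(r2·(lb + ub − X) − X), applied with probability Jr and followed by greedy selection. The weight w = 3.0, the use of the static bounds lb and ub, and the uniform re-sampling of out-of-range components are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np


def dynamic_opposite(x, lb, ub, rng, jr=0.25, w=3.0):
    """Return a dynamic-opposite candidate for one position (sketch).

    With probability jr the candidate is X + w*r1*(r2*(lb + ub - X) - X);
    otherwise X is returned unchanged. w = 3.0 is an illustrative choice.
    """
    x = np.asarray(x, dtype=float)
    lb = np.broadcast_to(np.asarray(lb, dtype=float), x.shape)
    ub = np.broadcast_to(np.asarray(ub, dtype=float), x.shape)
    if rng.random() >= jr:                     # jump rate: skip DO most of the time
        return x.copy()
    x_opp = lb + ub - x                        # ordinary opposite point
    r1, r2 = rng.random(), rng.random()
    cand = x + w * r1 * (r2 * x_opp - x)       # dynamic opposite point
    out = (cand < lb) | (cand > ub)            # assumed repair: re-sample out-of-range parts
    cand[out] = lb[out] + rng.random(int(out.sum())) * (ub[out] - lb[out])
    return cand


def do_step(x, fitness, lb, ub, rng, jr=0.25):
    """Greedy selection between X and its dynamic-opposite candidate."""
    cand = dynamic_opposite(x, lb, ub, rng, jr)
    return cand if fitness(cand) < fitness(x) else np.asarray(x, dtype=float)
```

Because the candidate only replaces X when it is strictly better, applying `do_step` never worsens a position; the jump rate controls how often the extra fitness evaluation is spent.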

3.3. Restart Strategy (RS)

3.4. The Proposed MROA

| Algorithm 2. The pseudocode of MROA |
|---|
| 1. Initialize the population size N and the maximum number of iterations T |
| 2. Initialize the positions of the population X_i (i = 1, 2, …, N) |
| 3. While (t < T) |
| 4. Check whether any search agent goes beyond the search space and amend it |
| 5. Calculate the fitness value of each remora and find the best solution X_Best |
| 6. Perform SLO for each position by Equation (19) |
| 7. For each remora indexed by i |
| 8. If H(i) == 0 then |
| 9. Update the position of attached sailfishes using Equation (2) |
| 10. Else if H(i) == 1 then |
| 11. Update the position of attached whales using Equations (6)–(9) |
| 12. End if |
| 13. Perform the experience attack by Equation (3) |
| 14. If rand < P then |
| 15. Make a prediction around the host by Equation (14) |
| 16. If f(X_new) < f(X_i^t) then |
| 17. Switch hosts using Equation (5) |
| 18. End if |
| 19. End if |
| 20. Use Equation (10) as the host feeding mode for the remora |
| 21. Perform DO for each position by Equation (25) |
| 22. Update trial(i) for each remora |
| 23. If trial(i) > limit then |
| 24. Generate positions by Equations (26) and (27) |
| 25. Choose the position with the better fitness value |
| 26. End if |
| 27. End for |
| 28. End while |
| 29. Return X_Best |
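Steps 22–26 of Algorithm 2 can be sketched as follows, with limit = log t taken from the parameter table (natural logarithm assumed). Equations (26) and (27) are not shown in this section; the two candidates below, a uniform re-initialization and the point rand·(lb + ub) − X_i clipped to the bounds, are an assumption modeled on the restart mechanism of [28]. Counting only iterations without improvement in trial(i), and resetting it to zero after a restart, are likewise assumptions; all names are hypothetical.

```python
import math

import numpy as np


def update_trial(trial, improved):
    """Step 22 (assumed rule): reset the counter on improvement, else increment it."""
    return 0 if improved else trial + 1


def maybe_restart(x, f_x, trial, t, fitness, lb, ub, rng):
    """Steps 23-26: restart a stagnant remora when trial exceeds limit = log t.

    Returns (position, fitness, trial). The two candidate formulas are
    assumptions standing in for Equations (26) and (27).
    """
    limit = math.log(max(t, 1))                  # natural log assumed; log 1 = 0
    if trial <= limit:
        return x, f_x, trial
    lb = np.broadcast_to(np.asarray(lb, dtype=float), x.shape)
    ub = np.broadcast_to(np.asarray(ub, dtype=float), x.shape)
    c1 = lb + rng.random(x.shape) * (ub - lb)                    # uniform re-initialization
    c2 = np.clip(rng.random(x.shape) * (ub + lb) - x, lb, ub)   # randomized opposite point
    f1, f2 = fitness(c1), fitness(c2)
    if f1 <= f2:                                 # step 25: keep the better candidate
        return c1, f1, 0
    return c2, f2, 0
```

Since limit = log t grows slowly, a stagnant remora is restarted after only a few unproductive iterations even late in the run; for symmetric bounds such as [−100, 100] the second candidate reduces to the plain opposite point −X_i.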

3.5. The Computational Complexity of MROA

- Complexity to initialize the parameters: O(1).

- Complexity to initialize the population: O(N × dim), where N is the number of search agents and dim is the dimension size.

- The computational complexity of updating the population's solutions comes from several sources. The SFO and WOA strategies cost O(N × dim × T), and the host feeding and the experience attack each cost O(N × dim × T). The cost of evaluating the solutions around the host is uncertain, so it is assumed to take its maximum value, O(N × dim × T). The complexity of JOS is O(SLO) + O(DO) = O(N × size(dc) × T) + O(N × dim × T × Jr), where size(dc) is the number of close-distance dimensions and Jr is the jump rate. Moreover, according to [28], the time complexity of the restart strategy is O(2 × N × dim × T/limit), where limit is a predefined limit. Summing these terms, the computational complexity of updating the population's solutions is O(N × T × (dim × (Jr + 2/limit + 4) + size(dc))).
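As a sanity check on the factored bound, the sketch below tallies each cost term listed above with all per-operation constants set to 1 and limit treated as a constant, then compares the sum with N × T × (dim × (Jr + 2/limit + 4) + size(dc)). The function names and the sample values (N = 30, dim = 30, T = 500, size(dc) = 10, Jr = 0.25, limit = 5) are illustrative only.

```python
def update_cost_terms(n, dim, t, size_dc, jr, limit):
    """Sum the per-component costs of the update phase (unit constants)."""
    terms = {
        "SFO/WOA host update": n * dim * t,
        "host feeding": n * dim * t,
        "experience attack": n * dim * t,
        "prediction around host (worst case)": n * dim * t,
        "SLO": n * size_dc * t,
        "DO": n * dim * t * jr,
        "restart": 2 * n * dim * t / limit,
    }
    return sum(terms.values())


def update_cost_factored(n, dim, t, size_dc, jr, limit):
    """The factored form N * T * (dim * (Jr + 2/limit + 4) + size(dc))."""
    return n * t * (dim * (jr + 2 / limit + 4) + size_dc)


summed = update_cost_terms(30, 30, 500, size_dc=10, jr=0.25, limit=5)
factored = update_cost_factored(30, 30, 500, size_dc=10, jr=0.25, limit=5)
print(summed, factored)
```

Both expressions evaluate to 2,242,500 operations for these inputs, up to floating-point rounding; the four O(N × dim × T) terms account for the constant 4, and the restart term contributes the 2/limit inside the parentheses.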

4. Experimental Tests and Analysis

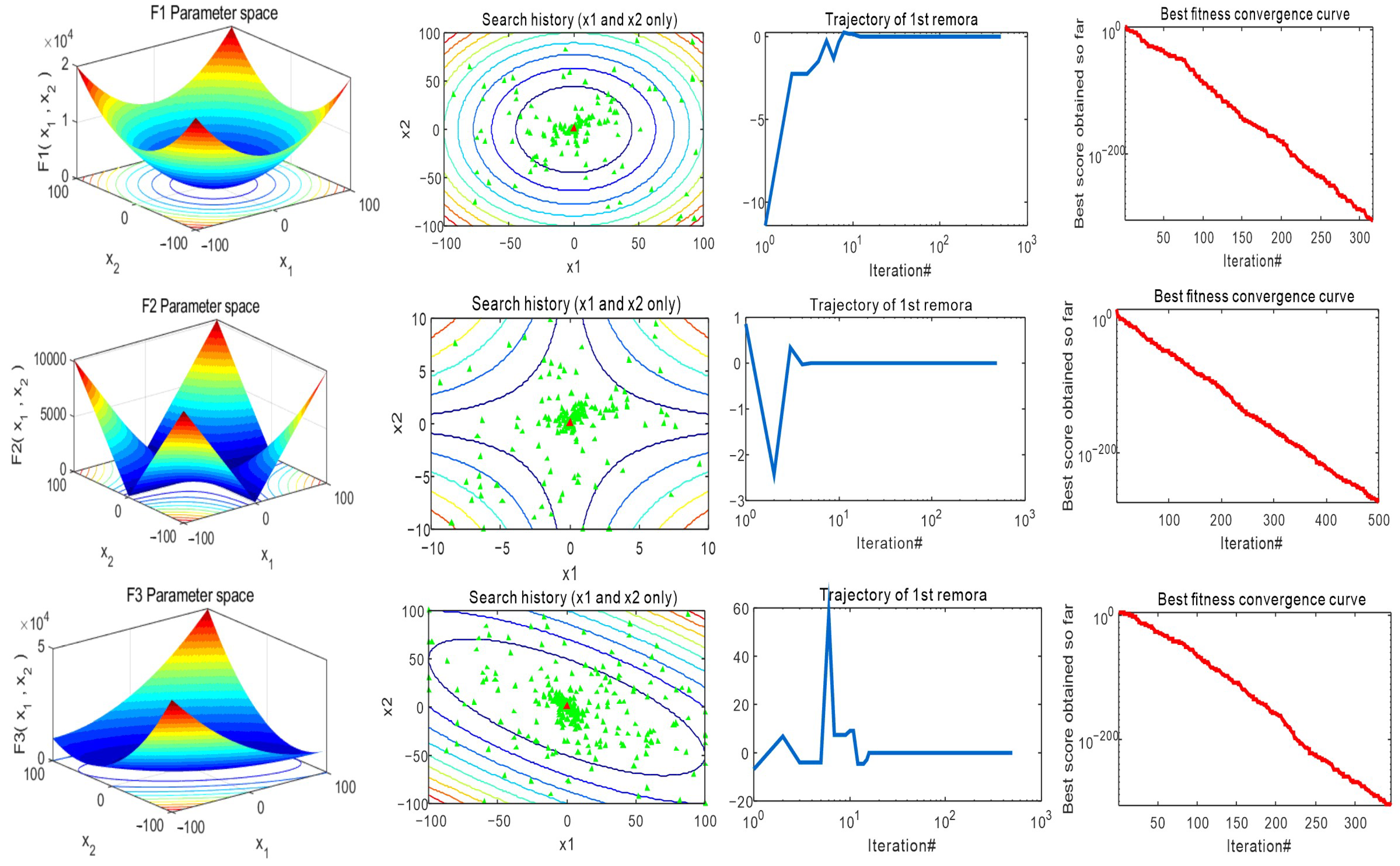

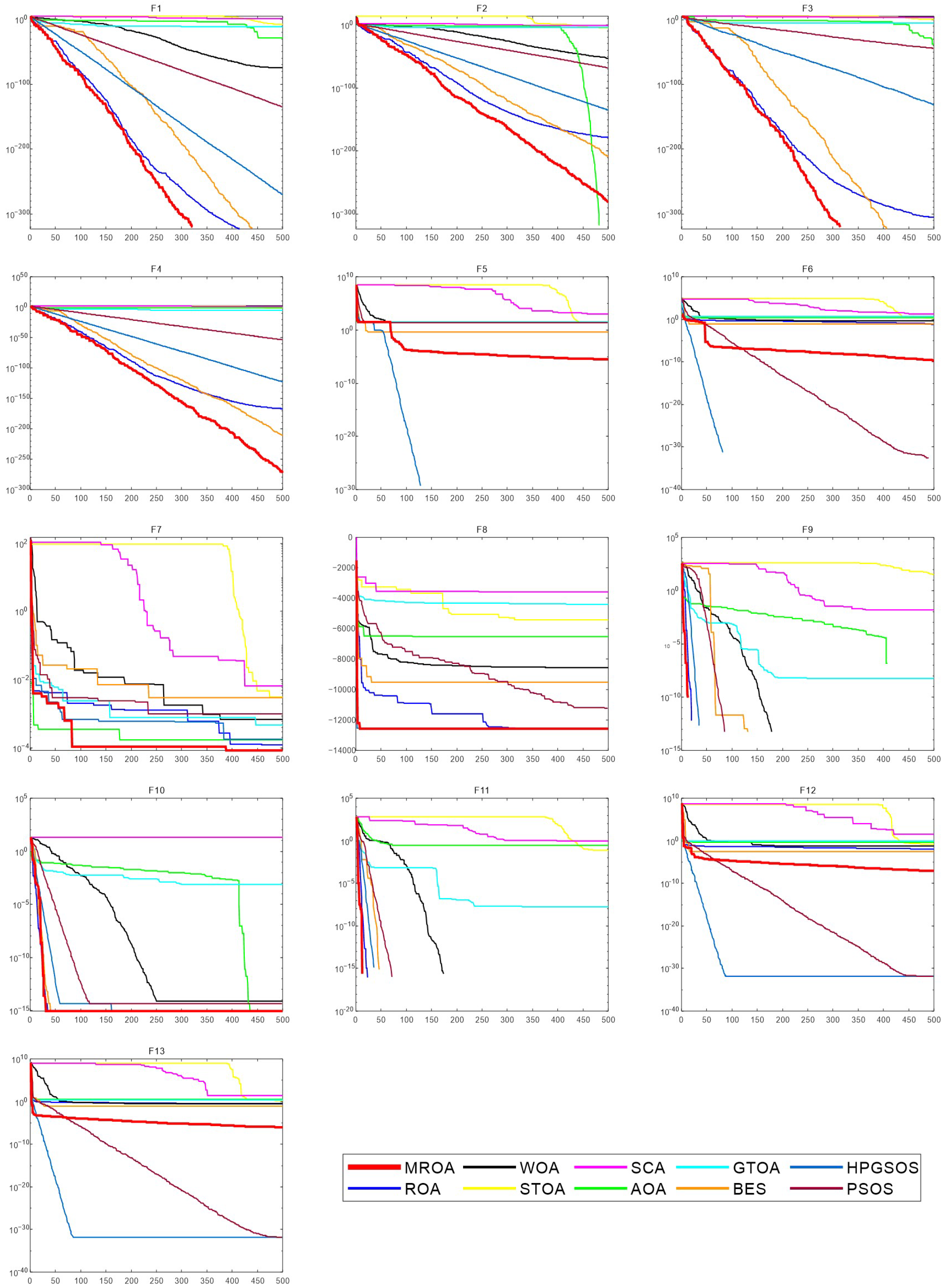

4.1. Experiments on Standard Benchmark Functions

4.2. Experiments on CEC2020 Test Suite

4.3. Sensitivity Analysis of β on MROA

5. Results of Constrained Engineering Design Problems

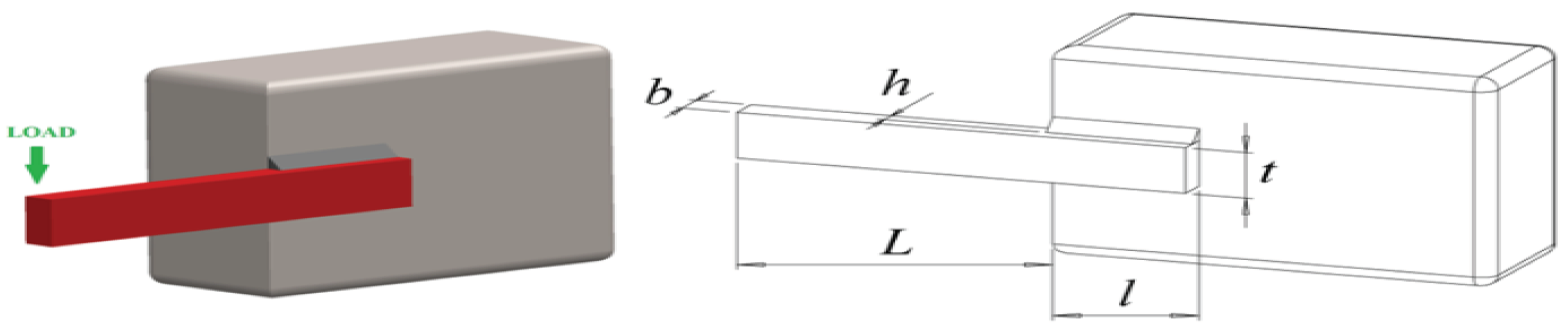

5.1. Welded Beam Design Problem

5.2. The Tension/Compression Spring Design Problem

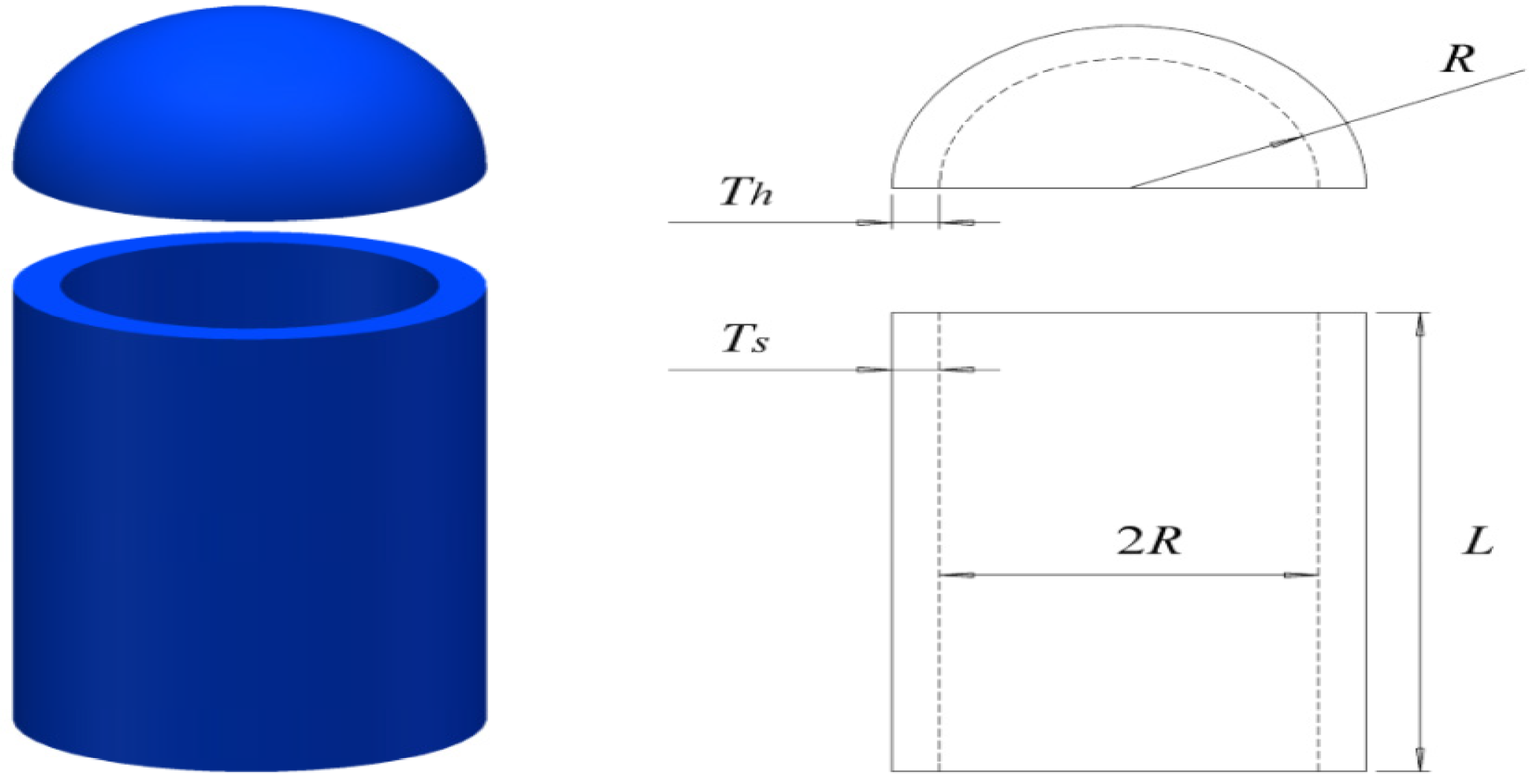

5.3. Pressure Vessel Design Problem

5.4. Speed Reducer Design Problem

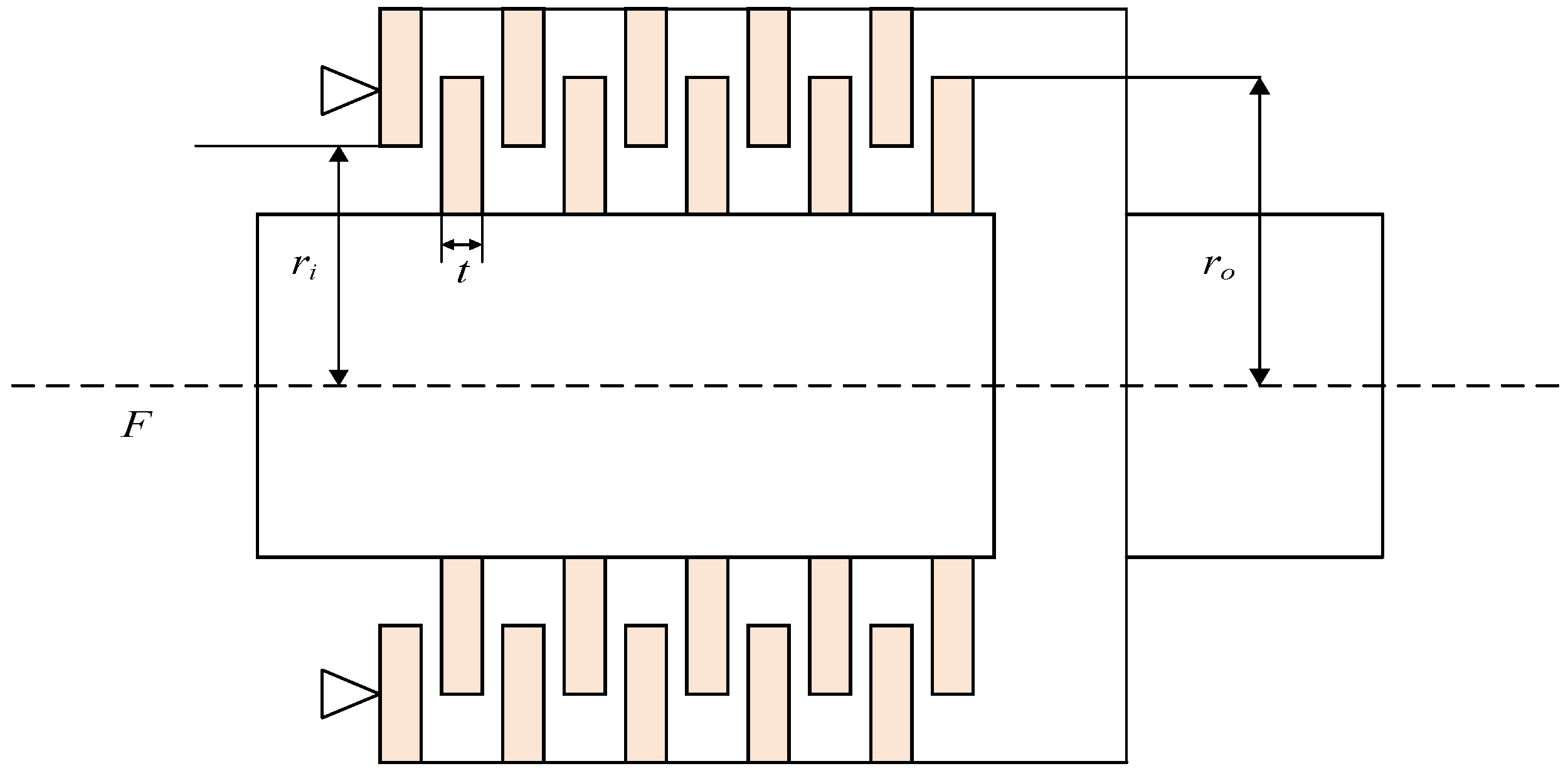

5.5. Multiple Disc Clutch Brake Problem

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Simon, D. Biogeography-Based Optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

- Ferreira, C. Gene Expression Programming: A New Adaptive Algorithm for Solving Problems. Complex Syst. 2001, 13, 87–129.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Zhang, Y.; Jin, Z. Group teaching optimization algorithm: A novel metaheuristic method for solving global optimization problems. Expert Syst. Appl. 2020, 148, 113246.

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.

- Cheng, S.; Qin, Q.; Chen, J.; Shi, Y. Brain storm optimization algorithm. Artif. Intell. Rev. 2016, 46, 445–458.

- Fearn, T. Particle swarm optimisation. NIR News 2014, 25, 27.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A novel meta-heuristic optimization algorithm. Knowl. Based Syst. 2022, 242, 108320.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Elaziz, M.A.; Gandomi, A.H. The Arithmetic Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl. Based Syst. 2016, 96, 120–133.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2015, 27, 495–513.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Zhang, Y.; Chi, A. Group teaching optimization algorithm with information sharing for numerical optimization and engineering optimization. J. Intell. Manuf. 2021.

- Sun, K.; Jia, H.; Li, Y.; Jiang, Z. Hybrid improved slime mould algorithm with adaptive β hill climbing for numerical optimization. J. Intell. Fuzzy Syst. 2020, 40, 1–13.

- Dorigo, M.; Birattari, M.; Stützle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Shah-Hosseini, H. The intelligent water drops algorithm: A nature-inspired swarm-based optimization algorithm. Int. J. Bio-Inspired Comput. 2009, 1, 71–79.

- Weyland, D. A rigorous analysis of the harmony search algorithm: How the research community can be misled by a “novel” methodology. Int. J. Appl. Metaheuristic Comput. 2010, 1, 50–60.

- Camacho-Villalón, C.L.; Dorigo, M.; Stützle, T. The intelligent water drops algorithm: Why it cannot be considered a novel algorithm. Swarm Intell. 2019, 13, 173–192.

- Sörensen, K. Metaheuristics—the metaphor exposed. Int. Trans. Oper. Res. 2015, 22, 3–18.

- Jia, H.; Peng, X.; Lang, C. Remora optimization algorithm. Expert Syst. Appl. 2021, 185, 115665.

- Liu, Q.; Li, N.; Jia, H.; Qi, Q.; Abualigah, L. Modified Remora Optimization Algorithm for Global Optimization and Multilevel Thresholding Image Segmentation. Mathematics 2022, 10, 1014.

- Zheng, R.; Jia, H.; Abualigah, L.; Wang, S.; Wu, D. An improved remora optimization algorithm with autonomous foraging mechanism for global optimization problems. Math. Biosci. Eng. 2022, 19, 3994–4037.

- Wang, S.; Hussien, A.G.; Jia, H.; Abualigah, L.; Zheng, R. Enhanced Remora Optimization Algorithm for Solving Constrained Engineering Optimization Problems. Mathematics 2022, 10, 1696.

- Arini, F.Y.; Chiewchanwattana, S.; Soomlek, C.; Sunat, K. Joint Opposite Selection (JOS): A premiere joint of selective leading opposition and dynamic opposite enhanced Harris’ hawks optimization for solving single-objective problems. Expert Syst. Appl. 2022, 188, 116001.

- Zhang, H.; Wang, Z.; Chen, W.; Heidari, A.A.; Wang, M.; Zhao, X.; Liang, G.; Chen, H.; Zhang, X. Ensemble mutation-driven salp swarm algorithm with restart mechanism: Framework and fundamental analysis. Expert Syst. Appl. 2021, 165, 113897.

- Xu, Y.; Yang, Z.; Li, X.; Kang, H.; Yang, X. Dynamic opposite learning enhanced teaching–learning-based optimization. Knowl. Based Syst. 2020, 188, 104966.

- Dhargupta, S.; Ghosh, M.; Mirjalili, S.; Sarkar, R. Selective opposition based grey wolf optimization. Expert Syst. Appl. 2020, 151, 113389.

- Mandal, B.; Roy, P.K. Optimal reactive power dispatch using quasi-oppositional teaching learning based optimization. Int. J. Electr. Power Energy Syst. 2013, 53, 123–134.

- Fan, Q.; Chen, Z.; Xia, Z. A novel quasi-reflected Harris hawks optimization algorithm for global optimization problems. Soft Comput. 2020, 24, 14825–14843.

- Dhiman, G.; Kaur, A. STOA: A bio-inspired based optimization algorithm for industrial engineering problems. Eng. Appl. Artif. Intell. 2019, 82, 148–174.

- Alsattar, H.A.; Zaidan, A.A.; Zaidan, B.B. Novel meta-heuristic bald eagle search optimisation algorithm. Artif. Intell. Rev. 2020, 53, 2237–2264.

- Farnad, B.; Jafarian, A. A new nature-inspired hybrid algorithm with a penalty method to solve constrained problem. Int. J. Comput. Methods 2018, 15, 1850069.

- Jafarian, A.; Farnad, B. Hybrid PSOS Algorithm for Continuous Optimization. Int. J. Ind. Math. 2019, 11, 143–156.

- Hussien, A.G.; Amin, M.; Abd El Aziz, M. A comprehensive review of moth-flame optimisation: Variants, hybrids, and applications. J. Exp. Theor. Artif. Intell. 2020, 32, 705–725.

- Kaveh, A.; Mahdavi, V. Colliding bodies optimization: A novel meta-heuristic method. Comput. Struct. 2014, 139, 18–27.

- Mahdavi, M.; Fesanghary, M.; Damangir, E. An improved harmony search algorithm for solving optimization problems. Appl. Math. Comput. 2007, 188, 1567–1579.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- He, Q.; Wang, L. An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intell. 2007, 20, 89–99.

- Kaveh, A.; Khayatazad, M. A new meta-heuristic method: Ray optimization. Comput. Struct. 2012, 112, 283–294.

- Hussien, A.G.; Amin, M. A self-adaptive Harris hawks optimization algorithm with opposition-based learning and chaotic local search strategy for global optimization and feature selection. Int. J. Mach. Learn. Cybern. 2022, 13, 309–336.

- Huang, F.-z.; Wang, L.; He, Q. An effective co-evolutionary differential evolution for constrained optimization. Appl. Math. Comput. 2007, 186, 340–356.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Kaveh, A.; Farhoudi, N. A new optimization method: Dolphin echolocation. Adv. Eng. Softw. 2013, 59, 53–70.

- Dhiman, G.; Kumar, V. Spotted hyena optimizer: A novel bio-inspired based metaheuristic technique for engineering applications. Adv. Eng. Softw. 2017, 114, 48–70.

- Kaveh, A.; Talatahari, S. An improved ant colony optimization for constrained engineering design problems. Eng. Comput. 2010, 27, 155–182.

- Mezura-Montes, E.; Coello Coello, C.A. An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int. J. Gen. Syst. 2008, 37, 443–473.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Gandomi, A.H.; Yang, X.-S.; Alavi, A.H.; Talatahari, S. Bat algorithm for constrained optimization tasks. Neural Comput. Appl. 2013, 22, 1239–1255.

- He, Q.; Wang, L. A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl. Math. Comput. 2007, 186, 1407–1422.

- Kaveh, A.; Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 2010, 213, 267–289.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A Nature-inspired Metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Baykasoglu, A.; Akpinar, S. Weighted superposition attraction (WSA): A swarm intelligence algorithm for optimization problems – Part 2: Constrained optimization. Appl. Soft Comput. 2015, 37, 396–415.

- Czerniak, J.M.; Zarzycki, H.; Ewald, D. AAO as a new strategy in modeling and simulation of constructional problems optimization. Simul. Model. Pract. Theory 2017, 76, 22–33.

- Gandomi, A.H.; Yang, X.-S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Baykasoglu, A.; Ozsoydan, F.B. Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl. Soft Comput. 2015, 36, 152–164.

- Abualigah, L.; Elaziz, M.A.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2021, 191, 116158.

- Kamboj, V.K.; Nandi, A.; Bhadoria, A.; Sehgal, S. An intensify Harris hawks optimizer for numerical and engineering optimization problems. Appl. Soft Comput. 2020, 89, 106018.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm – A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110, 151–166.

- Sayed, G.I.; Darwish, A.; Hassanien, A.E. A new chaotic multi-verse optimization algorithm for solving engineering optimization problems. J. Exp. Theor. Artif. Intell. 2018, 30, 293–317.

| Algorithm | Parameter Settings |
|---|---|
| MROA | C = 0.1; β = 0.6; Jr = 0.25; limit = log t |
| ROA [23] | C = 0.1 |
| WOA [9] | a1 = [2, 0]; a2 = [−2, −1]; b = 1 |
| STOA [33] | Cf = 2; u = 1; v = 1 |
| SCA [12] | α = 2 |
| AOA [11] | MOP_Max = 1; MOP_Min = 0.2; A = 5; Mu = 0.499 |
| GTOA [5] | - |
| BES [34] | α = [1.5, 2.0]; r = [0, 1] |
| HPGSOS [35] | Pm = 0.2; Pc = 0.7; c1 = c2 = 2; w = 1 |
| PSOS [36] | c1 = c2 = 2; w = 1 |

| F | dim | Range | fmin |
|---|---|---|---|
| F1 | 30/100/500/1000 | [−100, 100] | 0 |
| F2 | 30/100/500/1000 | [−10, 10] | 0 |
| F3 | 30/100/500/1000 | [−100, 100] | 0 |
| F4 | 30/100/500/1000 | [−100, 100] | 0 |
| F5 | 30/100/500/1000 | [−30, 30] | 0 |
| F6 | 30/100/500/1000 | [−100, 100] | 0 |
| F7 | 30/100/500/1000 | [−1.28, 1.28] | 0 |
| F8 | 30/100/500/1000 | [−500, 500] | −418.9829 × dim |
| F9 | 30/100/500/1000 | [−5.12, 5.12] | 0 |
| F10 | 30/100/500/1000 | [−32, 32] | 0 |
| F11 | 30/100/500/1000 | [−600, 600] | 0 |
| F12 | 30/100/500/1000 | [−50, 50] | 0 |
| F13 | 30/100/500/1000 | [−50, 50] | 0 |
| F14 | 2 | [−65, 65] | 1 |
| F15 | 4 | [−5, 5] | 0.00030 |
| F16 | 2 | [−5, 5] | −1.0316 |
| F17 | 2 | [−5, 5] | 0.398 |
| F18 | 5 | [−2, 2] | 3 |
| F19 | 3 | [−1, 2] | −3.86 |
| F20 | 6 | [0, 1] | −3.32 |
| F21 | 4 | [0, 10] | −10.1532 |
| F22 | 4 | [0, 10] | −10.4028 |
| F23 | 4 | [0, 10] | −10.5363 |
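The table lists only each function's dimension, search range, and fmin. These values match the classical 23-function benchmark suite, in which F1 is the sphere function, F8 the generalized Schwefel function 2.26, F9 Rastrigin, F10 Ackley, and F11 Griewank. Assuming those standard definitions (the mapping is our reading of the ranges and optima, not something the table states, and the function names are our labels), a minimal NumPy sketch of these five functions is:

```python
import numpy as np

# Standard definitions assumed for five rows of the table above.


def sphere(x):          # F1: range [-100, 100], fmin = 0
    return float(np.sum(x ** 2))


def schwefel_2_26(x):   # F8: range [-500, 500], fmin = -418.9829 * dim
    return float(-np.sum(x * np.sin(np.sqrt(np.abs(x)))))


def rastrigin(x):       # F9: range [-5.12, 5.12], fmin = 0
    return float(np.sum(x ** 2 - 10 * np.cos(2 * np.pi * x) + 10))


def ackley(x):          # F10: range [-32, 32], fmin = 0
    n = x.size
    term1 = -20 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / n))
    term2 = -np.exp(np.sum(np.cos(2 * np.pi * x)) / n)
    return float(term1 + term2 + 20 + np.e)


def griewank(x):        # F11: range [-600, 600], fmin = 0
    i = np.arange(1, x.size + 1)
    return float(np.sum(x ** 2) / 4000 - np.prod(np.cos(x / np.sqrt(i))) + 1)
```

Evaluating them at their known minimizers (the origin for F1, F9, F10, and F11; x_i ≈ 420.9687 in every dimension for F8) reproduces the fmin column. For Ackley, floating-point rounding can leave a residue on the order of 10^−16 instead of an exact 0.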

| F | dim | Metric | MROA | ROA | WOA | STOA | SCA | AOA | GTOA | BES | HPGSOS | PSOS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 30 | Best | 0 | 0 | 1.39 × 10^−87 | 1.41 × 10^−9 | 8.31 × 10^−4 | 3.03 × 10^−172 | 1.82 × 10^−16 | 0 | 1.43 × 10^−277 | 3.62 × 10^−139 |
| | | Mean | 0 | 1.27 × 10^−311 | 3.24 × 10^−71 | 7.66 × 10^−7 | 1.44 × 10^1 | 5.23 × 10^−14 | 1.32 × 10^−6 | 0 | 3.86 × 10^−268 | 1.08 × 10^−135 |
| | | Std | 0 | 0 | 2.81 × 10^−70 | 1.94 × 10^−6 | 3.83 × 10^1 | 5.12 × 10^−13 | 8.35 × 10^−6 | 0 | 0 | 2.57 × 10^−135 |
| | 100 | Best | 0 | 0 | 3.69 × 10^−86 | 1.95 × 10^−5 | 1.35 × 10^3 | 1.51 × 10^−3 | 2.27 × 10^−21 | 0 | 9 × 10^−261 | 1.36 × 10^−125 |
| | | Mean | 0 | 6.32 × 10^−307 | 8.54 × 10^−71 | 1.12 × 10^−2 | 1.15 × 10^4 | 2.51 × 10^−2 | 1.97 × 10^−6 | 5.9 × 10^−308 | 3.94 × 10^−255 | 6.14 × 10^−123 |
| | | Std | 0 | 0 | 8.3 × 10^−70 | 2.41 × 10^−2 | 6.85 × 10^3 | 1.03 × 10^−2 | 7.31 × 10^−6 | 0 | 0 | 1.54 × 10^−122 |
| F2 | 30 | Best | 0 | 1.56 × 10^−181 | 1.32 × 10^−57 | 5.28 × 10^−7 | 2.13 × 10^−4 | 0 | 5.15 × 10^−8 | 1.98 × 10^−232 | 7.32 × 10^−139 | 1.75 × 10^−70 |
| | | Mean | 2.33 × 10^−242 | 1.15 × 10^−153 | 7.7 × 10^−49 | 1.2 × 10^−5 | 2.54 × 10^−2 | 0 | 4.52 × 10^−4 | 2.96 × 10^−148 | 2.88 × 10^−135 | 4.14 × 10^−69 |
| | | Std | 0 | 1.15 × 10^−152 | 7.55 × 10^−48 | 1.43 × 10^−5 | 4.87 × 10^−2 | 0 | 1.04 × 10^−3 | 2.96 × 10^−147 | 2.3 × 10^−134 | 6.49 × 10^−69 |
| | 100 | Best | 0 | 3.01 × 10^−185 | 2.94 × 10^−59 | 1.93 × 10^−4 | 2.35 × 10^−1 | 1.34 × 10^−123 | 6.15 × 10^−8 | 5.58 × 10^−232 | 3.32 × 10^−132 | 3.76 × 10^−65 |
| | | Mean | 9.21 × 10^−239 | 2.99 × 10^−161 | 1.05 × 10^−49 | 2.58 × 10^−3 | 6.44 | 2.68 × 10^−44 | 9.88 × 10^−4 | 5.10 × 10^−162 | 1.15 × 10^−128 | 5.35 × 10^−64 |
| | | Std | 0 | 2.81 × 10^−160 | 7.92 × 10^−49 | 2.03 × 10^−3 | 5.06 | 2.68 × 10^−43 | 1.78 × 10^−3 | 5.1 × 10^−161 | 3.25 × 10^−128 | 5.91 × 10^−64 |
| F3 | 30 | Best | 0 | 0 | 1.23 × 10^4 | 1.71 × 10^−4 | 4.59 × 10^2 | 9.49 × 10^−163 | 7.5 × 10^−18 | 0 | 2.84 × 10^−145 | 7.98 × 10^−50 |
| | | Mean | 0 | 4.06 × 10^−281 | 4.5 × 10^4 | 8.09 × 10^−2 | 8.5 × 10^3 | 8.7 × 10^−3 | 1.31 × 10^−4 | 1.25 × 10^2 | 8.42 × 10^−128 | 9.25 × 10^−44 |
| | | Std | 0 | 0 | 1.42 × 10^4 | 2.52 × 10^−1 | 5.47 × 10^3 | 2.12 × 10^−2 | 4.39 × 10^−4 | 1.22 × 10^3 | 8.42 × 10^−127 | 8.53 × 10^−43 |
| | 100 | Best | 0 | 7.68 × 10^−320 | 5.72 × 10^5 | 1.15 × 10^2 | 8.5 × 10^4 | 1.99 × 10^−1 | 2.08 × 10^−17 | 0 | 1.88 × 10^−127 | 5.43 × 10^−34 |
| | | Mean | 0 | 1.35 × 10^−272 | 1.05 × 10^6 | 1.95 × 10^3 | 2.55 × 10^5 | 1.43 × 10^3 | 3.82 × 10^−3 | 5 | 5.49 × 10^−110 | 2.96 × 10^−27 |
| | | Std | 0 | 0 | 2.56 × 10^5 | 1.85 × 10^3 | 6.41 × 10^4 | 1.43 × 10^4 | 1.99 × 10^−2 | 49.7 | 5.19 × 10^−109 | 1.5 × 10^−26 |
| F4 | 30 | Best | 0 | 1.56 × 10^−182 | 1.23 × 10^−1 | 4.74 × 10^−3 | 8.6 | 1.69 × 10^−66 | 2.93 × 10^−9 | 2.96 × 10^−245 | 9.43 × 10^−124 | 7.39 × 10^−57 |
| | | Mean | 6.09 × 10^−233 | 6.57 × 10^−153 | 46.4 | 5.19 × 10^−2 | 35.5 | 2.69 × 10^−2 | 2.3 × 10^−4 | 1.11 × 10^−168 | 1.35 × 10^−120 | 5.39 × 10^−55 |
| | | Std | 0 | 6.57 × 10^−152 | 29.3 | 8.6 × 10^−2 | 10.8 | 2.01 × 10^−2 | 5.02 × 10^−4 | 0 | 4.42 × 10^−120 | 6.46 × 10^−55 |
| | 100 | Best | 0 | 4.22 × 10^−180 | 3.91 | 10.6 | 79.3 | 7.00 × 10^−2 | 8.03 × 10^−10 | 5.5 × 10^−238 | 1.12 × 10^−116 | 4.6 × 10^−49 |
| | | Mean | 3.32 × 10^−249 | 3.57 × 10^−156 | 76.9 | 70.9 | 89.6 | 9.22 × 10^−2 | 1.68 × 10^−4 | 5.47 × 10^−155 | 2.63 × 10^−114 | 2.72 × 10^−47 |
| | | Std | 0 | 3.11 × 10^−155 | 22.8 | 16.6 | 2.86 | 1.25 × 10^−2 | 3.41 × 10^−4 | 5.45 × 10^−154 | 7.11 × 10^−114 | 5.16 × 10^−47 |
| F5 | 30 | Best | 6.35 × 10^−9 | 26.2 | 27.1 | 27.2 | 86.5 | 27.8 | 28.9 | 1.82 × 10^−1 | 0 | 24 |
| | | Mean | 6.93 | 26.3 | 28 | 28.4 | 1.13 × 10^5 | 28.5 | 28.9 | 25 | 1.1 | 25.2 |
| | | Std | 11.2 | 4.35 | 5.06 × 10^−1 | 4.72 × 10^−1 | 7.04 × 10^5 | 3.57 × 10^−1 | 3.32 × 10^−2 | 9.36 | 5.42 | 6.6 × 10^−1 |
| | 100 | Best | 2.05 × 10^−7 | 96.5 | 97.5 | 99.3 | 2.41 × 10^7 | 98.5 | 98.9 | 1.61 × 10^−1 | 0 | 94 |
| | | Mean | 23.1 | 97.6 | 98.2 | 1.07 × 10^2 | 1.21 × 10^8 | 98.9 | 98.9 | 85 | 4.93 × 10^−30 | 95.1 |
| | | Std | 41.2 | 4.96 × 10^−1 | 2.3 × 10^−1 | 10.1 | 6.29 × 10^7 | 1.07 × 10^−1 | 3.75 × 10^−2 | 32.7 | 4.93 × 10^−29 | 7.5 × 10^−1 |
| F6 | 30 | Best | 4.77 × 10^−12 | 1.82 × 10^−2 | 1.01 × 10^−1 | 1.9 | 4.72 | 2.56 | 3.98 | 1.83 × 10^−4 | 0 | 0 |
| | | Mean | 2.95 × 10^−5 | 1.11 × 10^−1 | 4.73 × 10^−1 | 2.71 | 28.1 | 3.22 | 5.72 | 1.89 | 0 | 5.08 × 10^−33 |
| | | Std | 3.64 × 10^−5 | 1.25 × 10^−1 | 2.73 × 10^−1 | 4.96 × 10^−1 | 69.5 | 3.02 × 10^−1 | 8.16 × 10^−1 | 3.17 | 0 | 7.75 × 10^−33 |
| | 100 | Best | 8.53 × 10^−7 | 5.88 × 10^−1 | 2.01 | 16.5 | 1.44 × 10^3 | 16.8 | 20.8 | 2.59 × 10^−4 | 0 | 1.26 × 10^−13 |
| | | Mean | 1.11 × 10^−1 | 1.75 | 4.32 | 17.9 | 1.25 × 10^4 | 18.3 | 23.2 | 5.34 | 0 | 1.38 × 10^−11 |
| | | Std | 1.69 × 10^−1 | 7.57 × 10^−1 | 1.31 | 8.3 × 10^−1 | 9.61 × 10^3 | 5.56 × 10^−1 | 8.85 × 10^−1 | 9.89 | 0 | 3.91 × 10^−11 |
| F7 | 30 | Best | 3.78 × 10^−7 | 3.48 × 10^−6 | 5.17 × 10^−5 | 1.21 × 10^−3 | 6.79 × 10^−3 | 1.19 × 10^−6 | 4.53 × 10^−5 | 8.85 × 10^−4 | 4.56 × 10^−5 | 1.11 × 10^−4 |
| | | Mean | 7.17 × 10^−5 | 1.67 × 10^−4 | 4.29 × 10^−3 | 6.42 × 10^−3 | 1.43 × 10^−1 | 7.71 × 10^−5 | 3.78 × 10^−4 | 6.36 × 10^−3 | 1.52 × 10^−4 | 5.36 × 10^−4 |
| | | Std | 5.69 × 10^−5 | 1.99 × 10^−4 | 5.63 × 10^−3 | 3.78 × 10^−3 | 1.97 × 10^−1 | 7.26 × 10^−5 | 2.59 × 10^−4 | 3.91 × 10^−3 | 7.98 × 10^−5 | 2.68 × 10^−4 |
| | 100 | Best | 6.48 × 10^−7 | 8.82 × 10^−6 | 7.46 × 10^−5 | 6.9 × 10^−3 | 19.5 | 2.91 × 10^−6 | 6.85 × 10^−5 | 7.3 × 10^−4 | 4.26 × 10^−5 | 2.13 × 10^−4 |
| | | Mean | 7.24 × 10^−5 | 1.68 × 10^−4 | 4.59 × 10^−3 | 3.02 × 10^−2 | 1.44 × 10^2 | 7.89 × 10^−5 | 4.53 × 10^−4 | 6.07 × 10^−3 | 1.76 × 10^−4 | 7.02 × 10^−4 |
| | | Std | 5.89 × 10^−5 | 1.84 × 10^−4 | 5.93 × 10^−3 | 1.69 × 10^−2 | 77.3 | 8.89 × 10^−5 | 3.68 × 10^−4 | 3.44 × 10^−3 | 9.44 × 10^−5 | 2.84 × 10^−4 |
| F8 | 30 | Best | −1.26 × 10^4 | −1.26 × 10^4 | −1.26 × 10^4 | −6.28 × 10^3 | −4.22 × 10^3 | −6.06 × 10^3 | −6.54 × 10^3 | −1.26 × 10^4 | −1.26 × 10^4 | −1.23 × 10^4 |
| | | Mean | −1.26 × 10^4 | −1.23 × 10^4 | −9.92 × 10^3 | −5.06 × 10^3 | −3.75 × 10^3 | −5.22 × 10^3 | −5.09 × 10^3 | −9.56 × 10^3 | −1.26 × 10^4 | −1.10 × 10^4 |
| | | Std | 3.79 × 10^−4 | 6.94 × 10^2 | 1.87 × 10^3 | 4.65 × 10^2 | 3.14 × 10^2 | 4.56 × 10^2 | 7.09 × 10^2 | 1.76 × 10^3 | 1.83 × 10^−12 | 6.94 × 10^2 |
| | 100 | Best | −4.19 × 10^4 | −4.19 × 10^4 | −4.19 × 10^4 | −1.33 × 10^4 | −8.1 × 10^3 | −1.12 × 10^4 | −1.27 × 10^4 | −4.19 × 10^4 | −4.19 × 10^4 | −3.73 × 10^4 |
| | | Mean | −4.19 × 10^4 | −4.12 × 10^4 | −3.42 × 10^4 | −1.03 × 10^4 | −6.87 × 10^3 | −9.94 × 10^3 | −9.79 × 10^3 | −3.04 × 10^4 | −4.19 × 10^4 | −2.96 × 10^4 |
| | | Std | 6.29 × 10^−3 | 1.76 × 10^3 | 6.06 × 10^3 | 1.41 × 10^3 | 6.02 × 10^2 | 7.17 × 10^2 | 1.48 × 10^3 | 5.9 × 10^3 | 2.61 × 10^−11 | 4.54 × 10^3 |
| F9 | 30 | Best | 0 | 0 | 0 | 4.41 × 10^−9 | 5.12 × 10^−3 | 0 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 5.68 × 10^−16 | 6.92 | 37.4 | 0 | 8.02 × 10^−6 | 7.32 | 0 | 0 |
| | | Std | 0 | 0 | 5.68 × 10^−15 | 6.94 | 35.4 | 0 | 2.2 × 10^−5 | 36.3 | 0 | 0 |
| | 100 | Best | 0 | 0 | 0 | 7.72 × 10^−6 | 79.8 | 0 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 3.41 × 10^−15 | 14.8 | 2.64 × 10^2 | 0 | 2.26 × 10^−5 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 2.53 × 10^−14 | 19.9 | 1.17 × 10^2 | 0 | 1.38 × 10^−4 | 0 | 0 | 0 |
| F10 | 30 | Best | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 20 | 1.61 × 10^−2 | 8.88 × 10^−16 | 1.3 × 10^−11 | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 |
| | | Mean | 8.88 × 10^−16 | 8.88 × 10^−16 | 4.55 × 10^−15 | 20 | 13.7 | 8.88 × 10^−16 | 1.77 × 10^−4 | 8.88 × 10^−16 | 3.34 × 10^−15 | 4.16 × 10^−15 |
| | | Std | 0 | 0 | 2.50 × 10^−15 | 1.55 × 10^−3 | 8.8 | 0 | 4.05 × 10^−4 | 0 | 1.65 × 10^−15 | 9.69 × 10^−16 |
| | 100 | Best | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 20 | 6.63 | 8.88 × 10^−16 | 1.41 × 10^−11 | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 |
| | | Mean | 8.88 × 10^−16 | 8.88 × 10^−16 | 4.23 × 10^−15 | 20 | 18.6 | 3.55 × 10^−4 | 1.17 × 10^−4 | 9.24 × 10^−16 | 4.19 × 10^−15 | 4.37 × 10^−15 |
| | | Std | 0 | 0 | 2.41 × 10^−15 | 2.96 × 10^−4 | 4.34 | 8.68 × 10^−4 | 2.16 × 10^−4 | 3.55 × 10^−16 | 9.11 × 10^−16 | 5 × 10^−16 |
| F11 | 30 | Best | 0 | 0 | 0 | 7.65 × 10^−9 | 3.07 × 10^−3 | 3.33 × 10^−4 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 7.36 × 10^−3 | 3.19 × 10^−2 | 9.81 × 10^−1 | 1.84 × 10^−1 | 2.9 × 10^−6 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 3.54 × 10^−2 | 3.58 × 10^−2 | 4.61 × 10^−1 | 1.45 × 10^−1 | 1.25 × 10^−5 | 0 | 0 | 0 |
| | 100 | Best | 0 | 0 | 0 | 2.24 × 10^−6 | 7.75 | 1.99 × 10^2 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 7.93 × 10^−3 | 3.67 × 10^−2 | 95.8 | 6.33 × 10^2 | 7.95 × 10^−6 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 4.63 × 10^−2 | 5.8 × 10^−2 | 66 | 1.98 × 10^2 | 2.88 × 10^−5 | 0 | 0 | 0 |
| F12 | 30 | Best | 6.45 × 10^−13 | 2.34 × 10^−3 | 6.21 × 10^−3 | 1.03 × 10^−1 | 9.69 × 10^−1 | 4.32 × 10^−1 | 2.7 × 10^−1 | 8.68 × 10^−6 | 1.57 × 10^−32 | 1.57 × 10^−32 |
| | | Mean | 1.04 × 10^−6 | 1.06 × 10^−2 | 7.2 × 10^−2 | 2.78 × 10^−1 | 3.25 × 10^5 | 5.24 × 10^−1 | 5.88 × 10^−1 | 1.75 × 10^−1 | 1.57 × 10^−32 | 1.63 × 10^−32 |
| | | Std | 1.72 × 10^−6 | 6.81 × 10^−3 | 4.43 × 10^−1 | 1.65 × 10^−1 | 1.65 × 10^6 | 5.27 × 10^−2 | 2.3 × 10^−1 | 4.03 × 10^−1 | 5.5 × 10^−48 | 1.43 × 10^−33 |
| | 100 | Best | 7.06 × 10^−16 | 5.64 × 10^−3 | 1.54 × 10^−2 | 5.74 × 10^−1 | 7.48 × 10^7 | 8.61 × 10^−1 | 7.63 × 10^−1 | 4.73 × 10^−6 | 4.71 × 10^−33 | 1.67 × 10^−15 |
| | | Mean | 7.51 × 10^−5 | 2.58 × 10^−2 | 5.18 × 10^−2 | 8.4 × 10^−1 | 3.59 × 10^8 | 9.06 × 10^−1 | 9.9 × 10^−1 | 1.65 × 10^−1 | 4.71 × 10^−33 | 9.79 × 10^−14 |
| | | Std | 1.25 × 10^−4 | 1.25 × 10^−2 | 3.09 × 10^−2 | 2.93 × 10^−1 | 1.85 × 10^8 | 2.37 × 10^−2 | 1.15 × 10^−1 | 4.17 × 10^−1 | 6.88 × 10^−49 | 4.79 × 10^−13 |
| F13 | 30 | Best | 3.15 × 10^−12 | 4.87 × 10^−2 | 1.19 × 10^−1 | 1.46 | 3.58 | 2.62 | 2.2 | 6.86 × 10^−4 | 1.35 × 10^−32 | 1.35 × 10^−32 |
| | | Mean | 8.92 × 10^−4 | 2.54 × 10^−1 | 5.81 × 10^−1 | 1.92 | 1.15 × 10^6 | 2.83 | 2.87 | 1.44 | 1.35 × 10^−32 | 2.78 × 10^−31 |
| | | Std | 3.31 × 10^−3 | 1.57 × 10^−1 | 2.84 × 10^−1 | 3.13 × 10^−1 | 6.4 × 10^6 | 1.05 × 10^−1 | 2.52 × 10^−1 | 1.46 | 5.5 × 10^−48 | 2.04 × 10^−30 |
| | 100 | Best | 9.55 × 10^−9 | 2.82 × 10^−1 | 1.2 | 9.06 | 1.48 × 10^8 | 9.83 | 9.98 | 1.33 × 10^−4 | 1.35 × 10^−32 | 1.59 × 10^−12 |
| | | Mean | 5.92 × 10^−3 | 1.34 | 3.07 | 10.7 | 6.28 × 10^8 | 9.96 | 9.99 | 3.51 | 1.35 × 10^−32 | 2.4 |
| | | Std | 1.43 × 10^−2 | 7.66 × 10^−1 | 1.17 | 1.06 | 3.09 × 10^8 | 6.38 × 10^−2 | 4.2 × 10^−3 | 4.64 | 5.5 × 10^−48 | 3.65 |
| F14 | 2 | Best | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 |
| | | Mean | 9.98 × 10^−1 | 5.14 | 3.59 | 2.31 | 2.14 | 10.4 | 1.24 | 3.34 | 9.98 × 10^−1 | 9.98 × 10^−1 |
| | | Std | 4.92 × 10^−16 | 5 | 3.66 | 2.49 | 2.36 | 3.8 | 8.56 × 10^−1 | 1.54 | 0 | 0 |
| F15 | 4 | Best | 3.07 × 10^−4 | 3.08 × 10^−4 | 3.17 × 10^−4 | 3.44 × 10^−4 | 3.7 × 10^−4 | 3.49 × 10^−4 | 3.07 × 10^−4 | 3.47 × 10^−4 | 3.08 × 10^−4 | 3.07 × 10^−4 |
| | | Mean | 3.17 × 10^−4 | 4.78 × 10^−4 | 8.58 × 10^−4 | 2.91 × 10^−3 | 1.13 × 10^−3 | 1.95 × 10^−2 | 3.35 × 10^−3 | 7.63 × 10^−3 | 3.34 × 10^−4 | 3.56 × 10^−4 |
| | | Std | 9.16 × 10^−5 | 2.71 × 10^−4 | 1.33 × 10^−3 | 5.86 × 10^−3 | 3.66 × 10^−4 | 2.76 × 10^−2 | 6.8 × 10^−3 | 1.05 × 10^−2 | 1.33 × 10^−4 | 2.01 × 10^−4 |
| F16 | 2 | Best | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 |
| | | Mean | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −1.03 | −9.56 × 10^−1 | −1.03 | −1.03 |
| | | Std | 6.51 × 10^−16 | 6.58 × 10^−8 | 3.89 × 10^−9 | 3.11 × 10^−6 | 4.28 × 10^−5 | 1.43 × 10^−7 | 1.81 × 10^−14 | 2.29 × 10^−1 | 4.27 × 10^−7 | 6.64 × 10^−16 |
| F17 | 2 | Best | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 |
| | | Mean | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 4 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 | 6.93 × 10^−1 | 3.98 × 10^−1 | 3.98 × 10^−1 |
| | | Std | 0 | 1.15 × 10^−5 | 2.27 × 10^−5 | 1.21 × 10^−4 | 1.9 × 10^−3 | 1.34 × 10^−7 | 3.96 × 10^−16 | 5.36 × 10^−1 | 1.82 × 10^−16 | 0 |
| F18 | 5 | Best | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| | | Mean | 3 | 3 | 3.54 | 3 | 3 | 10.8 | 3.81 | 5.18 | 3.27 | 3 |
| | | Std | 2.65 × 10^−15 | 1.72 × 10^−4 | 3.8 | 2.42 × 10^−4 | 4.22 × 10^−4 | 15.5 | 8.1 | 5.99 | 2.7 | 9.82 × 10^−16 |
| F19 | 3 | Best | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 | −3.86 |
| | | Mean | −3.86 | −3.86 | −3.86 | −3.86 | −3.85 | −3.85 | −3.86 | −3.64 | −3.86 | −3.86 |
| | | Std | 1.74 × 10^−15 | 2.29 × 10^−3 | 7.08 × 10^−3 | 5.17 × 10^−3 | 7.6 × 10^−3 | 4.41 × 10^−3 | 9.46 × 10^−15 | 2.63 × 10^−1 | 2.16 × 10^−15 | 2.23 × 10^−15 |
| F20 | 6 | Best | −3.32 | −3.32 | −3.32 | −3.32 | −3.32 | −3.32 | −3.32 | −3.32 | −3.32 | −3.32 |
| | | Mean | −3.28 | −3.23 | −3.21 | −2.85 | −2.82 | −3.04 | −3.24 | −2.84 | −3.3 | −3.25 |
| | | Std | 5.74 × 10^−2 | 1.43 × 10^−1 | 1.75 × 10^−1 | 5.28 × 10^−1 | 4.27 × 10^−1 | 1.16 × 10^−1 | 8.2 × 10^−2 | 3.34 × 10^−1 | 4.87 × 10^−2 | 5.86 × 10^−2 |
| F21 | 4 | Best | −10.2 | −10.2 | −10.2 | −10.1 | −5.27 | −6.77 | −10.2 | −10.1 | −10.2 | −10.2 |
| | | Mean | −10.2 | −10.1 | −8.19 | −3.48 | −2.08 | −3.74 | −8.08 | −5.97 | −10.2 | −8.83 |
| | | Std | 4.79 × 10^−15 | 3.45 × 10^−2 | 2.79 | 4.16 | 1.79 | 1.3 | 2.74 | 2.720 | 1.03 × 10^−14 | 2.24 |
| F22 | 4 | Best | −10.4 | −10.4 | −10.4 | −10.4 | −6.59 | −6.72 | −10.4 | −10.4 | −10.4 | −10.4 |
| | | Mean | −10.4 | −10.4 | −7.27 | −5.26 | −2.82 | −3.76 | −7.69 | −6.07 | −10.4 | −9.39 |
| | | Std | 5.13 × 10^−15 | 2.89 × 10^−2 | 3.14 | 4.45 | 1.74 | 1.74 | 3.21 | 3.08 | 9.11 × 10^−15 | 2.1 |
| F23 | 4 | Best | −10.5 | −10.5 | −10.5 | −10.5 | −7.39 | −7.4 | −10.5 | −10.5 | −10.5 | −10.5 |
| | | Mean | −10.5 | −10.4 | −6.65 | −5.94 | −3.92 | −3.75 | −7.62 | −5.83 | −10.5 | −9.94 |
| | | Std | 4.46 × 10^−15 | 7.72 × 10^−1 | 3.53 | 4.26 | 1.77 | 1.59 | 3.31 | 2.86 | 2.8 × 10^−15 | 1.71 |
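Each cell above summarizes the final objective values of repeated independent runs: Best is the smallest value, Mean the average, and Std the spread. The number of runs and the standard-deviation convention are not stated in this section, so the helper below assumes minimization and the sample standard deviation (ddof = 1); its name is hypothetical.

```python
import numpy as np


def summarize_runs(final_values):
    """Best / Mean / Std of final objective values from independent runs.

    Assumes minimization and the sample standard deviation (ddof=1).
    """
    v = np.asarray(final_values, dtype=float)
    return {"Best": float(v.min()), "Mean": float(v.mean()), "Std": float(v.std(ddof=1))}
```

Read this way, a Mean well above Best combined with a large Std, as for ROA on F14 (Best 0.998, Mean 5.14, Std 5), suggests that some runs stalled in a poor local optimum while others reached the global one.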

| F | dim | Metric | MROA | ROA | WOA | STOA | SCA | AOA | GTOA | BES | HPGSOS | PSOS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 500 | Best | 0 | 0 | 5.1 × 10−83 | 8.92 × 10−1 | 7.97 × 104 | 5.66 × 10−1 | 6.58 × 10−16 | 0 | 2.86 × 10−255 | 1.62 × 10−119 |
| | | Mean | 0 | 1.03 × 10−309 | 1.79 × 10−71 | 9.56 | 2.14 × 105 | 6.38 × 10−1 | 5.66 × 10−6 | 5.22 × 10−315 | 7.48 × 10−248 | 3.55 × 10−116 |
| | | Std | 0 | 0 | 6.88 × 10−71 | 7.65 | 7.63 × 104 | 4.2 × 10−2 | 1.51 × 10−5 | 0 | 0 | 1.8 × 10−115 |
| | 1000 | Best | 0 | 0 | 4.65 × 10−81 | 15.5 | 1.37 × 105 | 1.57 | 1.73 × 10−14 | 0 | 1.85 × 10−254 | 3.02 × 10−118 |
| | | Mean | 0 | 2.02 × 10−313 | 1.46 × 10−65 | 72.3 | 4.96 × 105 | 1.72 | 1.94 × 10−5 | 0 | 6.28 × 10−249 | 1.45 × 10−115 |
| | | Std | 0 | 0 | 7.97 × 10−65 | 46.6 | 1.76 × 105 | 8.26 × 10−2 | 6.68 × 10−5 | 0 | 0 | 5.15 × 10−115 |
| F2 | 500 | Best | 0 | 2.45 × 10−183 | 5.07 × 10−57 | 1.02 × 10−2 | 32.2 | 5.14 × 10−13 | 2.47 × 10−9 | 1.68 × 10−234 | 1.27 × 10−127 | 1.67 × 10−62 |
| | | Mean | 9.80 × 10−237 | 4.31 × 10−160 | 1.09 × 10−49 | 7.64 × 10−2 | 1.04 × 102 | 8.77 × 10−4 | 2.54 × 10−3 | 7.25 × 10−174 | 1.99 × 10−124 | 1.82 × 10−61 |
| | | Std | 0 | 2.31 × 10−159 | 5.05 × 10−49 | 5.91 × 10−2 | 57 | 1.35 × 10−3 | 5.59 × 10−3 | 0 | 1.04 × 10−123 | 2.6 × 10−61 |
| | 1000 | Best | 0 | 2.62 × 10−179 | 2.75 × 10−56 | 6.96 × 10−2 | Inf | 5.13 × 10−3 | 7.25 × 10−9 | 1.99 × 10−222 | 5.59 × 10−127 | 7.39 × 10−62 |
| | | Mean | 5.16 × 10−240 | 1.8 × 10−158 | 3.37 × 10−48 | 2.72 × 10−1 | Inf | 1.43 × 10−2 | 1.15 × 10−2 | 9.01 × 10−173 | 2.57 × 10−124 | 1.11 × 10−60 |
| | | Std | 0 | 9.86 × 10−158 | 1.36 × 10−47 | 2.27 × 10−1 | NaN | 4.95 × 10−3 | 2.22 × 10−2 | 0 | 6.33 × 10−124 | 1.39 × 10−60 |
| F3 | 500 | Best | 0 | 4.48 × 10−299 | 1.56 × 107 | 1.71 × 105 | 4.29 × 106 | 13.2 | 1.12 × 10−19 | 0 | 2.04 × 10−114 | 4.21 × 10−24 |
| | | Mean | 0 | 1.06 × 10−257 | 2.93 × 107 | 4.9 × 105 | 6.77 × 106 | 31.5 | 5.35 × 10−2 | 3.09 × 10−1 | 3.98 × 10−74 | 2.83 × 10−19 |
| | | Std | 0 | 0 | 8.13 × 106 | 1.56 × 105 | 1.41 × 106 | 13.1 | 1.35 × 10−1 | 1.69 | 2.18 × 10−73 | 1.14 × 10−18 |
| | 1000 | Best | 0 | 6.45 × 10−292 | 6.045 × 107 | 1.25 × 106 | 1.225 × 107 | 60.7 | 2.665 × 10−8 | 0 | 1.145 × 10−112 | 2.035 × 10−22 |
| | | Mean | 0 | 3.215 × 10−240 | 1.295 × 108 | 2.385 × 106 | 2.655 × 107 | 1.815 × 104 | 5.115 × 10−1 | 1.435 × 103 | 1.165 × 10−44 | 3.745 × 10−17 |
| | | Std | 0 | 0 | 5.455 × 107 | 7.485 × 105 | 7.285 × 106 | 9.855 × 104 | 2.12 | 7.835 × 103 | 6.375 × 10−44 | 1.95 × 10−16 |
| F4 | 500 | Best | 0 | 2.175 × 10−174 | 50.6 | 96.9 | 98 | 1.625 × 10−1 | 2.695 × 10−9 | 4.235 × 10−248 | 3.815 × 10−113 | 3.525 × 10−44 |
| | | Mean | 4.495 × 10−234 | 2.825 × 10−157 | 90.4 | 98.6 | 99.1 | 1.8 × 10−1 | 2.29 × 10−4 | 9.23 × 10−154 | 2.03 × 10−110 | 7.94 × 10−43 |
| | | Std | 0 | 1.43 × 10−156 | 10.6 | 7.42 × 10−1 | 3.11 × 10−1 | 1.23 × 10−2 | 5.62 × 10−4 | 5.05 × 10−153 | 4.61 × 10−110 | 1.32 × 10−42 |
| | 1000 | Best | 0 | 8.44 × 10−174 | 28.1 | 99 | 99.3 | 1.95 × 10−1 | 1.47 × 10−6 | 1.73 × 10−229 | 2.04 × 10−111 | 5.36 × 10−43 |
| | | Mean | 6.25 × 10−232 | 2.12 × 10−157 | 80.2 | 99.5 | 99.6 | 2.12 × 10−1 | 4.52 × 10−4 | 2.17 × 10−184 | 2.41 × 10−109 | 6.64 × 10−42 |
| | | Std | 0 | 8.71 × 10−157 | 19.7 | 1.85 × 10−1 | 1.14 × 10−1 | 9.2 × 10−3 | 1.07 × 10−3 | 0 | 8.79 × 10−109 | 6.42 × 10−42 |
| F5 | 500 | Best | 6.72 × 10−9 | 4.94 × 102 | 4.96 × 102 | 3.34 × 103 | 1.43 × 109 | 4.99 × 102 | 4.99 × 102 | 40.2 | 0 | 4.94 × 102 |
| | | Mean | 1.01 × 102 | 4.95 × 102 | 4.96 × 102 | 2.13 × 104 | 2.03 × 109 | 4.99 × 102 | 4.99 × 102 | 3.83 × 102 | 0 | 4.96 × 102 |
| | | Std | 2 × 102 | 3.6 × 10−1 | 5.12 × 10−1 | 4.58 × 104 | 4.95 × 108 | 1 × 10−1 | 4.11 × 10−2 | 2.11 × 102 | 0 | 7.35 × 10−1 |
| | 1000 | Best | 9.73 × 10−5 | 9.89 × 102 | 9.92 × 102 | 2.81 × 104 | 2.12 × 109 | 9.99 × 102 | 9.99 × 102 | 6.36 | 0 | 9.96 × 102 |
| | | Mean | 4.34 × 102 | 9.9 × 102 | 9.9 × 102 | 1.48 × 105 | 4.35 × 109 | 9.99 × 102 | 9.99 × 102 | 8.98 × 102 | 0 | 9.97 × 102 |
| | | Std | 4.95 × 102 | 4.52 × 10−1 | 8.8 × 10−1 | 1.29 × 105 | 1.03 × 109 | 1.02 × 10−1 | 3.91 × 10−2 | 2.93 × 102 | 0 | 5.67 × 10−1 |
| F6 | 500 | Best | 2.54 × 10−6 | 8.98 | 22.8 | 1.17 × 102 | 1.23 × 105 | 1.14 × 102 | 1.22 × 102 | 2.7 × 10−2 | 0 | 22.1 |
| | | Mean | 2.35 | 17.9 | 35.1 | 1.28 × 102 | 2.23 × 105 | 1.16 × 102 | 1.23 × 102 | 30.3 | 0 | 48.5 |
| | | Std | 3.17 | 6.98 | 10.1 | 12.6 | 8.2 × 104 | 1.39 | 1.02 | 53.2 | 0 | 17.1 |
| | 1000 | Best | 1.45 × 10−3 | 10.1 | 31.9 | 2.49 × 102 | 2.02 × 105 | 2.41 × 102 | 2.45 × 102 | 7.75 × 10−3 | 0 | 1.69 × 102 |
| | | Mean | 10.6 | 33.2 | 64.4 | 2.97 × 102 | 5.3 × 105 | 2.43 × 102 | 2.48 × 102 | 27.2 | 0 | 1.92 × 102 |
| | | Std | 14.3 | 15.2 | 16.2 | 34 | 1.25 × 105 | 1.11 | 1.12 | 75.6 | 0 | 12.8 |
| F7 | 500 | Best | 2.99 × 10−6 | 1.16 × 10−5 | 1.29 × 10−4 | 1.68 × 10−1 | 1.14 × 104 | 3.12 × 10−6 | 5.49 × 10−5 | 1.19 × 10−3 | 5.6 × 10−5 | 3.66 × 10−4 |
| | | Mean | 8.25 × 10−5 | 2.27 × 10−4 | 3.98 × 10−3 | 4.31 × 10−1 | 1.49 × 104 | 9.94 × 10−5 | 4.97 × 10−4 | 5.77 × 10−3 | 1.78 × 10−4 | 8.83 × 10−4 |
| | | Std | 5.64 × 10−5 | 2.46 × 10−4 | 4.64 × 10−3 | 2.32 × 10−1 | 3.38 × 103 | 6.76 × 10−5 | 5.59 × 10−4 | 3.17 × 10−3 | 8.93 × 10−5 | 3.85 × 10−4 |
| | 1000 | Best | 2.29 × 10−6 | 6.63 × 10−6 | 2.37 × 10−4 | 4.95 × 10−1 | 3.54 × 104 | 4.59 × 10−6 | 7.19 × 10−5 | 7.83 × 10−4 | 4.69 × 10−5 | 3.89 × 10−4 |
| | | Mean | 7.69 × 10−5 | 1.71 × 10−4 | 4.72 × 10−3 | 3.19 | 6.68 × 104 | 1.01 × 10−4 | 5.79 × 10−4 | 4.96 × 10−3 | 1.81 × 10−4 | 1.05 × 10−3 |
| | | Std | 6.5 × 10−5 | 1.81 × 10−4 | 5.03 × 10−3 | 2.25 | 1.29 × 104 | 9.81 × 10−5 | 4.14 × 10−4 | 3.11 × 10−3 | 9.47 × 10−5 | 5 × 10−4 |
| F8 | 500 | Best | −2.09 × 105 | −2.09 × 105 | −2.09 × 105 | −2.94 × 104 | −1.91 × 104 | −2.53 × 104 | −2.97 × 104 | −1.59 × 105 | −2.09 × 105 | −1.75 × 105 |
| | | Mean | −2.09 × 105 | −2.02 × 105 | −1.83 × 105 | −2.48 × 104 | −1.55 × 104 | −2.24 × 104 | −2.28 × 104 | −5.87 × 104 | −2.09 × 105 | −1.58 × 105 |
| | | Std | 7.76 × 102 | 1.41 × 104 | 3.11 × 104 | 2.91 × 103 | 1.2 × 103 | 1.49 × 103 | 3.42 × 103 | 4.68 × 104 | 2.96 × 10−11 | 7.93 × 103 |
| | 1000 | Best | −4.19 × 105 | −4.19 × 105 | −4.19 × 105 | −4.62 × 104 | −2.84 × 104 | −3.85 × 104 | −4.1 × 104 | −3.36 × 105 | −4.19 × 105 | −3.6 × 105 |
| | | Mean | −4.19 × 105 | −4.11 × 105 | −3.56 × 105 | −3.7 × 104 | −2.23 × 104 | −3.19 × 104 | −3.19 × 104 | −1.28 × 105 | −4.19 × 105 | −3.19 × 105 |
| | | Std | 4.16 × 10−2 | 2.01 × 104 | 5.73 × 104 | 5.62 × 103 | 1.97 × 103 | 2.92 × 103 | 3.88 × 103 | 1.12 × 105 | 1.18 × 10−10 | 2.19 × 104 |
| F9 | 500 | Best | 0 | 0 | 0 | 1.58 | 5.7 × 102 | 0 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 9.09 × 10−14 | 19.1 | 1.46 × 103 | 6.81 × 10−6 | 2.47 × 10−5 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 3.66 × 10−13 | 15.8 | 5.32 × 102 | 7.31 × 10−6 | 9.79 × 10−5 | 0 | 0 | 0 |
| | 1000 | Best | 0 | 0 | 0 | 1.4 | 8.39 × 102 | 1.46 × 10−5 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 1.21 × 10−13 | 25.1 | 2.13 × 103 | 6.36 × 10−5 | 5.07 × 10−5 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 6.64 × 10−13 | 19.4 | 6.94 × 102 | 1.82 × 10−5 | 1.56 × 10−4 | 0 | 0 | 0 |
| F10 | 500 | Best | 8.88 × 10−16 | 8.88 × 10−16 | 8.88 × 10−16 | 20 | 5.73 | 7.28 × 10−3 | 2.57 × 10−13 | 8.88 × 10−16 | 4.44 × 10−15 | 4.44 × 10−15 |
| | | Mean | 8.88 × 10−16 | 8.88 × 10−16 | 3.97 × 10−15 | 20 | 19.1 | 7.87 × 10−3 | 1.74 × 10−4 | 8.88 × 10−16 | 4.32 × 10−15 | 4.44 × 10−15 |
| | | Std | 0 | 0 | 2.23 × 10−15 | 5.9 × 10−5 | 4.12 | 3.34 × 10−4 | 2.84 × 10−4 | 0 | 6.49 × 10−16 | 0 |
| | 1000 | Best | 8.88 × 10−16 | 8.88 × 10−16 | 8.88 × 10−16 | 20 | 9.17 | 8.76 × 10−3 | 7.86 × 10−9 | 8.88 × 10−16 | 4.44 × 10−15 | 4.44 × 10−15 |
| | | Mean | 8.88 × 10−16 | 8.88 × 10−16 | 3.61 × 10−15 | 20 | 19.3 | 9.3 × 10−3 | 1.61 × 10−4 | 1.13 × 10−15 | 4.44 × 10−15 | 4.44 × 10−15 |
| | | Std | 0 | 0 | 2.41 × 10−15 | 2.97 × 10−5 | 3.62 | 2.85 × 10−4 | 3.17 × 10−4 | 9.01 × 10−16 | 0 | 0 |
| F11 | 500 | Best | 0 | 0 | 0 | 1.72 × 10−1 | 6.07 × 102 | 6.49 × 103 | 1.11 × 10−16 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 0 | 5.93 × 10−1 | 1.77 × 103 | 1.05 × 104 | 1.93 × 10−5 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 0 | 3.15 × 10−1 | 5.94 × 102 | 2.72 × 103 | 6.7 × 10−5 | 0 | 0 | 0 |
| | 1000 | Best | 0 | 0 | 0 | 6.27 × 10−1 | 6.46 × 102 | 2.71 × 104 | 0 | 0 | 0 | 0 |
| | | Mean | 0 | 0 | 0 | 1.34 | 4.21 × 103 | 2.83 × 104 | 3.9 × 10−6 | 0 | 0 | 0 |
| | | Std | 0 | 0 | 0 | 3.91 × 10−1 | 1.6 × 103 | 4.2 × 102 | 1.04 × 10−5 | 0 | 0 | 0 |
| F12 | 500 | Best | 3.21 × 10−8 | 1.12 × 10−2 | 3.42 × 10−2 | 1.73 | 4.82 × 109 | 1.05 | 1.07 | 1.97 × 10−7 | 9.42 × 10−34 | 1.16 × 10−2 |
| | | Mean | 1.18 × 10−4 | 3.5 × 10−2 | 9.51 × 10−2 | 4.89 | 6.74 × 109 | 1.08 | 1.14 | 3.59 × 10−3 | 9.42 × 10−34 | 3.16 × 10−2 |
| | | Std | 1.66 × 10−4 | 1.56 × 10−2 | 4.96 × 10−2 | 2.25 | 8.71 × 108 | 1.16 × 10−2 | 3.08 × 10−2 | 6.49 × 10−3 | 1.74 × 10−49 | 2.02 × 10−2 |
| | 1000 | Best | 5.01 × 10−7 | 2.65 × 10−3 | 4.19 × 10−2 | 5.48 | 8 × 109 | 1.1 | 1.13 | 1.06 × 10−5 | 4.71 × 10−34 | 1.6 × 10−1 |
| | | Mean | 1.02 × 10−3 | 3.5 × 10−2 | 1.29 × 10−1 | 8.74 × 103 | 13.3 | 1.12 | 1.17 | 4.25 × 10−2 | 4.71 × 10−34 | 2.49 × 10−1 |
| | | Std | 4.57 × 10−3 | 1.88 × 10−2 | 6 × 10−2 | 2.43 × 104 | 2.72 × 109 | 6.47 × 10−3 | 1.2 × 10−2 | 2.17 × 10−1 | 8.7 × 1050 | 9.77 × 10−2 |
| F13 | 500 | Best | 1.02 × 10−7 | 3.84 | 12.7 | 1.06 × 102 | 7.76 × 109 | 50.2 | 50 | 1.09 × 102 | 1.35 × 10−32 | 49.5 |
| | | Mean | 1.16 × 10−2 | 9.27 | 19.1 | 6.41 × 102 | 10.1 | 50.2 | 50 | 22 | 1.35 × 10−32 | 49.6 |
| | | Std | 1.98 × 10−2 | 4.98 | 7.89 | 2.62 × 103 | 2.28 × 109 | 5.43 × 10−2 | 3.28 × 10−3 | 24.6 | 5.57 × 10−48 | 9.31 × 10−2 |
| | 1000 | Best | 4.53 × 10−7 | 1.77 | 14.5 | 3.77 × 102 | 13.7 | 1 × 102 | 1 × 102 | 9.36 × 10−3 | 1.35 × 10−32 | 99.6 |
| | | Mean | 7.56 × 10−1 | 16.1 | 33.8 | 6.29 × 104 | 22.1 | 1.01 × 102 | 1 × 102 | 19 | 1.35 × 10−32 | 9.97 |
| | | Std | 2.42 | 9.55 | 11.4 | 8.64 × 104 | 4.07 × 109 | 5.74 × 10−2 | 4.88 × 10−3 | 36.7 | 5.57 × 10−48 | 8.03 × 10−2 |

| F | dim | MROA vs. ROA | MROA vs. WOA | MROA vs. STOA | MROA vs. SCA | MROA vs. AOA | MROA vs. GTOA | MROA vs. BES | MROA vs. HPGSOS | MROA vs. PSOS |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 30 | 7.81 × 10−3 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 1.25 × 10−1 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1 | 3.9 × 10−18 | 3.9 × 10−18 |
| F2 | 30 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 5.28 × 10−14 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 |
| F3 | 30 | 8.33 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 6.25 × 10−2 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 5.70 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 9.77 × 10−4 | 3.9 × 10−18 | 3.9 × 10−18 |
| F4 | 30 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 |
| F5 | 30 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 2.26 × 10−16 | 1.85 × 10−14 | 5.03 × 10−17 |
| | 100 | 4.27 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.2 × 10−15 | 3.9 × 10−18 | 3.41 × 10−9 |
| F6 | 30 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.19 × 10−8 | 3.9 × 10−18 | 3.9 × 10−18 |
| F7 | 30 | 1.2 × 10−5 | 5.27 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 5.29 × 10−1 | 2.08 × 10−17 | 3.9 × 10−18 | 4.16 × 10−12 | 4.27 × 10−18 |
| | 100 | 4.83 × 10−4 | 8.02 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 5.76 × 10−3 | 4.92 × 10−15 | 3.9 × 10−18 | 9.55 × 10−9 | 4.02 × 10−18 |
| F8 | 30 | 5.27 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.22 × 10−17 | 3.9 × 10−18 |
| | 100 | 5.43 × 10−18 | 4.02 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 5.7 × 10−18 | 3.9 × 10−18 |
| F9 | 30 | 1 | 5 × 10−1 | 3.9 × 10−18 | 3.9 × 10−18 | 1 | 5.7 × 10−18 | 2.5 × 10−1 | 1 | 1 |
| | 100 | 1 | 1 | 3.9 × 10−18 | 3.9 × 10−18 | 1 | 3.9 × 10−18 | 1 | 1 | 1 |
| F10 | 30 | 1 | 9.87 × 10−15 | 3.9 × 10−18 | 3.9 × 10−18 | 1 | 3.9 × 10−18 | 5 × 10−1 | 2.84 × 10−18 | 1.44 × 10−21 |
| | 100 | 1 | 2.01 × 10−17 | 3.9 × 10−18 | 3.9 × 10−18 | 1.65 × 10−8 | 3.9 × 10−18 | 1 | 1.15 × 10−22 | 2.53 × 10−23 |
| F11 | 30 | 1 | 7.81 × 10−3 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 5.7 × 10−18 | 1 | 1 | 1 |
| | 100 | 1 | 1.25 × 10−1 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 5.7 × 10−18 | 1 | 1 | 1 |
| F12 | 30 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 4.67 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.27 × 10−15 | 3.9 × 10−18 | 3.9 × 10−18 |
| F13 | 30 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.22 × 10−17 | 3.9 × 10−18 | 3.9 × 10−18 |
| | 100 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 4.22 × 10−17 | 3.9 × 10−18 | 1.71 × 10−3 |
| F14 | 2 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.71 × 10−5 | 3.9 × 10−18 | 2.62 × 10−14 | 2.62 × 10−14 |
| F15 | 4 | 1.07 × 10−12 | 5.88 × 10−13 | 9.89 × 10−18 | 1.24 × 10−16 | 2.93 × 10−16 | 4.44 × 10−13 | 5.77 × 10−18 | 2.55 × 10−11 | 3.13 × 10−7 |
| F16 | 2 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 2.5 × 10−1 | 3.9 × 10−18 | 5 × 10−1 | 1 |
| F17 | 2 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1 | 3.9 × 10−18 | 1 | 1 |
| F18 | 5 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 4.7 × 10−1 | 3.9 × 10−18 | 5.11 × 10−1 | 2.58 × 10−18 |
| F19 | 3 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 6.25 × 10−2 | 3.9 × 10−18 | 1 | 5 × 10−1 |
| F20 | 6 | 1.35 × 10−4 | 1.36 × 10−5 | 4.81 × 10−18 | 4.4 × 10−18 | 4.27 × 10−18 | 1.27 × 10−2 | 4.02 × 10−18 | 5.05 × 10−4 | 6.73 × 10−1 |
| F21 | 4 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 1.22 × 10−17 | 3.9 × 10−18 | 2.78 × 10−8 | 3.76 × 10−1 |
| F22 | 4 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.89 × 10−18 | 3.9 × 10−18 | 1.87 × 10−9 | 1.55 × 10−2 |
| F23 | 4 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 3.9 × 10−18 | 9.14 × 10−18 | 3.9 × 10−18 | 1.18 × 10−9 | 3.33 × 10−7 |

| F | dim | MROA vs. ROA | MROA vs. WOA | MROA vs. STOA | MROA vs. SCA | MROA vs. AOA | MROA vs. GTOA | MROA vs. BES | MROA vs. HPGSOS | MROA vs. PSOS |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | 500 | 0 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 3.13 × 10−2 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1 | 1.73 × 10−6 | 1.73 × 10−6 |
| F2 | 500 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 |
| F3 | 500 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 5 × 10−1 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.25 × 10−1 | 1.73 × 10−6 | 1.73 × 10−6 |
| F4 | 500 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 |
| F5 | 500 | 2.35 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 3.06 × 10−4 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 6.34 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 2.37 × 10−5 | 1.73 × 10−6 | 1.73 × 10−6 |
| F6 | 500 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.89 × 10−4 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 9.32 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 7.19 × 10−1 | 1.73 × 10−6 | 1.73 × 10−6 |
| F7 | 500 | 9.27 × 10−3 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 5.98 × 10−2 | 2.13 × 10−6 | 1.73 × 10−6 | 2.11 × 10−3 | 1.73 × 10−6 |
| | 1000 | 1.40 × 10−2 | 1.92 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 4.28 × 10−2 | 1.73 × 10−6 | 1.73 × 10−6 | 6.64 × 10−4 | 1.73 × 10−6 |
| F8 | 500 | 3.52 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 2.56 × 10−6 | 1.73 × 10−6 |
| | 1000 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 3.79 × 10−6 | 1.73 × 10−6 |
| F9 | 500 | 1 | 1 | 1.73 × 10−6 | 1.73 × 10−6 | 1.96 × 10−4 | 2.56 × 10−6 | 1 | 1 | 1 |
| | 1000 | 1 | 1 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 8.3 × 10−6 | 1 | 1 | 1 |
| F10 | 500 | 1 | 3.89 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.95 × 10−3 | 1.73 × 10−6 | 2.5 × 10−1 | 4.32 × 10−8 | 4.32 × 10−8 |
| | 1000 | 1 | 5.31 × 10−5 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1 | 4.32 × 10−8 | 4.32 × 10−8 |
| F11 | 500 | 1 | 1 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1 | 1 | 1 |
| | 1000 | 1 | 1 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1 | 1 | 1 |
| F12 | 500 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 4.86 × 10−5 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.97 × 10−5 | 1.73 × 10−6 | 1.73 × 10−6 |
| F13 | 500 | 1.92 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 4.45 × 10−5 | 1.73 × 10−6 | 1.73 × 10−6 |
| | 1000 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 4.07 × 10−5 | 1.73 × 10−6 | 1.73 × 10−6 |
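
Many cells in the 500/1000-dimension p-value table above, and in the CEC2020 p-value table, equal 1.73 × 10−6. A few equal 4.32 × 10−8. The sketch below shows one way those floor values can arise. It assumes a two-sided Wilcoxon signed-rank test on 30 paired runs, computed with the normal approximation, a tie correction and no continuity correction; this section states neither the run count nor the test variant. Under those assumptions:

- One algorithm winning all 30 runs by distinct margins gives 1.73 × 10−6.
- Winning all 30 runs by an identical margin, so that every |difference| is tied, gives 4.32 × 10−8.
- If every run ends in a tie, no nonzero difference remains and the p-value is 1.

The function name `signrank_p_approx` is hypothetical.

```python
import math


def signrank_p_approx(diffs):
    """Two-sided Wilcoxon signed-rank p-value (assumed variant): normal
    approximation with a tie correction and no continuity correction."""
    d = [x for x in diffs if x != 0]  # zero differences are discarded
    n = len(d)
    if n == 0:
        return 1.0  # identical results in every run: no evidence either way

    # Rank |d| in ascending order, giving tied magnitudes their average rank.
    order = sorted(range(n), key=lambda k: abs(d[k]))
    ranks = [0.0] * n
    tie_adj = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        t = j - i + 1                # size of this tie group
        avg_rank = (i + j) / 2 + 1   # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        tie_adj += (t ** 3 - t) / 2
        i = j + 1

    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)  # sum of positive ranks
    mean = n * (n + 1) / 4
    var = (n * (n + 1) * (2 * n + 1) - tie_adj) / 24
    z = (w_plus - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * Phi(-|z|)


if __name__ == "__main__":
    # 30 runs, algorithm A better every time, all margins distinct
    print(f"{signrank_p_approx([-(k + 1) * 1e-3 for k in range(30)]):.2e}")  # 1.73e-06
    # 30 runs, A better every time by the same margin (all |d| tied)
    print(f"{signrank_p_approx([-3.55e-15] * 30):.2e}")  # 4.32e-08
    # 30 runs, identical results
    print(signrank_p_approx([0.0] * 30))  # 1.0
```

With n = 30 and no ties, z = −232.5 / √2363.75 ≈ −4.78. With all 30 magnitudes tied, the variance drops to 1801.875 and z = −√30 ≈ −5.48. This is why the tied case produces the smaller p-value.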

| No. | Type | Function | dim | Range | fmin |
|---|---|---|---|---|---|
| CEC 1 | Unimodal Function | Shifted and Rotated Bent Cigar Function | 10 | [−100, 100] | 100 |
| CEC 2 | Simple Multimodal Functions | Shifted and Rotated Schwefel’s Function | 10 | [−100, 100] | 1100 |
| CEC 3 | Simple Multimodal Functions | Shifted and Rotated Lunacek bi-Rastrigin Function | 10 | [−100, 100] | 700 |
| CEC 4 | Simple Multimodal Functions | Expanded Rosenbrock’s plus Griewangk’s Function | 10 | [−100, 100] | 1900 |
| CEC 5 | Hybrid Functions | Hybrid Function 1 (N = 3) | 10 | [−100, 100] | 1700 |
| CEC 6 | Hybrid Functions | Hybrid Function 2 (N = 4) | 10 | [−100, 100] | 1600 |
| CEC 7 | Hybrid Functions | Hybrid Function 3 (N = 5) | 10 | [−100, 100] | 2100 |
| CEC 8 | Composition Functions | Composition Function 1 (N = 3) | 10 | [−100, 100] | 2200 |
| CEC 9 | Composition Functions | Composition Function 2 (N = 4) | 10 | [−100, 100] | 2400 |
| CEC 10 | Composition Functions | Composition Function 3 (N = 5) | 10 | [−100, 100] | 2500 |
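
The fmin column is the bias F* that each CEC2020 problem adds to its base function. The sketch below shows how a shifted and rotated problem such as CEC 1 is usually assembled, assuming the common CEC construction F(x) = f(M(x − o)) + F*. It evaluates the Bent Cigar function f(z) = z1² + 10^6 (z2² + … + zD²).

Both the shift vector o and the orthogonal matrix M are hypothetical. Here o is drawn uniformly from [−80, 80] and M comes from Gram-Schmidt on random vectors; the official CEC2020 data files are not reproduced. Only the structure matches the benchmark, not the exact landscape. Because f has its minimum 0 at z = 0, F reaches the listed fmin of 100 exactly at x = o. The helper names are illustrative.

```python
import math
import random


def bent_cigar(z):
    """Basic Bent Cigar function: z1^2 + 1e6 * (z2^2 + ... + zD^2)."""
    return z[0] ** 2 + 1e6 * sum(zi * zi for zi in z[1:])


def random_rotation(dim, rng):
    """Random orthonormal matrix built by Gram-Schmidt (hypothetical stand-in
    for the official CEC rotation data)."""
    rows = []
    while len(rows) < dim:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        for r in rows:  # remove components along rows already accepted
            proj = sum(vi * ri for vi, ri in zip(v, r))
            v = [vi - proj * ri for vi, ri in zip(v, r)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        if norm > 1e-9:
            rows.append([vi / norm for vi in v])
    return rows


def make_shifted_rotated(base, dim=10, bias=100.0, seed=0):
    """Return F(x) = base(M (x - o)) + bias together with the shift vector o."""
    rng = random.Random(seed)
    shift = [rng.uniform(-80.0, 80.0) for _ in range(dim)]  # hypothetical o
    rot = random_rotation(dim, rng)                          # hypothetical M

    def F(x):
        diff = [xi - oi for xi, oi in zip(x, shift)]
        z = [sum(m * d for m, d in zip(row, diff)) for row in rot]
        return base(z) + bias

    return F, shift


if __name__ == "__main__":
    F, o = make_shifted_rotated(bent_cigar, dim=10, bias=100.0, seed=1)
    print(F(o))                          # 100.0, the fmin listed for CEC 1
    print(F([oi + 0.01 for oi in o]))    # larger than 100
```

The 10^6 weight on all but one rotated direction is what makes this unimodal problem hard: the landscape is a very narrow ridge, and the rotation stops an algorithm from exploiting axis alignment.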

| CEC | Metric | MROA | ROA | WOA | STOA | SCA | AOA | GTOA | BES | HPGSOS | PSOS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CEC 1 | Best | 1 × 102 | 2.57 × 106 | 4.08 × 106 | 2.11 × 106 | 3.41 × 108 | 1.13 × 109 | 7.62 × 107 | 5.64 × 108 | 1.48 × 102 | 1.31 × 102 |
| | Mean | 2.56 × 103 | 8.25 × 108 | 4.86 × 107 | 2.46 × 108 | 1.02 × 109 | 9.94 × 109 | 1.21 × 109 | 4.26 × 109 | 2.54 × 103 | 3.71 × 103 |
| | Std | 2.05 × 103 | 9.36 × 108 | 5.06 × 107 | 2.18 × 108 | 3.64 × 108 | 4.27 × 109 | 1.11 × 109 | 3.34 × 109 | 2.51 × 103 | 3.48 × 103 |
| CEC 2 | Best | 1.26 × 103 | 1.76 × 103 | 1.81 × 103 | 1.7 × 103 | 2.23 × 103 | 1.92 × 103 | 1.55 × 103 | 2.22 × 103 | 1.32 × 103 | 1.13 × 103 |
| | Mean | 1.92 × 103 | 2.14 × 103 | 2.32 × 103 | 2.09 × 103 | 2.6 × 103 | 2.3 × 103 | 2.14 × 103 | 2.71 × 103 | 1.51 × 103 | 1.37 × 103 |
| | Std | 2.02 × 102 | 3.12 × 102 | 3.11 × 102 | 2.71 × 102 | 2.09 × 102 | 2.24 × 102 | 3.6 × 102 | 2.17 × 102 | 2.27 × 102 | 1.87 × 102 |
| CEC 3 | Best | 7.11 × 102 | 7.67 × 102 | 7.6 × 102 | 7.46 × 102 | 7.72 × 102 | 7.81 × 102 | 7.46 × 102 | 7.75 × 102 | 7.19 × 102 | 7.21 × 102 |
| | Mean | 7.46 × 102 | 7.93 × 102 | 7.98 × 102 | 7.68 × 102 | 7.88 × 102 | 8.06 × 102 | 7.65 × 102 | 8.08 × 102 | 7.3 × 102 | 7.3 × 102 |
| | Std | 12.9 | 22.1 | 40 | 14.9 | 12.8 | 16.5 | 24.5 | 25.5 | 9.88 | 8.04 |
| CEC 4 | Best | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 |
| | Mean | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 | 1.90 × 103 |
| | Std | 0 | 0 | 2.51 × 10−1 | 4.08 × 10−1 | 1.10 | 0 | 1.2 × 10−12 | 1.22 | 0 | 0 |
| CEC 5 | Best | 1.8 × 103 | 3.12 × 103 | 9.08 × 103 | 4.65 × 103 | 1.18 × 104 | 1.47 × 104 | 2.53 × 103 | 4.87 × 104 | 5.27 × 103 | 1.91 × 103 |
| | Mean | 4.29 × 103 | 6.03 × 104 | 3.66 × 105 | 1.23 × 105 | 6.5 × 104 | 4.49 × 105 | 4.93 × 103 | 9.66 × 105 | 1.31 × 105 | 5.86 × 103 |
| | Std | 1.79 × 103 | 9.9 × 104 | 5.81 × 105 | 1.79 × 105 | 8.02 × 104 | 3.2 × 105 | 3.17 × 103 | 1.43 × 106 | 1.41 × 105 | 7.62 × 103 |
| CEC 6 | Best | 1.6 × 103 | 1.72 × 103 | 1.74 × 103 | 1.66 × 103 | 1.73 × 103 | 1.89 × 103 | 1.73 × 103 | 1.75 × 103 | 1.6 × 103 | 1.6 × 103 |
| | Mean | 1.7 × 103 | 1.85 × 103 | 1.88 × 103 | 1.8 × 103 | 1.87 × 103 | 2.11 × 103 | 1.9 × 103 | 2.03 × 103 | 1.72 × 103 | 1.67 × 103 |
| | Std | 87.4 | 1.44 × 102 | 1.58 × 102 | 1.10 × 102 | 92.2 | 1.8 × 102 | 1.45 × 102 | 1.94 × 102 | 89 | 84.9 |
| CEC 7 | Best | 2.19 × 103 | 2.61 × 103 | 9.37 × 103 | 3.2 × 103 | 6.11 × 103 | 3.99 × 103 | 2.43 × 103 | 4.65 × 103 | 2.62 × 103 | 2.14 × 103 |
| | Mean | 2.68 × 103 | 1.1 × 104 | 6.24 × 105 | 9.81 × 103 | 1.5 × 104 | 8.44 × 105 | 3.14 × 103 | 1.66 × 105 | 4.16 × 104 | 2.82 × 103 |
| | Std | 3.64 × 102 | 1.01 × 104 | 1.38 × 106 | 7.53 × 103 | 8.69 × 103 | 2.12 × 106 | 5.89 × 102 | 4.25 × 105 | 7.36 × 104 | 1.14 × 103 |
| CEC 8 | Best | 2.24 × 103 | 2.31 × 103 | 2.25 × 103 | 2.25 × 103 | 2.3 × 103 | 2.41 × 103 | 2.28 × 103 | 2.42 × 103 | 2.3 × 103 | 2.3 × 103 |
| | Mean | 2.3 × 103 | 2.4 × 103 | 2.4 × 103 | 2.65 × 103 | 2.4 × 103 | 3.07 × 103 | 2.46 × 103 | 2.81 × 103 | 2.31 × 103 | 2.3 × 103 |
| | Std | 17.4 | 84.7 | 3.03 × 102 | 5.52 × 102 | 49 | 3.45 × 102 | 1.27 × 102 | 3.34 × 102 | 5.37 | 3.54 |
| CEC 9 | Best | 2.50 × 103 | 2.75 × 103 | 2.76 × 103 | 2.75 × 103 | 2.78 × 103 | 2.79 × 103 | 2.76 × 103 | 2.78 × 103 | 2.5 × 103 | 2.5 × 103 |
| | Mean | 2.76 × 103 | 2.77 × 103 | 2.78 × 103 | 2.76 × 103 | 2.8 × 103 | 2.88 × 103 | 2.78 × 103 | 2.8 × 103 | 2.67 × 103 | 2.71 × 103 |
| | Std | 11.9 | 58.6 | 57.4 | 14.4 | 10.4 | 94.2 | 74.4 | 57.6 | 1.22 × 102 | 85.2 |
| CEC 10 | Best | 2.6 × 103 | 2.94 × 103 | 2.94 × 103 | 2.92 × 103 | 2.96 × 103 | 3.17 × 103 | 2.95 × 103 | 3.02 × 103 | 2.9 × 103 | 2.9 × 103 |
| | Mean | 2.92 × 103 | 3.0 × 103 | 2.99 × 103 | 2.95 × 103 | 2.99 × 103 | 3.46 × 103 | 3.04 × 103 | 3.28 × 103 | 2.94 × 103 | 2.93 × 103 |
| | Std | 63.9 | 1.1 × 102 | 1.16 × 102 | 33.7 | 31.5 | 2.86 × 102 | 1.50 × 102 | 2.93 × 102 | 22.4 | 24.1 |

| CEC | MROA vs. ROA | MROA vs. WOA | MROA vs. STOA | MROA vs. SCA | MROA vs. AOA | MROA vs. GTOA | MROA vs. BES | MROA vs. HPGSOS | MROA vs. PSOS |
|---|---|---|---|---|---|---|---|---|---|
| CEC 1 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 2.7 × 10−2 | 2.41 × 10−3 |
| CEC 2 | 1.71 × 10−3 | 2.61 × 10−4 | 8.59 × 10−2 | 4.29 × 10−6 | 1.96 × 10−2 | 1.59 × 10−1 | 1.97 × 10−5 | 4.73 × 10−6 | 1.92 × 10−6 |
| CEC 3 | 2.37 × 10−5 | 4.86 × 10−5 | 2.41 × 10−3 | 3.18 × 10−6 | 1.73 × 10−6 | 2.05 × 10−4 | 3.88 × 10−6 | 1.8 × 10−5 | 8.19 × 10−5 |
| CEC 4 | 1 | 1.56 × 10−2 | 2.44 × 10−4 | 1.32 × 10−4 | 1 | 3.91 × 10−3 | 2.5 × 10−1 | 1 | 1 |
| CEC 5 | 3.18 × 10−6 | 1.92 × 10−6 | 1.92 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 2.06 × 10−1 | 1.73 × 10−6 | 1.92 × 10−6 | 1.78 × 10−1 |
| CEC 6 | 2.83 × 10−4 | 8.22 × 10−3 | 1.25 × 10−2 | 3.32 × 10−4 | 2.6 × 10−6 | 8.73 × 10−3 | 6.34 × 10−6 | 5.71 × 10−2 | 1.11 × 10−3 |
| CEC 7 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 3.33 × 10−2 | 1.73 × 10−6 | 3.18 × 10−6 | 4.95 × 10−2 |
| CEC 8 | 1.73 × 10−6 | 3.41 × 10−5 | 1.92 × 10−6 | 1.73 × 10−6 | 1.73 × 10−6 | 2.35 × 10−6 | 1.73 × 10−6 | 2.99 × 10−1 | 2.35 × 10−6 |
| CEC 9 | 2.22 × 10−4 | 4.53 × 10−4 | 8.59 × 10−2 | 2.26 × 10−3 | 3.88 × 10−6 | 5.79 × 10−5 | 3.06 × 10−4 | 2.6 × 10−5 | 1.97 × 10−5 |
| CEC 10 | 3.88 × 10−6 | 3.16 × 10−2 | 1.04 × 10−2 | 3.18 × 10−6 | 1.73 × 10−6 | 3.88 × 10−6 | 1.73 × 10−6 | 2.18 × 10−2 | 1.20 × 10−3 |

| CEC | Metric | β = 0.1 | β = 0.2 | β = 0.3 | β = 0.4 | β = 0.5 | β = 0.6 | β = 0.7 | β = 0.8 | β = 0.9 |
|---|---|---|---|---|---|---|---|---|---|---|
| CEC 1 | Best | 1.03 × 102 | 1 × 102 | 1.6 × 102 | 1.13 × 102 | 1.01 × 102 | 1.02 × 102 | 1 × 102 | 1.17 × 102 | 1.71 × 102 |
| | Mean | 4.08 × 103 | 2.56 × 103 | 4.19 × 103 | 2.89 × 103 | 2.53 × 103 | 4.32 × 103 | 3.55 × 103 | 3.55 × 103 | 3.4 × 103 |
| | Std | 3.68 × 103 | 2.05 × 103 | 3.47 × 103 | 1.98 × 103 | 3.02 × 103 | 2.91 × 103 | 3.07 × 103 | 3.09 × 103 | 3.69 × 103 |
| CEC 2 | Best | 1.34 × 103 | 1.26 × 103 | 1.42 × 103 | 1.33 × 103 | 1.7 × 103 | 1.73 × 103 | 1.35 × 103 | 1.46 × 103 | 1.34 × 103 |
| | Mean | 2.01 × 103 | 1.92 × 103 | 2 × 103 | 2 × 103 | 2.09 × 103 | 2.08 × 103 | 2.03 × 103 | 2 × 103 | 1.92 × 103 |
| | Std | 2.54 × 102 | 2.02 × 102 | 2 × 102 | 2.58 × 102 | 1.62 × 102 | 1.48 × 102 | 1.97 × 102 | 2.38 × 102 | 2.54 × 102 |
| CEC 3 | Best | 7.25 × 102 | 7.11 × 102 | 7.24 × 102 | 7.19 × 102 | 7.23 × 102 | 7.17 × 102 | 7.16 × 102 | 7.17 × 102 | 7.23 × 102 |
| | Mean | 7.55 × 102 | 7.46 × 102 | 7.5 × 102 | 7.49 × 102 | 7.5 × 102 | 7.56 × 102 | 7.51 × 102 | 7.51 × 102 | 7.50 × 102 |
| | Std | 17.5 | 12.9 | 18.3 | 17.6 | 20.3 | 27.1 | 16.2 | 18.6 | 18.2 |
| CEC 4 | Best | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 |
| | Mean | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 | 1.9 × 103 |
| | Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| CEC 5 | Best | 2.09 × 103 | 1.80 × 103 | 1.91 × 103 | 1.88 × 103 | 2.05 × 103 | 1.98 × 103 | 2.06 × 103 | 2.01 × 103 | 2 × 103 |
| | Mean | 4.18 × 103 | 4.29 × 103 | 3.75 × 103 | 3.59 × 103 | 3.64 × 103 | 3.72 × 103 | 4.13 × 103 | 4.09 × 103 | 3.69 × 103 |
| | Std | 2.12 × 103 | 1.79 × 103 | 1.93 × 103 | 1.35 × 103 | 1.74 × 103 | 1.61 × 103 | 1.96 × 103 | 1.59 × 103 | 1.59 × 103 |
| CEC 6 | Best | 1.60 × 103 | 1.6 × 103 | 1.60 × 103 | 1.60 × 103 | 1.60 × 103 | 1.60 × 103 | 1.60 × 103 | 1.60 × 103 | 1.60 × 103 |
| | Mean | 1.75 × 103 | 1.7 × 103 | 1.76 × 103 | 1.74 × 103 | 1.74 × 103 | 1.75 × 103 | 1.76 × 103 | 1.75 × 103 | 1.72 × 103 |
| | Std | 1.08 × 102 | 87.4 | 1.07 × 102 | 1.13 × 102 | 1.08 × 102 | 1.1 × 102 | 99.8 | 1.2 × 102 | 85.7 |
| CEC 7 | Best | 2.15 × 103 | 2.19 × 103 | 2.13 × 103 | 2.15 × 103 | 2.27 × 103 | 2.2 × 103 | 2.17 × 103 | 2.27 × 103 | 2.23 × 103 |
| | Mean | 2.66 × 103 | 2.68 × 103 | 2.59 × 103 | 2.66 × 103 | 2.68 × 103 | 2.89 × 103 | 2.76 × 103 | 3.01 × 103 | 3.12 × 103 |
| | Std | 3.4 × 102 | 3.64 × 102 | 3.41 × 102 | 3.81 × 102 | 2.5 × 102 | 4.83 × 102 | 6.13 × 102 | 8.67 × 102 | 7.58 × 102 |
| CEC 8 | Best | 2.26 × 103 | 2.24 × 103 | 2.23 × 103 | 2.26 × 103 | 2.27 × 103 | 2.21 × 103 | 2.24 × 103 | 2.3 × 103 | 2.23 × 103 |
| | Mean | 2.31 × 103 | 2.3 × 103 | 2.31 × 103 | 2.31 × 103 | 2.31 × 103 | 2.3 × 103 | 2.31 × 103 | 2.31 × 103 | 2.3 × 103 |
| | Std | 9.86 | 17.4 | 14.7 | 10 | 8.92 | 22.6 | 13.7 | 4.81 | 20.1 |
| CEC 9 | Best | 2.74 × 103 | 2.5 × 103 | 2.50 × 103 | 2.74 × 103 | 2.50 × 103 | 2.50 × 103 | 2.73 × 103 | 2.50 × 103 | 2.74 × 103 |
| | Mean | 2.76 × 103 | 2.76 × 103 | 2.75 × 103 | 2.76 × 103 | 2.75 × 103 | 2.75 × 103 | 2.75 × 103 | 2.75 × 103 | 2.76 × 103 |
| | Std | 15.6 | 11.9 | 47.9 | 11 | 49.5 | 48.6 | 11.7 | 48.7 | 11 |
| CEC 10 | Best | 2.9 × 103 | 2.6 × 103 | 2.9 × 103 | 2.9 × 103 | 2.9 × 103 | 2.9 × 103 | 2.9 × 103 | 2.9 × 103 | 2.9 × 103 |
| | Mean | 2.94 × 103 | 2.92 × 103 | 2.93 × 103 | 2.94 × 103 | 2.94 × 103 | 2.94 × 103 | 2.93 × 103 | 2.94 × 103 | 2.93 × 103 |
| | Std | 22.5 | 63.9 | 22.1 | 31.6 | 26.7 | 20 | 30.1 | 26 | 24.7 |

| Algorithm | h | l | t | b | Optimum Cost |
|---|---|---|---|---|---|
| MROA | 0.2062185 | 3.254893 | 9.020003 | 0.206489 | 1.699058 |
| ROA [23] | 0.200077 | 3.365754 | 9.011182 | 0.206893 | 1.706447 |
| MFO [37] | 0.2057 | 3.4703 | 9.0364 | 0.2057 | 1.72452 |
| CBO [38] | 0.205722 | 3.47041 | 9.037276 | 0.205735 | 1.724663 |
| IHS [39] | 0.20573 | 3.47049 | 9.03662 | 0.2057 | 1.7248 |
| GWO [40] | 0.205676 | 3.478377 | 9.03681 | 0.205778 | 1.72624 |
| MVO [14] | 0.205463 | 3.473193 | 9.044502 | 0.205695 | 1.72645 |
| WOA [9] | 0.205396 | 3.484293 | 9.037426 | 0.206276 | 1.730499 |
| CPSO [41] | 0.202369 | 3.544214 | 9.04821 | 0.205723 | 1.73148 |
| RO [42] | 0.203687 | 3.528467 | 9.004233 | 0.207241 | 1.735344 |
| IHHO [43] | 0.20533 | 3.47226 | 9.0364 | 0.2010 | 1.7238 |
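
The welded beam entries can be cross-checked against the objective function. This section does not restate the formula. Assuming the standard fabrication-cost formulation f = 1.10471 h² l + 0.04811 t b (14 + l), recomputing the cost from the tabulated h, l, t and b reproduces the MROA and ROA values to within a few units in the sixth decimal place. The small residue comes from the variables being printed with limited precision.

The sketch evaluates only the objective. The shear-stress, bending-stress, buckling and deflection constraints are not checked, so it confirms the table's arithmetic rather than the feasibility of each design. The function name is illustrative.

```python
def welded_beam_cost(h, l, t, b):
    """Fabrication cost of the welded beam design problem (standard
    formulation, assumed here): welding term plus bar-stock term."""
    weld_term = 1.10471 * h ** 2 * l
    bar_term = 0.04811 * t * b * (14.0 + l)  # 14.0 is the fixed beam length
    return weld_term + bar_term


# (h, l, t, b) and the cost as printed in the welded beam table
rows = {
    "MROA": ((0.2062185, 3.254893, 9.020003, 0.206489), 1.699058),
    "ROA": ((0.200077, 3.365754, 9.011182, 0.206893), 1.706447),
}

if __name__ == "__main__":
    for name, (x, reported) in rows.items():
        print(f"{name}: recomputed {welded_beam_cost(*x):.6f}, reported {reported}")
```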

| Algorithm | d | D | N | f(x) |
|---|---|---|---|---|
| MROA | 0.05 | 0.374430 | 8.5497203 | 0.009875331 |
| GA [1] | 0.05148 | 0.351661 | 11.632201 | 0.01270478 |
| HS [6] | 0.051154 | 0.349871 | 12.076432 | 0.0126706 |
| CSCA [44] | 0.051609 | 0.354714 | 11.410831 | 0.0126702 |
| PSO [41] | 0.051728 | 0.357644 | 11.244543 | 0.0126747 |
| AOA [11] | 0.05 | 0.349809 | 11.8637 | 0.012124 |
| WOA [9] | 0.051207 | 0.345215 | 12.004032 | 0.0126763 |
| GSA [45] | 0.050276 | 0.32368 | 13.52541 | 0.0127022 |
| DE [46] | 0.051609 | 0.354714 | 11.410831 | 0.0126702 |
| RLTLBO [33] | 0.055118 | 0.5059 | 5.1167 | 0.010938 |
| RO [42] | 0.05137 | 0.349096 | 11.76279 | 0.0126788 |
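
Assuming the standard tension/compression spring formulation, the weight is f = (N + 2) D d², where d is the wire diameter, D the mean coil diameter and N the number of active coils. Under that assumption, the f(x) column can be recomputed from the three design variables. The sketch does this for the MROA and GA rows. As with the welded beam, only the objective is evaluated. The deflection, shear-stress, surge-frequency and outer-diameter constraints are not checked here. The function name is illustrative.

```python
def spring_weight(d, D, N):
    """Weight of the tension/compression spring (standard formulation,
    assumed here): (N + 2) * D * d^2."""
    return (N + 2.0) * D * d ** 2


# (d, D, N) and f(x) as printed in the spring table
rows = {
    "MROA": ((0.05, 0.374430, 8.5497203), 0.009875331),
    "GA": ((0.05148, 0.351661, 11.632201), 0.01270478),
}

if __name__ == "__main__":
    for name, (x, reported) in rows.items():
        print(f"{name}: recomputed {spring_weight(*x):.9f}, reported {reported}")
```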

| Algorithm | Ts | Th | R | L | Optimum Cost |
|---|---|---|---|---|---|
| MROA | 0.742578894 | 0.368384814 | 40.33385234 | 199.802664 | 5735.8501 |
| SHO [47] | 0.77821 | 0.384889 | 40.31504 | 200 | 5885.5773 |
| GWO [40] | 0.8125 | 0.4345 | 42.089181 | 176.758731 | 6051.5639 |
| ACO [48] | 0.8125 | 0.4375 | 42.103624 | 176.572656 | 6059.0888 |
| WOA [9] | 0.8125 | 0.4375 | 42.0982699 | 176.638998 | 6059.741 |
| ES [49] | 0.8125 | 0.4375 | 42.098087 | 176.640518 | 6059.7456 |
| SMA [50] | 0.7931 | 0.3932 | 40.6711 | 196.2178 | 5994.1857 |
| BA [51] | 0.8125 | 0.4375 | 42.0984 | 176.6366 | 6059.7143 |
| HPSO [52] | 0.8125 | 0.4375 | 42.0984 | 176.6366 | 6059.7143 |
| CSS [53] | 0.8125 | 0.4375 | 42.1036 | 176.5727 | 6059.0888 |
| MPA [54] | 0.77816876 | 0.38464966 | 40.31962084 | 199.9999935 | 5885.3353 |

| Algorithm | x1 | x2 | x3 | x4 | x5 | x6 | x7 | Optimum Weight |
|---|---|---|---|---|---|---|---|---|
| MROA | 3.497571 | 0.7 | 17 | 7.3 | 7.8 | 3.350057 | 5.285540 | 2995.437447 |
| AOA [11] | 3.50384 | 0.7 | 17 | 7.3 | 7.72933 | 3.35649 | 5.2867 | 2997.9157 |
| MFO [37] | 3.497455 | 0.7 | 17 | 7.82775 | 7.712457 | 3.351787 | 5.286352 | 2998.94083 |
| WSA [55] | 3.5 | 0.7 | 17 | 7.3 | 7.8 | 3.350215 | 5.286683 | 2996.348225 |
| AAO [56] | 3.499 | 0.6999 | 17 | 7.3 | 7.8 | 3.3502 | 5.2872 | 2996.783 |
| CS [57] | 3.5015 | 0.7 | 17 | 7.605 | 7.8181 | 3.352 | 5.2875 | 3000.981 |
| FA [58] | 3.507495 | 0.7001 | 17 | 7.719674 | 8.080854 | 3.351512 | 5.287051 | 3010.137492 |
| RSA [59] | 3.50279 | 0.7 | 17 | 7.30812 | 7.74715 | 3.35067 | 5.28675 | 2996.5157 |
| HS [6] | 3.520124 | 0.7 | 17 | 8.37 | 7.8 | 3.36697 | 5.288719 | 3029.002 |
| hHHO-SCA [60] | 3.506119 | 0.7 | 17 | 7.3 | 7.99141 | 3.452569 | 5.286749 | 3029.873076 |

| Algorithm | x1 | x2 | x3 | x4 | x5 | Optimum Weight |
|---|---|---|---|---|---|---|
| MROA | 69.99999995 | 90 | 1 | 600 | 2 | 0.235242458 |
| TLBO [4] | 70 | 90 | 1 | 810 | 3 | 0.313656611 |
| WCA [61] | 70 | 90 | 1 | 910 | 3 | 0.313656 |
| MVO [14] | 70 | 90 | 1 | 910 | 3 | 0.313656 |
| CMVO [62] | 70 | 90 | 1 | 910 | 3 | 0.313656 |
| MFO [37] | 70 | 90 | 1 | 910 | 3 | 0.313656 |
| RSA [59] | 70.0347 | 90.0349 | 1 | 801.7285 | 2.974 | 0.31176 |
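
The sketch below recomputes this table's objective under an assumed formulation. It takes the standard multiple disc clutch brake problem, in which x1 to x5 are:

- x1: inner radius ri
- x2: outer radius ro
- x3: disc thickness t
- x4: actuating force F
- x5: number of friction surfaces Z

The mass is then f = π (ro² − ri²) t (Z + 1) ρ, with ρ = 0.0000078 kg/mm³, and the force F appears only in the constraints. Recomputing the objective reproduces the MROA and TLBO values. It also shows that the gap between 0.2352 and 0.3137 comes entirely from Z = 2 versus Z = 3, since ri, ro and t are the same in both rows: 0.3137 × 3/4 ≈ 0.2352. Constraint feasibility is not checked, and the helper name is illustrative.

```python
import math

RHO = 0.0000078  # material density of the standard formulation (assumed), kg/mm^3


def clutch_brake_mass(ri, ro, t, F, Z):
    """Mass of the multiple disc clutch brake (standard formulation, assumed).
    F (actuating force) appears only in the constraints, not the objective."""
    return math.pi * (ro ** 2 - ri ** 2) * t * (Z + 1) * RHO


# (x1, ..., x5) and the weight as printed in the clutch brake table
rows = {
    "MROA": ((69.99999995, 90, 1, 600, 2), 0.235242458),
    "TLBO": ((70, 90, 1, 810, 3), 0.313656611),
}

if __name__ == "__main__":
    for name, (x, reported) in rows.items():
        print(f"{name}: recomputed {clutch_brake_mass(*x):.9f}, reported {reported}")
```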

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wen, C.; Jia, H.; Wu, D.; Rao, H.; Li, S.; Liu, Q.; Abualigah, L. Modified Remora Optimization Algorithm with Multistrategies for Global Optimization Problem. Mathematics 2022, 10, 3604. https://doi.org/10.3390/math10193604
